1. Introduction

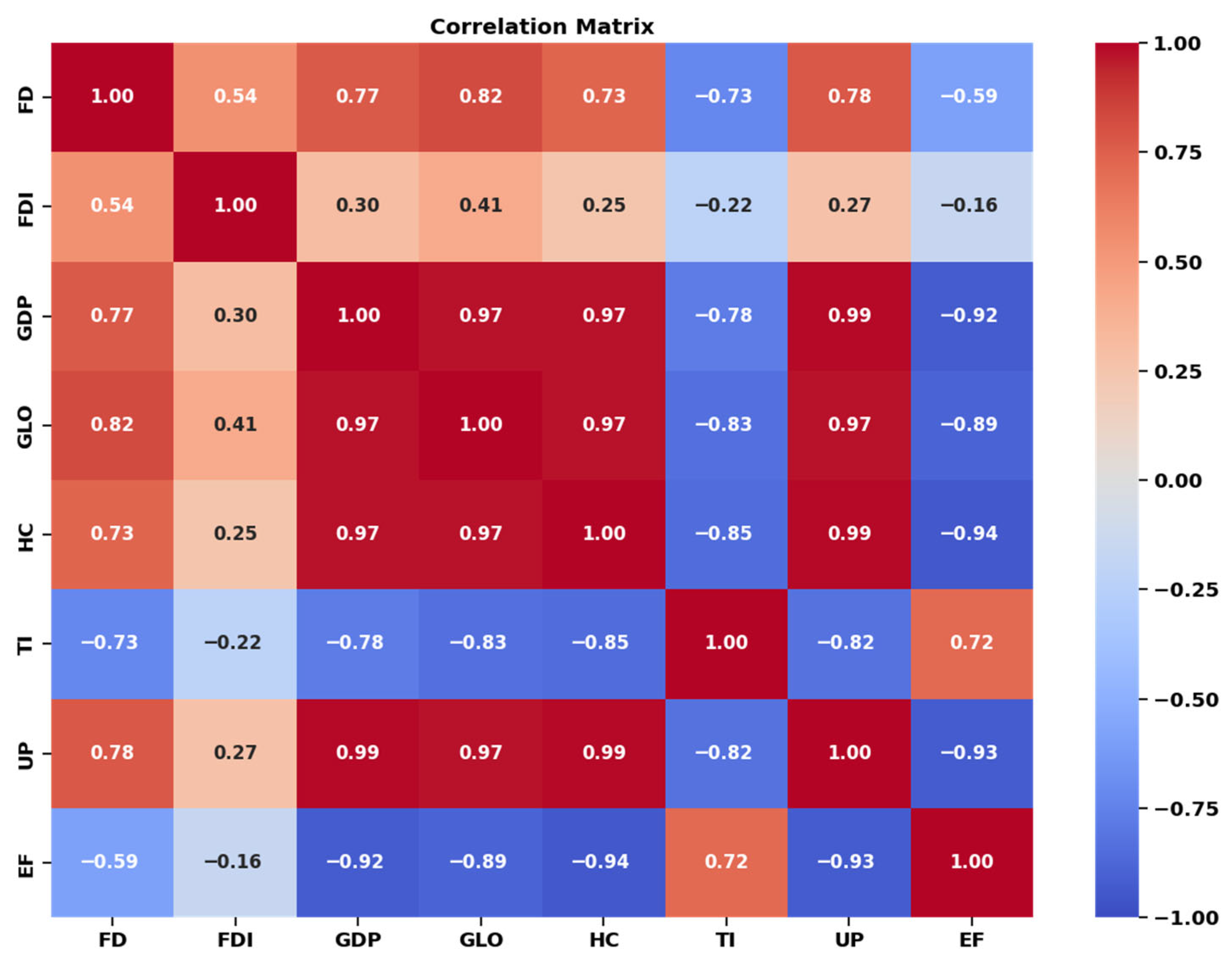

Sustaining human well-being within planetary boundaries requires reliable, policy-relevant indicators that translate complex resource demands into actionable insights for governments. The Ecological Footprint (EF) remains one of the most widely used, consumption-based indicators of human pressure on ecosystems, summarizing competing demands on bioproductive land and sea, including cropland, grazing land, fishing grounds, forest, built-up land, and carbon uptake land. Its long, harmonized time series have underpinned national and international sustainability assessments and overshoot diagnostics for decades, with the most recent national footprint and biocapacity accounts updating country-level trajectories and documenting persistent ecological deficits in most nations [1,2]. Against the backdrop of accelerating globalization and capital mobility, accurately forecasting EF has direct implications for designing green growth strategies, aligning investment with decarbonization, and targeting interventions that reduce resource overuse without undermining development gains. Yet the EF’s responsiveness to macro-financial conditions, demographic pressures, and cross-border integration renders its dynamics highly nonlinear and time-varying, features that challenge classical econometric tools and motivate robust machine learning (ML) approaches. Beyond its headline appeal, EF accounting rests on a well-specified and continuously refined methodology that reconciles demand for ecosystem services with the biosphere’s regenerative capacity. Methodological updates since the early 2010s have clarified yield and equivalence factor calculations, improved carbon uptake land estimates, and strengthened the use of United Nations-based statistical sources, thereby supporting cross-country comparability and temporal consistency [2]. These methodological advances justify EF’s use as a dependent variable in forecasting frameworks that integrate socio-economic and financial covariates, provided models can capture structural breaks and complex interactions among drivers. A rapidly expanding empirical literature has examined EF’s macro-determinants, typically within panel frameworks spanning diverse income groups. A recent systematic review synthesizing several decades of work identifies GDP per capita, urbanization, energy structure, biocapacity, human capital, financial development, trade openness, and foreign direct investment as recurrent and significant correlates, though their signs and magnitudes vary across contexts, time horizons, and estimation strategies [3]. Studies focusing on globalization suggest heterogeneous effects: while some panels report that broader integration amplifies EF in developing regions, others find regime-dependent or statistically insignificant relationships once cross-sectional dependence and slope heterogeneity are addressed [4,5]. These inconsistencies underscore the need for predictive models that can (i) flexibly represent nonlinearities and thresholds and (ii) quantify the relative importance of overlapping drivers. Financial development has emerged as a particularly influential, yet ambiguous, factor. Multidimensional measures (depth, access, efficiency) often exhibit an inverted-U relationship with EF for financial institutions, whereas the contribution of financial markets appears less consistently benign, suggesting channel-specific scale, composition, and technology effects [6]. Complementary evidence from developed economies confirms that finance can either worsen or improve environmental quality, depending on structural conditions and time scales, again arguing for context-aware, data-driven methods [7]. Because financial cycles and investment booms propagate through energy use, urban expansion, and capital deepening, an EF prediction framework based on macro-financial indicators could offer early signals of sustainability risks, provided the models are accurate and interpretable. Concurrently, Earth and environmental sciences are witnessing a methodological shift: hybrid ML systems and data-driven models are increasingly used to emulate complex dynamics, from sub-grid climate processes to ecosystem fluxes [8,9]. While these advances demonstrate the feasibility of ML for high-dimensional environmental prediction, their translation to national-scale EF forecasting has been comparatively limited, with many EF studies still anchored in linear cointegration or vector autoregression (VAR) frameworks that can struggle with nonstationarity, interaction effects, and regime changes [3,10]. The opportunity, therefore, is to leverage modern learning algorithms that reconcile speed, accuracy, and interpretability for policy-relevant EF horizons.

Extreme Learning Machines (ELMs) offer an attractive base learner for such tasks. ELMs are single-hidden-layer feedforward networks with randomly assigned input weights and biases and analytically solved output weights, enabling exceptionally fast training and competitive generalization across regression and classification tasks [11]. However, the very features that make ELMs fast (random hidden-layer initialization and one-shot output estimation) introduce variance and sensitivity to hyperparameters, leading to inconsistent performance, especially under noise, heteroskedasticity, or nonstationarity [12,13]. Addressing these sources of instability without sacrificing computational efficiency is thus central to positioning ELMs as reliable EF forecasters. In response, a rich strand of research has coupled ELMs with metaheuristic optimizers to tune input weights, hidden biases, and structural hyperparameters. Swarm and physics-inspired algorithms such as the Grey Wolf Optimizer (GWO) [14], the Sine Cosine Algorithm (SCA) [15], and the Arithmetic Optimization Algorithm (AOA) [16] have been widely used to enhance exploration–exploitation balance, escape local minima, and improve convergence profiles. In energy and industrial forecasting, GWO-ELM variants have improved short-term wind power prediction, while other metaheuristic–ELM hybrids have reported consistent gains over standalone ELM in both accuracy and stability [17,18,19]. These studies suggest that lightweight random-feature learners can be made robust via biologically inspired search, opening a pathway for fast, accurate EF prediction at national scales.
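The ELM training scheme described above (a random hidden layer followed by analytically solved output weights) can be illustrated with a minimal NumPy sketch. The function names `elm_fit` and `elm_predict`, the tanh activation, the uniform sampling range, and the toy data are assumptions made for illustration; they are not taken from the study.

```python
import numpy as np


def elm_fit(X, y, n_hidden=10, rng=None):
    """Fit a single-hidden-layer ELM: random hidden layer, least-squares output layer."""
    rng = np.random.default_rng(rng)
    # Input weights and biases are drawn once at random and never trained.
    W = rng.uniform(-1.0, 1.0, size=(X.shape[1], n_hidden))
    b = rng.uniform(-1.0, 1.0, size=n_hidden)
    H = np.tanh(X @ W + b)          # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ y    # output weights via the Moore-Penrose pseudo-inverse
    return W, b, beta


def elm_predict(X, W, b, beta):
    """Apply the fixed hidden layer and the fitted output weights."""
    return np.tanh(X @ W + b) @ beta


# Toy regression problem (hypothetical data, for illustration only).
data_rng = np.random.default_rng(0)
X = data_rng.uniform(-1.0, 1.0, size=(200, 3))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] ** 2 - X[:, 2]

# Different seeds give different hidden layers and therefore different errors.
rmse_per_seed = []
for seed in range(5):
    W, b, beta = elm_fit(X, y, n_hidden=10, rng=seed)
    residual = y - elm_predict(X, W, b, beta)
    rmse_per_seed.append(float(np.sqrt(np.mean(residual ** 2))))
print(rmse_per_seed)
```

The spread of the printed values across seeds is the initialization variance discussed above; a metaheuristic-tuned variant would replace the random draw of `W` and `b` with values proposed by the optimizer.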

Despite this progress, three gaps persist in the EF prediction literature. First, relative to energy and emissions forecasting, there are few studies that tailor metaheuristic-tuned ELMs to EF specifically, even though EF aggregates multiple pressures and is likely to exhibit stronger nonlinearities than single-pollutant targets [3,10]. Second, model interpretability is often treated as secondary to accuracy; many studies report error metrics without systematically decomposing feature contributions, limiting the policy insights that can be drawn from black-box predictors. Third, ELM’s stochasticity is rarely addressed with rigorous stability assessments, making it difficult to separate genuine model superiority from favorable random initializations. Together, these gaps motivate the development of an optimizer-based EF predictive model that combines (i) principled stochastic optimization for consistent training, (ii) post hoc explanation techniques for transparent inference, and (iii) robust experimental comparison. Model interpretability is essential for environmental governance, where decisions must balance economic and social objectives under uncertainty. Shapley Additive Explanations (SHAP) provide a theoretically grounded framework for local and global attribution that is model-agnostic and consistent, enabling decomposition of predictions into additive feature contributions and interaction effects [20,21]. Integrating SHAP into EF prediction can reveal, for instance, whether increases in financial development amplify EF via scale effects or mitigate it via composition and technology effects, and how these effects vary with investment intensity or globalization. Such transparency is particularly valuable when ML models inform industrial policies that incorporate environmental constraints.
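The additive decomposition that SHAP provides can be illustrated with an exact Shapley-value computation on a tiny model. This is a sketch of the underlying attribution idea only, not the `shap` library's API; the toy model, the feature names, and the use of a single baseline point to represent "absent" features are all assumptions.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction; absent features take baseline values."""
    n = len(x)

    def value(subset):
        # Evaluate the model with features in `subset` set to x, the rest to baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model with an interaction between the first two inputs.
def toy_model(z):
    gdp, finance, urban = z
    return 2.0 * gdp + 1.0 * finance + 0.5 * gdp * finance - 0.3 * urban


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)
# Efficiency property: the contributions sum to f(x) - f(baseline).
print(sum(phi), toy_model(x) - toy_model(baseline))
```

In this toy case the interaction term is split equally between the two interacting features, which is the sense in which the attribution is additive and consistent. Exact enumeration scales exponentially in the number of features, so practical tools rely on approximations.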

Our approach builds on this agenda by introducing a new nature-inspired optimizer, the Chinese Pangolin Optimizer (CPO), and coupling it with ELMs to form a hybrid CPO–ELM predictor. The Chinese pangolin (Manis pentadactyla) is a nocturnal, myrmecophagous mammal whose foraging ecology combines targeted excavation of prey-rich patches with adaptive movement across heterogeneous terrain; empirical studies describe how burrow site selection depends on slope, canopy cover, and proximity to ant nests, and how activity rhythms and home ranges adjust to resource density [22,23]. Abstracted into an optimizer, these behaviors naturally map onto intensification (localized exploitation of promising regions), diversification (movement across patches under uncertainty), and parsimonious step selection (energy-aware exploration). By mathematically encoding pangolin-inspired operators for patch identification, depth-adaptive probing, and guided relocation, the CPO seeks a principled exploration–exploitation trade-off suited to the rugged error landscapes often encountered when tuning ELMs’ hidden-layer parameters. The proposed model was tested on monthly ecological footprint and macroeconomic indicator data for the United States from 1991 to 2020, a sample that supports robust model training and validation. The United States exhibits substantial variation in the drivers considered in this study, including GDP per capita, human capital, financial development, globalization, urbanization, and foreign direct investment, enabling a stringent stress test of the learning algorithm. The country’s policy relevance for climate and sustainability agendas makes interpretability especially valuable for decision makers. This study addresses the aforementioned gaps by developing and evaluating a Chinese Pangolin Optimizer–Extreme Learning Machine (CPO–ELM) framework for sustainable ecological forecasting. The contributions of this study are as follows:

Improved EF prediction: We introduce the CPO–ELM model, which leverages pangolin-inspired foraging intelligence to optimize ELM parameters, achieving higher accuracy and stability than baseline ELMs and established metaheuristic–ELM hybrids.

Interpretable insights: Using SHAP, we quantify the individual and interactive effects of the socio-economic drivers (GDP per capita, human capital, financial development, total investment, urban population, globalization, and foreign direct investment) on EF predictions, enabling transparent, policy-relevant interpretation.

Robust validation: We provide comprehensive metrics on accuracy, error dispersion, and bias, mitigating stochastic variability.

The remainder of this manuscript is organized as follows.

Section 2 provides a critical review of the literature.

Section 3 details the theoretical foundations of ELM, formalizes the mathematical operators of the Chinese Pangolin Optimizer (CPO), and describes the dataset and input variables.

Section 4 presents the empirical results, including predictive performance metrics and SHAP-based interpretability analyses.

Section 5 offers concluding remarks and provides directions for future research.

2. Literature Review

Advancements in machine learning and metaheuristic optimization have transformed the forecasting of environmental degradation and associated environmental metrics, enabling more precise assessments of sustainability pressures amid global resource constraints. Moayedi et al. [24] explored hybrid metaheuristic–ANN frameworks to forecast energy-related CO₂ emissions. Their model incorporated GDP and multiple energy-source variables as predictors, optimizing multilayer perceptrons (MLPs) using five distinct algorithms. Results showed that Teaching–Learning-Based Optimization (TLBO) delivered superior generalization, achieving the lowest testing MSE values, thus outperforming other hybrids in both convergence and stability. Ghorbal et al. [25] addressed the problem of accurately forecasting global CO₂ emissions by combining a Dual-Path Recurrent Neural Network (DPRNN) with the novel Ninja Optimization Algorithm (NiOA). They first applied Principal Component Analysis (PCA) and Blind Source Separation (BSS) for feature-cleaning and then trained the DPRNN and tuned it using NiOA. The resulting hybrid achieved lower error metrics and superior performance relative to competing metaheuristics. Janković et al. [26] developed and rigorously compared four distinct hybrid machine learning models to forecast the total ecological footprint of consumption, using energy-related macroeconomic indicators as primary inputs. Their methodology centered on Bayesian-optimized ensemble techniques to handle the inherent nonlinearity in the data. The study demonstrated that the hybrid models significantly outperformed their standalone counterparts. Almsallti et al. [27] introduced a novel hybrid framework, the RBMO-ELM, which integrates a metaheuristic optimizer with an Extreme Learning Machine to predict CO₂ emissions, a key component of the broader ecological footprint. The research applied this model to a rich, multidimensional Indonesian dataset encompassing economic and technological variables. Empirical validation showed that the RBMO-ELM model surpassed several state-of-the-art hybrid competitors.

A recent study focused on India by Mukherjee et al. [28] evaluated a suite of conventional machine learning algorithms to predict the country’s national ecological footprint, leveraging a set of eleven socio-economic and trade-related predictors. The research found that Random Forests provided the most robust and accurate forecasts for this complex, developing economy. Meng and Noman [29] designed an artificial intelligence system based on machine learning to project future CO₂ emission trajectories for major global economies. Their work emphasized the model’s ability to capture long-term, nonlinear trends in emission data, providing policymakers with a powerful tool for scenario analysis and long-range environmental planning. The proposed model exhibited high predictive fidelity. Feda et al. [30] systematically investigated the impact of hybridizing various established metaheuristic algorithms with the ELM for carbon emission prediction. Their comparative analysis revealed that integrating these optimizers consistently improved the ELM’s stability and accuracy by effectively mitigating its random initialization issue. Khajavi and Rastgoo [31] introduced a hybrid approach that employed six metaheuristic algorithms to fine-tune the hyperparameters of a Random Forest model for forecasting carbon dioxide emissions from road transport. Their results indicated that the RF model optimized by the Slime Mould Algorithm (SMA) achieved the lowest prediction error, demonstrating the value of metaheuristic-driven hyperparameter optimization in this domain. Arık et al. [32] applied five distinct metaheuristic algorithms to forecast Turkey’s national carbon dioxide emissions. The study’s objective was to identify the most efficient and accurate algorithm for this specific national context, using historical emission data as the primary input. The comparative results indicated that the metaheuristic-tuned optimizer demonstrated superior accuracy. Moros-Ochoa et al. [33] extended the application of machine learning to the joint forecasting of both biocapacity and ecological footprint.

Their work built upon earlier hybrid modeling successes, demonstrating that advanced ML techniques could effectively model the dynamic balance between a nation’s ecological demand and its regenerative supply. Priya et al. [34] independently developed machine learning and deep learning systems to monitor and predict India’s carbon footprint, motivated by the country’s status as a top global emitter. Their models, which included both classical ML and neural network architectures, were trained on national greenhouse gas inventories and aimed to provide a real-time monitoring framework. Alhussan and Metwally [35] systematically investigated the efficacy of machine learning models optimized with a metaheuristic optimizer for CO₂ emissions prediction using a detailed fuel consumption dataset. Their work provided a granular analysis of model performance on high-resolution data, finding that ensemble methods again demonstrated superior predictive power. Jin and Sharifi [36] performed a comprehensive review of 75 studies that applied machine learning to forecast urban greenhouse gas emissions, a critical sub-component of the national ecological footprint. Their analysis mapped the evolution of methodological approaches and identified a clear trend toward the adoption of hybrid and ensemble models, which consistently delivered higher accuracy and reliability for urban-scale environmental prediction.

4. Experiment and Discussion

In this section, a comprehensive experimental analysis was conducted to evaluate the predictive performance and robustness of the proposed CPO–ELM model in comparison with other ELM-based hybrid optimizers. The assessment was carried out in two distinct validation phases to ensure the models’ stability and generalization capability. A 5-fold cross-validation procedure was implemented in the first phase to assess the model’s consistency across different data partitions. The complete dataset was divided into five equal subsets, with four used for training and one for testing in each iteration. This process was repeated five times, ensuring that each subset served once as the test set while the remaining four were used for model training. The average performance across all folds was then computed to provide an unbiased estimation of the model’s generalization accuracy.
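The 5-fold partitioning described above can be sketched in a few lines of plain Python. The helper name `k_fold_indices`, the shuffling step, the seed, and the sample count of 360 (30 years of monthly observations) are assumptions for illustration; the text does not state whether samples were shuffled before splitting.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample is in exactly one test fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Distribute any remainder so that fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        start += size
        yield train_idx, test_idx


folds = list(k_fold_indices(n_samples=360, k=5))
for train_idx, test_idx in folds:
    # Four folds are used for training and one for testing in each iteration.
    print(len(train_idx), len(test_idx))
```

Averaging a metric over the five `(train_idx, test_idx)` pairs gives the cross-validated estimate reported for each model.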

In the second phase, an independent simulation experiment consisting of 20 separate runs was performed for each algorithm under identical experimental conditions. This procedure was adopted to mitigate the influence of stochastic variability inherent to population-based optimization algorithms and to confirm the results’ statistical reliability. The mean and standard deviation of performance metrics across the 20 runs were used to evaluate model stability and convergence consistency. The optimization parameters used for all comparative algorithms, including their specific control parameters, are summarized in Table 2. The hidden-layer neuron size for both the proposed CPO–ELM model and all comparative ELM-based models was fixed at 10 to maintain uniform model complexity across experiments. The search bounds for the weights and biases optimized by each metaheuristic were constrained to [−10, 10], ensuring feasible parameter exploration while preventing numerical instability during training. All optimizers used a population size of 20 and 50 iterations. To ensure a fair and systematic comparison, the performance of the proposed CPO–ELM model was benchmarked against widely recognized metaheuristic-based ELM optimizers: the Aquila Optimizer (AO) [47], Exponential Distribution Optimizer (EDO) [48], Grey Wolf Optimizer (GWO) [14], and Sine Cosine Algorithm (SCA) [15]. These algorithms were selected for their established performance in nonlinear optimization and proven ability to balance exploration and exploitation in complex search spaces. To ensure a comprehensive and objective comparison of model performance, all experiments were evaluated using a consistent set of statistical performance indicators. The selected metrics evaluate the models’ predictive accuracy, reliability, and error characteristics. Specifically, the evaluation criteria included the coefficient of determination (R²), root mean squared error (RMSE), mean squared error (MSE), mean error (ME), and relative absolute error (RAE). All experiments were conducted in Python 3.10 using Windows 11. Core libraries included NumPy for numerical operations, pandas for data handling, scikit-learn for model evaluation and preprocessing, and Matplotlib 3.0.7 for visualization. The optimization and ELM procedures were implemented in native Python, and SHAP was used for interpretability analysis. Computations were performed on an Intel Core i7 processor with 16 GB RAM.
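The evaluation criteria and the mean and standard deviation summary over repeated runs can be sketched as follows. The formulas are the standard textbook definitions and are an assumption here, since the text names the metrics without giving equations; in particular, ME is taken as the signed mean of the residuals. The function names and toy values are hypothetical.

```python
import math
import statistics


def regression_metrics(y_true, y_pred):
    """Compute R2, RMSE, MSE, ME and RAE for one set of predictions."""
    n = len(y_true)
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    ss_res = sum(r * r for r in residuals)
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(mse),
        "MSE": mse,
        "ME": sum(residuals) / n,  # assumed definition: signed mean of residuals
        "RAE": sum(abs(r) for r in residuals)
        / sum(abs(t - mean_true) for t in y_true),
    }


def summarize_runs(run_metrics):
    """Mean and sample standard deviation of each metric across independent runs."""
    summary = {}
    for name in run_metrics[0]:
        values = [m[name] for m in run_metrics]
        summary[name] = (statistics.mean(values), statistics.stdev(values))
    return summary


# Two hypothetical "runs" on toy data, for illustration only.
y_true = [1.0, 2.0, 3.0, 4.0]
run_a = regression_metrics(y_true, [1.1, 1.9, 3.2, 3.8])
run_b = regression_metrics(y_true, [1.0, 2.0, 3.0, 4.4])
summary = summarize_runs([run_a, run_b])
print(run_a)
print(summary["R2"])
```

With 20 runs per algorithm, `summarize_runs` would receive a list of 20 metric dictionaries rather than the two shown here.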

The application of the proposed model to United States data shows consistent accuracy and stability across folds and repetitions. The performance results of the proposed CPO-ELM model and the comparative hybrid algorithms obtained from the 5-fold cross-validation experiments are presented in Table 3 (training phase) and Table 4 (held-out validation phase). These results comprehensively evaluate each model’s predictive performance, generalization, and convergence stability in forecasting the ecological footprint. As shown in Table 3, the proposed CPO-ELM model achieved the best overall performance across all evaluation metrics, outperforming both the standalone ELM and the other optimized variants. Specifically, CPO-ELM achieved the highest R² of 0.9828, indicating that it explained approximately 98.3% of the variance in the ecological footprint data during training. This value was superior to the AO-ELM (0.9747), EDO-ELM (0.9738), GWO-ELM (0.9756), SCA-ELM (0.9774), and conventional ELM (0.9324), clearly demonstrating the improved fitting accuracy achieved through CPO-based optimization. Furthermore, the CPO-ELM exhibited the lowest RMSE and MSE values of 2.688 × 10⁻² and 7.246 × 10⁻⁴, respectively, which reflect its superior precision and reduced residual variance compared to other models. The ME and RAE also highlight the robustness of the proposed approach. The CPO-ELM recorded the smallest ME (9.792 × 10⁻²) and RAE (7.249 × 10⁻³), indicating that its predictions were more consistent and closer to the actual ecological footprint values. The relatively low standard deviations (STDs) across all performance metrics for the CPO-ELM confirm the algorithm’s high convergence stability and reduced sensitivity to stochastic variations across folds. In contrast, the conventional ELM demonstrated the weakest performance, with a higher RMSE (5.324 × 10⁻²), a higher MSE (2.837 × 10⁻³), and the lowest R² (0.9324), suggesting that its random weight and bias initialization led to suboptimal convergence and higher predictive uncertainty. Among the other optimizers, the SCA-ELM and GWO-ELM performed relatively well, with R² values of 0.9774 and 0.9756, respectively, but their accuracy metrics remained inferior to those of the CPO-ELM. The AO-ELM and EDO-ELM variants also achieved competitive results, though their higher RMSE and MSE values indicate less efficient exploitation during training. The superior results of the CPO-ELM model in the training phase can be attributed to the pangolin’s adaptive foraging dynamics, which effectively balance exploration and exploitation to identify optimal weight–bias configurations for the ELM network.

The predictive performance of the models on the unseen validation folds reported in Table 4 further substantiates the generalization advantage of the proposed CPO-ELM model. During the held-out phase, the CPO-ELM maintained the highest R² value of 0.9793, closely followed by the SCA-ELM (0.9739) and EDO-ELM (0.9721), while the baseline ELM again recorded the lowest R² of 0.9271. This result confirms that the proposed model fits the training data effectively and generalizes well to unseen samples without overfitting. Similarly, the CPO-ELM achieved the lowest RMSE (2.890 × 10⁻²) and MSE (8.476 × 10⁻⁴) among all competing models, reflecting its superior prediction accuracy and reduced error dispersion on the validation sets. The ME and RAE values for the CPO-ELM, recorded at 8.882 × 10⁻² and 7.798 × 10⁻³, respectively, were also the smallest among all compared models, reinforcing its consistent predictive precision and minimal bias. The relatively small standard deviations across all metrics indicate stable convergence across the five folds, validating the algorithm’s reliability across different training–test partitions. In contrast, the conventional ELM model exhibited a clear degradation in generalization performance, with the highest RMSE (5.452 × 10⁻²), the highest MSE (3.004 × 10⁻³), and the lowest R² (0.9271), confirming its instability due to random initialization. Among the hybrid competitors, the SCA-ELM and GWO-ELM maintained moderately good validation accuracy, though their slightly higher errors and greater variability suggest less consistent convergence than the CPO-ELM.

To further verify the proposed CPO-ELM model’s robustness, stability, and convergence reliability, an extensive set of 20 independent experimental runs was conducted for each algorithm under identical conditions. The mean and standard deviation of each evaluation metric across the 20 runs are summarized in Table 5 (training) and Table 6 (testing). As shown in Table 5, the CPO-ELM model consistently achieved superior predictive accuracy and convergence stability across all evaluation metrics compared to the other ELM-based hybrid models and the standalone ELM. The proposed model attained the highest R² of 0.9855, indicating that it captured approximately 98.5% of the variance in the training data, outperforming the AO-ELM (0.9764), EDO-ELM (0.9739), GWO-ELM (0.9706), SCA-ELM (0.9721), and ELM (0.9335). Correspondingly, the CPO-ELM recorded the lowest RMSE and MSE values of 2.396 × 10⁻² and 5.837 × 10⁻⁴, respectively, demonstrating its superior accuracy and minimized residual error. In addition, the ME and RAE values for the CPO-ELM were the smallest among all models, indicating that its predictions closely aligned with the observed ecological footprint values. The low standard deviations across all metrics reflect consistent convergence and robustness against random initialization effects. By contrast, the standalone ELM model exhibited the poorest performance, with an R² of 0.9335 and an RMSE of 5.390 × 10⁻², reaffirming its limitation due to random parameter initialization and a lack of adaptive tuning. The AO-ELM and EDO-ELM achieved moderately strong performance among the comparative hybrid models, with R² values of 0.9764 and 0.9739, respectively, but both fell short of the precision achieved by the CPO-ELM. The GWO-ELM and SCA-ELM models also demonstrated satisfactory performance but showed higher RMSE and MSE values, suggesting weaker convergence. The results from this phase clearly indicate that the CPO-ELM model’s olfactory-guided adaptive mechanism provides a more efficient balance between exploration and exploitation, thereby identifying near-optimal weight–bias configurations for the ELM network.

The robustness of these findings was further confirmed in the test experiment, as summarized in Table 6, which yielded consistent performance trends. The CPO-ELM model maintained its dominance, achieving the highest R² of 0.9880 and the lowest RMSE and MSE values of 2.322 × 10⁻² and 5.479 × 10⁻⁴, respectively. These results emphasize that the proposed model fits the data accurately and demonstrates strong generalization capability and repeatability across multiple random trials. In terms of error measures, the CPO-ELM again outperformed all competitors, achieving the lowest ME (7.516 × 10⁻²) and RAE (6.221 × 10⁻³), indicating minimal bias and consistently reliable predictions. The low standard deviation across all metrics confirms the algorithm’s high stability and resistance to stochastic fluctuations, a key advantage of the pangolin-inspired dynamic learning mechanism. When compared to the alternative hybrid models, the AO-ELM and EDO-ELM achieved R² values of 0.9734 and 0.9760, respectively, but with higher RMSE and MSE, indicating less efficient convergence toward optimal solutions. The GWO-ELM and SCA-ELM variants also produced acceptable results but were less precise and displayed greater variability. The conventional ELM again recorded the lowest performance, with an R² of 0.9249, an RMSE of 5.334 × 10⁻², and an MSE of 2.845 × 10⁻³, confirming its inadequacy in nonlinear and high-dimensional learning tasks without optimization support. The results from both sets of independent runs conclusively demonstrate the superior reliability and performance of the CPO-ELM model. The minimal variation between the two experimental trials indicates strong reproducibility and convergence consistency, validating the model’s capacity to achieve near-optimal solutions under diverse initialization conditions. The enhanced performance of the CPO-ELM can be attributed to its dual-phase optimization strategy, in which the luring phase fine-tunes around promising solutions and the predation phase maintains sufficient exploration to avoid local minima. This adaptive, energy-driven mechanism enables the CPO-ELM to achieve a better trade-off between accuracy and stability than other metaheuristic-based ELM variants. The CPO–ELM framework can be adapted to countries with varying economic and environmental structures. Because the CPO dynamically balances exploration and exploitation, it can tune ELM parameters even under different data distributions or nonlinear relationships.

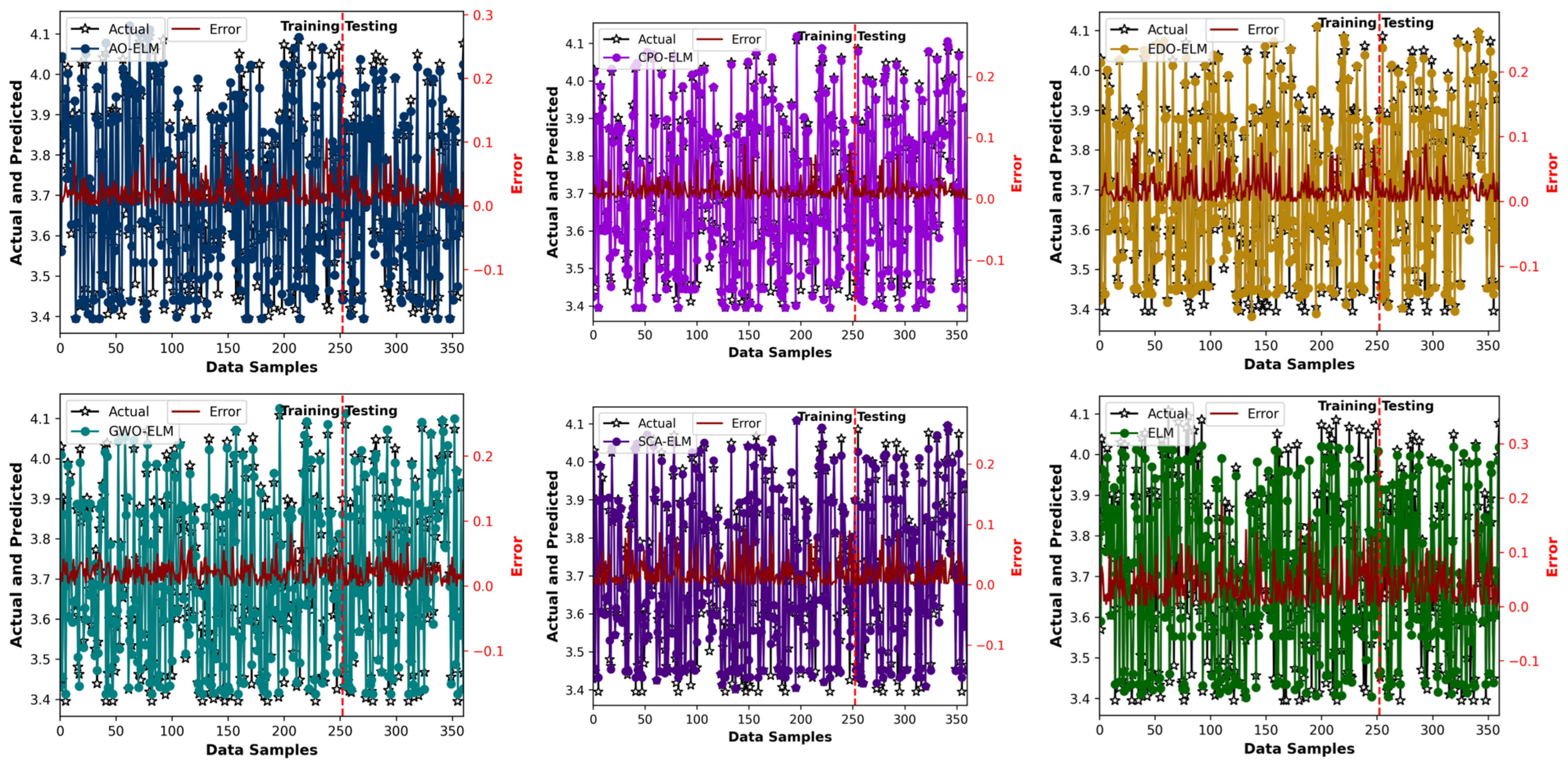

The predictive performance of the developed models was further examined through the visual comparison of the predicted versus actual ecological footprint values, as presented in Figure 4. These scatter plots illustrate the alignment between the observed and estimated outputs for both the training and testing datasets across all hybrid ELM-based models and the standalone ELM. The plotted results show that the proposed CPO–ELM model exhibits the highest degree of agreement between predicted and actual ecological footprint values, with data points clustering closely along the ideal line. This strong linear correspondence indicates that the CPO–ELM model effectively captured the underlying functional relationships among the predictors, such as total investment, human capital, globalization, financial development, and GDP per capita, and the target ecological footprint variable. The minimal deviation between predicted and actual values reflects the model’s exceptional fitting accuracy and low generalization error. By contrast, the comparative models, particularly the conventional ELM, exhibit greater dispersion of data points around the reference line, suggesting less consistent approximation and greater prediction bias. Among the other hybrid models, the SCA–ELM and GWO–ELM show moderate alignment, while the AO–ELM and EDO–ELM exhibit slightly higher variance, indicative of less stable convergence patterns. The CPO–ELM model not only demonstrates superior predictive precision but also maintains stable performance across both training and test sets, confirming its robustness and reliability in modeling complex nonlinear ecological footprint dynamics. The close alignment between actual and predicted outputs further validates the efficacy of the CPO’s adaptive search mechanism in optimizing the ELM’s parameters for improved generalization and accuracy.

The prediction error plots presented in Figure 5 provide a visual depiction of the models’ performance in approximating the ecological footprint over the full dataset. Each subplot corresponds to a distinct ELM-based model: the AO-ELM, CPO-ELM, EDO-ELM, GWO-ELM, SCA-ELM, and standalone ELM. The black star markers represent the actual ecological footprint values, while the colored lines denote the predicted outputs of each model. The red line, plotted along the secondary y-axis, indicates the absolute prediction error distribution, capturing the magnitude and variability of deviations between predicted and actual values. The vertical dashed line separates the training and testing regions, allowing for a clear visual distinction of model performance in both learning and generalization phases. Across all models, the fluctuations of the red error curves around the zero baseline indicate the presence and magnitude of residual prediction differences. However, the CPO-ELM model demonstrates the most desirable error characteristics. Its error curve remains tightly concentrated near zero across both training and testing samples, reflecting minimal bias and excellent prediction stability. The amplitude of the error fluctuations is consistently lower than that observed in the other models, signifying superior learning consistency and a more accurate approximation of the ecological footprint dynamics. The predicted line of the CPO-ELM closely follows the actual data trajectory, illustrating the optimizer’s ability to effectively tune the ELM parameters for precise mapping of complex nonlinear relationships.

In contrast, the other hybrid models (the AO–ELM, EDO–ELM, GWO–ELM, and SCA–ELM) exhibit relatively wider deviations. Although these models maintain moderate accuracy, the presence of larger oscillations and sporadic spikes indicates less consistent error control. Among them, the SCA–ELM and GWO–ELM perform comparatively better than the AO–ELM and EDO–ELM, yet both still show regions with slightly higher error amplitudes, suggesting weaker generalization in certain data intervals. The AO–ELM and EDO–ELM models display noticeable irregularities, suggesting that their search was occasionally trapped in suboptimal local solutions during training. The standalone ELM model presents the largest and most irregular error variations, especially in the testing phase. Its red error line deviates significantly from the zero baseline, which is consistent with the model’s high sensitivity to random initialization and its inability to maintain stable predictive performance without parameter optimization. The misalignment between its predicted and actual curves further highlights the standalone ELM’s lack of adaptive learning control. Overall, the plots indicate that the CPO–ELM model achieves the best predictive balance, producing the smallest and most stable error distribution across all data samples. The compact clustering of its predicted values around the actual observations and the near-zero mean of the error curve demonstrate both strong fitting and excellent generalization. This result aligns with the statistical outcomes from the cross-validation and independent-run analyses, reinforcing the conclusion that the CPO–ELM is the most robust and reliable approach for ecological footprint prediction among the tested models.
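The error curves discussed above amount to a per-sample absolute error, summarized separately on each side of the training/testing boundary. The sketch below illustrates that computation under the assumption that samples are ordered with the training portion first; `error_profile`, `split_index`, and the sample values are hypothetical names and numbers introduced only for illustration.

```python
def error_profile(actual, predicted, split_index):
    """Absolute errors plus their mean over the training and testing regions.

    split_index is the position of the first testing sample, i.e. the
    location of the vertical dashed line in an error plot.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have equal length")
    if not 0 < split_index < len(actual):
        raise ValueError("split_index must leave samples on both sides")
    errors = [abs(a - p) for a, p in zip(actual, predicted)]
    train, test = errors[:split_index], errors[split_index:]
    return {
        "errors": errors,
        "train_mae": sum(train) / len(train),
        "test_mae": sum(test) / len(test),
        "max_error": max(errors),
    }


# Hypothetical values for illustration only, not data from the study.
actual = [2.0, 2.5, 3.0, 3.5, 4.0]
predicted = [2.0, 2.0, 3.0, 4.5, 4.0]
profile = error_profile(actual, predicted, split_index=3)
print(profile["train_mae"], profile["test_mae"], profile["max_error"])
```

A testing-region mean that is much larger than the training-region mean would point to weak generalization, whereas similar values on both sides indicate stable behavior across the split.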

Figure 6 compares the average runtime of all examined models. The proposed CPO–ELM model exhibits the shortest execution time among the hybrid models, demonstrating its computational efficiency relative to the other hybrid approaches. The EDO–ELM and AO–ELM required moderate processing time, while the SCA–ELM and GWO–ELM took longer. The conventional ELM had the shortest runtime overall because it performs no adaptive parameter search. The efficiency of the CPO–ELM among the hybrids suggests that its adaptive exploration–exploitation mechanism not only enhances convergence accuracy but also reduces computational cost, making it suitable for large-scale ecological forecasting tasks.
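Average runtimes of this kind are typically obtained by timing repeated runs of each model and taking the mean. The following is a minimal sketch of such a measurement; the helper `average_runtime` and the placeholder workload `dummy_training_run` are assumptions for illustration and do not reproduce the timing protocol used in the study.

```python
import time


def average_runtime(fn, repeats=10):
    """Return the mean wall-clock time in seconds over `repeats` calls of fn()."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    elapsed = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        elapsed.append(time.perf_counter() - start)
    return sum(elapsed) / len(elapsed)


def dummy_training_run():
    # Placeholder workload; in practice this would fit one model.
    return sum(i * i for i in range(50_000))


mean_seconds = average_runtime(dummy_training_run, repeats=5)
print(f"mean runtime: {mean_seconds:.6f} s")
```

Timing every model with the same number of repeats on the same machine keeps the comparison fair, since wall-clock measurements depend on hardware and background load.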

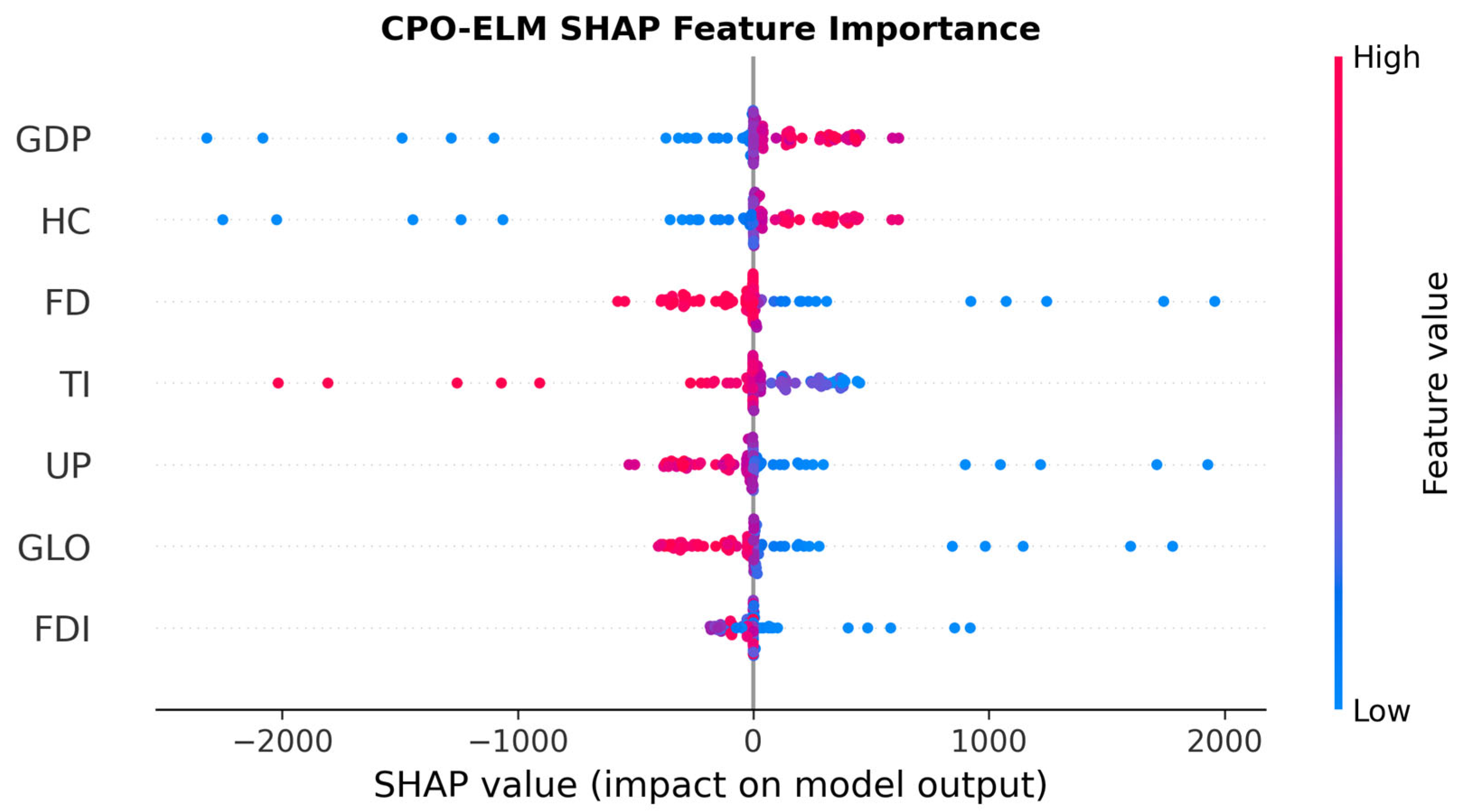

To further interpret the internal learning behavior of the proposed CPO–ELM model and to identify the most influential determinants of the ecological footprint, a SHAP analysis was conducted. The SHAP framework provides a theoretically grounded approach to quantifying the marginal contribution of each predictor variable to the model’s output, thereby enabling a transparent and interpretable assessment of feature importance across the entire prediction space. As depicted in Figure 7, the SHAP summary plot orders features vertically by their overall impact magnitude. The horizontal axis represents the SHAP value, that is, the direction and size of each feature’s influence on the predicted ecological footprint, and the color gradient indicates the underlying feature value, ranging from low (blue) to high (red). GDP demonstrates a clear trend wherein higher values (red dots) correlate with positive SHAP values, implying increased ecological pressure. Its distribution also includes substantial negative SHAP values at lower GDP levels, suggesting contextual heterogeneity. This pattern is consistent with the Environmental Kuznets Curve hypothesis, which posits that environmental degradation initially rises with income but may plateau or decline at very high levels of development, contingent on institutional quality and technological adoption. Similarly, HC exhibits mixed effects: while some high-HC observations yield positive SHAP values, others yield negative values, indicating that human capital alone does not linearly drive ecological burden. Rather, its effect appears to be moderated by other socioeconomic conditions; for instance, education may enhance environmental awareness in advanced economies but stimulate consumption-driven footprints in developing contexts.

Financial development (FD) and total investment (TI) emerge next, as the most potent mitigating forces. High FD values (red dots) are strongly associated with large negative SHAP values, indicating that robust financial systems, particularly those that facilitate green finance, sustainable lending, or equity markets aligned with environmental, social, and governance (ESG) criteria, exert a significant downward pressure on the ecological footprint. Likewise, TI shows an unequivocally negative relationship: higher investment levels consistently correspond to lower ecological impact, likely reflecting capital allocation toward energy-efficient infrastructure, renewable energy deployment, and circular-economy initiatives. These findings underscore the critical role of institutional and financial architecture in decoupling economic activity from environmental degradation. Urban population (UP) and globalization (GLO) exhibit moderate yet discernible influences. High UP values tend to correlate with positive SHAP values, reinforcing the notion that urbanization intensifies resource demand and emissions, particularly in rapidly industrializing regions lacking sustainable urban planning. GLO, while less dominant than GDP or FD, demonstrates a net positive association with ecological footprint, consistent with the literature on trade-induced production externalities, transport emissions, and pollution haven effects, whereby global supply chains relocate environmentally intensive activities to jurisdictions with weaker regulations. The interpretation of foreign direct investment (FDI) requires particular nuance; the plot suggests that high FDI (red dots) is associated with small or slightly negative SHAP values, while low FDI (blue dots) corresponds to more variable and occasionally positive impacts. This implies that FDI, when present at scale, may contribute modestly to footprint reduction, perhaps through technology transfer or adherence to international environmental standards. The effect is not uniform, however: the ecological consequences of FDI appear contingent on sectoral composition, host-country governance, and investor intent.
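The SHAP values interpreted above are Shapley attributions: each feature’s average marginal contribution over all coalitions of the remaining features. The sketch below computes exact Shapley values for a small hypothetical function so that the sign and size of each attribution can be checked by hand. It is not the model or the data from the study; `toy_model`, its coefficients, and the zero baseline are assumptions chosen for illustration, and absent features are simply set to their baseline value, which is a simplification of what SHAP estimators do in practice.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline).

    Features outside a coalition are replaced by their baseline value.
    The cost grows exponentially with the number of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                with_i = [x[j] if (j == i or j in coalition) else baseline[j]
                          for j in range(n)]
                without_i = [x[j] if j in coalition else baseline[j]
                             for j in range(n)]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def toy_model(v):
    # Hypothetical response with an interaction term; coefficients are arbitrary.
    gdp, fd, ti = v
    return 2.0 * gdp - 1.0 * fd - 0.5 * ti + 0.5 * gdp * fd


x = [1.0, 2.0, 1.0]          # hypothetical observation (GDP, FD, TI)
baseline = [0.0, 0.0, 0.0]   # hypothetical reference point
phi = shapley_values(toy_model, x, baseline)
print(phi)
```

The attributions sum to the difference between the prediction at `x` and at the baseline, and the interaction term is shared equally between the two features involved. This is why a single feature can receive positive attributions for some observations and negative ones for others, as seen for GDP and HC in the summary plot.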

Collectively, these results suggest that while macroeconomic indicators such as GDP remain central to ecological footprint dynamics, their influence is neither uniform nor deterministic. Instead, the model reveals that structural factors, including financial system maturity, investment orientation, and global integration, play decisive roles in modulating environmental outcomes. Policy implications therefore extend beyond growth management to encompass institutional design: fostering inclusive financial development, directing public and private investment toward sustainability, and embedding environmental safeguards within globalization frameworks may prove more effective than targeting GDP or human capital alone. The results align with several UN Sustainable Development Goals (SDGs). The positive association between GDP per capita and ecological footprint highlights the tension between decent work and economic growth (SDG 8) and climate action (SDG 13), suggesting that growth-oriented policies must integrate low-carbon strategies. The influence of financial development supports responsible consumption and production (SDG 12) by indicating that sustainable investment channels can mitigate environmental pressure. The significant impact of human capital highlights the crucial role of education and innovation in promoting affordable and clean energy (SDG 7) and sustainable cities and communities (SDG 11) through behavioral and technological adaptation. These relationships demonstrate how the CPO–ELM framework can help policymakers balance economic and environmental objectives in line with the UN 2030 Agenda.