From General Intelligence to Sustainable Adaptation: A Critical Review of Large-Scale AI Empowering People’s Livelihood

Abstract

1. Introduction

2. Background

2.1. The Evolution and Capabilities of LAMs

2.2. Positioning This Review: A Taxonomy of the Literature and Research Gap

- In the medical domain, LAMs power clinical decision support systems for disease diagnosis, treatment recommendation, and healthcare administration, improving accuracy and efficiency in patient care [29];

- In the agriculture domain, LAMs facilitate farming by analyzing remote sensing imagery, soil, and weather data to optimize crop yield prediction, disease detection, and personalized irrigation advice for farmers [30];

- In the education domain, personalized tutoring systems powered by LLMs offer adaptive learning pathways, automated content generation, and real-time student assessment, enabling scalable, individualized educational support [31];

- In the financial domain, LAMs enhance fraud detection, risk management, and algorithmic trading through advanced language-based analysis of transaction data and regulatory documents, thereby strengthening financial integrity and operational resilience [32];

- In the transportation domain, LAMs support dynamic traffic prediction, route optimization, and autonomous vehicle decision-making, contributing to safer and more efficient mobility [33].

2.3. Methodological Rationale: Domain Selection

- Medicine directly corresponds to SDG 3 (Good Health and Well-being), the foundation of human welfare;

- Agriculture is fundamental to SDG 2 (Zero Hunger), representing the basis of human subsistence and our relationship with the natural environment;

- Education aligns with SDG 4 (Quality Education), which is crucial for individual development and social equity;

- Finance and Transportation act as critical enablers for the broader socio-economic system, underpinning SDG 8 (Decent Work and Economic Growth), SDG 9 (Industry, Innovation and Infrastructure), and SDG 11 (Sustainable Cities and Communities).

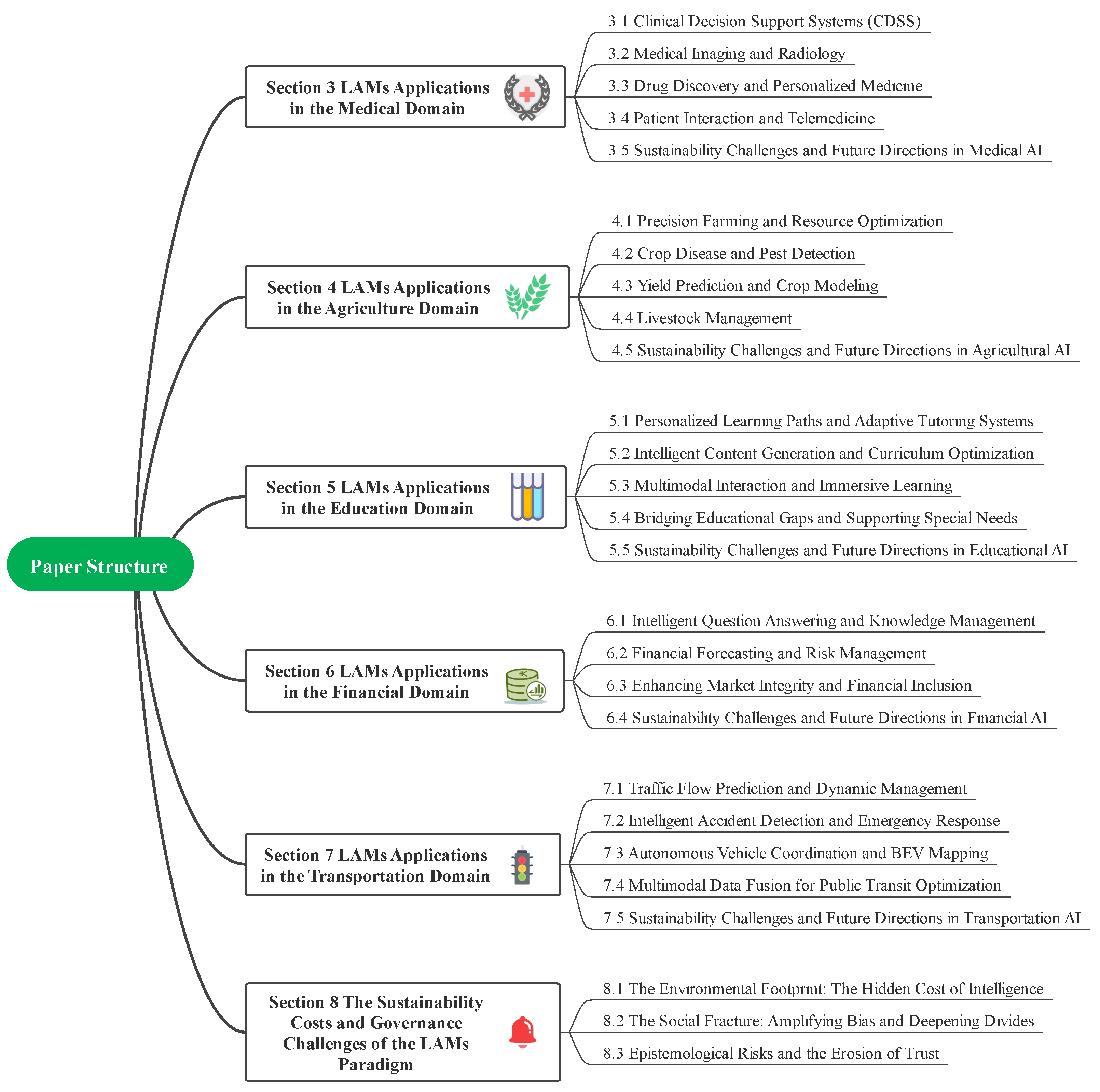

2.4. Paper Structure

3. LAM Applications in the Medical Domain

3.1. Clinical Decision Support Systems (CDSSs)

3.2. Medical Imaging and Radiology

3.3. Drug Discovery and Personalized Medicine

3.4. Patient Interaction and Telemedicine

3.5. Sustainability Challenges and Future Directions in Medical AI

- Algorithmic Fairness and Clinical Trust: Perhaps the most significant challenge is the risk of LAMs perpetuating or even amplifying existing health disparities. Models trained on biased datasets, which may underrepresent certain demographic groups, can produce clinically valid but inequitable recommendations, undermining social sustainability. Furthermore, the “black box” nature of many models poses a barrier to clinical trust and accountability. As noted in the literature [42], a model’s inability to justify its reasoning can impede its adoption in scenarios where lives are at stake. Future work must prioritize the development of interpretable, fair, and transparent AI architectures.

- Data Governance and the Equity Divide: The effectiveness of LAMs is contingent on access to vast amounts of high-quality health data. This raises profound issues of data privacy, security, and ownership. Without robust governance frameworks, there is a risk that patient data could be exploited, or that privacy breaches could erode public trust [43]. Moreover, the high cost and technical expertise required to develop and deploy medical LAMs could widen the global health equity gap, creating a world where only affluent institutions and nations benefit from these advanced tools. Federated learning (FL) offers a promising path for training powerful AI models across multiple institutions without centralizing sensitive patient data [44]. As comprehensively reviewed by Babar et al. [9], FL is a cornerstone of digital transformation in healthcare, designed to unlock the value of large, distributed datasets while upholding stringent privacy and security requirements [45]. By enabling collaborative model training directly on local hospital servers, FL can significantly mitigate the risk of data breaches and help address the “data governance” dilemma. However, the widespread adoption of FL faces its own challenges, particularly data heterogeneity. In real-world medical scenarios, data distributions vary significantly across hospitals due to diverse patient demographics, imaging equipment, and clinical protocols. In a pertinent empirical study, Babar et al. [46] demonstrated that the performance of standard FL algorithms drops significantly when trained on non-independent and identically distributed (non-IID) medical imaging data compared to idealized homogeneous data. This performance degradation underscores that while FL provides a powerful framework for privacy, future research must focus on developing more robust algorithms that can effectively handle the data heterogeneity inherent in the healthcare ecosystem. Overcoming this hurdle is key to ensuring that the benefits of large-scale collaborative AI models are realized equitably and do not inadvertently penalize institutions with unique or smaller datasets.

- Economic and Implementation Barriers: While LAMs can improve efficiency, their implementation requires significant upfront investment in computational infrastructure, data management systems, and workforce training. For many healthcare systems, especially those in low-resource settings, the total cost of ownership is prohibitively high. This creates an economic sustainability challenge, limiting widespread adoption. Therefore, future research should also focus on creating lightweight, cost-effective, and easily integrable models that can function effectively within existing clinical workflows without demanding a complete infrastructural overhaul. The ultimate goal must be the development of hybrid human–AI collaborative systems that augment, rather than replace, human expertise.
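The federated training loop described under Data Governance can be illustrated with federated averaging (FedAvg), one standard aggregation rule. The text does not say which FL algorithms Babar et al. [46] benchmarked, so the choice of FedAvg, the toy linear model, and the synthetic "hospital" datasets below are all assumptions for illustration. Each client trains on data it never shares, and each covers a different input range and sample size to mimic the non-IID heterogeneity discussed above.

```python
import random
from typing import List, Tuple

Params = Tuple[float, float]          # (weight, bias) of a toy 1-D linear model
Dataset = List[Tuple[float, float]]   # (feature, target) pairs held by one client


def local_update(params: Params, data: Dataset,
                 lr: float = 0.01, epochs: int = 5) -> Params:
    """Full-batch gradient descent on one client's private data (mean squared error)."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def fedavg_round(global_params: Params, clients: List[Dataset]) -> Params:
    """One FedAvg round: every client trains locally from the current global
    model, then the server averages the returned parameters weighted by each
    client's sample count. Only parameters are shared, never raw records."""
    total = sum(len(d) for d in clients)
    updates = [(local_update(global_params, d), len(d)) for d in clients]
    w = sum(p[0] * n for p, n in updates) / total
    b = sum(p[1] * n for p, n in updates) / total
    return w, b


def mse(params: Params, data: Dataset) -> float:
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical non-IID "hospitals": each samples a different feature range
# and holds a different amount of data drawn from y = 3x + 1 + noise.
rng = random.Random(0)


def make_client(lo: float, hi: float, n: int) -> Dataset:
    xs = [rng.uniform(lo, hi) for _ in range(n)]
    return [(x, 3.0 * x + 1.0 + rng.gauss(0, 0.1)) for x in xs]


clients = [make_client(0, 1, 200), make_client(2, 3, 100), make_client(4, 5, 20)]
pooled = [pair for c in clients for pair in c]  # pooled only to evaluate the simulation

params: Params = (0.0, 0.0)
before = mse(params, pooled)
for _ in range(50):
    params = fedavg_round(params, clients)
after = mse(params, pooled)
print(f"pooled MSE before: {before:.3f}, after 50 rounds: {after:.3f}")
```

Evaluating on pooled data is only possible in a simulation like this one; in a real deployment each institution would report its own validation metrics. Replacing the sample-weighted mean with a heterogeneity-aware aggregation rule is the kind of algorithmic change the passage calls for.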

4. LAM Applications in the Agriculture Domain

4.1. Precision Farming and Resource Optimization

4.2. Crop Disease and Pest Detection

4.3. Yield Prediction and Crop Modeling

4.4. Livestock Management

4.5. Sustainability Challenges and Future Directions in Agricultural AI

- Environmental Trade-Offs and the Digital Carbon Footprint: While precision agriculture can reduce on-farm resource use, the digital infrastructure it relies on has its own substantial environmental footprint. The manufacturing of sensors, drones, and edge devices, coupled with the immense energy consumed by data centers for training and running complex LAMs, creates a new set of environmental costs [60,61]. A true sustainability assessment must adopt a lifecycle perspective, balancing on-farm efficiencies against the carbon cost of the underlying technology.

- Socio-Economic Inequities and the Digital Divide: The high capital and knowledge requirements for implementing advanced AI systems risk creating a pronounced digital divide [62]. Large, well-capitalized agribusinesses can readily adopt these technologies, potentially consolidating their market power, while smallholder farmers, who constitute the majority of the world’s agricultural producers, may be left behind due to costs, lack of infrastructure, or insufficient technical literacy. Without deliberate policies to ensure equitable access, AI could inadvertently exacerbate rural inequality. This growing disparity is a critical sustainability issue because it undermines the social resilience of rural communities and threatens the long-term economic viability and diversity of the global food system, which depends on the participation of smallholder farmers.

- Data Governance, Farmer Autonomy, and Trust: The collection of vast amounts of farm-level data raises critical questions of ownership, privacy, and power. Who controls the data generated on a farm, and how is it used? There is a risk that this data could be exploited by large corporations, locking farmers into specific technology platforms or using their data to influence market prices. Building trust and ensuring farmer autonomy through transparent and fair data governance frameworks is therefore essential for the social sustainability and long-term adoption of these technologies.

- Resilience vs. Over-Optimization: LAMs are trained to optimize for specific outcomes based on historical data. This can lead to highly efficient but brittle systems that are vulnerable to “black swan” events such as unprecedented climate extremes, novel pests, or geopolitical shocks absent from the training data. A key future direction is to integrate causal reasoning and ecological principles into LAMs to foster genuine resilience rather than narrow optimization, ensuring agricultural systems can adapt to an increasingly uncertain future.

5. LAM Applications in the Education Domain

5.1. Personalized Learning Paths and Adaptive Tutoring Systems

5.2. Intelligent Content Generation and Curriculum Optimization

5.3. Multimodal Interaction and Immersive Learning

5.4. Bridging Educational Gaps and Supporting Special Needs

5.5. Sustainability Challenges and Future Directions in Educational AI

- The Equity Paradox: Personalization vs. Bias: While LAMs promise to foster equity through personalization, they are trained on data from an often-inequitable world. There is a substantial risk that these models will absorb and amplify existing societal biases, creating personalized learning paths that steer students from marginalized groups toward less ambitious outcomes. Without rigorous fairness audits and bias mitigation strategies, the very tool designed to close equity gaps could end up cementing them.

- The Access Dilemma: Democratization vs. Cost: The vision of democratizing education is challenged by the high computational and financial costs of deploying state-of-the-art LAMs [71]. This creates a new digital divide, where only well-funded institutions and affluent families can afford the best AI tutors. This is both an economic sustainability issue and an environmental one, as the energy footprint of large-scale educational AI cannot be ignored [76]. The development of smaller, more efficient, and open-source models is crucial for ensuring that AI becomes a great equalizer, not a great divider.

- The Pedagogical Conundrum: Efficiency vs. Critical Thinking: An over-reliance on LAM-generated content and answers risks undermining the core mission of education: to cultivate critical thinking, creativity, and problem-solving skills. If students become passive consumers of AI-generated knowledge rather than active constructors of it, we risk a decline in cognitive resilience and intellectual curiosity [65]. The future of educational AI must focus on creating systems that act as Socratic partners or “intellectual sparring partners,” challenging students to think rather than simply providing them with answers.

- The Trust-Privacy Trade-Off: Effective personalization requires the collection of vast amounts of sensitive student data, creating an inherent tension with privacy and data protection. Building frameworks that ensure student data are used ethically, transparently, and solely for educational benefit is paramount. Without establishing this trust among students, parents, and educators, the social license for deploying these powerful technologies will quickly erode.
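The fairness audits urged under the equity paradox can start from simple group-level metrics. The sketch below assumes a hypothetical tutoring system that either recommends or withholds an advanced learning path, plus a ground-truth label for whether each student was qualified. It computes the demographic parity gap (difference in recommendation rates across groups) and the equal opportunity gap (difference in recommendation rates among qualified students only). Group names and records are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (group, recommended_advanced_path, actually_qualified): hypothetical schema
Record = Tuple[str, bool, bool]


def _rate_by_group(records: List[Record], only_qualified: bool) -> Dict[str, float]:
    """Per-group share of (optionally only qualified) students who were recommended."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, recommended, qualified in records:
        if only_qualified and not qualified:
            continue
        hits[group] += int(recommended)
        totals[group] += 1
    return {g: hits[g] / totals[g] for g in totals}


def demographic_parity_gap(records: List[Record]) -> float:
    """Largest difference in recommendation rates between any two groups."""
    rates = _rate_by_group(records, only_qualified=False)
    return max(rates.values()) - min(rates.values())


def equal_opportunity_gap(records: List[Record]) -> float:
    """Largest difference in true-positive rates (recommended given qualified)."""
    rates = _rate_by_group(records, only_qualified=True)
    return max(rates.values()) - min(rates.values())


records: List[Record] = [
    ("A", True, True), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", True, True), ("B", False, True), ("B", False, False), ("B", False, False),
]
dp_gap = demographic_parity_gap(records)
eo_gap = equal_opportunity_gap(records)
print(f"demographic parity gap: {dp_gap:.2f}, equal opportunity gap: {eo_gap:.2f}")
```

In this toy data group B is recommended at one third of group A's rate, and qualified students in B are recommended half as often as those in A. Which metric should govern a given deployment is a contextual judgment, and real audits need far larger samples than this.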

6. LAM Applications in the Financial Domain

6.1. Intelligent Question Answering and Knowledge Management

6.2. Financial Forecasting and Risk Management

6.3. Enhancing Market Integrity and Financial Inclusion

6.4. Sustainability Challenges and Future Directions in Financial AI

- The Stability Paradox: Risk Mitigation vs. New Systemic Threats: While LAMs are designed to manage risk, their speed, complexity, and interconnectedness could inadvertently create new, faster-moving systemic threats. The review by Sai et al. [85] emphasizes that while Generative AI (GenAI) offers powerful tools for risk assessment and fraud detection, its “black box” nature poses a significant challenge to interpretability, which is a cornerstone of financial regulation and trust. This opacity can create new forms of systemic risk in which flawed model-driven decisions propagate silently across the financial system. An AI-driven “flash crash,” triggered by algorithmic misinterpretation of news or coordinated bot activity, could destabilize markets in minutes. The homogeneity of popular foundation models used across the industry could also create single points of failure, where a flaw in one model leads to cascading errors across the system, threatening economic sustainability.

- The Equity Dilemma: Unbiased Decisions vs. Algorithmic Redlining: LAMs are often touted as a solution to human bias in lending and credit scoring. However, if these models are trained on historical data that reflect past discriminatory practices, they may learn and even amplify those biases. Sai et al. [85] reinforce this concern, noting that bias in training data is a primary challenge for GenAI in finance, potentially leading to discriminatory outcomes in areas like automated loan approval or insurance underwriting. The result can be “algorithmic redlining,” where certain demographic groups are systematically denied financial services, not because of individual risk, but because of correlations present in the data. This poses a grave threat to social sustainability and risks using technology to create a new, more opaque form of discrimination. Addressing these biases requires not only technical solutions but also robust data governance and ethical oversight frameworks to ensure fairness.

- The Confidentiality–Performance Trade-Off: The financial industry is built on sensitive data, creating an acute tension between the need for data to train powerful models and the imperative to protect confidentiality. Centralizing data for training poses significant security risks and raises privacy concerns [86]. Solutions such as federated learning offer a path forward, but any breach of financial data can still erode trust on a massive scale. Furthermore, “hallucinations” or errors in LAM outputs could lead to disastrous financial advice or non-compliant actions, making model robustness a critical concern [87].

- The Arms Race and the Environmental Burden: The quest for a competitive edge, particularly in algorithmic trading, has sparked a computational arms race [88,89]. This results in immense and growing energy consumption to power the data centers that train increasingly complex models and execute trades in microseconds. This issue of high resource demand is a recurring theme in recent literature [85], yet the direct environmental impact remains a frequently ignored externality of the financial AI boom, posing a challenge to the industry’s broader environmental, social, and governance (ESG) commitments. Innovative solutions are nonetheless emerging to mitigate these costs. For example, the hybrid framework proposed by Jehnen et al. [80] demonstrates a resource-efficient approach: by using an efficient sentence-transformer for the bulk of the workload and selectively engaging a more powerful retrieval-augmented generation (RAG) LLM only for ambiguous cases (less than 10% of the data), they create a system that is both scalable and capable. This highlights a promising future direction: designing hybrid, cost-effective models that balance performance with computational and, by extension, environmental sustainability. The development of more energy-efficient models and of benchmarks such as FinBen is a crucial step toward a more sustainable financial AI ecosystem [84].
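The hybrid design attributed to Jehnen et al. [80] is an instance of a model cascade: a cheap model handles confident cases and only the remainder reach an expensive one. The passage does not describe their routing rule, so the sketch below assumes a confidence threshold on the cheap model's top-class probability. The functions `toy_cheap` and `toy_expensive` and the sentiment-style labels are hypothetical stand-ins for a sentence-transformer classifier and a RAG-LLM pipeline, not their actual task or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Probs = Dict[str, float]


@dataclass
class CascadeResult:
    labels: List[str]
    escalated: int  # number of inputs routed to the expensive model


def cascade(texts: List[str],
            cheap: Callable[[str], Probs],
            expensive: Callable[[str], str],
            threshold: float = 0.8) -> CascadeResult:
    """Accept the cheap model's label when its top-class probability clears
    `threshold`; otherwise escalate the input to the expensive model."""
    labels: List[str] = []
    escalated = 0
    for text in texts:
        label, confidence = max(cheap(text).items(), key=lambda kv: kv[1])
        if confidence >= threshold:
            labels.append(label)
        else:
            labels.append(expensive(text))
            escalated += 1
    return CascadeResult(labels, escalated)


# Hypothetical stand-ins: a real system would call an embedding classifier
# (cheap) and a retrieval-augmented LLM pipeline (expensive).
def toy_cheap(text: str) -> Probs:
    t = text.lower()
    if "profit" in t:
        return {"positive": 0.95, "negative": 0.05}
    if "loss" in t:
        return {"positive": 0.10, "negative": 0.90}
    return {"positive": 0.55, "negative": 0.45}  # low confidence, so it escalates


def toy_expensive(text: str) -> str:
    return "negative" if "downgrade" in text.lower() else "positive"


texts = ["Record profit this quarter", "Unexpected loss on bonds",
         "Analyst issues downgrade", "Guidance unchanged"]
result = cascade(texts, toy_cheap, toy_expensive)
print(result.labels, f"escalated {result.escalated}/{len(texts)}")
```

Raising `threshold` sends more inputs to the expensive model (higher cost, potentially higher accuracy); lowering it does the opposite. Tuning it on validation data is one way a system could target an escalation share like the under-10% figure reported for the hybrid framework.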

7. LAM Applications in the Transportation Domain

7.1. Traffic Flow Prediction and Dynamic Management

7.2. Intelligent Accident Detection and Emergency Response

7.3. Autonomous Vehicle Coordination and BEV Mapping

7.4. Multimodal Data Fusion for Public Transit Optimization

7.5. Sustainability Challenges and Future Directions in Transportation AI

- The Efficiency Paradox: Reduced Emissions vs. Increased Energy Demand: While AI-optimized traffic flow and AVs can reduce the fuel consumption of individual vehicles, the digital infrastructure required to run them is incredibly energy-intensive [96]. The constant data transmission, processing in large data centers, and the manufacturing of sensors and edge devices create a new, and often invisible, carbon footprint. Future research must focus on developing lightweight, energy-efficient “green AI” for transportation to ensure that we are solving the emissions problem, not simply displacing it.

- The Equity Divide: Smart Cities vs. Underserved Communities: The benefits of AI-driven transportation may not be distributed evenly. There is a significant risk that advanced technologies like AVs, smart signaling, and on-demand transit will be deployed primarily in affluent urban centers, while rural and low-income communities are left with deteriorating traditional infrastructure [100,101]. This could exacerbate existing mobility inequalities, creating a two-tiered system and undermining the goal of social sustainability.

- The ‘Black Box’ Dilemma: Safety vs. Accountability: In safety-critical applications like autonomous driving or emergency response, the “black box” nature of some LAMs poses a major accountability challenge. When an AI system makes a life-or-death decision, who is responsible? The lack of clear interpretability and accountability frameworks impedes regulatory approval and public trust, creating a significant barrier to the deployment of technologies that could otherwise save lives. This accountability vacuum is a fundamental sustainability issue because a socio-technical system that cannot ensure responsibility, learn from failures, or maintain public trust is inherently unstable and cannot be sustained over the long term.

- The Data Privacy vs. System Optimization Trade-Off: A fully optimized city-wide traffic network requires access to vast amounts of granular, real-time data from vehicles and personal devices. This creates an inherent conflict with individual privacy. Building robust, privacy-preserving data-sharing frameworks (e.g., using federated learning) is essential [97,102]. Without them, the public’s trust may be eroded, creating a social backlash that could halt the progress toward a more efficient and sustainable transportation system.

8. The Sustainability Costs and Governance Challenges of the LAM Paradigm

8.1. The Environmental Footprint: The Hidden Cost of Intelligence

- Energy Consumption and Carbon Emissions: The training of a single state-of-the-art foundation model requires a colossal amount of computational power, consuming electricity on the scale of a small city over several months. This results in a carbon footprint equivalent to hundreds of transatlantic flights [103]. Critically, this energy cost is not a one-time expenditure; the subsequent fine-tuning and the billions of daily queries (inference) globally create a continuous and growing energy demand, placing immense strain on power grids [104].

- Water Usage: Data centers, the physical homes of LAMs, are incredibly water-intensive. Billions of gallons of fresh water are used annually for cooling the massive server farms that power the AI boom [105]. This places significant stress on local ecosystems, particularly as many data centers are located in regions already facing water scarcity.

- Hardware Lifecycle and E-Waste: The relentless pursuit of greater computational power drives a rapid hardware upgrade cycle for specialized processors (GPUs/TPUs). The mining of rare-earth minerals required for this hardware is often environmentally destructive and fraught with ethical labor issues. Furthermore, the short lifespan of these components contributes to a growing global crisis of electronic waste (e-waste), one of the most toxic forms of refuse. A true sustainability assessment must therefore consider the entire lifecycle, from mine to landfill.
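The operational part of the footprint described above is commonly estimated with simple arithmetic: IT energy = accelerators × average power × hours; facility energy = IT energy × PUE (power usage effectiveness); emissions = facility energy × grid carbon intensity; and on-site cooling water = IT energy × WUE (water usage effectiveness). The sketch below assumes these standard definitions and uses placeholder inputs that do not describe any real model; embodied emissions from mining, manufacturing, and disposal are outside its scope.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Workload:
    """Hypothetical inputs for an operational (not embodied) footprint estimate."""
    accelerators: int            # number of GPUs/TPUs in use
    avg_power_kw: float          # average draw per accelerator, kW
    hours: float                 # wall-clock duration
    pue: float                   # power usage effectiveness = facility energy / IT energy
    grid_kg_co2_per_kwh: float   # carbon intensity of the local grid
    wue_l_per_kwh: float         # water usage effectiveness, litres per kWh of IT energy


def operational_footprint(w: Workload) -> Dict[str, float]:
    it_kwh = w.accelerators * w.avg_power_kw * w.hours
    facility_kwh = it_kwh * w.pue  # adds cooling and power-delivery overhead
    return {
        "it_kwh": it_kwh,
        "facility_kwh": facility_kwh,
        "co2_tonnes": facility_kwh * w.grid_kg_co2_per_kwh / 1000,
        "water_m3": it_kwh * w.wue_l_per_kwh / 1000,  # on-site cooling water only
    }


# Illustrative placeholder values, not measurements of any real model.
run = Workload(accelerators=1000, avg_power_kw=0.4, hours=720,
               pue=1.2, grid_kg_co2_per_kwh=0.4, wue_l_per_kwh=1.8)
est = operational_footprint(run)
print({k: round(v, 2) for k, v in est.items()})
```

The same arithmetic can be applied to a serving fleet over a year to capture the continuous inference demand noted above; the mining, manufacturing, and e-waste stages require a separate lifecycle inventory.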

8.2. The Social Fracture: Amplifying Bias and Deepening Divides

- Systemic Amplification of Bias: Models trained on internet-scale text and images inevitably absorb the historical and societal biases embedded within that data [103]. As demonstrated in our domain reviews, this can lead to inequitable health recommendations for certain demographics, discriminatory “algorithmic redlining” in finance, or the reinforcement of stereotypes in educational content. Unlike individual human bias, algorithmic bias operates at an unprecedented scale and speed, systemically disadvantaging entire communities under a false veneer of technological objectivity.

- The Global Digital Divide: The immense cost and technical expertise required to develop and deploy cutting-edge LAMs are creating a stark global divide. This leads to a “Matthew effect”, where affluent nations and large corporations reap the benefits of AI-driven productivity gains, while low-resource communities are left further behind. This threatens to create a world of AI “haves” and “have-nots”, exacerbating global inequalities rather than alleviating them [106].

- Concentration of Power: The astronomical costs of training foundation models have created a market where only a handful of trillion-dollar technology corporations can compete. This has led to an unprecedented concentration of power, stifling competition and creating a high dependency on proprietary, closed-source models. This quasi-monopolistic structure threatens to dictate the future of digital innovation and raises serious anti-trust concerns [106].

8.3. Epistemological Risks and the Erosion of Trust

- The ‘Black Box’ and Accountability: As highlighted across all high-stakes domains, the lack of true interpretability in many LAMs creates a critical accountability vacuum. In medicine, finance, or autonomous transport, an inability to understand why a model made a specific decision makes it nearly impossible to assign responsibility when things go wrong, eroding clinical and public trust.

- “Hallucinations” and Information Pollution: LAMs’ tendency to generate confident-sounding but entirely fabricated information (“hallucinations”) poses a direct threat to our information ecosystem [107]. When deployed at scale, this can pollute public discourse with sophisticated misinformation, making it increasingly difficult for citizens to distinguish fact from fiction.

- Cognitive Offloading and De-skilling: An over-reliance on LAMs for cognitive tasks—from writing emails to generating code and creating educational content—risks a long-term “de-skilling” of the human population. As explored in the education section, if AI systems consistently provide answers rather than foster inquiry, they may undermine the development of critical thinking, creativity, and problem-solving skills, posing a risk to our long-term cognitive resilience.

9. Conclusions and Recommendations for a Sustainable AI Future

- Quantifying the AI Lifecycle and Developing “Green AI” Benchmarks: Future work must move beyond fragmented estimations of AI’s environmental impact. A critical research direction is the development of a standardized Lifecycle Assessment (LCA) framework to quantify the complete environmental footprint of LAMs, from hardware manufacturing and data center construction to model training, inference, and disposal. In parallel, the research community should establish and promote “Green AI” benchmarks that elevate metrics like energy efficiency (e.g., performance-per-watt) and computational cost to be as critical as traditional accuracy scores, fostering innovation in resource-efficient algorithms such as model compression and quantization.

- Cross-Domain Metrics and Interventions for Algorithmic Fairness and Social Inclusion: Addressing algorithmic bias requires more than generic fairness metrics. Future research should focus on creating context-aware fairness frameworks tailored to specific livelihood domains, accounting for unique domain vulnerabilities (e.g., equitable access for rare disease patients in medicine). This includes advancing causal reasoning in LAMs to prevent decisions based on spurious correlations in historical data and exploring robust, privacy-preserving technologies like federated learning to empower low-resource communities and bridge the digital divide.

- Longitudinal Studies on Human–AI Cognitive Partnership and Resilience: The long-term societal impact of LAMs on human cognition remains largely unexplored. A crucial, forward-looking research agenda involves conducting longitudinal studies to assess the effects of sustained LAM use on critical thinking, problem-solving skills, and creativity in fields like education and science. The focus should shift from designing AI that merely provides answers to creating “intelligence-augmenting” AI that fosters inquiry and acts as an intellectual sparring partner. This also entails developing meaningful Explainable AI (XAI) to help users properly calibrate their trust in AI systems.
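Of the resource-efficient techniques named in the Green AI recommendation, quantization is the simplest to illustrate. Below is a minimal sketch of symmetric, per-tensor post-training quantization to 8-bit integers on a plain list of weights: each weight is stored as an integer q in [-127, 127] with one shared scale, so storage drops from 32 to 8 bits per weight while the rounding error stays within half a quantization step. Production toolkits use per-channel scales, calibration data, and integer kernels; the weight values here are arbitrary.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp into the signed 8-bit range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate floating-point weights."""
    return [scale * v for v in q]


weights = [0.82, -1.27, 0.003, 0.5, -0.25]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.5f}")
```

Measuring accuracy and energy use before and after such a change is the kind of comparison a performance-per-watt benchmark would standardize.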

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kalyuzhnaya, A.; Mityagin, S.; Lutsenko, E.; Getmanov, A.; Aksenkin, Y.; Fatkhiev, K.; Fedorin, K.; Nikitin, N.O.; Chichkova, N.; Vorona, V.; et al. LLM Agents for Smart City Management: Enhancing Decision Support Through Multi-Agent AI Systems. Smart Cities 2025, 8, 19.

- Wu, S.; Chen, N.; Xiao, A.; Jia, H.; Jiang, C.; Zhang, P. AI-Enabled Deployment Automation for 6G Space-Air-Ground Integrated Networks: Challenges, Design, and Outlook. IEEE Netw. 2024, 38, 219–226.

- Qiu, J.; Li, L.; Sun, J.; Peng, J.; Shi, P.; Zhang, R.; Dong, Y.; Lam, K.; Lo, F.P.W.; Xiao, B.; et al. Large AI Models in Health Informatics: Applications, Challenges, and the Future. IEEE J. Biomed. Health Inform. 2023, 27, 6074–6087.

- Wu, S.; Chen, N.; Xiao, A.; Zhang, P.; Jiang, C.; Zhang, W. AI-Empowered Virtual Network Embedding: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2025, 27, 1395–1426.

- Tan, L.; Aldweesh, A.; Chen, N.; Wang, J.; Zhang, J.; Zhang, Y.; Kostromitin, K.I.; Zhang, P. Energy Efficient Resource Allocation Based on Virtual Network Embedding for IoT Data Generation. Autom. Softw. Eng. 2024, 31, 66.

- Farrell, H.; Gopnik, A.; Shalizi, C.; Evans, J. Large AI Models are Cultural and Social Technologies. Science 2025, 387, 1153–1156.

- Raza, M.; Jahangir, Z.; Riaz, M.B.; Saeed, M.J.; Sattar, M.A. Industrial Applications of Large Language Models. Sci. Rep. 2025, 15, 13755.

- Chen, N.; Shen, S.; Duan, Y.; Huang, S.; Zhang, W.; Tan, L. Non-Euclidean Graph-Convolution Virtual Network Embedding for Space–Air–Ground Integrated Networks. Drones 2023, 7, 165.

- Babar, M.; Qureshi, B.; Koubaa, A. Review on Federated Learning for Digital Transformation in Healthcare through Big Data Analytics. Future Gener. Comput. Syst. 2024, 160, 14–28.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 10 September 2025).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Rosoł, M.; Gąsior, J.S.; Łaba, J.; Korzeniewski, K.; Młyńczak, M. Evaluation of the Performance of GPT-3.5 and GPT-4 on the Polish Medical Final Examination. Sci. Rep. 2023, 13, 20512.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling Language Modeling with Pathways. J. Mach. Learn. Res. 2023, 24, 1–113.

- Anil, R.; Dai, A.M.; Firat, O.; Johnson, M.; Lepikhin, D.; Passos, A.; Shakeri, S.; Taropa, E.; Bailey, P.; Chen, Z.; et al. PaLM 2 Technical Report. arXiv 2023, arXiv:2305.10403.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- Le Scao, T.; Fan, A.; Akiki, C.; Pavlick, E.; Ilić, S.; Hesslow, D.; Castagné, R.; Luccioni, A.S.; Yvon, F.; Gallé, M.; et al. BLOOM: A 176B-Parameter Open-Access Multilingual Language Model. arXiv 2023, arXiv:2211.05100.

- Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. DeepSeek-V3 Technical Report. arXiv 2024, arXiv:2412.19437.

- Kocoń, J.; Cichecki, I.; Kaszyca, O.; Kochanek, M.; Szydło, D.; Baran, J.; Bielaniewicz, J.; Gruza, M.; Janz, A.; Kanclerz, K.; et al. ChatGPT: Jack of All Trades, Master of None. Inf. Fusion 2023, 99, 101861.

- Gemini Team; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A Family of Highly Capable Multimodal Models. arXiv 2023, arXiv:2312.11805.

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A Survey on Evaluation of Large Language Models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45.

- Zhang, P.; Chen, N.; Shen, S.; Yu, S.; Kumar, N.; Hsu, C.H. AI-Enabled Space-Air-Ground Integrated Networks: Management and Optimization. IEEE Netw. 2024, 38, 186–192.

- Patil, R.; Gudivada, V. A Review of Current Trends, Techniques, and Challenges in Large Language Models (LLMs). Appl. Sci. 2024, 14, 2074.

- Shen, Y.; Shao, J.; Zhang, X.; Lin, Z.; Pan, H.; Li, D.; Zhang, J.; Letaief, K.B. Large Language Models Empowered Autonomous Edge AI for Connected Intelligence. IEEE Commun. Mag. 2024, 62, 140–146.

- Wu, S.; Chen, N.; Wen, G.; Xu, L.; Zhang, P.; Zhu, H. Virtual Network Embedding for Task Offloading in IIoT: A DRL-Assisted Federated Learning Scheme. IEEE Trans. Ind. Inform. 2024, 20, 6814–6824.

- Vrdoljak, J.; Boban, Z.; Vilović, M.; Kumrić, M.; Božić, J. A Review of Large Language Models in Medical Education, Clinical Decision Support, and Healthcare Administration. Healthcare 2025, 13, 603.

- Tzachor, A.; Devare, M.; Richards, C.; Pypers, P.; Ghosh, A.; Koo, J.; Johal, S.; King, B. Large Language Models and Agricultural Extension Services. Nat. Food 2023, 4, 941–948.

- Chen, E.; Lee, J.E.; Lin, J.; Koedinger, K. GPTutor: Great Personalized Tutor with Large Language Models for Personalized Learning Content Generation. In Proceedings of the Eleventh ACM Conference on Learning@ Scale, Atlanta, GA, USA, 18–20 July 2024; pp. 539–541. [Google Scholar]

- Huang, K.; Chen, X.; Yang, Y.; Ponnapalli, J.; Huang, G. ChatGPT in Finance and Banking. In Beyond AI: ChatGPT, Web3, and the Business Landscape of Tomorrow; Springer: Cham, Switzerland, 2023; pp. 187–218. [Google Scholar]

- Wandelt, S.; Zheng, C.; Wang, S.; Liu, Y.; Sun, X. Large Language Models for Intelligent Transportation: A Review of the State of the Art and Challenges. Appl. Sci. 2024, 14, 7455. [Google Scholar] [CrossRef]

- Duan, Y.; Chen, N.; Bashir, A.K.; Alshehri, M.D.; Liu, L.; Zhang, P.; Yu, K. A Web Knowledge-Driven Multimodal Retrieval Method in Computational Social Systems: Unsupervised and Robust Graph Convolutional Hashing. IEEE Trans. Comput. Soc. Syst. 2024, 11, 3146–3156.

- Ijiga, A.C.; Peace, A.; Idoko, I.P.; Agbo, D.O.; Harry, K.D.; Ezebuka, C.I.; Ukatu, I. Ethical Considerations in Implementing Generative AI for Healthcare Supply Chain Optimization: A Cross-Country Analysis Across India, the United Kingdom, and the United States of America. Int. J. Biol. Pharm. Sci. Arch. 2024, 7, 048–063.

- Shool, S.; Adimi, S.; Saboori Amleshi, R.; Bitaraf, E.; Golpira, R.; Tara, M. A Systematic Review of Large Language Model (LLM) Evaluations in Clinical Medicine. BMC Med. Inform. Decis. Mak. 2025, 25, 117.

- Busch, F.; Hoffmann, L.; Rueger, C.; van Dijk, E.H.; Kader, R.; Ortiz-Prado, E.; Makowski, M.R.; Saba, L.; Hadamitzky, M.; Kather, J.N.; et al. Current Applications and Challenges in Large Language Models for Patient Care: A Systematic Review. Commun. Med. 2025, 5, 26.

- Park, A. How AI Is Changing Medical Imaging to Improve Patient Care; Time USA LLC: New York, NY, USA, 2022.

- Nazi, Z.A.; Peng, W. Large Language Models in Healthcare and Medical Domain: A Review. Informatics 2024, 11, 57.

- Lin, C.; Kuo, C.F. Roles and Potential of Large Language Models in Healthcare: A Comprehensive Review. Biomed. J. 2025, 48, 100868.

- Meskó, B.; Topol, E.J. The Imperative for Regulatory Oversight of Large Language Models (or Generative AI) in Healthcare. npj Digit. Med. 2023, 6, 120.

- Hager, P.; Jungmann, F.; Holland, R.; Bhagat, K.; Hubrecht, I.; Knauer, M.; Vielhauer, J.; Makowski, M.; Braren, R.; Kaissis, G.; et al. Evaluation and Mitigation of the Limitations of Large Language Models in Clinical Decision-Making. Nat. Med. 2024, 30, 2613–2622.

- Li, J.; Zhou, Z.; Lyu, H.; Wang, Z. Large Language Models-Powered Clinical Decision Support: Enhancing or Replacing Human Expertise? Intell. Med. 2025, 5, 1–4.

- Zhang, P.; Chen, N.; Li, S.; Choo, K.K.R.; Jiang, C.; Wu, S. Multi-Domain Virtual Network Embedding Algorithm Based on Horizontal Federated Learning. IEEE Trans. Inf. Forensics Secur. 2023, 18, 3363–3375.

- Zhang, P.; Li, Y.; Kumar, N.; Chen, N.; Hsu, C.H.; Barnawi, A. Distributed Deep Reinforcement Learning Assisted Resource Allocation Algorithm for Space-Air-Ground Integrated Networks. IEEE Trans. Netw. Serv. Manag. 2023, 20, 3348–3358.

- Babar, M.; Qureshi, B.; Koubaa, A. Investigating the Impact of Data Heterogeneity on the Performance of Federated Learning Algorithm Using Medical Imaging. PLoS ONE 2024, 19, e0302539.

- Sun, H.; Li, W.; Guo, X.; Wu, Z.; Mao, Z.; Feng, J. How Does Digital Inclusive Finance Affect Agricultural Green Development? Evidence from Thirty Provinces in China. Sustainability 2025, 17, 1449.

- Sai, S.; Kumar, S.; Gaur, A.; Goyal, S.; Chamola, V.; Hussain, A. Unleashing the Power of Generative AI in Agriculture 4.0 for Smart and Sustainable Farming. Cogn. Comput. 2025, 17, 63.

- Park, J.j.; Choi, S.j. LLMs for Enhanced Agricultural Meteorological Recommendations. arXiv 2024, arXiv:2408.04640.

- Zhao, X.; Chen, B.; Ji, M.; Wang, X.; Yan, Y.; Zhang, J.; Liu, S.; Ye, M.; Lv, C. Implementation of Large Language Models and Agricultural Knowledge Graphs for Efficient Plant Disease Detection. Agriculture 2024, 14, 1359.

- Duan, Y.; Chen, N.; Zhang, P.; Kumar, N.; Chang, L.; Wen, W. MS2GAH: Multi-Label Semantic Supervised Graph Attention Hashing for Robust Cross-Modal Retrieval. Pattern Recognit. 2022, 128, 108676.

- Kumar, S.S.; Khan, A.K.M.A.; Banday, I.A.; Gada, M.; Shanbhag, V.V. Overcoming LLM Challenges Using RAG-Driven Precision in Coffee Leaf Disease Remediation. In Proceedings of the 2024 International Conference on Emerging Technologies in Computer Science for Interdisciplinary Applications (ICETCS), Bengaluru, India, 22–23 April 2024; pp. 1–6.

- Fernandes, I.K.; Vieira, C.C.; Dias, K.O.; Fernandes, S.B. Using Machine Learning to Combine Genetic and Environmental Data for Maize Grain Yield Predictions Across Multi-Environment Trials. Theor. Appl. Genet. 2024, 137, 189.

- Eckhardt, R.; Arablouei, R.; Ingham, A.; McCosker, K.; Bernhardt, H. Livestock Behaviour Forecasting via Generative Artificial Intelligence. Smart Agric. Technol. 2025, 11, 100987.

- Qiao, Y.; Kong, H.; Clark, C.; Lomax, S.; Su, D.; Eiffert, S.; Sukkarieh, S. Intelligent Perception-Based Cattle Lameness Detection and Behaviour Recognition: A Review. Animals 2021, 11, 3033.

- Cockburn, M. Application and Prospective Discussion of Machine Learning for the Management of Dairy Farms. Animals 2020, 10, 1690.

- De Clercq, D.; Nehring, E.; Mayne, H.; Mahdi, A. Large Language Models Can Help Boost Food Production, but Be Mindful of Their Risks. Front. Artif. Intell. 2024, 7, 1326153.

- Li, J.; Xu, M.; Xiang, L.; Chen, D.; Zhuang, W.; Yin, X.; Li, Z. Foundation Models in Smart Agriculture: Basics, Opportunities, and Challenges. Comput. Electron. Agric. 2024, 222, 109032.

- Duan, Y.; Chen, N.; Chang, L.; Ni, Y.; Kumar, S.S.; Zhang, P. CAPSO: Chaos Adaptive Particle Swarm Optimization Algorithm. IEEE Access 2022, 10, 29393–29405.

- Zhang, X.; Liang, J.; Chen, N.; Xiang, L.; Tan, L.; Zhang, P. Internet of Vehicles Task Offloading Based on Edge Computing Using Double Deep Q-Network. Clust. Comput. 2025, 28, 785.

- Zhang, P.; Chen, N.; Kumar, N.; Abualigah, L.; Guizani, M.; Duan, Y.; Wang, J.; Wu, S. Energy Allocation for Vehicle-to-Grid Settings: A Low-Cost Proposal Combining DRL and VNE. IEEE Trans. Sustain. Comput. 2023, 9, 75–87.

- Chen, N.; Zhang, P.; Kumar, N.; Hsu, C.H.; Abualigah, L.; Zhu, H. Spectral Graph Theory-Based Virtual Network Embedding for Vehicular Fog Computing: A Deep Reinforcement Learning Architecture. Knowl.-Based Syst. 2022, 257, 109931.

- Sakamoto, M.; Tan, S.; Clivaz, S. Social, Cultural and Political Perspectives of Generative AI in Teacher Education: Lesson Planning in Japanese Teacher Education. In Exploring New Horizons: Generative Artificial Intelligence and Teacher Education; Association for the Advancement of Computing in Education: Waynesville, NC, USA, 2024; p. 178.

- Luo, Y.; Yang, Y. Large Language Model and Domain-Specific Model Collaboration for Smart Education. Front. Inf. Technol. Electron. Eng. 2024, 25, 333–341.

- Tong, R.J.; Hu, X. Future of Education with Neuro-Symbolic AI Agents in Self-Improving Adaptive Instructional Systems. Front. Digit. Educ. 2024, 1, 198–212.

- Delsoz, M.; Hassan, A.; Nabavi, A.; Rahdar, A.; Fowler, B.; Kerr, N.C.; Ditta, L.C.; Hoehn, M.E.; DeAngelis, M.M.; Grzybowski, A.; et al. Large Language Models: Pioneering New Educational Frontiers in Childhood Myopia. Ophthalmol. Ther. 2025, 14, 1281–1295.

- Sajja, R.; Sermet, Y.; Cikmaz, M.; Cwiertny, D.; Demir, I. Artificial Intelligence-Enabled Intelligent Assistant for Personalized and Adaptive Learning in Higher Education. Information 2024, 15, 596.

- Chen, C.; Gao, S.; Pei, H.; Chen, N.; Shi, L.; Zhang, P. Novel Surveillance View: A Novel Benchmark and View-Optimized Framework for Pedestrian Detection from UAV Perspectives. Sensors 2025, 25, 772.

- Lucas, H.C.; Upperman, J.S.; Robinson, J.R. A Systematic Review of Large Language Models and Their Implications in Medical Education. Med. Educ. 2024, 58, 1276–1285.

- Shahzad, T.; Mazhar, T.; Tariq, M.U.; Ahmad, W.; Ouahada, K.; Hamam, H. A Comprehensive Review of Large Language Models: Issues and Solutions in Learning Environments. Discov. Sustain. 2025, 6, 27.

- Stogiannidis, I.; Vassos, S.; Malakasiotis, P.; Androutsopoulos, I. Cache Me If You Can: An Online Cost-Aware Teacher-Student Framework to Reduce the Calls to Large Language Models. arXiv 2023, arXiv:2310.13395.

- Chen, L.; Zaharia, M.; Zou, J. FrugalGPT: How to Use Large Language Models While Reducing Cost and Improving Performance. arXiv 2023, arXiv:2305.05176.

- Koubaa, A.; Qureshi, B.; Ammar, A.; Khan, Z.; Boulila, W.; Ghouti, L. Humans Are Still Better Than ChatGPT: Case of the IEEEXtreme Competition. Heliyon 2023, 9, e21624.

- Garcia, M.B.; Revano, T.F.; Maaliw, R.R.; Lagrazon, P.G.G.; Valderama, A.M.C.; Happonen, A.; Qureshi, B.; Yilmaz, R. Exploring Student Preference between AI-Powered ChatGPT and Human-Curated Stack Overflow in Resolving Programming Problems and Queries. In Proceedings of the 2023 IEEE 15th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Coron, Palawan, Philippines, 19–23 November 2023; pp. 1–6.

- Baidoo-Anu, D.; Ansah, L.O. Education in the Era of Generative Artificial Intelligence (AI): Understanding the Potential Benefits of ChatGPT in Promoting Teaching and Learning. J. AI 2023, 7, 52–62.

- Wang, L.; Zheng, Z.; Chen, N.; Chi, Y.; Liu, Y.; Zhu, H.; Zhang, P.; Kumar, N. Multi-Target-Aware Energy Orchestration Modeling for Grid 2.0: A Network Virtualization Approach. IEEE Access 2023, 11, 21699–21711.

- Wakunuma, K.; Eke, D. Africa, ChatGPT, and Generative AI Systems: Ethical Benefits, Concerns, and the Need for Governance. Philosophies 2024, 9, 80.

- Nie, Y.; Kong, Y.; Dong, X.; Mulvey, J.M.; Poor, H.V.; Wen, Q.; Zohren, S. A Survey of Large Language Models for Financial Applications: Progress, Prospects and Challenges. arXiv 2024, arXiv:2406.11903.

- Konstantinidis, T.; Iacovides, G.; Xu, M.; Constantinides, T.G.; Mandic, D. FinLlama: Financial Sentiment Classification for Algorithmic Trading Applications. arXiv 2024, arXiv:2403.12285.

- Jehnen, S.; Ordieres-Meré, J.; Villalba-Díez, J. FinTextSim-LLM: Improving Corporate Performance Prediction Through Scalable, LLM-Enhanced Financial Topic Modeling and Aspect-Based Sentiment. 2025. Available online: https://ssrn.com/abstract=5365002 (accessed on 10 September 2025).

- Huang, J.; Xiao, M.; Li, D.; Jiang, Z.; Yang, Y.; Zhang, Y.; Qian, L.; Wang, Y.; Peng, X.; Ren, Y.; et al. Open-FinLLMs: Open Multimodal Large Language Models for Financial Applications. arXiv 2024, arXiv:2408.11878.

- Joshi, R.; Pandey, K.; Kumari, S. Generative AI: A Transformative Tool for Mitigating Risks for Financial Frauds. In Generative Artificial Intelligence in Finance: Large Language Models, Interfaces, and Industry Use Cases to Transform Accounting and Finance Processes; Scrivener Publishing LLC: Beverly, MA, USA, 2025; pp. 125–147.

- Fuad, M.M.; Akuthota, V.; Rahman, A.; Farea, S.A.; Rahman, M.; Chowdhury, A.E.; Anwar, A.S.; Ashraf, M.S. Financial Voucher Analysis with LVMs and Financial LLMs. In Proceedings of the 2025 International Conference on Computing Technologies (ICOCT), Bengaluru, India, 13–14 June 2025; pp. 1–6.

- Xie, Q.; Han, W.; Chen, Z.; Xiang, R.; Zhang, X.; He, Y.; Xiao, M.; Li, D.; Dai, Y.; Feng, D.; et al. FinBen: A Holistic Financial Benchmark for Large Language Models. Adv. Neural Inf. Process. Syst. 2024, 37, 95716–95743.

- Sai, S.; Arunakar, K.; Chamola, V.; Hussain, A.; Bisht, P.; Kumar, S. Generative AI for Finance: Applications, Case Studies and Challenges. Expert Syst. 2025, 42, e70018.

- Li, J.; Bian, Y.; Wang, G.; Lei, Y.; Cheng, D.; Ding, Z.; Jiang, C. CFGPT: Chinese Financial Assistant with Large Language Model. arXiv 2023, arXiv:2309.10654.

- Lee, J.; Stevens, N.; Han, S.C. Large Language Models in Finance (FinLLMs). Neural Comput. Appl. 2025, 37, 24853–24867.

- Li, X.; Li, C.; Dai, W.; Kostromitin, K.I.; Chen, S.; Chen, N. Multi-Objective Collaborative Resource Allocation for Cloud-Edge Networks: A VNE Approach. Trans. Emerg. Telecommun. Technol. 2025, 36, e70197.

- Zhan, K.; Chen, N.; Santhosh Kumar, S.V.N.; Kibalya, G.; Zhang, P.; Zhang, H. Edge Computing Network Resource Allocation Based on Virtual Network Embedding. Int. J. Commun. Syst. 2025, 38, e5344.

- Mangione, F.; Barbuto, V.; Savaglio, C.; Fortino, G. A Generative AI-Driven Architecture for Intelligent Transportation Systems. In Proceedings of the 2024 IEEE 10th World Forum on Internet of Things (WF-IoT), Ottawa, ON, Canada, 10–13 November 2024; pp. 1–6.

- Duan, Y.; Chen, N.; Shen, S.; Zhang, P.; Qu, Y.; Yu, S. FDSA-STG: Fully Dynamic Self-Attention Spatio-Temporal Graph Networks for Intelligent Traffic Flow Prediction. IEEE Trans. Veh. Technol. 2022, 71, 9250–9260.

- Liu, C.; Hettige, K.H.; Xu, Q.; Long, C.; Xiang, S.; Cong, G.; Li, Z.; Zhao, R. ST-LLM+: Graph Enhanced Spatio-Temporal Large Language Models for Traffic Prediction. IEEE Trans. Knowl. Data Eng. 2025, 37, 4846–4859.

- Wang, C.; Zuo, K.; Zhang, S.; Lei, H.; Hu, P.; Shen, Z.; Wang, R.; Zhao, P. PFNet: Large-Scale Traffic Forecasting With Progressive Spatio-Temporal Fusion. IEEE Trans. Intell. Transp. Syst. 2023, 24, 14580–14597.

- Zhang, C.; Zhang, Y.; Shao, Q.; Li, B.; Lv, Y.; Piao, X.; Yin, B. ChatTraffic: Text-to-Traffic Generation via Diffusion Model. IEEE Trans. Intell. Transp. Syst. 2025, 26, 2656–2668.

- Zhang, C.; Zhang, Y.; Shao, Q.; Feng, J.; Li, B.; Lv, Y.; Piao, X.; Yin, B. BjTT: A Large-Scale Multimodal Dataset for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2024, 25, 18992–19003.

- Wei, Y.; Zeng, Z.; Zhong, Y.; Kang, J.; Liu, R.W.; Hossain, M.S. Bi-LSTM Based Multi-Agent DRL with Computation-Aware Pruning for Agent Twins Migration in Vehicular Embodied AI Networks. arXiv 2025, arXiv:2505.06378.

- Gan, L.; Chu, W.; Li, G.; Tang, X.; Li, K. Large Models for Intelligent Transportation Systems and Autonomous Vehicles: A Survey. Adv. Eng. Inform. 2024, 62, 102786.

- Wang, P.; Wei, X.; Hu, F.; Han, W. TransGPT: Multi-Modal Generative Pre-Trained Transformer for Transportation. In Proceedings of the 2024 International Conference on Computational Linguistics and Natural Language Processing (CLNLP), Yinchuan, China, 19–21 July 2024; pp. 96–100.

- Zhou, S.; Han, Y.; Chen, N.; Huang, S.; Igorevich, K.K.; Luo, J.; Zhang, P. Transformer-Based Discriminative and Strong Representation Deep Hashing for Cross-Modal Retrieval. IEEE Access 2023, 11, 140041–140055.

- Babar, M.; Qureshi, B. A New Distributed Approach to Leveraging AI for Sustainable Healthcare in Smart Cities. In Proceedings of the 2nd International Conference on Sustainability: Developments and Innovations, Riyadh, Saudi Arabia, 18–22 February 2024; Springer: Singapore, 2024; pp. 256–263.

- Mishra, P.; Singh, G. Smart Healthcare in Sustainable Smart Cities. In Sustainable Smart Cities: Enabling Technologies, Energy Trends and Potential Applications; Springer: Cham, Switzerland, 2023; pp. 195–219.

- Zhang, P.; Chen, N.; Xu, G.; Kumar, N.; Barnawi, A.; Guizani, M.; Duan, Y.; Yu, K. Multi-Target-Aware Dynamic Resource Scheduling for Cloud-Fog-Edge Multi-Tier Computing Network. IEEE Trans. Intell. Transp. Syst. 2024, 25, 3885–3897.

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, New York, NY, USA, 3–10 March 2021; pp. 610–623.

- Patterson, D.; Gonzalez, J.; Le, Q.; Liang, C.; Munguia, L.M.; Rothchild, D.; So, D.; Texier, M.; Dean, J. Carbon Emissions and Large Neural Network Training. arXiv 2021, arXiv:2104.10350.

- Garcia, M. Navigating Regulation of AI Data Centers’ Water Footprint Post-Watershed Loper Bright Decision. 13 December 2024. Available online: https://ssrn.com/abstract=5064473 (accessed on 10 September 2025).

- Cupać, J.; Schopmans, H.; Tuncer-Ebetürk, İ. Democratization in the Age of Artificial Intelligence: Introduction to the Special Issue. Democratization 2024, 31, 899–921.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.J.; Madotto, A.; Fung, P. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 1–38.

| Domain | Reference | Application Task | Key Contribution |
|---|---|---|---|
| Medical | [9] | Privacy-Preserving Data Analysis | Demonstrates the critical role of federated learning (FL) in enabling collaborative analysis of large-scale healthcare data while preserving patient privacy, addressing a core data governance challenge. |
| | [46] | FL under Data Heterogeneity | Empirically shows that real-world data heterogeneity significantly degrades the performance of FL algorithms, highlighting a key sustainability challenge in achieving equitable and robust models across diverse healthcare institutions. |
| Agriculture | [50] | Plant Disease Detection | Integrates LLMs with agricultural knowledge graphs to improve the efficiency and accuracy of plant disease detection, showcasing a data-driven approach to sustainable pest management. |
| | [52] | Coffee Leaf Disease Remediation | Employs RAG to overcome LLM limitations, achieving high precision in diagnosing crop diseases and providing context-aware treatment advice, thereby reducing false negatives. |
| Education | [73] | Competitive Programming Education | Reveals that human programmers still significantly outperform ChatGPT in complex programming tasks, providing a critical perspective on the limitations of current LAMs in high-level reasoning and creative problem-solving. |
| | [74] | Student Learning Preferences | Explores student choices between ChatGPT and human-curated platforms (Stack Overflow), concluding that a symbiotic “human–AI partnership” is the most effective model for learning complex skills. |
| Finance | [80] | Corporate Performance Prediction | Introduces a hybrid, resource-efficient framework that combines specialized transformers with a RAG-LLM, significantly improving prediction accuracy while addressing the high computational costs associated with large-scale financial analysis. |
| | [83] | Automated Voucher Analysis | Presents a multimodal framework using LVMs and financial LLMs to automate auditing tasks, demonstrating that domain-specific models enhance market integrity by significantly reducing errors in compliance checks. |
| Transportation | [33] | Intelligent Transportation Systems | Provides a comprehensive review of LAMs in transportation, covering applications from traffic flow prediction to autonomous vehicle coordination and highlighting challenges related to data privacy and system optimization. |
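The two medical entries in the table ([9], [46]) both rest on federated learning (FL), in which institutions train a shared model without pooling raw records. As a minimal sketch of that idea, the example below implements generic federated averaging (FedAvg): each client runs a few gradient steps on its own data and shares only model parameters, which the server averages weighted by local sample count. This is an illustrative assumption, not the method used in [9] or [46]; the three "hospital" clients, the one-dimensional linear model, the synthetic data drawn from y = 3x + 1, and all hyperparameters (`lr`, `epochs`, `rounds`) are hypothetical choices.

```python
"""Minimal FedAvg sketch (hypothetical setup, illustration only).

Three hypothetical clients ("hospitals") each hold private (x, y) pairs for a
1-D linear model y = w*x + b. Only model parameters leave each client.
"""
import random
from typing import List, Tuple

Dataset = List[Tuple[float, float]]
Params = Tuple[float, float]  # (w, b)


def local_train(params: Params, data: Dataset, lr: float = 0.05, epochs: int = 5) -> Params:
    """Full-batch gradient descent on mean squared error, run on the client."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2.0 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2.0 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def fed_avg(client_params: List[Params], client_sizes: List[int]) -> Params:
    """Server step: average client parameters weighted by local sample count."""
    total = sum(client_sizes)
    w = sum(p[0] * n for p, n in zip(client_params, client_sizes)) / total
    b = sum(p[1] * n for p, n in zip(client_params, client_sizes)) / total
    return w, b


def run_rounds(clients: List[Dataset], rounds: int = 200) -> Params:
    """Alternate local training and server averaging for a fixed number of rounds."""
    global_params: Params = (0.0, 0.0)
    sizes = [len(d) for d in clients]
    for _ in range(rounds):
        # Every client starts from the current global model; raw data stays local.
        updates = [local_train(global_params, d) for d in clients]
        global_params = fed_avg(updates, sizes)
    return global_params


def make_client(n: int, x_range: Tuple[float, float], seed: int) -> Dataset:
    """Synthetic samples of y = 3x + 1 plus Gaussian noise; a different x_range
    per client mimics heterogeneous (non-IID) input distributions."""
    rng = random.Random(seed)
    lo, hi = x_range
    data = []
    for _ in range(n):
        x = rng.uniform(lo, hi)
        data.append((x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.05)))
    return data


if __name__ == "__main__":
    clients = [
        make_client(200, (0.0, 1.0), seed=1),
        make_client(50, (1.0, 2.0), seed=2),
        make_client(20, (2.0, 3.0), seed=3),
    ]
    w, b = run_rounds(clients)
    print(f"global model: w={w:.2f}, b={b:.2f}")
```

Because every synthetic client here follows the same underlying relationship, the averaged model should end up close to w = 3 and b = 1 even though the clients differ in size and input range. The degradation reported in [46] arises when client data genuinely diverge, which this toy setup deliberately does not reproduce; giving one client a different slope is a simple way to see a single averaged model pulled between conflicting local optima.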

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Zhang, P. From General Intelligence to Sustainable Adaptation: A Critical Review of Large-Scale AI Empowering People’s Livelihood. Sustainability 2025, 17, 9051. https://doi.org/10.3390/su17209051

Li J, Zhang P. From General Intelligence to Sustainable Adaptation: A Critical Review of Large-Scale AI Empowering People’s Livelihood. Sustainability. 2025; 17(20):9051. https://doi.org/10.3390/su17209051

Chicago/Turabian Style: Li, Jiayi, and Peiying Zhang. 2025. "From General Intelligence to Sustainable Adaptation: A Critical Review of Large-Scale AI Empowering People’s Livelihood" Sustainability 17, no. 20: 9051. https://doi.org/10.3390/su17209051

APA Style: Li, J., & Zhang, P. (2025). From General Intelligence to Sustainable Adaptation: A Critical Review of Large-Scale AI Empowering People’s Livelihood. Sustainability, 17(20), 9051. https://doi.org/10.3390/su17209051