1. Introduction

Waste generation has steadily increased with population growth and urbanization. In the United States, for example, municipal solid waste rose from 88.1 million tons in 1960 to 292.4 million tons in 2018. Projections indicate that global waste production could increase by 70% to reach 3.4 billion tons by 2050, posing significant challenges for municipal solid waste management [1,2]. Effective and precise waste classification is a key component of sustainable waste management, with direct benefits for the environment, resource conservation, and the implementation of circular economy principles [1,3]. Conventional waste-sorting methods, which rely heavily on manual labor, suffer from inefficiencies, high operational costs, and limited scalability [4,5]. As waste streams become more diverse and complex, there is an urgent need for intelligent, automated solutions that can operate effectively in dynamic real-world conditions [6,7,8].

Convolutional neural networks (CNNs), a class of deep learning models, have proven highly effective for image-based waste classification. CNNs automatically learn hierarchical visual patterns, eliminating the need for manual feature extraction and improving accuracy across waste categories such as recyclable, organic, hazardous, and general waste [9,10]. Existing research demonstrates the feasibility of CNNs and transfer learning approaches on public datasets, with promising classification results [11].

Recent advancements have further enhanced the efficiency of these models. Approaches like transfer learning for knowledge reuse, model pruning for computational efficiency, and lightweight architectures for mobile deployment have been widely adopted [12,13]. Hybrid models combining multiple pre-trained CNNs have shown improved robustness [14], while architectural innovations, including novel activation functions and attention mechanisms, have led to better feature learning and performance optimization [15,16,17].

Despite these advances, significant challenges remain. CNN-based models often struggle to generalize across heterogeneous environments due to issues such as class imbalance, inter-class visual similarity, and variability in lighting and backgrounds [18,19]. These limitations reduce model effectiveness in real-world applications, particularly in urban settings where waste streams are highly inconsistent and complex [5,8]. Addressing these challenges is vital for the practical deployment of intelligent waste classification systems in smart cities and environmental monitoring infrastructure [2,20]. With increasing emphasis on sustainable development and real-time decision-making, there is a strong need for solutions that are not only accurate but also scalable, lightweight, and robust to environmental variations [21,22].

To address these limitations, this paper investigates how deep learning can be applied effectively to waste classification. The proposed approach involves systematic benchmarking of eleven state-of-the-art CNN architectures, the development of two optimized lightweight models for edge deployment, and the design of a hybrid multi-stream ensemble model that integrates features from multiple pre-trained networks. To support real-world applicability, the training pipeline incorporates adaptive learning strategies, class rebalancing techniques, and interpretability mechanisms.

The key contributions of this research are outlined below:

Development and Optimization of CNN Architectures: A custom baseline CNN was first developed to assess the feasibility of the approach, followed by extensive benchmarking of eleven pre-trained architectures, including ResNet50, EfficientNet variants, DenseNet, MobileNetV3, VGG16, InceptionV3, and Xception. Transfer learning and layer fine-tuning significantly improved model convergence and classification accuracy across waste categories.

Design of Lightweight Custom Models: Two novel lightweight models—EcoMobileNet and EcoDenseNet—were specifically designed for deployment on mobile and embedded systems. These architectures leverage the backbones of MobileNetV3 and DenseNet201, enhanced with attention modules such as Squeeze-and-Excitation (SE) and the Convolutional Block Attention Module (CBAM). They also integrate advanced data augmentation techniques and loss optimization strategies for improved generalization and performance.

Hybrid and Ensemble Modeling: A robust hybrid model was constructed by fusing intermediate features extracted from ResNet50, EfficientNetV2-M, and DenseNet201, using a weighted averaging scheme to balance the contributions of each network. Furthermore, ensemble methods, including soft voting, hard voting, and stacking, were explored to enhance classification stability and overall accuracy.

Optimized Training and Interpretability Framework: The training pipeline was refined using strategies such as class weighting, mixed precision training, early stopping, and adaptive learning rate scheduling. To improve model transparency, Grad-CAM visualizations were employed to highlight class-discriminative regions in input images, facilitating interpretability and trust in model decisions.

The remainder of this paper is structured as follows:

Section 2 reviews existing deep learning methods for waste classification, discussing current models, challenges, and research gaps.

Section 3 discusses the methodology, including dataset details, preprocessing, model architectures, training strategies, and evaluation metrics.

Section 4 presents the experimental results, comparing different CNN models, analyzing ensemble learning effects, and discussing insights from custom architectures.

Section 5 concludes the study with key findings and future directions for improving waste classification using deep learning.

2. Related Works

Effective waste classification is an important part of sustainable waste management and environmental protection. With growing urbanization and increasing volumes of municipal solid waste, automated and intelligent waste-sorting systems have gained importance. Tanveer et al. [1] highlighted the role of AI-driven garbage classification within the circular economy, emphasizing the integration of deep learning and green technologies to enhance recycling efficiency. A wide range of studies has explored deep learning models for waste classification. Gupta et al. [4] used InceptionNet to attain 98.15% accuracy, and Hasan et al. [5] proposed a fusion of DenseNet169, InceptionNet-v4, and MobileNet, achieving 97.0% accuracy. Meng and Chu [9] employed ResNet50 for image-based classification and achieved 95.35% accuracy. Similarly, Poojary et al. [6] deployed CNNs in a robotic classification setting and achieved 97.0% accuracy, while Wang et al. [12] developed a pruned CNN that achieved 90.88% accuracy but lacked fine-tuning. Cao and Xiang [11] applied InceptionV3 with transfer learning, attaining 93.20%, whereas Bhattacharya et al. [7] achieved 95.0% accuracy with a CNN that struggled with feature extraction in complex scenarios. Otero et al. [8] adopted traditional machine learning with bag-of-features (BoF) and pixel features, but generalization was poor due to the small Kaggle dataset. Joshi et al. [10] achieved 92.5%, but consistency issues limited their model's practical applicability.

To improve model performance, newer architectures and hybrid models have been introduced. The multilayer hybrid CNN (MLHCNN) by Kaya et al. [15] achieved 92.6% accuracy using dual pre-trained extractors and cross-validation. DenseNet169 combined with hyperparameter tuning achieved 96.42% accuracy [19]. Haque et al. [23] introduced a hybrid CNN with Mish activation. Wang et al. [2] extended TrashNet to nine categories, reporting 94.26% accuracy with MobileNetV3. Yang et al. [13] enhanced MLH-CNN with preprocessing and achieved 93.72% accuracy. Feng et al. [16] embedded an ECA module into EfficientNet, boosting accuracy to 94.23%. Alrayes et al. [17] proposed a hybrid ViT-CNN model achieving 95.8%, suggesting that transformer-based methods can surpass CNNs, although latency was not evaluated. Singh et al. [24] compared multiple pre-trained models, with ResNeXt-50 achieving 98.02%, underscoring the value of transfer learning. Yang et al. [25] introduced GarbageNet, an incremental learning framework that addresses class expansion, noisy data, and limited samples in real-world garbage classification tasks.

Recent advancements have focused on scalable and efficient hybrid solutions. Aldossary et al. [14] evaluated MobileNetV2, Xception, EfficientNetB7, ResNet152V2, and DenseNet201, finding MobileNetV2 (96% accuracy) to be the most suitable for real-time use. Malik et al. [3] applied EfficientNet-B3 to classify 34 waste types, achieving 74–84% accuracy. Adedeji and Wang [26] used EfficientNet-B0/B3 on the Gary Thung and Mindy Yang dataset, reaching 80–85% accuracy. Azhar et al. [27] proposed a ResNet50–MobileNet hybrid model for smart bins, achieving 97.90% accuracy on simple datasets and 73.49% on complex ones. Yuan and Liu [28] introduced a two-stream hybrid model with 98.5% accuracy on TrashNet.

For model interpretability, Grad-CAM [29] has become a widely used technique for visualizing the image regions that contribute most to a prediction [30]. Its gradient-based localization helps validate model decisions and identify potential biases, making it particularly valuable in sensitive real-world applications. Hossen et al. [22] presented RWC-Net, combining DenseNet201 and MobileNetV2, which achieved 95.01% accuracy and strong F1 scores, with Score-CAM visualizations. Zhao et al. [31] proposed a practical system using an improved MobileNetV3-Large model integrated with a Raspberry Pi and a WeChat applet, achieving 81% image classification accuracy and 97.61% text classification accuracy.
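The gradient-based localization behind Grad-CAM can be sketched in a few lines. The version below is a minimal, framework-free illustration that assumes the activation maps of a chosen convolutional layer, and the gradients of the target class score with respect to them, have already been extracted (how they are captured depends on the framework and is not shown). The helper name `grad_cam` is hypothetical.

```python
from typing import List

FeatureMaps = List[List[List[float]]]  # indexed as [channel][row][col]


def grad_cam(activations: FeatureMaps, gradients: FeatureMaps) -> List[List[float]]:
    """Grad-CAM heat map from one layer's activations and class-score gradients."""
    channels = len(activations)
    height = len(activations[0])
    width = len(activations[0][0])
    # Channel weights alpha_k: global-average-pool the gradients over space.
    alphas = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(channels)
    ]
    # Weighted sum of the activation maps.
    cam = [[0.0] * width for _ in range(height)]
    for k in range(channels):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alphas[k] * activations[k][i][j]
    # ReLU keeps only evidence that increases the class score.
    cam = [[max(0.0, v) for v in row] for row in cam]
    # Normalize to [0, 1] so the map can be overlaid on the input image.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```

Each channel's weight is the spatial mean of its gradient, so channels whose activations raise the class score contribute positively, and the final ReLU discards regions that argue against the class.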

Although prior studies demonstrate the promise of deep learning for automated waste sorting, several systematic gaps remain. Most reported results are based on relatively small or simplified datasets, such as TrashNet or other 6-class collections, which limits generalization to real-world waste streams that are far more diverse. Few works analyze efficiency in terms of model size, inference speed, or memory usage, leaving edge-device feasibility largely untested. Hybrid and ensemble approaches have been attempted, but they often use naive fusion rules without systematic weighting or optimization, and robustness testing across diverse conditions is limited. In addition, techniques such as class weighting, advanced augmentation, and mixed-precision training, which are important for handling class imbalance and improving deployment efficiency, are rarely combined in previous studies. These gaps underscore the need for approaches that jointly target high accuracy, computational efficiency, and generalization.

To address these challenges, the present study proposes a feature-weighted hybrid deep learning architecture that integrates ResNet50, EfficientNetV2-M, and DenseNet201 for accurate and robust waste classification. In addition, we introduce two lightweight custom models, EcoMobileNet and EcoDenseNet, tailored for mobile devices and edge environments. The training methodology incorporates class weighting, PolyFocal loss, and advanced augmentation to combat overfitting and class imbalance. Using mixed-precision training and multi-GPU acceleration, the proposed framework is designed for scalability and efficiency, aiming to bridge the gap between performance and deployment feasibility in real-world intelligent waste management.

3. Methodology

The overall framework of the proposed waste classification system is illustrated in Figure 1. The pipeline begins with data acquisition and preprocessing, where raw images are cleaned, resized, normalized, and augmented to improve model generalization and handle class imbalance. The dataset is then stratified and split into training, validation, and testing subsets. During model selection, multiple deep learning architectures are explored, including transfer learning models, lightweight eco-friendly variants, and a hybrid ensemble model. The training phase incorporates optimization strategies, regularization, fine-tuning, and class weights to mitigate imbalance. Finally, model performance is evaluated using accuracy, precision, recall, F1-score, and confusion matrices, along with interpretability tools such as Grad-CAM [30] to ensure transparency. This systematic, modular approach targets both accuracy and efficiency, making the system suitable for practical deployment.
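The evaluation metrics listed above can all be derived from a confusion matrix. The sketch below assumes rows index true classes and columns index predicted classes, and it uses macro averaging, which weights every waste class equally regardless of its size (an assumption, since the averaging scheme is not specified here). `confusion_metrics` is a hypothetical helper.

```python
from typing import Dict, List


def confusion_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n_classes))
    precisions: List[float] = []
    recalls: List[float] = []
    f1s: List[float] = []
    for k in range(n_classes):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n_classes))  # column sum
        actual_k = sum(cm[k])  # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "macro_precision": sum(precisions) / n_classes,
        "macro_recall": sum(recalls) / n_classes,
        "macro_f1": sum(f1s) / n_classes,
    }


# Toy two-class confusion matrix (not data from this study).
report = confusion_metrics([[8, 2], [1, 9]])
```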

3.1. Dataset

For this study, we used a dataset of 4691 high-quality JPEG images, all resized to 224 × 224 pixels and converted to RGB format to ensure consistency across deep learning pipelines. The dataset combines two sources. The primary component is the trash type image dataset by Farzad Nekouei (Kaggle) [32], which contains 2527 labeled images in six common waste categories. To improve the generalization capabilities of the model and the representation of underrepresented waste types, an additional 2164 images were compiled into a supplementary dataset titled Extended Waste Dataset [33], curated by the authors and publicly released on Kaggle.

These additional images introduced four new categories (battery, biological, clothes, and shoes), bringing the total number of classes to ten. All images were stored in class-wise directories to facilitate label extraction during data loading. The extended dataset is licensed under the MIT license and is freely accessible for reproducibility and further research. An overview of the dataset attributes is provided in Table 1, and the distribution of images across the ten categories is shown in Table 2.

While larger publicly available datasets exist, such as HGI-30 (18k images, 30 classes) [34], waste classification datasets (15k–25k images, 10–12 classes), and garbage classification datasets (15k images), we focused on constructing the Extended Garbage dataset to ensure domain relevance, balanced representation across categories, and high-quality labeling. To mitigate the overfitting risk posed by the relatively small dataset, we employed extensive augmentation, class weighting, and advanced regularization. In addition, we validated our models on the external TrashNet dataset [4,7] to assess generalizability across different data distributions. Future work will extend our approach to larger benchmark datasets for broader applicability. To provide a qualitative sense of the dataset's visual diversity and complexity, Figure 2 displays sample images from each class. This dataset serves both as a benchmark for evaluating deep learning-based classification models and as a foundation for scalable waste-sorting solutions, supporting intelligent waste management systems within the broader framework of sustainable environmental technologies.

3.2. Data Preprocessing

A uniform preprocessing pipeline was applied to the dataset to maintain consistency across all deep learning models. The image data were first cleaned by removing corrupted, duplicate, and grayscale images. All files were confirmed to be in JPEG format and converted to RGB to maintain uniform color representation. The images were then resized to a fixed resolution of 224 × 224 pixels, compatible with most convolutional neural network (CNN) architectures. To preserve class proportions, a stratified split was performed, dividing the dataset into 80% training, 10% validation, and 10% testing subsets so that each class is proportionally represented in every split.
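A stratified 80/10/10 split like the one described can be sketched as follows. Per-class shuffling with a fixed seed, and rounding each class's training and validation shares (with the remainder assigned to the test subset), are assumptions made for illustration; `stratified_split` is a hypothetical helper rather than the authors' code.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(
    labels: Sequence[str],
    fractions: Tuple[float, float, float] = (0.8, 0.1, 0.1),
    seed: int = 42,  # hypothetical seed for reproducibility
) -> Dict[str, List[int]]:
    """Return train/val/test index lists that preserve each class's proportion."""
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    splits: Dict[str, List[int]] = {"train": [], "val": [], "test": []}
    for indices in by_class.values():
        rng.shuffle(indices)  # shuffle within the class only
        n_train = round(len(indices) * fractions[0])
        n_val = round(len(indices) * fractions[1])
        splits["train"].extend(indices[:n_train])
        splits["val"].extend(indices[n_train:n_train + n_val])
        # The remainder (roughly fractions[2] of the class) goes to test.
        splits["test"].extend(indices[n_train + n_val:])
    return splits
```

In practice, scikit-learn's `train_test_split` with its `stratify` argument, applied twice (train versus rest, then validation versus test), achieves the same effect.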

To promote better generalization and address class imbalance, a comprehensive data augmentation approach was applied to the training set, including horizontal and vertical flipping, rotation, shifting, zooming, shearing, brightness adjustment, and channel intensity variation. These augmentations introduced realistic variability without compromising label accuracy, improving model performance and reducing the likelihood of overfitting. The importance of data augmentation for model robustness and generalization in visual classification tasks has also been highlighted in recent studies, such as that by Javanmardi et al. [35]. Additionally, normalization was performed to standardize pixel value distributions across the dataset: each RGB channel was standardized by subtracting its mean and dividing by its standard deviation, yielding a distribution with zero mean and unit standard deviation. This step improved compatibility with pre-trained CNN architectures and supported more stable and efficient training.
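The per-channel standardization described above amounts to computing a mean and a standard deviation for each RGB channel and applying (x − μ_c) / σ_c. The sketch below assumes the statistics are computed on the training split only, so that no information from validation or test images leaks into preprocessing. Images are represented as flat lists of (R, G, B) tuples purely for illustration, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)
Image = List[Pixel]  # flattened H * W pixels


def channel_stats(images: Sequence[Image]) -> Tuple[List[float], List[float]]:
    """Per-channel mean and population standard deviation over every pixel."""
    pixels = [p for img in images for p in img]
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    stds = [math.sqrt(sum((p[c] - means[c]) ** 2 for p in pixels) / n) for c in range(3)]
    return means, stds


def standardize(image: Image, means: Sequence[float], stds: Sequence[float],
                eps: float = 1e-8) -> Image:
    """Map each channel to zero mean and unit variance: (x - mean_c) / std_c."""
    return [tuple((p[c] - means[c]) / (stds[c] + eps) for c in range(3)) for p in image]
```

When fine-tuning ImageNet-pre-trained backbones, many pipelines instead reuse the ImageNet channel statistics so that inputs match what the pre-trained filters expect; which choice the study used is not stated beyond the description above.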

3.3. Model Development

3.3.1. Custom CNN Model

As an initial step in model development, a custom convolutional neural network (CNN) was designed and trained from scratch to establish a baseline for evaluating the feasibility of deep learning for waste classification. No transfer learning was used, so that the model's learning capability could be examined solely from the available labeled data. The architecture, shown in Figure 3, consists of four convolutional layers with increasing numbers of filters (16, 32, 64, and 128), each followed by max-pooling to reduce spatial dimensions and extract salient features. These are followed by a dense layer of 512 units with ReLU activation and a dropout layer (rate 0.5) to minimize overfitting. The model concludes with a softmax layer that estimates the probability distribution across the ten waste classes.
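Because the kernel size, padding, and the transition from the last pooling layer to the dense layer are not stated, the following worked calculation assumes 3 × 3 kernels with 'same' padding, 2 × 2 max-pooling with stride 2, and a Flatten layer before the dense layer. Under those assumptions it traces the feature-map size and parameter count of the baseline described above; `baseline_cnn_params` is a hypothetical helper.

```python
from typing import List, Tuple


def baseline_cnn_params(
    input_size: int = 224,
    in_channels: int = 3,
    filters: Tuple[int, ...] = (16, 32, 64, 128),
    kernel: int = 3,  # ASSUMPTION: 3x3 kernels with 'same' padding
    dense_units: int = 512,
    n_classes: int = 10,
) -> Tuple[List[Tuple[str, int]], int]:
    """Return (per-layer parameter counts, flattened feature size)."""
    layers: List[Tuple[str, int]] = []
    size, channels = input_size, in_channels
    for f in filters:
        # Conv weights (k * k * C_in * C_out) plus one bias per filter.
        layers.append((f"conv_{f}", kernel * kernel * channels * f + f))
        channels = f
        size //= 2  # ASSUMPTION: 2x2 max-pooling with stride 2 halves H and W
    flat = size * size * channels  # ASSUMPTION: Flatten before the dense layer
    layers.append((f"dense_{dense_units}", flat * dense_units + dense_units))
    layers.append(("softmax_out", dense_units * n_classes + n_classes))  # dropout has no weights
    return layers, flat


layers, flat = baseline_cnn_params()
total = sum(count for _, count in layers)
```

Under these assumptions the feature map shrinks from 224 to 14 pixels per side, the flattened vector has 25,088 entries, and the 512-unit dense layer holds more than 99% of the roughly 12.9 million parameters. This suggests why backbones that pool globally before the classifier are far more parameter-efficient.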

All images were resized to 224 × 224 pixels and normalized to the [0, 1] range. To improve generalization, extensive data augmentation was applied during training, including random rotations, shifts, zooming, shearing, horizontal flips, and brightness adjustments. The dataset was stratified and split into 80% training, 10% validation, and 10% testing sets. The loss function was weighted with class weights computed from the training data, enabling the model to learn more effectively from underrepresented classes and maintain balanced training. Training used the Nadam optimizer and categorical cross-entropy loss, with learning rate scheduling and early stopping to improve convergence and training stability. This custom CNN served as a foundational benchmark for evaluating the limitations and potential of training from scratch before progressing to deeper architectures; its performance is discussed in Section 4.1.
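The class-weight formula is not given in the text. A common choice, assumed here, is the "balanced" heuristic w_k = N / (K · n_k) used by scikit-learn's `compute_class_weight`, where N is the number of training samples, K the number of classes, and n_k the count of class k. The sketch also shows how such a weight scales the categorical cross-entropy of a single sample; both function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Sequence


def balanced_class_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """w_k = N / (K * n_k): rare classes get weights above 1, common ones below 1."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}


def weighted_cross_entropy(probs: Dict[Hashable, float], target: Hashable,
                           weights: Dict[Hashable, float]) -> float:
    """Categorical cross-entropy for one sample, scaled by its class weight."""
    return -weights[target] * math.log(probs[target])
```

In Keras, a dictionary of this form (keyed by integer class index) can be passed to `Model.fit` through its `class_weight` argument.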

3.3.2. Transfer Learning with Pretrained Models

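In transfer learning, given a source domain $\mathcal{D}_S$ and a source task $\mathcal{T}_S$, along with a target domain $\mathcal{D}_T$ and a target task $\mathcal{T}_T$, the objective is to improve the learning of the target predictive function $f_T(\cdot)$ in $\mathcal{D}_T$ using knowledge from $\mathcal{D}_S$ and $\mathcal{T}_S$, as shown in (1):

$$\mathcal{D}_S = \{\mathcal{X}_S,\, P(X_S)\},\quad \mathcal{T}_S = \{\mathcal{Y}_S,\, f_S(\cdot)\},\quad \mathcal{D}_T = \{\mathcal{X}_T,\, P(X_T)\},\quad \mathcal{T}_T = \{\mathcal{Y}_T,\, f_T(\cdot)\} \qquad (1)$$

Here, $\mathcal{D}_S$ denotes the source domain with input space $\mathcal{X}_S$ and marginal distribution $P(X_S)$, while $\mathcal{T}_S$ defines the label space $\mathcal{Y}_S$ and the predictive function $f_S(\cdot)$. Similarly, $\mathcal{D}_T$ and $\mathcal{T}_T$ describe the target domain and the target task [36].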

Transfer learning addresses core limitations of traditional machine learning, including insufficient labeled data, high computational demands, and distribution mismatches between source and target domains. It enables the reuse of knowledge from related tasks to improve performance in data-scarce environments [37].

After establishing a baseline using a custom CNN model, we evaluated the performance of eleven pre-trained deep learning architectures via transfer learning. These models were trained on the same dataset with uniform augmentation strategies, yet their precision varied significantly, ranging from 40% to 87%. This performance gap underscores the inherent challenge of training deep models from scratch, particularly in domains with limited and imbalanced data.

To address these challenges, we adopted transfer learning, a technique that leverages knowledge from a source domain to improve learning in a target domain. Specifically, we used models pre-trained on the ImageNet dataset, which contains more than one million annotated natural images across 1000 categories. Transfer learning enables the reuse of low-level features such as edges, textures, and shapes, which transfer well even to domains like waste classification, where large labeled datasets are scarce. The effectiveness of transfer learning for improving model performance and reducing training costs in visual classification tasks has been demonstrated in several domains, including agricultural product sorting [38].

Our transfer learning pipeline consisted of several key steps. First, each model was initialized with pre-trained ImageNet weights. The early convolutional layers were frozen to retain generic feature representations, while deeper layers were unfrozen and fine-tuned on the target waste classification dataset. The original classification heads were replaced with custom dense softmax layers suited to the ten-category classification task. To mitigate the effects of class imbalance, class weights computed from the training data were incorporated into the loss function. A consistent, extensive data augmentation strategy was applied across all models to further improve their generalization and robustness.

The pre-trained architectures evaluated include ResNet-50, EfficientNet-B0, MobileNetV3-Large, EfficientNetV2-S, EfficientNetV2-M, EfficientNet-B7, DenseNet-201, DenseNet-169, VGG16, InceptionV3, and Xception. All models were trained under uniform experimental conditions, with identical input dimensions (224 × 224 pixels), preprocessing pipelines, and optimization parameters, including the Adam optimizer, learning rate scheduling via ReduceLROnPlateau, and EarlyStopping for overfitting prevention. The training was accelerated using mixed-precision techniques and multi-GPU configurations.
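The interaction between ReduceLROnPlateau and EarlyStopping can be illustrated with a small, framework-free controller that monitors the validation loss. This is a simplified sketch: the factor and patience values are hypothetical (the paper's settings are not given here), and Keras's `min_delta`, `cooldown`, and best-weight restoration options are omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PlateauController:
    """Simplified ReduceLROnPlateau + EarlyStopping logic on a monitored val_loss."""

    lr: float = 1e-3
    factor: float = 0.5  # hypothetical: multiply the LR by this on a plateau
    lr_patience: int = 2  # hypothetical: stagnant epochs before an LR cut
    stop_patience: int = 5  # hypothetical: stagnant epochs before stopping
    min_lr: float = 1e-6
    best: float = float("inf")
    best_epoch: int = -1
    wait_lr: int = 0
    wait_stop: int = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Update state after one epoch; return True when training should stop."""
        if val_loss < self.best:
            self.best, self.best_epoch = val_loss, epoch
            self.wait_lr = self.wait_stop = 0
            return False
        self.wait_lr += 1
        self.wait_stop += 1
        if self.wait_lr >= self.lr_patience:
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.wait_lr = 0
        return self.wait_stop >= self.stop_patience


# Toy validation losses (not results from this study).
controller = PlateauController()
stopped_at: Optional[int] = None
for epoch, val_loss in enumerate([1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.74, 0.75, 0.6]):
    if controller.step(epoch, val_loss):
        stopped_at = epoch
        break
```

With these toy losses, the learning rate is halved twice during the plateau and training stops at epoch 7, five epochs after the best validation loss at epoch 2; the later improvement at epoch 8 is never seen, which is the usual trade-off when choosing the patience.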

The comparative performance analysis of these models, in terms of classification accuracy, parameter counts, and training behavior, is presented in Section 4.2.

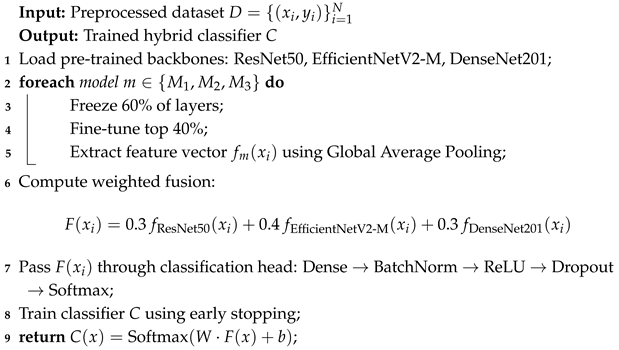

3.3.3. Hybrid Model: Combining Feature Extractors

To address the limitations of standalone CNN architectures and leverage their complementary strengths, we propose a hybrid ensemble model integrating ResNet50, EfficientNetV2-M, and DenseNet201. This choice is driven by empirical observations: ResNet50 is resilient to distortions, EfficientNetV2-M achieves superior classification accuracy, and DenseNet201 captures fine-grained structural patterns.

Notation and Complexity Analysis: Let $x_i$ denote an input image and $y_i$ its ground-truth label from dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$, where N is the number of training samples. The base models $M_1$, $M_2$, and $M_3$ correspond to ResNet50, EfficientNetV2-M, and DenseNet201, respectively. Each extracts a feature vector $f_m(x_i) \in \mathbb{R}^{d_m}$. The fused embedding is computed as a weighted sum of the individual feature vectors, $z_i = \sum_{m=1}^{3} \alpha_m f_m(x_i)$, where $\sum_{m=1}^{3} \alpha_m = 1$. The final classifier maps $z_i$ to a probability distribution over c waste classes, $\hat{y}_i = \mathrm{softmax}(W z_i + b)$, using fully connected layers and softmax activation.

For clarity, we define the notation as follows:

N—number of training samples in the dataset.

$d_m$—dimension of the feature embedding from the m-th backbone.

d—dimension of the fused embedding (taken as the maximum $d_m$).

c—number of target waste classes.

$f_m(x_i) \in \mathbb{R}^{d_m}$—feature vector produced by the m-th backbone for input $x_i$.

$z_i \in \mathbb{R}^{d}$—fused feature embedding after weighted combination.

$W \in \mathbb{R}^{c \times d}$, $b \in \mathbb{R}^{c}$—learnable weights and bias of the classification head.

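The computational complexity of feature extraction for the m-th backbone is $\mathcal{O}(F_m)$ per sample, where $F_m$ is that backbone's per-sample floating-point operation count, resulting in a total fusion cost of $\mathcal{O}\big(\sum_{m=1}^{3} F_m + 3d\big)$. The classification head, comprising dense layers and softmax, has a cost of $\mathcal{O}(dc)$ per sample. Training complexity scales with $\mathcal{O}\big(N(\sum_{m=1}^{3} F_m + dc)\big)$ per epoch, and memory usage is dominated by the largest backbone (DenseNet201) and the fusion buffer.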

Rather than relying on a single model, the ensemble merges diverse feature representations to enhance generalization. A key innovation is the differentiated augmentation strategy, in which each model is trained with data variations tailored to its learning characteristics. This yields not only stronger individual models but also a richer, more complementary combined feature space. Weighted feature fusion is employed, assigning a weight of 0.4 to EfficientNetV2-M and 0.3 each to ResNet50 and DenseNet201, prioritizing the most discriminative embeddings while limiting the risk of overfitting.
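The weighted fusion can be sketched as follows, using the weights stated above. The pooled embeddings of these backbones differ in size (2048, 1280, and 1920 for the standard ResNet50, EfficientNetV2-M, and DenseNet201 backbones), and the notation takes d as the maximum $d_m$, so this sketch assumes shorter vectors are zero-padded to length d; a learned projection to a common size would be an equally plausible reading. `fuse_features` is a hypothetical helper.

```python
from typing import Dict, List, Sequence

# Fusion weights stated in the text.
FUSION_WEIGHTS: Dict[str, float] = {
    "resnet50": 0.3,
    "efficientnetv2_m": 0.4,
    "densenet201": 0.3,
}


def fuse_features(
    features: Dict[str, Sequence[float]],
    weights: Dict[str, float] = FUSION_WEIGHTS,
) -> List[float]:
    """z = sum_m alpha_m * f_m, zero-padding each f_m to d = max_m d_m (ASSUMPTION)."""
    if abs(sum(weights[name] for name in features) - 1.0) > 1e-9:
        raise ValueError("fusion weights must sum to 1")
    d = max(len(vec) for vec in features.values())
    fused = [0.0] * d
    for name, vec in features.items():
        alpha = weights[name]
        for i, value in enumerate(vec):  # entries beyond len(vec) stay zero (padding)
            fused[i] += alpha * value
    return fused


# Toy 3-dimensional embeddings standing in for the pooled backbone outputs.
z = fuse_features({
    "resnet50": [1.0, 1.0, 1.0],
    "efficientnetv2_m": [1.0, 0.0],
    "densenet201": [0.0, 2.0, 1.0],
})
```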

Figure 4 illustrates the architecture, highlighting the parallel input pipelines, the processing through individual pre-trained networks, feature extraction via Global Average Pooling, weighted concatenation, and final classification through dense layers and softmax. Algorithm 1 outlines the hybrid model's core workflow, emphasizing its novel components, such as weighted feature fusion and partial fine-tuning; routine training operations are omitted for brevity. The time and space complexity of the major steps are detailed alongside the notation to support reproducibility and scalability analysis. The hybrid approach is strategic and data-driven, and it consistently outperformed single models in terms of accuracy, robustness, and macro-F1-score. It is a strong candidate for real-world waste classification and lays a foundation for future enhancements such as attention mechanisms or edge deployment [27]. The performance of the hybrid model is discussed in Section 4.3.

| Algorithm 1: Hybrid Model for Deep Waste Classification |

![Sustainability 17 08761 i001 Sustainability 17 08761 i001]() |

3.3.4. Ensemble Methods for Robustness

To enhance the performance of the proposed waste classification framework, multiple ensemble strategies were investigated by combining predictions from two high-performing convolutional neural networks: DenseNet201 and EfficientNetV2-M. These models offer complementary representational strengths, and their integration allows for more accurate and reliable classification across complex and diverse waste categories. The ensemble strategies investigated included soft voting, weighted voting, logit averaging, stacking, test-time augmentation (TTA), and Monte Carlo (MC) dropout [39]. Algorithm 2 outlines the stacking ensemble using DenseNet201 and EfficientNetV2-M.

| Algorithm 2: Stacking Ensemble using DenseNet201 and EfficientNetV2-M |

![Sustainability 17 08761 i002 Sustainability 17 08761 i002]() |

Notation Clarification: Here, $M_1$ and $M_2$ denote the two base CNN models (DenseNet201 and EfficientNetV2-M). For each data set $D$, $P_m^{D} = M_m(D) \in \mathbb{R}^{|D| \times c}$ represents the predicted probability distribution over c classes from model $M_m$. The ground-truth labels are denoted by $Y$. The concatenated base model predictions are used to form the meta-feature matrix $Z = [P_1^{D} \,\|\, P_2^{D}] \in \mathbb{R}^{|D| \times 2c}$, paired with $Y$ as supervision for training the meta-classifier $C$. At inference, $Z_{\mathrm{test}}$ is constructed analogously from $P_1^{\mathrm{test}}$ and $P_2^{\mathrm{test}}$, and the final ensemble predictions are represented by $\hat{Y} = C(Z_{\mathrm{test}})$.

In soft voting, the final prediction is derived by averaging the class probabilities output by the individual models. Weighted voting further refines this by assigning confidence-based weights to each model, derived from validation performance. Logit averaging combines the raw output logits from each model before applying the softmax function, allowing for a smoother probabilistic fusion. Stacking introduces a higher-level meta-classifier trained on the output probabilities of the base models, enabling it to learn optimal fusion patterns from data [40]. TTA enhances generalization by averaging predictions across multiple augmented versions of each test sample, effectively simulating variations in real-world conditions. MC dropout estimates prediction uncertainty by performing multiple stochastic forward passes at inference time and aggregating the results.
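The fusion rules described above differ mainly in what is averaged and when the argmax is taken. The sketch below implements soft voting (optionally weighted), hard (majority) voting, logit averaging, and the averaging step shared by TTA and MC dropout, with predictive entropy as one possible uncertainty score. The functions, the toy probabilities, and the 0.7/0.3 weights are hypothetical.

```python
import math
from collections import Counter
from typing import List, Optional, Sequence

Probs = Sequence[float]


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])  # first index wins ties


def soft_vote(probs: Sequence[Probs], weights: Optional[Sequence[float]] = None) -> int:
    """(Weighted) average of class probabilities; equal weights give plain soft voting."""
    w = list(weights) if weights is not None else [1.0] * len(probs)
    norm = sum(w)
    n_classes = len(probs[0])
    avg = [sum(wi * p[k] for wi, p in zip(w, probs)) / norm for k in range(n_classes)]
    return argmax(avg)


def hard_vote(probs: Sequence[Probs]) -> int:
    """Majority vote over each model's top class; ties go to the lowest class index."""
    votes = Counter(argmax(p) for p in probs)
    return min(votes, key=lambda k: (-votes[k], k))


def logit_average(logits: Sequence[Sequence[float]]) -> int:
    """Average raw logits across models, then apply softmax."""
    n_classes = len(logits[0])
    mean_logits = [sum(l[k] for l in logits) / len(logits) for k in range(n_classes)]
    return argmax(softmax(mean_logits))


def mean_prediction(passes: Sequence[Probs]) -> List[float]:
    """Average over TTA views or MC-dropout forward passes."""
    n_classes = len(passes[0])
    return [sum(p[k] for p in passes) / len(passes) for k in range(n_classes)]


def predictive_entropy(probs: Probs) -> float:
    """Entropy of the averaged prediction (higher means less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


p_dense, p_eff = [0.6, 0.3, 0.1], [0.1, 0.5, 0.4]  # toy probabilities
```

With these toy inputs, plain soft voting picks class 1, while weighting the first model at 0.7 and the second at 0.3 flips the decision to class 0, illustrating how validation-based weights can change the outcome.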

Among all methods, stacking demonstrated the most robust classification performance, validating its effectiveness in leveraging complementary model outputs. The meta-classifier is trained on concatenated class probabilities from DenseNet201 and EfficientNetV2-M. This strategy allows the meta-learner to capture inter-model dependencies and produce more accurate final predictions [41]. The results of the various ensemble techniques, including stacking, are shown in Section 4.4.
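Because Algorithm 2 is available only as an image and the meta-classifier type is not named in the text, the sketch below assumes a multinomial logistic regression meta-learner fitted by plain gradient descent. It builds the meta-features by concatenating the two models' class probabilities, as described above, and fits the meta-classifier on held-out (validation) predictions. The probabilities are toy numbers, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def meta_features(p1: Matrix, p2: Matrix) -> Matrix:
    """Z = [P1 || P2]: concatenate both models' class probabilities per sample."""
    return [list(a) + list(b) for a, b in zip(p1, p2)]


def _softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def _scores(W: Matrix, b: List[float], z: Sequence[float]) -> List[float]:
    return [sum(w * x for w, x in zip(row, z)) + bias for row, bias in zip(W, b)]


def train_meta_classifier(Z: Matrix, y: Sequence[int], n_classes: int,
                          lr: float = 0.5, epochs: int = 300) -> Tuple[Matrix, List[float]]:
    """Multinomial logistic regression fitted with full-batch gradient descent."""
    dim, n = len(Z[0]), len(Z)
    W = [[0.0] * dim for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        grad_W = [[0.0] * dim for _ in range(n_classes)]
        grad_b = [0.0] * n_classes
        for z, target in zip(Z, y):
            probs = _softmax(_scores(W, b, z))
            for k in range(n_classes):
                err = probs[k] - (1.0 if k == target else 0.0)  # d(cross-entropy)/d(score_k)
                grad_b[k] += err / n
                for j in range(dim):
                    grad_W[k][j] += err * z[j] / n
        for k in range(n_classes):
            b[k] -= lr * grad_b[k]
            for j in range(dim):
                W[k][j] -= lr * grad_W[k][j]
    return W, b


def predict(W: Matrix, b: List[float], Z: Matrix) -> List[int]:
    return [max(range(len(W)), key=_scores(W, b, z).__getitem__) for z in Z]


# Toy held-out predictions: DenseNet201 (P1) and EfficientNetV2-M (P2).
P1_val = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.2, 0.8]]
P2_val = [[0.7, 0.3], [0.6, 0.4], [0.4, 0.6], [0.1, 0.9]]
y_val = [0, 0, 1, 1]
Z_val = meta_features(P1_val, P2_val)
W, b = train_meta_classifier(Z_val, y_val, n_classes=2)
```

Fitting the meta-learner on predictions for data the base models were not trained on is the usual precaution against it simply learning to trust overconfident training-set probabilities.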

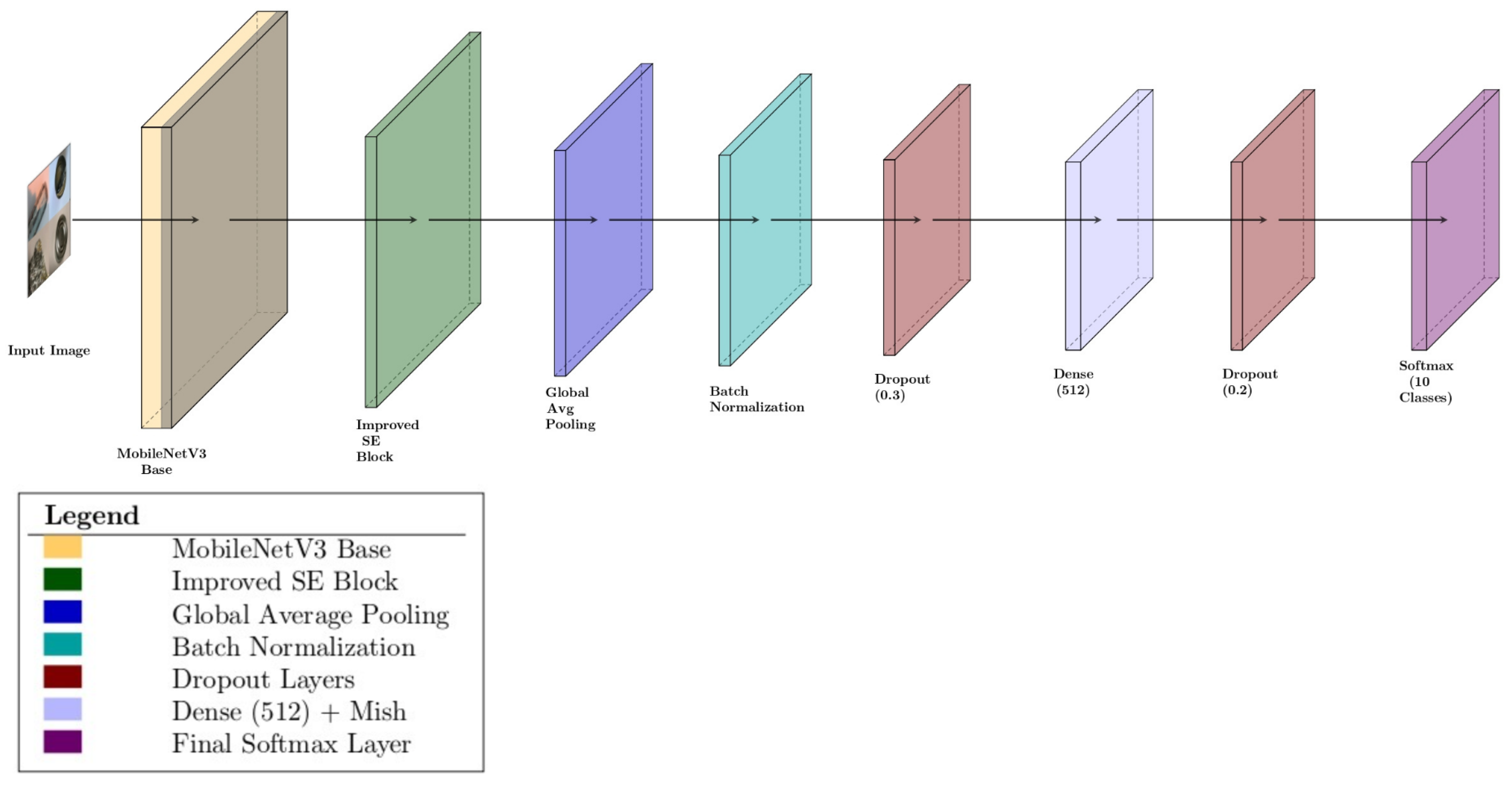

3.3.5. Custom Optimized Models: EcoMobileNet and EcoDenseNet

To meet the need for fast, lightweight, and accurate models suited for real-time use in mobile and edge devices, we designed two custom architectures—EcoMobileNet and EcoDenseNet—optimized to deliver strong classification performance while maintaining computational efficiency in waste-sorting tasks.

EcoMobileNet is an optimized version of MobileNetV3-Large, augmented with Squeeze-and-Excitation (SE) blocks to enhance feature recalibration and improve the model's sensitivity to informative channels. The design keeps the model's computational cost and memory footprint low, making it well suited to mobile scenarios. Additionally, the Mish activation function replaces ReLU-based activations to provide smoother gradients and improved convergence during training.
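Mish and Squeeze-and-Excitation recalibration are both simple to state. The framework-free sketch below computes Mish(x) = x · tanh(softplus(x)) with a numerically stable softplus, and an SE block that global-average-pools each channel, passes the result through a two-layer bottleneck, and rescales the channels by sigmoid gates. The weight shapes, the reduction ratio, and the helper names are illustrative assumptions, not the EcoMobileNet configuration.

```python
import math
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # feature map indexed [channel][row][col]


def softplus(x: float) -> float:
    """Numerically stable log(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish(x) = x * tanh(softplus(x)); smooth and non-monotonic, unlike ReLU."""
    return x * math.tanh(softplus(x))


def sigmoid(x: float) -> float:
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)  # avoids overflow for large negative x
    return e / (1.0 + e)


def se_block(fmap: Tensor3, w1: Sequence[Sequence[float]], b1: Sequence[float],
             w2: Sequence[Sequence[float]], b2: Sequence[float]) -> Tensor3:
    """Squeeze-and-Excitation: GAP -> FC(C -> C/r) + ReLU -> FC(C/r -> C) + sigmoid -> rescale.

    w1 has shape [C/r][C] and w2 has shape [C][C/r], where r is the reduction ratio.
    """
    # Squeeze: one global-average-pooled descriptor per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: bottleneck MLP producing one gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed)) + bias)
              for row, bias in zip(w1, b1)]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden)) + bias)
             for row, bias in zip(w2, b2)]
    # Scale: reweight every spatial position of each channel by its gate.
    return [[[g * v for v in row] for row in ch] for g, ch in zip(gates, fmap)]
```

With all-zero weights every gate equals sigmoid(0) = 0.5, so the block simply halves the input; trained weights instead amplify informative channels and suppress the rest.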

Figure 5 illustrates the architecture of EcoMobileNet.

The key stages include customized data augmentation, class imbalance handling using class weights, and the application of a novel PolyFocal loss function, which combines focal and cross-entropy losses to address class imbalance effectively. Transfer learning is employed by initializing with ImageNet-pre-trained weights, with selective fine-tuning of deeper layers. Regularization is enforced through dropout, batch normalization, and early stopping mechanisms to mitigate overfitting. Algorithm 3 outlines EcoMobileNet for Deep Waste Classification.
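Beyond the statement that it combines focal and cross-entropy losses, the exact PolyFocal formula is not recoverable from this section. The sketch below therefore assumes a convex combination λ · FL + (1 − λ) · CE, with focal term FL = (1 − p_t)^γ · CE and optional per-class weights; λ, γ, and the function name are hypothetical. Another common formulation, the Poly-1 focal loss from the PolyLoss framework, instead adds ε(1 − p_t)^(γ+1) to the focal loss.

```python
import math
from typing import Dict, Optional, Sequence


def poly_focal_loss(
    probs: Sequence[float],
    target: int,
    gamma: float = 2.0,  # focusing parameter (hypothetical default)
    lam: float = 0.5,  # focal/cross-entropy mixing weight (hypothetical default)
    class_weights: Optional[Dict[int, float]] = None,
    eps: float = 1e-7,
) -> float:
    """ASSUMED form: lam * focal + (1 - lam) * cross-entropy, for one sample."""
    p_t = min(max(probs[target], eps), 1.0 - eps)  # clip to keep log finite
    ce = -math.log(p_t)
    focal = (1.0 - p_t) ** gamma * ce  # down-weights easy, confident samples
    loss = lam * focal + (1.0 - lam) * ce
    if class_weights is not None:
        loss *= class_weights[target]  # per-class rebalancing, as with class weights W
    return loss
```

Setting γ = 0 recovers plain cross-entropy, while larger γ shifts the training signal toward hard, misclassified examples; the cross-entropy share keeps easy samples from contributing almost nothing.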

| Algorithm 3: EcoMobileNet for Deep Waste Classification |

Input: Training dataset, validation dataset, test dataset
Output: Trained waste classification model M

1. Apply data augmentation (rotation, zoom, flip);
2. Set up data generators for all datasets;
3. Compute class weights W;
4. Define custom PolyFocal loss, combining focal and cross-entropy terms;
5. Load MobileNetV3Large with ImageNet weights;
6. Add Squeeze-and-Excitation (SE) blocks;
7. Replace ReLU with Mish activation;
8. Freeze first 100 layers;
9. Fine-tune deeper layers;
10. Add classification head: GAP → BatchNorm → Dropout → Dense(512) → Dropout → Dense(10);
11. Compile with Adam optimizer and PolyFocal loss;
12. Configure callbacks: ReduceLROnPlateau and EarlyStopping;
13. Train model for 50 epochs with class weights W;
14. return Trained model M;

|

Notation and Complexity Analysis: Let $x_i$ denote an input image and $y_i$ its ground-truth label from dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$, where N is the number of training samples. The EcoMobileNet framework leverages a pre-trained MobileNetV3Large model to extract deep features, augmented with Squeeze-and-Excitation (SE) blocks and Mish activations for improved representational capacity and gradient flow. The model is fine-tuned by freezing the initial layers and training the top layers with a lightweight classification head. To handle class imbalance, class weights W are computed and a custom PolyFocal loss is used during training.

For clarity, the notations are defined as follows:

N—number of training samples.

e—number of training epochs.

f—number of FLOPs (floating-point operations) required per forward–backward pass of the backbone.

d—dimension of the final feature vector after global average pooling.

c—number of target waste classes.

b—batch size used during training.

a—average activation size per sample across layers.

P—number of trainable and non-trainable parameters in the pre-trained MobileNetV3Large backbone.

The computational complexity of a single forward–backward pass through the model is $O(f)$ per sample. The classification head, comprising fully connected layers, dropout, and softmax, adds an additional cost of $O(d \cdot c)$, where d is the size of the final feature vector and c the number of classes. Training over N samples for e epochs thus results in an overall time complexity of $O(N \cdot e \cdot (f + d \cdot c))$. Space complexity during training is dominated by activation maps and batch-wise gradients, yielding a cost of $O(b \cdot a)$. Additional memory, $O(P)$, is consumed by the pre-trained model parameters, so the total footprint, $O(P + b \cdot a)$, is bounded by the backbone size and the training batch.
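The SE and Mish components named above are standard building blocks whose forward computations fit in a few lines. The framework-free NumPy sketch below is illustrative only: the reduction ratio r = 4, the random weights, and the channels-last layout are assumptions, and in EcoMobileNet these would be trainable Keras layers rather than fixed arrays.

```python
import numpy as np


def mish(x):
    """Mish activation: x * tanh(softplus(x)), where softplus(x) = ln(1 + e^x)."""
    return x * np.tanh(np.logaddexp(0.0, x))  # logaddexp(0, x) is a stable softplus


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def squeeze_excitation(fmap, w1, b1, w2, b2):
    """SE forward pass on a channels-last feature map of shape (H, W, C).

    w1: (C, C // r) and w2: (C // r, C) form the bottleneck for reduction ratio r.
    """
    z = fmap.mean(axis=(0, 1))               # squeeze: global average pool -> (C,)
    s = np.maximum(0.0, z @ w1 + b1)         # excitation, hidden layer (ReLU)
    gates = sigmoid(s @ w2 + b2)             # per-channel gates in (0, 1)
    return fmap * gates                      # scale: reweight every channel


rng = np.random.default_rng(0)
C, r = 8, 4
fmap = rng.standard_normal((5, 5, C))
w1, b1 = rng.standard_normal((C, C // r)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C // r, C)), np.zeros(C)
out = squeeze_excitation(fmap, w1, b1, w2, b2)
print(out.shape, mish(np.array([-2.0, 0.0, 2.0])))
```

Because the sigmoid gates lie in (0, 1), an SE block rescales channels relative to one another rather than amplifying them, which is how it emphasizes the more informative channels.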

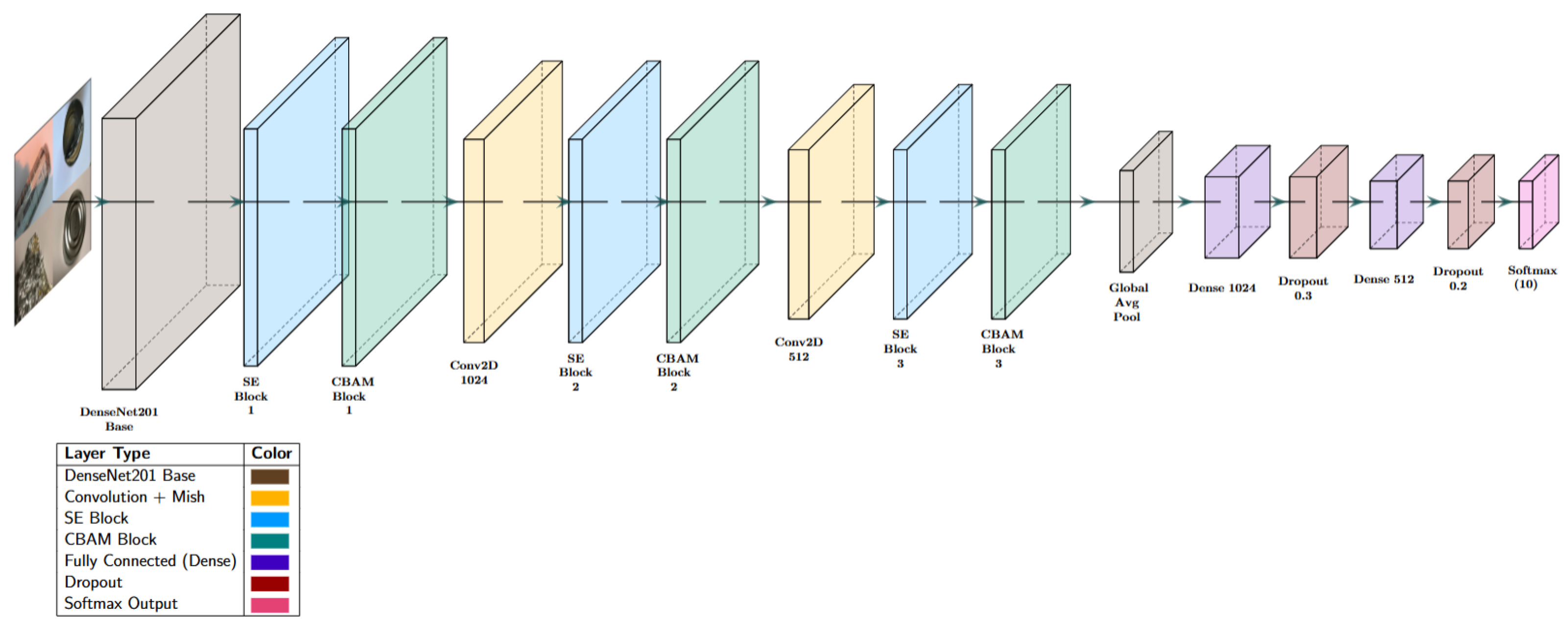

In contrast, EcoDenseNet enhances the DenseNet-201 backbone by incorporating Squeeze-and-Excitation (SE) blocks and Convolutional Block Attention Modules (CBAMs). These attention mechanisms allow the network to prioritize significant spatial and channel-specific features, which is especially useful in waste classification tasks with subtle inter-class differences. The network is further refined using Mish activation, followed by global average pooling and fully connected layers for classification.
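CBAM applies channel attention followed by spatial attention. A minimal NumPy sketch of one module in that standard order is given below; the reduction ratio of 2, the 7 × 7 kernel, the absence of bias terms, and the random weights are illustrative assumptions, and where CBAM is placed inside EcoDenseNet is not specified here.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def shared_mlp(v, w1, w2):
    """Two-layer bottleneck MLP (ReLU hidden layer) shared by both pooled descriptors."""
    return np.maximum(0.0, v @ w1) @ w2


def channel_attention(f, w1, w2):
    """Per-channel gates from average- and max-pooled descriptors, shape (C,)."""
    avg = f.mean(axis=(0, 1))
    mx = f.max(axis=(0, 1))
    return sigmoid(shared_mlp(avg, w1, w2) + shared_mlp(mx, w1, w2))


def spatial_attention(f, kernel):
    """Per-position gates from a k x k convolution over channel-wise mean and max maps."""
    stacked = np.stack([f.mean(axis=2), f.max(axis=2)], axis=-1)  # (H, W, 2)
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(stacked, ((pad, pad), (pad, pad), (0, 0)))  # zero "same" padding
    h, w = f.shape[:2]
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[i:i + k, j:j + k, :] * kernel)
    return sigmoid(out)


def cbam(f, w1, w2, kernel):
    """Channel attention first, then spatial attention, as in the standard CBAM ordering."""
    f = f * channel_attention(f, w1, w2)                 # broadcast over H and W
    return f * spatial_attention(f, kernel)[..., None]   # broadcast over channels


rng = np.random.default_rng(1)
H, W, C, r = 6, 6, 8, 2
feat = rng.standard_normal((H, W, C))
w1 = rng.standard_normal((C, C // r))
w2 = rng.standard_normal((C // r, C))
kernel = 0.1 * rng.standard_normal((7, 7, 2))
refined = cbam(feat, w1, w2, kernel)
print(refined.shape)  # (6, 6, 8)
```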

Figure 6 illustrates the architectural layout of EcoDenseNet. Algorithm 4 outlines the EcoDenseNet for Deep Waste Classification.

| Algorithm 4: EcoDenseNet for Deep Waste Classification |

1: Input: dataset D

2: Output: trained waste classification model M

3: Define Mish activation: $\mathrm{Mish}(x) = x \cdot \tanh(\ln(1 + e^{x}))$;

4: Load DenseNet201 with ImageNet weights;

5: Freeze the first 70 layers;

6: Add Squeeze-and-Excitation (SE) blocks;

7: Add Convolutional Block Attention Module (CBAM);

8: Extend the network with Conv2D + BatchNorm + Mish;

9: Apply global average pooling;

10: Add Dense layer + Dropout;

11: Add final softmax layer;

12: Preprocess data: apply augmentation and handle class imbalance;

13: Compile the model with the AdamW optimizer and categorical cross-entropy loss;

14: Configure callbacks: EarlyStopping and ReduceLROnPlateau;

15: Train the model on dataset D;

16: Evaluate with test-time augmentation (TTA);

17: Save the best model and define an inference function;

18: return trained model M;

|

Notation and Complexity Analysis: Let $x_i$ denote an input image and $y_i$ its corresponding label from the dataset $\mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}$, where N is the number of training samples. EcoDenseNet employs a DenseNet201 backbone pre-trained on ImageNet, enhanced with SE (Squeeze-and-Excitation) and CBAM (Convolutional Block Attention Module) blocks for attention. The initial 70 layers of DenseNet201 are frozen, and the network is extended with additional Conv2D, BatchNorm, Mish activation, global average pooling, dense, and dropout layers, followed by a softmax classification head.

The feature extractor outputs an embedding of dimension d, with a computational complexity of $O(f)$ per image for the backbone, where f here denotes the FLOPs of the DenseNet201 backbone. Attention modules (SE + CBAM) add overhead but remain within the same order of complexity. The classification head, including dense layers and softmax over c waste classes, has a complexity of $O(d \cdot c)$. Therefore, the overall inference complexity per sample is $O(f + d \cdot c)$, and training scales with $O(N \cdot (f + d \cdot c))$ per epoch. The space complexity is likewise dominated by the DenseNet201 backbone and attention modules, with additional memory required for batch normalization parameters, attention maps, and training buffers, such as those used for early stopping and learning rate scheduling.

Evaluation uses test-time augmentation (TTA) to improve prediction robustness, and the AdamW optimizer is used to promote stable convergence. Class imbalance is again addressed via dynamic weighting strategies, and training is guided by the standard ReduceLROnPlateau and EarlyStopping callbacks. Together, EcoMobileNet and EcoDenseNet offer a balance between computational efficiency and predictive accuracy, making them well suited to resource-limited environments. Their modular design, attention-based enhancements, and optimization strategies enable robust results in practical waste classification applications. The performance of these two models is covered in Section 4.5.
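Since EcoDenseNet relies on AdamW, it may help to recall how AdamW differs from Adam: weight decay is applied directly to the parameters instead of being added to the gradient as an L2 term. The sketch below implements one update step in NumPy and runs it on a toy quadratic; the hyperparameter values are illustrative defaults, not the settings in Table 3.

```python
import numpy as np


def adamw_step(param, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=1e-2):
    """One AdamW update with decoupled weight decay.

    t is the 1-based step index. Returns the updated (param, m, v).
    """
    m = beta1 * m + (1 - beta1) * grad           # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2      # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                 # bias corrections for zero init
    v_hat = v / (1 - beta2 ** t)
    # Decoupled decay: shrink the weights directly instead of adding an L2 term
    # to the gradient, which Adam's per-parameter scaling would distort.
    param = param - lr * (m_hat / (np.sqrt(v_hat) + eps) + weight_decay * param)
    return param, m, v


# Toy problem: minimize f(x) = x^2, whose gradient is 2x.
x, m, v = 5.0, 0.0, 0.0
for t in range(1, 1001):
    x, m, v = adamw_step(x, 2 * x, m, v, t, lr=0.05)
print(x)
```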

3.4. Model Training and Evaluation

To ensure robust training, generalization, and computational efficiency, a consistent set of training strategies, regularization techniques, and evaluation protocols was applied across all models in this study. The training framework supported both traditional CNNs and transfer learning models and was designed for the challenges of multi-class waste classification. Models were trained using categorical cross-entropy or modified variants of it; in cases of class imbalance, custom loss functions (e.g., PolyFocal) were used to down-weight easy, well-classified examples and give more weight to hard and minority-class samples. Adaptive optimizers (Adam, AdamW, and Nadam) were selected for their efficient convergence, with hyperparameters such as learning rate, weight decay, and momentum tuned per architecture. Early stopping terminated training when validation performance stopped improving, restoring the weights from the best validation epoch. Learning rates were scheduled with plateau-based reduction. Dropout and batch normalization were used for regularization and to promote stable convergence. To reduce memory usage, accelerate training, and scale to larger datasets, mixed precision training (float16 and float32) and multi-GPU strategies were adopted. Transfer learning models were initialized with ImageNet weights and fine-tuned by freezing early layers while adapting deeper ones. Each backbone was topped with a custom classification head comprising global pooling, batch normalization, dropout, and dense layers with softmax activation for the final output.

3.5. Hyperparameters and Tuning Strategy

For reproducibility, we provide a comprehensive overview of the hyperparameters used in our experiments.

Table 3 summarizes the final configurations for each model. The optimizers included Adam, AdamW, and Nadam, with initial learning rates ranging from

to

. Batch sizes of {16, 32, 64}, dropout values between 0.1 and 0.5, and weight decay values in

were explored.

Hyperparameter tuning was performed using a combination of grid and random search. EcoMobileNet employed grid search over discrete learning rates and dropout levels, while the EcoDenseNet and Hybrid models used random search across continuous ranges. Validation accuracy and validation loss served as the selection metrics, with EarlyStopping and ReduceLROnPlateau callbacks ensuring training stability. The final selected configurations are reported in Table 3.

To prevent overfitting and ensure a fair comparison across models, we employed the EarlyStopping callback during training, with validation loss (val_loss) as the monitored metric. Patience was set to 20 epochs for EcoDenseNet and to 15 epochs for EcoMobileNet and the Hybrid model. In all cases, the restore_best_weights option was enabled, so the model parameters corresponding to the lowest validation loss were retained. As a result, although the nominal maximum training duration was 50 epochs, most models stopped earlier (typically between 30 and 40 epochs). The training and validation loss/accuracy curves for the key backbone models (DenseNet201, ResNet50, MobileNetV3, EfficientNetV2-M) are visualized in Figure 7, illustrating their convergence behavior and stability.
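To see how the patience settings interact with plateau-based learning-rate reduction, a validation-loss curve can be replayed offline. The sketch below is a simplified re-implementation, not the Keras callbacks themselves: it ignores min_delta and cooldown, and the plateau patience of 5 and reduction factor of 0.5 are hypothetical because they are not reported here.

```python
def simulate_callbacks(val_losses, lr=1e-3, es_patience=15, lr_patience=5,
                       factor=0.5, min_lr=1e-6):
    """Replay a validation-loss curve through simplified early-stopping and
    plateau-LR logic (both monitoring val_loss; no min_delta or cooldown).

    Returns (stop_epoch, best_epoch, lr_history) with 0-based epochs; with
    restore_best_weights enabled, the kept weights are those from best_epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    es_wait = lr_wait = 0
    lr_history = []
    for epoch, loss in enumerate(val_losses):
        lr_history.append(lr)  # learning rate used during this epoch
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            es_wait = lr_wait = 0
            continue
        es_wait += 1
        lr_wait += 1
        if lr_wait >= lr_patience:  # plateau detected: shrink the learning rate
            lr = max(lr * factor, min_lr)
            lr_wait = 0
        if es_wait >= es_patience:  # no improvement for es_patience epochs: stop
            return epoch, best_epoch, lr_history
    return len(val_losses) - 1, best_epoch, lr_history


# Hypothetical 50-epoch curve: steady improvement for 10 epochs, then a plateau.
losses = [1.0 - 0.05 * i for i in range(10)] + [0.6] * 40
stop_epoch, best_epoch, lrs = simulate_callbacks(losses, es_patience=15)
print(stop_epoch, best_epoch, lrs[-1])  # 24 9 0.00025
```

With a patience of 20 (the EcoDenseNet setting) the same curve would run five epochs longer before stopping, while the restored weights would still come from epoch 9.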

Model performance was evaluated on a held-out test set using accuracy, precision, recall, and F1-score, with confusion matrices used to visualize class-wise performance and misclassification trends. During inference, test-time augmentation (TTA) enhanced prediction robustness by applying multiple augmentations per sample and averaging the softmax outputs. For ensemble models and uncertainty estimation, techniques like soft voting, weighted averaging, and Monte Carlo Dropout were explored to improve reliability and interpretability, offering insights into prediction confidence and supporting robust decision-making in real-world scenarios.
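Test-time augmentation as described above amounts to averaging softmax vectors over several views of one image. In the minimal NumPy sketch below, the 2-class toy model and the horizontal flip (np.fliplr) are hypothetical stand-ins for the trained classifier and its augmentation set.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()


def predict_with_tta(predict_fn, image, augmentations):
    """Average the softmax outputs for the original image and each augmented view."""
    views = [image] + [aug(image) for aug in augmentations]
    probs = np.stack([predict_fn(view) for view in views])
    return probs.mean(axis=0)


def toy_model(img):
    """Hypothetical 2-class 'model' that scores the left half against the right half."""
    half = img.shape[1] // 2
    return softmax(np.array([img[:, :half].sum(), img[:, half:].sum()]))


image = np.array([[1.0, 0.0], [1.0, 0.0]])  # all intensity on the left
single = toy_model(image)
averaged = predict_with_tta(toy_model, image, [np.fliplr])
print(single, averaged)
```

The toy model is deliberately flip-sensitive, so averaging its two views pulls a confident 0.88/0.12 prediction back to 0.5/0.5; with a real classifier, the same averaging smooths out view-specific errors.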

4. Experimental Results and Analysis

4.1. Custom CNN Model

The custom CNN achieved a test accuracy of 85.96% after training for 200 epochs. The classification report revealed strong performance for most categories but lower recall for certain classes, such as plastics and shoes, indicating misclassifications due to overlapping visual features. In particular, the trash category (only 2.92% of the total images) had the lowest F1-score (0.74), underscoring the challenge posed by class imbalance. The macro-F1-score of 0.85 indicated reasonable overall effectiveness, but the lower recall scores highlighted the need for more advanced architectures to improve generalization. This baseline experiment exposed the limitations of a custom CNN on complex waste classification tasks and motivated the transition to pre-trained deep learning models via transfer learning.

Figure 8a displays the confusion matrix for the custom CNN, revealing class-wise prediction strengths and weaknesses. The training and validation loss and accuracy curves for the custom CNN, along with the enhanced models EcoMobileNet, EcoDenseNet, and the Hybrid model, are presented in Figure 9, showing their learning dynamics across epochs.
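Because macro-F1 weights every class equally, a single weak minority class, such as trash here, pulls it down even when overall accuracy is high. The sketch below computes per-class precision, recall, and F1 and their macro average from a confusion matrix (rows = true class, columns = predicted class, an assumed convention); the 2 × 2 matrix is a toy example.

```python
import numpy as np


def per_class_metrics(cm):
    """Per-class precision, recall, and F1; cm[i, j] = true class i predicted as j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    predicted = cm.sum(axis=0)   # column sums: how often each class was predicted
    actual = cm.sum(axis=1)      # row sums: how many samples truly belong to each class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return precision, recall, f1


def macro_f1(cm):
    """Unweighted mean of per-class F1, so every class counts equally."""
    return float(per_class_metrics(cm)[2].mean())


cm = [[8, 2],
      [1, 9]]  # toy confusion matrix
print(per_class_metrics(cm), macro_f1(cm))
```

For this toy matrix, class 1 has F1 = 2 · 9 / (2 · 9 + 2 + 1) = 6/7, since F1 = 2TP / (2TP + FP + FN).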

4.2. Transfer Learning vs. Training Without Pretrained Weights

We evaluated 11 deep learning architectures for waste classification, comparing models trained without pre-trained weights to those using transfer learning. The results demonstrate the superiority of transfer learning in both accuracy and training efficiency.

Transfer learning models, particularly ResNet50 and EfficientNetV2-M, achieved test accuracies close to 95%, benefiting from pre-trained ImageNet features. In contrast, models trained from scratch exhibited lower accuracy and overfitting, and required longer training times and extensive hyperparameter tuning.

Table 4 presents a direct comparison of test accuracies for each architecture with and without transfer learning, illustrating the consistent advantage of pre-training. Furthermore, Table 5 summarizes the structural details of all 11 CNN architectures (input size, number of layers, frozen/fine-tuned layers, parameters, and performance), providing a comprehensive architectural comparison. All models, whether trained from scratch or fine-tuned via transfer learning, were configured under identical experimental settings to ensure fairness and reproducibility. Specifically, the same data augmentation pipeline (rotation, zoom, flips, and color jitter), optimizer (AdamW), initial learning rate, batch size, and maximum epoch count (50, with an early-stopping patience of 7) were applied under both training conditions.

The stark performance gap observed for MobileNetV3-Large (12.79% when trained from scratch vs. 97.01% when fine-tuned) arises because training this model entirely from scratch on a limited dataset led to severe underfitting and optimization collapse, even after careful hyperparameter tuning; with ten classes, 12.79% is barely above the 10% expected from uniform random guessing. In contrast, transfer learning starts from pre-trained ImageNet weights, enabling the model to converge rapidly and achieve high accuracy. This finding highlights the critical role of transfer learning for deep or resource-optimized backbones, particularly when the dataset is limited in size.

4.3. Hybrid Models: Combining Feature Extractors

The hybrid model combining ResNet50, EfficientNetV2-M, and DenseNet201 demonstrated clear improvements in both accuracy and generalization, outperforming the individual networks. This combination leverages the complementary strengths of each architecture to better handle the diverse and overlapping characteristics of waste images. The training and validation curves of the hybrid model are also shown in Figure 9, and its confusion matrix, included in Figure 8, reflects enhanced class-wise performance.
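The ablation in Section 4.5 credits part of the hybrid model's gain to weighted fusion, but the fusion scheme is not specified. One plausible form, sketched below under that assumption, projects each backbone's pooled embedding to a shared width and takes a weighted average; with equal weights it reduces to the plain averaging used as the ablation baseline. The embedding widths, projection matrices, and fusion weights are hypothetical placeholders.

```python
import numpy as np


def weighted_feature_fusion(features, projections, weights):
    """Project each backbone embedding to a shared width, then take a weighted average.

    features: list of (batch, d_k) arrays, one per backbone.
    projections: list of (d_k, d_common) matrices (learned in a real model).
    weights: one non-negative fusion weight per backbone; normalized to sum to 1.
    """
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    projected = [f @ p for f, p in zip(features, projections)]
    return sum(wk * pk for wk, pk in zip(w, projected))


rng = np.random.default_rng(0)
dims, d_common, batch = [16, 12, 8], 10, 4   # toy widths for three backbones
feats = [rng.standard_normal((batch, d)) for d in dims]
projs = [rng.standard_normal((d, d_common)) for d in dims]
fused = weighted_feature_fusion(feats, projs, [0.4, 0.35, 0.25])
print(fused.shape)  # (4, 10)
```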

4.4. Ensemble Methods for Robustness

Ensemble techniques, including soft voting, weighted averaging, and stacking, were explored. Among them, stacking achieved the highest accuracy of 98.29%, outperforming all individual and hybrid models.

These results are summarized in Table 6, which compares ensemble configurations (based on EfficientNetV2-M and DenseNet201) and their classification accuracies. The ensemble methods helped reduce variance, mitigate overfitting, and improve prediction robustness.
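Soft voting and weighted averaging differ only in how the models' probability vectors are combined before the argmax. The NumPy sketch below shows both on hypothetical outputs from two models; the weights are illustrative. Stacking, the best-performing method, instead trains a meta-classifier on these probability vectors and is not shown.

```python
import numpy as np


def soft_vote(prob_list):
    """Average class probabilities across models, then pick the top class."""
    return np.mean(np.stack(prob_list), axis=0).argmax(axis=1)


def weighted_average(prob_list, weights):
    """Like soft voting, but each model's probabilities get a normalized weight."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    stacked = np.stack(prob_list)               # (models, batch, classes)
    return np.tensordot(w, stacked, axes=1).argmax(axis=1)


# Hypothetical softmax outputs of two models for two samples and three classes.
p_a = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
p_b = np.array([[0.2, 0.7, 0.1], [0.1, 0.3, 0.6]])
print(soft_vote([p_a, p_b]))                 # [1 2]
print(weighted_average([p_a, p_b], [3, 1]))  # [0 1]
```

With equal weights, weighted averaging reproduces soft voting; here the 3:1 weighting lets the first model overturn the vote on both samples.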

4.5. Custom Optimized Models: EcoMobileNet and EcoDenseNet

EcoMobileNet and EcoDenseNet were designed to balance performance and efficiency. EcoMobileNet achieved a test accuracy of 98.08% and EcoDenseNet 97.86%, highlighting the potential of lightweight, customized models for real-world deployment. Their learning curves are presented in Figure 9, and their confusion matrices in Figure 8, illustrating precise and consistent predictions. For a comprehensive evaluation, Table 7 compares the test accuracy, precision, recall, and F1-score of the pre-trained models and the newly introduced architectures. The custom models (EcoMobileNet, EcoDenseNet, and the Hybrid variant) consistently outperform their pre-trained counterparts in accuracy and the other reported classification metrics.

Table 8 presents the ablation study results, and Table 9 shows the relative deployment characteristics of the proposed models. To contextualize the performance of our proposed models, we conducted a comparative analysis with recent deep learning-based waste classification studies, as shown in Table 10. The hybrid, lightweight, and ensemble models developed in this work achieve competitive or superior accuracy compared with prior state-of-the-art architectures such as ResNet50, InceptionNet, DenseNet variants, and two-stream hybrid CNNs. Our Stacking Ensemble achieves the highest overall accuracy (98.29%), while EcoMobileNet balances performance and efficiency, making it a strong candidate for real-time deployment. These results support the efficacy of our architectural choices across diverse evaluation settings.

The advantage of the proposed models stems from their architectural design. EcoDenseNet enhances DenseNet201 with the Mish activation function and attention modules (SE and CBAM), improving feature selectivity and gradient flow, especially in visually cluttered waste images. EcoMobileNet builds upon MobileNetV3-Large by incorporating Squeeze-and-Excitation blocks and optimized regularization, achieving high accuracy at low computational cost, which makes it well suited to edge devices. The hybrid model combines ResNet50 (residual learning), EfficientNetV2-M (compound scaling efficiency), and DenseNet201 (dense connectivity), yielding richer and more discriminative feature embeddings. These structural enhancements, coupled with differential fine-tuning and weighted fusion, collectively contribute to the observed improvements in accuracy and generalization across diverse waste categories.

To quantify the individual contributions of key architectural and optimization components in our proposed models, we conducted a comprehensive ablation study. Each enhancement—Squeeze-and-Excitation (SE) blocks, the Convolutional Block Attention Module (CBAM), Mish activation, PolyFocal loss, and weighted feature fusion—was independently removed or replaced with standard alternatives. The models were then re-trained and evaluated on the same 10-class waste dataset.

Table 8 presents the performance metrics for each variant, including the test accuracy and macro-F1-score. The results show that SE blocks and CBAM provide notable gains by enabling fine-grained attention to spatial and channel-wise features. Replacing Mish with ReLU slightly reduced performance, consistent with the benefit of smoother gradients. Similarly, PolyFocal loss outperformed standard categorical cross-entropy in handling class imbalance. In the Hybrid model, replacing weighted fusion with equal averaging reduced both accuracy and F1-score, confirming the importance of fusion weighting.

These findings reinforce the cumulative value of the architectural optimizations and ensemble strategies. Notably, SE blocks, CBAM, and weighted fusion had the largest impact, improving model focus and the synergy of the fused outputs. Ablating PolyFocal loss or Mish activation caused smaller drops, indicating that both contribute to training stability and class-imbalance handling.

While this study primarily focuses on experimental evaluation, we briefly investigated the inference efficiency of the proposed models during testing in a cloud-based environment. Specifically, inference was performed on unseen general waste images using a Tesla T4 GPU on the Kaggle platform. EcoMobileNet, with 3.49 million parameters, exhibited low latency and minimal GPU memory consumption during inference, making it a promising candidate for deployment in mobile or embedded settings. Similarly, EcoDenseNet balanced performance and complexity well, maintaining consistent accuracy with moderate memory usage. Although we did not benchmark physical mobile hardware, the compact parameter size and observed runtime behavior suggest that these models, especially EcoMobileNet, are well-suited for real-world applications such as smart bins, mobile apps, or edge IoT devices.

It is important to note that the current efficiency assessment was performed in a cloud-based environment (Tesla T4 GPU) rather than on physical mobile or embedded hardware. Thus, our claims of edge-device suitability rest on indirect indicators, such as parameter count, memory footprint, and inference latency, which are widely used in prior lightweight CNN research.

Actual deployment on platforms such as Jetson Nano, Raspberry Pi, or mobile SoCs will be pursued in future work to validate practical, real-world integration.

Table 9 summarizes the models’ sizes and relative inference behavior. Future work will extend this analysis with platform-specific deployment, energy profiling, and quantized model testing.

4.6. Statistical Validation

To ensure the robustness, reproducibility, and statistical soundness of the reported results, all core experiments were repeated with five different random seeds: {42, 52, 62, 72, 82}. This process was applied to the highest-performing models: EcoMobileNet, EcoDenseNet, the hybrid model, and the Stacking Ensemble. For each run, the test accuracy and macro-F1-score were recorded.

Table 11 summarizes the results as the mean ± standard deviation across the five runs.

To evaluate the statistical significance of the performance differences, paired t-tests were conducted between the Stacking Ensemble and the hybrid model across the five seeded trials. For both accuracy and macro-F1-score, the resulting p-values were below 0.05, indicating a statistically significant improvement of the Stacking Ensemble over the hybrid model.

These results demonstrate that the proposed ensemble strategy not only achieves high classification performance but also remains consistent across different random initializations, highlighting its robustness.
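A paired t-test compares the two models seed by seed, so it is computed on the per-seed differences. A dependency-free sketch follows; the five score pairs are illustrative placeholders, not results from this study, and 2.776 is the standard two-sided 5% critical value of Student's t with 4 degrees of freedom. For a cross-check, scipy.stats.ttest_rel(a, b) returns the same statistic together with a p-value.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for runs matched by seed."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    # statistics.stdev uses the sample (n - 1) denominator.
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1


# Illustrative placeholder scores for five seeds (not results from this study).
model_a = [1.0, 2.0, 3.0, 4.0, 5.0]
model_b = [0.5, 1.4, 2.6, 3.5, 4.3]
t_stat, df = paired_t(model_a, model_b)
T_CRIT_DF4 = 2.776  # two-sided 5% critical value of Student's t, 4 degrees of freedom
print(t_stat, df, abs(t_stat) > T_CRIT_DF4)
```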

4.7. External Benchmarking on TrashNet Dataset

To evaluate the cross-dataset generalization of our proposed models, we conducted external benchmarking on the publicly available TrashNet dataset [42], which contains 2527 images across six waste categories: cardboard, glass, metal, paper, plastic, and trash. Because our models were trained on a 10-class dataset, their predictions were post-processed to retain only the outputs corresponding to TrashNet's label space.

Table 12 summarizes the per-class distribution, which shows a mild imbalance (e.g., fewer trash samples than paper or glass). Reporting this distribution makes the evaluation transparent and supports the validity of our cross-dataset results.

The final EcoDenseNet, EcoMobileNet, and hybrid models were evaluated in this cross-domain setup. Table 13 summarizes the evaluation metrics, including test accuracy and weighted precision, recall, and F1-score. The results indicate strong generalization even without retraining, underscoring the robustness of the proposed architectures across different data distributions.
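The post-processing that maps 10-class predictions onto TrashNet's six labels is not described in detail. A minimal sketch, assuming the outputs are restricted to the six shared classes and renormalized before the argmax, is shown below; the 10-class name list and its order are hypothetical, and only the six TrashNet names come from the text.

```python
import numpy as np

# Hypothetical 10-class label order; only the six TrashNet names are from the text.
SOURCE_CLASSES = ["battery", "biological", "cardboard", "clothes", "glass",
                  "metal", "paper", "plastic", "shoes", "trash"]
TRASHNET_CLASSES = ["cardboard", "glass", "metal", "paper", "plastic", "trash"]


def restrict_to_target(probs, source=SOURCE_CLASSES, target=TRASHNET_CLASSES):
    """Keep the target-class columns, renormalize each row, and return target labels."""
    idx = [source.index(name) for name in target]
    sub = probs[:, idx]
    sub = sub / sub.sum(axis=1, keepdims=True)
    return [target[i] for i in sub.argmax(axis=1)], sub


# One toy prediction whose top source class ("clothes") has no TrashNet counterpart.
probs = np.array([[0.02, 0.01, 0.05, 0.40, 0.03, 0.04, 0.30, 0.10, 0.03, 0.02]])
labels, target_probs = restrict_to_target(probs)
print(labels)  # ['paper']
```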

To aid interpretation, Grad-CAM visualizations were generated for selected predictions, highlighting the image regions that most influenced classification. Confidence distributions were also plotted to visualize the certainty of predictions. The average inference time was measured for each model on the TrashNet test set: EcoMobileNet was fastest at ~34.58 ms/image, followed by EcoDenseNet (~41.27 ms/image), the hybrid model (~56.83 ms/image), and the Stacking Ensemble (~58.12 ms/image).
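Per-image latencies such as these depend on hardware, batch size, and whether warm-up calls are excluded, none of which is detailed here. The harness below is a minimal sketch assuming batch size 1 and a blocking prediction function; slow_identity is a hypothetical stand-in that sleeps 2 ms in place of a real model.

```python
import statistics
import time


def mean_latency_ms(predict_fn, inputs, warmup=5):
    """Average wall-clock time per call to predict_fn, in milliseconds.

    Assumes predict_fn blocks until its result is ready (e.g. returns a NumPy
    array); asynchronous GPU calls would need explicit synchronization.
    """
    for x in inputs[:warmup]:  # warm-up calls absorb one-off setup costs
        predict_fn(x)
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict_fn(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings)


def slow_identity(x):
    """Stand-in for a model: sleeps 2 ms, then returns its input."""
    time.sleep(0.002)
    return x


print(mean_latency_ms(slow_identity, list(range(10))))
```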

These results support the real-world applicability and robustness of the proposed system, showing that it maintains high performance on an unseen waste dataset without retraining.

Although the primary focus of this study is model accuracy, we provide an illustrative estimate of the practical benefits. Assuming a medium-sized facility processes 100,000 waste items per day with a baseline accuracy of 95% (assumed typical for current automated sorters), improving accuracy to 98.08% with EcoMobileNet would correctly classify ≈3080 additional items daily. If 60% of items are recyclable and the average item weighs 0.2 kg, this equates to ≈370 kg of recyclables recovered per day, or ≈135 tonnes annually, that would otherwise be lost to landfill. This suggests that an accuracy gain of about 3 percentage points can translate into substantial material recovery and sustainability gains.
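The arithmetic behind this estimate can be reproduced directly. All inputs are the assumptions stated in the text; the helper name is hypothetical.

```python
def extra_recovery(items_per_day, baseline_acc, new_acc, recyclable_share, kg_per_item):
    """Extra correctly sorted items per day, recyclables recovered (kg/day), and tonnes/year."""
    extra_items = items_per_day * (new_acc - baseline_acc)
    kg_per_day = extra_items * recyclable_share * kg_per_item
    tonnes_per_year = kg_per_day * 365 / 1000
    return extra_items, kg_per_day, tonnes_per_year


items, kg_day, t_year = extra_recovery(100_000, 0.95, 0.9808, 0.60, 0.2)
print(round(items), round(kg_day, 1), round(t_year, 1))  # 3080 369.6 134.9
```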

5. Conclusions and Future Work

In this research, we examined multiple deep learning approaches to improving waste classification accuracy, leveraging transfer learning, hybrid feature extraction, ensemble methods, and custom CNN architectures. We fine-tuned eleven pre-trained models, including ResNet-50, EfficientNet variants, and DenseNet-201, to evaluate their effectiveness in classifying waste materials. To further improve performance, we combined complementary backbones into a hybrid model and implemented ensemble techniques such as weighted averaging, soft voting, and stacking, both of which outperformed the individual networks. Additionally, we introduced two novel architectures: EcoMobileNet, an optimized MobileNetV3-Large variant with Squeeze-and-Excitation blocks for efficient mobile deployment, and EcoDenseNet, a DenseNet-201 variant enhanced with Mish activation and attention mechanisms to improve feature extraction.

The experimental results showed that EcoMobileNet achieved a test accuracy of 98.08%, EcoDenseNet 97.86%, and our stacking ensemble the highest accuracy of 98.29%, outperforming the individual CNN models. These findings confirm the effectiveness of hybrid and ensemble strategies in improving classification robustness, while EcoMobileNet shows that near-ensemble accuracy is attainable at low computational cost. This study contributes to scalable, automated waste classification systems that enhance recycling efficiency and help reduce environmental harm.

Future research will focus on enhancing waste classification through segmentation-based preprocessing using models like U-Net and Mask R-CNN to improve feature extraction on cluttered backgrounds. Addressing class imbalance remains crucial, and techniques such as GAN-based synthetic data generation, adaptive loss functions, and meta-learning will be explored. To enable real-time deployment, we will further optimize EcoMobileNet through model quantization, knowledge distillation, and pruning for edge devices. Additionally, multi-modal fusion approaches integrating image data with metadata (e.g., weight, material composition) will be investigated to enhance classification accuracy. Finally, expanding the dataset with diverse waste categories and real-world images from various environments will improve the model’s generalization and robustness for practical applications.