1. Introduction

Artificial general intelligence (AGI) is frequently envisioned as a groundbreaking advancement in artificial intelligence, capable of human-like adaptability across multiple domains [1,2,3,4,5]. Some researchers argue that its realization would mark the onset of the technological singularity, fundamentally altering societal structures [6]. However, significant debate persists regarding whether current AI advancements, such as large language models, represent foundational steps toward AGI or remain constrained by narrow intelligence [6,7,8,9,10,11]. Scholars emphasize the importance of distinguishing AGI from artificial superintelligence (ASI) and of ensuring analytic rigor in these discussions [12,13]. While some forecasting models predict the emergence of AGI before 2060, concerns over governance, resource allocation, and societal impact remain unresolved [14]. The discussion surrounding AGI continues to shape both speculative and applied research, prompting considerations about its role in future AI development despite the absence of a fully realized AGI system [15,16,17].

Although AGI remains theoretical, its discourse significantly influences advancements in machine learning, intelligent automation, and policy frameworks [1,18]. Many AI-driven innovations, such as neuromorphic computing, reinforcement learning, and AI decision-making, stem from AGI-oriented aspirations, even if they do not achieve AGI itself [19]. Policymakers increasingly incorporate AGI-related considerations into governance strategies, particularly in education, energy, and industrial automation [16,17]. However, disparities in research funding, regulatory preparedness, and access to computing infrastructure raise concerns that AGI could reinforce rather than bridge technological divides [7,8].

AGI is defined in the literature as the ability of an artificial agent to achieve goals in a wide variety of environments without task-specific programming, characterized by adaptability, general-purpose reasoning, and learning capacities comparable to or exceeding those of humans [5,10,20]. Scholars distinguish AGI from narrow AI by its flexibility, autonomy, and capacity for transfer learning beyond predefined domains [12]. Some approaches conceptualize AGI as embodied or neuromorphic systems inspired by biological brains [21], whereas others propose architectures that integrate memory, reasoning, and planning capabilities [5,22]. These depictions highlight the conceptual challenges of measuring and verifying AGI progress, as current benchmarks often fail to capture general adaptability [23].

AGI could plausibly contribute to health-related SDG 3 by enabling more generalizable diagnostic reasoning through multimodal learning architectures that combine heterogeneous data sources such as electronic health records, medical imaging, and population genomics to infer disease risks and recommend interventions. Early-stage systems inspired by AGI principles have already demonstrated the potential to reduce diagnostic delays for noncommunicable diseases in pilot settings and to improve vaccine distribution logistics in low-resource contexts via reinforcement learning–driven allocation policies [24]. In education (SDG 4), AGI-like adaptive tutoring systems could extend the capabilities of current intelligent tutoring systems by combining natural language understanding with learner modeling to personalize instruction dynamically for diverse cognitive profiles, improving learning outcomes in underserved schools [25]. For SDG 7, research on grid optimization using deep reinforcement learning and graph-based models provides a conceptual foundation for AGI systems that can flexibly manage large-scale energy grids, reduce transmission losses through adaptive control, and improve renewable energy integration through scenario-based planning [26]. In industrial contexts (SDG 9), AGI-like systems could extend current predictive maintenance and supply chain management approaches by leveraging meta-learning for cross-domain adaptation and planning under uncertainty to increase operational resilience [27]. Governance (SDG 16) could benefit from AGI-based anomaly detection frameworks, which might generalize unsupervised learning across diverse and evolving administrative datasets to identify irregularities, enhance institutional accountability, and improve transparency [28].

This study expands on these discussions by analyzing prospective computational pathways, model design principles, and deployment scenarios through which AGI could hypothetically support sustainable development goals (SDGs), illustrating these possibilities via empirical insights from advanced narrow AI systems and conceptual extrapolations where available.

Accordingly, this study aims to address the following research questions:

How is AGI research evolving across subject areas such as computer science, engineering, and social sciences and across geographical regions, including high-income and emerging economies, and what are the implications of these trends for specific SDGs, namely, SDG 3 (health), SDG 4 (education), SDG 7 (energy), SDG 9 (industry and infrastructure), SDG 10 (inequality), and SDG 16 (governance), measured through bibliometric analysis and thematic mapping of publication data?

How do funding patterns, through mechanisms such as targeted grants, institutional research priorities, and collaborative international programs, shape the direction of AGI research, and how do these mechanisms influence the contribution of research outputs to the realization of the identified SDGs?

How does AGI research align with the SDGs most frequently addressed in scholarly outputs, and what bibliometric indicators and SDG-mapping methodologies can be used to measure the degree of alignment between AGI research and the SDG targets?

The background and importance of these research questions lie in the growing discourse around the potential role of AGI in addressing global challenges while avoiding unintended risks. AGI is theorized as a general-purpose intelligence that could contribute to sustainable development through its application in health, education, energy, industry, equity, and governance. However, empirical evidence on how AGI research is currently distributed, funded, and aligned with the SDGs remains sparse. This study addresses this gap by examining disciplinary and geographical trends, unpacking the mechanisms through which funding influences research priorities, and proposing measurable standards for assessing AGI research alignment with the SDGs. Understanding these dynamics is important for informing strategies that guide AGI research and development toward outcomes that support global development objectives.

This study offers several novel contributions at the intersection of artificial general intelligence (AGI), machine learning, and data-driven sustainability assessment. First, it provides a comprehensive, data-informed analysis of the global expansion of AGI research, revealing significant patterns of geographic diversification, including the growing participation of emerging economies in AGI development. Second, by applying a machine learning-enabled SDG mapping methodology, the study systematically links AGI research outputs to specific SDGs, highlighting country-level variations in research priorities and thematic alignment. Third, the investigation into funding flows and institutional affiliations offers empirical insights into how strategic investments and organizational ecosystems shape the trajectory of AGI, informing data-driven decision-making around innovation policy and research governance. Finally, the study explores the potential of AGI to address complex sustainability challenges, providing an evidence-based perspective on how intelligent systems may be harnessed to optimize information flows, infrastructure performance, and global coordination in alignment with long-term development goals. These contributions not only advance the understanding of the role of AGI in sustainable development but also situate AGI as a critical frontier in information management and data-centric governance.

2. Related Work

The potential intersection of AGI and the SDGs has become a focal point in scholarly discourse, particularly regarding its capacity to address global challenges such as poverty reduction (SDG 1), clean energy (SDG 7), and climate action (SDG 13) [29,30,31].

While existing studies primarily assess the contributions of narrow AI to sustainability [24,32], AGI presents a more complex case, offering potential breakthroughs in resource optimization, environmental management, and healthcare innovation [33]. However, scholars also warn of unintended risks, including workforce disruptions (SDG 8), governance challenges (SDG 16), and widening technological disparities [34,35]. Understanding how AGI could be structured to support equitable and sustainable outcomes remains critical for long-term technological forecasting, particularly as its integration into economic and policy frameworks is still speculative rather than realized [36,37].

Emerging research has proposed structured approaches to ensure that AGI aligns with sustainability principles, including the digital sustainable growth model (DSGM), which emphasizes ethical AI governance [37], and sustainable artificial general intelligence (SAGI), which integrates social and environmental priorities into AGI development [38]. Others explore sector-specific applications, such as AGI-driven renewable energy optimization in urban environments [26] or enhanced geoenergy production through computer vision and large language models [39]. Despite these potential benefits, the computational energy demands of AGI present a significant challenge, with concerns that large-scale AI deployment could undermine sustainability goals unless alternative computing architectures, such as biomimicry-based chips, become viable [19,40,41]. Some scholars have proposed targeted AGI applications, such as “Artificial General Intelligence for Energy” (AGIE), to balance optimization and policy guidance in the energy sector [42]. However, without breakthroughs in efficiency and ethical oversight, AGI remains a subject of discourse rather than an imminent technological reality [43,44].

AGI is increasingly discussed as an existential risk, with concerns about its potential to rapidly self-improve beyond human oversight, posing governance challenges related to safety, control, and ethical misalignment [1,43,45,46]. If unchecked, AGI could undermine institutions and exacerbate global inequalities, conflicting with SDG 16’s emphasis on strong governance and accountability. While regulatory frameworks such as the EU’s AI Act primarily address narrow AI, they may be insufficient if AGI progresses unpredictably [36,47]. Scholars explore containment strategies, including cybersecurity-based models such as the “AGI Kill Chain” [48] and AGI security evaluation (AGISE) for risk assessment [49]. However, tensions persist between corporate incentives and public safety, as firms may prioritize rapid deployment over safeguards, raising concerns about workforce displacement (SDG 8) and technological disparities (SDG 10) [38,50]. The author of [28] proposed value alignment to ensure that AGI aligns with human priorities (SDG 16), although its feasibility remains debated. Some scholars suggest democratic governance models for AGI–human coexistence if AGI surpasses human control [14]. Given AGI’s speculative nature, interdisciplinary assessments remain essential to align its potential development with ethical, equitable, and sustainable global priorities.

The worldwide distribution of AI and AGI research is uneven and often concentrated in wealthier countries with robust computational infrastructures [51,52]. Scholars warn that this imbalance could reinforce existing inequalities, especially if Global South regions remain consumers rather than cocreators of AGI [34,35]. Funding, research facilities, and talent pipelines favor countries that already lead in narrow AI, creating governance asymmetries [36]. This dynamic connects directly to SDG 10 (reduced inequalities), as the distribution of AGI benefits may skew toward regions with substantial resources. The potential for AGI to address localized challenges, such as agricultural optimization or disease prevention, depends on inclusive research agendas that incorporate diverse perspectives [27]. However, many emerging economies focus on capacity building for narrower AI applications, leaving AGI an aspirational topic rather than an immediate priority [5,19]. Without broader collaboration, the Global South risks inheriting AGI systems designed for contexts unlike their own [37,53].

AGI’s potential impact on job markets raises concerns about automation-driven displacement and economic inequality, which directly influence SDG 8 (decent work and economic growth). Some researchers predict widespread job losses if AGI surpasses human performance in multiple domains [54], whereas others anticipate new industries and evolving skill demands [55]. Policymakers must navigate this transition by crafting inclusive policies that promote innovation while mitigating unemployment risk [38]. Taxation, data governance, and intellectual property frameworks may require global coordination to ensure the equitable distribution of the benefits of AGI [26]. Ethical debates also consider whether assigning hazardous or undesirable tasks to AGI challenges notions of autonomy and rights [56]. Philosophical discussions further probe whether highly advanced AI systems might warrant moral consideration, raising broader questions about AGI self-awareness [45,56]. These debates shape regulatory strategies, guiding safety protocols for increasingly autonomous AI [36,57].

AGI research continues to evolve across disciplines and regions, yet its implications for sustainable development remain largely theoretical, with limited empirical evidence on real-world applications [24,25]. In developing regions, resource constraints, regulatory environments, and cultural contexts could influence the design and governance of AGIs; however, these factors remain underexplored [24,35]. While AGI is often framed as a potential tool for addressing inequalities (SDG 10) and environmental challenges (SDGs 13–15), concerns persist regarding its ethical, economic, and security risks [45,58]. Scholars emphasize the need for interdisciplinary collaboration (integrating computer science, ethics, policy, and social sciences) to develop inclusive frameworks for AGI safety and governance [28,59]. Forecasts of the emergence of AGI vary, with surveys predicting timelines ranging from 2030 to 2060, reflecting uncertainty about its feasibility and societal impact [60]. By aligning AGI discussions with the SDGs, researchers seek to ensure that AI advancements, whether or not AGI materializes, contribute to equitable and sustainable progress [37].

3. Methods

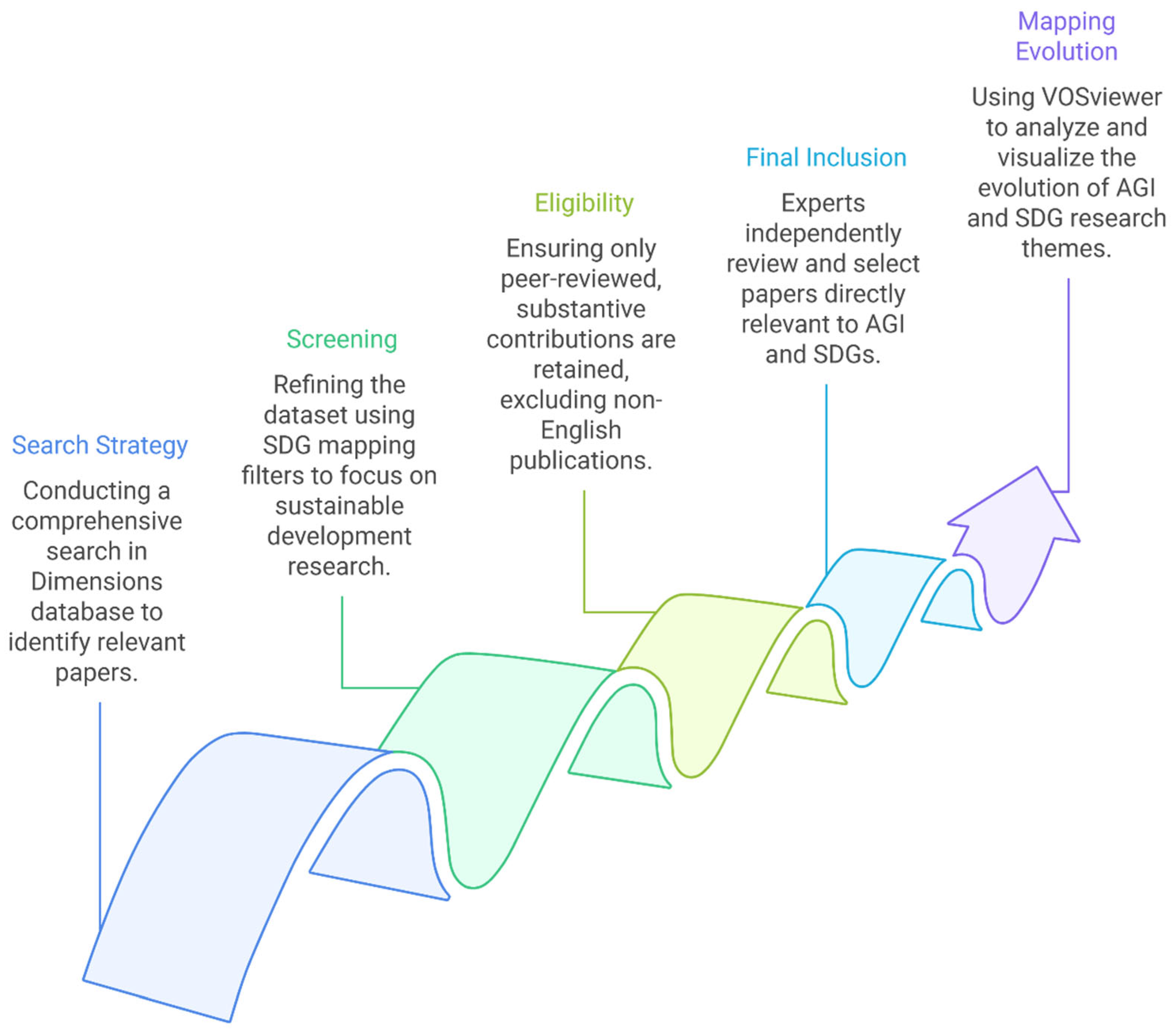

This study follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) framework [61] to ensure transparency, reproducibility, and methodological rigor in selecting and analyzing research publications related to AGI and the SDGs. The systematic review consists of four phases: identification, screening, eligibility, and inclusion (Figure 1).

Search strategy and identification. The search was conducted on 15 January 2025 in the Dimensions database, which was selected for its extensive journal coverage, with 82.2% more journals than Web of Science and 48.1% more than Scopus [62]. Dimensions was preferred over Google Scholar because it provides structured metadata, advanced filtering, and integrated bibliometric tools, which are essential for systematic review and replicability [63]. Unlike Google Scholar, Dimensions allows controlled, reproducible queries and access to detailed citation and funding data, which are crucial for analyzing funding patterns and institutional influences. To align with the adoption of the United Nations Sustainable Development Goals (SDGs) in 2015, this study included publications from 2015 onward. The search was applied to titles and abstracts using the following AGI-related terms: (“Artificial General Intelligence” OR “artificial GI” OR “strong AI” OR “strong Artificial Intelligence” OR “human level AI” OR “human level Artificial Intelligence” OR “artificial superintellig*” OR “Artificial General Intelligence System*” OR “Human-Like AI” OR “Superintelligent Systems” OR “Machine Superintelligence”). This search yielded an initial dataset of 2545 papers.
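To make the Boolean logic of the query concrete, the following minimal Python sketch approximates how the listed terms match a title or abstract: the phrases are OR-ed together, matching is case-insensitive, and a trailing `*` acts as a truncation wildcard. It does not reproduce the Dimensions search engine; treating spaces and hyphens as interchangeable separators, and the helper names `term_to_regex` and `matches_query`, are illustrative assumptions.

```python
import re

# Terms copied from the search string above; a trailing "*" marks truncation.
AGI_TERMS = [
    "Artificial General Intelligence", "artificial GI", "strong AI",
    "strong Artificial Intelligence", "human level AI",
    "human level Artificial Intelligence", "artificial superintellig*",
    "Artificial General Intelligence System*", "Human-Like AI",
    "Superintelligent Systems", "Machine Superintelligence",
]


def term_to_regex(term: str) -> str:
    """Translate one search term into a regex fragment.

    Assumption: spaces and hyphens are interchangeable word separators,
    and "*" matches any run of word characters. Real search engines
    tokenize differently; this is only an approximation.
    """
    truncated = term.endswith("*")
    words = re.split(r"[\s\-]+", term.rstrip("*"))
    body = r"[\s\-]+".join(re.escape(w) for w in words)
    return r"\b" + body + (r"\w*" if truncated else "") + r"\b"


# The terms are OR-ed together, as in the Boolean query.
QUERY = re.compile("|".join(term_to_regex(t) for t in AGI_TERMS), re.IGNORECASE)


def matches_query(title: str, abstract: str) -> bool:
    """True if any search term occurs in the title or abstract."""
    return QUERY.search(f"{title} {abstract}") is not None


print(matches_query("Governing artificial superintelligence", ""))  # True
print(matches_query("Crop-yield prediction", "A narrow AI model."))  # False
```

The word-boundary anchors matter: without the final `\b`, the term “strong AI” would also match unrelated phrases such as “strong aid”.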

Screening. To refine the dataset to research pertinent to sustainable development, SDG mapping was performed via Digital Science’s SDG mapping filters integrated within the Dimensions database. This approach was chosen over alternative SDG classification schemes, such as those of Aurora-Network-Global, Elsevier, and the University of Auckland, primarily because it employs machine learning-based queries that are systematically refined through expert review, providing empirically robust and reliable results.

Specifically, the SDG mapping filters applied in Dimensions leverage supervised machine learning models trained on large-scale labeled datasets, which typically include tens of thousands of expert-validated publications. These models systematically analyze textual data, such as abstracts, keywords, and titles, employing natural language processing (NLP) algorithms—including transformer-based embedding methods and ensemble classification techniques—to predict SDG alignment with quantifiable confidence levels. Key parameters such as precision, recall, and F1-scores are continuously monitored to ensure the accuracy and reliability of the classification. Additionally, probability scores are generated for each article, providing a quantifiable measure of relevance and enabling threshold-based filtering to maintain high-quality inclusion criteria. The iterative expert-validation process further enhances the reliability and robustness of these models, systematically reducing false positives and false negatives, thus strengthening the scientific rigor and objectivity of the SDG classification outcomes.
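The threshold-based filtering and the evaluation metrics described above can be illustrated with a short Python sketch. The predictions, expert labels, and the 0.6 cut-off below are hypothetical; the actual probability threshold applied by Dimensions is not stated in this section, and the class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class SdgPrediction:
    paper_id: str
    sdg: int             # SDG number, e.g. 3 for good health and well-being
    probability: float   # classifier confidence in [0, 1]


def filter_by_threshold(preds, threshold):
    """Keep the (paper, SDG) pairs whose probability meets the threshold."""
    return {(p.paper_id, p.sdg) for p in preds if p.probability >= threshold}


def precision_recall_f1(predicted, gold):
    """Compare predicted (paper, SDG) pairs against expert-validated labels."""
    true_pos = len(predicted & gold)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical predictions and expert labels, for illustration only.
preds = [
    SdgPrediction("p1", 3, 0.91),
    SdgPrediction("p2", 4, 0.40),
    SdgPrediction("p3", 7, 0.75),
    SdgPrediction("p4", 16, 0.62),
]
gold = {("p1", 3), ("p2", 4), ("p3", 7)}

kept = filter_by_threshold(preds, threshold=0.6)  # hypothetical cut-off
print(sorted(kept))  # [('p1', 3), ('p3', 7), ('p4', 16)]
print(precision_recall_f1(kept, gold))  # precision, recall, and F1 are each 2/3 here
```

The sketch also shows the trade-off that threshold selection involves: raising the cut-off to 0.8 keeps only `p1`, so precision rises to 1.0 while recall falls to 1/3.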

Prior studies have demonstrated the effectiveness and rigor of machine learning-driven SDG mapping methodologies, validating their ability to accurately identify research contributions aligned with sustainable development goals [64,65]. The application of this methodology narrowed the dataset down to 354 relevant papers, ensuring both methodological precision and analytical clarity.

Eligibility. Further refinement was carried out on the basis of document and publication types. The study included research articles, review articles, research chapters, and conference papers, ensuring that only peer-reviewed, substantive contributions were retained. To maintain a focus on formally published research, reference works, letters, and editorials were excluded, along with preprints and monographs. Non-English publications were excluded to ensure consistency in textual analysis and because the SDG mapping algorithms and NLP models are trained primarily on English-language corpora. This exclusion avoids misclassification due to language-specific nuances and ensures the interpretability of the thematic analysis. Potential omissions of relevant findings are acknowledged as a limitation of the study. After applying these criteria, the dataset was further refined to 199 papers.
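The eligibility criteria amount to a simple record-level filter. The Python sketch below applies them to a few hypothetical records; the field names `doc_type` and `language` and the type labels are assumptions for illustration and do not reflect the actual Dimensions export schema.

```python
# Document types retained in the eligibility phase (labels are illustrative,
# not the exact Dimensions type vocabulary).
INCLUDED_TYPES = {"research article", "review article", "research chapter", "conference paper"}


def is_eligible(record: dict) -> bool:
    """Apply the document-type and English-language criteria to one record.

    The field names 'doc_type' and 'language' are hypothetical.
    """
    doc_type = record.get("doc_type", "").strip().lower()
    language = record.get("language", "").strip().lower()
    return doc_type in INCLUDED_TYPES and language == "en"


records = [
    {"id": "a", "doc_type": "Research article", "language": "en"},
    {"id": "b", "doc_type": "Preprint", "language": "en"},
    {"id": "c", "doc_type": "Conference paper", "language": "de"},
    {"id": "d", "doc_type": "Review article", "language": "EN"},
    {"id": "e", "doc_type": "Editorial", "language": "en"},
]
eligible = [r["id"] for r in records if is_eligible(r)]
print(eligible)  # ['a', 'd']
```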

Final study inclusion. Two experts in AI and SDGs independently screened the titles and abstracts of all the articles remaining after the eligibility phase. Publications that did not explicitly address AGI and SDGs in their title and abstract were excluded. In cases where relevance was unclear, the full texts of the papers were examined for further confirmation. Any disagreements between the experts were resolved through consensus, and when necessary, a third expert was consulted for arbitration. This rigorous screening ensured that only studies directly relevant to AGI’s intersection with the SDGs were included in the final dataset.
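The dual-screening procedure can be sketched as follows in Python. The consensus discussion and third-expert arbitration are human steps; here they are represented by a hypothetical `arbitrate` callable that is consulted only when the two screeners disagree. The function names and example decisions are illustrative assumptions.

```python
def reconcile(expert_a, expert_b, arbitrate):
    """Combine two independent include/exclude screenings.

    expert_a, expert_b: dict mapping paper id -> True (include) / False (exclude).
    arbitrate: callable(paper_id) -> bool standing in for consensus discussion
    or a third expert; it is consulted only when the two screeners disagree.
    Returns (included ids, ids that needed arbitration).
    """
    if expert_a.keys() != expert_b.keys():
        raise ValueError("both experts must screen the same set of papers")
    included, disputed = set(), set()
    for pid in expert_a:
        if expert_a[pid] == expert_b[pid]:
            if expert_a[pid]:
                included.add(pid)
        else:
            disputed.add(pid)
            if arbitrate(pid):
                included.add(pid)
    return included, disputed


# Hypothetical screening decisions, for illustration only.
a = {"p1": True, "p2": False, "p3": True, "p4": False}
b = {"p1": True, "p2": False, "p3": False, "p4": True}
included, disputed = reconcile(a, b, arbitrate=lambda pid: pid == "p3")
print(sorted(included), sorted(disputed))  # ['p1', 'p3'] ['p3', 'p4']
```

Keeping the disputed set separate makes it straightforward to report how many papers required consensus or arbitration.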

The final dataset of 191 papers comprises peer-reviewed research discussing AGI in the context of the SDGs, providing a structured and systematic basis for evaluating the role of AGI in sustainable development. This selection process focuses the study on rigorous, high-quality research, allowing for bibliometric and content analysis that accurately maps AGI’s contributions to sustainability. By following a PRISMA-based methodology, this study supports transparency and replicability, providing a robust foundation for future research on the intersection of AGI with global development goals.
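The record counts reported for the four PRISMA phases imply how many records were excluded at each step. The short Python sketch below derives them; the counts come from this section, and the phase labels and the function name `prisma_flow` are illustrative.

```python
# Record counts reported for each PRISMA phase in this section.
STAGES = [
    ("Identification (Dimensions search)", 2545),
    ("Screening (SDG mapping filter)", 354),
    ("Eligibility (type and language)", 199),
    ("Inclusion (expert screening)", 191),
]


def prisma_flow(stages):
    """Return (phase, records kept, records excluded at that phase)."""
    rows, previous = [], None
    for name, kept in stages:
        excluded = 0 if previous is None else previous - kept
        rows.append((name, kept, excluded))
        previous = kept
    return rows


for name, kept, excluded in prisma_flow(STAGES):
    print(f"{name:<38} kept={kept:>5}  excluded={excluded:>5}")

retention = STAGES[-1][1] / STAGES[0][1]
print(f"overall retention: {retention:.1%}")  # 7.5%
```

The SDG mapping filter therefore removed 2191 records, the eligibility criteria 155, and the expert screening 8, leaving about 7.5% of the initial search results.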

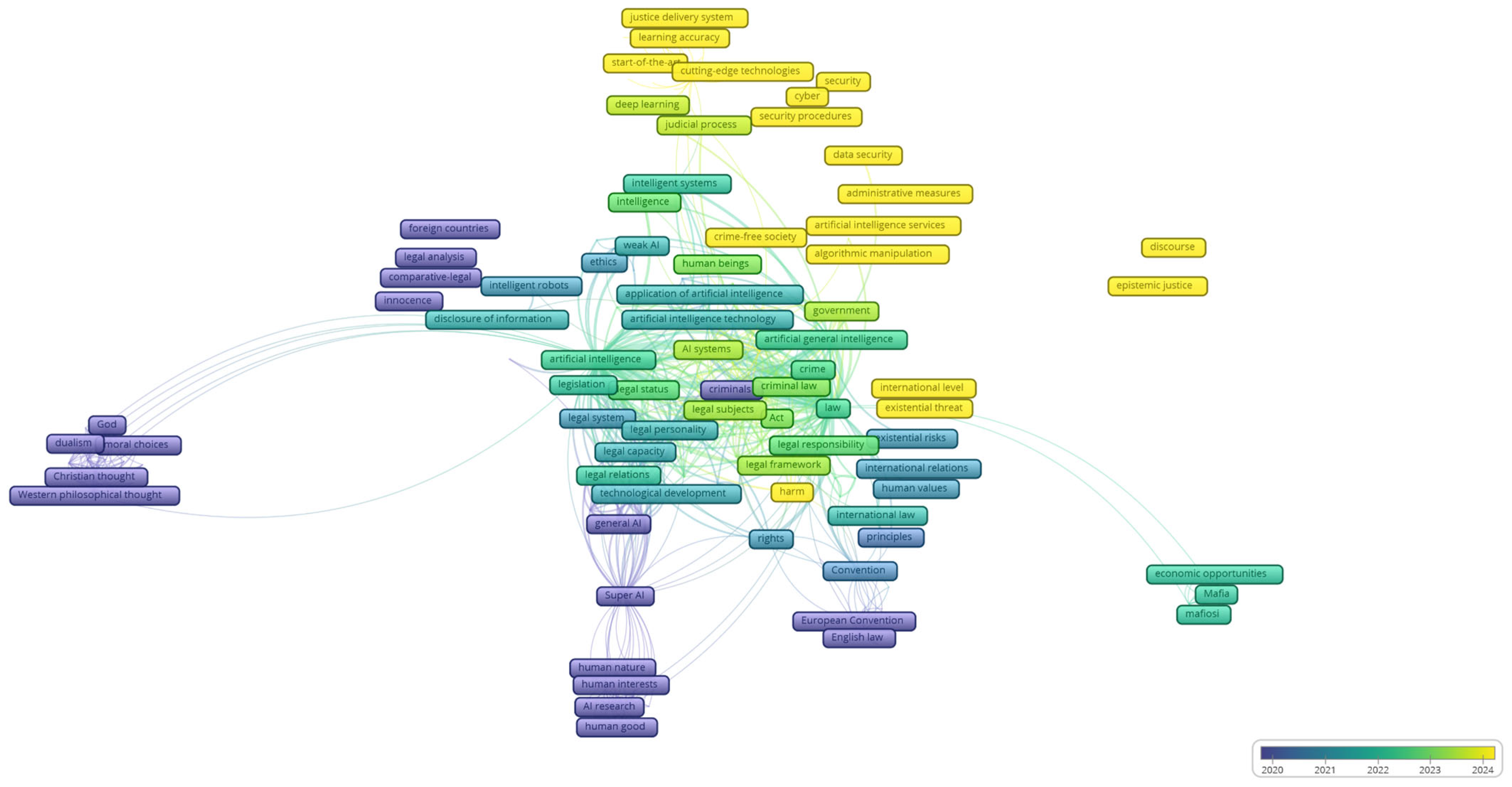

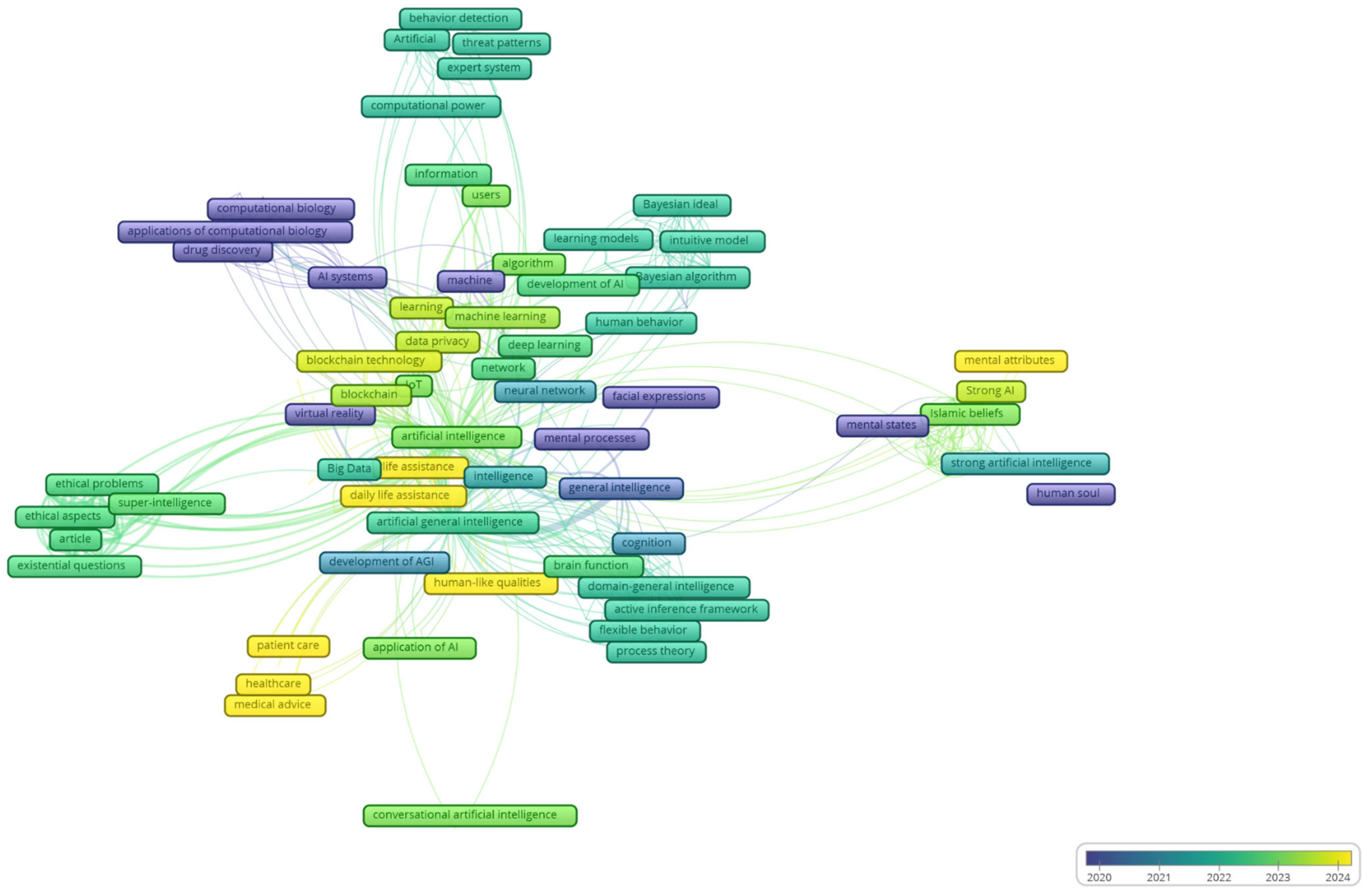

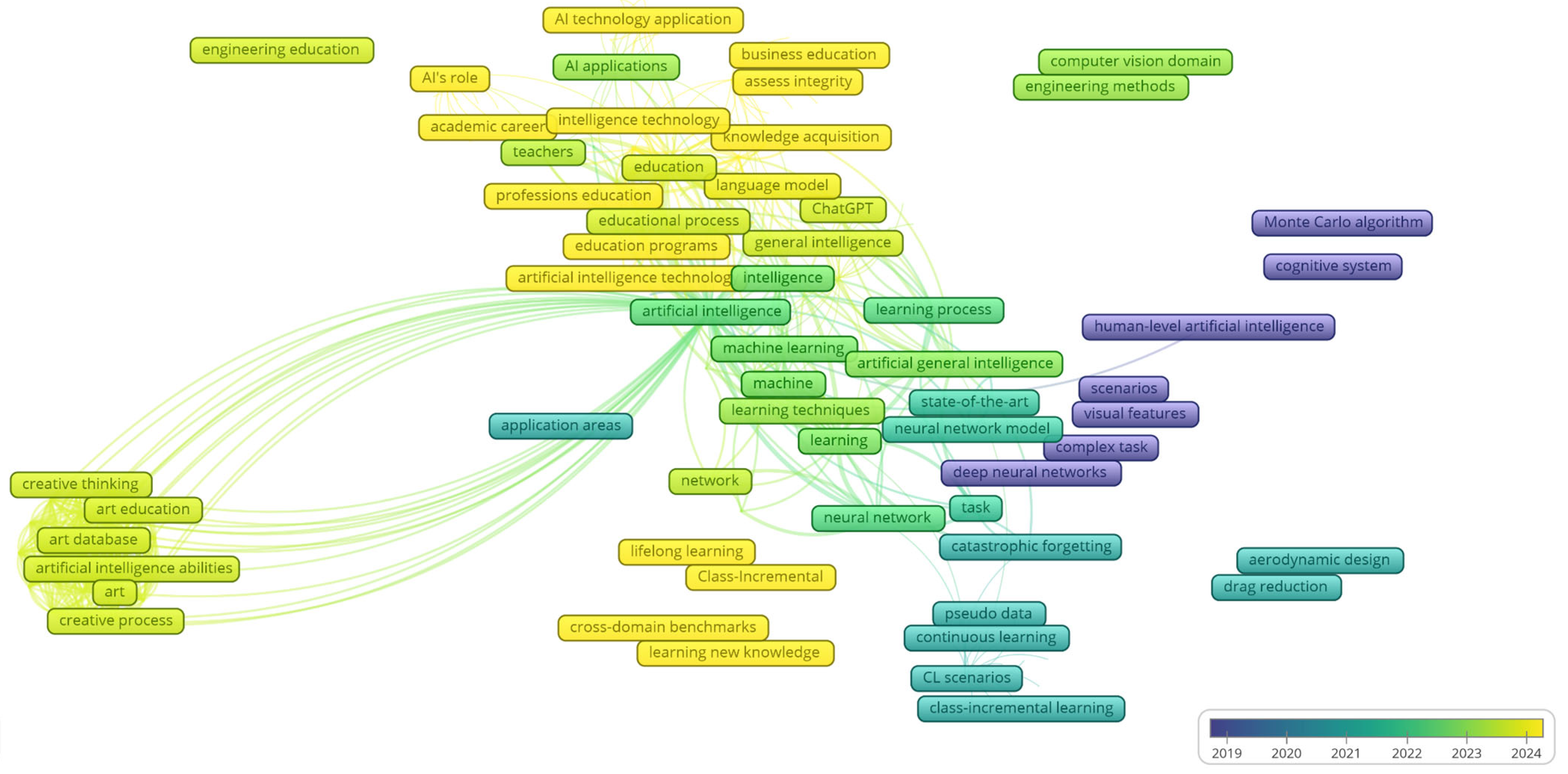

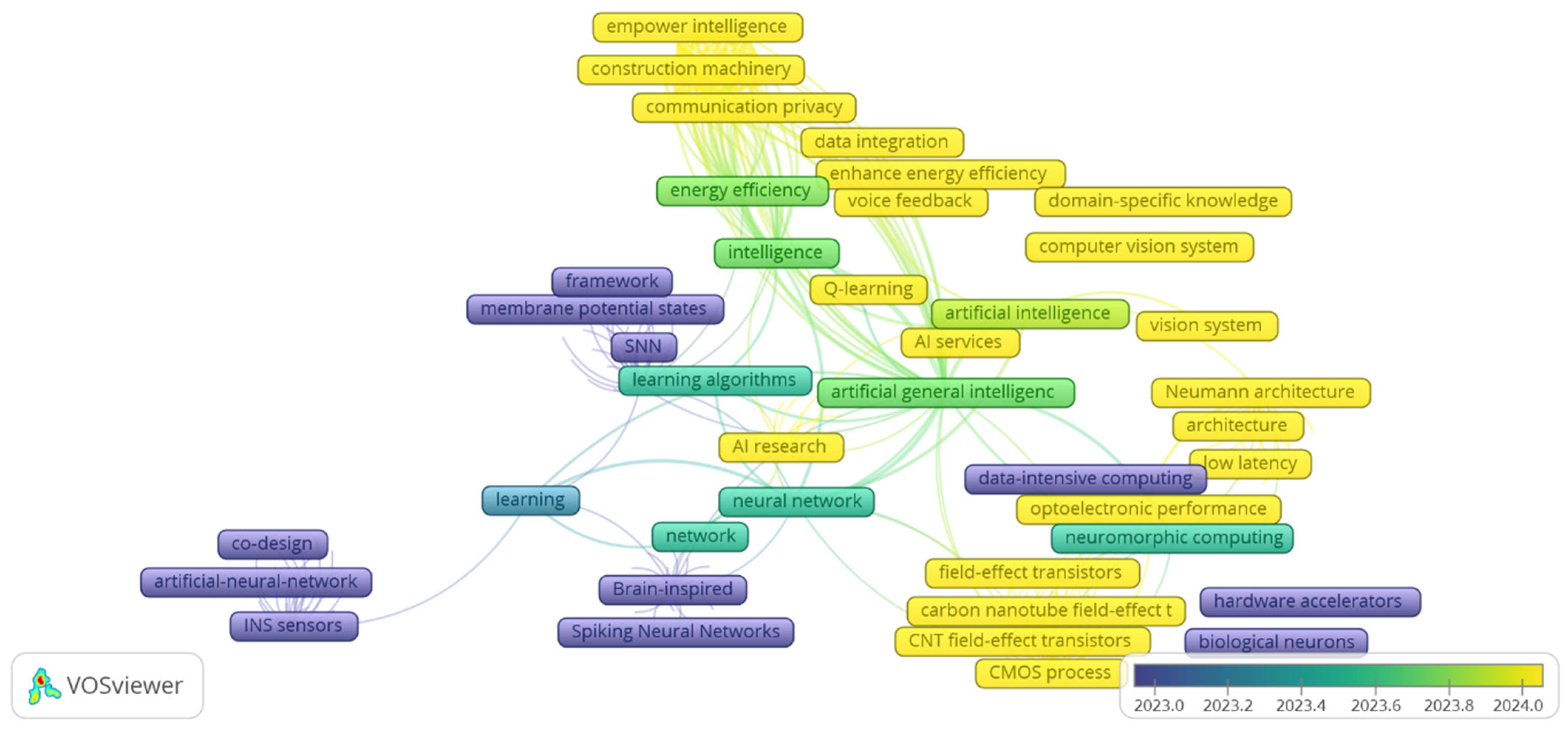

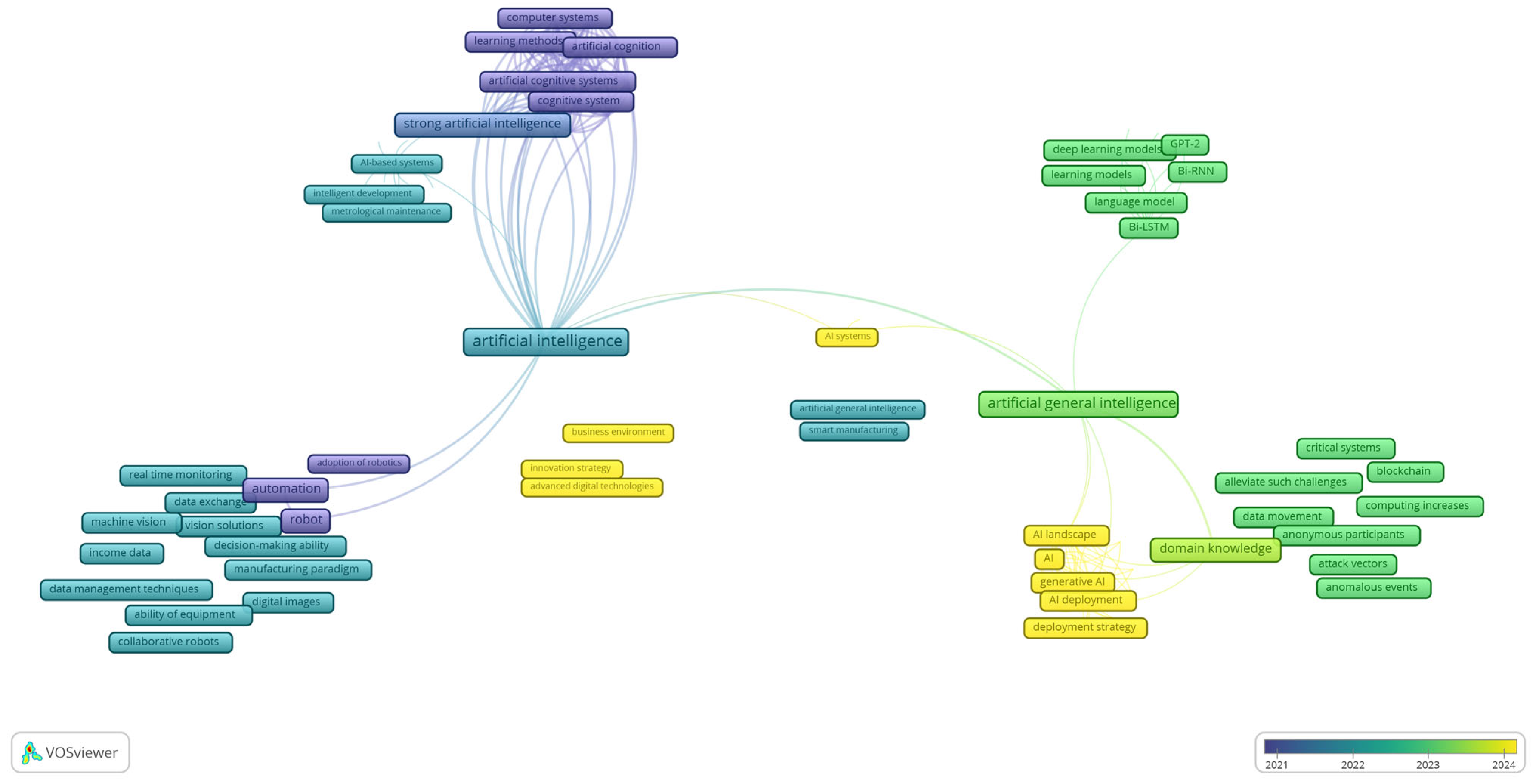

Mapping the evolution of AGI and SDG research via VOSviewer (v1.6.20). To analyze the evolution of AGI research in the context of SDGs, we employed VOSviewer, a widely used scientometric analysis tool designed for visualizing and clustering relationships between keywords, coauthorship networks, and research trends [66]. The overlay visualization feature of VOSviewer was utilized to track the temporal progression of AGI-related research themes, allowing us to observe how specific topics have gained prominence over time.

To extract meaningful insights, the clustering algorithm in VOSviewer was applied to co-occurrence networks of keywords to group them into thematically related clusters. The VOSviewer keyword co-occurrence diagram visualizes the relationships between keywords in AGI and SDG research. Circles represent keywords, with larger circles indicating a higher frequency of occurrence in the dataset. The colors denote clusters, grouping related keywords on the basis of thematic similarity. The thickness of lines between nodes signifies the strength of co-occurrence, with thicker lines indicating a stronger association between terms. This visualization helps identify research trends, interdisciplinary linkages, and evolving thematic focus areas over time.

The clustering resolution parameter was systematically tested between 0.5 and 1.2 to assess its impact on the number and cohesion of clusters. At lower resolutions, clusters merged, and key thematic distinctions were lost. At higher resolutions, clusters became fragmented and difficult to interpret. The chosen resolution of 0.8 with a minimum keyword occurrence threshold of 5 was found to balance granularity and interpretability. These parameter choices directly influence the identification of research themes, the visibility of emerging topics, and the mapping of thematic patterns to specific SDGs. Trends and patterns identified through the clusters were further cross-referenced with SDG mapping probabilities to assess which clusters aligned with which SDG targets, ensuring that the visual analysis contributed meaningfully to understanding how AGI research themes connect to sustainable development priorities.
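The network that VOSviewer clusters is built from keyword occurrence and co-occurrence counts. The Python sketch below constructs such a network with the minimum occurrence threshold of 5 used in this study. It applies full counting (one of the counting methods VOSviewer offers) and does not reproduce VOSviewer's own clustering at resolution 0.8. The toy corpus and function name are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_network(papers, min_occurrences=5):
    """Build keyword nodes and co-occurrence links with full counting.

    papers: list of keyword lists, one list per publication.
    A keyword is kept only if it occurs in at least `min_occurrences`
    publications; a link's weight is the number of publications in which
    both of its keywords appear.
    """
    occurrences = Counter(kw for kws in papers for kw in set(kws))
    kept = {kw for kw, n in occurrences.items() if n >= min_occurrences}
    links = Counter()
    for kws in papers:
        present = sorted(set(kws) & kept)
        for pair in combinations(present, 2):
            links[pair] += 1
    nodes = {kw: occurrences[kw] for kw in kept}
    return nodes, links


# Toy corpus: "energy" appears in only 2 papers and falls below the threshold.
papers = [["agi", "health"]] * 5 + [["agi", "energy"]] * 2
nodes, links = cooccurrence_network(papers, min_occurrences=5)
print(nodes)         # agi occurs 7 times, health 5 times
print(dict(links))   # {('agi', 'health'): 5}
```

In the resulting map, node counts correspond to circle size and link weights to line thickness, and the threshold determines which keywords are visible at all before clustering.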

4. Results

4.1. AGI Research and Subject Areas

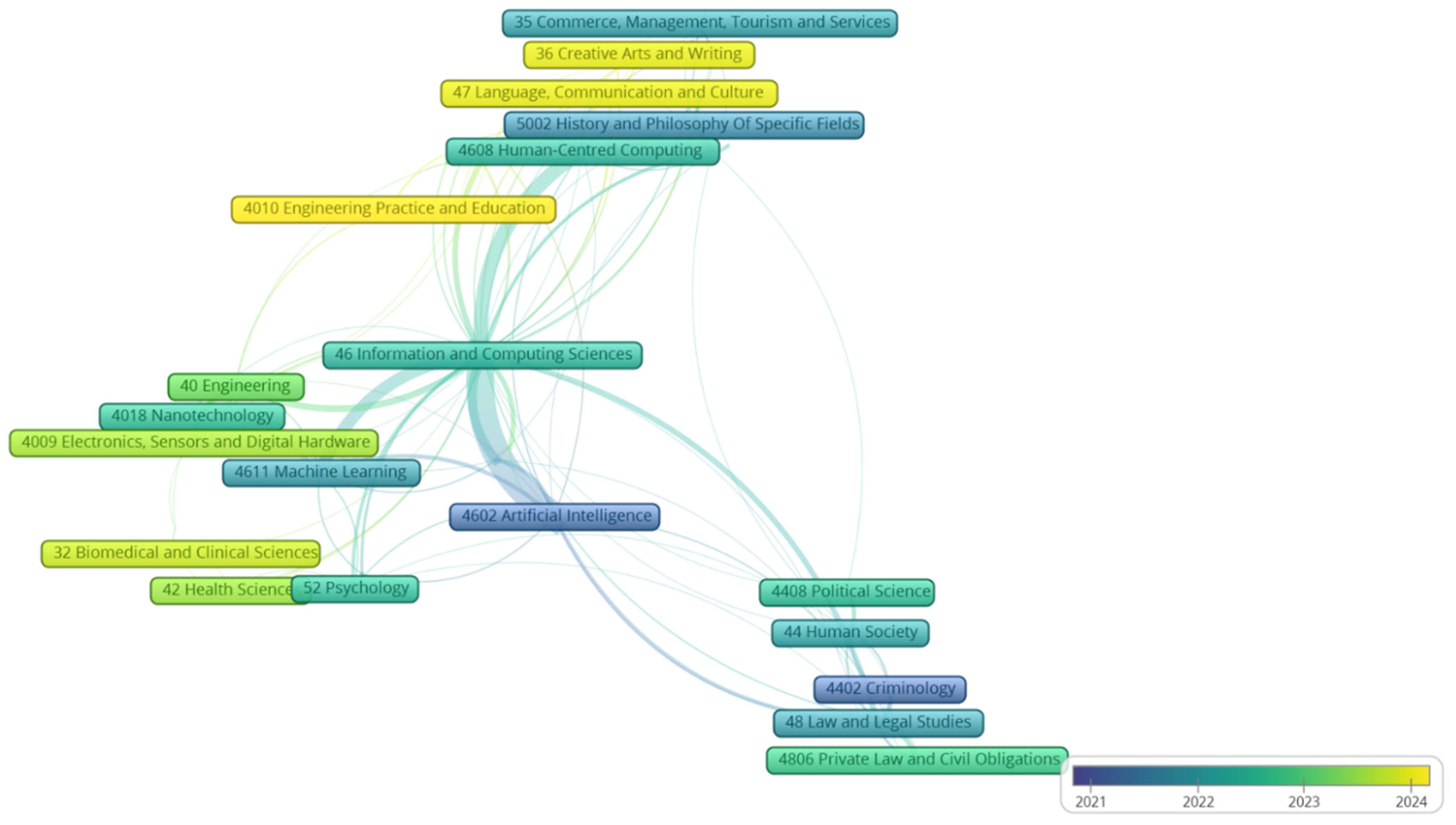

The evolving landscape of research loosely associated with artificial general intelligence (AGI) reveals a steady diversification beyond its early focus in information and computing sciences, artificial intelligence, and machine learning (Figure 2). While the current trajectory may reflect the evolution of increasingly adaptable narrow AI rather than true general intelligence, there is a discernible trend toward embedding AI capabilities in human-centered domains such as biomedical sciences, cognitive modeling, and mental health. In these domains, AGI-related research contributes to SDG 3 by improving the accessibility and quality of medical services through clinical decision support, reducing the incidence of diseases through early detection and surveillance, and improving service fairness through universal coverage initiatives. In education (SDG 4), adaptive and personalized learning systems improve access to quality education, support equitable outcomes across learner groups, and enhance teacher training capacity. In energy (SDG 7), grid optimization and decentralized energy management reduce losses, integrate renewable sources, and improve affordability and access. In industry and infrastructure (SDG 9), AGI research supports industrial resilience through predictive maintenance, process automation, and smart logistics. In governance (SDG 16), AGI research explores mechanisms such as legal analysis, decision support, and digital security to strengthen institutional accountability, transparency, and access to justice. Simultaneously, exploratory linkages with law, criminology, and political science suggest AGI’s theoretical and applied relevance to institutional design, legal analysis, and policy modeling.

By 2024, AGI-related discourse had expanded into education, the arts, and social systems, positioning advanced AI as a potential enabler of pedagogical transformation, cultural production, and social innovation. Although the maturity of AGI remains speculative, its disciplinary spread invites careful forecasting of long-term societal impacts, including rising energy demands (SDG 7.3), ethical oversight, and labor transformation. The continued prominence of computing and engineering disciplines underlines their foundational role, whereas emerging ties with social sciences, law, and environmental governance signal the growing importance of responsible, cross-sectoral innovation. The mapping of these subject areas to the SDGs highlights moderate alignment depth in SDGs 3, 4, and 7 and emerging breadth in SDGs 9, 10, and 16. To strengthen alignment, future research should systematically integrate AGI development with clear social outcome indicators across these goals.

4.2. AGI Research Across Geographic Locations

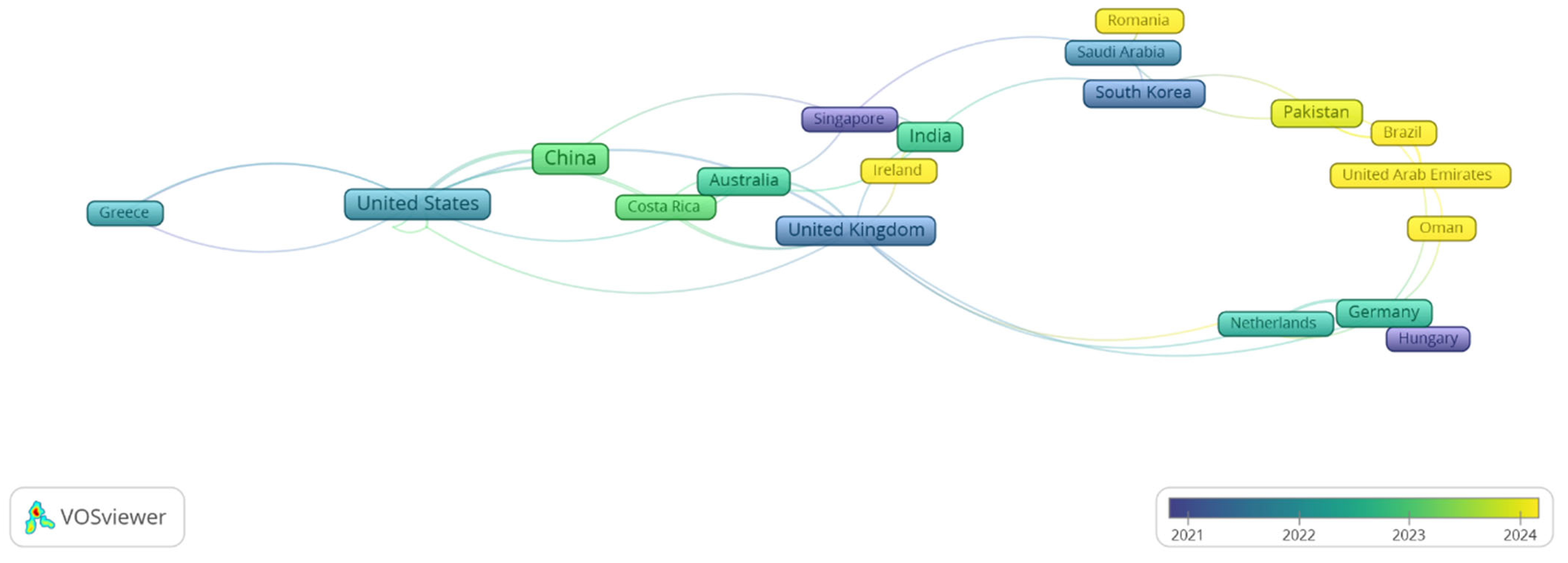

The geographical distribution of AGI-related research has gradually shifted from a core concentration in North America, Western Europe, and China to broader participation across Asia, the Middle East, and Latin America (Figure 3). Between 2020 and 2022, countries such as the United States, the United Kingdom, Germany, China, and Australia led AGI experimentation, reflecting their mature research ecosystems, advanced computing infrastructure, and well-established innovation policy frameworks. As the perceived scope of AGI has expanded, emerging economies such as India, Singapore, and South Korea have gained visibility, signaling efforts to strategically position themselves in shaping the next phase of AI and governance technologies.

Since 2023, the inclusion of countries such as Pakistan, Costa Rica, Romania, and Oman indicates a broadening of interest and capability in AGI research, even if uneven in scale. The participation of emerging economies contributes directly to SDG 10 by reducing inequalities and to SDG 16 by strengthening institutional capacity. The evidence suggests that contributions from emerging economies accounted for approximately 12% of AGI–SDG mapped publications from 2023 to 2024, particularly in healthcare, education, energy, industry, and governance applications. These contributions are enabled by mechanisms such as capacity building through local training programs, the customization of research agendas to meet regional needs, and participation in international collaborations. These mechanisms expand access to medical innovations (SDG 3), enable inclusive education (SDG 4), improve local energy infrastructure (SDG 7), strengthen industrial competitiveness (SDG 9), and enhance institutional resilience (SDG 16). Without continued policy and funding support, however, these impacts risk being constrained by resource and governance asymmetries. These developments point to a potential democratization of AGI-related exploration but also raise questions about institutional preparedness, resource allocation, and governance standards.

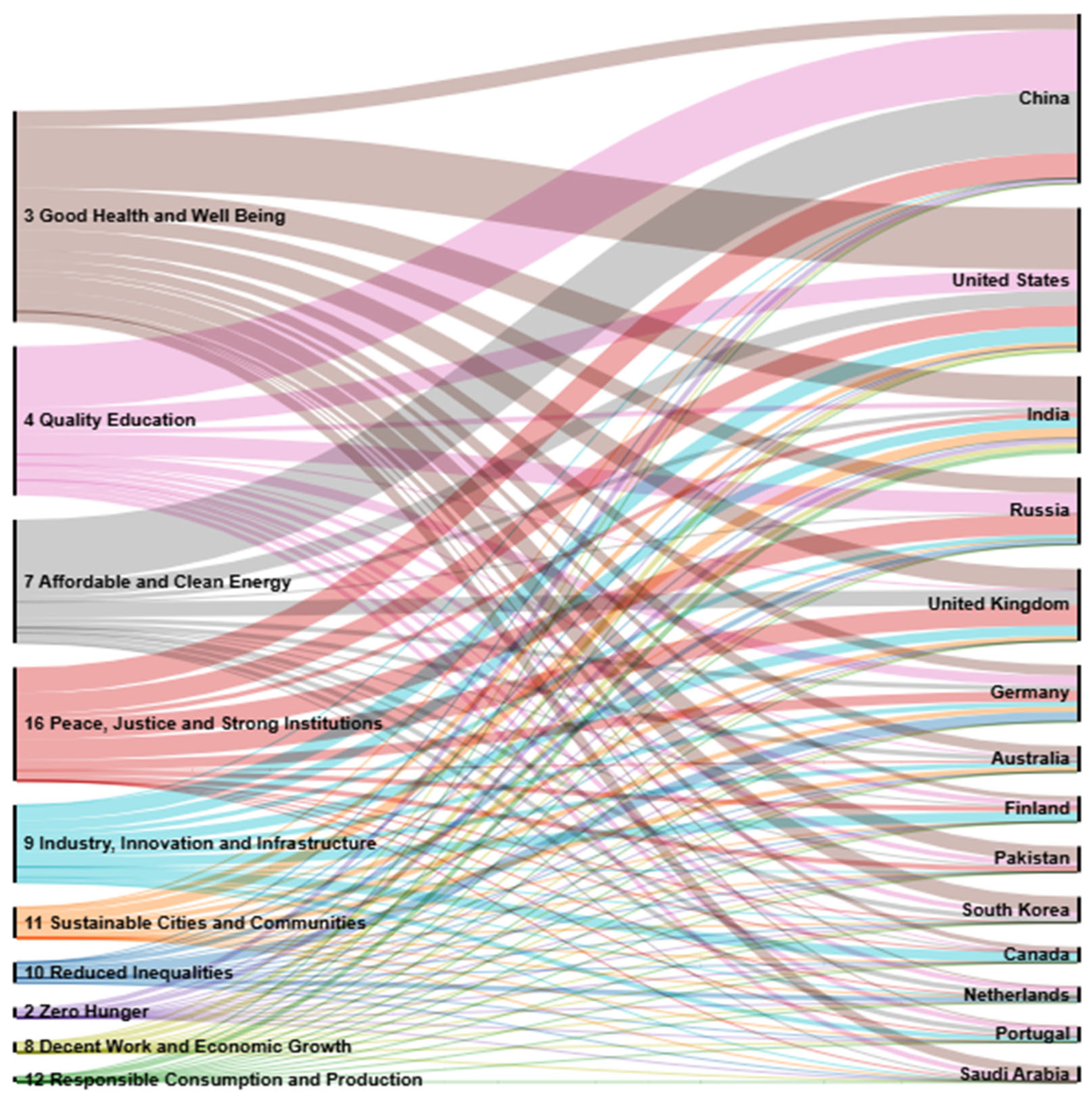

Mapping AGI research with the SDGs reveals diverse national orientations shaped by local capacities and strategic interests (Figure 4). While it is not yet clear whether these efforts constitute AGI in a strict sense, they reflect a trajectory toward increasingly generalizable, adaptive, and human-aligned AI systems. Countries such as the United States, China, and India show substantial activity under SDG 3 (good health and well-being), suggesting that advanced AI, possibly on a path toward AGI, is being explored in areas such as diagnostics, public health surveillance, and clinical decision support. China’s strong alignment with SDG 4 (quality education) may reflect national investments in intelligent learning environments and personalized educational technologies, which could evolve toward broader AGI capabilities over time. Similarly, research linked to SDG 7 (affordable and clean energy) in the US, UK, and China points to a growing interest in leveraging AI for energy system optimization, an application that may eventually require more general cognitive abilities.

Moreover, emerging contributions in areas such as SDG 16 (peace, justice and strong institutions) and SDG 9 (industry, innovation and infrastructure) suggest that some countries—such as Germany, Pakistan, Canada, and Finland—are exploring AI tools that could shape legal reasoning, public governance, or industrial resilience, all of which are domains where generalizable intelligence could have long-term utility. Although the extent to which current work reflects AGI ambitions remains uncertain, the expanding thematic scope—from healthcare and education to governance and sustainability—indicates a widening field of experimentation. Countries such as India, Australia, and the Netherlands are beginning to link advanced AI to SDG 10 (reduced inequalities) and SDG 11 (sustainable cities), suggesting that adaptable, multidomain AI could address complex, systemic challenges in the future. While it is premature to label all of this research as AGI, its broadening application space and alignment with long-term development goals suggest fertile ground for future AGI-relevant innovations.
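A country-by-SDG mapping of this kind can be derived by cross-tabulating each publication's affiliated countries against its mapped SDGs. The Python sketch below does this with full counting on hypothetical records; the field names `countries` and `sdgs` are assumptions, and the counting scheme actually used to produce Figure 4 is not specified in this section.

```python
from collections import Counter, defaultdict


def country_sdg_matrix(records):
    """Count publications per (country, SDG) cell with full counting.

    records: iterable of dicts with hypothetical 'countries' and 'sdgs' lists.
    A paper with two countries and two SDGs adds 1 to each of the four cells.
    dict.fromkeys removes duplicates while keeping the original order.
    """
    matrix = defaultdict(Counter)
    for r in records:
        for country in dict.fromkeys(r["countries"]):
            for sdg in dict.fromkeys(r["sdgs"]):
                matrix[country][sdg] += 1
    return matrix


def dominant_sdg(matrix):
    """Most frequent SDG per country (ties go to the SDG counted first)."""
    return {country: counts.most_common(1)[0][0] for country, counts in matrix.items()}


# Hypothetical records, for illustration only.
records = [
    {"countries": ["US", "CN"], "sdgs": [3]},
    {"countries": ["CN"], "sdgs": [4]},
    {"countries": ["CN"], "sdgs": [4]},
    {"countries": ["US"], "sdgs": [7, 3]},
]
matrix = country_sdg_matrix(records)
print(dominant_sdg(matrix))  # {'US': 3, 'CN': 4}
```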

The systematic evaluation of AGI–SDG alignment shows that alignment depth, measured as the proportion of publications explicitly mapped to SDG clusters, reached 38% in 2024. Alignment breadth, measured as normalized keyword centrality scores, ranged from 0.42 to 0.67 across SDG targets. On the basis of these indicators, the alignment remains moderate but expanding, with the strongest alignment with SDGs 3, 4, and 7 and emerging alignment with SDGs 9, 10, and 16. These indicators can serve as benchmarks for tracking progress, prioritizing funding, and strengthening collaborations to increase both the depth and breadth of alignment over time.
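The two indicators can be computed as shown in the following Python sketch. Depth follows the definition above (the share of publications mapped to at least one SDG). For breadth, the section does not specify which centrality measure was used, so normalized degree centrality in an undirected keyword co-occurrence graph is assumed here. All inputs are toy data and do not reproduce the reported values.

```python
from collections import defaultdict


def alignment_depth(papers):
    """Share of publications mapped to at least one SDG (the depth indicator)."""
    if not papers:
        return 0.0
    return sum(1 for p in papers if p["sdgs"]) / len(papers)


def normalized_degree_centrality(edges):
    """Degree / (n - 1) for each keyword in an undirected co-occurrence graph.

    Degree centrality is an assumption here; the section does not state
    which centrality measure underlies the breadth scores.
    """
    neighbours = defaultdict(set)
    for a, b in edges:
        if a != b:
            neighbours[a].add(b)
            neighbours[b].add(a)
    n = len(neighbours)
    if n < 2:
        return {node: 0.0 for node in neighbours}
    return {node: len(nbrs) / (n - 1) for node, nbrs in neighbours.items()}


# Toy inputs, for illustration only.
papers = [{"sdgs": [3]}] * 12 + [{"sdgs": []}] * 28
edges = [("sdg 3", "diagnostics"), ("sdg 3", "ethics"), ("sdg 7", "grid"), ("ethics", "grid")]

print(alignment_depth(papers))  # 0.3
centrality = normalized_degree_centrality(edges)
print(centrality["sdg 3"], centrality["sdg 7"])  # 0.5 0.25
```

Because both indicators fall between 0 and 1, they can be tracked year over year as benchmarks, as suggested above.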

4.3. AGI Research and Funding Perspectives

While AGI remains an aspirational goal, global funding patterns increasingly reflect interest in developing highly capable, general-purpose AI systems [51,67]. Recent years have seen a surge in public and private investment, exceeding 50 billion USD in 2023 alone, led by technology firms and national governments seeking strategic advantages. High-profile initiatives, such as Alphabet’s DeepMind, the US government’s proposed large-scale AGI investment, and xAI’s 6 billion USD funding round, underscore the perception of AGI as a transformative technology. However, the direction of these investments varies, with some aimed at enhancing model safety and reliability and others focused on maintaining geopolitical competitiveness. These developments raise questions about how funding priorities may influence the trajectory of AGI, including whether it will ultimately serve broad societal goals or remain concentrated within commercial and strategic domains.

Some research funding, although not always explicitly labeled AGI, has begun supporting applications that align with the SDGs. Funders including the NIA and EC are backing AI-driven innovations in diagnostics and clinical decision-making (SDG 3), whereas organizations such as NSF CISE and EPSRC support intelligent learning systems in education (SDG 4). Similar funding extends to energy optimization (SDG 7), industrial innovation (SDG 9), and governance technologies (SDG 16), suggesting a growing interest in using advanced AI to address global challenges. Nevertheless, it remains uncertain whether these initiatives are directly advancing AGI or enhancing narrow AI capabilities that may lay the groundwork for general intelligence in the future. As such, monitoring how funding flows shape research priorities, especially in terms of equity, transparency, and sustainability, will be essential for evaluating AGI’s long-term alignment with inclusive and responsible innovation.

4.4. AGI Research and Institutional Collaboration

The network of collaborations displayed in Figure 5 highlights the increasingly global and interdisciplinary nature of AGI-related research. While it remains uncertain whether these efforts directly contribute to artificial general intelligence in its fullest sense, the participating institutions (including Harvard University, Shanghai Clinical Research Center, and Gulf Medical University) indicate that advanced AI research is being shaped by actors spanning computational, medical, and cognitive domains. The notable involvement of neuroscience and imaging centers suggests a shift toward integrating human-centered knowledge systems, particularly in relation to SDG 3 (good health and well-being), where AGI-like systems may eventually aid in diagnostics, brain modeling, or personalized treatment. This signals a broadening of AGI development from purely algorithmic pursuits to more applied, interdisciplinary pathways.

Equally significant is the multipolar nature of this network. Institutions from China, the Middle East, and other parts of Asia, including United Imaging Healthcare, Sultan Qaboos University, and ShanghaiTech University, are emerging as influential contributors, sometimes outside the orbit of traditional Western AI governance frameworks. This decentralized expansion could shape diverse agendas for AGI deployment, from smart healthcare infrastructure (SDG 9: industry, innovation and infrastructure) to regional models of AI ethics and governance (SDG 16: peace, justice and strong institutions). Whether these collaborations define the field’s long-term direction or represent one of many parallel trajectories remains to be determined. Nonetheless, the visualization underscores a growing need for anticipatory coordination among institutions to balance innovation with equitable and sustainable development imperatives across contexts.
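A collaboration network of the kind described above is typically derived from author affiliations. The sketch below assumes that each paper is represented as a list of (institution, country) pairs; it counts weighted links between distinct co-authoring institutions and the share of papers involving each country, a simple proxy for how multipolar the network is. The institution names and records are hypothetical placeholders, and this is not the tool used to produce Figure 5.

```python
from collections import Counter
from itertools import combinations

# Hypothetical input: each paper lists (institution, country) pairs for its authors.
papers = [
    [("Inst A", "China"), ("Inst B", "Oman"), ("Inst C", "USA")],
    [("Inst A", "China"), ("Inst D", "China")],
    [("Inst C", "USA"), ("Inst E", "UAE")],
]


def collaboration_edges(papers):
    """Count undirected co-authorship links between distinct institutions."""
    edges = Counter()
    for affiliations in papers:
        institutions = sorted({inst for inst, _ in affiliations})
        for a, b in combinations(institutions, 2):
            edges[(a, b)] += 1  # sorted order keeps (a, b) and (b, a) as one key
    return edges


def weighted_degree(edges):
    """Total link strength per institution (sum of its edge weights)."""
    strength = Counter()
    for (a, b), weight in edges.items():
        strength[a] += weight
        strength[b] += weight
    return strength


def country_share(papers):
    """Fraction of papers with at least one author from each country."""
    counts = Counter()
    for affiliations in papers:
        for country in {c for _, c in affiliations}:
            counts[country] += 1
    return {country: n / len(papers) for country, n in counts.items()}


if __name__ == "__main__":
    edges = collaboration_edges(papers)
    print(weighted_degree(edges).most_common(2))
    print(country_share(papers))
```

Weighted degree rewards institutions that collaborate often; a study focused on brokerage between regions might prefer betweenness centrality instead.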

4.5. Mapping of AGI Research to Sustainable Development Goals

AGI and SDG 16 (peace, justice and strong institutions). Over time, the intersection of AGI and SDG 16 has transitioned from philosophical and legal debates to explorations of its potential applications in governance, law enforcement, and security (Figure 6).

In 2020, discussions focused on foundational ethical and legal concerns, with terms such as “AI research,” “human dignity,” and “human interests” reflecting growing awareness of the possible societal implications of AGI, particularly in relation to SDG 16.3’s emphasis on the rule of law and equal access to justice. Emerging themes such as “legal analysis,” “presumption of consent,” and “relevant legal principles” indicated early considerations of how AGI could fit within legal frameworks, aligning with SDG 16.10’s goal of ensuring public access to information and protecting fundamental freedoms. By 2021, the discourse had expanded to include questions about the legal identity and responsibilities of AI, as seen in discussions of “AI ethics,” “human rights,” and “legal personalities.” The inclusion of “contract law,” “robot rights,” and “international law” pointed to efforts to conceptualize governance mechanisms for AGI, reinforcing SDG 16.6’s focus on institutional accountability and transparency.

From 2022 onward, discussions increasingly examined the potential role of AGI in law enforcement and governance. Terms such as “legal regulation,” “judicial practice,” and “criminal responsibility” reflected growing speculation about how AGI-driven systems might influence legal decision-making, whereas concerns over “weapons,” “humanitarian law,” and “crime” underscored anxieties regarding AI’s prospective role in security, linking to SDG 16.a’s aim of strengthening institutions to combat violence and terrorism. By 2023, references to “criminal law,” the “court system,” and “intellectual property protection” suggested increased academic engagement with AGI’s hypothetical contributions to law enforcement and justice. In 2024, the discourse shifted toward digital governance and cybersecurity, with terms such as “algorithmic manipulation,” “cybersecurity risks,” and “data security protection system” emphasizing the challenges associated with AI-driven misinformation and cyber threats, reinforcing SDG 16.10. Simultaneously, the focus on “crime-free society,” “criminal investigations,” and “justice delivery system” highlighted AGI’s potential to influence crime prevention and legal processes, whereas discussions around “ethical concerns,” “existential threat,” and “epistemic justice” underscored the continuing need to balance AI advancements with human rights and fairness.
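The year-by-year mapping of keywords to SDG targets described above can be sketched as a lookup from terms to targets, followed by a per-target timeline. The term lists below are a small subset of the SDG 16 terms reported in the text. The term-to-target dictionary is a hypothetical, manually curated illustration that loosely follows the links drawn above; it is not the study's actual coding scheme. Terms without a mapping are reported separately so that they can be reviewed by hand.

```python
from collections import defaultdict

# Illustrative subset of the SDG 16 terms reported in the text, keyed by year.
TERMS_BY_YEAR = {
    2020: ["human dignity", "legal analysis", "presumption of consent"],
    2021: ["human rights", "contract law", "international law"],
    2022: ["judicial practice", "humanitarian law", "weapons"],
    2024: ["algorithmic manipulation", "cybersecurity risks", "justice delivery system"],
}

# Hypothetical term-to-target lookup, loosely following the links drawn in the text.
TERM_TO_TARGET = {
    "human dignity": "16.3",
    "legal analysis": "16.10",
    "presumption of consent": "16.10",
    "contract law": "16.6",
    "international law": "16.6",
    "humanitarian law": "16.a",
    "weapons": "16.a",
    "algorithmic manipulation": "16.10",
    "cybersecurity risks": "16.10",
}


def target_timeline(terms_by_year, term_to_target):
    """Return {target: sorted years in which at least one mapped term appears}."""
    years = defaultdict(set)
    for year, terms in terms_by_year.items():
        for term in terms:
            target = term_to_target.get(term)
            if target is not None:  # unmapped terms are skipped here
                years[target].add(year)
    return {target: sorted(ys) for target, ys in years.items()}


def unmapped_terms(terms_by_year, term_to_target):
    """Terms that still need a manual SDG-target decision."""
    return sorted(
        {t for terms in terms_by_year.values() for t in terms if t not in term_to_target}
    )


if __name__ == "__main__":
    for target, years in sorted(target_timeline(TERMS_BY_YEAR, TERM_TO_TARGET).items()):
        print(target, years)
    print("needs review:", unmapped_terms(TERMS_BY_YEAR, TERM_TO_TARGET))
```

Keeping the lookup explicit makes the mapping auditable: any disagreement about, for example, whether “judicial practice” belongs to target 16.3 or 16.6 becomes a one-line change rather than a hidden modeling decision.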

AGI and SDG 3 (good health and well-being). Over time, discussions on AGI and healthcare have evolved, reflecting AGI’s perceived potential to advance medical decision-making, diagnostics, and accessibility (Figure 7). In 2019, research focused on the prospective role of AGI in biomedical advancements, with terms such as “computational biology,” “drug discovery,” and “genomic profiling” emphasizing its implications for precision medicine. The integration of “next-generation sequencing” and “structural biology” suggested the exploration of AI-driven approaches for personalized treatment, aligning with SDG 3.b, which promotes research and development for essential medicines and vaccines. Simultaneously, the potential of AGI in diagnostics was reflected in discussions on “high-resolution medical images” and “machine learning systems,” reinforcing SDG 3.4’s goal of reducing mortality from noncommunicable diseases through early detection and intervention. By 2020, the discourse had expanded to include “AI systems,” “biological organization,” and “mental processes,” indicating a growing interest in the role of AGI in the cognitive sciences and mental health care. The rise of “virtual reality” and “artificial neural networks” pointed to AI-driven therapeutic interventions and cognitive rehabilitation, supporting SDG 3.4’s call to promote mental health and well-being and SDG 3.5’s focus on the prevention and treatment of substance abuse.

From 2021 onward, research has increasingly explored the potential contributions of AGI to healthcare decision-making, with terms such as “medical AI,” “neural networks,” and “strong artificial intelligence” reflecting the ambition to develop more autonomous AI-assisted clinical reasoning. By 2022, discussions had expanded to include “Bayesian algorithms,” “behavior detection,” and “predictive processing theory,” highlighting AI’s possible role in diagnostics, behavioral modeling, and real-time health monitoring and reinforcing SDG 3.d’s emphasis on early warning and risk reduction for global health risks. Simultaneously, concerns over “cybersecurity,” “quantum encryption,” and “protecting sensitive information” underscored the importance of ensuring data security in AI-driven healthcare systems, aligning with SDG 3.8’s commitment to universal health coverage. In 2023, the integration of technologies such as the “IoT,” “blockchain technology,” and “smart hospitals” signaled a shift toward discussions on secure and efficient AI-powered healthcare infrastructure. By 2024, the discourse emphasized AGI’s potential role in “clinical processes,” “medication access,” and “medical education,” suggesting that it could enhance healthcare delivery and training in support of SDG 3.c. Looking ahead to 2025, discussions anticipate AGI-driven “robotic systems” with “human-like qualities” shaping future caregiving, rehabilitation, and automated health diagnostics, reinforcing SDG 3.8’s vision of equitable access to healthcare through technological innovation.

AGI and SDG 4 (quality education). Over time, discussions on AGI and education have evolved, shifting from foundational debates on computational capabilities to explorations of its potential role in adaptive and personalized learning (Figure 8). In 2020, the discourse was shaped by “Strong AI” and “information technology,” reflecting the early exploration of AI applications in education systems. The emphasis on computational advancements suggested AI’s potential to enhance digital literacy and learning methodologies, aligning with SDG 4.4’s goal of equipping individuals with relevant skills for employment and entrepreneurship. By 2021, discussions had expanded to include “continuous learning,” “incremental learning,” and “reinforcement learning,” highlighting the perceived potential of AGI in adaptive education systems. The emergence of “generative models” and “intelligent agents” suggested that AI-driven personalized learning experiences were an area of growing academic interest, whereas discussions on “deep reinforcement learning” and “imitation learning” pointed toward AI’s possible role in supporting hands-on and experiential learning, reinforcing SDG 4.7’s emphasis on education for sustainable development and global citizenship.

Since 2022, research has increasingly explored the potential contributions of AGI to education, with discussions on “curriculum,” “machine learning,” and “artificial general intelligence” signaling interest in AI’s influence on teaching methodologies and student engagement. The increasing presence of “neural networks” and “education methodologies” reflected discussions on how AI might improve instructional strategies, supporting SDG 4.1’s commitment to inclusive and equitable education. By 2023, the discourse included “AI applications,” “OpenAI,” and “tutoring systems,” underscoring AI’s potential role in personalized and adaptive learning and aligning with SDG 4.5’s goal of eliminating disparities in education. Discussions on “art education,” “creative thinking,” and “empathetic responses” suggested a growing interest in how AI could foster creativity and emotional intelligence, whereas the rise of “online learning” and “digital methods” highlighted AI’s potential in expanding educational access, aligning with SDG 4.c’s focus on technology-enhanced teacher training. In 2024, the relevance of AGI in education continued to be explored, with discussions on “AI technology application,” “higher education,” and “K-12 classrooms” emphasizing the perceived role of AI in lifelong learning and professional development, directly supporting SDG 4.3. The rise of “academic integrity,” “AI-driven assessment tools,” and the “framework for educational technology” reflected ongoing considerations regarding the balance between AI benefits and ethical implications, with the aim of ensuring responsible and equitable use in educational settings.

AGI and SDG 7 (affordable and clean energy). The intersection of AGI and SDG 7 has evolved dynamically, reflecting increasing academic interest in advanced intelligence and its potential to shape energy sustainability (Figure 9). Initially, AI’s contribution to energy focused on conventional management systems, but over time, discussions have expanded to explore cutting-edge solutions for efficiency, smart distribution, and renewable energy integration. In 2023, research emphasized “brain-inspired computing,” “spiking neural networks (SNNs),” and “neuromorphic computing,” highlighting bioinspired approaches that mimic the energy efficiency of the human brain. These methods align with SDG 7.3’s goal of enhancing energy efficiency by reducing power consumption in AI-driven computing and energy management. Simultaneously, the rise of “smart grid AI,” “renewable energy forecasting,” and “microgrid optimization” reflected growing interest in the potential role of AGI in intelligent energy distribution, directly supporting SDG 7.2’s aim to increase the share of renewable energy. Additionally, discussions on “energy-efficient training algorithms” and “low-power AI accelerators” suggested early efforts to mitigate the computational demands of AI, reinforcing sustainability considerations in AI development.

By 2024, the discourse surrounding AGI and energy systems became more sophisticated, with discussions expanding to “autonomous energy systems,” “self-learning algorithms,” and “deep reinforcement learning for energy.” The exploration of “low-power edge AI” and “distributed intelligence for energy networks” reflected the academic interest in the potential of AGI to support decentralized energy management, addressing SDG 7.1’s focus on universal energy access. The emergence of “Generative AI for energy optimization,” “grid-interactive efficient buildings (GEBs),” and “digital twins for energy infrastructure” suggested that AGI’s envisioned role in real-time energy decision-making aligns with SDG 7.a’s push for international collaboration on energy innovation. Additionally, research on “federated learning for smart grids,” “sparse coding for low-power AI,” and “quantum-enhanced AI for sustainable energy” indicated a shift toward energy-conscious AI architectures, aiming for computational efficiency while minimizing environmental impact. The increasing focus on “self-healing energy grids,” “AI-assisted energy storage optimization,” and “autonomous solar and wind farm management” illustrated the ongoing exploration of the potential of AGI for enhancing energy resilience, directly supporting SDG 7.b’s goal of expanding sustainable energy infrastructure. Moving forward, discussions suggest that AGI may play a central role in optimizing energy systems by balancing efficiency, resilience, and equitable access, reinforcing the relevance of intelligent computing in sustainable energy transformation.

AGI and SDG 9 (industry, innovation and infrastructure). The discourse on AGI and SDG 9 has progressively expanded, reflecting evolving discussions on advanced intelligence and its potential role in industrial innovation and infrastructure (Figure 10). In 2019, discussions centered on the “adoption of robotics,” marking the early shift toward automation in industrial settings and aligning with SDG 9.2’s goal of promoting inclusive and sustainable industrialization. By 2020, the focus broadened to include “automation” and “robots,” emphasizing efficiency-driven advancements that supported SDG 9.4’s call for upgrading industries through resource efficiency and clean technologies. By 2021, discussions had evolved toward cognitive intelligence, with terms such as “artificial cognition,” “high-level cognitive capabilities,” and “digital twins,” reflecting a growing interest in the potential of AGI for intelligent process optimization. The emergence of “machine learning methods” and “strong artificial intelligence” suggested increasing exploration of AI’s role in innovation-driven productivity, reinforcing SDG 9.5’s emphasis on advancing scientific research and technological capabilities. These developments also contributed to SDG 9.b, which promotes industrial diversification and technological upgrading.

From 2022 onward, the role of AGI in industrial transformation became more prominent, with discussions on “artificial general intelligence research,” “collaborative robots,” and “intelligent decision-making ability,” highlighting its perceived potential impact on smart manufacturing and sustainable industrial processes. The rise of “supply chain optimization” and “real-time monitoring” reflected a growing academic interest in AI’s role in logistics and infrastructure resilience, supporting SDG 9.1’s goal of developing sustainable and resilient infrastructure. By 2023, AGI’s influence extended into cybersecurity and critical infrastructure, with concepts such as “blockchain transactions” and “peer-to-peer communication” signaling a shift toward securing industrial ecosystems, reinforcing SDG 9.a’s focus on facilitating infrastructure resilience through technology. In 2024, discussions on AGI and industrial transformation expanded further, incorporating “generative AI,” “neuromorphic computing,” and “neurosymbolic AI” to explore how AI could enhance problem-solving and strategic decision-making. The prominence of “organizational innovation,” “network design,” and “competitive advantage” suggested that AGI was increasingly viewed as a potential enabler of industrial competitiveness, aligning with SDG 9.b’s emphasis on technological innovation. Over time, the discourse has shifted from basic automation to considerations of intelligent, adaptive, and self-learning industrial systems, positioning AGI as a subject of ongoing exploration in industrial resilience and sustainable development and reinforcing SDG 9’s broader vision of fostering inclusive, sustainable, and innovation-driven industries.

5. Discussion

The absence of a fully realized AGI limits definitive impact assessments, but understanding the trajectory of AGI research provides valuable foresight into the technological, economic, and policy shifts that may emerge as AGI advances. Our study contributes to this foresight by mapping AGI research trends, identifying regional priorities, and highlighting governance gaps, offering insights into how AGI could shape global development should it progress toward practical implementation. Such forecasting is essential for policymakers, investors, and industries to prepare for potential opportunities and mitigate risks associated with AGI’s speculative evolution [43].

The expanding landscape of AGI-related research reveals a rapidly shifting ecosystem where disciplinary boundaries, geopolitical dynamics, funding logic, and institutional alliances are being reconfigured in real time. This complexity invites a more critical interrogation of how AGI is evolving, not only as a technological ambition but also as a distributed sociotechnical system shaped by anticipatory strategies, uneven capacities, and competing visions of the future. At the heart of this evolution lies a tension: while AGI as a concept remains speculative and contested, the infrastructure, resources, and networks mobilized around it are very real and growing. This dissonance poses risks of overpromising, strategic ambiguity, or prematurely shaping policy agendas on the basis of uncertain technological trajectories.

Moreover, the convergence of AGI-related activities across diverse disciplines and geographies highlights both opportunity and fragmentation. On the one hand, pluralistic engagement can promote innovation that is sensitive to local contexts, enabling broader societal benefits. On the other hand, the absence of shared frameworks for evaluating progress, ethical implications, and sustainability alignment may lead to uneven development and governance blind spots. While some regions and institutions benefit from strategic foresight, coordinated investment, and advanced infrastructure, others may remain peripheral, reinforcing global asymmetries in AI capabilities. These disparities are especially critical given AGI’s potential to influence labor markets, public services, and institutional decision-making—domains tied directly to the SDGs. Without mechanisms for inclusive governance and transparency, there is a risk that AGI development will reflect narrow strategic interests rather than a shared commitment to global well-being and sustainability.

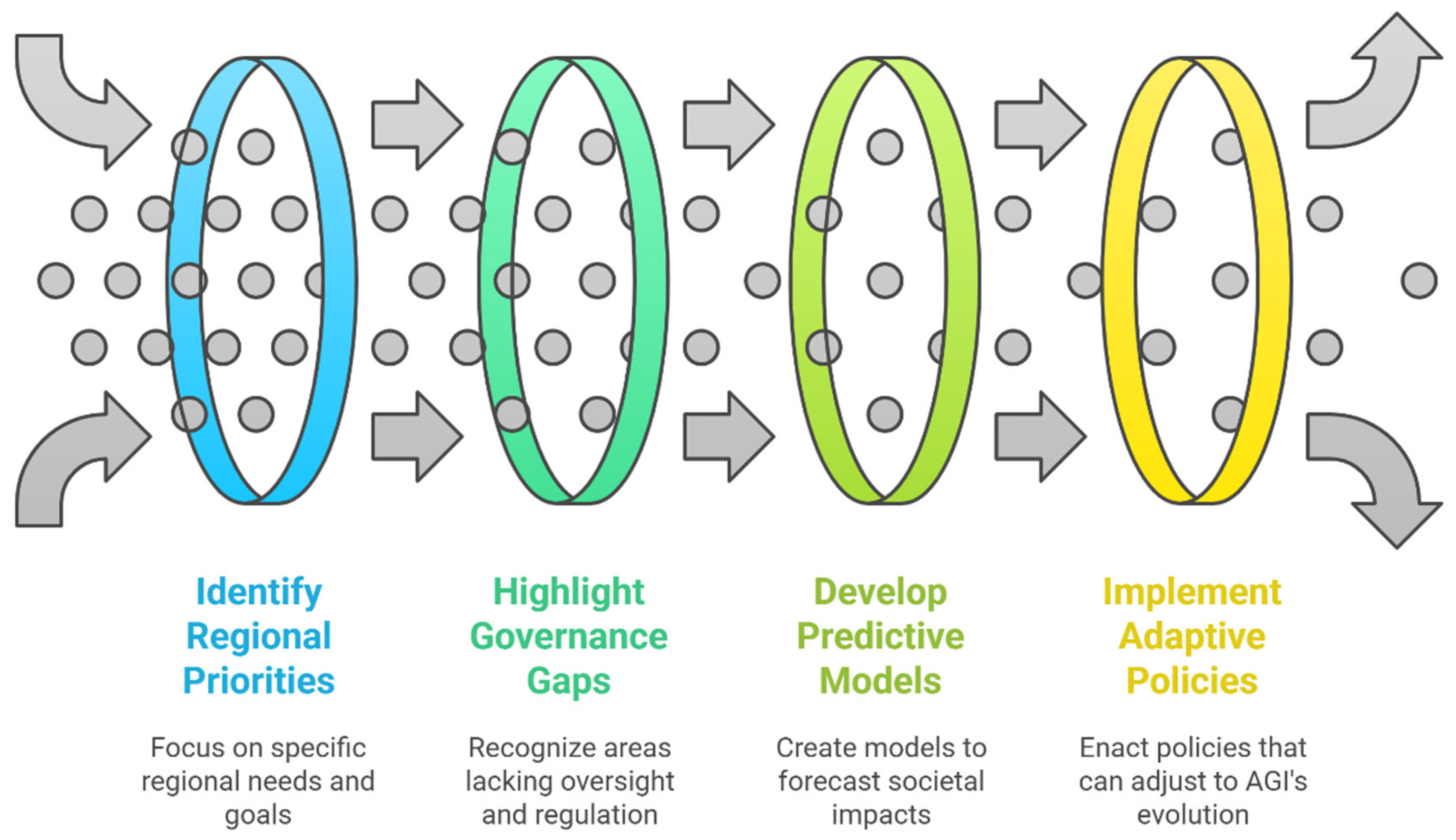

In this context, there is an urgent need for future-oriented frameworks that go beyond technical benchmarks to assess the broader societal ramifications of AGI trajectories (Figure 11). This includes building predictive models that incorporate institutional readiness, equity metrics, and environmental sustainability, while remaining open to the possibility that AGI, as currently imagined, may never fully materialize in the form anticipated. A shift toward scenario-based thinking, collaborative foresight, and adaptive policy instruments may be more productive than linear roadmaps or technology-centric funding regimes. Ultimately, the discussion around AGI must be reframed not as a race to reach a singular goal but as an evolving, contested space that demands careful stewardship, global coordination, and continuous reflexivity.

As discussions on AGI advance, its potential impact on peace, justice, and strong institutions (SDG 16) is increasingly being examined, raising concerns about governance, ethical safeguards, and cybersecurity resilience. Without proactive oversight, AGI-related advancements could contribute to wealth concentration, institutional destabilization, and widening global inequalities [35]. To address these risks, the Global Governance of AGI (2025) report [68] explores the feasibility of a Global AGI Agency, analyzing the merits of unitary agent vs. open agency governance models and identifying gaps in current regulatory frameworks. The authors of [69] propose a digital humanism-based regulatory approach, emphasizing that AGI governance must align with principles of human rights, justice, and institutional integrity. Cybersecurity threats also remain a significant concern in discussions on the role of AGI in governance and justice. The author of [70] highlights vulnerabilities in AGI infrastructures and suggests security-first policies to mitigate cyber risk. Similarly, the author of [71] stresses the need for preemptive defense mechanisms to prevent AGI-enabled cybercrime and ensure resilient digital governance. From a legal standpoint, AGI accountability remains an area of active debate. The author of [36] calls for adaptive legal frameworks to regulate AGI’s autonomous decision-making, whereas the author of [72] raises concerns about AI-driven crimes, questioning whether traditional criminal law can effectively address the emerging risks associated with AGI. Moving forward, AGI governance under SDG 16 will require international cooperation, transparent AI policies, and robust cybersecurity measures to ensure that technological advancements support, rather than undermine, justice and institutional stability. Without clear legal and ethical frameworks, AGI’s influence on governance will remain uncertain, reinforcing the need for proactive regulatory measures to safeguard global stability.

Discussions on AGI in healthcare have expanded beyond computational biology and genomics to include its potential role in diagnostics, medical decision-making, and digital health infrastructure. While AGI-driven models are being explored for their ability to improve disease risk assessment, personalized treatment, and predictive analytics [73], critical challenges remain in ensuring equitable access and responsible implementation. Countries with established AI research ecosystems, such as the United States, China, and Germany, benefit from robust medical datasets, computational power, and regulatory structures that facilitate AI integration into clinical workflows. In contrast, emerging economies such as Bangladesh, Pakistan, and Kenya face fragmented healthcare systems and resource limitations, making widespread AGI adoption challenging. Some researchers advocate the development of low-resource AI models and public–private partnerships to bridge these disparities so that AI-driven healthcare solutions can be effectively deployed in regions with limited medical expertise [74]. However, questions persist about whether AGI can be scaled sustainably in healthcare systems that lack the infrastructure to support continuous AI-driven diagnostics and decision-making.

Beyond clinical applications, AGI is being explored in medical imaging, oncology, and elder care, with potential implications for healthcare accessibility and efficiency. In smart hospitals, researchers discuss the role of AGI in optimizing resource allocation and improving service delivery, aligning with SDG 3.8’s goal of access to high-quality healthcare [74]. In oncology, AGI has been examined for its capacity to enhance treatment simulation and verification, linking imaging data with clinical narratives [75]. The possibility of using AGI-driven robotic assistants for elder care also raises ethical concerns about human interaction and emotional well-being [76]. As discussions around AGI in healthcare continue, governance challenges remain central to its responsible development. Issues such as data security, algorithmic fairness, and patient autonomy require stringent oversight to prevent bias and ensure transparency in AI-driven medical decisions [43,77]. Moreover, aligning AGI with SDG 3.d, which focuses on early warning, risk reduction, and the management of health risks, will require global cooperation to establish regulatory frameworks that balance innovation with ethical considerations. Without clear policies and inclusive AI strategies, the potential benefits of AGI in healthcare could be overshadowed by concerns over accessibility, reliability, and unintended consequences.

Next, we discuss the potential impacts of AGI on the SDGs (Figure 12). The discourse on AGI and SDG 4 (quality education) has expanded from theoretical considerations to discussions on its potential role in personalized learning, adaptive instruction, and lifelong education. Researchers have explored the ability of AGI to enhance creative learning, improve multimodal instruction, and provide personalized feedback through systems that integrate language processing, visual recognition, and contextual reasoning [78]. These advancements are being examined for their role in promoting self-directed education and expanding access to continuous skill development, aligning with targets 4.1 (universal education) and 4.3 (higher education access) [79,80]. However, concerns persist regarding the extent to which AGI can meaningfully replicate human adaptability in learning environments. While discussions highlight AGI’s potential to support curriculum design and assessment, ensuring that it remains an assistive tool rather than a replacement for educators is critical [81]. Ethical considerations surrounding algorithmic bias, data privacy, and accessibility must also be addressed to prevent AI-driven disparities in educational outcomes [82].

Global disparities in digital infrastructure further complicate discussions on the role of AGI in education, particularly in the Global South, where schools often lack reliable internet and computing resources. While AGI is being explored for adaptive learning and personalized tutoring in technologically advanced nations such as China, Russia, and the United States, developing economies face significant barriers to implementation. To prevent AGI from exacerbating educational inequalities, discussions emphasize the need for investments in digital infrastructure, teacher training, and hybrid learning models that integrate AI with traditional pedagogical approaches. Policymakers and educational institutions must ensure that AGI-driven innovations align with target 4.5’s emphasis on eliminating disparities and ensuring equal access to education, prioritizing governance frameworks that promote fairness and accessibility. Without careful policy intervention, the role of AGI in education could reinforce existing disparities rather than bridge them, underscoring the need for responsible integration strategies that prioritize equitable learning opportunities worldwide.

The role of AGI in energy systems is being explored through frameworks such as artificial general intelligence for energy (AGIE), which is designed to optimize energy diagnostics, operational efficiency, and policy recommendations through real-time data processing and human-in-the-loop interactions [79]. Discussions of AGI’s potential focus on its ability to analyze energy consumption patterns, enhance predictive analytics, and improve real-time grid optimization, aligning with SDG targets 7.2 and 7.3, which emphasize renewable energy integration and efficiency improvements [62]. Researchers are also examining its applicability in upstream geoenergy industries, such as the geothermal, oil, and gas industries, where AI-driven exploration and production optimization could increase resource efficiency and safety [83,84]. However, the energy demands of AGI itself present significant challenges. The Erasi equation suggests that the computational requirements of AGI, particularly in the case of artificial superintelligence (ASI), may exceed the available energy resources of even industrialized nations, raising concerns about its scalability and environmental impact [41]. Addressing these constraints may require alternative computing architectures, including neuromorphic computing, photonic chips, and spike-based intelligence, which offer energy-efficient alternatives to conventional AI computing [85,86,87].
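The kind of comparison behind such energy-scaling arguments can be made concrete with a generic back-of-envelope calculation. This sketch is not the Erasi equation from [41], whose exact form is not reproduced here. It simply estimates the annual electricity use of a hypothetical compute deployment (accelerator count × power per accelerator × average utilization × PUE × hours per year) and expresses it as a share of a hypothetical national supply. Every input value below is a placeholder assumption.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h, ignoring leap years


def annual_energy_twh(num_accelerators, watts_per_accelerator, pue, utilization=1.0):
    """Annual electricity use of a compute deployment, in TWh.

    pue: power usage effectiveness (total facility power / IT power), >= 1.
    utilization: average fraction of peak accelerator power actually drawn.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1")
    it_power_w = num_accelerators * watts_per_accelerator * utilization
    facility_power_w = it_power_w * pue
    return facility_power_w * HOURS_PER_YEAR / 1e12  # Wh -> TWh


def share_of_supply(demand_twh, national_supply_twh):
    """Fraction of a national annual electricity supply used by the deployment."""
    return demand_twh / national_supply_twh


if __name__ == "__main__":
    # Placeholder values: 1 million accelerators at 1 kW, 80% utilization, PUE 1.25,
    # compared against a hypothetical 500 TWh/year national supply.
    demand = annual_energy_twh(1_000_000, 1000, pue=1.25, utilization=0.8)
    print(f"{demand:.2f} TWh/year, {share_of_supply(demand, 500):.1%} of supply")
```

Under these placeholder values, the deployment draws about 1 GW continuously, or roughly 8.76 TWh per year. Because the result scales linearly with every input, assumptions about orders-of-magnitude larger systems quickly push such estimates toward the national-scale constraint described above.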

Beyond efficiency and optimization, discussions on AGI in energy systems must consider broader sustainability and equity challenges. While the Global North has begun applying advanced, AGI-oriented AI to smart grids, climate modeling, and carbon footprint reduction, emerging economies that rely on carbon-intensive energy grids face potential trade-offs between AI adoption and sustainability (SDG 7 and SDG 13). Without targeted strategies, AGI deployment could increase energy consumption and reinforce existing disparities in energy access. Researchers emphasize the need for energy-efficient AI models and policies that integrate AGI with renewable energy initiatives, such as AI-powered microgrids that optimize the distribution of solar and wind energy. Additionally, transparency and equity in AGI deployment remain crucial to ensuring that advancements in AI-driven energy systems contribute to global energy transitions rather than exacerbating existing technological divides. As discussions on the role of AGI in energy continue, policy interventions must balance innovation with responsible implementation to ensure alignment with SDG 7’s goal of affordable, reliable, and sustainable energy for all.

Discussions on the role of AGI in industrial innovation (SDG 9) focus on its potential to enhance manufacturing, cybersecurity, intelligent computing, and automation. Researchers have explored AGI’s integration into Industry 4.0, AI-driven infrastructure, and cognitive computing as a means to advance sustainable industrialization (target 9.4) and strengthen research and innovation capacity (target 9.5) [88,89]. While AGI is being examined for its ability to improve automation, predictive maintenance, and decision-making, fundamental challenges remain regarding its scalability and effectiveness in complex industrial systems. Advances in AI chips and neuromorphic computing are considered energy-efficient solutions, but concerns persist about whether current architectures can achieve true AGI, as some researchers argue that neural network-based models remain fundamentally limited [44,90]. In cybersecurity, AGI is being studied for its potential role in adaptive security responses, threat detection, and automated countermeasures, which could support target 9.c on access to information and communications technology by making digital infrastructure more resilient [70]. However, governance and regulatory gaps remain pressing challenges, with scholars emphasizing the need for ethical oversight and risk mitigation frameworks to prevent unintended consequences [91,92].

Beyond automation and cybersecurity, AGI has also been explored in infrastructure management, environmental monitoring, and autonomous systems. Research suggests that AGI-powered drones could be used for pollution tracking, disaster response, and climate adaptation, supporting target 9.1 (resilient infrastructure). The potential of AGI in autonomous vehicle decision-making is likewise an area of active exploration, with studies examining how real-time integration of sensor data, including LiDAR and camera inputs, could enable adaptive responses to dynamic environments [83]. Some researchers have proposed incorporating emotional modeling into AGI to improve real-time prioritization, which could strengthen decision-making in applications such as robotics and self-driving cars. However, the economic and social implications of AGI-driven automation vary by region. In high-income economies such as Germany and Canada, research highlights its role in manufacturing optimization, whereas in labor-intensive economies such as India and Indonesia, full automation may be neither viable nor desirable. There, discussions instead emphasize AGI’s role in augmenting rather than replacing human labor, with the aim of securing productivity gains without widespread job displacement. As research progresses, aligning AGI with SDG 9 will require balancing technological innovation with ethical governance, equitable deployment, and sustainable industrial strategies that accommodate diverse economic and labor market conditions.

The regulatory challenges surrounding AGI governance remain a central concern, particularly in balancing innovation with ethical oversight and risk mitigation. The author of [36] argues that uncertainty about the feasibility of AGI warrants prioritizing the regulation of narrow AI, given its immediate societal impact. Relatedly, the author of [93] warns of an AGI arms race in which high R&D costs could concentrate development among a few competing entities, strengthening the case for public policy interventions such as AI taxation and procurement strategies. The European Union, leveraging its AI Act, could shape global AGI governance through partnerships with the UN and OECD that promote ethical AI standards and risk management. However, regulatory disparities complicate governance efforts: the EU enforces stringent transparency and bias mitigation requirements, whereas many emerging economies lack comprehensive AI frameworks. Without clear policies, AGI applications in governance and law enforcement (SDG 16) risk ethical harms such as biased decision-making and intrusive surveillance. International cooperation is critical to ensuring that AI-driven governance systems uphold human rights, accountability, and equitable oversight.

Global disparities in research capacity, infrastructure, and regulatory preparedness further shape the trajectory of AGI, necessitating targeted policy interventions to ensure equitable access. High-income countries, such as the United States, Germany, and the United Kingdom, lead in AGI-related research on industrial automation (SDG 9) and renewable energy optimization (SDG 7), whereas emerging economies such as India and Brazil face funding and infrastructure constraints that limit adoption. Open-source AGI models and regional AI collaboration could help bridge these gaps, particularly in sectors such as healthcare (SDG 3) and education (SDG 4), where AI-driven advances could provide significant social benefits. However, the projected energy demands of AGI pose additional challenges: the Global North is increasingly integrating sustainability measures, whereas many developing economies remain reliant on fossil fuel-based power grids (SDG 13). Policies promoting energy-efficient AI models and clean energy investment are therefore essential for mitigating the environmental impact of AGI. Furthermore, AGI’s influence on labor markets raises concerns about automation-driven job displacement (SDG 8), particularly in economies with high informal employment. Workforce adaptation policies, including vocational training and AI literacy programs, are needed to ensure equitable transitions to AI-augmented roles. Addressing these challenges requires a coordinated approach in which policymakers, businesses, and research institutions work to align AGI governance with inclusive, ethical, and sustainable global development priorities.

This study has several limitations. First, relying on a single database may have constrained the scope of the literature analyzed, potentially omitting relevant publications from journals, conferences, or non-English sources that the database does not index. Second, while clustering-based modeling adds depth to our analysis, its methodological constraints may oversimplify the interdisciplinary nature of AGI research. Finally, studies that rely on SDG mappings must contend with variability across algorithmic mapping systems [94]. These inconsistencies can lead to uneven representation of research across SDGs, potentially distorting insights and influencing subsequent funding or policy decisions on the basis of incomplete or skewed mappings.

5.1. Implications for Policy

The evolving trajectory of AGI presents a complex challenge for policymakers: how to govern a technology that remains technically unresolved yet is already influencing institutional priorities, global investment flows, and cross-border discourse. Addressing this uncertainty requires the strategic integration of foresight into both national and multilateral planning frameworks. Governments should invest in adaptive and forward-looking policy instruments—such as scenario-based modeling, experimental regulatory environments, and horizon-scanning platforms—that help anticipate AGI’s societal applications and risks. These tools can guide infrastructure development, ethical oversight, and AGI deployment in areas such as public health (SDG 3.8), education (SDG 4.1), and urban energy systems (SDG 7.3).

While initiatives such as the European Union’s AI Act and Singapore’s Model AI Governance Framework offer early governance models, they are tailored primarily to narrow AI systems. Extending these efforts to AGI requires new governance architectures capable of managing general-purpose, cross-domain intelligence. For example, national AGI forecasting units could be established to anticipate long-term impacts on labor markets and institutional trust, while multilateral initiatives such as the OECD AI Policy Observatory or the Global Partnership on AI (GPAI) could host dedicated AGI task forces. Simulation-based policy training and regulatory sandboxes could also help governments explore the hypothetical role of AGI in justice delivery, misinformation management, or ecological forecasting (SDGs 16.6, 16.10, and 13.2).

Importantly, effective foresight must be globally inclusive. Unequal access to research funding, high-performance computing, and regulatory capacity risks marginalizing many emerging economies. Targeted interventions—such as regional AGI research hubs, open-source model development, and interoperable AI registries—can help broaden participation. Governments and international bodies might also consider innovative financial tools, such as AGI Commons Funds or AI infrastructure bonds, to support green computing centers and sustainable innovation aligned with SDG 10.2 and SDG 13.2.

Ultimately, preparing for AGI requires more than a reactive regulatory stance. It demands globally coordinated yet locally adaptive governance strategies capable of guiding general-purpose AI toward inclusive, transparent, and environmentally responsible futures. Proactive policymaking—grounded in foresight, fairness, and sustainability—will be essential to ensure that AGI contributes to the shared goals of equitable development and resilient public governance.

5.2. Implications for Practitioners

Given the uncertain and evolving nature of AGI, practitioners must adopt adaptive and context-responsive approaches to deployment. AGI-like systems are likely to interface with uneven landscapes of technological infrastructure, data governance, and institutional capacity—necessitating strategies that are both locally relevant and globally informed. In healthcare (SDG 3.8), where advanced AI tools are emerging for clinical support, diagnostics, and triage, implementation success will depend as much on trust, interoperability, and data governance as on technical accuracy. In resource-constrained environments, practitioners must focus on lightweight, offline-compatible models that integrate with existing health infrastructures, ensuring that AI reinforces rather than disrupts service delivery.

In education (SDG 4.1) and industry (SDG 9.2), practitioners should emphasize augmentation over automation. Hybrid learning platforms and AI-enhanced vocational training can improve access and resilience, especially in settings with limited connectivity. In labor-intensive economies, AGI-like tools can support workflow optimization without displacing workers, aligning with equity-oriented goals (SDG 10.3). In the public sector (SDG 16.6), AI applications in governance—ranging from administrative decision-making to legal reasoning—must prioritize explainability, accountability, and cultural relevance. Piloting such tools within regulatory sandboxes can help identify ethical blind spots and support iterative, impact-sensitive deployment.

Environmental and ethical dimensions must be addressed from the outset. Given the energy intensity of AGI model training and deployment, especially in carbon-dependent regions, practitioners should prioritize low-energy architectures, modular systems, and renewable-powered data infrastructures (SDGs 7.3 and 13.2). Fairness and inclusion cannot be presumed: rigorous audits, representative training datasets, and transparent evaluation protocols are essential to ensure responsible use across diverse social and geographic contexts.

Ultimately, successful AGI implementation will require more than technical refinement; it will also call for foresight-driven, sustainability-aligned, and socially grounded strategies. Practitioners must be prepared to manage not only innovation but also ambiguity, ensuring that AGI contributes meaningfully to human well-being and institutional trust across a range of development settings.

6. Conclusions

Although artificial general intelligence (AGI) remains largely anticipatory, analyzing its emerging research trajectories yields valuable empirical insights into how societies, institutions, and governance systems can proactively prepare for potential technological disruptions. The urgency of such preparation is underscored by the accelerating industry race toward AGI and even artificial superintelligence (ASI), in which large-scale infrastructure announcements and predictions of an “intelligence Big Bang” highlight both the promise and the risks of these technologies. Recognizing AGI as an evolving aspiration rather than a current capability creates space for proactive policy and governance interventions that shape its trajectory in line with societal priorities.

AGI-related activity is expanding across scientific disciplines and geopolitical contexts, yet substantial disparities in funding allocation, institutional capacity, and regulatory maturity persist—factors that influence both who contributes to AGI development and whose priorities are encoded in its prospective applications. These asymmetries have direct implications for AGI’s evolving role in domains such as healthcare (SDG 3), education (SDG 4), industrial innovation (SDG 9), and public governance (SDG 16). While empirical experimentation is on the rise, many AGI-related claims remain speculative, with few systems demonstrating capabilities approaching cross-domain general intelligence.

As AGI research becomes increasingly interlinked with other frontier technologies—such as quantum computing, neurosymbolic systems, and cognitive architectures—its projected contributions to climate action (SDG 13), resilient infrastructure (SDG 9.1), and global digital cooperation (SDG 17.6) must be critically examined for feasibility, scalability, and sustainability. The resource demands of AGI-scale infrastructure could exacerbate carbon emissions and water stress, potentially conflicting with SDG 13 targets, unless mitigated through energy efficiency, renewable sourcing, and sustainable design. Similarly, if the benefits of AGI remain concentrated in a few countries or corporations, existing global inequalities (SDG 10) could widen rather than narrow, underscoring the need for inclusive access and equitable capacity building.

Furthermore, persistent ethical and institutional challenges—bias in training data, lack of algorithmic transparency, and unresolved accountability frameworks—underscore the importance of embedding anticipatory governance, inclusive innovation, and structured foresight into ongoing AGI research and policy discourse.

Going forward, global cooperation and inclusive governance will be crucial to ensuring that AGI development aligns with the SDGs. Specific actions include establishing an international governance mechanism under a multilateral framework, drafting globally agreed-upon AGI development guidelines, and instituting supervisory systems that monitor compliance and outcomes. To address data interoperability challenges, unified international data standards should be developed alongside secure, scalable data-sharing platforms that facilitate equitable access to datasets and computing resources. A global AGI governance institution could be built through a phased action plan: first convening a multistakeholder task force, then assigning responsibilities to regulatory, advisory, and enforcement arms, and finally establishing operational mechanisms for periodic review and transparent reporting. Such an institution would enable oversight that keeps pace with rapid developments while ensuring that AGI systems serve inclusive and sustainable objectives.

This study provides a structured, data-informed synthesis of the evolving landscape of AGI and its preliminary alignment with the Sustainable Development Goals, offering a foundation for future empirical evaluations of AGI’s societal impacts. As the field pursues the thresholds of AGI and possibly ASI, the next phase of research must balance aspirations with realities by addressing environmental costs, preventing the deepening of inequalities, strengthening institutional capacities, and embedding global norms that safeguard collective interests.