A Dynamic Energy-Saving Control Method for Multistage Manufacturing Systems with Product Quality Scrap

Abstract

1. Introduction

2. Literature Review

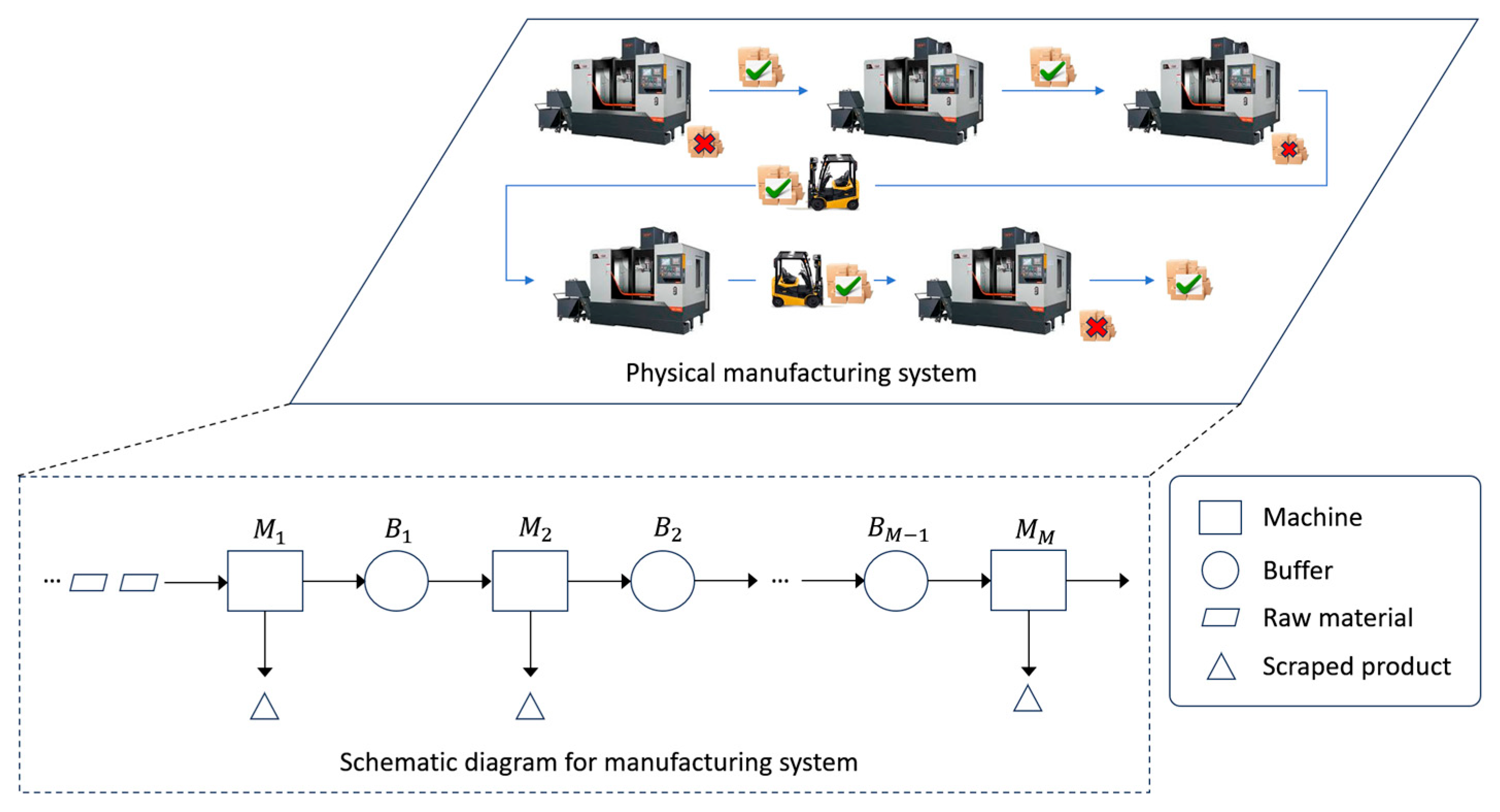

3. System Descriptions

- The manufacturing system consists of machines arranged in series, with a buffer between each pair of adjacent machines.

- All machines operate with an identical cycle time, which is the time to process a product on a machine. The timeline is discretized into time slots, each corresponding to the length of one cycle time.

- Each machine is assumed to operate under an independent geometric reliability model if there is no EC action. In each time slot, a machine that is up may fail and transition to the down state with its failure probability. Conversely, a machine that is down is restored to the up state with its repair probability. The state of each machine is determined at the beginning of each time slot. An operation-dependent failure mode is assumed, meaning that failures occur only while the machine is processing a part (for example, a tool breakage).

- A machine in the up state may be switched to the energy-saving state and may later return from the energy-saving state to the up state. A machine cannot transition directly between the energy-saving state and the down state. No production occurs while a machine is in the energy-saving state or the down state. The state of each machine in each time slot is recorded by a state variable that takes one of three values, indicating the energy-saving state, the up state, or the down state.

- Each machine yields a good product with its good-product probability and a defective product with the complementary probability. Defective products are promptly scrapped, whereas good products are moved to the adjacent downstream buffer for further processing.

- Each buffer has a finite capacity, and the number of products it holds at any time lies between zero and that capacity. The numbers of products in the buffers are updated at the end of each time slot.

- Every machine except the last is blocked if it can produce a product but its immediate downstream buffer is full and the downstream machine is unable to produce. The last machine is never blocked.

- Every machine except the first is starved if it can produce a product but its immediate upstream buffer is empty. The first machine is never starved.

- While a machine is producing a product, it consumes energy at its production rate. When it is idle, due to either starvation or blockage, the energy consumption rate drops to a lower idle rate. In the energy-saving state, the consumption rate is reduced further. A machine consumes no energy while it is in the down state.

- Each machine requires a fixed warmup energy each time it transitions from the energy-saving state to the up state. Transitioning from the up state to the energy-saving state requires no extra energy.

- (1) To develop analytical methods for evaluating system performance and determining the optimal EC policy for two-stage manufacturing systems with product quality scrap.

- (2) To propose an effective and computationally efficient algorithm to approximate the optimal EC policy for each machine within multistage manufacturing systems.

4. A Markov Decision Process for Two-Stage Manufacturing Systems

4.1. State and Action Spaces

4.2. Reward Function and Transition Matrix

4.3. Dynamic Programming Algorithm

Algorithm 1: Dynamic Programming Algorithm

1. Input: state space, action space, machine failure rate, repair rate, good products probability, buffer capacity, discount factor, and convergence threshold.
2. Initialization: for all states, initialize the value function.
3. For each state
4. Compute the value function
5. If the convergence criterion is satisfied
6. Stop
7. End If
8. Update the value function
9. End For
10. Compute the optimal policy
11. Output: optimal EC policy
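The steps of Algorithm 1 follow the general shape of value iteration for a discounted Markov decision process. The sketch below shows standard value iteration on a generic finite MDP; it is not the authors' implementation. The function name `value_iteration`, the array layouts, the zero initial value function, the max-norm stopping rule, and the two-state toy data are assumptions introduced for illustration.

```python
def value_iteration(n_states, n_actions, transition, reward, gamma, eps):
    """Return (values, policy) for a finite discounted MDP.

    transition[a][s][t]: probability of moving from s to t under action a.
    reward[s][a]: expected one-slot reward of action a in state s.
    """
    values = [0.0] * n_states  # assumed initial value function

    def q(s, a, v):
        # One-step lookahead value of taking action a in state s.
        return reward[s][a] + gamma * sum(
            transition[a][s][t] * v[t] for t in range(n_states))

    while True:
        new_values = [max(q(s, a, values) for a in range(n_actions))
                      for s in range(n_states)]
        delta = max(abs(x - y) for x, y in zip(new_values, values))
        values = new_values
        if delta < eps:  # assumed stopping rule: largest change below eps
            break

    # Greedy policy with respect to the converged value function.
    policy = [max(range(n_actions), key=lambda a: q(s, a, values))
              for s in range(n_states)]
    return values, policy


# Toy two-state example with made-up numbers (not taken from the paper).
transition = [
    [[1.0, 0.0], [0.0, 1.0]],  # action 0: stay in the current state
    [[0.0, 1.0], [0.0, 1.0]],  # action 1: move to (or stay in) state 1
]
reward = [[1.0, 0.0], [3.0, 3.0]]
values, policy = value_iteration(2, 2, transition, reward, gamma=0.5, eps=1e-9)
```

In the toy example, giving up the immediate reward in state 0 to reach state 1 is worthwhile, so the greedy policy picks action 1 in state 0. For the two-stage system, the states would combine machine states and the buffer level, and the actions would be the EC decisions.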

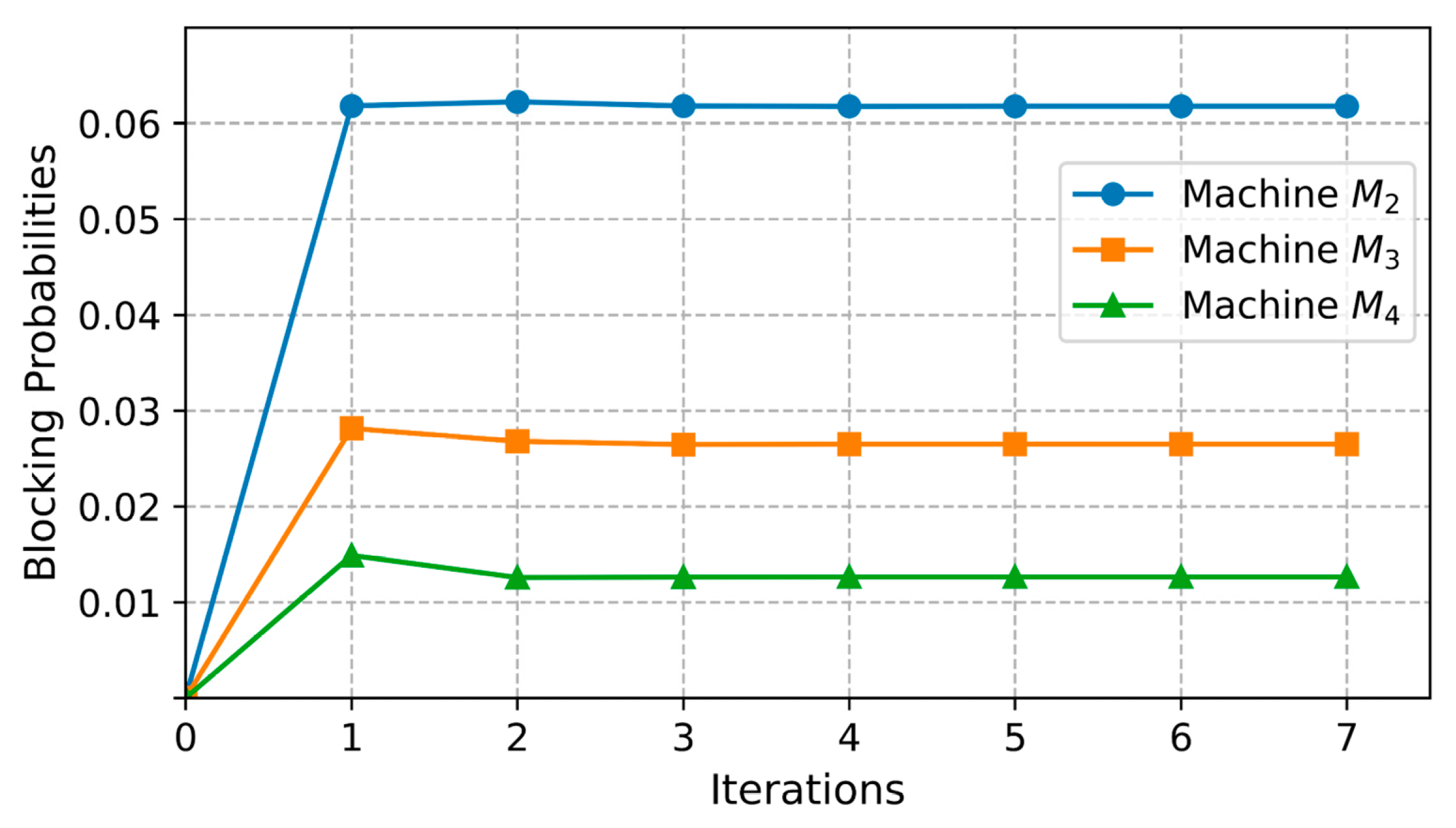

5. An Aggregation Procedure for Multistage Manufacturing Systems

5.1. State Aggregation

5.2. Initial Policies Generation

Algorithm 2: Generating initial EC policies

1. Input: machine failure rate, repair rate, good products probability, and buffer capacity.
2. For …
3. If …,
4. …
5. If …,
6. …
7. If …,
8. …
9. End For
10. Output: initial EC policies.

5.3. Aggregation Procedure

Algorithm 3: Aggregation procedure

1. Input: machine failure rate, repair rate, good products probability, buffer capacity, convergence threshold, and maximum iteration count.
2. Initialization: …
3. For …
4. For …
5. If …,
6. …
7. If …,
8. …
9. If …,
10. …
11. End For
12. If the convergence criterion is satisfied,
13. Break
14. End If
15. End For
16. Output: EC policies.

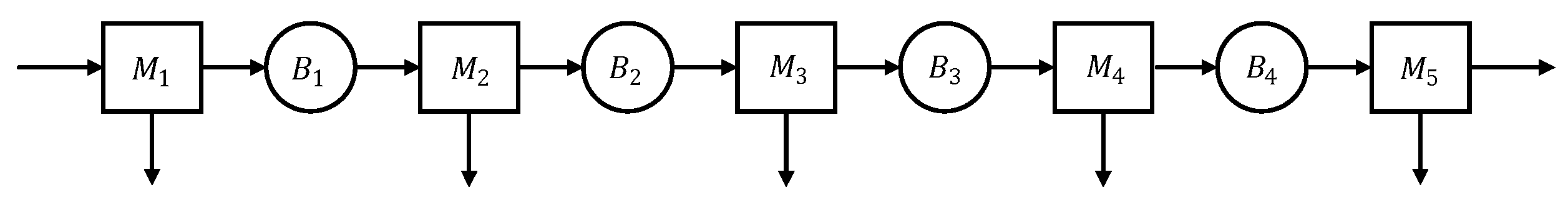

6. Numerical Experiments

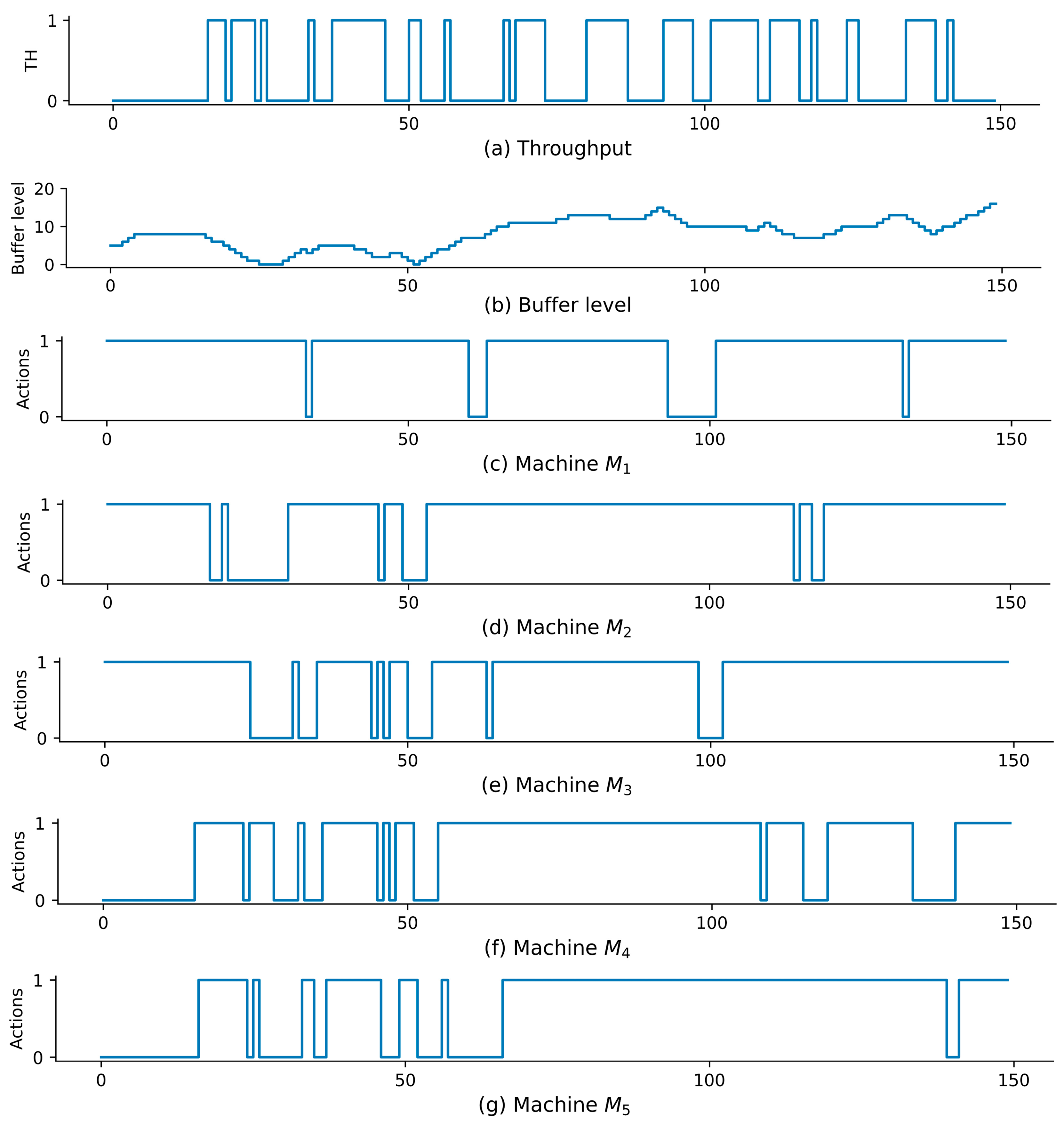

6.1. Illustrative Example

- (1) When the buffer level is low, downstream machines are more likely to perform EC actions, while upstream machines tend to remain in the up state. For example, after the 20th time step, as the buffer level continues to decrease, the upstream machine remains in the up state to replenish parts, whereas downstream machines are more frequently switched to the energy-saving state to avoid starvation.

- (2) When the buffer level is high, upstream machines have the opportunity to perform EC actions. For example, after the 90th time step, as the buffer level increases, the upstream machine is switched to the energy-saving state, while downstream machines remain in the up state.

6.2. Effectiveness Analysis

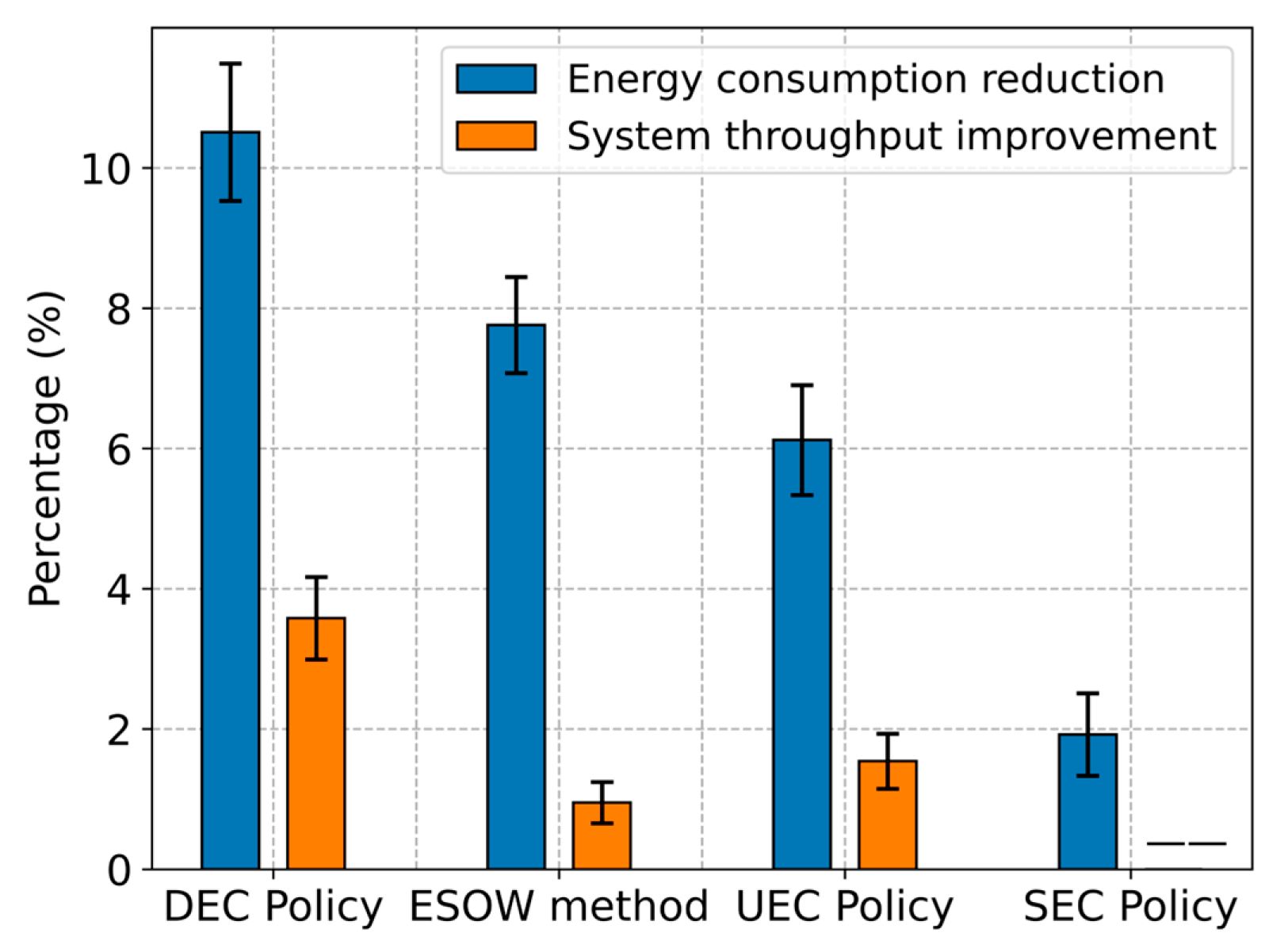

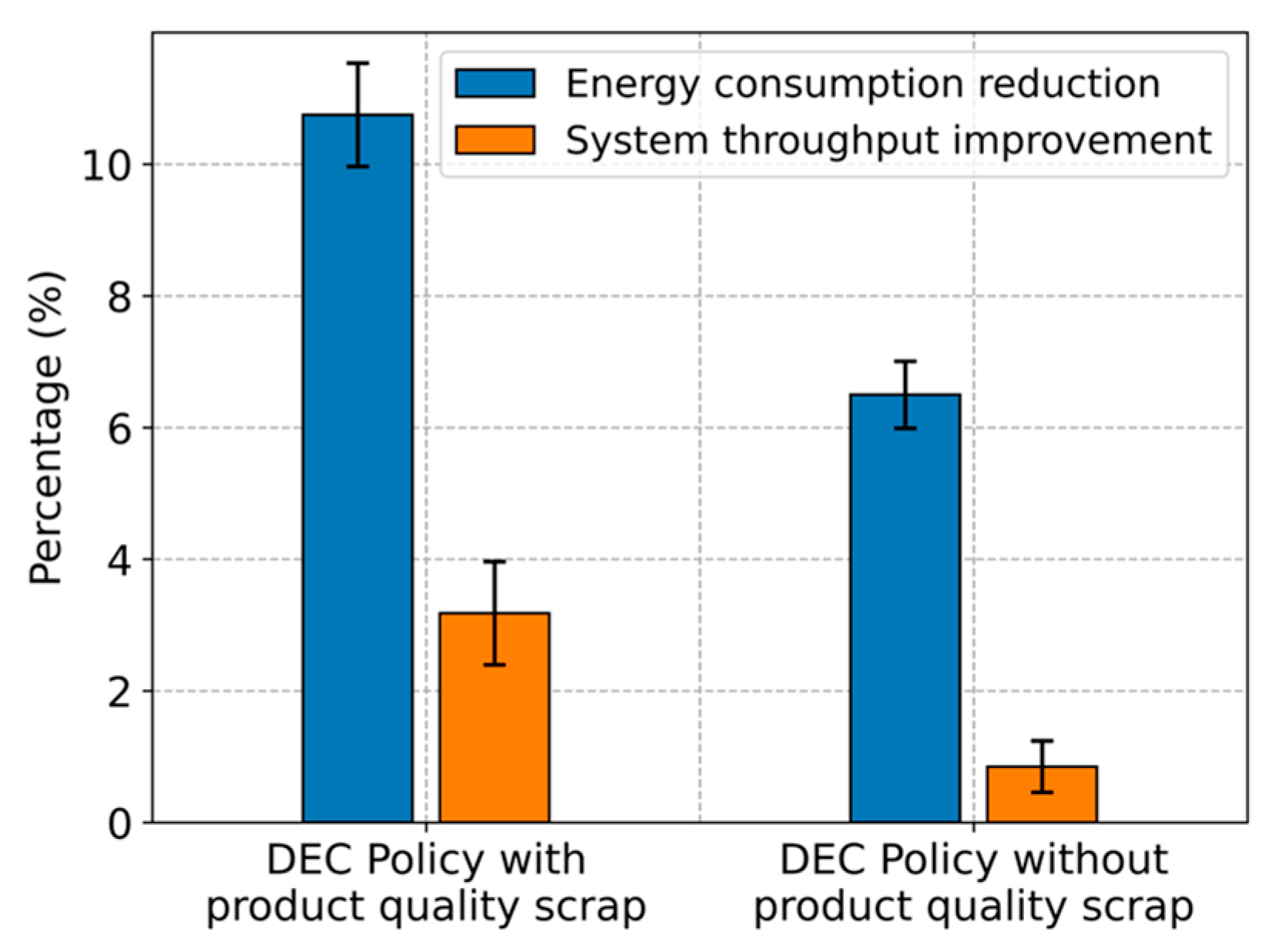

- (1) The DEC policy outperforms the other three methods (the SEC policy, the UEC policy, and the ESOW method), primarily because it identifies energy-saving opportunities by jointly analyzing the interrelationships among production, energy consumption, and quality within manufacturing systems.

- (2) The DEC policy significantly reduces energy consumption costs and slightly improves system throughput. This performance can be attributed to the policy's ability to use machine idle periods for EC. By switching machines to the energy-saving state, the DEC policy indirectly reduces the likelihood of machines producing defective products, thereby improving throughput.

6.3. Comparative Analysis

7. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



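| Station | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Failure probability | 0.10 | 0.08 | 0.12 | 0.09 | 0.10 |
| Repair probability | 0.30 | 0.25 | 0.27 | 0.29 | 0.30 |
| Good product probability | 0.95 | 0.98 | 0.95 | 0.97 | 0.96 |
| Production energy rate | 15.5 | 14.0 | 15.0 | 14.5 | 15.0 |
| Idle energy rate | 12.0 | 11.5 | 12.0 | 11.5 | 12.0 |
| Energy-saving energy rate | 0.5 | 0.4 | 0.6 | 0.5 | 0.6 |
| Warmup energy | 5.0 | 5.5 | 5.0 | 5.5 | 5.0 |

| Buffer | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Capacity | 3 | 5 | 4 | 5 |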
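As a quick sanity check on the station parameters, the stand-alone efficiency of each machine can be computed from the two-state geometric reliability model. The sketch assumes that the first three rows of the station table are the failure, repair, and good product probabilities, in that order; the row labels did not survive in the source, so this reading is an assumption. It also considers each machine in isolation, that is, never starved, never blocked, and never switched to the energy-saving state. The helper name `standalone_efficiency` is hypothetical.

```python
# Values copied from the station table; the row meanings are assumed.
p_fail = [0.10, 0.08, 0.12, 0.09, 0.10]
p_repair = [0.30, 0.25, 0.27, 0.29, 0.30]
p_good = [0.95, 0.98, 0.95, 0.97, 0.96]


def standalone_efficiency(p, r):
    """Long-run fraction of slots an isolated geometric machine is up.

    For an up/down Markov chain with failure probability p and repair
    probability r, the stationary probability of the up state is r / (p + r).
    """
    return r / (p + r)


efficiency = [standalone_efficiency(p, r) for p, r in zip(p_fail, p_repair)]

# Expected good parts per slot for an isolated machine: up fraction times
# the probability that a processed part is good.
good_rate = [e * g for e, g in zip(efficiency, p_good)]

for i, (e, q) in enumerate(zip(efficiency, good_rate), start=1):
    print(f"station {i}: efficiency {e:.3f}, good parts per slot {q:.3f}")
```

These isolated rates are only upper bounds on what each station contributes inside the line, since starvation, blockage, and EC actions reduce the time a machine actually spends producing.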

| Method | Throughput (Parts) | Energy Consumption Cost ($) |
|---|---|---|
| DEC | 4797 | 44,844.7 |
| SEC | 4795 | 50,307.7 |
| UEC | 4712 | 48,077.1 |
| ESOW | 4703 | 47,086.3 |
| BL | 4656 | 51,874.3 |
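The reported figures can be turned into relative differences with a few lines of arithmetic. The sketch below uses only the numbers in the table above; the dictionary layout and the helper name `compare_with_dec` are illustrative choices, not part of the paper.

```python
# (throughput in parts, energy consumption cost in $), copied from the table.
results = {
    "DEC": (4797, 44844.7),
    "SEC": (4795, 50307.7),
    "UEC": (4712, 48077.1),
    "ESOW": (4703, 47086.3),
    "BL": (4656, 51874.3),
}


def compare_with_dec(results):
    """Percent throughput gain and energy-cost saving of DEC over each row."""
    dec_throughput, dec_cost = results["DEC"]
    comparison = {}
    for name, (throughput, cost) in results.items():
        if name == "DEC":
            continue
        gain = 100.0 * (dec_throughput - throughput) / throughput
        saving = 100.0 * (cost - dec_cost) / cost
        comparison[name] = (gain, saving)
    return comparison


comparison = compare_with_dec(results)
for name, (gain, saving) in comparison.items():
    print(f"DEC vs {name}: throughput +{gain:.2f}%, energy cost -{saving:.2f}%")
```

Both percentages are computed relative to the method being compared against, so the BL row gives the improvement of DEC over the BL figures.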

| Parameters | Sets |
|---|---|
| Machine number | |
| Failure rate | |
| Repair rate | |
| Good products probability | |
| Energy consumption rate | |
| Buffer capacity | |
| Throughput benefit | |
| Energy consumption cost | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cui, P.; Lu, X. A Dynamic Energy-Saving Control Method for Multistage Manufacturing Systems with Product Quality Scrap. Sustainability 2025, 17, 6164. https://doi.org/10.3390/su17136164
