1. Introduction

Training highly competent engineers has become a global priority for higher education institutions. In a context characterised by rapid technological advances, globalisation and complex social challenges such as climate change and social equity, engineering programmes face increasing pressure to ensure that their graduates possess not only high-level technical knowledge but also transversal or generic competencies such as teamwork, problem-solving, effective communication and autonomous learning. These skills are indispensable for facing the challenges of a globalised and constantly evolving work environment. This approach is essential to foster inclusive and quality education, aligned with the principles of the United Nations' Sustainable Development Goal 4 (SDG4), for which UNESCO is the lead agency.

In this scenario, the effective assessment of competences is positioned as a fundamental component to ensure that students achieve the expected standards of professional training. The assessment of generic competences poses a complex challenge for higher education institutions. The tools used must be valid, reliable and adapted to the academic and professional context of the students. In addition, they must allow an equitable and standardised measurement without neglecting the uniqueness of each institution and programme. In this sense, previous studies have highlighted the importance of integrating standardised tests, such as the Collegiate Learning Assessment (CLA), with qualitative methodologies, such as evaluating portfolios and using rubrics [1,2].

Technology has increasingly played a crucial role in optimising assessment processes [3,4], particularly in the context of online education and the integration of artificial intelligence (AI) tools to support inclusive and personalised learning. Despite the variety of existing assessment tools, many exhibit limitations in terms of scope, flexibility, and their capacity to comprehensively capture the qualitative and contextual dimensions of competencies. Although tools based on artificial intelligence and machine learning promise to improve accuracy and reduce bias, they still face barriers to widespread adoption due to ethical and technological concerns [5,6]. These tools must be designed and used ethically, promoting equity and accessibility at all educational levels. In addition, many studies have focused on quantitative or generic indicators, leaving aside aspects such as application in real scenarios or the integration of interdisciplinary approaches. These shortcomings generate a significant gap in the literature and educational practice, making it challenging to implement assessment approaches that are effective, inclusive, and aligned with the needs of both students and the labour market.

Universities, for their part, have become aware of the need to improve the assessment of these competencies, but progress has generally fallen short of what is desired [7]. Formulating tailor-made evaluations that focus specifically on the set of generic competencies could therefore strengthen the reliability and validity of the assessment. At the same time, it is crucial to consider relevant previous studies, which can provide perspectives, data, and experiences that are useful to the educational process and can themselves be improved and enriched. This involves reviewing the existing literature on assessment tools and specific competencies within engineering careers. Integrating these tools can provide a more robust framework for measuring the development of technical and soft skills in students, thus ensuring that the training received is aligned with the demands of the labour market. This will not only allow for more accurate assessment but will also encourage continuous learning and the adaptation of students to the new technologies and work methods required in the engineering field [8,9].

The aim of this paper is twofold. First, it analyses the current state of competency-based assessment in engineering education through a systematic mapping of the literature from 2019 to 2024. This mapping highlights trends, gaps, and challenges—particularly the limited attention to transversal skills and the scarce integration of digital tools into assessment practices. Second, the paper presents SmartRubrics, an AI-powered tool developed in direct response to the findings of the mapping. The tool supports the automatic generation of assessment rubrics aligned with competency-based education frameworks, aiming to enhance assessment quality, consistency, and inclusivity.

Our main contribution lies in bridging empirical evidence and technological innovation: we provide a systematic and up-to-date synthesis of the literature on competency-based assessment in engineering, and based on that, we propose SmartRubrics as a concrete, AI-based solution that addresses the identified gaps. This combination of literature-driven analysis and practical tool development offers a comprehensive approach to strengthening competency assessment practices in engineering education.

The article is organised as follows:

Section 2 presents general background related to competency assessment in engineering;

Section 3 provides a brief overview of related research;

Section 4 details the methodology used for the systematic literature mapping;

Section 5 describes the results obtained from the systematic mapping and the answers to the research questions;

Section 6 presents a proposed AI-based tool for the automatic generation of competency assessment instruments; and finally,

Section 8 presents the conclusions and future work.

2. Background

Engineering programmes involve acquiring a set of competencies that students must develop throughout their academic training, which are essential for their professional development. These competencies include technical knowledge and transversal skills, which enable graduates to tackle real-world problems and find effective solutions. The concept of competency encompasses knowledge, attitudes, and skills, and its development must be measurable to determine whether it has been successfully achieved [10,11,12,13]. However, the assessment of competencies in engineering presents significant challenges, as it involves clearly defining what should be measured and how to measure it [14]. In this process, the lack of a clear methodology can lead to unreliable data collection, making it difficult to make evidence-based educational decisions. Inadequate assessment may generate information that leads to inappropriate decisions, ultimately affecting the training of future engineers [14].

To address this challenge, several tools and methodologies have been developed to assess engineering competencies. Assessment rubrics have been widely used in higher education to establish specific performance criteria, and they stand out in assessing competencies such as systems thinking and complex problem-solving [15,16]. In a different context, workplace-based assessments (WBAs) have been implemented in medical education to provide real-time feedback, although studies show that these tools often lack clear strategies for performance improvement [17]. Assessment automation has gained relevance in engineering, improving learning through data analysis. An assessment framework developed at the Pontificia Universidad Católica de Valparaíso (Chile) optimised the detection of curricular deficiencies and provided personalised feedback to improve student performance [18]. In addition, design-based learning (DBL) strategies have been shown to enhance cross-cutting skills such as collaboration and critical thinking, and they are particularly effective in developing sustainability competencies [16].

Engineering competencies can be divided into two main categories: professional competencies and transversal competencies. Professional competencies are specific to the practice of the profession and are based on disciplinary knowledge acquired throughout academic training. In contrast, transversal competencies encompass skills essential to the overall performance of the engineer, such as leadership, effective communication, teamwork, and the ability to solve interdisciplinary problems. Recent literature has shown that transversal competencies are fundamental for the professional success of engineers, as they facilitate their integration into multidisciplinary teams and improve their adaptability in a constantly changing work environment [19].

Despite these advances, most of the studies on competency assessment in engineering have focused on measuring technical competencies, leaving a gap in the assessment of generic competencies. Competency-based education (CBE) has promoted incorporating assessment methodologies that are more aligned with learning outcomes, such as the Outcome-Based Education (OBE) model, which emphasises the importance of measuring student achievement in a structured way [19]. However, implementing this approach in engineering faces significant challenges, such as the lack of specific methodologies to assess the development of transversal competencies and the need to update the curriculum to adapt to this model [20].

Recent literature suggests that combining technological tools with innovative educational approaches can improve the effectiveness of competency assessment in engineering. However, there are still gaps in integrating these methods within the curricula [21]. In this context, studies need to quantify the degree of attention that the literature pays to the assessment of generic competencies in engineering education, allowing the identification of trends and opportunities for improvement in the teaching and learning processes.

Some research has highlighted the need to integrate sustainability, ethics, and transversal skills into engineering education to address global challenges and meet industry expectations. For example, strategies that embed sustainability competencies and collaborative learning environments have proven effective in fostering critical thinking and professional responsibility among engineering students [22,23]. These approaches align with the growing emphasis on holistic education models, which demand both technical proficiency and socio-ethical awareness in graduates [23,24].

3. Related Work

The assessment of competencies in engineering education has been a widely studied topic. Various methodologies, tools, and strategies have been proposed to guarantee the quality of learning and its alignment with the needs of the industrial sector [14]. For example, in [25], the authors explored the use of learning models to assess cognitive competencies in programming students. This approach is based on the application of Bloom’s taxonomy as a framework to classify levels of cognitive development, ranging from basic skills to higher-order thinking processes. By utilising specialised neural networks and text analysis techniques, the system is capable of automatically evaluating open-ended responses and accurately identifying cognitive strengths and weaknesses. This line of research highlights the potential of artificial intelligence to transform educational assessment processes, surpassing the limitations of traditional manual methods, which often hinder the provision of timely and personalised feedback. In this context, the need for automated, adaptable tools aligned with standardised educational frameworks, such as those being developed through the SmartRubrics initiative, is reinforced.

Another line of research has addressed the challenge of assessing professional competencies in computer science education, emphasising the need to coherently align learning outcomes with the assessment instruments employed [26]. Various studies have demonstrated that traditional assessment practices are insufficient for capturing the development of complex skills, such as communication, critical thinking, collaboration, and problem-solving. Furthermore, a lack of clear conceptual frameworks has been identified to guide the design of practical and context-sensitive assessment tools. In this scenario, the development of solutions such as SmartRubrics becomes particularly relevant, enabling the automated generation of customised rubrics aligned with professional standards and designed to support more valid, systematic, and competency-focused assessments.

Various studies have contributed to defining models of specific competencies, establishing strategies for their assessment, and proposing monitoring systems that ensure continuous improvement in learning [25,26,27,28]. This conceptual framework addresses four key dimensions: competency models in engineering, the assessment of mathematical competencies, evaluation and monitoring mechanisms, and the development of professional competencies. Each of these dimensions provides essential elements for understanding the current challenges in competency-based education and for supporting innovative proposals such as SmartRubrics.

3.1. Competency Models in Engineering

Various studies have proposed competency models for engineering education. For example, the Engineering Competency Model provides guidance for the professional development of engineers, promoting the understanding of essential skills for a globally competitive workforce [29]. In addition, Male developed a model of 11 generic competency factors required in engineering in Australia, which supports improving curriculum design and programme evaluation processes [30].

3.2. Assessment of Mathematical Competencies

A critical aspect of engineering education is the assessment of mathematical competencies. A recent study evaluated these competencies in electrical engineering students, identifying eight key components and suggesting that a lack of proficiency in these areas affects performance in highly mathematical courses [31]. Another innovative strategy is the use of video lessons as a tool to assess mathematical competencies in engineering, allowing for evaluation beyond written exams [32].

3.3. Assessment and Monitoring of Competencies

Assessing and monitoring competencies in engineering programmes is key to improving educational quality. Hermosilla et al. proposed a system that links expected learning outcomes with competency assessment, enabling more precise tracking of student development [33]. Similarly, the systematic review by Moozeh et al. explored the drivers and barriers to implementing competency-based assessments in engineering programmes [34].

3.4. Development of Professional Competencies

Another important focus is the development of professional competencies. Malheiro et al. proposed the 4C2S framework, which includes critical thinking, problem-solving, communication, teamwork, ethics, and sustainability, to ensure the comprehensive preparation of engineering students [35]. Additionally, Ebrahiminejad identified key competencies needed in the industry and strategies to address training deficiencies among students [36].

The reviewed studies highlight the importance of well-defined competency models, innovative strategies for assessing mathematical skills, and effective monitoring systems. Furthermore, they recognise the need to develop professional competencies aligned with the demands of the industrial sector. While implementing competency-based assessment strategies remains challenging, recent research provides a solid framework to enhance engineering education and its alignment with the labour market.

4. Method

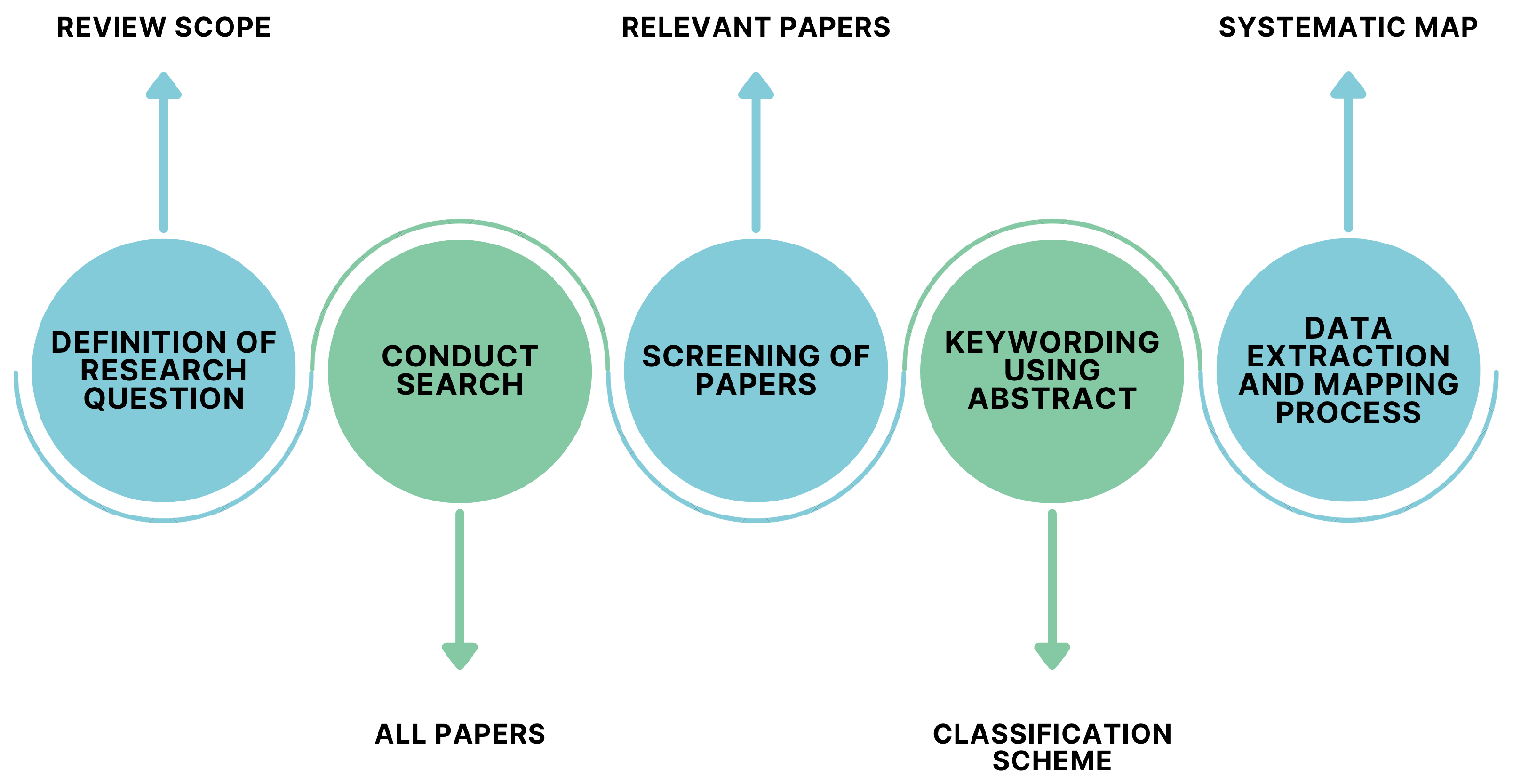

The methodology applies specific steps of the systematic mapping technique, which allows for identifying, classifying and analysing the relevant literature for a specific research topic [37]. This method is commonly used to answer one or more research questions systematically. We opted for a systematic mapping study (SMS) instead of a systematic literature review (SLR) due to the exploratory and scoping nature of our investigation. The field is characterised by a wide diversity of approaches, assessment models, technologies, and target competencies (e.g., technical vs. transversal), which are not uniformly addressed in the literature. SMS is particularly suitable for gaining a broad overview of research activity, identifying gaps, and categorising contributions across multiple dimensions (tools, strategies, frameworks). In contrast, SLRs are more appropriate when addressing narrow and well-defined research questions, typically involving comparisons of effectiveness or synthesis of evidence. Therefore, the SMS enabled us to map the landscape and identify the need for a tailored tool, such as SmartRubrics, which directly addresses the deficiencies identified in the reviewed literature.

The phases that make up the adapted systematic mapping process are described in Figure 1.

4.1. Objectives and Research Questions

This systematic mapping aims to identify, analyse, and categorise the tools used for assessing competencies in engineering subjects and provide a broad and detailed view of the methods and approaches used in measuring technical and soft skills. To this end, the aim is to compile and classify the specialised literature, describe the most common assessment procedures (such as rubrics, projects, portfolios and competency-based assessments), and examine their advantages and limitations in different educational contexts. This process will also make it possible to detect trends and gaps in research and teaching practice, highlighting areas that require further deepening or innovation, especially in the context of inclusive and sustainable education.

We have developed specific research questions, which are detailed in Table 1, to gather relevant literature and discern trends associated with the research topic.

This approach will give us a more complete and detailed understanding of the research topic, providing us with the tools necessary to address it effectively and meaningfully.

4.2. Search String to Be Applied

To answer the research questions, we conducted a search of data sources using search strings consisting of study-specific keywords. We used the PICO (Population, Intervention, Comparison, and Outcome) strategy outlined by Petersen [38] and searched for articles using the search string described in Table 2.
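As an illustration of how a PICO-style search string is commonly assembled, the following minimal Python sketch joins synonyms with OR inside each group and joins the groups with AND. The keyword groups are hypothetical placeholders chosen for this example and are not the terms reported in Table 2; the Comparison component is omitted here only for brevity.

```python
# Hypothetical keyword groups (illustrative only); the terms actually used
# in the study are the ones listed in Table 2.
PICO_TERMS = {
    "population": ["engineering education", "engineering students"],
    "intervention": ["assessment tool", "rubric"],
    "outcome": ["generic competencies", "transversal skills"],
}


def build_search_string(groups):
    """Join synonyms with OR inside a group and join the groups with AND."""
    clauses = []
    for terms in groups.values():
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


search_string = build_search_string(PICO_TERMS)
print(search_string)
```

Each database has its own query syntax, so a string built this way would still need to be adapted to the field codes of each source.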

4.3. Data Extraction

To support this study with a comprehensive and robust search, sources were selected from digital databases of scientific publications: Web of Science (WoS), Scopus, IEEE Xplore, and the ACM Digital Library.

The selection of these databases was guided by the goal of obtaining a broad and diverse range of articles related to our research topic. Utilising these four sources allowed us access to a substantial body of relevant academic and scientific literature, enriching our analysis’s knowledge base.

Specific criteria were established to enhance search accuracy, with particular attention given to document titles, abstracts, introductions, and conclusions. Leveraging this functionality, available across all consulted databases, proved essential in identifying academic and scientific articles most closely aligned with our research objectives.

4.4. Inclusion and Exclusion Criteria

The articles selected from the previously described data sources were filtered according to the following inclusion and exclusion criteria.

4.4.1. Inclusion Criteria

Research articles from scientific journals and conferences.

Articles considering tools for assessing generic competencies in engineering programmes.

Articles published since 2019.

4.4.2. Exclusion Criteria

Articles published before 2019.

Articles that do not include topics related to tools for assessing generic competencies in engineering programmes.

Duplicate studies found in different databases.

Incomplete articles.

Articles not published in journals or conference proceedings.

Articles not available in open access.

Articles not relevant to the search string.

Reviews (which may be used in related works).
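The criteria above can be read as a single screening filter. The sketch below is one possible encoding in Python, under the assumption that each retrieved article is stored as a record with the fields shown; the `Record` class, its field names, and the sample records are all hypothetical and serve only to exercise the logic.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical bibliographic record; all field names are illustrative."""
    title: str
    year: int
    venue_type: str      # e.g. "journal", "conference", "book", "preprint"
    is_review: bool
    open_access: bool
    complete: bool       # full text is available and not truncated
    on_topic: bool       # reviewer judgement on relevance to the topic


def passes_screening(rec, seen_titles):
    """Apply the inclusion and exclusion criteria to one record."""
    key = rec.title.strip().lower()
    if key in seen_titles:                      # duplicate found in another database
        return False
    seen_titles.add(key)
    if rec.year < 2019:                         # published before 2019
        return False
    if rec.venue_type not in {"journal", "conference"}:
        return False
    if rec.is_review:                           # reviews are set aside for related work
        return False
    return rec.open_access and rec.complete and rec.on_topic


# Made-up records used only to exercise the filter.
records = [
    Record("Rubric generator", 2022, "journal", False, True, True, True),
    Record("rubric generator", 2022, "journal", False, True, True, True),  # duplicate
    Record("Older study", 2017, "conference", False, True, True, True),
    Record("Survey of tools", 2023, "journal", True, True, True, True),
    Record("Paywalled study", 2021, "journal", False, False, True, True),
]
seen = set()
kept = [r for r in records if passes_screening(r, seen)]
print([r.title for r in kept])
```

In practice the relevance judgement (`on_topic`) is made by a human reviewer; the filter only records the outcome of that decision.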

4.5. Search Execution

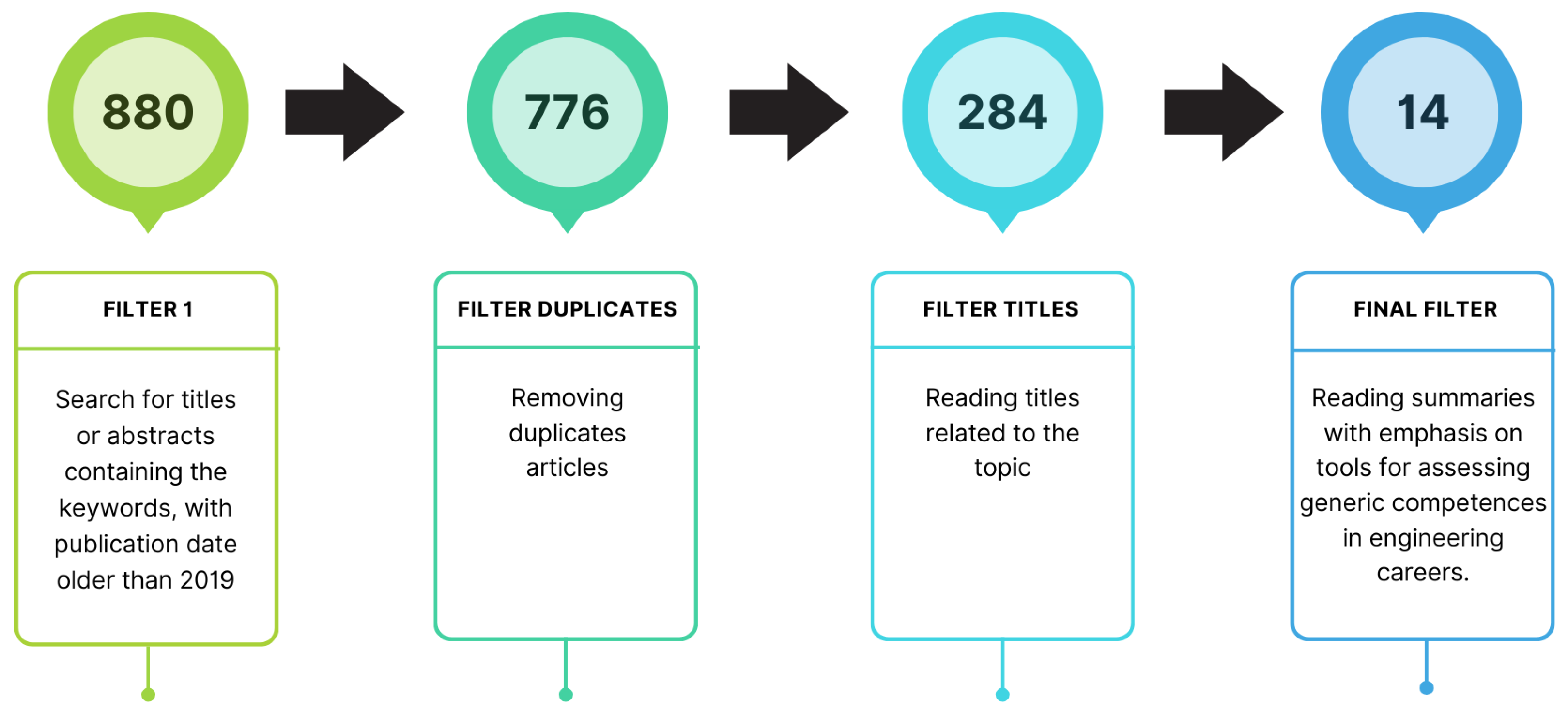

The chosen search string was used in the designated digital databases of scientific publications, resulting in a primary set of 880 related papers (see Table 3).

Data collection was conducted using the export functions provided by each digital library. After removing duplicate articles, the total count was reduced to 776. Subsequently, the agreed inclusion and exclusion criteria were applied. Titles, abstracts, and introductions were evaluated, and 284 articles relevant to the search were selected.

Finally, a detailed review of the selected articles was conducted. During this stage, articles addressing topics related to the assessment of competencies in contexts outside engineering education, general curriculum development, or teaching methodologies not directly involving the measurement or evaluation of technical and soft skills in engineering programmes were excluded. After applying these criteria, 14 relevant articles were identified and retained for further analysis in this research.
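The arithmetic of this filtering process can be checked with a short Python sketch. The four counts are those reported in the text; the stage labels and the function name are our own shorthand for this example.

```python
# Record counts reported for each stage of the search execution.
stages = [
    ("Initial search results", 880),
    ("After duplicate removal", 776),
    ("After title/abstract/introduction screening", 284),
    ("After detailed review", 14),
]


def funnel_summary(stages):
    """Return (stage, remaining, removed_at_stage, percent_of_initial) rows."""
    initial = stages[0][1]
    previous = initial
    rows = []
    for name, remaining in stages:
        removed = previous - remaining
        percent = round(100 * remaining / initial, 1)
        rows.append((name, remaining, removed, percent))
        previous = remaining
    return rows


summary = funnel_summary(stages)
for name, remaining, removed, percent in summary:
    print(f"{name}: {remaining} remaining, {removed} removed, {percent}% of initial set")
```

Under these counts, 104 duplicates were removed, 492 articles were excluded at the screening stage, and 270 at the detailed review, leaving about 1.6% of the initial set.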

This item filtering process is depicted in Figure 2.

Table 4 details the 14 selected articles, including their bibliographic reference, title, and year of publication.

4.6. Classification Scheme

The 14 selected articles were classified into three main categories: Analysis, Use of Existing Resources, and Implementation/Proposals. Regarding the temporal dimension, the articles were categorised according to their year of publication, highlighting that the selection was limited to those published from 2019 onwards. This temporal criterion allows us to focus on recent and relevant research to obtain an updated perspective on the topic of study.

The article type dimension categorises the papers into the following:

Analysis: This refers to articles describing analyses and comparisons of the literature on tools for assessing generic competencies in engineering careers.

Use of Existing Resources (Experiences): This refers to studies or papers related to the application or use of resources, tools, methodologies, knowledge or technologies that have been previously developed and established to assess generic competencies in engineering careers.

Implementation/Proposals: This involves the real-world application of a tool, model, or framework in formal educational contexts (e.g., integration into existing curricula or courses). This category implies a more mature stage of development, where the solution is used under authentic teaching and learning conditions.

It is important to note that an article may be classified into more than one category, as the nature of academic contributions often spans multiple scopes of analysis and application. For instance, a study may simultaneously present a literature review or theoretical analysis (Analysis), alongside a practical case where the proposed or existing tool is implemented or applied in an educational context (Use of Existing Resources or Implementation/Proposals). This overlap reflects the interdisciplinary and integrative character of research in engineering education, where theoretical reflection, practical application and innovation frequently converge within the same work. Therefore, allowing for multi-categorisation ensures a more accurate representation of the content and purpose of each article analysed.

4.7. Map Construction

As part of the systematic mapping focused on tools for assessing generic competencies in engineering careers, a map has been developed that acts as a key tool to facilitate the representation and analysis of the data collected. In Section 5.1, we present the map generated in this process, which provides a visual and organised representation of the emerging categories identified in the studies analysed.

5. Results

In this section, we detail the results obtained from the process of classification and analysis of the studies selected through the review of the literature pertinent to our research topic related to tools for assessing generic competencies in engineering careers.

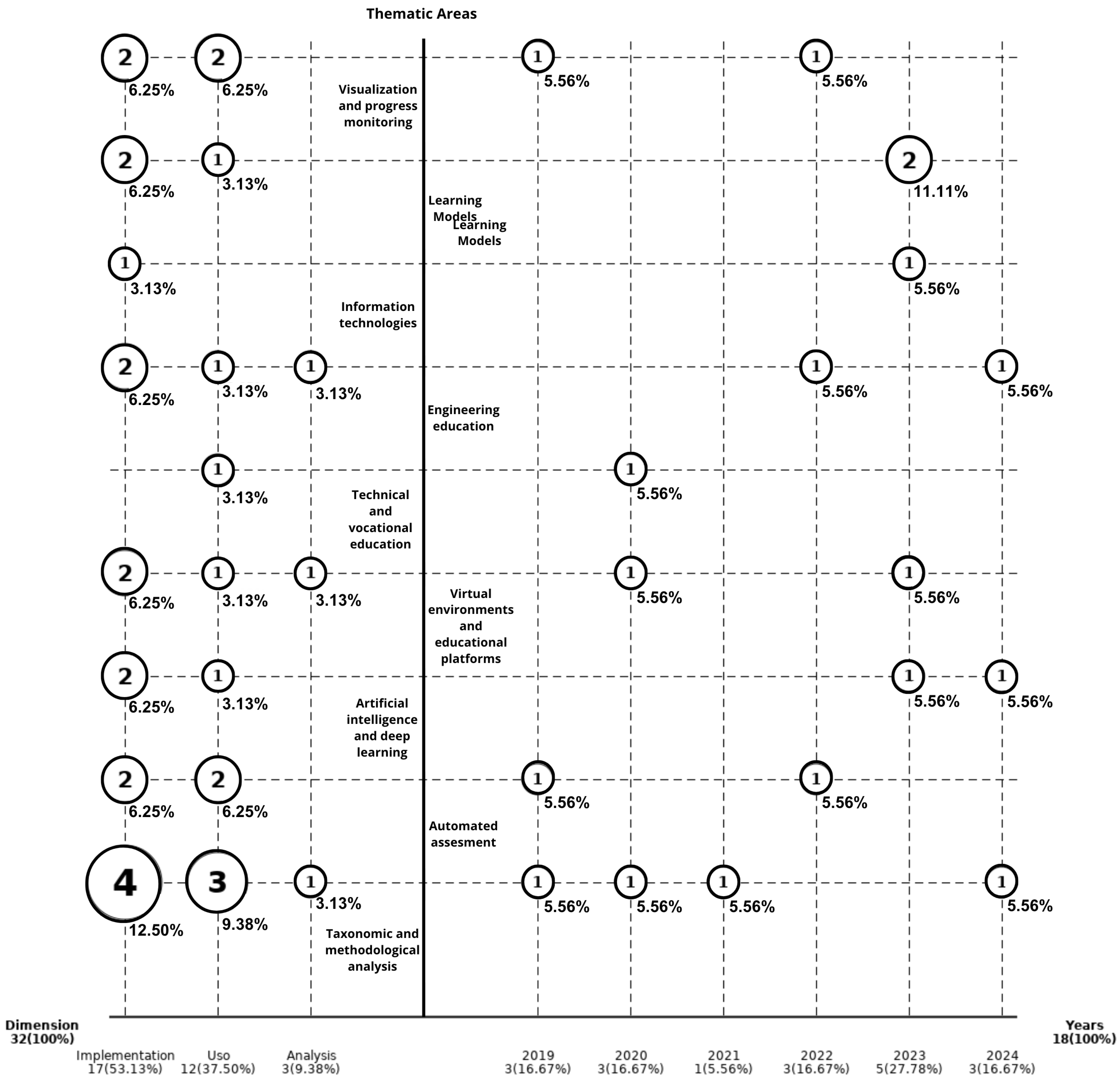

5.1. Systematic Mapping

A visual map has been developed to effectively represent and analyse the studies selected for this research. Figure 3 presents these studies organised according to three main classification dimensions:

Thematic Areas: Nine content-based categories reflecting key topics related to competency-based assessment in engineering education (e.g., learning models, automated assessment, artificial intelligence and deep learning, technical and vocational education, etc.). These are shown along the central vertical axis.

Type of Contribution: This refers to the nature of the study. Articles are classified into Analysis (literature-based or conceptual work), Use (practical application of existing tools or models), or Implementation (theoretical proposals of new tools or approaches). This classification is displayed along the lower left horizontal axis.

Publication Year: This indicates the temporal distribution of studies from 2019 to 2024, and is shown along the lower right horizontal axis.

The labels along the left margin of the figure correspond to the thematic areas, allowing a clear view of the diversity of topics addressed by the selected literature.

Each article was reviewed and assigned to one or more types of contribution, depending on its content. For example, a study may include a theoretical proposal (Implementation) and also apply that tool in a case study (Use). This multi-classification explains why the total number of instances exceeds the 14 articles selected through the systematic mapping protocol.

As previously explained, the classification framework allows for multi-categorisation, whereby a single article may be assigned to more than one type of contribution, for example, combining an analytical review with a practical implementation or use case. This methodological decision enables a more nuanced representation of the literature and helps capture the complexity of contributions in the field of engineering education. According to this framework, the quadrant on the left of the figure illustrates the distribution of articles by type of contribution and thematic area. The classification reveals that most instances are concentrated in the Implementation category, with 17 instances. The Use category is the second most represented, with 12 instances, while the Analysis category includes only 3.
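To make the effect of multi-categorisation concrete, the following Python sketch computes the share of each contribution type from the instance counts reported above. The counts and the number of articles come from the text; the variable names are ours, and the per-article average is a derived illustration rather than a figure reported by the study.

```python
from collections import Counter

# Classification instances per contribution type, as reported in the text.
instances = Counter({"Implementation": 17, "Use": 12, "Analysis": 3})
n_articles = 14

total_instances = sum(instances.values())

# Percentage of all classification instances that fall in each category.
shares = {
    category: round(100 * count / total_instances, 1)
    for category, count in instances.items()
}

# Derived value: average number of classification instances per article.
instances_per_article = round(total_instances / n_articles, 2)

print(total_instances, shares, instances_per_article)
```

The total of 32 instances for 14 articles shows directly why the number of instances in the map exceeds the number of selected studies.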

The findings allow us to identify clear trends in research on competence assurance in engineering. First, there is a marked predominance of articles classified in the Implementation category, with presence in almost all the topics. This reflects a strong focus of researchers on the theoretical development of tools and methodologies without necessarily having yet implemented them in real contexts. This situation suggests that the field is in a stage of conceptual consolidation, where the design of solutions is privileged over their implementation.

These results suggest that current research in competency-based assessment within engineering education is primarily focused on conceptual development, rather than practical application or empirical validation. This trend indicates a stage of conceptual consolidation, where researchers prioritise proposing new models over their implementation in real-world educational contexts.

Secondly, the Use category is also significantly represented, especially in Taxonomic and Methodological Analysis. This indicates that some initiatives have managed to advance towards the practical validation of their proposals, which is a positive sign of maturity in certain lines of research. However, the smaller number of instances in this category compared with Implementation suggests that the practical adoption of the proposed methodologies is still limited.

The Analysis category presents marginal participation, with only three articles distributed on specific topics such as learning outcomes, generic competencies, and technological tools. This scarce representation may indicate that most papers have already passed the exclusively exploratory stage and are currently focused on applied proposals. This may be interpreted as a reflection of the state of maturity of the field, where efforts are concentrated on designing concrete solutions rather than continuing with initial diagnoses.

Finally, when observing the thematic distribution, it is identified that the areas with the highest number of proposals, both in Implementation and Use, are evaluation of competencies, assurance of competencies, and learning outcomes, which suggests that these constitute the most developed thematic cores in the literature reviewed. This concentration could also be due to the strategic importance of these dimensions within engineering training processes, where the assurance of competencies is key to guaranteeing educational quality.

In the right quadrant of the figure, the classification of publications is organised by year intervals, offering a chronological perspective and highlighting the research’s temporal trends. In the central axis, the different thematic categories of the papers are shown, allowing a quick understanding of the research areas addressed.

The analysis of this framework reveals that the majority of articles were published between 2019 and 2022, which indicates a sustained increase in interest in this line of research. This boom could be linked to the increasing incorporation of competency-based educational approaches and the need to respond to the transformations of the professional and technological environment.

In general, an even and sustained distribution is observed throughout the years of the evaluated period. The average productivity is three articles per year, except in 2021, when only one article on this subject was published. In contrast, five articles were published in 2023.

The impact of the COVID-19 pandemic from 2020 onwards can explain the publication rate. The global health crisis forced educational institutions to quickly adapt their teaching and assessment methodologies, which prompted greater attention to measuring learning outcomes, using technological tools, and revising educational models. In this context, topics such as competency assessment, competency assurance, and learning outcomes became priorities in teaching practice and academic research.

This behaviour also reflects thematic diversification, which is evidence of an expanding field in depth and breadth. When reviewing the distribution by topic, it is observed that areas such as competency assessment and competency assurance maintain a constant presence over time, which indicates that they are central and consolidated topics within research in the area. Other categories such as generic competencies, technological tools, and curriculum appeared more strongly in recent years, probably in response to new training challenges, particularly those associated with digital transformation, the forced virtualisation of learning and the search for strategies to guarantee educational quality in remote environments.

5.2. Overall Analysis by Types of Approaches

This section presents an analysis of the results obtained from the thematic classification of the 14 studies selected. In contrast to the analysis presented in Section 5.1, this section takes a closer look at the types of approaches used in assessing competences in engineering degrees, grouping the proposals found into three main types of approaches: assessment tools, assessment methodologies, and assessment strategies. This distinction enables a more precise structuring of the diversity of approaches present in the reviewed literature.

Below are three tables that group the studies according to the types of approach identified, describing each approach and its application in education.

Table 5 presents a collection of tools designed for automated and systematic assessment of engineering competencies. These tools include systems based on artificial intelligence, machine learning, and digital platforms that enable the monitoring and assessment of student skills.

In Table 6, the methodologies used for the assessment of competencies in engineering careers are presented. These methodologies include structured approaches based on theoretical models, such as Bloom’s taxonomy and decision trees, and frameworks for assessing IT competencies.

Table 7 compiles various strategies used in assessing engineering competencies. Unlike tools and methodologies, these strategies include pedagogical approaches encouraging active learning and assessment in applied contexts.

This category includes strategies such as hackathons and ideathons, which allow the assessment of competencies by solving real problems in collaborative environments.

5.3. Responding to Research Questions

RQ1: How many documents present proposals to assess competencies?

Of the 14 documents analysed in this study, 13 explicitly present proposals for competency assessment. The systematic review shows that there are several approaches to competency assessment, including technological tools, structured methodologies, and innovative pedagogical strategies. This finding allows identifying areas of opportunity for future research, such as the development of more flexible and adaptive assessment models or the integration of new technologies in engineering assessment processes.

RQ2: Of the selected documents, how many present proposals to assess generic competencies in engineering careers?

Six documents have been identified that present specific proposals to assess generic competencies in engineering careers. The documents are as follows:

Design of a Self-Assessment Proposal for the Development of Student Self-Regulation in Higher Education [45]; this article proposes a self-assessment system to develop competencies such as self-regulation, critical thinking and decision making in students.

Towards the Development of Complex Thinking in University Students: Mixed Methods with Ideathon and Artificial Intelligence [48]; this article presents a methodology based on ideathons with artificial intelligence to foster critical thinking, creativity and teamwork in engineering students.

Innovative Technologies for the Formation and Assessment of Competencies and Skills in the XXI Century [43]; this article explores emerging technologies for assessing cross-cutting skills, including autonomous learning and complex problem solving in educational settings.

GRAF: A System for the Assessment, Accreditation and Representation of Competency Learning in Online Higher Education [44]; this article proposes a system to evaluate both technical competencies and soft skills necessary for professional performance.

Competence-Based Assessment Tools for Engineering Higher Education: A Case Study on Complex Problem-Solving [50]; this article presents structured tools to measure students’ ability to solve complex problems and work collaboratively.

The e.DO Cube Hackathon—Transitioning to Graduate School [46]; this article analyses the use of hackathons as a strategy for evaluating transversal competencies such as collaboration, creativity and time management.

These documents address the evaluation of generic competencies in engineering, focusing on aspects such as critical thinking, teamwork, leadership and communication. The review reveals that, while there is a significant focus on assessing technical competencies in engineering, the number of studies that focus on cross-cutting competencies remains relatively low. This finding suggests the need for further research and for the development of specific strategies to assess these competencies, given their fundamental role in forming engineers capable of facing the challenges of the professional world.

RQ3: What assessment tools/techniques are used to assess competencies in the selected proposals?

The results show that the most commonly used tools are approaches based on practical and participatory activities, such as hackathons, ideathons, and generation of real or simulated situations and environments that reflect authentic problems and practical experiences. These techniques are well aligned with the needs of the educational context in engineering, where competency assessment must be objective, applicable, and flexible.

In addition, there is a gap in assessing interpersonal competencies, such as leadership and teamwork, which could benefit from techniques such as collaborative simulations or interaction analysis in group projects.

RQ4: What technological tools have been integrated into engineering competency-based assessment models?

Table 8 indicates that artificial intelligence, machine learning, and digital assessment platforms are the most commonly used technologies. These tools have been implemented in various models to improve assessment accuracy, optimise feedback, and enable more detailed monitoring of student learning.

These technologies are aligned with engineering education needs, facilitating more objective, personalised, and scalable assessments.

5.4. Discussion of Results

The systematic mapping allowed for identifying relevant patterns in the use of tools, methodologies, and strategies for competency assessment in engineering programmes. Based on the analysis of the 14 selected studies, a series of key findings emerged that help to understand the current state of this research area and its main challenges.

First, there is a strong concentration of studies in the category of theoretical implementation, indicating a predominance of conceptual proposals over practical applications. Although these initiatives represent a significant advance in the design of competence-based assessment models, their limited empirical validation is a notable weakness. This observation coincides with [14], who highlight the persistent disconnect between the proposed assessment frameworks and their actual application in educational contexts.

Notably, only a minority of the studies reviewed focus explicitly on the assessment of generic or transversal competencies, despite the increasing relevance of these skills in professional engineering contexts. This finding echoes concerns raised by Male [11, 30], who stress the need to systematically integrate soft skills, such as teamwork, communication, and critical thinking, into curriculum design and evaluation models. The current imbalance between the assessment of technical and transversal skills suggests an ongoing challenge in operationalising comprehensive competency-based education.

The integration of digital technologies, including artificial intelligence (AI) and machine learning (ML), is emerging as a promising area. However, the review reveals that many proposed systems remain isolated prototypes with limited LMS integration and few examples of large-scale deployment. This limitation reduces their practical value and scalability. It also raises concerns about sustainability, usability, and institutional adoption, factors highlighted in previous studies on educational technology implementation [3, 5].

Additionally, limitations in personalising assessment processes were identified, as many of the analysed models do not allow for the adaptation of assessment instruments to the specific needs of courses, institutions, or student profiles. This hinders effective formative feedback and restricts the potential of these tools to support autonomous and self-regulated learning.

Finally, a lack of automation in the generation of assessment instruments was detected, contributing to a high workload for professors and inconsistency in the applied criteria. This situation underscores the need to explore solutions that can optimise the creation, adaptation, and application of rubrics and other instruments while maintaining the validity and reliability required for rigorous competency assessment.

These findings highlight significant gaps in the automation of competency assessment within engineering education. In particular, challenges are identified in the effective integration of digital technologies, the systematic evaluation of transversal competencies, the standardisation of assessment instruments, and the personalisation of processes according to the educational context. These limitations hinder the full potential of automated tools to enhance efficiency, equity, and feedback in assessment practices. Addressing these gaps is essential to move toward more robust, scalable, and learner-centred models that meet the current demands of professional training in engineering.

6. Proposal of an AI-Based Tool for the Generation of Competency-Based Evaluation Rubrics

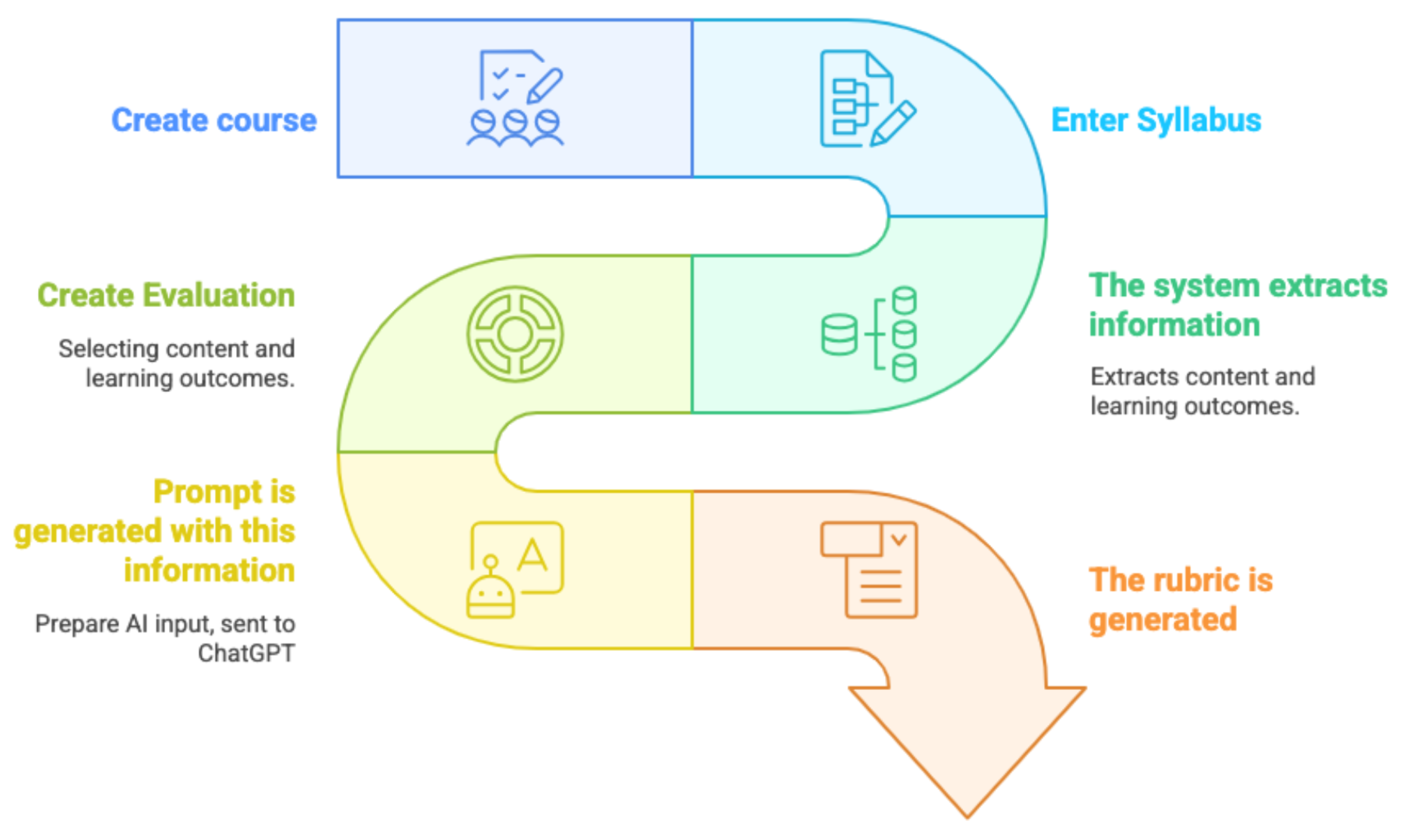

Based on the findings of the systematic mapping, we identified key challenges that hinder effective competency assessment in engineering education. In response to these challenges, we propose SmartRubrics, an AI-based tool specifically designed to support the generation of evaluation rubrics that address the gaps found in the literature—namely, the need for automation, standardisation, customisation, and digital integration.

The analysis carried out in this study has made it possible to identify several gaps in the literature on assessing competencies in engineering careers. In particular, limitations have been detected in the standardisation of assessment instruments, the lack of empirical validation of existing tools, the limited automation of assessment processes, the limited integration with learning management systems (LMS) and the low capacity to customise assessment models. In order to address these challenges, we propose the development of a tool based on artificial intelligence (AI) that allows the automatic generation of rubrics for assessing competencies in educational environments.

The proposed tool, SmartRubrics, employs a supervised machine learning model, specifically a fine-tuned version of the GPT-3.5-turbo language model, and uses Natural Language Processing (NLP) to build rubrics aligned with international frameworks such as CDIO (Conceive, Design, Implement and Operate), ABET (Accreditation Board for Engineering and Technology), and OBE (Outcome-Based Education). The system receives as input the learning outcomes and course contents, which are structured through an API that formats this information into a prompt compatible with the language model. Professors can define assessment criteria, performance levels, and target competencies through an intuitive interface, while the AI generates a structured rubric adapted to the specific context of each course or subject. The output is provided in editable Excel format, facilitating immediate application or integration into LMS platforms.

The details of this flow are shown in Figure 4.
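The step in which learning outcomes and course contents are formatted into a prompt can be illustrated with a minimal Python sketch. It is not the SmartRubrics implementation: the `RubricRequest` class, the `build_prompt` function, the default performance-level names and the prompt wording are all assumptions introduced here for illustration. Only the kinds of input (learning outcomes, course contents, criteria, performance levels and reference framework) come from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class RubricRequest:
    """Hypothetical container for the inputs a professor provides."""
    course_name: str
    learning_outcomes: list[str]
    course_contents: list[str]
    criteria: list[str] = field(default_factory=list)
    # Assumed level names; an institution would define its own scale.
    performance_levels: list[str] = field(
        default_factory=lambda: ["Insufficient", "Basic", "Proficient", "Outstanding"]
    )
    framework: str = "CDIO"  # the text also mentions ABET and OBE


def build_prompt(request: RubricRequest) -> str:
    """Format the request as a plain-text prompt for a language model."""
    if not request.learning_outcomes:
        raise ValueError("at least one learning outcome is required")

    def bullets(items: list[str]) -> str:
        # Render a list as bullet lines, with a placeholder when it is empty.
        return "\n".join(f"- {item}" for item in items) if items else "- (none provided)"

    return "\n".join([
        f"Generate an assessment rubric for the course '{request.course_name}'.",
        f"Align the rubric with the {request.framework} framework.",
        "Learning outcomes:",
        bullets(request.learning_outcomes),
        "Course contents:",
        bullets(request.course_contents),
        "Assessment criteria proposed by the professor:",
        bullets(request.criteria),
        "Performance levels (one column per level): "
        + ", ".join(request.performance_levels),
        "Return one row per criterion with a descriptor for every level.",
    ])
```

In such a design, keeping the prompt construction in a single pure function would make it straightforward to review the exact text sent to the model and to adjust it after feedback from professors.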

The design of this tool responds directly to the gaps identified in the literature. First, the automated generation of rubrics helps mitigate the lack of standardisation in assessment instruments, as the rubrics created will follow criteria based on international frameworks. Second, incorporating supervised machine learning techniques, specifically through a Natural Language Processing (NLP) model (fine-tuned GPT-3.5-turbo), will facilitate empirical validation of the rubrics, allowing iterative adjustments based on professors’ feedback and student performance. In addition, because the system is automated, the workload of professors is expected to be considerably reduced, thus addressing the low level of automation identified in the literature.

Another key aspect of the tool is its ability to integrate with LMS platforms such as Moodle, Blackboard and Canvas through specific APIs. This will allow the generated rubrics to be used directly within digital learning systems, improving the traceability of assessments and optimising feedback to students. Finally, the AI model will include customisation options that will allow each educational institution to adjust the parameters of the rubrics according to its own needs, thus addressing the problem of the low flexibility of existing tools.

6.1. Prototype Development Methodology

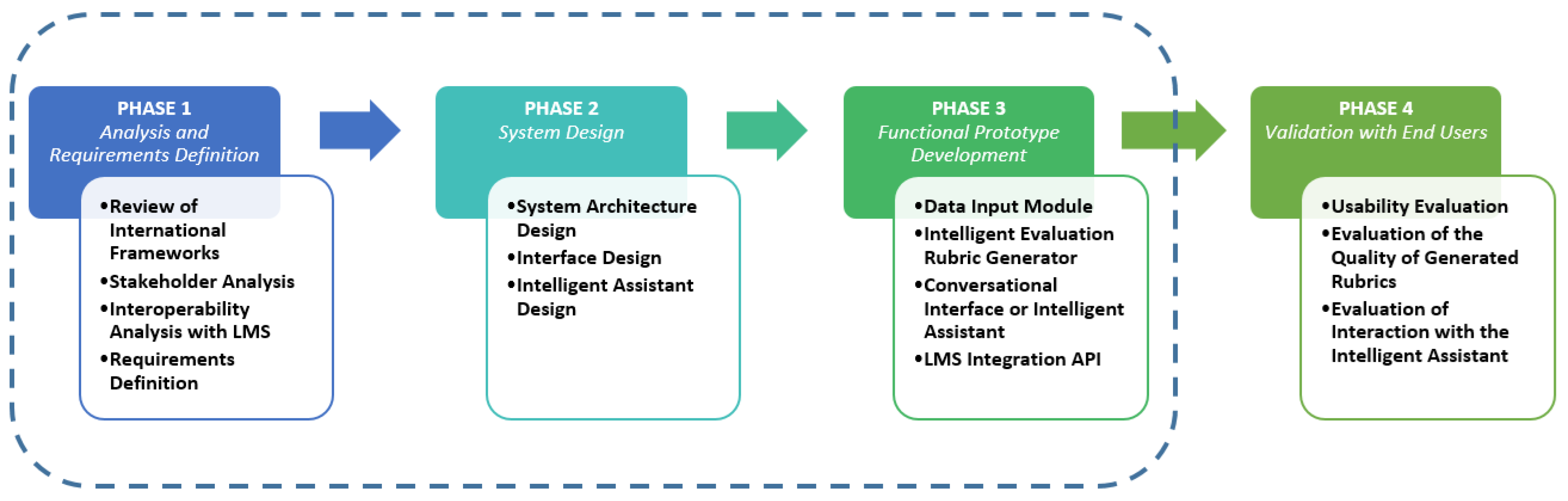

The methodology adopted for developing the SmartRubrics tool was structured in four main phases, progressing from the initial analysis to the validation of the functional prototype. Each phase comprises specific activities intended to ensure that the resulting technological solution is relevant, adaptable and aligned with the needs of competency-based assessment in engineering education.

The work reported here corresponds to the completion of Phase 3, which produced a functional prototype of the SmartRubrics tool. At this stage, the solution has undergone initial functional tests with a small group of professors from the IT area. The purpose of these tests was to evaluate the correct functioning of the system’s main features and to identify possible errors, limitations or areas for improvement. The feedback obtained allowed adjustments to be made to the usability, the quality of the generated rubrics and the interaction with the intelligent assistant. This stage is a necessary preliminary step before the planned experimental application in regular subjects, which will allow the tool to be validated in a real educational context and its impact on competency-based assessment processes to be evaluated.

The stages considered in this project are described below (see Figure 5):

Phase 1: Analysis and definition of requirements: The objective of this phase was to characterise the solution’s context and functional and non-functional requirements. International competency-based assessment frameworks, such as CDIO, ABET and OBE, were reviewed to align the tool’s functionalities with recognised standards. In addition, a stakeholder analysis was developed, considering the needs of professors, students and those responsible for quality assurance. Aspects of interoperability with learning management systems (LMS) were also evaluated, ending with the definition of the functional and technical requirements of the system.

Phase 2: System design: In this stage, the conceptual and technical components of the tool were built. The system architecture was designed to define its main modules and their interactions. At the same time, the user interfaces were designed to ensure usability and accessibility. A distinctive element of this phase was the design of the intelligent assistant, conceived as a conversational interface capable of guiding professors in generating and customising evaluation rubrics.

Phase 3: Development of the functional prototype: This phase contemplated the implementation of a functional prototype of the tool. The following main modules were developed: a data entry module to capture evaluation requirements, an intelligent evaluation rubric generator capable of creating customised instruments, a conversational interface for user-system interaction, and an integration API that allows interoperability with LMS platforms. So far, preliminary functional tests have been carried out with a small group of professors in computer science to identify errors, inconsistencies or limitations in the tool’s operation. These exploratory tests have allowed gathering direct feedback from users, facilitating the implementation of improvements and adjustments in usability, functionalities and generation of rubrics.

Phase 4: Validation with end users: This phase corresponds to a stage planned as future work, oriented to perform a broader and more formal validation of the prototype developed in real contexts of educational use. As part of the planned validation process, an experimental test will be carried out in a regular course of studies in the computer science area. This experience will be designed using an exploratory approach, where a group of participating professors will use SmartRubrics to support their competency-based evaluation processes. The experimental evaluation will consider aspects such as the tool’s usability, the perception of usefulness by the professors, the quality of the rubrics generated and the users’ satisfaction level. For this purpose, the application of data collection instruments such as surveys, semi-structured interviews and analysis of the generated rubrics is foreseen to identify strengths, weaknesses and opportunities for improvement of the solution before its possible deployment on a larger scale.

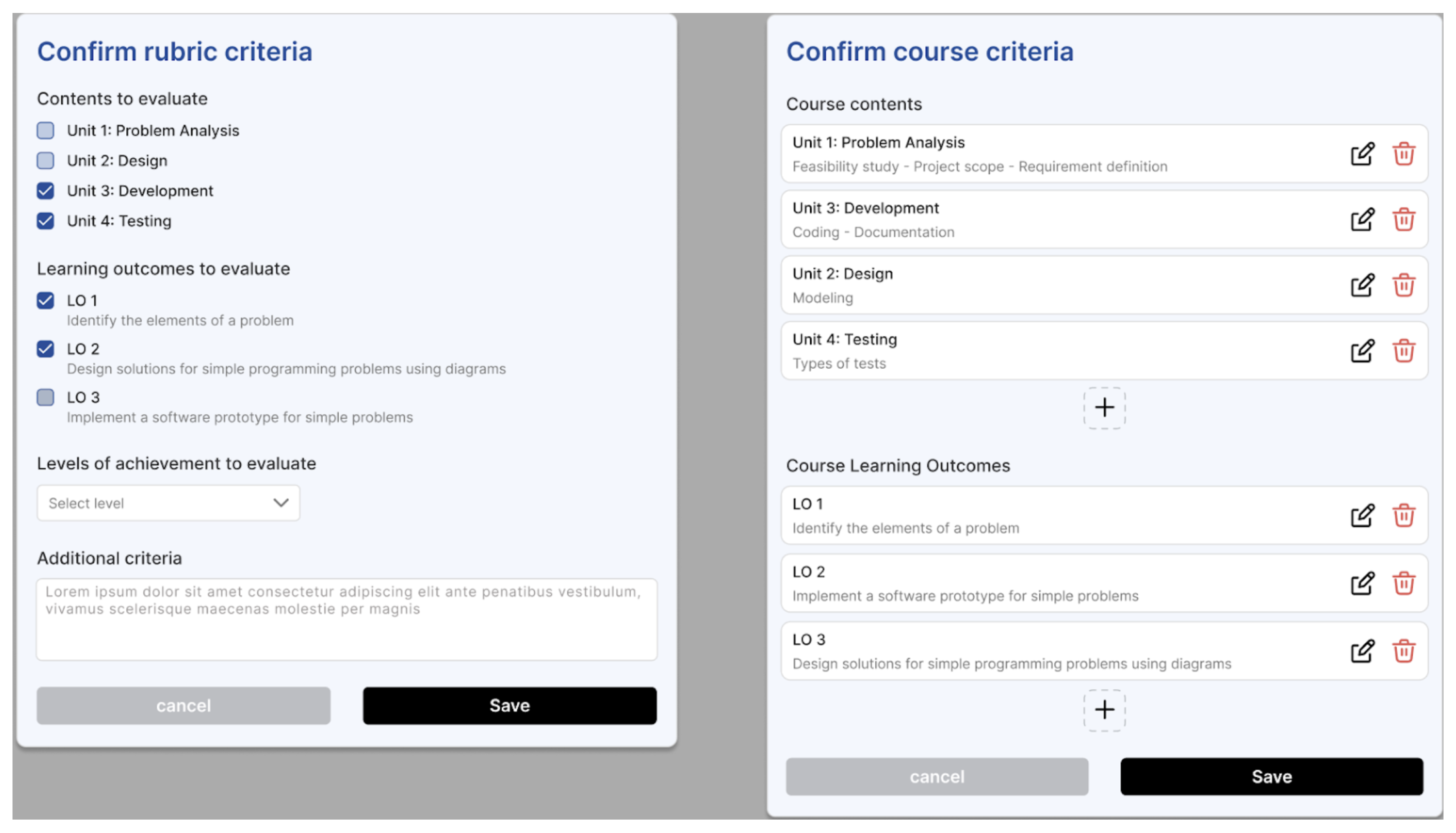

6.2. Main System Interfaces

The effectiveness of an educational technology tool depends not only on its underlying functionalities but also on the clarity, accessibility, and intuitiveness of its user interface. In this section, we present the graphical interface of the SmartRubrics system, developed to support the creation and management of competency-based assessment rubrics using artificial intelligence. The design prioritises usability for professors and assessors, facilitating the integration of advanced assessment techniques into educational practice. The following figures illustrate the key screens of the platform, highlighting its structure, navigation flow and the user-centred design principles that have been applied.

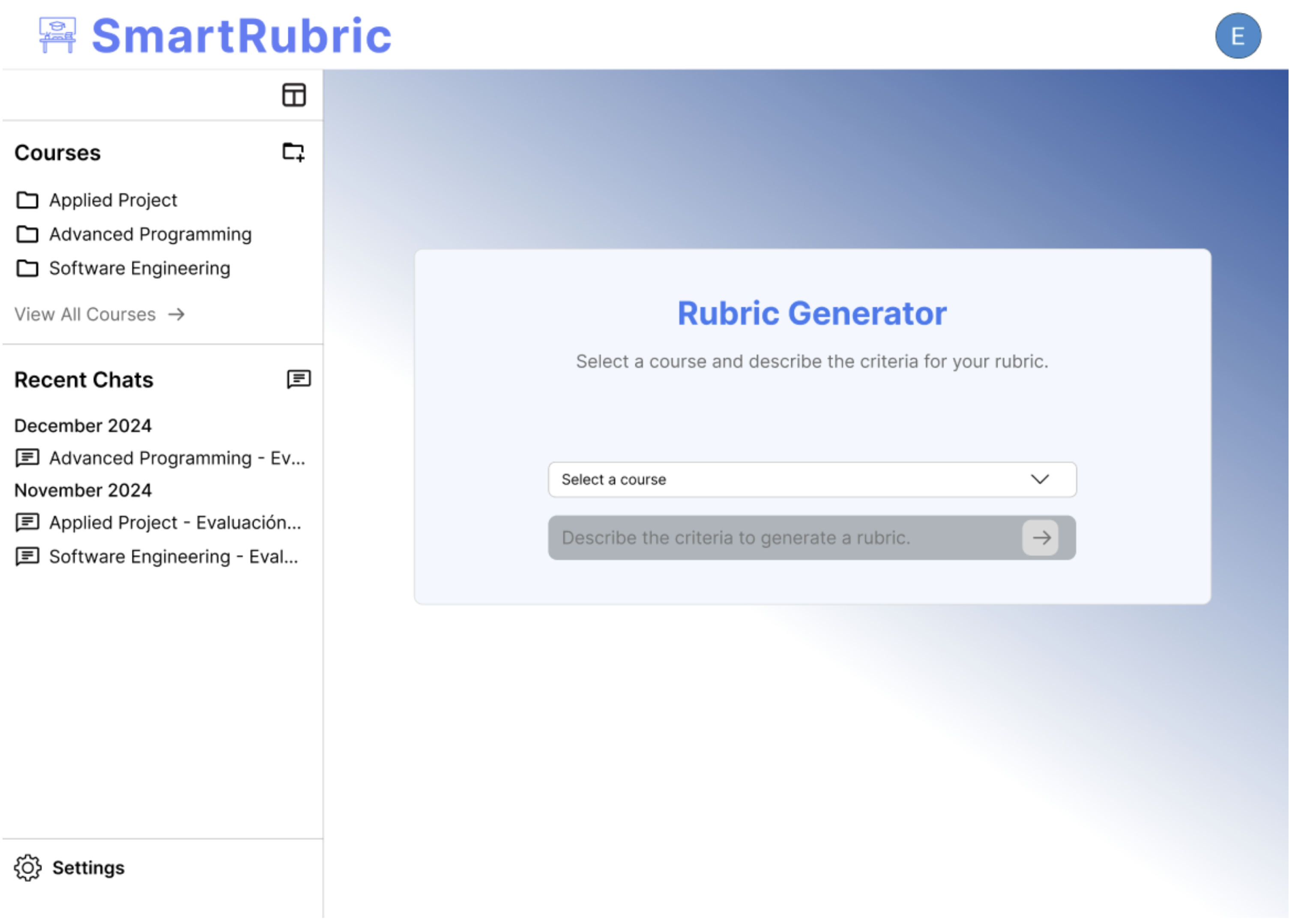

Figure 6 presents the SmartRubrics application’s home screen, which serves as the entry point to the system, clearly and concisely presenting the tool’s main features. This interface highlights the registration and login options, as well as a brief description of the system’s benefits aimed at facilitating the creation of assessment tools with artificial intelligence support. In addition, shortcuts to explanatory resources and technical documentation are provided, allowing users to quickly understand the purpose of the platform and its usefulness in higher education contexts. This first view serves an essential introductory function, providing a user-friendly and accessible experience for professors interested in improving their assessment practices through technology.

Once the user has logged into SmartRubrics, they are taken to a main view (see Figure 7) that acts as a central management panel. This interface displays recent chats with artificial intelligence, making it easy to resume previous conversations related to rubric generation. In addition, there is a prominent option to create a new assessment, facilitating the quick start of new instruments. From this screen, users can manage their rubrics in development, access previous versions, and continue working where they left off, reinforcing the continuity of the assessment process. This functionality centralises the system’s key tasks, providing an efficient and organised experience for both professors and assessors.

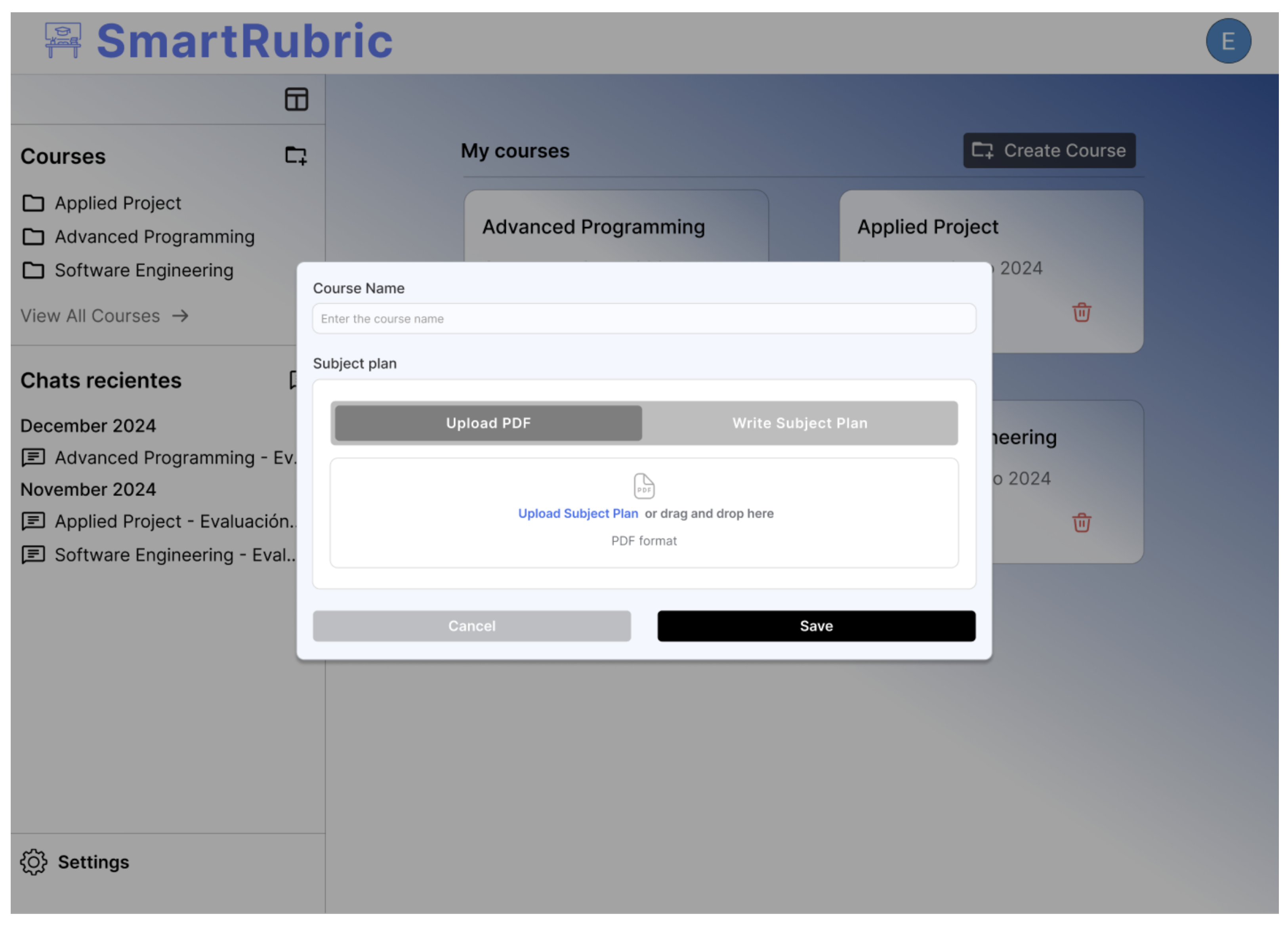

Figure 8 shows the course creation interface, which allows the user to configure the fundamental elements of a subject in order to generate rubrics aligned with the learning outcomes (LOs). This screen offers two main options: manual entry of the LOs, designed for users who wish to control the contents directly, and uploading a PDF file of the subject plan, from which the system can automatically extract the course objectives using text processing techniques. This functionality aims to reduce the time and effort required to initiate an assessment, while ensuring coherence between the assessment criteria and the expected learning outcomes. In this way, it facilitates an effective alignment between teaching planning and the design of assessment instruments.
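As an illustration of how learning outcomes might be pulled from a subject plan, the following Python sketch applies a simple heuristic to text that has already been extracted from the PDF (the PDF parsing step itself is omitted). The extraction technique actually used by SmartRubrics is not specified in the text, so the heading label, the list-item patterns and the `extract_learning_outcomes` function are assumptions made for this example.

```python
import re

# Assumed heading text; a real subject plan may use another label or language.
HEADING = re.compile(r"^\s*learning outcomes\s*:?\s*$", re.IGNORECASE)
# Matches list items such as "1. ...", "2) ...", "- ..." or "• ...".
ITEM = re.compile(r"^\s*(?:\d+[.)]|[-•*])\s+(.*\S)\s*$")


def extract_learning_outcomes(plan_text: str) -> list[str]:
    """Return the list items that follow a 'Learning outcomes' heading."""
    outcomes: list[str] = []
    in_section = False
    for line in plan_text.splitlines():
        if HEADING.match(line):
            in_section = True
            continue
        if not in_section:
            continue
        item = ITEM.match(line)
        if item:
            outcomes.append(item.group(1))
        elif line.strip() and outcomes:
            # The first non-list, non-blank line after the items ends the section.
            break
    return outcomes
```

A heuristic of this kind would fail on plans that describe outcomes in running prose, which is one reason a manual entry option remains useful alongside automatic extraction.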

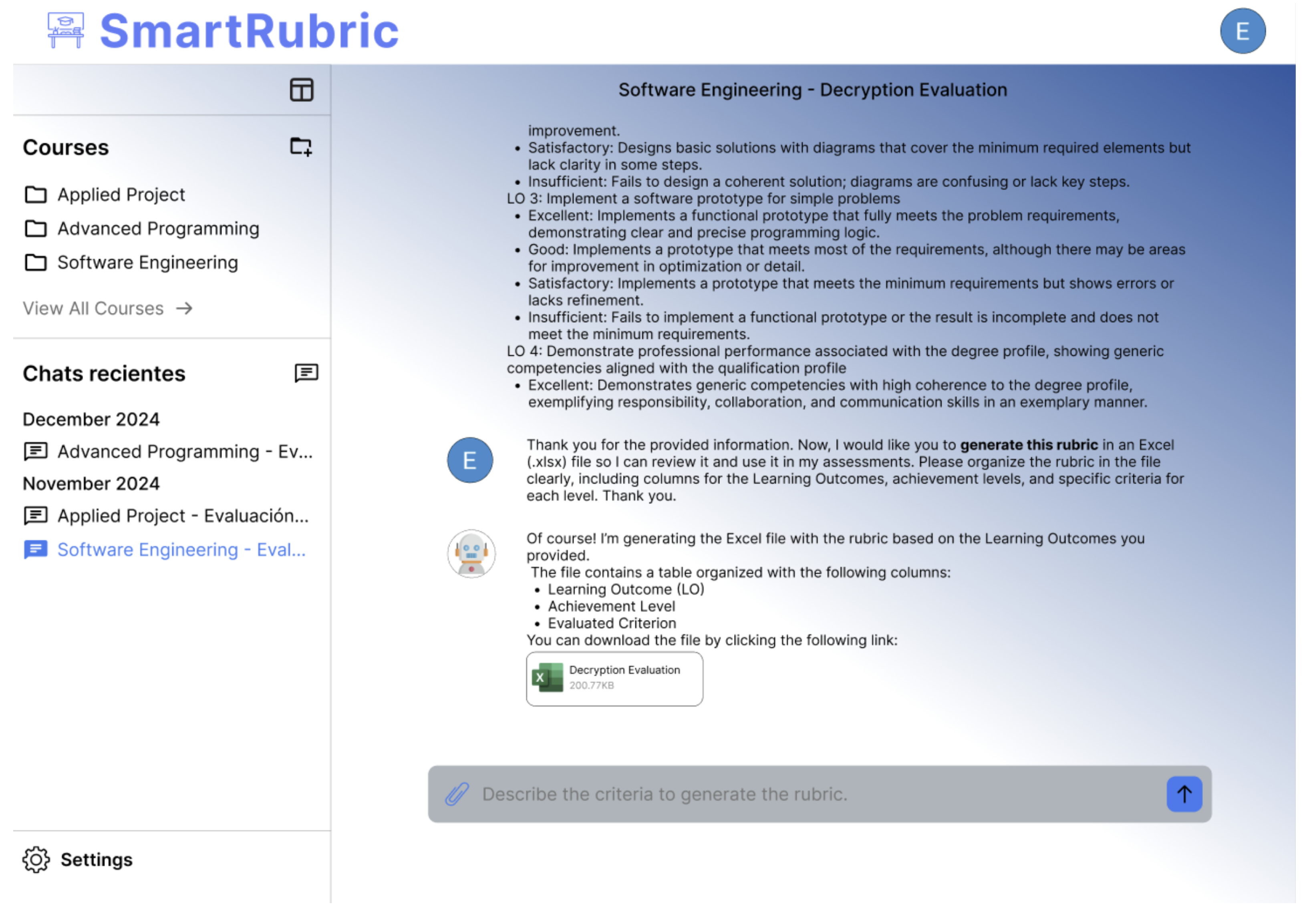

Figure 9 shows the artificial intelligence chat interface, designed to assist users in the creation and improvement of assessment rubrics. This tool not only allows for recommendations and adjustments to the assessment criteria, but also automatically generates an Excel file with the rubric structured according to the subject. This functionality facilitates the export, editing and direct application of the instrument in educational contexts, optimising the teaching work and promoting a more coherent and efficient evaluation.
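The tabular structure of an exported rubric can be sketched as follows. SmartRubrics produces an Excel file. To stay within the Python standard library, this example writes CSV instead, which spreadsheet applications can open, so it is a stand-in for the export step rather than a reproduction of it. The `rubric_to_csv` function and the layout (one row per criterion, one column per performance level) are assumptions.

```python
import csv
import io


def rubric_to_csv(criteria: dict[str, dict[str, str]], levels: list[str]) -> str:
    """Serialise a rubric as CSV: one row per criterion, one column per level."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Criterion", *levels])
    for criterion, descriptors in criteria.items():
        # Missing descriptors become empty cells that the professor can fill in.
        writer.writerow([criterion, *(descriptors.get(level, "") for level in levels)])
    return buffer.getvalue()
```

For example, calling `rubric_to_csv({"Teamwork": {"Basic": "Rarely contributes"}}, ["Basic", "Proficient"])` yields a header row followed by one row whose "Proficient" cell is left empty for later editing.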

Finally, Figure 10 displays the screen for selecting learning outcomes and competence areas. The system allows the user to choose, from among the previously entered or extracted learning outcomes, the ones that will be assessed in the rubric. In addition, it offers an interface to select the associated generic and specific competence areas that will serve as the basis for generating the assessment criteria. This functionality is key to ensuring that the rubrics created are aligned not only with the course objectives but also with the competency frameworks defined by the institution or professional standards. The interface is organised in sections that allow each learning outcome to be visualised and linked to the relevant evaluative dimensions, thus favouring a comprehensive and competency-focused assessment.
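The link between selected learning outcomes and competence areas can be represented with a small data structure, sketched below in Python. The internal data model of SmartRubrics is not described in the text, so the `CriterionSeed` record and both functions are hypothetical. They only illustrate how each outcome and competence area pair could become the starting point for one assessment criterion, and how outcomes left without any competence area could be flagged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CriterionSeed:
    """Hypothetical record pairing one learning outcome with one competence area."""
    learning_outcome: str
    competence_area: str
    kind: str  # "generic" or "specific"


def build_criterion_seeds(
    selected_outcomes: list[str],
    links: dict[str, list[str]],
    generic_areas: set[str],
) -> list[CriterionSeed]:
    """Create one seed per (outcome, competence area) pair chosen by the user."""
    seeds = []
    for outcome in selected_outcomes:
        for area in links.get(outcome, []):
            kind = "generic" if area in generic_areas else "specific"
            seeds.append(CriterionSeed(outcome, area, kind))
    return seeds


def unlinked_outcomes(selected_outcomes: list[str], links: dict[str, list[str]]) -> list[str]:
    """List selected outcomes that have no competence area assigned yet."""
    return [outcome for outcome in selected_outcomes if not links.get(outcome)]
```

Flagging unlinked outcomes before generation is one simple way to check that every assessed outcome is tied to at least one competence area.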

This concludes the demonstration of the main functionalities of the tool, showing its potential to support competency-based assessment processes efficiently and flexibly, aligned with the training requirements of engineering degrees.

7. Discussion

Analysing the tools, methodologies, and strategies used in assessing competencies in engineering careers allows for identifying key approaches to measuring student learning and skills development. The literature review classified studies into three broad categories: technological tools, structured methodologies, and innovative pedagogical strategies. This analysis is crucial to promote inclusive and quality education, aligned with the principles of SDG4.

Assessment tools, such as automated systems, self-assessment platforms, and artificial intelligence-based approaches, have proven effective in providing immediate feedback and improving learning monitoring. These technologies allow professors to identify difficulties early and adapt educational processes to students’ individual needs, optimising teaching and promoting accessible and personalised education.

Assessment methodologies, such as Bloom’s taxonomy, decision trees, and frameworks for IT competencies, offer structured and objective schemes that favour the coherence and validity of assessment processes, contributing to sustainable and accessible education, aligned with the needs of the labour market. Their implementation in engineering education helps ensure that students acquire the necessary competencies to effectively meet the challenges of the professional field.

Assessment strategies, such as hackathons, ideathons and context-based learning, have proven effective in assessing competencies in real environments and fostering hands-on learning. These strategies not only measure technical skills, but also promote the development of cross-cutting competencies, strengthening students’ ability to solve complex problems in real-world scenarios.

The study shows that combining these approaches can significantly enhance engineering education’s teaching and assessment processes. Integrating technological tools with well-defined methodologies and innovative strategies can improve the quality of assessment and facilitate the adaptation of educational programmes to the demands of the labour market.

Regarding the research questions, 13 of the 14 papers were found to present proposals to assess engineering competencies, reflecting an active interest in the academic community in developing effective assessment models. However, only six of these studies specifically address the assessment of generic competencies, focusing on transversal skills such as communication, teamwork, critical thinking and leadership. This indicates that, although there are efforts in this area, there is still a gap in research on assessing transversal skills in engineering education.

Similarly, the tools and evaluation techniques used in the selected proposals were analysed, revealing that the most commonly used approaches include ideathons, hackathons, context-based learning, self-assessment, and observational assessment. These strategies have proven effective in measuring technical and transversal competencies in active learning environments.

From a technological perspective, various digital tools and artificial intelligence systems applied to competency assessment in engineering were identified. Technologies such as machine learning, deep learning, digital assessment platforms, and adaptive feedback systems have been integrated into evaluation models to enhance the accuracy and personalisation of learning. These findings confirm the importance of technology in modernising competency assessment, enabling more efficient, scalable, and adaptive processes.

Based on these findings, several key implications can be drawn for research and educational practice in engineering:

The assessment of generic competencies requires greater attention in the literature, as it remains a less explored area compared to the assessment of technical competencies.

Active learning strategies, such as hackathons and ideathons, have proven effective in competence assessment, suggesting that their implementation could be further expanded in engineering programmes, favouring educational inclusion and the development of transversal competences.

Technology will continue to play a key role in competency assessment, with growing trends toward the use of artificial intelligence and automated analysis to enhance learning personalisation and feedback.

8. Conclusions

In conclusion, this study offers a dual contribution: a systematic and up-to-date synthesis of the literature on competency-based assessment in engineering, and the design of a technological tool—SmartRubrics—that responds directly to the shortcomings identified. By grounding the tool’s development in empirical evidence, we bridge the gap between research and practice, proposing a scalable, inclusive, and flexible solution whose impact on educational quality will be evaluated in future studies. Future research will focus on validating this tool in real educational contexts, measuring its impact on teaching practices and student learning outcomes.

This study introduces SmartRubrics, an AI-based tool designed to support and enhance competency-based assessment practices in engineering education. The development of the tool is grounded in diagnostic insights obtained through a systematic mapping study (SMS), which highlighted key limitations and research gaps in the current academic landscape.

SmartRubrics was conceived in response to the fragmentation of approaches and the limited empirical application of assessment models identified in the literature. The tool offers a structured and adaptable framework for rubric design, validation, and implementation, integrating AI functionalities to assist in aligning assessment criteria with learning outcomes, ensuring traceability, and facilitating continuous improvement.

Unlike many proposals that remain at the conceptual level (Implementation) or are applied in limited experimental contexts (Use), SmartRubrics aims to bridge the gap between theoretical models and practical deployment. Its architecture allows for interoperability with educational platforms, supports automated feedback processes, and is designed to promote both transparency and scalability in competency evaluation.

The underlying SMS revealed three important patterns: (1) a concentration of theoretical contributions with low transition to practical use; (2) a thematic emphasis on assessment tools, transversal skills, and learning outcomes; and (3) a growing research interest in the past five years, without yet reaching maturity in standardised practices. These findings validated the need for a robust, AI-supported solution such as SmartRubrics.

While the current version of the tool remains in a developmental stage, its design is informed by both empirical gaps and practical requirements observed in engineering education. A key strength of SmartRubrics lies in its potential to unify diverse assessment criteria, support inclusive and context-sensitive evaluation practices, and offer meaningful analytics to educators and institutions.

As with any emerging solution, limitations remain. The tool has yet to be trialled in fully authentic educational settings, and its impact on assessment quality, student learning, and institutional decision-making is still to be evaluated. Moreover, challenges such as data protection, ethical use of AI, and pedagogical alignment must be addressed during its adoption.

Future work will focus on piloting SmartRubrics across varied educational environments, including undergraduate and postgraduate engineering programmes, to examine its adaptability to diverse institutional contexts and curricular designs. These pilot studies will involve collaboration with academic staff to integrate the tool into actual assessment workflows, allowing for the collection of both quantitative and qualitative data on its usability, reliability, and pedagogical impact.

In particular, forthcoming research will investigate how SmartRubrics influences the clarity and consistency of rubrics, the quality of feedback provided to students, and the alignment between assessment criteria and learning outcomes. Evaluations will also consider its impact on student engagement and perceptions of fairness and transparency in assessment.

Additionally, efforts will be made to explore the potential of machine learning techniques to enhance the tool’s capacity for rubric recommendation, refinement, and semi-automated generation based on historical assessment data. This line of work aims to reduce academic workload while maintaining rigour and promoting formative feedback practices.

Ethical and regulatory aspects will also be addressed, including compliance with data protection regulations and the development of governance frameworks for the responsible use of AI in educational assessment. Finally, future iterations of SmartRubrics will aim to support multilingual and interdisciplinary contexts, facilitating broader adoption beyond engineering education.