Navigating the Last Mile: A Stakeholder Analysis of Delivery Robot Teleoperation

Abstract

1. Introduction

1.1. Last-Mile Delivery Robots

1.2. Remote Operation

1.2.1. Teleoperation of Autonomous Agents (Robots and AVs)

1.2.2. Teleoperation and the LMDR Ecosystem

1.3. Research Objectives

2. Methodology

2.1. Participants

2.2. Interview Protocol

2.3. Data Coding and Thematic Analysis

3. Findings

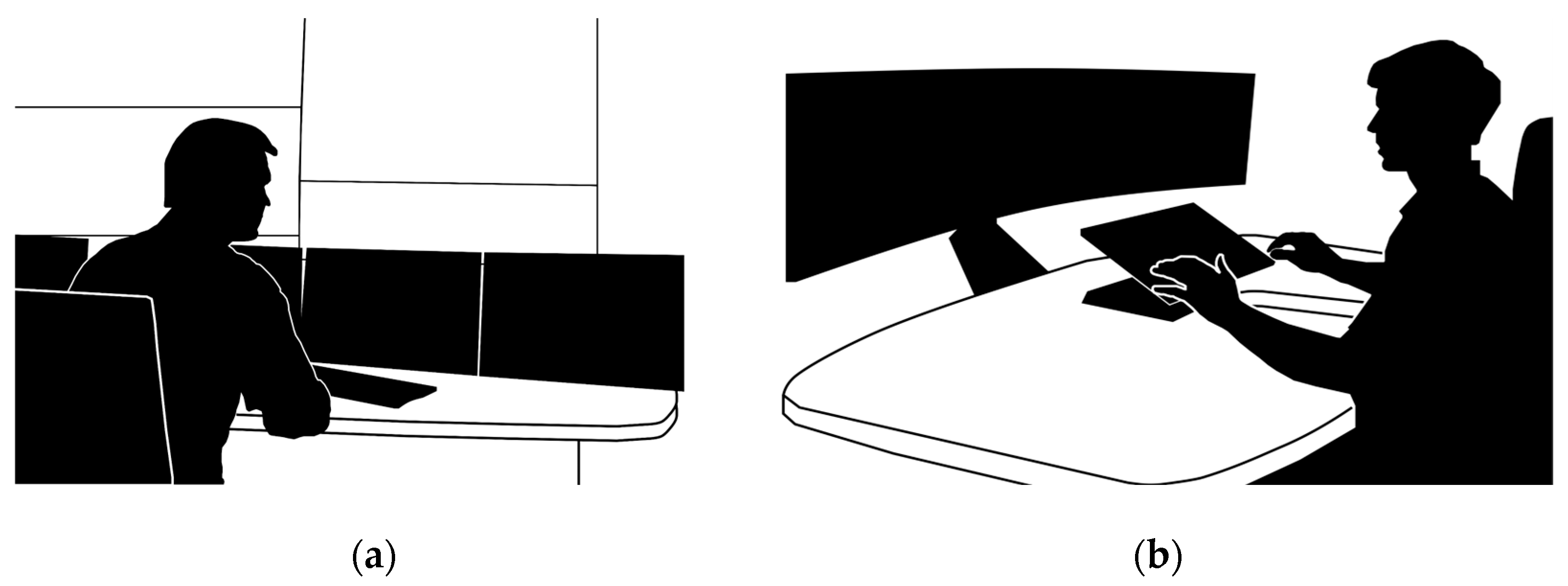

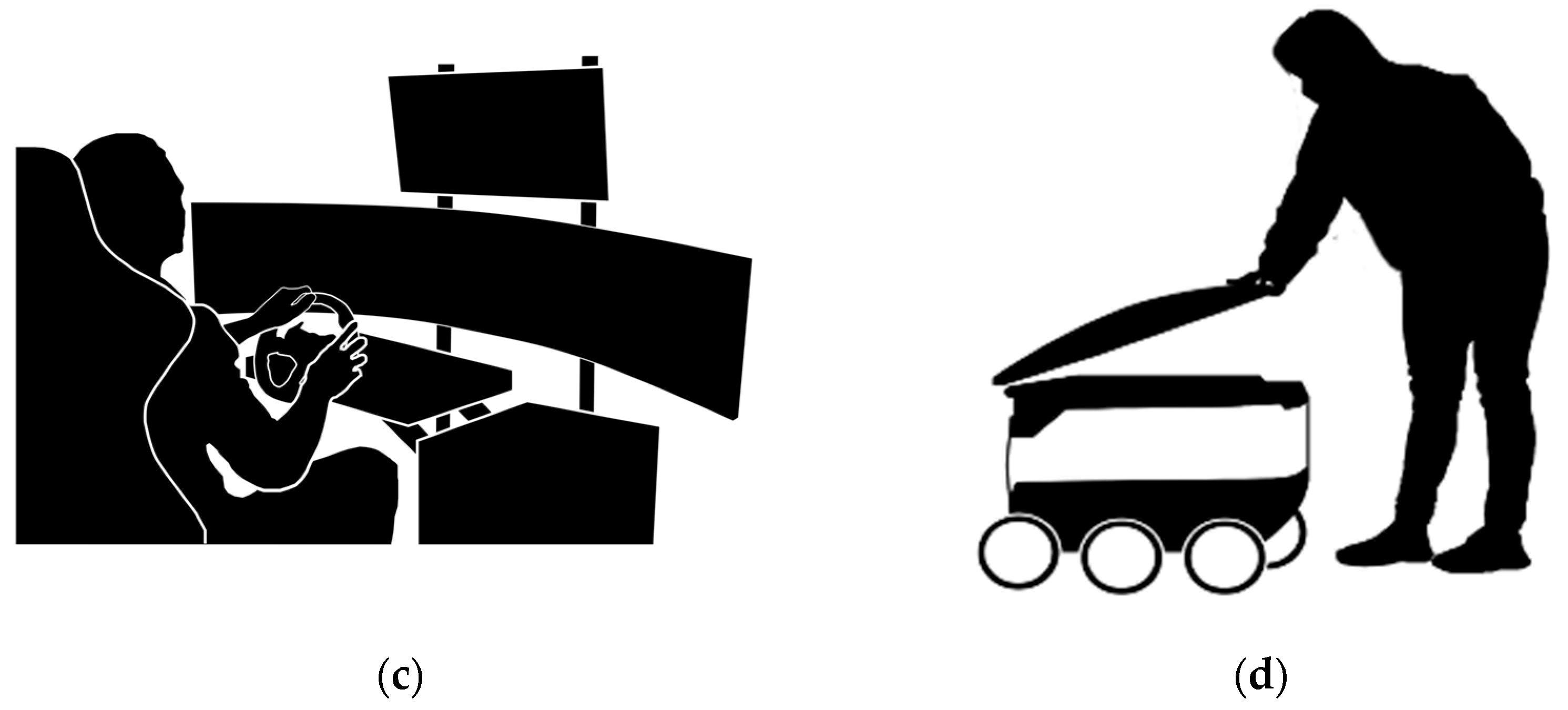

3.1. Teleoperation Modes

3.1.1. Remote Monitoring

3.1.2. Remote Intervention: Tele-Assistance

3.1.3. Remote Intervention: Tele-Driving

3.1.4. Field Team

3.1.5. Response Procedures

3.2. Remote Operation Centers

3.2.1. ROC Structure

3.2.2. Robots-Operator Ratio

3.2.3. Allocating Teleoperation Calls

3.2.4. ROC Location

3.2.5. ROs’ UI

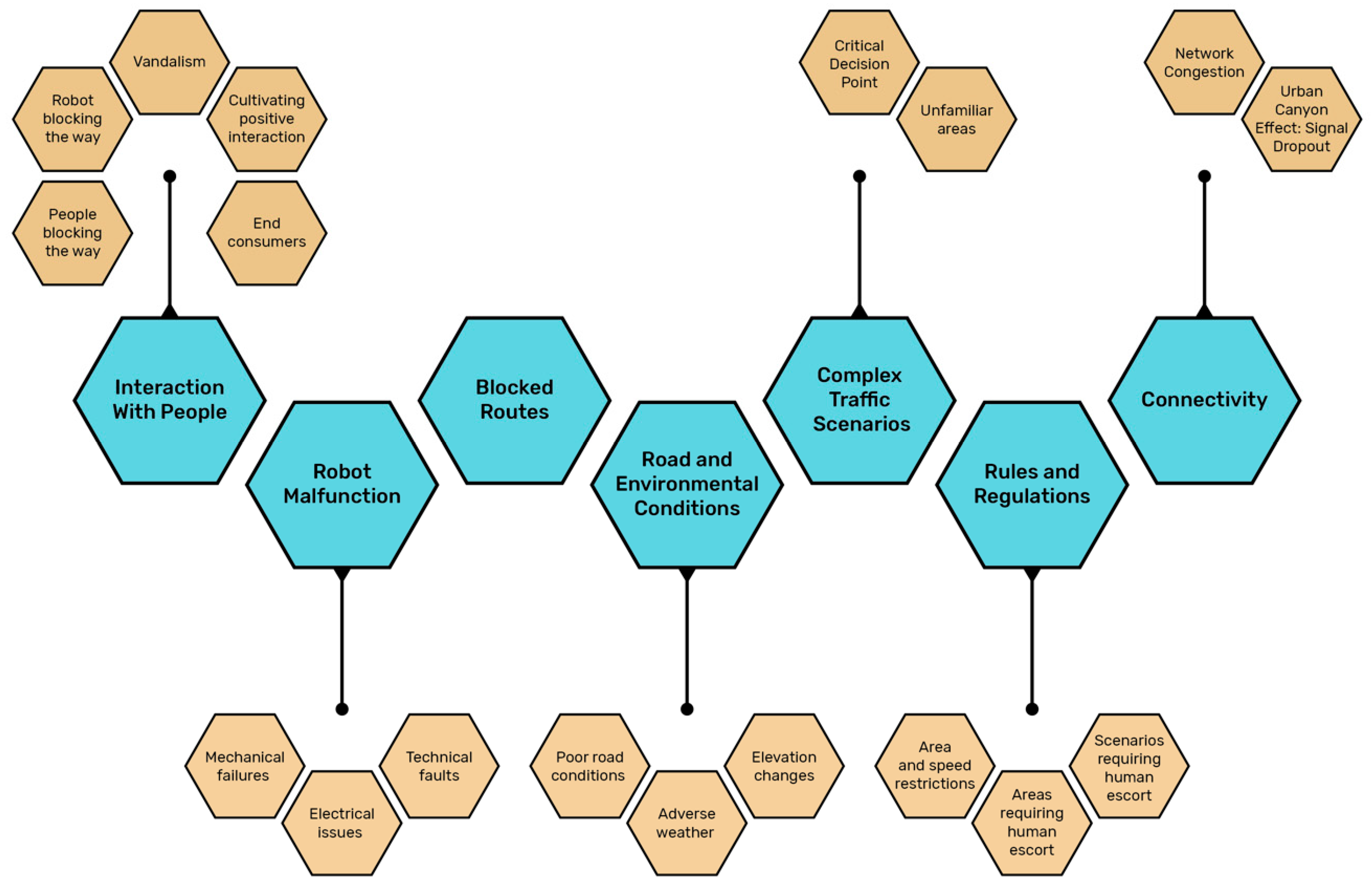

3.3. Key Intervention Scenarios

3.3.1. Interaction with People

Negative Response: Intentional Interaction

Intentional Interaction: Positive Intention

Robot as an Obstacle/Impact on Human

RO’s Role Within the Interaction

3.3.2. Connectivity

3.3.3. Blocked Routes

3.3.4. Road and Environmental Conditions

3.3.5. Complex Traffic Scenarios

3.3.6. Rules and Regulations

3.3.7. Robot Malfunction

4. Discussion

- Operational and Financial: Issues such as delays, incomplete deliveries, disrupted planning, repair of damaged robots, the need for substitutions, and customer compensation.

- Health and Safety: Potential harm caused to road users.

- Reputational: Impacts on public perception and potential loss of customer trust.

4.1. Limitations

4.2. Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LMDR | Last-Mile Delivery Robot |
| RO | Remote Operator |
| UI | User Interface |
| HMI | Human–Machine Interface |
| AV | Autonomous Vehicle |
| IMU | Inertial Measurement Unit |
| GPS | Global Positioning System |
| ROC | Remote Operation Center |

References

- Wang, Y.; Zhang, D.; Liu, Q.; Shen, F.; Lee, L.H. Towards enhancing the last-mile delivery: An effective crowd-tasking model with scalable solutions. Transp. Res. Part E Logist. Transp. Rev. 2016, 93, 279–293.

- Engesser, V.; Rombaut, E.; Vanhaverbeke, L.; Lebeau, P. Autonomous Delivery Solutions for Last-Mile Logistics Operations: A Literature Review and Research Agenda. Sustainability 2023, 15, 2774.

- Mohammad, W.A.; Nazih Diab, Y.; Elomri, A.; Triki, C. Innovative solutions in last mile delivery: Concepts, practices, challenges, and future directions. Supply Chain Forum Int. J. 2023, 24, 151–169.

- Fortune Business Insights. Autonomous Last Mile Delivery Market Size, Share & Industry Analysis 2024–2032. FBI105598. January 2025. Available online: https://www.fortunebusinessinsights.com/autonomous-last-mile-delivery-market-105598 (accessed on 26 January 2025).

- Grand View Research. Autonomous Last Mile Delivery Market Size, Share & Trends Analysis Report, 2023–2030. Grand View Research, GVR-4-68039-204-2. Available online: https://www.grandviewresearch.com/industry-analysis/autonomous-last-mile-delivery-market (accessed on 26 January 2025).

- Sahay, R.; Wolff, C. Pandemic, Parcels and Public Vaccination Envisioning the Next Normal for the Last-Mile Ecosystem. World Economic Forum, Insight Report. April 2021. Available online: https://www3.weforum.org/docs/WEF_Pandemic_Parcels_and_Public_Vaccination_report_2021.pdf (accessed on 26 January 2025).

- Lemardelé, C.; Pinheiro Melo, S.; Cerdas, F.; Herrmann, C.; Estrada, M. Life-cycle analysis of last-mile parcel delivery using autonomous delivery robots. Transp. Res. Part D Transp. Environ. 2023, 121, 103842.

- Chen, C.; Demir, E.; Huang, Y.; Qiu, R. The adoption of self-driving delivery robots in last mile logistics. Transp. Res. Part E Logist. Transp. Rev. 2021, 146, 102214.

- De Maio, A.; Ghiani, G.; Laganà, D.; Manni, E. Sustainable last-mile distribution with autonomous delivery robots and public transportation. Transp. Res. Part C Emerg. Technol. 2024, 163, 104615.

- Hoffmann, T.; Prause, G. On the Regulatory Framework for Last-Mile Delivery Robots. Machines 2018, 6, 33.

- Fordham, C.; Fowler, C.; Kemp, I.; Williams, D. GATEway Safety and Insurance Ensuring Safety for Autonomous Vehicle Trials. TRL UK, PPR859. September 2018. Available online: https://www.trl.co.uk/Uploads/TRL/Documents/D2.2_-Safety-and-Insurance_PPR859_Optimized.pdf (accessed on 26 January 2025).

- Lee, J.S.; Ham, Y.; Park, H.; Kim, J. Challenges, tasks, and opportunities in teleoperation of excavator toward human-in-the-loop construction automation. Autom. Constr. 2022, 135, 104119.

- Moniruzzaman, M.; Rassau, A.; Chai, D.; Islam, S.M.S. Teleoperation methods and enhancement techniques for mobile robots: A comprehensive survey. Robot. Auton. Syst. 2022, 150, 103973.

- Salvini, P.; Reinmund, T.; Hardin, B.; Grieman, K.; Ten Holter, C.; Johnson, A.; Kunze, L.; Winfield, A.; Jirotka, M. Human involvement in autonomous decision-making systems. Lessons learned from three case studies in aviation, social care and road vehicles. Front. Polit. Sci. 2023, 5, 1238461.

- Pedestrian and Bicycle Information Center. Sharing Spaces with Robots: The Basics of Personal Delivery Devices; UNC Highway Safety Research Center: Chapel Hill, NC, USA, 2021; Available online: https://www.pedbikeinfo.org/downloads/PBIC_InfoBrief_SharingSpaceswithRobots.pdf (accessed on 23 March 2025).

- Buldeo Rai, H.; Touami, S.; Dablanc, L. Autonomous e-commerce delivery in ordinary and exceptional circumstances. The French case. Res. Transp. Bus. Manag. 2022, 45, 100774.

- Clamann, M.; Podsiad, K.; Cover, A. Personal Delivery Devices (PDDs) Legislative Tracker. Available online: https://www.pedbikeinfo.org/resources/resources_details.php?id=5314 (accessed on 1 May 2023).

- Srinivas, S.; Ramachandiran, S.; Rajendran, S. Autonomous robot-driven deliveries: A review of recent developments and future directions. Transp. Res. Part E Logist. Transp. Rev. 2022, 165, 102834.

- Plank, M.; Lemardelé, C.; Assmann, T.; Zug, S. Ready for robots? Assessment of autonomous delivery robot operative accessibility in German cities. J. Urban Mobil. 2022, 2, 100036.

- Thiel, M.; Ziegenbein, J.; Blunder, N.; Schrick, M.; Kreutzfeldt, J. From Concept to Reality: Developing Sidewalk Robots for Real-World Research and Operation in Public Space. Logist. J. Proc. 2023, 19, 1–19.

- Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2000, 30, 286–297.

- Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. J3016_202104. April 2021. Available online: https://www.sae.org/standards/content/j3016_202104/ (accessed on 6 October 2024).

- Bogdoll, D.; Orf, S.; Töttel, L.; Zöllner, J.M. Taxonomy and Survey on Remote Human Input Systems for Driving Automation Systems. In Proceedings of the Future of Information and Communication Conference, Online, 3–4 March 2022; Volume 439, pp. 94–108.

- Caldwell, D.G.; Reddy, K.; Kocak, O.; Wardle, A. Sensory requirements and performance assessment of tele-presence controlled robots. In Proceedings of the IEEE International Conference on Robotics and Automation, Minneapolis, MN, USA, 22–28 April 1996; Volume 2, pp. 1375–1380.

- Li, Y.Y.; Fan, W.H.; Liu, Y.H.; Cai, X.P. Teleoperation of robots via the mobile communication networks. In Proceedings of the 2005 IEEE International Conference on Robotics and Biomimetics - ROBIO, Hong Kong, China, 5–9 July 2005; pp. 670–675.

- Luo, J.; He, W.; Yang, C. Combined perception, control, and learning for teleoperation: Key technologies, applications, and challenges. Cogn. Comput. Syst. 2020, 2, 33–43.

- Sheridan, T.B. Human–Robot Interaction: Status and Challenges. Hum. Factors J. Hum. Factors Ergon. Soc. 2016, 58, 525–532.

- Sheridan, T.B. Space teleoperation through time delay: Review and prognosis. IEEE Trans. Robot. Autom. 1993, 9, 592–606.

- Skaar, S.B.; Ruoff, C.F. (Eds.) Teleoperation and Robotics in Space; American Institute of Aeronautics and Astronautics: Washington, DC, USA, 1994.

- Isaacs, J.; Knoedler, K.; Herdering, A.; Beylik, M.; Quintero, H. Teleoperation for Urban Search and Rescue Applications. Field Robot. 2022, 2, 1177–1190.

- Stopforth, R.; Holtzhausen, S.; Bright, G.; Tlale, N.S.; Kumile, C.M. Robots for Search and Rescue Purposes in Urban and Underwater Environments - A survey and comparison. In Proceedings of the 2008 15th International Conference on Mechatronics and Machine Vision in Practice, Auckland, New Zealand, 2–4 December 2008; pp. 476–480.

- Waharte, S.; Trigoni, N. Supporting Search and Rescue Operations with UAVs. In Proceedings of the 2010 International Conference on Emerging Security Technologies, Canterbury, UK, 6–7 September 2010; pp. 142–147.

- Adamides, G.; Katsanos, C.; Parmet, Y.; Christou, G.; Xenos, M.; Hadzilacos, T.; Edan, Y. HRI usability evaluation of interaction modes for a teleoperated agricultural robotic sprayer. Appl. Ergon. 2017, 62, 237–246.

- Murakami, N.; Ito, A.; Will, J.D.; Steffen, M.; Inoue, K.; Kita, K.; Miyaura, S. Development of a teleoperation system for agricultural vehicles. Comput. Electron. Agric. 2008, 63, 81–88.

- Mining Editor. The Rise of Remote Operating Centres in Mining. Australian Mine Safety Journal. Available online: https://www.amsj.com.au/the-rise-of-remote-operating-centres-in-mining/ (accessed on 26 January 2025).

- Harnett, B.M.; Doarn, C.R.; Rosen, J.; Hannaford, B.; Broderick, T.J. Evaluation of Unmanned Airborne Vehicles and Mobile Robotic Telesurgery in an Extreme Environment. Telemed. E-Health 2008, 14, 539–544.

- Marescaux, J.; Leroy, J.; Gagner, M.; Rubino, F.; Mutter, D.; Vix, M.; Butner, S.E.; Smith, M.K. Transatlantic robot-assisted telesurgery. Nature 2001, 413, 379–380.

- Deckers, L.; Madadi, B.; Verduijn, T. Tele-operated driving in logistics as a transition to full automation: An exploratory study and research agenda. In Proceedings of the 27th ITS World Congress, Hamburg, Germany, 11–15 October 2021; pp. 11–15.

- Meir, A.; Grimberg, E.; Musicant, O. The human-factors’ challenges of (tele)drivers of Autonomous Vehicles. Ergonomics 2024, 68, 947–967.

- Tener, F.; Lanir, J. Driving from a Distance: Challenges and Guidelines for Autonomous Vehicle Teleoperation Interfaces. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 30 April–5 May 2022; ACM: New York, NY, USA, 2022; pp. 1–13.

- Ollero, A.; Tognon, M.; Suarez, A.; Lee, D.; Franchi, A. Past, Present, and Future of Aerial Robotic Manipulators. IEEE Trans. Robot. 2022, 38, 626–645.

- Sheridan, T.B. Teleoperation, telerobotics and telepresence: A progress report. Control Eng. Pract. 1995, 3, 205–214.

- Siciliano, B.; Khatib, O. (Eds.) Springer Handbook of Robotics; Springer International Publishing: Cham, Switzerland, 2016.

- Boker, A.; Lanir, J. Bird’s Eye View Effect on Situational Awareness in Remote Driving. In Proceedings of the Adjunct 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ingolstadt, Germany, 18–21 September 2023; ACM: New York, NY, USA, 2023; pp. 36–41.

- Steinfeld, A.; Fong, T.; Kaber, D.; Lewis, M.; Scholtz, J.; Schultz, A.; Goodrich, M. Common metrics for human-robot interaction. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; ACM: New York, NY, USA, 2006; pp. 33–40.

- Chen, J.Y.; Haas, E.C.; Barnes, M.J. Human Performance Issues and User Interface Design for Teleoperated Robots. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2007, 37, 1231–1245.

- Durantin, G.; Gagnon, J.-F.; Tremblay, S.; Dehais, F. Using near infrared spectroscopy and heart rate variability to detect mental overload. Behav. Brain Res. 2014, 259, 16–23.

- Van Erp, J.B.; Padmos, P. Image parameters for driving with indirect viewing systems. Ergonomics 2003, 46, 1471–1499.

- Tener, F.; Lanir, J. Devising a High-Level Command Language for the Teleoperation of Autonomous Vehicles. Int. J. Hum.-Comput. Interact. 2024, 41, 5299–5315.

- Rea, D.J.; Seo, S.H. Still Not Solved: A Call for Renewed Focus on User-Centered Teleoperation Interfaces. Front. Robot. AI 2022, 9, 704225.

- Chen, J.C.Y.; Barnes, M.J.; Harper-Sciarini, M. Supervisory Control of Multiple Robots: Human-Performance Issues and User-Interface Design. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2011, 41, 435–454.

- Cooke, N.J. Human Factors of Remotely Operated Vehicles. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2006, 50, 166–169.

- Alverhed, E.; Hellgren, S.; Isaksson, H.; Olsson, L.; Palmqvist, H.; Flodén, J. Autonomous last-mile delivery robots: A literature review. Eur. Transp. Res. Rev. 2024, 16, 4.

- Nikkei, A. Allowed on Sidewalks, South Korean Delivery Robots Poised to Take Off. Available online: https://kr-asia.com/allowed-on-sidewalks-south-korean-delivery-robots-poised-to-take-off (accessed on 1 February 2024).

- Mike, O. Real Life Robotics Debuts Delivery Robot at Toronto Zoo. Available online: https://www.therobotreport.com/real-life-robotics-debuts-delivery-robot-toronto-zoo/ (accessed on 11 September 2024).

- Lim, X.-J.; Chang, J.Y.-S.; Cheah, J.-H.; Lim, W.M.; Kraus, S.; Dabić, M. Out of the way, human! Understanding post-adoption of last-mile delivery robots. Technol. Forecast. Soc. Change 2024, 201, 123242.

- Ostermeier, M.; Heimfarth, A.; Hübner, A. Cost-optimal truck-and-robot routing for last-mile delivery. Networks 2022, 79, 364–389.

- Patel, S. Human Interaction with Autonomous Delivery Robots: Navigating the Intersection of Psychological Acceptance and Societal Integration. In Proceedings of the 2024 AHFE International Conference on Human Factors in Design, Engineering, and Computing, Honolulu, HI, USA, 20–24 April 2024.

- Yu, X.; Hoggenmüller, M.; Tran, T.T.M.; Wang, Y.; Tomitsch, M. Understanding the Interaction between Delivery Robots and Other Road and Sidewalk Users: A Study of User-generated Online Videos. ACM Trans. Hum.-Robot Interact. 2024, 13, 1–32.

- Robinson, O.C. Sampling in Interview-Based Qualitative Research: A Theoretical and Practical Guide. Qual. Res. Psychol. 2014, 11, 25–41.

- Church, J. Funding Rounds Explained: A Guide for Startups. Medium. 2024. Available online: https://jameschurch.medium.com/funding-rounds-explained-a-guide-for-startups-7d2ba23a4424 (accessed on 16 June 2025).

- Dashboard|Tracxn. Available online: https://platform.tracxn.com/a/dashboard (accessed on 16 June 2025).

- GlobeNewswire. Delivery Robots Market Report 2025: Bear Robotics, Starship Technologies, Amazon Robotics, and Kiwibot Lead the Space. March 2025. Available online: https://www.globenewswire.com/news-release/2025/03/25/3048871/0/en/Delivery-Robots-Market-Report-2025-Bear-Robotics-Starship-Technologies-Amazon-Robotics-and-Kiwibot-Lead-the-Space.html (accessed on 15 June 2025).

- MacQueen, K.M.; McLellan, E.; Kay, K.; Milstein, B. Codebook Development for Team-Based Qualitative Analysis. CAM J. 1998, 10, 31–36.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Guest, G.; Bunce, A.; Johnson, L. How Many Interviews Are Enough? An Experiment with Data Saturation and Variability. Field Methods 2006, 18, 59–82.

- Mutzenich, C.; Durant, S.; Helman, S.; Dalton, P. Updating our understanding of situation awareness in relation to remote operators of autonomous vehicles. Cogn. Res. Princ. Implic. 2021, 6, 9.

- Chen, J.C.Y.; Barnes, M.J. Supervisory Control of Multiple Robots: Effects of Imperfect Automation and Individual Differences. Hum. Factors J. Hum. Factors Ergon. Soc. 2012, 54, 157–174.

- Wong, J.C. San Francisco Sours on Rampant Delivery Robots: Not Every Innovation Is Great. The Guardian, 10 December 2017.

- Kettwich, C.; Schrank, A.; Oehl, M. Teleoperation of Highly Automated Vehicles in Public Transport: User-Centered Design of a Human-Machine Interface for Remote-Operation and Its Expert Usability Evaluation. Multimodal Technol. Interact. 2021, 5, 26.

- Tener, F.; Lanir, J. Guiding, not Driving: Design and Evaluation of a Command-Based User Interface for Teleoperation of Autonomous Vehicles. arXiv 2025, arXiv:2502.00750.

- Majstorović, D.; Hoffmann, S.; Pfab, F.; Schimpe, A.; Wolf, M.M.; Diermeyer, F. Survey on teleoperation concepts for automated vehicles. In Proceedings of the 2022 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Prague, Czech Republic, 9–12 October 2022; pp. 1290–1296.

- Naujoks, F.; Forster, Y.; Wiedemann, K.; Neukum, A. A Human-Machine Interface for Cooperative Highly Automated Driving. In Advances in Human Aspects of Transportation; Stanton, N.A., Landry, S., Di Bucchianico, G., Vallicelli, A., Eds.; Advances in Intelligent Systems and Computing; Springer International Publishing: Cham, Switzerland, 2017; Volume 484, pp. 585–595.

- Feiler, J.; Hoffmann, S.; Diermeyer, F. Concept of a Control Center for an Automated Vehicle Fleet. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

- Ikeda, S.; Ito, T.; Sakamoto, M. Discovering the efficient organization structure: Horizontal versus vertical. Artif. Life Robot. 2010, 15, 478–481.

- Lee, S. The myth of the flat start-up: Reconsidering the organizational structure of start-ups. Strateg. Manag. J. 2022, 43, 58–92.

- Neumeier, S.; Wintersberger, P.; Frison, A.-K.; Becher, A.; Facchi, C.; Riener, A. Teleoperation: The Holy Grail to Solve Problems of Automated Driving? Sure, but Latency Matters. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21–25 September 2019; ACM: New York, NY, USA, 2019; pp. 186–197.

- Luck, J.P.; McDermott, P.L.; Allender, L.; Russell, D.C. An investigation of real world control of robotic assets under communication latency. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; ACM: New York, NY, USA, 2006; pp. 202–209.

- Chiou, M.; Hawes, N.; Stolkin, R. Mixed-initiative Variable Autonomy for Remotely Operated Mobile Robots. ACM Trans. Hum.-Robot Interact. 2021, 10, 37.

- Tener, F.; Lanir, J. Investigating intervention road scenarios for teleoperation of autonomous vehicles. Multimed. Tools Appl. 2024, 83, 61103–61119.

- Mortimer, M.; Horan, B.; Seyedmahmoudian, M. Building a Relationship between Robot Characteristics and Teleoperation User Interfaces. Sensors 2017, 17, 587.

- Nielsen, J.; Molich, R. Heuristic evaluation of user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems Empowering People - CHI ’90, Seattle, WA, USA, 1–5 April 1990; ACM Press: New York, NY, USA, 1990; pp. 249–256.

- Shneiderman, B.; Plaisant, C. Designing the User Interface: Strategies for Effective Human-Computer Interaction, 4th ed.; Pearson/Addison-Wesley: Boston, MA, USA, 2005.

- Aven, T. Risk assessment and risk management: Review of recent advances on their foundation. Eur. J. Oper. Res. 2016, 253, 1–13.

- Pillay, M. The Utility of M-31000 for Managing Health and Safety Risks: A Pilot Investigation. In Occupational Health and Safety - A Multi-Regional Perspective; Pillay, M., Tuck, M., Eds.; InTech: Houston, TX, USA, 2018.

- ISO 31000:2018; Risk Management—Guidelines. International Organization for Standardization: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/65694.html (accessed on 16 June 2025).

- Ng, Y.J.; Yeo, M.S.K.; Ng, Q.B.; Budig, M.; Muthugala, M.A.V.J.; Samarakoon, S.M.B.P.; Mohan, R.E. Application of an adapted FMEA framework for robot-inclusivity of built environments. Sci. Rep. 2022, 12, 3408.

- Fuentes-Bargues, J.L.; Bastante-Ceca, M.J.; Ferrer-Gisbert, P.S.; González-Cruz, M.C. Study of Major-Accident Risk Assessment Techniques in the Environmental Impact Assessment Process. Sustainability 2020, 12, 5770.

- Sweller, J. Cognitive Load Theory. In Psychology of Learning and Motivation; Elsevier: Amsterdam, The Netherlands, 2011; Volume 55, pp. 37–76.

- Pignatiello, G.A.; Martin, R.J.; Hickman, R.L. Decision fatigue: A conceptual analysis. J. Health Psychol. 2020, 25, 123–135.

- Geng, Y.; Zhang, D.; Li, P.; Akcin, O.; Tang, A.; Chinchali, S.P. Decentralized Sharing and Valuation of Fleet Robotic Data. In Proceedings of the 5th Conference on Robot Learning, London, UK, 8–11 November 2021; Volume 164, pp. 1795–1800. Available online: https://proceedings.mlr.press/v164/geng22a.html (accessed on 23 May 2025).

- Grimberg, E. Acceptability of Autonomous Vehicles: Does Contextual Consideration Make a Difference? Ph.D. Thesis, The University of Queensland, St Lucia, Australia, 23 September 2022.

- Goodrich, M.A.; Schultz, A.C. Human-Robot Interaction: A Survey. Found. Trends® Hum.-Comput. Interact. 2007, 1, 203–275.

- Boin, A.; Hart, P. Organising for Effective Emergency Management: Lessons from Research. Aust. J. Public Adm. 2010, 69, 357–371.

- Hao, X.; Demir, E.; Eyers, D. Exploring collaborative decision-making: A quasi-experimental study of human and Generative AI interaction. Technol. Soc. 2024, 78, 102662.

- Gehrke, S.R.; Phair, C.D.; Russo, B.J.; Smaglik, E.J. Observed sidewalk autonomous delivery robot interactions with pedestrians and bicyclists. Transp. Res. Interdiscip. Perspect. 2023, 18, 100789.

| Participant | Company Products/Services | Role | Deployment Stage/Fleet Size [62] |
|---|---|---|---|
| P1 | European project to pilot and validate a fully autonomous last-mile logistics system | PhD candidate and project manager | Experimental pilot (few experimental robots) |
| P2 | Korean AI-powered outdoor robot company | Managing Director | Startup–Early stages (unfunded) |
| P3 | Software company that offers teleoperation safety solutions and remote operations for logistics vehicles | Ex Co-Founder & COO | Startup–Series B, Soonicorn |
| P4 | Software company that offers teleoperation safety solutions and remote operations for logistics vehicles | Chief Product Officer | Startup–Series B, Soonicorn |
| P5 | Technology company that develops AVs and robots tailored for delivery uses | Head of Marketing and Public Relations | NA * |
| P6 | Autonomous delivery robots | Business development | Startup–Series A |
| P7 | A company that develops teleoperation software for AVs | Chief Operating Officer (Remote operation) | Startup–Series A |
| P8 | AI navigation for delivery robots | Co-Founder and CEO | Startup–Series B |
| P9 | AI-driven drone and robot operating system | Co-Founder & CXO | Startup–Series B |
| P10 | Interactive urban service robots | Operation manager | Startup–Seed |
| P11 | Solutions for autonomous applications | VP Business Development | Startup–Series B |
| P12 | Design and innovation consultancy which was responsible for designing a robotic delivery vehicle | President/Principal designer | Represents a Series C company, one of the top 5 in the category [63] |
| P13 | Robotics software development platform | CEO | Startup–Series A |
| P14 | A company that develops LMDRs | Head of Fleet Quality | Startup–Series A (fleet size: >1000 robots, Soonicorn) |
| P15 | Innovation and investment arm of a large automotive company dealing with AVs | Open Innovation Manager | Open innovation center of an international motor group |

| # | UI Component | Purpose |
|---|---|---|
| 1 | Notifications/alerts | Shows the RO notifications of various types: intervention reason, latency, mission, object identification, maintenance, etc. (P9, P10, P13). Example alerts include “human alert” and “dynamic obstacle alert.” |
| 2 | Battery level status | Shows the RO how much energy is left (P14). |
| 3 | Network quality status | Shows the RO the network quality at any point (P3, P9). |
| 4 | Speed | Shows the LMDR’s speed, which helps the RO compensate for being physically disconnected from the robot (P14). |
| 5 | Mission duration | Shows how long the delivery takes from the starting point to the destination (P14). |
| 6 | Control ownership | Shows whether the LMDR is in autonomous or manual mode (P9). |
| 7 | On-screen AR layers | Can show the RO possible routes for overtaking an obstacle (P7), as well as the LMDR’s width or the distance to a nearby object (P4). |
| 8 | Status of physical components | ROs may want to see and control the status of robot hardware components such as the trunk (P13), light, flag-mast height, and emergency lights (P14). |
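As a rough illustration of how the status items in the table could be grouped into a single record for the RO's UI, the following Python sketch models rows 1 to 6 and 8 as fields (the AR layers of row 7 are visual overlays and are left out). All names, units, value ranges, and thresholds here are hypothetical assumptions for illustration; none come from the interviews or from any participant's system.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class ControlOwner(Enum):
    """Who currently controls the robot (row 6, control ownership)."""
    AUTONOMOUS = "autonomous"
    MANUAL = "manual"


@dataclass
class RobotStatus:
    """Hypothetical snapshot of what the RO's UI shows for one LMDR."""
    alerts: List[str] = field(default_factory=list)         # row 1
    battery_percent: float = 100.0                          # row 2
    network_quality: float = 1.0                            # row 3, assumed 0..1 scale
    speed_kmh: float = 0.0                                  # row 4
    mission_elapsed_s: float = 0.0                          # row 5
    control_owner: ControlOwner = ControlOwner.AUTONOMOUS   # row 6
    trunk_open: bool = False                                # row 8
    lights_on: bool = False                                 # row 8


def needs_attention(status, min_battery=20.0, min_network=0.5):
    """Collect reasons to surface this robot to an RO.

    The thresholds are illustrative assumptions, not values from the study.
    """
    reasons = list(status.alerts)
    if status.battery_percent < min_battery:
        reasons.append("low battery")
    if status.network_quality < min_network:
        reasons.append("poor network")
    return reasons


status = RobotStatus(alerts=["human alert"], battery_percent=15.0, network_quality=0.9)
print(needs_attention(status))  # ['human alert', 'low battery']
```

Keeping the fields in one record mirrors the table's intent that the RO can read the robot's state at a glance; a real interface would likely stream such snapshots and add timestamps, which this sketch omits.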


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Boker, A.; Grimberg, E.; Tener, F.; Lanir, J. Navigating the Last Mile: A Stakeholder Analysis of Delivery Robot Teleoperation. Sustainability 2025, 17, 5925. https://doi.org/10.3390/su17135925
