Enhancing Demand Prediction: A Multi-Task Learning Approach for Taxis and TNCs

Abstract

1. Introduction

- The evolving shared mobility sector needs better demand prediction across different types of sharing services. To meet this need, this study proposes a multi-task learning model that predicts demand for transportation network company (TNC) and taxi modes simultaneously.

- This study explores methodological improvements to increase prediction accuracy. The techniques considered include a gating mechanism that mitigates negative transfer between the two modes and a spatial embedding that captures the interaction of spatial dependencies.

- Extensive experiments are conducted using actual taxi and TNC trip data from Manhattan, NYC. The experimental results show that the proposed modeling approach outperforms the single-task learning model and other benchmark learning models.

2. Literature Review

2.1. Modeling Spatial–Temporal Dependency of Transportation Demand

2.2. Multi-Task Learning

3. Methodology

3.1. Preliminary: Problem Definition

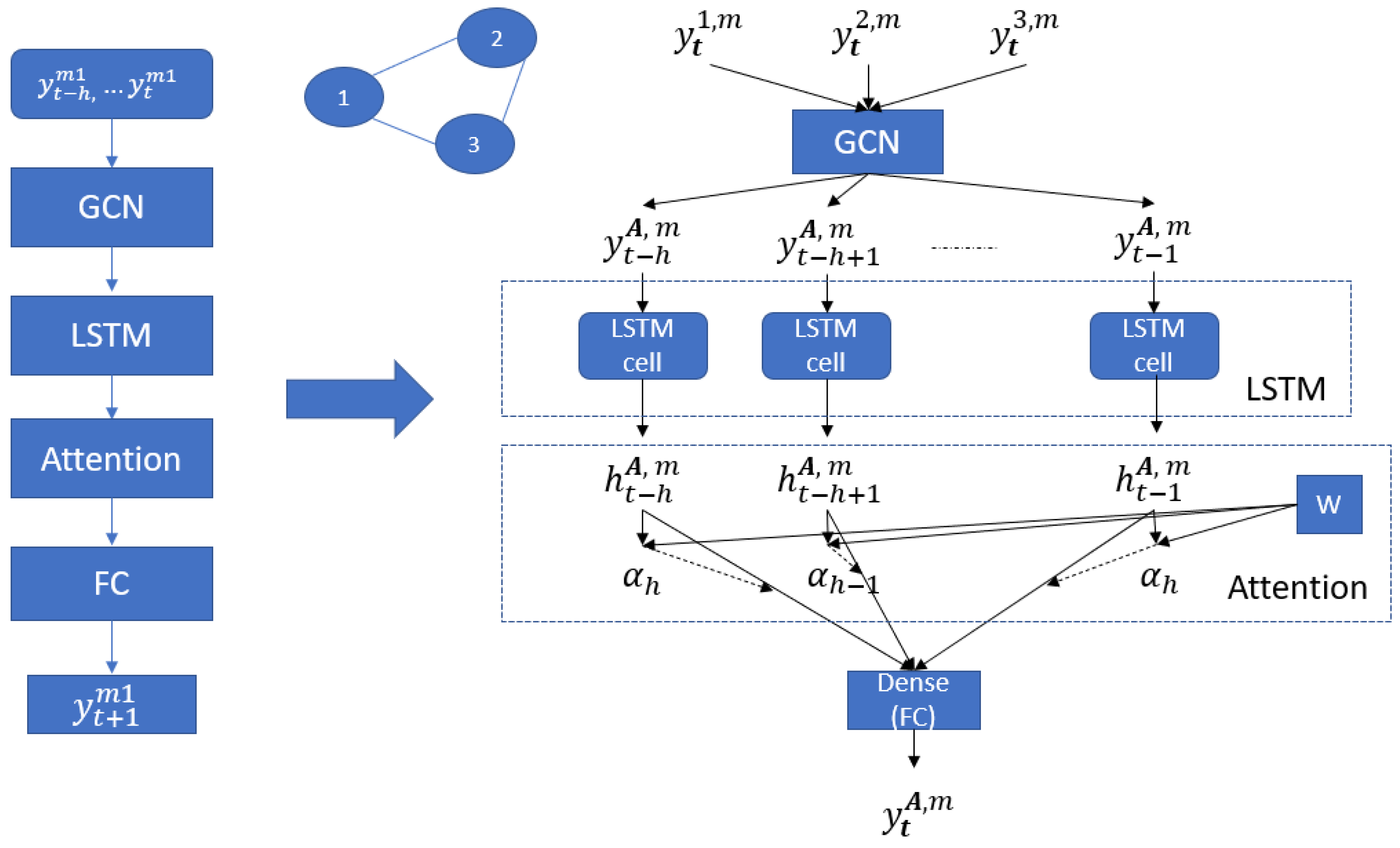

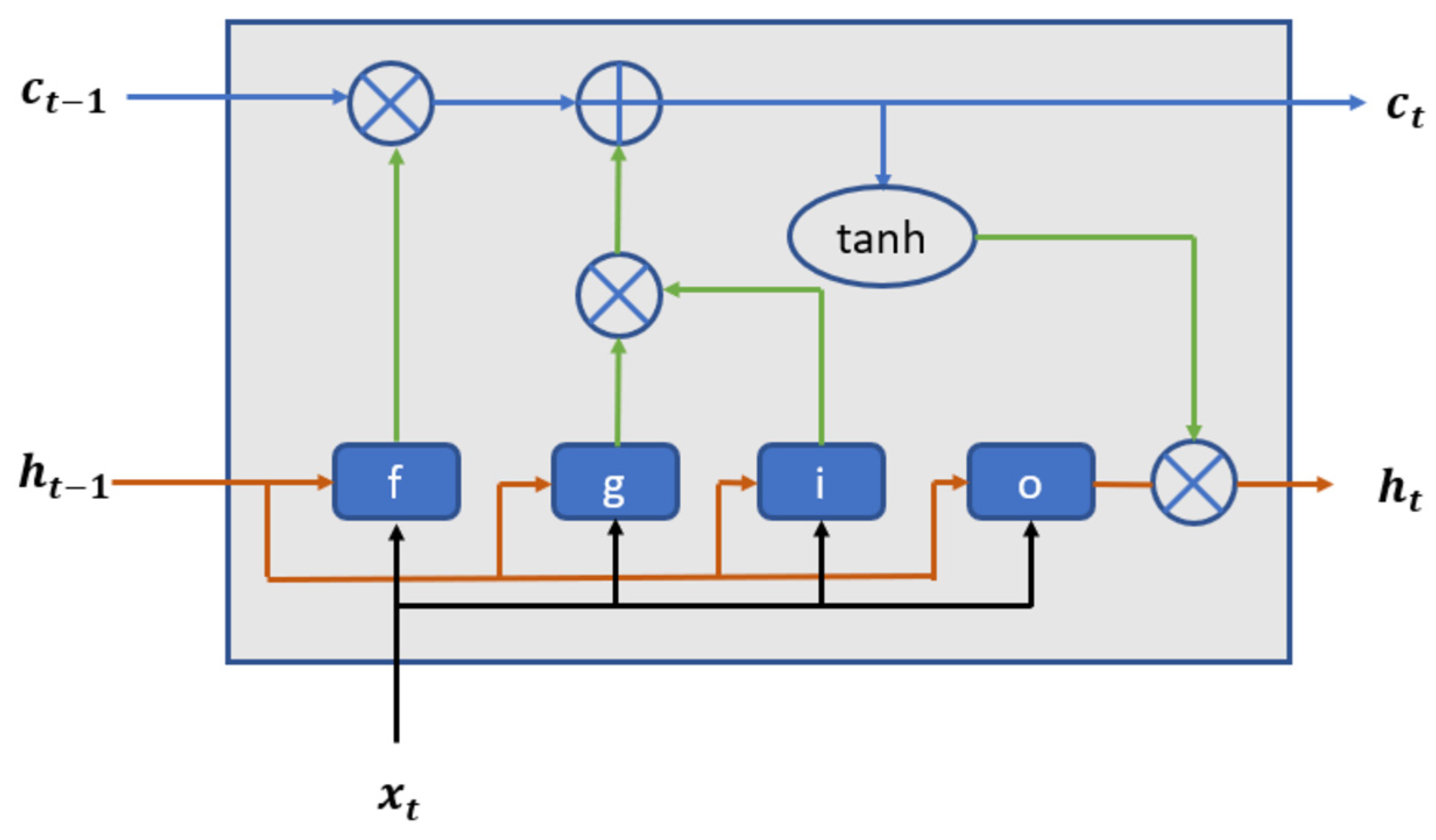

3.2. Single-Task Learning Model

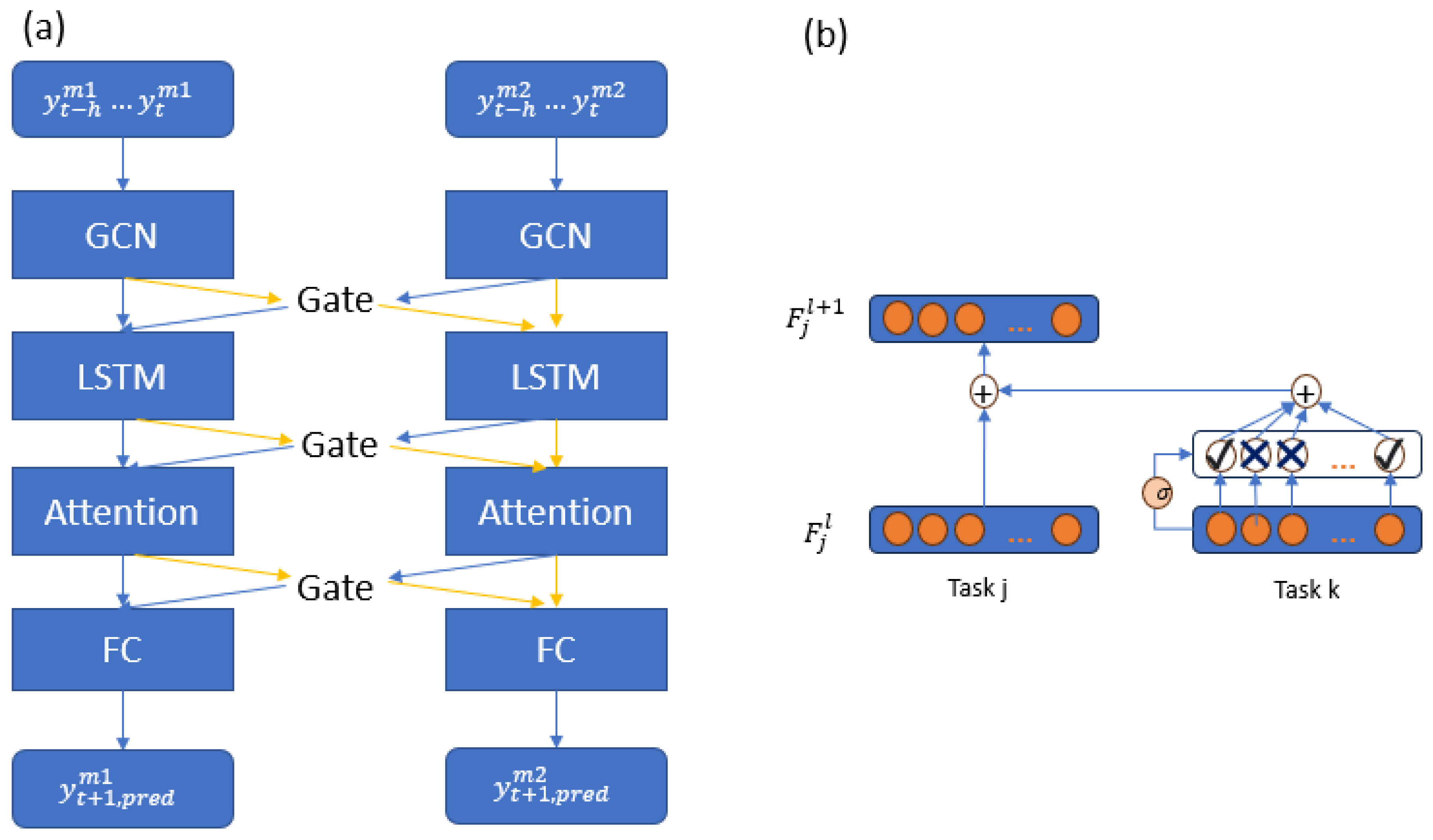

3.3. Multi-Task Learning Model
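
The introduction describes a gating mechanism intended to mitigate negative transfer between the taxi and TNC tasks. The unit's equations are not reproduced in this section, so the following is only a generic sketch of gated feature sharing, not the authors' Gated Sharing Unit. The function name `gated_share`, the additive form, and the `weights` and `bias` parameters are all assumptions made for illustration.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_share(h_own, h_other, weights, bias):
    """Add a gated copy of the other task's hidden state to this task's.

    h_own, h_other: hidden vectors of length d (for example, LSTM outputs).
    weights: d rows of length 2*d; bias: length d.
    All names and shapes here are hypothetical, not taken from the paper.
    """
    joint = h_own + h_other  # list concatenation, length 2*d
    out = []
    for k, own_k in enumerate(h_own):
        pre = sum(w * x for w, x in zip(weights[k], joint)) + bias[k]
        gate = sigmoid(pre)  # in (0, 1): how much of the other task to admit
        out.append(own_k + gate * h_other[k])
    return out


# Toy hidden states for the two tasks (made-up numbers).
h_taxi = [0.5, -1.0]
h_tnc = [2.0, 0.3]
weights = [[0.1, 0.0, 0.2, 0.0], [0.0, 0.1, 0.0, 0.2]]

open_gate = gated_share(h_taxi, h_tnc, weights, bias=[0.0, 0.0])
closed_gate = gated_share(h_taxi, h_tnc, weights, bias=[-50.0, -50.0])
```

With a strongly negative bias the gate closes and the taxi representation passes through almost unchanged, which is the behavior one would want when the other task's features are unhelpful.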

4. Experiments and Model Performance Evaluation

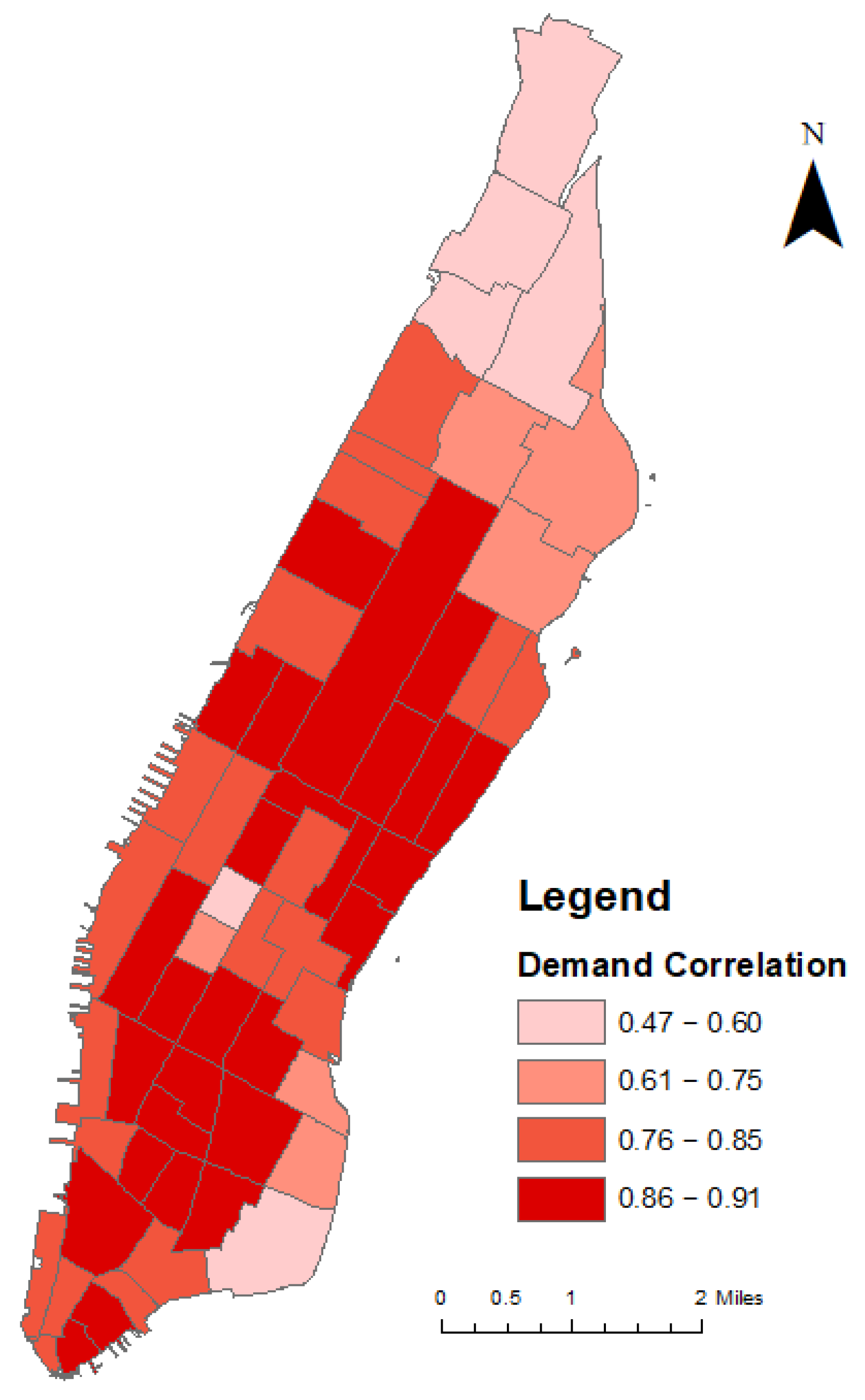

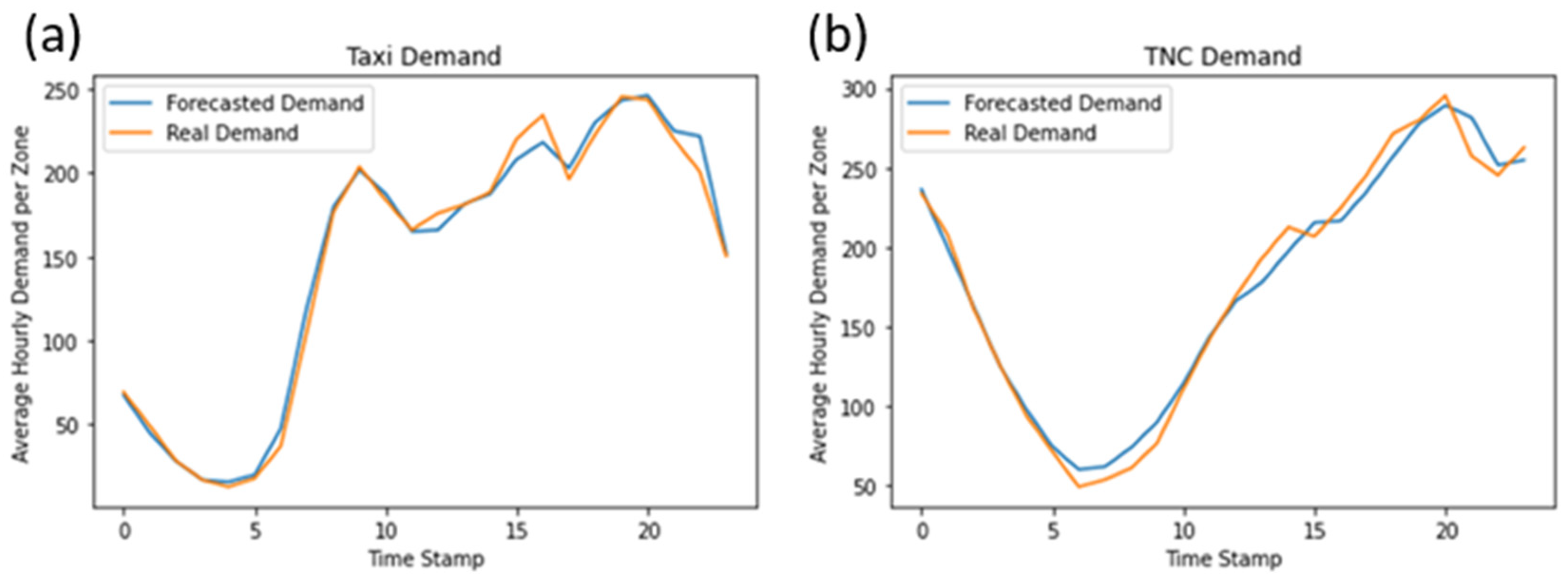

4.1. Study Area

4.1.1. Study Site Selection and Data Preprocessing

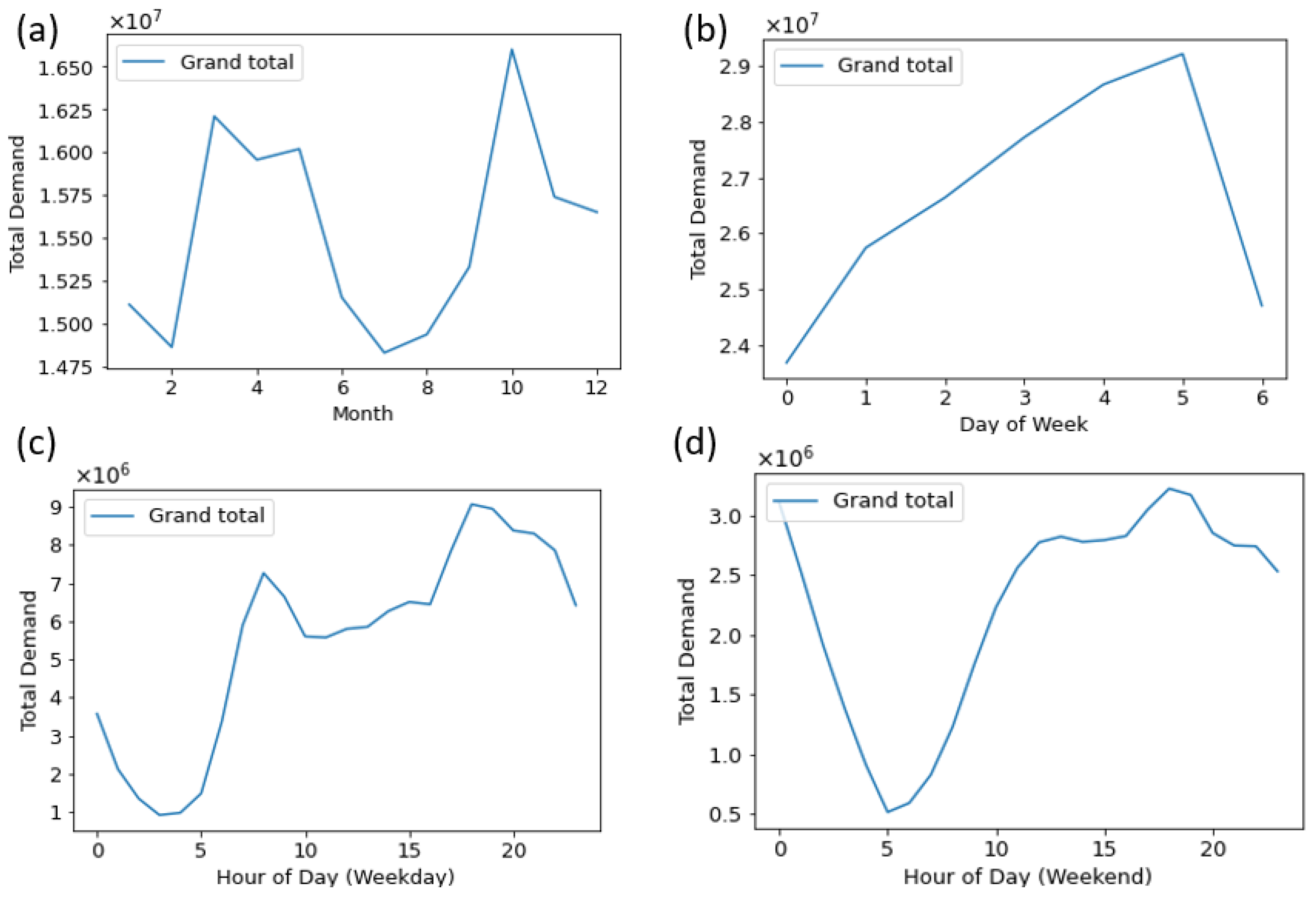

4.1.2. Data Analysis

4.2. Model Training

4.3. Model Evaluation

4.3.1. Description of the Baseline Models and the Proposed Models in the Experiment

- ARIMA: An autoregressive integrated moving average model, a statistical model widely used for time-series forecasting.

- MLP: A multilayer perceptron, the most basic neural network. In this study, a three-layer network is used, consisting of an input layer, a dense layer, and an output layer.

- XGBoost: eXtreme Gradient Boosting, which applies gradient boosting to tree-based models and is widely known as an efficient and accurate method for data science problems [49].

- Single-task learning (without a GCN): Single-task learning model shown in Figure 1 without a GCN layer.

- Multi-task learning (without a GCN): MTL model shown in Figure 3 without a GCN layer. The Gated Sharing Unit is applied after the LSTM layer.

- Single-task learning (GCN-Distance): Single-task learning model shown in Figure 1, with graph edge defined as the inverse distance between zones.

- Multi-task learning (GCN-Distance): MTL model shown in Figure 3, with graph edge defined as the inverse distance between zones.

- Single-task learning (GCN-Neighbor): Single-task learning model shown in Figure 1, with graph edge defined as 1 if two zones share boundaries and 0 otherwise.

- Multi-task learning (GCN-Neighbor): MTL model shown in Figure 3, with graph edge defined as 1 if two zones share boundaries and 0 otherwise.

- Single-task learning (GCN-Interaction): Single-task learning model shown in Figure 1, with graph edge defined as the product of inverse distance dependency and neighbor dependency.

- Multi-task learning (GCN-Interaction): MTL model shown in Figure 3, with graph edge defined as the product of inverse distance dependency and neighbor dependency.
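
The three graph definitions used by the GCN variants above (Distance, Neighbor, Interaction) can be illustrated with a small sketch. It assumes that distance means Euclidean distance between zone centroids and that the diagonal is left at zero; neither detail is stated in this section, and the function names and toy coordinates are hypothetical.

```python
import math


def distance_adjacency(centroids):
    """Edge weight = inverse distance between zones (centroid distance assumed)."""
    n = len(centroids)
    return [
        [0.0 if i == j else 1.0 / math.dist(centroids[i], centroids[j])
         for j in range(n)]
        for i in range(n)
    ]


def neighbor_adjacency(n, shared_borders):
    """Edge weight = 1 if two zones share a boundary, 0 otherwise."""
    adj = [[0.0] * n for _ in range(n)]
    for i, j in shared_borders:
        adj[i][j] = adj[j][i] = 1.0
    return adj


def interaction_adjacency(a_dist, a_nbr):
    """Edge weight = product of the inverse-distance and neighbor weights."""
    return [
        [d * b for d, b in zip(row_d, row_b)]
        for row_d, row_b in zip(a_dist, a_nbr)
    ]


# Toy example: three zones on a line; zone 1 borders zones 0 and 2.
centroids = [(0.0, 0.0), (2.0, 0.0), (6.0, 0.0)]
a_dist = distance_adjacency(centroids)
a_nbr = neighbor_adjacency(3, [(0, 1), (1, 2)])
a_int = interaction_adjacency(a_dist, a_nbr)
```

In the interaction graph, non-adjacent zones get weight 0 regardless of distance, while adjacent zones keep their inverse-distance weight, so closer neighbors are weighted more heavily than distant ones.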

4.3.2. Evaluation Metrics
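
The results table reports RMSE and MAE for each mode. As a reminder of what these metrics measure, here is a minimal sketch using their standard definitions. How the paper aggregates errors across zones and time intervals is not shown in this section, so the flat lists below are an assumption, and the sample values are invented.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large errors more heavily."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the errors."""
    absolute = [abs(t - p) for t, p in zip(y_true, y_pred)]
    return sum(absolute) / len(absolute)


# Hypothetical observed and predicted trip counts for four zone-intervals.
observed = [10, 20, 30, 40]
predicted = [12, 18, 33, 40]

rmse_value = rmse(observed, predicted)
mae_value = mae(observed, predicted)
```

Because RMSE squares each error before averaging, it is never smaller than MAE on the same data, which matches the pattern in the results table.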

4.4. Results and Discussions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Iqbal, M. Uber Revenue and Usage Statistics. Available online: https://www.businessofapps.com/data/uber-statistics/ (accessed on 28 March 2023).

- Cramer, J.; Krueger, A.B. Disruptive change in the taxi business: The case of Uber. Am. Econ. Rev. 2016, 106, 177–182.

- SFCTA. TNCs Today: A Profile of San Francisco Transportation Network Company Activity; SFCTA: San Francisco, CA, USA, 2017.

- Shekhar, S.; Williams, B.M. Adaptive seasonal time series models for forecasting short-term traffic flow. Transp. Res. Rec. 2007, 2024, 116–125.

- Li, X.; Pan, G.; Wu, Z.; Qi, G.; Li, S.; Zhang, D.; Zhang, W.; Wang, Z. Prediction of urban human mobility using large-scale taxi traces and its applications. Front. Comput. Sci. 2012, 6, 111–121.

- Moreira-Matias, L.; Gama, J.; Ferreira, M.; Mendes-Moreira, J.; Damas, L. Predicting taxi–passenger demand using streaming data. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1393–1402.

- Ke, J.; Zheng, H.; Yang, H.; Chen, X.M. Short-term forecasting of passenger demand under on-demand ride services: A spatio-temporal deep learning approach. Transp. Res. Part C Emerg. Technol. 2017, 85, 591–608.

- Yao, H.; Tang, X.; Wei, H.; Zheng, G.; Li, Z. Revisiting spatial-temporal similarity: A deep learning framework for traffic prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 5668–5675.

- Jin, G.; Cui, Y.; Zeng, L.; Tang, H.; Feng, Y.; Huang, J. Urban ride-hailing demand prediction with multiple spatio-temporal information fusion network. Transp. Res. Part C Emerg. Technol. 2020, 117, 102665.

- Li, C.; Bai, L.; Liu, W.; Yao, L.; Waller, S.T. Knowledge adaption for demand prediction based on multi-task memory neural network. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Online, 19–23 October 2020; pp. 715–724.

- Bai, L.; Yao, L.; Kanhere, S.S.; Yang, Z.; Chu, J.; Wang, X. Passenger demand forecasting with multi-task convolutional recurrent neural networks. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Macau, China, 14–17 April 2019; pp. 29–42.

- Ke, J.; Feng, S.; Zhu, Z.; Yang, H.; Ye, J. Joint predictions of multi-modal ride-hailing demands: A deep multi-task multi-graph learning-based approach. Transp. Res. Part C Emerg. Technol. 2021, 127, 103063.

- Liang, J.; Tang, J.; Gao, F.; Wang, Z.; Huang, H. On region-level travel demand forecasting using multi-task adaptive graph attention network. Inf. Sci. 2023, 622, 161–177.

- Liu, H.; Wu, Q.; Zhuang, F.; Lu, X.; Dou, D.; Xiong, H. Community-Aware Multi-Task Transportation Demand Prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 2–9 February 2021.

- Zhao, J.; Chen, C.; Huang, H.; Xiang, C. Unifying Uber and taxi data via deep models for taxi passenger demand prediction. Pers. Ubiquitous Comput. 2020, 27, 523–535.

- Poulsen, L.K.; Dekkers, D.; Wagenaar, N.; Snijders, W.; Lewinsky, B.; Mukkamala, R.R.; Vatrapu, R. Green cabs vs. Uber in New York City. In Proceedings of the 2016 IEEE International Congress on Big Data (BigData Congress), San Francisco, CA, USA, 27 June–2 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 222–229.

- Correa, D.; Xie, K.; Ozbay, K. Exploring the taxi and Uber demand in New York City: An empirical analysis and spatial modeling. In Proceedings of the 96th Annual Meeting of the Transportation Research Board, Washington, DC, USA, 8–12 January 2017.

- Hu, W.; Browning, K.; Zraick, K. Uber Partners with Yellow Taxi Companies in N.Y.C. Available online: https://www.nytimes.com/2022/03/24/business/uber-new-york-taxis.html (accessed on 30 March 2023).

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. arXiv 2017, arXiv:1707.01926.

- Yu, X.; Shi, S.; Xu, L. A spatial–temporal graph attention network approach for air temperature forecasting. Appl. Soft Comput. 2021, 113, 107888.

- Geng, X.; Li, Y.; Wang, L.; Zhang, L.; Yang, Q.; Ye, J.; Liu, Y. Spatiotemporal multi-graph convolution network for ride-hailing demand forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 3656–3663.

- Bischoff, J.; Maciejewski, M.; Sohr, A. Analysis of Berlin’s taxi services by exploring GPS traces. In Proceedings of the 2015 International Conference on Models and Technologies for Intelligent Transportation Systems (MT-ITS), Budapest, Hungary, 3–5 June 2015; pp. 209–215.

- Nuzzolo, A.; Comi, A.; Papa, E.; Polimeni, A. Understanding taxi travel demand patterns through Floating Car Data. In Proceedings of the Conference on Sustainable Urban Mobility, Skiathos Island, Greece, 24–25 May 2018; Springer: Cham, Switzerland, 2018; pp. 445–452.

- Dong, X.; Zhang, M.; Zhang, S.; Shen, X.; Hu, B. The analysis of urban taxi operation efficiency based on GPS trajectory big data. Phys. A Stat. Mech. Its Appl. 2019, 528, 121456.

- Yang, Z.; Franz, M.L.; Zhu, S.; Mahmoudi, J.; Nasri, A.; Zhang, L. Analysis of Washington, DC taxi demand using GPS and land-use data. J. Transp. Geogr. 2018, 66, 35–44.

- Nuzzolo, A.; Comi, A.; Polimeni, A. Exploring on-demand service use in large urban areas: The case of Rome. Arch. Transp. 2019, 50, 77–90.

- Luo, H.; Cai, J.; Zhang, K.; Xie, R.; Zheng, L. A multi-task deep learning model for short-term taxi demand forecasting considering spatiotemporal dependences. J. Traffic Transp. Eng. 2020, 8, 83–94.

- Kuang, L.; Yan, X.; Tan, X.; Li, S.; Yang, X. Predicting taxi demand based on 3D convolutional neural network and multi-task learning. Remote Sens. 2019, 11, 1265.

- Ferreira, N.; Poco, J.; Vo, H.T.; Freire, J.; Silva, C.T. Visual exploration of big spatio-temporal urban data: A study of New York City taxi trips. IEEE Trans. Vis. Comput. Graph. 2013, 19, 2149–2158.

- Lu, X.; Ma, C.; Qiao, Y. Short-term demand forecasting for online car-hailing using ConvLSTM networks. Phys. A Stat. Mech. Its Appl. 2021, 570, 125838.

- Xu, Y.; Li, D. Incorporating graph attention and recurrent architectures for city-wide taxi demand prediction. ISPRS Int. J. Geo-Inf. 2019, 8, 414.

- Yao, H.; Wu, F.; Ke, J.; Tang, X.; Jia, Y.; Lu, S.; Gong, P.; Ye, J.; Li, Z. Deep multi-view spatial-temporal network for taxi demand prediction. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 3837–3845.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Yu, B.; Yin, H.; Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. arXiv 2017, arXiv:1709.04875.

- Xu, J.; Rahmatizadeh, R.; Bölöni, L.; Turgut, D. Real-time prediction of taxi demand using recurrent neural networks. IEEE Trans. Intell. Transp. Syst. 2017, 19, 2572–2581.

- Zhao, X.; Sun, K.; Gong, S.; Wu, X. RF-BiLSTM Neural Network Incorporating Attention Mechanism for Online Ride-Hailing Demand Forecasting. Symmetry 2023, 15, 670.

- Liu, Y.; Liu, Z.; Lyu, C.; Ye, J. Attention-based deep ensemble net for large-scale online taxi-hailing demand prediction. IEEE Trans. Intell. Transp. Syst. 2019, 21, 4798–4807.

- Zhang, K.; Liu, Z.; Zheng, L. Short-term prediction of passenger demand in multi-zone level: Temporal convolutional neural network with multi-task learning. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1480–1490.

- Liang, Y.; Huang, G.; Zhao, Z. Joint demand prediction for multimodal systems: A multi-task multi-relational spatiotemporal graph neural network approach. Transp. Res. Part C Emerg. Technol. 2022, 140, 103731.

- Ruder, S.; Bingel, J.; Augenstein, I.; Søgaard, A. Latent multi-task architecture learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 4822–4829.

- Strezoski, G.; van Noord, N.; Worring, M. Learning task relatedness in multi-task learning for images in context. In Proceedings of the 2019 on International Conference on Multimedia Retrieval, Ottawa, ON, Canada, 10–13 June 2019; pp. 78–86.

- Misra, I.; Shrivastava, A.; Gupta, A.; Hebert, M. Cross-stitch networks for multi-task learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3994–4003.

- Xiao, L.; Zhang, H.; Chen, W. Gated multi-task network for text classification. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Short Papers. Volume 2, pp. 726–731.

- Olah, C. Understanding LSTM Networks. Available online: https://colah.github.io/posts/2015-08-Understanding-LSTMs/ (accessed on 1 April 2020).

- Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

- NYC Taxi & Limousine Commission. TLC Trip Record Data; NYC Taxi & Limousine Commission: New York, NY, USA, 2023.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. arXiv 2016, arXiv:1603.04467.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Yu, T.; Kumar, S.; Gupta, A.; Levine, S.; Hausman, K.; Finn, C. Gradient surgery for multi-task learning. arXiv 2020, arXiv:2001.06782.

- Crawshaw, M. Multi-task learning with deep neural networks: A survey. arXiv 2020, arXiv:2009.09796.

- Guo, Y.; Zhang, Y. Understanding factors influencing shared e-scooter usage and its impact on auto mode substitution. Transp. Res. Part D Transp. Environ. 2021, 99, 102991.

| Model | Taxi RMSE | Taxi MAE | TNC RMSE | TNC MAE |
|---|---|---|---|---|
| ARIMA | 54.1 | 32.6 | 56.3 | 37.1 |
| MLP | 47.9 | 30.0 | 49.5 | 34.4 |
| XGBoost | 36.9 | 21.8 | 41.0 | 26.1 |
| Single-task learning (without a GCN) | 37.7 | 22.6 | 41.0 | 27.2 |
| Multi-task learning (without a GCN) | 36.1 | 21.8 | 39.5 | 26.1 |
| Single-task learning (GCN-Distance) | 36.7 | 21.8 | 40.2 | 26.2 |
| Multi-task learning (GCN-Distance) | 35.8 | 21.5 | 40.5 | 25.9 |
| Single-task learning (GCN-Neighbor) | 37.4 | 22.2 | 39.8 | 26.1 |
| Multi-task learning (GCN-Neighbor) | 36.5 | 21.9 | 39.4 | 25.5 |
| Single-task learning (GCN-Interaction) | 35.8 | 21.1 | 38.2 | 25.0 |
| Multi-task learning (GCN-Interaction) | 34.7 | 20.9 | 37.2 | 24.2 |
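
To put the gap between the best single-task and multi-task models in relative terms, the following sketch computes the percentage RMSE reduction from the two GCN-Interaction rows of the table. The helper name `relative_reduction` is hypothetical, and the calculation is an illustration rather than an analysis reported by the authors.

```python
# RMSE values copied from the GCN-Interaction rows of the results table.
rmse_by_mode = {
    "Taxi": {"single": 35.8, "multi": 34.7},
    "TNC": {"single": 38.2, "multi": 37.2},
}


def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


for mode, scores in rmse_by_mode.items():
    pct = relative_reduction(scores["single"], scores["multi"])
    print(f"{mode}: multi-task RMSE is {pct:.1f}% lower than single-task")
```

For these rows the reduction works out to roughly 3.1% for taxi and 2.6% for TNC.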

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, Y.; Chen, Y.; Zhang, Y. Enhancing Demand Prediction: A Multi-Task Learning Approach for Taxis and TNCs. Sustainability 2024, 16, 2065. https://doi.org/10.3390/su16052065