Abstract

Before COVID-19, universities in the Philippines sparingly used online learning instructional methods. Online learning is now widely known, and universities are increasingly keen to adopt it as a mainstream instructional method. Accounting is a popular discipline of study undertaken by students, but its online adoption is less well known. This study investigated university accounting students’ perceptions of the cognitive load of learning and how it influences their learning memory at a university in the Philippines. During the COVID-19 period, after online learning was introduced, 482 university undergraduate accounting students provided their perceptions using a five-point Likert scale survey questionnaire. The study measured teaching quality, learning content quality, and learning management system (LMS) quality, representing the cognitive load of learning. It measured electronic learning (e-learning) quality, learner satisfaction, and behavioral intentions to continue adopting online learning, representing the learning memory framework. The data, analyzed using a structural equation model, showed that students managing their cognitive load positively influenced their short-term learning. Learning content, teaching, and LMS quality positively influenced e-learning quality and student satisfaction. Student satisfaction positively influenced students’ continued willingness for online learning, but e-learning quality did not. The findings were largely consistent across the second- and third-year enrolments. Findings from the first-year students showed that teaching quality did not influence student satisfaction or e-learning quality. This is the first study to test the influence of the cognitive load of learning on the learning memory of accounting students in an online learning environment.

1. Introduction

If a single thread could interconnect all 17 United Nations Sustainable Development Goals (SDGs), it would be SDG 4 Quality Education for all. Education is a silver line that can transform oppression into emancipation and deprivation into abundance (Power, 2015) [1]. Higher education occupies a special place because it is where the best minds congregate to generate sustainable ideas and address social inequalities. In view of this, higher education must reach out to as many people as possible who wish to receive an education (Kromydas, 2017) [2].

There were three motivations for undertaking this study. First, online educational delivery can introduce variability in the quality of education (Chen et al., 2020) [3]. Traditional universities are likely to consider online learning less productive, especially if they have not previously adopted it (Means, 2009) [4]. Second, the digital divide can determine who receives education, and it is especially relevant to developing countries such as the Philippines, where social inequality is a deciding barrier to undertaking education (Dhawan, 2020) [5]. Online education can help reduce social inequality. However, developing countries such as the Philippines face special challenges in the adoption of online learning. These include unstable Internet connectivity, inadequate learning resources available for online learners, electrical power interruptions, and limited teacher scaffolds. Online students can encounter responsibilities at home that conflict with their learning efforts and a poor learning environment (Rotas & Cahapay, 2020) [6]. Third, online learning has both positive and negative aspects for students and universities; however, it is unknown where Philippine universities stand.

On the positive side, online learning is excellent for self-directed students and encourages lifelong learning. Travel time to universities is eliminated, and students and teachers can save time and resources. Virtual interaction games can be introduced more seamlessly into online learning environments (Mukhtar et al., 2020; Skulmowski & Xu, 2022) [7,8]. On the negative side, it is much harder to read students’ nonverbal cues to determine whether they understand the learning material being taught. Students can develop a shorter attention span because of more distractions in their chosen learning environment. There are also challenges to upholding academic integrity because students are virtually monitored and not physically observed (Barrot et al., 2021; Mukhtar et al., 2020) [7,9].

The positive aspects of online educational delivery can increase student motivation and engagement, whereas the negative aspects can decrease them. These aspects can also influence student cognitive load differently. Cognitive load is a measure of the ease or difficulty in learning imposed by teaching quality, learning content quality, and learning management system (LMS) quality. Taking advantage of the online learning environment and effectively balancing student cognitive load is crucial yet ill-understood (Skulmowski & Xu, 2022) [8]. The Philippines has a large number of young people, with a median age of 25 years. They are at the prime age for receiving quality education to become productive citizens (Worldometer, 2023) [10].

This study aims to fill this research gap by understanding how university accounting students in the Philippines perceive teaching quality, learning content quality, and LMS quality, and their effects on short-term learning memory measured as electronic learning (e-learning) quality and student satisfaction. Additionally, LMS quality and student satisfaction can influence students’ propensity to engage in online learning and increase long-term learning memory, which is a crucial parameter for universities to continue online education. The study chose the Philippines as a research location because it shares common characteristics of the online learning environments of developing countries where the digital divide has disadvantaged people accessing information and learning through hardware and software (Rotas & Cahapay, 2020) [6].

Studies have contributed to understanding and highlighting online delivery in tertiary education. This study contributes to the ill-understood area in this domain by focusing on students’ perceptions of online learning in accounting education. Learning accounting subjects requires building complex memory patterns known as schemas (Abeysekera & Jebeile, 2019; Blaney et al., 2016) [11,12]. Previous research conducted at a university in the Middle East has shown that accounting students are better supported by participation and collaboration in traditional learning than in online learning environments (Shabeeb et al., 2022) [13]. Such findings can differ across national societal and cultural settings and with how online learning is designed and implemented. To develop a deeper understanding, this study focuses on undergraduate accounting students at a university in the Philippines. This study established student perceptions and satisfaction levels that can help understand the behavioral intentions of students undertaking continuing studies under online delivery. This study was conducted during the COVID-19 2020–2021 academic year in the Philippines and addressed the following eight questions.

(1) To what extent does teaching quality influence e-learning quality?

(2) To what extent does teaching quality influence student satisfaction?

(3) To what extent does learning content quality influence e-learning quality?

(4) To what extent does learning content quality influence student satisfaction?

(5) To what extent does LMS quality influence e-learning quality?

(6) To what extent does LMS quality influence student satisfaction?

(7) To what extent does e-learning quality influence continuing online learning adoption?

(8) To what extent does student satisfaction influence continuing online learning adoption?

The Philippine Republic Act No. 11469, also known as the “Bayanihan to Heal as One Act”, was responsible for the response of the Philippine government to COVID-19. The Commission on Higher Education (CHED) adopted and promulgated Resolution No. 412–2020 of the Act that outlined the Guidelines on Flexible Learning (FL) to be implemented by the Higher Education Institutions (HEIs) (CHED Memo No. 4, 2020) [14]. The memorandum in the CHED defined online learning as a pedagogical approach that allows flexibility of time, place, and audience, including, but not solely focused on, the use of technology. The university in this study opened its academic year in August 2020 despite the ongoing pandemic crisis. In pursuing academic excellence, it provides online education to all its students, including those in the accountancy degree. The online instruction was conducted using the learning management system (LMS) Canvas and was used by the faculty staff and students.

The next section discusses the literature on student online learning to show the importance of fulfilling the research aims. Section 3 presents the theoretical frameworks (cognitive load theory and the learning memory framework) and states the hypotheses tested using structural equation modeling. Section 4 presents the methodology for data collection using a five-point Likert scale survey questionnaire. Section 5 presents the findings and discusses their implications for higher education. The final section presents the concluding remarks with implications for policymakers, theory, methodology, limitations that bound result interpretation, and future research propositions.

2. Review of the Literature

Online learning has a sporadic history and only took root with widespread adoption during COVID-19. During the early adoption periods, the focus was on whether students accepted the environment as a learning environment. Instrument scales were developed to measure the usefulness and ease of use of hardware (Davis, 1989) [15]. Students who have undertaken online learning perceived its strengths and weaknesses. Strengths include a sense of independence in choosing their study time and style and being at ease in their comfort whilst learning. As a weakness, students feel lonely and unsupported when they learn without physical peers. Successful online learning requires self-discipline and a firm commitment to learning (Song et al., 2004) [16].

The availability and readiness of the online learning environment influenced positive feedback (Almahasees et al., 2021) [17]. These included computer hardware, software, and connectivity (wired and wireless Internet), not just hardware, as was the focus a few decades ago (Davis, 1989) [15]. Students were less motivated and interacted less during online learning (Almahasees et al., 2021) [17]. A study revealed that only one-third of the students had a positive opinion of online learning, while the other two-thirds had negative or undecided opinions. A total of 69.4% of students who used online learning complained about technical difficulties. Teachers did not use the full features of a learning platform, leading to negative student views. A total of 60.5% of students reported cognitive difficulty in processing information for learning (Coman et al., 2020) [18].

The cognitive difficulties arose from instructional methods adopted by teachers where students found more theoretical than practical learning content, and infrastructure inflexibility was associated with the LMS. Students admitted that they share responsibility for developing favorable perceptions, as their ill-preparedness with using the LMS and not balancing their studies with family and work life have contributed to adverse perceptions (Dhawan, 2020) [5].

Research has proposed that overcoming student cognitive difficulties in online learning could help students perceive online learning positively and receive a satisfactory experience. The proposed techniques included providing frequent breaks to students, combined with other learning methods, such as the flipped classroom approach, to shorten lectures and increase interactions. It also proposed that the government improve Internet speed and access (Mukhtar et al., 2020) [7]. Although studies have not commented on the LMS, its infrastructure functionality can influence student perception and satisfaction with online learning and help them perceive it as a helpful learning platform. It must also be easy to use with the least amount of effort. A study evaluating the LMS used by medical staff found that 77.1% of staff perceived it as helpful to their learning and 76.5% found it easy to use (Zalat et al., 2021) [19]. These factors increased the perceived usefulness and ease of use of the LMS, which can increase students’ online learning perception and learning satisfaction.

Table 1 summarizes the research interconnected by citations. These publications show that the early focus of online learning was on hardware (Davis, 1989) [15], and its importance has now re-emerged (Zalat et al., 2021) [19]. The emergence was in the context of what software is possible for online learning, such as Zoom, Microsoft Teams, and WhatsApp platforms (Almahasees et al., 2021; Almahasees et al., 2020) [17,20]. These platforms have increased the possibilities of degree offerings and teaching methods (Pokhrel & Chhetri, 2021) [21], which has broadened the online education outlook (Zhang et al., 2022) [22].

The quality of online learning is at the forefront (Ehlers & Pawlowski, 2006) [23], evaluating advantages and disadvantages (Mukhtar et al., 2020) [7]. Research is actively evaluating the perceptions of faculty staff (Al-Salman & Haider, 2020; Zalat et al., 2021) [19,24] and students (Coman et al., 2020) [25], with a special focus on adolescents (Elashry et al., 2021) [26] and university students (Asif et al., 2022) [27].

Studies have identified specific ways to refine their investigations of online learning effectiveness (Fox et al., 2023; Elalouf et al., 2022) [28,29], acknowledging that engagement, perception, and satisfaction are separate constructs leading to different implications in pedagogy (Coman et al., 2020; Dubey, 2023; Sumilong, 2022) [25,30,31]. Research has investigated the logistical support required (Alammary, 2022; Assadi & Kashkosh, 2022) [32,33] and government interventions to improve online education and its supporting infrastructure (Daher et al., 2023) [34].

Online learning can be offered in synchronous, asynchronous, and hybrid modes. The increasingly available tools to prepare and deliver learning content have enabled students to learn with faculty staff teaching them at scheduled times. However, the synchronous learning mode can become inconvenient for students who cannot fit into scheduled times, such as working students. The asynchronous mode can accommodate a wider range of students because learning materials are pre-recorded for students to access at their convenience. Research has shown that control-oriented students are more engaged in the synchronous mode, and autonomous-oriented students are more engaged in the asynchronous mode (Giesbers et al., 2014) [35]. A study conducted during the COVID-19 period showed that students were more satisfied with asynchronous learning than with synchronous learning. The authors proposed that teaching institutions use LMS infrastructure that students can use and continuously update learning materials for effective online learning (Dargahi et al., 2023) [36]. These studies have highlighted the importance of investigating student perception and satisfaction in developing countries that began introducing online learning on a large scale, such as the Philippines.

Table 1.

A subset of relevant literature.

| No. | Authors | Research Investigation of Online Learning |
|---|---|---|
| 1 | Davis (1989) [15] | Ease of Use and Acceptance of Information Technology |
| 2 | Ehlers and Pawlowski (2006) [23] | Quality of online learning. Quality is more highly perceived than face-to-face learning |
| 3 | Dhawan (2020) [5] | Importance of Online Learning as a Learning Platform |
| 4 | Mukhtar et al. (2020) [7] | Advantages, limitations, and recommendations |
| 5 | Almahasees et al. (2020) [20] | Facebook as a Learning Platform |
| 6 | Al-Salman and Haider (2020) [24] | Staff perceptions of Online Learning |
| 7 | Coman et al. (2020) [25] | Student perspectives on Online Learning |
| 8 | Almahasees et al. (2021) [17] | Usefulness of Zoom, Microsoft Teams, offering online interactive classes, and WhatsApp as online platforms |
| 9 | Pokhrel and Chhetri (2021) [21] | Must innovate and implement alternative learning platforms, such as online learning |
| 10 | Elashry et al. (2021) [26] | Adolescent Perceptions and Academic Stress |
| 11 | Zalat et al. (2021) [19] | Staff Acceptance of Online Learning as a tool |
| 12 | Alammary (2022) [32] | Toolkit to support blended learning degrees |
| 13 | Daher et al. (2023) [34] | Government intervention and leadership to promote online learning among students and teachers |
| 14 | Assadi and Kashkosh (2022) [33] | Strategies used by teacher trainers to address the technological, pedagogical, social, and emotional challenges of student–teacher interactions |
| 15 | Asif et al. (2022) [27] | University students’ perceptions |
| 16 | Elalouf et al. (2022) [29] | Student perception of learning structured query language through online and face-to-face learning |
| 17 | Sumilong (2022) [31] | Students’ self-expression, participation, and discourse |
| 18 | Zhang et al. (2022) [22] | A metaverse for education that includes online learning for blended, competence-based, and inclusive education |
| 19 | Fox et al. (2023) [28] | Efficacy of online learning for degree studies: Mental health recovery for carers |
| 20 | Dubey (2023) [30] | Factors behind student engagement in online learning |
| 21 | Giesbers et al. (2014) [35] | Student Engagement with synchronous and asynchronous online learning |
| 22 | Dargahi et al. (2023) [36] | Student satisfaction with synchronous and asynchronous online learning |

3. Theoretical Framework and Hypothesis Development

3.1. Theoretical Framework

Survival-oriented learning to build primary knowledge is an important genetically driven aspect of learning, but it is not considered here (Sweller et al., 2011, pp. 3–14) [37]. Providing new knowledge, preparing students for the workforce, and making ideal contributions to society are the focus of higher education institutions to which this study has drawn attention (Chan, 2016) [38]. Student learning requires the use of cognitive resources that interact with instructional and learning resources to attain learning efficiencies. According to cognitive load theory (CLT), learning efficiencies can be attained by efficiently managing two types of cognitive load. The intrinsic cognitive load arises from the intrinsic nature of the instructional material and must be decreased. The extraneous cognitive load arises from how learning materials are presented to students and must also be decreased. Germane processing is the students’ effort to store knowledge in short-term and long-term memory by developing memory patterns known as schema, and pedagogy facilitates building and increasing schema (Jiang & Kalyuga, 2020; Sweller et al., 2019) [39,40].

The teaching quality of faculty staff represents the intrinsic cognitive load aspect, as faculty staff can take the lead in making harder learning content easier for students. The LMS quality in an online learning platform represents the extraneous cognitive load aspect, as a usable LMS can assist students in learning. The learner’s effort to process learning content and automate it into memory patterns produces schema representations. High learning content quality can reduce the amount of learning content that students must process, which can support the germane load to learn.

The Human Memory System Framework shows that appropriately assorting schema occurs through the sensory system and should be sufficiently receptive to the students’ receptive system to draw attention to learning. After passing the sensory system, students build schema or patterns (or configurations) of memory and hold them in the working memory store, which is a short-term memory reserve. The highest learning efficiency occurs when short-term memory is transformed into long-term memory with lasting schemas (Atkinson & Shiffrin, 1968) [41]. The amount of information and the amount the learner knows impacts memory retention (Miller, 1956) [42]. There are two parties in the learning contract: pedagogies managing intrinsic and extraneous cognitive load and learners managing germane processing. The amount of information can become a variance in learning, and reducing the variance by providing information that the learner can accommodate can assist in memory building. The human memory system is unified in encoding, storing, and retrieving; however, there is a qualitative difference in retrieving between short- and long-term memory. Neurologically, the anterior frontal cortex plays a role in retrieving long-term episodes by judging remembrances (Nee et al., 2008) [43]. Short-term or working memory is only an episodic buffer. Although intimately linked with long-term memory, short-term memory does not simply represent an activation of long-term memory, but rather a gateway to it (Baddeley, 2000) [44].

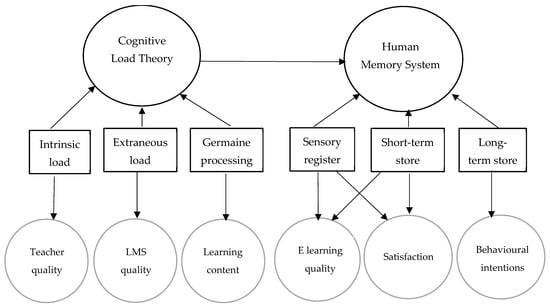

As shown in Figure 1, this study combined the cognitive load theory and human memory system framework to build a theoretical model. The model became the benchmark for testing the data collected from students regarding online learning perceptions. This study investigated whether managing cognitive load causally influences learners’ memory retention in an online learning environment.

Figure 1.

Theoretical framework.

This study translated the theoretical dimensions of the cognitive load theory and human memory system framework to develop a theoretical model. Specifically, it identified the teaching quality to represent the intrinsic cognitive load, the LMS quality to represent the extraneous cognitive load, and the learning content quality to represent the germane load. The sensory register and short-term learning stored in the working memory were measured using objectively based e-learning quality and subjectively based student satisfaction. The long-term store of memory was measured using student behavioral intentions to adopt online learning for their continuing studies.

Online teaching using digital technology offers many benefits over traditional face-to-face teaching models. It can offer virtual reality closer to what is happening in workplaces, allowing interactions to increase learning and gain near-practical experience while learning online. Interactive quizzes and assessments can provide real-time personalized feedback. However, LMS design factors can increase extraneous cognitive load. These two cognitive loads, intrinsic and extraneous, can influence the germane processing load in learning. For example, too much immersion in a single online task can deplete learners’ germane processing, decreasing overall learning efficiency (Frederiksen et al., 2020) [45].

The five design factors used in online learning are as follows: (1) interactive learning media (interaction leads to remembering and understanding the learning content); (2) immersion (believable digital environment that makes one forget the real environment); (3) realism (realistic and schematic visualizations); (4) disfluency (avoiding non-readable fonts); and (5) emotional (affective aspects of learning) design, which commonly aim to decrease extraneous load on learners so that they can easily use different forms of germane processing (such as verbal, mathematical, or procedural processing) to increase learning efficiency. Student learning can then be formally assessed by considering the germane processing aspects used by students for learning (Skulmowski & Xu, 2022) [8].

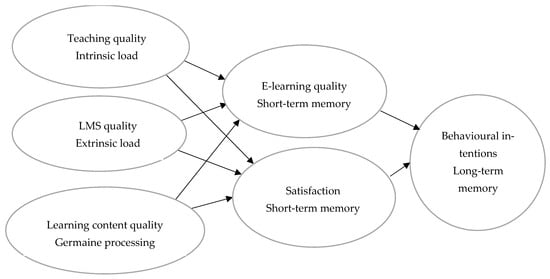

Figure 2 shows the conceptual framework in which the tested data fit the hypothesized model (Theresiawati et al., 2020) [46]. Three exogenous constructs exist: teaching quality, LMS quality, and learning content quality. The exogenous constructs represent the CLT. The theoretical framework noted that these three constructs influence e-learning quality and learner satisfaction. E-learning quality refers to whether online learning meets learners’ objective expectations. Satisfaction refers to learners’ feelings arising from comparing their perceptions with expectations and thus measures subjective expectations. This study argues that these learning efficiencies, by improving cognitive load, can lead to improved working memory. However, the ultimate learning efficiency occurs when e-learning quality and student satisfaction contribute to building students’ long-term learning memory by developing learning schemas (patterns). The study argued that such long-term learning efficiencies lead to behavioral intentions encouraging students to continue using online learning (Theresiawati et al., 2020) [46].

Figure 2.

Conceptual model.

Teaching quality comprises four dimensions: (1) Assurance is a guarantee that faculty staff have knowledge and understanding of the learning materials provided to guide students to obtain a sense of confidence in their learning. Faculty staff should be fair and objective in assessing students’ learning achievements and capabilities. (2) Responsiveness refers to the faculty staff’s willingness to help and respond quickly and efficiently to the needs of students by answering their questions and assisting them in problem-solving activities. (3) Reliability refers to the consistency of lecturers in providing materials to the curriculum set by the study. (4) Empathy includes faculty staff’s concern for students and their encouragement and motivation to do their best (Udo et al., 2011; Uppal et al., 2018) [47,48]. Usability indicators measure LMS quality. Usability represents the physical facilities of the LMS, including various learning activities, ease of use and accessibility of the e-learning user interface, and ease of management by students (Udo et al., 2011; Uppal et al., 2018) [47,48]. Learning content quality refers to the availability of materials and services directly related to student learning outcomes (Uppal et al., 2018; Cao et al., 2005) [1,48].

E-learning quality is about the availability of instructions to use e-learning aspects that are continually updated and are clear and adequately described. Satisfaction refers to student satisfaction with the decision to use an e-learning system and environment. Behavioral intention describes the intention of users to continue using online learning (Udo et al., 2011; Uppal et al., 2018) [47,48].

3.2. Hypotheses

From the above discussion, the following hypotheses were developed to test the theoretical model.

3.2.1. Teaching Quality

Although teachers are not at the forefront of teaching as they are in face-to-face classroom environments, their importance to students’ learning quality and satisfaction remains the same. Through assurance, responsiveness, reliability, and empathy, they provide students with cognitive, mental, and emotional support. A high teaching quality can decrease the intrinsic cognitive load on students’ learning and positively influence e-learning quality and student satisfaction with online learning. Therefore, the following two hypotheses are proposed.

Hypothesis H1a:

Teaching quality is positively correlated with e-learning quality.

Hypothesis H1b:

Teaching quality is positively correlated with student satisfaction.

3.2.2. LMS Quality

An up-to-date, easy-to-use LMS can facilitate student learning by decreasing their extraneous cognitive load, thereby increasing e-learning quality and student satisfaction. Hence, the following two hypotheses are proposed.

Hypothesis H2a:

LMS quality is positively correlated with e-learning quality.

Hypothesis H2b:

LMS quality is positively correlated with student satisfaction.

3.2.3. Learning Content Quality

Learning content quality represents the ease of processing learning content for student learning. Hence, the following two hypotheses are proposed.

Hypothesis H3a:

Learning content quality is positively correlated with e-learning quality.

Hypothesis H3b:

Learning content quality is positively correlated with student satisfaction.

3.2.4. E-Learning Quality

E-learning quality can increase students’ short-term storage (working memory). E-learning quality positively influences long-term memory storage, leading to students wanting to use online learning.

Hypothesis H4:

E-learning quality is positively correlated with behavioral intentions.

3.2.5. Student Satisfaction

Student satisfaction can increase students’ short-term storage (working memory). Student satisfaction can positively influence long-term memory storage, leading to students wanting to use online learning.

Hypothesis H5:

Student satisfaction is positively correlated with behavioral intentions.
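
The eight hypothesized paths can be summarized as three structural equations. The following is a sketch only: the abbreviations (TQ for teaching quality, LMSQ for LMS quality, LCQ for learning content quality, ELQ for e-learning quality, SAT for student satisfaction, BI for behavioral intentions), the coefficient symbols, and the disturbance terms $\zeta$ are notation assumed here for illustration and are not taken from the study’s model specification.

$$
\begin{aligned}
\text{ELQ} &= \gamma_{1a}\,\text{TQ} + \gamma_{2a}\,\text{LMSQ} + \gamma_{3a}\,\text{LCQ} + \zeta_{1} \\
\text{SAT} &= \gamma_{1b}\,\text{TQ} + \gamma_{2b}\,\text{LMSQ} + \gamma_{3b}\,\text{LCQ} + \zeta_{2} \\
\text{BI} &= \beta_{4}\,\text{ELQ} + \beta_{5}\,\text{SAT} + \zeta_{3}
\end{aligned}
$$

Under this notation, each hypothesis predicts a positive coefficient: H1a to H3b correspond to $\gamma_{1a}, \ldots, \gamma_{3b} > 0$, H4 to $\beta_{4} > 0$, and H5 to $\beta_{5} > 0$.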

4. Methodology

4.1. Participants and Sampling

As part of a larger study in a business school, the participants of this study were undergraduate students enrolled in the accountancy degree at a university in the Philippines, with 482 participants in the 2020–2021 academic year: 80 students in the first year (16.6%), 195 students in the second year (40.4%), and 207 students in the third year (43%) of enrolment. Of the 482 students, 392 (81%) were female and 90 (19%) were male.

This was the first time that the University introduced online learning to students, and it used synchronous learning to resemble face-to-face learning environments. The teaching was conducted via live stream, and students were required to attend online classes, have spontaneous and immediate interactions, and build an online learning community (Daher et al., 2023; Giesbers et al., 2014) [35,36].

4.2. Survey Instrument

This study used a survey instrument previously used in Indonesia (Theresiawati et al., 2020) [46]. As a neighboring country to the Philippines, Indonesia shares many societal and cultural similarities with it (Hudjashov et al., 2017) [49]. However, before use, the survey questions were pre-tested for content and face validity by four academic staff members in the Philippines for the Philippine university setting.

All questions were presented on a 5-point Likert scale, except for those that collected demographic data from the respondents. The questionnaire had seven (7) parts, namely: (Part 1) the teaching quality construct, measured using assurance, empathy, responsiveness, and reliability; (Part 2) the learning management system (LMS) quality construct, measured for its usability; (Part 3) learning content quality, measured as a single construct for the features presented in it, such as videos, audio, and animation; (Part 4) e-learning quality, measured as a single construct for its use of updated and clear instructions; (Part 5) satisfaction, measured as a single construct comprising students’ experience and their decision to enroll in online learning; (Part 6) behavioral intention, measured as a single construct for recommending online learning to others and continuing to use it; and (Part 7) students’ personal information about gender, study location, enrolment year, and degree type.

Although the instrument has been validated in Indonesia, the study conducted validity and reliability tests to confirm the validity and reliability of the collected data before testing the theoretical model using structural equation modeling (SEM). SEM requires valid and reliably represented constructs as a pre-condition. Table 2 shows the preparedness of the data for structural equation modeling. The study tested preparedness by factor analysis of indicators to determine whether they represent underlying constructs in the hypothesized model.

Table 2.

Validity and reliability measurements of the constructs.

The Kaiser–Meyer–Olkin (KMO) test shows the adequacy of indicators for factor analysis. To qualify for factor analysis, the indicators must share enough common variance relative to their partial correlations. A high KMO value shows that the indicators jointly contribute to the underlying construct. A KMO output of more than 0.8 indicates an excellent contribution and a KMO output of over 0.7 indicates a good contribution. All indicators met the KMO output benchmark for constructs (Shkeer & Awang, 2019) [50].
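As an illustration of how an overall KMO value can be obtained, the following minimal sketch computes it from a correlation matrix and its inverse. It is not the SPSS procedure used in the study; the function name `kmo` and the synthetic data (482 simulated respondents, four indicators driven by one common factor) are assumptions made only for this example.

```python
import numpy as np


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure for a (respondents x indicators) array."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations are derived from the inverse correlation matrix.
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diagonal = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diagonal] ** 2)
    p2 = np.sum(partial[off_diagonal] ** 2)
    # KMO approaches 1 when partial correlations are small relative to correlations.
    return float(r2 / (r2 + p2))


# Synthetic (hypothetical) data: four indicators sharing one common factor.
rng = np.random.default_rng(0)
factor = rng.normal(size=(482, 1))
items = factor + 0.6 * rng.normal(size=(482, 4))
kmo_value = kmo(items)
print(f"KMO = {kmo_value:.2f}")
```

Because the simulated indicators share a strong common factor, the resulting value falls in the range that the benchmarks above treat as adequate.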

The Bartlett test of sphericity examines whether the indicators are uncorrelated and therefore unsuited for factor analysis. It tests the null hypothesis that the correlation matrix is an identity matrix; if the null hypothesis is rejected, the indicators are related and suitable for factor analysis. As shown in the table, the statistically significant chi-square values (with their degrees of freedom) showed that the indicators are suitable for factor analysis (Chatzopoulos et al., 2022; Rossoni et al., 2016) [51,52].
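The test statistic can be sketched as follows, using the standard chi-square approximation based on the determinant of the correlation matrix. The function name `bartlett_sphericity` and the synthetic data are assumptions for illustration only; the study itself obtained these values from SPSS.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Return (chi-square statistic, degrees of freedom) for Bartlett's test."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    # slogdet is numerically safer than log(det(corr)) for near-singular matrices.
    _, log_det = np.linalg.slogdet(corr)
    chi_square = -(n - 1 - (2 * p + 5) / 6) * log_det
    dof = p * (p - 1) // 2
    return float(chi_square), dof


# Synthetic (hypothetical) data: four correlated indicators, 482 respondents.
rng = np.random.default_rng(0)
factor = rng.normal(size=(482, 1))
items = factor + 0.6 * rng.normal(size=(482, 4))
chi_square, dof = bartlett_sphericity(items)
print(f"chi-square = {chi_square:.1f}, df = {dof}")
```

The statistic is compared against a chi-square distribution with the returned degrees of freedom; a large value relative to that distribution rejects the identity-matrix null hypothesis.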

A construct can be valid only if it is reliable. Validity is not a pre-condition for reliability, but reliability is a pre-condition for validity. The reliability of a construct is measured by the variance shared among its indicators (their covariance), that is, by how strongly the indicators are interconnected or correlated with each other. Cronbach’s alpha measures reliability on this principle. A low Cronbach’s alpha value can occur because of the low interconnectedness of indicators, a low number of indicators representing the construct, or the heterogeneity of the construct. An alpha value of 0.7 or above is acceptable. All reported values were greater than 0.7 (Tavakol & Dennick, 2011) [53].
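The principle behind Cronbach’s alpha can be sketched in a few lines: it compares the sum of the individual indicator variances with the variance of the summed score. The response matrix below is hypothetical 5-point Likert data invented for this example, not data from the study.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for a (respondents x indicators) array of scores."""
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)
    total_variance = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - item_variances.sum() / total_variance))


# Hypothetical responses: six respondents, four indicators of one construct.
responses = np.array(
    [
        [4, 5, 4, 4],
        [3, 3, 4, 3],
        [5, 5, 5, 4],
        [2, 3, 2, 3],
        [4, 4, 5, 4],
        [3, 2, 3, 3],
    ],
    dtype=float,
)
alpha = cronbach_alpha(responses)
print(f"Cronbach's alpha = {alpha:.2f}")
```

When indicators move together across respondents, the variance of the summed score is large relative to the individual variances and alpha rises toward 1.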

4.3. Data Collection

This study collected data using Google Forms, a widely used survey tool. The questionnaire was uploaded to Google Forms, and the faculty helped send the link to the respondents via a messenger group. In collecting data, the study took steps to decrease social desirability bias, where students could give favorable answers to appease the faculty staff and the university. Accounting students have higher moral codes, which could discourage them from acting unethically (Nguyen & Dellaportas, 2021) [54]. The survey did not collect personally identifiable information, which could otherwise have led respondents to give answers more favorable than their actual perceptions (Grimm, 2010) [55]. The data gathered were summarized in Excel or CSV files for easier data analysis. The total number of officially enrolled respondents was obtained from the university registrar.

The questionnaire opened with a cover letter explaining the purpose and importance of the research. The researchers requested written permission from the respondents regarding the data to be gathered in the study. The university approved the ethics consent, and assent letters were shared with the respondents. All responses from the respondents were kept strictly confidential. Before implementing the research protocol, the researchers sought the respondents’ approval regarding the research objective, including the importance of their participation in the study. The data obtained in the online survey form were appropriately protected and archived upon completion of the study.

4.4. Data Analysis

The study used SPSS Version 29 to organize and analyze the data. SPSS was used for the descriptive analysis, the KMO test, Bartlett’s test of sphericity, and the Cronbach’s alpha computations. SPSS Amos Version 28 was used to conduct structural equation modeling (SEM) on the research data imported from SPSS Version 29.

As eight hypotheses are tested, there are arguments that testing multiple hypotheses, each at the 0.05 level of statistical significance, increases the chance of a Type 1 error, that is, rejecting a null hypothesis that is in fact true. The Bonferroni correction is proposed to remedy this by tightening the significance level in proportion to the number of hypotheses tested (Cribbie, 2007) [56]. There are several reasons for keeping the significance level intact. First, research studies in SEM presume that Type 1 error does not increase even though multiple hypotheses are tested. Second, the Bonferroni correction treats all hypotheses in the SEM model as a single family and holds the significance level for that family at 0.05. It therefore sets the significance level for each hypothesis extremely low, where inferences can defy common sense. For instance, with eight hypotheses tested, the significance level for each is set at 0.05/8 = 0.00625. Third, the Bonferroni correction decreases Type 1 error at the cost of increasing Type 2 error, that is, failing to reject a null hypothesis that is in fact false (Perneger, 1998) [57]. Hence, this study proceeds with conventional significance levels at the hypothesis level: 0.10 as weak, 0.05 as moderate, and 0.01 as strong.
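The arithmetic behind this trade-off can be sketched as follows. The familywise error rate shown assumes the eight tests are independent, which is a simplifying assumption of this example and does not hold strictly for paths estimated within one SEM.

```python
# Conventional per-hypothesis significance level and number of hypotheses tested.
alpha = 0.05
n_hypotheses = 8

# Bonferroni-adjusted significance level applied to each hypothesis.
bonferroni_alpha = alpha / n_hypotheses

# Chance of at least one Type 1 error across all tests if each is run at alpha
# (illustrative only: assumes independent tests).
familywise_error = 1 - (1 - alpha) ** n_hypotheses

print(f"Bonferroni level per hypothesis: {bonferroni_alpha}")
print(f"Familywise error rate without correction: {familywise_error:.3f}")
```

The sketch shows both sides of the argument: the uncorrected familywise rate is well above 0.05, while the corrected per-hypothesis level becomes very strict.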

5. Results and Discussion

5.1. Main Analysis

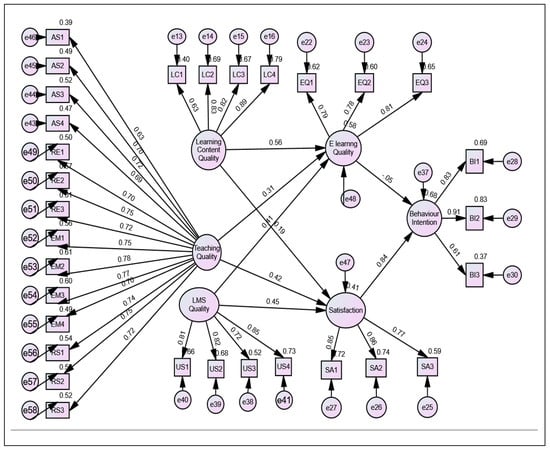

Figure 3 shows the graphical SEM output. The SEM achieved the minimum requirements and was statistically significant at the 0.01 level, with Chi-square = 1624.44 and degrees of freedom = 426 (i.e., 527 distinct sample moments − 101 distinct parameters to be estimated). Although the model achieved the minimum requirements, CMIN shows a statistical discrepancy between the SEM model and the underlying data structure because CMIN/DF = 3.8 at the 0.01 significance level. An inflated CMIN/DF is possible with a larger sample size of more than 250 observations. A value below three is excellent, and a value below five indicates that the hypothesized model can explain the data of this study (Marsh & Hocevar, 1985) [58].

Figure 3.

Structural Equation Model output with standardized regression coefficients.

The fit of the model to the data was investigated using fit indices. The Comparative Fit Index (CFI) was 0.9, the Tucker–Lewis Index (TLI) was approximately 0.9, and the Normed Fit Index (NFI) was 0.9, showing a good fit as they were close to 1. The root mean square error of approximation (RMSEA) was 0.08, which is close to zero, indicating that the square root of population misfit per degree of freedom was small (Hayashi et al., 2010, pp. 202–234) [59].
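The reported degrees of freedom, the chi-square to degrees-of-freedom ratio, and the RMSEA can be reproduced from the figures given above. The sketch below uses the common single-group RMSEA formula and the sample size of 482 stated in the abstract; that this is the exact formula applied by the software is an assumption of the example.

```python
import math

# Values reported for the full-sample model.
chi_square = 1624.44
sample_moments = 527
free_parameters = 101
n_respondents = 482  # sample size stated in the abstract

# Degrees of freedom: distinct sample moments minus parameters to be estimated.
dof = sample_moments - free_parameters

# Chi-square to degrees-of-freedom ratio (CMIN/DF).
cmin_df = chi_square / dof

# RMSEA (assumed formula): sqrt(max(chi2 - df, 0) / (df * (N - 1))).
rmsea = math.sqrt(max(chi_square - dof, 0.0) / (dof * (n_respondents - 1)))

print(f"df = {dof}, CMIN/DF = {cmin_df:.1f}, RMSEA = {rmsea:.2f}")
```

Under these assumptions the computed values round to the degrees of freedom, ratio, and RMSEA reported in the text.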

Table 3 shows the coefficient values for each indicator. Structural equation analysis conducted using maximum likelihood estimation showed that all relationships are positive and statistically significant, except for the negative relationship between e-learning quality and behavioral intention.

Table 3.

Standardized coefficients for the full sample.

The average variance extracted (AVE) was calculated manually because AMOS does not compute it automatically: the standardized loading of each indicator of a given construct was squared, and the squared values were averaged. AVE indicates the amount of variance captured by the construct rather than by measurement error in the maximum likelihood estimates of the structural equation model. The AVE was 0.54 for teaching quality, 0.65 for learning content quality, 0.65 for e-learning quality, 0.69 for satisfaction, 0.63 for behavioral intention, and 0.64 for LMS quality. All values were above 0.5, which showed acceptable convergent validity (Fornell & Larcker, 1981) [60]. The normality assessment of variables showed that kurtosis values were less than 5; the largest values reported were 1.4 and 1.1, and the remaining variables had values less than 1, which supported the use of the maximum likelihood technique for data analysis in the model (Bentler, 2005) [61].
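The manual AVE calculation described above amounts to averaging squared standardized loadings. A minimal sketch follows; the loadings are hypothetical values chosen for illustration and are not taken from Table 3.

```python
def average_variance_extracted(loadings: list[float]) -> float:
    """Mean of the squared standardized loadings of one construct's indicators."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)


# Hypothetical standardized loadings for a four-indicator construct.
hypothetical_loadings = [0.70, 0.75, 0.80, 0.72]
ave = average_variance_extracted(hypothetical_loadings)

# Convergent validity is commonly judged acceptable when AVE exceeds 0.5.
print(f"AVE = {ave:.2f}, acceptable = {ave > 0.5}")
```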

Figure 3 and Table 3 show that the indicators measured teaching quality well, with regression estimates of more than 0.5. However, the influence of teaching quality was 0.11 on e-learning quality and 0.45 on student satisfaction. The University adopted online learning for accounting students for the first time, and the low regression coefficients indicate room to improve teaching quality in the future, which would contribute to improving e-learning quality and student satisfaction. Previous research has proposed using pictures, diagrams, and other visual aids to decrease the intrinsic cognitive load in accounting learning. Learning with multiple representations of content, such as visual (animations and pictures), auditory, and textual, can assist in decreasing the intrinsic cognitive load that is otherwise embedded in learning accounting subjects (Sithole et al., 2017) [68].

The learning content quality indicators represented their construct well, with standardized coefficients over 0.5. Learning Content Quality had a positive influence of 0.56 on E-Learning Quality. However, its influence on student satisfaction was 0.19. Learning content was objectively appropriate to improve e-learning quality, but the subjective evaluation of students was that it did not satisfy them well. Multiple factors lead to student satisfaction, including behavioral, cognitive, and emotional aspects. Satisfaction results from online interaction, student acceptance of learning content efficacy, and student engagement with learning, all of which can be influenced by the social-cultural aspects of a country (Nia et al., 2023) [62].

With standardized coefficients over 0.5, the indicators represented the LMS quality construct well. LMS quality had a low positive influence of 0.11 on e-learning quality and a moderate positive influence of 0.45 on student satisfaction. The results indicate that LMS quality can be improved by making it more usable to students. Usability can be enhanced through access, ease of use provided to students, and whether the system has sufficient features to increase student engagement and interaction (Nasir et al., 2021) [63].

With standardized coefficients over 0.5, the indicators represented behavioral intention well. Student satisfaction strongly influenced students’ intention to adopt online learning on an ongoing basis, with a standardized coefficient of 0.84. Well-designed online learning lessons and strengthened digital infrastructure are two key aspects that can contribute to improving students’ behavioral intentions (Xu & Xue, 2023) [64].

5.2. Additional Analysis by Years of Study

Faculty staff teaching introductory accounting in the first year of student enrolment face several challenges. The first year has large student enrolments, and students join the accounting degree with different motivations. It is the year in which students study at the university for the first time and find a large volume of learning to be completed in a short time (Jones & Fields, 2001) [65]. Students perceive that introductory accounting degrees are more difficult than other degrees (Opdecam & Everaert, 2012) [66].

Table 4 shows the additional analysis conducted to investigate whether student perceptions regarding the influence of cognitive load on learning memory differed by student enrolment year. Year one comprised 80 students, year two comprised 195 students, and year three comprised 207 students. A sample size greater than 100 with no missing data is sufficient for confirmatory factor analysis (Ding et al., 1995) [67]. As year one, with 80 students, falls below this threshold, the enrolment year 1 output is reported with a reservation for interpretation.

Table 4.

Standardized coefficients by enrolment year.

The models for enrolment years 1, 2, and 3 achieved the minimum requirements and were statistically significant at the 0.01 level, each with degrees of freedom = 426 (i.e., 527 distinct sample moments − 101 distinct parameters to be estimated). The Chi-square value was 742.91 for enrolment year 1, 912.09 for enrolment year 2, and 1034.39 for enrolment year 3. The model fit measured by CMIN/DF for year 1 was 2.3, year 2 was 2.1, and year 3 was 2.4, which showed an excellent fit of data with the hypothesized model with values being less than 3 [58]. The kurtosis values of indicators and constructs were less than five for the enrolment years 1, 2, and 3 samples, supporting normality and the suitability of the maximum likelihood technique [61].

The estimated values shown in Table 4 showed similar patterns across the years. However, in enrolment year 1, the ELQ and TQ relationship and the TQ and student satisfaction relationship were not statistically significant. Accounting terminology is typically introduced in the first year, which can increase students’ cognitive load due to intrinsic load. Given that these data relate to the first implementation of online learning, the teaching faculty staff were yet to perfect the teaching techniques to decrease extraneous cognitive load for first-year students (Sithole et al., 2017) [68]. Presenting accounting with texts and diagrams along with numbers has been shown to increase student learning by decreasing extraneous cognitive load (Seedwell & Abeysekera, 2017, chapter 1) [69].

In all three study years, E-Learning Quality (ELQ) had no statistically significant influence on behavioral intentions (BI) to continue adopting online learning. Learning Content Quality had a weak, statistically positive influence on learner satisfaction in the enrolment cohort. All other relationships were positive and statistically significant at the 1% level.

6. Conclusions

In relation to CLT, the findings showed that H1a (teaching quality influence on e-learning quality) and H1b (teaching quality influence on student satisfaction) were supported. H2a (LMS quality on e-learning quality) and H2b (LMS quality on student satisfaction) were supported. H3a (learning content quality on e-learning quality) and H3b (learning content quality on student satisfaction) were supported.

In relation to the Human Memory System, H4 (e-learning quality on students’ behavioral intentions) was not supported. H5 (student satisfaction on students’ behavioral intentions) was supported. The previous Section discussed the implications for universities arising from the findings of the study. This Section highlights further implications of the findings, including public policy, theoretical, and methodological implications. In addition, this Section notes the study’s limitations and future research propositions.

6.1. Public Policy Implications

The Philippines comprises over 100 million people, with a substantially young population. It would be a huge benefit for the Philippines to make this population more productive with knowledge and skills to increase gross domestic product. Online education has opened up possibilities to reach a wider population to share knowledge and skills, and universities can play a leading role in reaching the rural poor. There is a strong positive correlation between education and poverty reduction in the Philippines. However, online education requires national infrastructure support with accessible Internet and cheaper computer hardware and software. COVID-19 highlighted the digital divide, with only 14% of students in poor households having access to a computer or tablet and 16% having access to the Internet (Republic of the Philippines, 2023) [70]. The Philippine government must consider narrowing the digital divide and promoting online education, especially degrees such as accounting offered by universities that can lead to skill-based employment. The Philippine government can partner with universities, the private sector, and bilateral and multilateral agencies to achieve quality education (SDG 4) for sustainable development, informed by social values such as increasing human dignity, equity, and efficiency. Three elements can enable the Philippine government and its partners to create the expected value by spending resources effectively: a clear policy analysis with a specific problem statement, such as increasing access to university education for the rural poor; an explicit policy analysis framework setting out the strategies and how to operationalize them to bridge the gap and reach set targets; and a bibliographic, evidence-based understanding of how to reach the targets (Vining & Weimer, 2005) [71].

6.2. Theoretical Implications

The COVID-19 period of online teaching provided important lessons for the university to reflect upon in order to continue online education and assist a greater student population with university education. Such offerings can decrease inequality (SDG 10) and improve quality education (SDG 4) toward sustainable development in the Philippines. The findings show that the university must reflect on how to decrease the intrinsic load in learning accounting. LMS quality can be improved to decrease extraneous cognitive load and improve e-learning quality and student satisfaction. These can help increase students’ working memory in learning, which can help to store their learning in short-term memory. The findings showed that student satisfaction strongly contributes to retaining their learning in the long-term memory. The results also showed that improving e-learning quality can substantially improve students’ long-term memory. The findings show that managing student cognitive load improves the student memory framework, with positive statistical coefficients.

6.3. Methodological Implications

The indicators used in the study to represent teaching quality, LMS quality, and learning content quality showed that they strongly represent the chosen constructs, as evidenced by high standardized coefficients. The questions related to them in the questionnaire can serve as indicators to test student cognitive load. The indicators used to measure e-learning quality, student satisfaction, and behavioral intentions strongly represent these constructs. They can methodologically serve to represent student memory system measurements during learning.

6.4. Limitations

First, most participants in the study were female, which bounds the generalizability of the findings. Although previous findings showed no clear preference for a single gender toward online learning, research evidence supports that female students are more perseverant, engaging, and self-regulated than male students. Male students use more learning strategies and have better technical skills (Yu, 2021) [72]. Other demographic factors such as student age, student prior knowledge about accounting, and personal and professional characteristics of the lecturer can influence the findings.

Second, the research was conducted at a single university in the Philippines that introduced online learning for the first time. The novelty of the pedagogical approach can influence the findings. Online teaching and learning have specific pedagogical knowledge contents and contexts related to designing and organizing better learning experiences. Online learning also requires creating digital environments that are different from face-to-face classroom learning environments. Perfecting these requires time and resources (Rapanta et al., 2020) [73]. In addition, the study was conducted during the COVID-19 period; students had no choice but to study online, and situational factors may have influenced their perceptions.

Third, this study investigated the online learning of accounting undergraduate students. The limited available evidence shows that the level of digital exposure can influence the propensity for online learning. In such situations, blended learning can provide additional support (Simonds & Brocks, 2014) [74]. Educational disciplines can be categorized as hard-pure, hard-applied, soft-pure, and soft-applied, and research has found notable differences in the usage of digital tools among these discipline categories (Smith et al., 2008) [75].

6.5. Future Research

COVID-19 provided important lessons for introducing and continuing online learning for universities. First, future research can overcome the limitations encountered in this study to make further contributions to online learning. The limitations suggest that a future study should investigate the effects of student age, prior accounting knowledge, and teacher characteristics on students’ short- and long-term learning memory.

Second, given that online education is now widely known and practiced, students’ perceptions may have changed since the COVID-19 period. A replication study can compare results to determine what changes have taken place in students’ perceptions of the cognitive load of online learning and its effect on learning memory. This study investigated the synchronous learning setting. However, asynchronous learning settings, where students can learn at their own pace through pre-recorded learning content, can provide flexibility and include learners who cannot commit to specific learning times. A hybrid setting, where pre-recorded learning content is followed by scheduled meetings for discussions with academic teaching staff, can further support learners (Dargahi et al., 2023; Giesbers et al., 2014) [35,36]. A future study could compare synchronous, asynchronous, and hybrid learning settings to investigate the effect of cognitive load on student learning memory.

Third, the findings showed that in the main model, teaching quality positively but moderately influenced e-learning quality and student satisfaction. As pointed out by the Cognitive Load Theory, inappropriate instructional formats can increase extraneous cognitive load, making it harder for students to learn. A contributing factor is the limited preparedness of faculty members to teach online (Saha et al., 2021) [76]. A future study should investigate the effectiveness of teaching materials used in online teaching to increase e-learning quality and student satisfaction.

Fourth, the findings showed that e-learning quality had no statistically significant influence on encouraging students to embrace online learning. A contributing factor could have been the difficulty of teaching practical syllabuses such as accounting and the lack of direct contact students have with the faculty staff (Stecuła & Wolniak, 2022) [77]. A future study can investigate the effects of work-integrated learning on student learning memory systems.

Fifth, in this study, the learning outcome descriptor was short- and long-term memory. However, there are various descriptors related to learning outcomes. Degree qualifications, university-generic learning expectations, and funders’ educational expectations can influence learning outcome descriptors (Gudeva et al., 2011) [78]. A future study could investigate the influence of student cognitive load on chosen learning outcome descriptors in online learning.

Sixth, the 1987 Philippine Constitution states that it will protect and promote quality education for all citizens at all levels and will take the necessary steps to achieve this. However, since its independence in 1946, the Philippines has faced formidable challenges in executing its agenda effectively. The economic disparity between the haves and have-nots is a major issue with widespread poverty, which keeps 16.7 million people below the poverty line. The government initiated the Pantawid Pamilyang Pilipino Programme (4Ps), which provides cash transfers to poor families and helps them with education expenses such as school supplies, uniforms, and transportation costs (Republic of the Philippines, 2023) [70]. Its effectiveness in helping citizens receive a university education is not well established. Future research can investigate the effectiveness of the 4Ps program in supporting SDG 4 of quality education.

Seventh, Filipinos spend more than nine hours a day online; 59.7% of screen time is on the phone, and 40.3% of time is on computers (Datareportal, 2023) [79]. Smartphones open up the possibility of reaching out to a greater population to take up university education such as undertaking accounting degree studies. ChatGPT can complement this with easily accessible knowledge, which has reset what is known, can be known, and is beyond knowledge. It has revised the benchmarks of pedagogy to ensure integrity in student learning (Abeysekera, 2024) [80]. These tools can help decrease educational institution costs and costs of student learning, increase education quality, and reduce social inequality. A future study can investigate using mobile phone applications as an LMS platform with knowledge along with the learning potentials offered by ChatGPT knowledge as an additional factor, and the effect of cognitive load on student learning memory.

Author Contributions

E.S., A.G. and R.D. made initial conceptualizations; E.S. and A.G. collected and investigated data; E.S., A.G. and R.D. analyzed data and wrote the initial draft; I.A. reconceptualized dataset and then revised and rewrote the entire manuscript until publication in the journal. E.S., A.G. and R.D. reviewed and approved the final rewritten manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This study received no external funding.

Institutional Review Board Statement

This study was conducted according to the Declaration of Helsinki for studies involving humans with the approval of Holy Angel University, the Philippines, received on 14 December 2020.

Informed Consent Statement

The study obtained informed consent from the participants.

Data Availability Statement

The data supporting this study’s findings and supplementary materials are available at https://doi.org/10.6084/m9.figshare.24759588.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Power, C. The Power of Education. In The Power of Education. Education in the Asia-Pacific Region: Issues, Concerns and Prospects; Springer: Singapore, 2015; Volume 27.

- Kromydas, T. Rethinking higher education and its relationship with social inequalities: Past knowledge, present state and future potential. Palgrave Commun. 2017, 3, 1.

- Chen, T.; Peng, L.; Yin, X.; Rong, J.; Yang, J.; Cong, G. Analysis of User Satisfaction with Online Education Platforms in China during the COVID-19 Pandemic. Healthcare 2020, 8, 200.

- Means, B.; Toyama, Y.; Murphy, R.; Bakia, M.; Jones, K. Evaluation of Evidence-Based Practices in On-Line Learning: A Meta-Analysis and Review of Online Learning Studies. 2009. Available online: http://repository.alt.ac.uk/id/eprint/629 (accessed on 15 January 2024).

- Dhawan, S. Online Learning: A Panacea in the Time of COVID-19 Crisis. J. Educ. Technol. Syst. 2020, 49, 5–22.

- Rotas, E.E.; Cahapay, M.B. Difficulties in Remote Learning: Voices of Philippine University Students in the Wake of COVID-19 Crisis. Asian J. Distance Educ. 2020, 15, 147–158.

- Mukhtar, K.; Javed, K.; Arooj, M.; Sethi, A. Advantages, Limitations and Recommendations for online learning during COVID-19 pandemic era. Pak. J. Med. Sci. 2020, 36, S27.

- Skulmowski, A.; Xu, K.M. Understanding Cognitive Load in Digital and Online Learning: A New Perspective on Extraneous Cognitive Load. Educ. Psychol. Rev. 2022, 34, 171–196.

- Barrot, J.S.; Llenares, I.I.; del Rosario, L.S. Students’ online learning challenges during the pandemic and how they cope with them: The case of the Philippines. Educ. Inf. Technol. 2021, 26, 7321–7338.

- Worldometer. Philippines Population. 2023. Available online: https://www.worldometers.info/world-population/philippines-population/ (accessed on 11 January 2024).

- Abeysekera, I.; Jebeile, S. Why Learners Found Transfer Pricing Difficult? Implications for Directors. J. Asian Financ. Econ. Bus. 2019, 6, 9–19.

- Blayney, P.; Kalyuga, S.; Sweller, J. The impact of complexity on the expertise reversal effect: Experimental evidence from testing accounting students. Educ. Psychol. 2016, 36, 1868–1885.

- Shabeeb, M.A.; Sobaih, A.E.E.; Elshaer, I.A. Examining Learning Experience and Satisfaction of Accounting Students in Higher Education before and amid COVID-19. Int. J. Environ. Res. Public Health 2022, 19, 16164.

- CHED Memo. No. 4. 2020. Available online: https://ched.gov.ph/wp-content/uploads/CMO-No.-4-s.-2020-Guidelines-on-the-Implementation-of-Flexible-Learning.pdf (accessed on 13 January 2024).

- Davis, F.D. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Q. 1989, 13, 319–340.

- Song, L.; Singleton, E.S.; Hill, J.R.; Koh, M.H. Improving online learning: Student perceptions of useful and challenging characteristics. Internet High. Educ. 2004, 7, 59–70.

- Almahasees, Z.; Mohsen, K.; Amin, M.O. Faculty’s and Students’ perceptions of Online Learning during COVID-19. Front. Educ. 2021, 6, 638470.

- Cronin, J.J.; Taylor, S.A. Measuring Service Quality: A Reexamination and Extension. J. Mark. 1992, 56, 55–68.

- Zalat, M.M.; Hamed, M.S.; Bolbol, S.A. The experiences, challenges, and acceptance of e-learning as a tool for teaching during the COVID-19 pandemic among university medical staff. PLoS ONE 2021, 16, e0248758.

- Almahasees, Z.; Jaccomard, H. Facebook translation service (FTS) usage among jordanians during COVID-19 lockdown. Adv. Sci. Technol. Eng. Syst. 2020, 5, 514–519.

- Pokhrel, S.; Chhetri, R. A Literature Review on Impact of COVID-19 Pandemic on Teaching and Learning. High. Educ. Future 2021, 8, 133–141.

- Zhang, H.; Wu, C.; Zhang, Z.; Lin, H.; Zhang, Z.; Sun, Y.; Mueller, J.; Manmatha, R.; Li, M.; et al. ResNeSt: Split-Attention Networks. 2022. Available online: https://openaccess.thecvf.com/content/CVPR2022W/ECV/papers/Zhang_ResNeSt_Split-Attention_Networks_CVPRW_2022_paper.pdf (accessed on 13 January 2024).

- Ehlers, U.D.; Pawlowski, J.M. Quality in European e-learning: An introduction. In Handbook on Quality and Standardisation in E-Learning; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar] [CrossRef]

- Al-Salman, S.; Haider, A.S. Jordanian University Students’ Views on Emergency Online Learning During COVID-19. Online Learn. 2021, 25, 286–302. [Google Scholar] [CrossRef]

- Coman, C.; Țîru, L.G.; Meseșan-Schmitz, L.; Stanciu, C.; Bularca, M.C. Online Teaching and Learning in Higher Education during the Coronavirus Pandemic: Students’ Perspective. Sustainability 2020, 12, 10367. [Google Scholar] [CrossRef]

- Elashry, R.S.; Brittler, M.C.; Saber, E.-H.; Ahmed, F.A. Adolescents’ Perceptions and Academic Stress towards Online Learning during COVID-19 Pandemic. Assiut Sci. Nurs. J. 2021, 9, 68–81. [Google Scholar] [CrossRef]

- Asif, M.; Khan, M.A.; Habib, S. Students’ Perception towards New Face of Education during This Unprecedented Phase of COVID-19 Outbreak: An Empirical Study of Higher Educational Institutions in Saudi Arabia. Eur. J. Investig. Health Psychol. Educ. 2022, 12, 835–853. [Google Scholar] [CrossRef]

- Fox, J.; Griffith, J.; Smith, A.M. Exploring the Efficacy of an Online Training Programme to Introduce Mental Health Recovery to Carers. Community Ment Health J. 2023, 59, 1193–1207. [Google Scholar] [CrossRef]

- Elalouf, A.; Edelman, A.; Sever, D.; Cohen, S.; Ovadia, R.; Agami, O.; Shayhet, Y. Students’ Perception and Performance Regarding Structured Query Language Through Online and Face-to-Face Learning. Front. Educ. 2022, 7, 935997. [Google Scholar] [CrossRef]

- Dubey, P.; Pradhan, R.L.; Sahu, K.K. Underlying factors of student engagement to E-learning. J. Res. Innov. Teach. Learn. 2023, 16, 17–36. [Google Scholar] [CrossRef]

- Sumilong, M.J. Learner Reticence at the Time of the Pandemic: Examining Filipino Students’Communication Behaviors in Remote Learning. Br. J. Teach. Educ. Pedagog. 2022, 1, 1–13. [Google Scholar] [CrossRef]

- Alammary, A.S. How to decide the proportion of online to face-to-face components of a blended course? A Delphi Study. SAGE Open 2022, 12, 21582440221138448.

- Assadi, N.; Kashkosh, E. Training Teachers’ Perspectives on Teacher Training and Distance Learning During the COVID-19 Pandemic. J. Educ. Soc. Res. 2022, 12, 40–55.

- Daher, W.; Shayeb, S.; Jaber, R.; Dawood, I.; Abo Mokh, A.; Saqer, K.; Bsharat, M.; Rabbaa, M. Task design for online learning: The case of middle school mathematics and science teachers. Front. Educ. 2023, 8, 1161112.

- Giesbers, B.; Rienties, B.; Tempelaar, D.; Gijselaers, W. A dynamic analysis of the interplay between asynchronous and synchronous communication in online learning: The impact of motivation. J. Comput. Assist. Learn. 2014, 30, 30–50.

- Dardahi, H.; Kooshkebaghi, M.; Mireshghollah, M. Learner satisfaction with synchronous and asynchronous virtual learning systems during the COVID-19 pandemic in Tehran university of medical sciences: A comparative analysis. BMC Med. Educ. 2023, 23, 886.

- Sweller, J.; Ayres, P.; Kalyuga, S. Cognitive Load Theory; Springer: New York, NY, USA, 2011.

- Chan, R.Y. Understanding the Purpose of Higher Education: An Analysis of the Economic and Social Benefits for Completing a College Degree. JEPPA 2016, 6, 1–40. Available online: https://scholar.harvard.edu/files/roychan/files/chan_r._y._2016._understanding_the_purpose_aim_function_of_higher_education._jeppa_65_1-40.pdf (accessed on 15 January 2024).

- Jiang, D.; Kalyuga, S. Confirmatory factor analysis of cognitive load ratings supports a two-factor model. Tutor. Quant. Methods Psychol. 2020, 16, 216–225.

- Sweller, J.; van Merriënboer, J.J.; Paas, F. Cognitive architecture and instructional design: 20 years later. Educ. Psychol. Rev. 2019, 31, 261–292.

- Atkinson, R.C.; Shiffrin, R.M. Human Memory: A Proposed System and its Control Processes. Psychol. Learn. Motiv. 1968, 2, 89–195.

- Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956, 63, 81–97.

- Nee, D.E.; Berman, M.G.; Moore, K.S.; Jonides, J. Neuroscientific Evidence About the Distinction Between Short- and Long-Term Memory. Curr. Dir. Psychol. Sci. 2008, 17, 102–106.

- Baddeley, A. The episodic buffer: A new component of working memory? Trends Cogn. Sci. 2000, 4, 417–423.

- Frederiksen, J.G.; Sørensen, S.M.D.; Konge, L.; Svendsen, M.B.S.; Nobel-Jørgensen, M.; Bjerrum, F.; Andersen, S.A.W. Cognitive load and performance in immersive virtual reality versus conventional virtual reality simulation training of laparoscopic surgery: A randomized trial. Surg. Endosc. 2020, 34, 1244–1252.

- Theresiawati, S.H.B.; Hidayanto, A.N.; Abidin, Z. Variables Affecting E-Learning Services Quality in Indonesian Higher Education: Students’ Perspectives. J. Inf. Technol. Educ. Res. 2020, 19, 259–286.

- Udo, G.J.; Bagchi, K.K.; Kirs, P.J. Using SERVQUAL to assess the quality of e-learning experience. Comput. Hum. Behav. 2011, 27, 1272–1283.

- Uppal, M.A.; Ali, S.; Gulliver, S.R. Factors determining e-learning service quality. Br. J. Educ. Technol. 2018, 49, 412–426.

- Hudjashov, G.; Karafet, T.M.; Lawson, D.J.; Downey, S.; Savina, O.; Sudoyo, H.; Lansing, J.S.; Hammer, M.F.; Cox, M.P. Complex Patterns of Admixture across the Indonesian Archipelago. Mol. Biol. Evol. 2017, 34, 2439–2452.

- Shkeer, A.S.; Awang, Z. Exploring the Items for Measuring the Marketing Information System Construct: An Exploratory Factor Analysis. Int. Rev. Manag. Mark. 2019, 9, 87–97.

- Chatzopoulos, A.; Kalogiannakis, M.; Papadakis, S.; Papoutsidakis, M. A Novel, Modular Robot for Educational Robotics Developed Using Action Research Evaluated on Technology Acceptance Model. Educ. Sci. 2022, 12, 274.

- Rossoni, L.; Engelbert, R.; Bellegard, N.L. Normal science and its tools: Reviewing the effects of exploratory factor analysis in management. Rev. Adm. 2016, 51, 198–211.

- Tavakol, M.; Dennick, R. Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2011, 2, 53–55.

- Nguyen, A.L.; Dellaportas, S. Accounting Ethics Education Research: A Historical Review of the Literature. In Accounting Ethics Education Teaching Virtues and Values; Routledge: New York, NY, USA, 2021; Volume 3, pp. 44–80.

- Grimm, P. Social desirability bias. In Wiley International Encyclopedia of Marketing; Sheth, J.N., Malhotra, N.K., Eds.; Wiley Sons and Limited: Hoboken, NJ, USA, 2010.

- Cribbie, R.A. Multiplicity control in structural equation modelling. Struct. Equ. Model. A Multidiscip. J. 2007, 14, 98–112.

- Perneger, T.V. What’s Wrong with Bonferroni Adjustments. BMJ Br. Med. J. 1998, 316, 1236–1238.

- Marsh, H.W.; Hocevar, D. Application of confirmatory factor analysis to the study of self-concept: First- and higher order factor models and their invariance across groups. Psychol. Bull. 1985, 97, 562–582.

- Hayashi, K.; Bentler, P.M.; Yuan, K.-H. Structural Equation Modeling. In Essential Statistical Methods for Medical Statistics, Handbook of Statistics; Elsevier: Amsterdam, The Netherlands, 2011; Volume 27, pp. 202–234.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Bentler, P.M. Structural Equations Program Manual; EQS 6; Multivariate Software: Encino, CA, USA, 2006.

- Nia, H.S.; Marôco, J.; She, L.; Fomani, F.K.; Rahmatpour, P.; Ilic, I.S.; Ibrahim, M.M.; Ibrahim, F.M.; Narula, S.; Esposito, G.; et al. Student satisfaction and academic efficacy during online learning with the mediating effect of student engagement: A multi-country study. PLoS ONE 2023, 18, e0285315.

- Nasir, F.D.M.; Hussain, M.A.M.; Mohamed, H.; Mokhtar, M.; Karim, N.A. Student Satisfaction in Using a Learning Management System (LMS) for Blended Learning Courses for Tertiary Education. Asian J. Univ. Educ. 2021, 17, 442–454.

- Xu, T.; Xue, L. Satisfaction with online education among students, faculty, and parents before and after the COVID-19 outbreak: Evidence from a meta-analysis. Front. Psychol. 2023, 14, 1128034.

- Jones, J.P.; Fields, K.T. The role of supplemental instruction in the first accounting course. Issues Account. Educ. 2001, 16, 531–547.

- Opdecam, E.; Everaert, P. Improving student satisfaction in a first-year undergraduate accounting course by team learning. Issues Account. Educ. 2011, 27, 53–82.

- Ding, L.; Velicer, W.F.; Harlow, L. Effects of Estimation Methods, Number of Indicators Per Factor, and Improper Solutions on Structural Equation Modeling Fit Indices. Struct. Equ. Model. A Multidisciplinary J. 1995, 2, 119–143.

- Sithole, S.; Chandler, P.; Abeysekera, I.; Paas, F. Benefits of Guided Self-Management of Attention on Learning Accounting. J. Educ. Psychol. 2017, 109, 220–232.

- Sithole, S.T.M.; Abeysekera, I. Accounting Education: Cognitive Load Theory Perspective; Routledge: New York, NY, USA, 2017.

- Republic of the Philippines. Educational Challenges in the Philippines. Philippine Institute for Development Studies. 2023. Available online: https://pids.gov.ph/details/news/in-the-news/educational-challenges-in-the-philippines (accessed on 15 January 2024).

- Vining, A.R.; Weimer, D.L. The P-Case. In Thinking Like a Policy Analyst; Geva-May, I., Ed.; Palgrave Macmillan: New York, NY, USA, 2005; pp. 153–170.

- Yu, Z. The effects of gender, educational level, and personality on online learning outcomes during the COVID-19 pandemic. Int. J. Educ. Technol. High. Educ. 2021, 18, 14.

- Rapanta, C.; Botturi, L.; Goodyear, P.; Guardia, L.; Koole, M. Online University Teaching During and After the COVID-19 Crisis: Refocusing Teacher Presence and Learning Activity. Postdigit. Sci. Educ. 2020, 2, 923–945.

- Simonds, T.A.; Brocks, B.L. Relationship between Age, Experience, and Student Preference for Types of Learning Activities in Online Courses. 2014. Available online: https://files.eric.ed.gov/fulltext/EJ1020106.pdf (accessed on 15 January 2024).

- Smith, G.; Heindel, A.J.; Torres-Ayala, A.T. E-learning commodity or community: Disciplinary differences between online courses. Internet High. Educ. 2008, 11, 152–159.

- Saha, S.M.; Pranty, S.A.; Rana, M.J.; Islam, M.J.; Hossain, M.E. Teaching during a pandemic: Do university teachers prefer online teaching? Heliyon 2021, 8, e08663.

- Stecuła, K.; Wolniak, R. Advantages and Disadvantages of E-Learning Innovations during COVID-19 Pandemic in Higher Education in Poland. J. Open Innov. Technol. Mark. Complex. 2022, 8, 159.

- Gudeva, L.K.; Dimova, V.; Daskalovska, N.; Trajkova, F. Designing Descriptors of Learning Outcomes for Higher Education Qualification. Procedia—Soc. Behav. Sci. 2011, 46, 1306–1311.

- Datareportal. Digital 2023: Global Overview Report. 2023. Available online: https://datareportal.com/reports/digital-2023-global-overview-report (accessed on 15 January 2024).

- Abeysekera, I. ChatGPT and academia on accounting assessments. J. Open Innov. Technol. Mark. Complex. 2024, 10, 100213.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).