Real-Time 3D Reconstruction for the Conservation of the Great Wall’s Cultural Heritage Using Depth Cameras

Abstract

1. Introduction

2. Literature Review

2.1. Main Technologies for Architectural Heritage Data Collection

2.2. Challenges in the Collection of Architectural Heritage Information

3. Methodology

3.1. Overall Framework

3.2. Process of Modules

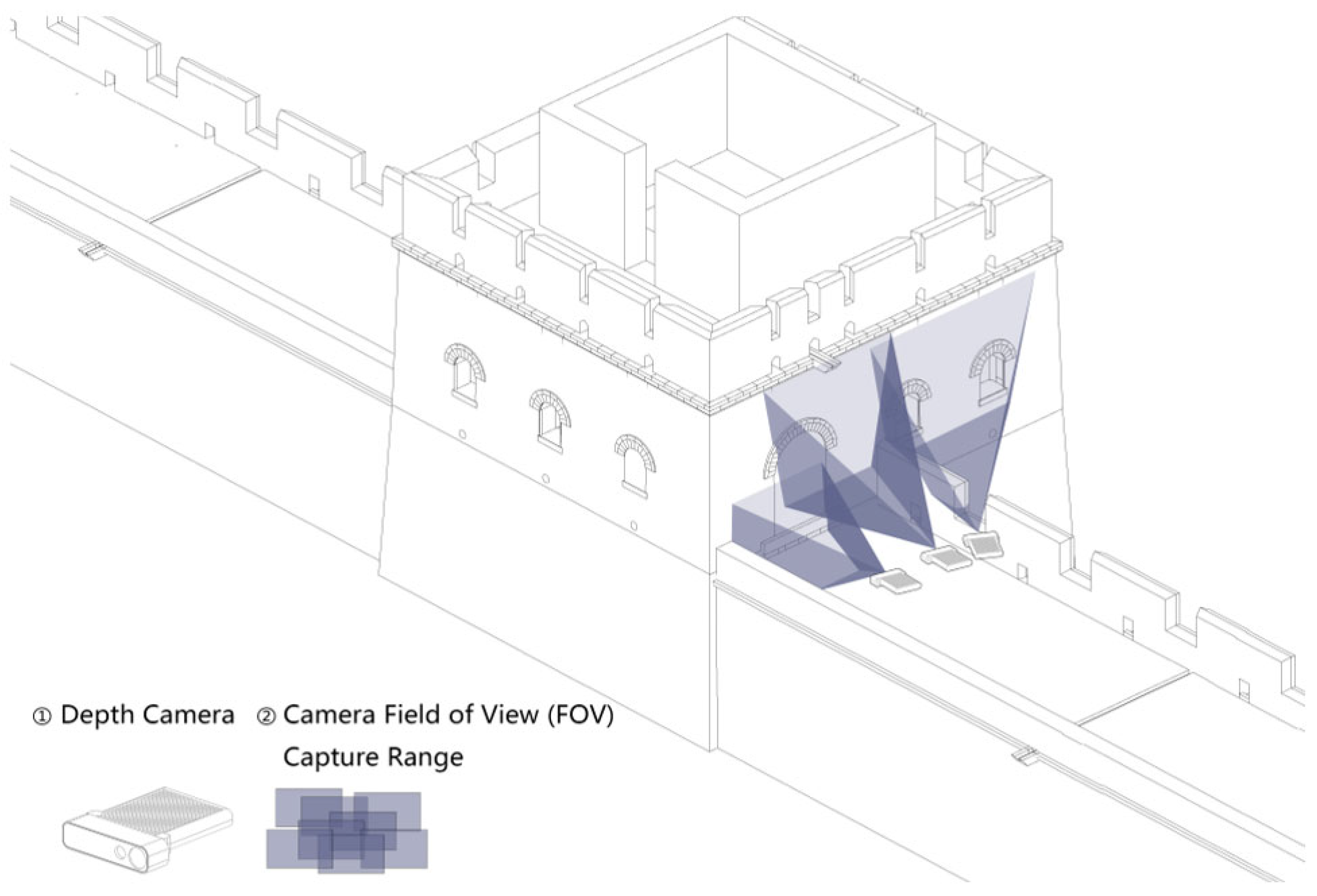

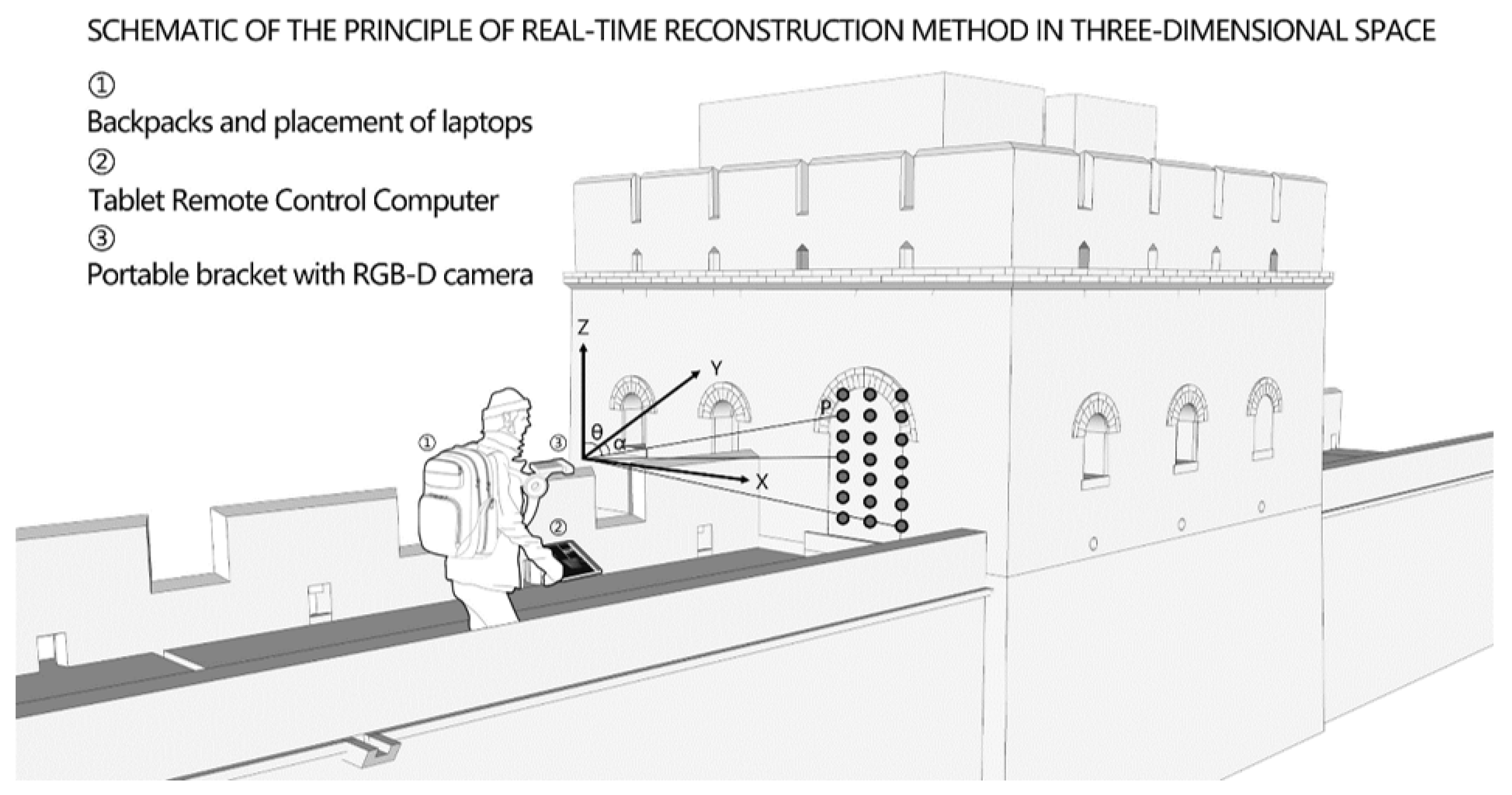

3.2.1. Adjust and Fix the Angle of the Depth Camera

3.2.2. Select Traditional Building Areas to Be Examined

3.2.3. Plan Regional Collection Routes

3.2.4. Collecting Target Ground and Building Facades along Set Routes and Obtaining Site Point Cloud Models

3.3. Derivation of Point Cloud Model Reconstruction

- Feature Extraction and Matching: To obtain modality-invariant features from the RGB images and depth maps based on the protection and repair requirements of ancient buildings, SIFT (Scale-Invariant Feature Transform) is adopted for geometric feature extraction [19]. Given a set of RGB images and depth maps $\{(I_i, D_i)\}_{i=1}^{N}$, where $I_i$ and $D_i$ denote the $i$-th RGB image and depth map, and $N$ is the number of image frames, the RGB features $F^{I} = \{f^{I}_j\}_{j=1}^{M}$ and depth features $F^{D} = \{f^{D}_j\}_{j=1}^{M}$ are extracted using the SIFT algorithm. $M$ denotes the number of feature points in this set and $M = N \times m$, where $m$ denotes the number of feature points in each image. After obtaining the feature points of the RGB and depth images, we calculate the nearest point for each feature point to achieve cross-modal feature registration. The feature registration can be defined as minimizing the expected distance:

  $$\min \; \frac{1}{M} \sum_{j=1}^{M} \left\lVert f^{I}_j - \hat{f}^{D}_j \right\rVert_2$$

  where $\lVert \cdot \rVert_2$ denotes the L2 norm used as the distance metric, and $\hat{f}^{D}_j$ represents the matched depth feature point of the RGB feature point $f^{I}_j$.

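- Pose Estimation: After the correspondences are obtained from feature matching, the PnP (Perspective-n-Point) algorithm is adopted for the pose estimation of image frames [20]. The objective is to estimate the camera pose $T_i$ of each image frame. Specifically, the estimated poses are calculated by minimizing the re-projection error given the depth points (3D points) and their corresponding RGB pixels (2D points):

  $$T_i^{*} = \arg\min_{T_i} \sum_{j} \left\lVert p_j - \pi\!\left(K \, T_i \, \hat{P}_j\right) \right\rVert_2^{2}$$

  where $p_j$ is the RGB pixel of the RGB feature point $f^{I}_j$, $P_j$ is the depth point of the depth feature point $f^{D}_j$, and $\hat{P}_j$ is the nearest point of $p_j$ ranked by feature matching. Here $K$ is the camera intrinsic matrix and $\pi(\cdot)$ is the perspective division that maps a homogeneous image point to pixel coordinates. For each RGB pixel $p_j = (u_j, v_j)$ with depth value $d_j$, the corresponding 3D point can be calculated as $P_j = d_j \, K^{-1} \, [u_j, v_j, 1]^{\top}$.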

- Optimization and Mapping: To refine the estimated poses and rebuild the spatial geometric structure of the target scenes, an optimization and mapping module is adopted. The series of poses is refined using the Bundle Adjustment algorithm [21], which minimizes the projection errors between the re-projected pixels and the 3D points. For the mapping stage, the TSDF (truncated signed distance function) integration algorithm is used for 3D reconstruction [22]. The TSDF value at the 3D point $x$ is updated as follows:

  $$\mathrm{TSDF}(x) \leftarrow \frac{W_{\mathrm{old}}(x) \, \mathrm{TSDF}(x) + W_{\mathrm{new}}(x) \, d(x)}{W_{\mathrm{old}}(x) + W_{\mathrm{new}}(x)}$$

  where $\mathrm{TSDF}(x)$ denotes the signed distance function, which represents the distance from point $x$ to the nearest surface point $s$; $W_{\mathrm{old}}(x)$ and $W_{\mathrm{new}}(x)$ denote the old and new weights for reconstruction, respectively; and $d(x)$ is the new measurement of the signed distance at point $x$.
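The three steps in the list above (nearest-neighbour matching under an L2 distance, the re-projection error that PnP and Bundle Adjustment minimize, and the weighted TSDF update) can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names, the pinhole intrinsics `fx, fy, cx, cy`, the unit measurement weight, and the truncation distance `trunc` are all assumptions made for illustration. The sketch only evaluates the re-projection objective for a given pose; it does not solve for the pose.

```python
import math

def match_nearest(rgb_desc, depth_desc):
    """For each RGB descriptor, return the index of the closest depth descriptor (L2 distance)."""
    matches = []
    for f in rgb_desc:
        dists = [math.dist(f, g) for g in depth_desc]
        matches.append(dists.index(min(dists)))
    return matches

def back_project(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with metric depth to a 3D point in the camera frame (pinhole model)."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

def project(point, fx, fy, cx, cy):
    """Project a 3D camera-frame point to pixel coordinates (pinhole model)."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

def reprojection_error(pixels, points, R, t, intr):
    """Mean squared pixel distance between observed pixels and 3D points projected under pose (R, t)."""
    total = 0.0
    for (u, v), p in zip(pixels, points):
        # Apply the rigid transform R * p + t, with R given as a 3x3 nested list.
        pc = tuple(sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
        pu, pv = project(pc, *intr)
        total += (u - pu) ** 2 + (v - pv) ** 2
    return total / len(pixels)

def tsdf_update(tsdf_old, w_old, sdf_new, w_new=1.0, trunc=0.1):
    """Weighted running average of the truncated signed distance stored at one voxel."""
    d = max(-trunc, min(trunc, sdf_new))  # clamp the new measurement to [-trunc, trunc]
    w = w_old + w_new
    return (w_old * tsdf_old + w_new * d) / w, w

if __name__ == "__main__":
    intr = (500.0, 500.0, 320.0, 240.0)           # hypothetical fx, fy, cx, cy
    pixels = [(420.0, 240.0), (320.0, 340.0)]
    points = [back_project(u, v, 2.0, *intr) for u, v in pixels]
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(match_nearest([(0, 0), (5, 5)], [(4, 4), (1, 0)]))
    print(reprojection_error(pixels, points, identity, (0, 0, 0), intr))
    print(reprojection_error(pixels, points, identity, (0.1, 0, 0), intr))
    print(tsdf_update(0.05, 1.0, 0.03))
```

With the identity pose the re-projection error is (numerically) zero, and shifting the pose makes it grow, which is the quantity a PnP solver or Bundle Adjustment would drive down. In practice these steps would normally be delegated to an established computer-vision library rather than written by hand.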

4. Experiments and Analysis

4.1. Experimental Settings

4.1.1. Area for Data Collection

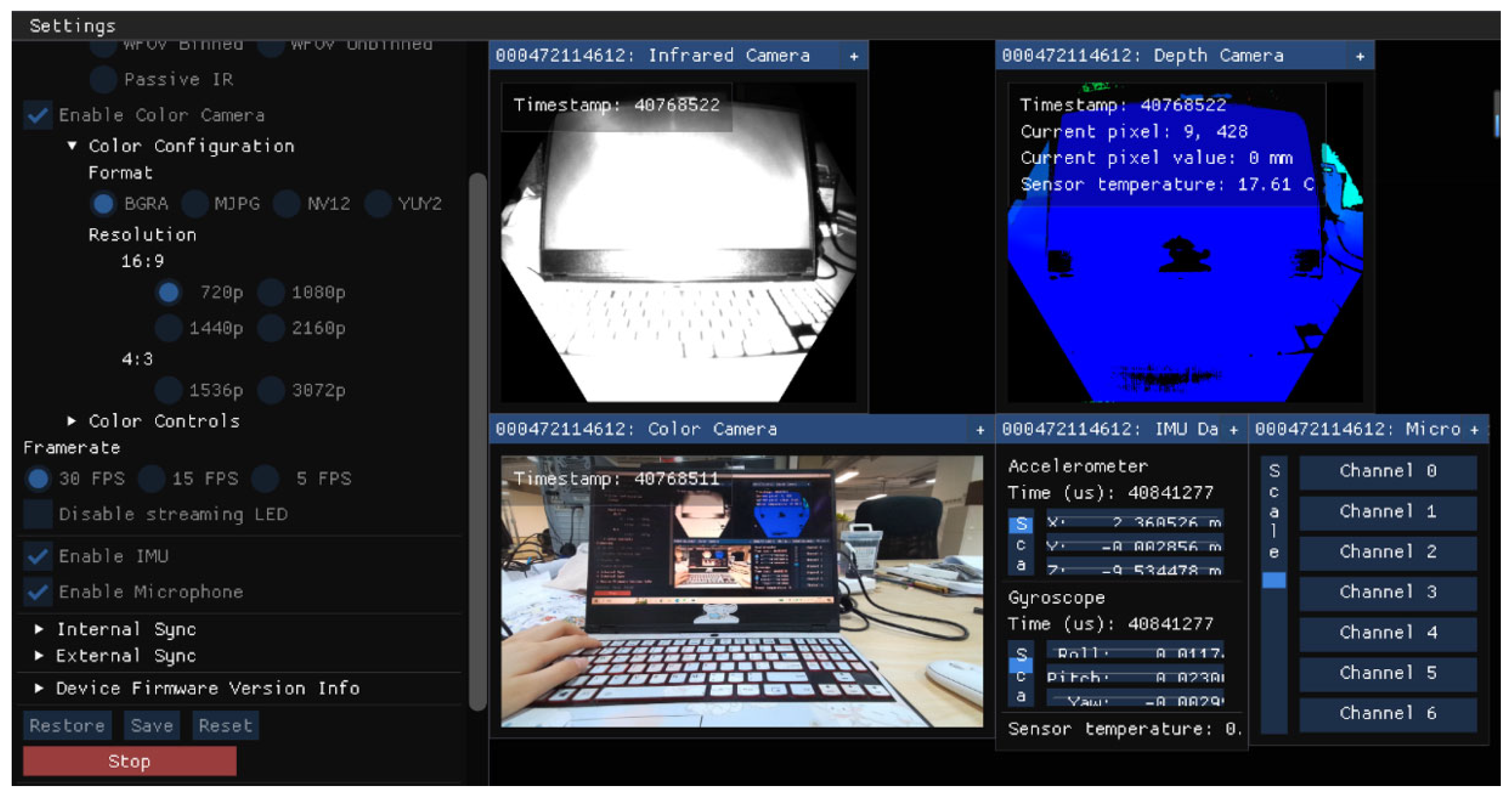

4.1.2. Data Preprocessing for the Proposed Method

4.2. Experimental Results and Analysis

4.3. Outlook and Comparison

4.3.1. Safety Information Monitoring of Building Heritage

4.3.2. Assisted Architectural Heritage Repair and Rehabilitation

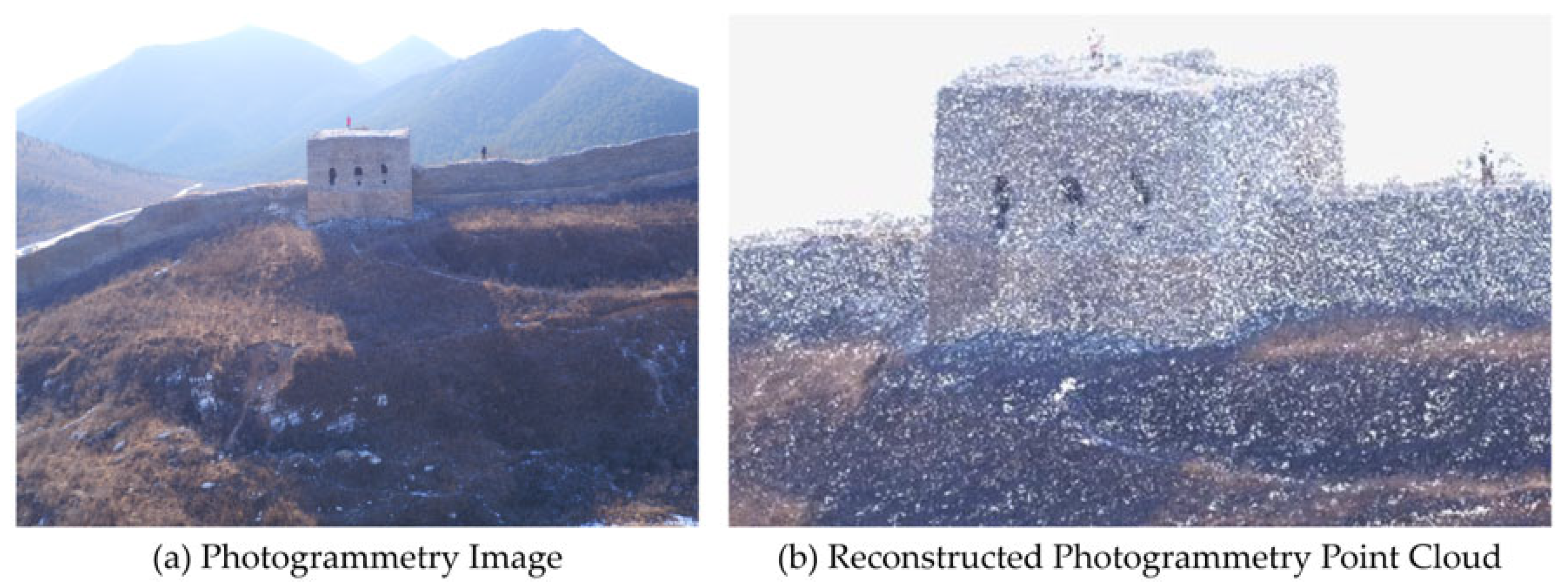

4.3.3. Comparison with Photogrammetry-Based Method

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, L.; Li, C.; Ruan, C. Application of 3D Laser Scanning Technology in Building Elevation Surveying and Mapping. Urban Geotech. Investig. Surv. 2023, 1, 144–147.

- Ali, S.; Omar, N.; Abujayyab, S. Investigation of the accuracy of surveying and buildings with the pulse (non prism) total station. Int. J. Adv. Res. 2016, 4, 1518–1528.

- Thoeni, K.; Giacomini, A.; Murtagh, R.; Kniest, E. A comparison of multi-view 3D reconstruction of a rock wall using several cameras and a laser scanner. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 40, 573–580.

- Zhao, Z.H.; Sun, H.; Zhang, N.X.; Xing, T.H.; Cui, G.H.; Lai, J.X.; Liu, T.; Bai, Y.B.; He, H.J. Application of unmanned aerial vehicle tilt photography technology in geological hazard investigation in China. Nat. Hazards 2024, 6, 1–32.

- Lerma, J.L.; Navarro, S.; Cabrelles, M.; Seguí, A.E.; Haddad, N.; Akasheh, T. Integration of laser scanning and imagery for photorealistic 3D architectural documentation. In Laser Scanning, Theory and Applications; IntechOpen: London, UK, 2011; pp. 414–430.

- Fais, S.; Cuccuru, F.; Ligas, P.; Casula, G.; Bianchi, M.G. Integrated ultrasonic, laser scanning and petrographical characterisation of carbonate building materials on an architectural structure of a historic building. Bull. Eng. Geol. Environ. 2017, 76, 71–84.

- Zeng, P.; Liu, C.L. Research and Engineering Practice of Building Elevation Measurement Method Combining 3D Laser Scanning and Total Station. Urban Geotech. Investig. Surv. 2018, 168, 139–142.

- Sharma, O.; Arora, N.; Sagar, H. Image Acquisition for High Quality Architectural Reconstruction. Graph. Interface 2019, 18, 1–9.

- Gao, G. Study on the application of multi-angle imaging related technology in the construction process. Appl. Math. Nonlinear Sci. 2024, 9, 3.

- Sun, Z.; Cao, Y.; Zhang, Y. Applications of Image-based Modeling in Architectural Heritage Surveying. Res. Herit. Preserv. 2018, 3, 30–36.

- Wang, W.; Peng, F.; Li, J.; Chen, S. Research on 3D Reconstruction and Utilization of Historic Buildings Based on Air-Ground Integration Surveying Method: A Case of the Former Residence of Kim Koo on Chaozong Street in Changsha. Urban. Archit. 2022, 19, 135–142.

- Liu, C.; Zeng, J.; Zhang, S. True 3D Modelling Towards a Special-shaped Building Unit by Unmanned Aerial Vehicle with a Single Camera. J. Tongji Univ. Nat. Sci. 2018, 46, 550–556.

- He, Y.; Chen, P.; Su, Z. Ancient Buildings Reconstruction based on 3D Laser Scanning and UAV Tilt Photography. Remote Sens. Technol. Appl. 2019, 34, 1343–1352.

- Li, R. Mobile mapping: An emerging technology for spatial data acquisition. Photogramm. Eng. Remote Sens. 1997, 63, 1085–1092.

- Liu, K. Application of 3D Laser Geometric Information Acquisition Based on the Protection and Repair Requirements of Ancient Buildings. Ph.D. Thesis, Beijing University of Technology, Beijing, China, 2019.

- Murtiyoso, A.; Grussenmeyer, P.; Suwardhi, D. Technical considerations in Low-Cost heritage documentation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 225–232.

- Krátký, V.; Petráček, P.; Nascimento, T.; Čadilová, M.; Škobrtal, M.; Stoudek, P.; Saska, M. Safe documentation of historical monuments by an autonomous unmanned aerial vehicle. ISPRS Int. J. Geo Inf. 2021, 10, 738.

- Zhang, Q. 3D Reconstruction of Large Scene Based on Kinect. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2018.

- Ng, P.C.; Henikoff, S. SIFT: Predicting amino acid changes that affect protein function. Nucleic Acids Res. 2003, 31, 3812–3814.

- Zheng, Y.; Kuang, Y.; Sugimoto, S.; Astrom, K.; Okutomi, M. Revisiting the PnP problem: A fast, general and optimal solution. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 2–8 December 2013; pp. 2344–2351.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In Proceedings of Vision Algorithms: Theory and Practice: International Workshop on Vision Algorithms, Corfu, Greece, 21–22 September 1999; pp. 298–372.

- Shi, L.; Hou, M.; Hu, Y. Research on 3D Representation Method of Ancient Architecture Based on Point Cloud Data and BIM. Res. Herit. Preserv. 2018, 3, 46–52.

- Vizzo, I.; Guadagnino, T.; Behley, J.; Stachniss, C. VDBFusion: Flexible and efficient TSDF integration of range sensor data. Sensors 2022, 22, 1296.

- Li, Z.; Huang, S.; Zhang, M.; Li, Y. The Method of Rapid Acquisition of Facade Image and Intelligent Retrieval of the Decoration Category of Traditional Chinese Rural Dwellings: A Case Study on the Decorative Style of the Residential Entrance of Liukeng Village. Zhuangshi 2019, 16–20.

- Wang, B.; Xu, X. Beijing Diming Dian; China Federation of Literary and Art Circles Publishing Corporation: Beijing, China, 2001.

- Zhang, Y.; Zhang, Y.; Chen, X. Research on Key Technology of 3D Laser Scanning in Surveying and Mapping of Ancient Buildings. Archit. J. 2013, S2, 29–33.

- Wu, Y.; Zhang, Y. Survey on the Standard of the Application of 3D Laser Scanning Technique on the Cultural Heritage’s Conservation. Res. Herit. Preserv. 2016, 1, 1–5.

- Sun, Z.; Cao, Y. Accuracy Evaluation of Architectural Heritage Surveying from Photogrammetry Based on Consumer–Level UAV–Born Images: Case Study of the Auspicious Multi-door Stupa. Herit. Archit. 2017, 4, 120–127.

| Aspect | Properties | Prism-Free Total Station Measurement Technology | Ground Close-Up Multi-View Photography Technology | UAV Tilt Photography Technology |
|---|---|---|---|---|
| Data acquisition cost | Operation cost | USD 600–4000 | USD 800–5000 | USD 1000–10,000 |
| | | Lower | Low | High |
| | Portability | Poor | Good | Good |
| | Acquisition time | Long | Short | Short |
| | Modeling time | Long | Long | Short |
| Mapping spatial location | Roof | Inconvenient collection | Convenient collection | Convenient collection |
| | Indoor | Inconvenient collection | Inconvenient collection | Inconvenient collection |
| Mapping environmental requirements | Dependence on distance | Dependent | Independent | Independent |
| | Dependence on light | Dependent | Dependent | Independent |
| | Dependence on weather | Dependent | Dependent | Dependent |
| Data error analysis | 3D information | Indirect acquisition | Indirect acquisition | Direct acquisition |
| | Accuracy | Millimeter-level | Large error | Centimeter-level |
| | Source of error | Limited by the laser beam; ranging of corners or dark objects is not ideal | Complexity of scene structure, image overlap rate | Layout of image control points, image quality, image overlap, and flight height |
| | Details | General | Good | Good |
| | Material | No | Yes | Yes |

| Data Bag | Data Acquisition Location | Data Acquisition Range (m) | Data Acquisition Time (s) | Reconstruction Time (s) | Reconstruction Speed (s/m) |
|---|---|---|---|---|---|
| 1 | Nankou Town | 3.5 | 2.8 | 11.3 | 3.23 |
| 2 | Mutianyu | 7.5 | 6.0 | 25.4 | 3.39 |
| 3 | Shuiguan | 9.5 | 7.6 | 30.9 | 3.25 |
| 4 | Xuliukou | 10.5 | 8.4 | 34.2 | 3.26 |
| 5 | Juyongguan | 15.5 | 12.4 | 50.4 | 3.25 |
| Total (overall speed) | - | 46.5 | 37.2 | 152.2 | 3.27 |
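The reconstruction-speed column in the table above can be reproduced with a few lines of arithmetic. The sketch below assumes, consistently with the tabulated values, that speed is reconstruction time divided by acquisition range; the names `BAGS` and `reconstruction_speed` are illustrative. It also shows that the figures in the last row for range, acquisition time, and reconstruction time are sums over the five data bags, and that 3.27 s/m is the total reconstruction time divided by the total range.

```python
# (location, range in m, acquisition time in s, reconstruction time in s), copied from the table
BAGS = [
    ("Nankou Town", 3.5, 2.8, 11.3),
    ("Mutianyu", 7.5, 6.0, 25.4),
    ("Shuiguan", 9.5, 7.6, 30.9),
    ("Xuliukou", 10.5, 8.4, 34.2),
    ("Juyongguan", 15.5, 12.4, 50.4),
]

def reconstruction_speed(recon_time_s, range_m):
    """Seconds of reconstruction needed per metre of acquired range."""
    return recon_time_s / range_m

# Per-bag speed, rounded to two decimals as in the table.
per_bag = {name: round(reconstruction_speed(t, r), 2) for name, r, _, t in BAGS}

# Last row: sums of range and reconstruction time, and the speed implied by them.
total_range = sum(r for _, r, _, _ in BAGS)
total_recon = sum(t for _, _, _, t in BAGS)
overall = round(reconstruction_speed(total_recon, total_range), 2)

if __name__ == "__main__":
    for name, speed in per_bag.items():
        print(f"{name}: {speed} s/m")
    print(f"overall: {overall} s/m")  # 3.27
```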

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, L.; Xu, Y.; Rao, Z.; Gao, W. Real-Time 3D Reconstruction for the Conservation of the Great Wall’s Cultural Heritage Using Depth Cameras. Sustainability 2024, 16, 7024. https://doi.org/10.3390/su16167024
