Unveiling Genetic Reinforcement Learning (GRLA) and Hybrid Attention-Enhanced Gated Recurrent Unit with Random Forest (HAGRU-RF) for Energy-Efficient Containerized Data Centers Empowered by Solar Energy and AI

Abstract

1. Introduction

2. Background and Related Works

2.1. Energy Consumption in Data Centers

- IT Equipment Energy Consumption: Approximately 40% of the total energy consumption in a data center can be attributed to the operation of IT equipment, encompassing servers, networks, and storage infrastructure [2,7,11]. The continuous operation and efficient functioning of these components are essential for providing the computational and networking services that data centers offer [4,5,6,7].

- Cooling and Conditioning Systems: A further portion, roughly 45% to 50% of total energy usage, is dedicated to cooling and conditioning systems [6,7]. These systems are indispensable for maintaining appropriate temperature and humidity levels, which ensures the optimal performance and longevity of the IT equipment [7,11]. High-density servers and networks generate considerable heat, necessitating robust cooling mechanisms [10,11].
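Read together, the two shares leave roughly 10% to 15% of the total for other loads (for example, power distribution losses and lighting). The 40% IT share also corresponds to a power usage effectiveness (PUE, total facility energy divided by IT equipment energy) of about 2.5. A worked check, treating the quoted percentages as exact:

```latex
\begin{aligned}
\text{share}_{\text{other}} &\in \left[\,1 - 0.40 - 0.50,\; 1 - 0.40 - 0.45\,\right] = \left[\,0.10,\; 0.15\,\right],\\
\mathrm{PUE} &= \frac{E_{\text{total}}}{E_{\text{IT}}} \approx \frac{1}{0.40} = 2.5 .
\end{aligned}
```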

2.2. Solar Energy

3. Materials and Methods

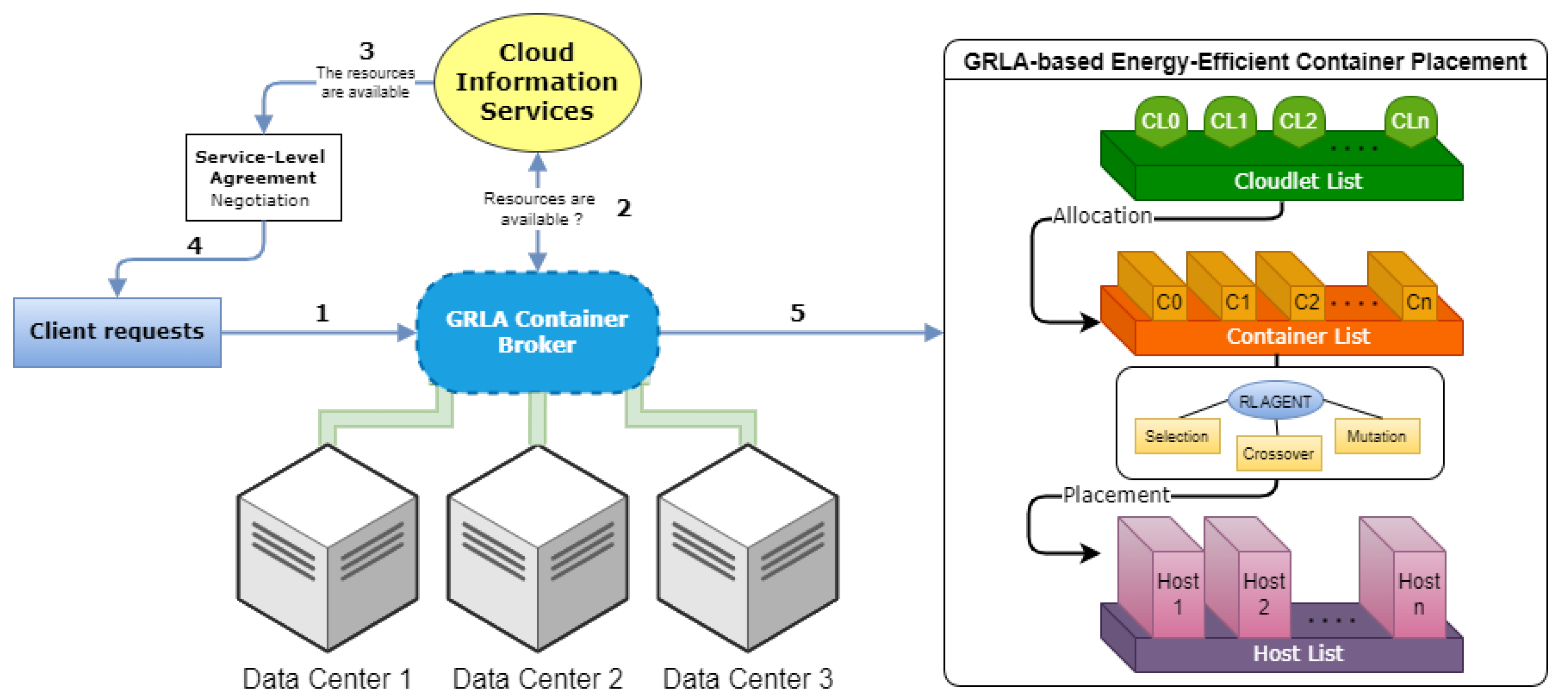

3.1. GRLA-Based Energy-Efficient Container Placement

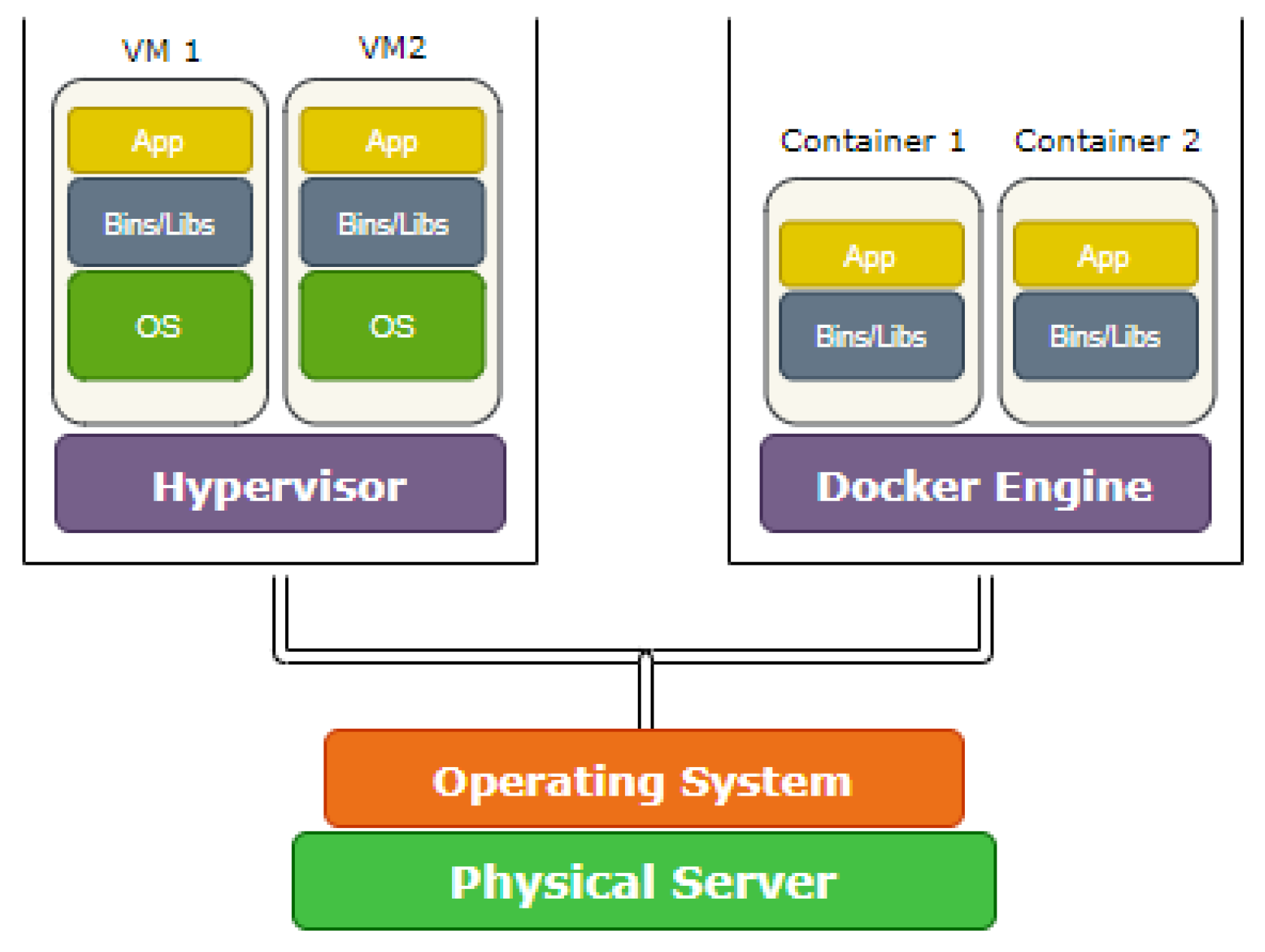

3.1.1. Containerized Data Centers

3.1.2. Genetic Reinforcement Learning Algorithm (GRLA)

Genetic Algorithm

Reinforcement Learning

Problem Modeling

- Every container must be allocated to a host, so that no container remains unassigned at the end of the allocation process.

- Each container must be uniquely assigned to a single host, preventing multiple hosts from containing the same container simultaneously.

- The cumulative resource usage (RAM, CPU, BW, and storage) of the containers hosted on any given server must not exceed 80% of that server's capacity. The remaining 20% is reserved for cloud users' task operations so that the server runs without performance issues.
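The three constraints can be checked mechanically for any candidate placement. The sketch below assumes a placement is a dict mapping container IDs to host IDs, and that every container and host is described by a dict with `ram`, `cpu`, `bw`, and `storage` entries in consistent units. These names and the function `is_feasible` are illustrative, not taken from the authors' code.

```python
from typing import Dict

# Resource dimensions named in the constraints: RAM, CPU, BW, and storage.
RESOURCES = ("ram", "cpu", "bw", "storage")
CAPACITY_CAP = 0.80  # containers may use at most 80% of each host resource


def is_feasible(placement: Dict[str, str],
                containers: Dict[str, Dict[str, float]],
                hosts: Dict[str, Dict[str, float]]) -> bool:
    """Return True if `placement` (container ID -> host ID) meets all three constraints."""
    # Constraint 1: every container is placed, and no unknown container appears.
    if set(placement) != set(containers):
        return False
    if any(hid not in hosts for hid in placement.values()):
        return False
    # Constraint 2 holds by construction: a dict maps each container to exactly one host.
    # Constraint 3: cumulative usage per host and per resource stays within 80% of capacity.
    usage = {hid: {r: 0.0 for r in RESOURCES} for hid in hosts}
    for cid, hid in placement.items():
        for r in RESOURCES:
            usage[hid][r] += containers[cid][r]
    return all(usage[hid][r] <= CAPACITY_CAP * hosts[hid][r]
               for hid in hosts for r in RESOURCES)
```

For example, with a host of 64 CPUs, at most 51 single-CPU containers fit, because 52 would exceed 0.8 × 64 = 51.2.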

Integration of RL with GA in GRLA

- Selection Phase: The selection phase is modified to incorporate the decisions of the RL agent. Based on the RL agent’s actions, the selection pressure is adjusted to favor individuals with higher fitness values, as indicated in Algorithm 1, promoting the selection of more promising solutions.

| Algorithm 1: Selection Phase with RL |

Require: Population P, RL agent
Ensure: Selected individuals
1: Initialize an empty list of selected individuals
2: for each individual I in population P do
3: Calculate the fitness score of I based on the RL agent's learned policy
4: Store the fitness score for individual I
5: end for
6: Sort the individuals in P by fitness score in descending order
7: for i from 1 to the number of parents to select do
8: Add the i-th individual to the list of selected individuals
9: end for
10: return the list of selected individuals as the selected parents
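Steps 1 to 10 of Algorithm 1 amount to truncation selection on a learned score. In the minimal sketch below, `score_fn` is a hypothetical stand-in for the fitness score derived from the RL agent's policy. Any callable that returns a float works, and the function name `select_parents` is illustrative.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def select_parents(population: Sequence[T],
                   score_fn: Callable[[T], float],
                   num_parents: int) -> List[T]:
    """Truncation selection following the steps of Algorithm 1."""
    # Steps 1-5: compute and store a score for every individual (keep its index).
    scored = [(score_fn(ind), idx) for idx, ind in enumerate(population)]
    # Step 6: sort by score, highest first; the index breaks ties deterministically.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Steps 7-10: return the first `num_parents` individuals.
    return [population[idx] for _, idx in scored[:num_parents]]
```

For instance, with placements encoded as lists of host indices and `score_fn = lambda p: -len(set(p))` (fewer active hosts scores higher), the consolidated placement `[0, 0, 0]` is selected first.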

- Crossover Phase: The crossover phase is adapted to reflect the influence of the RL agent. The RL agent can adjust the crossover probability or select appropriate crossover strategies based on its learned policy, leading to more effective genetic recombination, as presented in Algorithm 2.

| Algorithm 2: Crossover Phase with RL |

Require: Population P, crossover case, RL agent
Ensure: Crossover offspring
1: Initialize an empty offspring map
2: Define an RL agent with an action space and a policy
3: for each individual I in population P do
4: Compute the state S based on the current population and crossover parameters
5: Select an action A using the RL agent based on state S
6: Adjust the crossover parameters (e.g., crossover probability) based on action A
7: Divide I into two parts based on the adjusted crossover parameters
8: Assign the first and second parts to parents 1 and 2, respectively
9: end for
10: return the offspring map as the offspring resulting from crossover
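Below is a minimal sketch of RL-adjusted crossover. It assumes a tabular epsilon-greedy agent that picks the crossover probability from a small discrete action set. This agent is a simple stand-in chosen for illustration, and the RL method inside GRLA may differ. A placement is encoded as a list whose i-th entry is the host index of container i. The operator is standard one-point crossover between pairs of parents, not a line-by-line transcription of Algorithm 2. `EpsilonGreedyAgent` and `rl_crossover` are hypothetical names.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Placement = List[int]  # placement[i] = index of the host running container i


class EpsilonGreedyAgent:
    """Tabular epsilon-greedy agent over a discrete set of parameter values."""

    def __init__(self, actions: Sequence[float], epsilon: float = 0.1, seed: int = 0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.q: Dict[Tuple[Hashable, int], float] = defaultdict(float)
        self.n: Dict[Tuple[Hashable, int], int] = defaultdict(int)

    def act(self, state: Hashable) -> int:
        # Explore with probability epsilon; otherwise exploit the best-known action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.actions))
        return max(range(len(self.actions)), key=lambda a: self.q[(state, a)])

    def update(self, state: Hashable, action: int, reward: float) -> None:
        # Incremental average of the rewards observed for (state, action).
        key = (state, action)
        self.n[key] += 1
        self.q[key] += (reward - self.q[key]) / self.n[key]


def rl_crossover(parents: List[Placement], agent: EpsilonGreedyAgent,
                 state: Hashable, rng: random.Random) -> Tuple[List[Placement], int]:
    """One-point crossover whose probability is chosen by the agent."""
    action = agent.act(state)
    p_cross = agent.actions[action]
    offspring: List[Placement] = []
    for a, b in zip(parents[0::2], parents[1::2]):
        if len(a) > 1 and rng.random() < p_cross:
            cut = rng.randrange(1, len(a))
            offspring += [a[:cut] + b[cut:], b[:cut] + a[cut:]]
        else:
            offspring += [a[:], b[:]]
    if len(parents) % 2:  # an unpaired last parent is copied unchanged
        offspring.append(parents[-1][:])
    return offspring, action
```

After evaluating the offspring, the caller would compute a reward (for example, the fitness improvement) and call `agent.update(state, action, reward)`, so that probabilities which help recombination are chosen more often.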

- Mutation Phase: Similarly, the mutation phase integrates the decisions of the RL agent, as shown in Algorithm 3. The RL agent can adapt the mutation rate or mutation strategies based on the current state of the GA, facilitating the exploration of diverse solution spaces.

| Algorithm 3: Mutation Phase with RL |

Require: Crossover offspring, host list, container list, RL agent
Ensure: Mutated offspring
1: Initialize an empty map of mutated offspring
2: Define an RL agent with an action space and a policy
3: for each offspring O in the crossover offspring do
4: Compute the state S based on the current offspring and mutation parameters
5: Select an action A using the RL agent based on state S
6: Adjust the mutation parameters (e.g., mutation probability) based on action A
7: for each container C in offspring O do
8: Compute a container-level state based on the current container C and the mutation parameters
9: Select a container-level action using the RL agent based on that state
10: Adjust the mutation parameters (e.g., container migration) based on that action
11: end for
12: Apply mutation operations with the adjusted parameters to modify O
13: Add the mutated offspring to the map
14: end for
15: return the map as the mutated offspring resulting from mutation
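Container migration is the mutation move named in Algorithm 3. The sketch below implements it for the list encoding used above (entry i is the host of container i). The per-container migration `rate` is the parameter that the RL agent would adjust; the agent itself is not modeled here. The bias toward hosts that are already active (`prefer_active`) is an assumption of this sketch, added to push the search toward consolidation. It is not a detail of the published algorithm, and `migration_mutation` is a hypothetical name.

```python
import random
from typing import List, Sequence


def migration_mutation(placement: Sequence[int], num_hosts: int, rate: float,
                       rng: random.Random, prefer_active: float = 0.8) -> List[int]:
    """Reassign each container with probability `rate`; the input is not modified."""
    child = list(placement)
    for i in range(len(child)):
        if rng.random() < rate:
            # Other hosts that currently run at least one container.
            others = sorted(set(child) - {child[i]})
            if others and rng.random() < prefer_active:
                child[i] = rng.choice(others)        # consolidate onto an active host
            else:
                child[i] = rng.randrange(num_hosts)  # any host, possibly the same one
    return child
```

A rate of 0 returns an unchanged copy. Higher rates explore more aggressively, which is the trade-off an RL policy over `rate` would learn to balance.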

| Algorithm 4: GRLA for Energy-Efficient Container Placement |

Require: List of hosts, list of containers
Ensure: Optimized container placement
1: Initialize the population, best solutions, workable solutions, and crossover and mutation maps
2: Initialize the list of workable solutions and a temporary solution list
3: Compute the characteristics of the hosts and containers
4: if CheckHostContResources(hostList, ContList) then
5: Compute the threshold and check whether minimization is possible
6: if Check_Minim_Placement(hostList, ContList, threshold) then
7: Print constraint 3 violation message
8: else
9: Generate the initial population
10: for i from 0 to the number of generations do
11: Perform selection on the initial population using RL-based fitness evaluation
12: Identify workable solutions among the best solutions using RL-based evaluation
13: Store the workable solutions in a list
14: if crossover case 1 applies then
15: Perform crossover with strategy 1, guided by RL
16: else if crossover case 2 applies then
17: Perform crossover with strategy 2, guided by RL
18: else
19: Perform crossover with strategy 3, guided by RL
20: end if
21: Perform mutation on the crossover offspring, with RL-based decisions
22: Generate a new generation from the best solutions and the mutated offspring using RL guidance
23: Update the initial population with the new generation
24: end for
25: end if
26: else
27: Print resource constraint 3 violation message
28: end if
29: return Optimized container placement
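The sketch below shows how the pieces of Algorithm 4 could fit together. It is compact and self-contained but rests on several simplifying assumptions:

- a single resource dimension instead of four;
- fitness equal to the negative number of active hosts, with a penalty for breaking the 80% capacity rule;
- truncation selection that keeps the better half;
- a stateless epsilon-greedy bandit, standing in for the RL agent, that chooses among the three crossover strategies of steps 14 to 19. One-point, two-point, and uniform crossover are illustrative choices.

The bandit's reward is the improvement in the best fitness found so far. None of these choices is taken from the authors' implementation. Two-point crossover as written needs at least three containers.

```python
import random
from typing import Callable, List, Sequence

Placement = List[int]  # placement[i] = host index of container i


def feasible(p: Placement, demand: Sequence[float], capacity: Sequence[float],
             cap: float = 0.8) -> bool:
    # Single-resource version of the 80% capacity constraint.
    load = [0.0] * len(capacity)
    for c, h in enumerate(p):
        load[h] += demand[c]
    return all(l <= cap * k for l, k in zip(load, capacity))


def fitness(p: Placement, demand, capacity) -> float:
    # Fewer active hosts is better; any infeasible placement scores below every feasible one.
    penalty = 0 if feasible(p, demand, capacity) else len(capacity)
    return -(len(set(p)) + penalty)


def one_point(a, b, rng):
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def two_point(a, b, rng):
    i, j = sorted(rng.sample(range(1, len(a)), 2))  # requires len(a) >= 3
    return a[:i] + b[i:j] + a[j:]


def uniform(a, b, rng):
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


STRATEGIES: List[Callable] = [one_point, two_point, uniform]


def grla_sketch(demand, capacity, pop_size=30, generations=200,
                mutation_rate=0.05, epsilon=0.2, seed=0) -> Placement:
    rng = random.Random(seed)
    n, m = len(demand), len(capacity)
    pop = [[rng.randrange(m) for _ in range(n)] for _ in range(pop_size)]
    q = [0.0] * len(STRATEGIES)   # running mean reward per crossover strategy
    counts = [0] * len(STRATEGIES)

    def score(p):
        return fitness(p, demand, capacity)

    best = max(pop, key=score)
    for _ in range(generations):
        # Selection: keep the better half (elitist truncation selection).
        pop.sort(key=score, reverse=True)
        parents = pop[: pop_size // 2]
        # Epsilon-greedy choice among the three crossover strategies.
        if rng.random() < epsilon:
            s = rng.randrange(len(STRATEGIES))
        else:
            s = max(range(len(STRATEGIES)), key=q.__getitem__)
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = STRATEGIES[s](a, b, rng)
            # Mutation: migrate each container to a random host with a small probability.
            child = [rng.randrange(m) if rng.random() < mutation_rate else h for h in child]
            children.append(child)
        pop = parents + children
        gen_best = max(pop, key=score)
        reward = score(gen_best) - score(best)  # improvement over the best so far
        counts[s] += 1
        q[s] += (reward - q[s]) / counts[s]
        if reward > 0:
            best = gen_best
    return best
```

Calling `grla_sketch([1.0] * 8, [10.0] * 8)` searches placements of eight unit-demand containers on eight hosts. With a seed fixed, the result is reproducible.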

3.2. Solar Panel Energy Forecasting with AI

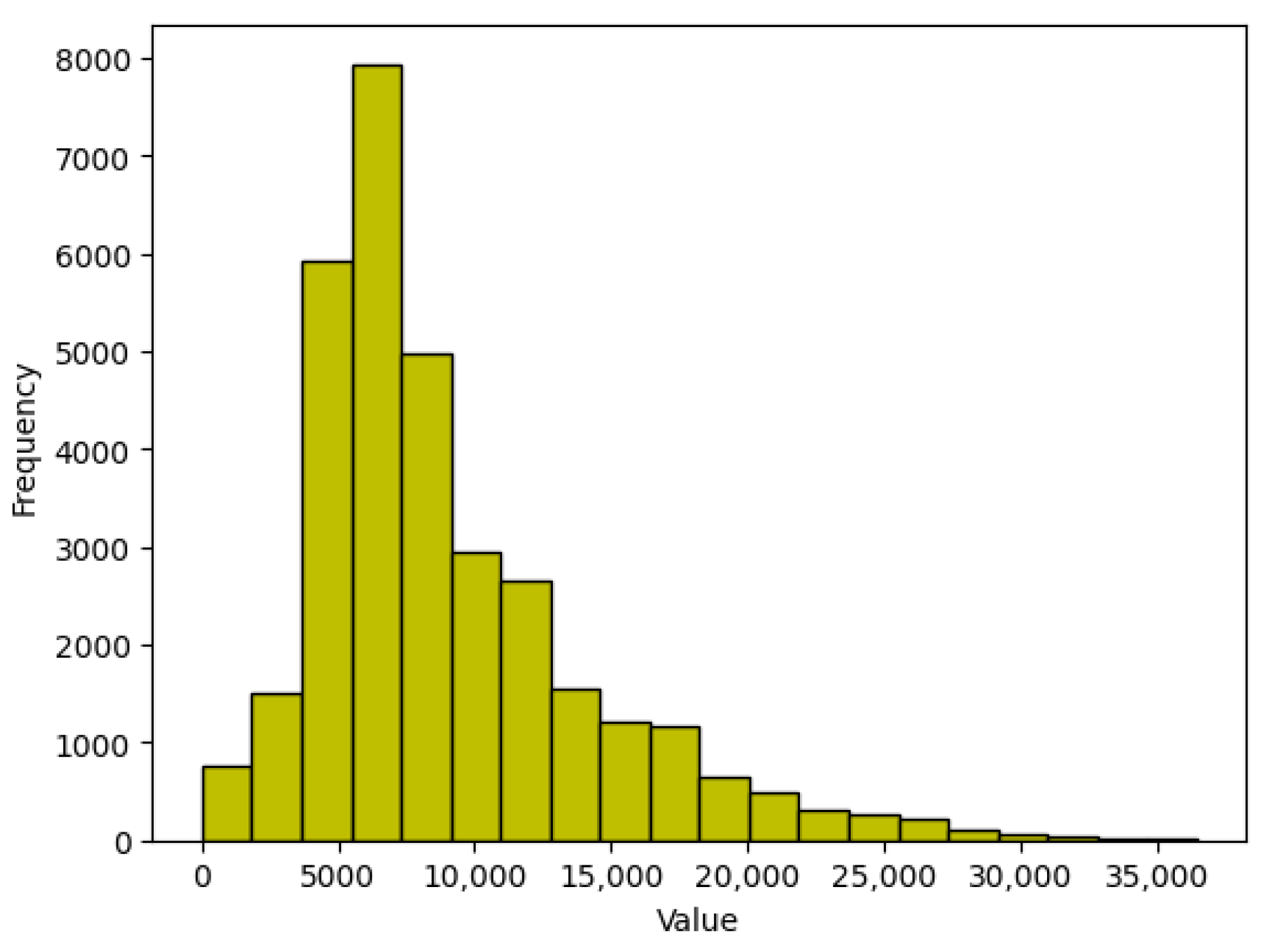

3.2.1. Dataset

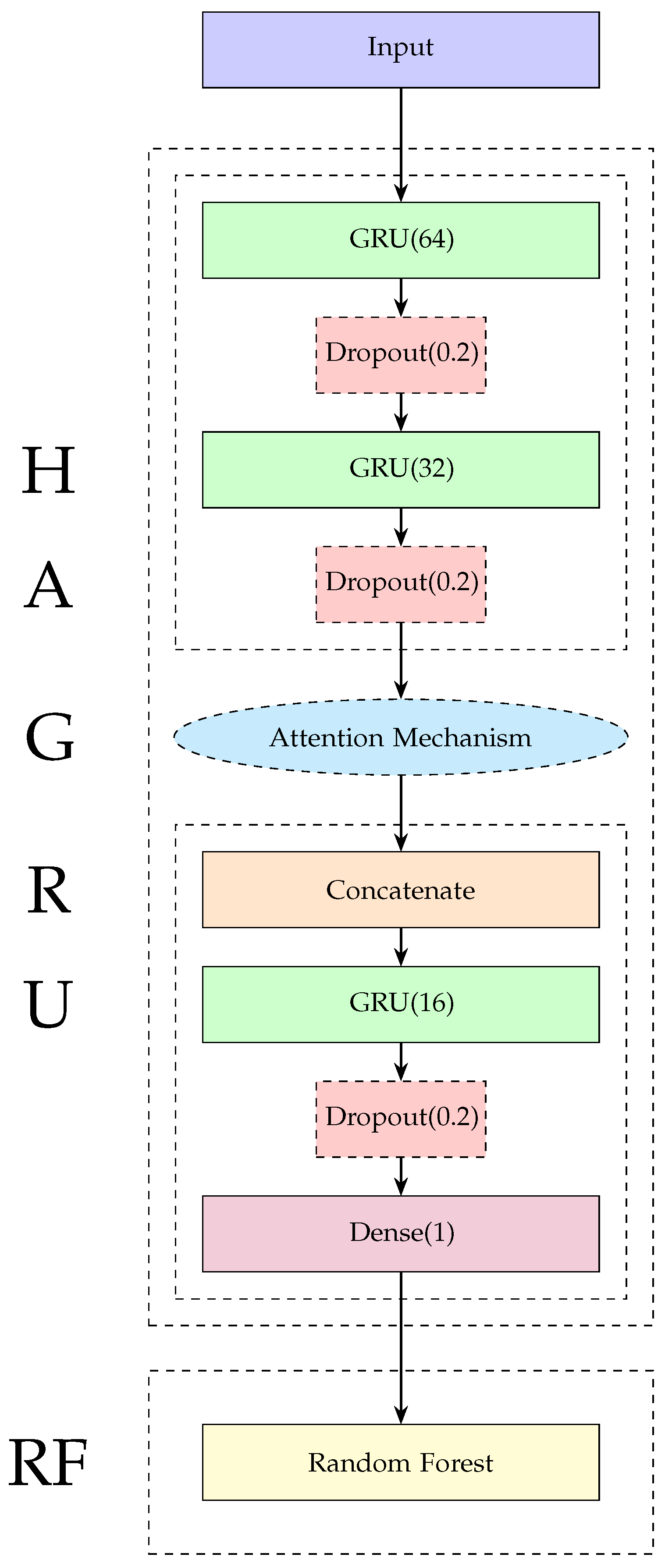

3.2.2. HAGRU-RF-Based Forecasting Model

Gated Recurrent Unit (GRU)

Self-Attention Mechanism
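As a minimal, dependency-free sketch of the self-attention idea named here, the code below applies the standard scaled dot-product form, softmax(QKᵀ/√d)V, to a sequence of hidden-state vectors such as GRU outputs. It makes two simplifying assumptions: Q = K = V = the hidden states (no learned projection matrices), and mean pooling turns the attended sequence into one fixed-length feature vector, which could then feed a downstream regressor such as a random forest. Neither is the documented HAGRU-RF design, and the function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(hidden: List[Vector]) -> List[Vector]:
    """Scaled dot-product self-attention with Q = K = V = hidden."""
    d = len(hidden[0])
    scale = math.sqrt(d)
    attended = []
    for query in hidden:
        # Similarity of this time step to every time step, scaled by sqrt(d).
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in hidden]
        weights = softmax(scores)
        # Weighted sum of all hidden states.
        attended.append([sum(w * value[j] for w, value in zip(weights, hidden))
                         for j in range(d)])
    return attended


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average over time steps to obtain a single feature vector."""
    n = len(vectors)
    return [sum(v[j] for v in vectors) / n for j in range(len(vectors[0]))]
```

A typical call would be `features = mean_pool(self_attention(hidden_states))`. Time steps whose hidden states resemble each other reinforce one another in the weighted sum.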

Random Forest (RF) Integration

4. Results and Analysis

4.1. Results and Analysis of the GRLA

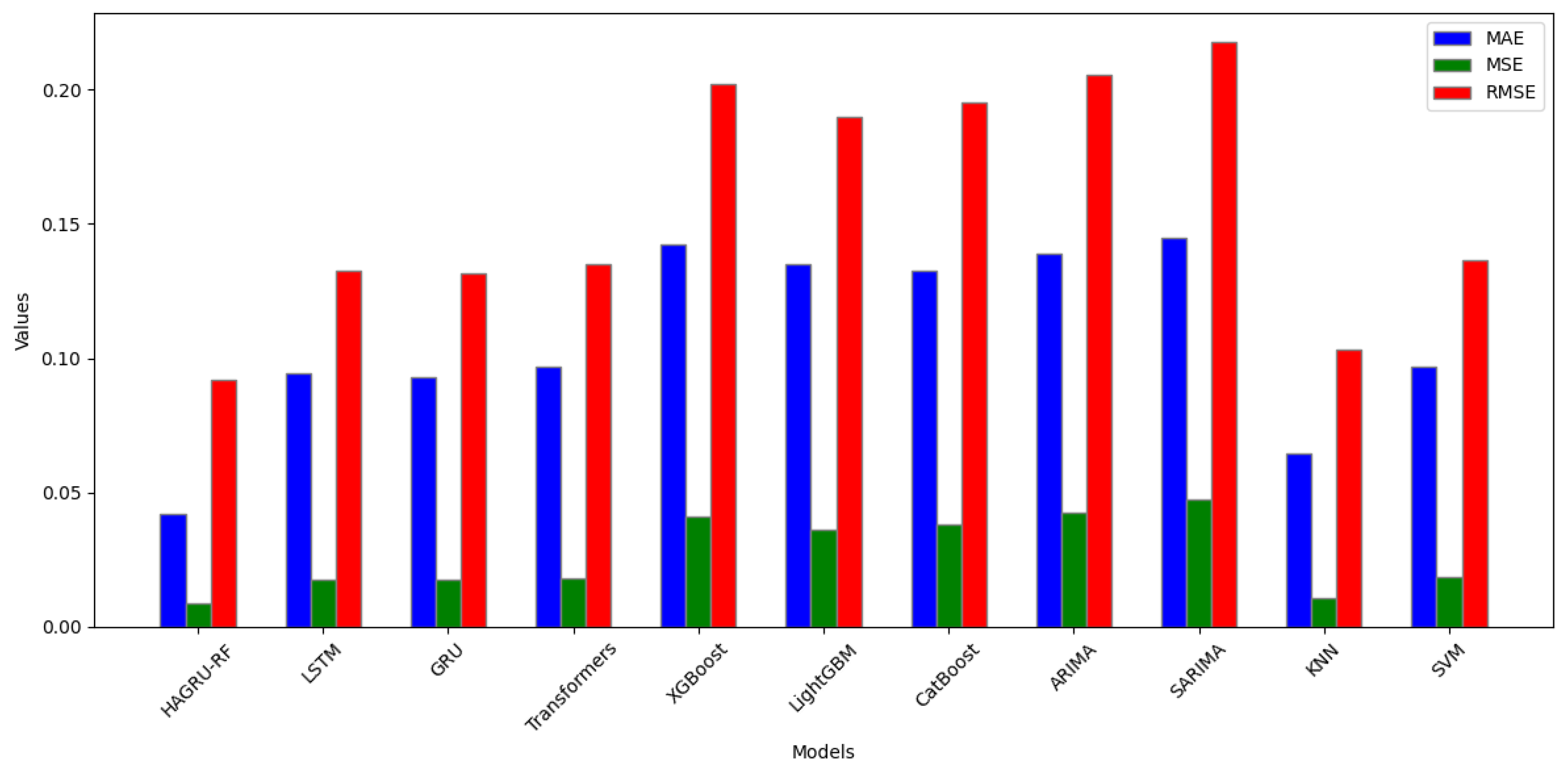

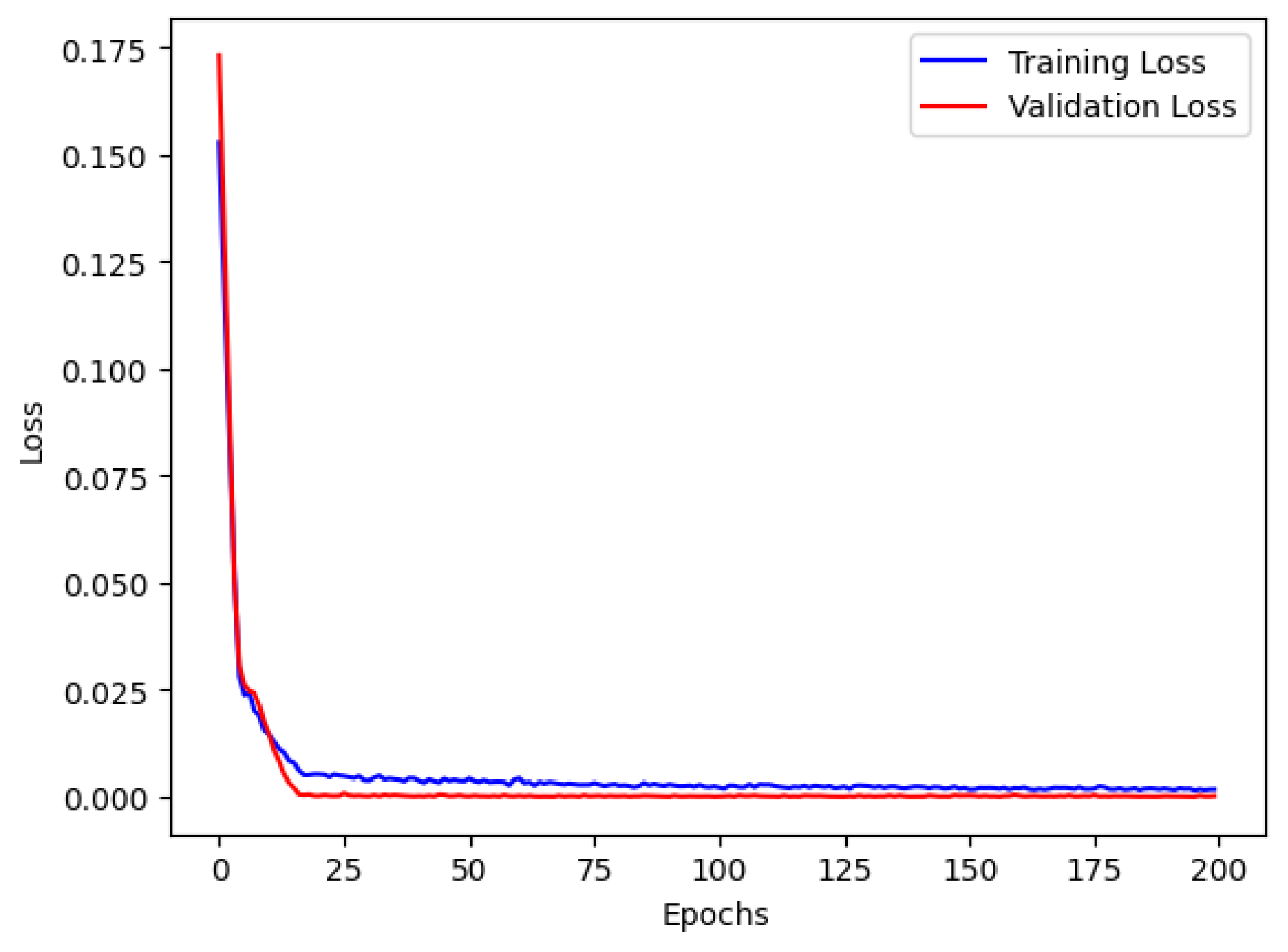

4.2. Results and Analysis of the HAGRU-RF

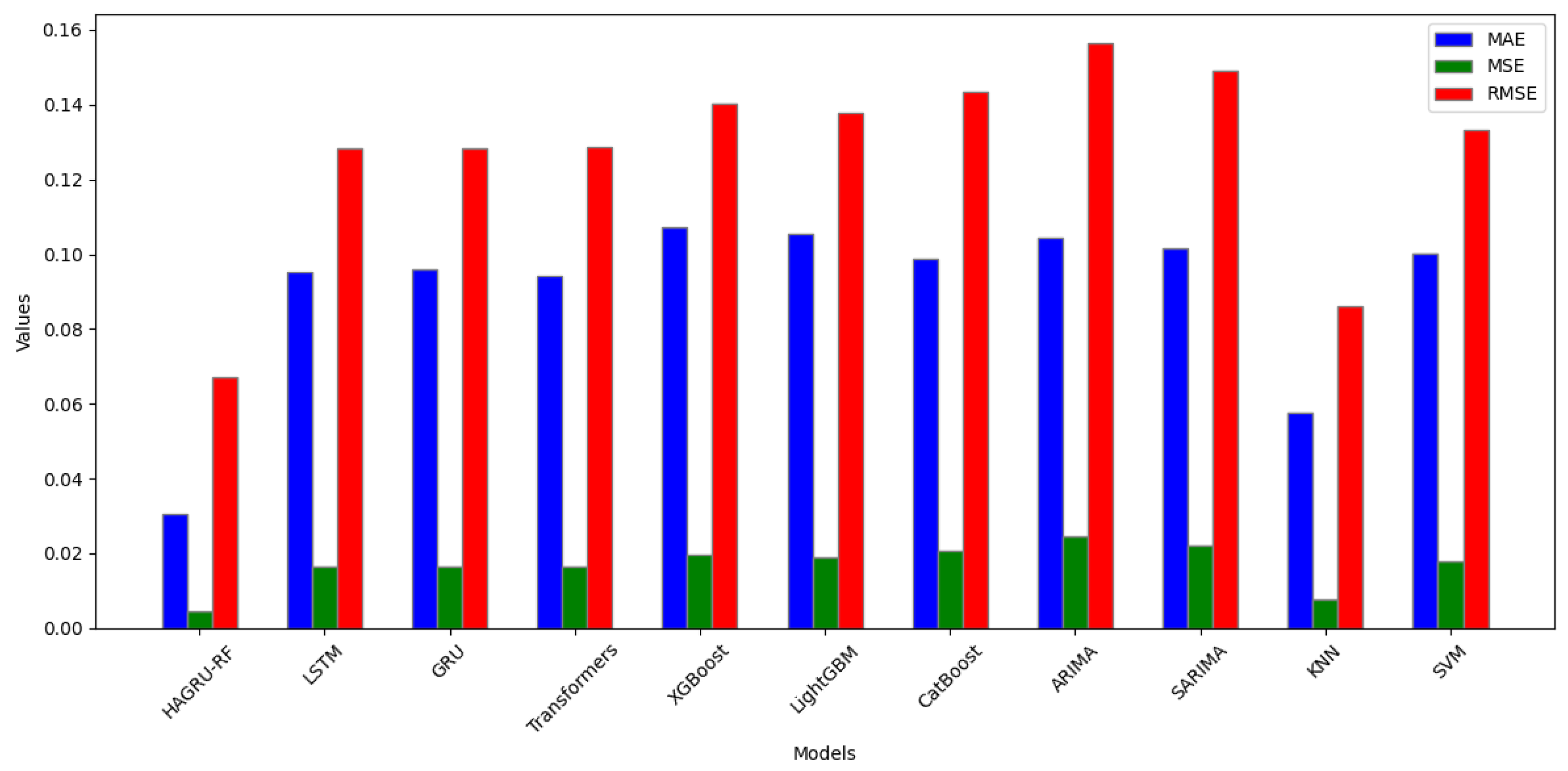

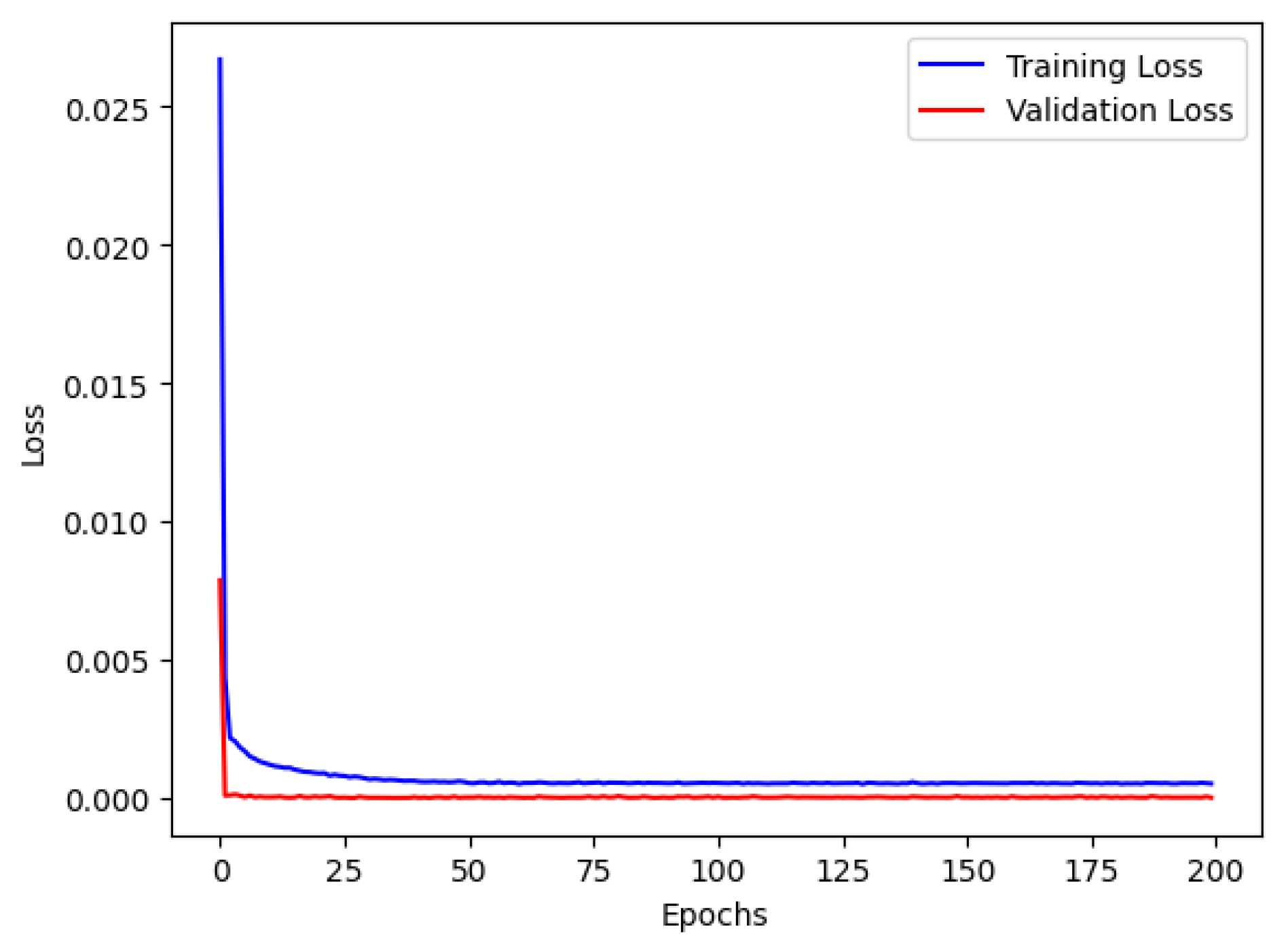

4.2.1. One-Day Solar Energy Forecasting

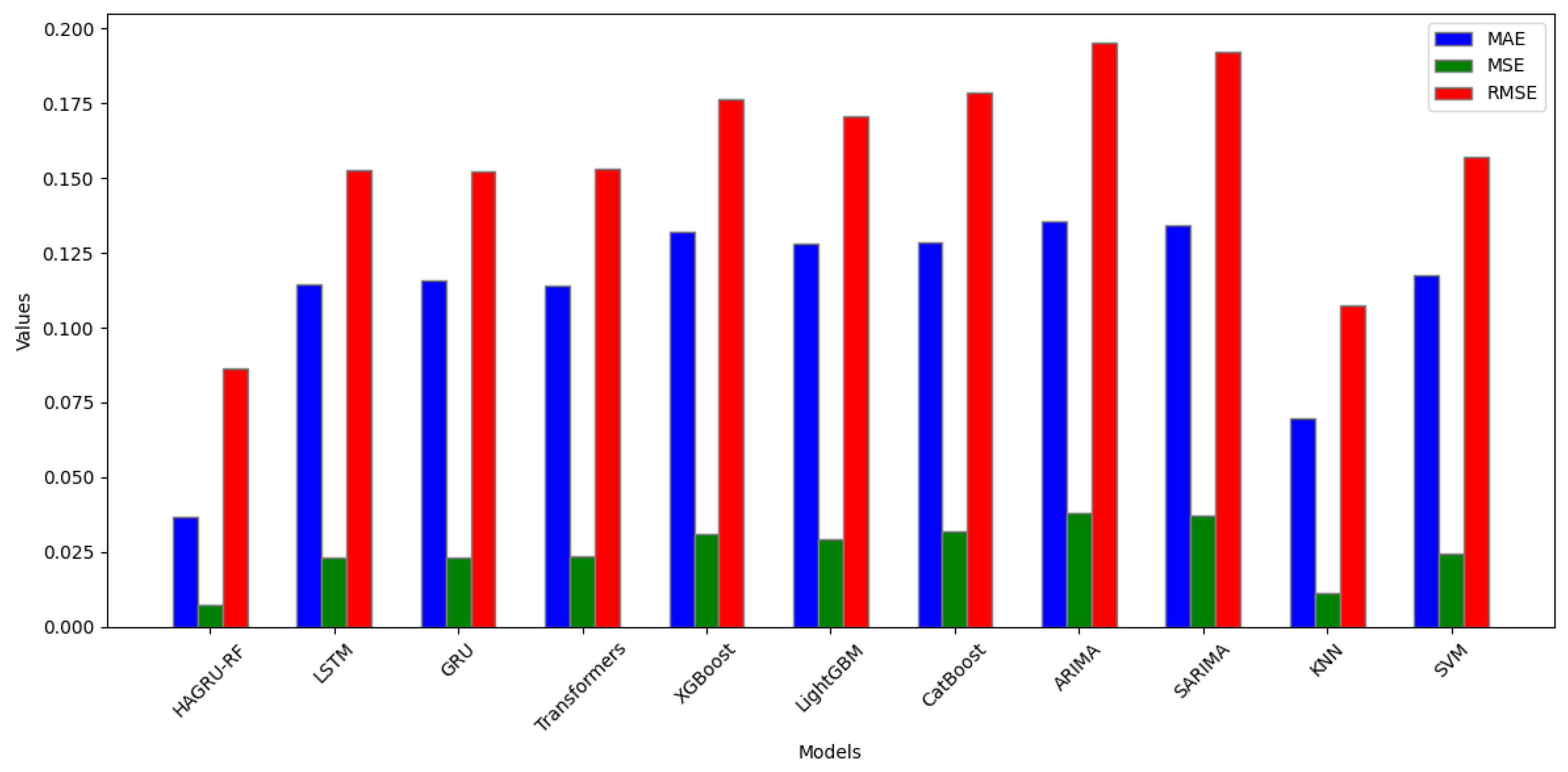

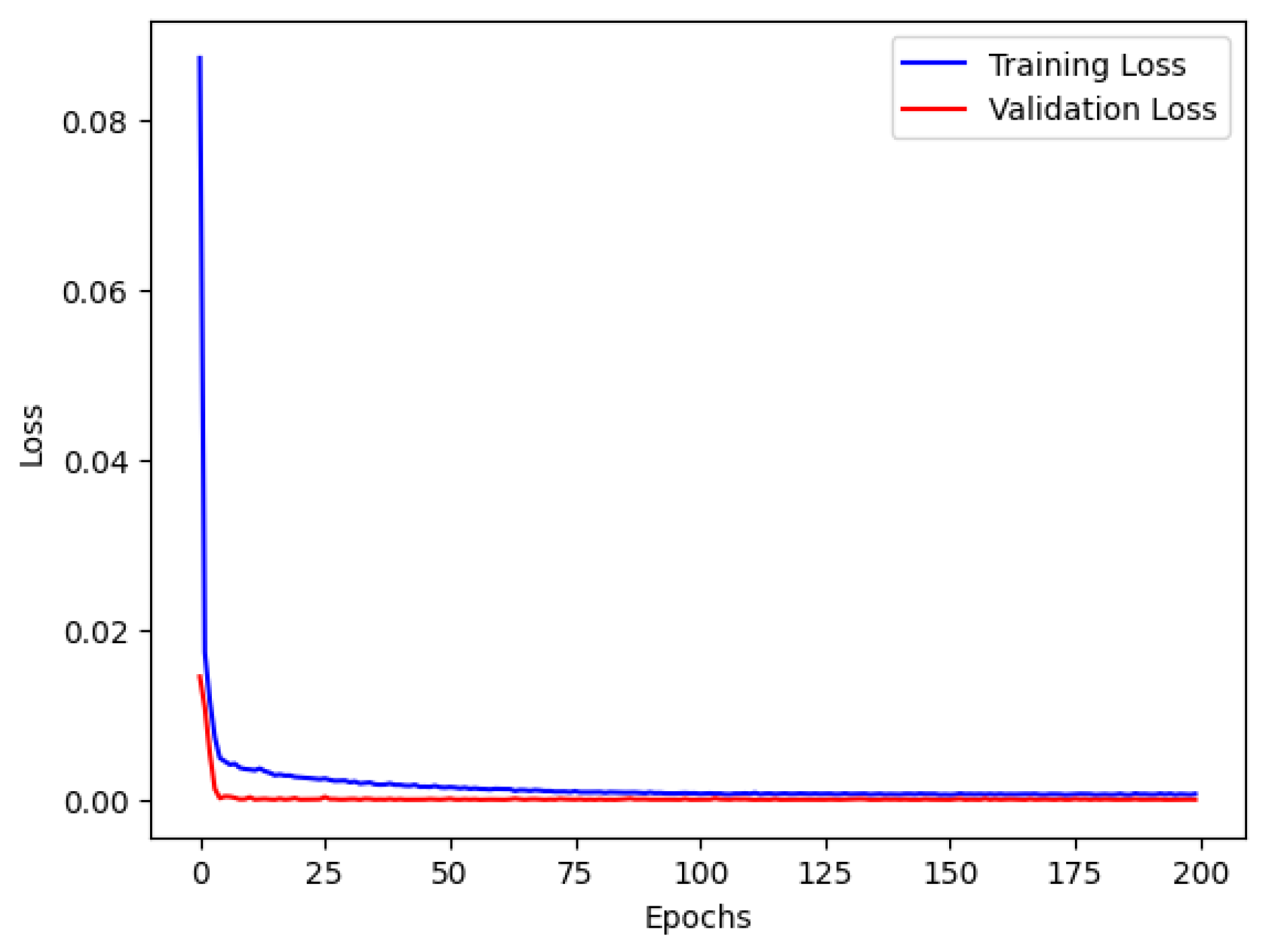

4.2.2. One-Week Solar Energy Forecasting

4.2.3. One-Month Solar Energy Forecasting

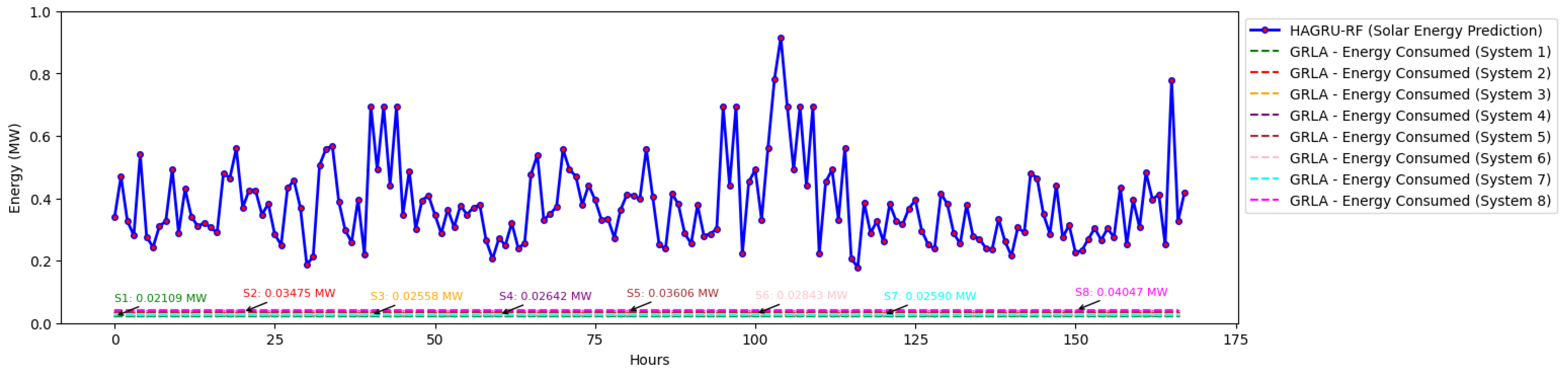

4.3. Integration of GRLA and HAGRU-RF for Green Cloud Computing

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| HAGRU-RF | Hybrid Attention-enhanced Gated Recurrent Unit and Random Forest |
| GRLA | Genetic-Reinforcement Learning Algorithm |
| GA | Genetic Algorithm |
| RL | Reinforcement Learning |
| LASSO | Least Absolute Shrinkage and Selection Operator |
| CNNs | Convolutional Neural Networks |
| LSTM | Long Short-Term Memory |
| VMs | Virtual Machines |
| GRU | Gated Recurrent Unit |
| RNN | Recurrent Neural Network |
| RF | Random Forest |
| BW | Bandwidth |
| ACO | Ant Colony Optimization |
| SA | Simulated Annealing |
| FFD | First Fit Decreasing |
| XGBoost | Extreme Gradient Boosting |
| LightGBM | Light Gradient Boosting Machine |
| CatBoost | Categorical Boosting |
| ARIMA | AutoRegressive Integrated Moving Average |
| SARIMA | Seasonal AutoRegressive Integrated Moving Average |
| KNN | K-Nearest Neighbors |
| SVM | Support Vector Machines |
| MAE | Mean Absolute Error |
| MSE | Mean Squared Error |
| RMSE | Root Mean Squared Error |

References

- Katal, A.; Dahiya, S.; Choudhury, T. Energy efficiency in cloud computing data centers: A survey on software technologies. Clust. Comput. 2023, 26, 1845–1875.

- Shao, X.; Zhang, Z.; Song, P.; Feng, Y.; Wang, X. A review of energy efficiency evaluation metrics for data centers. Energy Build. 2022, 271, 112308.

- Mahil, M.; Jayasree, T. Combined particle swarm optimization and Ant Colony System for energy efficient cloud data centers. Concurr. Comput. Pract. Exp. 2021, 33, e6195.

- Shen, Z.; Zhou, Q.; Zhang, X.; Xia, B.; Liu, Z.; Li, Y. Data characteristics aware prediction model for power consumption of data center servers. Concurr. Comput. Pract. Exp. 2022, 34, e6902.

- Liu, X.F.; Zhan, Z.H.; Deng, J.D.; Li, Y.; Gu, T.; Zhang, J. An Energy Efficient Ant Colony System for Virtual Machine Placement in Cloud Computing. IEEE Trans. Evol. Comput. 2018, 22, 113–128.

- Bouaouda, A.; Afdel, K.; Abounacer, R. Forecasting the Energy Consumption of Cloud Data Centers Based on Container Placement with Ant Colony Optimization and Bin Packing. In Proceedings of the 2022 5th Conference on Cloud and Internet of Things (CIoT), Marrakech, Morocco, 28–30 March 2022; pp. 150–157.

- Li, Y.; Wen, Y.; Tao, D.; Guan, K. Transforming Cooling Optimization for Green Data Center via Deep Reinforcement Learning. IEEE Trans. Cybern. 2020, 50, 2002–2013.

- Koot, M.; Wijnhoven, F. Usage impact on data center electricity needs: A system dynamic forecasting model. Appl. Energy 2021, 291, 116798.

- Peng, X.; Bhattacharya, T.; Cao, T.; Mao, J.; Tekreeti, T.; Qin, X. Exploiting renewable energy and UPS systems to reduce power consumption in data centers. Big Data Res. 2022, 27, 100306.

- Huang, P.; Copertaro, B.; Zhang, X.; Shen, J.; Löfgren, I.; Rönnelid, M.; Fahlen, J.; Andersson, D.; Svanfeldt, M. A review of data centers as prosumers in district energy systems: Renewable energy integration and waste heat reuse for district heating. Appl. Energy 2020, 258, 114109.

- Dayarathna, M.; Wen, Y.; Fan, R. Data Center Energy Consumption Modeling: A Survey. IEEE Commun. Surv. Tutor. 2016, 18, 732–794.

- Cao, Z.; Zhou, X.; Hu, H.; Wang, Z.; Wen, Y. Toward a systematic survey for carbon neutral data centers. IEEE Commun. Surv. Tutor. 2022, 24, 895–936.

- Wu, W.; Ma, X.; Zeng, B.; Zhang, Y.; Li, W. Forecasting short-term solar energy generation in Asia Pacific using a nonlinear grey Bernoulli model with time power term. Energy Environ. 2021, 32, 759–783.

- Shaikh, M.R.; Shaikh, S.; Waghmare, S.; Labade, S.; Tekale, A. A Review Paper on Electricity Generation from Solar Energy. Int. J. Res. Appl. Sci. Eng. Technol. 2017, 887, 1884–1889.

- Tang, N.; Mao, S.; Wang, Y.; Nelms, R.M. Solar Power Generation Forecasting With a LASSO-Based Approach. IEEE Internet Things J. 2018, 5, 1090–1099.

- Sun, Y.; Venugopal, V.; Brandt, A. Convolutional Neural Network for Short-Term Solar Panel Output Prediction; IEEE: Piscataway, NJ, USA, 2018; pp. 2357–2361.

- Sun, Y.; Venugopal, V.; Brandt, A.R. Short-term solar power forecast with deep learning: Exploring optimal input and output configuration. Sol. Energy 2019, 188, 730–741.

- Güney, T. Solar energy, governance and CO2 emissions. Renew. Energy 2022, 184, 791–798.

- Zhou, H.; Liu, Q.; Yan, K.; Du, Y.; Zhang, X. Deep Learning Enhanced Solar Energy Forecasting with AI-Driven IoT. Wirel. Commun. Mob. Comput. 2021, 2021, 9249387.

- Li, G.; Xie, S.; Wang, B.; Xin, J.; Li, Y.; Du, S. Photovoltaic Power Forecasting With a Hybrid Deep Learning Approach. IEEE Access 2020, 8, 175871–175880.

- Lee, W.; Kim, K.; Park, J.; Kim, J.; Kim, Y. Forecasting Solar Power Using Long-Short Term Memory and Convolutional Neural Networks. IEEE Access 2018, 6, 73068–73080.

- Bajpai, P. The Top Five Nations Leading in Solar Energy Generation. Technical Report. 2021. Available online: https://www.nasdaq.com/articles/the-top-five-nations-leading-in-solar-energy-generation-2021-08-17 (accessed on 8 March 2024).

- Victoria, M.; Haegel, N.; Peters, I.M.; Sinton, R.; Jäger-Waldau, A.; del Cañizo, C.; Breyer, C.; Stocks, M.; Blakers, A.; Kaizuka, I.; et al. Solar photovoltaics is ready to power a sustainable future. Joule 2021, 5, 1041–1056.

- Kut, P.; Pietrucha-Urbanik, K. Bibliometric Analysis of Renewable Energy Research on the Example of the Two European Countries: Insights, Challenges, and Future Prospects. Energies 2023, 17, 176.

- Yagli, G.M.; Yang, D.; Srinivasan, D.; Monika. Solar Forecast Reconciliation and Effects of Improved Base Forecasts. In Proceedings of the 2018 IEEE 7th World Conference on Photovoltaic Energy Conversion (WCPEC) (A Joint Conference of 45th IEEE PVSC, 28th PVSEC 34th EU PVSEC), Waikoloa, HI, USA, 10–15 June 2018; pp. 2719–2723.

- Suresh, V.; Janik, P.; Rezmer, J.; Leonowicz, Z. Forecasting Solar PV Output Using Convolutional Neural Networks with a Sliding Window Algorithm. Energies 2020, 13, 723.

- Pahl, C.; Brogi, A.; Soldani, J.; Jamshidi, P. Cloud Container Technologies: A State-of-the-Art Review. IEEE Trans. Cloud Comput. 2019, 7, 677–692.

- Hardikar, S.; Ahirwar, P.; Rajan, S. Containerization: Cloud Computing based Inspiration Technology for Adoption through Docker and Kubernetes. In Proceedings of the 2021 Second International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 4–6 August 2021; pp. 1996–2003.

- Liu, Y.; Lan, D.; Pang, Z.; Karlsson, M.; Gong, S. Performance Evaluation of Containerization in Edge-Cloud Computing Stacks for Industrial Applications: A Client Perspective. IEEE Open J. Ind. Electron. Soc. 2021, 2, 153–168.

- Dziurzanski, P.; Zhao, S.; Przewozniczek, M.; Komarnicki, M.; Indrusiak, L.S. Scalable distributed evolutionary algorithm orchestration using Docker containers. J. Comput. Sci. 2020, 40, 101069.

- Katoch, S.; Chauhan, S.S.; Kumar, V. A review on genetic algorithm: Past, present, and future. Multimed. Tools Appl. 2021, 80, 8091–8126.

- Alhijawi, B.; Awajan, A. Genetic algorithms: Theory, genetic operators, solutions, and applications. Evol. Intell. 2024, 17, 1245–1256.

- Wang, X.; Wang, S.; Liang, X.; Zhao, D.; Huang, J.; Xu, X.; Dai, B.; Miao, Q. Deep reinforcement learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5064–5078.

- Wang, H.n.; Liu, N.; Zhang, Y.y.; Feng, D.w.; Huang, F.; Li, D.s.; Zhang, Y.m. Deep reinforcement learning: A survey. Front. Inf. Technol. Electron. Eng. 2020, 21, 1726–1744.

- Matsuo, Y.; LeCun, Y.; Sahani, M.; Precup, D.; Silver, D.; Sugiyama, M.; Uchibe, E.; Morimoto, J. Deep learning, reinforcement learning, and world models. Neural Netw. 2022, 152, 267–275.

- Li, S.; Li, W.; Cook, C.; Zhu, C.; Gao, Y. Independently Recurrent Neural Network (IndRNN): Building A Longer and Deeper RNN. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5457–5466.

- Chen, J.; Zeng, G.Q.; Zhou, W.; Du, W.; Lu, K.D. Wind speed forecasting using nonlinear-learning ensemble of deep learning time series prediction and extremal optimization. Energy Convers. Manag. 2018, 165, 681–695.

- Bouaouda, A.; Afdel, K.; Abounacer, R. Meta-heuristic and Heuristic Algorithms for Forecasting Workload Placement and Energy Consumption in Cloud Data Centers. Adv. Sci. Technol. Eng. Syst. J. 2023, 8, 1–11.

- Smimite, O.; Afdel, K. Hybrid Solution for Container Placement and Load Balancing based on ACO and Bin Packing. Int. J. Adv. Comput. Sci. Appl. 2020, 11.

- Dubey, K.; Sharma, S.C.; Nasr, A.A. A Simulated Annealing based Energy-Efficient VM Placement Policy in Cloud Computing. In Proceedings of the 2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), Vellore, India, 24–25 February 2020; pp. 1–5.

| Date | From | To | MW |
|---|---|---|---|
| ... | ... | ... | ... |
| 1 January 2010 | 10:00 | 10:15 | 8 |
| 1 January 2010 | 10:15 | 10:30 | 11 |
| 1 January 2010 | 10:30 | 10:45 | 12 |
| 1 January 2010 | 10:45 | 11:00 | 16 |
| 1 January 2010 | 11:00 | 11:15 | 16 |
| 1 January 2010 | 11:15 | 11:30 | 17 |
| 1 January 2010 | 11:30 | 11:45 | 17 |
| 1 January 2010 | 11:45 | 12:00 | 18 |
| 1 January 2010 | 12:00 | 12:15 | 19 |
| ... | ... | ... | ... |

| Statistic | Value |
|---|---|
| Count | 3287 |
| Mean | 8960.66 MW |
| Standard Deviation | 5223.10 MW |
| Minimum | 33 MW |
| 25th Percentile | 5496.75 MW |
| 50th Percentile (Median) | 7392.60 MW |
| 75th Percentile | 11,316.41 MW |
| Maximum | 36,412 MW |
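The count of 3287 equals the number of days from 1 January 2010 to 31 December 2018 inclusive. This suggests, without confirming it, that the statistics summarize one aggregated value per day rather than the raw 15-minute rows. The sketch below shows one way to produce such a summary. It assumes that daily values are sums of the 15-minute readings, and the function names are hypothetical. Quartiles use `statistics.quantiles` with `method="inclusive"`, which interpolates linearly between order statistics, the same convention as NumPy's default percentile method.

```python
import statistics
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, str, str, float]  # (date, interval start, interval end, MW)


def daily_totals(rows: Iterable[Row]) -> Dict[str, float]:
    """Sum the 15-minute readings of each date (summation is an assumption)."""
    totals: Dict[str, float] = {}
    for date, _start, _end, mw in rows:
        totals[date] = totals.get(date, 0.0) + mw
    return totals


def describe(values: Iterable[float]) -> Dict[str, float]:
    """Summary statistics laid out like the table above (needs at least two values)."""
    data: List[float] = sorted(values)
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return {
        "count": len(data),
        "mean": statistics.mean(data),
        "std": statistics.stdev(data),  # sample standard deviation (n - 1 denominator)
        "min": data[0],
        "25%": q1,
        "50%": median,
        "75%": q3,
        "max": data[-1],
    }
```

Applied to a full dataset, `describe(daily_totals(rows).values())` yields one row of statistics per field, in the order of the table.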

| GRU Layer | Number of Units | Dropout Rate |
|---|---|---|
| 1 | 64 | 0.2 |
| 2 | 32 | 0.2 |
| 3 | 16 | 0.2 |

| System | Servers: Number | Servers: RAM (GB) | Servers: Number of CPUs | Servers: BW (bps) | Servers: Storage (MB) | Containers: Number | Containers: RAM (MB) | Containers: Number of CPUs | Containers: BW (bps) | Containers: Size (MB) |
|---|---|---|---|---|---|---|---|---|---|---|
| Homogeneous Systems | | | | | | | | | | |
| 1 | 30 | 16 | 64 | 1,000,000 | 1,000,000 | 1000 | 256 | 1 | 1024 | 2500 |
| 2 | 50 | 32 | 128 | 1,000,000 | 1,000,000 | 1000 | 256 | 1 | 1024 | 2500 |
| | | | | | | 1000 | 512 | 1 | 1024 | 2500 |
| | | | | | | 1000 | 512 | 1 | 1024 | 2500 |
| 3 | 75 | 64 | 512 | 3,000,000 | 3,000,000 | 1000 | 256 | 1 | 1024 | 2500 |
| | | | | | | 1000 | 128 | 1 | 1024 | 2500 |
| | | | | | | 2000 | 512 | 1 | 1024 | 2500 |
| 4 | 100 | 128 | 1024 | 4,000,000 | 4,000,000 | 2000 | 256 | 1 | 1024 | 2500 |
| | | | | | | 2000 | 128 | 1 | 1024 | 2500 |
| Heterogeneous Systems | | | | | | | | | | |
| 5 | 25 | 16 | 64 | 1,000,000 | 1,000,000 | 2000 | 512 | 1 | 1024 | 2500 |
| | 25 | 32 | 128 | 2,000,000 | 2,000,000 | 2000 | 256 | 1 | 1024 | 2500 |
| | 25 | 64 | 512 | 2,000,000 | 2,000,000 | | | | | |
| | 25 | 128 | 1024 | 4,000,000 | 4,000,000 | 2000 | 128 | 1 | 1024 | 2500 |
| | 50 | 128 | 1024 | 5,000,000 | 5,000,000 | 2000 | 512 | 1 | 1024 | 2500 |
| 6 | 50 | 74 | 1024 | 4,000,000 | 4,000,000 | 2000 | 256 | 1 | 1024 | 2500 |
| | 50 | 64 | 1024 | 3,000,000 | 3,000,000 | 2000 | 128 | 1 | 1024 | 2500 |
| 7 | 150 | 256 | 1024 | 4,000,000 | 4,000,000 | 4500 | 512 | 1 | 1024 | 2500 |
| | 150 | 128 | 1024 | 4,000,000 | 4,000,000 | 4500 | 256 | 1 | 1024 | 2500 |
| | 50 | 256 | 1024 | 4,000,000 | 4,000,000 | | | | | |
| 8 | 50 | 128 | 1024 | 3,000,000 | 3,000,000 | 10000 | 512 | 1 | 1024 | 2500 |
| | 50 | 64 | 1024 | 2,000,000 | 2,000,000 | | | | | |

| System | Active Servers: GRLA | Active Servers: GA | Active Servers: ACO | Active Servers: SA | Active Servers: FFD | Energy Consumed (Wh): GRLA | Energy Consumed (Wh): GA | Energy Consumed (Wh): ACO | Energy Consumed (Wh): SA | Energy Consumed (Wh): FFD |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 20 | 21 | 25 | 30 | 21 | 21,087.473 | 21,957.932 | 25,594.266 | 30,146.836 | 21,957.932 |
| 2 | 30 | 30 | 37 | 50 | 30 | 34,745.918 | 34,760.46 | 41,572.242 | 54,190.523 | 34,760.46 |
| 3 | 18 | 19 | 41 | 75 | 19 | 25,581.467 | 26,370.557 | 44,714.742 | 72,395.54 | 26,276.115 |
| 4 | 18 | 20 | 48 | 100 | 20 | 26,418.555 | 28,074.16 | 52,273.07 | 93,528.33 | 28,050.65 |
| 5 | 27 | 33 | 75 | 100 | 66 | 36,056.582 | 40,285.02 | 81,737.7 | 104,668.75 | 79,380.33 |
| 6 | 27 | 28 | 35 | 128 | 37 | 28,426.984 | 30,979.496 | 40,463.742 | 119,542.945 | 36,940.28 |
| 7 | 16 | 17 | 54 | 128 | 17 | 25,903.475 | 27,047.932 | 57,832.508 | 102,757.484 | 27,595.234 |
| 8 | 29 | 32 | 51 | 128 | 75 | 40,467.508 | 43,696.324 | 61,204.734 | 102,757.484 | 77,376.734 |

| System | Energy Saved (Wh): GRLA | Energy Saved (Wh): GA | Energy Saved (Wh): ACO | Energy Saved (Wh): SA | Energy Saved (Wh): FFD | Execution Time (Seconds): GRLA | Execution Time (Seconds): GA | Execution Time (Seconds): ACO | Execution Time (Seconds): SA | Execution Time (Seconds): FFD |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 11,123.054 | 10,252.595 | 6616.261 | 2063.691 | 10,252.595 | 40.74 | 33.802 | 0.275 | 7.557 | 0.174 |
| 2 | 26,306.712 | 26,292.17 | 19,480.388 | 6862.107 | 26,292.17 | 475.926 | 426.01 | 0.349 | 135.037 | 0.531 |
| 3 | 97,576.423 | 96,787.333 | 78,443.148 | 50,762.35 | 96,881.775 | 1116.8 | 641.634 | 0.677 | 194.353 | 0.943 |
| 4 | 137,791.965 | 136,136.36 | 111,937.45 | 70,682.19 | 136,159.87 | 5159.742 | 5510.662 | 1.878 | 1772.836 | 9.088 |
| 5 | 103,417.108 | 99,188.67 | 57,735.99 | 34,804.94 | 60,093.36 | 10,637.451 | 9075.251 | 1.321 | 2063.53 | 5.161 |
| 6 | 168,415.116 | 165,862.604 | 156,378.358 | 77,299.155 | 159,901.82 | 6186.972 | 6280.223 | 1.129 | 885.335 | 13.433 |
| 7 | 466,728.085 | 465,583.628 | 434,799.052 | 389,874.076 | 465,036.326 | 16,339.565 | 15,089.255 | 6.085 | 4605.858 | 112.916 |
| 8 | 174,269.332 | 171,040.516 | 153,532.106 | 111,979.356 | 119,465.366 | 33,395.339 | 35,192.357 | 2.527 | 7804.932 | 30.363 |
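In the two tables above, energy consumed plus energy saved is the same for every algorithm within a system. For system 1 it is 32,210.527 Wh; for system 6 it is 196,842.1 Wh, reading system 6's SA consumption as 119,542.945 Wh. This suggests that "energy saved" is measured against a fixed per-system reference consumption, although the tables do not define that reference. The sketch below checks the invariant and expresses each saving as a fraction of the reference. The data are copied from the tables for systems 1 and 6; the function names are hypothetical.

```python
from typing import Dict

Table = Dict[int, Dict[str, float]]  # system -> algorithm -> Wh

CONSUMED: Table = {
    1: {"GRLA": 21087.473, "GA": 21957.932, "ACO": 25594.266, "SA": 30146.836, "FFD": 21957.932},
    6: {"GRLA": 28426.984, "GA": 30979.496, "ACO": 40463.742, "SA": 119542.945, "FFD": 36940.28},
}
SAVED: Table = {
    1: {"GRLA": 11123.054, "GA": 10252.595, "ACO": 6616.261, "SA": 2063.691, "FFD": 10252.595},
    6: {"GRLA": 168415.116, "GA": 165862.604, "ACO": 156378.358, "SA": 77299.155, "FFD": 159901.82},
}


def reference_totals(consumed: Table, saved: Table) -> Table:
    """Consumed + saved for every (system, algorithm) pair."""
    return {s: {a: consumed[s][a] + saved[s][a] for a in consumed[s]} for s in consumed}


def relative_saving(consumed: Table, saved: Table, system: int, algo: str) -> float:
    """Energy saved as a fraction of the implied reference consumption."""
    return saved[system][algo] / (consumed[system][algo] + saved[system][algo])


for system, per_algo in reference_totals(CONSUMED, SAVED).items():
    print(system, {a: round(v, 3) for a, v in per_algo.items()})
```

On these figures GRLA saves about 34.5% of the reference in system 1 and about 85.6% in system 6.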

| Model | Epoch/Round | Batch Size | Learning Rate | MAE | MSE | RMSE | Training Time (Seconds) |
|---|---|---|---|---|---|---|---|
| HAGRU-RF | 50 | 16 | 0.001 | 0.0297 | 0.0045 | 0.0674 | 1136.4093 |
| HAGRU-RF | 100 | 32 | 0.0001 | 0.0287 | 0.0042 | 0.0646 | 1183.909 |
| HAGRU-RF | 200 | 64 | 0.0005 | 0.0304 | 0.0045 | 0.067 | 1159.8975 |
| Sequence Processing Models | | | | | | | |
| LSTM | 50 | 16 | 0.001 | 0.0979 | 0.0169 | 0.1299 | 1093.2417 |
| LSTM | 100 | 32 | 0.0001 | 0.0932 | 0.0159 | 0.1262 | 1106.0732 |
| LSTM | 200 | 64 | 0.0005 | 0.0952 | 0.0165 | 0.1285 | 1133.1099 |
| GRU | 50 | 16 | 0.001 | 0.0952 | 0.0168 | 0.1297 | 1088.9554 |
| GRU | 100 | 32 | 0.0001 | 0.0933 | 0.0159 | 0.1262 | 1104.789 |
| GRU | 200 | 64 | 0.0005 | 0.0959 | 0.0165 | 0.1285 | 1155.652 |
| Transformers | 50 | 16 | 0.001 | 0.0936 | 0.0168 | 0.1297 | 1245.6252 |
| Transformers | 100 | 32 | 0.0001 | 0.0942 | 0.016 | 0.1265 | 1294.9273 |
| Transformers | 200 | 64 | 0.0005 | 0.0943 | 0.0165 | 0.1286 | 1455.8436 |
| Gradient Boosting Methods | | | | | | | |
| XGBoost | 50 | - | 0.001 | 0.1079 | 0.0204 | 0.1428 | 0.0718 |
| XGBoost | 100 | - | 0.0001 | 0.1052 | 0.019 | 0.138 | 0.2183 |
| XGBoost | 200 | - | 0.0005 | 0.1072 | 0.0197 | 0.1403 | 0.0501 |
| LightGBM | 50 | - | 0.001 | 0.107 | 0.0201 | 0.1417 | 0.2092 |
| LightGBM | 100 | - | 0.0001 | 0.105 | 0.019 | 0.1377 | 0.4643 |
| LightGBM | 200 | - | 0.0005 | 0.1053 | 0.019 | 0.138 | 0.575 |
| CatBoost | 50 | - | 0.001 | 0.1005 | 0.0218 | 0.1477 | 1.084 |
| CatBoost | 100 | - | 0.0001 | 0.0983 | 0.0205 | 0.1433 | 2.1859 |
| CatBoost | 200 | - | 0.0005 | 0.0989 | 0.0206 | 0.1434 | 3.8037 |
| Traditional Time Series Models | | | | | | | |
| Model | Non-seasonal order (p, d, q) | Seasonal order (P, D, Q, s) | MAE | MSE | RMSE | Training Time (Seconds) | |
| ARIMA | (1, 1, 1) | - | 0.1139 | 0.0208 | 0.1442 | 4.2684 | |
| ARIMA | (2, 1, 2) | - | 0.1049 | 0.019 | 0.1379 | 8.316 | |
| ARIMA | (0, 1, 1) | - | 0.1043 | 0.0245 | 0.1564 | 1.9391 | |
| SARIMA | (1, 1, 1) | (1, 1, 1, 1) | 0.1152 | 0.021 | 0.1447 | 69.879 | |
| SARIMA | (2, 1, 2) | (2, 1, 2, 1) | 0.1049 | 0.019 | 0.138 | 465.2336 | |
| SARIMA | (0, 1, 1) | (0, 1, 1, 1) | 0.1016 | 0.0222 | 0.1491 | 38.6835 | |
| Classical Machine Learning Methods | | | | | | | |
| Model | K (Neighbors), Distance Metric | Kernel | Random State | MAE | MSE | RMSE | Training Time (Seconds) |
| KNN | (5, Euclidean) | - | - | 0.0308 | 0.0049 | 0.0697 | 0.0478 |
| KNN | (7, Chebyshev) | - | - | 0.0347 | 0.0046 | 0.068 | 0.0721 |
| KNN | (10, Manhattan) | - | - | 0.0575 | 0.0076 | 0.0869 | 0.008 |
| SVM | - | RBF | - | 0.0978 | 0.0169 | 0.1302 | 14.3208 |
| SVM | - | Linear | - | 0.0952 | 0.0161 | 0.1267 | 12.0128 |
| SVM | - | Polynomial | - | 0.1002 | 0.0177 | 0.1332 | 1473.9687 |
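The forecasting tables report MAE, MSE, and RMSE. RMSE is the square root of MSE, so each row can be sanity-checked: the HAGRU-RF row with 100 epochs in the first table reports MSE 0.0042 and RMSE 0.0646, and 0.0646² ≈ 0.00417, which rounds to 0.0042. The sketch below defines the three metrics and a rounding-aware consistency check; `rmse_consistent` is a hypothetical helper, not part of the original evaluation.

```python
import math
from typing import Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return math.sqrt(mse(y_true, y_pred))


def rmse_consistent(mse_reported: float, rmse_reported: float, decimals: int = 4) -> bool:
    """True if rmse_reported squared agrees with mse_reported up to rounding at `decimals`."""
    return abs(rmse_reported ** 2 - mse_reported) <= 0.5 * 10 ** -decimals + 1e-12
```

The check is approximate because RMSE is itself rounded before squaring, but it catches transposed or mistyped entries.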

| Model | Epoch/Round | Batch Size | Learning Rate | MAE | MSE | RMSE | Training Time (Seconds) |
|---|---|---|---|---|---|---|---|
| HAGRU-RF | 50 | 16 | 0.001 | 0.0335 | 0.0064 | 0.0799 | 263.9631 |
| HAGRU-RF | 100 | 32 | 0.0001 | 0.035 | 0.0068 | 0.0823 | 157.2848 |
| HAGRU-RF | 200 | 64 | 0.0005 | 0.0366 | 0.0074 | 0.0862 | 175.1979 |
| Sequence Processing Models | | | | | | | |
| LSTM | 50 | 16 | 0.001 | 0.1001 | 0.018 | 0.134 | 156.3016 |
| LSTM | 100 | 32 | 0.0001 | 0.1031 | 0.0193 | 0.1389 | 191.3676 |
| LSTM | 200 | 64 | 0.0005 | 0.1146 | 0.0233 | 0.1526 | 197.4381 |
| GRU | 50 | 16 | 0.001 | 0.1031 | 0.0181 | 0.1345 | 155.8559 |
| GRU | 100 | 32 | 0.0001 | 0.1032 | 0.0193 | 0.1389 | 159.5079 |
| GRU | 200 | 64 | 0.0005 | 0.1158 | 0.0232 | 0.1522 | 182.8227 |
| Transformers | 50 | 16 | 0.001 | 0.1017 | 0.018 | 0.1342 | 177.2639 |
| Transformers | 100 | 32 | 0.0001 | 0.1036 | 0.0192 | 0.1386 | 212.3213 |
| Transformers | 200 | 64 | 0.0005 | 0.1142 | 0.0234 | 0.153 | 241.0792 |
| Gradient Boosting Methods | | | | | | | |
| XGBoost | 50 | - | 0.001 | 0.1182 | 0.0255 | 0.1598 | 0.0384 |
| XGBoost | 100 | - | 0.0001 | 0.1223 | 0.0273 | 0.1652 | 0.0291 |
| XGBoost | 200 | - | 0.0005 | 0.132 | 0.0311 | 0.1765 | 0.0349 |
| LightGBM | 50 | - | 0.001 | 0.1165 | 0.0247 | 0.1571 | 0.0965 |
| LightGBM | 100 | - | 0.0001 | 0.1219 | 0.0271 | 0.1646 | 0.1653 |
| LightGBM | 200 | - | 0.0005 | 0.1282 | 0.0291 | 0.1705 | 0.3296 |
| CatBoost | 50 | - | 0.001 | 0.1164 | 0.0272 | 0.1648 | 0.8028 |
| CatBoost | 100 | - | 0.0001 | 0.1208 | 0.0291 | 0.1707 | 1.42 |
| CatBoost | 200 | - | 0.0005 | 0.1284 | 0.0318 | 0.1784 | 2.2506 |
| Traditional Time Series Models | | | | | | | |
| Model | Non-seasonal order (p, d, q) | Seasonal order (P, D, Q, s) | MAE | MSE | RMSE | Training Time (Seconds) | |
| ARIMA | (1, 1, 1) | - | 0.1177 | 0.0274 | 0.1655 | 0.4534 | |
| ARIMA | (2, 1, 2) | - | 0.122 | 0.0274 | 0.1655 | 2.1972 | |
| ARIMA | (0, 1, 1) | - | 0.1355 | 0.0381 | 0.1952 | 0.4418 | |
| SARIMA | (1, 1, 1) | (1, 1, 1, 7) | 0.1181 | 0.0268 | 0.1636 | 4.5728 | |
| SARIMA | (2, 1, 2) | (2, 1, 2, 7) | 0.1215 | 0.0273 | 0.1654 | 19.018 | |
| SARIMA | (0, 1, 1) | (0, 1, 1, 7) | 0.1344 | 0.037 | 0.1924 | 4.411 | |
| Classical Machine Learning Methods | | | | | | | |
| Model | K (Neighbors), Distance Metric | Kernel | Random State | MAE | MSE | RMSE | Training Time (Seconds) |
| KNN | (5, Euclidean) | - | - | 0.0342 | 0.0067 | 0.0818 | 0.0196 |
| KNN | (7, Chebyshev) | - | - | 0.0405 | 0.0071 | 0.0842 | 0.0023 |
| KNN | (10, Manhattan) | - | - | 0.0698 | 0.0115 | 0.1073 | 0.0018 |
| SVM | - | RBF | - | 0.0982 | 0.0175 | 0.1322 | 0.4413 |
| SVM | - | Linear | - | 0.1029 | 0.019 | 0.1378 | 0.2835 |
| SVM | - | Polynomial | - | 0.1174 | 0.0246 | 0.1569 | 45.4624 |

| Model | Epoch/Round | Batch Size | Learning Rate | MAE | MSE | RMSE | Training Time (Seconds) |
|---|---|---|---|---|---|---|---|
| HAGRU-RF | 50 | 16 | 0.001 | 0.0404 | 0.0099 | 0.0996 | 31.9085 |
| HAGRU-RF | 100 | 32 | 0.0001 | 0.0359 | 0.0047 | 0.0688 | 82.8323 |
| HAGRU-RF | 200 | 64 | 0.0005 | 0.0421 | 0.0085 | 0.0919 | 51.1468 |
| Sequence Processing Models | | | | | | | |
| LSTM | 50 | 16 | 0.001 | 0.1004 | 0.0212 | 0.1455 | 34.2723 |
| LSTM | 100 | 32 | 0.0001 | 0.0807 | 0.0128 | 0.1131 | 83.9937 |
| LSTM | 200 | 64 | 0.0005 | 0.0945 | 0.0176 | 0.1328 | 61.609 |
| GRU | 50 | 16 | 0.001 | 0.1017 | 0.0212 | 0.1457 | 33.9635 |
| GRU | 100 | 32 | 0.0001 | 0.081 | 0.0128 | 0.1132 | 82.0984 |
| GRU | 200 | 64 | 0.0005 | 0.093 | 0.0173 | 0.1316 | 48.5333 |
| Transformers | 50 | 16 | 0.001 | 0.1051 | 0.0222 | 0.1489 | 33.3177 |
| Transformers | 100 | 32 | 0.0001 | 0.0883 | 0.0145 | 0.1204 | 91.149 |
| Transformers | 200 | 64 | 0.0005 | 0.097 | 0.0182 | 0.1349 | 63.2777 |
| Gradient Boosting Methods | | | | | | | |
| XGBoost | 50 | - | 0.001 | 0.1505 | 0.0439 | 0.2096 | 0.0455 |
| XGBoost | 100 | - | 0.0001 | 0.1131 | 0.0225 | 0.1499 | 0.0812 |
| XGBoost | 200 | - | 0.0005 | 0.1424 | 0.0408 | 0.2019 | 0.0223 |
| LightGBM | 50 | - | 0.001 | 0.1471 | 0.0416 | 0.2041 | 0.0522 |
| LightGBM | 100 | - | 0.0001 | 0.1125 | 0.0222 | 0.1491 | 0.1737 |
| LightGBM | 200 | - | 0.0005 | 0.135 | 0.0362 | 0.1901 | 0.2085 |
| CatBoost | 50 | - | 0.001 | 0.1483 | 0.0446 | 0.2112 | 0.5531 |
| CatBoost | 100 | - | 0.0001 | 0.0965 | 0.0193 | 0.1388 | 1.4589 |
| CatBoost | 200 | - | 0.0005 | 0.1326 | 0.0382 | 0.1953 | 1.0334 |
| Traditional Time Series Models | | | | | | | |
| Model | Non-seasonal order (p, d, q) | Seasonal order (P, D, Q, s) | MAE | MSE | RMSE | Training Time (Seconds) | |
| ARIMA | (1, 1, 1) | - | 0.151 | 0.0442 | 0.2103 | 0.4811 | |
| ARIMA | (2, 1, 2) | - | 0.1223 | 0.0244 | 0.1563 | 1.2557 | |
| ARIMA | (0, 1, 1) | - | 0.1391 | 0.0424 | 0.2058 | 0.0605 | |
| SARIMA | (1, 1, 1) | (1, 1, 1, 30) | 0.1522 | 0.0462 | 0.2149 | 13.6703 | |
| SARIMA | (2, 1, 2) | (2, 1, 2, 30) | 0.1629 | 0.0369 | 0.1922 | 121.1857 | |
| SARIMA | (0, 1, 1) | (0, 1, 1, 30) | 0.1448 | 0.0474 | 0.2177 | 10.5254 | |
| Classical Machine Learning Methods | | | | | | | |
| Model | K (Neighbors), Distance Metric | Kernel | Random State | MAE | MSE | RMSE | Training Time (Seconds) |
| KNN | (5, Euclidean) | - | - | 0.0515 | 0.0122 | 0.1105 | 0.005 |
| KNN | (7, Chebyshev) | - | - | 0.0449 | 0.0058 | 0.0759 | 0.0044 |
| KNN | (10, Manhattan) | - | - | 0.0645 | 0.0107 | 0.1034 | 0.001 |
| SVM | - | RBF | - | 0.103 | 0.0223 | 0.1494 | 0.0246 |
| SVM | - | Linear | - | 0.0847 | 0.0139 | 0.1179 | 0.0288 |
| SVM | - | Polynomial | - | 0.0967 | 0.0187 | 0.1367 | 1.9749 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bouaouda, A.; Afdel, K.; Abounacer, R. Unveiling Genetic Reinforcement Learning (GRLA) and Hybrid Attention-Enhanced Gated Recurrent Unit with Random Forest (HAGRU-RF) for Energy-Efficient Containerized Data Centers Empowered by Solar Energy and AI. Sustainability 2024, 16, 4438. https://doi.org/10.3390/su16114438