A Study of Factors Influencing the Use of the DingTalk Online Lecture Platform in the Context of the COVID-19 Pandemic

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Methods

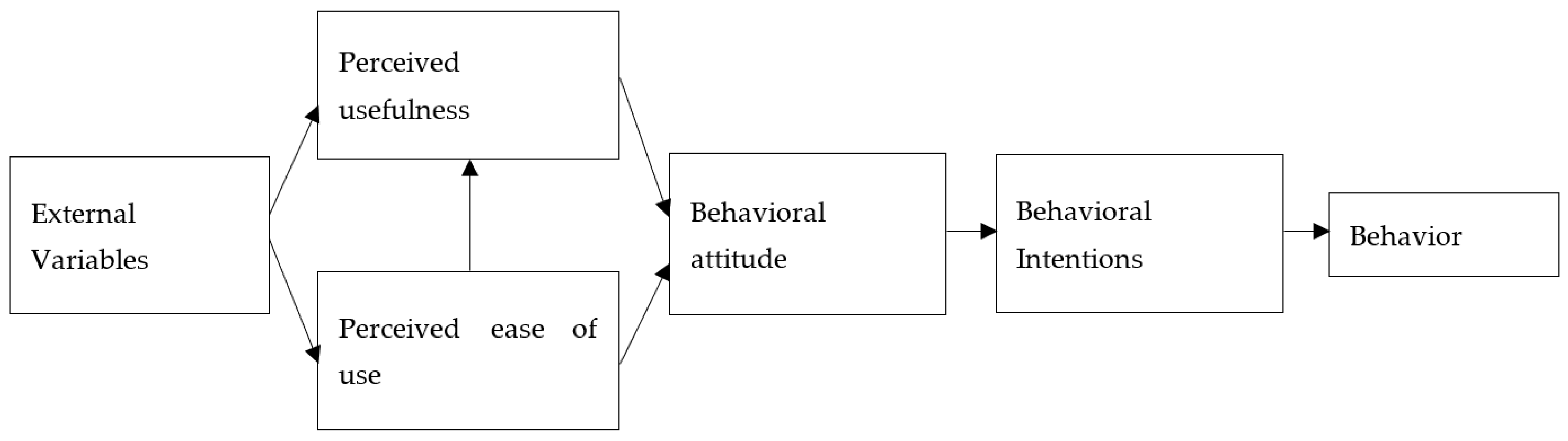

2.1.1. Technology Acceptance Model

2.1.2. Structural Equation Model

2.2. Questionnaire Design

3. Questionnaire Statistics and Data Analysis

3.1. Data from Data Collection

3.2. Analysis of the Basic Information of the Questionnaire

3.3. Data Analysis

3.3.1. Reliability Analysis

3.3.2. Validity Analysis

3.3.3. Test of Variability

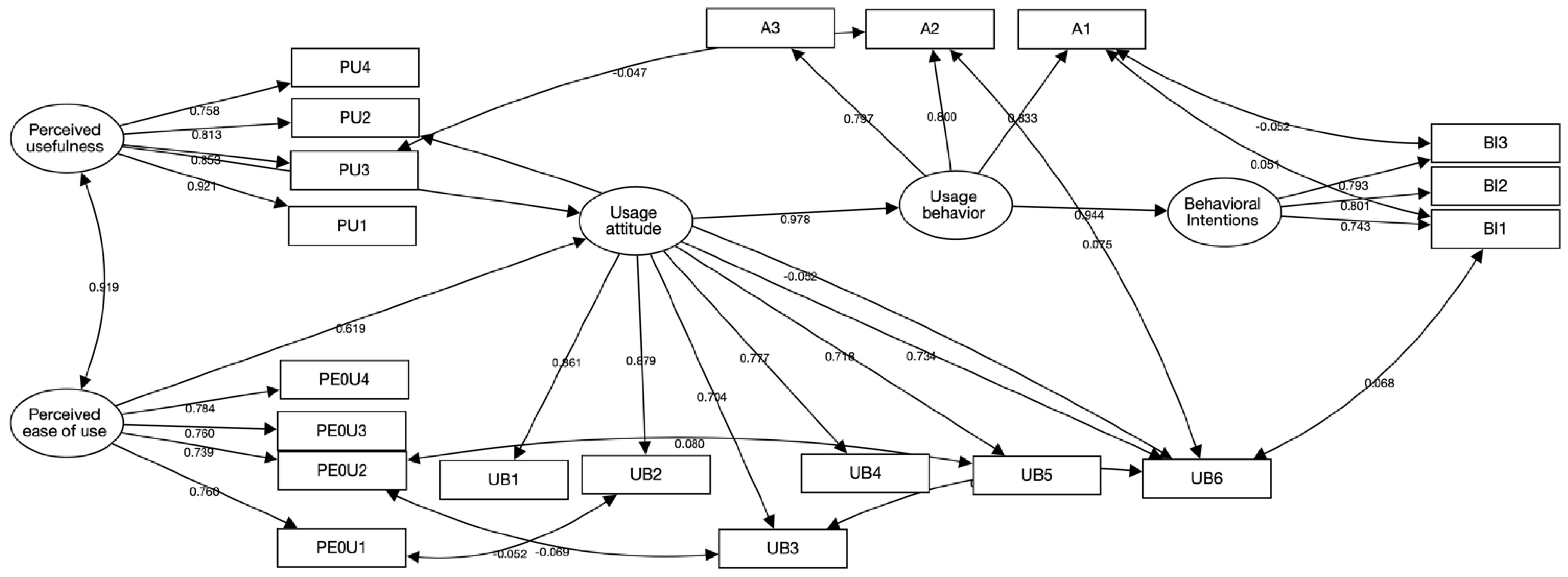

3.3.4. Structural Equation Model Analysis

4. Conclusions

4.1. Factors Influencing Willingness to Learn Online

- (1) Perceived usefulness has a significant indirect effect on students’ own willingness to use the DingTalk online teaching platform: its influence on willingness to use passes through usage attitude rather than acting directly, and students are largely influenced by external factors. As the influence of COVID-19 grew, most schools adopted the DingTalk platform for teaching so that students could be managed uniformly online and teaching quality could be maintained, and students therefore used DingTalk in their classes because their schools chose it. Their decisions about using the platform are thus more likely to be constrained by its efficiency, which makes a good and efficient teaching platform crucial.

- (2) Perceived ease of use also has a significant indirect effect on students’ willingness to use the DingTalk online teaching platform. When students find the platform easy to use, they may evaluate its features, performance, and design positively, which increases their satisfaction with the platform and motivates them to use it more actively.

- (3) Behavioral attitudes have a direct effect on students’ willingness to use the DingTalk online teaching platform. Online teaching uses the Internet and digital technology for distance education, with students and teachers interacting and learning in a virtual environment. The questionnaire analysis shows that students’ behavioral attitudes, i.e., their perceptions, attitudes, and beliefs about online teaching and learning, affect their willingness to use it, i.e., their willingness to adopt and participate in online teaching and learning.

- (4) Students’ behavior in using the DingTalk platform is directly influenced by their willingness to use it. Because the DingTalk platform provides rich, practical functions and a simple, friendly interface, students are more inclined to use it actively. Students who report higher expectations for the effectiveness of online teaching on DingTalk also report higher levels of usage behavior. Students who are active on the platform may use it more frequently to meet their social needs. Students are likely to use DingTalk more actively if their school adopts it as a primary tool for learning and communication and if the platform is positively evaluated and supported.

- (5) The study found that students’ willingness to use the DingTalk online teaching platform is influenced by several factors. First, perceived usefulness has an indirect effect on willingness to use. Students are largely influenced by external factors such as the COVID-19 epidemic, which led schools to choose DingTalk for online teaching, so an efficient teaching platform is crucial to students’ willingness to use it. Second, perceived ease of use also has an indirect effect on intention to use: perceiving DingTalk as easy to use increases students’ satisfaction and motivates them to use the platform more actively. Third, students’ behavioral attitudes have a direct effect on willingness to use; their perceptions, attitudes, and beliefs about online teaching and learning influence their willingness to adopt and participate in it. Finally, students’ behavior in using DingTalk is directly influenced by their intention to use it. Students who have high expectations of the platform, and whose schools positively evaluate and support it, are likely to use it more actively, especially where social needs are high.
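Conclusions (1) and (2) describe indirect effects that run through usage attitude. The article does not state how these indirect effects were tested; one common way to check such a mediated path is the Sobel test, sketched below in Python as an illustration rather than as the authors' procedure. The inputs are the unstandardized estimates and standard errors reported in the structural-model table (perceived usefulness to usage attitude: 0.283, SE 0.106; perceived ease of use to usage attitude: 0.737, SE 0.118; usage attitude to behavioral intentions: 0.996, SE 0.161). The function name `sobel_test` is hypothetical, and the test assumes approximately normal sampling distributions for both paths.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Sobel test for an indirect effect a*b (X -> M -> Y).

    a, se_a: estimate and standard error of the X -> M path
    b, se_b: estimate and standard error of the M -> Y path
    Returns (indirect effect, z statistic, two-sided p-value under N(0, 1)).
    """
    indirect = a * b
    # First-order (delta-method) standard error of the product a*b
    se_indirect = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = indirect / se_indirect
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return indirect, z, p


if __name__ == "__main__":
    b, se_b = 0.996, 0.161  # usage attitude -> behavioral intentions
    for name, a, se_a in [("Perceived usefulness", 0.283, 0.106),
                          ("Perceived ease of use", 0.737, 0.118)]:
        effect, z, p = sobel_test(a, se_a, b, se_b)
        print(f"{name} -> attitude -> intention: ab = {effect:.3f}, z = {z:.2f}, p = {p:.4f}")
```

With these inputs both z statistics exceed 1.96 (roughly 2.45 for perceived usefulness and 4.40 for perceived ease of use), which is consistent with the significant indirect effects described above. When raw data are available, bootstrap confidence intervals for a*b are generally preferred over the Sobel test.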

4.2. Recommendations

- (1) Strengthen the top-level design and systematic planning of curriculum teaching implementation.

- (2) Strengthen the top-level design and systematic planning of course teaching implementation.

- (3) Integrate and improve the teaching supervision and inspection mechanism.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Moftakhar, L.; Seif, M. The exponentially increasing rate of patients infected with COVID-19 in Iran. Arch. Iran. Med. 2020, 23, 235–238.

- Chen, N.; Zhou, M.; Dong, X.; Qu, J.; Gong, F.; Han, Y.; Zhang, L. Epidemiological and clinical characteristics of 99 cases of 2019 novel coronavirus pneumonia in Wuhan, China: A descriptive study. Lancet 2020, 395, 507–513.

- Yu, L.; Huang, L.; Tang, H.-R.; Li, N.; Rao, T.-T.; Hu, D.; Wen, Y.-F.; Shi, L.-X. Analysis of factors influencing the network teaching effect of college students in a medical school during the COVID-19 epidemic. BMC Med. Educ. 2021, 21, 397.

- Plaza-Ccuno, J.N.R.; Vasquez Puri, C.; Calizaya-Milla, Y.E.; Morales-García, W.C.; Huancahuire-Vega, S.; Soriano-Moreno, A.N.; Saintila, J. Physical Inactivity Is Associated with Job Burnout in Health Professionals during the COVID-19 Pandemic. Risk Manag. Healthc. Policy 2023, 16, 725–733.

- Sobaih, A.E.E.; Hasanein, A.M.; Abu Elnasr, A.E. Responses to COVID-19 in higher education: Social media usage for sustaining formal academic communication in developing countries. Sustainability 2020, 12, 6520.

- Ali, W. Online and remote learning in higher education institutes: A necessity in light of COVID-19 pandemic. High. Educ. Stud. 2020, 10, 16–25.

- Bezovski, Z.; Poorani, S. The evolution of e-learning and new trends. IISTE 2016, 6, 50–57.

- Coman, C.; Țîru, L.G.; Meseșan-Schmitz, L.; Stanciu, C.; Bularca, M.C. Online Teaching and Learning in Higher Education during the Coronavirus Pandemic: Students’ Perspective. Sustainability 2020, 12, 10367.

- Lee, B.C.; Yoon, J.O.; Lee, I. Learners’ acceptance of e-learning in South Korea: Theories and results. Comput. Educ. 2009, 53, 1320–1329.

- Sangrà, A.; Vlachopoulos, D.; Cabrera, N. Building an inclusive definition of e-learning: An approach to the conceptual framework. Int. Rev. Res. Open Distrib. Learn. 2012, 13, 145–159.

- Khan, M.A.; Raad, B. The Role of E-Learning in COVID-19 Crisis. 2020. Available online: https://www.researchgate.net/publication/340999258_THE_ROLE_OF_E-LEARNING_IN_COVID-19_CRISIS (accessed on 7 October 2022).

- Anaraki, F. Developing an Effective and Efficient eLearning Platform. Int. J. Comput. Internet Manag. 2004, 12, 57–63. Available online: https://dialnet.unirioja.es/servlet/articulo?codigo=5823402 (accessed on 27 October 2022).

- Costa, C.; Alvelos, H.; Teixeira, L. The use of Moodle e-learning platform: A study in a Portuguese University. Procedia Technol. 2012, 5, 334–343.

- Cacheiro-Gonzalez, M.L.; Medina-Rivilla, A.; Dominguez-Garrido, M.C.; Medina-Dominguez, M. The learning platform in distance higher education: Student’s perceptions. Turk. Online J. Distance Educ. 2019, 20, 71–95.

- Marinoni, G.; Van’t Land, H.; Jensen, T. The Impact of COVID-19 on Higher Education around the World. International Association of Universities. Available online: https://www.iau-aiu.net/IMG/pdf/iau_covid19_and_he_survey_report_final_may_2020.pdf (accessed on 14 November 2022).

- Ouadoud, M.; Nejjari, A.; Chkouri, M.Y.; El-Kadiri, K.E. Learning management system and the underlying learning theories. In Proceedings of the Mediterranean Symposium on Smart City Applications, Tangier, Morocco, 25–27 October 2017; Springer International Publishing: Cham, Switzerland, 2018; pp. 732–744.

- Qiu, T.S.; Wang, H.Y. CMS, LMS and LCMS for elearning. J. e-Sci. 1994, 16, 569–575.

- Martín-Blas, T.; Serrano-Fernández, A. The role of new technologies in the learning process: Moodle as a teaching tool in physics. Comput. Educ. 2009, 52, 35–44.

- Rawat, R.S.; Kothari, H.C.; Chandra, D. Role of the Digital Technology in Accelerating the Growth of Micro, Small and Medium Enterprises in Uttarakhand: Using TAM (Technology Acceptance Model). Int. J. Technol. Manag. Sustain. Dev. 2022, 21, 205–227.

- Allo, M.D.G. Is the online learning good in the midst of COVID-19 Pandemic? The case of EFL learners. J. Sinestesia 2020, 10, 1–10.

- Nieto-Márquez, N.L.; Baldominos, A.; Soilán, M.I.; Dobón, E.M.; Arévalo, J.A.Z. Assessment of COVID-19’s Impact on EdTech: Case Study on an Educational Platform, Architecture and Teachers’ Experience. Educ. Sci. 2020, 12, 681.

- Alqahtani, A.Y.; Rajkhan, A.A. E-learning critical success factors during the covid-19 pandemic: A comprehensive analysis of e-learning managerial perspectives. Educ. Sci. 2020, 10, 216.

- Vogel-Walcutt, J.J.; Fiorella, L.; Malone, N. Instructional strategies framework for military training systems. Comput. Hum. Behav. 2013, 29, 1490–1498.

- Cesari, V.; Galgani, B.; Gemignani, A.; Menicucci, D. Enhancing qualities of consciousness during online learning via multisensory interactions. Behav. Sci. 2021, 11, 57.

- Huang, D. Analysis of the Application of Ali Nails in University Management Departments. Educ. Mod. 2018, 5, 338–339.

- Lin, A. The design and implementation of the office automation system of the second-level college based on “nail”. Comput. Knowl. Technol. 2020, 16, 89–90+95.

- Wang, M.; Zhao, Z. A Cultural-Centered Model Based on User Experience and Learning Preferences of Online Teaching Platforms for Chinese National University Students: Taking Teaching Platforms of WeCom, VooV Meeting, and DingTalk as Examples. Systems 2022, 10, 216.

- Linke, M.; Landenfeld, K. Competence-Based Learning in Engineering Mechanics in an Adaptive Online Learning Environment. Teach. Math. Appl. Int. J. IMA 2019, 38, 146–153.

- Chuenyindee, T.; Montenegro, L.D.; Ong, A.K.S.; Prasetyo, Y.T.; Nadlifatin, R.; Ayuwati, I.D.; Sittiwatethanasiri, T.; Robas, K.P.E. The perceived usability of the learning management system during the COVID-19 pandemic: Integrating system usability scale, technology acceptance model, and task-technology fit. Work 2022, 73, 41–58.

- Alturki, U.; Aldraiweesh, A. Application of Learning Management System (LMS) during the COVID-19 Pandemic: A Sustainable Acceptance Model of the Expansion Technology Approach. Sustainability 2021, 13, 10991.

- Navarro, M.M.; Prasetyo, Y.T.; Young, M.N.; Nadlifatin, R.; Redi, A.A.N.P. The Perceived Satisfaction in Utilizing Learning Management System among Engineering Students during the COVID-19 Pandemic: Integrating Task Technology Fit and Extended Technology Acceptance Model. Sustainability 2021, 13, 10669.

- Zhang, S.; Li, Y.F. A study of college teachers’ online teaching behavior based on technology acceptance model. J. Distance Educ. 2014, 3, 56–63.

- Xiao, R.X.; Wang, H.; Qu, J.P. A study of online teaching behavior of college teachers based on technology acceptance model. Chin. J. Multimed. Web-Based Teach. Learn. 2021, 49, 27–30. (In Chinese)

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Browne, M.W.; Cudeck, R. Testing Structural Equation Models. 1993. Available online: https://www.researchgate.net/publication/284653185_Testing_Structural_Equation_Models (accessed on 29 October 2022).

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User acceptance of computer technology: A comparison of two theoretical models. Manag. Sci. 1989, 35, 982–1003.

- Bhattacherjee, A. This paper examines cognitive beliefs and affect influencing one’s intention to continue using (continuance) information systems (is) expectation-confirmat. SBPM 2010, 48, 162–164.

- Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manag. Sci. 2000, 46, 186–204.

- Bhattacherjee, A. Understanding information systems continuance: An expectation-confirmation model. MIS Q. 2001, 25, 351–370.

- Bentler, P.M. EQS Structural Equations Program Manual (Vol. 6). Encino, CA: Multivariate Software. 1995. Available online: https://www.mvsoft.com/wp-content/uploads/2021/04/EQS_6_Prog_Manual_422pp.pdf (accessed on 25 October 2022).

- Bagozzi, R.P.; Heatherton, T.F. A general approach to representing multifaceted personality constructs: Application to state self-esteem. Struct. Equ. Model. Multidiscip. J. 1994, 1, 35–67.

- Zhang, D.; Huang, G.; Yin, X.; Gong, Q. Residents’ Waste Separation Behaviors at the Source: Using SEM with the Theory of Planned Behavior in Guangzhou, China. Int. J. Environ. Res. Public Health 2015, 12, 9475–9491.

- Yuan, Q.; Gu, Y.; Wu, Y.; Zhao, X.; Gong, Y. Analysis of the Influence Mechanism of Consumers’ Trading Behavior on Reusable Mobile Phones. Sustainability 2020, 12, 3921.

- Shangguan, Z.; Wang, M.Y.; Huang, J.; Shi, G.; Song, L.; Sun, Z. Study on Social Integration Identification and Characteristics of Migrants from “Yangtze River to Huaihe River” Project: A Time-Driven Perspective. Sustainability 2019, 12, 211.

- Shuangli, P.; Guijun, Z.; Qun, C. The Psychological Decision-Making Process Model of Giving up Driving under Parking Constraints from the Perspective of Sustainable Traffic. Sustainability 2020, 12, 7152.

- Maaravi, Y.; Heller, B. Digital Innovation in Times of Crisis: How Mashups Improve Quality of Education. Sustainability 2021, 13, 7082.

- Lee, J.; Hwang, C.; Kwon, D. On the Effect of Perceived Security, Perceived Privacy, Perceived Enjoyment, Perceived Interactivity on Continual Usage Intention through Perceived Usefulness in Mobile Instant Messenger for business. J. Korea Soc. Digit. Ind. Inf. Manag. 2015, 11, 159–177.

| Variables | Source | Indicator Content | Indicators |
|---|---|---|---|
| Perceived usefulness | Davis, F. D. (1989) [34], Bhattacherjee, A. (2001) [37] | Timeliness of posting news | PU1 |
| | | Effectiveness of sharing resources | PU2 |
| | | Ease of replying to messages | PU3 |
| | | Degree of improvement in teaching efficiency | PU4 |
| Perceived ease of use | Davis, F. D. et al. (1989) [38] | Simple, easy-to-understand software pages | PEOU1 |
| | | Extent to which it is restricted by time zone | PEOU2 |
| | | Ease of use of software tools | PEOU3 |
| | | Degree of familiarity with software functions | PEOU4 |
| Usage behavior | Davis, F. D. (1989) [31], Bhattacherjee, A. (2010) [37] | Stability of platform operation | UB1 |
| | | Clarity of screen and audio | UB2 |
| | | Timeliness of teacher–student interaction | UB3 |
| | | Smoothness of file transfer | UB4 |
| | | Satisfaction with course playback function | UB5 |
| | | Satisfaction with classroom effect | UB6 |
| Usage attitude | Davis, F. D. et al. (1989) [38] | High expectations for teaching effectiveness | A1 |
| | | Extent to which learning needs are met | A2 |
| | | Satisfaction with online teaching via the DingTalk platform software | A3 |
| Behavioral intentions | Bhattacherjee, A. (2001) [39] | Support for continuing the online teaching format | BI1 |
| | | Willingness to participate in teaching activities conducted through the software | BI2 |
| | | Willingness to share resources with teachers and students | BI3 |

| Indicators | Indicator Description |
|---|---|
| PU1 | The DingTalk platform allows one to see important information posted by teachers in a timely manner, which helps one actively integrate it into one’s learning. |
| PU2 | Students and teachers can share learning resources with each other on the DingTalk platform, which is helpful for learning in the course. |
| PU3 | Students and teachers can respond to messages quickly through the DingTalk platform, which helps one actively participate in the course. |
| PU4 | Online teaching can improve the efficiency of the classroom and can help learning progress. |
| PEOU1 | The DingTalk platform’s pages are simple and easy to understand, which helps one in practice. |
| PEOU2 | One thinks the DingTalk platform software is less restricted by time zones. |
| PEOU3 | One thinks the DingTalk platform software is easy to use. |
| PEOU4 | One is familiar with the functions of the DingTalk platform software. |
| UB1 | One thinks the DingTalk platform software is relatively stable. |
| UB2 | One thinks the DingTalk platform software has clear screen and audio. |
| UB3 | One can get timely responses on the DingTalk platform when teachers and students interact with each other. |
| UB4 | One thinks file transfer on the DingTalk platform software is smooth and fast. |
| UB5 | One can review class content through the lesson playback function of the DingTalk platform software, which provides a convenient way to review lessons. |
| UB6 | One thinks the online classroom effect of the DingTalk platform software is no worse than the offline class effect. |
| A1 | One has high expectations for the effectiveness of online teaching on the DingTalk platform. |
| A2 | The DingTalk platform online classroom can meet one’s learning needs. |
| A3 | One is satisfied with the DingTalk platform’s capacity for online teaching. |
| BI1 | One supports the continuation of the online teaching format. |
| BI2 | One is willing to participate in the teaching activities that are conducted through the software. |
| BI3 | One is willing to share one’s learning resources with teachers and students on the DingTalk platform. |

| Variables | Options | Frequency | Percentage | Average Value | Standard Deviation |
|---|---|---|---|---|---|
| Gender | Male | 203 | 41% | 1.59 | 0.492 |
| | Female | 292 | 59% | | |
| Age | Under 18 years old | 43 | 8.70% | 2.22 | 0.739 |
| | 18–30 years old | 344 | 69.50% | | |
| | 31–40 years old | 74 | 14.90% | | |
| | 41–50 years old | 26 | 5.30% | | |
| | 51 years old and above | 8 | 1.60% | | |
| Education level | Junior high school and below | 15 | 3% | 2.99 | 0.727 |
| | High school | 89 | 18% | | |
| | Undergraduate | 279 | 56.40% | | |
| | Graduate student | 112 | 22.60% | | |
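The averages and standard deviations in the sample-profile table are consistent with coding each option as consecutive integers (Male = 1, Female = 2; age groups 1 to 5; education levels 1 to 4) and using the sample standard deviation with n − 1. This coding scheme is an assumption inferred from the numbers, not something the article states. The Python sketch below recomputes the summary columns from the frequencies under that assumption; `coded_summary` is a hypothetical helper name.

```python
import math


def coded_summary(freqs):
    """Summarize a categorical item coded 1..k from its category frequencies.

    Returns (percentages, mean, sample standard deviation using n - 1).
    """
    n = sum(freqs)
    codes = range(1, len(freqs) + 1)  # assumed coding: first option = 1, etc.
    percentages = [100 * f / n for f in freqs]
    mean = sum(c * f for c, f in zip(codes, freqs)) / n
    sum_sq = sum(f * (c - mean) ** 2 for c, f in zip(codes, freqs))
    sd = math.sqrt(sum_sq / (n - 1))
    return percentages, mean, sd


if __name__ == "__main__":
    profile = {
        "Gender": [203, 292],
        "Age": [43, 344, 74, 26, 8],
        "Education level": [15, 89, 279, 112],
    }
    for variable, freqs in profile.items():
        pct, mean, sd = coded_summary(freqs)
        print(variable, [f"{p:.2f}%" for p in pct], f"mean={mean:.2f}", f"sd={sd:.3f}")
```

Under this coding the sketch reproduces the reported averages (1.59, 2.22, 2.99) and standard deviations (0.492, 0.739, 0.727).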

| Cronbach’s Alpha | Cronbach’s Alpha Based on Standardized Items | Number of Items | Number of Samples |
|---|---|---|---|
| 0.967 | 0.967 | 20 | 495 |
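Cronbach’s alpha for k items is α = k/(k − 1) · (1 − Σσᵢ²/σ_T²), where σᵢ² is the variance of item i and σ_T² is the variance of the summed score; the standardized version uses the mean inter-item correlation r̄ as α_std = k·r̄/(1 + (k − 1)·r̄). The article reports only the results (α = 0.967 for 20 items and 495 respondents), so the Python sketch below is a generic implementation of these textbook formulas rather than the authors’ computation. The toy response columns in the usage block are hypothetical.

```python
import math
from statistics import mean, variance


def cronbach_alpha(items):
    """Raw Cronbach's alpha.

    items: list of k item columns, each a list of n respondent scores.
    """
    k = len(items)
    n = len(items[0])
    totals = [sum(column[i] for column in items) for i in range(n)]
    sum_item_var = sum(variance(column) for column in items)  # sample variances
    return k / (k - 1) * (1 - sum_item_var / variance(totals))


def pearson_r(x, y):
    """Pearson correlation between two equally long score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def standardized_alpha(items):
    """Alpha based on the mean inter-item correlation (standardized items)."""
    k = len(items)
    rs = [pearson_r(items[i], items[j]) for i in range(k) for j in range(i + 1, k)]
    r_bar = sum(rs) / len(rs)
    return k * r_bar / (1 + (k - 1) * r_bar)


if __name__ == "__main__":
    # Hypothetical 5-point responses from six respondents to three items
    item_a = [4, 5, 3, 4, 2, 5]
    item_b = [4, 4, 3, 5, 2, 4]
    item_c = [5, 5, 2, 4, 3, 5]
    data = [item_a, item_b, item_c]
    print(f"alpha = {cronbach_alpha(data):.3f}")
    print(f"standardized alpha = {standardized_alpha(data):.3f}")
```

The two coefficients coincide when all items have equal variances, which is one reason the raw and standardized values in the table can agree so closely.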

| Test | Statistic | Value |
|---|---|---|
| Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy | | 0.98 |
| Bartlett’s test of sphericity | Approximate chi-square | 7925.11 |
| | Degrees of freedom | 190 |
| | Significance | 0.000 |
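Bartlett’s test of sphericity checks whether the item correlation matrix R differs from an identity matrix, using χ² = −(n − 1 − (2p + 5)/6)·ln|R| with p(p − 1)/2 degrees of freedom. With p = 20 items this gives 20·19/2 = 190 degrees of freedom, matching the table. The Python sketch below implements this standard formula on a correlation matrix; it is not taken from the article, the example matrix in the usage block is hypothetical, and p-values would additionally need a chi-square survival function such as `scipy.stats.chi2.sf`.

```python
import math


def log_determinant(matrix):
    """ln|det(matrix)| via Gaussian elimination with partial pivoting."""
    a = [list(map(float, row)) for row in matrix]
    p = len(a)
    log_det = 0.0
    for col in range(p):
        pivot_row = max(range(col, p), key=lambda r: abs(a[r][col]))
        if a[pivot_row][col] == 0.0:
            raise ValueError("matrix is singular")
        a[col], a[pivot_row] = a[pivot_row], a[col]
        pivot = a[col][col]
        log_det += math.log(abs(pivot))  # |det| is the product of |pivots|
        for r in range(col + 1, p):
            factor = a[r][col] / pivot
            for c in range(col, p):
                a[r][c] -= factor * a[col][c]
    return log_det


def bartlett_sphericity(corr, n):
    """Bartlett's chi-square statistic and df for a p x p correlation matrix.

    A correlation matrix of non-degenerate items is positive definite, so its
    determinant is positive and ln|R| equals the log-absolute value above.
    """
    p = len(corr)
    chi_square = -(n - 1 - (2 * p + 5) / 6) * log_determinant(corr)
    df = p * (p - 1) // 2
    return chi_square, df


if __name__ == "__main__":
    # Hypothetical 3-item correlation matrix with the study's sample size
    r = [[1.0, 0.6, 0.5],
         [0.6, 1.0, 0.55],
         [0.5, 0.55, 1.0]]
    chi2, df = bartlett_sphericity(r, n=495)
    print(f"chi-square = {chi2:.2f}, df = {df}")
```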

| Variables | Gender (Q1) | Number of Cases | Average Value | Standard Deviation | t | Sig. |
|---|---|---|---|---|---|---|
| PU | Male | 203 | 27.23 | 6.268 | −0.796 | 0.426 |
| | Female | 292 | 27.69 | 6.4 | | |
| PEOU | Male | 203 | 27.09 | 6.263 | −0.523 | 0.601 |
| | Female | 292 | 27.39 | 6.353 | | |
| UB | Male | 203 | 40.8 | 9.404 | −0.31 | 0.757 |
| | Female | 292 | 41.06 | 9.035 | | |
| A | Male | 203 | 20.34 | 4.754 | 0.202 | 0.84 |
| | Female | 292 | 20.25 | 4.957 | | |
| BI | Male | 203 | 20.41 | 4.825 | 0.068 | 0.946 |
| | Female | 292 | 20.38 | 4.897 | | |
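The article does not state whether equal variances were assumed in the gender comparison. Assuming the pooled-variance (Student’s) independent-samples t-test, the sketch below recomputes t and its degrees of freedom from the reported means, standard deviations, and group sizes; with these inputs the t values agree with the table to within a few thousandths, which is the precision allowed by the rounded means. The p-value here is a two-sided standard-normal approximation, which is close to the t distribution at 493 degrees of freedom; `scipy.stats.ttest_ind_from_stats` gives exact t-based p-values. The helper name `pooled_t_test` is hypothetical.

```python
import math


def pooled_t_test(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t-test from summary statistics.

    Assumes equal population variances (pooled variance). The p-value is a
    two-sided normal approximation, adequate when the degrees of freedom are large.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (mean1 - mean2) / se_diff
    p_approx = math.erfc(abs(t) / math.sqrt(2))
    return t, df, p_approx


if __name__ == "__main__":
    # Male vs. female summary statistics from the table (n = 203 and 292)
    groups = {
        "PU": (27.23, 6.268, 27.69, 6.4),
        "PEOU": (27.09, 6.263, 27.39, 6.353),
        "UB": (40.8, 9.404, 41.06, 9.035),
        "A": (20.34, 4.754, 20.25, 4.957),
        "BI": (20.41, 4.825, 20.38, 4.897),
    }
    for name, (m1, s1, m2, s2) in groups.items():
        t, df, p = pooled_t_test(m1, s1, 203, m2, s2, 292)
        print(f"{name}: t({df}) = {t:.3f}, p ~ {p:.3f}")
```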

| X | → | Y | Unstandardized Regression Coefficient | SE | z (C.R. Value) | p | Standardized Regression Coefficient |
|---|---|---|---|---|---|---|---|
| Perceived usefulness | → | Usage attitude | 0.283 | 0.106 | 2.661 | 0.008 | 0.287 |
| Perceived usefulness | → | Behavioral intentions | −0.108 | 0.151 | −0.715 | 0.474 | −0.117 |
| Perceived ease of use | → | Perceived usefulness | −2.177 | 4.440 | −0.490 | 0.624 | −2.036 |
| Perceived ease of use | → | Usage attitude | 0.737 | 0.118 | 6.266 | 0.000 | 0.701 |
| Usage behavior | → | Perceived usefulness | 2.960 | 4.125 | 0.718 | 0.473 | 2.981 |
| Usage behavior | → | Perceived ease of use | 0.925 | 0.047 | 19.768 | 0.000 | 0.996 |
| Usage attitude | → | Behavioral intentions | 0.996 | 0.161 | 6.196 | 0.000 | 1.059 |
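In this table the z (critical ratio) column is the unstandardized estimate divided by its standard error, and p is the two-sided tail probability of that z under the standard normal distribution. The Python sketch below recomputes both from the published estimates; small differences in the last digit arise because the published estimates and standard errors are themselves rounded. The helper name `critical_ratio` is hypothetical.

```python
import math


def critical_ratio(estimate, se):
    """Return (z, two-sided p) for a path estimate and its standard error."""
    z = estimate / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


if __name__ == "__main__":
    paths = [
        ("Perceived usefulness -> Usage attitude", 0.283, 0.106),
        ("Perceived usefulness -> Behavioral intentions", -0.108, 0.151),
        ("Perceived ease of use -> Usage attitude", 0.737, 0.118),
        ("Usage attitude -> Behavioral intentions", 0.996, 0.161),
    ]
    for name, est, se in paths:
        z, p = critical_ratio(est, se)
        print(f"{name}: z = {z:.3f}, p = {p:.3f}")
```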

| Commonly Used Indicators | χ² | df | p | χ²/df | GFI | RMSEA | RMR | CFI | NFI | NNFI |
|---|---|---|---|---|---|---|---|---|---|---|
| Judgment criteria | - | - | >0.05 | <3 | >0.9 | <0.10 | <0.05 | >0.9 | >0.9 | >0.9 |
| Value | 361.756 | 163 | 0.000 | 2.219 | 0.930 | 0.050 | 0.092 | 0.975 | 0.955 | 0.971 |
| Other indicators | TLI | AGFI | IFI | PGFI | PNFI | SRMR | RMSEA 90% CI | | | |
| Judgment criteria | >0.9 | >0.9 | >0.9 | >0.9 | >0.9 | <0.1 | - | | | |
| Value | 0.971 | 0.910 | 0.975 | 0.722 | 0.819 | 0.026 | 0.043–0.057 | | | |
| Default (baseline) model: χ²(190) = 8063.576, p = 1.000 | | | | | | | | | | |
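Several of the incremental and parsimony indices in this table follow directly from the χ² values of the fitted model (χ² = 361.756, df = 163) and of the default baseline model (χ² = 8063.576, df = 190), together with the sample size n = 495. The Python sketch below applies the standard formulas; it assumes RMSEA uses n − 1 in the denominator (some programs use n), and it omits GFI, AGFI, PGFI, RMR, and SRMR because those need the fitted and observed covariance matrices rather than χ² alone. With these inputs the computed values agree with the table to three decimals (χ²/df ≈ 2.219, RMSEA ≈ 0.050, NFI ≈ 0.955, CFI ≈ 0.975, TLI ≈ 0.971, IFI ≈ 0.975, PNFI ≈ 0.819). The function name `fit_indices` is hypothetical.

```python
import math


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """Chi-square-based fit indices for a model (m) against a baseline model (b)."""
    excess_m = max(chi2_m - df_m, 0.0)  # model noncentrality estimate
    excess_b = max(chi2_b - df_b, 0.0)  # baseline noncentrality estimate
    nfi = (chi2_b - chi2_m) / chi2_b
    cfi_denominator = max(excess_b, excess_m)
    return {
        "chi2/df": chi2_m / df_m,
        # Assumes n - 1 in the RMSEA denominator
        "RMSEA": math.sqrt(excess_m / (df_m * (n - 1))),
        "NFI": nfi,
        "CFI": 1.0 - excess_m / cfi_denominator if cfi_denominator > 0 else 1.0,
        "TLI (NNFI)": (chi2_b / df_b - chi2_m / df_m) / (chi2_b / df_b - 1),
        "IFI": (chi2_b - chi2_m) / (chi2_b - df_m),
        "PNFI": df_m / df_b * nfi,
    }


if __name__ == "__main__":
    for name, value in fit_indices(361.756, 163, 8063.576, 190, 495).items():
        print(f"{name}: {value:.3f}")
```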

| Item | Relationship | Item | MI | Parameter Change |
|---|---|---|---|---|
| PEOU2 | ↔ | UB3 | 15.281 | −0.331 |
| PEOU1 | ↔ | UB2 | 12.338 | −0.196 |
| A2 | ↔ | PU3 | 11.390 | −0.191 |
| A1 | ↔ | BI3 | 13.450 | −0.228 |
| A1 | ↔ | BI1 | 12.647 | 0.234 |
| UB6 | ↔ | UB3 | 11.083 | 0.309 |
| UB6 | ↔ | PU2 | 10.629 | −0.228 |
| UB6 | ↔ | BI1 | 14.364 | 0.314 |
| UB6 | ↔ | A2 | 17.067 | 0.307 |
| UB5 | ↔ | PEOU2 | 15.095 | 0.276 |

| Item | Relationship | Item | MI | Parameter Change |
|---|---|---|---|---|
| Usage behavior | → | Usage attitude | 10.266 | 10.937 |
| Usage attitude | → | Perceived usefulness | 12.495 | −4.514 |

| Item | R² | Item | R² |
|---|---|---|---|
| Perceived usefulness | 0.945 | PU4 | 0.576 |
| Perceived ease of use | 0.991 | PU3 | 0.726 |
| Usage attitude | 0.949 | PU2 | 0.659 |
| Behavioral intentions | 0.903 | BI2 | 0.646 |
| BI3 | 0.615 | PU1 | 0.850 |
| UB3 | 0.496 | BI1 | 0.566 |
| UB2 | 0.767 | A3 | 0.636 |
| UB1 | 0.744 | A2 | 0.642 |
| PEOU4 | 0.603 | A1 | 0.697 |
| PEOU3 | 0.563 | UB6 | 0.543 |
| PEOU2 | 0.533 | UB5 | 0.522 |
| PEOU1 | 0.548 | UB4 | 0.604 |
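If each indicator loads on a single factor and measurement errors are uncorrelated, an indicator’s R² equals its squared standardized loading. Under that assumption, the indicator R² values in this table can be turned into the usual convergent-validity summaries: average variance extracted (AVE, the mean squared loading) and composite reliability, CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The article does not report these quantities, so the Python sketch below only illustrates the formulas applied to the published R² values; `ave_and_cr` is a hypothetical helper name.

```python
import math


def ave_and_cr(r_squared):
    """AVE and composite reliability from indicator R² values.

    Assumes each R² is a squared standardized loading (single-factor
    indicators with uncorrelated errors).
    """
    loadings = [math.sqrt(r2) for r2 in r_squared]
    ave = sum(r_squared) / len(r_squared)
    sum_loadings = sum(loadings)
    error_variance = sum(1 - r2 for r2 in r_squared)
    cr = sum_loadings ** 2 / (sum_loadings ** 2 + error_variance)
    return ave, cr


if __name__ == "__main__":
    constructs = {
        "PU": [0.850, 0.659, 0.726, 0.576],
        "PEOU": [0.548, 0.533, 0.563, 0.603],
        "UB": [0.744, 0.767, 0.496, 0.604, 0.522, 0.543],
        "A": [0.697, 0.642, 0.636],
        "BI": [0.566, 0.646, 0.615],
    }
    for name, r2 in constructs.items():
        ave, cr = ave_and_cr(r2)
        print(f"{name}: AVE = {ave:.3f}, CR = {cr:.3f}")
```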

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, F.; Pang, J.; Guo, Y.; Zhu, Y.; Zhang, H. A Study of Factors Influencing the Use of the DingTalk Online Lecture Platform in the Context of the COVID-19 Pandemic. Sustainability 2023, 15, 7274. https://doi.org/10.3390/su15097274

Chicago/Turabian Style: Zhang, Fan, Jianbo Pang, Yanlong Guo, Yelin Zhu, and Han Zhang. 2023. "A Study of Factors Influencing the Use of the DingTalk Online Lecture Platform in the Context of the COVID-19 Pandemic" Sustainability 15, no. 9: 7274. https://doi.org/10.3390/su15097274
