Study on the Prediction of the Uniaxial Compressive Strength of Rock Based on the SSA-XGBoost Model

Abstract

1. Introduction

2. Data Analysis

3. SSA-XGBoost Model

3.1. XGBoost Model

3.2. Sparrow Search Algorithm

- (1) When a predator approaches the sparrow population and is detected by an alerter, the alerter chirps to warn the population, which then relocates promptly;

- (2) The roles of discoverer and joiner can be exchanged under certain circumstances;

- (3) The lower a sparrow's energy reserve, the poorer and more dangerous the areas in which it can forage;

- (4) Joiners can compete with discoverers for food; the winners obtain the food, and the losers are forced to leave;

- (5) Discoverers have the highest energy reserves, have priority in locating areas rich in food, and provide joiners with the locations of food;

- (6) When a predator approaches the population, the sparrows at its edge nearest the predator move to a safe area and take up a better foraging position.
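The rules above translate into position updates for three groups of sparrows. The following is a minimal pure-Python sketch of the basic sparrow search algorithm, assuming the commonly cited update equations of the original SSA: discoverers contract their positions when safe or jump randomly when alarmed, joiners either follow the best discoverer or fly elsewhere when hungry, and randomly chosen alerters move toward the global best or away from the worst sparrow. The function name `ssa_minimize` and all settings (population size `n`, discoverer and alerter ratios, safety threshold `st`) are illustrative assumptions, not the configuration used in this study.

```python
import math
import random


def ssa_minimize(f, lb, ub, n=30, iters=100, pd_ratio=0.2, sd_ratio=0.1,
                 st=0.8, seed=0):
    """Minimise f over the box [lb, ub] with a basic sparrow search algorithm.

    Returns (best_position, best_value). All default settings are illustrative
    assumptions, not the values used in the study.
    """
    rng = random.Random(seed)
    d = len(lb)

    def clip(x):
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lb, ub)]

    pop = [[rng.uniform(lo, hi) for lo, hi in zip(lb, ub)] for _ in range(n)]
    fit = [f(x) for x in pop]
    n_pd = max(1, int(pd_ratio * n))  # discoverers (rule 5)
    n_sd = max(1, int(sd_ratio * n))  # alerters (rules 1 and 6)
    g_fit = min(fit)
    g_best = pop[fit.index(g_fit)][:]

    def update_best():
        nonlocal g_best, g_fit
        for x, fx in zip(pop, fit):
            if fx < g_fit:
                g_best, g_fit = x[:], fx

    for _ in range(iters):
        # Re-rank every iteration (best first) so roles can swap (rule 2).
        order = sorted(range(n), key=lambda i: fit[i])
        pop = [pop[i] for i in order]
        fit = [fit[i] for i in order]
        x_worst = pop[-1][:]
        r2 = rng.random()  # alarm value for this iteration

        # Discoverers: contract when safe, random jump when alarmed (rule 1).
        for i in range(n_pd):
            if r2 < st:
                alpha = 1.0 - rng.random()  # alpha in (0, 1]
                pop[i] = [x * math.exp(-(i + 1) / (alpha * iters)) for x in pop[i]]
            else:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [x + q for x in pop[i]]
            pop[i] = clip(pop[i])
            fit[i] = f(pop[i])
        x_p = pop[min(range(n_pd), key=lambda i: fit[i])][:]

        # Joiners: hungry ones fly elsewhere (rule 3); others compete near x_p (rule 4).
        for i in range(n_pd, n):
            if i + 1 > n / 2:
                q = rng.gauss(0.0, 1.0)
                pop[i] = [q * math.exp((w - x) / (i + 1) ** 2)
                          for w, x in zip(x_worst, pop[i])]
            else:
                a = [rng.choice((-1.0, 1.0)) for _ in range(d)]
                step = sum(ak * abs(x - p) for ak, x, p in zip(a, pop[i], x_p)) / d
                pop[i] = [p + step for p in x_p]
            pop[i] = clip(pop[i])
            fit[i] = f(pop[i])
        update_best()

        # Alerters: randomly chosen sparrows react to danger (rules 1 and 6).
        f_worst = max(fit)
        x_w = pop[fit.index(f_worst)][:]
        for i in rng.sample(range(n), n_sd):
            if fit[i] > g_fit:
                beta = rng.gauss(0.0, 1.0)
                new = [b + beta * abs(x - b) for x, b in zip(pop[i], g_best)]
            else:
                k = rng.uniform(-1.0, 1.0)
                new = [x + k * abs(x - w) / (fit[i] - f_worst + 1e-50)
                       for x, w in zip(pop[i], x_w)]
            pop[i] = clip(new)
            fit[i] = f(pop[i])
        update_best()
    return g_best, g_fit
```

For example, `ssa_minimize(lambda x: sum(v * v for v in x), [-5.0] * 3, [5.0] * 3)` searches a 3-D sphere function. Re-sorting the population at the start of each iteration is what lets a sparrow move between the discoverer and joiner groups, mirroring rule (2).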

3.3. SSA-XGBoost Composite Model

4. Prediction Results and Analysis

4.1. SSA-XGBoost Model Parameter Optimization Results

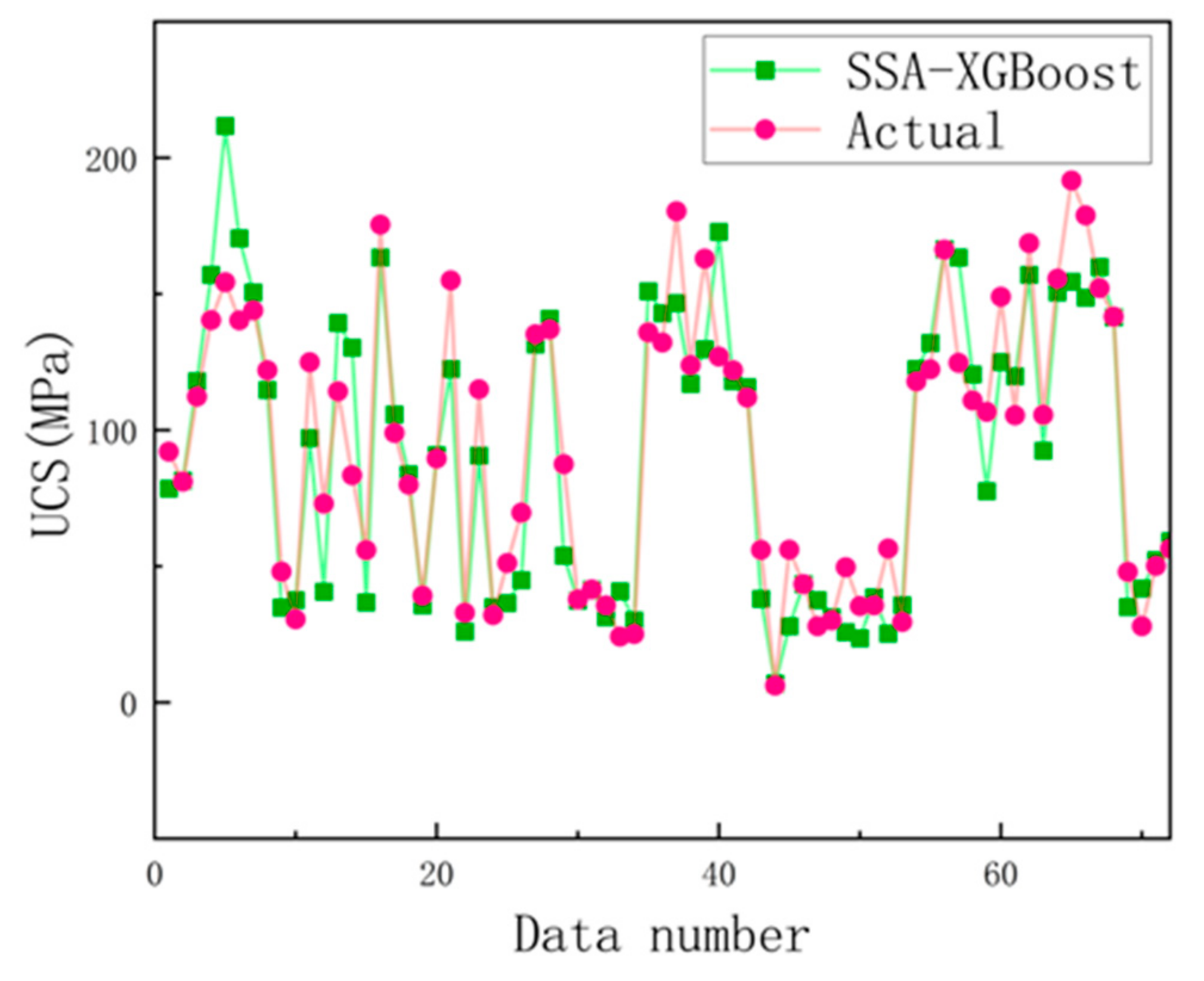

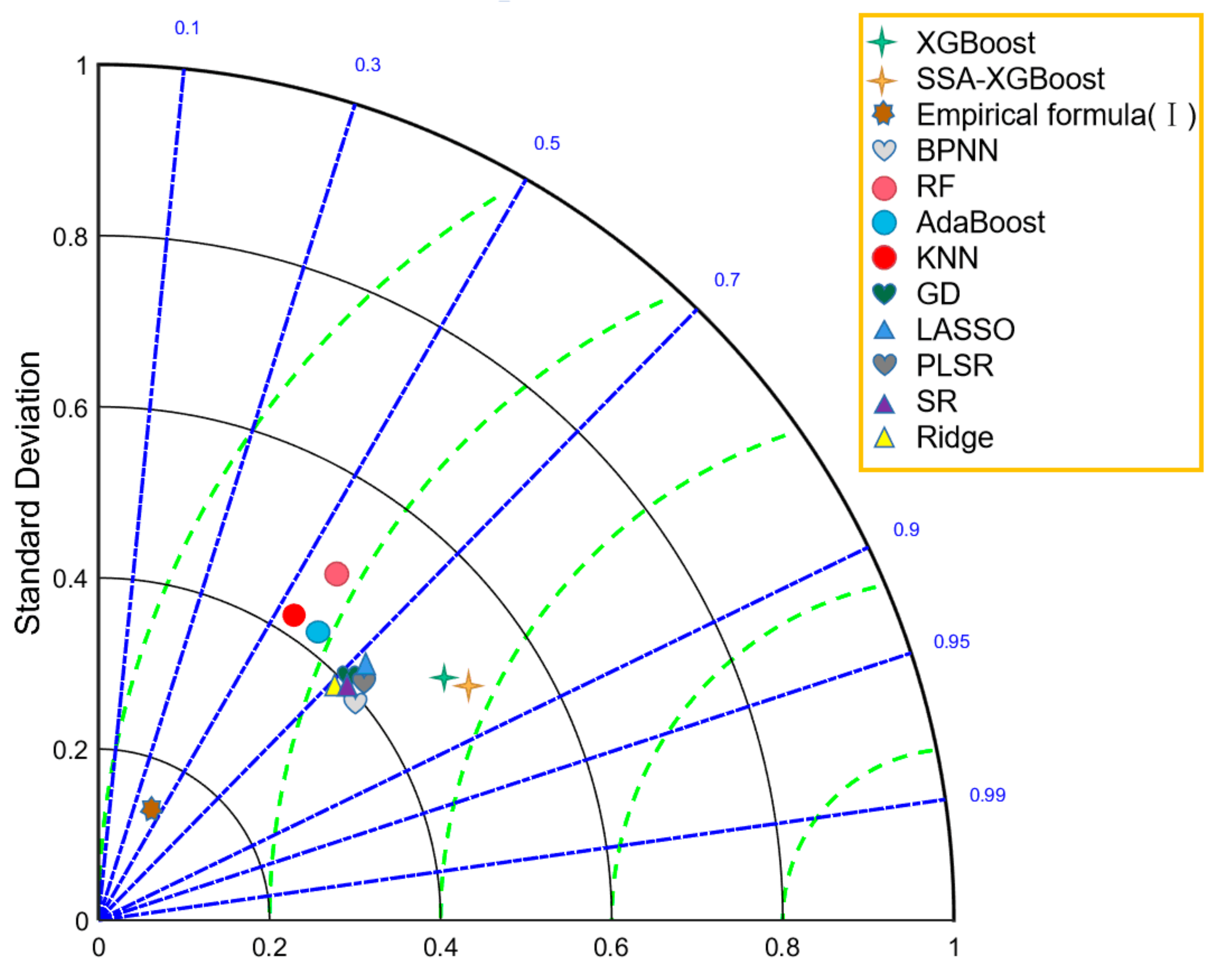

4.2. SSA-XGBoost Prediction Result Analysis

5. Conclusions

- (1) A total of 290 sets of rock sample data covering many rock types were collected in this study. An XGBoost model was built on these data, and the sparrow search algorithm (SSA) was used to optimize its parameters for better prediction performance, providing a new method for predicting the uniaxial compressive strength (UCS) of rock.

- (2) Compared with the empirical formulas and the other machine learning prediction models, the SSA-XGBoost, XGBoost, and BPNN models achieve good R², RMSE, MAE, and VAF values. Of these, the SSA-XGBoost model performs best, with the highest R² and VAF and the lowest RMSE and MAE (R² = 0.84, VAF = 81.36%, RMSE = 19.85 MPa, MAE = 14.79 MPa). This indicates that SSA-XGBoost generalizes best among the models tested and gives more accurate predictions, mitigating the lower accuracy that the other machine learning models show when predicting different types of rock.
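The four metrics used to rank the models are not defined in this excerpt. The following is a minimal sketch assuming the definitions commonly used in UCS prediction studies: R² = 1 − SS_res/SS_tot, RMSE and MAE in the units of UCS (MPa), and VAF = [1 − var(y − ŷ)/var(y)] × 100. The function name `regression_metrics` is hypothetical, and the study may compute VAF differently.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R², RMSE, MAE and VAF (%) for paired observed/predicted values.

    Definitions are the commonly used ones and are assumed, not taken from the study.
    """
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    resid = [t - p for t, p in zip(y_true, y_pred)]
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("observed values have zero variance")
    ss_res = sum(r * r for r in resid)
    mean_r = sum(resid) / n
    # Population variances; the 1/n factors cancel in the VAF ratio anyway.
    var_resid = sum((r - mean_r) ** 2 for r in resid) / n
    var_true = ss_tot / n
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(r) for r in resid) / n,
        "VAF": (1.0 - var_resid / var_true) * 100.0,
    }
```

A prediction that is uniformly 1 MPa too high, such as `regression_metrics([1, 2, 3, 4], [2, 3, 4, 5])`, gives RMSE = MAE = 1 and VAF = 100 but an R² of only 0.2: VAF ignores a constant bias while R² penalizes it, so the two metrics are complementary.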

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sample No. | Porosity n/% | Schmidt rebound number Rn | P-wave velocity Vp/(km·s⁻¹) | Point load strength Is(50)/MPa | UCS/MPa |
|---|---|---|---|---|---|
| 1 | 3.35 | 27.42 | 5.66 | 3.00 | 63.68 |
| 2 | 9.35 | 27.38 | 5.38 | 2.86 | 47.46 |
| 3 | 8.14 | 30.13 | 5.09 | 3.63 | 37.89 |
| 4 | 2.24 | 25.75 | 5.81 | 3.10 | 56.08 |
| 5 | 9.76 | 27.38 | 5.35 | 3.39 | 43.46 |
| 6 | 9.64 | 27.63 | 4.82 | 2.54 | 25.17 |
| 7 | 4.50 | 26.00 | 5.59 | 3.16 | 30.96 |
| 8 | 8.32 | 29.75 | 5.25 | 4.01 | 27.08 |
| 9 | 9.45 | 29.13 | 5.39 | 3.74 | 41.51 |
| 10 | 2.11 | 26.13 | 5.54 | 2.73 | 28.00 |
| … | … | … | … | … | … |
| 281 | 1.18 | 57.00 | 4.76 | 3.90 | 143.00 |
| 282 | 4.41 | 57.00 | 5.57 | 4.00 | 131.00 |
| 283 | 0.35 | 60.00 | 6.00 | 3.00 | 127.00 |
| 284 | 2.32 | 52.00 | 4.75 | 3.30 | 122.00 |
| 285 | 0.66 | 59.00 | 5.84 | 2.20 | 112.00 |
| 286 | 2.39 | 55.00 | 4.58 | 2.60 | 111.00 |
| 287 | 0.98 | 50.00 | 4.71 | 1.70 | 72.00 |
| 288 | 6.25 | 48.00 | 3.48 | 1.50 | 70.00 |
| 289 | 4.17 | 46.00 | 4.50 | 1.70 | 62.00 |
| 290 | 12.37 | 41.00 | 3.21 | 1.60 | 56.00 |

| Model | Maximum Iterations | Learning Rate | Maximum Tree Depth |
|---|---|---|---|
| SSA-XGBoost | 88 | 0.9019 | 5 |
| XGBoost | 70 | 0.9495 | 9 |
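SSA moves sparrows through a continuous space, whereas two of the three tuned hyperparameters above are integers. The following is a minimal sketch of one way to couple the two, assuming that "maximum iterations" corresponds to XGBoost's `n_estimators` (number of boosting rounds) and that each sparrow position is a 3-vector (n_estimators, learning_rate, max_depth). The names `BOUNDS`, `decode_position`, and `make_fitness` and the bound values are hypothetical; the study's actual search ranges are not given in this excerpt.

```python
# Hypothetical search bounds; the study's actual ranges are not stated here.
BOUNDS = {
    "n_estimators": (10, 200),      # assumed to correspond to "maximum iterations"
    "learning_rate": (0.01, 1.0),
    "max_depth": (2, 10),
}
INTEGER_PARAMS = {"n_estimators", "max_depth"}


def decode_position(position):
    """Map a continuous sparrow position to a dict of XGBoost hyperparameters."""
    params = {}
    for value, (name, (lo, hi)) in zip(position, BOUNDS.items()):
        value = min(max(value, lo), hi)          # keep inside the search box
        if name in INTEGER_PARAMS:
            value = int(round(value))            # integer hyperparameters
        params[name] = value
    return params


def make_fitness(score_fn):
    """Wrap a scorer so an optimiser can minimise it over raw positions.

    score_fn(params) should return a validation error such as RMSE; in practice
    it might fit xgboost.XGBRegressor(**params) on a training split and score
    it on held-out data.
    """
    def fitness(position):
        return score_fn(decode_position(position))
    return fitness
```

Under these assumptions, a sparrow at `[88.4, 0.9019, 5.2]` decodes to `n_estimators=88`, `learning_rate=0.9019`, `max_depth=5`, the SSA-XGBoost row above. Rounding inside the decoder, rather than in the optimizer, keeps the search itself purely continuous.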

| Model | R² | RMSE/MPa | MAE/MPa | VAF/% |
|---|---|---|---|---|
| XGBoost | 0.81 | 25.43 | 17.25 | 70.98 |
| SSA-XGBoost | 0.84 | 19.85 | 14.79 | 81.36 |
| Empirical formula (I) | 0.43 | 64.03 | 50.79 | 39.10 |
| Empirical formula (II) | −1.43 | 83.46 | 69.44 | 44.80 |
| SVM | −1.43 | 75.88 | 56.25 | −103.3 |
| BPNN | 0.75 | 24.47 | 20.12 | 75.43 |
| RF | 0.58 | 31.71 | 23.30 | 57.41 |
| AdaBoost | 0.60 | 30.88 | 24.64 | 58.88 |
| KNN | 0.54 | 33.00 | 26.66 | 52.67 |
| GD | 0.71 | 26.15 | 20.94 | 71.21 |
| LASSO | 0.73 | 25.03 | 22.78 | 69.07 |
| PLSR | 0.73 | 24.99 | 22.81 | 73.75 |
| SR | 0.73 | 24.96 | 22.50 | 73.75 |
| Ridge | 0.71 | 26.82 | 24.47 | 65.93 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, B.; Tan, Y.; Sun, W.; Ma, T.; Liu, H.; Wang, D. Study on the Prediction of the Uniaxial Compressive Strength of Rock Based on the SSA-XGBoost Model. Sustainability 2023, 15, 5201. https://doi.org/10.3390/su15065201

Xu B, Tan Y, Sun W, Ma T, Liu H, Wang D. Study on the Prediction of the Uniaxial Compressive Strength of Rock Based on the SSA-XGBoost Model. Sustainability. 2023; 15(6):5201. https://doi.org/10.3390/su15065201

Chicago/Turabian style: Xu, Bing, Youcheng Tan, Weibang Sun, Tianxing Ma, Hengyu Liu, and Daguo Wang. 2023. "Study on the Prediction of the Uniaxial Compressive Strength of Rock Based on the SSA-XGBoost Model." Sustainability 15, no. 6: 5201. https://doi.org/10.3390/su15065201

APA style: Xu, B., Tan, Y., Sun, W., Ma, T., Liu, H., & Wang, D. (2023). Study on the Prediction of the Uniaxial Compressive Strength of Rock Based on the SSA-XGBoost Model. Sustainability, 15(6), 5201. https://doi.org/10.3390/su15065201