Abstract

With the exponential growth of educational data, increasing attention has been given to student learning supported by learning analytics dashboards. Related research has indicated that dashboards relying on descriptive analytics are deficient compared to those using more advanced analytics. However, there is a lack of empirical data demonstrating how different types of analytics in dashboards perform and differ. To investigate this, the study used a controlled, between-groups experimental method to compare the effects of descriptive and prescriptive dashboards on learning outcomes. Based on the learning analytics results, the descriptive dashboard describes the learning state and the prescriptive dashboard provides suggestions for learning paths. The results show that both descriptive and prescriptive dashboards can effectively promote students’ cognitive development. The advantage of the prescriptive dashboard over the descriptive dashboard lies in its promotion of learners’ learning strategies. In addition, learners’ prior knowledge and learning strategies determine the extent of the impact of dashboard feedback on learning outcomes.

1. Introduction

With the exponential growth of educational data analysis and an increasing appetite for using data in decision making, the use of learning analytics dashboards (LADs) in school instruction is becoming more prevalent, which has inspired significant research and development efforts around LADs [1].

In technology-enabled learning scenarios, learning analytics can improve learning by measuring, collecting, analyzing, and reporting on student learning data to understand and improve the learning context [2], enable objective and accurate judgments about the state of student learning, and provide a scientific basis for the content of student self-directed learning.

As tools to facilitate teaching and learning activities, learning analytics dashboards provide insights based on data collected by Learning Management Systems and Student Information Systems, and have traditionally been used to help instructors monitor their entire program, and provide a scientific basis for student learning strategies and content to promote self-regulated learning.

The constructivist-based theoretical model of self-regulated learning views learners as active subjects who use a range of cognitive, physical, and digital tools to process raw information to create learning artifacts and progress toward their learning goals [3]. Zimmerman and Schunk [4] describe self-regulated learning as the desired outcome of the process of “the systematic generation of ideas and behaviors by students to achieve their learning goals” [5]. Learners regulate their learning process by continuously assessing the effectiveness of their learning and of their chosen learning strategies. This metacognitive monitoring process is influenced by a range of internal and external conditions. The former includes prior knowledge, affective state, and learning ability, while external conditions depend to a large extent on elements of the instructional environment, such as the role of the teacher, course requirements, and the availability and form of feedback [6].

In essence, a learning analytics dashboard is the sense-making component of a learning analytics system. The construction of data-mining-based metrics is the intrinsic basis of the design, and the appropriate form of media representation is an important external element. Therefore, because the learning dashboard is a visualization-based learning analytics tool, it is important to design its interface so that it conveys effective information.

Studies classify dashboards from different perspectives, such as classifying dashboards into teacher dashboards and student dashboards according to users or classifying dashboards into three categories according to application scenarios: for traditional face-to-face lectures, for face-to-face group work, and for awareness, reflection, sensemaking, and behavior change in online or blended learning [7]. Based on the type of feedback information, dashboards can be categorized into descriptive analytics, predictive analytics, and prescriptive analytics [8]. The focus of descriptive analytics is to answer the question “what happened”, the goal of predictive analytics is to provide a response to the question “what will happen”, and the focus of prescriptive analytics is to articulate the learning process as “what we should do”. Researchers have designed and developed different dashboards for different learning environments and groups of learners with these analytics. However, the question of what the ‘right’ information is to display to different stakeholders and the question of how this information should be presented remain largely unresolved [9]. In recent years, a growing body of research has suggested that dashboards that rely on descriptive analysis have limited effectiveness, and that predictive and prescriptive analysis can provide more valuable feedback than descriptive analysis; among them, the most sophisticated and insightful form of analysis is prescriptive analytics, which combines the results of descriptive and predictive analytics to suggest the best teaching and learning actions to teachers and/or students [10]. Empirical research on dashboards has explored the impact of dashboards on learning and the role of individual learner traits; however, the relationship between the type of feedback provided on dashboards and the learning outcomes is unclear.

The purpose of this study is to explore the changes in students’ learning outcomes, learning strategies, and learning attitudes in dashboards containing different categories of feedback information (the simplest and most elementary form, descriptive analytics, and the most complex and revealing form, prescriptive analytics). It also aims to explore how internal factors of learners, such as differences in levels of prior knowledge experience, differences in levels of learning strategies, and differences in learning attitudes, affect the effectiveness of different types of learning analytics dashboards. To explore the differences in learning effectiveness between the two types of dashboard feedback, the main research method used in this study is an experimental research approach.

2. Literature Review

2.1. Feedback on the Dashboards

In general, learning analytics consists of four steps: measurement, acquisition, analysis, and reporting [11]. The LAD is the final step in learning analytics, namely the presentation of data analysis results. In recent years, although scholars continue to invest in learning analytics and its applications [12], there has been no consensus on the definition of LAD [9], and researchers use a variety of terms to describe LADs, such as “dashboard for learning analytics”, “educational dashboard”, “data dashboard”, and “web dashboard”. Beyond definitional differences, the larger challenge is that the question of what the “right” information is to display to different stakeholders, and how that information should be presented, remains largely unresolved due to the increasing amounts of available data and the candidate indicators that can be incorporated [9,13]. According to the type of feedback information contained in the dashboard, most dashboards can be classified as descriptive analytics, predictive analytics, and prescriptive analytics [8,14].

By evaluating and reporting on transactional and interactive data about the learning process, descriptive analytics, which are visualized by learning analytics dashboards, are aimed at identifying relevant patterns and insights [15]. Typical descriptive analytics feedback includes information on grades, pass rate predictions, length of online learning, online learning behaviors, online interactions, etc. Examples include learning analytics data on participation, interaction, discussion content keywords, discussion message types, and the distribution of debate opinions for asynchronous online discussions [16]; knowledge elaboration, behavioral patterns, and social interaction information for collaborative learning [17]; and information on collaborative network diagrams, artifact interactions, feedback, artifact progress, task progress, and task execution, as well as learning outcomes, for team collaboration [18].

The predictive analytics visualized by learning analytics dashboards are designed to forecast future trends based on the likelihood of what will happen next and to warn teachers and students by assessing the chance of dropping out, failing a course, and/or achieving a certain success rate. Typical predictive feedback includes grades, pass rates, and dropout predictions, such as the probability of earning a score of 50 or more in a course [9], supporting the decision-making process of academic advisers through comparative and predictive analysis [19]. This type of dashboard helps at-risk students succeed in their programs by identifying and supporting them in order to provide early intervention [20].

Those dashboards that provide prescriptive analytics are designed to combine the results of descriptive and predictive analytics to offer suggestions on the best teaching and learning actions for instructors and students to take. Examples include behavioral recommendations for groups in the collaborative learning process in relation to the emotional state and discussion content [21,22], and the generation of suggestions for corrective revision actions based on learners’ reading behavior [23]. Some dashboards provide multiple types of feedback simultaneously, such as comparative and predictive analysis in support of the academic advisor’s decision-making process [19], or provide students with class-comparative descriptive components as well as the student’s predicted final grade [24].

While researchers have tried, in practice, to find some usable learning analytics to present visually as dashboards, Stephen Few [25] notes that, while visually appealing, many dashboards lack the ability to provide truly useful information. In previous studies, dashboard designers have chosen the “right” information based on reviews of learning theory [5,26], exploratory data analysis [27], researcher empirical judgments [28], or findings from other studies to select predictor variables that are closely related to achievement [29].

Currently, researchers consider dashboards that rely on descriptive analytics to be severely deficient compared with those that include more advanced analytics. Recent studies have also pointed to prescriptive analytics as being more sophisticated and capable of providing more valuable information than descriptive and predictive analysis, but few studies have attempted to explore the differences in feedback information types. What could be found was Valle’s exploration of the differences between descriptive and predictive dashboards, but the authors reported that the predictive dashboard helped only the highly motivated students to sustain their motivation levels, while both dashboards failed to demonstrate their effectiveness in affecting final outcomes [30]. Thus, there is still a lack of empirical data comparing and demonstrating the differences in the impact of different types of learning analytics as feedback in a dashboard on learning effectiveness in the same educational environment.

To make learning analytics dashboards a useful tool for instructional decision making and learning support, determining what information should be displayed in dashboards in a timely and accurate manner, and how that information should be displayed, becomes a related research challenge. To determine the differences in the effectiveness of the different types of feedback in dashboards, particularly descriptive and prescriptive analytics, application practice should be conducted in real teaching environments to compare dashboards with different types of feedback and to establish which type of learning analytics feedback is more effective in facilitating learning.

2.2. The Impact of Dashboards on Student Learning

The Learning Analytics Dashboard (LAD) is a visualization system for curating and presenting data about student learning and engagement in educational settings [9]. Learning analytics dashboards already have many current implementations in higher education environments, such as dashboards for students to monitor classroom progress [1], dashboards for faculty to monitor student learning and receive feedback on teaching practices [31], and dashboards for university administrators to manage and support students, faculty, and staff [32].

Most LADs are designed to be scalable across many students, courses, and organizational units so that they can be easily deployed across the university to reach an increasing number of students, faculty, and staff [33,34]. There are also some dashboards designed for employees working in student support services, such as academic advising [35].

Although some studies point out that many published learning analytics dashboards are only in the prototype stage, and those in the pilot implementation stage are in the minority [36,37], in fact, many of them have been used in actual teaching and learning activities and related studies have measured many effects of these dashboards on the learning process. In studies where dashboards were actually used in instructional activities and the effects were measured, researchers have explored the impact of dashboards on student learning in a variety of learning environments, such as using dashboards in face-to-face classrooms to promote offline collaborative learning [38], sending regular emails with links to dashboards to promote student achievement in higher education [39], enabling students to enhance the effectiveness of self-regulated learning through indirect contact between teachers and dashboards [40], and using process-oriented learning feedback to enhance student learning outcomes [41]. These studies explore the impact of dashboards on the learning process in different teaching environments and teaching models, but the promoting effect of dashboards on academic performance is not always explicit in research findings, with the common conclusion that dashboards changed student behavior patterns, but the experimental group showed only a non-significant advantage in performance [18,39].

As the use of learning analytics to track the learning process in real time enables the timely personalization of students’ learning paths and the provision of personalized resources and support, studies have shown that technology support for the self-directed learning process enables students to make more or better use of learning strategies and thus improve academic performance [42,43,44].

By analyzing the learners’ personal characteristics, the researchers found that students’ effectiveness in learning processes supported by learning analytics dashboards was not only related to the design of the dashboard information, or the type of feedback, but also closely related to their personal trait factors such as affect and metacognition. For example, Aguilar [40] found a correlation between student achievement goal orientation and the effect of indirect exposure to the dashboard. Jivet [45] used a mixed-methods approach to investigate the impact of learner goals and self-regulated learning (SRL) skills on dashboard meaning formation, identifying three potential variables: transparency of design, frame of reference, and support for action. Joseph-Richard [21] found that college students’ affective responses when viewing the predictive learning analysis (PLA) dashboard influenced their self-regulated learning behaviors. Kokoc’s practice confirms that both metacognitive and dashboard interaction behaviors are significant predictors of academic performance [46]. Although some possible learner factors were identified, there is still a lack of research evidence on how these internal factors from individual learners show differences in learning effectiveness in relation to the type of feedback on the dashboard.

In previous studies, the type of feedback information contained in the dashboard was not generally considered as a factor when improving dashboard design. Approaches from design theory, such as co-creation [47] and human-centered design [48], are not commonly used in dashboard design, and researchers usually use the results of the dashboard application to evaluate the effectiveness of the dashboard information design. Researchers have compared the effectiveness of different dashboard systems in terms of the availability of notifications [49], recommendation engines [50], and convenience [51] in increasing dashboard interaction and improving performance and, thus, student learning engagement [52]. There is a lack of research on the relationship between the type of feedback information included in the dashboard and differences in application effectiveness.

Overall, while some studies have confirmed the impact of dashboards on learning behavior and effectiveness, and found that prescriptive analytics is a more mature type of analysis that provides more insight than descriptive analytics, there are inconsistent findings regarding whether dashboards can guide behavioral change and promote learning effectiveness, and what the “right” information to present on dashboards should be. Studies have also found that the learning effectiveness of dashboards is related to students’ personal trait factors, but their relationship with dashboard feedback is not yet established. Therefore, in this study, dashboards containing different types of feedback were designed to experimentally compare their learning effectiveness, while also considering some internal factors that influence the learning process, mainly how students’ learning strategies, learning attitudes, and prior knowledge levels affect learning outcomes in different types of dashboards. The specific research hypotheses are as follows:

Hypothesis 1 (H1).

A dashboard with prescriptive analytics has a better impact on learning performance than a dashboard with descriptive analytics.

Hypothesis 2 (H2).

A dashboard with prescriptive analytics has a better impact on learning strategy than a dashboard with descriptive analytics.

Hypothesis 3 (H3).

A dashboard with prescriptive analytics has a better impact on learning attitude than a dashboard with descriptive analytics.

Hypothesis 4 (H4).

The effectiveness of learning analytics in facilitating learning is related to individual differences in learners.

3. Methods

3.1. Participants and Methods

The experimental method was used as the main research method for this study. Three fourth-grade classes with comparable achievement from an elementary school in Ningxia, China, participated in a controlled between-groups experiment. There were 77 male students and 68 female students. One class of 48 students was randomly selected as the control group, and the other two classes, with 48 and 49 students, served as the experimental groups, each using one of two different types of dashboards in the subject of mathematics. The class that served as the control group did not undergo any intervention other than data collection. Before this experiment, none of the students had been exposed to a similar LA intervention system.

Based on the student learning process data collected by the learning platform, the fluctuations in learning effectiveness of the students in the experimental groups were tracked over time to see whether personalized learning supported by the two dashboards could significantly improve student learning. In addition, based on the self-regulated learning theory and the findings of some recent studies related to the influence of external and internal factors of learning on learning effectiveness, a questionnaire was used to explore the differences in students’ learning strategies in three dimensions (cognitive, metacognitive, and resource management) and the differences in students’ learning attitudes in three dimensions (affective, behavioral, and cognitive). Finally, the learning performance of the experimental and control groups in the pre- and post-experimental tests was compared and analyzed to see whether there were significant differences in the effects of different types of dashboards and traditional learning styles.

3.2. Research Tools

The two dashboards used in this study are based on the “Big Data for Learning” platform developed by the research group, which uses a cognitive diagnosis engine to analyze students’ cognitive states. To compare the application effects of descriptive and prescriptive feedback, we designed a descriptive dashboard with descriptive analytics and a prescriptive dashboard with prescriptive analytics. Both visually present the analytic results of students’ current learning to the students themselves and provide feedback and support for their adaptive and personalized learning.

3.2.1. Descriptive Dashboard

The primary purpose of descriptive analytics is to provide a response to the inquiry “What has already occurred?”. It is the simplest form of extracting insights from data.

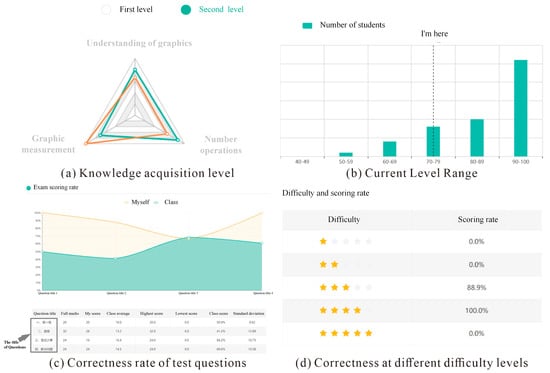

The descriptive dashboard used in this study was deployed in a traditional face-to-face elementary school classroom setting and addressed the cognitive outcomes of classroom learning activities. It included real-time cognitive diagnostics based on subject knowledge mapping, class-level and grade-level academic comparisons, and accurate error correction based on cognitive diagnostic results. Based on the study of our new curriculum standards and syllabus, the subject knowledge map was constructed through structured interviews with experts and front-line teachers and embedded in a learning analysis system. The system performs cognitive diagnostics on the results of each assignment and quiz, and the descriptive dashboard provides learners with a visual display of the cognitive diagnostic results. At the same time, it provides students with comparative information on the average knowledge mastery level of the class and grade, the score band in which their test results are located, the total number of students in each score band, and the correct rate of test questions, as shown in Figure 1.

Figure 1. Descriptive dashboard.
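
The comparative feedback described for the descriptive dashboard can be illustrated with a minimal Python sketch. It is not the platform's implementation: the function name `describe_scores`, the 0 to 100 score scale, the 10-point band width, and any sample data are assumptions introduced only for illustration.

```python
from statistics import mean


def describe_scores(student_score, class_scores, grade_scores, band_width=10):
    """Summarise one student's score against class and grade results.

    Hypothetical helper: scores are assumed to lie between 0 and 100,
    and a score of 100 is placed in the top band.
    """
    top_band_start = 100 - band_width

    def band_label(score):
        # Lower edge of the band containing `score`, capped at the top band.
        low = min(int(score // band_width) * band_width, top_band_start)
        return f"{low}-{low + band_width}"

    # Count how many classmates fall into each score band.
    band_counts = {}
    for score in class_scores:
        label = band_label(score)
        band_counts[label] = band_counts.get(label, 0) + 1

    return {
        "student_score": student_score,
        "class_mean": mean(class_scores),
        "grade_mean": mean(grade_scores),
        "student_band": band_label(student_score),
        "band_counts": band_counts,
    }
```

A dashboard front end could render the returned dictionary as the class and grade comparison and the score-band distribution; the correct rate of individual test questions would need item-level data that this sketch does not model.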

The reason for providing student learning analysis information in the form of comparisons in the descriptive dashboard is that, according to social comparison theory, people tend to self-evaluate through social comparisons by which they identify their attributes, which is called self-evaluative social comparison. When the dashboard provides students with academic comparison information, it gives students a basis for social comparison and thus helps to motivate learning and promote self-regulated learning. Since providing academic comparison information enhances student perceptions [8], many applications of learning analytics in research have included this basis for student motivation by comparing student behavior or performance indicators to class averages.

3.2.2. Prescriptive Dashboard

The goal of the prescriptive analytics dashboard is to express “what we should do” during the learning process. Prescriptive analytics can be used to enable the underlying model to infer possible cause-and-effect relationships, thereby advising users and providing suggestions on which specific behavioral changes are most likely to produce positive outcomes [10]. Common prescriptive feedback includes information on learning content suggestions, grade trend predictions, pass rate predictions, learning behavior suggestions, and learning strategy suggestions.

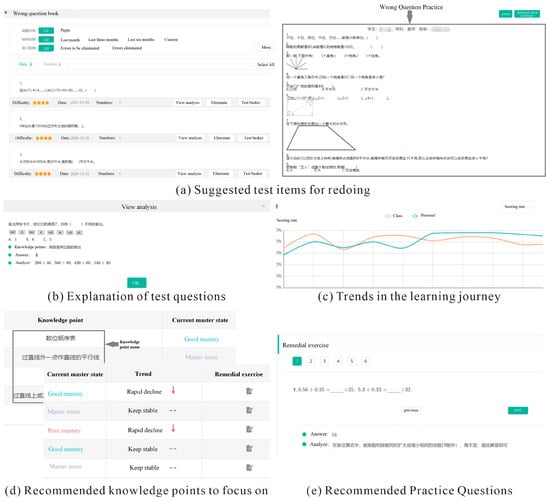

In this study, the prescriptive dashboard includes suggested questions, learning journey trends based on stage academic analysis, and suggested knowledge points (including learning resources and practice questions). The wrong question book module of the prescriptive dashboard automatically categorizes and consolidates test questions into student error books based on the cognitive diagnostic results, and requires students to practice on their own after class. Through the statistics of students’ learning history and visualization within a certain period, the prescriptive dashboard analyzes students’ stage learning processes as a whole, understands students’ individual learning and knowledge mastery in a certain stage, presents a graph of students’ personal information compared with class information, and provides a basis for students to master their learning fluctuations. At the same time, according to the results of academic prediction, the prescriptive dashboard accurately locates the weak points of students’ knowledge mastery and recommends personalized practice tests and other learning resources, as shown in Figure 2.

Figure 2. Prescriptive dashboard.
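
As a rough illustration of how weak knowledge points might be turned into suggestions, the following Python sketch ranks knowledge points by a mastery estimate and attaches practice resources. The study does not describe the platform's actual recommendation logic, so the mastery scale (0 to 1), the 0.6 threshold, the function name `recommend_knowledge_points`, and the resource identifiers are all hypothetical.

```python
def recommend_knowledge_points(mastery, resources, threshold=0.6, top_n=3):
    """Return up to `top_n` weakest knowledge points with suggested resources.

    mastery:   dict mapping knowledge point -> estimated mastery in [0, 1]
               (assumed to come from a cognitive diagnosis step).
    resources: dict mapping knowledge point -> list of resource identifiers.
    """
    # Keep only knowledge points below the (assumed) mastery threshold,
    # ordered from weakest to strongest.
    weak_points = sorted(
        (kp for kp, level in mastery.items() if level < threshold),
        key=lambda kp: mastery[kp],
    )
    # Pair each weak point with its resources (empty list if none are known).
    return [(kp, resources.get(kp, [])) for kp in weak_points[:top_n]]
```

Ranking by lowest mastery first is only one possible design choice; a real system could also weight prerequisite relations in the knowledge map or predicted performance.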

3.3. Evaluation Procedure

Relying on the learning analytics system developed by the project team as a support platform, an intelligent learning environment was built in the experimental school to collect students’ learning data in class and after class, mine and analyze learning activity data based on artificial intelligence, educational big data, and learning analytics technology, and track, monitor and evaluate the learning process. Students’ academic development evaluations are automatically formed through the big data platform, and finally a descriptive dashboard containing descriptive analysis and a prescriptive dashboard presenting prescriptive analysis are formed based on the learning analysis results.

In this process, teachers design and carry out teaching based on the results of data analysis, students self-regulate their learning based on the results of data analysis presented in the dashboard, and the platform collects and records relevant data generated by students in their learning activities to historically track the fluctuations of learners’ learning effectiveness.

In addition to the dashboard data in the system, this study used pre-tests, post-tests, and questionnaires to measure students’ learning outcomes, learning attitudes, learning strategies, and learning styles. Both the pre-test and the post-test were designed by three teachers with over ten years of teaching experience, and the tests aimed to evaluate the students’ knowledge level. Both tests consisted of three types of items (multiple-choice, fill-in-the-blank, and application problems), with a total score of 100. For instance, in the multiple-choice items, the students were asked to select the correct option from among three alternative answers. In the application problems, students were asked to apply their mathematical knowledge to solve problems posed based on virtual events.

The attitude questionnaire was adapted from the Test of Science Related Attitudes (TOSRA), with a total of 25 items, of which 10 were affective, nine were behavioral, and six were cognitive. The reason for the division into these three dimensions is that, according to the A-B-C structural theory of attitudes, attitudes are composed of three components: affect (A), behavior (B), and cognition (C) [53]. A five-point Likert scale was adopted, where 1 represented “Strongly disagree” and 5 represented “Strongly agree.” The Cronbach’s alpha value of the questionnaire was 0.83.
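
For readers unfamiliar with the reliability coefficient reported above, the following Python sketch shows the standard Cronbach's alpha formula, alpha = k/(k-1) × (1 − Σ item variances / variance of total scores). The function name and any input data are illustrative assumptions; the study's raw questionnaire responses are not available here.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    responses: list of lists, one inner list of k item scores per respondent.
    Uses sample variances (n - 1 denominator) throughout.
    """
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose: one tuple per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)
```

Values around 0.8 or higher are commonly read as indicating good internal consistency, which is consistent with how the reported 0.83 is used in the text.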

The learning strategies questionnaire proposed by Wang [54] was adopted, composed of cognitive strategies, metacognitive strategies and resource management, and the questionnaire consisted of 30 questions.

Before the experiment began, students’ initial academic level was determined by measuring their attitudes, learning strategies, and learning styles using questionnaires.

In the learning analysis system, performance data from multiple homework assignments were collected for cognitive analysis, and the prior cognitive structure distribution of each student was determined and used for individualized learning feedback and support. To avoid experimental errors caused by teachers’ and students’ unfamiliarity with the system, the researcher conducted three weeks of operational training with teachers on the use of the dashboard before the start of the experiment to help teachers use the data collection system to upload daily generated student learning data; no individualized interventions were conducted during this period. From the fourth week onwards, the experiment was formally conducted. During the implementation of the experiment, the two experimental groups used the descriptive and prescriptive dashboards, respectively, while the control group was not given any type of dashboard. A post-test was administered at the end of the eighth week to understand the changes in the students.

At the end of the experiment, a single proficiency diagnostic was administered to all students and the learning strategies and attitudes to learning questionnaires were given to the experimental class again.

4. Results

4.1. H1. Learning Performance of the Descriptive Dashboard and the Prescriptive Dashboard

4.1.1. Comparison between Two Experimental Groups and the Control Group

Two tests of comparable difficulty, examining the same knowledge content, were used to diagnose the cognition of students in the three classes before and after using the dashboard to confirm the change in performance before and after the experiment. In order to determine the differences in the initial achievement levels of the three groups, the pre-test scores of the students in the three groups were compared by one-way ANOVA, and it was found that there were no statistically significant differences in the pre-test scores of the three groups, although the scores were not identical. Therefore, it can be concluded that there was no significant difference in the overall cognitive level of the three groups before the experiment.

The ANOVA results for the post-test scores, on the other hand, showed significant differences among the mean scores of the three groups, and further comparison of the mean scores revealed that both experimental groups performed better than the control group. In other words, whether using the descriptive dashboard or the prescriptive dashboard, students’ learning performance improved significantly after learning with the dashboard.
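
The one-way ANOVA used for these group comparisons can be sketched in plain Python as follows. This is a generic textbook computation of the F statistic, not the authors' analysis script; the function name is an assumption, and obtaining the p-value additionally requires the F distribution (for example via `scipy.stats.f_oneway`, which is not used here to keep the sketch dependency-free).

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Each argument is a sequence of scores for one group.
    """
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    group_means = [mean(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual scores around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

With three groups, the first degree of freedom is 2 and the second is the total number of students minus 3, which matches the F(2, ·) form reported in the results.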

4.1.2. Comparison between the Two Experimental Groups

Comparing the post-experimental scores between the two experimental groups, we also found that although the mean cognitive level of group B after using the dashboard was slightly higher than that of group A, there was no significant difference between the cognitive levels of experimental group A (using the descriptive dashboard) and experimental group B (using the prescriptive dashboard) (see Table 1).

Table 1. Results of the ANOVA analysis of learning effects.

4.2. H2. Learning Strategy of the Descriptive Dashboard and the Prescriptive Dashboard

To determine the differences in learning strategy levels between the experimental and control groups, a one-way ANOVA was first used to compare the learning strategy levels of students in the three classes, which showed significant differences (F(2,143) = 3.817, p = 0.024). The results of the post hoc comparisons revealed that the strategy levels in experimental group B were significantly higher than in the other two groups.

Comparing the overall learning strategy performances of the two experimental groups using independent samples t-tests revealed that experimental group B (using the prescriptive analytics dashboard) showed higher levels of learning strategies compared to experimental group A (using the descriptive analytics dashboard) (see Table 2), and we speculate that students in this experimental group were effectively trained in learning strategies based on the intervention process of descriptive analytics during the use of the prescriptive dashboard. Exploring students’ learning strategies in three separate areas, cognitive, metacognitive, and resource management, we found significant differences in cognitive and metacognitive strategies between the two experimental groups, non-significant differences in resource management strategies, and better results of the prescriptive dashboard in cognitive and metacognitive strategies. Therefore, we concluded that the prescriptive analytics of the dashboard could promote students’ cognitive and metacognitive strategies.
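
The independent samples t-test mentioned above can be sketched as follows. The source does not state whether equal variances were assumed, so this sketch uses the classic pooled-variance (Student's) form as an assumption; the function name is hypothetical, and the p-value would again require the t distribution (for example via `scipy.stats.ttest_ind`).

```python
from math import sqrt
from statistics import mean, variance


def independent_t(a, b):
    """Return (t, df) for Student's t-test with pooled variance.

    a, b: score sequences for two independent groups.
    """
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # Pooled variance: size-weighted average of the two sample variances.
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    standard_error = sqrt(pooled * (1 / n1 + 1 / n2))
    t_stat = (mean(a) - mean(b)) / standard_error
    return t_stat, df
```

A positive t here means the first group's mean is higher, so passing group B's strategy scores first would yield a positive statistic if group B scored higher.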

Table 2.

Result of the t-test of the learning strategy.
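The independent samples t-test used for the group A versus group B comparison can be sketched as follows. This is a minimal example assuming the classic pooled-variance (Student) form of the test, which the text does not specify; the function name `pooled_t` and the scores are hypothetical and are not the study’s data.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Return (t, df) for a pooled-variance independent samples t-test.

    A positive t means sample_b has the higher mean.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pooled variance: sample variances weighted by their degrees of freedom.
    pooled_var = (
        (n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)
    ) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_b) - mean(sample_a)) / standard_error, df


# Hypothetical strategy scores (illustrative only, not the study's data).
descriptive_group = [3.0, 3.2, 3.4, 3.6]
prescriptive_group = [3.5, 3.7, 3.9, 4.1]

t_stat, df = pooled_t(descriptive_group, prescriptive_group)
print(f"t({df}) = {t_stat:.3f}")
```

The same function could be applied separately to the cognitive, metacognitive, and resource management subscale scores to mirror the per-area comparison.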

4.3. H3. Learning Attitude of the Descriptive Dashboard and the Prescriptive Dashboard

To determine whether initial learning attitude levels differed between the experimental and control groups, a one-way ANOVA was used to compare the pre-experimental learning attitude levels of the three groups of students; it did not show significant differences (F(2,144) = 0.914, p = 0.404).

Comparing the levels of learning attitudes in the three groups after the experiment revealed that experimental group B had the best learning attitudes and the control group had the worst, but the one-way ANOVA showed no significant difference in the overall level of learning attitudes between the students in the experimental and control groups (F(2,144) = 2.921, p = 0.057). Comparing the students’ attitudes toward learning in each of the three dimensions (affective, cognitive, and behavioral) again did not show significant differences.

Comparing the performance of students with different attitude levels revealed a positive correlation between the level of learning attitudes and students’ performance. Thus, although the dashboard did not have a significant effect on students’ learning attitudes, the effectiveness of dashboard-based learning depends to some extent on whether students have positive learning attitudes.

4.4. H4. Influence of Individual Factors

4.4.1. Prior Knowledge Level

To compare how much different students benefited from learning supported by the analytics feedback of the two dashboards, we subtracted each student’s pretest cognitive diagnostic score from the posttest score and used this pre–post score difference as the measure of improvement. Using Pearson’s correlation analysis, the correlation between the pretest score and the pre–post score difference was calculated separately for the two groups of students to explore the relationship between the level of prior knowledge and the learning enhancement effect in the two experimental classes (Table 3). Prior knowledge level and score difference were significantly negatively correlated in both experimental groups, meaning that students with lower initial proficiency improved more than those with higher initial proficiency through learning analytics feedback from both the descriptive dashboard and the prescriptive dashboard. This suggests that students with weaker cognitive levels need more support from external learning tools, and that independent learning with the help of tools such as dashboards can lead to better learning outcomes.

Table 3.

Correlation analysis of student learning effectiveness.
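The gain-score analysis above can be illustrated with a short sketch: compute each student’s pre–post score difference, then correlate it with the pretest score using Pearson’s r. The function name `pearson_r` and the scores are hypothetical and are not the study’s data; significance testing is omitted.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Hypothetical pretest and posttest scores (illustrative only, not the study's data).
pretest = [40, 55, 70, 85]
posttest = [70, 75, 82, 90]

# Pre-post score difference: posttest minus pretest for each student.
gains = [post - pre for pre, post in zip(pretest, posttest)]

r = pearson_r(pretest, gains)
print(f"gains = {gains}, r = {r:.3f}")
```

In this toy data set, students with lower pretest scores gain more, so r is negative, which is the direction of the relationship reported for both experimental groups.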

4.4.2. Learning Attitude Level

The effect of the learning attitude level on learning outcomes was not significant in either experimental group. Students with better learning attitudes had only a slight, statistically insignificant advantage in learning outcomes. This may be because the learning process for this group of students is highly regulated: they are required to complete daily learning tasks regardless of their attitudes towards the course.

4.4.3. Learning Strategy Level

The results of the one-way ANOVA showed no significant association between strategy level and score difference in experimental group B, and a significant positive relationship between strategy level and score difference in experimental group A. That is, a high level of learning strategy leads to higher learning effectiveness when learning with the descriptive dashboard, whereas effectiveness with the prescriptive dashboard is not affected by the level of learning strategy. This may be because students in experimental group A need to fully mobilize their learning abilities to select appropriate learning strategies when faced with information from the descriptive dashboard in order to learn effectively. For the students using the prescriptive dashboard, the dashboard already gave specific learning paths and suggestions, so the learning process did not require students to call on their own metacognitive abilities; the selection of learning strategies was already carried out by the dashboard system, and the students’ own learning strategy levels therefore did not have a significant impact on their learning effectiveness.

5. Findings

Two dashboards containing different types of learning analytics data were designed for this study. One of the dashboards visually displays descriptive analytics of learning status, and the other provides students with prescriptive analysis based on learning status. Both learning analytics dashboards provide students with timely feedback on their learning after each test or exercise, and save the analytics to the system. We explored the differences in the use of descriptive and prescriptive analytics by comparing the performance of students using the two dashboards.

First, prescriptive analytics on the dashboard had a slightly better, although not significantly different, impact on learning performance than descriptive analytics, and even simple descriptive analytics had a significant positive impact on learning outcomes.

The comparison of the two experimental groups and the control group shows that both dashboards facilitate student learning, and that the effect is slightly greater for the group using the prescriptive dashboard. Current research on learning analytics, especially technical research, has mostly focused on exploring predictors and dashboard presentation, but in practice even simple descriptive analytics can still have significant effects on learning performance. A summary of previous research findings shows that the learning effects of dashboards are not always significant [18,39]. Comparing the information visualized in those dashboards with that in the dashboards we designed, we believe the difference stems from how closely the dashboard information is tied to cognitive processing. In both dashboards used in this study, the descriptive and prescriptive analytics addressed students’ cognitive structures, whereas the dashboards that did not produce significant achievement changes were usually used as tools for progress monitoring and did not involve students’ cognitive processing. In contrast, dashboards that have had a significant impact on learning performance usually require students to change their learning strategies based on the results of the learning analysis.

Second, the prescriptive analytics of the dashboard are beneficial to the students’ independent learning skills. By investigating the differences in learning effectiveness and learning strategies between the two dashboards, we found that the class using the prescriptive dashboard not only showed higher cognitive levels after the experiment, but the class also had a higher average level of learning strategies than the other classes. This indicates that the prescriptive dashboard can promote both students’ learning outcomes and their independent learning skills. According to previous research, the advantage of prescriptive analytics is its ability to simplify the learner’s decision-making process by providing adaptive learning recommendations to maximize learning outcomes [10]. That is, by directly telling the learner what to do, prescriptive analytics provides the learner with a model of how to properly adjust the learning process, and the learner gains better decision-making skills as he or she substitutes and compares his or her own judgments with the decisions made by the learning analytics. In contrast, when the dashboard uses descriptive analytics, no learning path suggestions are provided; students are simply told what is happening, and then learners invoke their own metacognition to choose a learning strategy and have no way of knowing whether their learning decisions are efficient. Not surprisingly, when we compared the achievement gains of students with different strategy levels during the experiment, we also found a correlation between the learning effects of the descriptive dashboard and students’ learning strategy levels. This finding is also similar to those of studies in traditional learning environments [55].

Therefore, an effective learning analytics dashboard for students with low self-directed learning ability should provide some learning strategy support to help plan learning paths, guide students on “what to do”, and improve learning outcomes by enhancing students’ learning strategy levels. The design and application of the dashboard should take into account whether the feedback from the dashboard can adequately motivate students’ learning strategies.

Third, learning analytics feedback on dashboards has a greater enhancement effect on learners with low levels of prior knowledge. In comparing the learning outcomes of students with different levels of prior knowledge, we found that although students at all levels benefited from the learning support provided by the dashboard, the learning analytics feedback from the dashboard led to a greater improvement in learning outcomes for students with lower initial cognitive levels than for other students. Constructivist learning theory assumes that learners often build on their own experiences when learning new information and solving new problems, relying on their own cognitive abilities to form explanations of the problems, and it therefore often emphasizes the role of learning scaffolding in the learning process [56]. According to the theories of the zone of proximal development and scaffolding, students who are less able to learn are in greater need of such learning support.

Fourth, individual learner differences have a large impact on the learning outcomes supported by learning analytics dashboards. Our analysis of student learning effectiveness in the two experimental groups shows that differences in the type of dashboard feedback, while leading to differences in students’ learning abilities, did not lead to differences in their academic performance. In terms of student achievement, internal factors that come from individual learners, such as their level of prior knowledge and self-regulated learning ability, have a greater impact on learning performance. This finding is consistent with the findings of Jovanović et al. [6]. At the individual learner level, descriptive dashboards are more appropriate for students with higher levels of learning strategies, whereas prescriptive dashboards that explicitly tell students what to do are better suited to students with lower levels of self-directed learning. Therefore, future research on the design of learning dashboards should consider not only the characteristics of the feedback information but also internal student factors, for example by using different forms of feedback for students who differ in learning ability and learning attitudes. Individual student differences should also be fully considered when designing strategies to facilitate student learning using learning analytics or other techniques.

6. Conclusions

By visually presenting learning analytics results, learning analytics dashboards can communicate the results of data analysis efficiently, and have thus become a major learning analytics application tool in teaching and learning environments. In this study, we compared the effects of a learning analytics dashboard containing descriptive analytics and a learning analytics dashboard containing prescriptive analytics on academic achievement in offline elementary schools, and found that the advantage of the prescriptive dashboard over the descriptive dashboard was its facilitative effect on learners’ learning strategies. In terms of learning achievement, both descriptive and prescriptive dashboards were effective in promoting students’ cognitive development, and the magnitude of improvement in learning achievement was very similar for both. There were also no significant differences between the two dashboards in terms of learners’ attitudes toward learning. In addition, an examination of the relationship between learner differences and learning effectiveness revealed that learners’ prior knowledge and learning strategies were factors that determined the magnitude of the impact of dashboard feedback on learning outcomes.

This study suggests that, when learning analytics information is used to support the learning process, prescriptive analytics on learning analytics dashboards are the more advantageous type of feedback for facilitating the development of students’ self-regulated learning skills. At the same time, both descriptive and prescriptive analytics can be beneficial to learning, especially when students’ prior knowledge and self-directed learning skills are deficient. Further analysis of the impact of more fine-grained learning analytics feedback elements on the learning process is needed to explore the potential of learning analytics to influence the self-directed learning process. There is also a need to explore how to improve learning data collection through concomitant sensing techniques during the application of learning analytics systems, in order to reduce the workload of teachers and students and improve their experience of using these systems. By examining the effects of learning analytics feedback types on learning, this study provides useful insights for researchers and teachers who intend to use learning analytics to improve the learning process of elementary school students. When using learning analytics to support self-regulated learning processes, the feedback information used should be carefully considered, and the likely effects of its application should be anticipated based on the type of feedback. However, the learning analytics in this study were only used to improve students’ independent learning after class, and further exploration is needed on how to provide more real-time feedback in the classroom, with the help of various interactive technologies, to enhance students’ classroom performance. In future work, we will further explore the differences between feedback elements of the learning analytics dashboard in terms of learning performance, as well as the mechanisms of action and the practical effects of learning analytics information on the learning process.

Author Contributions

Conceptualization, H.W.; methodology, H.W.; software, T.H.; validation, H.W. and Y.Z.; formal analysis, H.W.; investigation, H.W.; resources, T.H.; data curation, H.W.; writing—original draft preparation, H.W.; writing—review and editing, T.H.; visualization, S.H.; supervision, T.H.; project administration, T.H.; funding acquisition, T.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant No. 61977033), the State Key Program of National Natural Science of China (Grant No. U20A20229) and the Central China Normal University National Teacher Development Collaborative Innovation Experimental Base Construction Research Project (Grant No. CCNUTEIII 2021-03).

Institutional Review Board Statement

The study was approved by the Ethic Institutional Review Board of Central China Normal University (Approval Code: CCNU-IRB-202006008b).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

1. Bodily, R.; Verbert, K. Review of Research on Student-Facing Learning Analytics Dashboards and Educational Recommender Systems. IEEE Trans. Learn. Technol. 2017, 10, 405–418.

2. Yoo, Y.; Lee, H.; Jo, I.-H.; Park, Y. Educational Dashboards for Smart Learning: Review of Case Studies. In Emerging Issues in Smart Learning; Chen, G., Kumar, V., Kinshuk, Huang, R., Kong, S.C., Eds.; Lecture Notes in Educational Technology; Springer: Berlin/Heidelberg, Germany, 2015; pp. 145–155.

3. Winne, P.H. A metacognitive view of individual differences in self-regulated learning. Learn. Individ. Differ. 1996, 8, 327–353.

4. Zimmerman, B.J.; Schunk, D.H. Self-Regulated Learning and Academic Achievement: Theoretical Perspectives; Routledge: Uniondale, NY, USA, 2001.

5. Sedrakyan, G.; Malmberg, J.; Verbert, K.; Järvelä, S.; Kirschner, P.A. Linking learning behavior analytics and learning science concepts: Designing a learning analytics dashboard for feedback to support learning regulation. Comput. Hum. Behav. 2018, 107, 105512.

6. Jovanović, J.; Saqr, M.; Joksimović, S.; Gašević, D. Students matter the most in learning analytics: The effects of internal and instructional conditions in predicting academic success. Comput. Educ. 2021, 172, 104251.

7. Verbert, K.; Govaerts, S.; Duval, E.; Santos, J.L.; Van Assche, F.; Parra, G.; Klerkx, J. Learning dashboards: An overview and future research opportunities. Pers. Ubiquitous Comput. 2013, 18, 1499–1514.

8. Kokoç, M.; Altun, A. Effects of learner interaction with learning dashboards on academic performance in an e-learning environment. Behav. Inf. Technol. 2019, 40, 161–175.

9. Schwendimann, B.A.; Rodriguez-Triana, M.J.; Vozniuk, A.; Prieto, L.P.; Boroujeni, M.S.; Holzer, A.; Gillet, D.; Dillenbourg, P. Perceiving Learning at a Glance: A Systematic Literature Review of Learning Dashboard Research. IEEE Trans. Learn. Technol. 2016, 10, 30–41.

10. Susnjak, T.; Ramaswami, G.S.; Mathrani, A. Learning analytics dashboard: A tool for providing actionable insights to learners. Int. J. Educ. Technol. High. Educ. 2022, 19, 1–23.

11. Siemens, G.; Long, P. Penetrating the Fog: Analytics in Learning and Education. Educ. Rev. 2011, 46, 30.

12. Sønderlund, A.L.; Hughes, E.; Smith, J. The efficacy of learning analytics interventions in higher education: A systematic review. Br. J. Educ. Technol. 2018, 50, 2594–2618.

13. Leitner, P.; Ebner, M.; Ebner, M. Learning Analytics Challenges to Overcome in Higher Education Institutions. In Utilizing Learning Analytics to Support Study Success; Ifenthaler, D., Mah, D.-K., Yau, J.Y.-K., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 91–104.

14. Umer, R.; Susnjak, T.; Mathrani, A.; Suriadi, L. Current stance on predictive analytics in higher education: Opportunities, challenges and future directions. Interact. Learn. Environ. 2021, 1–26.

15. Daniel, B. Big Data and analytics in higher education: Opportunities and challenges. Br. J. Educ. Technol. 2014, 46, 904–920.

16. Yoo, M.; Jin, S.-H. Development and Evaluation of Learning Analytics Dashboards to Support Online Discussion Activities. Educ. Technol. Soc. 2020, 23, 1–18.

17. Bao, H.; Li, Y.; Su, Y.; Xing, S.; Chen, N.-S.; Rosé, C.P. The effects of a learning analytics dashboard on teachers’ diagnosis and intervention in computer-supported collaborative learning. Technol. Pedagog. Educ. 2021, 30, 287–303.

18. Zamecnik, A.; Kovanović, V.; Grossmann, G.; Joksimović, S.; Jolliffe, G.; Gibson, D.; Pardo, A. Team interactions with learning analytics dashboards. Comput. Educ. 2022, 185, 104514.

19. Gutiérrez, F.; Seipp, K.; Ochoa, X.; Chiluiza, K.; De Laet, T.; Verbert, K. LADA: A learning analytics dashboard for academic advising. Comput. Hum. Behav. 2018, 107, 105826.

20. Hu, Y.-H.; Lo, C.-L.; Shih, S.-P. Developing early warning systems to predict students’ online learning performance. Comput. Hum. Behav. 2014, 36, 469–478.

21. Joseph-Richard, P.; Uhomoibhi, J.; Jaffrey, A. Predictive learning analytics and the creation of emotionally adaptive learning environments in higher education institutions: A study of students’ affect responses. Int. J. Inf. Learn. Technol. 2021, 38, 243–257.

22. Zheng, L.; Zhong, L.; Niu, J. Effects of personalised feedback approach on knowledge building, emotions, co-regulated behavioural patterns and cognitive load in online collaborative learning. Assess. Eval. High. Educ. 2021, 47, 109–125.

23. Sadallah, M.; Encelle, B.; Maredj, A.-E.; Prié, Y. Towards fine-grained reading dashboards for online course revision. Educ. Technol. Res. Dev. 2020, 68, 3165–3186.

24. Fleur, D.S.; van den Bos, W.; Bredeweg, B. Learning Analytics Dashboard for Motivation and Performance. In Intelligent Tutoring Systems; Kumar, V., Troussas, C., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2020; pp. 411–419.

25. Few, S. Information Dashboard Design; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2006.

26. Iandoli, L.; Quinto, I.; De Liddo, A.; Shum, S.B. Socially augmented argumentation tools: Rationale, design and evaluation of a debate dashboard. Int. J. Hum.-Comput. Stud. 2014, 72, 298–319.

27. Feild, J. Improving Student Performance Using Nudge Analytics; International Educational Data Mining Society: Paris, France, 2015.

28. Sansom, R.L.; Bodily, R.; Bates, C.O.; Leary, H. Increasing Student Use of a Learner Dashboard. J. Sci. Educ. Technol. 2020, 29, 386–398.

29. Ott, C.; Robins, A.; Haden, P.; Shephard, K. Illustrating performance indicators and course characteristics to support students’ self-regulated learning in CS1. Comput. Sci. Educ. 2015, 25, 174–198.

30. Valle, N.; Antonenko, P.; Valle, D.; Sommer, M.; Huggins-Manley, A.C.; Dawson, K.; Kim, D.; Baiser, B. Predict or describe? How learning analytics dashboard design influences motivation and statistics anxiety in an online statistics course. Educ. Technol. Res. Dev. 2021, 69, 1405–1431.

31. Brown, M. Seeing students at scale: How faculty in large lecture courses act upon learning analytics dashboard data. Teach. High. Educ. 2020, 25, 384–400.

32. Guerra, J.; Ortiz-Rojas, M.; Zúñiga-Prieto, M.A.; Scheihing, E.; Jiménez, A.; Broos, T.; De Laet, T.; Verbert, K. Adaptation and evaluation of a learning analytics dashboard to improve academic support at three Latin American universities. Br. J. Educ. Technol. 2020, 51, 973–1001.

33. Ahn, J.; Campos, F.; Hays, M.; Digiacomo, D. Designing in Context: Reaching Beyond Usability in Learning Analytics Dashboard Design. J. Learn. Anal. 2019, 6, 70–85.

34. Widjaja, H.A.E.; Santoso, S.W. University Dashboard: An Implementation of Executive Dashboard to University. In Proceedings of the 2014 2nd International Conference on Information and Communication Technology (ICoICT), Bandung, Indonesia, 28–30 May 2014; IEEE: Bandung, Indonesia, 2014; pp. 282–287.

35. Hilliger, I.; De Laet, T.; Henríquez, V.; Guerra, J.; Ortiz-Rojas, M.; Zuñiga, M.Á.; Baier, J.; Pérez-Sanagustín, M. For Learners, with Learners: Identifying Indicators for an Academic Advising Dashboard for Students. In Addressing Global Challenges and Quality Education; Alario-Hoyos, C., Rodríguez-Triana, M.J., Scheffel, M., Arnedillo-Sánchez, I., Dennerlein, S.M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; pp. 117–130.

36. Chen, L.; Lu, M.; Goda, Y.; Yamada, M. Design of Learning Analytics Dashboard Supporting Metacognition; International Association for the Development of the Information Society: Lisbon, Portugal, 2019.

37. Yilmaz, F.G.K.; Yilmaz, R. Learning analytics as a metacognitive tool to influence learner transactional distance and motivation in online learning environments. Innov. Educ. Teach. Int. 2020, 58, 575–585.

38. Han, J.; Kim, K.H.; Rhee, W.; Cho, Y.H. Learning analytics dashboards for adaptive support in face-to-face collaborative argumentation. Comput. Educ. 2020, 163, 104041.

39. Hellings, J.; Haelermans, C. The effect of providing learning analytics on student behaviour and performance in programming: A randomised controlled experiment. High. Educ. 2020, 83, 1–18.

40. Aguilar, S.J.; Karabenick, S.A.; Teasley, S.D.; Baek, C. Associations between learning analytics dashboard exposure and motivation and self-regulated learning. Comput. Educ. 2020, 162, 104085.

41. Wang, D.; Han, H. Applying learning analytics dashboards based on process-oriented feedback to improve students’ learning effectiveness. J. Comput. Assist. Learn. 2020, 37, 487–499.

42. Blau, I.; Shamir-Inbal, T. Re-designed flipped learning model in an academic course: The role of co-creation and co-regulation. Comput. Educ. 2017, 115, 69–81.

43. Jung, H.; Park, S.W.; Kim, H.S.; Park, J. The effects of the regulated learning-supported flipped classroom on student performance. J. Comput. High. Educ. 2021, 34, 132–153.

44. Lai, C.-L.; Hwang, G.-J. A self-regulated flipped classroom approach to improving students’ learning performance in a mathematics course. Comput. Educ. 2016, 100, 126–140.

45. Jivet, I.; Scheffel, M.; Schmitz, M.; Robbers, S.; Specht, M.; Drachsler, H. From students with love: An empirical study on learner goals, self-regulated learning and sense-making of learning analytics in higher education. Internet High. Educ. 2020, 47, 100758.

46. Kokoc, M.; Kara, M. A Multiple Study Investigation of the Evaluation Framework for Learning Analytics: Instrument Validation and the Impact on Learner Performance. Educ. Technol. Soc. 2021, 24, 16–28.

47. Sanders, E.B.-N.; Stappers, P.J. Co-creation and the new landscapes of design. Codesign 2008, 4, 5–18.

48. Liu, W.; Lee, K.-P.; Gray, C.M.; Toombs, A.L.; Chen, K.-H.; Leifer, L. Transdisciplinary Teaching and Learning in UX Design: A Program Review and AR Case Studies. Appl. Sci. 2021, 11, 10648.

49. Xu, Z.; Makos, A. Investigating the Impact of a Notification System on Student Behaviors in a Discourse-Intensive Hybrid Course: A Case Study. In Proceedings of the Fifth International Conference on Learning Analytics and Knowledge; LAK ’15, Poughkeepsie, NY, USA, 16–20 March 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 402–403.

50. Holanda, O.; Ferreira, R.; Costa, E.; Bittencourt, I.I.; Melo, J.; Peixoto, M.; Tiengo, W. Educational resources recommendation system based on agents and semantic web for helping students in a virtual learning environment. Int. J. Web Based Communities 2012, 8, 333–353.

51. Muldner, K.; Wixon, M.; Rai, D.; Burleson, W.; Woolf, B.; Arroyo, I. Exploring the Impact of a Learning Dashboard on Student Affect. In Artificial Intelligence in Education; Conati, C., Heffernan, N., Mitrovic, A., Verdejo, M.F., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; pp. 307–317.

52. Kearsley, G.; Shneiderman, B. Engagement Theory: A Framework for Technology-Based Teaching and Learning. Educ. Technol. 1998, 38, 20–23.

53. Abelson, R.P.; Rosenberg, M.J. Symbolic Psycho-Logic: A Model of Attitudinal Cognition. In Attitude Change; Routledge: Uniondale, NY, USA, 1968.

54. Wang, Y. Study on Learning Strategies of High-Efficiency Mathematics Learning in Grades 5–6 of Primary School; Tianjin Normal University: Tianjin, China, 2019.

55. Theobald, M.; Bellhäuser, H.; Imhof, M. Deadlines don’t prevent cramming: Course instruction and individual differences predict learning strategy use and exam performance. Learn. Individ. Differ. 2021, 87, 101994.

56. Kellen, K.; Antonenko, P. The role of scaffold interactivity in supporting self-regulated learning in a community college online composition course. J. Comput. High. Educ. 2017, 30, 187–210.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).