Telepresence Robot with DRL Assisted Delay Compensation in IoT-Enabled Sustainable Healthcare Environment

Abstract

1. Introduction

2. Literature Review

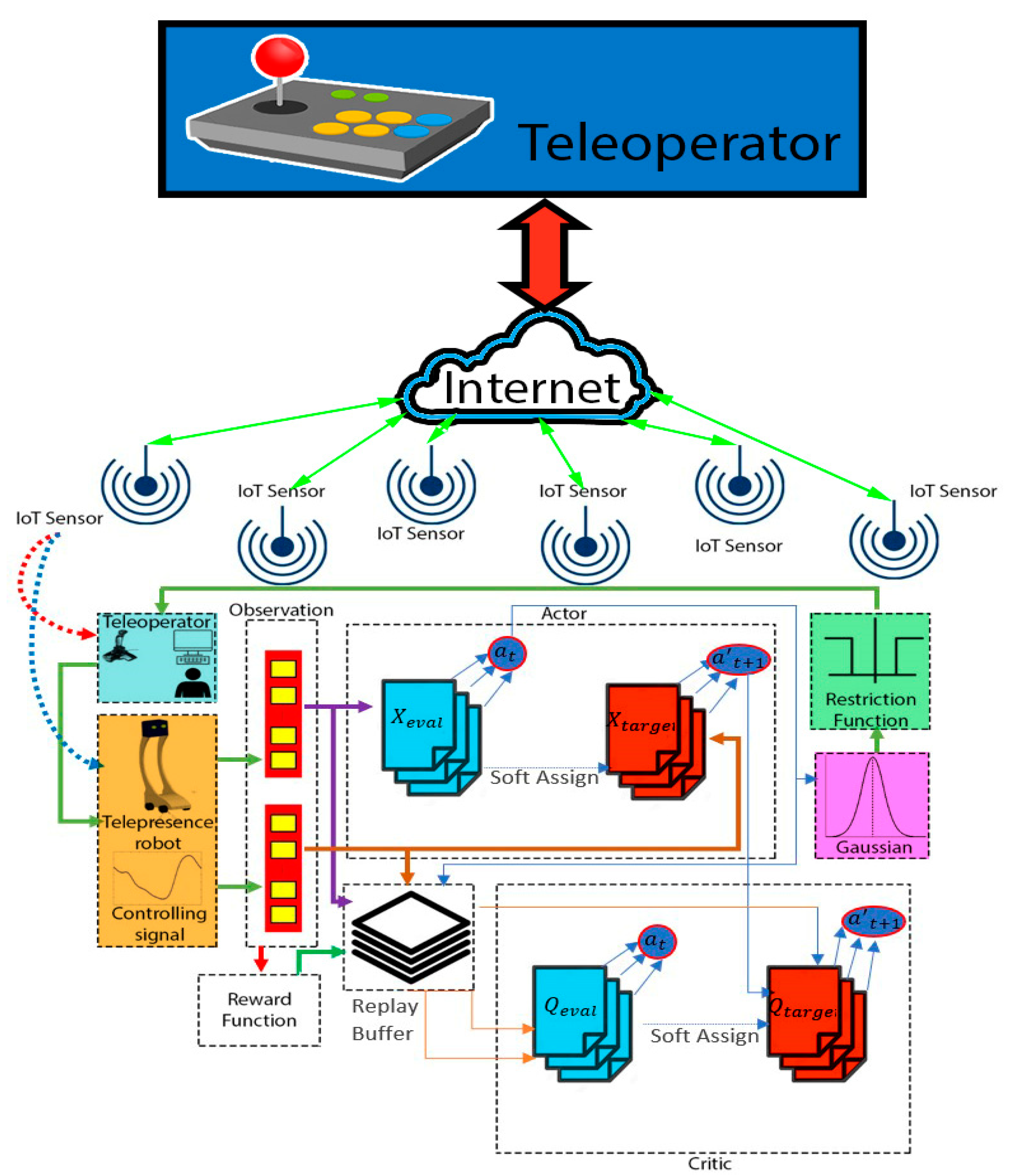

3. The Proposed Model

- Convolutional neural networks (CNNs) are used to approximate the actor and critic networks, which are updated during training to improve the algorithm's convergence.

- To prevent overfitting caused by correlation between consecutive samples, we store experience in a replay buffer and train on randomly drawn samples.

- To improve network convergence and stabilize the learning process, we maintain both the main network and a separate target network.
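The replay buffer and target-network mechanisms listed above can be illustrated with a short Python sketch. It assumes that a transition is a `(state, action, reward, next_state)` tuple and that network weights are flat lists of floats. The names `ReplayBuffer` and `soft_update` are hypothetical, and the blending rule is the common soft (Polyak) target update, which the text does not state explicitly.

```python
import random
from collections import deque
from typing import Deque, List, Tuple

# Hypothetical transition layout: (state, action, reward, next_state)
Transition = Tuple[List[float], List[float], float, List[float]]


class ReplayBuffer:
    """Fixed-capacity FIFO store of past transitions (illustrative sketch)."""

    def __init__(self, capacity: int) -> None:
        # A deque with maxlen discards the oldest transition once full.
        self.buffer: Deque[Transition] = deque(maxlen=capacity)

    def store(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int) -> List[Transition]:
        # Uniform random sampling breaks the correlation between
        # consecutive steps of the same episode.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


def soft_update(target: List[float], online: List[float], tau: float) -> List[float]:
    """Assumed soft update: each target weight w' becomes tau * w + (1 - tau) * w'."""
    return [tau * w + (1.0 - tau) * wt for wt, w in zip(target, online)]
```

With a capacity of 3, storing a fourth transition evicts the first, so sampling draws only from the three most recent transitions. A small `tau` makes the target network trail the main network slowly, which is what stabilizes the learning targets.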

| Algorithm 1. Training of Telepresence Robot Control Agent | |
|---|---|
| 1: | telepresence robot state, teleoperator command state. |
| 2: | initialize network with random values |
| 3: | initialize replay buffer |
| 4: | for each episode do |
| 5: | observe the current state of the telepresence robot |
| 6: | for each step in the environment do |
| 7: | select action from the network, according to the |
| 8: | wait 1 s to observe the telepresence robot's status |
| 9: | observe reward |
| 10: | update current state |
| 11: | store the transition in the replay buffer |
| 12: | |
| 13: | |
| 14: | end for |
| 15: | end for |
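The control flow of Algorithm 1 can be sketched in Python as below. The robot interface (`reset`, `act`, `observe`) and the `policy` callable are hypothetical stand-ins for the telepresence robot and the network used in line 7. Lines 12 and 13, whose content is not given, are left out, so this sketch only collects transitions.

```python
import time
from typing import Callable, List, Tuple

State = List[float]
Transition = Tuple[State, float, float, State]


def run_training_episodes(
    reset: Callable[[], State],
    act: Callable[[float], None],
    observe: Callable[[], Tuple[State, float]],
    policy: Callable[[State], float],
    episodes: int,
    steps_per_episode: int,
    observe_delay_s: float = 1.0,
) -> List[Transition]:
    """Collect transitions following the loop structure of Algorithm 1 (sketch)."""
    replay_buffer: List[Transition] = []                       # line 3
    for _ in range(episodes):                                  # line 4
        state = reset()                                        # line 5: current robot state
        for _ in range(steps_per_episode):                     # line 6
            action = policy(state)                             # line 7: select action
            act(action)                                        # send the command to the robot
            time.sleep(observe_delay_s)                        # line 8: wait before observing
            next_state, reward = observe()                     # lines 8-9: status and reward
            replay_buffer.append((state, action, reward, next_state))  # line 11
            state = next_state                                 # line 10: advance the state
            # Lines 12-13 (network updates) are omitted in this sketch.
    return replay_buffer
```

Passing `observe_delay_s=0.0` lets the loop run against a simulated robot; on hardware, the 1 s default mirrors line 8.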

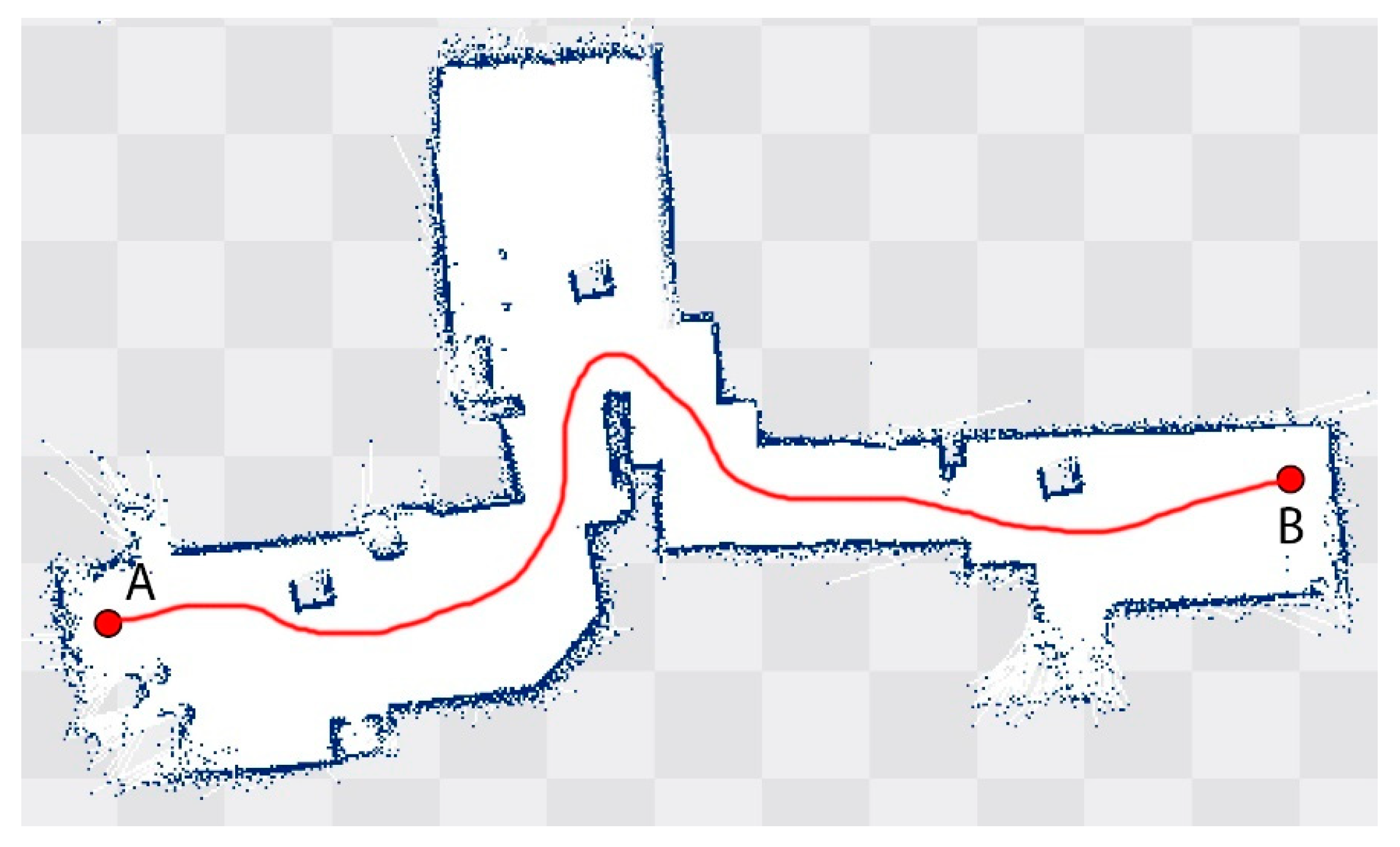

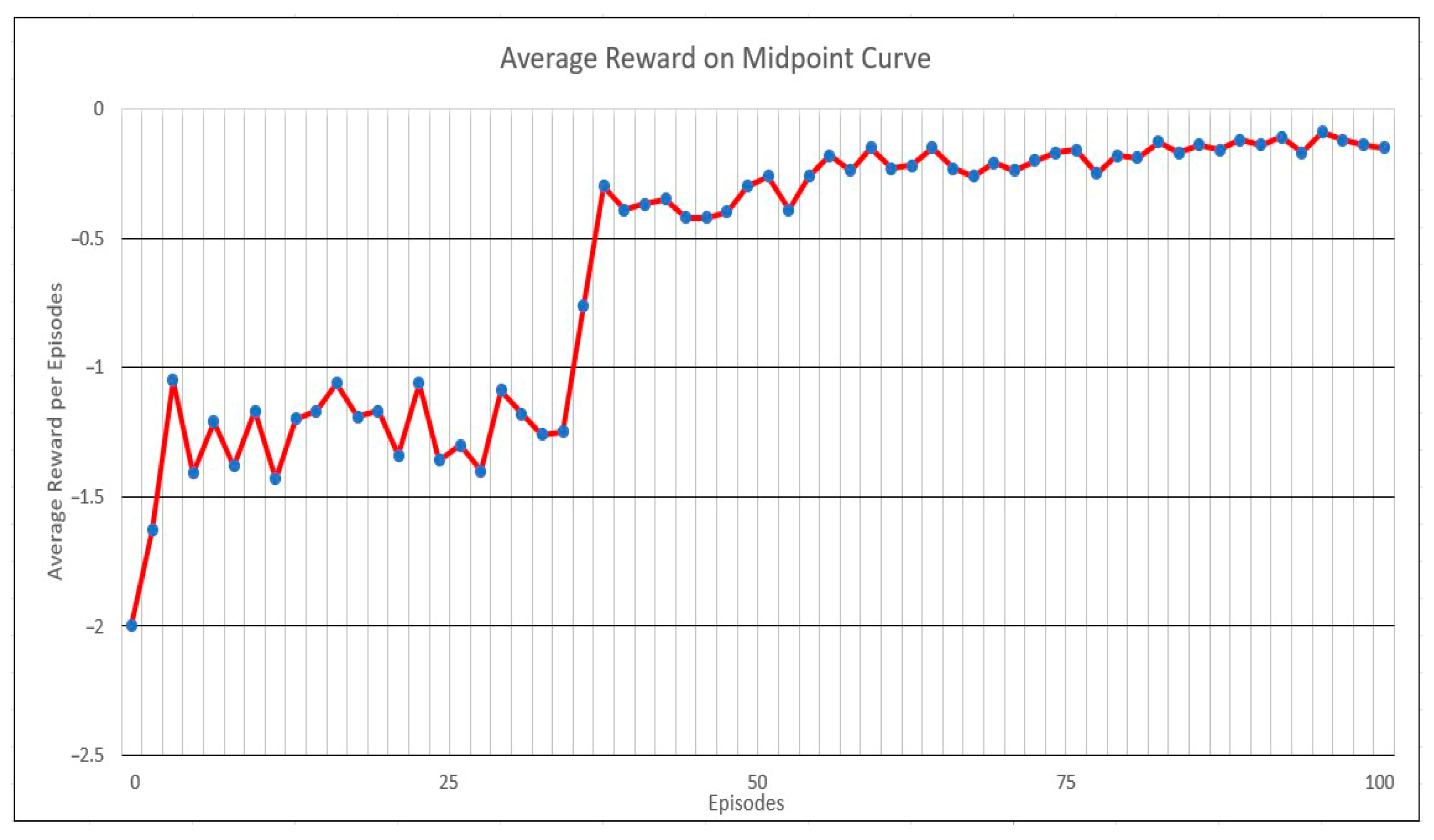

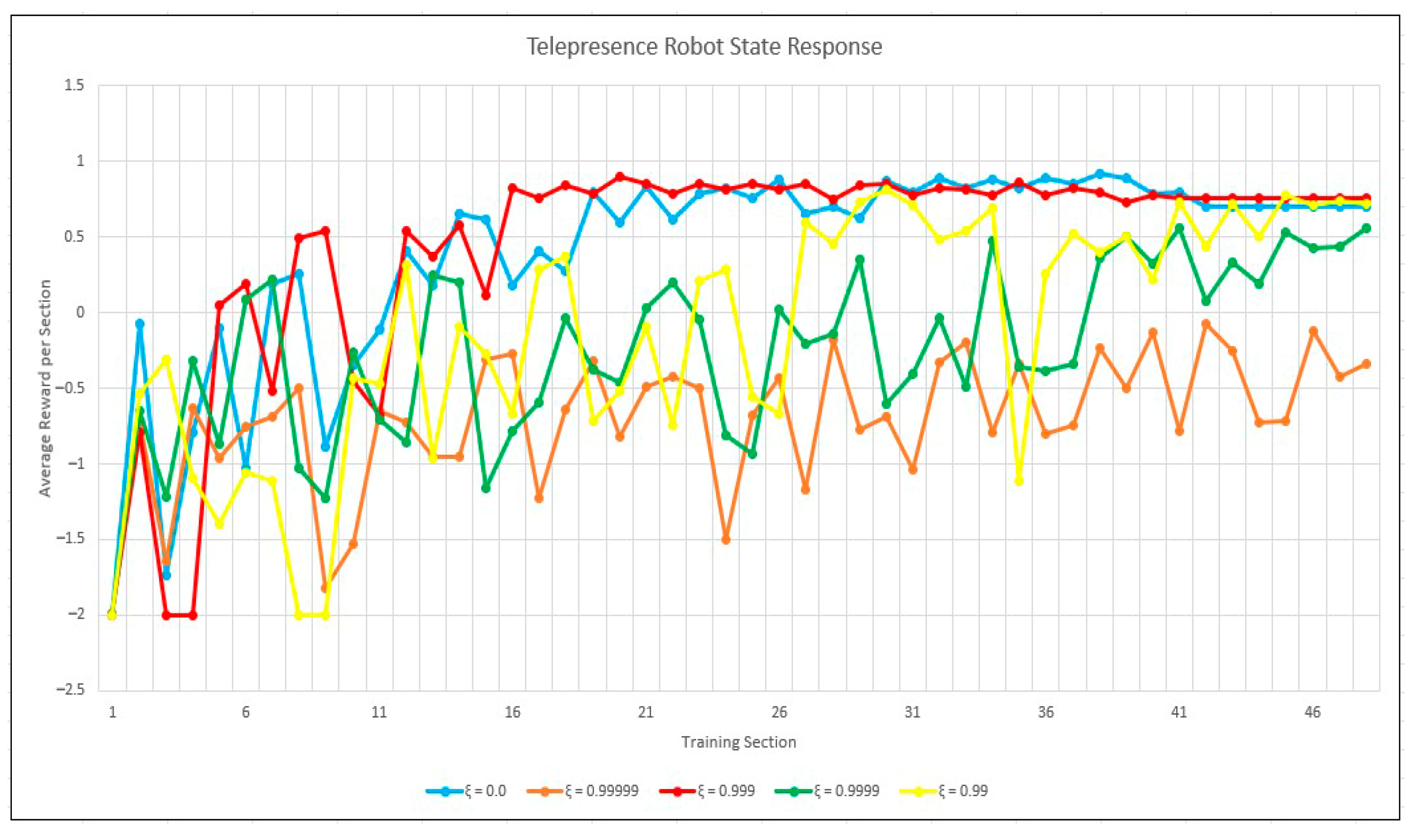

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Symbol | Description | Value |
|---|---|---|
| ε | Soft assign rate | 0.007 |
| Υ | Reward discount factor | 0.85 |
| ξ | Decay rate | 0.9996 |
| | Initial variance of the exploration space | 40 |
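The hyperparameters above can be read as follows in a minimal sketch. It assumes that the decay rate ξ multiplies the exploration variance once per step and that the exploration noise is Gaussian; the table specifies neither. The soft assign rate ε presumably weights a soft target-network update and is not used here. All function names are hypothetical.

```python
import math
import random

# Values from the hyperparameter table.
DISCOUNT = 0.85          # Υ, reward discount factor
DECAY_RATE = 0.9996      # ξ, assumed to shrink the exploration variance once per step
INITIAL_VARIANCE = 40.0  # initial variance of the exploration space


def exploration_variance(step: int) -> float:
    """Exploration-noise variance after `step` decays (assumed geometric schedule)."""
    return INITIAL_VARIANCE * DECAY_RATE ** step


def explore(action: float, step: int, rng: random.Random) -> float:
    """Add zero-mean Gaussian noise with standard deviation sqrt(variance)."""
    return action + rng.gauss(0.0, math.sqrt(exploration_variance(step)))


def td_target(reward: float, next_q: float, done: bool) -> float:
    """One-step target r + Υ * Q'(s', a'), without bootstrapping at episode end."""
    return reward if done else reward + DISCOUNT * next_q


# Number of steps after which the variance has halved under this schedule.
half_life = math.log(0.5) / math.log(DECAY_RATE)
```

Under this assumed per-step schedule, the exploration variance halves after roughly 1,733 steps (ln 0.5 / ln 0.9996 ≈ 1732.5), so exploration fades gradually over training, while Υ = 0.85 weights rewards more than a few steps ahead only lightly.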

| Parameters | Non-Optimized Mean | Optimized Mean |
|---|---|---|
| | 2 | 15 |
| | 6.500 | 0.867 |
| | 0.500 | 0.200 |
| | 0.684 | 0.730 |
| | 0.544 | 0.602 |
| | 0.852 | 0.839 |
| | 0.830 | 0.910 |

| Parameters | SPID Mean | DDPG Mean | SPID Best | DDPG Best |
|---|---|---|---|---|
| | 15 | 15 | - | - |
| | 9.467 | 1.067 | 3 | 0 |
| | 0.790 | 0.679 | 0.639 | 0.470 |
| | 1.091 | 0.897 | 0.845 | 0.650 |

| | SPID | DDPG | SPID | DDPG | SPID | DDPG |
|---|---|---|---|---|---|---|
| | 21 | 2 | 0.872 | 0.734 | 1.200 | 1.015 |
| | 10 | 1 | 0.806 | 0.676 | 1.136 | 0.950 |
| | 8 | 0 | 0.800 | 0.615 | 1.072 | 0.736 |
| | 10 | 2 | 0.774 | 0.898 | 1.069 | 1.146 |
| | 6 | 0 | 0.838 | 0.877 | 1.190 | 0.964 |
| | 9 | 1 | 0.683 | 0.819 | 0.918 | 1.020 |
| | 7 | 2 | 0.813 | 0.631 | 1.118 | 0.794 |
| | 3 | 0 | 0.794 | 0.585 | 1.084 | 0.858 |
| | 3 | 3 | 0.639 | 0.674 | 0.845 | 0.956 |
| | 5 | 1 | 0.794 | 0.470 | 1.084 | 0.650 |
| | 9 | 0 | 0.792 | 0.626 | 1.072 | 0.854 |
| | 9 | 0 | 0.806 | 0.601 | 1.103 | 0.820 |
| | 14 | 1 | 0.737 | 0.839 | 1.041 | 1.130 |
| | 19 | 1 | 0.890 | 0.509 | 1.265 | 0.659 |
| | 9 | 2 | 0.807 | 0.631 | 1.164 | 0.904 |
| Average | 9.467 | 1.067 | 0.790 | 0.679 | 1.091 | 0.897 |
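The summary statistics of the SPID/DDPG comparison can be checked against the per-trial results above. The sketch below assumes that "Best" denotes the lowest value in each column, which agrees with every Best entry of the summary table; the variable names are illustrative only.

```python
from statistics import mean
from typing import List, Tuple

# Per-trial values copied from the three SPID/DDPG column pairs of the results table.
spid_1 = [21, 10, 8, 10, 6, 9, 7, 3, 3, 5, 9, 9, 14, 19, 9]
ddpg_1 = [2, 1, 0, 2, 0, 1, 2, 0, 3, 1, 0, 0, 1, 1, 2]
spid_2 = [0.872, 0.806, 0.800, 0.774, 0.838, 0.683, 0.813, 0.794,
          0.639, 0.794, 0.792, 0.806, 0.737, 0.890, 0.807]
ddpg_2 = [0.734, 0.676, 0.615, 0.898, 0.877, 0.819, 0.631, 0.585,
          0.674, 0.470, 0.626, 0.601, 0.839, 0.509, 0.631]
spid_3 = [1.200, 1.136, 1.072, 1.069, 1.190, 0.918, 1.118, 1.084,
          0.845, 1.084, 1.072, 1.103, 1.041, 1.265, 1.164]
ddpg_3 = [1.015, 0.950, 0.736, 1.146, 0.964, 1.020, 0.794, 0.858,
          0.956, 0.650, 0.854, 0.820, 1.130, 0.659, 0.904]


def summarize(values: List[float]) -> Tuple[float, float]:
    """Return (mean rounded to 3 decimals, best value), taking best as the minimum."""
    return round(mean(values), 3), min(values)


for label, column in [("SPID 1", spid_1), ("DDPG 1", ddpg_1),
                      ("SPID 2", spid_2), ("DDPG 2", ddpg_2),
                      ("SPID 3", spid_3), ("DDPG 3", ddpg_3)]:
    print(label, summarize(column))
```

Each call reproduces the corresponding Mean and Best entries of the summary table; for example, `summarize(spid_1)` returns `(9.467, 3)` and `summarize(ddpg_1)` returns `(1.067, 0)`.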

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Naseer, F.; Khan, M.N.; Altalbe, A. Telepresence Robot with DRL Assisted Delay Compensation in IoT-Enabled Sustainable Healthcare Environment. Sustainability 2023, 15, 3585. https://doi.org/10.3390/su15043585