Design of a Telepresence Robot to Avoid Obstacles in IoT-Enabled Sustainable Healthcare Systems

Abstract

1. Introduction

- The method is more adaptable in terms of implementation;

- The computational complexity is low compared with other machine learning methods;

- By employing this technique, we can achieve virtual doctor–patient interactions in difficult and hazardous situations.

2. Background

- There is an urgent need for a telepresence robot that can be used in pandemic situations, where a safe physical distance between the target and the transmitter is usually required.

- The design of such a robot, capable of human–robot interaction, is an active research topic that is receiving considerable attention in academia, industry, and healthcare.

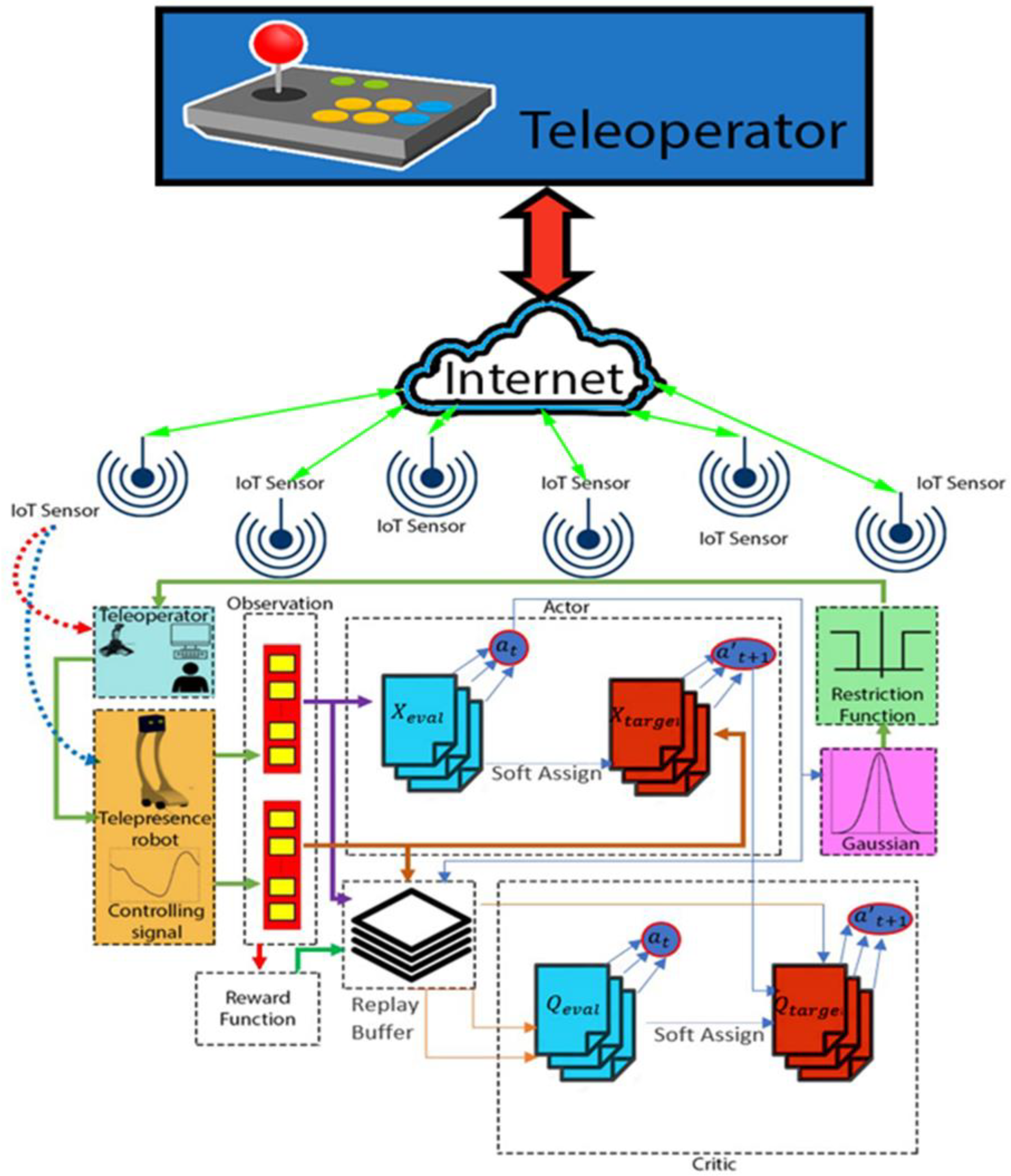

3. System Design and Implementation

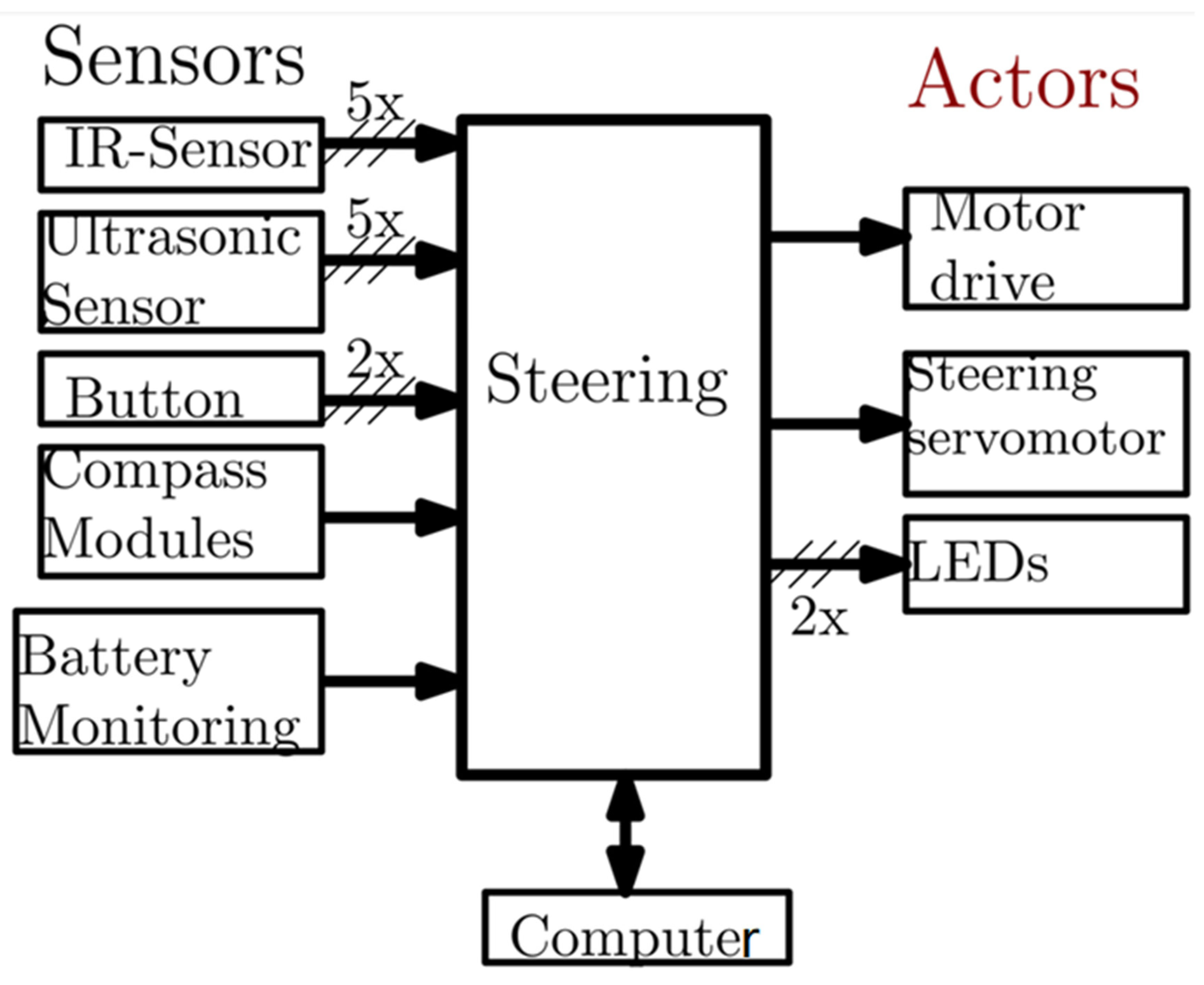

3.1. Sensor Modeling and Design

3.2. Robot Chassis

3.3. Steering

3.4. Modeling and Algorithms

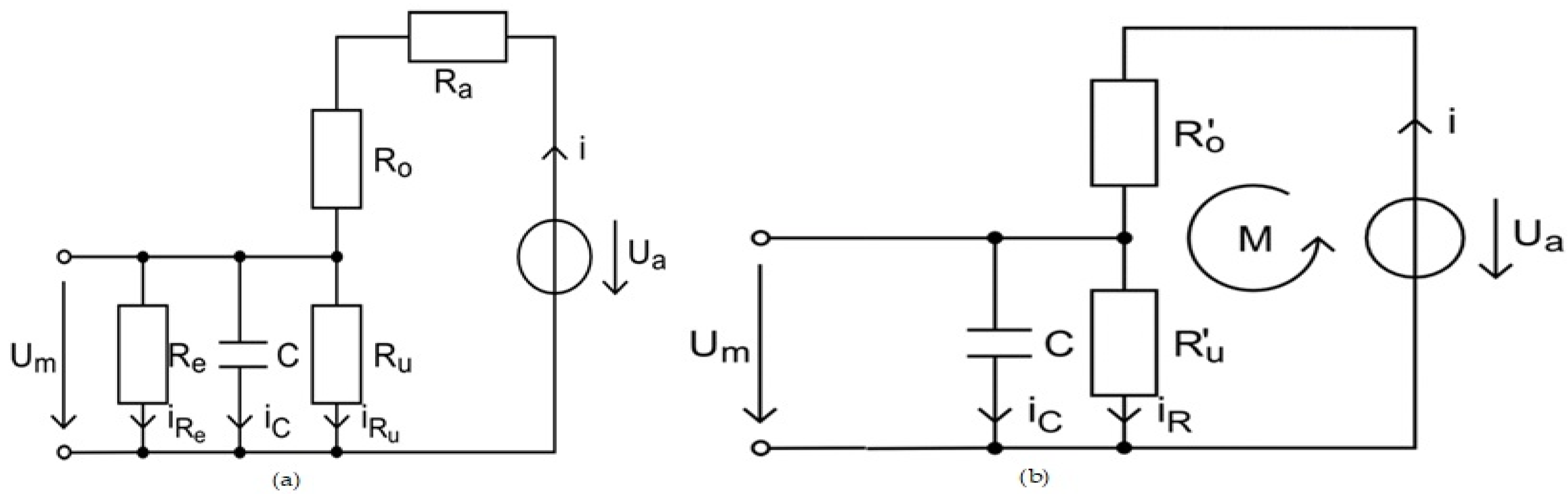

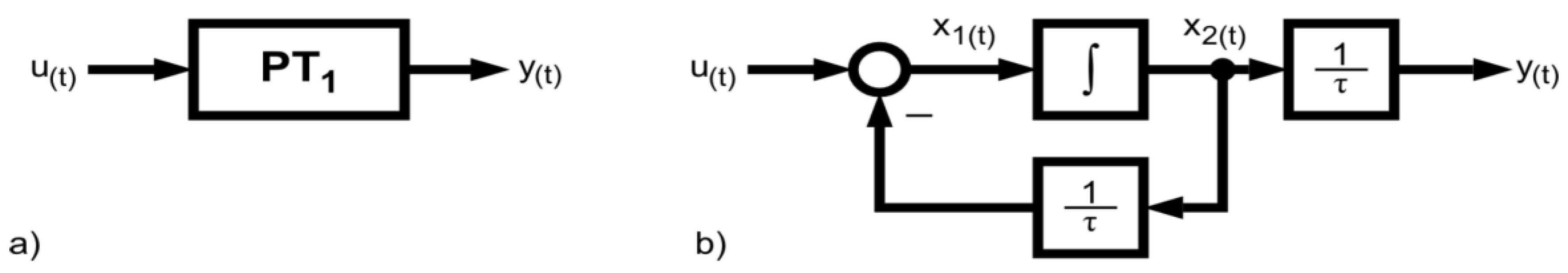

3.4.1. Digital PT1-Element

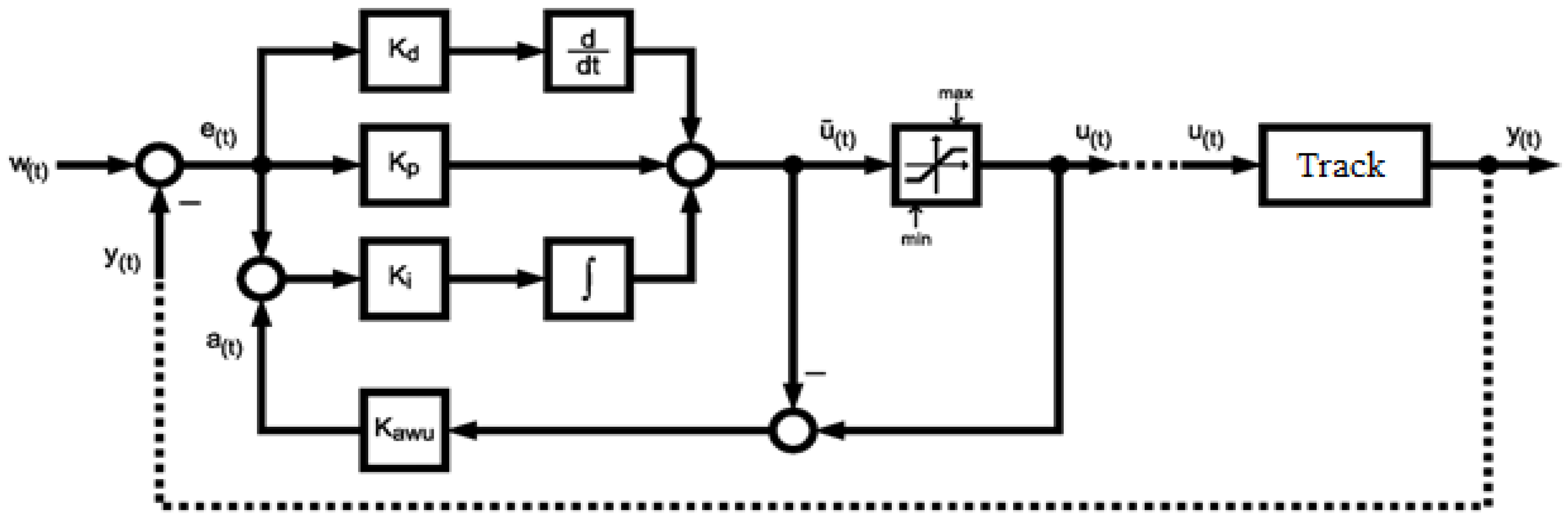
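A digital PT1 element is a first-order lag, T·dy/dt + y = K·u, evaluated once per sample period. The sketch below discretizes it with the backward Euler method. It is an illustration under assumptions: the class name, the gain K, the time constant T and the sample time Ts are hypothetical and are not taken from the robot's firmware.

```python
class PT1:
    """Discrete first-order lag (PT1) element.

    Continuous model:   T * dy/dt + y = K * u
    Backward Euler:     y[k] = y[k-1] + Ts / (T + Ts) * (K * u[k] - y[k-1])
    """

    def __init__(self, gain: float, time_constant: float, sample_time: float,
                 y0: float = 0.0) -> None:
        if time_constant < 0.0 or sample_time <= 0.0:
            raise ValueError("time_constant must be >= 0 and sample_time > 0")
        self.gain = gain
        # Smoothing factor in (0, 1]; alpha == 1 means no smoothing (T == 0).
        self.alpha = sample_time / (time_constant + sample_time)
        self.y = y0

    def update(self, u: float) -> float:
        """Feed one input sample and return the filtered output."""
        self.y += self.alpha * (self.gain * u - self.y)
        return self.y


if __name__ == "__main__":
    # Step response of a unity-gain PT1 with T = 0.1 s sampled every 10 ms.
    pt1 = PT1(gain=1.0, time_constant=0.1, sample_time=0.01)
    response = [pt1.update(1.0) for _ in range(50)]
    print(f"after 0.1 s: {response[9]:.3f}, after 0.5 s: {response[-1]:.3f}")
```

Setting the time constant to zero turns the element into a pure gain, which is a convenient way to disable smoothing without changing the calling code.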

3.4.2. Digital PID Controller with Anti-Windup Feedback

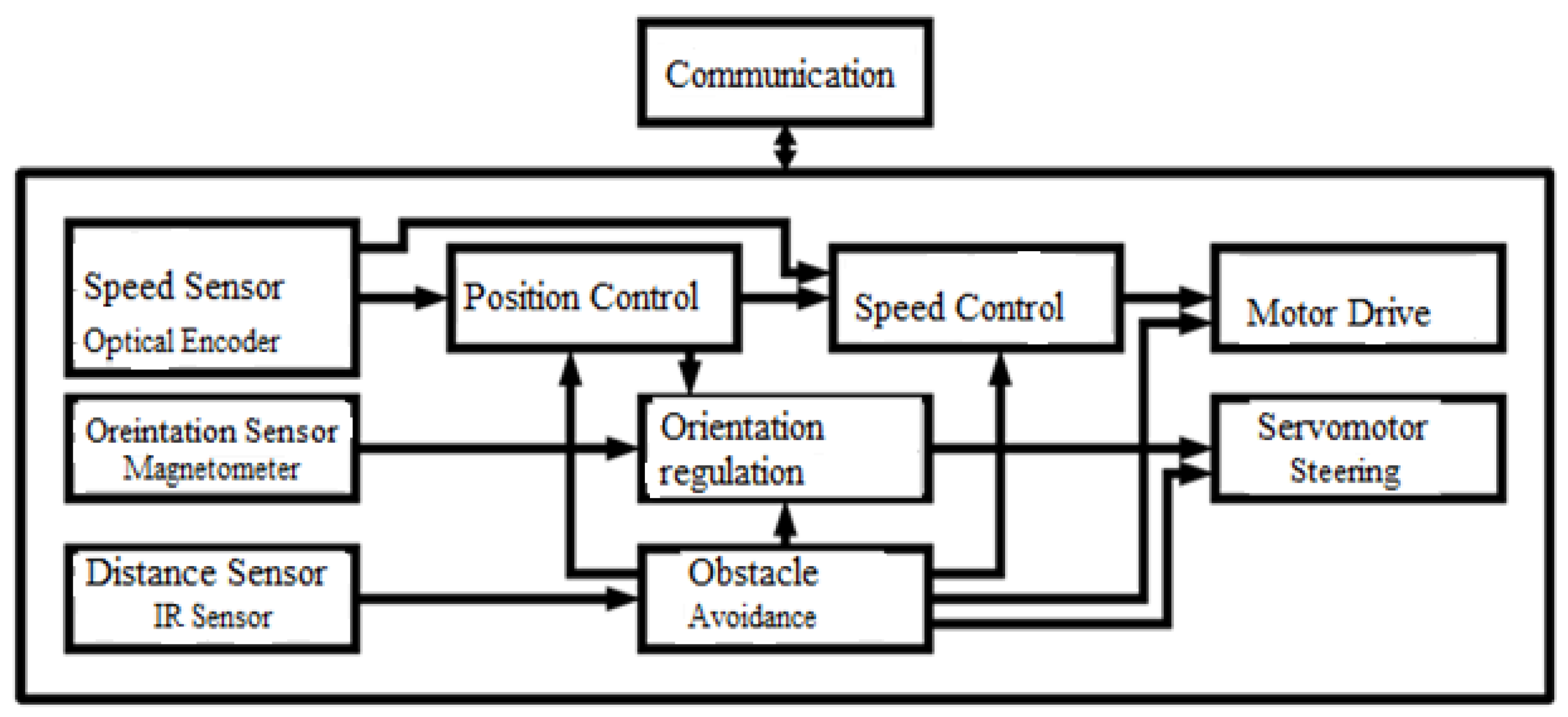
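An anti-windup feedback (back-calculation) keeps the integrator from growing while the manipulated variable is saturated: the difference between the limited and the unlimited controller output is fed back into the integrator with its own gain. The following is a minimal sketch in parallel PID form, assuming a fixed sample time and derivative action on the control error. The class and parameter names (kp, ki, kd, kaw, u_min, u_max) are hypothetical labels for such a controller's gains and limits, not identifiers from the authors' code.

```python
class PIDAntiWindup:
    """Discrete parallel PID controller with back-calculation anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, kaw: float,
                 u_min: float, u_max: float, sample_time: float) -> None:
        if u_min >= u_max:
            raise ValueError("u_min must be smaller than u_max")
        if sample_time <= 0.0:
            raise ValueError("sample_time must be positive")
        self.kp, self.ki, self.kd, self.kaw = kp, ki, kd, kaw
        self.u_min, self.u_max = u_min, u_max
        self.ts = sample_time
        self.integral = 0.0      # integral contribution to the output
        self.prev_error = None   # no derivative kick on the first sample

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.ts
        self.prev_error = error

        # Unlimited controller output.
        v = self.kp * error + self.integral + self.kd * derivative
        # Limited manipulated variable actually sent to the actuator.
        u = min(max(v, self.u_min), self.u_max)

        # Integrate the error plus the anti-windup feedback term kaw * (u - v);
        # the feedback term is zero whenever the output is not saturated.
        self.integral += self.ts * (self.ki * error + self.kaw * (u - v))
        return u
```

With kaw = 0 the same controller winds up: while saturated, the integral keeps growing by Ts·Ki·e every sample, and the output stays pinned at the limit long after the error changes sign. With kaw > 0 the integral settles at a bounded value instead.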

3.4.3. Controller Control Concept

4. Results and Discussion

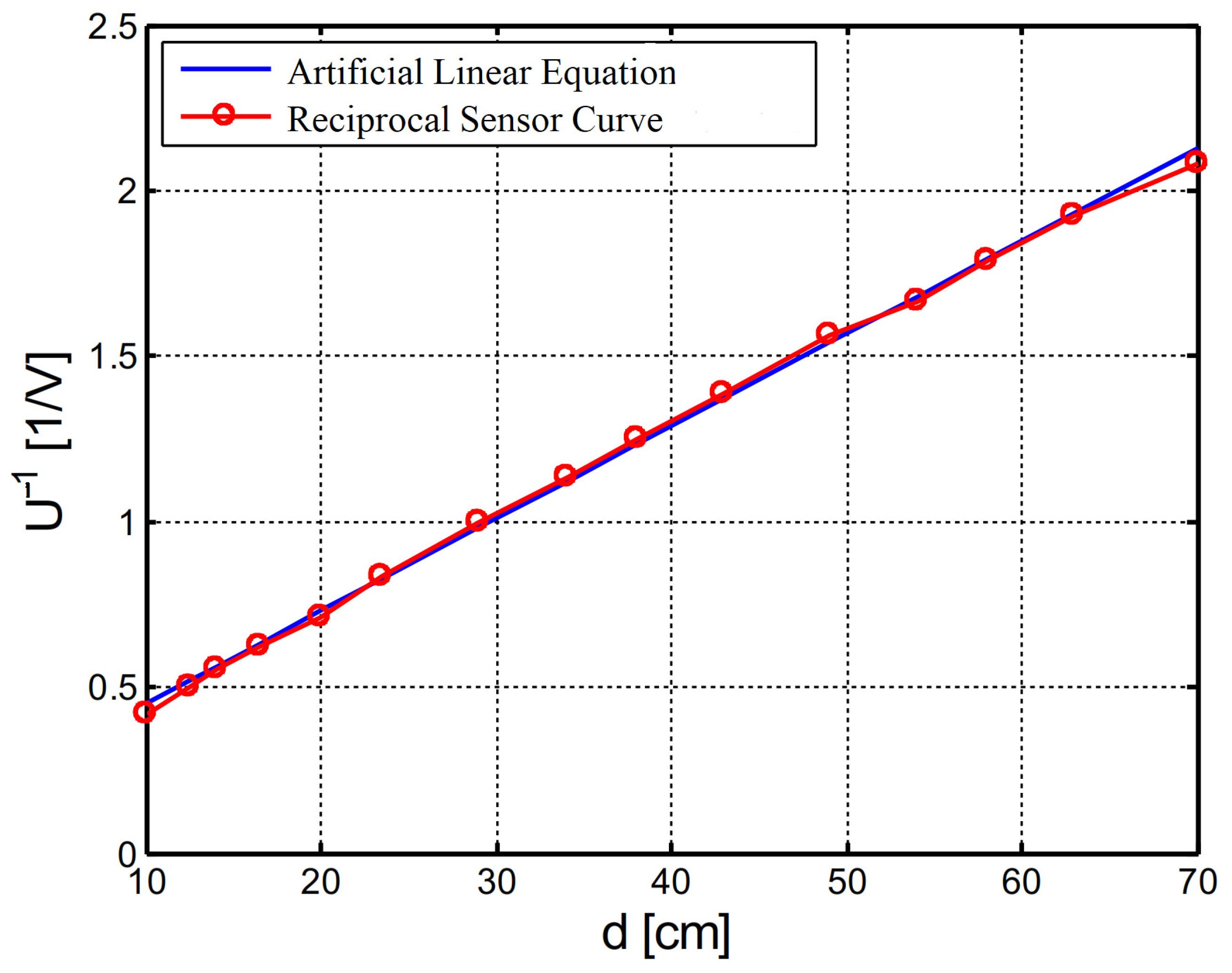

4.1. Linearization of the IR Sensor Characteristic Curve

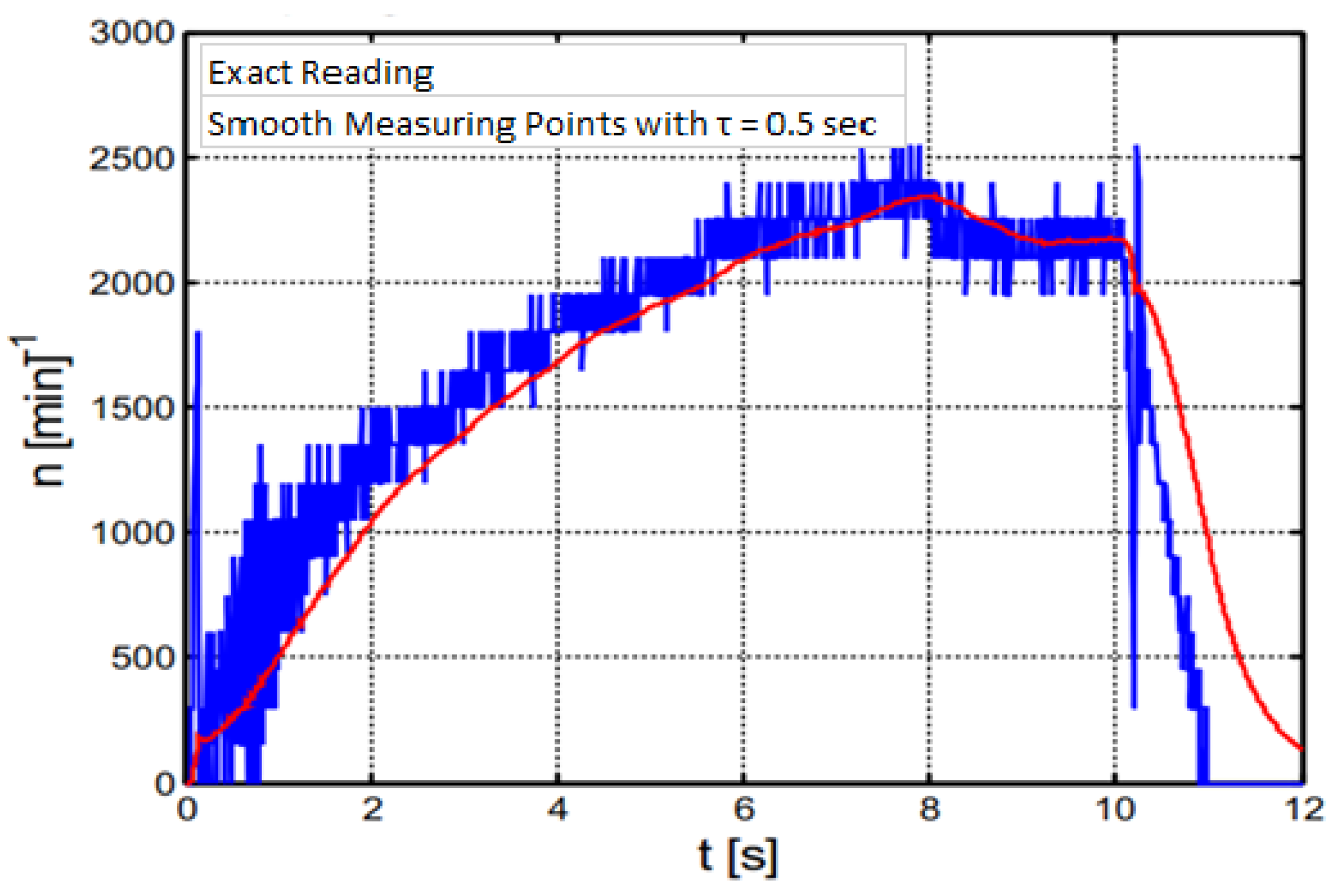

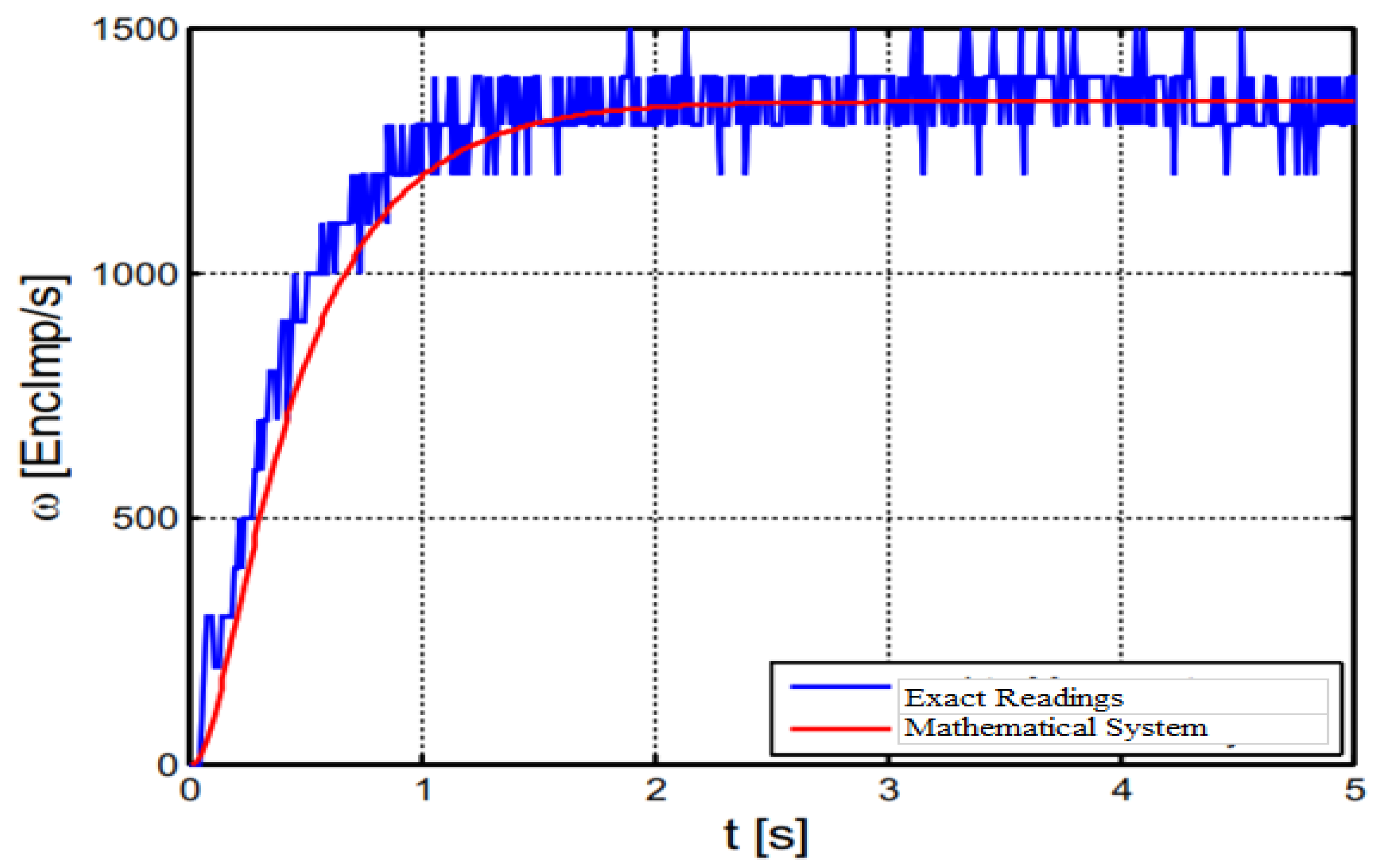

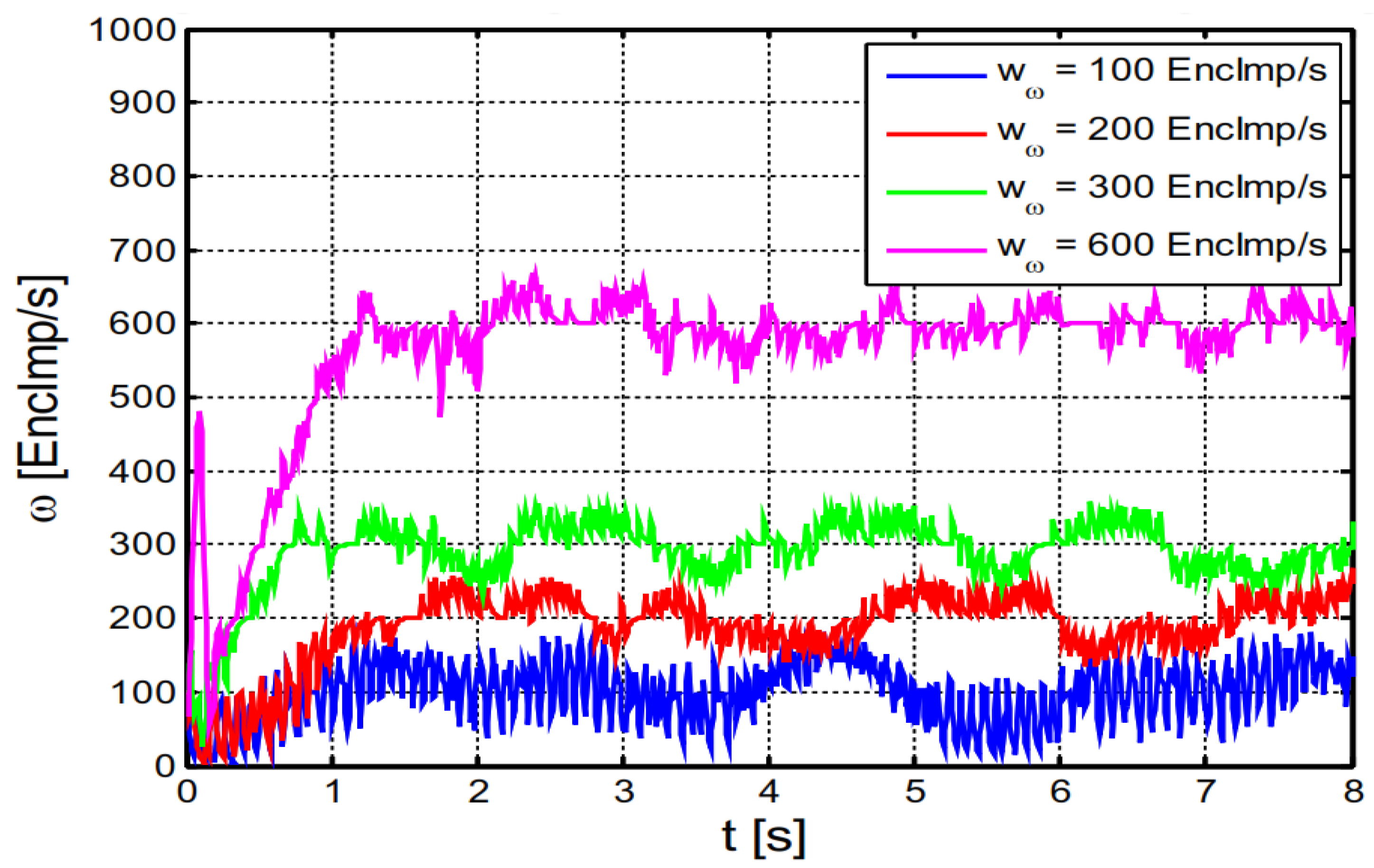
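Triangulating IR distance sensors typically have a strongly non-linear, roughly hyperbolic voltage-distance curve. A common linearization, assumed here rather than taken from the paper, treats 1/(d + k) as approximately linear in the output voltage V: the line is fitted by least squares from calibration points and then inverted at run time. The offset k, the units (volts, centimetres) and the function names are illustrative assumptions.

```python
from typing import Sequence, Tuple


def fit_inverse_model(voltages: Sequence[float], distances: Sequence[float],
                      k: float = 0.0) -> Tuple[float, float]:
    """Least-squares fit of 1 / (d + k) = m * V + c; returns (m, c)."""
    if len(voltages) != len(distances) or len(voltages) < 2:
        raise ValueError("need at least two (voltage, distance) pairs of equal length")
    xs = list(voltages)
    ys = [1.0 / (d + k) for d in distances]   # linearized distance
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        raise ValueError("calibration voltages must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    c = mean_y - m * mean_x
    return m, c


def voltage_to_distance(v: float, m: float, c: float, k: float = 0.0) -> float:
    """Invert the linearized model to obtain a distance from a sensor voltage."""
    denom = m * v + c
    if denom <= 0.0:
        raise ValueError("voltage outside the calibrated range")
    return 1.0 / denom - k


if __name__ == "__main__":
    # Synthetic calibration points following d = 25 / V (illustrative, not measured data).
    volts = [0.5, 1.0, 1.5, 2.0, 2.5]
    dists = [25.0 / v for v in volts]
    m, c = fit_inverse_model(volts, dists)
    print(f"m = {m:.4f}, c = {c:.4f}, d(1.25 V) = {voltage_to_distance(1.25, m, c):.2f}")
```

Because the fit is done on 1/(d + k) rather than on d, a straight line captures most of the curvature, and the run-time conversion costs one multiply-add and one division.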

4.2. Speed Control Loop

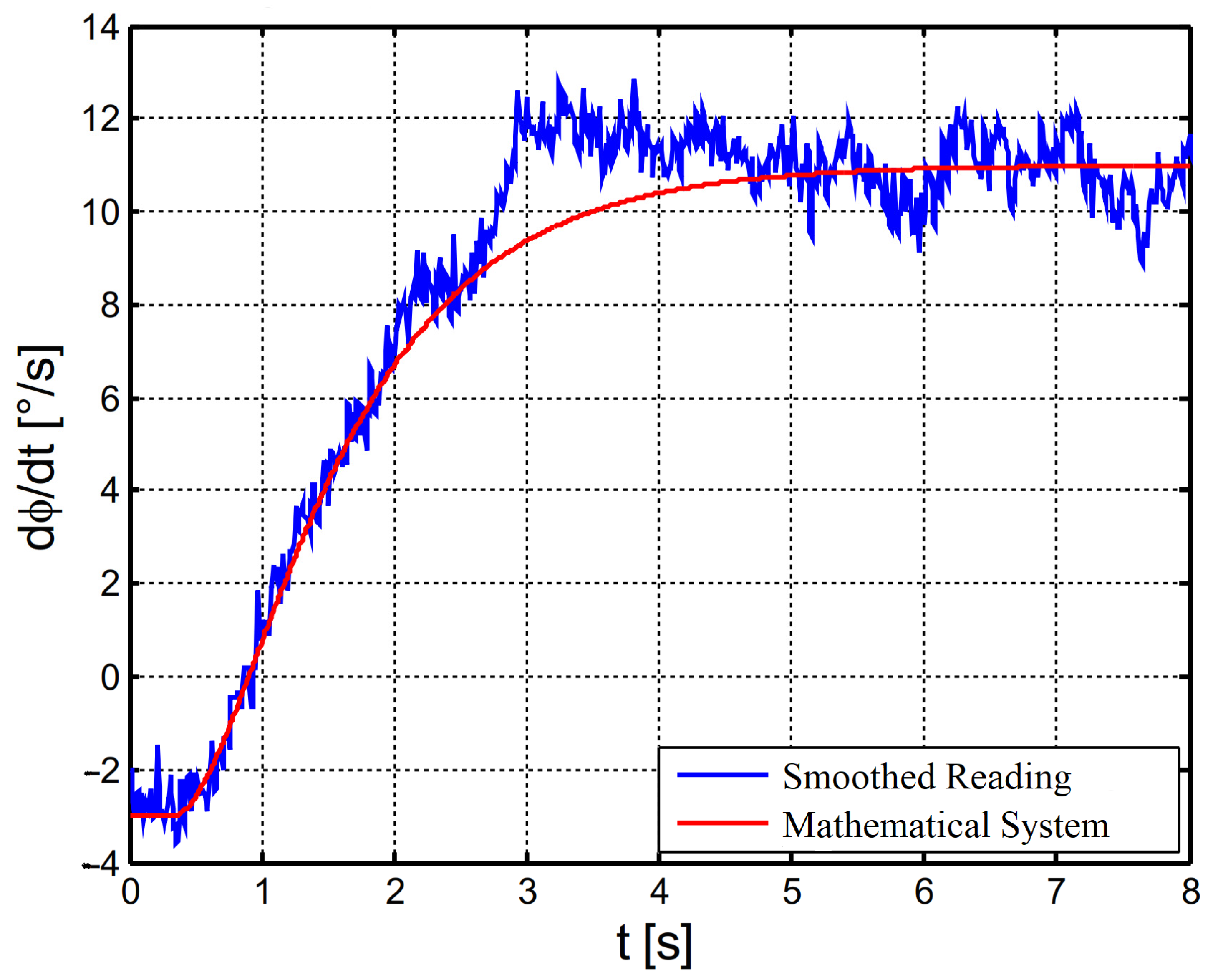

4.3. Orientation Determination Using Magnetometers
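With the magnetometer held level, the robot's heading relative to magnetic north can be estimated from the two horizontal field components with atan2, after subtracting hard-iron offsets found during calibration. The following is a minimal sketch under assumptions: the axis convention (x forward, heading measured toward the y axis), the min/max offset calibration and the optional declination correction are illustrative, and a sensor mounted with a different axis orientation may need the sign of one component flipped. Tilt compensation is omitted.

```python
import math
from typing import Iterable, Tuple


def hard_iron_offsets(samples: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Estimate hard-iron offsets as the centre of the min/max range seen while rotating."""
    pts = list(samples)
    if not pts:
        raise ValueError("need at least one (mx, my) sample")
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return (max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0


def heading_deg(mx: float, my: float, offset_x: float = 0.0, offset_y: float = 0.0,
                declination_deg: float = 0.0) -> float:
    """Heading in degrees in [0, 360) from the horizontal magnetometer components.

    Assumes the sensor is level; offsets are hard-iron calibration values.
    """
    x = mx - offset_x
    y = my - offset_y
    if x == 0.0 and y == 0.0:
        raise ValueError("no horizontal field component after offset removal")
    heading = math.degrees(math.atan2(y, x)) + declination_deg
    return heading % 360.0
```

Using atan2 rather than atan(y/x) resolves the quadrant directly and avoids a division by zero when the robot points along the y axis.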

4.4. Self-Localization
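One simple form of self-localization combines the distance travelled, taken from the wheel encoder, with the absolute heading from the magnetometer (dead reckoning). The sketch below integrates the pose once per control cycle using the mid-point between the previous and the current heading. The encoder scale meters_per_pulse, the Pose type and the function names are hypothetical. A real system would also need to correct the drift that accumulates in such an estimate, for example with a Bayesian filter.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # metres
    y: float = 0.0        # metres
    heading: float = 0.0  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def dead_reckon(pose: Pose, delta_pulses: int, meters_per_pulse: float,
                new_heading: float) -> Pose:
    """Advance the pose by one control cycle of encoder pulses and a measured heading."""
    distance = delta_pulses * meters_per_pulse
    # Mid-point heading over the cycle; wrapping the difference avoids the +/-pi jump.
    mid = pose.heading + 0.5 * wrap_angle(new_heading - pose.heading)
    return Pose(
        x=pose.x + distance * math.cos(mid),
        y=pose.y + distance * math.sin(mid),
        heading=wrap_angle(new_heading),
    )


if __name__ == "__main__":
    pose = Pose()
    # Hypothetical readings: (encoder pulses this cycle, magnetometer heading in rad).
    for pulses, heading in [(1000, 0.0), (500, math.pi / 2)]:
        pose = dead_reckon(pose, pulses, 0.001, heading)
    print(pose)
```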

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Guillén-Climent, S.; Garzo, A.; Muñoz-Alcaraz, M.N.; Casado-Adam, P.; Arcas-Ruiz-Ruano, J.; Mejías-Ruiz, M.; Mayordomo-Riera, F.J. A Usability Study in Patients with Stroke Using MERLIN, a Robotic System Based on Serious Games for Upper Limb Rehabilitation in the Home Setting. J. Neuroeng. Rehabil. 2021, 18, 41.

- Alotaibi, Y. Automated Business Process Modelling for Analyzing Sustainable System Requirements Engineering. In Proceedings of the 2020 6th International Conference on Information Management (ICIM), London, UK, 27–29 March 2020; IEEE: Piscataway, NJ, USA, 2020.

- Karimi, M.; Roncoli, C.; Alecsandru, C.; Papageorgiou, M. Cooperative Merging Control via Trajectory Optimization in Mixed Vehicular Traffic. Transp. Res. Part C Emerg. Technol. 2020, 116, 102663.

- Kitazawa, O.; Kikuchi, T.; Nakashima, M.; Tomita, Y.; Kosugi, H.; Kaneko, T. Development of Power Control Unit for Compact-Class Vehicle. SAE Int. J. Altern. Powertrains 2016, 5, 278–285.

- Alotaibi, Y.; Malik, M.N.; Khan, H.H.; Batool, A.; ul Islam, S.; Alsufyani, A.; Alghamdi, S. Suggestion Mining from Opinionated Text of Big Social Media Data. Comput. Mater. Contin. 2021, 68, 3323–3338.

- Alotaibi, Y. A New Meta-Heuristics Data Clustering Algorithm Based on Tabu Search and Adaptive Search Memory. Symmetry 2022, 14, 623.

- Rodríguez-Lera, F.J.; Matellán-Olivera, V.; Conde-González, M.Á.; Martín-Rico, F. HiMoP: A Three-Component Architecture to Create More Human-Acceptable Social-Assistive Robots. Cogn. Process. 2018, 19, 233–244.

- Anuradha, D.; Subramani, N.; Khalaf, O.I.; Alotaibi, Y.; Alghamdi, S.; Rajagopal, M. Chaotic Search-And-Rescue-Optimization-Based Multi-Hop Data Transmission Protocol for Underwater Wireless Sensor Networks. Sensors 2022, 22, 2867.

- Laengle, T.; Lueth, T.C.; Rembold, U.; Woern, H. A Distributed Control Architecture for Autonomous Mobile Robots-Implementation of the Karlsruhe Multi-Agent Robot Architecture (KAMARA). Adv. Robot. 1997, 12, 411–431.

- Lakshmanna, K.; Subramani, N.; Alotaibi, Y.; Alghamdi, S.; Khalaf, O.I.; Nanda, A.K. Improved Metaheuristic-Driven Energy-Aware Cluster-Based Routing Scheme for IoT-Assisted Wireless Sensor Networks. Sustainability 2022, 14, 7712.

- Atsuzawa, K.; Nilwong, S.; Hossain, D.; Kaneko, S.; Capi, G. Robot Navigation in Outdoor Environments Using Odometry and Convolutional Neural Network. In Proceedings of the IEEJ International Workshop on Sensing, Actuation, Motion Control, and Optimization (SAMCON), Chiba, Japan, 4–6 March 2019.

- Nagappan, K.; Rajendran, S.; Alotaibi, Y. Trust Aware Multi-Objective Metaheuristic Optimization Based Secure Route Planning Technique for Cluster Based IIoT Environment. IEEE Access 2022, 10, 112686–112694.

- Subahi, A.F.; Khalaf, O.I.; Alotaibi, Y.; Natarajan, R.; Mahadev, N.; Ramesh, T. Modified Self-Adaptive Bayesian Algorithm for Smart Heart Disease Prediction in IoT System. Sustainability 2022, 14, 14208.

- Anavatti, S.G.; Francis, S.L.; Garratt, M. Path-Planning Modules for Autonomous Vehicles: Current Status and Challenges. In Proceedings of the 2015 International Conference on Advanced Mechatronics, Intelligent Manufacture, and Industrial Automation (ICAMIMIA), Surabaya, Indonesia, 15–17 October 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 205–214.

- Rae, I.; Venolia, G.; Tang, J.C.; Molnar, D. A framework for understanding and designing telepresence. In Proceedings of the 18th ACM Conference on Computer Supported Cooperative Work & Social Computing, Vancouver, BC, Canada, 14–18 March 2015; pp. 1552–1566.

- Tuli, T.B.; Terefe, T.O.; Rashid, M.U. Telepresence mobile robots design and control for social interaction. Int. J. Soc. Robot. 2020, 13, 877–886.

- Alami, R.; Chatila, R.; Fleury, S.; Ghallab, M.; Ingrand, F. An Architecture for Autonomy. Int. J. Robot. Res. 1998, 17, 315–337.

- Hitec HS-5745MG Servo Specifications and Reviews. Available online: https://servodatabase.com/servo/hitec/hs-5745mg (accessed on 28 November 2022).

- Optical Encoder M101|MEGATRON. Available online: https://www.megatron.de/en/products/optical-encoders/optoelectronic-encoder-m101.html (accessed on 28 November 2022).

- Singh, S.P.; Alotaibi, Y.; Kumar, G.; Rawat, S.S. Intelligent Adaptive Optimisation Method for Enhancement of Information Security in IoT-Enabled Environments. Sustainability 2022, 14, 13635.

- Kress, R.L.; Hamel, W.R.; Murray, P.; Bills, K. Control Strategies for Teleoperated Internet Assembly. IEEE/ASME Trans. Mechatron. 2001, 6, 410–416.

- Goldberg, K.; Siegwart, R. Beyond Webcams: An Introduction to Online Robots; MIT Press: Cambridge, MA, USA, 2002; ISBN 0-262-07225-4.

- Brito, C.G. Desenvolvimento de Um Sistema de Localização Para Robôs Móveis Baseado Em Filtragem Bayesiana Não-Linear [Development of a Localization System for Mobile Robots Based on Nonlinear Bayesian Filtering]. 2017. Available online: https://bdm.unb.br/bitstream/10483/19285/1/2017_CamilaGoncalvesdeBrito.pdf (accessed on 3 February 2023).

- Rozevink, S.G.; van der Sluis, C.K.; Garzo, A.; Keller, T.; Hijmans, J.M. HoMEcare ARm RehabiLItatioN (MERLIN): Telerehabilitation Using an Unactuated Device Based on Serious Games Improves the Upper Limb Function in Chronic Stroke. J. NeuroEngineering Rehabil. 2021, 18, 48.

- Schilling, K. Tele-Maintenance of Industrial Transport Robots. IFAC Proc. Vol. 2002, 35, 139–142.

- Srilakshmi, U.; Alghamdi, S.A.; Vuyyuru, V.A.; Veeraiah, N.; Alotaibi, Y. A Secure Optimization Routing Algorithm for Mobile Ad Hoc Networks. IEEE Access 2022, 10, 14260–14269.

- Sennan, S.; Kirubasri; Alotaibi, Y.; Pandey, D.; Alghamdi, S. EACR-LEACH: Energy-Aware Cluster-Based Routing Protocol for WSN Based IoT. Comput. Mater. Contin. 2022, 72, 2159–2174.

- Ahmad, A.; Babar, M.A. Software Architectures for Robotic Systems: A Systematic Mapping Study. J. Syst. Softw. 2016, 122, 16–39.

- Sharma, O.; Sahoo, N.C.; Puhan, N.B. Recent Advances in Motion and Behavior Planning Techniques for Software Architecture of Autonomous Vehicles: A State-of-the-Art Survey. Eng. Appl. Artif. Intell. 2021, 101, 104211.

- Ziegler, J.; Werling, M.; Schroder, J. Navigating Car-like Robots in Unstructured Environments Using an Obstacle Sensitive Cost Function. In Proceedings of the 2008 IEEE Intelligent Vehicles Symposium, Eindhoven, The Netherlands, 4–6 June 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 787–791.

- González-Santamarta, M.Á.; Rodríguez-Lera, F.J.; Álvarez-Aparicio, C.; Guerrero-Higueras, Á.M.; Fernández-Llamas, C. MERLIN a Cognitive Architecture for Service Robots. Appl. Sci. 2020, 10, 5989.

- Shao, J.; Xie, G.; Yu, J.; Wang, L. Leader-Following Formation Control of Multiple Mobile Robots. In Proceedings of the 2005 IEEE International Symposium on Intelligent Control and Mediterranean Conference on Control and Automation, Limassol, Cyprus, 27–29 June 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 808–813.

- Faisal, M.; Hedjar, R.; Al Sulaiman, M.; Al-Mutib, K. Fuzzy Logic Navigation and Obstacle Avoidance by a Mobile Robot in an Unknown Dynamic Environment. Int. J. Adv. Robot. Syst. 2013, 10, 37.

- Favarò, F.; Eurich, S.; Nader, N. Autonomous Vehicles’ Disengagements: Trends, Triggers, and Regulatory Limitations. Accid. Anal. Prev. 2018, 110, 136–148.

- Gopalswamy, S.; Rathinam, S. Infrastructure Enabled Autonomy: A Distributed Intelligence Architecture for Autonomous Vehicles. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Suzhou, China, 26–30 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 986–992.

- Allen, J.F. Towards a General Theory of Action and Time. Artif. Intell. 1984, 23, 123–154.

- Hu, H.; Brady, J.M.; Grothusen, J.; Li, F.; Probert, P.J. LICAs: A Modular Architecture for Intelligent Control of Mobile Robots. In Proceedings of the 1995 IEEE/RSJ International Conference on Intelligent Robots and Systems. Human Robot Interaction and Cooperative Robots, Pittsburgh, PA, USA, 5–9 August 1995; IEEE: Piscataway, NJ, USA, 1995; Volume 1, pp. 471–476.

- Alami, R.; Chatila, R.; Espiau, B. Designing an Intelligent Control Architecture for Autonomous Robots; ICAR: New Delhi, India, 1993; Volume 93, pp. 435–440.

- Khan, M.N.; Hasnain, S.K.; Jamil, M.; Imran, A. Electronic Signals and Systems: Analysis, Design and Applications; River Publishers: Gistrup, Denmark, 2022.

- Kang, J.-M.; Chun, C.-J.; Kim, I.-M.; Kim, D.I. Channel Tracking for Wireless Energy Transfer: A Deep Recurrent Neural Network Approach. arXiv 2018, arXiv:1812.02986.

- Zhao, W.; Gao, Y.; Ji, T.; Wan, X.; Ye, F.; Bai, G. Deep Temporal Convolutional Networks for Short-Term Traffic Flow Forecasting. IEEE Access 2019, 7, 114496–114507.

- Schilling, K.J.; Vernet, M.P. Remotely Controlled Experiments with Mobile Robots. In Proceedings of the Thirty-Fourth Southeastern Symposium on System Theory (Cat. No. 02EX540), Huntsville, AL, USA, 19 March 2002; IEEE: Piscataway, NJ, USA, 2002; pp. 71–74.

- Moon, T.-K.; Kuc, T.-Y. An Integrated Intelligent Control Architecture for Mobile Robot Navigation within Sensor Network Environment. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No. 04CH37566), Sendai, Japan, 28 September–2 October 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 1, pp. 565–570.

- Lefèvre, S.; Vasquez, D.; Laugier, C. A Survey on Motion Prediction and Risk Assessment for Intelligent Vehicles. Robomech J. 2014, 1, 1.

- Behere, S.; Törngren, M. A Functional Architecture for Autonomous Driving. In Proceedings of the First International Workshop on Automotive Software Architecture, Montreal, QC, Canada, 4–8 May 2015; pp. 3–10.

- Carvalho, A.; Lefèvre, S.; Schildbach, G.; Kong, J.; Borrelli, F. Automated Driving: The Role of Forecasts and Uncertainty—A Control Perspective. Eur. J. Control. 2015, 24, 14–32.

- Liu, P.; Paden, B.; Ozguner, U. Model Predictive Trajectory Optimization and Tracking for On-Road Autonomous Vehicles. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 3692–3697.

- Weiskircher, T.; Wang, Q.; Ayalew, B. Predictive Guidance and Control Framework for (Semi-) Autonomous Vehicles in Public Traffic. IEEE Trans. Control. Syst. Technol. 2017, 25, 2034–2046.

- Zhou, X.; Xu, P.; Lee, F.C. A Novel Current-Sharing Control Technique for Low-Voltage High-Current Voltage Regulator Module Applications. IEEE Trans. Power Electron. 2000, 15, 1153–1162.

- Gil, A.; Segura, J.; Temme, N.M. Numerical Methods for Special Functions; SIAM: Philadelphia, PA, USA, 2007; ISBN 0-89871-634-9.

- Milla, K.; Kish, S. A Low-Cost Microprocessor and Infrared Sensor System for Automating Water Infiltration Measurements. Comput. Electron. Agric. 2006, 53, 122–129.

- Microchip Technology Inc. DSPIC33FJ32MC302-I/SO: 16-Bit DSC, 28LD, 32KB Flash, Motor, DMA, 40 MIPS, NanoWatt. Allied Electronics & Automation, Part of RS Group. Available online: https://www.alliedelec.com/product/microchip-technology-inc-/dspic33fj32mc302-i-so/70047032/ (accessed on 28 November 2022).

- #835 RTR Savage 25. Available online: https://www.hpiracing.com/en/kit/835 (accessed on 28 November 2022).

- Types of Magnetometers-Technical Articles. Available online: https://www.allaboutcircuits.com/technical-articles/types-of-magnetometers/ (accessed on 28 November 2022).

- Zhu, H.; Brito, B.; Alonso-Mora, J. Decentralized probabilistic multi-robot collision avoidance using buffered uncertainty-aware Voronoi cells. Auton. Robot. 2022, 46, 401–420.

- Batmaz, A.U.; Maiero, J.; Kruijff, E.; Riecke, B.E.; Neustaedter, C.; Stuerzlinger, W. How automatic speed control based on distance affects user behaviours in telepresence robot navigation within dense conference-like environments. PLoS ONE 2020, 15, e0242078.

- Xia, P.; McSweeney, K.; Wen, F.; Song, Z.; Krieg, M.; Li, S.; Du, E.J. Virtual Telepresence for the Future of ROV Teleoperations: Opportunities and Challenges. In Proceedings of the SNAME 27th Offshore Symposium, Houston, TX, USA, 22 February 2022.

- Dong, Y.; Pei, M.; Zhang, L.; Xu, B.; Wu, Y.; Jia, Y. Stitching videos from a fisheye lens camera and a wide-angle lens camera for telepresence robots. Int. J. Soc. Robot. 2022, 14, 733–745.

- Fiorini, L.; Sorrentino, A.; Pistolesi, M.; Becchimanzi, C.; Tosi, F.; Cavallo, F. Living With a Telepresence Robot: Results From a Field-Trial. IEEE Robot. Autom. Lett. 2022, 7, 5405–5412.

- Wang, H.; Lou, S.; Jing, J.; Wang, Y.; Liu, W.; Liu, T. The EBS-A* algorithm: An improved A* algorithm for path planning. PLoS ONE 2022, 17, e0263841.

- Howard, T.M.; Green, C.J.; Kelly, A.; Ferguson, D. State space sampling of feasible motions for high-performance mobile robot navigation in complex environments. J. Field Robot. 2008, 25, 325–345.

- Wang, S. State Lattice-Based Motion Planning for Autonomous On-Road Driving. PhD Thesis, Freie Universität Berlin, Berlin, Germany, 2015.

- Likhachev, M.; Ferguson, D.; Gordon, G.; Stentz, A.; Thrun, S. Anytime search in dynamic graphs. Artif. Intell. 2008, 172, 1613–1643.

- Brezak, M.; Petrović, I. Real-time approximation of clothoids with bounded error for path planning applications. IEEE Trans. Robot. 2014, 30, 507–515.

- Lim, W.; Lee, S.; Sunwoo, M.; Jo, K. Hierarchical trajectory planning of an autonomous car based on the integration of a sampling and an optimization method. IEEE Trans. Intell. Transp. Syst. 2018, 19, 613–626.

- Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the 31st International Conference on Machine Learning, Beijing, China, 21–26 June 2014.

- Naseer, F.; Khan, M.N.; Altalbe, A. Telepresence Robot with DRL Assisted Delay Compensation in IoT-Enabled Sustainable Healthcare Environment. Sustainability 2023, 15, 3585.

- Schouten, A.P.; Portegies, T.C.; Withuis, I.; Willemsen, L.M.; Mazerant-Dubois, K. Robomorphism: Examining the effects of telepresence robots on between-student cooperation. Comput. Hum. Behav. 2022, 126, 1069800.

| Parameters | Unit | Value |
|---|---|---|
| Obstacles | 3 × 3 feet | 9 (fixed) |
| Track | Coiled | 1 |
| Initial Point | - | Doctor’s Office |
| Termination Point | - | Patient Ward |
| Total Distance | Meters | 130 |

| Authors | Approaches | Limitations |
|---|---|---|
| T. M. Howard [61] | This approach creates sensitive search spaces, which show additional benefits over existing approaches when steering along diverse paths. It discretizes the state space and transforms the original control problem into a graph search problem, which permits the use of pre-computed motions. | With this approach, it is easy to satisfy environmental constraints when the planned trajectories are expressed in state space, but their dynamic feasibility cannot easily be guaranteed. It also requires substantial computational power. |
| S. Wang [62] | This approach uses a state lattice for the trajectories and selects the optimal one according to cost criteria. With this approach, it is simpler to produce reasonable paths using the motion planner. | This approach increases the chances of unnecessary shorts within the spatial horizon. For optimal performance, non-uniform sampling of the spatial horizon is recommended when constructing the state lattice. |
| M. Likhachev [63] | The authors present an algorithm that provides solutions with bounded sub-optimality. | It requires accurate a priori modelling of the environment. If the models are imprecise, the algorithm gives inaccurate results; it is also not recommended in dynamic environments. |
| M. Brezak [64] | This approach interpolates between lines and circles and performs path planning over continuous trajectories. | The obtained results are not optimal, and the trajectories are not smooth. |
| W. Lim [65] | This approach extends sampling and interpolation with a numerical optimization step. | The additional optimization step increases the computational complexity, so the approach is not recommended for real-time applications. |
| D. Silver [66] | The authors present a deterministic policy gradient algorithm for continuous actions. | Implementing this approach can result in rough motion. The derivation of the cost function becomes complex, and the approach is not recommended in environments with moving obstacles. |
| F. Naseer [67] | The deep reinforcement learning (DRL)-based deep deterministic policy gradient (DDPG) method improves control of the telepresence robot (TR) when connectivity issues arise. It also offers an approach for maneuvering the TR in unknown scenarios. | The method needs further enhancement for dynamic obstacles and multi-robot tasks. Its performance degrades in the presence of moving obstacles and loss of connectivity. |

| R | U | I = U/R | ΔU | ΔU/I |
|---|---|---|---|---|
| 2.5 kΩ | 1.119 V | 447.6 µA | 32 mV | 71.5 Ω |
| 1 kΩ | 1.070 V | 1.07 mA | 81 mV | 75.7 Ω |
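The rows of this table are internally consistent with Ohm's law: the third column equals the voltage divided by the resistor value (1.119 V / 2.5 kΩ = 447.6 µA), and the last column equals the fourth column divided by that current (32 mV / 447.6 µA ≈ 71.5 Ω). The short check below reproduces both derived columns. Which physical quantity the fourth column measures is not stated in the surviving text, so the sketch simply treats it as a voltage; the function name is hypothetical.

```python
from typing import Tuple


def derived_current_and_resistance(r_ohm: float, u_volt: float,
                                   delta_u_volt: float) -> Tuple[float, float]:
    """Return (I, R_x) with I = U / R and R_x = delta_U / I (Ohm's law applied twice)."""
    if r_ohm <= 0.0:
        raise ValueError("resistor value must be positive")
    current = u_volt / r_ohm
    if current == 0.0:
        raise ValueError("zero current: R_x is undefined")
    return current, delta_u_volt / current


if __name__ == "__main__":
    # Rows copied from the table: (R in ohm, U in V, delta U in V).
    for r, u, du in [(2500.0, 1.119, 0.032), (1000.0, 1.070, 0.081)]:
        i, rx = derived_current_and_resistance(r, u, du)
        print(f"R = {r:.0f} ohm: I = {i * 1e6:.1f} uA, R_x = {rx:.1f} ohm")
```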

| Parameter | Significance |
|---|---|
| | Gain of the derivative component of the PID controller |
| | Gain of the proportional component of the PID controller |
| | Gain of the integral component of the PID controller |
| | Gain of the anti-windup feedback |
| | Lower limit of the manipulated variable |
| | Upper limit of the manipulated variable |
| | Time constant, in seconds, of the smoothing filter in the feedback branch |
| MaxErr | Maximum permitted deviation, in encoder pulses, between the robot's actual position and the target position. If, at the end of a sub-trajectory, the actual position lies in the interval [target position − MaxErr, target position + MaxErr], the goal is considered reached and the robot is stopped. |
| MaxSpeed | Maximum average speed, in EncImp/Cyc (encoder pulses per control cycle), to which the robot should accelerate |
| Accel | Acceleration of the robot, in EncImp/Cyc² |
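The MaxSpeed, Accel and MaxErr parameters describe a sub-trajectory that accelerates at a fixed rate up to a speed limit, brakes toward the target, and stops once the actual position is within MaxErr pulses of it. The following is a minimal sketch of such a trapezoidal profile, assuming one position setpoint per control cycle in encoder pulses. The function names are hypothetical, and the braking rule (start decelerating once v²/(2·Accel) reaches the remaining distance) is a common choice rather than the authors' documented one.

```python
from typing import List


def trapezoidal_setpoints(distance: float, max_speed: float, accel: float) -> List[float]:
    """Per-cycle position setpoints (EncImp) for a move of `distance` pulses.

    max_speed is in EncImp/Cyc and accel in EncImp/Cyc^2.
    """
    if distance < 0.0 or max_speed <= 0.0 or accel <= 0.0:
        raise ValueError("distance must be >= 0; max_speed and accel must be > 0")
    creep = min(accel, max_speed)   # minimum speed so the profile always terminates
    pos, v = 0.0, 0.0
    setpoints: List[float] = []
    while pos < distance:
        remaining = distance - pos
        if v * v / (2.0 * accel) >= remaining:
            v = max(v - accel, creep)           # braking phase
        else:
            v = min(v + accel, max_speed)       # acceleration / cruise phase
        pos = min(pos + v, distance)            # never overshoot the target setpoint
        setpoints.append(pos)
    return setpoints


def target_reached(actual: float, target: float, max_err: float) -> bool:
    """True if actual lies in [target - MaxErr, target + MaxErr]."""
    return target - max_err <= actual <= target + max_err


if __name__ == "__main__":
    profile = trapezoidal_setpoints(distance=100.0, max_speed=5.0, accel=1.0)
    speeds = [b - a for a, b in zip([0.0] + profile, profile)]
    print(f"{len(profile)} cycles, speed profile: {speeds}")
```

The speed per cycle never exceeds MaxSpeed, and the final setpoint lands exactly on the target; the MaxErr check is applied to the measured position, not to the setpoint.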

| Algorithm | Trip | Trial 1 (distance/time) | Trial 2 (distance/time) | Trial 3 (distance/time) | Mean (distance/time) |
|---|---|---|---|---|---|
| A* | First trip | 8.85 m/91 s | 9.79 m/99 s | 9.15 m/95 s | 9.25 m/95 s |
| A* | Second trip | 9.95 m/90 s | 9.15 m/87 s | 8.15 m/88 s | 9.10 m/88 s |
| Proposed Framework | First trip | 8.15 m/84 s | 8.85 m/89 s | 7.75 m/85 s | 8.25 m/86 s |
| Proposed Framework | Second trip | 7.85 m/86 s | 7.55 m/87 s | 8.15 m/88 s | 7.85 m/87 s |
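The Mean column is the arithmetic mean of the three trials. Recomputing it from the tabulated trial values with Python's statistics.mean, as below, gives figures that agree with the reported means to within about 0.02 m and 0.5 s (for example, 9.26 m rather than 9.25 m for the first A* trip). The dictionary layout and function name are illustrative choices.

```python
from statistics import mean

# (distance in m, time in s) for trials 1-3, copied from the table.
RESULTS = {
    ("A*", "First trip"): [(8.85, 91), (9.79, 99), (9.15, 95)],
    ("A*", "Second trip"): [(9.95, 90), (9.15, 87), (8.15, 88)],
    ("Proposed Framework", "First trip"): [(8.15, 84), (8.85, 89), (7.75, 85)],
    ("Proposed Framework", "Second trip"): [(7.85, 86), (7.55, 87), (8.15, 88)],
}


def trial_means(runs):
    """Return (mean distance, mean time) over a list of (distance, time) trials."""
    return mean(d for d, _ in runs), mean(t for _, t in runs)


if __name__ == "__main__":
    for (algorithm, trip), runs in RESULTS.items():
        d, t = trial_means(runs)
        print(f"{algorithm:<20} {trip:<12} {d:.2f} m / {t:.1f} s")
```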

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Altalbe, A.A.; Khan, M.N.; Tahir, M. Design of a Telepresence Robot to Avoid Obstacles in IoT-Enabled Sustainable Healthcare Systems. Sustainability 2023, 15, 5692. https://doi.org/10.3390/su15075692

Altalbe AA, Khan MN, Tahir M. Design of a Telepresence Robot to Avoid Obstacles in IoT-Enabled Sustainable Healthcare Systems. Sustainability. 2023; 15(7):5692. https://doi.org/10.3390/su15075692

Chicago/Turabian Style: Altalbe, Ali A., Muhammad Nasir Khan, and Muhammad Tahir. 2023. "Design of a Telepresence Robot to Avoid Obstacles in IoT-Enabled Sustainable Healthcare Systems" Sustainability 15, no. 7: 5692. https://doi.org/10.3390/su15075692

APA Style: Altalbe, A. A., Khan, M. N., & Tahir, M. (2023). Design of a Telepresence Robot to Avoid Obstacles in IoT-Enabled Sustainable Healthcare Systems. Sustainability, 15(7), 5692. https://doi.org/10.3390/su15075692