Urban Park Lighting Quality Perception: An Immersive Virtual Reality Experiment

Abstract

1. Introduction

1.1. Immersive Virtual Reality for Outdoor Lighting Design

1.2. Subjective Design Factors for Outdoor Lighting

1.3. Eye-Tracking for Lighting Design

1.4. Aim of the Research

- Lighting management in urban green parks and a comprehensive investigation of how people feel in different lighting environments (limitations highlighted in Section 1.1);

- Evaluation of the effects of brightness and correlated color temperature (CCT) on fixation behavior and on pupil changes, measured with the Index of Pupillary Activity (IPA) (limitations pointed out in Section 1.2);

- Investigation of the effects of brightness and CCT on the perceived quality of street lighting, fixation, and pupillary activity (in contrast to the feelings, motivation, and sense of safety analyzed in [13]).

2. Materials

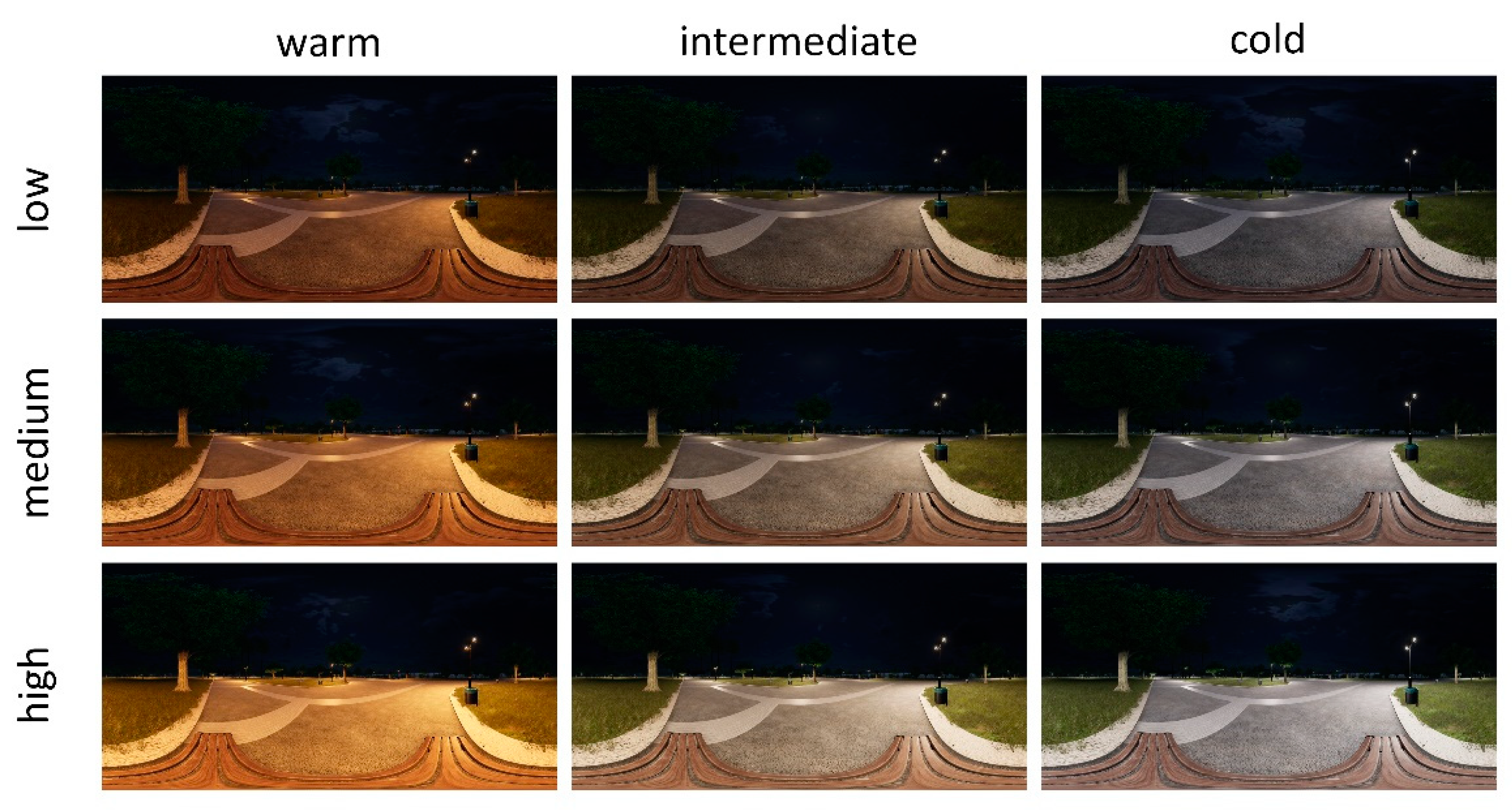

2.1. Virtual Environment

2.2. Questionnaire

3. Methodology

3.1. Experimental Design

3.2. Participants

3.3. Eye-Tracking Measurement

4. Results

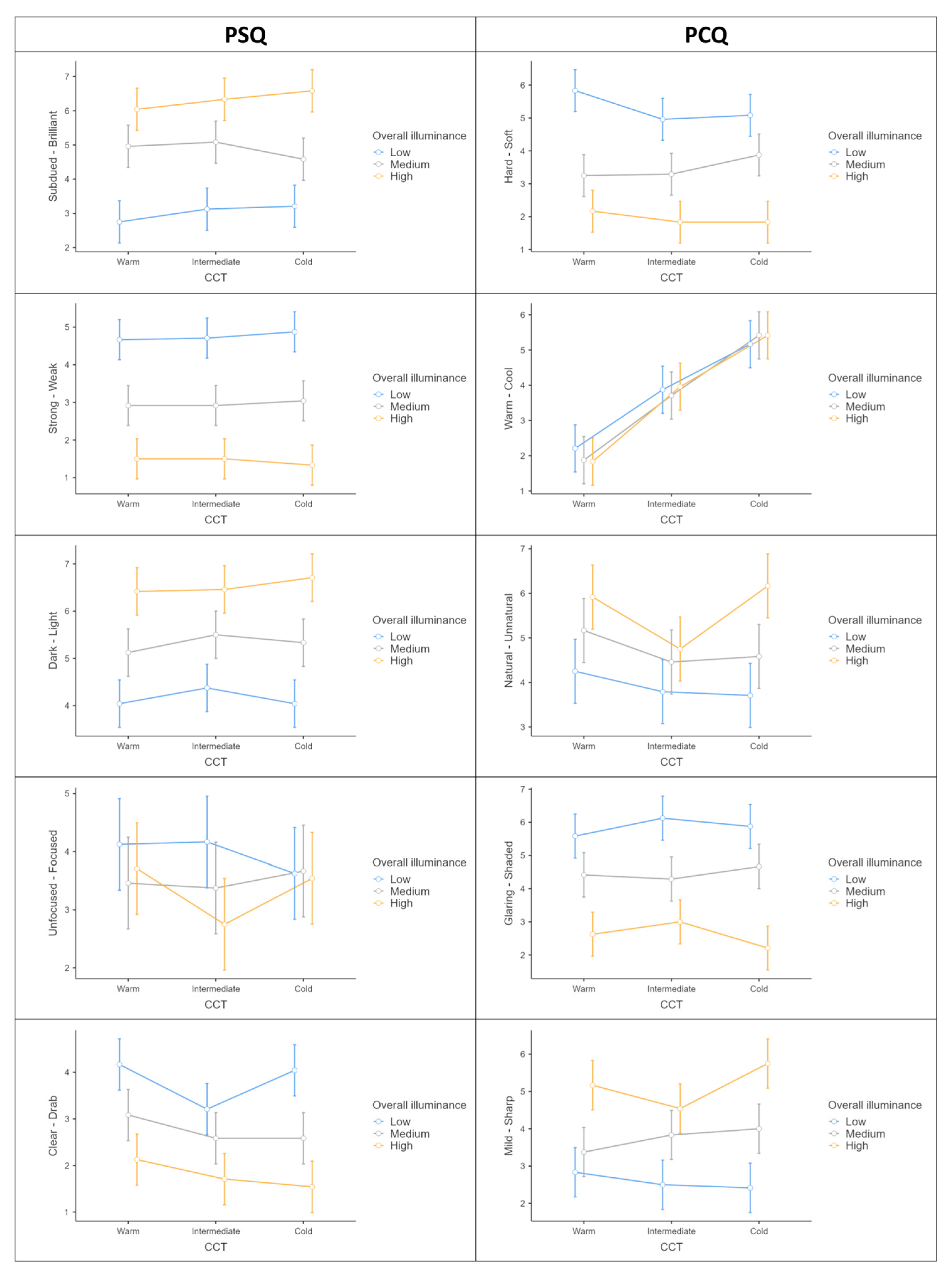

4.1. POLQ Questionnaire Results

4.2. Eye-Tracking Measurements Results

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Senese, V.P.; Pascale, A.; Maffei, L.; Cioffi, F.; Sergi, I.; Gnisci, A.; Masullo, M. The Influence of Personality Traits on the Measure of Restorativeness in an Urban Park: A Multisensory Immersive Virtual Reality Study. In Neural Approaches to Dynamics of Signal Exchanges; Springer: Berlin/Heidelberg, Germany, 2020; pp. 347–357.

2. Commission Internationale de l’Eclairage. CIE 115-2010: Lighting of Roads for Motor and Pedestrian Traffic; CIE: Vienna, Austria, 2010.

3. EN 12665:2018; Light and Lighting—Basic Terms and Criteria for Specifying Lighting Requirements. European Committee for Standardization: Brussels, Belgium, 2018.

4. Donatello, S.; Rodríguez, R.; Quintero, M.G.C.; JRC, O.W.; Van Tichelen, P.; Van, V.; Hoof, T.G.V. Revision of the EU Green Public Procurement Criteria for Road Lighting and Traffic Signals; Publications Office of the European Union: Luxembourg, 2019; p. 127.

5. EN 12464-1:2021; Light and Lighting—Lighting of Work Places—Part 1: Indoor Work Places. European Committee for Standardization: Brussels, Belgium, 2021.

6. EN 12193:2018; Light and Lighting—Sports Lighting. European Committee for Standardization: Brussels, Belgium, 2018.

7. EN 13201-1:2014—Street lighting; Guidelines on Selection of Lighting Classes. CEN: Brussels, Belgium, 2014.

8. Scorpio, M.; Laffi, R.; Masullo, M.; Ciampi, G.; Rosato, A.; Maffei, L.; Sibilio, S. Virtual Reality for Smart Urban Lighting Design: Review, Applications and Opportunities. Energies 2020, 13, 3809.

9. DiLaura, D.L.; Houser, K.; Mistrick, R.; Steffy, G.R. The Lighting Handbook: Reference and Application; Illuminating Engineering Society of North America: New York, NY, USA, 2011; p. 1328.

10. Moyer, J.L. The Landscape Lighting Book; Wiley: Hoboken, NJ, USA, 2013; p. 13. ISBN 1-118-41875-1.

11. Li, F.; Chen, Y.; Liu, Y.; Chen, D. Comparative in Situ Study of LEDs and HPS in Road Lighting. Leukos 2012, 8, 205–214.

12. Smith, B.; Hallo, J. Informing Good Lighting in Parks through Visitors’ Perceptions and Experiences. Int. J. Sustain. Light. 2019, 21, 47–65.

13. Masullo, M.; Cioffi, F.; Li, J.; Maffei, L.; Scorpio, M.; Iachini, T.; Ruggiero, G.; Malferà, A.; Ruotolo, F. An investigation of the influence of the night lighting in a urban park on individuals’ emotions. Sustainability 2022, 14, 8556.

14. Schroer, S.; Hölker, F. Impact of Lighting on Flora and Fauna. In Handbook of Advanced Lighting Technology; Karlicek, R., Sun, C.-C., Zissis, G., Ma, R., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 1–33. ISBN 978-3-319-00295-8.

15. Łopuszyńska, A.; Bartyna-Zielińska, M. Lighting of Urban Green Areas–the Case of Grabiszyn Park in Wrocław. Searching for the Balance between Light and Darkness through Social and Technical Issues. EDP Sci. 2019, 100, 00049.

16. Chen, Y.; Cui, Z.; Hao, L. Virtual Reality in Lighting Research: Comparing Physical and Virtual Lighting Environments. Light. Res. Technol. 2019, 51, 820–837.

17. Chamilothori, K.; Wienold, J.; Andersen, M. Adequacy of Immersive Virtual Reality for the Perception of Daylit Spaces: Comparison of Real and Virtual Environments. Leukos 2019, 15, 203–226.

18. Lee, J.H.; Lee, Y. The Effectiveness of Virtual Reality Simulation on the Qualitative Analysis of Lighting Design. J. Digit. Landsc. Archit. 2021, 6, 195–202.

19. Scorpio, M.; Laffi, R.; Teimoorzadeh, A.; Ciampi, G.; Masullo, M.; Sibilio, S. A Calibration Methodology for Light Sources Aimed at Using Immersive Virtual Reality Game Engine as a Tool for Lighting Design in Buildings. J. Build. Eng. 2022, 48, 103998.

20. Sanchez-Sepulveda, M.; Fonseca, D.; Franquesa, J.; Redondo, E. Virtual Interactive Innovations Applied for Digital Urban Transformations. Mixed Approach. Future Gener. Comput. Syst. 2019, 91, 371–381.

21. Nasar, J.L.; Bokharaei, S. Lighting Modes and Their Effects on Impressions of Public Squares. J. Environ. Psychol. 2017, 49, 96–105.

22. Rockcastle, S.; Danell, M.; Calabrese, E.; Sollom-Brotherton, G.; Mahic, A.; Van Den Wymelenberg, K.; Davis, R. Comparing Perceptions of a Dimmable LED Lighting System between a Real Space and a Virtual Reality Display. Light. Res. Technol. 2021, 53, 701–725.

23. Siess, A.; Wölfel, M. User Color Temperature Preferences in Immersive Virtual Realities. Comput. Graph. 2019, 81, 20–31.

24. Bastürk, S.; Maffei, L.; Perea Pérez, F.; Ranea Palma, A. Multisensory evaluation to support urban decision making. In Proceedings of the International Seminar on Virtual Acoustics, Valencia, Spain, 24–25 November 2011; pp. 114–121.

25. Houser, K.W.; Boyce, P.R.; Zeitzer, J.M.; Herf, M. Human-Centric Lighting: Myth, Magic or Metaphor? Light. Res. Technol. 2021, 53, 97–118.

26. Bellazzi, A.; Bellia, L.; Chinazzo, G.; Corbisiero, F.; D’Agostino, P.; Devitofrancesco, A.; Fragliasso, F.; Ghellere, M.; Megale, V.; Salamone, F. Virtual Reality for Assessing Visual Quality and Lighting Perception: A Systematic Review. Build. Environ. 2022, 209, 108674.

27. Flynn, J.E.; Hendrick, C.; Spencer, T.; Martyniuk, O. A Guide to Methodology Procedures for Measuring Subjective Impressions in Lighting. J. Illum. Eng. Soc. 1979, 8, 95–110.

28. Boyce, P.R. Human Factors in Lighting, 3rd ed.; Taylor & Francis: Oxford, UK, 2014; pp. 163–193.

29. Allan, A.C.; Garcia-Hansen, V.; Isoardi, G.; Smith, S.S. Subjective Assessments of Lighting Quality: A Measurement Review. Leukos 2019, 15, 115–126.

30. Shikakura, T.; Kikuchi, S.; Tanaka, T.; Furuta, Y. Psychological Evaluation of Outdoor Pedestrian Lighting Based on Rendered Images by Computer Graphics. J. Light Vis. Environ. 1992, 16, 37–44.

31. Johansson, M.; Rosén, M.; Küller, R. Individual Factors Influencing the Assessment of the Outdoor Lighting of an Urban Footpath. Light. Res. Technol. 2011, 43, 31–43.

32. Nikunen, H. Perceptions of Lighting, Perceived Restorativeness, Preference and Fear in Outdoor Spaces. Ph.D. Thesis, School of Electrical Engineering, Tokyo, Japan, 2013.

33. Nikunen, H.; Puolakka, M.; Rantakallio, A.; Korpela, K.; Halonen, L. Perceived Restorativeness and Walkway Lighting in Near-Home Environments. Light. Res. Technol. 2014, 46, 308–328.

34. Kim, D.H.; Noh, K.B. Perceived Adequacy of Illumination and Pedestrians’ Night-Time Experiences in Urban Obscured Spaces: A Case of London. Indoor Built Environ. 2018, 27, 1134–1148.

35. Johansson, M.; Pedersen, E.; Maleetipwan-Mattsson, P.; Kuhn, L.; Laike, T. Perceived Outdoor Lighting Quality (POLQ): A Lighting Assessment Tool. J. Environ. Psychol. 2014, 39, 14–21.

36. Foulsham, T.; Walker, E.; Kingstone, A. The Where, What and When of Gaze Allocation in the Lab and the Natural Environment. Vis. Res. 2011, 51, 1920–1931.

37. Cottet, M.; Vaudor, L.; Tronchère, H.; Roux-Michollet, D.; Augendre, M.; Brault, V. Using Gaze Behavior to Gain Insights into the Impacts of Naturalness on City Dwellers’ Perceptions and Valuation of a Landscape. J. Environ. Psychol. 2018, 60, 9–20.

38. Fotios, S.; Uttley, J.; Cheal, C.; Hara, N. Using Eye-Tracking to Identify Pedestrians’ Critical Visual Tasks, Part 1. Dual Task Approach. Light. Res. Technol. 2015, 47, 133–148.

39. Fotios, S.; Uttley, J.; Yang, B. Using Eye-Tracking to Identify Pedestrians’ Critical Visual Tasks. Part 2. Fixation on Pedestrians. Light. Res. Technol. 2015, 47, 149–160.

40. Zhang, L.M.; Zhang, R.X.; Jeng, T.S.; Zeng, Z.Y. Cityscape Protection Using VR and Eye Tracking Technology. J. Vis. Commun. Image Represent. 2019, 64, 102639.

41. Anderson, N.; Bischof, W. Eye and Head Movements While Looking at Rotated Scenes in VR.: Session “Beyond the Screen’s Edge” at the 20th European Conference on Eye Movement Research (ECEM) in Alicante, 19.8.2019. J. Eye Mov. Res. 2019, 12.

42. Haskins, A.J.; Mentch, J.; Botch, T.L.; Robertson, C.E. Active Vision in Immersive, 360° Real-World Environments. Sci. Rep. 2020, 10, 14304.

43. Kim, J.H.; Kim, J.Y. Measuring Visual Attention Processing of Virtual Environment Using Eye-Fixation Information. Archit. Res. 2020, 22, 155–162.

44. Uttley, J.; Simpson, J.; Qasem, H. Eye-Tracking in the Real World: Insights About the Urban Environment. In Handbook of Research on Perception-Driven Approaches to Urban Assessment and Design; IGI Global: Hershey, PA, USA, 2018; pp. 368–396.

45. Naber, M.; Alvarez, G.; Nakayama, K. Tracking the Allocation of Attention Using Human Pupillary Oscillations. Front. Psychol. 2013, 4, 919.

46. Schwiedrzik, C.M.; Sudmann, S.S. Pupil Diameter Tracks Statistical Structure in the Environment to Increase Visual Sensitivity. J. Neurosci. 2020, 40, 4565.

47. Binda, P.; Gamlin, P.D. Renewed Attention on the Pupil Light Reflex. Trends Neurosci. 2017, 40, 455–457.

48. Partala, T.; Surakka, V. Pupil Size Variation as an Indication of Affective Processing. Int. J. Hum.-Comput. Stud. 2003, 59, 185–198.

49. Sterpenich, V.; D’Argembeau, A.; Desseilles, M.; Balteau, E.; Albouy, G.; Vandewalle, G.; Degueldre, C.; Luxen, A.; Collette, F.; Maquet, P. The Locus Ceruleus Is Involved in the Successful Retrieval of Emotional Memories in Humans. J. Neurosci. 2006, 26, 7416.

50. Duchowski, A.T.; Krejtz, K.; Krejtz, I.; Biele, C.; Niedzielska, A.; Kiefer, P.; Raubal, M.; Giannopoulos, I. The Index of Pupillary Activity: Measuring Cognitive Load Vis-à-Vis Task Difficulty with Pupil Oscillation. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–13.

51. Imaoka, Y.; Flury, A.; de Bruin, E.D. Assessing Saccadic Eye Movements With Head-Mounted Display Virtual Reality Technology. Front. Psychiatry 2020, 11, 572938.

52. Robertson, A.R. The CIE 1976 Color-Difference Formulae. Color Res. Appl. 1977, 2, 7–11.

53. Safdar, M.; Luo, M.R.; Mughal, M.F.; Kuai, S.; Yang, Y.; Fu, L.; Zhu, X. A Neural Response-Based Model to Predict Discomfort Glare from Luminance Image. Light. Res. Technol. 2018, 50, 416–428.

54. Chamilothori, K.; Chinazzo, G.; Rodrigues, J.; Dan-Glauser, E.S.; Wienold, J.; Andersen, M. Subjective and Physiological Responses to Façade and Sunlight Pattern Geometry in Virtual Reality. Build. Environ. 2019, 150, 144–155.

55. Abd-Alhamid, F.; Kent, M.; Bennett, C.; Calautit, J.; Wu, Y. Developing an Innovative Method for Visual Perception Evaluation in a Physical-Based Virtual Environment. Build. Environ. 2019, 162, 106278.

56. Faul, F.; Erdfelder, E.; Lang, A.-G.; Buchner, A. G*Power 3: A Flexible Statistical Power Analysis Program for the Social, Behavioral, and Biomedical Sciences. Behav. Res. Methods 2007, 39, 175–191.

57. Sipatchin, A.; Wahl, S.; Rifai, K. Eye-Tracking for Clinical Ophthalmology with Virtual Reality (VR): A Case Study of the HTC Vive Pro Eye’s Usability. Healthcare 2021, 9, 180.

58. Agtzidis, I.; Startsev, M.; Dorr, M. 360-Degree Video Gaze Behaviour: A Ground-Truth Data Set and a Classification Algorithm for Eye Movements. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1007–1015.

59. Huang, R. ET-Remove-Artifacts. GitHub, 2020. Available online: https://github.com/EmotionCognitionLab/ET-remove-artifacts (accessed on 6 October 2021).

60. Mahanama, B.; Jayawardana, Y.; Rengarajan, S.; Jayawardena, G.; Chukoskie, L.; Snider, J.; Jayarathna, S. Eye Movement and Pupil Measures: A Review. Front. Comput. Sci. 2022, 3, 733531.

61. Kuhn, L.; Johansson, M.; Laike, T.; Govén, T. Residents’ Perceptions Following Retrofitting of Residential Area Outdoor Lighting with LEDs. Light. Res. Technol. 2013, 45, 568–584.

62. Davis, R.G.; Ginthner, D.N. Correlated Color Temperature, Illuminance Level, and the Kruithof Curve. J. Illum. Eng. Soc. 1990, 19, 27–38.

63. Fotios, S. A Revised Kruithof Graph Based on Empirical Data. Leukos 2017, 13, 3–17.

64. Yang, W.; Jeon, J.Y. Effects of Correlated Colour Temperature of LED Light on Visual Sensation, Perception, and Cognitive Performance in a Classroom Lighting Environment. Sustainability 2020, 12, 4051.

65. Rahm, J.; Sternudd, C.; Johansson, M. “In the Evening, I Don’t Walk in the Park”: The Interplay between Street Lighting and Greenery in Perceived Safety. Urban Des. Int. 2021, 26, 42–52.

66. Li, Y.; Ru, T.; Chen, Q.; Qian, L.; Luo, X.; Zhou, G. Effects of Illuminance and Correlated Color Temperature of Indoor Light on Emotion Perception. Sci. Rep. 2021, 11, 14351.

67. Lan, L.; Hadji, S.; Xia, L.; Lian, Z. The Effects of Light Illuminance and Correlated Color Temperature on Mood and Creativity. Build. Simul. 2021, 14, 463–475.

68. Zhang, J.; Dai, W. Research on Night Light Comfort of Pedestrian Space in Urban Park. Comput. Math. Methods Med. 2021, 2021, 3130747.

| Virtual scene overall illuminance level | Quantity | Warm CCT | Intermediate CCT | Cool CCT |
|---|---|---|---|---|
| Low | CCT (K) | 2890 | 5413 | 7912 |
| Low | E (lux) | 6.7 | 5.5 | 5.4 |
| Medium | CCT (K) | 2727 | 4918 | 6922 |
| Medium | E (lux) | 12.8 | 11.3 | 11.5 |
| High | CCT (K) | 2871 | 4907 | 6629 |
| High | E (lux) | 23.2 | 20.6 | 19.3 |

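| POLQ dimension | English | Italian |
|---|---|---|
| Perceived strength quality (PSQ) | Clear–Drab | Chiara–Cupa |
| Perceived strength quality (PSQ) | Strong–Weak | Forte–Debole |
| Perceived strength quality (PSQ) | Unfocused–Focused | Uniforme–Concentrata |
| Perceived strength quality (PSQ) | Subdued–Brilliant | Fioca–Brillante |
| Perceived strength quality (PSQ) | Dark–Light | Scura–Luminosa |
| Perceived comfort quality (PCQ) | Mild–Sharp | Morbida–Netta |
| Perceived comfort quality (PCQ) | Hard–Soft | Intensa–Soffusa |
| Perceived comfort quality (PCQ) | Warm–Cool | Calda–Fredda |
| Perceived comfort quality (PCQ) | Glaring–Shaded | Abbagliante–Non abbagliante |
| Perceived comfort quality (PCQ) | Natural–Unnatural | Naturale–Innaturale |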

| POLQ dimension | Item | Illuminance level: F | Illuminance level: p | Illuminance level: ηp² | CCT: F | CCT: p | CCT: ηp² | Illuminance level × CCT: F | Illuminance level × CCT: p | Illuminance level × CCT: ηp² |
|---|---|---|---|---|---|---|---|---|---|---|
| Perceived strength quality (PSQ) | Subdued–Brilliant | 116.603 | <0.001 *** | 0.835 | 0.719 | 0.493 | 0.030 | 0.631 | 0.642 | 0.027 |
| Perceived strength quality (PSQ) | Strong–Weak | 125.292 | <0.001 *** | 0.845 | 0.034 | 0.967 | 0.001 | 0.172 | 0.952 | 0.007 |
| Perceived strength quality (PSQ) | Dark–Light | 66.072 | <0.001 *** | 0.742 | 0.664 | 0.519 | 0.028 | 0.411 | 0.800 | 0.018 |
| Perceived strength quality (PSQ) | Unfocused–Focused | 1.251 | 0.296 | 0.052 | 0.652 | 0.525 | 0.028 | 1.479 | 0.215 | 0.060 |
| Perceived strength quality (PSQ) | Clear–Drab | 37.03 | <0.001 *** | 0.617 | 5.00 | 0.011 * | 0.179 | 1.23 | 0.305 | 0.051 |
| Perceived comfort quality (PCQ) | Hard–Soft | 97.47 | <0.001 *** | 0.809 | 1.60 | 0.213 | 0.065 | 1.27 | 0.287 | 0.052 |
| Perceived comfort quality (PCQ) | Warm–Cool | 0.089 | 0.915 | 0.004 | 47.151 | <0.001 *** | 0.672 | 1.003 | 0.410 | 0.042 |
| Perceived comfort quality (PCQ) | Natural–Unnatural | 51.70 | <0.001 *** | 0.465 | 3.08 | 0.056 | 0.118 | 2.29 | 0.066 | 0.091 |
| Perceived comfort quality (PCQ) | Glaring–Shaded | 87.767 | <0.001 *** | 0.792 | 0.488 | 0.617 | 0.021 | 1.301 | 0.276 | 0.054 |
| Perceived comfort quality (PCQ) | Mild–Sharp | 43.10 | <0.001 *** | 0.652 | 1.41 | 0.255 | 0.058 | 1.94 | 0.111 | 0.078 |
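
The partial eta squared values in the table above are linked to the F statistics through the standard identity ηp² = (F · df_effect) / (F · df_effect + df_error). The following minimal sketch shows that relation. The degrees of freedom are not reported in the table, so the values 2 and 46 used here are an assumption made only for illustration, and the function name is hypothetical.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    scaled_f = f_value * df_effect
    return scaled_f / (scaled_f + df_error)


# ASSUMPTION: these degrees of freedom are not stated in the table;
# they are illustrative values for a main effect with three levels.
DF_EFFECT = 2
DF_ERROR = 46

# Main effect of overall illuminance level on the Subdued–Brilliant item.
print(round(partial_eta_squared(116.603, DF_EFFECT, DF_ERROR), 3))  # 0.835
```

Under the assumed degrees of freedom, the sketch returns the tabulated effect size for this item; with different degrees of freedom the result would differ.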

| Overall illuminance level | PSQ: Warm | PSQ: Intermediate | PSQ: Cold | PCQ: Warm | PCQ: Intermediate | PCQ: Cold |
|---|---|---|---|---|---|---|
| Low | M = 3.62 (SD = 0.87) | M = 3.89 (SD = 0.87) | M = 3.51 (SD = 0.99) | M = 5.26 (SD = 0.84) | M = 4.98 (SD = 0.9) | M = 4.75 (SD = 0.81) |
| Medium | M = 4.79 (SD = 0.98) | M = 4.88 (SD = 0.95) | M = 4.82 (SD = 0.93) | M = 4.18 (SD = 1.23) | M = 3.89 (SD = 0.91) | M = 3.75 (SD = 1.07) |
| High | M = 5.72 (SD = 1.05) | M = 5.6 (SD = 0.77) | M = 5.97 (SD = 0.43) | M = 3.15 (SD = 0.98) | M = 3.13 (SD = 1.23) | M = 2.15 (SD = 0.74) |
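
To make the opposite trends of the two POLQ dimensions easier to see, the cell means in the table above can be averaged across the three CCT conditions for each illuminance level. The sketch below does only that arithmetic. It assumes equal cell sizes, so that an unweighted mean of cell means is a reasonable summary; the variable and function names are hypothetical, and the result is not a statistic reported by the authors.

```python
from statistics import mean

# Cell means copied from the table; order within each list: warm, intermediate, cold.
psq_cell_means = {
    "low": [3.62, 3.89, 3.51],
    "medium": [4.79, 4.88, 4.82],
    "high": [5.72, 5.60, 5.97],
}
pcq_cell_means = {
    "low": [5.26, 4.98, 4.75],
    "medium": [4.18, 3.89, 3.75],
    "high": [3.15, 3.13, 2.15],
}


def average_over_cct(cell_means: dict[str, list[float]]) -> dict[str, float]:
    """Unweighted mean across CCT conditions (assumes equal cell sizes)."""
    return {level: round(mean(values), 2) for level, values in cell_means.items()}


print(average_over_cct(psq_cell_means))  # {'low': 3.67, 'medium': 4.83, 'high': 5.76}
print(average_over_cct(pcq_cell_means))  # {'low': 5.0, 'medium': 3.94, 'high': 2.81}
```

With these averages, PSQ rises and PCQ falls as the overall illuminance level increases, which matches the direction of the cell means in the table.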

| Variables (Generalized Linear Model) | χ² | df | p |
|---|---|---|---|
| Overall illuminance level | 14.5058 | 2 | <0.001 *** |
| CCT | 0.0619 | 2 | 0.970 |
| Labels | 149.6701 | 7 | <0.001 *** |
| Lightness | 64.1997 | 1 | <0.001 *** |
| Overall illuminance level × CCT | 10.6036 | 4 | 0.031 * |
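
The p-values in the table above follow from the upper tail of the chi-square distribution. For even degrees of freedom this tail has a closed form, p = exp(−x/2) · Σ (x/2)^k / k! for k = 0 … df/2 − 1, so the rows with df = 2 and df = 4 can be checked with the standard library alone. The sketch below is only such a check; the function name is hypothetical, the software used for the original analysis is not stated here, and the rows with odd df (Labels, Lightness) are outside the scope of this closed form.

```python
import math


def chi2_upper_tail_even_df(x: float, df: int) -> float:
    """Upper-tail chi-square probability; closed form valid for even df only."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive, even df")
    half = x / 2.0
    term = 1.0   # (x/2)^k / k! for k = 0
    total = 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total


# Test statistics and degrees of freedom taken from the table.
print(round(chi2_upper_tail_even_df(0.0619, 2), 3))   # 0.97  (CCT)
print(round(chi2_upper_tail_even_df(10.6036, 4), 3))  # 0.031 (illuminance level × CCT)
print(chi2_upper_tail_even_df(14.5058, 2) < 0.001)    # True  (illuminance level)
```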

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Masullo, M.; Cioffi, F.; Li, J.; Maffei, L.; Ciampi, G.; Sibilio, S.; Scorpio, M. Urban Park Lighting Quality Perception: An Immersive Virtual Reality Experiment. Sustainability 2023, 15, 2069. https://doi.org/10.3390/su15032069