Abstract

Despite the measures put in place in different countries, road traffic fatalities remain one of the leading causes of death worldwide. Reducing traffic fatalities and accidents is therefore one of the contributing factors to attaining sustainability goals. Factors such as road geometry, non-signalized road networks, mechanical failure of vehicles, inexperienced drivers, a lack of communication skills, distraction and the visual or cognitive impairment of road users all contribute to traffic accidents. These factors can be grouped into four categories: human, road, vehicle and environmental (road condition) factors. Machine learning algorithms are of great value for analysing such data, extracting hidden patterns, predicting the severity of accidents and summarizing the information in a useful format. In this study, three machine learning classification algorithms, namely Decision Tree, LightGBM and XGBoost, were used to predict the severity of road traffic accidents in the UK for the year 2020, with both default and tuned hyper-parameters. The results show that the Decision Tree algorithm with default parameters performs well in predicting traffic accident severity and highlights the critical variables that need to be monitored to reduce accidents on the roads. This study suggests that preventative strategies such as regular vehicle technical inspection, the strengthening of traffic policy and the redesign of vehicle protective equipment be implemented to reduce the severity of road accidents caused by vehicle characteristics.

1. Introduction

Traffic accident severity results from the complex interaction between one or more of the following factors: human, vehicle, road and environment, with the human factor found to be the most important but also the hardest to change [1,2,3]. Globally, around 1.3 million people are reported to die in road traffic accidents each year, with children and young adults most affected [4]. Road traffic accidents also cause large financial losses in terms of infrastructure damage, lost productivity and road accident fund payouts, for both countries and the individuals involved [4,5,6]. Given these losses, reducing or preventing traffic fatalities and accidents is one of the factors in attaining global sustainability goals and has become a priority in transportation management [7,8,9]. For every road accident, a report is compiled that records different accident attributes, which can be used to investigate the possible cause of the accident at that particular road section. However, many developing and least-developed countries lag behind the rest of the world in the availability of reliable accident data [10].

Accident reports also make it possible to identify road sections with frequent accidents, which can then be subjected to an accident study that may involve the professional reconstruction of the accident scene. Such reconstruction can be expensive, and it is challenging to recreate the actual behaviour of road users and the mechanical performance of the vehicles that contributed to the accident. Applying analytical methods to existing traffic accident data could therefore help predict and avert future accidents on an existing road or a new road network. Overall, investigating the nature of traffic accidents and the causes of their severity is crucial to building better and safer transportation systems [11]. Using data on road accidents that occurred in the UK in 2020, this study aims to investigate and pinpoint the primary causes of traffic accidents.

1.1. Current Trends: Road Accident Predictions

Accident prediction has become necessary to identify the main factors contributing to road traffic accidents and, in turn, to provide appropriate solutions that minimize their adverse effects [8,11,12]. Building a better and safer transportation system requires a proper understanding of the complex interactions between the different accident attributes. Accident databases are large and cover attributes in the following categories: road users, vehicles, roadway and environment [1,2]. Both statistical models and artificial intelligence models have been used to determine and understand the interactions between these attributes in relation to accident severity [1,2,8,13,14].

However, artificial intelligence models are gaining momentum because they can capture interactions between variables that would be impossible to establish directly with statistical models, and because they can handle and process large datasets [11,13,14,15,16]. Machine learning (ML) is a branch of artificial intelligence that supports data preparation, data analysis and decision making for real-time problems, and allows computers to learn from data without extensive explicit programming [8,11,13]. Machine learning identifies patterns in data and makes decisions with minimal human intervention.

1.2. Machine Learning and Road Accidents

Machine learning is a data-driven method that has found application in many real-world domains and academic fields [16,17,18,19]. In recent years, ML has been applied in transportation engineering [15,16], including the following traffic engineering areas: identifying road locations prone to accidents, determining the severity of damage or injury from an accident, the role of road users in traffic accidents, the impact of drink-driving on injury severity and the impact of environmental factors, to mention just a few [13,14,16,17,20,21,22]. Researchers have also explored the various families of ML models, which are categorized as supervised, unsupervised and semi-supervised [8,23]. Supervised models are further divided into regression and classification models. Overall, accurate prediction of traffic accident severity supports timely traffic safety management and strategies [2,6].

Road accidents account for a significant proportion of the serious injuries reported each year [4,7,9]. However, it is challenging to identify the specific conditions that lead to such events, which makes it difficult for road authorities to properly address the number and severity of road accidents [24,25]. Research has shown that human, vehicle, road and environmental factors all play vital roles [1,2,3,5,6,8,10,12,13,16,26]. Human factors include age [14,16,26,27,28,29], gender [12,29], driving experience [30,31] and the influence of alcohol and psychoactive substances [26], among others. Vehicle factors include vehicle age, engine capacity, type and model, and vehicle towing and articulation [2,12,13,16]. Road factors include road type, road condition, road class, road geometry and speed limit [3,12,13,16]. Environmental factors include the day of the week, weather and light conditions [1,2,3]. Of these factors, human factors have been explored extensively and various measures have been put in place to mitigate them [14,16,26,27,28,29,30,31], whereas the other factors still need to be explored. Because no single factor can be studied in isolation, two questions remain regarding traffic accidents: (i) which factors contribute directly and indirectly to traffic accident fatality, and (ii) what strategies can avert such incidents in the future? To answer these questions, and specifically to estimate the risk of a driver having a fatal or serious accident based solely on individual and vehicle data, this study combined machine learning with the United Kingdom’s road accident database. Situational information was analysed to estimate the severity of an accident [25].

2. Research Objective and Methodology

The main goal of this study is to apply analytical ML methods to traffic accident data in order to identify the direct and indirect causes that have a significant impact on traffic accidents. To accomplish this objective, a model was developed using several machine learning approaches, and the most recent, carefully structured dataset available was used to improve its accuracy. The modelling process comprises four main stages: (i) exploring the tabular data and preparing it for modelling, including handling missing values; (ii) using statistical techniques to identify the factors most likely to cause traffic accidents; (iii) training the model with machine learning algorithms; and (iv) assessing the model’s performance with standard classification metrics.

Traffic Crash Data: UK 2020

The tabular dataset used to develop the model in this study was obtained from the UK’s Department for Transport and covers the year 2020. Note that the traffic accident data for 2020 were affected by the COVID-19 pandemic and the associated restrictions on social gatherings [32]. Rather than a limitation, however, this offers an opportunity to explore factors other than human factors, which have been studied extensively [26,27,28,29,30,31]. The use of a single year of data is also intended to reflect the fact that most developing and least-developed nations are only beginning to build accident databases [33,34].

At the scene of each accident in the UK, detailed information was recorded for the report, including environmental characteristics such as weather, road type and light conditions; driver factors such as gender and age; accident descriptors such as severity and police presence; and vehicle descriptors such as age, power, type, model and the number of vehicles involved [16,35]. Each accident record is also tied to a specific location on the road network and describes aspects of the traffic and road conditions there [16,36].

The original dataset contains a total of 135,453 data points with 60 attributes. Each variable in the dataset was classified as categorical or numerical according to its nature. A large number of features can affect model performance, for example because training time grows with the number of features, and it can also increase the risk of over-fitting. To simplify the problem and improve the model, irrelevant or redundant features were removed [13,16]. The selected feature variables were junction control, day of the week, road type, road surface conditions, sex of driver, age of driver, age of vehicle, light conditions, weather conditions, special conditions at site, speed limit, number of vehicles, vehicle type and vehicle manoeuvre. Table 1 shows the descriptive statistics of the data used. These selections are justified by previous studies [2,14,16,19], in which these variables featured as important contributors to traffic accidents. The target variable was accident severity, divided into three categories according to the severity of the resulting personal injury: fatal, serious and slight. This study therefore examines how the chosen feature variables affect accident severity.

Table 1.

Descriptive statistics of the traffic accident data.

A pre-processing step was performed before each model was developed, to improve the models’ predictive capability. Noise was reduced by removing outliers [37], and the data were cleaned and checked for missing values that could disrupt the learning process. Random Forest feature importance from the Scikit-learn library (RRID:SCR 002577) was used to identify the most relevant and correlated attributes influencing the learning process. The resulting dataset was then modelled with supervised learning to predict the class label from driver and vehicle characteristics, weather conditions and road properties [14,19,35].
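The study does not state which outlier rule or missing-value strategy it applied. As a minimal sketch, assuming that incomplete rows are simply dropped and that outliers in a numeric column are flagged with the common 1.5 × IQR (interquartile range) rule, the cleaning step could look like this. The column names and sample rows are hypothetical.

```python
import statistics
from typing import Dict, List, Optional, Tuple

# Hypothetical record layout: column name -> value (None marks a missing value).
Record = Dict[str, Optional[float]]


def iqr_fences(values: List[float], k: float = 1.5) -> Tuple[float, float]:
    """Lower and upper fences of the k * IQR outlier rule."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartiles, 'exclusive' method
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def clean(records: List[Record], numeric_cols: List[str], k: float = 1.5) -> List[Record]:
    """Drop rows with any missing value, then rows lying outside the IQR
    fences of any listed numeric column (assumed cleaning rules)."""
    complete = [r for r in records if all(v is not None for v in r.values())]
    fences = {c: iqr_fences([r[c] for r in complete], k) for c in numeric_cols}
    return [
        r for r in complete
        if all(fences[c][0] <= r[c] <= fences[c][1] for c in numeric_cols)
    ]


# Hypothetical rows: one implausible vehicle age and one missing value.
rows: List[Record] = [
    {"age_of_vehicle": 3.0, "speed_limit": 30.0},
    {"age_of_vehicle": 5.0, "speed_limit": 30.0},
    {"age_of_vehicle": 7.0, "speed_limit": 40.0},
    {"age_of_vehicle": 4.0, "speed_limit": 30.0},
    {"age_of_vehicle": 6.0, "speed_limit": 30.0},
    {"age_of_vehicle": 95.0, "speed_limit": 30.0},
    {"age_of_vehicle": None, "speed_limit": 60.0},
]
kept = clean(rows, numeric_cols=["age_of_vehicle"])
print([r["age_of_vehicle"] for r in kept])  # [3.0, 5.0, 7.0, 4.0, 6.0]
```

Only `age_of_vehicle` is screened here. Coded variables such as the speed limit take a handful of legal values, so an IQR rule would wrongly flag the rarer limits as outliers.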

Because accidents more often result in serious and slight injuries than in fatalities, the accident dataset is imbalanced. Many researchers address this problem by oversampling or undersampling the data. In this study, SMOTE (Synthetic Minority Oversampling Technique) for imbalanced multi-class classification was used to synthesize new examples of the minority classes, so that the number of examples in each minority class more closely matched the number in the majority class. SMOTE is a very effective form of data augmentation for tabular data [19].
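The SMOTE step can be illustrated with a minimal pure-Python sketch of the basic algorithm. Each synthetic point is placed on the line segment between a minority-class sample and one of its k nearest neighbours from the same class. The sketch assumes numerically encoded features and Euclidean distance. The study does not say which implementation or settings it used. Libraries such as imbalanced-learn provide `SMOTE`, and `SMOTENC` for mixed categorical data. The toy data below are hypothetical.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def k_nearest(sample: Vector, pool: List[Vector], k: int) -> List[Vector]:
    """The k vectors of `pool` closest to `sample` (Euclidean), excluding `sample` itself."""
    others = [p for p in pool if p is not sample]
    return sorted(others, key=lambda p: math.dist(sample, p))[:k]


def smote(minority: List[Vector], n_new: int, k: int = 5, seed: int = 0) -> List[Vector]:
    """Generate n_new synthetic samples by interpolating between a random
    minority sample and one of its k nearest minority neighbours."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two samples in the class")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbour = rng.choice(k_nearest(base, minority, k))
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


def balance(X: List[Vector], y: List[str], k: int = 5, seed: int = 0) -> Tuple[List[Vector], List[str]]:
    """Oversample every class up to the size of the largest class."""
    classes = sorted(set(y))
    by_class = {c: [x for x, lab in zip(X, y) if lab == c] for c in classes}
    target = max(len(v) for v in by_class.values())
    X_out, y_out = list(X), list(y)
    for c in classes:
        missing = target - len(by_class[c])
        if missing > 0:
            X_out += smote(by_class[c], missing, k, seed)
            y_out += [c] * missing
    return X_out, y_out


X = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [0.3, 0.3], [0.25, 0.15], [0.05, 0.3],
     [1.0, 1.0], [1.2, 0.9],
     [2.0, 2.1], [2.2, 2.0], [2.1, 2.3]]
y = ["Slight"] * 6 + ["Serious"] * 2 + ["Fatal"] * 3
Xb, yb = balance(X, y, k=5, seed=42)
print({c: yb.count(c) for c in sorted(set(yb))})  # {'Fatal': 6, 'Serious': 6, 'Slight': 6}
```

In common practice the oversampling is applied to the training data only, so that synthetic points do not appear in the evaluation data.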

3. Model Development

Prediction is a supervised machine learning technique that excels at predictive data analysis. From training data, the model learns how the values of the feature variables map onto a particular target variable, and it then applies that mapping to new records. Because state-of-the-art prediction algorithms can handle complex and interrelated variables effectively, it is important to employ them to obtain the best accuracy. Multi-class classification algorithms with Sklearn (RRID: SCR 019053) were used in this study, namely Decision Tree (DT), Light Gradient Boosting Machine (LightGBM) and Extreme Gradient Boosting (XGBoost). These algorithms were selected because they have achieved good classification accuracy, and because Decision Tree requires little data preparation during pre-processing. Both LightGBM and XGBoost support parallel computation, but LightGBM trains faster and uses less memory than XGBoost, which lowers the communication cost of parallel learning [14,19]. XGBoost, for its part, is a relatively recent and highly effective machine learning prediction algorithm [19,21]. Following a thorough review of the multi-class classification algorithms reported in the literature, the scalable, flexible, accurate and relatively fast XGBoost algorithm was chosen for its more regularized model formulation and better control of over-fitting [14,19,21].
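The "more regularized" formulation refers to the penalty γT + ½λ‖w‖² that XGBoost adds to its training objective. Here T is the number of leaves and w the leaf weights. Let G and H be the sums of the first and second derivatives (gradients and Hessians) of the loss over the samples in a leaf. The optimal leaf weight is then w* = −G/(H + λ), and a split is worth keeping only if its regularized gain is positive. The sketch below computes these quantities for binary log-loss, a simplification of the multi-class (softmax) objective used for three severity classes. It is illustrative only and is not the authors' code. In the XGBoost Python package, λ and γ correspond to `reg_lambda` and `gamma`.

```python
from typing import List, Tuple


def grad_hess_logloss(y: List[int], p: List[float]) -> Tuple[List[float], List[float]]:
    """Gradient and Hessian of binary log-loss w.r.t. the raw score, given probabilities p."""
    g = [pi - yi for yi, pi in zip(y, p)]
    h = [pi * (1.0 - pi) for pi in p]
    return g, h


def leaf_weight(G: float, H: float, lam: float) -> float:
    """Optimal leaf value w* = -G / (H + lambda); a larger lambda shrinks it towards 0."""
    return -G / (H + lam)


def split_gain(GL: float, HL: float, GR: float, HR: float, lam: float, gamma: float) -> float:
    """Regularized gain of splitting a node into left (L) and right (R) children."""
    def score(G: float, H: float) -> float:
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


# Toy node: three positives then three negatives, all currently predicted p = 0.5.
g, h = grad_hess_logloss([1, 1, 1, 0, 0, 0], [0.5] * 6)
GL, HL, GR, HR = sum(g[:3]), sum(h[:3]), sum(g[3:]), sum(h[3:])
for lam, gamma in [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0)]:
    print(lam, gamma, round(split_gain(GL, HL, GR, HR, lam, gamma), 4))
```

With λ = 0 the gain of this perfect split is 3.0. Setting λ = 1 shrinks it to about 1.29, and adding γ = 2 makes it negative, so the split would be pruned. This is how the two penalties limit over-fitting.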

4. Model Evaluation

The goal of developing a predictive model is to obtain a model that is accurate on previously unseen data. This can be achieved with statistical methods in which the training dataset is carefully selected in order to estimate the model’s performance on new, unexplored data. The most basic model validation technique is to split off a portion of the labelled data and reserve it for evaluating the model’s final performance. When splitting the data, it is critical to preserve their statistical properties: to prevent bias in the trained model, the training and test datasets must share similar statistical characteristics with the original data. In the current study, the labelled dataset was divided into 80% for training and 20% for testing. Each model was evaluated in turn, and the models were compared in terms of the confusion matrix, sensitivity, specificity and the area under the curve (AUC) of the receiver operating characteristic (ROC) for accident severity. The confusion matrix provides a variety of criteria for evaluating model performance, from which a set of evaluation metrics can be derived. One of these is accuracy, the proportion of correct predictions, which is calculated as follows [19,37]:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP and FN denote True Positives, True Negatives, False Positives and False Negatives, respectively. Precision is the proportion of cases predicted as positive that are actually positive, and is computed as follows [19,37]:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall (sensitivity) is the proportion of actual positive cases that were correctly identified, and is calculated as follows [19,37]:

$$\text{Recall} = \text{Sensitivity} = \frac{TP}{TP + FN}$$

Specificity is the proportion of actual negative cases that were correctly identified, and is calculated as follows [19,37]:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

Lastly, the F1 score, the harmonic mean of precision and sensitivity, measures the balance between the two and is computed as follows [37]:

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Sensitivity and specificity indicate whether the algorithm with default or with tuned parameters is better suited to the data. If correctly identifying positives is more important for the data, the algorithm with the higher sensitivity is preferable; if correctly identifying negatives is more important, the algorithm with the higher specificity is preferable.
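For a three-class problem, each class is treated in turn as the positive class (one-vs-rest) to obtain per-class TP, TN, FP and FN. The accuracy, precision, recall, specificity and F1 definitions in this section then apply directly. The sketch below assumes that rows are actual classes and columns are predicted classes, which is the convention of scikit-learn's `confusion_matrix`. The matrix values are hypothetical and are not taken from Tables 4, 5, 8 or 9.

```python
from typing import Dict, List


def overall_accuracy(cm: List[List[int]]) -> float:
    """Correct predictions (the diagonal) divided by all predictions."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def per_class_metrics(cm: List[List[int]], labels: List[str]) -> Dict[str, Dict[str, float]]:
    """One-vs-rest metrics per class; cm[i][j] = count of actual class i predicted as class j."""
    total = sum(sum(row) for row in cm)
    results = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        fn = sum(cm[i]) - tp                  # actual i, predicted as another class
        fp = sum(row[i] for row in cm) - tp   # predicted i, actually another class
        tn = total - tp - fn - fp             # neither actual nor predicted i
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results[label] = {
            "precision": precision,
            "recall": recall,
            "specificity": specificity,
            "f1": f1,
            "fpr": fp / (fp + tn) if fp + tn else 0.0,
        }
    return results


labels = ["Fatal", "Serious", "Slight"]
cm = [[90, 8, 2],     # hypothetical counts: actual Fatal
      [10, 70, 20],   # actual Serious
      [0, 15, 85]]    # actual Slight
print(round(overall_accuracy(cm), 4))
for label, m in per_class_metrics(cm, labels).items():
    print(label, {name: round(value, 4) for name, value in m.items()})
```

Under this convention, the specificity of a class is the share of records from the other two classes that were not predicted as that class. That is presumably how the per-class specificities in the results tables should be read.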

Charts are also an easy way to gain a quick understanding of a classification model. To provide further evidence, the receiver operating characteristic (ROC) chart (RRID: SCR 008551) was also produced; it plots the true positive rate against the false positive rate across decision thresholds. Both macro-average and micro-average ROC curves were produced: a macro-average ROC curve computes the metric independently for each class and then averages the results, whereas a micro-average ROC curve aggregates the contributions of all classes before computing the metric. Another metric derived from the ROC curve is the area under the curve (AUC), the area beneath the ROC curve.
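In a multi-class setting, the ROC curve of each class is built one-vs-rest from the predicted probability of that class. The following is a minimal pure-Python sketch, assuming binary labels (1 for the class of interest, 0 otherwise) and one score per record. The threshold is lowered step by step, records with tied scores are processed together, and the AUC is the trapezoidal area under the resulting (FPR, TPR) points. Function names and toy inputs are illustrative.

```python
from typing import List, Tuple


def roc_points(y_true: List[int], scores: List[float]) -> List[Tuple[float, float]]:
    """(FPR, TPR) points obtained by sweeping the threshold from the highest score down."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative")
    ranked = sorted(zip(scores, y_true), key=lambda t: t[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Records with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc_trapezoid(points: List[Tuple[float, float]]) -> float:
    """Area under a piecewise-linear curve through the given points."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


print(auc_trapezoid(roc_points([1, 1, 0, 0], [0.9, 0.8, 0.3, 0.1])))  # perfect ranking
print(auc_trapezoid(roc_points([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1])))  # one mis-ordered pair
print(auc_trapezoid(roc_points([1, 0, 1, 0], [0.5, 0.5, 0.5, 0.5])))  # no information
```

The three calls print 1.0, 0.75 and 0.5. These correspond to a perfect ranker, a ranker that mis-orders one of the four positive/negative pairs, and a classifier with no discriminating power.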

5. Results and Discussion

In this work, a total of 135,453 records of UK traffic accidents in 2020 were examined. Fifteen attributes, selected by ranked feature selection, were used together with the class variable (injury severity) to predict the degree of injury severity in traffic accidents. The statistical analysis shows that male drivers (63.2%) and young drivers under 24 years old (30.7%) contribute the most to accidents. By vehicle type, cars contributed the most (67.70%), followed by pedal cycles (10%). By vehicle manoeuvre, going ahead (46%), turning left (18.32%) and slowing or stopping (5.24%) were the most frequent manoeuvres in accidents. The number of vehicles involved further contributes to accident severity, with two-vehicle accidents (70%) the most common. Vehicles between 0 and 10 years old were involved in more traffic accidents. Furthermore, accident numbers appeared to depend on the amount of traffic on a particular day, and most accidents occurred on roads with a 30 mph (48 km/h) speed limit. Since 30 mph is the standard limit on built-up roads in the UK, more accidents could be expected on busy urban roads and major roads through built-up areas. Most accidents also occurred at weekends, in daylight with street lights present, and in weather conditions that did not adversely affect driving (dry road conditions) [2,16,19]. To build the prediction models, three classification algorithms, namely Decision Tree, LightGBM and XGBoost, were applied to the dataset. In addition, 10-fold cross-validation was used to evaluate prediction performance: several hyper-parameter settings were evaluated for each model, and the setting yielding the best-performing model was chosen [6].
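The 10-fold cross-validation with hyper-parameter selection can be sketched as follows. The sketch assumes stratified folds, in which each fold keeps the class proportions of the full data, in line with the splitting requirement of Section 4. The search grid and the `evaluate` callback are hypothetical placeholders, because the study does not list the settings it searched. In practice, scikit-learn's `StratifiedKFold` and `GridSearchCV` perform the same job.

```python
import random
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Split = Tuple[List[int], List[int]]


def stratified_kfold(y: Sequence[str], n_splits: int = 10, seed: int = 0) -> List[Split]:
    """(train, test) index lists; each class is shuffled and dealt round-robin across folds."""
    rng = random.Random(seed)
    by_class: Dict[str, List[int]] = defaultdict(list)
    for idx, label in enumerate(y):
        by_class[label].append(idx)
    folds: List[List[int]] = [[] for _ in range(n_splits)]
    counter = 0  # keeps dealing where the previous class stopped, so fold sizes stay even
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        for idx in indices:
            folds[counter % n_splits].append(idx)
            counter += 1
    return [
        (sorted(i for j, fold in enumerate(folds) if j != k for i in fold), sorted(folds[k]))
        for k in range(n_splits)
    ]


def select_best(grid: List[dict], evaluate: Callable[[dict, List[int], List[int]], float],
                y: Sequence[str], n_splits: int = 10) -> Tuple[dict, float]:
    """Return the setting with the highest mean cross-validated score."""
    splits = stratified_kfold(y, n_splits)
    best, best_score = {}, float("-inf")
    for params in grid:
        score = sum(evaluate(params, train, test) for train, test in splits) / n_splits
        if score > best_score:
            best, best_score = params, score
    return best, best_score


def toy_evaluate(params: dict, train: List[int], test: List[int]) -> float:
    """Hypothetical stand-in for 'fit a model with params on train, score it on test'."""
    return -abs(params["max_depth"] - 6)


y = ["Slight"] * 50 + ["Serious"] * 30 + ["Fatal"] * 20
splits = stratified_kfold(y, n_splits=10, seed=1)
print([y[i] for i in splits[0][1]].count("Fatal"))  # 2 of the 20 fatal records per fold
print(select_best([{"max_depth": d} for d in (3, 6, 9)], toy_evaluate, y))
```

Dealing indices class by class keeps the 50/30/20 class mix in every test fold. Each record appears in exactly one test fold.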

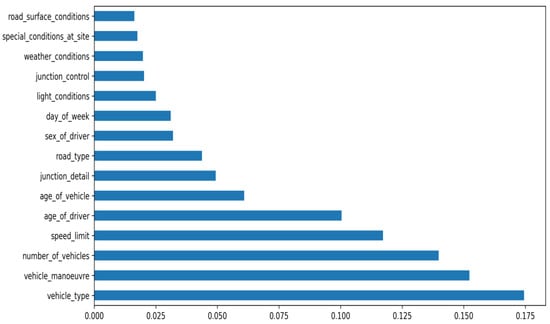

To rank the attributes, Random Forest classification feature importance was used to highlight the features most relevant for predicting the target variable, with the aim of improving model performance. The feature ranking revealed that vehicle characteristics, namely vehicle type [16], vehicle manoeuvre (actions immediately before the accident) [16], the number of vehicles and the age of the vehicle [16], had the greatest impact on accident severity of the 15 features, as depicted in Figure 1. Driver age [12,16] among human characteristics and speed limit among road characteristics [16,19] also rank near the top. The results show that vehicle characteristics played a major role in the accidents reported in the UK in 2020.

Figure 1.

The Random Forest Classifier’s bar chart for feature importance scores, displayed in ascending order.
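Random Forest importance in scikit-learn is impurity-based. At every split, the decrease in Gini impurity is weighted by the share of training samples reaching the node and credited to the splitting feature. These credits are summed per feature over each tree, normalised, and averaged across trees. The sketch below computes the credit for a single split on a categorical feature. The feature values and labels are hypothetical, and this is not the authors' code.

```python
from collections import Counter
from typing import Dict, Sequence


def gini(labels: Sequence[str]) -> float:
    """Gini impurity 1 - sum_k p_k^2 of a set of class labels."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def impurity_decrease(feature: Sequence[str], labels: Sequence[str], value: str, n_total: int) -> float:
    """Weighted Gini decrease of the split `feature == value` at a node holding `labels`;
    n_total is the number of training samples at the root of the tree."""
    left = [lab for f, lab in zip(feature, labels) if f == value]
    right = [lab for f, lab in zip(feature, labels) if f != value]
    if not left or not right:
        return 0.0  # the split does not separate anything
    n = len(labels)
    children = len(left) / n * gini(left) + len(right) / n * gini(right)
    return n / n_total * (gini(labels) - children)


def normalise(raw: Dict[str, float]) -> Dict[str, float]:
    """Scale accumulated decreases so that the importances sum to one."""
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}


vehicle_type = ["car", "car", "car", "cycle", "cycle", "cycle"]   # hypothetical node
severity = ["Slight", "Slight", "Slight", "Serious", "Serious", "Slight"]
print(round(impurity_decrease(vehicle_type, severity, "car", n_total=6), 4))  # 2/9
```

A feature that produces many large, early splits, such as vehicle type in Figure 1, accumulates the largest share after normalisation.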

Vehicle characteristics generally include vehicle dimensions, weight, power, minimum turning radius, speed, acceleration and braking characteristics; of these, vehicle dimensions, captured by vehicle type, stood out. This implies that the width, length and height of vehicles significantly affect the safe overtaking distance. Beyond vehicle type, it is critical to know the number of vehicles involved in an accident (a head-on collision, for example, involves two vehicles), as this influences the extent of accident severity. Although various studies [12,16,38,39] have highlighted human characteristics as the most critical, these results place the spotlight on vehicle characteristics as a major factor contributing to traffic accident severity [16]. This is worth noting because the data cover a period of COVID-19 travel restrictions, when fewer people were travelling and many vehicles may not have been serviced because of the restrictions. Nevertheless, human characteristics such as drivers’ years of experience and driving licence details (eligibility to drive) were not recorded, and including them might have changed the results.

5.1. Decision Tree Classification Algorithm Analysis Result

This section presents and discusses the outcome of the Decision Tree classification algorithm, which considers all possible predictions for each class. The Decision Tree with default parameters (DT-D) is compared with the Decision Tree with tuned hyper-parameters (DT-H). As Table 2 shows, DT-D predicted all three classes of accident severity with an overall accuracy of 84.61%. With hyper-parameter tuning, the algorithm predicted the three classes with an overall accuracy of 84.35%, as shown in Table 3. This slight difference suggests that some algorithms, here the Decision Tree, perform better with default parameters. Precision, recall, specificity, F1 score and false positive rate with default and tuned parameters were also measured for each class of accident severity, as shown in Table 2 and Table 3.

Table 2.

The Decision Tree algorithm analysis result with default parameters includes a summary of precision, recall, per-class F1-Score, specificity and the false positive rate (FPR) measurements for the three classes. The overall accuracy of the model was also measured.

Table 3.

The Decision Tree algorithm analysis result with hyper-tuning parameters includes a summary of precision, recall, per-class F1-Score, specificity and the false positive rate (FPR) measurements of the three classes. The overall accuracy of the model was also measured.

For further analysis, 3 × 3 confusion matrices were created to summarize the classification into the three classes (Fatal, Serious and Slight injury), as shown in Table 4 and Table 5. In Table 4, the diagonal values (20,825, 17,117 and 17,528) represent correct predictions, and the other values represent incorrect predictions. The algorithm with default parameters correctly predicted more fatal and serious injuries than the algorithm with tuned parameters (diagonal values 20,756, 16,950 and 17,591), which correctly predicted more slight injuries, as shown in Table 5. Comparing the confusion matrices in Table 4 and Table 5, the Decision Tree algorithm with default parameters performed slightly better, predicting more fatal and serious injuries as true positives. Two useful metrics, sensitivity and specificity, were then evaluated from the confusion matrices. According to Table 2, the sensitivity for fatal injury indicates that 95.80% of fatal injuries were correctly identified, and the specificity for fatal injury indicates that 96.30% of non-fatal cases were correctly identified as not fatal. The sensitivity for serious injury indicates that 78.60% of serious injuries were correctly identified, and the specificity indicates that 88.91% of non-serious cases were correctly identified as not serious. Finally, the sensitivity for slight injury indicates that 79.55% of slight injuries were correctly identified, and the specificity indicates that 85.95% of non-slight cases were correctly identified as not slight.

Table 4.

Confusion matrix for multi-class classification of Decision Tree algorithm analysis results with default parameters.

Table 5.

Confusion matrix for multi-class classification of Decision Tree algorithm analysis result with hyper-tuning parameters.

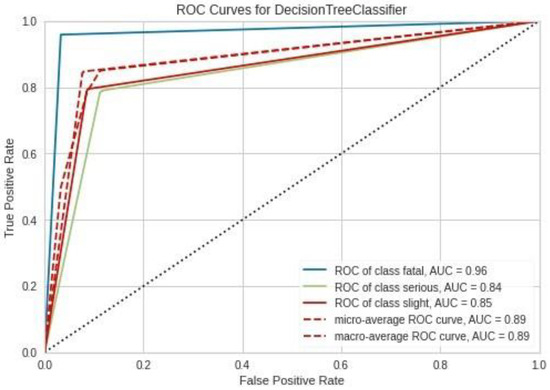

The ROC curve in Figure 2 is used to examine further how well the Decision Tree with default settings predicts the three classes of accident severity across different thresholds. The AUC provides a single-value metric that makes it easy to see how well the classification model predicts each class. A perfect (unrealistic) classifier, whose curve passes through the upper-left corner of the chart, has an AUC of 100%, whereas a completely random classifier (the diagonal line on the chart) has an AUC of 50%; a classifier that performs worse than random has an AUC below 50%. Here, class 1 (fatal) is predicted more accurately than class 2 (serious) and class 3 (slight), as it has a larger area under the curve.

Figure 2.

Receiver Operating Characteristic (ROC) curves for each accident severity class of Decision Tree algorithm analysis result with default parameters. The area under the ROC curve is reported in the legend.

For the Decision Tree algorithm with tuned hyper-parameters (Table 3), the sensitivity for fatal injury shows that 95.47% of fatal injuries were correctly identified, and the specificity shows that 96.55% of non-fatal cases were correctly identified as not fatal. The sensitivity for serious injury shows that 77.80% of serious injuries were correctly identified, and the specificity indicates that 88.85% of non-serious cases were correctly identified as not serious. Finally, the sensitivity for slight injury shows that 79.83% of slight injuries were correctly identified, and the specificity shows that 85.35% of non-slight cases were correctly identified as not slight.

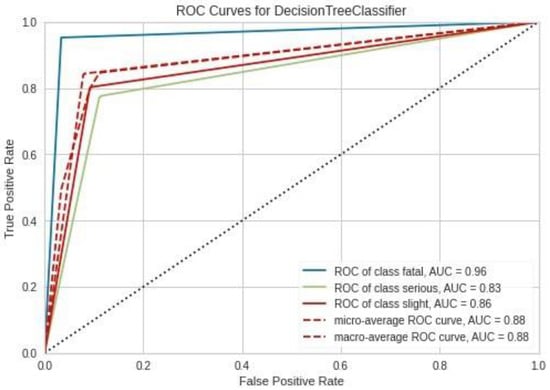

Figure 3 shows the resulting ROC chart of the Decision Tree with tuned hyper-parameters. The blue curve of the Fatal class lies closer to the y-axis than those of the Serious and Slight classes, and its AUC of 0.96 is higher than that of the other classes. Overall, tuning the parameters slightly improves the AUC of the Decision Tree for the Slight class of accident severity. Both macro-average and micro-average ROC curves were also produced.

Figure 3.

Receiver Operating Characteristic (ROC) curves for each accident severity class of Decision Tree algorithm analysis result with hyper-tuning parameters. The area under the ROC curve is reported in the legend.

For the macro-averaged ROC curve, the AUC is calculated for each class separately and the results are averaged. For the micro-averaged ROC curve, the true positive and false positive counts of all classes are pooled and used to calculate a single overall AUC. The micro- and macro-average AUC values obtained with tuned hyper-parameters are slightly lower than those obtained with default parameters: AUC (Decision Tree, tuned) micro, macro = 0.88, 0.88, while AUC (Decision Tree, default) micro, macro = 0.89, 0.89, as shown in Figure 2 and Figure 3.
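The two averaging schemes can be compared with a small sketch. It computes the AUC directly as the probability that a randomly chosen positive record receives a higher score than a randomly chosen negative one, with ties counting one half. This quantity equals the area under the ROC curve. The macro average is the mean of the three one-vs-rest AUCs, and the micro average pools every (record, class) decision into a single binary problem. The class coding (0 = Fatal, 1 = Serious, 2 = Slight) and the probabilities are hypothetical. The pairwise loop is quadratic, so the sketch is meant only for illustration.

```python
from typing import List, Sequence, Tuple


def auc_rank(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_micro_auc(y: List[int], proba: List[List[float]]) -> Tuple[List[float], float, float]:
    """y holds class indices; proba[i][k] is the predicted probability of class k for record i."""
    n_classes = len(proba[0])
    per_class: List[float] = []
    pooled_true: List[int] = []
    pooled_score: List[float] = []
    for k in range(n_classes):
        y_k = [1 if label == k else 0 for label in y]   # one-vs-rest labels for class k
        s_k = [row[k] for row in proba]
        per_class.append(auc_rank(y_k, s_k))
        pooled_true += y_k                               # micro: pool all decisions
        pooled_score += s_k
    macro = sum(per_class) / n_classes                   # macro: mean of per-class AUCs
    micro = auc_rank(pooled_true, pooled_score)
    return per_class, macro, micro


y = [0, 0, 1, 1, 2, 2]            # hypothetical: 0 = Fatal, 1 = Serious, 2 = Slight
proba = [[0.8, 0.1, 0.1], [0.6, 0.3, 0.1], [0.2, 0.5, 0.3],
         [0.1, 0.4, 0.5], [0.1, 0.2, 0.7], [0.3, 0.3, 0.4]]
per_class, macro, micro = macro_micro_auc(y, proba)
print(per_class, round(macro, 3), round(micro, 3))
```

The two averages generally differ, as they do here (about 0.958 and 0.965), because the micro average also compares scores across classes.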

5.2. LightGBM Classification Algorithm Analysis Result

Table 6 and Table 7 summarize the metrics derived from the multi-class confusion matrix, namely the overall accuracy of the model, recall, precision, F1-score, specificity and false positive rate (FPR), in order to compare the performance of the LightGBM algorithm with default parameters (LGBM-D) and with tuned hyper-parameters (LGBM-H). With default parameters, the algorithm predicted all three classes of accident severity with an overall accuracy of 81.00%. Hyper-parameter tuning substantially improved the accuracy of the model, which rose from 81.00% with default parameters to 84.72% with tuned parameters, as shown in Table 6 and Table 7.

Table 6.

The LightGBM algorithm analysis with default parameters includes a summary of precision, recall, per-class F1-Score, specificity and the false positive rate (FPR) measurements of the three classes. The overall accuracy of the model was also measured.

Table 7.

The LightGBM algorithm analysis with hyper-tuning parameters includes a summary of precision, recall, per-class F1-Score, specificity and the false positive rate (FPR) measurements of the three classes. The overall accuracy of the model was also measured.

Furthermore, 3 × 3 confusion matrices were created to summarize the classification into the three classes (Fatal, Serious and Slight injury), as shown in Table 8 and Table 9. The diagonal values for default parameters (18,882, 12,492 and 21,729) and for tuned parameters (19,875, 13,920 and 21,747) represent correct predictions, and the other values represent incorrect predictions. The algorithm with tuned hyper-parameters correctly predicted more fatal, serious and slight injuries than the algorithm with default parameters, so the LightGBM classification algorithm with tuned hyper-parameters clearly performed better. Sensitivity and specificity were then evaluated from the confusion matrices. According to Table 6, the sensitivity for fatal injury indicates that 86.85% of fatal injuries were correctly identified, and the specificity indicates that 90.80% of non-fatal cases were correctly identified as not fatal. The sensitivity for serious injury indicates that 57.34% of serious injuries were correctly identified, and the specificity indicates that 94.35% of non-serious cases were correctly identified as not serious. Finally, the sensitivity for slight injury shows that 98.62% of slight injuries were correctly identified, and the specificity shows that 73.76% of non-slight cases were correctly identified as not slight.

Table 8.

Confusion matrix for multi-class classification of LightGBM algorithm analysis result with default parameters.

Table 9.

Confusion matrix for multi-class classification of LightGBM algorithm analysis result with hyper-tuning parameters.

According to Table 7, the sensitivity for fatal injury indicates that 91.42% of fatal injuries were correctly identified, and the specificity indicates that 98.20% of non-fatal cases were correctly identified as not fatal. The sensitivity for serious injury indicates that 63.90% of serious injuries were correctly identified, and the specificity indicates that 96.07% of non-serious cases were correctly identified as not serious. Finally, the sensitivity for slight injury indicates that 98.70% of slight injuries were correctly identified, and the specificity indicates that 76.36% of non-slight cases were correctly identified as not slight.

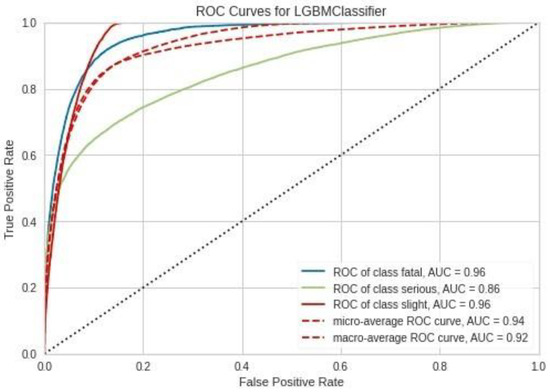

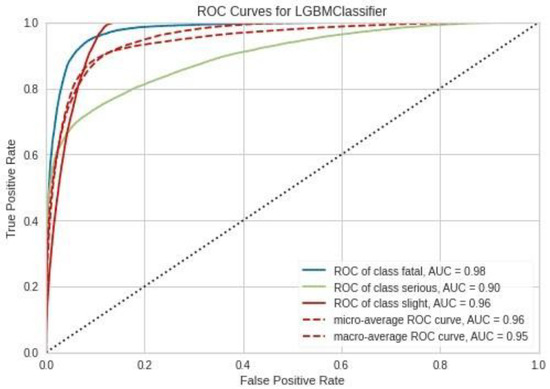

Figure 4 shows the ROC curves of the LightGBM model with default settings for each injury class. The AUC was 0.96 for fatal injury, 0.86 for serious injury and 0.96 for slight injury, indicating that the model has a good ability to distinguish between the classes. Figure 5 shows that the LightGBM model with hyper-tuning settings achieved marginally higher AUC values for the three classes. Micro-average and macro-average ROC curves were also produced. The micro- and macro-average AUC values obtained with hyper-tuning parameters are slightly higher than those obtained with default parameters: micro, macro AUC = 0.96, 0.95 for the tuned LightGBM model versus 0.94, 0.92 for the default model, as shown in Figure 4 and Figure 5.
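The macro- and micro-average AUC values differ in how the three one-vs-rest problems are combined: the macro average is the unweighted mean of the per-class AUCs, whereas the micro average pools every (case, class) score into a single binary problem. The pure-Python sketch below, which is not the authors' code, computes both from predicted class probabilities using the Mann-Whitney formulation of AUC. The class order (0 = Fatal, 1 = Serious, 2 = Slight) and the toy probabilities are hypothetical, chosen only for illustration; in practice a library routine such as scikit-learn's `roc_auc_score` would normally be used.

```python
from typing import List, Sequence, Tuple


def binary_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the Mann-Whitney statistic: the probability that a randomly
    chosen positive case scores higher than a randomly chosen negative case,
    counting ties as one half. Quadratic in the number of cases, so this is
    only meant for small illustrative inputs."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def ovr_auc(
    y_true: Sequence[int], proba: Sequence[Sequence[float]], n_classes: int
) -> Tuple[List[float], float, float]:
    """Per-class one-vs-rest AUCs plus their macro and micro averages."""
    per_class = []
    for k in range(n_classes):
        labels = [1 if y == k else 0 for y in y_true]
        scores = [row[k] for row in proba]
        per_class.append(binary_auc(labels, scores))
    # Macro average: unweighted mean of the per-class AUCs.
    macro = sum(per_class) / n_classes
    # Micro average: pool every (case, class) pair into one binary problem.
    pooled_labels = [1 if y == k else 0 for y in y_true for k in range(n_classes)]
    pooled_scores = [row[k] for row in proba for k in range(n_classes)]
    micro = binary_auc(pooled_labels, pooled_scores)
    return per_class, macro, micro


# Hypothetical toy data: class 0 = Fatal, 1 = Serious, 2 = Slight.
y_true = [0, 1, 2, 0, 1, 2]
proba = [
    [0.8, 0.1, 0.1],
    [0.2, 0.6, 0.2],
    [0.1, 0.2, 0.7],
    [0.6, 0.3, 0.1],
    [0.3, 0.3, 0.4],  # a Serious case that the model ranks poorly
    [0.2, 0.2, 0.6],
]
per_class, macro, micro = ovr_auc(y_true, proba, n_classes=3)
print(per_class, round(macro, 4), round(micro, 4))
```

Because the micro average pools all cases, it is dominated by whichever classes contribute the most (case, class) pairs, while the macro average gives each severity class equal weight; this is one reason the two averages reported in the figures can differ.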

Figure 4.

Receiver Operating Characteristic (ROC) curves for each accident severity class of LightGBM algorithm analysis result with default parameters. The area under the ROC curve is reported in the legend.

Figure 5.

Receiver Operating Characteristic (ROC) curves for each accident severity class of LightGBM algorithm analysis result with hyper-tuning parameters. The area under the ROC curve is reported in the legend.

5.3. XGBoost Classification Algorithm Analysis Result

The performance of the hyper-tuned XGBoost classification algorithm (XGB-H) was compared to that of the algorithm with default parameters (XGB-D). The comparison was based on the prediction performance for each accident severity class. Table 10 and Table 11 show the results of different multi-class metrics, namely the overall accuracy of the model, recall, precision, F1-score, specificity and false positive rate (FPR), which helped to analyse how the behaviour of the same model changes when its parameters are tuned. With both default and hyper-tuning parameters, the algorithm predicted all three accident severity classes, and tuning produced a notable increase in overall accuracy.
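All of the per-class metrics listed in Table 10 and Table 11 can be derived from the corresponding confusion matrix by treating each class in turn as positive and the other two as negative (one-vs-rest). The Python sketch below illustrates that computation; it is not the authors' code, it assumes rows are actual classes and columns are predicted classes, and the matrix in the usage example contains made-up toy counts rather than data from this study.

```python
from typing import Dict, Sequence, Tuple


def _safe_div(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def per_class_metrics(
    cm: Sequence[Sequence[int]], class_names: Sequence[str]
) -> Tuple[Dict[str, Dict[str, float]], float]:
    """One-vs-rest metrics from a square confusion matrix.

    Assumes cm[i][j] counts cases whose actual class is i and whose
    predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    report: Dict[str, Dict[str, float]] = {}
    for k, name in enumerate(class_names):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # actual k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, actually another class
        tn = total - tp - fn - fp                  # neither actual nor predicted k
        precision = _safe_div(tp, tp + fp)
        recall = _safe_div(tp, tp + fn)            # recall is the same as sensitivity
        report[name] = {
            "precision": precision,
            "recall": recall,
            "f1": _safe_div(2 * precision * recall, precision + recall),
            "specificity": _safe_div(tn, tn + fp),
            "fpr": _safe_div(fp, fp + tn),         # false positive rate = 1 - specificity
        }
    accuracy = _safe_div(sum(cm[k][k] for k in range(n)), total)
    return report, accuracy


# Hypothetical toy counts (not from this study); rows = actual, columns = predicted.
TOY_CM = [
    [8, 1, 1],
    [2, 5, 3],
    [0, 1, 9],
]
report, accuracy = per_class_metrics(TOY_CM, ("Fatal", "Serious", "Slight"))
for name, metrics in report.items():
    print(name, {key: round(value, 3) for key, value in metrics.items()})
print("overall accuracy:", round(accuracy, 3))
```

Computing FPR directly as FP/(FP + TN) rather than as 1 minus specificity keeps both values well defined even for a degenerate class with no negative cases.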

Table 10.

The XGBoost algorithm analysis with default parameters includes a summary of precision, recall, per-class F1-Score, specificity and the false positive rate (FPR) measurements for the three classes. The overall accuracy of the model was also measured.

Table 11.

The XGBoost algorithm analysis result with hyper-tuning parameters includes a summary of precision, recall, per-class F1-Score, specificity and the false positive rate (FPR) measurements of the three classes. The overall accuracy of the model was also measured.

Furthermore, a confusion matrix with three rows and three columns was constructed to summarize the classification into the three classes, namely Fatal, Serious and Slight injury, as shown in Table 12 and Table 13. The diagonal values, (17,484, 10,276, 21,077) with default parameters and (18,961, 12,732, 21,769) with hyper-tuning parameters, represent correct predictions, and the off-diagonal values indicate incorrect predictions. As depicted in Table 12 and Table 13, the XGBoost algorithm with hyper-tuning parameters outperformed the one with default parameters, correctly predicting more fatal, serious and slight injuries (true positives). From the confusion matrices, sensitivity and specificity were also evaluated. Referring to Table 10, the sensitivity for fatal injury indicates that 80.43% of fatal injuries were correctly identified, and the specificity for fatal injury indicates that 85.44% of non-fatal cases were correctly identified as non-fatal. For serious injuries, the sensitivity of 47.17% and specificity of 91.58% indicate that 47.17% of serious injuries were correctly identified and 91.58% of non-serious cases were correctly identified as non-serious. Finally, for slight injuries, the sensitivity of 95.66% and specificity of 71.75% indicate that 95.66% of slight injuries were correctly identified and 71.75% of non-slight cases were correctly identified as non-slight.
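Summing the diagonal entries quoted above gives the total number of correct predictions for each configuration, that is, the numerator of the overall accuracy (the denominator, the total number of test cases, is not restated here). The diagonals reported for LightGBM (Table 8 and Table 9) are included for comparison; comparing totals across the two models assumes that both were evaluated on the same test set.

```latex
\begin{aligned}
\text{XGBoost, default: } & 17{,}484 + 10{,}276 + 21{,}077 = 48{,}837\\
\text{XGBoost, tuned: } & 18{,}961 + 12{,}732 + 21{,}769 = 53{,}462 \quad (+4{,}625)\\
\text{LightGBM, default: } & 18{,}882 + 12{,}492 + 21{,}729 = 53{,}103\\
\text{LightGBM, tuned: } & 19{,}875 + 13{,}920 + 21{,}747 = 55{,}542 \quad (+2{,}439)
\end{aligned}
```

On these counts, tuning adds more correct predictions for XGBoost than for LightGBM, but the tuned LightGBM model still has the larger total.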

Table 12.

Confusion matrix for multi-class classification of XGBoost algorithm analysis result with default parameters.

Table 13.

Confusion matrix for multi-class classification of XGBoost algorithm analysis result with hyper-tuning parameters.

According to Table 11 (hyper-tuning parameters), the sensitivity for fatal injury indicates that 87.22% of fatal injuries were correctly identified, and the specificity for fatal injury indicates that 90.80% of non-fatal cases were correctly identified as non-fatal. For serious injuries, the sensitivity of 58.44% and specificity of 94.30% indicate that 58.44% of serious injuries were correctly identified and 94.30% of non-serious cases were correctly identified as non-serious. Finally, for slight injuries, the sensitivity of 98.80% and specificity of 75.32% indicate that 98.80% of slight injuries were correctly identified and 75.32% of non-slight cases were correctly identified as non-slight.

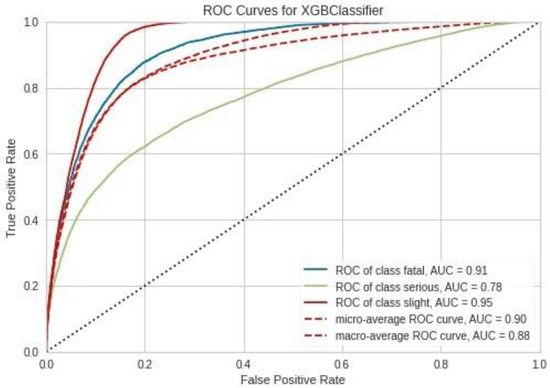

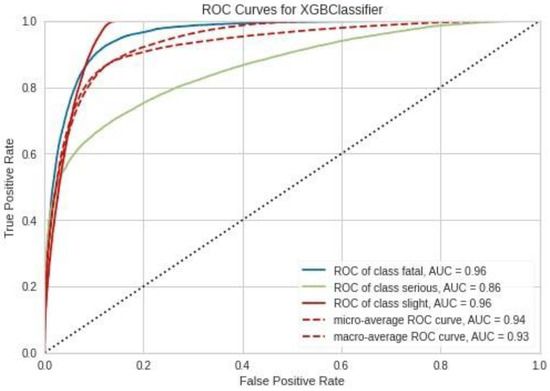

ROC curve analysis was also carried out for additional support. The ROC curves and the areas under the curve of the XGBoost model with default and hyper-tuning parameters for each type of injury are shown in Figure 6 and Figure 7. With default parameters, the AUC was 0.91 for fatal injury, 0.78 for serious injury and 0.95 for slight injury, whereas with hyper-tuning parameters it was 0.96, 0.86 and 0.96, respectively, indicating good predictive power for the fatal injury class with both default and hyper-tuning parameters. Micro-average and macro-average ROC curves were also produced. The micro- and macro-average AUC values obtained with hyper-tuning parameters are higher than those obtained with default parameters: micro, macro AUC = 0.94, 0.93 for the tuned XGBoost model versus 0.90, 0.88 for the default model, as shown in Figure 6 and Figure 7.

Figure 6.

Receiver Operating Characteristic (ROC) curves for each accident severity class of XGBoost algorithm analysis result with default parameters. The area under the ROC curve is reported in the legend.

Figure 7.

Receiver Operating Characteristic (ROC) curves for each accident severity class of XGBoost algorithm analysis result with hyper-tuning parameters. The area under the ROC curve is reported in the legend.

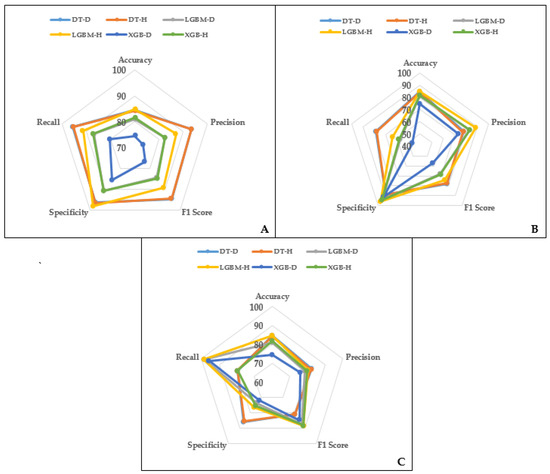

5.4. Comparative Analysis of Machine Learning Techniques

Using a radar chart, a comparative analysis of all the machine learning techniques is presented in Figure 8. The results show that, overall, the Decision Tree models (default and hyper-tuning) performed better than the other developed models in predicting fatal accidents in terms of F1-score, precision, recall, specificity and accuracy. The LightGBM models, however, performed better in terms of precision and accuracy for serious injuries, though not as well in terms of F1-score and recall. Overall, XGBoost had the weakest performance for the fatal and serious injury classes. Furthermore, hyper-parameter tuning enhanced the prediction power of all three models. The prediction accuracies obtained in this study are comparable to those reported in previous studies on traffic crash severity [13,19].
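A radar chart such as Figure 8 places each metric on its own evenly spaced axis and draws one closed polygon per model. A minimal Python sketch of that construction follows; it is not the code used to produce Figure 8, the metric order in `METRICS` is an assumption, and matplotlib is imported only inside the hypothetical `plot_radar` helper so that the geometry helpers run without it.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Assumed metric order for the spokes; Figure 8 may order them differently.
METRICS = ("Accuracy", "Precision", "Recall", "F1-score", "Specificity")


def spoke_angles(n: int) -> List[float]:
    """Evenly spaced angles (radians) for n radar-chart axes, starting at 0."""
    return [2 * math.pi * i / n for i in range(n)]


def close_polygon(values: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Angles and values for one model, with the first point repeated at the
    end so that the plotted polygon closes on itself."""
    angles = spoke_angles(len(values))
    return angles + angles[:1], list(values) + list(values[:1])


def plot_radar(
    scores: Dict[str, Sequence[float]],
    metrics: Sequence[str] = METRICS,
    title: str = "",
):
    """Draw one polygon per model for a single severity class (e.g. Fatal)."""
    import matplotlib.pyplot as plt  # imported lazily: only needed for drawing

    fig, ax = plt.subplots(subplot_kw={"projection": "polar"})
    for model, values in scores.items():
        angles, closed = close_polygon(values)
        ax.plot(angles, closed, label=model)
        ax.fill(angles, closed, alpha=0.1)
    ax.set_xticks(spoke_angles(len(metrics)))
    ax.set_xticklabels(list(metrics))
    ax.set_ylim(0, 1)  # all five metrics lie between 0 and 1
    ax.set_title(title)
    ax.legend(loc="upper right", bbox_to_anchor=(1.3, 1.1))
    return fig
```

Calling `plot_radar` once per severity class, with a dictionary mapping each model name to its five metric values for that class, would give a three-panel layout like panels (A), (B) and (C) of Figure 8.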

Figure 8.

Performance measures of the developed models. (A): Fatal, (B): Serious injuries and (C): Slight injuries.

6. Conclusions

The direct objective of this study was to apply three data-driven machine learning methods (Decision Tree, LightGBM and XGBoost) to predict traffic accident severity, with a view to reducing the negative effects of traffic accidents on society. The Decision Tree models were found to perform well in terms of the number of correct predictions: considering the confusion matrices, the Decision Tree algorithm predicted more fatal and serious injuries as true positives. It is worth noting, however, that its accuracy score is lower than that of the hyper-tuned LightGBM algorithm, and that the LightGBM and XGBoost algorithms correctly predicted most of the slight injury accidents, which make up the majority of the dataset. The confusion matrix thus helps to show which algorithm worked better by examining the predictions for each class. Furthermore, the results suggest that the Decision Tree algorithm can predict traffic accident severity with high performance and can also indicate the critical variables that need to be monitored in order to reduce accidents on the roads. Overall, vehicle and road user characteristics were found to play a leading role in the severity of accidents in the UK in 2020.

Among the vehicle characteristics, vehicle type has the highest feature importance score. This implies that first responders to an accident should be provided with information on the vehicle type, as the vehicle type determines the number of passengers on board and, consequently, the possible number of casualties [16]. After vehicle type, the next-highest feature importance scores are, in order, vehicle manoeuvre, number of vehicles, age of the vehicle and age of the driver.

Considering the results of the study, the following can be concluded:

(i) Prediction of Future Traffic Accidents: in this study, 20% of the 2020 data were used to predict the annual accident data. Although the predictions of the machine learning techniques are slightly higher than the actual accident data, it is worth noting that the data used by the machine learning techniques were corrected for class imbalance and cleaned of erroneous records. Thus, given the scarcity of traffic accident data in developing and underdeveloped countries [13], accident data for one quarter of the year could be used to establish trends, analyse them and carry out the necessary transportation planning for the whole year in terms of accident prevention strategies. Accident trends also need to be monitored carefully to account for the effect of holidays.

(ii) Machine Learning as Identifier of Significant Traffic Variables: the study also highlighted vehicle characteristics as one of the important variables in accident severity; however, it is worth noting that the data used for this study were affected by COVID-19 travel restrictions. Overall, it can be concluded that human and vehicle characteristics play an important role in traffic accidents. Identifying the significant variables assists strategic planning and helps sensitize road users to the causes of road accidents, especially during peak accident seasons. As a result, this study recommends that more emphasis be placed on vehicle characteristics during the road geometric design phase. To reduce the severity of road accidents caused by vehicle characteristics, preventative strategies such as regular vehicle technical inspection, traffic policy strengthening and the redesign of vehicle protective equipment must be implemented [40,41].

(iii) Vehicle Technical Inspection: more emphasis should be placed on vehicle roadworthiness, as vehicle characteristics contribute to accident severity. Policy strengthening can be achieved through the continuous enforcement of traffic control laws (such as speed limits and seatbelt/helmet use) by the traffic police. The redesign of vehicle protective equipment concerns vehicle modes other than cars and the protective equipment used with them, and may result in fewer injuries [40,41]. The study also emphasizes the need to incorporate more driver-related variables, such as years of driving experience and recent involvement in other traffic accidents, as these might affect traffic accidents. On a final note, this study concludes that machine learning models can be used to predict traffic accident severity at three levels: fatal, serious injury and slight injury.

Author Contributions

Conceptualization, M.M.-T. and J.A.A.; Methodology, M.M.-T.; Formal analysis, J.A.A.; Investigation, M.M.-T. and J.A.A.; Data curation, M.M.-T.; Writing—original draft, M.M.-T. and J.A.A.; Writing—review & editing, M.M.-T. and J.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The STATS19 dataset is provided by the UK Department for Transport, https://data.gov.uk/dataset/cb7ae6f0-4be6-4935-9277-47e5ce24a11f/road-safety-data (accessed on 5 February 2022).

Acknowledgments

The authors would like to acknowledge the work and contribution of all participants in the study. Thanks are also due to the open-source Python community for providing free tools (Python Programming Language (RRID:SCR_008394)). We thank Google Colaboratory (Google Colab (RRID:SCR_018009)), Anaconda (Conda (RRID:SCR_018317)), scikit-learn (RRID:SCR_002577), Pandas (RRID:SCR_018214), NumPy (RRID:SCR_008633) and Jupyter Notebook (RRID:SCR_018315).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alkheder, S.; Taamneh, M.; Taamneh, S. Severity prediction of traffic accident using an artificial neural network. J. Forecast. 2017, 36, 100–108.

- Gan, J.; Li, L.; Zhang, D.; Yi, Z.; Xiang, Q. Alternative Method for Traffic Accident Severity Prediction: Using Deep Forests Algorithm. J. Adv. Transp. 2020, 2020, 1257627.

- Fu, X.; Meng, H.; Wang, X.; Yang, H.; Wang, J. A hybrid neural network for driving behavior risk prediction based on distracted driving behavior data. PLoS ONE 2022, 17, e0263030.

- WHO. Road Traffic Injuries. Available online: https://www.who.in (accessed on 22 March 2020).

- Ogwueleka, F.N.; Misra, S.; Ogwueleka, T.C.; Fernandez-Sanz, L. An artificial neural network model for road accident prediction: A case study of a developing country. Acta Polytech. Hung. 2014, 11, 177–197.

- Yan, M.; Shen, Y. Traffic Accident Severity Prediction Based on Random Forest. Sustainability 2022, 14, 1729.

- Adedeji, J.A.; Hassan, M.M.; Abejide, S.O. Effectiveness of communication tools in road transportation: Nigerian perspective. In Proceedings of the International Conference on Traffic and Transport Engineering, Belgrade, Serbia, 24–25 November 2016; pp. 24–25.

- Bokaba, T.; Doorsamy, W.; Paul, B.S. A Comparative Study of Ensemble Models for Predicting Road Traffic Congestion. Appl. Sci. 2022, 12, 1337.

- Adedeji, J.; Feikie, X. Exploring the Informal Communication of Driver-to-Driver on Roads: A Case Study of Durban City, South Africa. J. Road Traffic Eng. 2021, 67, 1–8.

- Mukherjee, D.; Mitra, S. Pedestrian safety analysis of urban intersections in Kolkata, India using a combined proactive and reactive approach. J. Transp. Saf. Secur. 2022, 14, 754–795.

- Mokoatle, M.; Vukosi Marivate, D.; Michael Esiefarienrhe Bukohwo, P. Predicting road traffic accident severity using accident report data in South Africa. In Proceedings of the 20th Annual International Conference on Digital Government Research, Dubai, United Arab Emirates, 18–20 June 2019; pp. 11–17.

- Yassin, S.S. Road accident prediction and model interpretation using a hybrid K-means and random forest algorithm approach. SN Appl. Sci. 2020, 2, 1576.

- Assi, K.; Rahman, S.M.; Mansoor, U.; Ratrout, N. Predicting Crash Injury Severity with Machine Learning Algorithm Synergized with Clustering Technique: A Promising Protocol. Int. J. Environ. Res. Public Health 2020, 17, 5497.

- Khattak, A.; Almujibah, H.; Elamary, A.; Matara, C.M. Interpretable Dynamic Ensemble Selection Approach for the Prediction of Road Traffic Injury Severity: A Case Study of Pakistan’s National Highway N-5. Sustainability 2022, 14, 12340.

- Taamneh, S.; Taamneh, M. Evaluation of the performance of random forests technique in predicting the severity of road traffic accidents. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Orlando, FL, USA, 21–25 July 2018; pp. 840–847.

- Rella Riccardi, M.; Mauriello, F.; Sarkar, S.; Galante, F.; Scarano, A.; Montella, A. Parametric and Non-Parametric Analyses for Pedestrian Crash Severity Prediction in Great Britain. Sustainability 2022, 14, 3188.

- Lee, J.; Yoon, T.; Kwon, S. Model evaluation for forecasting traffic accident severity in rainy seasons using machine learning algorithms: Seoul city study. Appl. Sci. 2020, 10, 129.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Li, K.; Xu, H.; Liu, X. Analysis and visualization of accidents severity based on LightGBM-TPE. Chaos Solitons Fractals 2022, 157, 111987.

- Komol, M.M.R.; Hasan, M.M.; Elhenawy, M.; Yasmin, S.; Masoud, M.; Rakotonirainy, A. Crash severity analysis of vulnerable road users using machine learning. PLoS ONE 2021, 16, e0255828.

- Jamal, A.; Zahid, M.; Tauhidur Rahman, M.; Al-Ahmadi, H.M.; Almoshaogeh, M.; Farooq, D.; Ahmad, M. Injury severity prediction of traffic crashes with ensemble machine learning techniques: A comparative study. Int. J. Inj. Control. Saf. Promot. 2021, 28, 408–427.

- Anderson, T.K. Kernel density estimation and K-means clustering to profile road accident hotspots. Accid. Anal. Prev. 2009, 41, 359–364.

- AlMamlook, R.E.; Kwayu, K.M.; Alkasisbeh, M.R.; Frefer, A.A. Comparison of machine learning algorithms for predicting traffic accident severity. In Proceedings of the 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 9–11 April 2019; pp. 272–276.

- Zuccarelli, E. Using Machine Learning to Predict Car Accidents. Available online: https://towardsdatascience.com/using-machine-learning-to-predict-car-accidents-44664c79c942 (accessed on 21 January 2022).

- Fan, Z.; Liu, C.; Cai, D.; Yuer, S. Research on black spot identification of safety in urban traffic accidents based on machine learning method. Saf. Sci. 2019, 118, 607–616.

- Bucsuházy, K.; Matuchová, E.; Zůvala, R.; Moravcová, P.; Kostíková, M.; Mikulec, R. Human factors contributing to the road traffic accident occurrence. Transp. Res. Procedia 2020, 45, 555–561.

- Islam, M.K.; Gazder, U.; Akter, R.; Arifuzzaman, M. Involvement of Road Users from the Productive Age Group in Traffic Crashes in Saudi Arabia: An Investigative Study Using Statistical and Machine Learning Techniques. Appl. Sci. 2022, 12, 6368.

- Tarlochan, F.; Ibrahim, M.I.M.; Gaben, B. Understanding Traffic Accidents among Young Drivers in Qatar. Int. J. Environ. Res. Public Health 2022, 19, 514.

- Jeong, H.; Kim, I.; Han, K.; Kim, J. Comprehensive Analysis of Traffic Accidents in Seoul: Major Factors and Types Affecting Injury Severity. Appl. Sci. 2022, 12, 1790.

- Pervez, A.; Huang, H.; Lee, J.; Han, C.; Li, Y.; Zhai, X. Factors affecting injury severity of crashes in freeway tunnel groups: A random parameter approach. J. Transp. Eng. Part A Syst. 2021, 48, 04022006.

- Yang, Z.; Zhang, W.; Feng, J. Predicting multiple types of traffic accident severity with explanations: A multi-task deep learning framework. Saf. Sci. 2022, 146, 105522.

- Sanmarchi, F.; Esposito, F.; Adorno, E.; De Dominicis, F.; Fantini, M.P.; Golinelli, D. The impact of the SARS-CoV-2 pandemic on cause-specific mortality patterns: A systematic literature review. J. Public Health 2022, 32 (Suppl. S3), 131–192.

- Naji, J.A.; Djebarni, R. Shortcomings in road accident data in developing countries, identification and correction: A case study. IATSS Res. 2000, 24, 66–74.

- Rabbani, M.B.A.; Musarat, M.A.; Alaloul, W.S.; Ayub, S.; Bukhari, H.; Altaf, M. Road Accident Data Collection Systems in Developing and Developed Countries: A Review. Int. J. Integr. Eng. 2022, 14, 336–352.

- Department for Transport. Available online: https://data.gov.uk/dataset/cb7ae6f0-4be6-4935-9277-47e5ce24a11f/road-safety-data (accessed on 21 January 2022).

- García de Soto, B.; Bumbacher, A.; Deublein, M.; Adey, B.T. Predicting road traffic accidents using artificial neural network models. Infrastruct. Asset Manag. 2018, 5, 132–144.

- Mohammad, H.; Sulaiman, M.N. A Review on Evaluation Metrics for Data Classification Evaluations. Int. J. Data Min. Knowl. Manag. Process 2015, 5, 501–511.

- Chen, T.Y.; Jou, R.C. Using HLM to investigate the relationship between traffic accident risk of private vehicles and public transportation. Transp. Res. Part A Policy Pract. 2019, 119, 148–161.

- Hung, K.V. Education influence in traffic safety: A case study in Vietnam. IATSS Res. 2011, 34, 87–93.

- Mohan, D.; Tiwari, G.; Varghese, M.; Bhalla, K.; John, D.; Saran, A.; White, H. PROTOCOL: Effectiveness of road safety interventions: An evidence and gap map. Campbell Syst. Rev. 2020, 16, e1077.

- Bonnet, E.; Lechat, L.; Ridde, V. What interventions are required to reduce road traffic injuries in Africa? A scoping review of the literature. PLoS ONE 2018, 13, e0208195.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).