Abstract

This study introduces a comprehensive system designed to monitor and manage the performance of research and development (R&D) projects. The proposed system offers project managers a systematic methodology to enhance the efficiency of R&D performance management. To achieve this, the system categorizes R&D performance indicators based on their feasibility of measurement and the frequency of output generated during R&D activities. The progress rate of each indicator is then represented in terms of observable and latent progress, considering the unique characteristics of each indicator. The calculation methods vary depending on the classification of the indicator. Additionally, a progress priority matrix is developed to aid decision-making by evaluating the current progress and rate of progress increment of the indicators. This matrix enables project managers to prioritize tasks effectively. To assess the validity and usefulness of the proposed system, it is applied to a real case of large-scale R&D projects conducted at a Korean research institute. Interviews with project managers validate that the proposed system surpasses the effectiveness of the legacy system in monitoring R&D progress. Through its comprehensive framework and practical application, this study contributes to the enhancement of R&D project performance monitoring, providing valuable insights for project managers seeking to optimize their R&D management processes.

1. Introduction

Organizations use research and development (R&D) activities to find solutions to the challenges imposed by rapid changes in customer needs and the competitive environment of domestic and international markets [1,2]. R&D consumes significant human and technological resources, so it must be managed systematically [2,3,4,5]. This is a fundamental task for a successful business [4,6,7]. With an increase in R&D expenditure and the need for R&D productivity, performance management of R&D projects becomes increasingly important [1].

R&D is essential for advancing national competitiveness [5,8,9], prompting many national governments to invest in R&D and introduce R&D programs. According to the Main Science and Technology Indicators provided by the OECD statistical database, Gross Domestic Expenditure on R&D (GERD) in OECD countries has generally been increasing each year. In 2020, the average GERD as a percentage of Gross Domestic Product (GDP) was 2.74%. Israel (5.71%) and South Korea (4.80%) were the first and second largest investors, respectively. Specifically, the average government-financed GERD as a percentage of GDP in 2020 was 0.66%, with South Korea (1.07%) being the top investor. Government-funded R&D activities contribute to technology, economy, and society as a whole [6,10]. To fulfill these contributions, most developed countries operate Public Research Institutes (PRIs), which often conduct infrastructure and large-scale studies [6,11,12].

These studies have a long-term nature and are funded by taxes; therefore, it is important to monitor their progress to ensure valuable outcomes [5]. Accordingly, R&D performance is sometimes monitored using process indicators or interim evaluations [9,13,14]. Previous studies on R&D performance monitoring have also presented measurement systems or models with an emphasis on continuous progress checks [15,16,17,18,19,20]. However, if the monitoring intervals are short, the assessment may only detect “no measurable change” and miss latent progress, such as conceptual advances that have not been implemented yet [7]. The weakness of using process indicators is that they do not measure ultimate results [8,21]. Moreover, several well-known methods have limited applicability when monitoring the performance of R&D projects [6,9,22].

Therefore, this paper aims to present a system for monitoring R&D performance that overcomes these limitations. After conducting a literature review, we design the system by classifying performance indicators, developing metrics to measure the progress level of each indicator, and suggesting ways to prioritize them. Subsequently, we conduct a case study to demonstrate its application, develop an information system, and discuss the practical results and contributions.

2. Related Works

2.1. Performance Measurement and Monitoring

Performance management has been defined as a continuous process of measuring and developing performance in organizations by linking each individual’s performance and objectives to the organization’s overall mission and goals [23]. Although the process consists of several phases and has been described differently by various researchers, it can be summarized as a cyclical set of actions: goal-setting, monitoring, evaluation, reward, and feedback [23,24,25]. This repetitive process provides organizations and individuals with opportunities to refine and improve their development activities [26].

For this reason, a performance management system (PMS) has been introduced in various institutions as an advantageous management tool. The PMS not only motivates employees but also monitors the contributions of organizational operations and managerial supervision [4,7,23]. It helps managers assist their subordinates in understanding the role and direction of their work through timely actions, while also helping subordinates become aware of their contributions to the organization.

Performance evaluation is used to manage components of the PMS cycle, such as planning and reward, and to improve the organization’s competitiveness. Some organizations consider performance evaluation synonymous with performance management, but the two concepts are different [27]. Performance evaluation is a one-time action at a specific time, whereas performance management is a dynamic and continuous process [26]. PMS involves continuous monitoring and reporting of performance, aiming to improve participants’ activities and achieve organizational goals [13,14,28].

Measurement of performance is a prerequisite for successful management. Performance measurement is the ongoing monitoring and reporting of program accomplishments, particularly progress towards pre-established objectives [29]. This diagnostic activity provides feedback on the current status of the organization, identifies problems that impede objective achievement, and improves the speed and accuracy of decision-making as well as control over performance management [4,7,23]. Immediate feedback and control based on measurements not only motivate employee skill enhancement but also contribute to improving organizational competitiveness by involving all members of the organization in the evaluation process [30]. Employee participation in organizational operations increases their sense of responsibility, leading to meaningful improvements in organizational performance [31,32]. Additionally, a formal progress check can enhance an institution’s operational efficiency and reduce the occurrence of objective failures [22]. Therefore, successful performance management can be achieved through continuous monitoring and appropriate measurement.

2.2. Performance Management for R&D Projects

R&D activities are typically aimed at solving unique, unstructured, and complex problems [2,20]. These characteristics are similar to those of a project, which is unique, utilizes novel processes, operates within a limited time frame, and concludes upon achieving the objective [33,34]. As a result, R&D is primarily conducted in the form of projects. Various practical project monitoring methods have been proposed, including the Gantt chart [35], slip chart [36], Earned Value Analysis [37], and network-based techniques such as PERT and CPM (commonly used as PERT/CPM) [38]. While these methods are useful for project management, their application to R&D performance measurement and monitoring is limited. These methods typically require setting project milestones or breaking down the project into subtasks. However, due to the complexity of R&D, it is challenging to break down projects into subcomponents for monitoring, unlike projects such as construction jobs that have clearly defined work stages [22]. Additionally, previous project monitoring schemes have primarily focused on whether the project was completed on time, but for R&D, further management methods are needed to enable progress measurement towards a target.

Numerous modern methods suitable for measuring or evaluating R&D performance have been proposed. The first was Management by Objectives (MBO) [39], which is a theoretical framework for performance management. Several more recent developments have overcome MBO’s limitations of focusing on short-term and internal goals. Notably, the Balanced Scorecard (BSC) [40] is a performance management tool that considers multiple perspectives. As the resources invested in R&D projects increase, interest in evaluating project efficiency also grows. Therefore, several studies have been conducted to evaluate R&D productivity by applying Data Envelopment Analysis (DEA) [41,42,43]. While most performance management methods can be used to manage R&D performance, they are somewhat separate from performance monitoring. Previous studies related to R&D project performance management have covered a range of topics, including performance evaluation for R&D selection, measurement to quantify productivity, review (or development) of performance indicators, and diffusion of R&D outcomes [6,10,28,44]. These studies have used ex post facto evaluation and feedback phases of performance management at the endpoint of R&D projects or programs, so they cannot provide immediate feedback during R&D activities. Hence, a performance monitoring method for R&D activities should combine methods for project management and performance management.

We have reviewed previous studies and cases of R&D performance monitoring systems and their applications. Some studies presented an R&D performance monitoring system or model with continuous progress checks. These systems include assessing current levels of R&D processes to improve problematic processes [19], considering integrated perspectives of cost, progress, and time [20], and facilitating project performance analysis by applying a multicriteria approach [18]. Some works have examined measurement practices adopted by technology-intensive firms [16] or commercial and government organizations [17], or used in projects for developing new products [15]. Other studies attempted to monitor R&D performance by employing process indicators or interim evaluations [9,13,14]. While frequent progress checks enhance the effectiveness of performance monitoring, they can overlook latent progress. If the progress-check interval is too short, an evaluation may overlook progress that has been made but has not yet manifested in visible outputs. A performance management system may report “no progress,” leading to demotivation among staff, increased measurement burden, and the production of unnecessary ad hoc reports [7]. We found that previous studies and cases did not consider latent progress, making them inappropriate for measuring progress on short cycles. The use of process indicators also has the weakness of not measuring the ultimate results that need to be achieved [8,21].

R&D is typically classified into basic research and applied research based on the research stage [16]. However, to examine differences in the characteristics of R&D projects, it can also be classified into public and private R&D based on the funding source. Public R&D and private R&D serve different purposes. Public R&D encompasses multidimensional contributions, including technological, economic, and social purposes. Its aims are to accumulate basic knowledge, establish promising industries for the future, foster human resources in R&D, and improve the quality of life and communities [6,10]. On the other hand, private R&D is primarily conducted for profit-making purposes, such as developing new products [6,10].

Many developed countries have Public Research Institutes (PRIs) that undertake national R&D projects, particularly infrastructural and large-scale studies with long-term characteristics. These projects may involve aerospace, energy, biotechnology fields, or the establishment of high-performance computing resources and experimental facilities [6,11,12]. Due to the nature of such projects, monitoring progress becomes challenging and limited. When tracking performance targets over an extended study period, it may be difficult to identify progress, resulting in a “no progress” report. Overlooking latent progress can prevent providing immediate feedback to R&D performers during the activities and hinder timely responses to work delays, failures, and plan modifications [20,45]. Therefore, a performance monitoring system for R&D projects is necessary to overcome these limitations.

3. Proposal of Performance Monitoring System

3.1. Classification of Performance Indicators

The progress rate is commonly calculated using the formula (100 × (Output−baseline)/(Target−baseline)), where the baseline represents the starting point of performance monitoring before any progress has been made. However, if the frequency of actually produced outputs for an indicator is low during the research process, the progress rate calculated using this formula can only account for outwardly visible progress and fails to quantify latent progress. The uniform calculation using this formula cannot solve this problem, and typical classification systems of performance indicators [46] are inadequate for addressing it. Therefore, we need to establish classification criteria and a system to address these issues.
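The common formula above can be written as a short function. This is a minimal sketch; the function name `baseline_progress_rate` and the choice to raise an error when the target equals the baseline are illustrative assumptions, not part of the proposed system.

```python
def baseline_progress_rate(output: float, target: float, baseline: float = 0.0) -> float:
    """Progress rate (%) = 100 * (output - baseline) / (target - baseline)."""
    if target == baseline:
        # Assumption: the formula is undefined here, so this sketch refuses to guess.
        raise ValueError("target must differ from baseline")
    return 100.0 * (output - baseline) / (target - baseline)


# 5 papers published against a target of 20, starting from a baseline of 0.
print(baseline_progress_rate(output=5, target=20))  # 25.0
```

Because the result depends only on output that has already been produced, an indicator with no output yet is reported at 0% regardless of how much work is under way.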

The first criterion is the feasibility of measuring progress at a desired time. This criterion answers the following question: Can progress on the indicator be measured at any time? The feasibility of measuring progress depends on factors such as the availability of immediate progress data and the ease of objectively assessing the indicator. The R&D manager makes a decision based on the characteristics of the indicator. If the answer is “Feasible”, it means that the R&D manager can measure the produced output at any time. If the answer is “Infeasible”, it implies that measuring progress at a given time is impossible, difficult to objectively assess, or requires excessive resources. For example, the indicator “Number of academic papers published” is feasible as it can be counted at any time. However, the indicator “Level of customer satisfaction” based on an external survey is not feasible during the progress-check period because it requires surveying products that have not yet been completed, and obtaining results would require additional time and cost.

The second criterion is the frequency at which output is produced during the progress-check period. When an indicator has a small target value, a short progress-check interval may result in a report of “no progress” [7]. However, it is possible that progress is being made invisibly by the R&D performers, even if there is no visible output. Therefore, the second criterion answers the following question: Does the output of the indicator occur frequently? To determine the answer, we perform the following calculations. We define the “expected output per check” of an indicator as the target value of the indicator divided by the total number of progress checks during the monitoring period. We also define the “unit of measure” of an indicator as the minimum amount of changed output. If the expected output per check is larger than the unit of measure, then the indicator is classified as “Frequent.” If it is smaller, then it is classified as “Infrequent”. For example, let us consider the indicator “Number of academic papers published” with an annual target value of 20 and a progress-check interval of every month (total number of checks is 12). In this case, the unit of measure is 1 because the number of papers increases by 1 as a natural number. The expected output per check is 20/12 = 1.67. Since the expected output per check is larger than the unit of measure, the frequency of produced output is classified as “Frequent”. This classification suggests that a paper may have been published within one performance-check interval.
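The frequency test described above can be sketched as follows. The function name `classify_frequency` is hypothetical, and treating the boundary case (expected output exactly equal to the unit of measure) as "Infrequent" is an assumption, since the text defines only the strictly larger and strictly smaller cases.

```python
def classify_frequency(target: float, num_checks: int, unit_of_measure: float = 1.0) -> str:
    """Classify an indicator as "Frequent" or "Infrequent".

    expected output per check = target value / total number of progress checks
    """
    expected_per_check = target / num_checks
    # Assumption: equality falls into "Infrequent"; the source leaves this case open.
    return "Frequent" if expected_per_check > unit_of_measure else "Infrequent"


# 20 papers per year, checked monthly: 20 / 12 = 1.67 > 1
print(classify_frequency(target=20, num_checks=12))  # Frequent
# 2 outputs per year, checked monthly: 2 / 12 = 0.17 < 1
print(classify_frequency(target=2, num_checks=12))   # Infrequent
```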

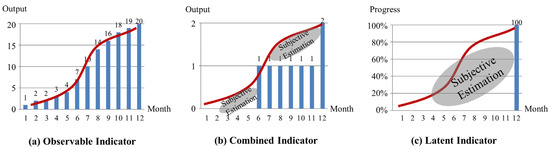

These criteria divide the performance indicators into three categories (Table 1, Figure 1): Observable Indicator (IndicatorOb), Latent Indicator (IndicatorLt), and Combined Indicator (IndicatorCo). IndicatorOb is more likely to show output for most progress checks. For this indicator, progress can be measured using actual output data. IndicatorCo is less likely to show output for most progress checks. To measure progress for this indicator, actual output data should be taken into account, considering the potential for latent progress that may not be immediately visible. IndicatorLt is infeasible to measure progress during the progress-check period. This indicator represents latent progress that cannot be objectively measured at a given time. Progress for these indicators is measured subjectively, taking into consideration the potential for latent progress.
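Read together, the two criteria map onto the three categories as in the sketch below. The function name and the boolean inputs are illustrative assumptions; the mapping itself follows the descriptions in the preceding paragraph (infeasible indicators are latent, and feasible indicators split by output frequency).

```python
def classify_indicator(feasible: bool, frequent: bool) -> str:
    """Map the two classification criteria to an indicator category."""
    if not feasible:
        # Progress cannot be measured objectively during the progress-check period.
        return "IndicatorLt"
    # Measurable at any time: the category depends on how often output appears.
    return "IndicatorOb" if frequent else "IndicatorCo"


print(classify_indicator(feasible=True, frequent=True))    # IndicatorOb
print(classify_indicator(feasible=True, frequent=False))   # IndicatorCo
print(classify_indicator(feasible=False, frequent=False))  # IndicatorLt
```

In this sketch the frequency criterion is ignored once an indicator is judged infeasible, which is why two criteria yield three categories rather than four.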

Table 1.

Metrics for calculating progress rate by indicator category.

Figure 1.

Conceptual example of the measurement depending on the indicator.

3.2. Metric to Measure Progress Rate of Indicators

In this study, we utilized the indicator classification to develop metrics (Table 1) that represent the progress of each indicator. These metrics allow us to identify the Degree of Progress made in relation to the target value of the indicator. We refer to the calculated result as the “progress rate” in this paper. The progress rate is expressed as a percentage and indicates the level of achievement of the indicator.

The progress rate is calculated based on both “Observable Progress” and “Latent Progress”. Observable Progress refers to the visible progress obtained from the actual output during the progress-check period, expressed as a percentage of the output against the target value. Latent Progress, on the other hand, represents the invisible progress that has not yet manifested as actual output during the progress-check period. It is estimated subjectively and expressed as a percentage of the remaining target value.

To determine the latent progress, researchers who are involved in the R&D project corresponding to the indicator perform a subjective self-check of the research progress. This self-check involves evaluating the progress and estimating the percentage of completion for the remaining target value. One approach to measuring the progress rate and evaluating R&D performance is to consult an expert who is knowledgeable about the technology or actively participating in the research [47,48,49]. Seeking input from external experts can provide relatively objective results. However, this approach may have practical limitations. Alternatively, self-check by the R&D practitioner has the advantage of the researcher being intimately familiar with the research schedule. However, relying solely on self-check by the researcher may introduce biases in the assessment [47]. Therefore, the R&D manager should be aware that the evaluation may be inflated and take necessary precautions when considering self-assessment results.

IndicatorOb considers only Observable Progress, IndicatorLt considers only latent progress, and IndicatorCo represents both Observable and latent progress. Let us consider an example where the target value for an IndicatorCo is 4 and the actual output observed during a progress-check is 1. In this case, the Observable Progress would be calculated as 100 × (1/4) = 25%. Now, let us assume that the researcher evaluates the progress for the remaining target value (3 out of 4) as 40%. This assessment represents the latent progress. To calculate the latent progress, we multiply the proportion of the remaining target value (3/4) by the researcher’s rating (40%), resulting in (3/4) × 100 × 0.4 = 30%. To obtain the overall progress rate for the indicator, we sum the Observable Progress and the latent progress: 25% + 30% = 55%. Therefore, the progress rate for the indicator in this example would be 55%.
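The worked example above can be reproduced with the following sketch. The function name, the argument names, and the use of a 0 to 1 fraction for the researcher's rating are assumptions. For IndicatorLt, the sketch also assumes that the whole target counts as "remaining", so the rating is used directly as the progress rate.

```python
def progress_rate(category: str, target: float, output: float = 0.0,
                  latent_rating: float = 0.0) -> float:
    """Return the progress rate (%) of one indicator.

    latent_rating is the researcher's self-assessed completion of the
    remaining target value, given as a fraction between 0 and 1.
    """
    if category == "IndicatorLt":
        # Assumption: no observable output, so the whole target is "remaining".
        return 100.0 * latent_rating
    observable = 100.0 * output / target
    if category == "IndicatorOb":
        return observable
    if category == "IndicatorCo":
        # Share of the target not yet covered by actual output (never negative).
        remaining_share = max(target - output, 0.0) / target
        return observable + remaining_share * 100.0 * latent_rating
    raise ValueError(f"unknown category: {category}")


# Target 4, observed output 1, remaining work self-rated at 40%: 25% + 30%
rate = progress_rate("IndicatorCo", target=4, output=1, latent_rating=0.4)
print(round(rate, 1))  # 55.0
```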

3.3. Priority Evaluation to Future Directions

Appropriate allocation of resources enables R&D institutes to maximize their output [1]. During the R&D planning or evaluation phase, resource allocation for R&D requires evaluating the importance of tasks or comparing the input to the output. Similarly, reallocation of resources during the course of R&D requires evaluating the progress made to guide modifications in resource allocation or to provide additional support to researchers, maximizing the probability of achieving the target value [14,50]. In this step, we propose a prioritization method that considers the progress rate to support feedback to research participants by providing reasonable evidence of resource reallocation.

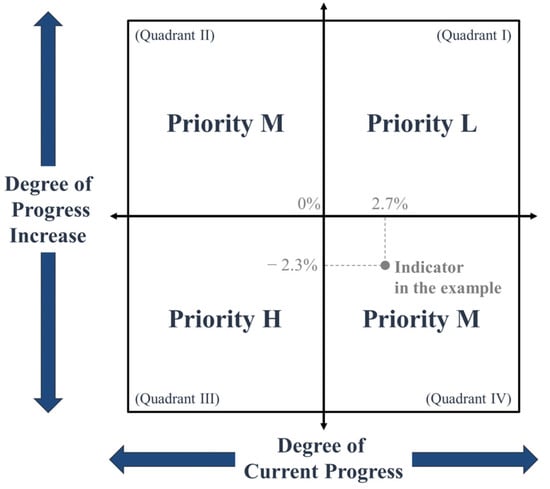

To determine the progress priority of indicators, we introduce the concept of the “Progress Priority Matrix” (Figure 2). This matrix takes into account potential errors that may arise from subjective evaluations of the progress rate. At each progress checkpoint, each indicator is positioned on the matrix based on comparisons with the Degree of Current Progress and the Degree of Progress Increase.

Figure 2.

Progress priority matrix.

The Degree of Current Progress is defined as the expected value of the progress rate that an indicator should normally achieve at the Nth progress checkpoint throughout the entire monitoring period. The Degree of Progress Increase represents the standard increment in the progress rate from the (N-1)th to the Nth progress checkpoint. In this study, we assume a linear increase in the progress rate over the entire monitoring period to derive the standard increment.

To determine the progress priority of indicators, two criteria are considered. First, if the progress rate of an indicator at the Nth checkpoint exceeds the Degree of Current Progress, it is placed on the right half of the matrix. Second, if the increment of the progress rate from the (N-1)th to the Nth checkpoint exceeds the Degree of Progress Increase, it is placed on the upper half of the matrix.

Based on the positioning of indicators on the matrix, their progress priorities are classified as high (H), medium (M), or low (L). Priority H corresponds to Quadrant III, Priority M corresponds to Quadrant II or IV, and Priority L corresponds to Quadrant I in the Cartesian coordinate system. The results of the progress priority matrix are used by the R&D management department to establish policies and take actions to increase progress toward the objective.

For example, let us consider a scenario where monthly progress checks are conducted on the last day of each month for a year. If the current checkpoint is April, the Degree of Current Progress can be estimated as approximately 100 × (4/12) = 33.3%, and the Degree of Progress Increase is (100 × (4/12)) − (100 × (3/12)) = 8.3%. Now, assuming that the progress rate of an indicator was 30.0% in March and 36.0% in April, the progress priority of the indicator in April would be assigned as Priority M (Quadrant IV). This is because the progress rate (36.0%) exceeds the Degree of Current Progress (33.3%), and the increment in the progress rate (6.0% = 36.0% − 30.0%) does not exceed the Degree of Progress Increase (8.3%).
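The positioning rules and the April example can be sketched as below. The function name and return format are hypothetical; the linear reference values follow the assumption stated in this section, and values exactly equal to a reference are treated as not exceeding it.

```python
def progress_priority(rate_now: float, rate_prev: float,
                      checkpoint: int, total_checks: int) -> tuple[str, str]:
    """Return (priority, quadrant) for an indicator at the given checkpoint."""
    # Degree of Current Progress under a linear increase over the monitoring period.
    degree_current = 100.0 * checkpoint / total_checks
    # Degree of Progress Increase: standard increment since the previous checkpoint.
    degree_increase = degree_current - 100.0 * (checkpoint - 1) / total_checks

    right_half = rate_now > degree_current
    upper_half = (rate_now - rate_prev) > degree_increase

    if right_half and upper_half:
        return ("L", "I")
    if upper_half:
        return ("M", "II")
    if right_half:
        return ("M", "IV")
    return ("H", "III")


# April (4th of 12 checks): 36.0% now, 30.0% in March
print(progress_priority(36.0, 30.0, checkpoint=4, total_checks=12))  # ('M', 'IV')
```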

4. Case Study

4.1. Backgrounds

South Korea is recognized as the world’s fifth-largest investor in R&D, having allocated USD 11 billion towards R&D activities. Additionally, South Korea holds the distinction of having the largest government-financed GERD as a percentage of GDP in 2020. Given the significant investment in public R&D, there is a need for a systematic performance monitoring system to effectively manage public R&D projects. In light of this, we applied the proposed system to a case study involving a Public Research Institute (PRI) in South Korea and developed an information system tailored to the institute’s requirements.

The case study institute, referred to as PRI-K, is a government-funded research institute primarily focused on conducting national-level R&D related to science and technology information and infrastructure in South Korea. PRI-K is required to submit a research plan to the government for a 3-year period, with annual interim evaluations conducted by the government. Despite PRI-K’s intention to monitor progress at short intervals during R&D activities, the institute faced challenges in quantifying the progress of indicators set on an annual basis. The collection of progress data was further complicated by the unique characteristics of the performance indicators. Some indicators could be measured immediately, while others could be assessed only after the project had reached a certain stage. Additionally, some indicators had small annual target values, such as 1 or 2.

PRI-K consists of four research divisions, each comprising multiple departments. The institute operates a large-scale R&D program consisting of various projects. These projects are allocated among the divisions and departments, and each project is associated with a set of performance indicators. Goals within PRI-K are organized hierarchically at the institute, division, and department levels, with each goal and performance indicator assigned a weight. While certain indicators are shared across multiple projects (e.g., “Number of academic papers published”), others are unique to specific projects. PRI-K aimed to monitor progress at approximately monthly intervals. In this context, we implemented the proposed system within PRI-K, utilizing its established performance indicators.

4.2. Implementation

First, after preprocessing the target values to ensure their consistency, we classified all 123 indicators across the projects according to the classification criteria, working with the manager of the R&D management department. These indicators were constructed based on technological, infrastructural, social, or economic aspects, and were configured to evaluate performance annually with preset weights. The results were reviewed by each research division. Examples of the indicators by category are shown in Table 2. In the end, 28 indicators were classified as IndicatorOb, 29 as IndicatorCo, and 66 as IndicatorLt; the last group is evaluated using statistical analysis, surveys, technical demonstrations, or comparison of experimental results with best practices.

Table 2.

A sample of performance indicators by categories (a case project from the Supercomputing Division).

Next, we derived the progress rate of each indicator by applying the developed metrics according to the indicator categories. The progress rate was calculated using the information system described in the next subsection of this paper. We operated the information system over a period of one year to derive the progress rate, and then derived the progress priorities once at the 6th checkpoint as a sample. During this step, we identified a few unusual cases and handled them as follows. Identical indicators such as ‘Number of academic papers published’ were used by all projects, but their category could differ across projects depending on the target value. Two options were possible: to assign the indicator to a single category across all projects, or to apply different categories in recognition of the distinct characteristics of the projects. The former was chosen after discussion with the manager. In addition, some indicators had a target value of 0, which causes a division-by-zero problem. In these cases, we fixed the progress rate at 100%.
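The zero-target rule can be expressed as a guard in front of the division. This is an illustrative sketch only; the function name is hypothetical and the prototype described in this paper was written in Visual Basic for Applications, not Python.

```python
def observable_progress(output: float, target: float) -> float:
    """Observable progress (%) with the zero-target rule applied."""
    if target == 0:
        # A target of 0 would divide by zero; the progress rate is fixed at 100%.
        return 100.0
    return 100.0 * output / target


print(observable_progress(output=0, target=0))  # 100.0
print(observable_progress(output=1, target=4))  # 25.0
```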

We calculated the progress rate by the levels of management, using the following weighted sum formulas:

Let the progress rate function and the weight function be defined on a discrete set A_j = {a_1, a_2, a_3, …, a_i} for a given number j; the weighted sum of the progress rate on A_j is defined as follows.

Then, the weighted sum of the progress rate on the project is as follows.

where

i is an index for indicator,

j is an index for project,

ind_i is an indicator i, belonging to project j,

prj_j is a project j.

Therefore, the weighted sum of the progress rate by levels of management is as follows.

where

k is an index for department k,

l is an index for division l,

dpt_k is a department k,

div_l is a division l,

inst is the institute, PRI-K.
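As a sketch of how the weighted sums described in this subsection could be written out, the block below uses $P(\cdot)$ for the progress rate function and $W(\cdot)$ for the weight function. Both symbols, the set-membership notation, and the assumption that the weights within each set sum to 1 are introduced here for illustration and are not taken from the original formulas.

```latex
% Generic weighted sum of the progress rate on a set A_j (P and W are assumed symbols)
\[
  P(A_j) = \sum_{a \in A_j} W(a)\, P(a), \qquad \sum_{a \in A_j} W(a) = 1
\]

% Project level: aggregate the indicators belonging to project j
\[
  P(\mathit{prj}_j) = \sum_{\mathit{ind}_i \in \mathit{prj}_j} W(\mathit{ind}_i)\, P(\mathit{ind}_i)
\]

% Department, division, and institute levels: aggregate the level below
\begin{align*}
  P(\mathit{dpt}_k) &= \sum_{\mathit{prj}_j \in \mathit{dpt}_k} W(\mathit{prj}_j)\, P(\mathit{prj}_j) \\
  P(\mathit{div}_l) &= \sum_{\mathit{dpt}_k \in \mathit{div}_l} W(\mathit{dpt}_k)\, P(\mathit{dpt}_k) \\
  P(\mathit{inst})  &= \sum_{l} W(\mathit{div}_l)\, P(\mathit{div}_l)
\end{align*}
```

Under the normalization assumption, each aggregated value remains a percentage on the same 0 to 100 scale as the indicator-level progress rates.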

4.3. Information System for R&D Performance Monitoring

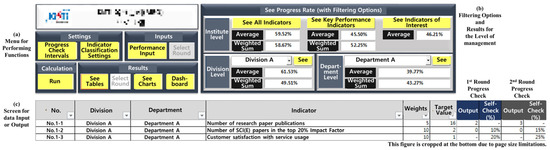

We developed a prototype information system (IS) for R&D performance monitoring using Visual Basic for Applications in Microsoft Excel. The IS was designed to provide convenience for the R&D manager and allow for expandability. The interface of the IS includes a menu for executing various functions, filtering options for selecting and printing specific content based on management levels, and a screen section for data input and result output. While the interface was originally developed in Korean, for the purpose of this paper, certain parts of Figure 3 and Figure 4 have been translated into English and are accompanied by corresponding descriptions.

Figure 3.

The interface of the Information System for PRI-K.

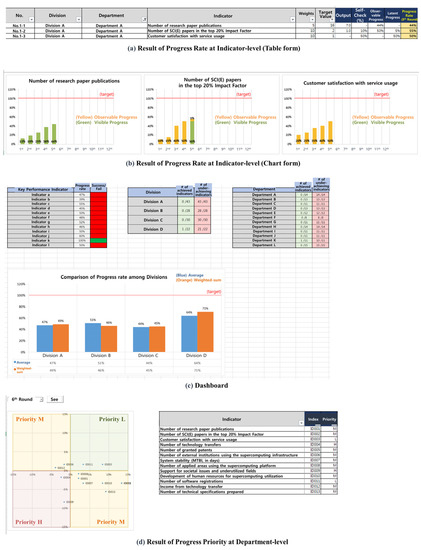

Figure 4.

Example of application results of the proposed system to PRI-K.

The R&D manager collects progress data from the researchers involved in the R&D project and utilizes the program to manage the progress and provide feedback to the researchers. The manager enters preset data such as the progress-check period, target values of indicators, and data required for determining the indicator category. The manager then inputs the progress data into the program. The program employs the provided data to calculate the progress rate and progress priority, which are subsequently displayed. The manager can provide feedback on the results to the researchers based on the displayed information. The progress rate of each indicator is presented in the form of tables and graphs, providing a summary of the information on a dashboard. Additionally, the progress priority results are shown to aid the manager in making decisions and allocating resources effectively. Overall, the IS serves as a valuable tool for R&D performance monitoring, facilitating the collection, calculation, and visualization of progress data, as well as enabling efficient feedback and decision-making processes within the R&D management department.

5. Discussions

To validate the effectiveness of the proposed system, we applied it to a real-world case institute, PRI-K. Over the course of a year, PRI-K implemented the IS and tested its functionality. We conducted interviews with four individuals from PRI-K, including three from the R&D management department and one from the R&D department, to confirm the system’s validity.

PRI-K previously lacked a short-term cycle performance monitoring system, aside from an annual interim evaluation. Progress checks on indicators were conducted through intermittent interdepartmental meetings or simple counting of certain indicator outputs. Due to limited quantitative verification, such as historical progress data and research hour records, we primarily relied on qualitative verification through interviews to assess the performance monitoring system and validate the IS.

The proposed system successfully allowed the manager to monitor the progress of all indicators at PRI-K within short-term cycles, addressing the issue of “no progress” and reducing the workload associated with manual tasks, reporting, and meetings. The IS provided visual representations of indicator progress and management levels, enabling a more intuitive understanding of the data. Interviewees expressed that the IS reduced the administrative burden of R&D monitoring by presenting results through a dashboard, eliminating the need for text-based reports to higher management. Additionally, employee involvement in the process of determining research progress facilitated clearer comprehension and self-management of work progress. Feedback from the interviewees indicated that the IS enabled the identification of historical project progress at each check, allowing for a comprehensive examination of achievement trends and the establishment or adjustment of future objectives. Overall, all interviewees agreed that the proposed system contributed to achieving institute-level goals and improving productivity compared to the previous monitoring system.

To assess the usefulness of the proposed system, we compared it with various performance and project monitoring methods, following the comparison criteria and results of a prior study [20] (Table 3). Although some comparisons were difficult because the methods differ in purpose and operation, the comparison indicated that the proposed system offers advantages in monitoring R&D project progress and managing projects by providing periodic feedback and accounting for the complexities of “latent progress”.

Table 3. Comparisons between monitoring methods and the proposed system.

The proposed system and its information system (IS) contribute to both academic and practical domains. The measurement of R&D performance has been approached from various perspectives, taking into account the organizational purpose and the measurement perspective [46]. After the Balanced Scorecard (BSC) [40] expanded the traditional financial perspective to a more balanced one, subsequent studies developed indicators from a nonfinancial standpoint [51,52]. Other studies have considered the type of R&D [53,54] based on the OECD Frascati Manual or the process phase [3,55] within the performance management cycle. Recognizing the social impact of R&D in the public sector, researchers have classified indicators from technological, social, or economic perspectives [6,56]. In this study, we propose a classification system that goes beyond these existing classifications by adding a dimension that captures latent progress and supports the assessment of overall progress.

Employee participation in organizational management has long been recognized as a crucial factor in enhancing organizational performance and employee morale [31,57,58]. However, measuring R&D performance presents significant challenges, as it could potentially stifle researchers’ creativity and reduce productivity [56]. Despite these challenges, employee participation remains a key focus in R&D performance management. Whitley and Frost [49] highlighted the importance of involving not only R&D project directors but also the researchers themselves and their peers in the evaluation process. Similarly, Werner and Souder [54] proposed the use of multiple evaluators, including the researcher, peers, and external stakeholders, as a suitable approach. Additionally, Khalid and Nawab [32] analyzed the relationship between employee participation and organizational performance, finding a significant positive impact. A survey-based study of Korean R&D researchers [48] suggested that an effective measurement system should involve participation from both researchers and managers.

The findings of this study support previous research showing that employee participation enhances organizational productivity. The interviews also indicated that organizational goals can be pursued successfully through performance management that includes researchers’ own progress assessments. Feedback from the research department interviewee indicated that the system alleviated concerns about the lack of actual performance achieved during the monitoring period. The system provided a participatory environment that actively engaged researchers in assessing the progress of their research. We therefore conclude that understanding and acknowledging researchers’ progress, together with an appropriate monitoring system, can help establish a performance-oriented organizational culture and reduce skepticism surrounding one-sided progress supervision.

The IS offers a clear visualization of indicator progress, enabling managers to monitor R&D performance and provide feedback. Overall progress is presented in a standardized, color-coded format, so managers can identify the state of each indicator at a glance. For instance, R&D managers can quickly see whether an indicator has been achieved (marked in green) or not achieved (marked in red). Observable and latent progress are shown in different colors, clearly separating actual output from expected output. The visual representation also facilitates comparisons both within indicators and among divisions. Interviewees highlighted that the progress rate is calculated automatically from the indicator level up to the institute level. This comparative view of divisional progress helps administrators develop an overall management strategy. The dashboard also makes indicator achievement easier to grasp by displaying the total number of achieved indicators in aggregate. Notably, one interviewee mentioned that the original performance indicators can be used as they are, eliminating the need to establish new process indicators.
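The roll-up from indicator level to institute level described above can be illustrated with a minimal sketch. It is not the implementation used at PRI-K: the class `Indicator`, the function `roll_up`, the unweighted averaging, the cap at 100%, and the rule that an indicator turns green only when observable output alone meets the target are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Indicator:
    """Hypothetical record for one performance indicator."""
    name: str
    division: str
    observable: float  # share of the target backed by actual output (0..1)
    latent: float      # self-assessed share of expected output (0..1)

    @property
    def overall(self) -> float:
        # Assumption: overall progress is observable plus latent, capped at 1.0.
        return min(1.0, self.observable + self.latent)

    @property
    def status(self) -> str:
        # Assumption: "achieved" (green) requires observable output to meet
        # the target; latent progress alone leaves the indicator red.
        return "green" if self.observable >= 1.0 else "red"


def roll_up(indicators):
    """Aggregate progress: indicator -> division -> institute.

    Assumption: unweighted means at both levels; a real system may weight
    indicators or divisions differently.
    """
    by_division = {}
    for ind in indicators:
        by_division.setdefault(ind.division, []).append(ind.overall)
    division_progress = {d: mean(v) for d, v in by_division.items()}
    institute_progress = mean(list(division_progress.values()))
    achieved = sum(ind.status == "green" for ind in indicators)
    return division_progress, institute_progress, achieved


if __name__ == "__main__":
    sample = [
        Indicator("papers", "A", observable=1.0, latent=0.0),
        Indicator("patents", "A", observable=0.5, latent=0.25),
        Indicator("prototypes", "B", observable=0.25, latent=0.25),
    ]
    divisions, institute, achieved = roll_up(sample)
    print(divisions, institute, achieved)
```

Keeping observable and latent progress as separate fields mirrors the dashboard's distinction between actual and expected output, so either component can be displayed or audited on its own.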

6. Conclusions

With the growing impact of R&D and the increasing need for effective management of R&D projects, monitoring their performance, especially in large-scale R&D endeavors, becomes increasingly crucial. In this context, we have presented an R&D performance monitoring system and demonstrated its application in a public research institute. This study focused on monitoring R&D performance by tracking indicator trends to ensure the achievement of desired targets. To fulfill this purpose, we introduced a monitoring system that measures the progress rate of performance indicators and developed an information system for R&D project management.

While this paper proposes an improved method for monitoring R&D performance, it has some limitations in its design and implementation. Resource inputs, such as the research hours allocated to each project, were not quantified in the case study. Calculating the efficiency of R&D was therefore not possible, which led to the development of the progress priority matrix as an alternative. Further research could address this limitation by formulating and solving an optimization problem for resource allocation using tracked man-hour information. Additionally, a method to detect and mitigate biases in the self-checking of latent progress should be developed. Such biases may be identified through comparison with the Degree of Progress Increase and compensated for by analyzing self-check tendencies. Furthermore, in future research, the accumulated history of progress rates could be leveraged to identify performance achievement trends for each indicator beyond linear assumptions, thus informing subsequent iterations of goal setting and performance monitoring.

Author Contributions

Conceptualization, S.L.; Methodology, K.L.; Software, K.L.; Validation, S.L.; Investigation, K.L.; Writing—original draft, K.L.; Writing—review & editing, S.L.; Visualization, K.L.; Supervision, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Bican, P.M.; Brem, A. Managing innovation performance: Results from an industry-spanning explorative study on R&D key measures. Creat. Innov. Manag. 2020, 29, 268–291.

2. Drongelen, I.K.-V.; Nixon, B.; Pearson, A. Performance measurement in industrial R&D. Int. J. Manag. Rev. 2000, 2, 111–143.

3. Brown, W.B.; Gobeli, D. Observations on the measurement of R&D productivity: A case study. IEEE Trans. Eng. Manag. 1992, 39, 325–331.

4. Chiesa, V.; Frattini, F.; Lazzarotti, V.; Manzini, R. Performance measurement in R&D: Exploring the interplay between measurement objectives, dimensions of performance and contextual factors. R&D Manag. 2009, 39, 487–519.

5. Teather, G.G.; Montague, S. Performance measurement, management and reporting for S&T organizations—An overview. J. Technol. Transf. 1997, 22, 5–12.

6. Coccia, M. New models for measuring the R&D performance and identifying the productivity of public research institutes. R&D Manag. 2004, 34, 267–280.

7. Drongelen, I.C.K.-V.; Cooke, A. Design principles for the development of measurement systems for research and development processes. R&D Manag. 1997, 27, 345–357.

8. Chiesa, V.; Frattini, F.; Lazzarotti, V.; Manzini, R. An exploratory study on R&D performance measurement practices: A survey of Italian R&D-intensive firms. Int. J. Innov. Manag. 2009, 13, 65–104.

9. Lee, M.; Son, B.; Om, K. Evaluation of national R&D projects in Korea. Res. Policy 1996, 25, 805–818.

10. Bae, S.J.; Lee, H. The role of government in fostering collaborative R&D projects: Empirical evidence from South Korea. Technol. Forecast. Soc. Chang. 2020, 151, 119826.

11. Feldman, M.P.; Kelley, M.R. The ex ante assessment of knowledge spillovers: Government R&D policy, economic incentives and private firm behavior. Res. Policy 2006, 35, 1509–1521.

12. Luo, J.; Ordóñez-Matamoros, G.; Kuhlmann, S. The balancing role of evaluation mechanisms in organizational governance—The case of publicly funded research institutions. Res. Eval. 2019, 28, 344–354.

13. Nishimura, J.; Okamuro, H. Internal and external discipline: The effect of project leadership and government monitoring on the performance of publicly funded R&D consortia. Res. Policy 2018, 47, 840–853.

14. Pearson, A. Planning and control in research and development. Omega 1990, 18, 573–581.

15. Chiesa, V.; Frattini, F.; Lazzarotti, V.; Manzini, R. Measuring performance in new product development projects: A case study in the aerospace industry. Proj. Manag. J. 2007, 38, 45–59.

16. Frattini, F.; Lazzarotti, V.; Manzini, R. Towards a system of performance measures for research activities: Nikem research case study. Int. J. Innov. Manag. 2006, 10, 425–454.

17. Kahn, C.; McGourty, S. Performance management at R&D organizations. In Practices and Metrics from Case Examples; The MITRE Corporation: Bedford, MA, USA, 2009.

18. Lauras, M.; Marques, G.; Gourc, D. Towards a multi-dimensional project Performance Measurement System. Decis. Support Syst. 2010, 48, 342–353.

19. Lee, K.; Jeong, Y.; Yoon, B. Developing an research and development (R&D) process improvement system to simulate the performance of R&D activities. Comput. Ind. 2017, 92, 178–193.

20. Pillai, A.S.; Rao, K.S. Performance monitoring in R&D projects. R&D Manag. 1996, 26, 57–65.

21. Cho, E.; Lee, M. An exploratory study on contingency factors affecting R&D performance measurement. Int. J. Manpow. 2005, 26, 502–512.

22. Nakamura, O.; Ito, S.; Matsuzaki, K.; Adachi, H.; Kado, T.; Oka, S. Using roadmaps for evaluating strategic research and development: Lessons from Japan’s Institute for Advanced Industrial Science and Technology. Res. Eval. 2008, 17, 265–271.

23. Aguinis, H. Performance Management; Pearson Prentice Hall: Upper Saddle River, NJ, USA, 2009.

24. Yang, Y. Sustainability Analysis of Enterprise Performance Management Driven by Big Data and Internet of Things. Sustainability 2023, 15, 4839.

25. Hansen, A. The purposes of performance management systems and processes: A cross-functional typology. Int. J. Oper. Prod. Manag. 2021, 41, 1249–1271.

26. Mondy, R.; Martocchio, J.J.; Pearson, H.R.M. Human Resource Management; Pearson: Hoboken, NJ, USA, 2016.

27. Toppo, L.; Prusty, T. From performance appraisal to performance management. J. Bus. Manag. 2012, 3, 1–6.

28. Barbosa, A.P.F.P.L.; Salerno, M.S.; de Souza Nascimento, P.T.; Albala, A.; Maranzato, F.P.; Tamoschus, D. Configurations of project management practices to enhance the performance of open innovation R&D projects. Int. J. Proj. Manag. 2021, 39, 128–138.

29. General Accounting Office. Performance Measurement and Evaluation: Definitions and Relationships; General Accounting Office: Washington, DC, USA, 2005.

30. Sicotte, H.; Langley, A. Integration mechanisms and R&D project performance. J. Eng. Technol. Manag. 2000, 17, 1–37.

31. Chang, G.S.-Y.; Lorenzi, P. The effects of participative versus assigned goal setting on intrinsic motivation. J. Manag. 1983, 9, 55–64.

32. Khalid, K.; Nawab, S. Employee participation and employee retention in view of compensation. SAGE Open 2018, 8, 2158244018810067.

33. Project Management Institute. A Guide to the Project Management Body of Knowledge (PMBOK Guide); Project Management Institute: Singapore, 2000.

34. Turner, J.R.; Müller, R. On the nature of the project as a temporary organization. Int. J. Proj. Manag. 2003, 21, 1–8.

35. Richman, L. Project Management Step-by-Step; AMACOM/American Management Association: New York, NY, USA, 2002.

36. Lucko, G.; Araújo, L.G.; Cates, G.R. Slip chart–inspired project schedule diagramming: Origins, buffers, and extension to linear schedules. J. Constr. Eng. Manag. 2016, 142, 04015101.

37. Bryde, D.; Unterhitzenberger, C.; Joby, R. Conditions of success for earned value analysis in projects. Int. J. Proj. Manag. 2018, 36, 474–484.

38. Bagshaw, K.B. PERT and CPM in Project Management with Practical Examples. Am. J. Oper. Res. 2021, 11, 215–226.

39. Drucker, P. The Practice of Management; Routledge: London, UK, 2012.

40. Kaplan, R.S.; Norton, D.P. The balanced scorecard—Measures that drive performance. Harv. Bus. Rev. 1992, 70, 71–79.

41. Hoseini, S.A.; Fallahpour, A.; Wong, K.Y.; Antuchevičienė, J. Developing an integrated model for evaluating R&D organizations’ performance: Combination of DEA-ANP. Technol. Econ. Dev. Econ. 2021, 27, 970–991.

42. Karadayi, M.A.; Ekinci, Y. Evaluating R&D performance of EU countries using categorical DEA. Technol. Anal. Strateg. Manag. 2019, 31, 227–238.

43. Han, S.; Park, S.; An, S.; Choi, W.; Lee, M. Research on Analyzing the Efficiency of R&D Projects for Climate Change Response Using DEA–Malmquist. Sustainability 2023, 15, 8433.

44. Coluccia, D.; Dabić, M.; Del Giudice, M.; Fontana, S.; Solimene, S. R&D innovation indicator and its effects on the market. An empirical assessment from a financial perspective. J. Bus. Res. 2020, 119, 259–271.

45. Kennedy, W.G. Different Levels of Performance Measures for Different Users in Science and Technology. Evaluation 2005, 26–29.

46. Ojanen, V.; Vuola, O. Coping with the multiple dimensions of R&D performance analysis. Int. J. Technol. Manag. 2006, 33, 279–290.

47. Keller, R.T.; Holland, W.E. The measurement of performance among research and development professional employees: A longitudinal analysis. IEEE Trans. Eng. Manag. 1982, EM-29, 54–58.

48. Kim, B.; Oh, H. An effective R&D performance measurement system: Survey of Korean R&D researchers. Omega 2002, 30, 19–31.

49. Whitley, R.; Frost, P.A. The measurement of performance in research. Hum. Relat. 1971, 24, 161–178.

50. Siddiqee, M.W. Adaptive management of large development projects. In Proceedings of the IEEE International Conference on Engineering Management, Gaining the Competitive Advantage, Santa Clara, CA, USA, 21–24 October 1990; IEEE: Piscataway, NJ, USA; pp. 191–198.

51. Bremser, W.G.; Barsky, N.P. Utilizing the balanced scorecard for R&D performance measurement. R&D Manag. 2004, 34, 229–238.

52. Benková, E.; Gallo, P.; Balogová, B.; Nemec, J. Factors affecting the use of balanced scorecard in measuring company performance. Sustainability 2020, 12, 1178.

53. Hauser, J.R.; Zettelmeyer, F. Metrics to Evaluate R, D&E. Res.-Technol. Manag. 1997, 40, 32–38.

54. Werner, B.M.; Souder, W.E. Measuring R&D performance—State of the art. Res.-Technol. Manag. 1997, 40, 34–42.

55. Tidd, J.; Bessant, J.R. Managing Innovation: Integrating Technological, Market and Organizational Change; John Wiley & Sons: Hoboken, NJ, USA, 2018.

56. Brown, M.G.; Svenson, R.A. Measuring R&D productivity. Res.-Technol. Manag. 1988, 31, 11–15.

57. Phipps, S.T.; Prieto, L.C.; Ndinguri, E.N. Understanding the impact of employee involvement on organizational productivity: The moderating role of organizational commitment. J. Organ. Cult. Commun. Confl. 2013, 17, 107.

58. Shadur, M.A.; Kienzle, R.; Rodwell, J.J. The relationship between organizational climate and employee perceptions of involvement: The importance of support. Group Organ. Manag. 1999, 24, 479–503.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).