Transmission Line Equipment Infrared Diagnosis Using an Improved Pulse-Coupled Neural Network

Abstract

1. Introduction

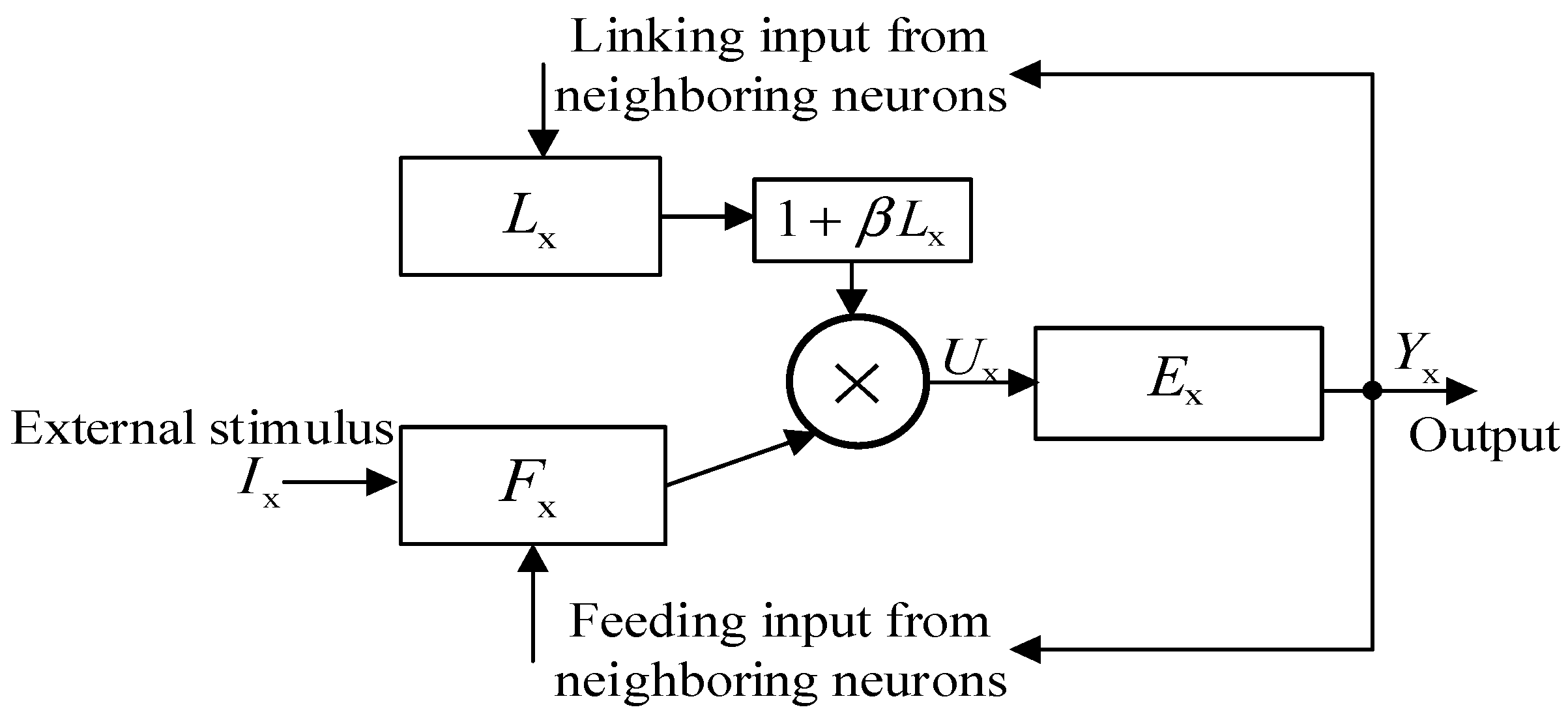

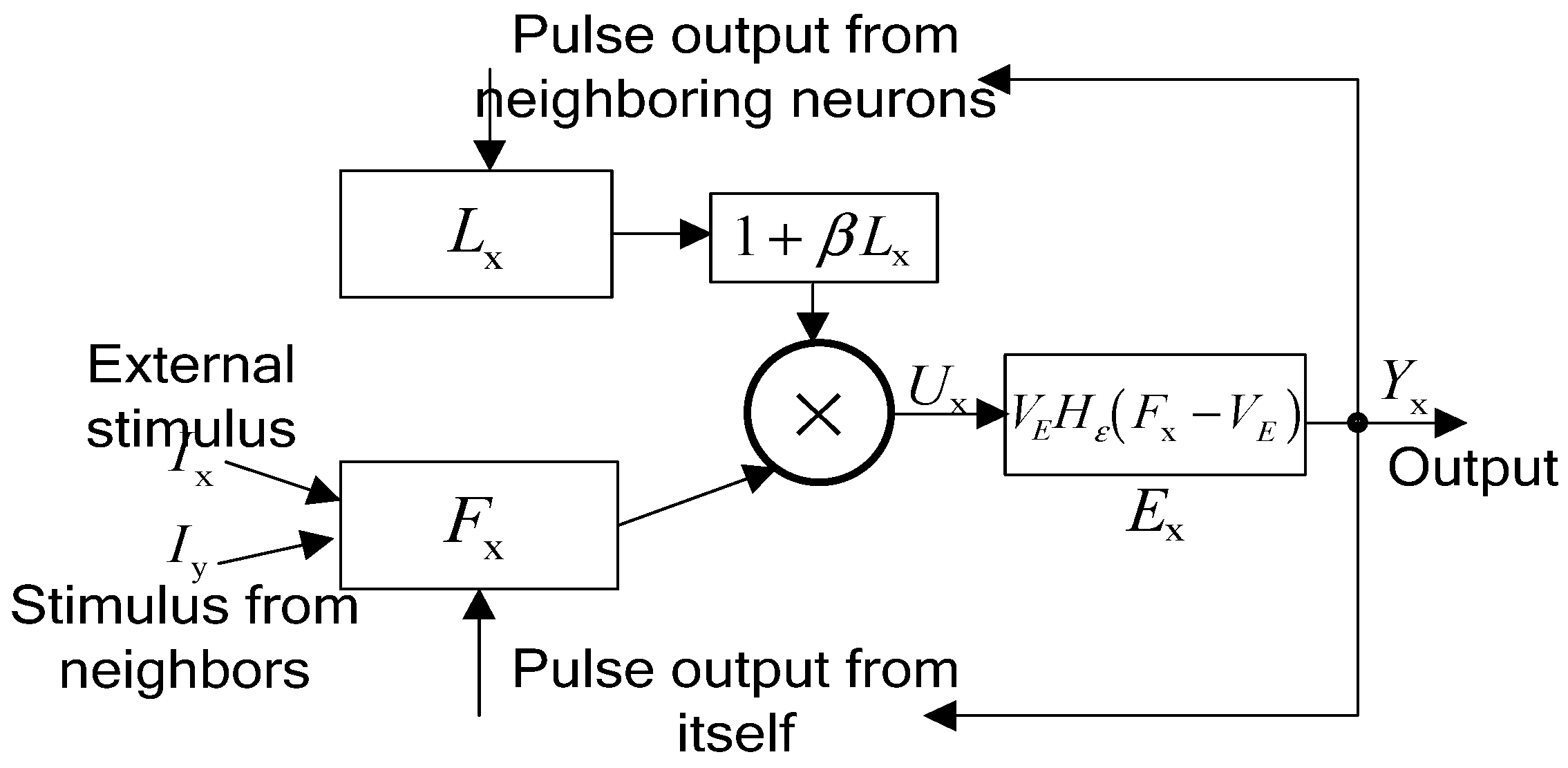

2. Pulse-Coupled Neural Network and Its Modification

2.1. The Original PCNN Model

2.2. Modification of PCNN Model

3. Optimized Parameter Setting Method for PCNN

3.1. The Maximum Thresholding Rule

3.2. Linking Coefficient β

Algorithm 1: Our PCNN model for image segmentation

| Step | Procedure |
|---|---|
| 1 Input | Test images; PCNN parameters M and W from [2]; the initial neural threshold E, set to the highest intensity in the image; ξ = 2. |
| 2 PCNN iteration | Repeat: compute the parameter β by Equation (18); compute F, L, and U iteratively by Equations (6)–(8); update the neural threshold E by Equations (9) and (12); update Y by Equation (10). Until the pulse region Y no longer changes. |
| 3 Output | The marked region R as the final segmentation result. |
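The loop in Algorithm 1 can be illustrated with a minimal pure-Python sketch of a simplified PCNN. Equations (6)–(18) are not reproduced in this section, so every update rule below is an assumption modelled on common simplified PCNNs, not the authors' method: the feeding input F is the pixel intensity, the linking input L is a weighted sum of fired 8-neighbours using hypothetical weights `W`, the internal activity is U = F(1 + βL), and a pixel pulses when U ≥ E. The helper `compute_beta` is a hypothetical stand-in for Equation (18) that returns a constant, and the threshold schedule (E starts at the highest intensity, is divided by ξ = 2 each iteration, and is floored at the image mean) is likewise hypothetical. Fired pixels stay in the region, and the loop stops once Y stops changing after E has reached its floor.

```python
"""Simplified-PCNN sketch shaped like Algorithm 1 (all update rules assumed)."""
from typing import List

Image = List[List[float]]
Mask = List[List[int]]

# Hypothetical 3x3 linking weights W; the paper takes M and W from [2].
W = [[0.707, 1.0, 0.707],
     [1.0, 0.0, 1.0],
     [0.707, 1.0, 0.707]]


def compute_beta(image: Image, fired: Mask) -> float:
    """Hypothetical stand-in for Equation (18): always returns 0.2."""
    return 0.2


def linking_input(fired: Mask, i: int, j: int) -> float:
    """Assumed L_ij: weighted sum of the fired 8-neighbours of pixel (i, j)."""
    rows, cols = len(fired), len(fired[0])
    total = 0.0
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            ni, nj = i + di, j + dj
            if 0 <= ni < rows and 0 <= nj < cols:
                total += W[di + 1][dj + 1] * fired[ni][nj]
    return total


def pcnn_segment(image: Image, xi: float = 2.0, max_iter: int = 100) -> Mask:
    """Return a 0/1 mask of the pulse region R."""
    rows, cols = len(image), len(image[0])
    pixels = [p for row in image for p in row]
    e_threshold = max(pixels)            # initial E: highest intensity in the image
    e_floor = sum(pixels) / len(pixels)  # hypothetical lower bound on E: image mean
    fired: Mask = [[0] * cols for _ in range(rows)]
    for _ in range(max_iter):
        beta = compute_beta(image, fired)
        new_fired = [row[:] for row in fired]  # pixels that fired stay in R
        for i in range(rows):
            for j in range(cols):
                feed = image[i][j]                     # F: feeding input
                link = linking_input(fired, i, j)      # L: from previous pulses
                internal = feed * (1.0 + beta * link)  # U: modulated activity
                if internal >= e_threshold:
                    new_fired[i][j] = 1                # Y: pulse output
        if new_fired == fired and e_threshold <= e_floor:
            break  # Y no longer changes and E has reached its floor
        fired = new_fired
        e_threshold = max(e_threshold / xi, e_floor)  # hypothetical E update using xi
    return fired


if __name__ == "__main__":
    # Synthetic 5x5 "thermal" frame with one hot spot (made-up values).
    frame = [[10, 10, 10, 10, 10],
             [10, 90, 95, 10, 10],
             [10, 92, 100, 20, 10],
             [10, 10, 30, 10, 10],
             [10, 10, 10, 10, 10]]
    for row in pcnn_segment(frame):
        print(row)
```

On this synthetic frame, the intensity-20 pixel to the right of the hot spot joins the region in the third iteration only because its fired neighbours lift U above E = 25; that linking-driven region growing is what distinguishes a PCNN from a plain global threshold.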

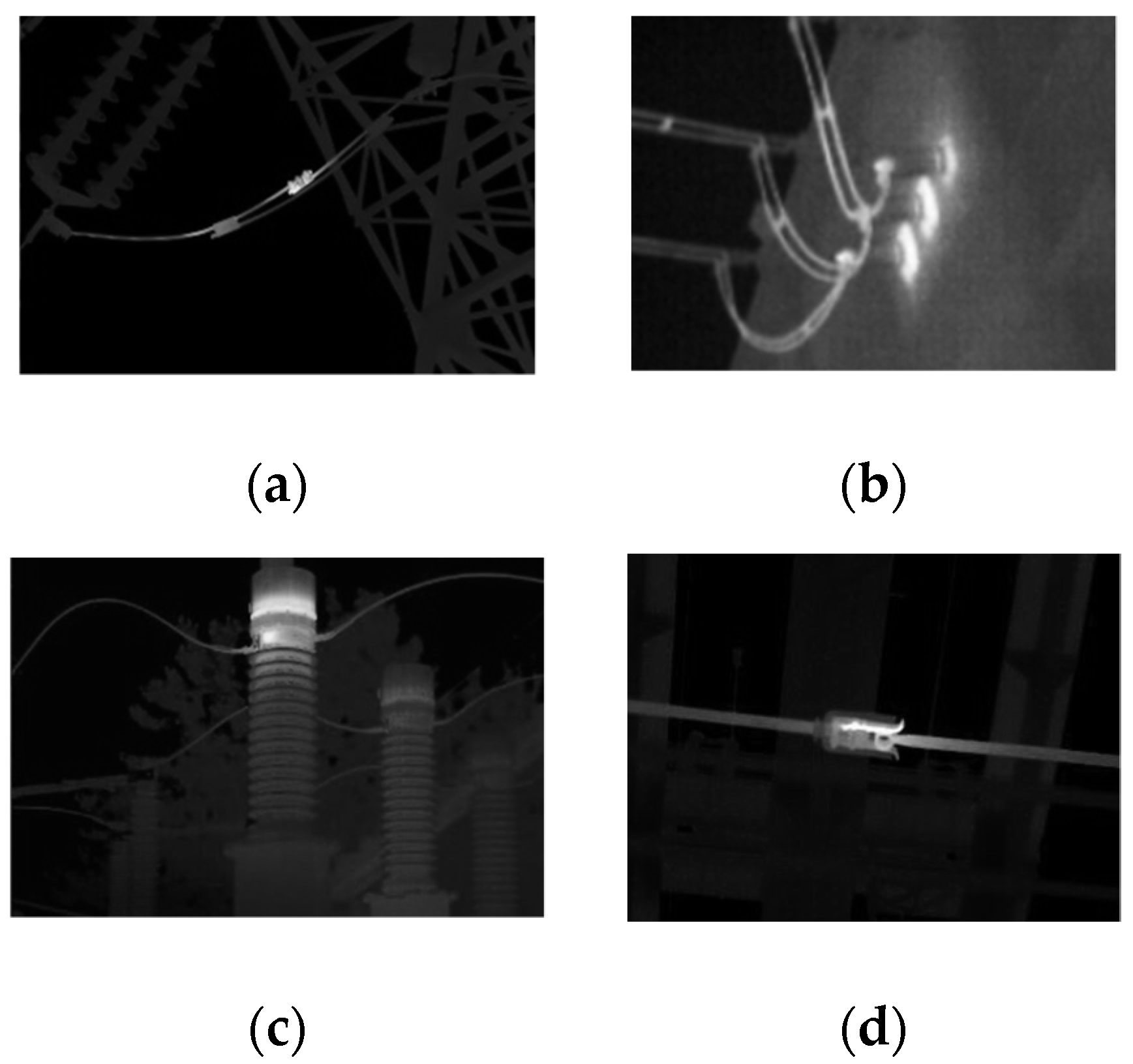

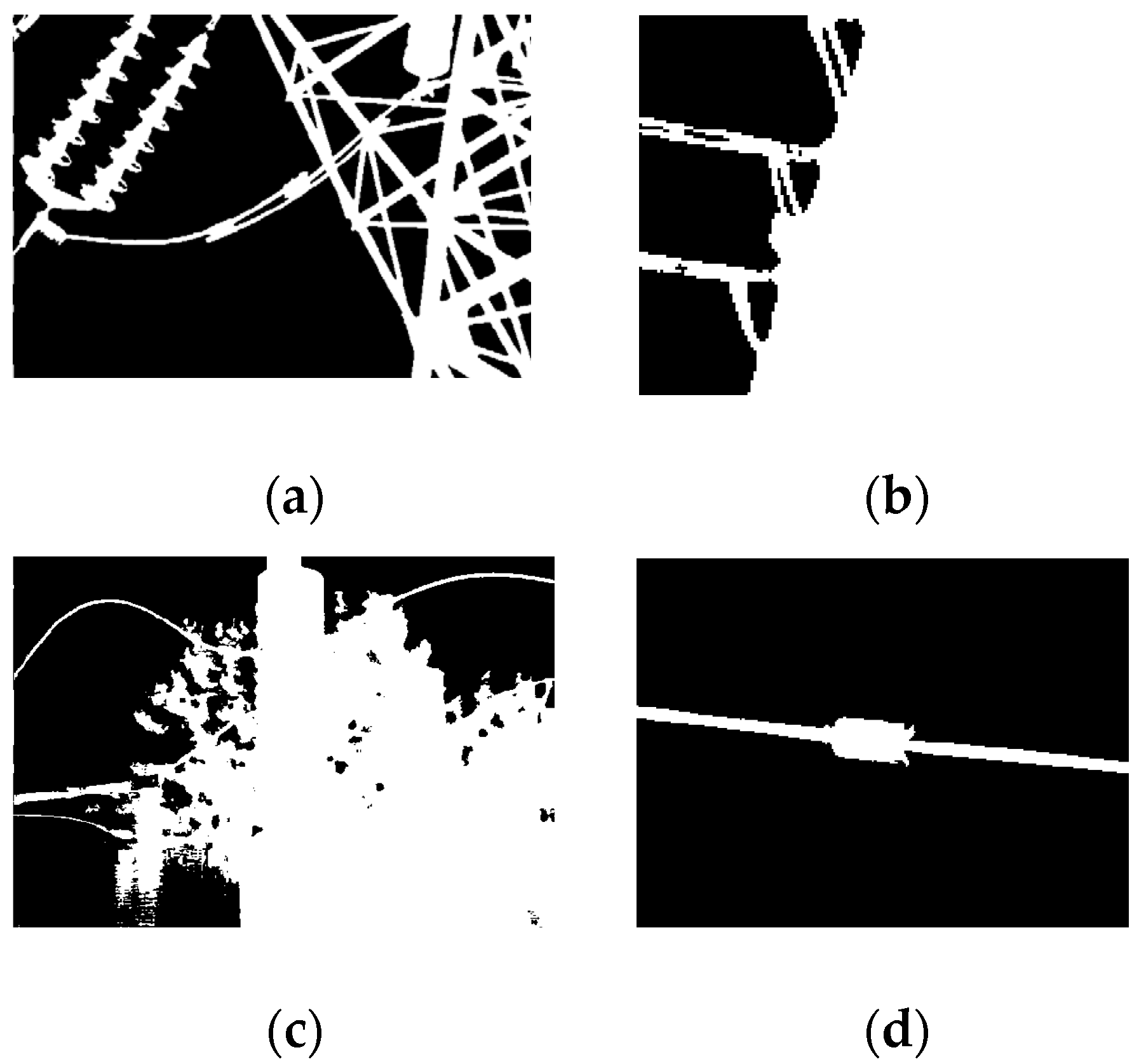

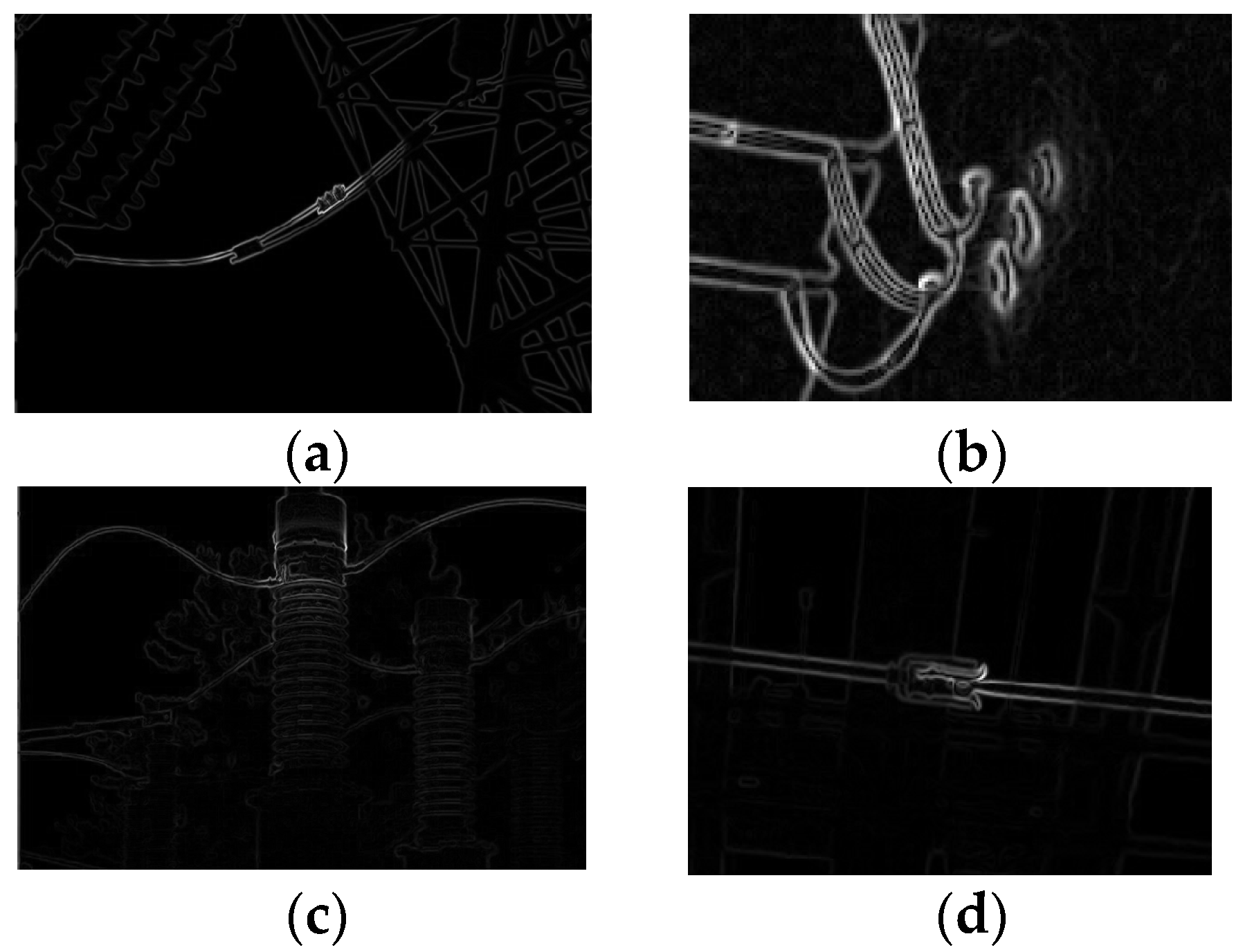

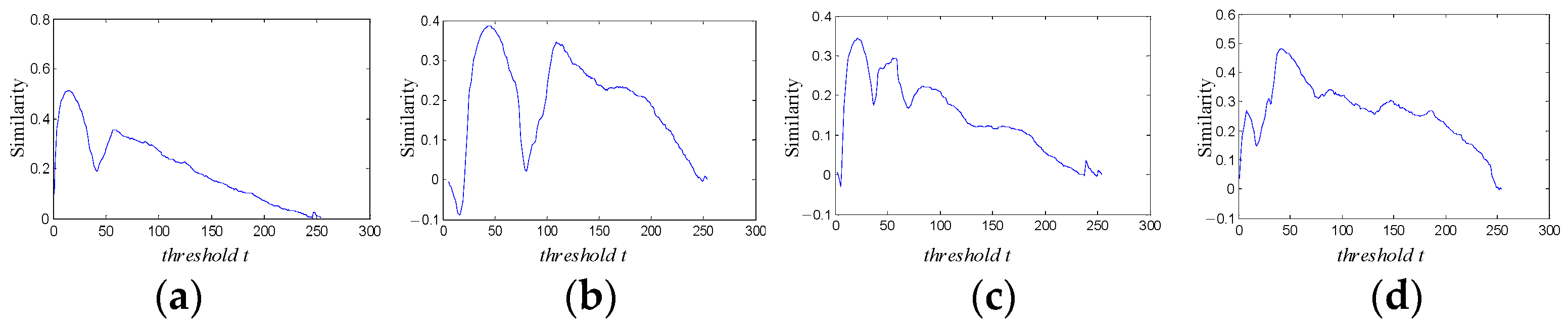

4. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Eckhorn, R.; Reitboeck, H.J.; Arndt, M.; Dicke, P.W. Feature linking via synchronization among distributed assemblies: Simulations of results from cat visual cortex. Neural Comput. 1990, 2, 293–307.
2. Wang, Z.B.; Ma, Y.D.; Cheng, F.Y.; Yang, L.Z. Review of pulse coupled neural networks. Image Vis. Comput. 2010, 28, 5–13.
3. Gao, C.; Zhou, D.G.; Guo, Y.C. Automatic iterative algorithm for image segmentation using a modified pulse-coupled neural network. Neurocomputing 2013, 119, 332–338.
4. Zhou, D.; Gao, C.; Guo, Y.C. Simplified pulse coupled neural network with adaptive multilevel threshold for infrared human image segmentation. J. Comput.-Aided Des. Comput. Graph. 2013, 25, 208–214.
5. Zhou, D.; Chi, M. Pulse-coupled neural network and its optimization for segmentation of electrical faults with infrared thermography. Appl. Soft Comput. 2019, 7, 252–260.
6. Kong, X.W.; Huang, J.; Shi, H. Infrared image multi-threshold segmentation algorithm based on improved pulse coupled neural networks. J. Infrared Millim. Waves 2001, 20, 365–379.
7. Kong, X.W.; Huang, J.; Shi, H. Improved pulse coupled neural network for target segmentation in infrared images. In Proceedings of the SPIE-The International Society for Optical Engineering, Wuhan, China, 10–12 October 2001.
8. Chen, X.J.; Chai, X.D. Infrared image segmentation based on a simplified PCNN. J. Anhui Univ. Nat. Sci. Ed. 2010, 34, 74–77.
9. Ge, L.J.; Liu, H.X.; Li, Y.Z.; Zhang, J. A virtual data collection model of distributed PVs considering spatio-temporal coupling and affine optimization reference. IEEE Trans. Power Syst. 2022, early access.
10. Ge, L.J.; Liu, J.H.; Yan, J.; Rafiq, M.U. Improved Harris hawks optimization for configuration of PV intelligent edge terminals. IEEE Trans. Sustain. Comput. 2022, 7, 631–643.
11. Shi, M.H.; Jiang, S.S.; Wang, H.R.; Xu, B.G. A simplified pulse-coupled neural network for adaptive segmentation of fabric defects. Mach. Vis. Appl. 2009, 20, 131–138.
12. Wei, S.; Hong, Q.; Hou, M.S. Automatic image segmentation based on PCNN with adaptive threshold time constant. Neurocomputing 2011, 74, 1485–1491.
13. Fu, J.C.; Chen, C.C.; Chai, J.W.; Wong, S.T.C.; Li, I.C. Image segmentation by EM-based adaptive pulse coupled neural networks in brain magnetic resonance imaging. Comput. Med. Imaging Graph. 2010, 34, 308–320.
14. Cheng, D.S.; Liu, X.F.; Tang, X.L.; Liu, J.F.; Huang, J.H. Image segmentation method based on an improved PCNN. Chin. High Technol. Lett. 2007, 17, 1228–1233.
15. Kuntimad, G.; Ranganath, H.S. Perfect image segmentation using pulse coupled neural networks. IEEE Trans. Neural Netw. 1999, 10, 591–598.
16. Rava, T.H.; Bettaiah, V.; Ranganath, H.S. Adaptive pulse coupled neural network parameters for image segmentation. World Acad. Sci. Eng. Technol. 2011, 73, 1046–1056.
17. Chen, Y.L.; Park, S.K.; Ma, Y.D.; Ala, R. A new automatic parameter setting method of a simplified PCNN for image segmentation. IEEE Trans. Neural Netw. 2011, 22, 880–892.
18. Fang, Y.; Qi, F.H.; Pei, B.Z. PCNN implementation and applications in image processing. J. Infrared Millim. Waves 2005, 24, 291–295.
19. Gao, C.; Zhou, D.G.; Guo, Y.C. An iterative thresholding segmentation model using a modified pulse coupled neural network. Neural Process. Lett. 2014, 30, 81–95.
20. Zhou, D.G.; Gao, C.; Guo, Y.C. A coarse-to-fine strategy of iterative segmentation using simplified pulse-coupled neural network. Soft Comput. 2014, 18, 557–570.
21. Bi, Y.W.; Qiu, T.S.; Li, X.B.; Guo, Y. Automatic image segmentation based on a simplified pulse coupled neural network. Lect. Notes Comput. Sci. 2004, 3174, 405–410.
22. Zhu, S.W.; Hao, C.Y. An approach for fabric defect image segmentation based on the improved conventional PCNN model. Acta Electron. Sin. 2012, 40, 611–617.
23. Qi, Y.F.; Huo, Y.L.; Zhang, J.S. Automatic image segmentation method based on simplified PCNN and minimum scatter within clusters. J. Optoelectron. Laser 2008, 19, 1258–1260+1264.
24. Peng, Z.M.; Jiang, B.; Xiao, J.; Meng, F.B. A novel method of image segmentation based on parallelized firing PCNN. Acta Autom. Sin. 2008, 34, 1169–1173.
25. Gu, X.D.; Guo, S.D.; Yu, D.H. A new approach for automated image segmentation based on unit-linking PCNN. In Proceedings of the International Conference on Machine Learning and Cybernetics, Beijing, China, 4–5 November 2002.
26. Karvonen, J.A. Baltic Sea ice SAR segmentation and classification using modified pulse-coupled neural networks. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1566–1574.
27. Li, M.; Cai, W.; Li, X.Y. An adaptive image segmentation method based on a modified pulse coupled neural network. Lect. Notes Comput. Sci. 2006, 4221, 471–474.
28. Stewart, R.D.; Fermin, I.; Opper, M. Region growing with pulse-coupled neural networks: An alternative to seeded region growing. IEEE Trans. Neural Netw. 2002, 13, 1557–1562.
29. Lu, Y.F.; Miao, J.; Duan, L.J.; Qiao, Y.H.; Jia, R. A new approach to image segmentation based on simplified region growing PCNN. Appl. Math. Comput. 2008, 205, 807–814.
30. Yan, C.M.; Guo, B.L.; Ma, Y.D.; Zhang, X. New adaptive algorithm for image segmentation using the dual-level PCNN model. J. Optoelectron. Laser 2011, 22, 1102–1106.
31. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.
32. Zou, Y.; Dong, F.; Lei, B. Maximum similarity thresholding. Digit. Image Process. 2014, 28, 120–135.
33. Ahmed, M.N.; Yamany, S.M.; Mohamed, N.; Farag, A.A.; Moriarty, T. A modified fuzzy c-means algorithm for bias field estimation and segmentation of MRI data. IEEE Trans. Med. Imaging 2002, 21, 193–199.
34. Qiyin, W.; Jiandong, X.; Xinhui, R. An adaptive segmentation method of substation equipment infrared image. Infrared Technol. 2016, 38, 770–773.

| Method | Image 1 | Image 2 | Image 3 | Image 4 |
|---|---|---|---|---|
| OTSU | 21 | 59 | 31 | 57 |
| MST | 15 | 45 | 22 | 41 |
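The OTSU baseline in these comparisons refers to Otsu's threshold selection method [31], which picks the gray level that maximises the between-class variance of the image histogram. For readers reproducing that baseline, here is a minimal sketch assuming 8-bit integer intensities supplied as a flat list; the function name `otsu_threshold` is illustrative and not taken from the paper.

```python
from typing import List


def otsu_threshold(pixels: List[int], levels: int = 256) -> int:
    """Otsu's criterion: return the level t that maximises between-class variance.

    Pixels <= t form the background class; pixels > t form the foreground.
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1                      # gray-level histogram (assumes 0 <= p < levels)
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    weight_bg, sum_bg = 0, 0
    best_t, best_between = 0, -1.0
    for t in range(levels):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        weight_fg = total - weight_bg
        if weight_bg == 0:
            continue                      # background class still empty
        if weight_fg == 0:
            break                         # every pixel is already background
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        # Between-class variance scaled by total**2 (the scale does not move the argmax).
        between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if between > best_between:
            best_t, best_between = t, between
    return best_t


if __name__ == "__main__":
    cold_and_hot = [10] * 50 + [200] * 50
    print(otsu_threshold(cold_and_hot))
```

For two equally sized clusters at 10 and 200, every level from 10 to 199 separates them equally well, and the strict `>` comparison keeps the first, so the call returns 10.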

| Method | Mean value | Image 1 | Image 2 | Image 3 | Image 4 |
|---|---|---|---|---|---|
| FCM | v1 | 1.0603 | 21.05 | 1.01730 | 5.7834 |
| | v2 | 41.3490 | 96.8447 | 53.8848 | 31.1222 |
| Proposed method | v1 | 14.1560 | 66.2512 | 30.4860 | 14.9498 |
| | v2 | 132.1046 | 132.1663 | 145.4363 | 129.3911 |
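In the FCM rows, v1 and v2 are most naturally read as the two cluster centres (background and hot-region intensity) produced by fuzzy c-means [33]; this is an interpretation, since the text defining them is not part of this section. The sketch below is standard fuzzy c-means on scalar intensities with fuzzifier m = 2, not the bias-field-corrected variant of [33]; the function name `fcm_1d` and the evenly spaced initialisation are illustrative choices.

```python
from typing import List


def fcm_1d(values: List[float], c: int = 2, m: float = 2.0,
           max_iter: int = 100, tol: float = 1e-6) -> List[float]:
    """Standard fuzzy c-means on scalar intensities; returns the sorted centres."""
    if c < 2:
        raise ValueError("need at least two clusters")
    lo, hi = min(values), max(values)
    if lo == hi:
        return [float(lo)] * c            # flat input: every centre coincides
    # Illustrative initialisation: centres spread evenly over the intensity range.
    centers = [lo + (hi - lo) * i / (c - 1) for i in range(c)]
    exponent = 2.0 / (m - 1.0)
    n = len(values)
    for _ in range(max_iter):
        # Membership u[i][k] of sample k in cluster i.
        u = [[0.0] * n for _ in range(c)]
        for k, x in enumerate(values):
            dists = [abs(x - v) for v in centers]
            if 0.0 in dists:
                u[dists.index(0.0)][k] = 1.0  # sample sits on a centre: crisp membership
                continue
            for i in range(c):
                u[i][k] = 1.0 / sum((dists[i] / d) ** exponent for d in dists)
        # Centre update: mean of the samples weighted by u ** m.
        new_centers = []
        for i in range(c):
            weights = [u[i][k] ** m for k in range(n)]
            total_w = sum(weights)
            if total_w > 0:
                new_centers.append(sum(w * x for w, x in zip(weights, values)) / total_w)
            else:
                new_centers.append(centers[i])  # empty cluster: keep its centre
        shift = max(abs(a - b) for a, b in zip(centers, new_centers))
        centers = new_centers
        if shift < tol:
            break
    return sorted(centers)


if __name__ == "__main__":
    print(fcm_1d([0, 1, 2, 10, 11, 12]))
```

Because memberships are soft, samples from the other cluster still pull each centre slightly, so the centres are close to, but not exactly, the plain class means.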

| Method | Image 1 | Image 2 | Image 3 | Image 4 |
|---|---|---|---|---|
| MST | 0.5120 | 0.3872 | 0.3433 | 0.4815 |
| Proposed | 0.3430 | 0.2419 | 0.2445 | 0.3515 |

| Method | Image 1 | Image 2 | Image 3 | Image 4 |
|---|---|---|---|---|
| OTSU | 0.1402 | 0.0022 | 0.1076 | 0.0042 |
| MST | 3.7802 | 0.2007 | 3.4117 | 0.9979 |
| FCM | 5.6357 | 0.9010 | 13.6103 | 7.7979 |
| Meanshift | 5.5414 | 0.2870 | 7.5225 | 1.1907 |
| Proposed | 3.2133 | 0.1252 | 3.2280 | 0.3673 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tong, J.; Zhang, X.; Cai, C.; He, Z.; Tan, Y.; Chen, Z. Transmission Line Equipment Infrared Diagnosis Using an Improved Pulse-Coupled Neural Network. Sustainability 2023, 15, 639. https://doi.org/10.3390/su15010639
