Online Learning Engagement Recognition Using Bidirectional Long-Term Recurrent Convolutional Networks

Abstract

1. Introduction

1.1. Research Background

1.2. Learning Engagement and Its Measurement Methods

1.3. Video-Based Recognition of Learning Engagement

1.4. Dataset for Engagement Recognition

1.5. Problem Statement

- I. How can a more realistic dataset of online learning engagement be constructed, given the lack of publicly available datasets?

- II. How can deep learning-based automatic recognition of learning engagement be improved for practical applications?

- III. How should learning engagement results help teachers develop teaching intervention strategies?

1.6. Contributions

- We created a dataset for the learning engagement of Chinese students that is more quantifiable and interpretable, and that is annotated with multiple engagement cues. The dataset consists of online learning videos of Chinese students, each 10 s long.

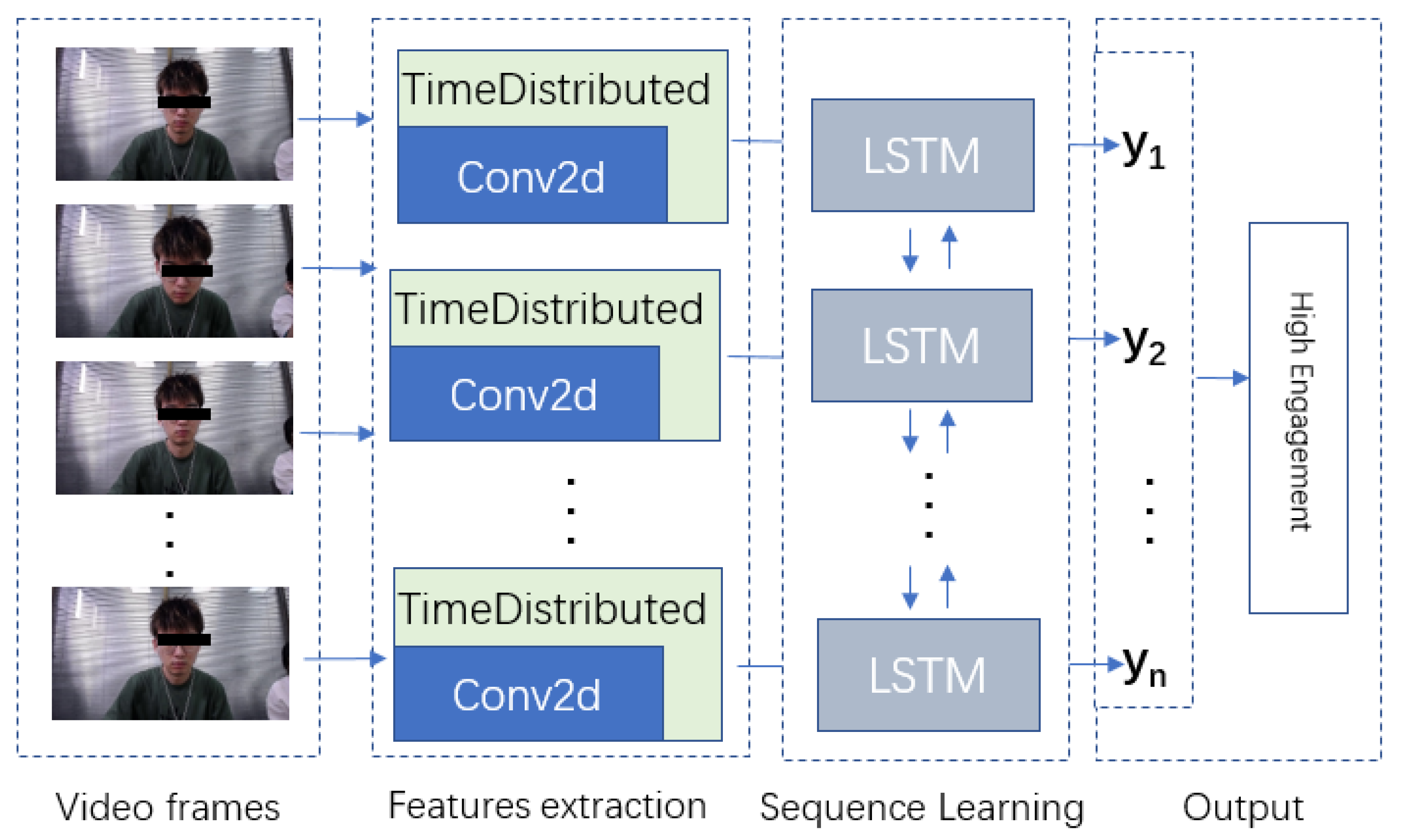

- We introduced the Bi-directional Long-Term Recurrent Convolutional Neural Networks (BiLRCN) framework for recognizing engagement from videos. The method captures the sequential features of learning engagement using the TimeDistributed layer, and its effectiveness has been verified on the self-built dataset.

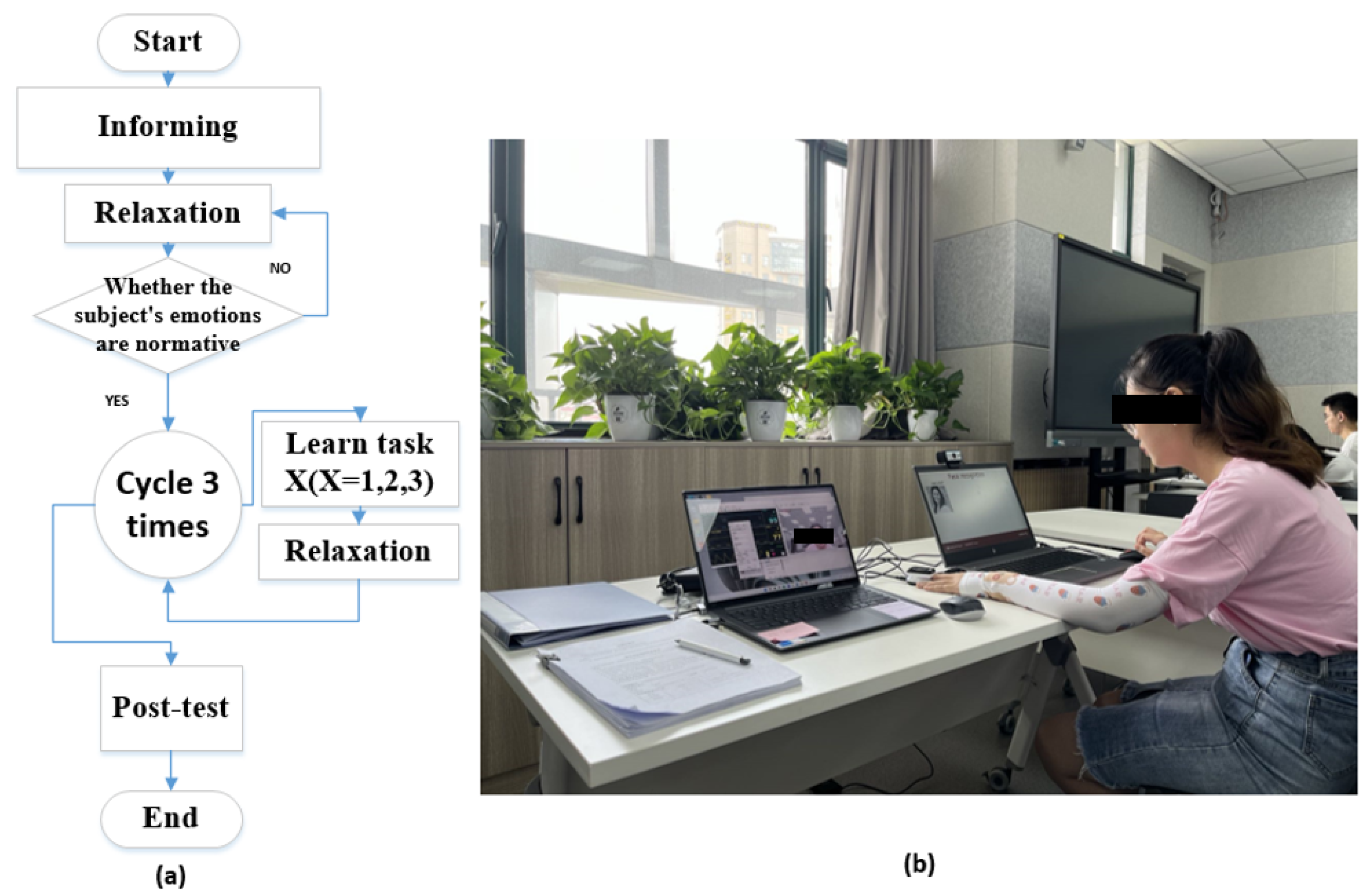

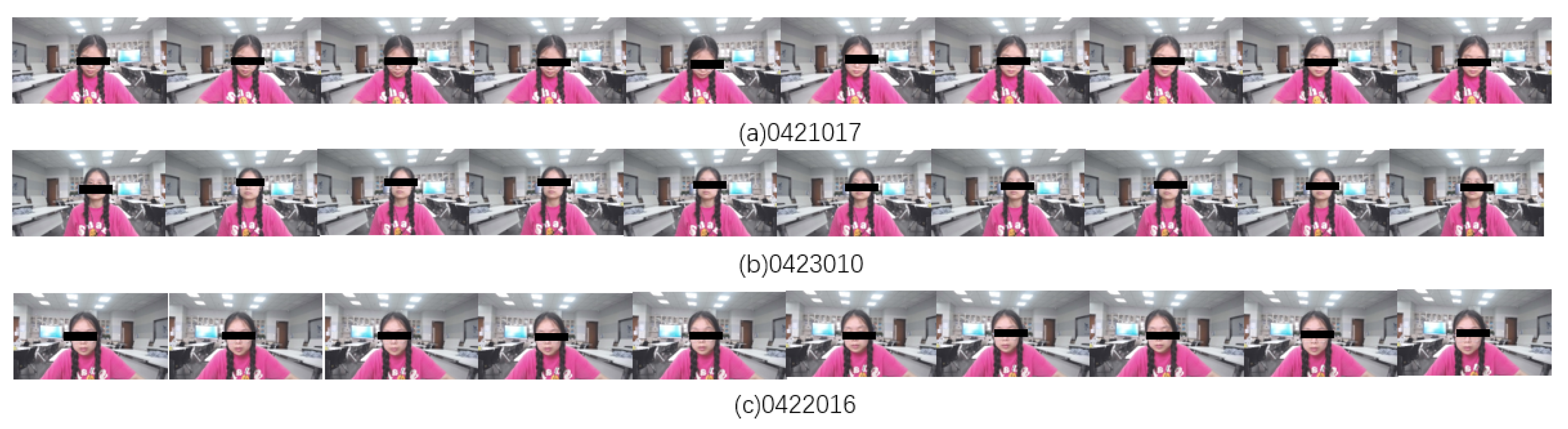
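The BiLRCN contribution above pairs a per-frame feature extractor, applied to every frame through a TimeDistributed-style wrapper, with a recurrent layer that reads the sequence in both directions. The data flow can be sketched in plain Python as follows. This is a toy illustration, not the authors' network: `mean_intensity` is a hypothetical stand-in for the convolutional feature extractor, `recurrent_pass` is a simple tanh recurrence standing in for an LSTM, and all names and constants are assumptions.

```python
import math


def time_distributed(frame_fn, clip):
    """Apply the same per-frame function to every frame of a clip."""
    return [frame_fn(frame) for frame in clip]


def mean_intensity(frame):
    """Hypothetical stand-in for a CNN: one scalar feature per frame."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def recurrent_pass(features, decay=0.5):
    """Toy recurrence h_t = tanh(x_t + decay * h_{t-1}); stand-in for an LSTM."""
    h, states = 0.0, []
    for x in features:
        h = math.tanh(x + decay * h)
        states.append(h)
    return states


def bidirectional(features, decay=0.5):
    """Run the recurrence forward and backward, then pair the states per step."""
    forward = recurrent_pass(features, decay)
    backward = recurrent_pass(features[::-1], decay)[::-1]
    return list(zip(forward, backward))


# A made-up clip of three 2x2 grayscale frames.
clip = [
    [[0.0, 0.2], [0.4, 0.6]],
    [[0.1, 0.1], [0.1, 0.1]],
    [[0.9, 0.7], [0.5, 0.3]],
]

features = time_distributed(mean_intensity, clip)  # one feature per frame
states = bidirectional(features)                   # (forward, backward) per frame
print(states)
```

Each time step ends up with a forward state that summarizes the earlier frames and a backward state that summarizes the later ones, which is what lets a bidirectional model use context from both sides of a frame.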

2. Dataset Construction

2.1. Data Collection

- Watch a 1 min 30 s medical video, then answer one easy multiple-choice question about its content within the allotted 4 min;

- Read material on machine learning (accuracy and recall), then complete 9 challenging calculation questions based on the material within an 8-min time limit;

- Watch a 6-min English video on the development of facial recognition, then answer two multiple-choice questions about its content within a 7-min time limit.

2.2. Data Annotation

3. Online Learning Engagement Recognition Method

3.1. Features Extraction

3.2. Sequence Learning

4. Experiment

4.1. Experimental Setting

4.2. Evaluation Metrics

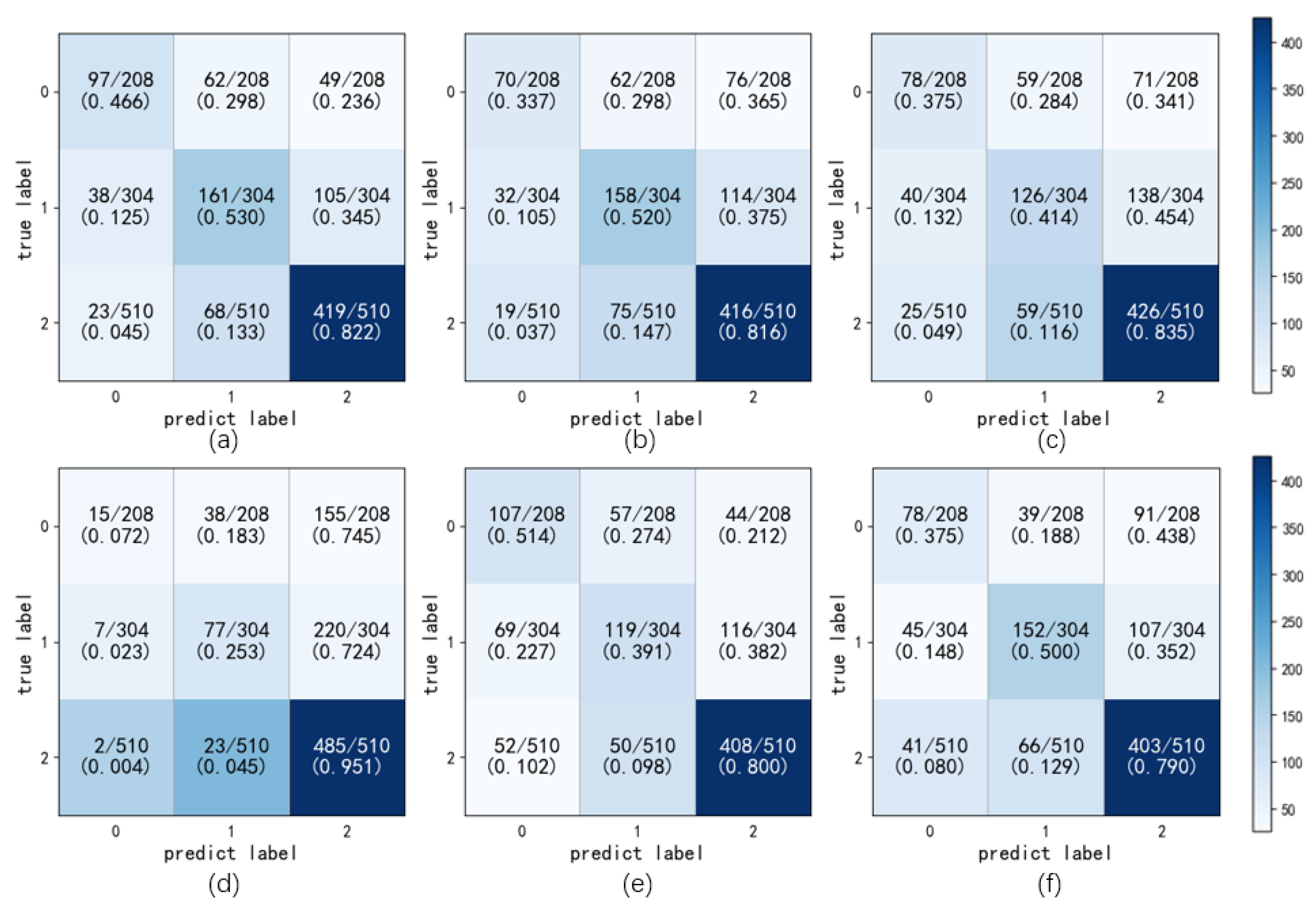

4.3. Experimental Results

5. Discussion

5.1. Discussion of Experimental Results

5.2. Discussion of Learning Engagement Application

5.3. Discussion of Future Development

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ladino Nocua, A.C.; Cruz Gonzalez, J.P.; Castiblanco Jimenez, I.A.; Gomez Acevedo, J.S.; Marcolin, F.; Vezzetti, E. Assessment of Cognitive Student Engagement Using Heart Rate Data in Distance Learning during COVID-19. Educ. Sci. 2021, 11, 540.

2. Pirrone, C.; Varrasi, S.; Platania, G.; Castellano, S. Face-to-Face and Online Learning: The Role of Technology in Students’ Metacognition. CEUR Workshop Proc. 2021, 2817, 1–10.

3. Mubarak, A.A.; Cao, H.; Zhang, W. Prediction of students’ early dropout based on their interaction logs in online learning environment. Interact. Learn. Environ. 2020, 30, 1414–1433.

4. Wang, K.; Zhang, L.; Ye, L. A nationwide survey of online teaching strategies in dental education in China. J. Dent. Educ. 2021, 85, 128–134.

5. Fei, M.; Yeung, D.Y. Temporal Models for Predicting Student Dropout in Massive Open Online Courses. In Proceedings of the 2015 IEEE International Conference on Data Mining Workshop (ICDMW), Atlantic City, NJ, USA, 14–17 November 2015; pp. 256–263.

6. Pirrone, C.; Di Corrado, D.; Privitera, A.; Castellano, S.; Varrasi, S. Students’ Mathematics Anxiety at Distance and In-Person Learning Conditions during COVID-19 Pandemic: Are There Any Differences? An Exploratory Study. Educ. Sci. 2022, 12, 379.

7. Liu, S.; Liu, S.; Liu, Z.; Peng, X.; Yang, Z. Automated detection of emotional and cognitive engagement in MOOC discussions to predict learning achievement. Comput. Educ. 2022, 181, 104461.

8. Sutarto, S.; Sari, D.; Fathurrochman, I. Teacher strategies in online learning to increase students’ interest in learning during COVID-19 pandemic. J. Konseling Dan Pendidik. 2020, 8, 129.

9. Hoofman, J.; Secord, E. The Effect of COVID-19 on Education. Pediatr. Clin. N. Am. 2021, 68, 1071–1079.

10. El-Sayad, G.; Md Saad, N.H.; Thurasamy, R. How higher education students in Egypt perceived online learning engagement and satisfaction during the COVID-19 pandemic. J. Comput. Educ. 2021, 8, 527–550.

11. You, W. Research on the Relationship between Learning Engagement and Learning Completion of Online Learning Students. Int. J. Emerg. Technol. Learn. (iJET) 2022, 17, 102–117.

12. Shen, J.; Yang, H.; Li, J.; Cheng, Z. Assessing learning engagement based on facial expression recognition in MOOC’s scenario. Multimed. Syst. 2022, 28, 469–478.

13. Meier, S.T.; Schmeck, R.R. The Burned-Out College Student: A Descriptive Profile. J. Coll. Stud. Pers. 1985, 26, 63–69.

14. Fredricks, J.A.; Blumenfeld, P.C.; Paris, A.H. School Engagement: Potential of the Concept, State of the Evidence. Rev. Educ. Res. 2004, 74, 59–109.

15. Lei, H.; Cui, Y.; Zhou, W. Relationships between student engagement and academic achievement: A meta-analysis. Soc. Behav. Personal. Int. J. 2018, 46, 517–528.

16. Greene, B.A. Measuring Cognitive Engagement With Self-Report Scales: Reflections From Over 20 Years of Research. Educ. Psychol. 2015, 50, 14–30.

17. Dewan, M.; Murshed, M.; Lin, F. Engagement detection in online learning: A review. Smart Learn. Environ. 2019, 6, 1.

18. Hu, M.; Li, H. Student Engagement in Online Learning: A Review. In Proceedings of the 2017 International Symposium on Educational Technology (ISET), Hong Kong, China, 27–29 June 2017; pp. 39–43.

19. Zaletelj, J.; Košir, A. Predicting students’ attention in the classroom from Kinect facial and body features. EURASIP J. Image Video Process. 2017, 2017, 80.

20. Sümer, Ö.; Goldberg, P.; D’Mello, S.; Gerjets, P.; Trautwein, U.; Kasneci, E. Multimodal Engagement Analysis from Facial Videos in the Classroom. IEEE Trans. Affect. Comput. 2021.

21. Zhang, Z.; Li, Z.; Liu, H.; Cao, T.; Liu, S. Data-driven Online Learning Engagement Detection via Facial Expression and Mouse Behavior Recognition Technology. J. Educ. Comput. Res. 2020, 58, 63–86.

22. Standen, P.J.; Brown, D.J.; Taheri, M.; Galvez Trigo, M.J.; Boulton, H.; Burton, A.; Hallewell, M.J.; Lathe, J.G.; Shopland, N.; Blanco Gonzalez, M.A.; et al. An evaluation of an adaptive learning system based on multimodal affect recognition for learners with intellectual disabilities. Br. J. Educ. Technol. 2020, 51, 1748–1765.

23. Apicella, A.; Arpaia, P.; Frosolone, M.; Improta, G.; Moccaldi, N.; Pollastro, A. EEG-based measurement system for monitoring student engagement in learning 4.0. Sci. Rep. 2022, 12, 5857.

24. Gupta, A.; D’Cunha, A.; Awasthi, K.; Balasubramanian, V. DAiSEE: Towards User Engagement Recognition in the Wild. arXiv 2016, arXiv:1609.01885.

25. Huang, T.; Mei, Y.; Zhang, H.; Liu, S.; Yang, H. Fine-grained Engagement Recognition in Online Learning Environment. In Proceedings of the 2019 IEEE 9th International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 12–14 July 2019; pp. 338–341.

26. Abedi, A.; Khan, S.S. Improving state-of-the-art in Detecting Student Engagement with Resnet and TCN Hybrid Network. arXiv 2021, arXiv:2104.10122.

27. Liao, J.; Liang, Y.; Pan, J. Deep facial spatiotemporal network for engagement prediction in online learning. Appl. Intell. 2021, 51, 6609–6621.

28. Mehta, N.K.; Prasad, S.S.; Saurav, S.; Saini, R.; Singh, S. Three-Dimensional DenseNet Self-Attention Neural Network for Automatic Detection of Student’s Engagement. Appl. Intell. 2022, 52, 13803–13823.

29. Kaur, A.; Mustafa, A.; Mehta, L.; Dhall, A. Prediction and Localization of Student Engagement in the Wild. In Proceedings of the 2018 Digital Image Computing: Techniques and Applications (DICTA), Canberra, Australia, 10–13 December 2018; pp. 1–8.

30. Whitehill, J.; Serpell, Z.; Lin, Y.C.; Foster, A.; Movellan, J.R. The Faces of Engagement: Automatic Recognition of Student Engagement from Facial Expressions. IEEE Trans. Affect. Comput. 2014, 5, 86–98.

31. Donahue, J.; Hendricks, L.A.; Rohrbach, M.; Venugopalan, S.; Guadarrama, S.; Saenko, K.; Darrell, T. Long-term Recurrent Convolutional Networks for Visual Recognition and Description. arXiv 2014, arXiv:1411.4389.

32. Yan, S.; Adhikary, A. Stress Recognition in Thermal Videos Using Bi-Directional Long-Term Recurrent Convolutional Neural Networks. In Neural Information Processing: Proceedings of the 28th International Conference ICONIP 2021, Sanur, Bali, Indonesia, 8–12 December 2021; Mantoro, T., Lee, M., Ayu, M.A., Wong, K.W., Hidayanto, A.N., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 491–501.

33. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. arXiv 2014, arXiv:1412.0767.

34. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Los Alamitos, CA, USA, 2017; pp. 1800–1807.

35. Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast Networks for Video Recognition. arXiv 2018, arXiv:1812.03982.

36. Parsons, J.; Taylor, L. Improving Student Engagement. Curr. Issues Educ. 2011, 14, 132.

37. Bergdahl, N. Engagement and disengagement in online learning. Comput. Educ. 2022, 188, 104561.

38. Peters, M.; White, E.; Besley, T.; Locke, K.; Redder, B.; Novak, R.; Gibbons, A.; O’Neill, J.; Tesar, M.; Sturm, S. Video ethics in educational research involving children: Literature review and critical discussion. Educ. Philos. Theory 2020, 53, 1–9.

| Research | Year | Data | Method | Setting | Accuracy |
|---|---|---|---|---|---|
| Gupta et al. [24] | 2016 | DAiSEE | LRCN | Online learning | 57.9% |
| Zaletelj et al. [19] | 2017 | Posture, expression | DT, KNN | Watching lectures | - |
| Kaur et al. [29] | 2018 | In-the-wild | LSTM | Watching videos | - |
| Huang et al. [25] | 2019 | DAiSEE | DERN | Online learning | 60.0% |
| Abedi et al. [26] | 2021 | DAiSEE | ResTCN | Online learning | 63.9% |
| Sümer et al. [20] | 2021 | Posture, expression | SVM, DNN | Traditional classroom | - |
| Liao et al. [27] | 2021 | DAiSEE | DFSTN | Online learning | 58.84% |
| Mehta et al. [28] | 2022 | DAiSEE, EmotiW | DenseNet | Online learning | 63.59% |

| Cue | Student’s Performance |
|---|---|
| Low Engagement | The gaze drifts or leaves the computer screen. The gaze is dull, the expression is sleepy, the student blinks more often, or the student repeatedly raises, lowers, or turns the head. |
| Engagement | The line of sight is mostly on the screen and eye movement is small; when the line of sight leaves the screen, it returns quickly. The number of blinks is high, the expression is normal, and the head and body posture is more upright. After a clear head-down typing action, the line of sight returns to the screen quickly. |
| High Engagement | The head posture remains stable and the eyes are focused on the screen: wide open, staring at the screen for a long time, or moving in a regular pattern. The expression is more serious, with a tight frown or pursed mouth, and the body leans forward noticeably. |

| Label | Train | Valid | Test | Sum |
|---|---|---|---|---|
| 0 | 766 | 242 | 208 | 1216 |
| 1 | 1162 | 403 | 304 | 1869 |
| 2 | 1982 | 650 | 510 | 3142 |
| Sum | 3910 | 1295 | 1022 | 6227 |
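The split above is imbalanced across the three labels, which matters when reading accuracy figures. As a rough sketch, the training counts from the table can be turned into class shares and inverse-frequency class weights. The weighting formula (total divided by the number of classes times the class count) is a common heuristic and an assumption here; the source does not say whether the authors reweighted classes.

```python
# Training-set counts per label, copied from the Train column of the table.
train_counts = {0: 766, 1: 1162, 2: 1982}

total = sum(train_counts.values())
num_classes = len(train_counts)

# Share of the training set held by each label.
shares = {label: count / total for label, count in train_counts.items()}

# Inverse-frequency ("balanced") weights: rarer labels get larger weights.
class_weights = {
    label: total / (num_classes * count)
    for label, count in train_counts.items()
}

for label in sorted(train_counts):
    print(f"label {label}: share={shares[label]:.3f}, "
          f"weight={class_weights[label]:.3f}")
```

Label 2 accounts for roughly half of the training clips, so a classifier that always predicts label 2 would already score about 50% accuracy on similarly distributed data.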

| Method | Accuracy | Precision (0) | Precision (1) | Precision (2) | Recall (0) | Recall (1) | Recall (2) |
|---|---|---|---|---|---|---|---|
| BiLRCN (ours) | 66.24% | 46.63% | 55.32% | 73.12% | 61.39% | 52.96% | 82.16% |
| LRCN [31] | 63.01% | 57.85% | 53.56% | 68.65% | 33.65% | 51.97% | 81.57% |
| ResTCN [26] | 61.65% | 54.55% | 51.46% | 67.09% | 37.50% | 41.45% | 83.53% |
| C3D [33] | 56.46% | 62.50% | 55.85% | 56.40% | 7.21% | 25.33% | 95.10% |
| Xception [34] | 62.04% | 46.93% | 52.65% | 71.83% | 51.44% | 39.14% | 80.00% |
| SlowFast [35] | 61.94% | 47.56% | 61.09% | 67.05% | 37.50% | 50.00% | 79.02% |
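One way to sanity-check a row of this table is to recombine per-class recall into overall accuracy. The sketch below assumes that the last three columns are per-class recall for labels 0, 1, and 2, and that the test set holds 208, 304, and 510 clips for those labels (304 being the label-1 count implied by the row and column totals of the split table). Both are assumptions about the tables, not statements from the authors, and `accuracy_from_recall` is a hypothetical helper name.

```python
def accuracy_from_recall(recalls, supports):
    """Overall accuracy as the support-weighted mean of per-class recall."""
    if len(recalls) != len(supports):
        raise ValueError("recalls and supports must have the same length")
    correct = sum(r * n for r, n in zip(recalls, supports))
    return correct / sum(supports)


# Assumed test-set sizes for labels 0, 1, 2.
test_supports = [208, 304, 510]

# Last three columns of the LRCN row, read as recall for labels 0, 1, 2.
lrcn_recalls = [0.3365, 0.5197, 0.8157]

lrcn_accuracy = accuracy_from_recall(lrcn_recalls, test_supports)
print(f"LRCN accuracy from recall: {lrcn_accuracy:.2%}")
```

Under these assumptions the result agrees with the 63.01% accuracy listed for LRCN.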

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, Y.; Wei, Y.; Shi, Y.; Li, X.; Tian, Y.; Zhao, Z. Online Learning Engagement Recognition Using Bidirectional Long-Term Recurrent Convolutional Networks. Sustainability 2023, 15, 198. https://doi.org/10.3390/su15010198
