Research on Parking Space Status Recognition Method Based on Computer Vision

Abstract

1. Introduction

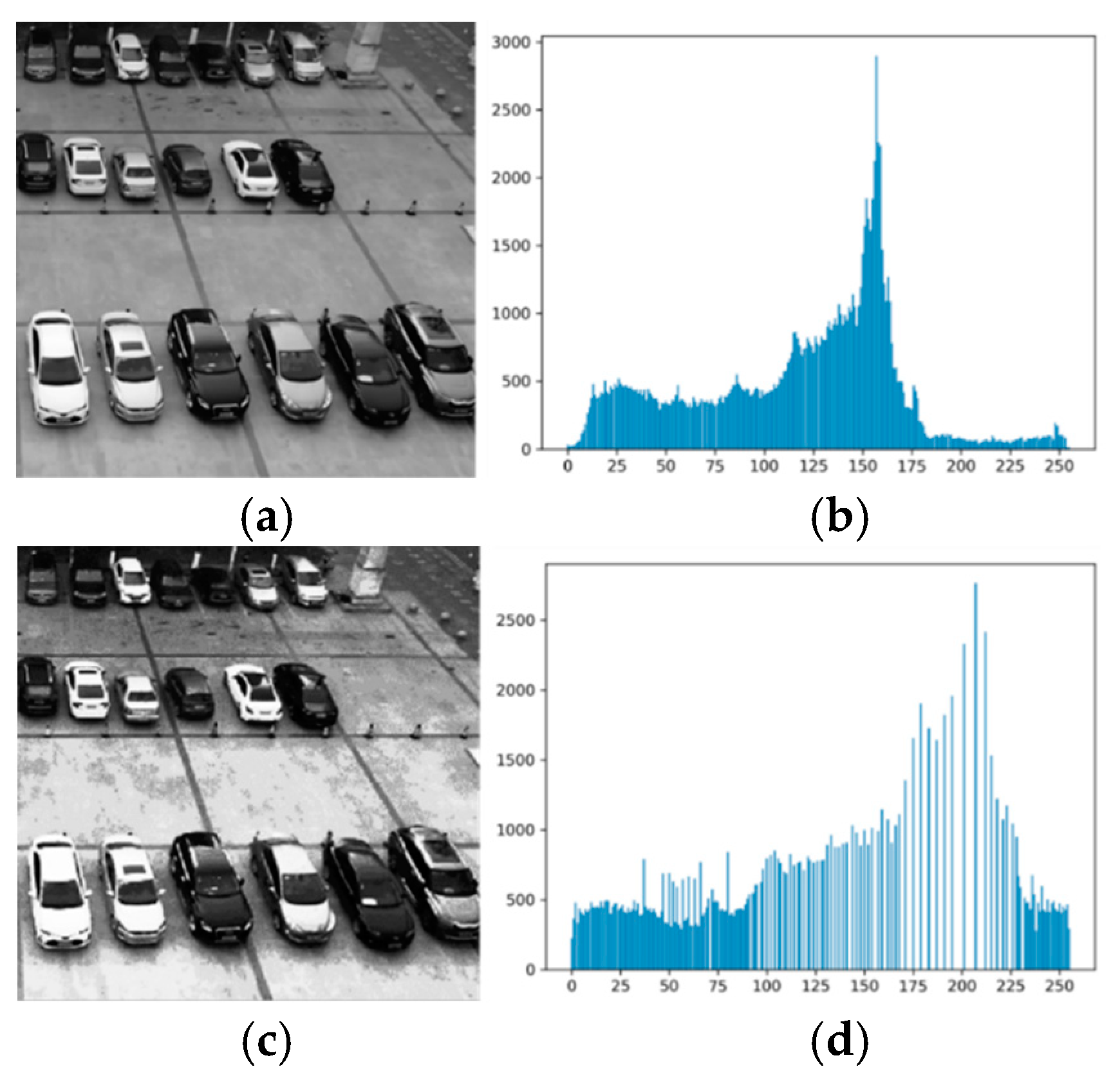

2. Image Pre-Processing

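Grayscale conversion computes the gray value of each pixel from the color components at the same point. The quantities involved are:

- the gray value of the image at a given pixel coordinate;

- the red component at that point;

- the green component at that point;

- the blue component at that point.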
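The grayscale step computes each gray value from the red, green, and blue components at the same point. The weights used in the paper are not given in this section, so the following is only a minimal sketch. It assumes the common weighted-average form with the ITU-R BT.601 luma weights (0.299, 0.587, 0.114). `to_gray` and `BT601_WEIGHTS` are illustrative names.

```python
import numpy as np

# Assumed weights: ITU-R BT.601 luma coefficients, a common choice for the
# weighted-average grayscale method. The paper's own weights are not shown here.
BT601_WEIGHTS = (0.299, 0.587, 0.114)


def to_gray(rgb: np.ndarray, weights=BT601_WEIGHTS) -> np.ndarray:
    """Convert an H x W x 3 RGB image to an H x W 8-bit gray image.

    Each output pixel is w_r * R + w_g * G + w_b * B at the same coordinates.
    """
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB array")
    w_r, w_g, w_b = weights
    r = rgb[..., 0].astype(np.float64)
    g = rgb[..., 1].astype(np.float64)
    b = rgb[..., 2].astype(np.float64)
    gray = w_r * r + w_g * g + w_b * b
    # Round and clip back to the 8-bit range used by typical video frames.
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```

Frames read with OpenCV are stored in BGR order. Reverse the last axis (`frame[..., ::-1]`) before calling `to_gray`, or use `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`, which applies the same BT.601 weights.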

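Smoothing filtering (noise reduction) computes each output gray value from a neighborhood in the original image. The quantities involved are:

- the gray value at a given point of the output image;

- a neighborhood around that point in the original image;

- a pixel within that neighborhood, and its gray value.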
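The quantities above describe a neighborhood filter: the output gray value at a point is computed from the gray values of the pixels in a neighborhood of the original image. This section does not state which filter the paper uses. The sketch below assumes a median filter, where the output is the median of the neighborhood's gray values. This is a standard way to suppress impulse noise while preserving edges. The window size and the edge-replicating border are also assumptions.

```python
import numpy as np


def median_filter(gray: np.ndarray, size: int = 3) -> np.ndarray:
    """Median-filter a 2-D gray image with a size x size square neighborhood.

    Each output pixel is the median of the gray values in the neighborhood
    centered on the same position in the original image. Borders are handled
    by replicating edge pixels (an assumption; other paddings exist).
    """
    if size < 1 or size % 2 == 0:
        raise ValueError("size must be a positive odd integer")
    r = size // 2
    padded = np.pad(gray, r, mode="edge")
    h, w = gray.shape
    # Stack one shifted copy of the image per neighborhood offset.
    layers = [
        padded[dy:dy + h, dx:dx + w]
        for dy in range(size)
        for dx in range(size)
    ]
    # With an odd window the median is an actual pixel value, so the
    # original dtype is preserved exactly.
    return np.median(np.stack(layers), axis=0).astype(gray.dtype)
```

A 3 × 3 window removes isolated noise pixels and leaves step edges in place. Larger windows suppress more noise at the cost of fine detail. OpenCV provides an optimized median filter as `cv2.medianBlur`.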

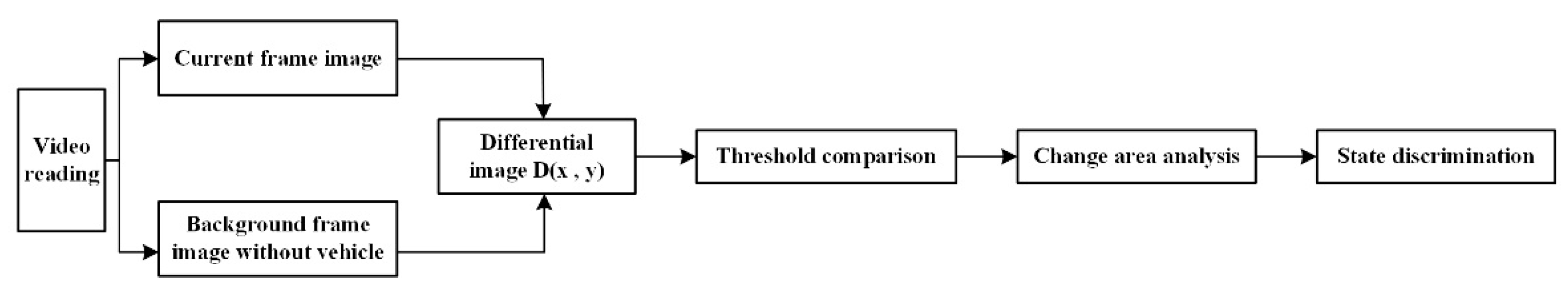

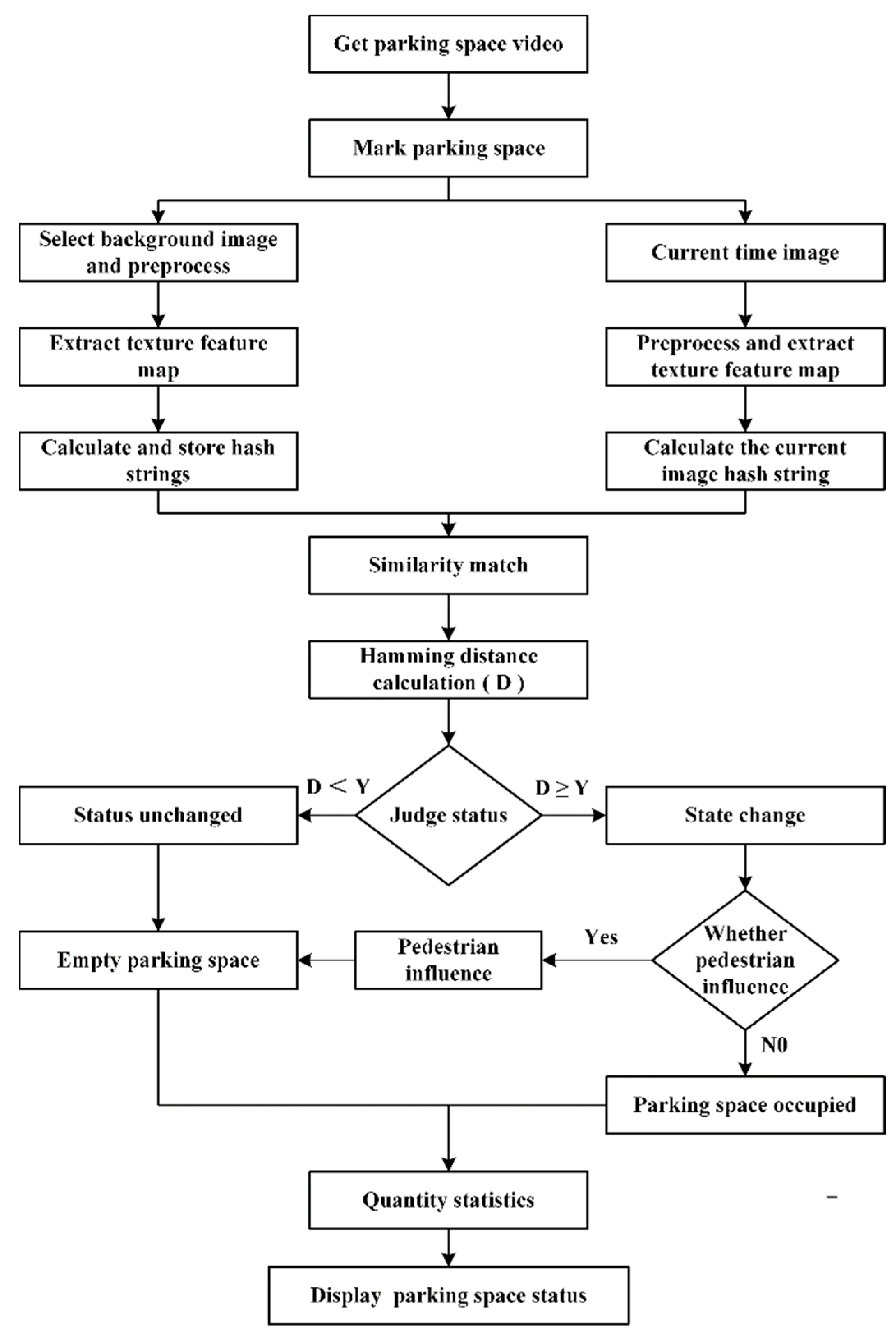

3. Improved Background Difference Method

3.1. Principle of Improved Background Difference Method

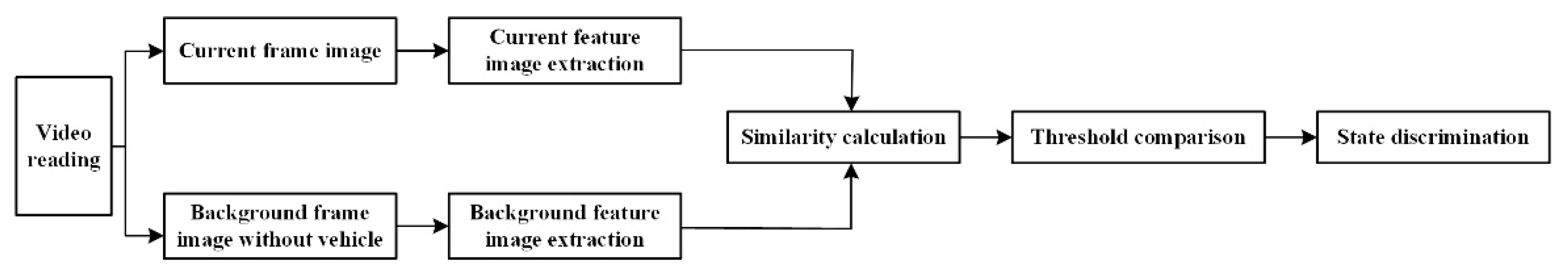

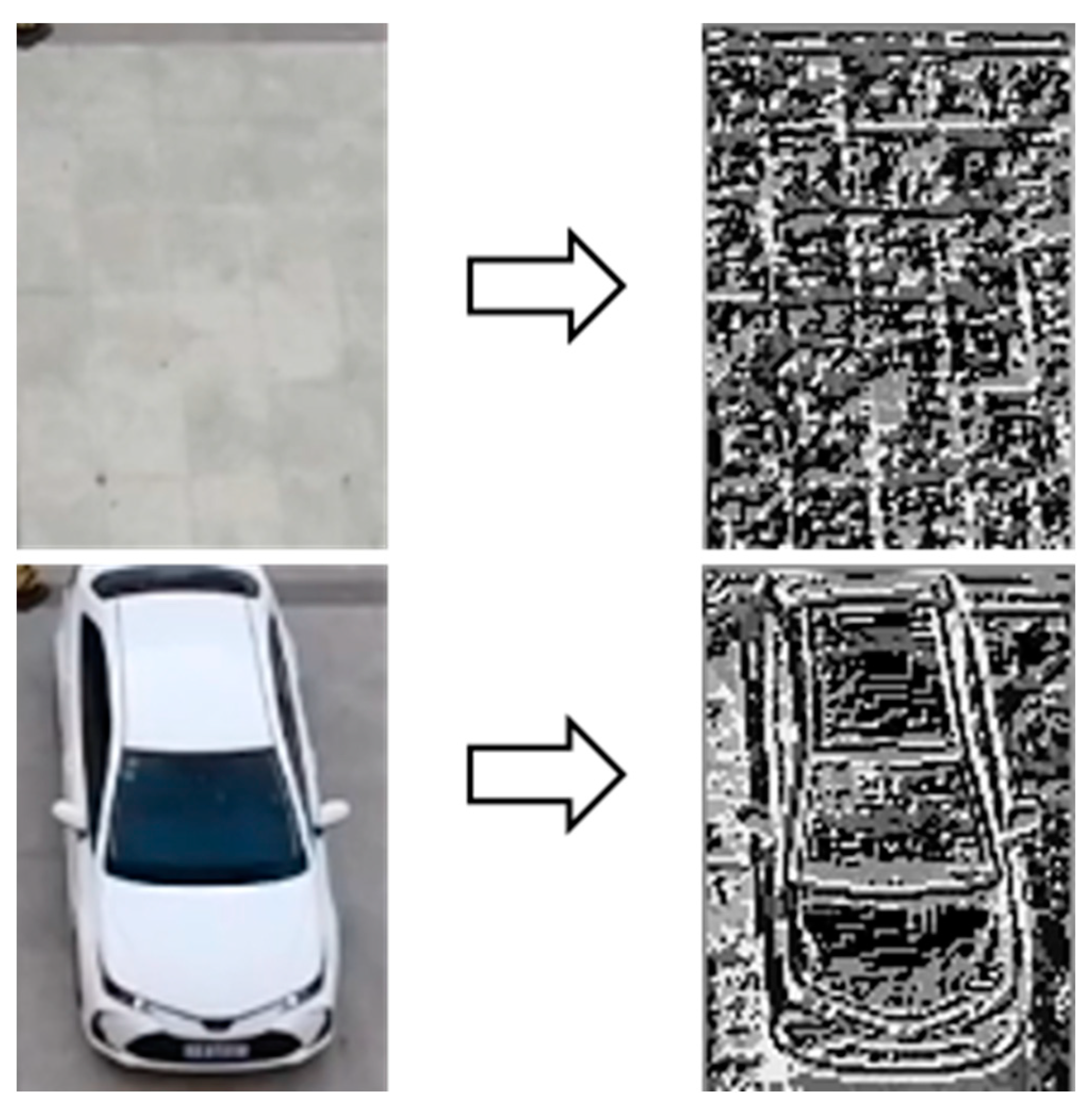
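The improved method builds on the classic background difference method. In the classic method, a pixel is marked as changed when its gray value differs from the background image by more than a threshold. According to the Conclusions, the improvement adds texture features from the LBP operator, but the details are not given here. The sketch below therefore shows only the classic baseline. The threshold of 30 gray levels is hypothetical.

```python
import numpy as np


def background_difference(frame: np.ndarray, background: np.ndarray,
                          threshold: float = 30.0) -> np.ndarray:
    """Classic background difference: mask of |frame - background| > threshold."""
    if frame.shape != background.shape:
        raise ValueError("frame and background must have the same shape")
    # Cast to a signed type so subtracting uint8 images cannot wrap around.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    return diff > threshold


def changed_fraction(mask: np.ndarray) -> float:
    """Fraction of pixels flagged as changed, from 0.0 to 1.0."""
    return float(mask.mean())
```

The test is purely per-pixel intensity. As a result, a uniform brightening of 40 gray levels flags every pixel as changed even though nothing entered the scene. Illumination is one of the error sources the paper sets out to suppress.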

3.2. LBP Operator

The LBP operator is defined in terms of:

- N, the number of sampling points;

- the gray value of the n-th sampling pixel;

- the gray value of the central pixel.
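The definitions above (N sampling points, the gray value of each sampling pixel, and the gray value of the central pixel) are the ingredients of the basic LBP operator. Writing g_n for the n-th sampling pixel and g_c for the center (notation chosen for this sketch), LBP compares each sampling pixel with the center and packs the results into an N-bit code: sum over n of s(g_n − g_c)·2^n, where s(x) = 1 if x ≥ 0 and 0 otherwise. The sketch assumes N = 8 on a 3 × 3 neighborhood with a clockwise bit order. The paper's radius and bit order are not given here.

```python
import numpy as np

# Offsets (dy, dx) of the N = 8 sampling points on a 3 x 3 ring, listed
# clockwise from the top-left neighbor. The start point and direction are
# assumptions; any fixed order gives a valid LBP code.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp(gray: np.ndarray) -> np.ndarray:
    """Basic 8-neighbor LBP code for each interior pixel of a 2-D image.

    code = sum over n of s(g_n - g_c) * 2**n, with s(x) = 1 if x >= 0 else 0.
    Border pixels lack a full neighborhood and are dropped, so the output
    has shape (H - 2) x (W - 2).
    """
    g = gray.astype(np.int32)
    h, w = g.shape
    center = g[1:h - 1, 1:w - 1]
    codes = np.zeros_like(center)
    for n, (dy, dx) in enumerate(OFFSETS):
        # The same-shaped view of each pixel's n-th neighbor.
        neighbor = g[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes += (neighbor >= center).astype(np.int32) << n
    return codes.astype(np.uint8)
```

Each bit depends only on the sign of g_n − g_c. Adding a constant to every pixel therefore leaves the codes unchanged (barring saturation), which makes LBP texture less sensitive to uniform lighting changes than raw intensity.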

3.3. Image Similarity Calculation

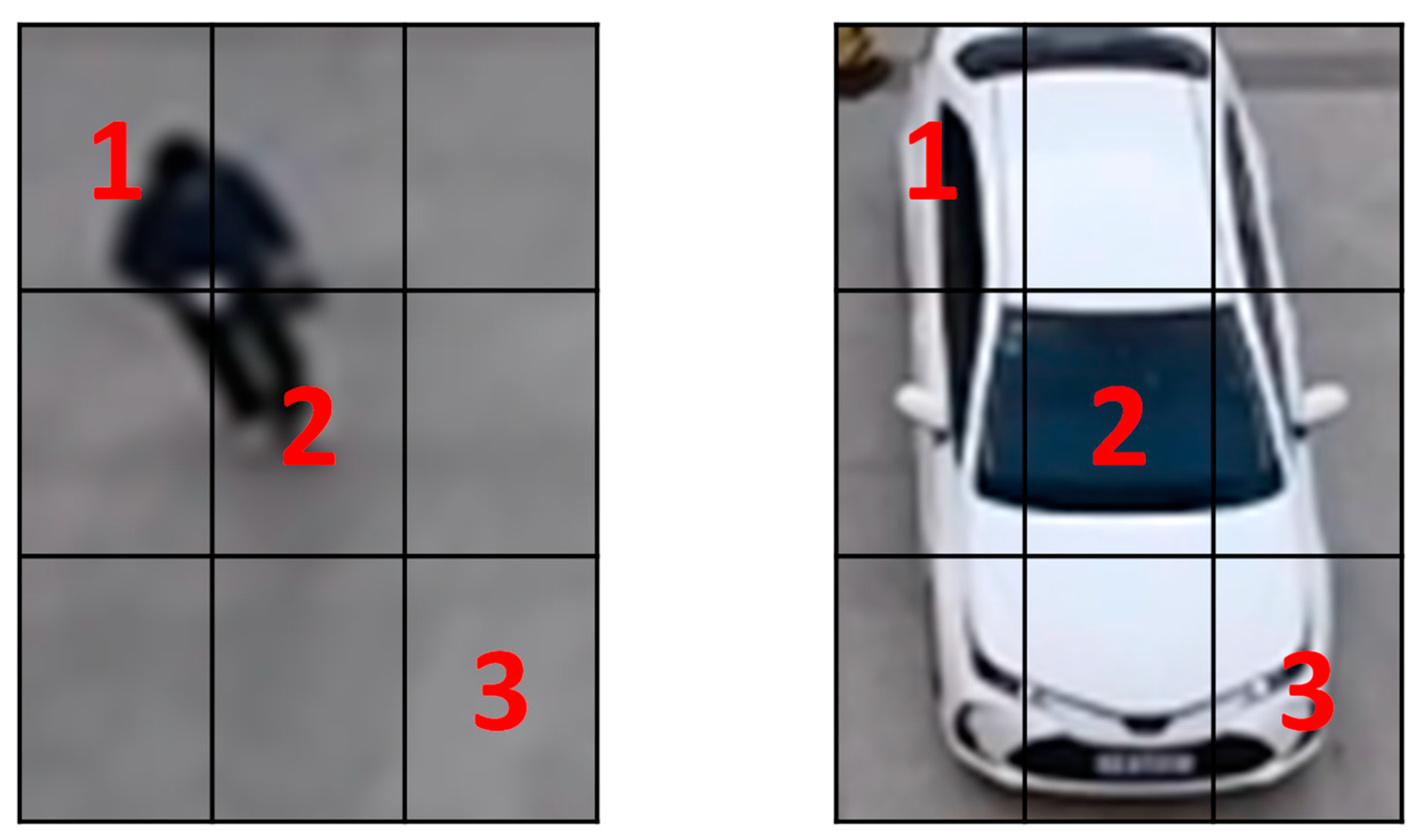

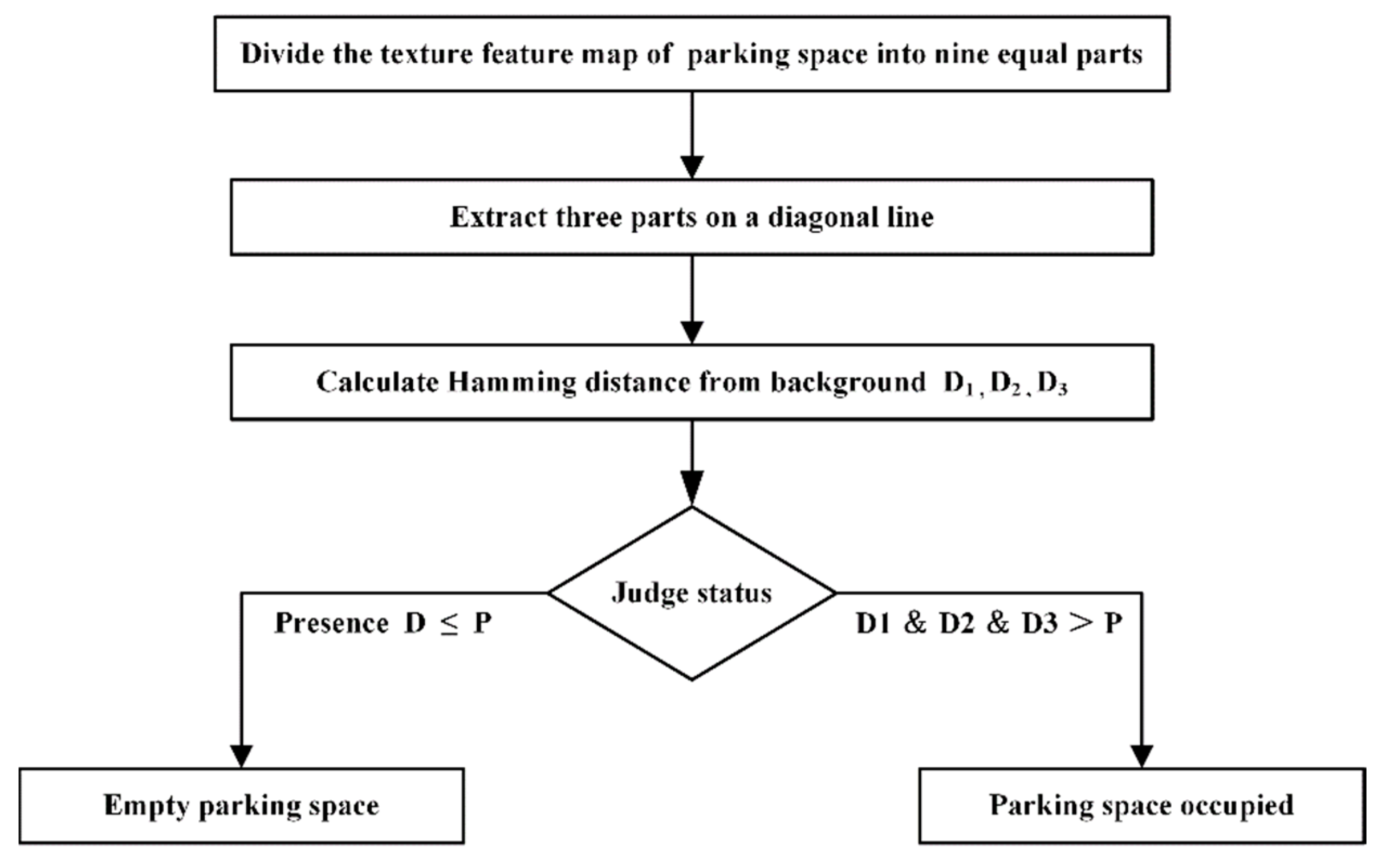
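According to the Conclusions, similarity between the background image and the current frame is measured with a perceptual hash and the Hamming distance between the hash strings. The exact hash variant is not given here. The sketch assumes the common DCT-based pHash:

- shrink the image to 32 × 32;
- take the 2-D DCT;
- keep the 8 × 8 low-frequency block;
- set each bit by comparing a coefficient with the block's median.

Nearest-neighbor shrinking is used only to keep the sketch dependency-free. A real pipeline would more likely use area averaging, for example OpenCV's `INTER_AREA`.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] *= np.sqrt(1.0 / n)
    c[1:, :] *= np.sqrt(2.0 / n)
    return c


def resize_nearest(gray: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbor resize of a 2-D image to size x size (float output)."""
    h, w = gray.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return gray[np.ix_(rows, cols)].astype(np.float64)


def phash(gray: np.ndarray, size: int = 32, keep: int = 8) -> np.ndarray:
    """DCT-based perceptual hash, returned as keep * keep boolean bits."""
    x = resize_nearest(gray, size)
    c = dct_matrix(size)
    coeffs = c @ x @ c.T                  # 2-D DCT-II
    low = coeffs[:keep, :keep]            # low-frequency block
    return (low > np.median(low)).ravel()


def hamming(h1: np.ndarray, h2: np.ndarray) -> int:
    """Number of bit positions at which two equal-length hashes differ."""
    return int(np.count_nonzero(h1 != h2))
```

A uniform brightness offset changes only the DC coefficient. For ordinary non-negative images, the DC coefficient typically sits far above the median, so the hash does not change. This is one reason hashing tolerates global lighting changes that defeat plain pixel differencing.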

4. Parking Space Status Identification Method

4.1. Parking Space Status Identification Method

4.2. Status Discrimination Threshold

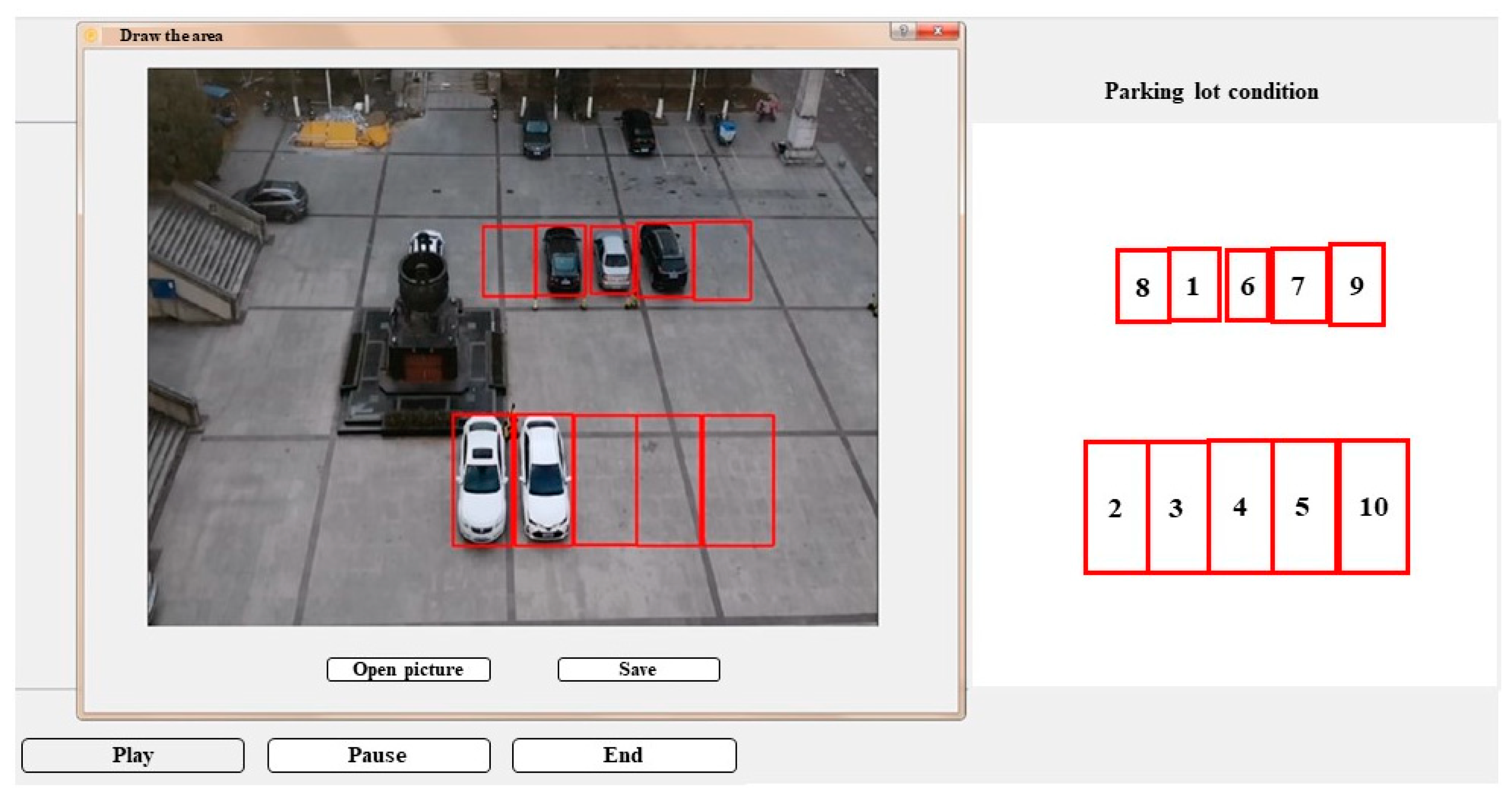

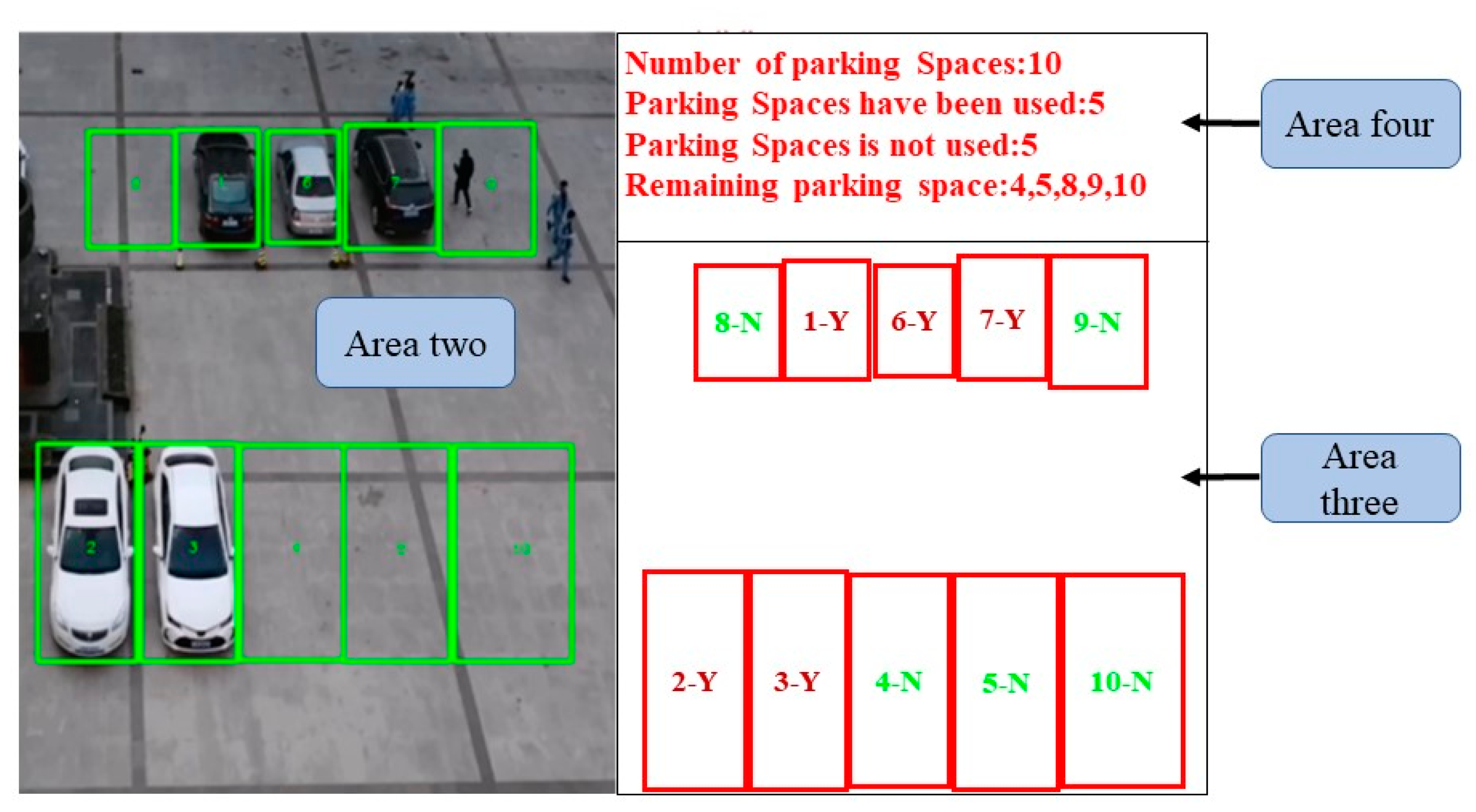

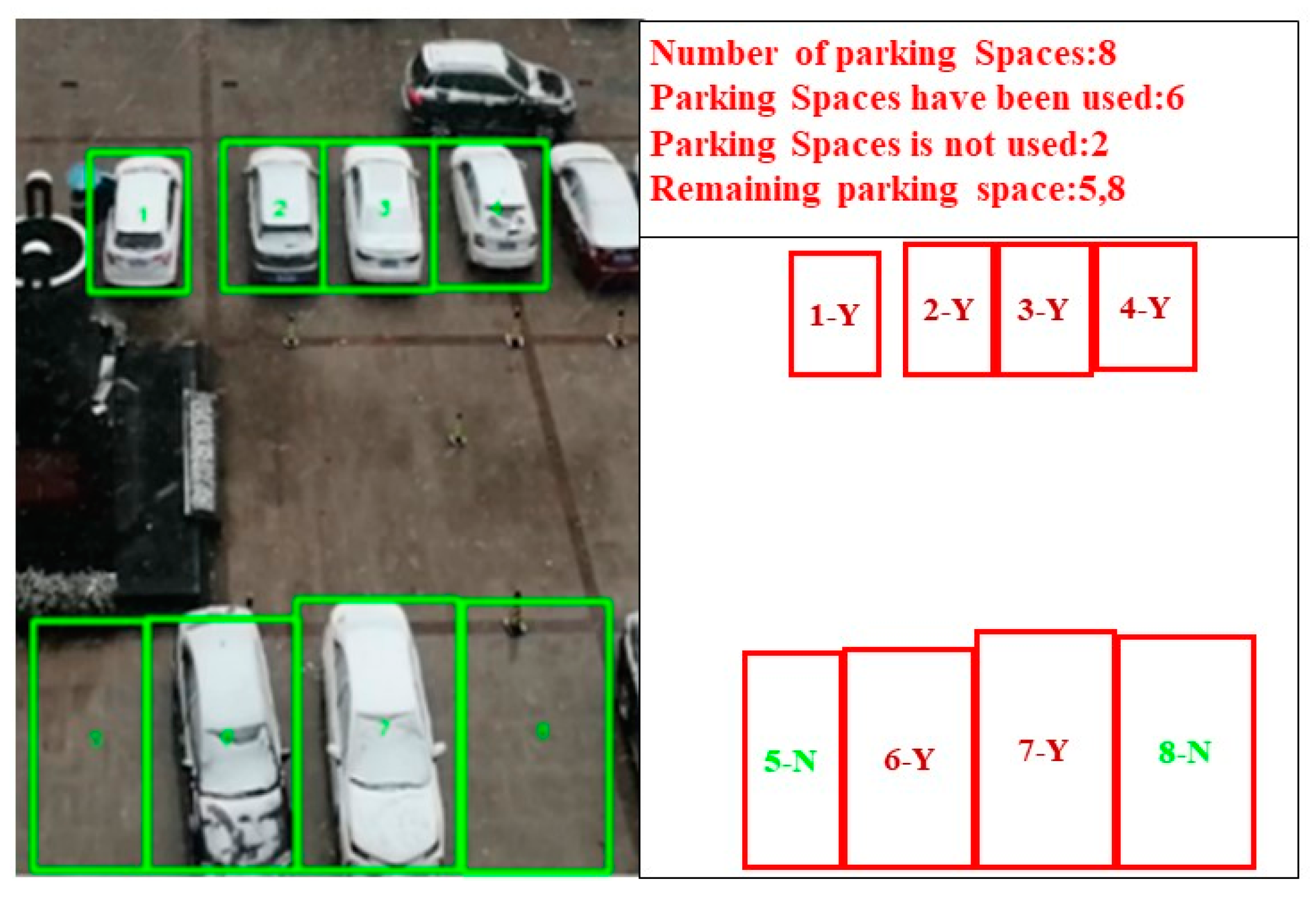

5. Model Validation

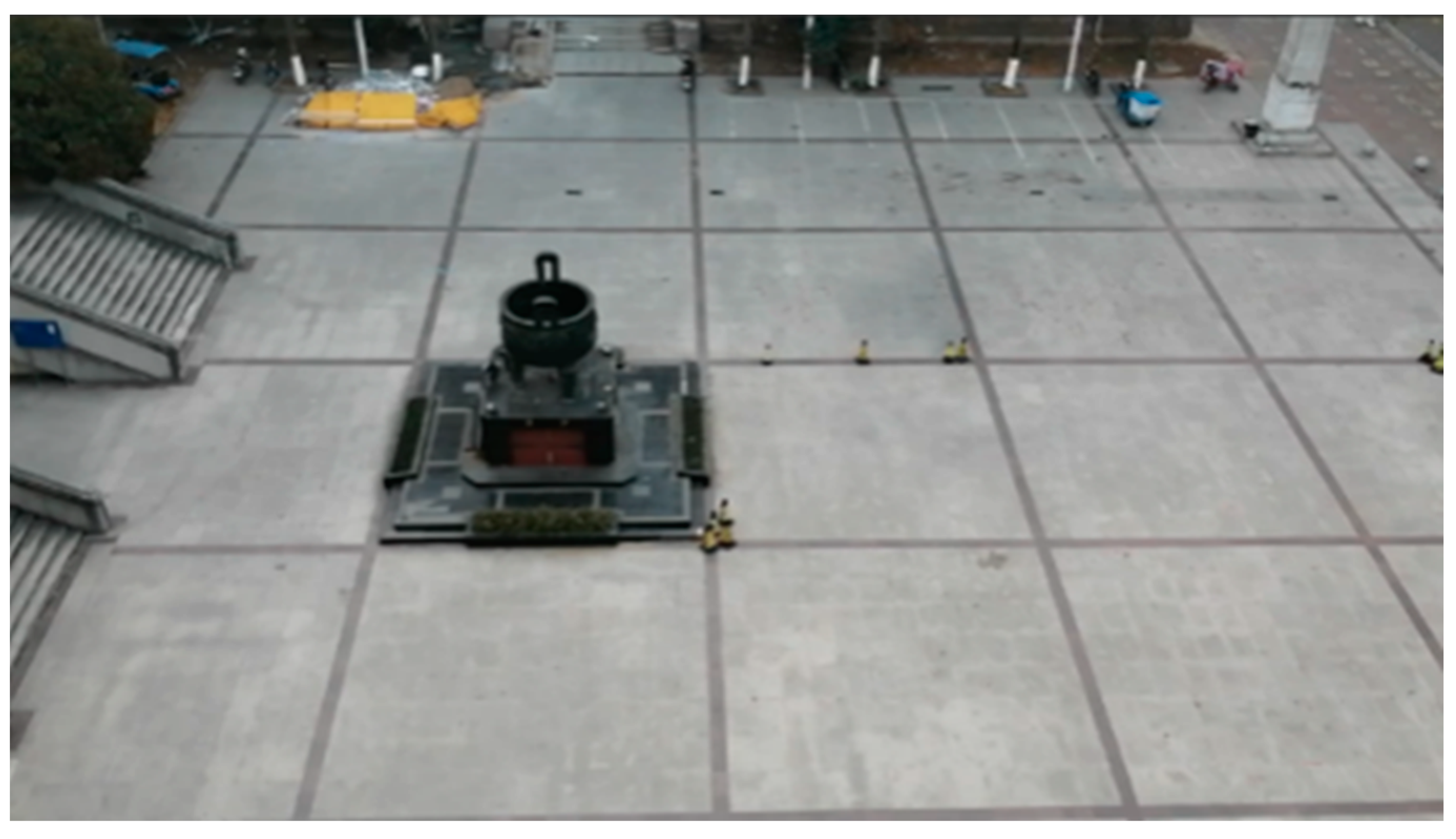

5.1. Study Subjects

5.2. Status Identification

5.3. Analysis of Experimental Results

6. Conclusions

- The video frames are pre-processed by grayscale conversion, smoothing filtering for noise reduction, and image enhancement to improve overall recognition accuracy. A texture feature extraction method based on the LBP operator is introduced to improve the background difference method. The perceptual hashing algorithm is then used to calculate the Hamming distance between the hash strings of the background image and the current video frame, to exclude the influence of lighting and pedestrians on recognition accuracy.

- A parking space status recognition system is developed in Python. It is used to identify parking spaces under three environmental conditions: with direct light, without direct light, and in rain and snow. The overall accuracy of the experimental results was 97.2% (282 of 290 cases correct), indicating that the model recognizes parking space status with high accuracy.

- The computer-vision-based parking status recognition method designed in this paper effectively addresses the problem of collecting parking status information for parking guidance systems. It serves the ultimate goal of efficient information management in parking lots.

- This paper considers only the errors caused by three influencing factors in parking status detection: weather, illumination, and pedestrians. Irregular parking (such as a vehicle occupying two parking spaces) and vehicle type were not taken into account; these aspects will be considered in future work.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No. | Top Left X | Top Left Y | Bottom Right X | Bottom Right Y |
|---|---|---|---|---|
| 1 | 425 | 169 | 479 | 244 |
| 2 | 334 | 372 | 399 | 513 |
| 3 | 402 | 372 | 465 | 513 |
| 4 | 467 | 373 | 535 | 512 |
| 5 | 535 | 373 | 605 | 513 |
| 6 | 485 | 170 | 532 | 242 |
| 7 | 536 | 166 | 597 | 246 |
| 8 | 367 | 170 | 424 | 245 |
| 9 | 598 | 165 | 660 | 249 |
| 10 | 608 | 373 | 685 | 513 |
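The table above gives each parking space's region of interest as its top-left and bottom-right corners. Below is a minimal sketch of how such a table can drive per-space status decisions. It assumes the coordinates are pixel (x, y) positions and treats the bottom-right corner as exclusive. The distance function and threshold are hypothetical stand-ins supplied by the caller. One example would be the Hamming distance between perceptual hashes of the background and current patches.

```python
import numpy as np

# Regions of interest copied from the table above:
# space number -> (top-left x, top-left y, bottom-right x, bottom-right y).
# Assumption: values are pixel coordinates; the bottom-right corner is exclusive.
ROIS = {
    1: (425, 169, 479, 244),
    2: (334, 372, 399, 513),
    3: (402, 372, 465, 513),
    4: (467, 373, 535, 512),
    5: (535, 373, 605, 513),
    6: (485, 170, 532, 242),
    7: (536, 166, 597, 246),
    8: (367, 170, 424, 245),
    9: (598, 165, 660, 249),
    10: (608, 373, 685, 513),
}


def crop(frame: np.ndarray, box: tuple) -> np.ndarray:
    """Cut one parking space out of a frame (NumPy indexes [row, col] = [y, x])."""
    x1, y1, x2, y2 = box
    return frame[y1:y2, x1:x2]


def space_status(background: np.ndarray, frame: np.ndarray,
                 distance_fn, threshold: float) -> dict:
    """Label each space 'occupied' if its patch distance exceeds threshold.

    distance_fn(background_patch, frame_patch) and threshold are hypothetical
    stand-ins for the paper's similarity measure and discrimination threshold.
    """
    status = {}
    for space, box in ROIS.items():
        d = distance_fn(crop(background, box), crop(frame, box))
        status[space] = "occupied" if d > threshold else "vacant"
    return status
```

Note the order swap. The table lists (x, y), while NumPy arrays are indexed [row, column], that is [y, x]. Mixing the two up silently crops the wrong region.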

| Scenario | Total Number of Parking Spaces | Status Changes | No. of Errors | Accuracy |
|---|---|---|---|---|
| No direct light | 145 | 39 | 2 | 98.6% |
| With direct light | 85 | 31 | 3 | 96.5% |
| Rain and snow weather | 60 | 7 | 3 | 95% |
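The Accuracy column follows from (total − errors) / total. For example, (145 − 2) / 145 ≈ 98.6%. The short check below recomputes each row. It also shows that the 97.2% overall figure quoted in the Conclusions matches pooling all rows (282 correct out of 290). Averaging the three percentages would instead give about 96.7%.

```python
# Rows of the results table above: (scenario, total, errors).
RESULTS = [
    ("No direct light", 145, 2),
    ("With direct light", 85, 3),
    ("Rain and snow weather", 60, 3),
]


def accuracy(total: int, errors: int) -> float:
    """Share of correct identifications, as a percentage."""
    return 100.0 * (total - errors) / total


# Accuracy of each row, rounded to one decimal as in the table.
per_scenario = {name: round(accuracy(t, e), 1) for name, t, e in RESULTS}

# Pooled accuracy: all cases and all errors summed before dividing.
pooled = round(accuracy(sum(t for _, t, _ in RESULTS),
                        sum(e for _, _, e in RESULTS)), 1)

# Unweighted mean of the three per-row percentages, for comparison.
unweighted_mean = round(
    sum(accuracy(t, e) for _, t, e in RESULTS) / len(RESULTS), 1)
```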

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Mao, H.; Yang, W.; Guo, S.; Zhang, X. Research on Parking Space Status Recognition Method Based on Computer Vision. Sustainability 2023, 15, 107. https://doi.org/10.3390/su15010107
