A Video-Based, Eye-Tracking Study to Investigate the Effect of eHMI Modalities and Locations on Pedestrian–Automated Vehicle Interaction

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

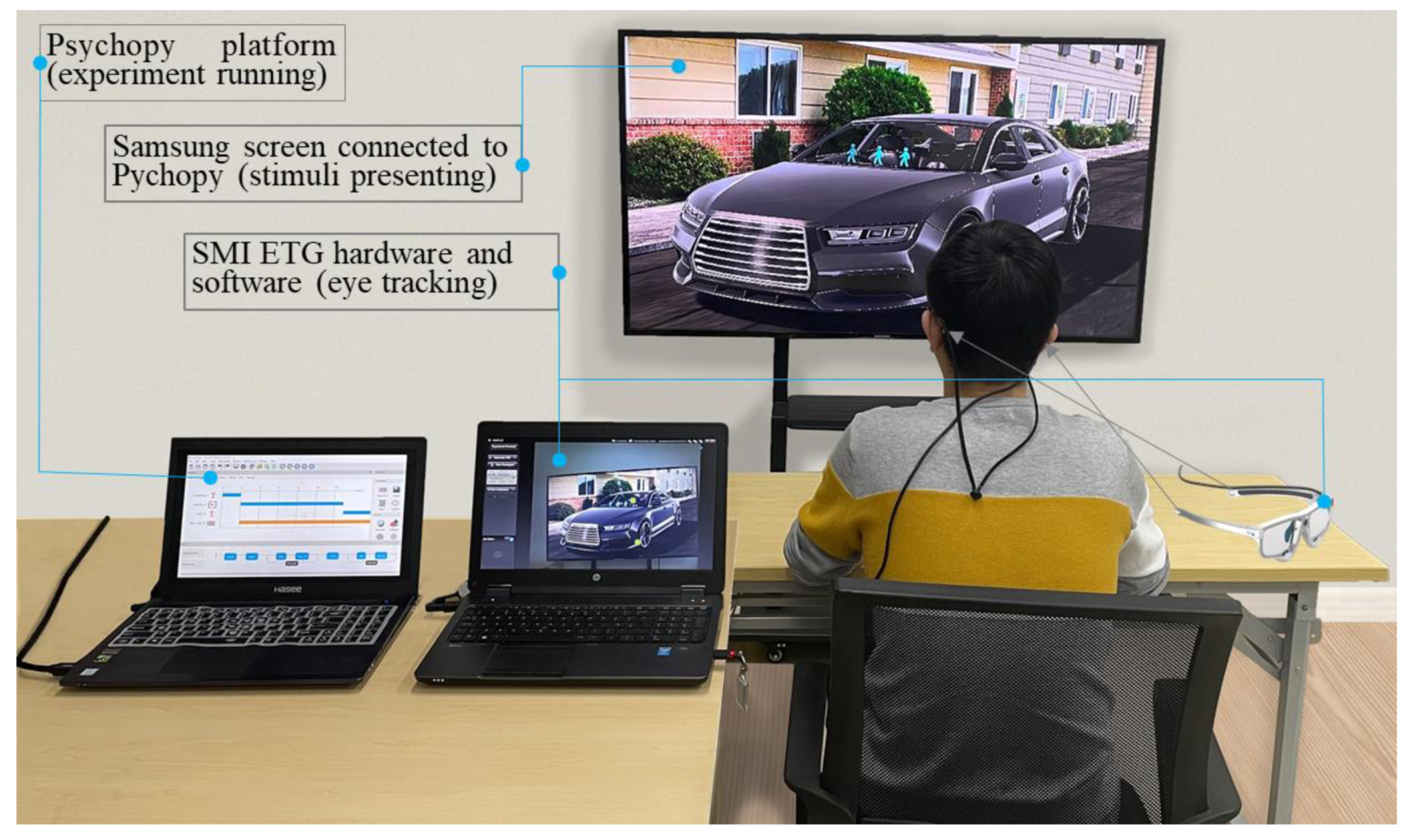

2.2. Apparatus and Materials

2.3. Experimental Design

2.3.1. Independent Variables (IVs)

2.3.2. Design of Video Clips

2.3.3. Procedure and Participants’ Task

2.3.4. Dependent Variables (DVs)

2.4. Data Preparation and Statistical Analyses

3. Results

3.1. Self-Reported Clarity

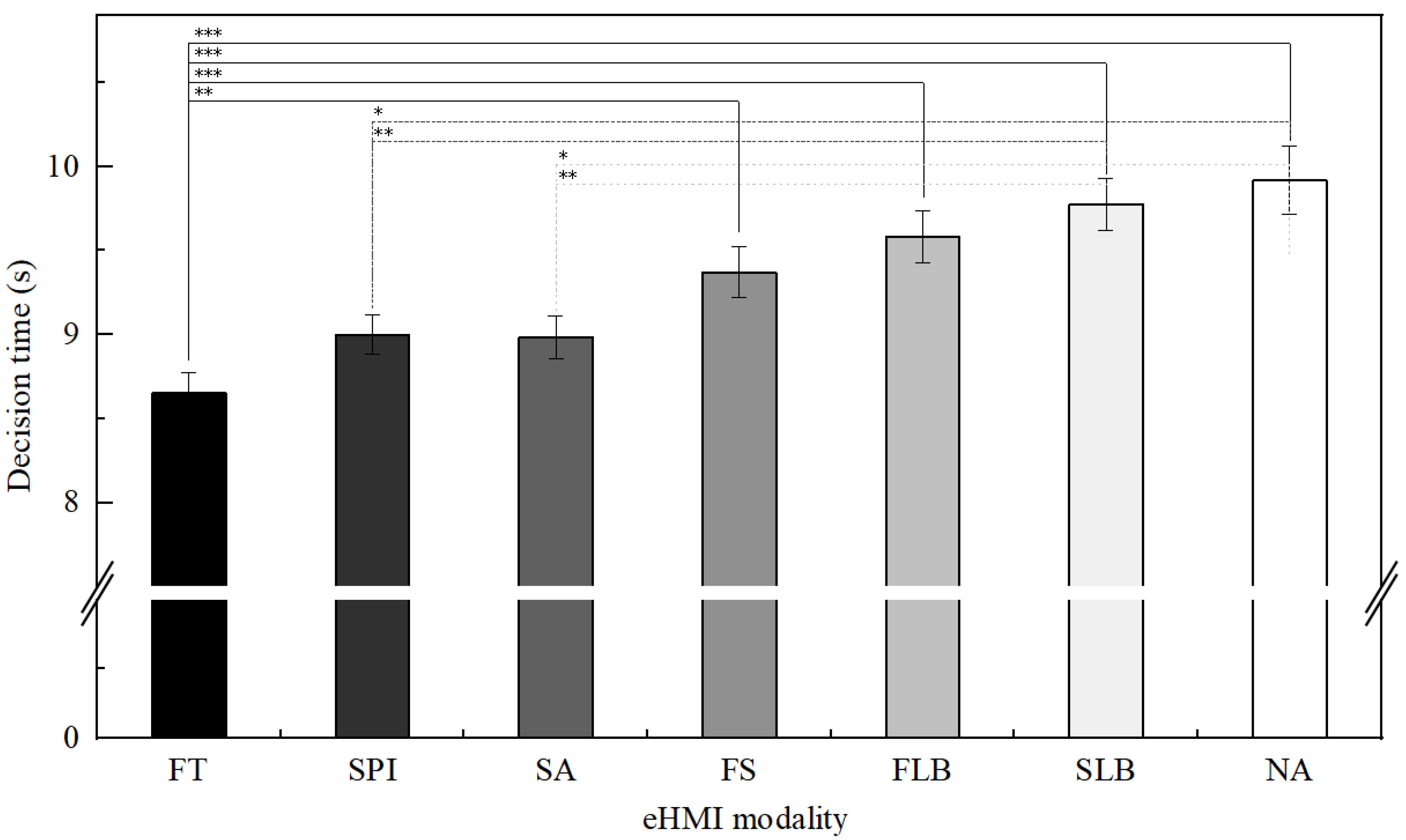

3.2. Decision Time

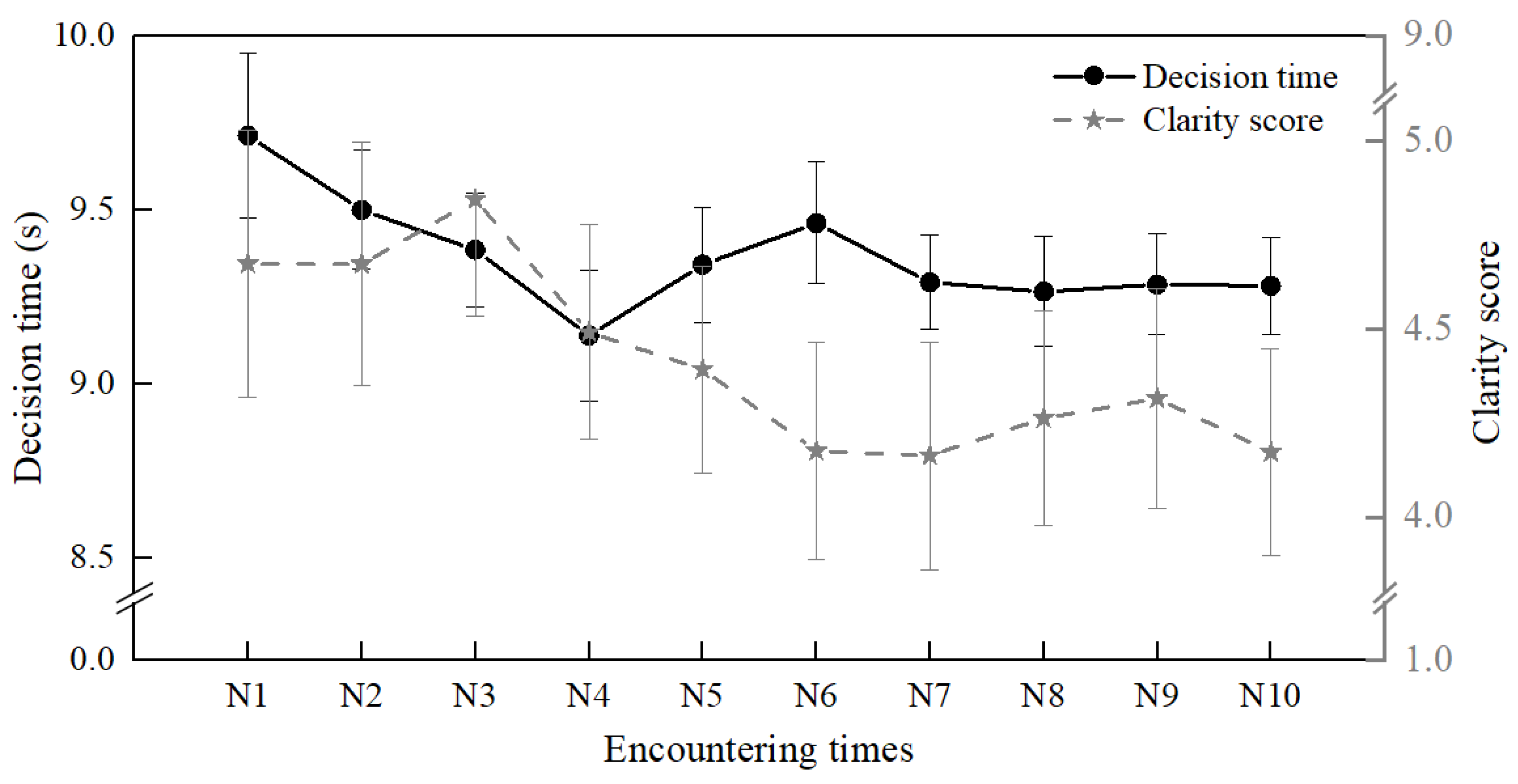

3.3. Clarity Score and Decision Time in the Nonyielding Condition

3.4. Gaze-Based Metrics

4. Discussion

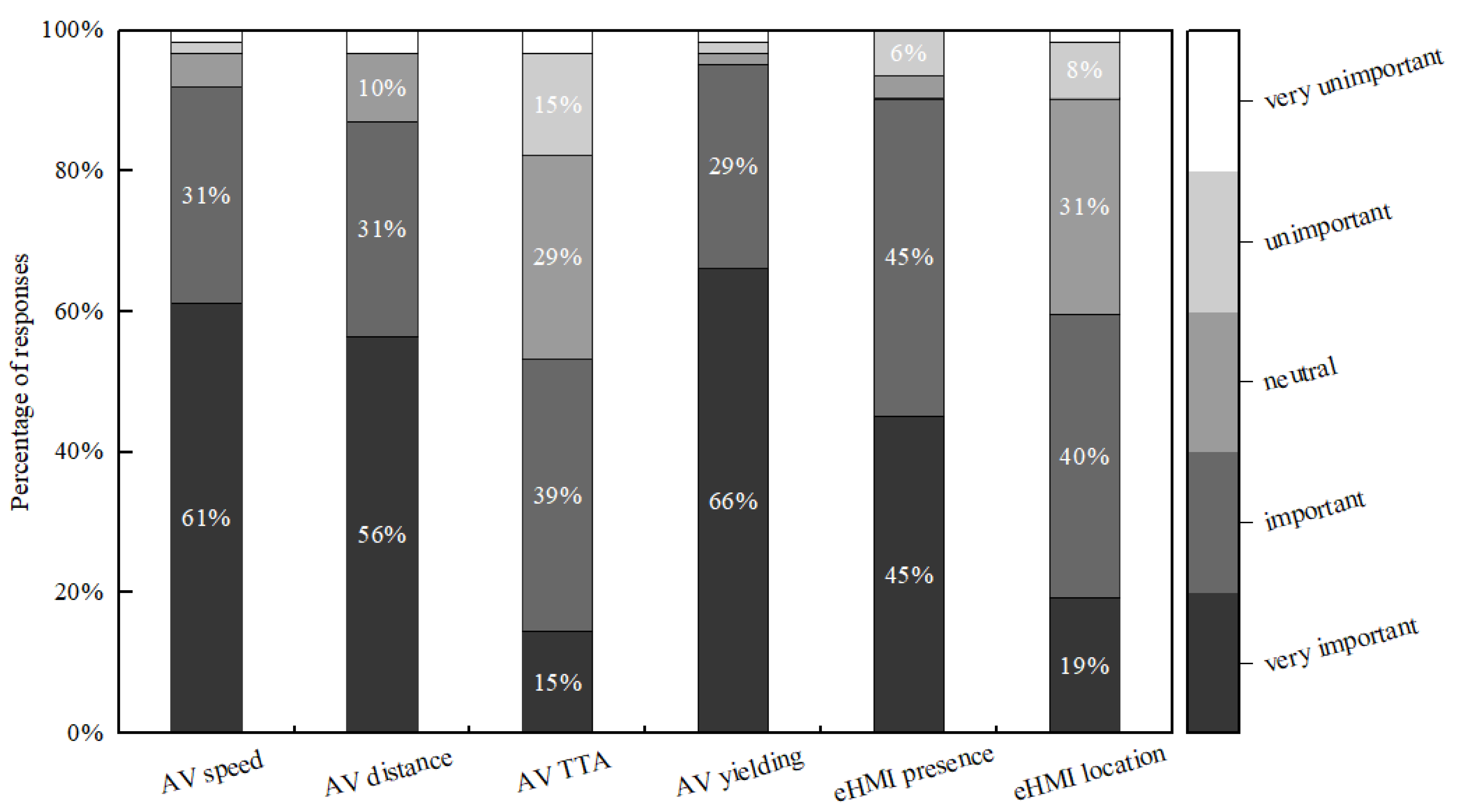

4.1. eHMI as a Necessity

4.2. Effect of eHMI Modality

4.3. Effect of eHMI Location

4.4. Potential Issues with eHMI Design from Gaze Behavior

4.5. Factors Related to Pedestrians

5. Limitations and Recommendations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Fagnant, D.J.; Kockelman, K. Preparing a Nation for Autonomous Vehicles: Opportunities, Barriers and Policy Recommendations. Transp. Res. Part A Policy Pract. 2015, 77, 167–181. [Google Scholar] [CrossRef]

- Milakis, D.; Van Arem, B.; Van Wee, B. Policy and Society Related Implications of Automated Driving: A Review of Literature and Directions for Future Research. J. Intell. Transp. Syst. Technol. Plan. Oper. 2017, 21, 324–348. [Google Scholar] [CrossRef]

- Kaye, S.A.; Li, X.; Oviedo-Trespalacios, O.; Pooyan Afghari, A. Getting in the Path of the Robot: Pedestrians Acceptance of Crossing Roads near Fully Automated Vehicles. Travel Behav. Soc. 2022, 26, 1–8. [Google Scholar] [CrossRef]

- Bansal, P.; Kockelman, K.M.; Singh, A. Assessing Public Opinions of and Interest in New Vehicle Technologies: An Austin Perspective. Transp. Res. Part C Emerg. Technol. 2016, 67, 1–14. [Google Scholar] [CrossRef]

- Nordhoff, S.; Kyriakidis, M.; van Arem, B.; Happee, R. A Multi-Level Model on Automated Vehicle Acceptance (MAVA): A Review-Based Study. Theor. Issues Ergon. Sci. 2019, 20, 682–710. [Google Scholar] [CrossRef] [Green Version]

- Tabone, W.; de Winter, J.; Ackermann, C.; Bärgman, J.; Baumann, M.; Deb, S.; Emmenegger, C.; Habibovic, A.; Hagenzieker, M.; Hancock, P.A.; et al. Vulnerable Road Users and the Coming Wave of Automated Vehicles: Expert Perspectives. Transp. Res. Interdiscip. Perspect. 2021, 9, 100293. [Google Scholar] [CrossRef]

- Faas, S.M.; Mathis, L.; Baumann, M. External HMI for Self-Driving Vehicles: Which Information Shall Be Displayed? Transp. Res. Part F Traffic Psychol. Behav. 2020, 68, 171–186. [Google Scholar] [CrossRef]

- SAE International. Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles; SAE International: Warrendale, PA, USA, 2018; Volume 4970. [Google Scholar]

- Rasouli, A.; Tsotsos, J.K. Autonomous Vehicles That Interact with Pedestrians: A Survey of Theory and Practice. IEEE Trans. Intell. Transp. Syst. 2020, 21, 900–918. [Google Scholar] [CrossRef] [Green Version]

- Dey, D.; Habibovic, A.; Löcken, A.; Wintersberger, P.; Pfleging, B.; Riener, A.; Martens, M.; Terken, J. Taming the EHMI Jungle: A Classification Taxonomy to Guide, Compare, and Assess the Design Principles of Automated Vehicles’ External Human-Machine Interfaces. Transp. Res. Interdiscip. Perspect. 2020, 7, 100174–100198. [Google Scholar] [CrossRef]

- Bazilinskyy, P.; Dodou, D.; de Winter, J. Survey on EHMI Concepts: The Effect of Text, Color, and Perspective. Transp. Res. Part F Traffic Psychol. Behav. 2019, 67, 175–194. [Google Scholar] [CrossRef]

- Fridman, L.; Mehler, B.; Xia, L.; Yang, Y.; Facusse, L.Y.; Reimer, B. To Walk or Not to Walk: Crowdsourced Assessment of External Vehicle-to-Pedestrian Displays. arXiv Prepr. 2017, arXiv:1707.02698. [Google Scholar] [CrossRef]

- Carmona, J.; Guindel, C.; Garcia, F.; de la Escalera, A. Ehmi: Review and Guidelines for Deployment on Autonomous Vehicles. Sensors 2021, 21, 2912. [Google Scholar] [CrossRef]

- Dey, D.; Matviienko, A.; Berger, M.; Martens, M.; Pfleging, B.; Terken, J. Communicating the Intention of an Automated Vehicle to Pedestrians: The Contributions of EHMI and Vehicle Behavior. IT-Inf. Technol. 2021, 63, 123–141. [Google Scholar] [CrossRef]

- Dey, D.; Habibovic, A.; Pfleging, B.; Martens, M.; Terken, J. Color and Animation Preferences for a Light Band EHMI in Interactions between Automated Vehicles and Pedestrians. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020. [Google Scholar]

- Tiesler-Wittig, H. Functional Application, Regulatory Requirements and Their Future Opportunities for Lighting of Automated Driving Systems. 2019. Available online: https://www.sae.org/publications/technical-papers/content/2019-01-0848/ (accessed on 12 March 2022).

- Werner, A. New Colours for Autonomous Driving: An Evaluation of Chromaticities for the External Lighting Equipment of Autonomous Vehicles. Colour Turn 2018, 1, 1–15. [Google Scholar] [CrossRef]

- Eisma, Y.B.; van Bergen, S.; ter Brake, S.M.; Hensen, M.T.T.; Tempelaar, W.J.; de Winter, J.C.F. External Human-Machine Interfaces: The Effect of Display Location on Crossing Intentions and Eye Movements. Information 2020, 11, 13. [Google Scholar] [CrossRef] [Green Version]

- NISSAN. Nissan IDS Concept: Nissan’s Vision for the Future of EVs and Autonomous Driving. Available online: https://www.nissan-global.com/EN/DESIGN/NISSAN/DESIGNWORKS/CONCEPTCAR/IDS/ (accessed on 12 December 2021).

- Daimler. Autonomous Concept Car Smart Vision EQ Fortwo: Welcome to the Future of Car Sharing. Daimler Global Media Site. Available online: https://group-media.mercedes-benz.com/marsMediaSite/en/instance/ko/Autonomous-concept-car-smart-vision-EQ-fortwo-Welcome-to-the-future-of-car-sharing.xhtml?oid=29042725 (accessed on 12 March 2022).

- Semcon. The Smiling Car. Available online: https://semcon.com/smilingcar/ (accessed on 10 March 2022).

- Dou, J.; Chen, S.; Tang, Z.; Xu, C.; Xue, C. Evaluation of Multimodal External Human–Machine Interface for Driverless Vehicles in Virtual Reality. Symmetry (Basel) 2021, 13, 687. [Google Scholar] [CrossRef]

- Kaleefathullah, A.A.; Merat, N.; Lee, Y.M.; Eisma, Y.B.; Madigan, R.; Garcia, J.; de Winter, J. External Human–Machine Interfaces Can Be Misleading: An Examination of Trust Development and Misuse in a CAVE-Based Pedestrian Simulation Environment. Hum. Factors 2020, 1–16. [Google Scholar] [CrossRef]

- Ford Virginia Tech Go Undercover to Develop Signals That Enable Autonomous Vehicles to Communicate with People. Available online: https://media.ford.com/content/fordmedia/fna/us/en/news/2017/09/13/ford-virginia-tech-autonomous-vehicle-human-testing.html (accessed on 16 November 2020).

- Hensch, C.; Neumann, I.; Beggiato, M.; Halama, J.; Krems, J.F. How Should Automated Vehicles Communicate?–Effects of a Light-Based Communication Approach in a Wizard-of-Oz Study. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Washington, DC, USA, 24–28 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 79–91. [Google Scholar]

- Chang, C.-M.; Toda, K.; Igarashi, T.; Miyata, M.; Kobayashi, Y. A Video-Based Study Comparing Communication Modalities between an Autonomous Car and a Pedestrian. In Proceedings of the 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Toronto, ON, Canada, 23–25 September 2018; ACM: New York, NY, USA, 2018; pp. 104–109. [Google Scholar]

- Holländer, K.; Colley, A.; Mai, C.; Häkkilä, J.; Alt, F.; Pfleging, B. Investigating the Influence of External Car Displays on Pedestrians’ Crossing Behavior in Virtual Reality. In Proceedings of the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services, Taipei, China, 1–4 October 2019; Association for Computing Machinery: Taipei, China, 2019; pp. 1–11. [Google Scholar]

- Ackermann, C.; Beggiato, M.; Schubert, S.; Krems, J.F. An Experimental Study to Investigate Design and Assessment Criteria: What Is Important for Communication between Pedestrians and Automated Vehicles? Appl. Ergon. 2019, 75, 272–282. [Google Scholar] [CrossRef]

- de Winter, J.; Dodou, D. External Human-Machine Interfaces: Gimmick or Necessity? Delft University of Technology, Delft, The Netherlands. 2022; submitted. [Google Scholar]

- Faas, S.M.; Baumann, M. Yielding Light Signal Evaluation for Self-Driving Vehicle and Pedestrian Interaction. In Proceedings of the International Conference on Human Systems Engineering and Design: Future Trends and Applications, Munich, Germany, 16–18 September 2019; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 189–194. [Google Scholar]

- de Clercq, K.; Dietrich, A.; Núñez Velasco, J.P.; de Winter, J.; Happee, R. External Human-Machine Interfaces on Automated Vehicles: Effects on Pedestrian Crossing Decisions. Hum. Factors 2019, 61, 1353–1370. [Google Scholar] [CrossRef] [Green Version]

- Lee, Y.M.; Madigan, R.; Garcia, J.; Tomlinson, A.; Solernou, A.; Romano, R.; Markkula, G.; Merat, N.; Uttley, J. Understanding the Messages Conveyed by Automated Vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2019, Utrecht, The Netherlands, 21–25 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 134–143. [Google Scholar] [CrossRef]

- Lee, Y.M.; Madigan, R.; Uzondu, C.; Garcia, J.; Romano, R.; Markkula, G.; Merat, N. Learning to Interpret Novel EHMI: The Effect of Vehicle Kinematics and EHMI Familiarity on Pedestrian’ Crossing Behavior. J. Saf. Res. 2022, 80, 270–280. [Google Scholar] [CrossRef]

- Fuest, T.; Michalowski, L.; Träris, L.; Bellem, H.; Bengler, K. Using the Driving Behavior of an Automated Vehicle to Communicate Intentions-A Wizard of Oz Study. In Proceedings of the 21st IEEE International Conference on Intelligent Transportation Systems, ITSC 2018, Maui, HI, USA, 4–7 November 2018; Institute of Electrical and Electronics Engineers Inc.: Garching, Germany, 2018; Volume 2018, pp. 3596–3601. [Google Scholar]

- Fuest, T.; Maier, A.S.; Bellem, H.; Bengler, K. How Should an Automated Vehicle Communicate Its Intention to a Pedestrian?–A Virtual Reality Study. In Proceedings of the International Conference on Human Systems Engineering and Design: Future Trends and Applications, Munich, Germany, 16–18 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 195–201. [Google Scholar]

- Ackermans, S.; Dey, D.; Ruijten, P.; Cuijpers, R.H.; Pfleging, B. The Effects of Explicit Intention Communication, Conspicuous Sensors, and Pedestrian Attitude in Interactions with Automated Vehicles. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020. [Google Scholar]

- Hochman, M.; Parmet, Y.; Oron-Gilad, T. Pedestrians’ Understanding of a Fully Autonomous Vehicle’s Intent to Stop: A Learning Effect Over Time. Front. Psychol. 2020, 11, 3407–3418. [Google Scholar] [CrossRef]

- Lévêque, L.; Ranchet, M.; Deniel, J.; Bornard, J.C.; Bellet, T. Where Do Pedestrians Look When Crossing? A State of the Art of the Eye-Tracking Studies. IEEE Access 2020, 8, 164833–164843. [Google Scholar] [CrossRef]

- Dey, D.; Walker, F.; Martens, M.; Terken, J. Gaze Patterns in Pedestrian Interaction with Vehicles: Towards Effective Design of External Human-Machine Interfaces for Automated Vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2019, Utrecht, The Netherlands, 21–25 September 2019; ACM: New York, NY, USA, 2019; pp. 369–378. [Google Scholar]

- Bazilinskyy, P.; Kooijman, L.; Dodou, D.; De Winter, J.C.F. Coupled Simulator for Research on the Interaction between Pedestrians and (Automated) Vehicles. In Proceedings of the 19th Driving Simulation Conference (DSC), Antibes, France, 9–11 September 2020; Driving Simulation Association (DSA): Antibes, France, 2020; pp. 1–7. [Google Scholar]

- Peirce, J.W. PsychoPy—Psychophysics Software in Python. J. Neurosci. Methods 2007, 162, 8–13. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Hudson, C.; Deb, S.; Carruth, D.; McGinley, J.; Frey, D. Pedestrian Perception of Autonomous Vehicles with External Interacting Features. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Orlando, FL, USA, 21–25 July 2018; Springer: Berlin/Heidelberg, Germany, 2018; Volume 781, pp. 33–39. [Google Scholar]

- Fillenberg, S.; Pinkow, S. Continental: Holistic Human-Machine Interaction for Autonomous Vehicles. Available online: https://www.continental.com/en/press/press-releases/2019-12-12-hmi-cube/#:~:text=Theultimategoalisto,thepathoftheshuttle (accessed on 11 March 2022).

- Othersen, I.; Conti-Kufner, A.S.; Dietrich, A.; Maruhn, P.; Bengler, K. Designing for Automated Vehicle and Pedestrian Communication: Perspectives on EHMIs from Older and Younger Persons. Proc. Hum. Factors Ergon. Soc. Eur. Chapter 2018 Annu. Conf. 2018, 4959, 135–148. [Google Scholar]

- Chang, C.M. A Gender Study of Communication Interfaces between an Autonomous Car and a Pedestrian. In Proceedings of the 12th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2020, Virtual Event, USA, 21–22 September 2020; ACM: New York, NY, USA, 2020; pp. 42–45. [Google Scholar]

- Knight, W. New Self-Driving Car Tells Pedestrians When It’s Safe to Cross the Street. Available online: https://www.technologyreview.com/2016/08/30/7287/new-self-driving-car-tells-pedestrians-when-its-safe-to-cross-the-street/ (accessed on 8 March 2022).

- Deb, S.; Strawderman, L.J.; Carruth, D.W. Investigating Pedestrian Suggestions for External Features on Fully Autonomous Vehicles: A Virtual Reality Experiment. Transp. Res. Part F Traffic Psychol. Behav. 2018, 59, 135–149. [Google Scholar] [CrossRef]

- Wang, P.; Motamedi, S.; Bajo, T.C.; Zhou, X.; Qi, S.; Whitney, D.; Chan, C.-Y. Safety Implications of Automated Vehicles Providing External Communication to Pedestrians; University of California: Berkeley, CA, USA, 2019. [Google Scholar]

- Wilbrink, M.; Lau, M.; Illgner, J.; Schieben, A.; Oehl, M. Impact of External Human–Machine Interface Communication Strategies of Automated Vehicles on Pedestrians’ Crossing Decisions and Behaviors in an Urban Environment. Sustainability 2021, 13, 8396. [Google Scholar] [CrossRef]

- Schieben, A.; Wilbrink, M.; Kettwich, C.; Dodiya, J.; Sorokin, L.; Merat, N.; Dietrich, A.; Bengler, K.; Kaup, M. Testing External HMI Designs for Automated Vehicles–An Overview on User Study Results from the EU Project InterACT. In Proceedings of the 19 Tagung Automatisiertes Fahren, Munich, Germany, 21–22 November 2019; TüV: Munich, Germany, 2019; pp. 1–7. [Google Scholar]

- Dey, D.; Van Vastenhoven, A.; Cuijpers, R.H.; Martens, M.; Pfleging, B. Towards Scalable EHMIs: Designing for AV-VRU Communication beyond One Pedestrian. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2021, Leeds, UK, 9–14 September 2021; ACM: New York, NY, USA, 2021; pp. 274–286. [Google Scholar]

- Faas, S.M.; Kraus, J.; Schoenhals, A.; Baumann, M. Calibrating Pedestrians’ Trust in Automated Vehicles: Does an Intent Display in an External HMI Support Trust Calibration and Safe Crossing Behavior? In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; ACM: New York, NY, USA, 2021; pp. 1–17. [Google Scholar]

- Faas, S.M.; Kao, A.C.; Baumann, M. A Longitudinal Video Study on Communicating Status and Intent for Self-Driving Vehicle - Pedestrian Interaction. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020. [Google Scholar]

- Zuckerman, M.; Kolin, E.A.; Price, L.; Zoob, I. Development of a Sensation-Seeking Scale. J. Consult. Psychol. 1964, 28, 477–482. [Google Scholar] [CrossRef]

- Rosenbloom, T.; Mandel, R.; Rosner, Y.; Eldror, E. Hazard Perception Test for Pedestrians. Accid. Anal. Prev. 2015, 79, 160–169. [Google Scholar] [CrossRef]

- Herrero-Fernández, D.; Macía-Guerrero, P.; Silvano-Chaparro, L.; Merino, L.; Jenchura, E.C. Risky Behavior in Young Adult Pedestrians: Personality Determinants, Correlates with Risk Perception, and Gender Differences. Transp. Res. Part F Traffic Psychol. Behav. 2016, 36, 14–24. [Google Scholar] [CrossRef]

- Zhang, T.; Tao, D.; Qu, X.; Zhang, X.; Zeng, J.; Zhu, H.; Zhu, H. Automated Vehicle Acceptance in China: Social Influence and Initial Trust Are Key Determinants. Transp. Res. Part C Emerg. Technol. 2020, 112, 220–233. [Google Scholar] [CrossRef]

- Oculid. Main Metrics in Eye Tracking. Available online: https://www.oculid.com/oculid-blog/main-metrics-in-eye-tracking (accessed on 14 March 2022).

- Tobii. Metrics for Eye Tracking Analytics. Available online: https://vr.tobii.com/sdk/learn/analytics/fundamentals/metrics/ (accessed on 14 March 2022).

- Zito, G.A.; Cazzoli, D.; Scheffler, L.; Jäger, M.; Müri, R.M.; Mosimann, U.P.; Nyffeler, T.; Mast, F.W.; Nef, T. Street Crossing Behavior in Younger and Older Pedestrians: An Eye- and Head-Tracking Study. BMC Geriatr. 2015, 15, 1–10. [Google Scholar] [CrossRef] [Green Version]

- Jiang, K.; Ling, F.; Feng, Z.; Ma, C.; Kumfer, W.; Shao, C.; Wang, K. Effects of Mobile Phone Distraction on Pedestrians’ Crossing Behavior and Visual Attention Allocation at a Signalized Intersection: An Outdoor Experimental Study. Accid. Anal. Prev. 2018, 115, 170–177. [Google Scholar] [CrossRef]

- Gruden, C.; Ištoka Otković, I.; Šraml, M. Safety Analysis of Young Pedestrian Behavior at Signalized Intersections: An Eye-Tracking Study. Sustainability 2021, 13, 4419. [Google Scholar] [CrossRef]

- Smith, P.F. A Note on the Advantages of Using Linear Mixed Model Analysis with Maximal Likelihood Estimation over Repeated Measures ANOVAs in Psychopharmacology: Comment on Clark et al. J. Psychopharmacol. 2012, 26, 1605–1607. [Google Scholar] [CrossRef] [PubMed]

- Magezi, D.A. Linear Mixed-Effects Models for within-Participant Psychology Experiments: An Introductory Tutorial and Free, Graphical User Interface (LMMgui). Front. Psychol. 2015, 6, 1–7. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lee, Y.M.; Madigan, R.; Giles, O.; Garach-Morcillo, L.; Markkula, G. Road Users Rarely Use Explicit Communication When Interacting in Today’s Traffic: Implications for Automated Vehicles. Cogn. Technol. Work 2020, 23, 367–380. [Google Scholar] [CrossRef]

- Moore, D.; Currano, R.; Strack, G.E.; Sirkin, D. The Case for Implicit External Human-Machine Interfaces for Autonomous Vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, AutomotiveUI 2019, Utrecht, The Netherlands, 21–25 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 295–307. [Google Scholar]

- Domeyer, J.E.; Lee, J.D.; Toyoda, H. Vehicle Automation-Other Road User Communication and Coordination: Theory and Mechanisms. IEEE Access 2020, 8, 19860–19872. [Google Scholar] [CrossRef]

- Joseph, A.W.; Murugesh, R. Potential Eye Tracking Metrics and Indicators to Measure Cognitive Load in Human-Computer Interaction Research. J. Sci. Res. 2020, 64, 168–175. [Google Scholar] [CrossRef]

- Jacob, R.J.K.; Karn, K.S. Eye Tracking in Human-Computer Interaction and Usability Research: Ready to Deliver the Promises. In The Mind’s Eye; Elsevier: Amsterdam, The Netherlands, 2003; pp. 573–605. [Google Scholar]

- Ackermann, C.; Beggiato, M.; Bluhm, L.F.; Löw, A.; Krems, J.F. Deceleration Parameters and Their Applicability as Informal Communication Signal between Pedestrians and Automated Vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2019, 62, 757–768. [Google Scholar] [CrossRef]

- Borkowski, S.; Spalanzani, A.; Vaufreydaz, D. EHMI Positioning for Autonomous Vehicle/Pedestrians Interaction. In Proceedings of the 31st Conference on L’Interaction Homme-Machine: Adjunct, Grenoble, France, 10–13 December 2019; ACM: New York, NY, USA, 2019; pp. 1–8. [Google Scholar]

- Jarmasz, J.; Herdman, C.M.; Johannsdottir, K.R. Object-Based Attention and Cognitive Tunneling. J. Exp. Psychol. Appl. 2005, 11, 3–12. [Google Scholar] [CrossRef]

- Holland, C.; Hill, R. The Effect of Age, Gender and Driver Status on Pedestrians’ Intentions to Cross the Road in Risky Situations. Accid. Anal. Prev. 2007, 39, 224–237. [Google Scholar] [CrossRef] [PubMed]

- Moyano Díaz, E. Theory of Planned Behavior and Pedestrians’ Intentions to Violate Traffic Regulations. Transp. Res. Part F Traffic Psychol. Behav. 2002, 5, 169–175. [Google Scholar] [CrossRef]

- Rosenbloom, T. Sensation Seeking and Pedestrian Crossing Compliance. Soc. Behav. Pers. 2006, 34, 113–122. [Google Scholar] [CrossRef]

- Kaye, S.A.; Somoray, K.; Rodwell, D.; Lewis, I. Users’ Acceptance of Private Automated Vehicles: A Systematic Review and Meta-Analysis. J. Saf. Res. 2021, 79, 352–367. [Google Scholar] [CrossRef]

| eHMI Modality | Description | References (Grill) | References (Windshield) | References (Roof) |
|---|---|---|---|---|
| FT | characters flashing at 0.5 Hz | [18,40] | [19,28] | [18,40] |
| SPI | icons sweeping from right to left (from pedestrian’s view) | [12,27] | [42,43] | [37] |
| SA | arrows sweeping from right to left (from pedestrian’s view) | [44,45] | [12,22] | [46] |
| FS | smiley flashing at 0.5 Hz | [21,27] | [22,47] | [48] |
| FLB | isosceles trapezoid (rectangle if on the roof) flashing at 0.5 Hz | [23] | [33,49] | [50] |
| SLB | light bars sweeping from both sides to the middle | [14,51] | [24,44] | [25,52] |

| eHMI Modality | eHMI Location | No. of Trials | Decision Time M (s) | Decision Time SD (s) | Clarity Score M | Clarity Score SD |
|---|---|---|---|---|---|---|
| Flashing text (FT) | Grill | 55 | 8.46 | 1.56 | 7.87 | 1.19 |
| Flashing text (FT) | Windshield | 56 | 8.72 | 1.80 | 7.96 | 1.03 |
| Flashing text (FT) | Roof | 55 | 8.77 | 1.60 | 7.78 | 1.33 |
| Sweeping pedestrian icon (SPI) | Grill | 53 | 8.86 | 1.58 | 7.77 | 1.23 |
| Sweeping pedestrian icon (SPI) | Windshield | 54 | 9.07 | 1.56 | 7.94 | 1.45 |
| Sweeping pedestrian icon (SPI) | Roof | 52 | 9.07 | 1.38 | 7.73 | 1.17 |
| Sweeping arrow (SA) | Grill | 53 | 9.01 | 1.63 | 7.51 | 1.31 |
| Sweeping arrow (SA) | Windshield | 56 | 8.93 | 1.78 | 7.71 | 1.22 |
| Sweeping arrow (SA) | Roof | 57 | 9.02 | 1.64 | 7.19 | 1.59 |
| Flashing smiley (FS) | Grill | 55 | 9.24 | 1.68 | 6.11 | 1.89 |
| Flashing smiley (FS) | Windshield | 54 | 9.48 | 2.36 | 6.17 | 1.75 |
| Flashing smiley (FS) | Roof | 56 | 9.39 | 1.67 | 5.98 | 2.00 |
| Flashing light band (FLB) | Grill | 55 | 9.38 | 2.05 | 5.87 | 2.24 |
| Flashing light band (FLB) | Windshield | 51 | 9.64 | 1.93 | 6.18 | 2.01 |
| Flashing light band (FLB) | Roof | 53 | 9.74 | 1.88 | 5.94 | 2.11 |
| Sweeping light bar (SLB) | Grill | 54 | 9.85 | 2.16 | 5.48 | 1.91 |
| Sweeping light bar (SLB) | Windshield | 53 | 9.78 | 1.79 | 5.45 | 1.86 |
| Sweeping light bar (SLB) | Roof | 52 | 9.70 | 1.91 | 5.29 | 1.85 |
| No eHMI (NA) | | 54 | 9.92 | 1.51 | 5.37 | 2.09 |
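
The per-location rows above can be pooled into one figure per modality. The following is a minimal sketch, assuming that a trial-weighted average of the reported cell means is an acceptable summary; the names `ROWS` and `pooled_means` are illustrative and do not come from the study, and the pooled values are not results reported by the authors.

```python
# Cell means copied from the table above:
# (modality, location, number of trials, mean decision time in s, mean clarity score)
ROWS = [
    ("FT", "Grill", 55, 8.46, 7.87), ("FT", "Windshield", 56, 8.72, 7.96), ("FT", "Roof", 55, 8.77, 7.78),
    ("SPI", "Grill", 53, 8.86, 7.77), ("SPI", "Windshield", 54, 9.07, 7.94), ("SPI", "Roof", 52, 9.07, 7.73),
    ("SA", "Grill", 53, 9.01, 7.51), ("SA", "Windshield", 56, 8.93, 7.71), ("SA", "Roof", 57, 9.02, 7.19),
    ("FS", "Grill", 55, 9.24, 6.11), ("FS", "Windshield", 54, 9.48, 6.17), ("FS", "Roof", 56, 9.39, 5.98),
    ("FLB", "Grill", 55, 9.38, 5.87), ("FLB", "Windshield", 51, 9.64, 6.18), ("FLB", "Roof", 53, 9.74, 5.94),
    ("SLB", "Grill", 54, 9.85, 5.48), ("SLB", "Windshield", 53, 9.78, 5.45), ("SLB", "Roof", 52, 9.70, 5.29),
    ("NA", None, 54, 9.92, 5.37),  # no eHMI, so no location
]


def pooled_means(rows):
    """Return {modality: (total trials, weighted decision time, weighted clarity)}."""
    totals = {}
    for modality, _location, n, decision_time, clarity in rows:
        acc = totals.setdefault(modality, [0, 0.0, 0.0])
        acc[0] += n                  # total number of trials for this modality
        acc[1] += n * decision_time  # trial-weighted sum of decision times
        acc[2] += n * clarity        # trial-weighted sum of clarity scores
    return {m: (n, dt / n, cl / n) for m, (n, dt, cl) in totals.items()}


pooled = pooled_means(ROWS)

if __name__ == "__main__":
    # Print modalities from fastest to slowest pooled decision time.
    for modality, (n, dt, cl) in sorted(pooled.items(), key=lambda kv: kv[1][1]):
        print(f"{modality:>3}  trials={n:3d}  decision_time={dt:.2f} s  clarity={cl:.2f}")
```

Weighting by the number of trials keeps cells with fewer valid trials from counting as much as fuller cells. Pooled standard deviations are left out because they cannot be recovered exactly from the cell-level summaries alone.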

| Gender | Freq. | Traffic Accident History | Freq. | Sensation-Seeking Score (SSS) Group | Freq. | SSS (M (SD)) | Driving Experience | Freq. | Mileage (M (SD)) |
|---|---|---|---|---|---|---|---|---|---|
| male | 30 | no | 50 | low SSS | 18 | 9.00 (1.88) | none | 18 | 0 (0) |
| female | 32 | yes | 12 | medium SSS | 24 | 14.67 (2.01) | novice driver | 36 | 1547.23 (88.92) |
| | | | | high SSS | 20 | 21.80 (1.91) | veteran driver | 8 | 3620.18 (147.26) |

| Effect | df1 | df2 | Dwell Time F | Dwell Time p | First Fixation Duration F | First Fixation Duration p | Fixation Count F | Fixation Count p |
|---|---|---|---|---|---|---|---|---|
| Gender | 1 | 658 | 3.32 | 0.069 | 3.75 | 0.053 | 22.87 | <0.001 |
| SSS | 2 | 658 | 10.37 | <0.001 | 2.97 | 0.052 | 11.45 | <0.001 |
| Accident history | 1 | 658 | 1.17 | 0.279 | 0.06 | 0.812 | 0.21 | 0.646 |
| Driving experience | 2 | 658 | 6.51 | 0.002 | 1.20 | 0.303 | 4.20 | 0.015 |
| eHMI modality | 6 | 658 | 9.34 | <0.001 | 3.11 | 0.005 | 4.89 | <0.001 |
| eHMI location | 2 | 658 | 3.18 | 0.042 | 9.70 | <0.001 | 8.14 | <0.001 |
| eHMI modality × eHMI location | 12 | 658 | 0.30 | 0.990 | 0.85 | 0.595 | 0.81 | 0.636 |
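
The table reports F statistics and degrees of freedom but no effect sizes. As a rough reading aid, the sketch below applies the standard ANOVA conversion to partial eta squared, η_p² = (F · df1) / (F · df1 + df2), to the dwell-time column. This is an assumption for illustration only: the function name `partial_eta_squared` and the dictionary `DWELL_TIME_EFFECTS` are hypothetical, the conversion is only approximate if the F tests come from a mixed-effects model, and the resulting values are not figures reported by the authors.

```python
def partial_eta_squared(f_value, df1, df2):
    """Convert an F statistic to partial eta squared: F*df1 / (F*df1 + df2)."""
    if f_value < 0 or df1 <= 0 or df2 <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    return (f_value * df1) / (f_value * df1 + df2)


# Dwell-time F tests copied from the table above: effect -> (F, df1, df2)
DWELL_TIME_EFFECTS = {
    "Gender": (3.32, 1, 658),
    "SSS": (10.37, 2, 658),
    "Accident history": (1.17, 1, 658),
    "Driving experience": (6.51, 2, 658),
    "eHMI modality": (9.34, 6, 658),
    "eHMI location": (3.18, 2, 658),
    "eHMI modality x eHMI location": (0.30, 12, 658),
}

effect_sizes = {
    name: partial_eta_squared(f, df1, df2)
    for name, (f, df1, df2) in DWELL_TIME_EFFECTS.items()
}

if __name__ == "__main__":
    # List effects from largest to smallest approximate effect size.
    for name, eta in sorted(effect_sizes.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:<32} partial eta^2 = {eta:.3f}")
```

The same function can be applied to the first-fixation-duration and fixation-count columns by swapping in their F values.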

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guo, F.; Lyu, W.; Ren, Z.; Li, M.; Liu, Z. A Video-Based, Eye-Tracking Study to Investigate the Effect of eHMI Modalities and Locations on Pedestrian–Automated Vehicle Interaction. Sustainability 2022, 14, 5633. https://doi.org/10.3390/su14095633