1. Introduction

Precision agriculture can be considered a research field that focuses on using different tools to increase agricultural fields’ productivity [

1]. Generally, it is based on various sensors depending on the field of application [

2,

3]. Among these sensors, we can find different types of cameras, from Red, Green, Blue (RGB) to hyperspectral and multispectral cameras. All these tools have a common goal based on increasing yield and production improvement [

4]. The algorithmic side of precision agriculture is rich and depends on the nature of the chosen application. These applications aim to solve different problems encountered in traditional agriculture, for example, weed detection, the detection of various plant diseases, and the monitoring of vegetation and vital visual signs using different indices [

5,

6,

7,

8]. These applications require a feasibility study in real scenarios to validate the different approaches proposed in the literature. As a solution, the use of embedded systems can help not only the validation but also the improvement of the different methods. The implementation of these methods requires an algorithmic and architectural study in order to propose optimal implementations that increase the reliability of calculation as well as reduce the processing time in applications that require a precise time. Besides processing time and reliability, we can also find the calculation accuracy in the different data collection tools [

9]. For example, robots and unmanned aerial vehicles are equipped with various tools that support their autonomous mobility. Accurate movement can be achieved using GPS sensors or localization and mapping algorithms. However, the autonomous movement of robots or Unmanned Aerial Vehicles (UAVs) can create problems such as camera blur and memory saturation, which can degrade the quality of the results obtained.

Several approaches have been developed based on embedded systems, but they remain limited with respect to real-time constraints, processing time, and complexity. For example, J. Rodríguez et al., 2021 proposed a system for monitoring potato fields. The work was based on a Tarot 680PRO hexacopter UAV and a MicaSense RedEdge multispectral camera. The authors used two sets of data, A and B; the results showed high accuracy for field A, while field B had low accuracy [

10]. For the monitoring of agricultural fields, we can also find the work of [

11], which was based on a drone and a multispectral camera. Similarly, for the detection of weeds, A. Wang et al., 2020 used deep learning approaches. The study reported a detection accuracy of about 96.12% [

12]. The work of S. Abouzahir et al., 2021 detects weeds on several types of crops based on an embedded platform with an accuracy that varies between 71.2% and 97.7% [

13]. In the case of counting plants, we can also find S. Tu et al., 2020 who proposed a fruit counting approach based on depth calculation to increase the counting accuracy. The work showed a counting accuracy and an F1 score that reaches up to 0.9 [

14]. All these approaches and systems give us an idea of the different algorithms and embedded architectures proposed in this area and reflect the rapid evolution of precision agriculture. However, the weakness of these algorithms is their evaluation in real cases, where time influences the accuracy of the results. This pushes us to investigate how these algorithms can be embedded in low-cost, low-energy architectures to guarantee autonomous processing without the intervention of farmers. In addition, these algorithms and their implementations require preprocessing that includes the detection and elimination of blur, which directly influences the accuracy and reliability of the results. Another critical factor in applications based on ground robots and UAVs is memory saturation, which requires compressing the data after processing; we address it in this study.

In our work, we propose a system divided into three parts. The first part focuses on the measurement of blur and then its elimination. In this context, we have used a hybrid algorithm that combines blur measurement and filtering to ensure images without motion blur. Unlike the other techniques proposed in the literature, which are based only on blur suppression without measurement, this algorithm measures the blur before removing it [

15]. The second part calculates the most general indices based on RGB images. These indices are NGRDI and VARI; choosing these indices is due to the high sensitivity to agricultural land cover [

16]. Moreover, they are easy to interpret compared to other indices, which makes them well suited to our study. The third part of the work focuses on the memory saturation problem; as a solution, we have added a compression algorithm. These three parts are combined into a single algorithm that processes images of agricultural fields in real time. Our novelty and contributions are as follows:

- (1) The proposition of a new algorithm based on various techniques for compression, blur detection, and RGB indices processing such as NGRDI and VARI.
- (2) The evaluation of the algorithm on our original database, collected using a DJI Phantom 4 Pro drone in different agricultural areas.
- (3) The study of the temporal constraints based on the Hardware/Software Co-Design approach.
- (4) The development of a hardware acceleration on several low-cost embedded architectures in order to respect the architectural and temporal constraints.

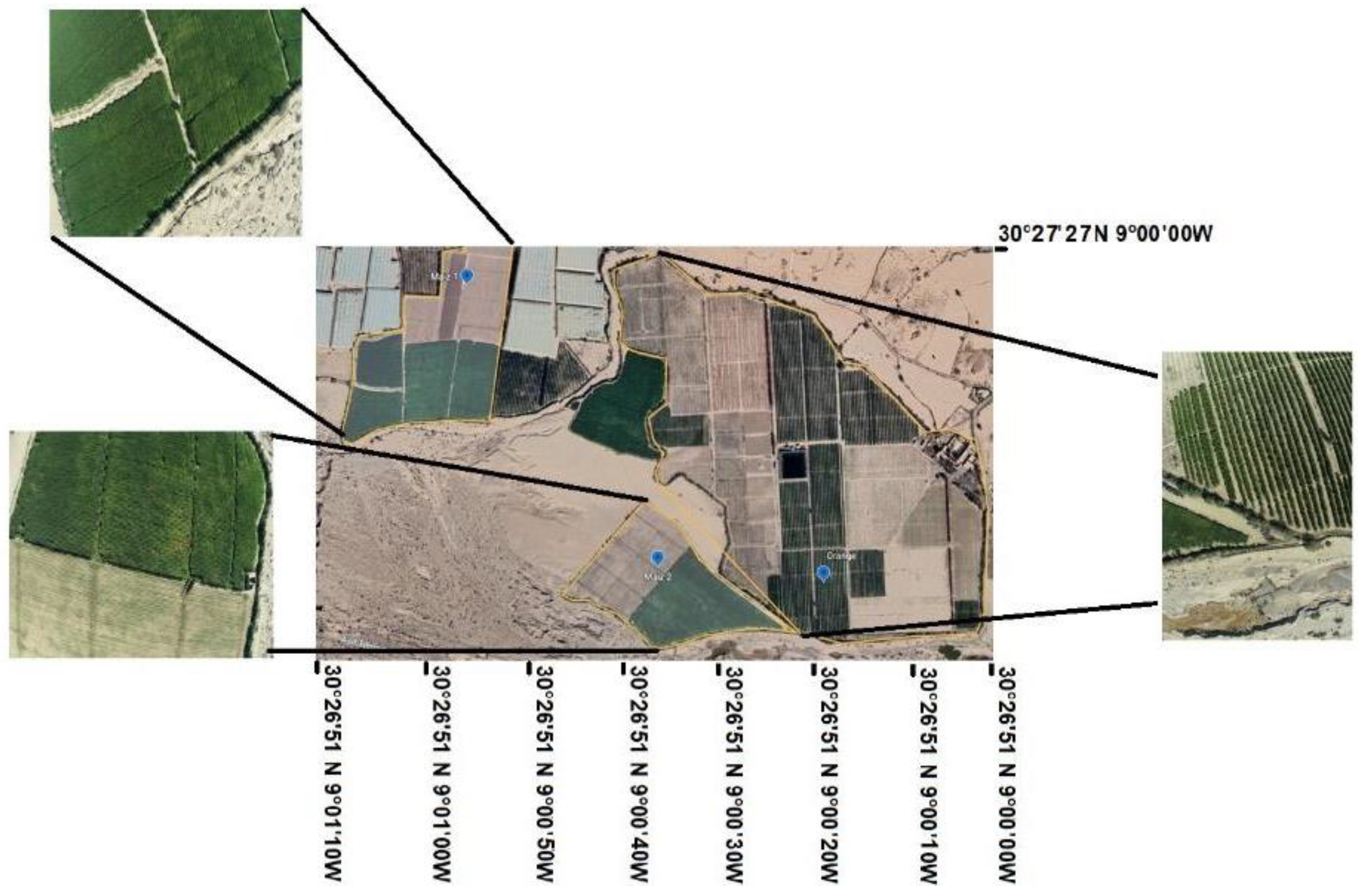

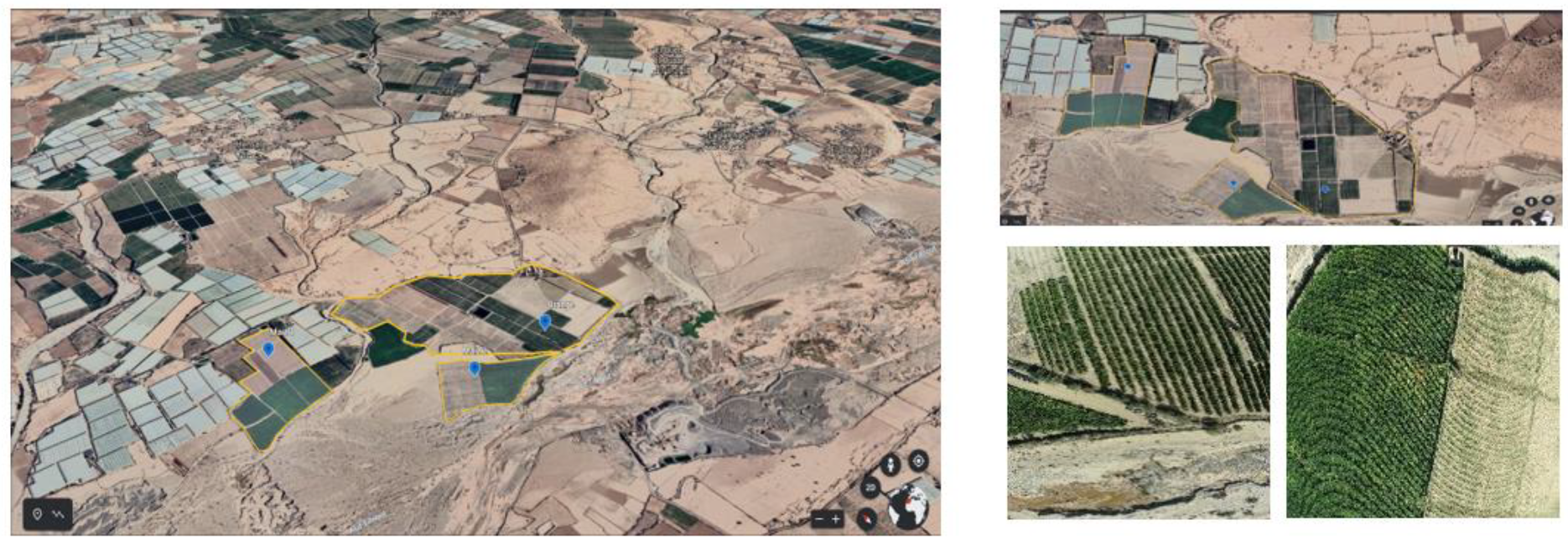

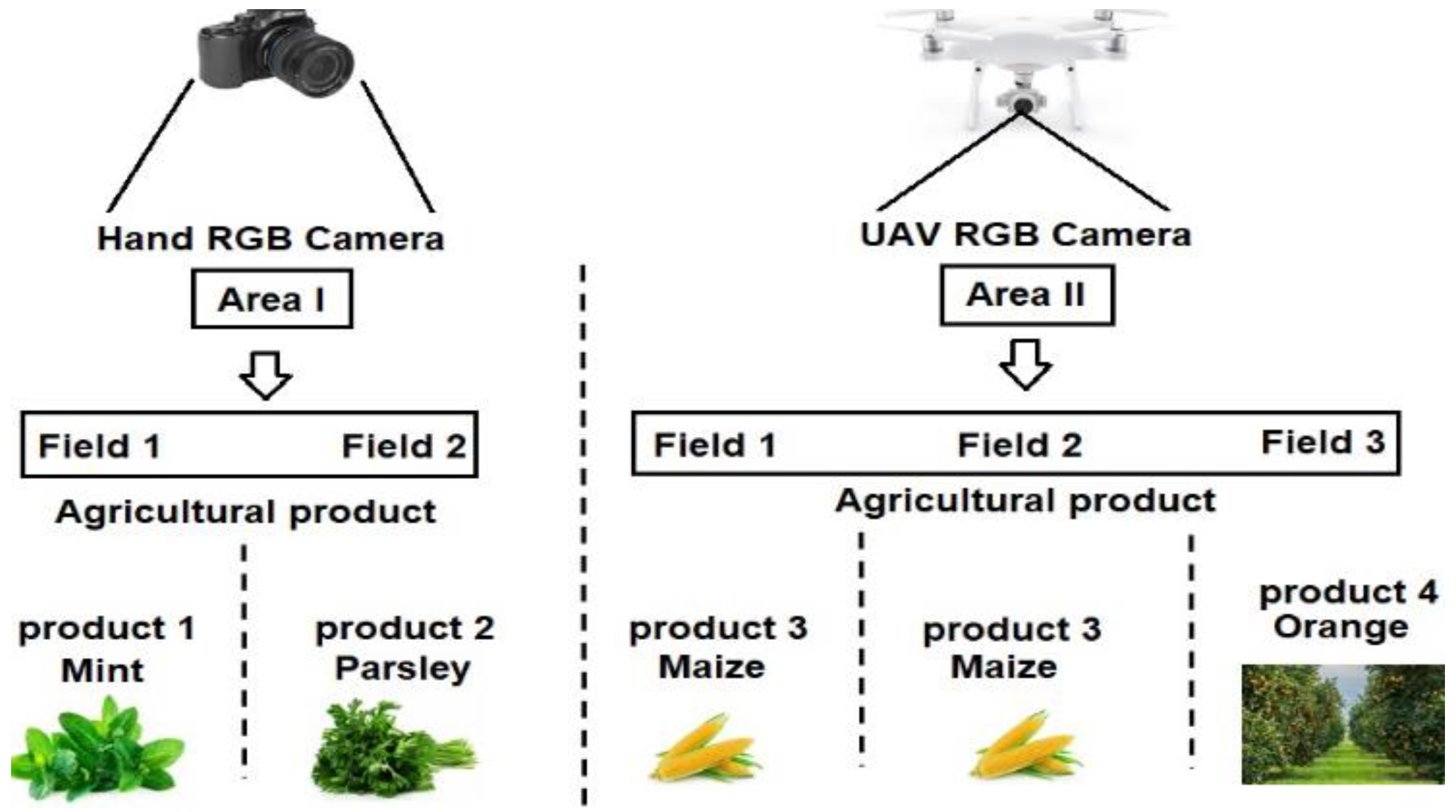

Our algorithm has been evaluated on several embedded systems: the Odroid XU4, the Jetson Nano, and the Raspberry Pi. The objective of these implementations is to study the adequacy between the hardware and the software. The results showed that the XU4 board remains the best choice for our application, thanks to its low power consumption and cost as well as its solid parallel computation capability. The tools used in this evaluation are C/C++, OpenMP, and OpenCL. C/C++ was used to validate the proposed algorithm on the chosen system, OpenMP to exploit the CPU parallelism of the selected board, and OpenCL to exploit its Graphics Processing Unit (GPU) in order to minimize the processing time. The proposed algorithm has a complexity of O(K × (i × j) × log2(i × j)), where K is the number of data items used and i × j is the image size. The data for this study come from the agriculture of the Souss Massa region in the south of Morocco, which is considered one of the most productive agricultural regions in the country. Our database was collected using a DJI UAV and RGB cameras for the maize and orange fields, and by hand for parsley and mint.
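To get a feel for the complexity expression above, its growth term can be evaluated numerically. The following is a minimal sketch and not part of the original implementation: the function name `estimated_operations` is hypothetical, K is assumed here to count images, constant factors are ignored, and the two resolutions are the smallest and largest ones reported in the resolution study.

```python
import math


def estimated_operations(num_images: int, height: int, width: int) -> float:
    """Growth term K * (i * j) * log2(i * j); constant factors are ignored."""
    pixels = height * width
    return num_images * pixels * math.log2(pixels)


# Compare one low-resolution and one high-resolution image.
small = estimated_operations(1, 480, 640)
large = estimated_operations(1, 3648, 5472)
print(f"640 x 480:   {small:.3e} operations (up to a constant)")
print(f"5472 x 3648: {large:.3e} operations (up to a constant)")
print(f"relative cost: x{large / small:.1f}")
```

The estimate only indicates how the cost scales with image size; measured times also depend on memory bandwidth and on the acceleration applied to each block.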

Our paper is organized as follows: the first part reviews recent works and gives an overview of the RGB indices used in agriculture. The second part describes our methodology, based on the algorithmic study and the agricultural fields used. The third part presents the proposed implementation and the hardware-software results obtained from the embedded systems. Then, we present the real results obtained from the selected agricultural fields. Finally, we finish with a conclusion and future work.

2. Background and Related Work

Monitoring agricultural fields helps many farmers form an idea of the state of their different plants. This monitoring needs a dedicated system able to extract useful information from the fields. Among the most relevant information about crop coverage are the vegetation indices. These indices are algebraic equations that take the spectral bands of the cameras as arguments, and the bands used vary from one index to another. Vegetation monitoring relies on the absorption and reflection of the red, green, blue, shortwave infrared, and near-infrared bands, and different indices capture different combinations of these reflections and absorptions. For example, to calculate the vegetation and water indices, it is necessary to use the R, G, B, and Near InfraRed (NIR) bands. On the other hand, the humidity index is based on the Short-Wave InfraRed (SWIR) and R bands. Data collection is done using several tools; the choice depends on the specific application. We can also find indices based on the R, G, and B bands only; these indices have proved reliable in monitoring agricultural fields.

Table 1 shows the different indexes based on the three RGB bands.

Table 1 shows the most common vegetation indices used in precision agriculture; these indices are easy to calculate using RGB cameras. By contrast, the Normalized Difference Vegetation Index (NDVI), which aims to extract the vegetation amount from various plants, requires a multispectral camera, which is expensive compared to RGB cameras. A.A. Gitelson et al. showed that the VARI estimated vegetation fraction with an error of less than 10% [

19]. This shows the robustness of this index for vegetation estimation. The evaluation of the index was on a region near the city of Beer-Sheva, Israel. The authors in [

18] used a UAV to calculate these indices with an acquisition frequency of two frames/s. We also find the work of P. Ranđelović et al., 2020, which used RGB indices to predict plant density [

20].
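As an illustration of how cheaply these RGB indices can be computed, the sketch below uses the definitions commonly given in the literature, NGRDI = (G − R)/(G + R) and VARI = (G − R)/(G + R − B), which are assumed here to match Table 1. The function names, the nested-list image layout, and the choice to return 0 when the denominator is zero are assumptions made for this example.

```python
def ngrdi(r: float, g: float, b: float) -> float:
    """Normalized Green-Red Difference Index; b is unused but kept so both
    indices share one signature."""
    denom = g + r
    return (g - r) / denom if denom != 0 else 0.0


def vari(r: float, g: float, b: float) -> float:
    """Visible Atmospherically Resistant Index."""
    denom = g + r - b
    return (g - r) / denom if denom != 0 else 0.0


def index_map(image, index_fn):
    """Apply an index to every (r, g, b) pixel of an image given as rows."""
    return [[index_fn(*pixel) for pixel in row] for row in image]


# A tiny hypothetical 1 x 3 image: vegetation-like, soil-like, and black pixel.
image = [[(50, 150, 40), (120, 100, 80), (0, 0, 0)]]
print(index_map(image, ngrdi))
print(index_map(image, vari))
```

Both indices need only a few arithmetic operations per pixel, which is why they are attractive for low-cost RGB cameras and embedded processing.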

The scientific literature presents various data collection tools, but the most well-known and effective ones are ground robots, satellites, UAVs, and hand-collected data. We can also find several sensors such as RGB, multispectral, and hyperspectral cameras. All these tools and sensors share the goal of monitoring and tracking the plantations in agricultural fields. Several recent works have aimed to improve the quality of monitoring and the reliability of the results. D. Shadrin et al., 2019 proposed a GPU-based embedded system that performs plant growth analysis via artificial intelligence. They used an algorithm based on Long Short-Term Memory (LSTM), evaluated on a desktop and a Raspberry Pi 3B board. The weak point here is the limited GPU computation capability of the Raspberry board; moreover, these boards do not support the Compute Unified Device Architecture (CUDA), which accelerates processing on the GPU. Nevertheless, the results of this work are detailed and informative with regard to both execution time and energy consumption [

21]. Another work has been proposed to ensure the autonomous movement of robots performing tasks such as weed detection, plant counting, or vegetation monitoring. It applied different Simultaneous Localization and Mapping (SLAM) algorithms in agricultural fields. However, the work was evaluated on a laptop, and no embedded study was made. Evaluating these types of algorithms on conventional machines does not guarantee that they can be implemented on low-cost embedded systems to ensure the optimal movement of the robot in agricultural fields. Nevertheless, the work has shown that localization and mapping algorithms, which were originally developed for the automotive field, can also be helpful in agriculture [

22]. X.P. Burgos-Artizzu et al., 2011 proposed two subsystems for agricultural field monitoring and weed detection. The first subsystem is dedicated to trajectory identification and the second to weed detection. The algorithm was implemented in C++ and evaluated on a desktop, where it processed eight frames/s; the results showed an accuracy of 90% for weed detection [

23].

Table 2 presents a synthesis of the different works on agricultural field monitoring.

All these applications are based on the monitoring of indices. RGB indices are an alternative to indices based on multispectral cameras, and this alternative allows us to monitor agricultural fields with low-cost equipment such as RGB cameras. The development of embedded systems has given us a flexible choice of architectures, generally characterized by low power, low cost, and high performance, which matters especially for autonomous applications that run without intervention. For this reason, developing this kind of system requires considering the algorithmic, architectural, and energy consumption constraints while preserving the reliability and precision of the results [

24]. The use of embedded systems in agriculture helps to achieve complicated tasks as fast as possible. These systems fall into two categories: homogeneous systems, based on a CPU, an FPGA, or a DSP alone, and heterogeneous systems, which combine a CPU with a GPU, FPGA, or DSP. Their primary role is the acceleration of algorithms written in a high-level language. Usually, C/C++ is dedicated to homogeneous systems such as CPUs and DSPs. For the construction of dedicated architectures, we find the FPGA, which is characterized by low energy consumption; its weak point is the coding complexity of this type of architecture. Plain C/C++ is generally limited when we want to speed up the processing [

30,

31,

32]. For this reason, OpenMP directives remain an excellent solution for accelerating C/C++ code.

On the other hand, CUDA and OpenCL offer high-performance acceleration in heterogeneous systems: CPU-GPU for CUDA and CPU-GPU/FPGA/DSP for OpenCL. Despite its advantages, CUDA is restricted to Nvidia architectures, which encourages the use of OpenCL, as it is portable across different architectures. For this reason, we have chosen to use OpenMP and OpenCL. The use of these tools and of heterogeneous systems is still very limited in precision agriculture, as most works are based on software running on workstations, which restricts the use of autonomous systems in real scenarios.

Without embedded systems, the processing is done offline and does not take into consideration a variety of problems that arise in the field. Among these problems is the blur generated by the type of camera or by the movement of the tools used for data collection, either robots or UAVs. This blur can affect the reliability of the results, which is incompatible with the constraints of an autonomous embedded system [

33]. Moreover, a very critical parameter influencing the data collection is memory saturation [

34].

4. Hardware-Software Results based on CPU and CPU-GPU Architecture

The methodology followed in implementing our hybrid algorithm first validates the global algorithm on the desktop in order to interpret the results. Once the algorithm is validated on the conventional machine, we move to the implementation on the embedded boards. The algorithm is first implemented in C/C++ in order to evaluate the temporal constraint. Then we separate the algorithm into blocks; in our case, we have three. The first block is for the detection and elimination of blur, the second is for the calculation of indices, and the third is for image compression. This separation follows a preprocessing, processing, and post-processing scheme. After separating the blocks, we divide each block into Functional Blocks (FB) and evaluate the time consumed by each one. As a result, we separate block 1 into six functional blocks and block 3 into six functional blocks as well. The temporal evaluation showed that FB4 consumes most of the processing time in the first block, and that FB4 and FB5 consume the most in the third block. The acceleration was based on OpenMP and OpenCL to exploit the parallelism of the CPU and the GPU. The choice of the board to be studied further depends not only on processing time but also on energy consumption, because the system is intended to be a low-cost, low-energy architecture.
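The per-block temporal evaluation described above can be sketched as a small profiling harness. This is only an illustration under stated assumptions: the helper names `profile_blocks` and `hotspot` are hypothetical, the three stage functions are placeholders rather than the real functional blocks, and the original evaluation was done in C/C++ on the target boards.

```python
import time


def profile_blocks(stages, data, repeats=100):
    """Run the stages as a pipeline `repeats` times and return the average
    time per stage in milliseconds."""
    totals = {name: 0.0 for name, _ in stages}
    for _ in range(repeats):
        value = data
        for name, fn in stages:
            start = time.perf_counter()
            value = fn(value)
            totals[name] += time.perf_counter() - start
    return {name: 1000.0 * total / repeats for name, total in totals.items()}


def hotspot(avg_ms):
    """Name of the stage with the largest average time."""
    return max(avg_ms, key=avg_ms.get)


# Placeholder stages standing in for functional blocks (not the real FBs).
stages = [
    ("FB1", lambda values: values),
    ("FB2", lambda values: [v * 2 for v in values]),
    ("FB3", lambda values: sorted(values, reverse=True)),
]
avg_ms = profile_blocks(stages, list(range(1000)), repeats=20)
print(avg_ms)
print("candidate for acceleration:", hotspot(avg_ms))
```

Averaging over many runs, as the paper does with a sequence of 100 images, smooths out the variation between individual images before the most expensive functional block is selected for acceleration.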

4.1. Specific Systems

Our implementation was based on a desktop for validation and three embedded architectures for comparison. The desktop has an Intel i5-5200U CPU @ 2.2 GHz based on the Broadwell architecture and an Nvidia GeForce 920M GPU @ 954 MHz based on the Kepler architecture. The first embedded architecture is the Odroid XU4, which supports OpenCL and integrates the Samsung Exynos5422 processor, with a CPU @ 2 GHz for the Cortex-A15 cores and @ 1.4 GHz for the Cortex-A7 cores, and an ARM Mali GPU @ 600 MHz. The second is the Raspberry Pi 3 B+ board with an ARM Cortex-A53 CPU @ 1.4 GHz and a Broadcom VideoCore-IV GPU @ 400 MHz. The third architecture used in our evaluation is the Jetson Nano with an ARM Cortex-A57 CPU @ 1.43 GHz and a 128-core Maxwell GPU @ 640 MHz.

Table 6 defines the system specification used.

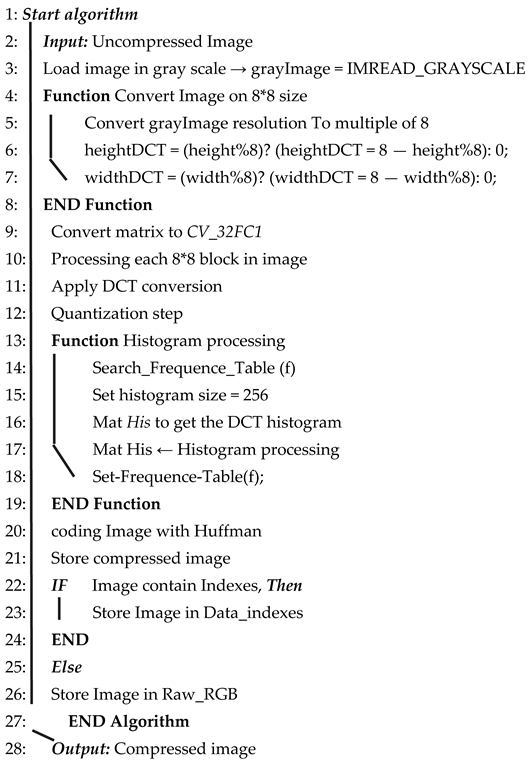

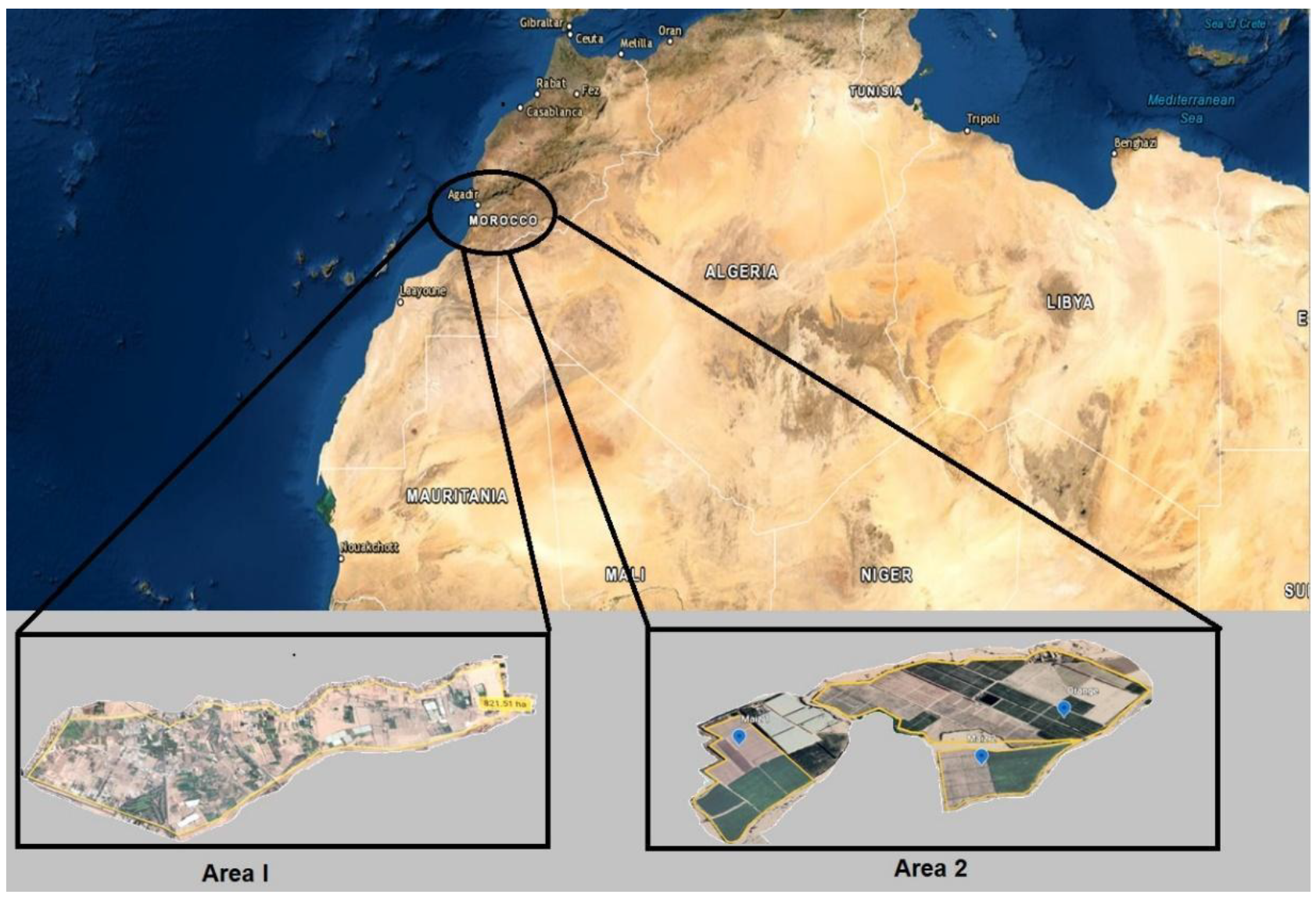

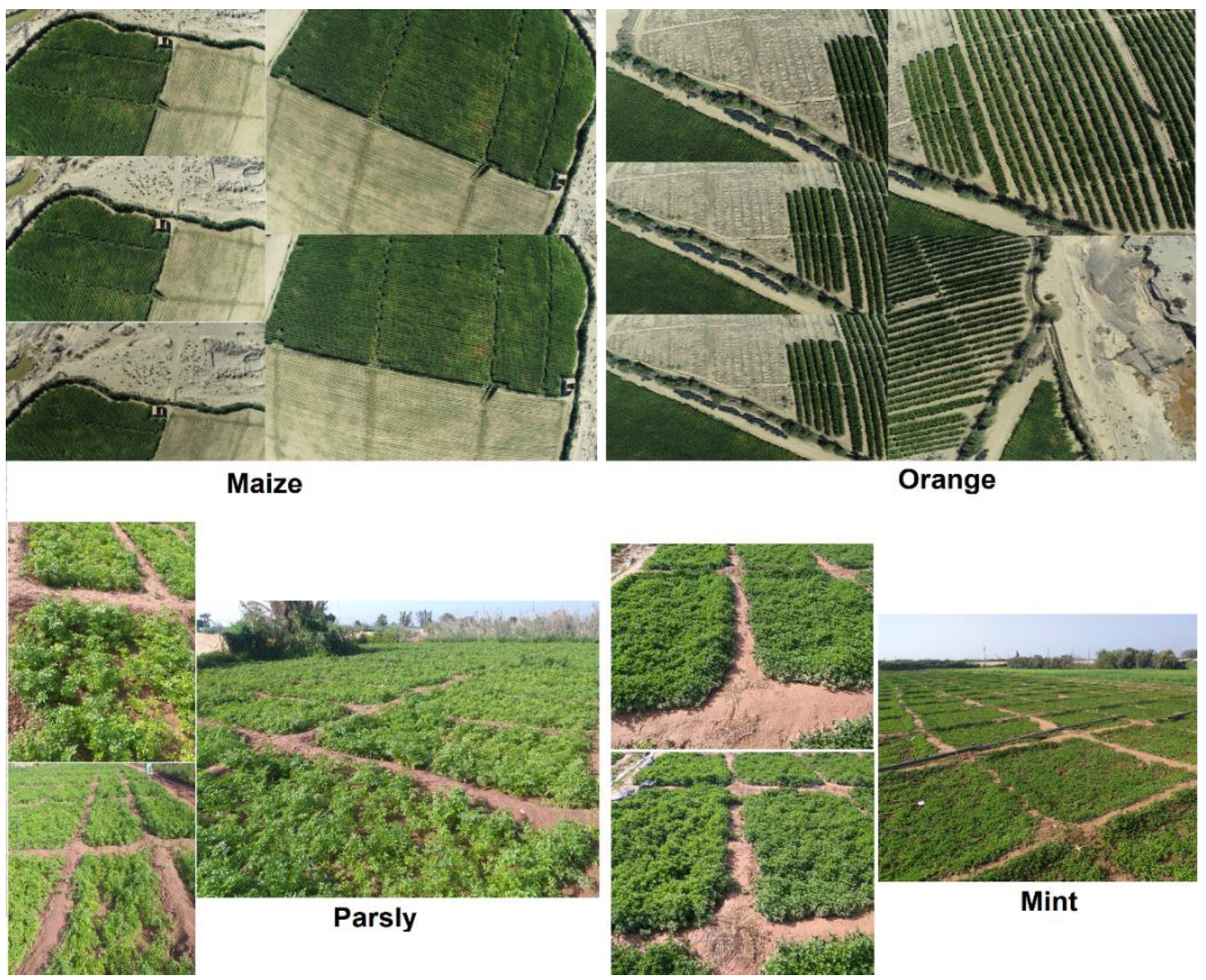

The data used in this paper are divided into two types: one collected by hand and the other collected by a DJI unmanned aerial vehicle (UAV).

Figure 8 shows an example of the data used in our evaluation. The left image was collected by a UAV for the maize and orange crops, while the image on the right is for mint and parsley. These crops were chosen because of their popularity on farms in the southern Moroccan region.

4.2. Sequential Implementation of the CPU-Based Algorithm

The implementation on the CPUs of the used architecture is generally done in a sequential mode. This implementation, in our case, is based on the C/C++ language. After the temporal evaluation of the different blocks, we proceed to the distribution of each block in functional blocks, which reflect the various treatments used in the chosen block.

Table 7 shows the processing time consumption of each block in our algorithm.

The time evaluation on several machines showed that the desktop consumes less time than the other systems, with a total of 133.6 ms to process each image. Next come the two embedded systems, Jetson Nano and XU4, which consume 316.4 and 386.4 ms per image, respectively. These processing times are close, given the characteristics of each system. The Raspberry board consumes 703.8 ms per image. From this first analysis, we can say that blocks 1 and 3, which deal with blur detection and compression, consume more time than block 2, except on the desktop. This pushes us to analyze each block to see which part consumes the most. The approach is to separate each block into functional blocks, each of which handles one task of the main block. In our case, we have divided the first block into six functional blocks.

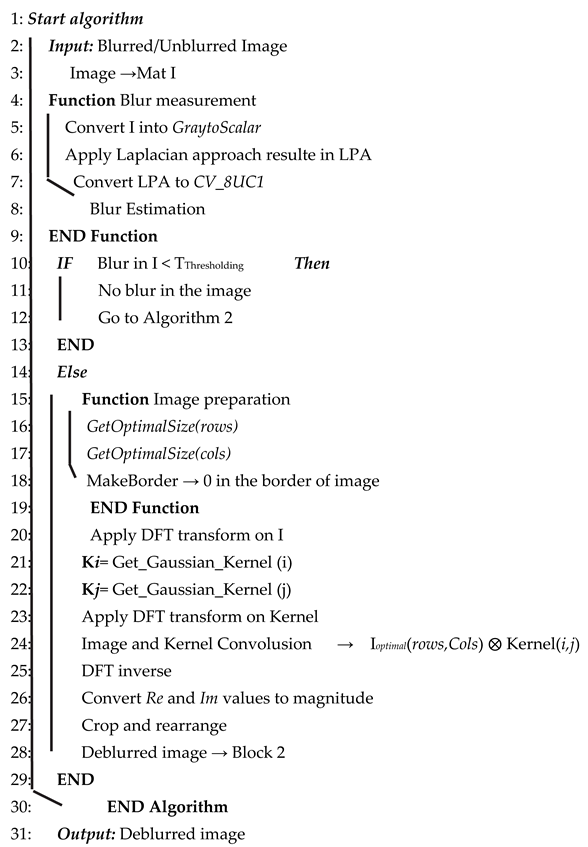

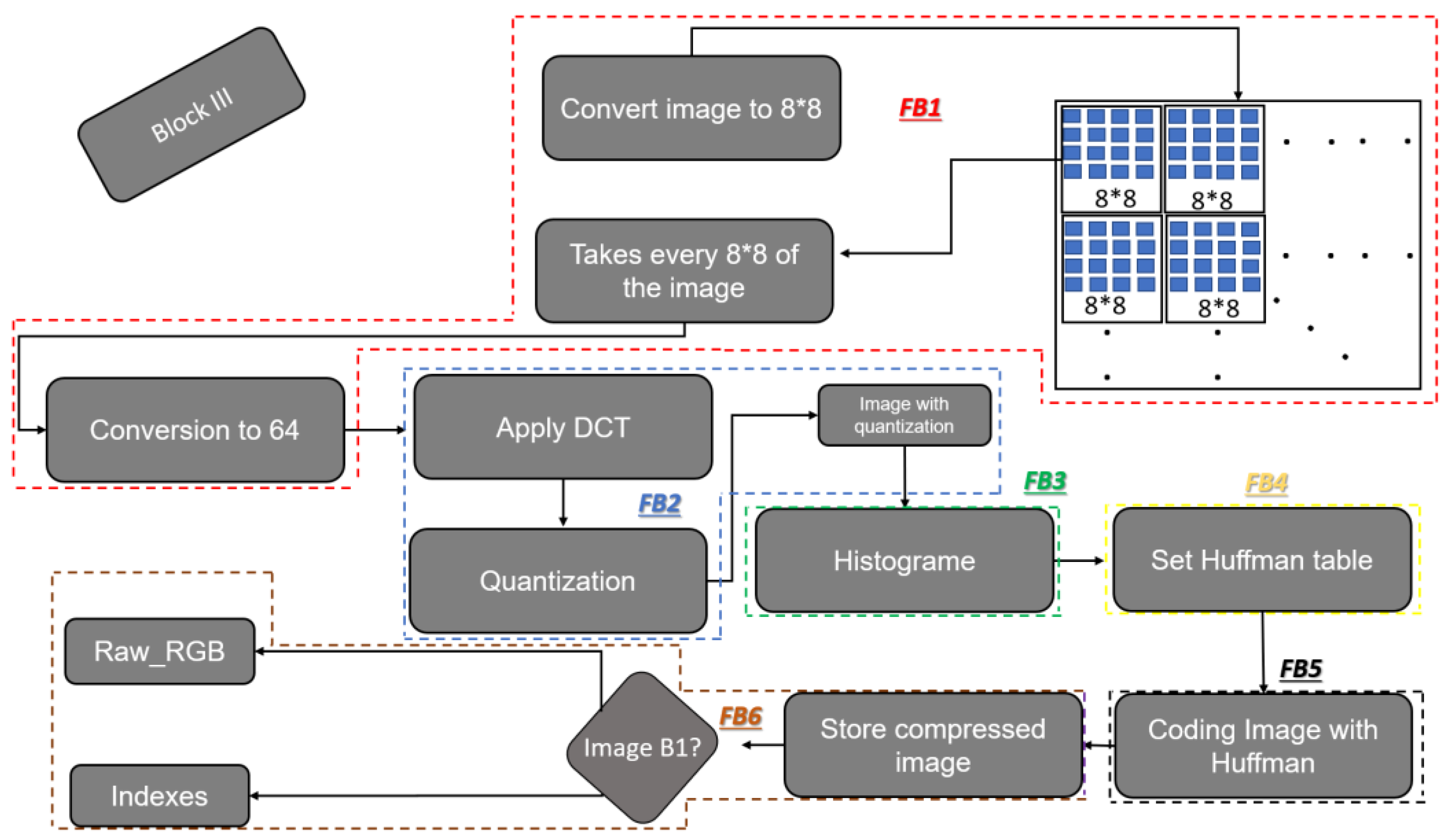

Figure 9 shows the functional blocks used in our case based on block 1, which is responsible for blur detection and elimination as indicated in Algorithm 1.

The first functional block (FB1) is dedicated to the blur test; this test makes it possible to skip the deblurring when an image does not contain blur, which decreases the processing time in those cases. The advantage over the other techniques is that, if there is no blur, the algorithm goes directly to block 2 to calculate the indices. FB2 prepares the image if it is blurred. FB3 applies the Discrete Fourier Transform (DFT) to the image and the kernel. FB4 performs the convolution between the image and the kernel using their DFTs. FB5 applies the Inverse Discrete Fourier Transform, and finally FB6 computes the magnitude and rearranges the result before sending the image to block 2 for index processing. Algorithm 1 shows the processing details of block 1. This separation converts our algorithm into a functional block map that consists of six FBs in block 1, giving a global view of the processing.
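The gating role of FB1 can be illustrated with a short sketch. The blur measure used by the authors is defined in Algorithm 1 and is not reproduced here; the example below substitutes a common stand-in, the variance of the Laplacian, purely for illustration. The function names, the threshold of 100, and the nested-list grayscale layout are all assumptions of this example.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over interior pixels.
    `gray` is a list of rows of intensities, at least 3 x 3 in size."""
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_blurred(gray, threshold=100.0):
    """Assumed rule: few sharp edges (low variance) means a blurred image."""
    return laplacian_variance(gray) < threshold


def block1(gray, deblur):
    """FB1 gate: run the costly deblurring only when the test reports blur."""
    return deblur(gray) if is_blurred(gray) else gray


# A checkerboard has strong edges; a constant image has none.
sharp = [[255 * ((x + y) % 2) for x in range(6)] for y in range(6)]
flat = [[128] * 6 for _ in range(6)]
print(is_blurred(sharp), is_blurred(flat))
```

Whatever measure is used, the design point is the same: a cheap test in FB1 lets sharp images bypass FB2 to FB6 and go straight to the index calculation.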

Figure 10 shows block map 2.

In our case, the second block is described in [

30]; for this reason, we focused only on blocks 1 and 3.

Figure 10 shows that the compression algorithm is also divided into six functional blocks. FB1 searches for the optimal size to separate the image into 8 × 8 blocks and then converts it to 64 bits. FB2 takes care of the Discrete Cosine Transform (DCT) and the quantization, and FB3 computes the histogram. Then FB4 fills the Huffman tables and FB5 codes the image based on these tables. The sixth functional block (FB6) handles the storage of the compressed images and the database management by testing whether an image contains the computed indices or is a raw RGB image. After the specification of the different functional blocks, a time evaluation is applied to determine which functional blocks consume the most time. The time evaluation was based on the desktop, XU4, Jetson Nano, and Raspberry.
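The histogram, Huffman table, and coding steps (FB3 to FB5) can be sketched as follows. This is a generic textbook Huffman construction, not the authors' implementation; the function names are hypothetical, and the input is assumed to be a flat list of already quantized coefficients.

```python
import heapq
from collections import Counter


def huffman_table(symbols):
    """Build a prefix-free code table from the symbol histogram."""
    histogram = Counter(symbols)                    # FB3: histogram
    if not histogram:
        return {}
    if len(histogram) == 1:                         # a single symbol still needs one bit
        return {next(iter(histogram)): "0"}
    # Heap entries: (count, unique tie-breaker, partial code table).
    heap = [(count, i, {sym: ""})
            for i, (sym, count) in enumerate(histogram.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:                            # FB4: fill the table
        count1, _, table1 = heapq.heappop(heap)
        count2, _, table2 = heapq.heappop(heap)
        merged = {s: "0" + code for s, code in table1.items()}
        merged.update({s: "1" + code for s, code in table2.items()})
        heapq.heappush(heap, (count1 + count2, tie, merged))
        tie += 1
    return heap[0][2]


def encode(symbols, table):
    """FB5: replace every symbol by its code word."""
    return "".join(table[s] for s in symbols)


# Hypothetical quantized coefficients: mostly zeros, as after quantization.
coefficients = [0] * 10 + [1] * 3 + [-1] * 2 + [5]
table = huffman_table(coefficients)
bits = encode(coefficients, table)
print(table)
print(len(bits), "bits instead of", 2 * len(coefficients), "with a fixed 2-bit code")
```

Because quantization produces many repeated values, frequent symbols receive short code words, which is where most of the size reduction comes from.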

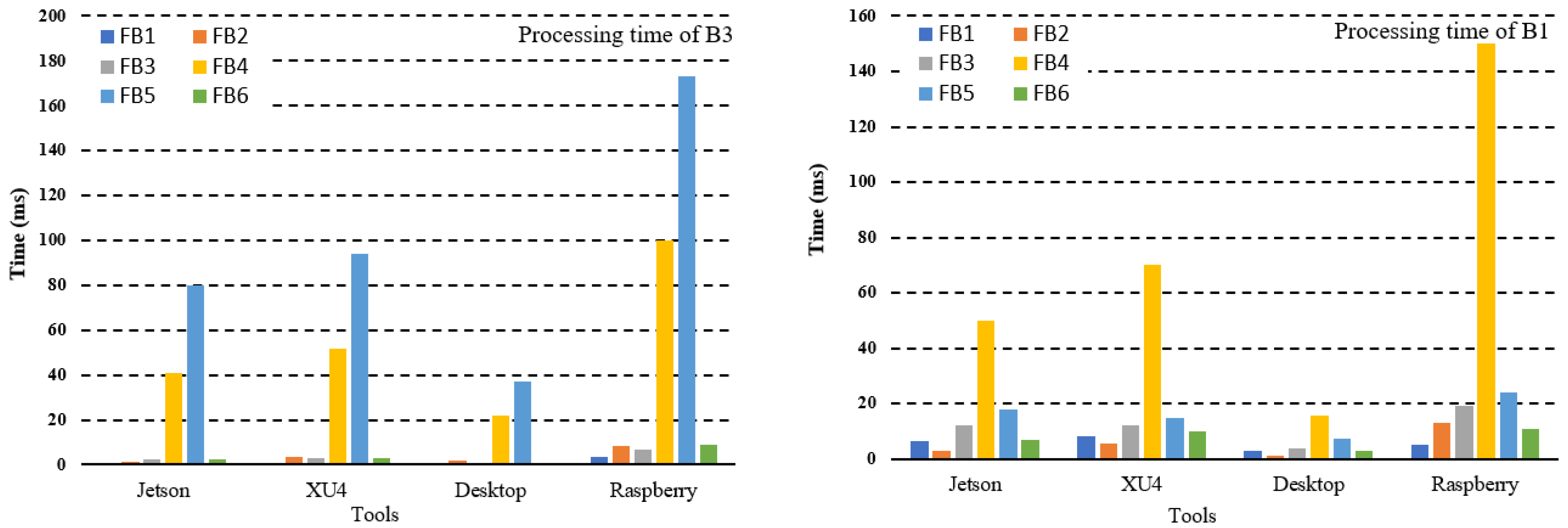

Figure 11 shows the results obtained for each FB.

Figure 11 shows the results of the time evaluation for blocks 1 and 3. From the processing time analysis, we can conclude that in the case of block 1 (figure on the right), FB1 consumes 6.4 ms on the Jetson Nano, 8.1 ms on the XU4 board, 3.1 ms on the desktop, and 5 ms on the Raspberry board. FB2 consumes 2.8 ms on the Jetson Nano, 5.4 ms on the XU4, 1.2 ms on the desktop, and 13 ms on the Raspberry board.

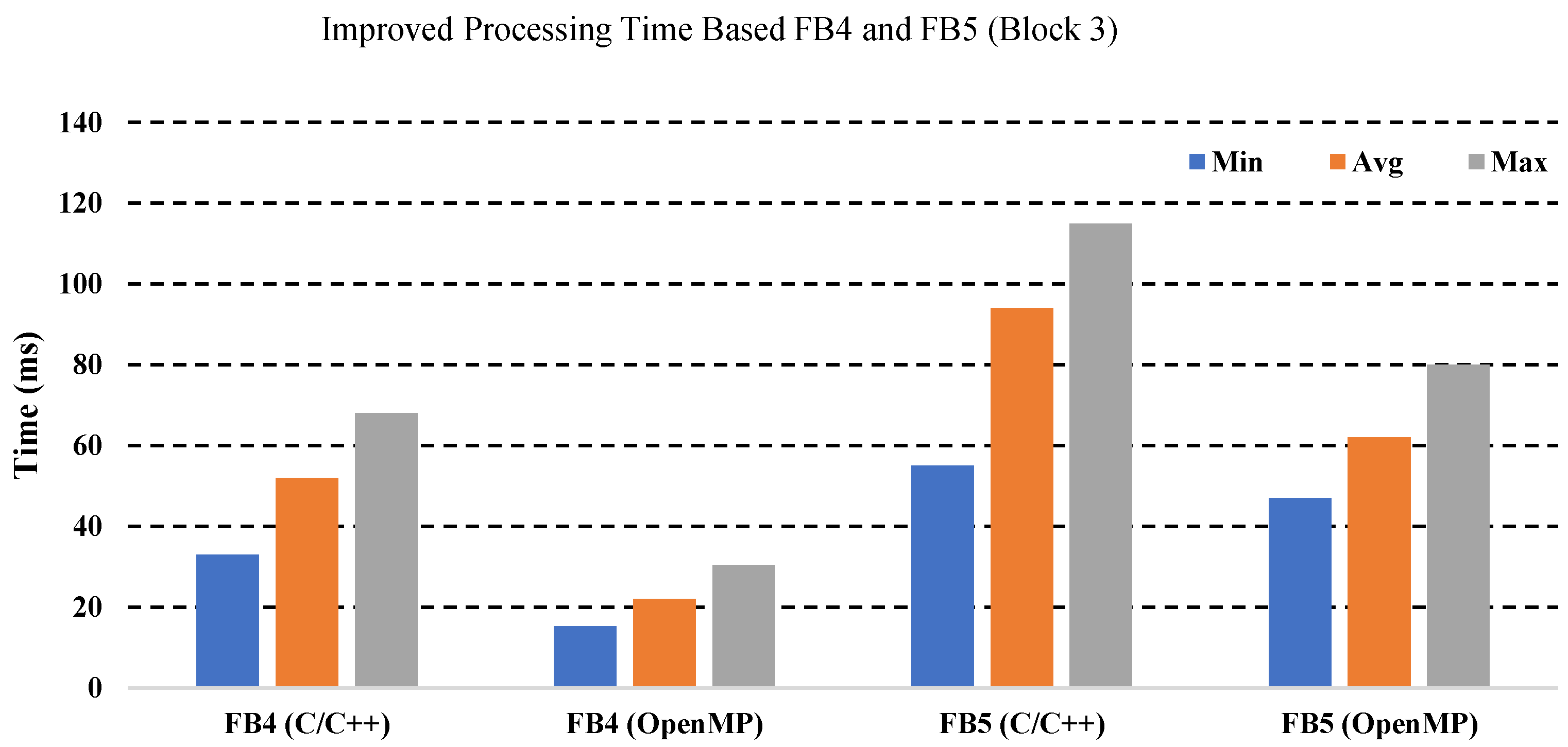

FB3 takes 3.7 ms on the desktop, 19 ms on the Raspberry, and 12 ms on both the XU4 and Jetson Nano. Functional block 4 takes the largest share of the time because of the convolution product between the image and the kernel, as shown by the yellow curve. For this reason, FB4 is the functional block selected for acceleration, which will reduce the total time of block 1. FB5 and FB6 consume less time than FB4. For block 3, the time evaluation showed that FB4 and FB5 consume more time than the other functional blocks, so these functional blocks also require acceleration. The time evaluation of the blocks was based on a sequence of 100 images in order to calculate the average processing time.

Table 7 summarizes the different processing times obtained. From this table, we can conclude that the Jetson Nano board and the desktop give the best results.

Although the desktop gives a lower time than the other systems, the drawbacks of this conventional machine are its power consumption and weight. Similarly, the Jetson Nano board is 70 ms faster than the XU4 board. It has a low cost, but it has a very high power consumption compared to the XU4 and Raspberry boards. This does not suit our purpose, because the study aims to build a reliable real-time system with low cost and low power consumption. In this case, the best choices are the XU4 and Raspberry boards. The processing time analysis showed that the Raspberry board consumes more time, by a factor of about ×1.82, than the XU4, which consumes 386.4 ms. That pushed us to select the XU4 board for the acceleration of the algorithm, exploiting the CPU and GPU parts of this heterogeneous system. Energy consumption matters in our case because we need to preserve the autonomy of the drone or the robot while providing the maximum processing capacity.
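The ratios between boards can be recomputed directly from the sequential per-image times reported above (133.6, 316.4, 386.4, and 703.8 ms). The short sketch below does only this arithmetic; the dictionary and the helper name `slowdown` are introduced for illustration.

```python
# Sequential per-image processing times reported in the text, in milliseconds.
sequential_ms = {
    "Desktop": 133.6,
    "Jetson Nano": 316.4,
    "XU4": 386.4,
    "Raspberry": 703.8,
}


def slowdown(board, reference):
    """How many times slower `board` is than `reference`."""
    return sequential_ms[board] / sequential_ms[reference]


raspberry_vs_xu4 = slowdown("Raspberry", "XU4")
raspberry_vs_nano = slowdown("Raspberry", "Jetson Nano")
xu4_minus_nano = sequential_ms["XU4"] - sequential_ms["Jetson Nano"]

print(f"Raspberry vs XU4:         x{raspberry_vs_xu4:.2f}")
print(f"Raspberry vs Jetson Nano: x{raspberry_vs_nano:.2f}")
print(f"XU4 minus Jetson Nano:    {xu4_minus_nano:.1f} ms")
```

With these figures, the Raspberry board is slower than the XU4 by a factor of about 1.82 and slower than the Jetson Nano by a factor of about 2.22, while the XU4 and the Jetson Nano differ by 70 ms per image.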

Table 8 shows the processing time of different FBs.

4.3. CPU-GPU Implementation Based on OpenCL and OpenMP

Our second implementation was based on OpenCL and OpenMP to accelerate the functional blocks that take most of the time. We used both to exploit the CPU cores with OpenMP and the GPU cores with OpenCL. On the XU4 board, OpenMP acceleration on the CPU was used for the compression part, and OpenCL acceleration on the GPU for the blur elimination and index processing parts.

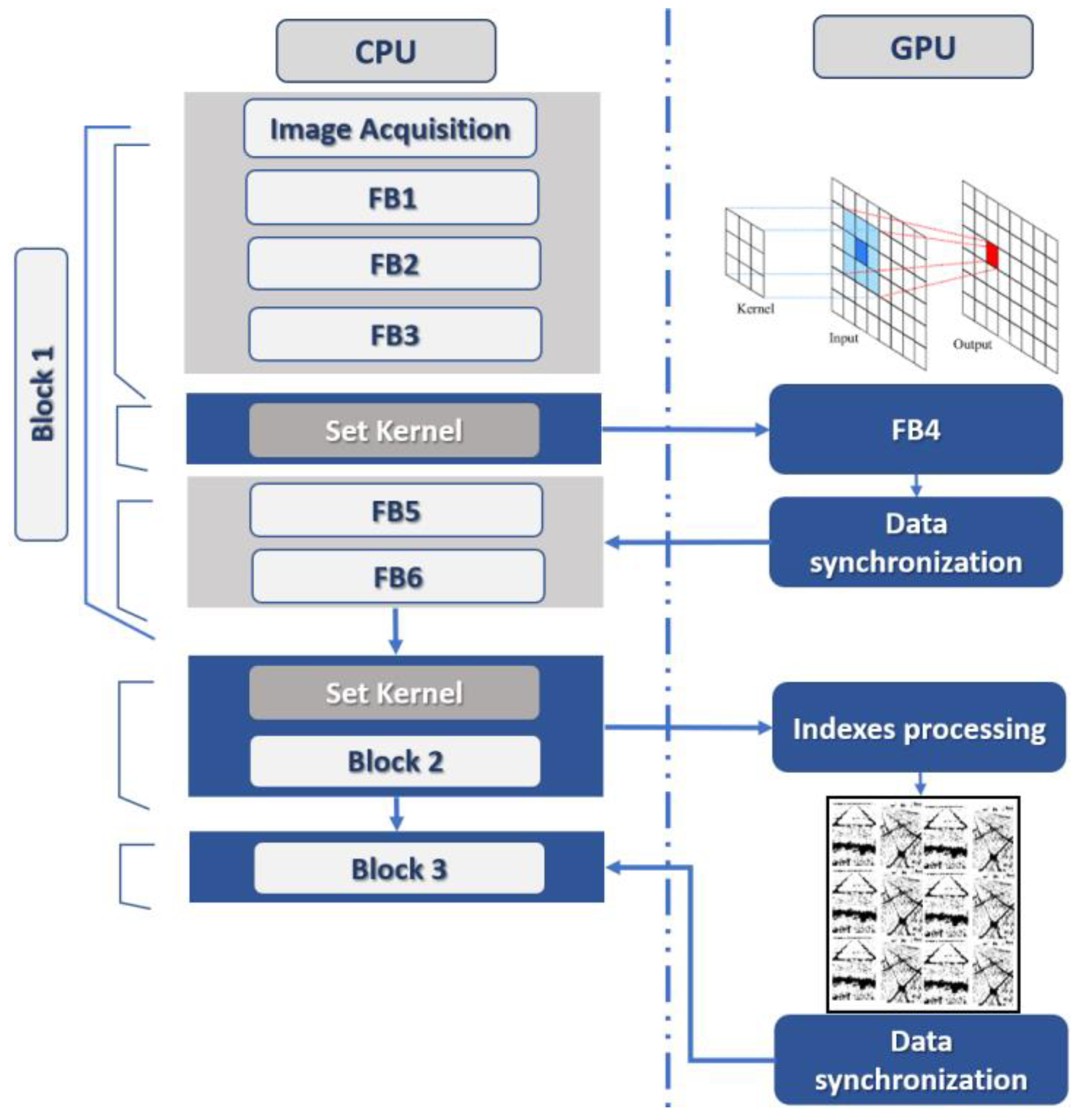

Figure 12 shows the implementation model based on the acceleration on the GPU part using OpenCL.

After the time evaluation shown in Table 8 and Figure 12, we have concluded that FB4 in block 1 consumes the most processing time. For this reason, we have opted to accelerate this FB on the GPU part of the board.

Figure 13 shows that after FB3, we call the kernel to run on the GPU part; in this case, the CPU part provides the necessary data for the execution. We have likewise accelerated block 2, which also takes a lot of time. After the kernels have been executed, we move on to FB4 and FB5 in block 3, which have been accelerated on the CPU part via OpenMP.

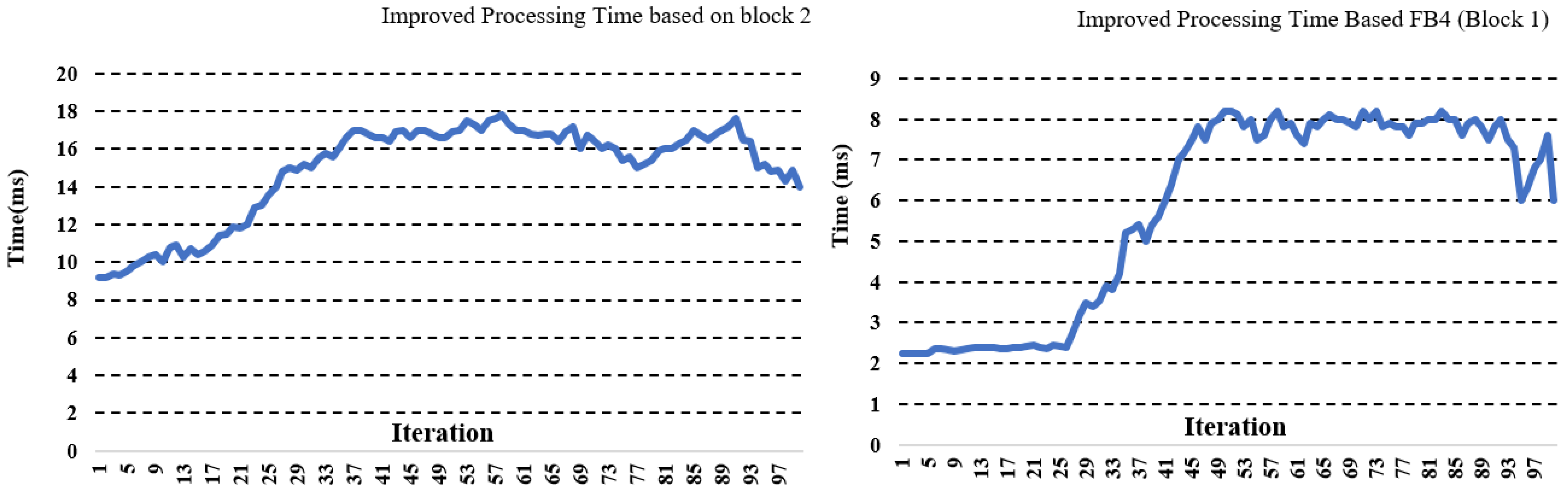

Figure 13 shows the results obtained.

Figure 14 shows the temporal evaluation obtained based on a sequence of 100 images. The image on the left shows the block 2 time graph, which varies between a minimum of 9.2 ms and a maximum of 17.8 ms; averaging over the image sequence gives a processing time of 14.89 ms per image. This is an improvement of ×7.3 compared to the sequential version, which consumes 110 ms. The variation in the curves is due to the fact that each image carries a different amount of information, which changes the processing time. The image on the right shows the processing time of FB4 in block 1 for the same 100 frames. The time varies between a minimum of 2.24 ms and a maximum of 8.2 ms, with an average of 5.8 ms, an improvement of ×12 compared to the sequential version, which consumes 70 ms. After improving blocks 1 and 2, we also enhanced block 3.
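The improvement factors quoted above follow from dividing the sequential time by the accelerated average. A minimal check of that arithmetic, using only the times given in the text (the helper name `speedup` is introduced for illustration):

```python
def speedup(sequential_ms: float, accelerated_ms: float) -> float:
    """Improvement factor: sequential time divided by accelerated time."""
    return sequential_ms / accelerated_ms


block2 = speedup(110.0, 14.89)      # block 2, GPU version
fb4_block1 = speedup(70.0, 5.8)     # FB4 of block 1, GPU version

print(f"block 2:          x{block2:.2f}")
print(f"FB4 of block 1:   x{fb4_block1:.2f}")
```

Both values agree with the reported ×7.3 and ×12 up to rounding.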

Figure 14 and Figure 15 show the results obtained.

Figure 14 shows the processing time of FB4 and FB5 of block 3, which took the most processing time. In this context, we obtained an average of 22 ms after acceleration, whereas the sequential version consumes 52 ms for FB4 and 62 ms for FB5 (94 ms is also reported for the sequential version).

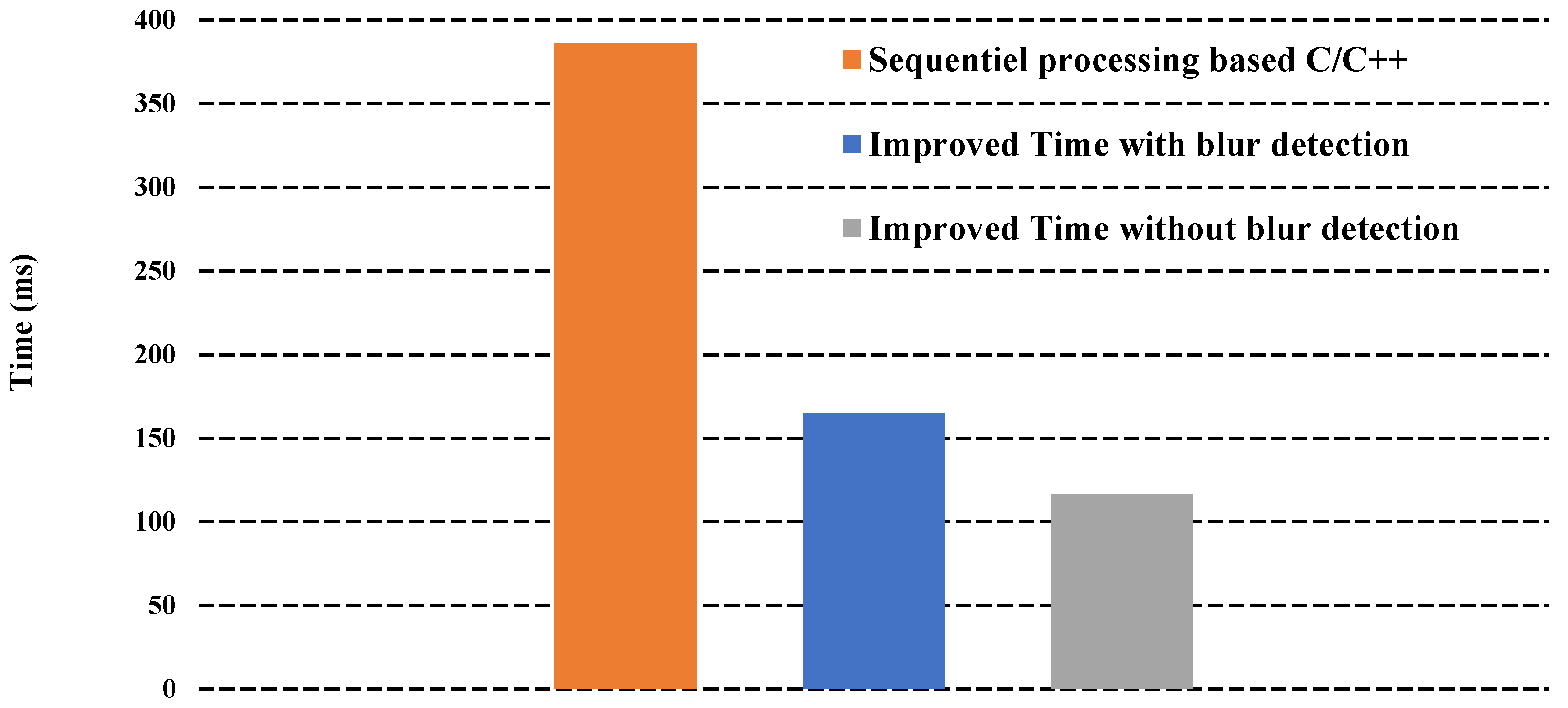

Figure 15 shows a comparison between the different times that include the sequential version and the improved version, as well as the case where we did not detect the blur in the image, so the algorithm moved directly to block 2 for the indices processing. This shows an improvement of ×3.3 in global processing time compared to the sequential version that took 386.4 ms.

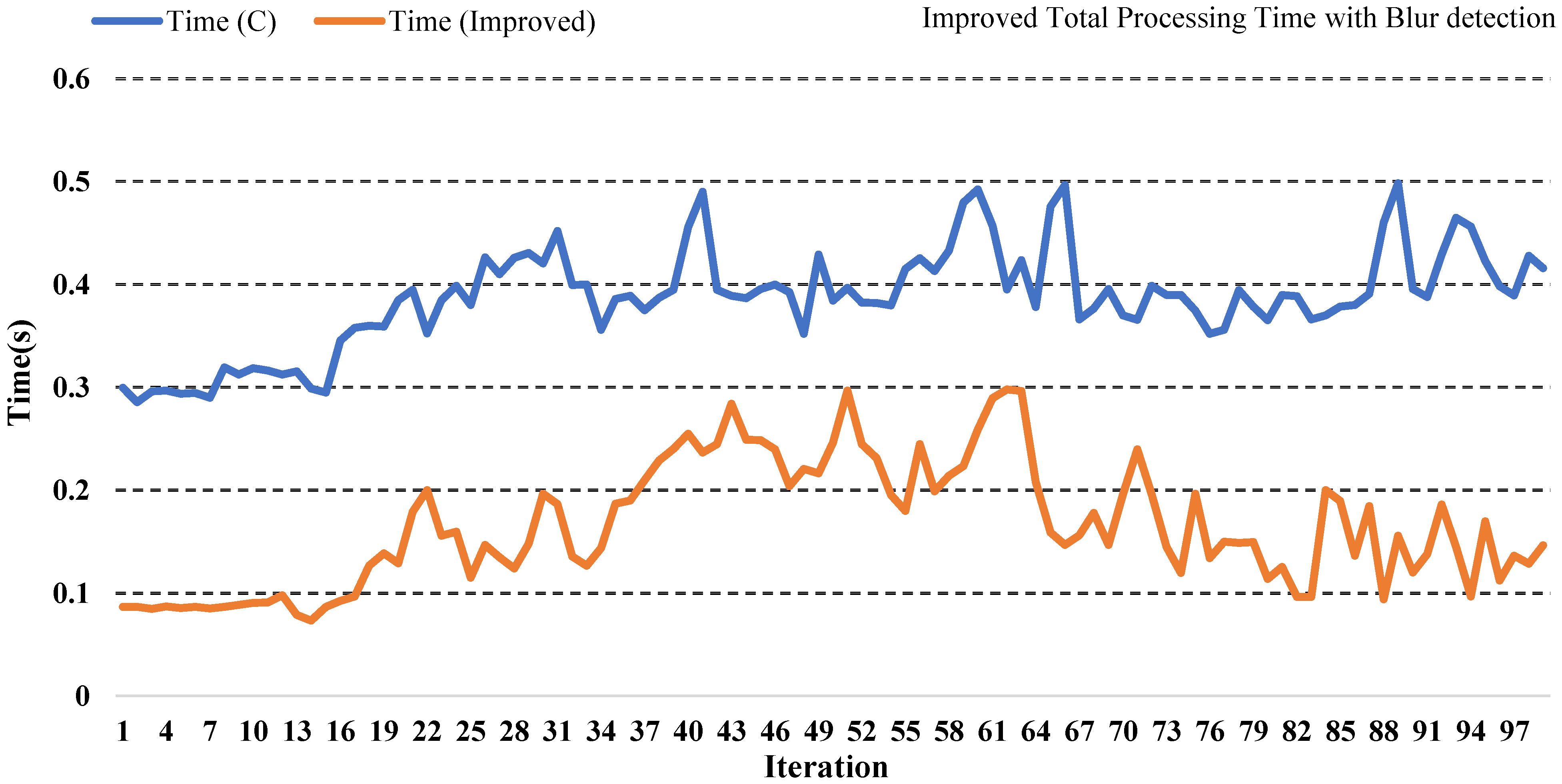

Figure 16 shows the total time obtained between the improved and sequential versions based on 100 iterations.

We subsequently evaluated our implementation based on several resolutions to see the effect of the resolution on the processing time and the number of images processed.

Table 9 shows the result obtained.

Table 9 shows that at 640 × 480 resolution we achieve a processing rate of 311 frames/s, and at 5472 × 3648 resolution we can process 6 frames/s. The rate drops to six frames/s because of the high resolution of the images, but it is still sufficient and respects the real-time constraint. If we take as an example the cameras most used in precision agriculture, the MicaSense RedEdge or the Parrot Sequoia, they have a time-lapse rate of two frames/s at 1280 × 960 resolution for the separate Red, Green, and Blue bands and at 4608 × 3456 for RGB, where the bands are combined in the same image. Our algorithm therefore respects the time constraint of 2 fps, which makes real-time processing possible.
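The real-time argument above amounts to comparing the processing rate with the camera's acquisition rate. The sketch below makes that comparison explicit for the two rates quoted in the text; the names `frame_budget_ms` and `meets_real_time` are hypothetical, and the 2 frames/s camera rate is the one stated above.

```python
CAMERA_FPS = 2.0  # acquisition rate quoted in the text for common cameras


def frame_budget_ms(fps: float) -> float:
    """Time available (or spent) per frame at a given rate, in milliseconds."""
    return 1000.0 / fps


def meets_real_time(processing_fps: float, camera_fps: float = CAMERA_FPS) -> bool:
    """Real time here means processing at least as fast as frames arrive."""
    return processing_fps >= camera_fps


# Processing rates reported in the text for the smallest and largest resolution.
reported_fps = {"640 x 480": 311.0, "5472 x 3648": 6.0}
for resolution, fps in reported_fps.items():
    print(f"{resolution}: {frame_budget_ms(fps):.1f} ms per frame, "
          f"budget {frame_budget_ms(CAMERA_FPS):.0f} ms, "
          f"real time: {meets_real_time(fps)}")
```

Even at the highest resolution, six frames/s leaves a margin of three times the acquisition rate.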

5. Experimental Results from Real Area

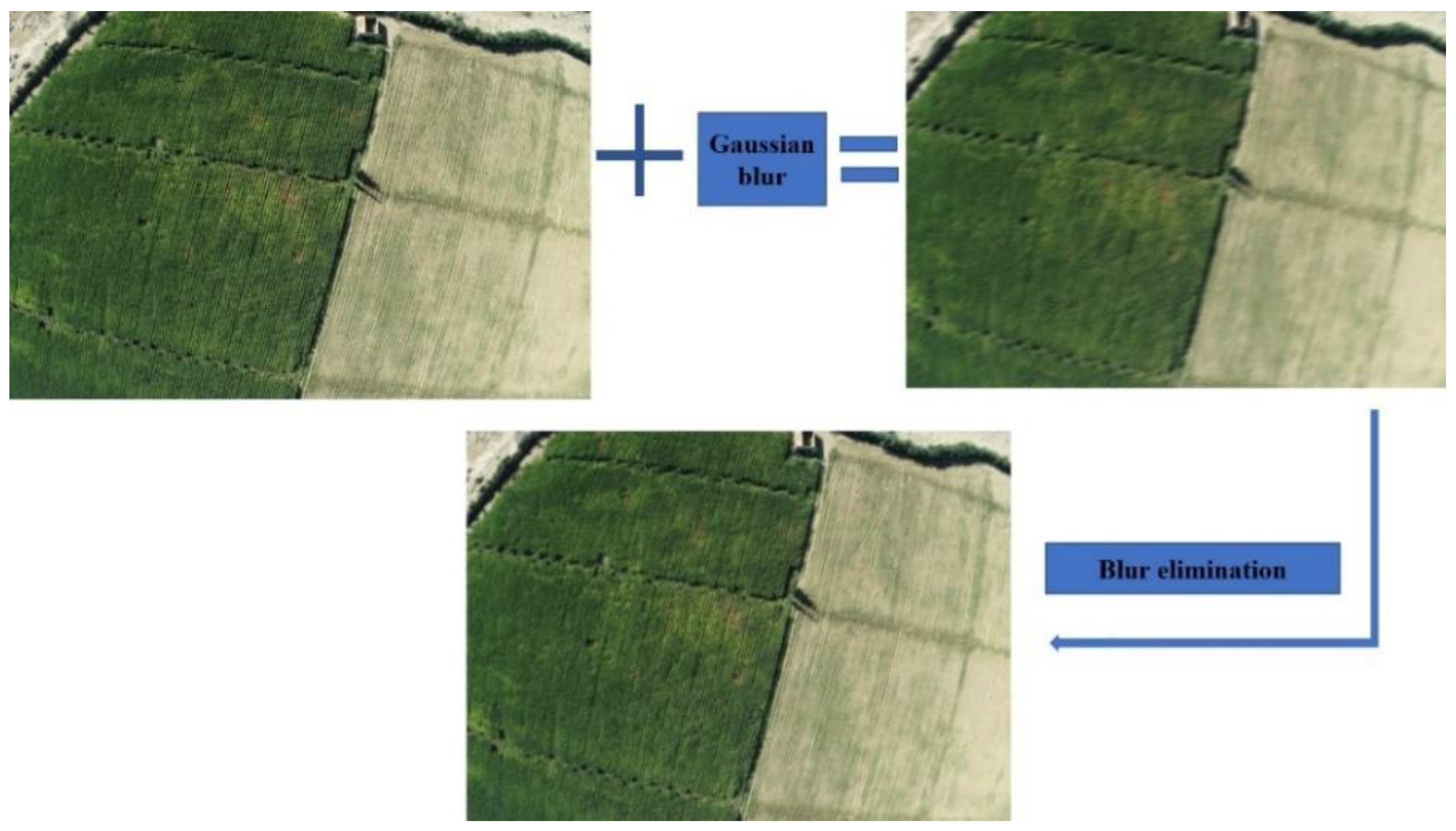

The temporal evaluation of our hybrid algorithm, which combines blur detection, index calculation, and image compression, has shown that we can use it in a real-time scenario. The compression allowed us to reduce the image size by a factor of ×63. Decompression, which reconstructs the images, is applied only after the global algorithm has processed all the images of the sequence. To evaluate blur detection, we added a Gaussian blur to an image and then filtered it to see the result.

Figure 17 shows the original image, the blurred image, and the image after blur removal.

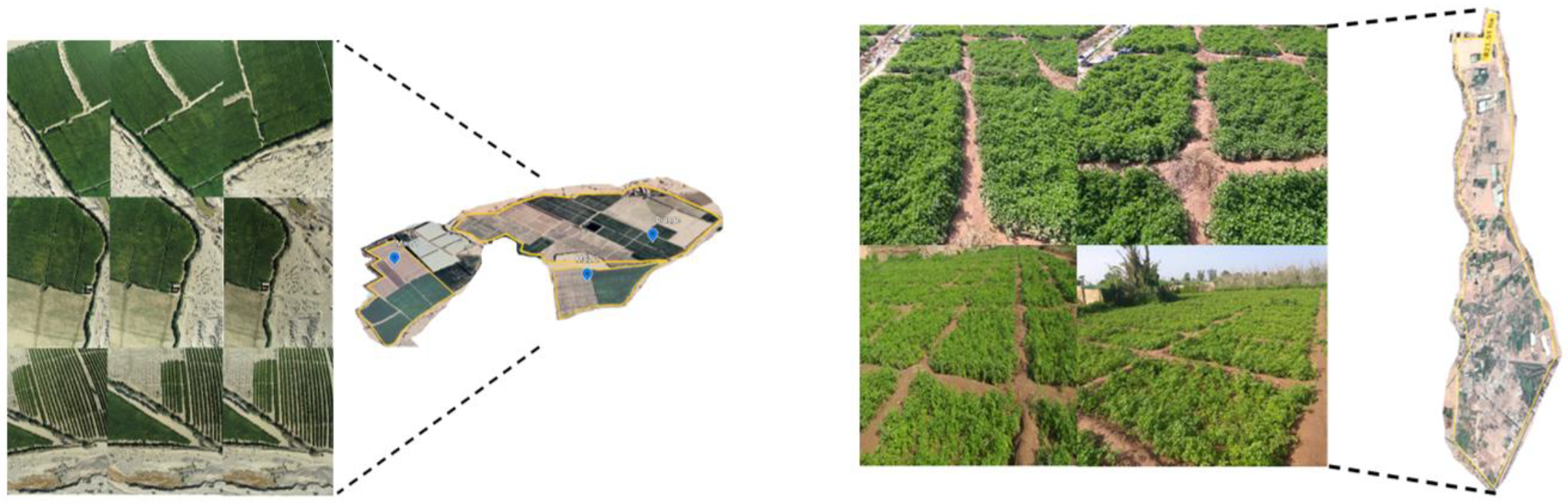

The indices evaluated in this work are the Normalized Green-Red Difference Index (NGRDI) and Visible Atmospherically Resistant Index (VARI). The choice of these indices is due to the robustness of the results given as well as their popularity in the field of precision agriculture. For this reason, we have evaluated both databases based on these indices. The images used in this evaluation are based on images collected by UAV and hand data.

Figure 18 shows images from the database used in this work.

In Figure 18, we have the different images used in our evaluation. On the top left, we have a maize field collected by a UAV; on the top right, a field of orange trees also collected by a UAV. On the bottom left we have a parsley field and on the bottom right a mint field. These data were evaluated using the indices listed in Table 1; in our case, we chose the two indices NGRDI and VARI.

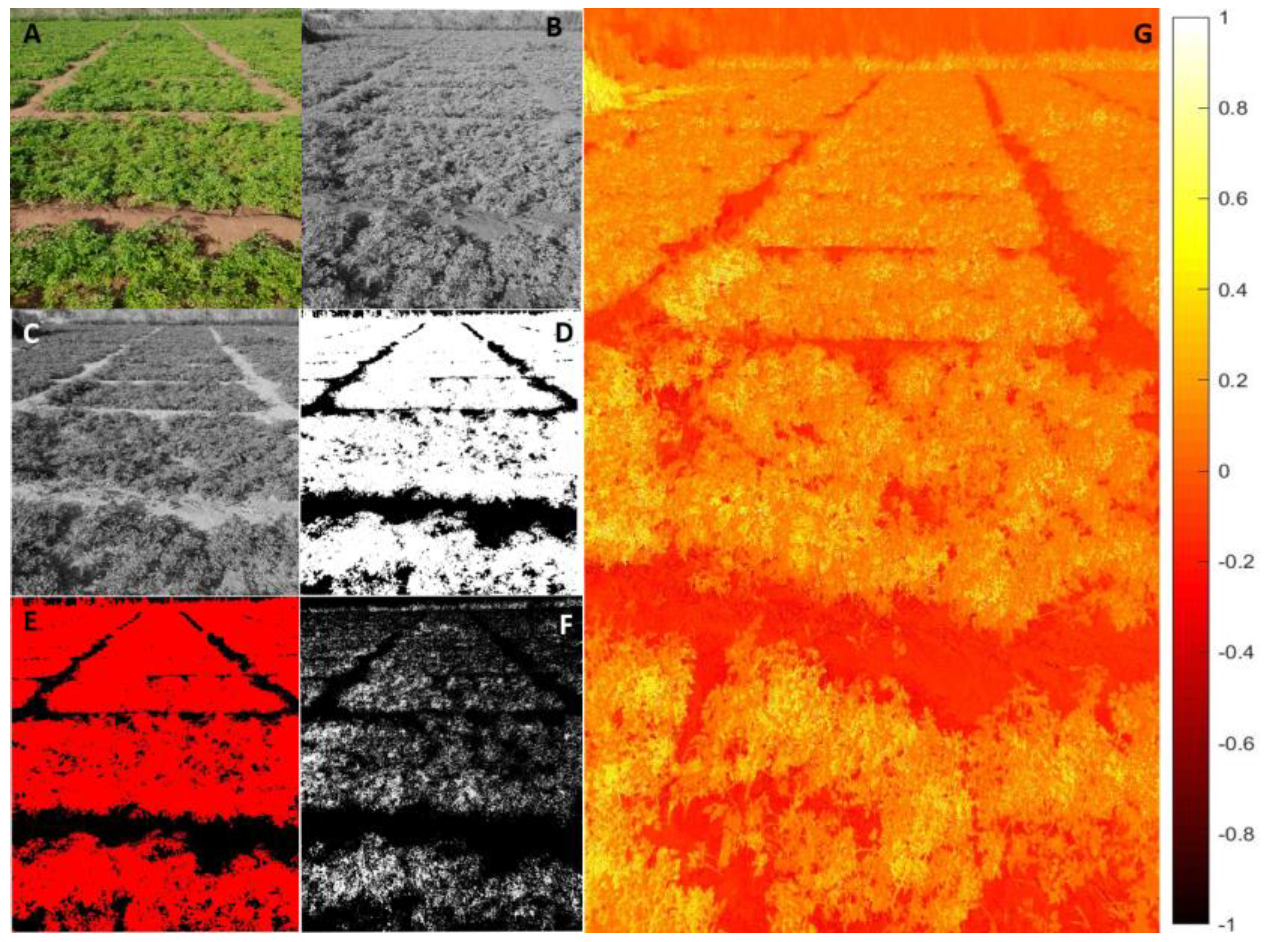

Figure 19 shows the results obtained after the evaluation.

Figure 19 shows the evaluation of the NGRDI based on mint plants; image A shows the agricultural field, B is the green band, C is the red band, and D is the calculated index based on a threshold of 0.12. Images E and F show the same calculated index but we varied the threshold; in this case, we used a threshold of 0.45. Image G shows an index matrix generated by MATLAB to see the different values that exist in the image.
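The effect of the two thresholds can be illustrated on a small index matrix. In the sketch below, the thresholds 0.12 and 0.45 are the ones used above, while the index values themselves are hypothetical, and the rule that a pixel counts as vegetation when its index is at or above the threshold is an assumption of this example.

```python
def threshold_mask(index_values, threshold):
    """Binary mask: 1 where the index reaches the threshold, 0 elsewhere."""
    return [[1 if value >= threshold else 0 for value in row]
            for row in index_values]


def coverage(mask):
    """Fraction of pixels kept by the mask."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


# Hypothetical NGRDI values for a 2 x 3 patch.
ngrdi_values = [
    [0.05, 0.20, 0.50],
    [0.10, 0.46, 0.13],
]
low = threshold_mask(ngrdi_values, 0.12)
high = threshold_mask(ngrdi_values, 0.45)
print(low, f"coverage {coverage(low):.2f}")
print(high, f"coverage {coverage(high):.2f}")
```

Raising the threshold keeps only the pixels with the strongest index response, so the detected vegetation area shrinks.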

Figure 20 shows the evaluation of parsley fields based on the NGRDI.

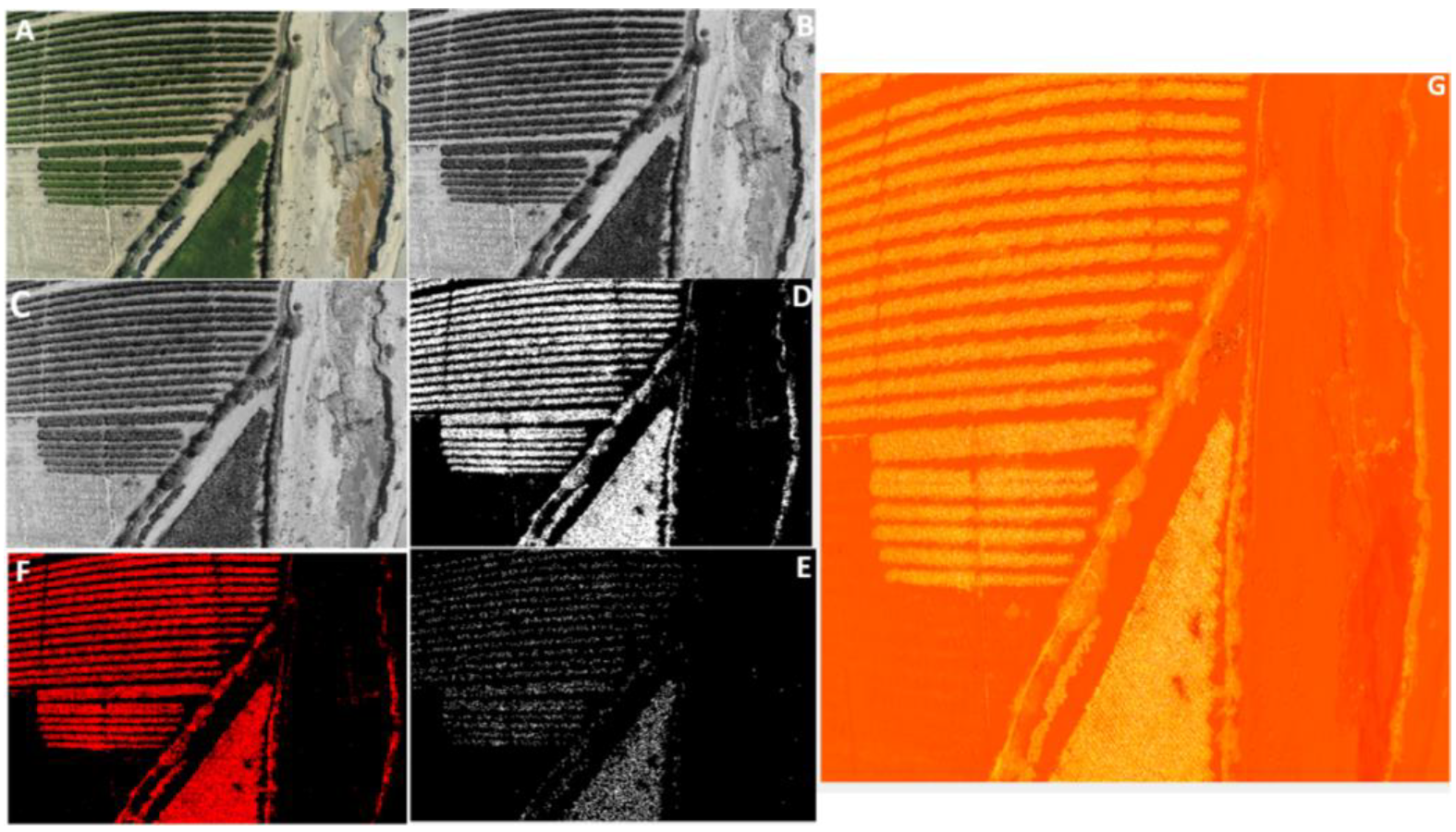

We have likewise evaluated the orange plants, as shown in Figure 21.

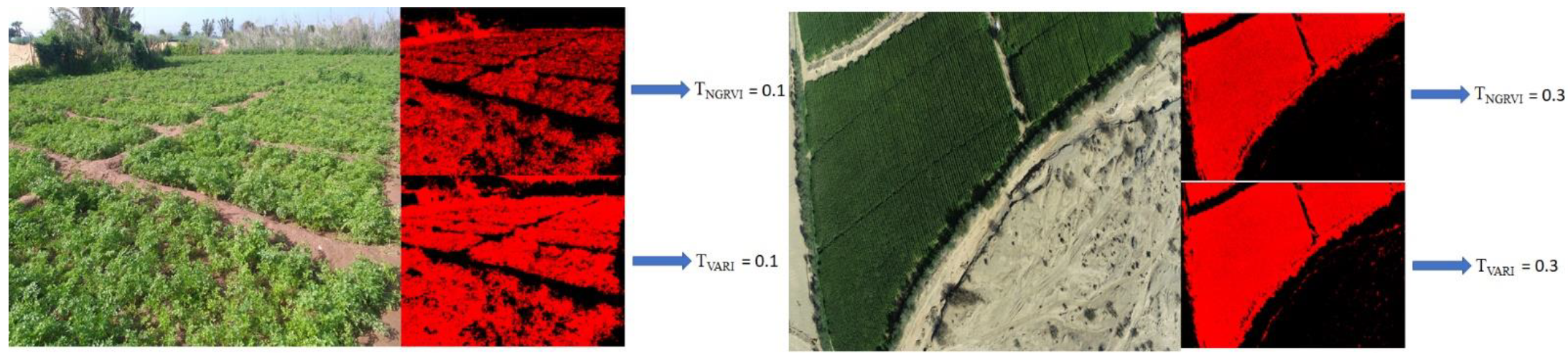

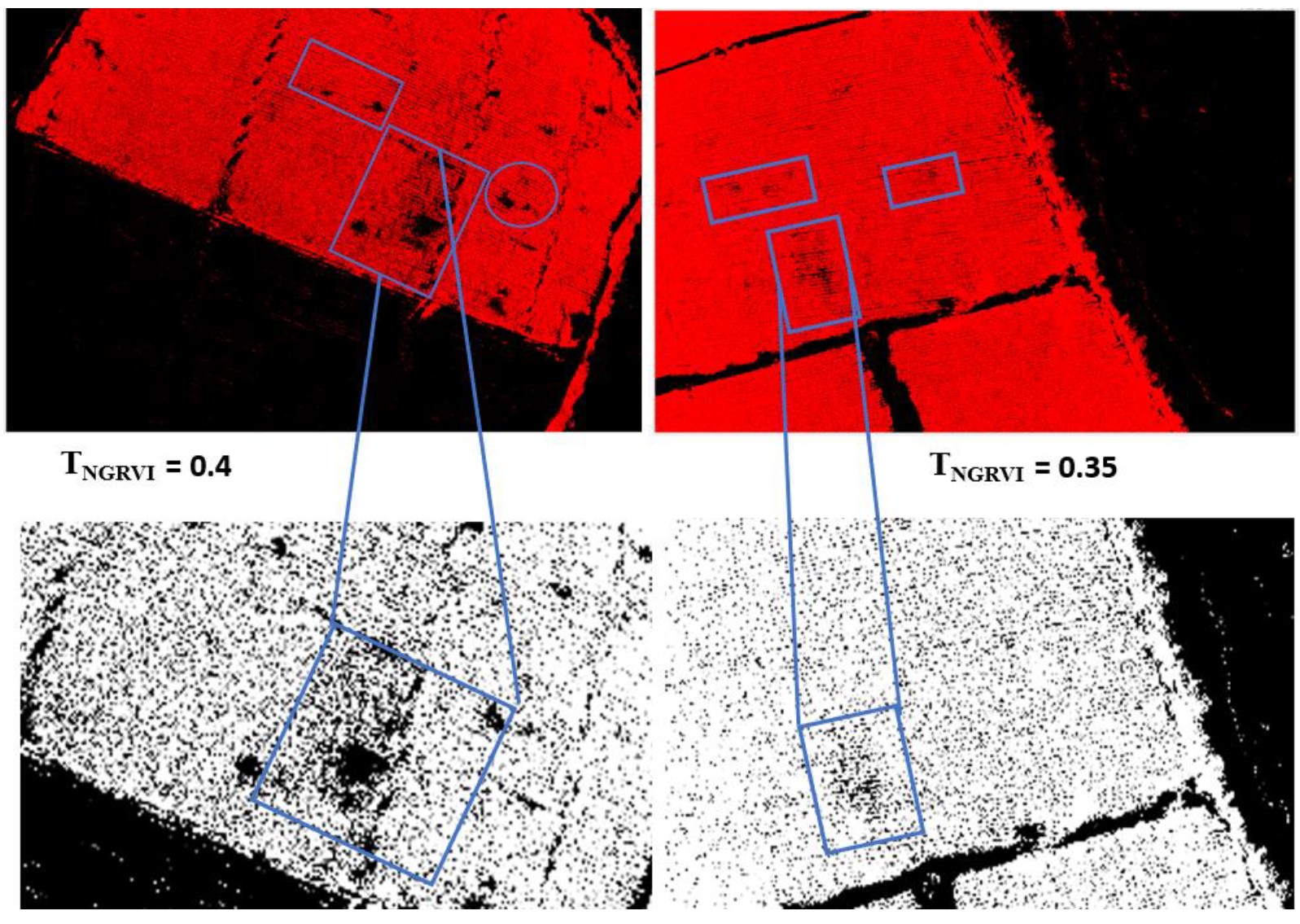

Figure 22 shows a comparison between NGRDI and VARI using parsley (on the left) and maize (on the right) plants. The image on the right shows the evaluation based on an image collected by UAV, and the image on the left is based on data collected by hand. The results showed that, for the same threshold, the VARI is more sensitive to vegetation than the NGRDI. Still, the results show that the VARI is robust to the sensitivity of the vegetation to be monitored.

Figure 23 shows the interpretation of the index results. The image on the right shows that parts of the agricultural field have a low index after the threshold operation. Determining the suitable threshold for each plant requires a soil sensor. The blue squares mark the soil parts with a low index, which implies a low vegetation cover that requires an intervention.
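One simple way to obtain square regions like those described above is to average the index over fixed-size tiles and flag the tiles whose mean falls below the threshold. This is a sketch of that idea only; how the squares in the figure were actually produced is not stated, and the function name, the tile size, and the sample values are assumptions.

```python
def low_index_tiles(index_values, tile, threshold):
    """Return the (top, left) corners of tiles whose mean index is below
    the threshold. Tiles at the border may be smaller than `tile`."""
    height, width = len(index_values), len(index_values[0])
    flagged = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            cells = [
                index_values[y][x]
                for y in range(top, min(top + tile, height))
                for x in range(left, min(left + tile, width))
            ]
            if sum(cells) / len(cells) < threshold:
                flagged.append((top, left))
    return flagged


# Hypothetical 4 x 4 index map with bare soil in the top-left corner.
field = [
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.5, 0.5],
    [0.5, 0.5, 0.5, 0.5],
    [0.5, 0.5, 0.5, 0.5],
]
print(low_index_tiles(field, tile=2, threshold=0.12))
```

Each flagged corner identifies a zone of low vegetation cover that could be reported to the farmer for intervention.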