Learning Performance Styles in Gamified College Classes Using Data Clustering

Abstract

1. Introduction

2. Materials and Methods

2.1. Gamified Class Design

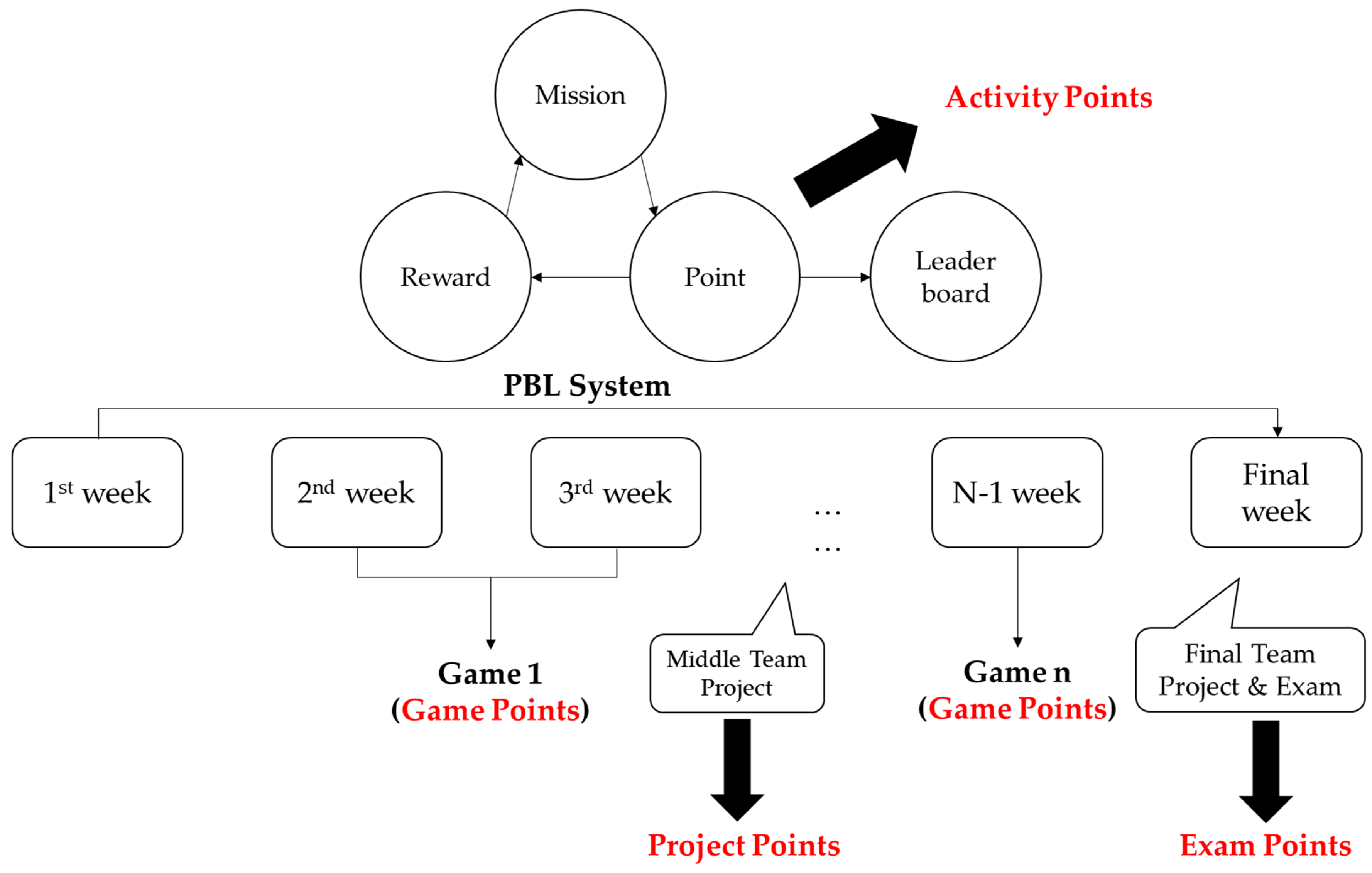

2.1.1. PBL System Design

2.1.2. Game Design

- Investment Game: In this game, learners established virtual companies in class or used the platform as a tool for peer evaluation of project outcomes. Learners were given a virtual currency, “Garnet,” which they invested in companies established by other teams (but not in their own). The points earned depended on the return on investment: players with higher returns received more points [10];

- Devil’s Advocate Game: This was a team activity game. Two members were recruited from each team and joined the other teams in turn for a predetermined length of time to discuss the disadvantages or flaws in those teams’ ideas and provide negative feedback. Points were earned if the negative feedback was rational;

- Salary Auction Game: This game was played at the beginning of each semester, when the teams were formed. Its purpose was to minimize the learners’ reluctance to participate in team activities and to ensure that learners were well mixed [8].

2.2. Grade Evaluation and Data Collection

- Activity Points: Points earned in the PBL system mentioned in Section 2.1.1. Points were earned when learners accomplished a mission given by the instructor;

- Game Points: Points earned in the games mentioned in Section 2.1.2. Points were calculated as the sum of the points earned in each game;

- Project Points: Points earned in two team projects (mid-term and final). Points were determined by adding the points that the instructors gave to individual learners, based on their project evaluation, to the points the team earned in the Investment Game;

- Exam Points: Points earned by each learner on tests throughout the course.
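As a rough illustration of how the four categories above could be held per learner before clustering, the following sketch defines a small record type. The class name, field names, helper functions, and example values are all hypothetical; the source specifies only the four categories and how Game Points and Project Points are summed.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LearnerRecord:
    """One learner's scores in the four categories (hypothetical field names)."""
    activity: float  # points from missions in the PBL system
    game: float      # sum of points earned across the games
    project: float   # instructor points plus the team's Investment Game points
    exam: float      # points from tests throughout the course

    def as_vector(self) -> List[float]:
        """Return the four attributes in a fixed order, one row of the data matrix."""
        return [self.activity, self.game, self.project, self.exam]


def game_points(points_per_game: List[float]) -> float:
    """Game Points: the sum of the points earned in each game."""
    return sum(points_per_game)


def project_points(instructor_points: List[float], investment_game_points: float) -> float:
    """Project Points: instructor-assigned points added to Investment Game points."""
    return sum(instructor_points) + investment_game_points


# Illustrative values only; they are not taken from the study's data.
record = LearnerRecord(
    activity=29.0,
    game=game_points([10.0, 12.5, 8.0]),
    project=project_points([20.0, 22.0], 5.5),
    exam=44.0,
)
print(record.as_vector())
```

Stacking one such vector per learner would give the data-points-by-attributes matrix used for clustering.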

2.3. Data Clustering Algorithm

1. Setting parameters: In this study, the number of data points is 369 and the number of attributes (i.e., the four categories mentioned above) is 4. Hence, a 369 × 4 two-dimensional matrix was created. The number of clusters, k, appropriate for the dataset must also be specified, and a solution display must be created to record the cluster to which each data point (1st–369th) belongs. Researchers display a solution in different ways. For instance, Researcher A may create a 369 × k matrix and write 1-0-0 for the first data point if it belongs to Cluster 1 (here with k = 3). Conversely, Researcher B may create a 369 × 1 vector and write 1 (or 2) as the element for the first data point if it belongs to Cluster 1 (or Cluster 2);

2. Computing mean values: After the parameters were specified, a mean value was calculated for each cluster. Based on the solution display from Step 1, the mean of each attribute was computed using only the data points in Cluster 1; attribute means were then computed in the same way for each of Clusters 2–k;

3. Computing the distances between the means and the data points: The Euclidean distance between each data point and the attribute means of its cluster (calculated in Step 2) was computed. For each of Clusters 1–k, these distances were summed, yielding k Euclidean distance indices;

4. Examining final indices: A final index was computed by adding the k indices generated in Step 3. To obtain further final indices, the steps above were repeated with a different solution display in Step 1; for example, a data point in Cluster 1 may be moved to Cluster 2 or to a randomly selected cluster. Each time a different solution display is created, Steps 2–4 are repeated in search of a better index;

5. Finalizing evaluations: A silhouette analysis was performed on the final solution display obtained once the exploration was complete. The number of clusters, k, was varied from 2 to 16, a silhouette coefficient was computed for each value, and the solution with the largest coefficient was selected as the final result.
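The first four steps above can be sketched in plain Python as follows. This is a minimal illustration rather than the authors' implementation: the solution display uses the vector form (Researcher B's notation, but with 0-based cluster labels), a smaller final index is assumed to be better, and the rule of keeping a move only when it lowers the index is an assumption, since the source does not state the acceptance criterion. The function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Sequence[float]


def cluster_means(data: List[Point], labels: List[int], k: int) -> List[List[float]]:
    """Step 2: per-cluster mean of every attribute (zeros for an empty cluster)."""
    dims = len(data[0])
    sums = [[0.0] * dims for _ in range(k)]
    counts = [0] * k
    for point, label in zip(data, labels):
        counts[label] += 1
        for j, value in enumerate(point):
            sums[label][j] += value
    return [[s / counts[c] if counts[c] else 0.0 for s in sums[c]] for c in range(k)]


def final_index(data: List[Point], labels: List[int], k: int) -> float:
    """Steps 3-4: total Euclidean distance from each point to its cluster mean."""
    means = cluster_means(data, labels, k)
    return sum(math.dist(point, means[label]) for point, label in zip(data, labels))


def local_search(data: List[Point], k: int, iterations: int = 2000,
                 seed: int = 0) -> Tuple[List[int], float]:
    """Repeat Steps 2-4, keeping a single-point move only if the index decreases."""
    rng = random.Random(seed)
    labels = [rng.randrange(k) for _ in data]  # Step 1: initial solution display
    best = final_index(data, labels, k)
    for _ in range(iterations):
        i = rng.randrange(len(data))
        old = labels[i]
        labels[i] = rng.randrange(k)           # move one point to a random cluster
        candidate = final_index(data, labels, k)
        if candidate < best:
            best = candidate                   # assumed rule: accept improvements only
        else:
            labels[i] = old                    # otherwise undo the move
    return labels, best


# Toy data with two visibly separate groups (illustrative, not the study's data).
toy = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
labels, index = local_search(toy, k=2, iterations=500, seed=1)
print(labels, index)
```

A purely greedy search like this can stall in a local optimum; metaheuristics that occasionally accept worse moves are one common way around that, but which strategy was used here is not stated in the steps above.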

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From Game Design Elements to Gamefulness: Defining “Gamification”. In Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, Tampere, Finland, 28–30 September 2011; pp. 9–15.

2. Kim, S.; Song, K.; Lockee, B.; Burton, J. Gamification in Learning and Education; GE; Springer: Berlin/Heidelberg, Germany, 2018; pp. 25–38.

3. Park, S.; Kim, S. Is sustainable online learning possible with gamification?—The effect of gamified online learning on student learning. Sustainability 2021, 13, 4267.

4. Park, S.; Kim, S. Identifying world types to deliver gameful experiences for sustainable learning in the metaverse. Sustainability 2022, 14, 1361.

5. Landers, R.N.; Tondello, G.F.; Kappen, D.L.; Collmus, A.B.; Mekler, E.D.; Nacke, L.E. Defining gameful experience as a psychological state caused by gameplay: Replacing the term ‘gamefulness’ with three distinct constructs. Int. J. Hum. Comput. Stud. 2019, 127, 81–94.

6. Park, S.; Min, K.; Kim, S. Differences in learning motivation among bartle’s player types and measures for the delivery of sustainable gameful experiences. Sustainability 2021, 13, 9121.

7. Werbach, K. Defining Gamification: A Process Approach. In International Conference on Persuasive Technology; Springer: Berlin/Heidelberg, Germany, 2014; pp. 266–272.

8. Kim, S. Team organization method using salary auction game for sustainable motivation. Sustainability 2015, 7, 14358–14370.

9. Sidhu, I.; Johnsson, C.; Singer, K.; Suoranta, M. A game-based method for teaching entrepreneurship. Appl. Innov. Rev. 2015, 1, 51–65.

10. Kim, S.; Park, S. Learning effects of simulated investment game for startups. Int. J. Appl. Eng. Res. 2016, 11, 4586–4589.

11. Kim, S. Role-playing game for training a design process of startup company compensation plan. Int. J. Game-Based Learn. 2019, 9, 40–54.

12. Jain, A.K. Data clustering: 50 years beyond k-means. Pattern Recognit. Lett. 2010, 31, 651–666.

13. Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

14. Knutas, A.; Ikonen, J.; Maggiorini, D.; Ripamonti, L.; Porras, J. Creating student interaction profiles for adaptive collaboration gamification design. Int. J. Hum. Cap. Inform. Technol. Prof. 2016, 7, 47–62.

15. Marisa, F.; Syed Ahmad, S.S.; Kausar, N.; Kousar, S.; Pamucar, D.; Al Din Ide, N. Intelligent gamification mechanics using fuzzy-AHP and k-means to provide matched partner reference. Discrete Dyn. Nat. Soc. 2022, 2022, 8292991.

16. Shukla, S.; Naganna, S. A review on K-means data clustering approach. Int. J. Inf. Comput. Technol. 2014, 4, 1847–1860.

17. Selim, S.Z.; Alsultan, K. A simulated annealing algorithm for the clustering problem. Pattern Recognit. 1991, 24, 1003–1008.

18. Pal, R.; Saraswat, M. Data clustering using enhanced biogeography-based optimization. In Proceedings of the 2017 Tenth International Conference on Contemporary Computing (IC3), Noida, India, 10–12 August 2017; pp. 1–6.

19. Pintrich, P.R. Understanding self-regulated learning. New Dir. Teach. Learn. 1995, 1995, 3–12.

20. Pintrich, P.R. The role of goal orientation in self-regulated learning. In Handbook of Self-Regulation; NL; Elsevier: Amsterdam, The Netherlands, 2000; pp. 451–502.

21. Raleiras, M.; Viana, J.; Costa, F. Adaptive Gamification Models in Higher Education: Is there a Place for Self-Regulated Learning? In Proceedings of EDULEARN20 Conference, Online Conference, 6–7 July 2020.

22. Kaplan, A.; Maehr, M.L. The contributions and prospects of goal orientation theory. Educ. Psychol. Rev. 2007, 19, 141–184.

23. Schunk, D.H.; Zimmerman, B.J. Self-Regulated Learning: From Teaching to Self-Reflective Practice; Guilford Press: New York, NY, USA, 1998.

24. Roese, N.J. The functional basis of counterfactual thinking. J. Pers. Soc. Psychol. 1994, 66, 805.

25. Park, S.; Kim, S. Leaderboard design principles to enhance learning and motivation in a gamified educational environment: Development study. JMIR Serious Games 2021, 9, e14746.

26. Nakamura, J.; Csikszentmihalyi, M. Flow Theory and Research. In The Oxford Handbook of Positive Psychology, 2nd ed.; Lopez, S.J., Snyder, C.R., Eds.; Oxford University Press: Oxford, UK, 2009; pp. 195–206.

27. Dubey, A.; Choubey, A.P.D.A. A systematic review on K-means clustering techniques. Int. J. Sci. Res. Eng. Technol. 2017, 6, 624–627.

28. Ghosh, S.; Dubey, S.K. Comparative analysis of k-means and fuzzy c-means algorithms. Int. J. Adv. Comput. Sci. Appl. 2013, 4, 35–39.

| Year | Semester | Lecture | Number of Data Points |
|---|---|---|---|
| 2016 | 2nd | Gamification Engineering | 247 |
| 2017 | 1st | Entrepreneurship | 29 |
| 2017 | 2nd | Gamification Engineering | 30 |
| 2018 | 1st | Technology Innovation | 33 |
| 2019 | 1st | Technology Innovation | 30 |
| Total | | | 369 |

| Lecture | Point | Average | Min | Max | Average (Normal) | Min (Normal) | Max (Normal) |
|---|---|---|---|---|---|---|---|
| 2016/2nd/Gamification Engineering | Activity | 29.069 | 21 | 30 | 0.897 | 0 | 1 |
| 2016/2nd/Gamification Engineering | Game | 33.332 | 28 | 40.4 | 0.43 | 0 | 1 |
| 2016/2nd/Gamification Engineering | Project | 45.943 | 40 | 51.5 | 0.517 | 0 | 1 |
| 2016/2nd/Gamification Engineering | Exam | 44.276 | 20 | 58 | 0.639 | 0 | 1 |
| 2017/1st/Entrepreneurship | Activity | 76.633 | 64 | 92 | 0.451 | 0 | 1 |
| 2017/1st/Entrepreneurship | Game | 110.133 | 72 | 142 | 0.545 | 0 | 1 |
| 2017/1st/Entrepreneurship | Project | 21.05 | 18.4 | 23.8 | 0.491 | 0 | 1 |
| 2017/1st/Entrepreneurship | Exam | 19.667 | 14 | 30 | 0.354 | 0 | 1 |
| 2017/2nd/Gamification Engineering | Activity | 44.862 | 28 | 66 | 0.444 | 0 | 1 |
| 2017/2nd/Gamification Engineering | Game | 13.793 | 8 | 20 | 0.483 | 0 | 1 |
| 2017/2nd/Gamification Engineering | Project | 20.172 | 15 | 25 | 0.517 | 0 | 1 |
| 2017/2nd/Gamification Engineering | Exam | 13.983 | 3 | 19 | 0.686 | 0 | 1 |
| 2017/1st/Technology Innovation | Activity | 52.606 | 43 | 64 | 0.548 | 0 | 1 |
| 2017/1st/Technology Innovation | Game | 23.506 | 9.7 | 24.9 | 0.908 | 0 | 1 |
| 2017/1st/Technology Innovation | Project | 16.878 | 0 | 19 | 0.888 | 0 | 1 |
| 2017/1st/Technology Innovation | Exam | 23.091 | 7.5 | 29 | 0.725 | 0 | 1 |
| 2018/1st/Technology Innovation | Activity | 61.6 | 53 | 77 | 0.43 | 0 | 1 |
| 2018/1st/Technology Innovation | Game | 45.767 | 32 | 57 | 0.551 | 0 | 1 |
| 2018/1st/Technology Innovation | Project | 22.472 | 19.41 | 25 | 0.548 | 0 | 1 |
| 2018/1st/Technology Innovation | Exam | 30.333 | 18 | 37.5 | 0.632 | 0 | 1 |
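The normalized columns in the table above appear consistent with min-max scaling within each lecture, where a value x is mapped to (x − min) / (max − min). This is inferred from the table's figures rather than stated in the text, so the short check below should be read as a sketch under that assumption; the function name is hypothetical. Because the scaling is linear, applying it to a column's average gives the average of the normalized values.

```python
def min_max_normalize(value: float, lo: float, hi: float) -> float:
    """Scale value into [0, 1] given the column minimum and maximum."""
    if hi == lo:
        raise ValueError("minimum and maximum must differ")
    return (value - lo) / (hi - lo)


# (average, min, max, reported normalized average) for the 2016/2nd lecture rows
rows = {
    "Activity": (29.069, 21, 30, 0.897),
    "Game": (33.332, 28, 40.4, 0.43),
    "Project": (45.943, 40, 51.5, 0.517),
    "Exam": (44.276, 20, 58, 0.639),
}

for name, (avg, lo, hi, reported) in rows.items():
    computed = min_max_normalize(avg, lo, hi)
    print(f"{name}: computed {computed:.3f}, reported {reported}")
```

Scaling every category to the same 0–1 range keeps any one category from dominating Euclidean distances merely because its raw point scale is larger.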

| k | Silhouette Coefficient |
|---|---|
| 2 | 0.3167 |
| 3 | 0.3506 |
| 4 | 0.2998 |
| 5 | 0.2375 |
| 6 | 0.2226 |
| 7 | 0.236 |
| 8 | 0.2499 |
| 9 | 0.2511 |
| 10 | 0.2028 |
| 11 | 0.2536 |
| 12 | 0.2633 |
| 13 | 0.2327 |
| 14 | 0.2303 |
| 15 | 0.2197 |
| 16 | 0.2343 |
| Average | 0.25339 |
| Min | 0.2028 |
| Max | 0.3506 |
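The selection rule from Section 2.3 (choose the k with the largest silhouette coefficient) can be applied directly to the values in the table above. The snippet below is a small sketch that uses only the tabulated coefficients; the variable names are illustrative.

```python
# Silhouette coefficients by number of clusters k, copied from the table above.
silhouette_by_k = {
    2: 0.3167, 3: 0.3506, 4: 0.2998, 5: 0.2375, 6: 0.2226,
    7: 0.236, 8: 0.2499, 9: 0.2511, 10: 0.2028, 11: 0.2536,
    12: 0.2633, 13: 0.2327, 14: 0.2303, 15: 0.2197, 16: 0.2343,
}

# The k whose solution has the largest coefficient is selected.
best_k = max(silhouette_by_k, key=silhouette_by_k.get)
mean_coefficient = sum(silhouette_by_k.values()) / len(silhouette_by_k)

print(best_k, silhouette_by_k[best_k])   # 3 0.3506
print(round(mean_coefficient, 5))        # 0.25339
```

On these figures the three-cluster solution has the largest coefficient, matching the Max row of the table.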

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, S.; Kim, S. Learning Performance Styles in Gamified College Classes Using Data Clustering. Sustainability 2022, 14, 15574. https://doi.org/10.3390/su142315574
