Abstract

To cope with complex environmental impacts in a changing climate, researchers are increasingly being asked to produce science that can directly support policy and decision making. To achieve such societal impact, scientists are using climate services to engage directly with stakeholders to better understand their needs and inform knowledge production. However, the wide variety of climate-services outcomes, ranging from establishing collegial relationships with stakeholders to obtaining specific information for inclusion into a pre-existing decision process, does not directly connect to traditional methods of measuring scientific impact (e.g., publication citations, journal impact factor). In this paper, we describe how concepts from the discipline of evaluation can be used to examine the societal impacts of climate services. We also present a case study from climate impacts and adaptation research to test a scalable evaluation approach. Those who conduct research for the purposes of climate services and those who fund applied climate research would benefit from considering evaluation from the beginning of project development. Doing so will help ensure that the approach, data collection, and data analysis are appropriately conceived and executed.

1. Introduction

1.1. Background

The defining characteristic of the past century is the impact of human activities on environmental systems, such as global climate change [1,2], which results in challenging and uncertain policy and decision contexts. To support policy and decision making, scientists are being asked to provide climate services: the provision of timely climate data and information created in a form that is useful, usable, and used (i.e., actionable) [3,4]. To generate such climate services, scientists are interacting “out in the world” with information end-users, a practice known more broadly as stakeholder engagement [5,6]. Engagement of stakeholders in research projects has a demonstrated positive impact on subsequent information use for decision making [7]. However, traditional definitions of research success most often focus on agency or academic metrics, such as number of publications and citation metrics [8,9], and do not capture societal impacts well [10,11].

Defining success for societal impact can be challenging because the needs of stakeholders can vary from learning how to work collaboratively with researchers (collegial engagement) to being generally better informed (conceptual information use) to taking specific on-the-ground action (instrumental information use) [12,13]. Additionally, climate service providers have a wide range of engagement approaches available to them to meet these varying needs—spanning from informing stakeholders of results to empowering them as co-equal project investigators [14]. To accommodate this diverse range of engagement needs and approaches, evaluation processes need to be specifically tailored to examine the impact and actionability of such information to a community [15,16].

In this paper, we introduce concepts from the field of evaluation and describe how they may be used to help define indicators for and evaluate the societal impacts of climate services. We focus on climate impacts and adaptation research, as it is one area where the provision of climate services is growing at a rapid pace. We present results from a case study application of these concepts to research funded by the U.S. Geological Survey Climate Adaptation Science Center network and discuss how these findings can be further developed. The deliberate consideration of success and explicit attention to evaluation can improve the actionability of science.

1.2. Evaluation Theory and Practice

Evaluation helps individuals and organizations learn and improve program operations by testing the effectiveness of or changes in activities; it differs from assessment, which is intended to grade or score performance [17,18]. The field of evaluation uses several different theoretical approaches and methods for operationalization. It has a rich set of literature that differentiates between the advantages and limitations of these approaches and techniques and identifies the appropriate contexts for their use [19,20,21]. Therefore, no matter the context, it is incumbent upon the evaluator to initially determine the kind of evaluation required and ensure that they draw upon the appropriate best practices when designing the evaluation process.

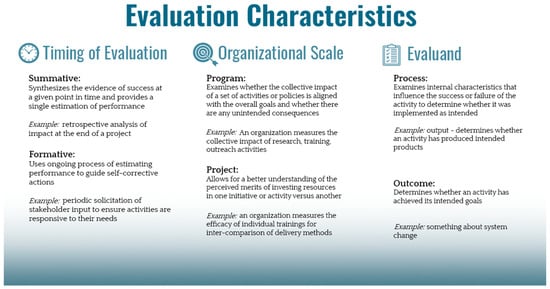

Figure 1 summarizes a few key concepts from the discipline of evaluation for consideration when designing and conducting an evaluation of climate services. Evaluations benefit from beginning with an appraisal of the following: (1) what it is that specifically needs to be evaluated (the evaluand), (2) what aspects of the evaluand (process, outputs, or outcomes) are most appropriate for evaluation, and (3) when (summatively or formatively) and at what organizational scale (program or project) the evaluation will be conducted [22,23]. As part of this appraisal, the evaluator identifies the purpose of the evaluation, inputs to the activity, and other contextual factors, such as the level of analysis or precision [22,23]. Once the approach and method are identified, the evaluator selects suitable variables for measurement and analysis. These variables cover necessary aspects of the evaluand that are to be evaluated, while also being scientifically sound (e.g., measured reliably, scaled appropriately) [22,23]. If the approach is quantitative or mixed-methods, then the evaluator also ensures that statistical assumptions and analyses are logically sound and allow sufficient statistical power.

Figure 1. Evaluation key concepts relevant to what specific aspects of an activity require evaluation, when the evaluation will occur, and at what organizational scale it will take place [22,23].

These approaches intersect in layered ways when operationalized, and an evaluator can make intentional selections among them to meet the goals of the evaluation. For example, to enhance the investment of public funding for climate services, an evaluator may elect to conduct a formative evaluation of processes at the program level. This approach would help the funding program iteratively improve funding opportunities, proposal reviews, and project management to increase alignment with the overall goal of use of information for policy and decision making. In contrast, to improve their understanding of the operational practices necessary for successful delivery of climate services and pitfalls to avoid, an evaluator may instead elect to conduct a summative evaluation of project outputs and outcomes. Traditionally, evaluation of climate impacts and adaptation research has occurred in an ad hoc summative manner that has not been robustly informed by evaluation theory and practice, although resources are emerging to help climate service providers bridge this gap [8].

1.3. Success and Evaluation for Climate Services

Here, we present some applications of evaluation to better understand the societal impact of climate services. Several traditional models and mechanisms are available for gathering quantitative measures of scientific impact, including research inputs such as the amount of funding obtained and research outputs such as the number of publications, their associated journal impact factor, and number of citations [24] or the number of downloads of products from websites [25]. However, none of these measures identifies whether or how the stakeholder used the information to make a decision because knowledge delivery does not equal knowledge use [11]. To evaluate climate services, an evaluator can focus on stakeholder perception of the process (e.g., workshop evaluations) or, more important to actionability, on how well stakeholder input increases the usability of the resulting information. Even better, the evaluation can examine the actual use of the research outputs.

In practice, evaluating information usability and use by policy and decision makers is notoriously difficult. Wall et al. [26] provide an initial direction for evaluating the societal impacts of climate services, such as whether agencies and managers find the science credible and whether the findings are explicitly applied in agency planning, resource allocation, or a policy decision. McNie [27] suggests other options, such as evaluating whether “all relevant information was considered” or “whether the science was understood and interpreted correctly”. Quantifying these impact metrics is difficult, but options include conducting follow-up interviews with decision makers engaged in projects [28] and analyzing the language in plans and decisions [29].

More nuanced approaches to incorporating perspectives from stakeholders require deeper engagement and focus primarily on understanding how the stakeholder experienced or perceived the engagement. These approaches can include examining factors such as the following: (1) the time required to build the relationship, (2) an understanding of how the project might influence the person or their community, and (3) the nature of the interactions between scientists and users, including building trust [30,31]. Data collection may include surveys (particularly those using open-ended questions that allow people to describe what they experienced or why they hold a certain view) or semi-structured interviews. The iterative nature of some stakeholder engagement in climate services means formative evaluation is possible through longitudinal evaluation designs. For example, the same survey can be administered multiple times during the development of a decision support tool to ensure that updates to the tool enhance usability [32].
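As a minimal sketch of the longitudinal design described above, the following Python snippet compares mean usability ratings for a single survey item across successive releases of a decision support tool. The wave labels, the 1 to 5 rating scale, and every rating shown are hypothetical and are included only for illustration; they are not drawn from the cited study or from our survey.

```python
from statistics import mean

# Hypothetical ratings (1 = not usable, 5 = very usable) for one survey item,
# collected from stakeholders after each release of a decision support tool.
waves = {
    "prototype": [2, 3, 3, 2, 4],
    "beta": [3, 4, 3, 4, 4],
    "release": [4, 5, 4, 4, 5],
}


def wave_means(waves):
    """Return the mean rating for each survey wave, in administration order."""
    return {label: mean(scores) for label, scores in waves.items()}


def changes_between_waves(waves):
    """Return (earlier, later, change in mean) for each consecutive pair of waves."""
    means = list(wave_means(waves).items())
    return [
        (a_label, b_label, round(b_mean - a_mean, 2))
        for (a_label, a_mean), (b_label, b_mean) in zip(means, means[1:])
    ]


for earlier, later, change in changes_between_waves(waves):
    flag = "improved" if change > 0 else "review update"
    print(f"{earlier} -> {later}: {change:+.2f} ({flag})")
```

A drop or flat result between two waves would signal that a tool update should be revisited with stakeholders before the next release, which is the formative use of the data.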

In situations where stakeholder engagement yields neither scientific nor societal impact, success may be defined in more intangible ways, including the depth of integration of stakeholders into the investigator team and their satisfaction with the process [26]. Here, methods for evaluation can focus on identifying and monitoring measurable outcomes on intermediary time scales. For example, a project team can design a conceptual logic model that captures stakeholder impact as a long-term outcome and identifies how to measure change at interim checkpoints [33]. Alternatively, the team can apply a framework based on a theory of change, where establishing and maintaining relationships are key social learning outcomes for an entire community of practice [34]. Regardless of the approach selected, thinking strategically about evaluation from the front-end of a project ensures that appropriate information is collected throughout to monitor whether goals are being achieved and to take corrective actions as needed.
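One way to picture a logic model with interim checkpoints is as a simple data structure. The sketch below is illustrative only: the class names, indicators, targets, and observed values are all hypothetical assumptions and do not reproduce the model in [33].

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Checkpoint:
    """An interim measurement point for one indicator (all values hypothetical)."""
    month: int                        # months since project start
    indicator: str                    # what is measured at this point
    target: float                     # value the team hopes to reach
    observed: Optional[float] = None  # filled in once data are collected


@dataclass
class LogicModel:
    """A long-term outcome plus the checkpoints used to monitor progress."""
    long_term_outcome: str
    checkpoints: List[Checkpoint] = field(default_factory=list)

    def needs_corrective_action(self):
        """Return checkpoints that have been measured and fall short of target."""
        return [
            c for c in self.checkpoints
            if c.observed is not None and c.observed < c.target
        ]


model = LogicModel(
    long_term_outcome="Stakeholders use project outputs in adaptation planning",
    checkpoints=[
        Checkpoint(6, "stakeholder meetings held", target=2, observed=3),
        Checkpoint(12, "share of stakeholders satisfied with process",
                   target=0.8, observed=0.7),
        Checkpoint(24, "draft products reviewed by stakeholders", target=1),
    ],
)

for c in model.needs_corrective_action():
    print(f"Month {c.month}: '{c.indicator}' at {c.observed} (target {c.target})")
```

Checkpoints that have not yet been measured are skipped rather than flagged, so the same structure can be reviewed at any point during the project.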

2. Case Study

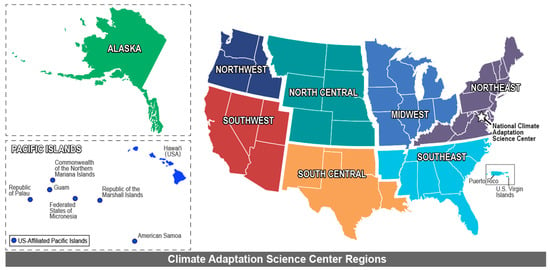

In this paper, we share case study data and results to demonstrate how a climate services boundary organization with the goal of funding the production of actionable science examined its projects to understand their societal impact. This work is part of a broader evaluation of climate impacts and adaptation research projects funded by the U.S. Geological Survey (USGS) South Central and North Central Climate Adaptation Science Centers (CASCs), two regional centers within a nationwide network (Figure 2). Our thoughts on the strengths and weaknesses of the selected evaluation methods and results are included in the Discussion to aid other climate services organizations in their evaluation planning efforts.

Figure 2. Map of the National and Regional Climate Adaptation Science Centers (CASCs). This analysis focuses on projects funded by the North Central and South Central CASCs.

The CASC network was established by the U.S. Department of the Interior to “provide climate change impact data and analysis geared to the needs of fish and wildlife managers as they develop adaptation strategies in response to climate change” [35]. To achieve this mission, CASC project solicitations are intended to fund research that creates products and tools that directly support resource managers in their development and implementation of climate adaptation plans and actions. Although funded projects are usually research activities of two to three years in length, this emphasis on research use means that they also result in the provision of climate services and can generate partnerships that last beyond the length of an individual project. Examples of climate services activities from prior funded projects include (1) researchers and Tribal water managers working together to better understand micro-drought onset conditions to inform drought adaptation planning, (2) scientific synthesis of information on future fire regimes delivered to managers via training, and (3) the implementation by researchers of small-scale adaptation demonstration projects to illustrate the retention of water on the landscape to resource managers.

From 2013 to 2016, the CASC network was guided by the Federal Advisory Committee on Climate Change and Natural Resource Science, which produced a report providing recommendations on how to improve operations [36]. A key recommendation in this report was for USGS to develop an evaluation process to ensure that programmatic activities and funded projects align with the mission [36]. Suggested evaluation categories include “relevance, quality, processes, accessibility, and impact of science products and services”, although no framework or method for implementing this evaluation process was provided [36]. USGS headquarters conducts annual internal and five-year external program-level reviews of the regional centers to examine overall operations and impact [37] but does not pursue project-level evaluation. As a result, regional CASCs are developing and piloting their own supplemental project evaluation processes.

The broader evaluation of South Central and North Central CASC projects included an analysis of project documentation, a survey of stakeholders engaged in the projects, and a focused set of interviews with highly engaged stakeholders. This paper focuses on the survey, which was intended to provide a summative project-level evaluation of process, outputs and outcomes, and broader impacts based on the perspectives of stakeholders. This approach was chosen because formative evaluation was not a consideration in the development of the funding program. Furthermore, enough time had elapsed that multiple years of projects had reached completion. Our expectation was that evaluation of the entire suite of projects by the program office would provide us with sufficient data to compare characteristics between dissimilar types of projects (e.g., projects carried out at local scales in comparison to projects to create data at broad regional scales). We used an electronic survey of project stakeholders because it was a no-cost option; no resources other than limited staff capacity were dedicated to this evaluation effort. These limitations are commonplace in federal science programs, making this case a suitable proxy for conditions faced by other funders of climate services.

3. Methods

We contacted the primary investigators for 28 South Central CASC projects and 16 North Central CASC projects to identify the stakeholders whom they engaged during the project, resulting in a total of 186 unique contacts for the South Central CASC and 188 unique contacts for the North Central CASC. All contacts were invited via email from the research team to complete the survey, the protocol for which is publicly available from Bamzai-Dodson et al. [38] and the design for which is based on published indicators of usable science [26]. Institutional Review Board (IRB) approval was obtained via The University of Oklahoma (IRB number 7457). Paperwork Reduction Act approval was obtained from the U.S. Office of Management and Budget (control number 1090-0011).

The survey was divided into four sections: process, outputs and outcomes, impacts, and demographics. The survey protocol was pre-tested by 20 staff from across the nationwide CASC network, and their feedback was incorporated into the final form. Six questions asked respondents about the process of creating new knowledge together among investigators, resource managers, and decision makers, focusing on the nature and timing of interactions. Nine questions asked respondents about perceptions of the products developed through this project, including factors that promoted or limited their use by the individual or their agency. Six questions asked respondents about their partnership with the investigators, including what made it likely or unlikely for them to work together again. Four questions asked respondents for demographic information, such as the geography, sector, and professional role that they worked in. Questions were a mix of multiple choice, Likert scale, open-ended, and matrix table, based on accepted practices for effective survey design [39,40].

Survey dissemination and collection of responses were carried out electronically using Qualtrics [41], with a release date of 7 December 2018 and a 90-day dissemination window. Data collection was hampered by the U.S. federal government shutdown from 22 December 2018 to 25 January 2019. Federal contacts were re-invited on 1 July 2019 to take the survey during a second 90-day dissemination window, but response rates remained low. Table 1 provides the response rate information per region, and Table 2 summarizes the demographics of respondents. All survey questions were optional to complete, so the total number of responses per question does not always equal the total number of complete responses (49). A public summary of the survey results is published in Bamzai-Dodson et al. [42].

Table 1. Survey response rates for each Climate Adaptation Science Center (CASC) region.

Table 2. Respondent demographics for both CASC regions by organization type and organizational role. “Other” self-identified as part of a “federally supported partnership”.

4. Results

4.1. Process: Engagement in the Process of Knowledge Production

Questions in this section of the survey were designed to examine the nature and focus of interactions between stakeholders and investigators during the process of knowledge production. Research indicates that when, how, and how often scientists and stakeholders interact with each other during a project can be important factors in the perceived success of the project [26]. More than half of the respondents (57.1 percent) indicated their engagement began prior to proposal development, with an additional 12.2 percent engaged during proposal development. Engagement during a project ranged from never (zero times per year) to at least every week (52 or more times per year), although most respondents (67.4 percent) were engaged between one and eight times per year. No respondents said that the level of interaction was too much; however, 16 percent said that there was too little interaction. These results indicate that early and ongoing interactions were common factors in CASC projects and that even high frequency engagement was not perceived as too much interaction by stakeholders. One respondent described their experience being engaged in a project late and expressed appreciation for the investigators’ responsiveness to their input: “The investigative team was slow to involve those of us who were able to provide more local expertise into the design process, however they did exhibit remarkable flexibility in inviting/allowing that input and then adapting their process to better include such material/knowledge”.

The phases of a project during which the most stakeholders reported interaction were definition of the problem (87.5 percent), selection of products (85.7 percent), and dissemination of findings (87 percent). No interaction was most often reported by stakeholders during the design of research methods (27.1 percent), the collection of project data (27.1 percent), and the analysis of project data (32.6 percent). Only one respondent (3.85 percent) indicated that a formal needs assessment was done as part of the project, and 10 respondents (38.5 percent) indicated that needs were determined through informal conversation. Eleven respondents (23.4 percent) indicated that a formal risk or vulnerability assessment was conducted, and 18 respondents (38.3 percent) indicated that risk or vulnerability was assessed through informal conversation. These findings indicate that stakeholders are primarily engaging in CASC projects at key decision points related to the context, scoping, and products of a project and not when research design decisions, such as method selection, data collection, and data analysis, are made. CASC project teams are also preferentially choosing to use informal approaches when determining the management context of a research project instead of following established formal strategies for assessing needs, risk, or vulnerability (e.g., scenario planning, structured decision making, systems engineering).
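Because every survey question was optional, each percentage above is relative to the number of respondents who answered that particular question rather than to the 49 complete responses. The short Python sketch below illustrates this arithmetic. The counts (1, 10, 11, and 18) come from the text; the denominators of 26 and 47 are not stated in the text and are assumptions back-calculated because they reproduce the reported 3.85, 38.5, 23.4, and 38.3 percent.

```python
def percent(count, answered, digits=1):
    """Share of the respondents who answered a question, as a rounded percentage."""
    if answered <= 0:
        raise ValueError("answered must be positive")
    return round(100 * count / answered, digits)


# Assumed per-question denominators (inferred from the reported percentages,
# not values given in the survey summary).
needs_answered = 26
risk_answered = 47

print(percent(1, needs_answered, digits=2))  # formal needs assessment
print(percent(10, needs_answered))           # needs via informal conversation
print(percent(11, risk_answered))            # formal risk or vulnerability assessment
print(percent(18, risk_answered))            # risk or vulnerability via informal conversation
```

Reporting the per-question denominator alongside each percentage makes it easier for readers to judge how many stakeholders a given figure represents.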

The responses to these questions were informative for describing the frequency, timing, and intent of engagement. However, we found that our evaluation and survey design missed identifying who had initiated each stage of engagement, evaluating the perceived quality of interactions at those points, understanding why engagement was lower during design decisions, and determining whether a lack of engagement at those points was detrimental to project outcomes. One possibility is for funding programs or climate service providers to identify key decision points regarding the formation of research goals and questions and the development and dissemination of products during which the quality and outcomes of the engagement process can be evaluated in an ongoing manner. Such an approach would strengthen the alignment between stakeholder aspirations, priorities, and needs and project goals, outputs, and outcomes.

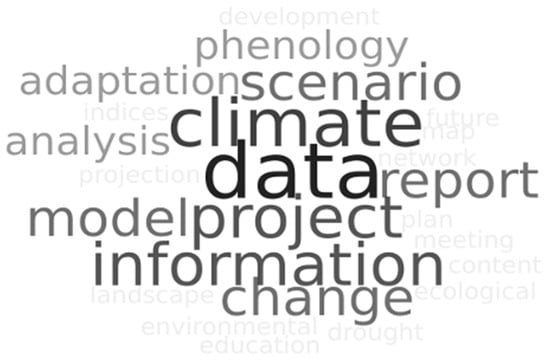

4.2. Outputs and Outcomes: Production and Use of Outputs

Questions in this section of the survey were designed to determine the types of outputs and knowledge produced by projects and understand how they were used by stakeholders. Research indicates that the number, type, quality, usability, and use of outputs from projects can be important factors in the perceived success of the project [26]. The most common project output reported by respondents was data provision, ranging from disseminating observations (e.g., place-based phenological data) to projections (e.g., climate model data) (Figure 3). Respondents also reported receiving summarized information from investigators, such as two-page overviews of new findings and quarterly newsletters. Notably, some respondents remarked on more subtle relational outcomes such as “many relationships” and “a new world view”. One respondent provided the following feedback on the networking opportunities that their project provided: “The most fruitful and beneficial outcomes from this project will be the connections established between collaborators. It is difficult to quantify [the potential outcomes of new relationships] but I think bringing people to the table is, nonetheless, extremely valuable and worth supporting”.

Figure 3. Word cloud generated from 40 open-ended responses to the question “What kinds of information, data, tools, or other products did this project provide you?”.

All respondents indicated that projects helped them both be better informed broadly about an issue and be better informed specifically about a particular problem. However, some stakeholders indicated that projects were not useful for gaining a new technical skill (25.8 percent), formulating policy (23.8 percent), or implementing adaptation plans (13 percent). Respondents indicated that projects helped them to understand changes in weather and climate observations and model projections and to link those changes to impacts on resources or places that they manage; however, no respondents indicated that projects helped them identify, evaluate, or select potential adaptation strategies to cope with such impacts. These results indicate that although knowledge and outputs produced by these projects were used by stakeholders to inform adaptation planning, they were not used to make specific climate adaptation decisions (although they may have been used in the implementation of other resource management decisions).

Twenty-four respondents indicated that there were specific factors that they felt contributed to their use of project outputs and provided descriptions of these factors in open-ended replies. The most common factor was a strong partnership between the investigator and stakeholder, illustrated as “trust, relationships, open-mindedness on all sides” and “an attention to the relationship, protocol, transparency, and communication”. Some respondents described contexts with a very clear management challenge linked to a demonstrated information need, such as a “well defined management need to be explored” and “Federal mandated water settlement legislation”. Respondents also mentioned several different ways in which investigators were able to make broad results relevant to their specific management challenge. Examples include the creation of “fine spatial resolution climate products” and the provision of “alternatives to traditional drought indices”.

Thirteen respondents indicated that specific factors limited their use of project results. The most common barriers were a need for additional time to use results (19.2 percent) and resource constraints (15.4 percent). One respondent described how late engagement in a project could act as a barrier to information use: “The one area that could have been improved would have been upfront discussion of delivery mechanisms to achieve broader impacts. The proposal included a component of incorporating results into specific agency products, without talking to the agency manager for all of those products before the proposal was submitted”. Respondents also described a need for “continued data collection and processing,” especially in places where extreme weather events disrupted data continuity. No respondents indicated an issue with the quality of the science provided by investigators.

These results indicate that while funded projects resulted in conceptual use of outputs (informing) by stakeholders and may have resulted in instrumental use (implementation) for general resource management [13], they fell short of their intended goal of instrumental use for climate adaptation. Stakeholders had confidence in the quality and integrity of scientific outputs and understood their broad relationship to management contexts but lacked time and resources to apply such information to specific climate adaptation decisions, plans, or actions. However, it has been noted that moving from conceptual to instrumental use of information can partly be a function of the maturity of the project and the relationship between the investigator and stakeholder [43], and thus it is possible that revisiting respondents after additional time has passed may reveal stronger instrumental use of information. To capture long-term use of outputs by stakeholders, funding programs and climate service providers may need to implement evaluation processes that continue for multiple years after the formal conclusion of a single activity.

4.3. Impacts: Building of Relationships and Trust

Questions in this section of the survey were designed to examine the impacts of participating in a project on the building of relationships and trust between stakeholders and investigators. Research indicates that trust between investigators and stakeholders is foundational to two-way communication and accountability during the project and to sustaining further work after the project [26]. Respondents reported positive feelings overall about their engagement in South Central and North Central CASC projects. Respondents felt satisfied with their experiences with the investigator team (93.6 percent) and felt satisfied with their experiences with the project (87.2 percent).

All respondents agreed that investigators were honest, sincere, and trustworthy, and 91.5 percent of respondents agreed that investigators were committed to the engagement process. The same percentage of respondents (91.5 percent) agreed that investigators appreciated and respected what they brought to the project, while 89.4 percent of respondents agreed that the investigators took their opinion seriously during the discussions. Furthermore, all respondents said it was likely that they would use additional results generated by this investigator team. These results indicate that stakeholders still felt goodwill towards investigators as individuals, even when engagement processes and integration of their input into the project might have fallen short of expectations.

Respondents provided a range of reasons that would make it likely for them to work with the investigators again in the future (Figure 4). Many respondents mentioned the nature of their relationship as a team, citing a desire to work with “good people” where the “collaborative spirit and tone of mutual respect is great”. In addition to a positive team atmosphere, respondents mentioned the level of expertise of investigators. One project investigator was identified as an “outstanding scientist and human being,” with the respondent adding that “[their] humility despite [their] great knowledge and intellect is inspiring”. Finally, respondents mentioned the importance of the relevance of findings, such as the “ability to provide useful products” and “good, practical, implementable results that were directly applicable to my agency’s goals and strategies”.

Figure 4. Word cloud generated from 37 open-ended responses to the question “From your perspective, what reason(s) would make it likely for you to work with this investigator team or the CASC again in the future?”.

When provided the opportunity to give any other feedback on their experience, several respondents noted their appreciation for the integration into the project of informal knowledge or results. One respondent highlighted the investigators’ “willingness to more readily recognize and respond to non-peer reviewed (nascent) local research,” and another acknowledged that investigators were willing to implement “a demonstration project” for local stakeholders. A third respondent stated that they valued support for a project “that was not firmly deliverables based” because one of the main outputs was the creation of a collaborative network of individuals.

Results from this section demonstrate the perceived value to stakeholders of building trusted partnerships and communities of practice. In particular, funding programs and climate service providers would benefit from identifying empirical methods for measuring and monitoring trust between the producers and users of climate services, as trust plays a key role in the uptake of information for policy and decision making [30,31]. Beyond trust, formative evaluation during a project could help investigators identify instances where stakeholders may feel that their input is not being appreciated or their opinions are not being taken seriously. This would allow for the institution of corrective actions to improve the flow of communications and provide more responsive climate services.

5. Discussion and Recommendations

In this paper, we summarized a variety of approaches from the discipline of evaluation and described their relevance to defining success and evaluating the societal impacts of climate services within the context of climate impacts and adaptation research. We presented a case study to demonstrate how to operationalize selected approaches from this literature using a survey of stakeholders engaged in projects funded by the South Central and North Central CASCs. Funders of climate services, such as the CASCs, are positioned to influence the form and goals of research across many stages of the process, from setting the priorities that appear in a solicitation to identifying appropriate proposal review criteria to selecting which projects receive funding. Evaluation of and by funders of climate services is critical to understanding whether actions taken across each of these stages and by individual projects support the overall goal of societal impact [44,45].

Because virtually all respondents indicated satisfaction with projects and investigators, our ability to contrast projects and interpret differences among them was limited. Additionally, our case study was limited by the low survey response rate and relatively small sample size, possibly resulting from the immediate and lingering impact of the 2018–2019 U.S. federal government shutdown. As a result, we were not able to use the collected data in the way we originally intended, but we were still able to examine the characteristics of investigators and projects that stakeholders found satisfactory. Describing these characteristics allowed us to meet our intended program objectives and provided lessons learned from completed projects that can be applied to subsequent similar projects.

Our results corroborated previous studies that have demonstrated that stakeholders prefer being engaged in projects early, often, and consistently [43,46]. Previous research has shown that stakeholders may become fatigued or stressed with interactions that do not result in perceptible changes to the research agenda to prioritize stakeholder benefits [47,48]. Our findings showed that even interacting with investigators more than once a week was perceived as satisfactory and not as too much interaction, opening the possibility that stakeholder fatigue may not be an issue when there is an obvious connection between the reason for the interaction and a benefit to the stakeholder. Almost all stakeholders left these interactions feeling better informed by the knowledge and outputs produced by projects and able to apply such knowledge and outputs to general resource management. However, very few of them were able to directly implement this information into climate adaptation planning or action, with several mentioning a need for additional time to use the results. Even so, stakeholders placed value on participation in these projects due to the relational benefits that they gained, such as growing their professional network and conversing with scientific experts in informal settings. Stakeholders also emphasized investigators’ personal collaborative natures such as their ability to demonstrate mutual respect and humility, illustrating the importance of an investigator’s willingness to take an “apprentice” role and learn from the decision makers [49].

Importantly, attempting to generate a summative “one size fits all” survey for such a broad set of objectives prevented us from examining the societal impact of individual projects, even if it helped identify characteristics of projects found satisfactory by stakeholders. Although we took care to design a single evaluation process that built on appropriate theory, methods, and survey design, we discovered that each project came with its own unique objective regarding societal impact, which ideally needed an individually tailored evaluand and measures. Surveys such as ours are an increasingly common way for programs to evaluate the societal impact of their activities, but the results can fall short of achieving that goal. Instead, we recommend that future initiatives to examine societal impact for the CASCs and other climate services funding programs integrate evaluation for each project into proposal development up front, for example by asking investigators to create a logic model with measurable attributes. Such an approach would help ensure that subsequent project evaluations are designed with a specific purpose in mind and could ameliorate the issue of a low response rate.

This additional request for inclusion of evaluation design and implementation, however, can only be met with a matching provision of additional resources from funders. Doing so would allow climate service providers to work with relevant evaluation experts to conduct an initial appraisal and design and implement an evaluation process. Smart [50] suggests seven key questions to consider when planning for evaluation, which we map to concepts useful for answering these questions in Table 3. These questions range from the big picture (why is it needed?) to the practical (who will I collect data from?). When combined, answers to the questions aid in the selection of evaluation approaches that provide meaningful information and guide improvement. Investigators, funders, and evaluators can use these questions as a common starting point when discussing evaluation.

Table 3. Seven key questions to consider when planning an evaluation process, and relevant concepts useful to answering these questions.

One unintended benefit of this study was that it fed into the broader conversation across the regional CASCs about whether it was possible to quantitatively measure the societal impacts of research projects that they fund. Since development and dissemination of this survey protocol, the Southeast CASC has carried out additional quantitative and qualitative research from which findings are still emerging. To date, their evaluation initiative has described the differing ways in which individuals and organizations use climate adaptation science [51] and the distinct pathways that projects aiming for societal impact can follow compared with projects aiming for high scientific impact [52]. These network-wide conversations are a continued effort to apply concepts from evaluation theory and practice to the challenge of funding and providing climate services.

6. Conclusions

Evaluation is a critical component of understanding the societal impact of the provision of climate services, yet many existing approaches fall short of achieving this goal. We set out to do program-wide evaluation at the project level by creating a single survey instrument. While analyzing our data, we noted that the diverse array of project objectives meant that the single overarching survey did not contain enough nuance to evaluate individual projects. Instead, each project needed a tailored measurement tool that was developed with its unique objectives in mind. For example, we found that our survey could not capture the differing definitions of success between place-based projects for targeted stakeholders and projects producing large, regional-scale products for many stakeholders. Nor could our survey capture the differences between projects designed to build relationships and trust between people and those designed to provide context for making a specific decision. This study demonstrates the limitations of a program summatively evaluating projects and shows that embedding evaluation in each project from the start would be beneficial. In particular, funders of science can encourage applicants to proactively consider evaluation during proposal development and provide the resources to bring relevant and necessary evaluation expertise into an investigator team.

Author Contributions

Conceptualization, A.B.-D. and R.A.M.; Investigation, A.B.-D.; Supervision, R.A.M.; Writing—original draft, A.B.-D.; Writing—review & editing, A.B.-D. and R.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the U.S. Geological Survey South Central and North Central Climate Adaptation Science Centers. Any use of trade, firm, or product names is for descriptive purposes only and does not imply endorsement by the U.S. Government.

Institutional Review Board Statement

The study was conducted in accordance with U.S. Geological Survey Fundamental Science Practices and the Declaration of Helsinki, and was approved by the Institutional Review Board of The University of Oklahoma (IRB number 7457). Paperwork Reduction Act approval was obtained from the U.S. Office of Management and Budget (control number 1090-0011).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Bamzai-Dodson, A.; Lackett, J.; McPherson, R.A. North Central and South Central Climate Adaptation Science Center Project Evaluation: Survey Data Public Summary; U.S. Geological Survey data release: Reston, VA, USA, 2022. https://doi.org/10.5066/P93WPOS5.

Acknowledgments

We thank the members of A.B.-D.’s dissertation committee, the Climate Adaptation Science Center Evaluation Working Group, and the Science of Actionable Knowledge Working Group for insightful discussions around what constitutes successful actionable science for climate adaptation. Special thanks are given to Kate Malpeli and Louise Johansson for their design work on Figure 1.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Crutzen, P.J. The “Anthropocene”. In Earth System Science in the Anthropocene; Ehlers, E., Krafft, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 13–18. ISBN 978-3-540-26590-0.

- National Research Council. Meeting the Challenge of Climate; The National Academies Press: Washington, DC, USA, 1982.

- Dilling, L.; Lemos, M.C. Creating Usable Science: Opportunities and Constraints for Climate Knowledge Use and Their Implications for Science Policy. Glob. Environ. Chang. 2011, 21, 680–689.

- Brasseur, G.P.; Gallardo, L. Climate Services: Lessons Learned and Future Prospects. Earth’s Future 2016, 4, 79–89.

- Reed, M.S.; Vella, S.; Challies, E.; de Vente, J.; Frewer, L.; Hohenwallner-Ries, D.; Huber, T.; Neumann, R.K.; Oughton, E.A.; del Ceno, J.S.; et al. A Theory of Participation: What Makes Stakeholder and Public Engagement in Environmental Management Work? Restor. Ecol. 2018, 26, S7–S17.

- National Research Council. Informing Decisions in a Changing Climate; The National Academies Press: Washington, DC, USA, 2009.

- Nguyen, V.M.; Young, N.; Brownscombe, J.W.; Cooke, S.J. Collaboration and Engagement Produce More Actionable Science: Quantitatively Analyzing Uptake of Fish Tracking Studies. Ecol. Appl. 2019, 29, e01943.

- Meadow, A.M.; Owen, G. Planning and Evaluating the Societal Impacts of Climate Change Research Project: A Guidebook for Natural and Physical Scientists Looking to Make a Difference; UA Faculty Publications: Tucson, AZ, USA, 2021.

- Robinson, J.A.; Hawthorne, T.L. Making Space for Community-Engaged Scholarship in Geography. Prof. Geogr. 2018, 70, 277–283.

- Cozzens, S.E. The Knowledge Pool: Measurement Challenges in Evaluating Fundamental Research Programs. Eval. Program Plan. 1997, 20, 77–89.

- National Research Council. Using Science as Evidence in Public Policy; The National Academies Press: Washington, DC, USA, 2012.

- Meadow, A.M.; Ferguson, D.B.; Guido, Z.; Horangic, A.; Owen, G.; Wall, T. Moving toward the Deliberate Coproduction of Climate Science Knowledge. Weather Clim. Soc. 2015, 7, 179–191.

- VanderMolen, K.; Meadow, A.M.; Horangic, A.; Wall, T.U. Typologizing Stakeholder Information Use to Better Understand the Impacts of Collaborative Climate Science. Environ. Manag. 2020, 65, 178–189.

- Bamzai-Dodson, A.; Cravens, A.E.; Wade, A.; McPherson, R.A. Engaging with Stakeholders to Produce Actionable Science: A Framework and Guidance. Weather Clim. Soc. 2021, 13, 1027–1041.

- Block, D.R.; Hague, E.; Curran, W.; Rosing, H. Measuring Community and University Impacts of Critical Civic Geography: Insights from Chicago. Prof. Geogr. 2018, 70, 284–290.

- Ford, J.D.; Knight, M.; Pearce, T. Assessing the ‘Usability’ of Climate Change Research for Decision-Making: A Case Study of the Canadian International Polar Year. Glob. Environ. Chang. 2013, 23, 1317–1326.

- Baylor, R.; Esper, H.; Fatehi, Y.; de Garcia, D.; Griswold, S.; Herrington, R.; Belhoussein, M.O.; Plotkin, G.; Yamron, D. Implementing Developmental Evaluation: A Practical Guide for Evaluators and Administrators; U.S. Agency for International Development: Washington, DC, USA, 2019.

- Patton, M.Q. Evaluation Science. Am. J. Eval. 2018, 39, 183–200.

- Hansen, H.F. Choosing Evaluation Models. Evaluation 2005, 11, 447–462.

- Preskill, H.; Russ-Eft, D. Building Evaluation Capacity; Sage Publications: New York, NY, USA, 2004; ISBN 1-4833-8931-6.

- Weiss, C.H. Have We Learned Anything New About the Use of Evaluation? Am. J. Eval. 1998, 19, 21–33.

- Patton, M.Q. Developmental Evaluation: Applying Complexity Concepts to Enhance Innovation and Use; Guilford Press: New York, NY, USA, 2011.

- Patton, M.Q. Essentials of Utilization-Focused Evaluation; SAGE: Saint Paul, MN, USA, 2012.

- Coryn, C.L.S.; Hattie, J.A.; Scriven, M.; Hartmann, D.J. Models and Mechanisms for Evaluating Government-Funded Research. Am. J. Eval. 2007, 28, 437–457.

- Doemeland, D.; Trevino, J. Which World Bank Reports Are Widely Read? The World Bank: Washington, DC, USA, 2014; pp. 1–34.

- Wall, T.; Meadow, A.M.; Horangic, A. Developing Evaluation Indicators to Improve the Process of Coproducing Usable Climate Science. Weather Clim. Soc. 2017, 9, 95–107.

- McNie, E.C. Delivering Climate Services: Organizational Strategies and Approaches for Producing Useful Climate-Science Information. Weather Clim. Soc. 2013, 5, 14–26.

- Guido, Z.; Hill, D.; Crimmins, M.; Ferguson, D. Informing Decisions with a Climate Synthesis Product: Implications for Regional Climate Services. Weather Clim. Soc. 2013, 5, 83–92.

- VanLandingham, G.; Silloway, T. Bridging the Gap between Evidence and Policy Makers: A Case Study of the Pew-MacArthur Results First Initiative. Public Adm. Rev. 2016, 76, 542–546.

- Boschetti, F.; Cvitanovic, C.; Fleming, A.; Fulton, E. A Call for Empirically Based Guidelines for Building Trust among Stakeholders in Environmental Sustainability Projects. Sustain. Sci. 2016, 11, 855–859.

- Lacey, J.; Howden, M.; Cvitanovic, C.; Colvin, R.M. Understanding and Managing Trust at the Climate Science–Policy Interface. Nat. Clim. Chang. 2017, 8, 22–28.

- Klink, J.; Koundinya, V.; Kies, K.; Robinson, C.; Rao, A.; Berezowitz, C.; Widhalm, M.; Prokopy, L. Enhancing Interdisciplinary Climate Change Work through Comprehensive Evaluation. Clim. Risk Manag. 2017, 15, 109–125.

- Colavito, M.M.; Trainor, S.F.; Kettle, N.P.; York, A. Making the Transition from Science Delivery to Knowledge Coproduction in Boundary Spanning: A Case Study of the Alaska Fire Science Consortium. Weather Clim. Soc. 2019, 11, 917–934.

- Owen, G.; Ferguson, D.B.; McMahan, B. Contextualizing Climate Science: Applying Social Learning Systems Theory to Knowledge Production, Climate Services, and Use-Inspired Research. Clim. Chang. 2019, 157, 151–170.

- Salazar, K. Department of the Interior Secretarial Order 3289: Addressing the Impacts of Climate Change on America’s Water, Land, and Other Natural and Cultural Resources, 2009. Available online: https://www.doi.gov/elips/search?query=&name=&doc_type=2408&doc_num_label=&policy_category=All&approval_date=&so_order_num=3289&so_amended_num=&chapter=&dm_prt=&archived=All&office=All&date_from%5Bdate%5D=&date_to%5Bdate%5D=&sort_by=search_api_relevance&sort_order=DESC&items_per_page=10 (accessed on 30 May 2017).

- ACCCNRS. Report to the Secretary of the Interior; Advisory Committee on Climate Change and Natural Resource Science: Washington, DC, USA, 2015.

- USGS Program Evaluation|Climate Adaptation Science Centers. Available online: https://www.usgs.gov/programs/climate-adaptation-science-centers/program-evaluation (accessed on 25 October 2021).

- Bamzai-Dodson, A.; Lackett, J.; McPherson, R.A. CASC Project Evaluation Survey Template; U.S. Geological Survey Data Release: Reston, VA, USA, 2022.

- Wardropper, C.B.; Dayer, A.A.; Goebel, M.S.; Martin, V.Y. Conducting Conservation Social Science Surveys Online. Conserv. Biol. 2021, 35, 1650–1658.

- Wolf, C.; Joye, D.; Smith, T.; Fu, Y. The SAGE Handbook of Survey Methodology; SAGE Publications: London, UK, 2016.

- Qualtrics: Provo, UT Qualtrics (Copyright 2020). Available online: https://www.qualtrics.com/ (accessed on 30 May 2017).

- Bamzai-Dodson, A.; Lackett, J.; McPherson, R.A. North Central and South Central Climate Adaptation Science Center Project Evaluation: Survey Data Public Summary; U.S. Geological Survey Data Release: Reston, VA, USA, 2022.

- Ferguson, D.B.; Meadow, A.M.; Huntington, H.P. Making a Difference: Planning for Engaged Participation in Environmental Research. Environ. Manag. 2022, 69, 227–243.

- Arnott, J.C. Pens and Purse Strings: Exploring the Opportunities and Limits to Funding Actionable Sustainability Science. Res. Policy 2021, 50, 104362.

- Arnott, J.C.; Kirchhoff, C.J.; Meyer, R.M.; Meadow, A.M.; Bednarek, A.T. Sponsoring Actionable Science: What Public Science Funders Can Do to Advance Sustainability and the Social Contract for Science. Curr. Opin. Environ. Sustain. 2020, 42, 38–44.

- Steger, C.; Klein, J.A.; Reid, R.S.; Lavorel, S.; Tucker, C.; Hopping, K.A.; Marchant, R.; Teel, T.; Cuni-Sanchez, A.; Dorji, T.; et al. Science with Society: Evidence-Based Guidance for Best Practices in Environmental Transdisciplinary Work. Glob. Environ. Chang. 2021, 68, 102240.

- Clark, T. “We’re Over-Researched Here!”: Exploring Accounts of Research Fatigue within Qualitative Research Engagements. Sociology 2008, 42, 953–970.

- Young, N.; Cooke, S.J.; Hinch, S.G.; DiGiovanni, C.; Corriveau, M.; Fortin, S.; Nguyen, V.M.; Solås, A.-M. “Consulted to Death”: Personal Stress as a Major Barrier to Environmental Co-Management. J. Environ. Manag. 2020, 254, 109820.

- Herrick, C.; Vogel, J. Climate Adaptation at the Local Scale: Using Federal Climate Adaptation Policy Regimes to Enhance Climate Services. Sustainability 2022, 14, 8135.

- Smart, J. Planning an Evaluation: Step by Step; Australian Institute of Family Studies: Victoria, Australia, 2020.

- Courtney, S.; Hyman, A.; McNeal, K.S.; Maudlin, L.C.; Armsworth, P. Development of a Survey Instrument to Assess Individual and Organizational Use of Climate Adaptation Science. Environ. Sci. Policy 2022, 137, 271–279.

- Hyman, A.; Courtney, S.; McNeal, K.S.; Bialic-Murphy, L.; Furiness, C.; Eaton, M.; Armsworth, P. Distinct Pathways to Stakeholder Use versus Scientific Impact in Climate Adaptation Research. Conserv. Lett. 2022, 15, e12892.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).