Safety Warning of Mine Conveyor Belt Based on Binocular Vision

Abstract

1. Introduction

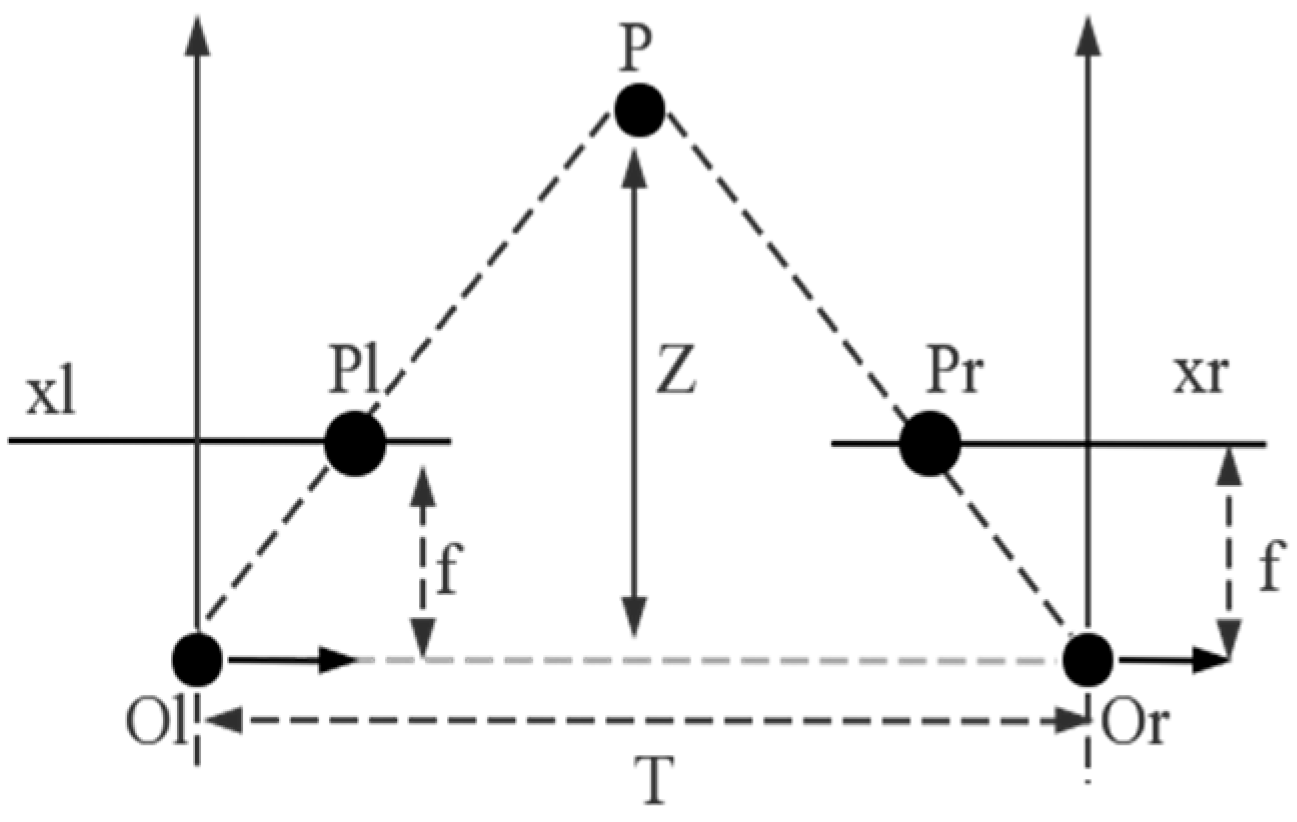

2. The Principle of Binocular Vision

2.1. Binocular Camera Model

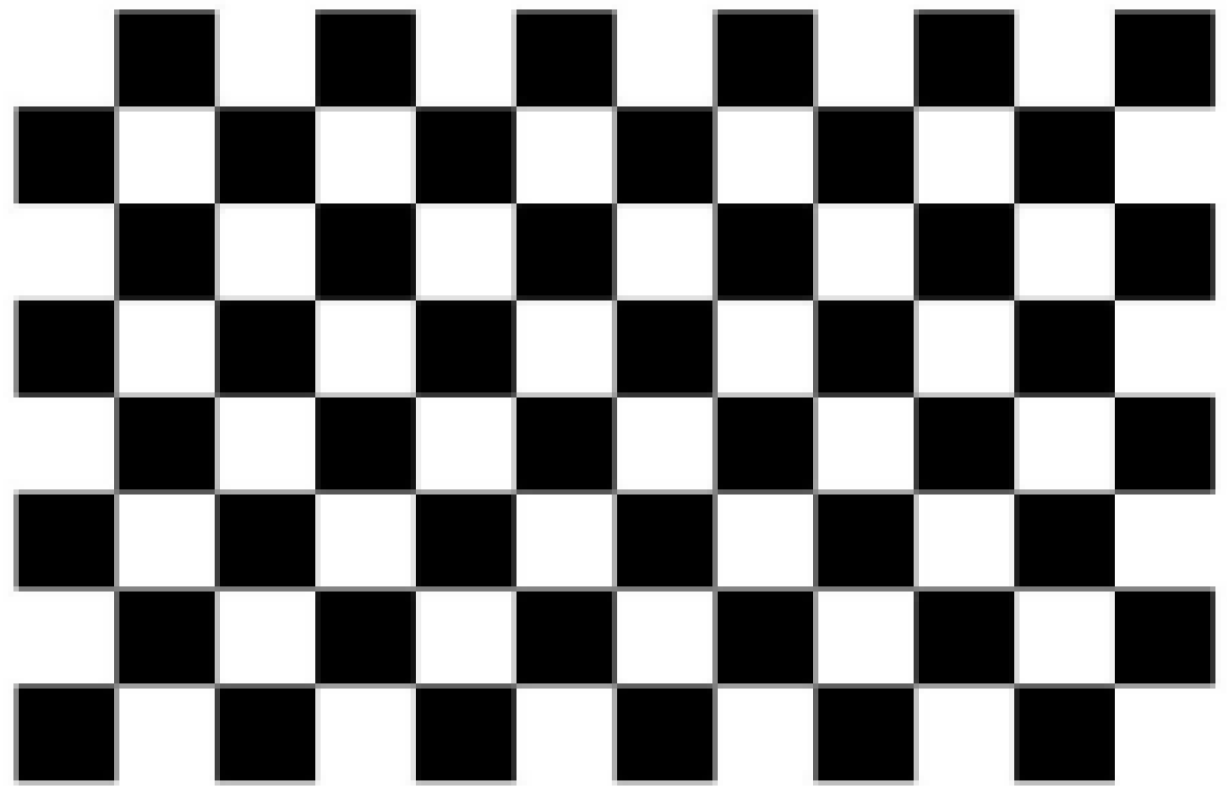

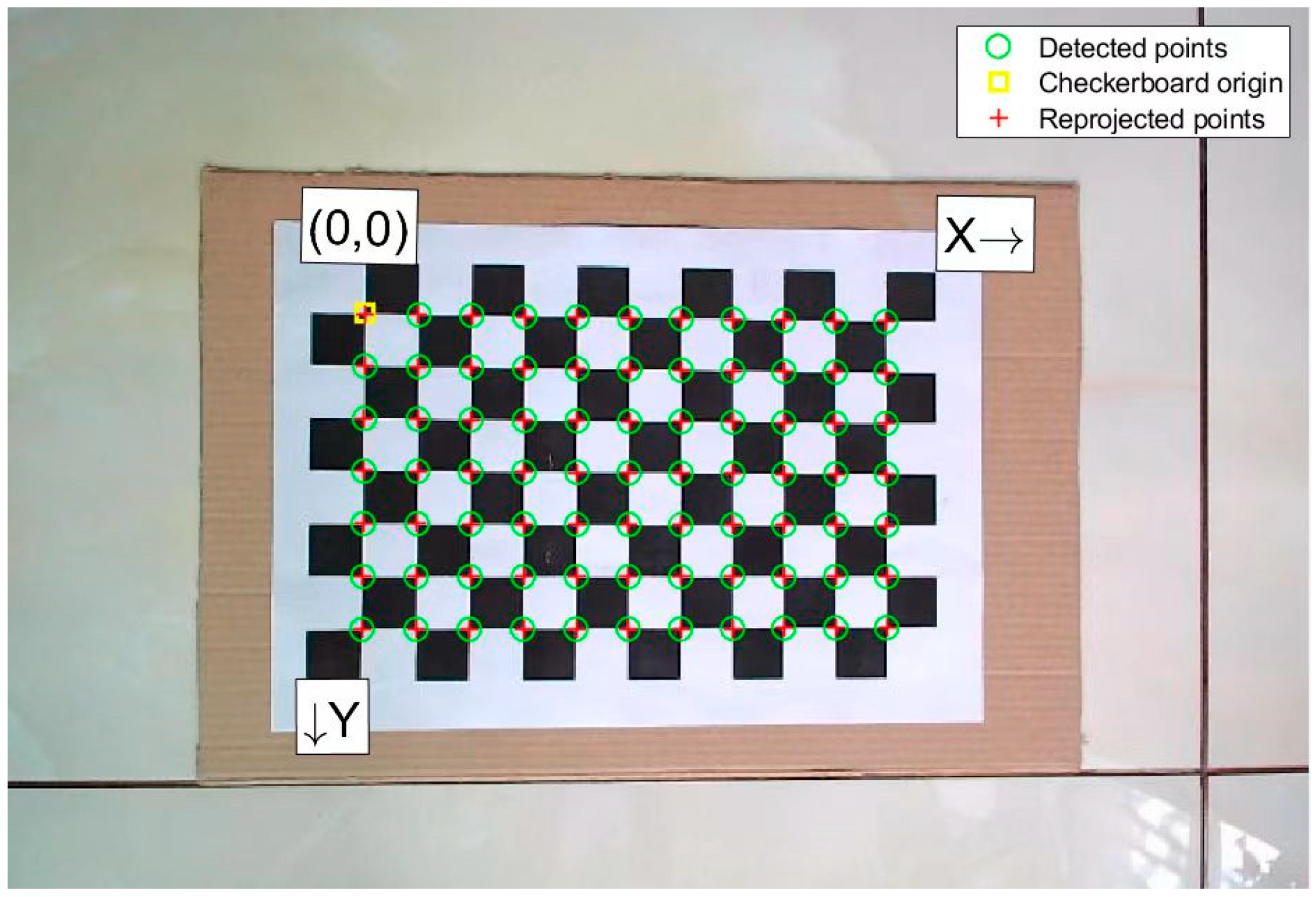
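
Section 2.1 rests on the triangulation relation of a parallel binocular camera: once the two images are rectified, a point's depth is inversely proportional to its horizontal disparity. The sketch below assumes an ideal rectified pair with square pixels. The function names and the example focal length, baseline, and principal point are hypothetical and are not the calibrated parameters of the camera used in this study.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_mm: float) -> float:
    """Depth Z (mm) of a point seen by an ideal rectified, parallel stereo pair.

    Triangulation: Z = f * B / d, where f is the focal length in pixels,
    B the baseline (distance between the two optical centres) in mm and
    d = x_left - x_right the horizontal disparity in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_mm / disparity_px


def point_from_pixel(x_left: float, y_left: float, disparity_px: float,
                     focal_px: float, baseline_mm: float,
                     cx: float, cy: float) -> tuple[float, float, float]:
    """Back-project a left-image pixel to left-camera coordinates (X, Y, Z) in mm.

    Assumes square pixels (fx == fy == focal_px) and principal point (cx, cy).
    """
    z = depth_from_disparity(disparity_px, focal_px, baseline_mm)
    x = (x_left - cx) * z / focal_px
    y = (y_left - cy) * z / focal_px
    return x, y, z


# Hypothetical example values: f = 700 px, B = 60 mm, d = 100 px.
print(depth_from_disparity(100, 700, 60))  # 420.0
```

Because depth varies as 1/d, a one-pixel disparity error costs more depth accuracy for distant points than for near ones, which is one reason camera resolution and calibration quality matter for the measurements reported later.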

2.2. Camera Calibration

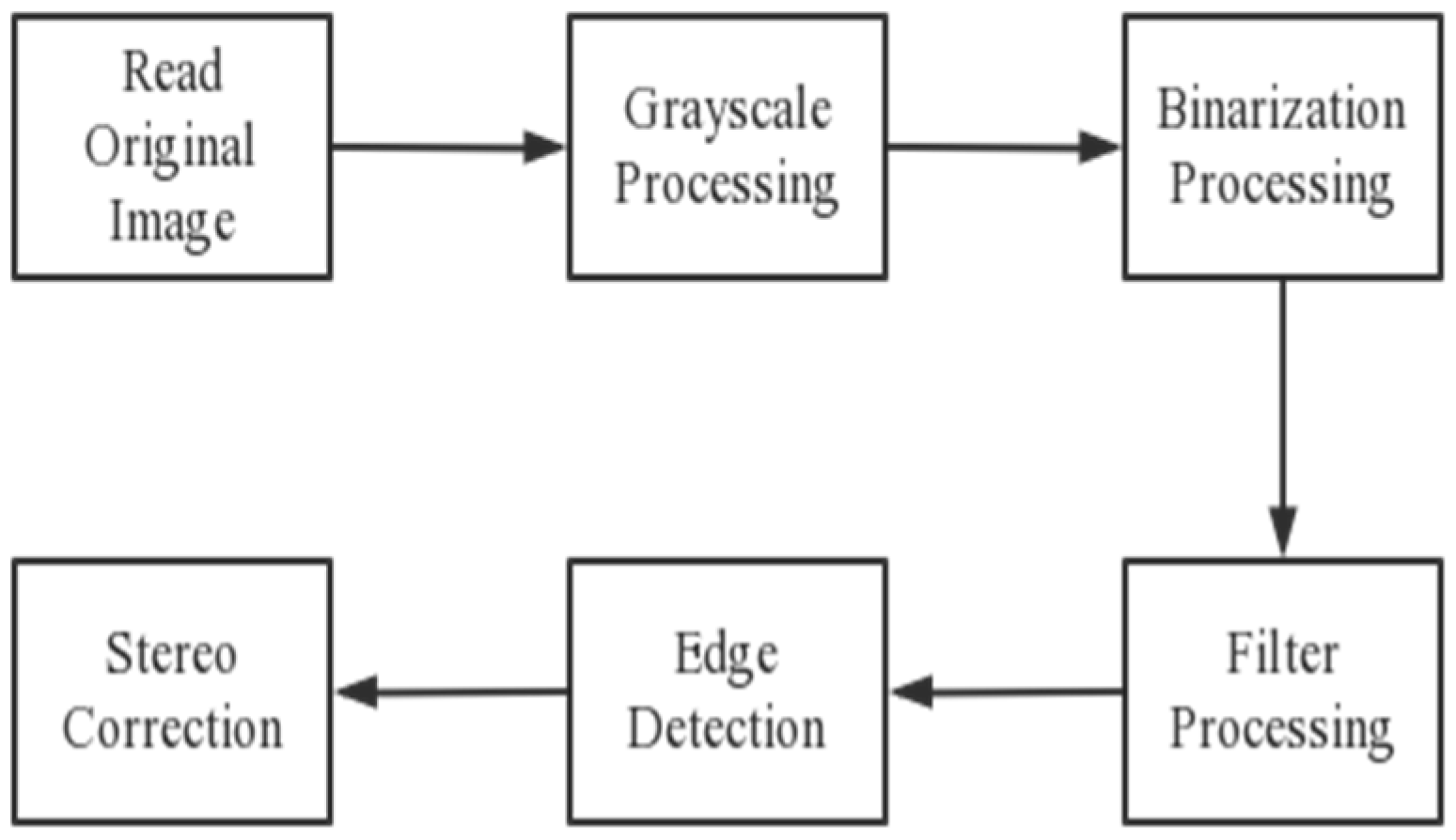
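
Calibration estimates, for each camera, the intrinsic matrix and distortion coefficients listed in the camera-parameter table, plus the rotation and translation between the two cameras. For reference, a common form of this model (zero skew, radial-tangential distortion, as used for example by OpenCV) is written out below; that the study used exactly this parameterization is an assumption. In the distortion-free case, with $(u, v)$ the pixel coordinates, $s$ a scale factor, $K$ the intrinsic matrix, and $R$, $\mathbf{t}$ the extrinsic rotation and translation:

$$
s\begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
= K\begin{bmatrix} R & \mathbf{t} \end{bmatrix}
\begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

Lens distortion acts on the normalized camera coordinates $(x, y) = (X_c/Z_c,\ Y_c/Z_c)$ before $K$ is applied:

$$
\begin{aligned}
x_d &= x\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + 2 p_1 x y + p_2 (r^2 + 2x^2),\\
y_d &= y\,(1 + k_1 r^2 + k_2 r^4 + k_3 r^6) + p_1 (r^2 + 2y^2) + 2 p_2 x y,
\end{aligned}
\qquad r^2 = x^2 + y^2
$$

and the observed pixel is $u = f_x x_d + c_x$, $v = f_y y_d + c_y$. For the stereo pair, calibration additionally yields the rotation and translation from the left camera to the right camera; the length of that translation vector is the baseline $B$ used in triangulation.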

3. Image Preprocessing and Stereo Correction

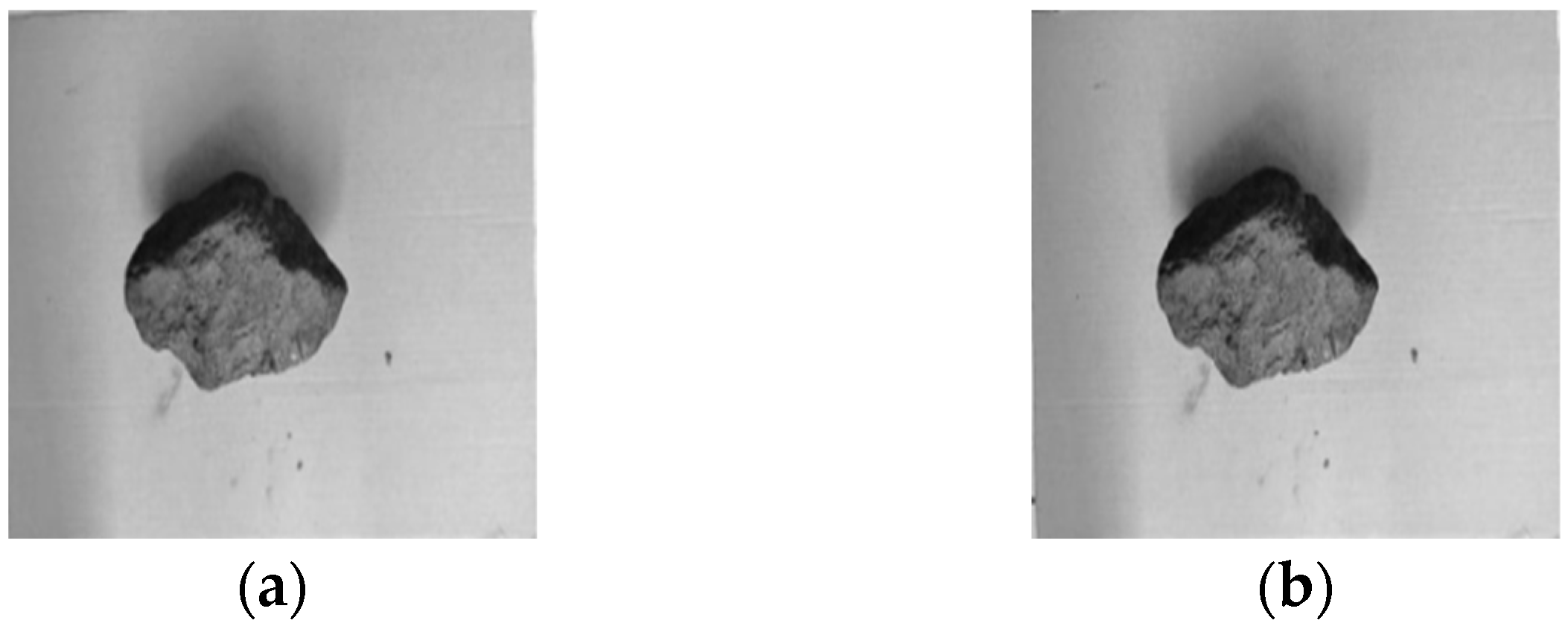

3.1. Image Preprocessing

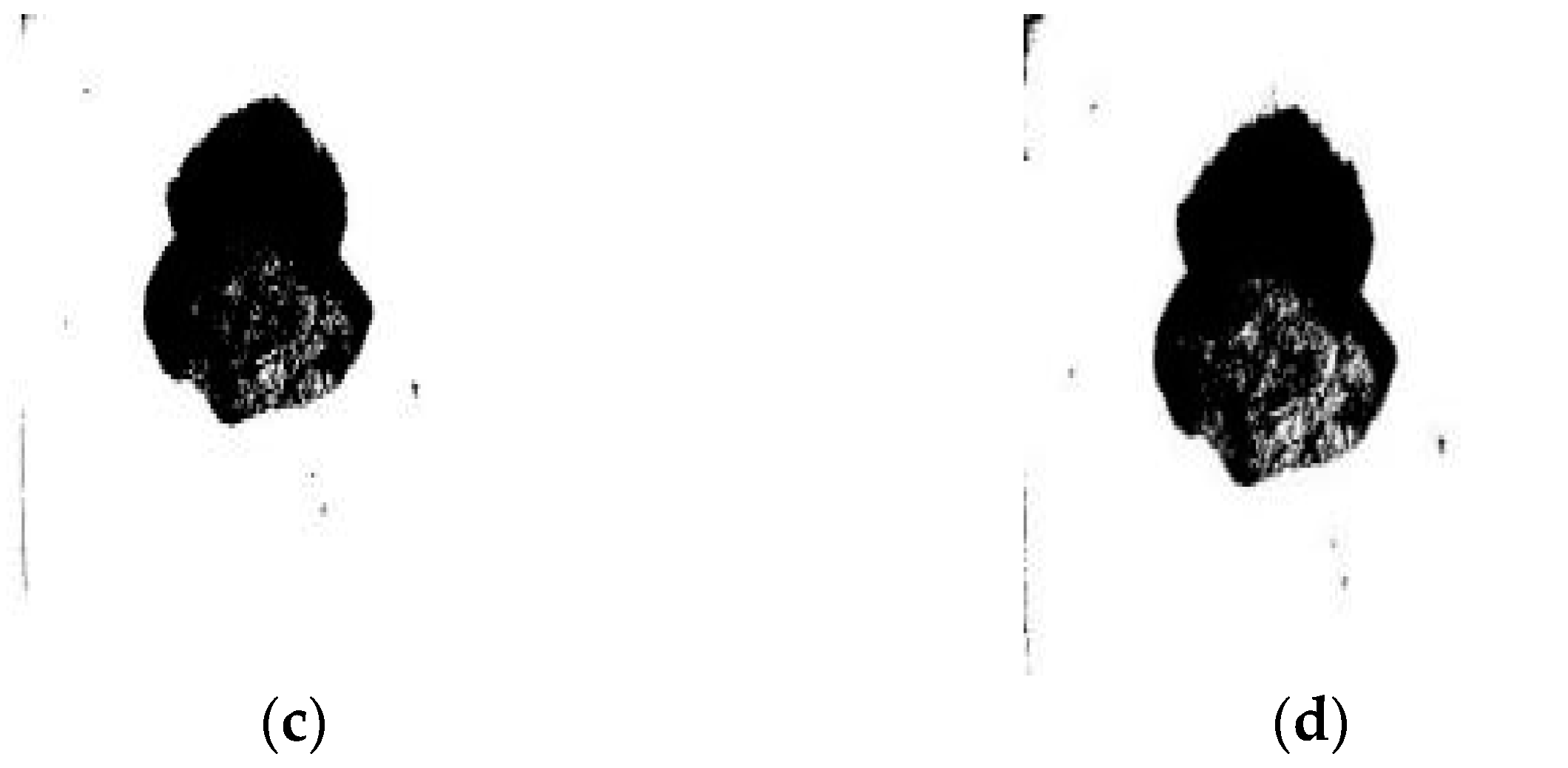

3.2. Stereo Correction

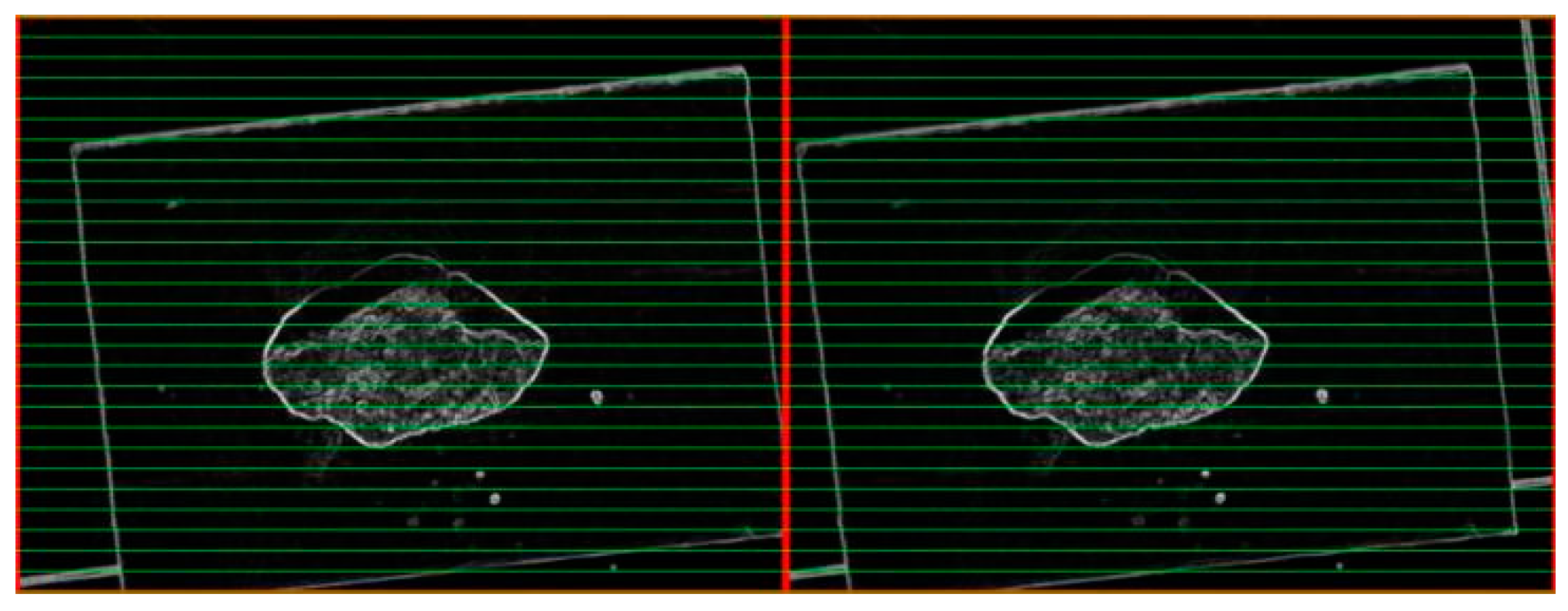

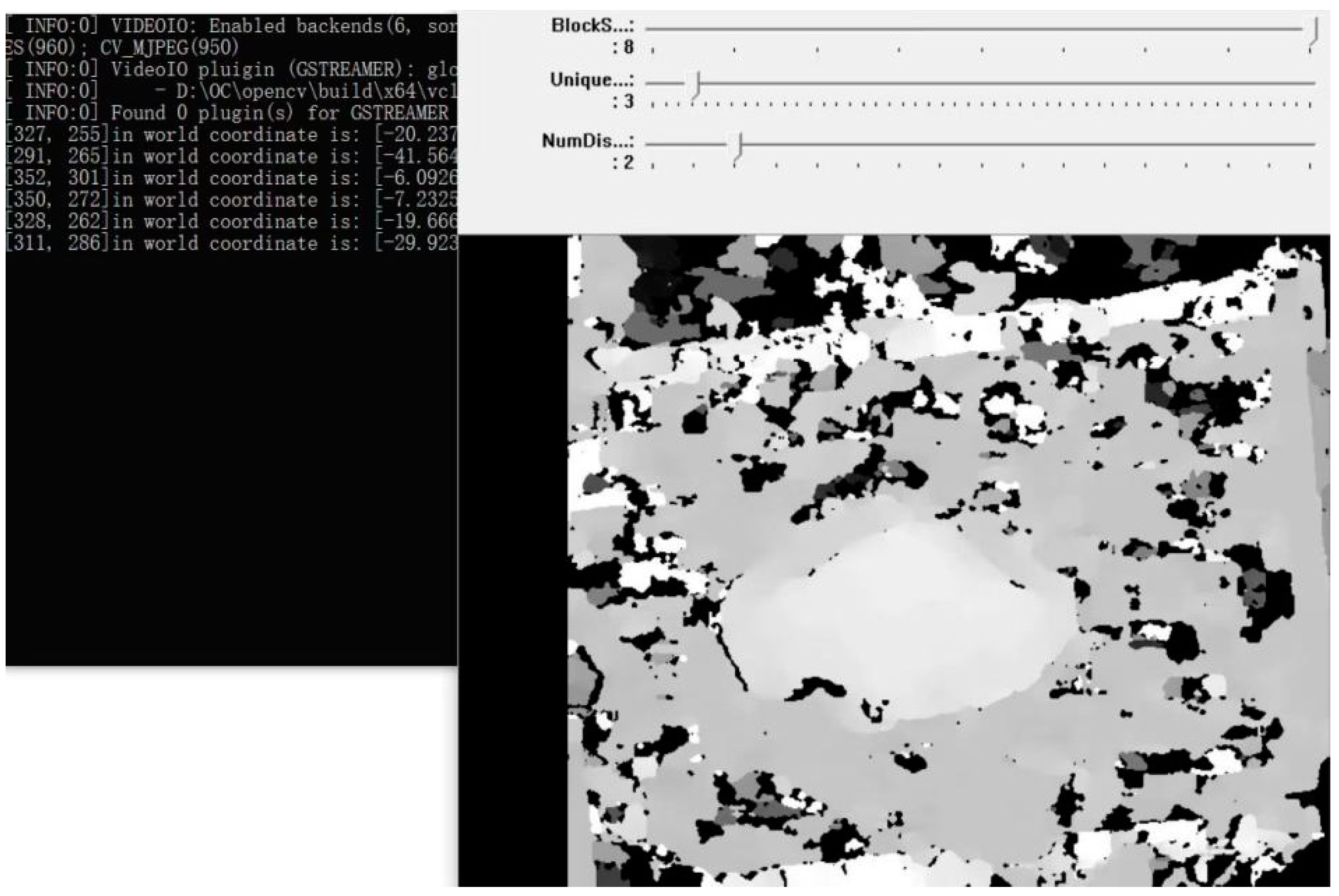

4. Stereo-Matching Algorithm
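
As a hedged illustration of what stereo matching computes, the sketch below uses plain sum-of-absolute-differences (SAD) block matching with a winner-takes-all choice of disparity. This is a generic textbook technique and is not claimed to be the authors' algorithm; practical systems usually use faster and more robust variants such as semi-global matching. The function name and parameters are hypothetical.

```python
def block_match_sad(left, right, max_disp, half_win=1):
    """Winner-takes-all SAD block matching on a rectified grey-level pair.

    left, right: equal-sized 2-D lists of grey values whose rows are
        corresponding epipolar lines (i.e. the pair is already rectified).
    max_disp: largest disparity (pixels) to search.
    half_win: half-size of the square matching window ((2*half_win+1)^2 pixels).

    Returns a 2-D list of integer disparities. Pixels within half_win of the
    border are left at 0; near the left edge only disparities that keep the
    window inside the right image are searched.
    """
    h, w = len(left), len(left[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(half_win, h - half_win):
        for x in range(half_win, w - half_win):
            best_cost, best_d = None, 0
            for d in range(max_disp + 1):
                # A point at column x in the left image appears at x - d in the right one.
                if x - d - half_win < 0:
                    break
                cost = 0
                for dy in range(-half_win, half_win + 1):
                    for dx in range(-half_win, half_win + 1):
                        cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
                if best_cost is None or cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y][x] = best_d
    return disp


# Synthetic rectified pair: the right image is the left one shifted by 2 pixels.
W, H = 12, 5
left = [[x * x + 3 * y for x in range(W)] for y in range(H)]
right = [[(x + 2) ** 2 + 3 * y for x in range(W)] for y in range(H)]
disp = block_match_sad(left, right, max_disp=4)
print(disp[2][3:11])  # [2, 2, 2, 2, 2, 2, 2, 2]
```

The synthetic images have strong, unambiguous texture, so every interior window matches exactly at the true shift. Weakly textured or uniformly coloured surfaces, such as the coal blocks discussed in Section 5, produce near-tied costs and therefore noisier disparity maps.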

5. Calculation of Target Volume

- (1) The camera used is an entry-level commercial camera whose accuracy is far lower than that of an industrial camera;

- (2) The irregular shape of the coal blocks and their weak color contrast leave flaws in the disparity maps that the algorithm generates for them;

- (3) The experimental environment is simple, which affects the measurement accuracy of the binocular camera to some extent.
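
One way to turn a depth map into a target volume is to sum, over the pixels of the target region, the height of the surface above the belt times the ground area that one pixel covers. The height is taken as the camera-to-ground distance minus the camera-to-surface distance, as in the height-measurement table. The per-pixel footprint $(Z/f)^2$, the top-down flat-belt geometry, and the function name are assumptions for this sketch, not the authors' stated formula.

```python
def volume_from_depth(depth_mm, ground_depth_mm, focal_px, mask=None):
    """Approximate the volume (mm^3) of material lying on a flat belt.

    depth_mm: 2-D list of per-pixel depths (camera-to-surface distance, mm),
        e.g. obtained from a disparity map with Z = f * B / d.
    ground_depth_mm: camera-to-belt distance (mm); assumes the camera looks
        straight down at a flat belt.
    focal_px: focal length in pixels (square pixels assumed).
    mask: optional 2-D list of booleans selecting the target region.
    """
    total = 0.0
    for r, row in enumerate(depth_mm):
        for c, z in enumerate(row):
            if mask is not None and not mask[r][c]:
                continue
            height = ground_depth_mm - z  # height of the surface above the belt
            if height <= 0:
                continue  # belt (or noise below it): contributes no volume
            side = z / focal_px  # size in mm of one pixel's footprint at depth z
            total += height * side * side  # volume of one vertical column
    return total


# Hypothetical 10 x 10-pixel box, 50 mm tall, on a belt 400 mm from the camera.
depth = [[350.0 if 2 <= r < 12 and 2 <= c < 12 else 400.0 for c in range(15)]
         for r in range(15)]
print(volume_from_depth(depth, 400.0, 500.0))  # about 2450 (100 px * 50 mm * 0.7 mm * 0.7 mm)
```

Treating each pixel as a vertical column ignores any material hidden beneath overhangs, so an irregular block will generally be over-estimated. This is consistent with every measured volume in the volume table exceeding the actual one.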

6. Conclusions

- (1) Experiments show that the measurement error is about 5.65%. Taking this error into account, the volume of foreign bodies such as large irregular coal blocks and gangue is used as the warning threshold. On this basis, a mine conveyor belt safety-warning system is designed that enables timely maintenance of the conveyor belt, keeping mine production safe and protecting the lives of mine workers.

- (2) This design was developed in a laboratory environment, guided by low cost and simple, easy operation. In practical use under natural light, recognition works well. Further research in actual mines is still needed.
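
One simple way to fold the roughly 5.65% measurement error into the volume threshold of conclusion (1) is sketched below. The rule, the function name, and the 1,000,000 mm³ threshold are hypothetical assumptions for illustration, not the system's actual logic.

```python
# Measurement error reported in the experiments (about 5.65%).
MEASUREMENT_ERROR = 0.0565


def should_warn(measured_volume_mm3: float, threshold_mm3: float,
                rel_error: float = MEASUREMENT_ERROR) -> bool:
    """Hypothetical alarm rule for an oversized coal block or gangue.

    If the true volume reaches the threshold and the measurement underestimates
    it by at most rel_error, the measured volume is still at least
    threshold * (1 - rel_error), so lowering the trigger level by that margin
    avoids missed warnings (at the cost of some extra false alarms).
    """
    return measured_volume_mm3 >= threshold_mm3 * (1.0 - rel_error)


# Hypothetical threshold of 1,000,000 mm^3 (1 L).
print(should_warn(950_000, 1_000_000))  # True
print(should_warn(900_000, 1_000_000))  # False
```

Lowering the trigger favours safety over false-alarm rate; a system that mainly over-estimates volume, as in the experiments here, could instead keep the trigger at the nominal threshold.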

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Camera | Intrinsic Parameter Matrix | Distortion Parameters |
|---|---|---|
| Left camera | | |
| Right camera | | |

| Group | Camera Height above Coal Block/mm | Camera Height above Ground/mm | Measured Object Height/mm | Relative Error |
|---|---|---|---|---|
| Group One | 338.313 | 397.34 | 59.027 | 1.79% |
| Group Two | 345.335 | 406.019 | 60.684 | 0.97% |
| Group Three | 338.994 | 401.31 | 62.316 | 3.68% |
| Group Four | 337.066 | 397.38 | 60.314 | 0.37% |
| Group Five | 344.435 | 402.823 | 58.388 | 2.85% |
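
The measured object height in the table above is the difference between the camera's distance to the ground and its distance to the top of the coal block. The short sketch below reproduces that column from the first two; the function and variable names are illustrative.

```python
# (camera-to-coal-block, camera-to-ground) distances in mm, from the table above.
MEASUREMENTS = {
    "Group One": (338.313, 397.34),
    "Group Two": (345.335, 406.019),
    "Group Three": (338.994, 401.31),
    "Group Four": (337.066, 397.38),
    "Group Five": (344.435, 402.823),
}


def object_height(dist_to_block_mm: float, dist_to_ground_mm: float) -> float:
    """Height of the block above the ground plane, in mm."""
    return dist_to_ground_mm - dist_to_block_mm


for group, (to_block, to_ground) in MEASUREMENTS.items():
    print(f"{group}: {object_height(to_block, to_ground):.3f} mm")
```

Because the height is a difference of two stereo depth estimates, errors in either distance pass directly into the height, which is why small depth errors of a few millimetres appear as percent-level height errors for a block only about 60 mm tall.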

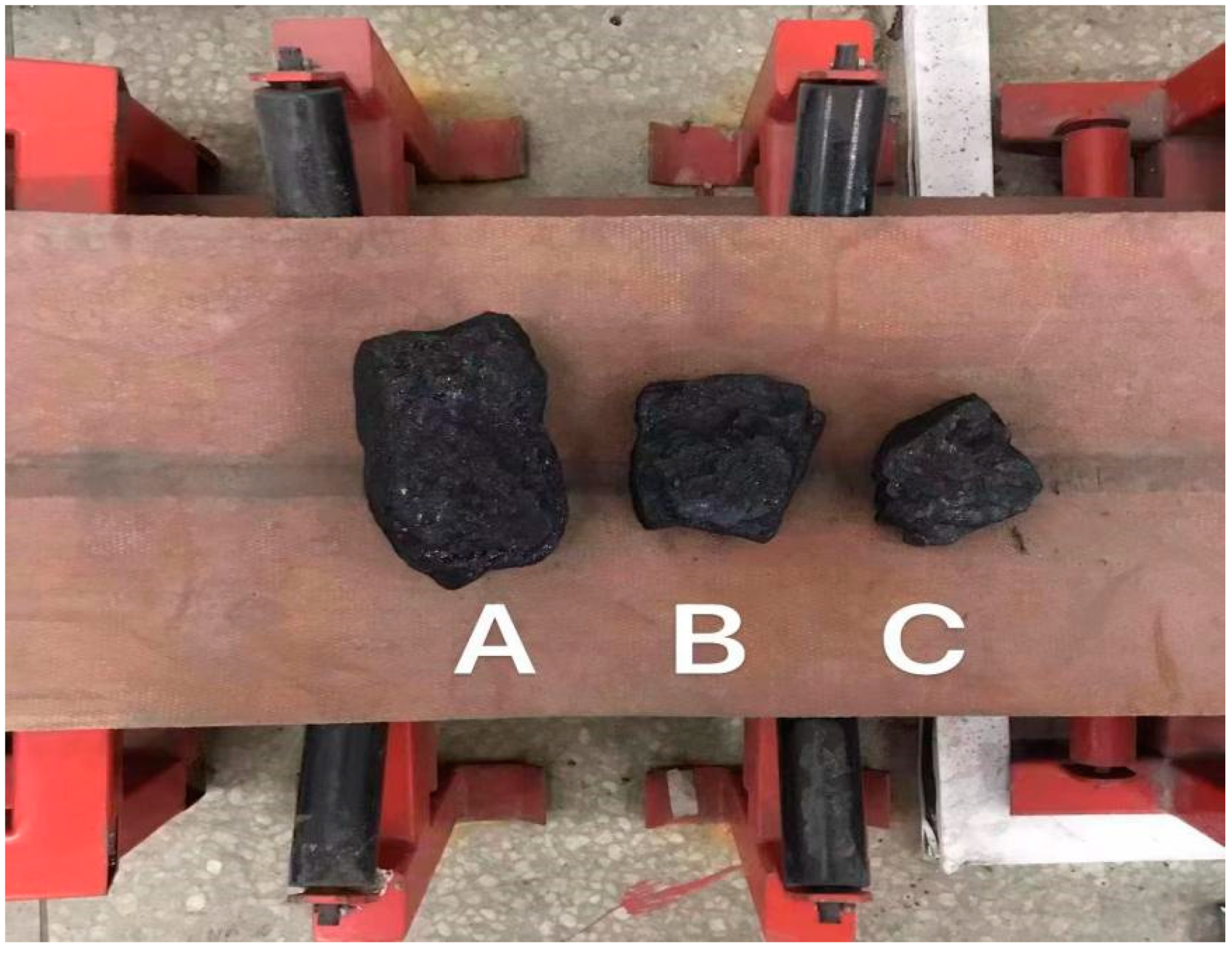

| Coal Block | Measured Volume/mm³ | Actual Volume/mm³ | Relative Error |
|---|---|---|---|
| A | 674,177.828945 | 650,000 | 2.95% |
| B | 455,641.367206 | 430,000 | 5.96% |
| C | 244,690.001879 | 230,000 | 6.39% |
| B + C | 708,409.589607 | 660,000 | 7.33% |
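
The relative-error column follows the usual definition |measured - actual| / actual, expressed in percent. The function below is a minimal sketch of that calculation, applied here to row B; the function name is illustrative.

```python
def relative_error_percent(measured: float, actual: float) -> float:
    """Relative error |measured - actual| / actual, expressed in percent."""
    if actual == 0:
        raise ValueError("actual value must be non-zero")
    return abs(measured - actual) / actual * 100.0


# Row B of the table: measured 455,641.367206 mm^3, actual 430,000 mm^3.
print(f"{relative_error_percent(455_641.367206, 430_000):.2f}%")  # 5.96%
```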

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Hao, S.; Wang, H.; Wang, B.; Lin, J.; Sui, Y.; Gu, C. Safety Warning of Mine Conveyor Belt Based on Binocular Vision. Sustainability 2022, 14, 13276. https://doi.org/10.3390/su142013276


Chicago/Turabian Style: Zhang, Lei, Shangkai Hao, Haosheng Wang, Bin Wang, Jiangong Lin, Yiping Sui, and Chao Gu. 2022. "Safety Warning of Mine Conveyor Belt Based on Binocular Vision" Sustainability 14, no. 20: 13276. https://doi.org/10.3390/su142013276
