A Study of the Relation between Byline Positions of Affiliated/Non-Affiliated Authors and the Scientific Impact of European Universities in Times Higher Education World University Rankings

Abstract

1. Introduction

1. What is the share of Q1–Q4 papers in medical science for the top five European universities (University of Oxford, United Kingdom; ETH Zurich, Switzerland; Karolinska Institutet, Sweden; Charité – Universitätsmedizin Berlin, Germany; and KU Leuven, Belgium) and the four Visegrád Group (V4) universities (Semmelweis University, Hungary; Jagiellonian University, Poland; Charles University Prague, Czech Republic; and Comenius University Bratislava, Slovakia)?
2. What is the association between the share of papers in each journal impact factor (JIF) quartile and the position of these higher education institutions in the Times Higher Education (THE) World University Rankings 2022 by subject (Clinical and health)?
3. How does the byline position of authors influence the Category Normalized Citation Impact (CNCI), and is this reflected in the position of the selected universities in the THE World University Rankings 2022 by subject (Clinical and health)?
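Research question 3 relies on the CNCI. For orientation, Clarivate InCites documents this indicator as the ratio of a paper's citations to the expected citations of papers with the same document type, publication year and subject category, averaged over categories when a paper is assigned to more than one. The study's exact variant is not stated in this excerpt, so the formula below is a reference definition rather than the authors' computation. Here $c_p$ is the citation count of paper $p$, $F_p$ its set of subject categories, and $e_{f,t_p,d_p}$ the mean citation count of papers in category $f$ with the same publication year $t_p$ and document type $d_p$:

$$
\mathrm{CNCI}_p = \frac{1}{|F_p|} \sum_{f \in F_p} \frac{c_p}{e_{f,t_p,d_p}}
$$

For example, a paper in a single category with 30 citations, where the category baseline is 20 citations, has $\mathrm{CNCI}_p = 30/20 = 1.5$; a value of 1 corresponds to the world average for that baseline.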

2. Materials and Methods

3. Results

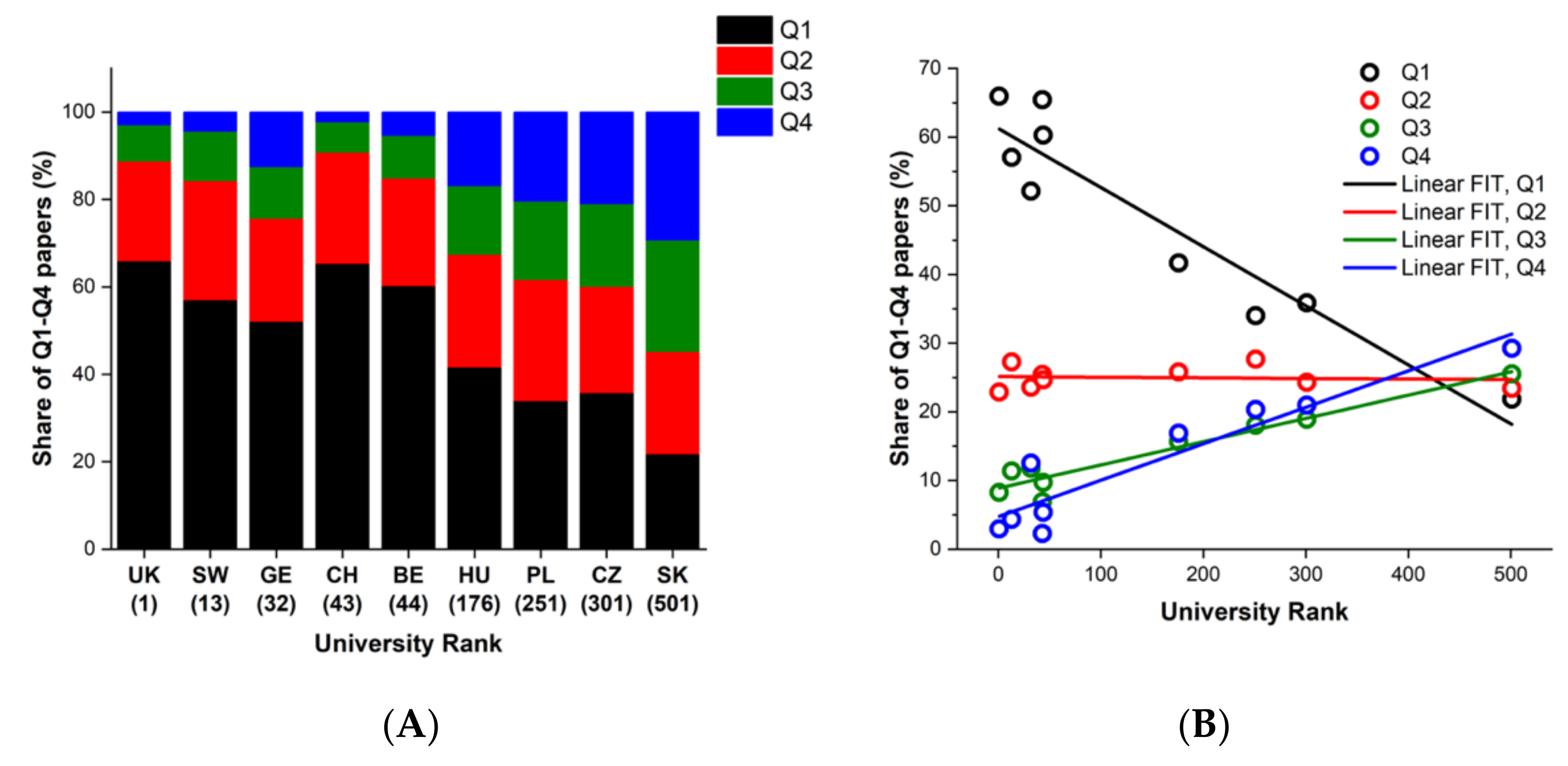

3.1. The Share of Q1–Q4 Papers Is Specific to University Rank Position

3.2. The Byline Position of Affiliated and Non-Affiliated Authors Influences the Scientific Impact of Research Papers

3.3. A Prestigious Byline Position of Non-Affiliated Authors Increases the CNCI for Q1 Research Papers

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations


| University name | Country code | THE ranking 2022 | WoS papers (N) | JIF Q1 (%) | JIF Q2 (%) | JIF Q3 (%) | JIF Q4 (%) |
|---|---|---|---|---|---|---|---|
| University of Oxford | UK | 1 | 31,089 | 65.96 | 22.87 | 8.25 | 2.92 |
| Karolinska Institutet | SW | 13 | 40,545 | 57.03 | 27.28 | 11.36 | 4.33 |
| Charité – Universitätsmedizin Berlin | GE | 32 | 27,073 | 52.14 | 23.56 | 11.76 | 12.54 |
| ETH Zurich | CH | 43 | 5989 | 65.42 | 25.43 | 6.90 | 2.25 |
| KU Leuven | BE | 44 | 22,435 | 60.30 | 24.63 | 9.70 | 5.37 |
| Semmelweis University | HU | 176–200 | 6921 | 41.67 | 25.79 | 15.65 | 16.89 |
| Jagiellonian University | PL | 251–300 | 7940 | 33.99 | 27.64 | 18.02 | 20.34 |
| Charles University Prague | CZ | 301–350 | 12,110 | 35.84 | 24.29 | 18.91 | 20.96 |
| Comenius University Bratislava | SK | 501–550 | 2942 | 21.82 | 23.42 | 25.53 | 29.23 |
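The quartile shares in the table above rest on assigning each paper the JIF quartile of its journal. A minimal sketch of that step follows, assuming the rank-based rule that Journal Citation Reports documents (a journal at rank X of Y in its subject category is Q1 if X/Y ≤ 0.25, Q2 if ≤ 0.50, Q3 if ≤ 0.75, otherwise Q4) and a single category per journal. How the study handled journals listed in several categories, and which JCR edition it used, is not stated in this excerpt. The functions `jif_quartile` and `quartile_shares` and the sample papers are hypothetical.

```python
from collections import Counter


def jif_quartile(rank: int, n_journals: int) -> int:
    """Quartile of a journal ranked `rank` (1 = highest JIF) among `n_journals`
    in one subject category: Q1 if rank/n <= 0.25, Q2 if <= 0.50, Q3 if <= 0.75,
    else Q4. The integer ceiling of 4 * rank / n avoids floating-point edge cases."""
    if not 1 <= rank <= n_journals:
        raise ValueError("rank must lie between 1 and n_journals")
    return (4 * rank + n_journals - 1) // n_journals


def quartile_shares(quartiles: list[int]) -> dict[str, float]:
    """Percentage of papers per quartile, rounded to two decimals as in the table."""
    if not quartiles:
        raise ValueError("no papers")
    counts = Counter(quartiles)
    total = len(quartiles)
    return {f"Q{q}": round(100 * counts[q] / total, 2) for q in range(1, 5)}


# Hypothetical papers: (journal rank in its category, journals in that category)
papers = [(3, 90), (40, 90), (70, 90), (12, 60), (59, 60), (5, 200)]
quartiles = [jif_quartile(rank, n) for rank, n in papers]
print(quartile_shares(quartiles))
```

Integer arithmetic is used so that boundary cases such as rank 25 of 100 fall in Q1 exactly, as the X/Y ≤ 0.25 rule requires.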

| Comparison | Linear regression (R²) | Linear regression (F test, p) | Pearson correlation coefficient (r) | Pearson correlation probability (p) |
|---|---|---|---|---|
| University rank vs. Q1 paper ratio | 0.89 | 0.0001, *** | −0.94 | 0.0001, *** |
| University rank vs. Q2 paper ratio | 0.01 | 0.82, NS | −0.08 | 0.82, NS |
| University rank vs. Q3 paper ratio | 0.094 | <0.0001, *** | 0.96 | <0.0001, *** |
| University rank vs. Q4 paper ratio | 0.87 | 0.0003, *** | 0.93 | 0.0003, *** |
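The rank statistics in the preceding table can be recomputed from the quartile shares listed for the nine universities. The sketch below is a plain-Python illustration, not the authors' code. It assumes the banded THE ranks (176–200, 251–300, 301–350, 501–550) are coded by their lower bound, as in the rank column of the affiliated/non-affiliated table, so its coefficients may differ slightly from the reported ones. For a simple regression with an intercept, R² equals r², and the F statistic with (1, n − 2) degrees of freedom is R²(n − 2)/(1 − R²); turning F or r into a p-value needs the F or t distribution (for example via `scipy.stats.pearsonr`), which is omitted here.

```python
import math

# Quartile shares (%) from the first table, in row order (Oxford ... Comenius).
# Banded ranks are coded by their lower bound: an assumption of this sketch.
RANKS = [1, 13, 32, 43, 44, 176, 251, 301, 501]
SHARES = {
    "Q1": [65.96, 57.03, 52.14, 65.42, 60.30, 41.67, 33.99, 35.84, 21.82],
    "Q2": [22.87, 27.28, 23.56, 25.43, 24.63, 25.79, 27.64, 24.29, 23.42],
    "Q3": [8.25, 11.36, 11.76, 6.90, 9.70, 15.65, 18.02, 18.91, 25.53],
    "Q4": [2.92, 4.33, 12.54, 2.25, 5.37, 16.89, 20.34, 20.96, 29.23],
}


def pearson_r(x: list[float], y: list[float]) -> float:
    """Sample Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def regression_summary(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Return (r, R^2, F) for an ordinary least-squares fit of y on x with intercept."""
    r = pearson_r(x, y)
    r2 = r * r                           # R^2 = r^2 for simple linear regression
    f = r2 * (len(x) - 2) / (1 - r2)     # F statistic with (1, n - 2) df
    return r, r2, f


for quartile, shares in SHARES.items():
    r, r2, f = regression_summary(RANKS, shares)
    print(f"rank vs. {quartile}: r={r:+.2f}  R^2={r2:.2f}  F(1,{len(RANKS) - 2})={f:.1f}")
```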

| University name (country code) | THE ranking 2022 | Affiliated (N) | Not affiliated (N) | Affiliated (median) | Not affiliated (median) | Sig. |
|---|---|---|---|---|---|---|
| University of Oxford (UK) | 1 | 17,411 | 13,681 | 0.99 | 1.22 | *** |
| Karolinska Institutet (SW) | 13 | 24,613 | 15,935 | 0.77 | 1.04 | *** |
| Charité – Universitätsmedizin Berlin (GE) | 32 | 16,262 | 10,811 | 0.62 | 1.06 | *** |
| ETH Zurich (CH) | 43 | 3437 | 2555 | 0.95 | 1.04 | *** |
| KU Leuven (BE) | 44 | 12,833 | 9605 | 0.81 | 1.16 | *** |
| Semmelweis University (HU) | 176 | 4079 | 2845 | 0.43 | 0.95 | *** |
| Jagiellonian University (PL) | 251 | 5348 | 2595 | 0.50 | 0.87 | *** |
| Charles University Prague (CZ) | 301 | 7817 | 4297 | 0.42 | 1.01 | *** |
| Comenius University Bratislava (SK) | 501 | 2062 | 884 | 0.35 | 0.67 | * |
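The significance column above compares medians of two independent groups of papers, but this excerpt does not name the test used. A common choice for skewed citation indicators is the Mann-Whitney U test; the sketch below implements it with the tie-corrected normal approximation (no continuity correction), which is adequate for samples of thousands of papers. Treating this as the study's test is an assumption, and the CNCI values in the usage example are hypothetical. `scipy.stats.mannwhitneyu` offers a library implementation.

```python
import math
from collections import Counter


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Two-sided Mann-Whitney U test, tie-corrected normal approximation.
    Returns (U for sample x, z score, two-sided p-value)."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    n = n1 + n2
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    tie_term = sum(t**3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:  # every value tied: no evidence of a difference
        return u, 0.0, 1.0
    z = (u - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return u, z, p


# Hypothetical per-paper CNCI values, NOT taken from the study
affiliated = [0.2, 0.4, 0.5, 0.9, 1.1]
non_affiliated = [0.8, 1.0, 1.3, 1.7, 2.4]
u, z, p = mann_whitney_u(affiliated, non_affiliated)
print(f"U={u:.1f}, z={z:.2f}, p={p:.3f}")
```

A negative z here means the first sample tends to rank lower, i.e. affiliated papers have lower CNCI values in this made-up example.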

| University name | Affiliated: papers, Q1 (N) | Affiliated: papers, not Q1 (N) | Affiliated: median, Q1 | Affiliated: median, not Q1 | Affiliated: Sig. | Not affiliated: papers, Q1 (N) | Not affiliated: papers, not Q1 (N) | Not affiliated: median, Q1 | Not affiliated: median, not Q1 | Not affiliated: Sig. |
|---|---|---|---|---|---|---|---|---|---|---|
| University of Oxford (UK) | 10,979 | 6432 | 1.3 | 1.56 | *** | 9526 | 4152 | 0.58 | 0.66 | *** |
| KU Leuven | 13,223 | 11,389 | 1.099 | 1.44 | *** | 9900 | 6033 | 0.53 | 0.63 | *** |
| Charité – Universitätsmedizin Berlin | 7323 | 6288 | 1.07 | 1.63 | *** | 8939 | 4523 | 0.35 | 0.57 | *** |
| ETH Zurich | 2220 | 1217 | 1.206 | 1.35 | *** | 1698 | 854 | 0.66 | 0.6 | *** |
| Karolinska Institutet | 7208 | 5625 | 1.148 | 1.57 | *** | 6321 | 3281 | 0.516 | 0.65 | *** |
| Semmelweis University | 1244 | 2835 | 0.89 | 1.41 | *** | 1640 | 1202 | 0.32 | 0.52 | *** |
| Jagiellonian University | 1401 | 3947 | 0.85 | 1.49 | * | 1298 | 1294 | 0.39 | 0.5 | *** |
| Charles University Prague | 1899 | 5917 | 0.901 | 1.67 | *** | 2441 | 1853 | 0.337 | 0.49 | *** |
| Comenius University Bratislava | 280 | 1781 | 0.751 | 1.24 | NS | 362 | 519 | 0.319 | 0.41 | *** |
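The table above splits each university's papers by two factors, author affiliation and whether the journal is in JIF Q1, and reports paper counts and medians per cell. A minimal sketch of that stratification is shown below. The `Paper` record and its `affiliated_key_author` flag are hypothetical: the flag stands for whether the author in the prestigious byline position is affiliated with the university, and how the study defines that position is not shown in this excerpt. The sample values are invented.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Paper:
    cnci: float                  # category normalized citation impact of the paper
    jif_quartile: int            # 1..4, quartile of the publishing journal
    affiliated_key_author: bool  # hypothetical flag, see lead-in


def stratified_medians(papers: list[Paper]) -> dict[tuple[str, str], tuple[int, float]]:
    """Group papers by (affiliation, Q1 vs. not Q1); return (N, median CNCI) per group."""
    groups: dict[tuple[str, str], list[float]] = defaultdict(list)
    for paper in papers:
        affiliation = "affiliated" if paper.affiliated_key_author else "not affiliated"
        tier = "Q1" if paper.jif_quartile == 1 else "not Q1"
        groups[(affiliation, tier)].append(paper.cnci)
    return {key: (len(vals), median(vals)) for key, vals in sorted(groups.items())}


# Hypothetical sample, NOT taken from the study
sample = [
    Paper(1.8, 1, False), Paper(1.2, 1, False), Paper(0.6, 3, False),
    Paper(0.9, 1, True), Paper(0.3, 2, True), Paper(0.4, 4, True), Paper(0.5, 2, True),
]
for (affiliation, tier), (n, med) in stratified_medians(sample).items():
    print(f"{affiliation:>14} | {tier:>6} | N={n} | median CNCI={med:.2f}")
```

Medians are used rather than means because per-paper citation indicators are typically highly skewed, which matches the median-based reporting in the table.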

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kohus, Z.; Demeter, M.; Kun, L.; Lukács, E.; Czakó, K.; Szigeti, G.P. A Study of the Relation between Byline Positions of Affiliated/Non-Affiliated Authors and the Scientific Impact of European Universities in Times Higher Education World University Rankings. Sustainability 2022, 14, 13074. https://doi.org/10.3390/su142013074
