1. Introduction

Environmental protection and low-carbon economy have gradually become the focus of attention, and the exploitation of energy has gradually changed to the direction of sustainability [

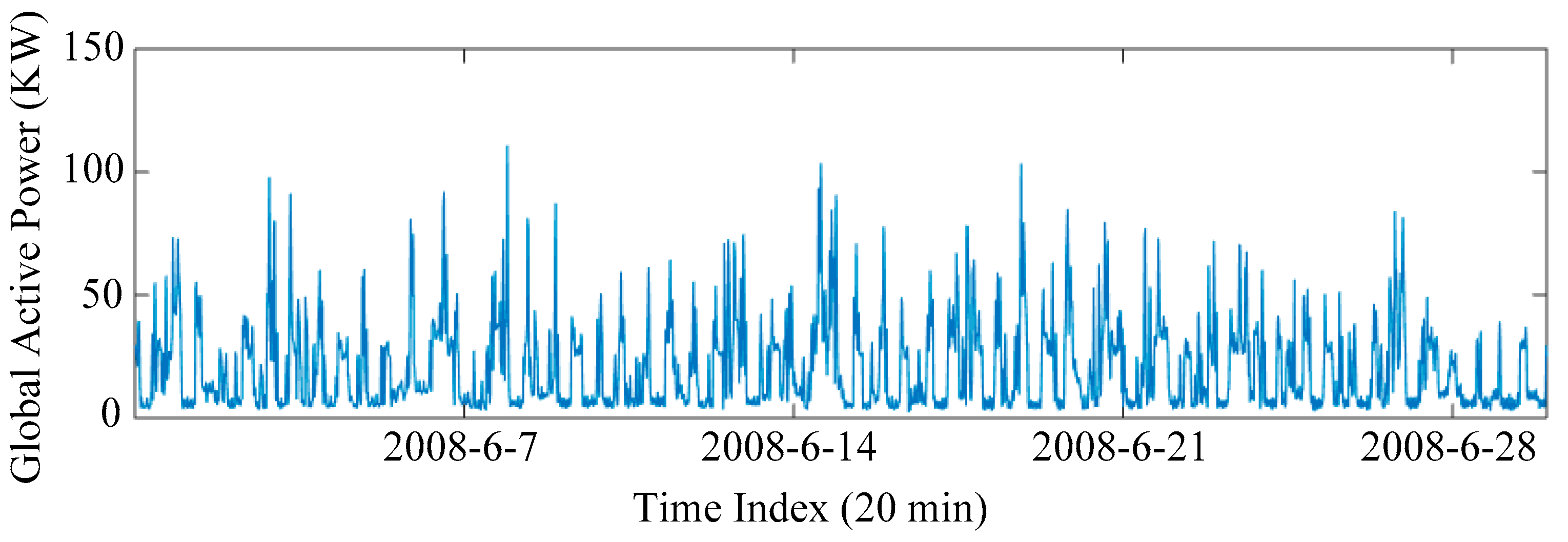

1]. Energy consumption is an important factor in economic benefits, energy conservation and emission reduction contribute to the realization of social sustainable development. The role of electricity in energy supply has been gradually strengthened. Grasping the development trend of energy and electricity in the future has become a necessary way for economic growth and environmental protection. Correctly predicting household electricity consumption on the power grid is of great significance for energy transformation and the pursuit of sustainable development goals [

2]. With economic development and population growth, global power consumption has increased significantly. Accurate power consumption prediction is very important for energy supply, power dispatching and distribution, capital investment and market research management [

3]. More and more scholars devote themselves to the research of accurate and reliable energy consumption prediction methods. In essence, energy consumption prediction is a typical time series problem, including univariate prediction and multivariate prediction. Due to the uncertainty and volatility of power data, the traditional machine learning methods cannot predict power consumption well. In contrast, the deep learning techniques can achieve higher accuracy. For the problem of power consumption prediction, numerous related literatures have investigated abundant prediction models based on machine learning or even deep learning. Summarizing the relevant literature, the prediction models for power consumption are roughly divided into three categories: statistical methods, machine learning techniques [

4,

5,

6,

7] and hybrid models [

8,

9,

10,

11,

12,

13,

14].

The first category focuses on the statistical analysis of historical time series data with different features and on identifying the relationships among variables. Classical statistical learning methods mainly include the Markov process, the regression model, the exponential smoothing model, the autoregressive model (AR), the moving average model (MA), the autoregressive moving average model (ARMA) and the autoregressive integrated moving average model (ARIMA) [15]. These methods offer fast computation and strong interpretability in predicting energy generation and power consumption. However, they generally cannot handle abrupt, non-stationary changes in the data. In particular, power consumption data are strongly influenced by the season, working days and other factors, and this high variability can greatly reduce the prediction accuracy of statistical methods. Machine learning models, by contrast, can learn the complex nonlinear relationships and other relevant patterns in time series, and they generally outperform statistical methods because of their more powerful representation ability.

With the maturity of artificial intelligence technology, machine learning models have been widely applied to energy generation and power consumption [16,17,18]. These models can effectively extract high-dimensional complex features and construct a nonlinear mapping directly from input to output. Machine learning models adopted for power consumption prediction mainly include support vector machines (SVM) [19], support vector regression (SVR) [20,21], decision trees and artificial neural networks (ANN) [22,23]. In the early stage of ANN research, shallow networks were easier to implement and were therefore widely used. A shallow neural network keeps the number of hidden layers small to avoid overfitting, convergence to local minima and vanishing gradients. One possible disadvantage of such a shallow topology is that the features contained in the data may not be fully extracted, which leaves room for improving prediction accuracy.

Deep learning techniques have emerged and developed rapidly with the continuous improvement of computer hardware, software and big data technology. The boom in deep neural network technology has so far produced many deep learning models, including the deep belief network [24], convolutional neural network (CNN), long short-term memory (LSTM) [25,26], generative adversarial network and deep residual network [27]. As a branch of neural networks, the CNN has also been applied to time series in recent years. Compared with a fully connected network, a CNN has fewer parameters and lower complexity, because each convolution kernel is connected only to a local region of the input and its weights are shared across positions. In addition, LSTM is a variant of the recurrent neural network (RNN) that uses feedback connections to carry and update the state accumulated from previous inputs. The main difference between an RNN and an LSTM is that the latter has a gated long-term memory cell, which alleviates the unstable (vanishing or exploding) gradients that plain RNNs suffer on sequences with long-term dependencies. Nowadays, the LSTM model has been extensively applied in power systems [28,29,30].
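To make the parameter-count argument concrete, the short sketch below compares a one-dimensional convolution layer with a fully connected layer that produces an output of the same size. The 24-step univariate input, the 64 filters and the kernel size of 3 are illustrative assumptions, and both counts follow the usual convention of one bias per filter (convolution) or per output unit (fully connected).

```python
def conv1d_params(kernel_size: int, in_channels: int, filters: int) -> int:
    # Each filter has kernel_size * in_channels weights shared over all positions, plus one bias.
    return kernel_size * in_channels * filters + filters


def dense_params(in_features: int, out_features: int) -> int:
    # Every output unit has its own weight for every input, plus one bias.
    return in_features * out_features + out_features


# Hypothetical setting: a 24-step univariate window, 64 filters, kernel size 3.
seq_len, in_channels, filters, kernel_size = 24, 1, 64, 3
out_len = seq_len - kernel_size + 1  # 'valid' convolution with stride 1 gives 22 positions

conv = conv1d_params(kernel_size, in_channels, filters)
dense = dense_params(seq_len * in_channels, out_len * filters)  # same output size as the conv layer
print(f"conv1d: {conv} parameters, fully connected: {dense} parameters")
```

With these numbers the convolution needs 256 parameters and the fully connected layer 35,200. The convolution's count does not grow with the sequence length, because the same kernel is reused at every position.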

Hybrid methods combine the advantages of physical methods and data-driven methods, and they are widely used in building, household and urban power load forecasting. Yang et al. [31] explored the potential of hybrid models based on extreme learning machines, RNN, SVM and multi-objective particle swarm optimization (MOPSO) in multi-step load forecasting; the experimental results in [31] showed that the combined prediction model achieves higher prediction accuracy than the single models. Deep learning methods have also been extensively adopted in multi-step-ahead energy generation and power load forecasting [32,33]. In [20], an integration of a generalized RNN and SVM was proposed to predict power demand, ensuring both the accuracy of the predictions and the robustness of the model. In [34], a power consumption prediction method was developed based on bidirectional LSTM (BiLSTM) and ANN, enhanced with a multiple simultaneously decreasing delays approach coupled with function fitting neural networks; this hybrid method was also employed to predict the total power consumption of business center consumers and a refrigerator storage room. Wu et al. [35] explored a CNN–LSTM–BiLSTM model with an attention mechanism to predict short-term power load. Furthermore, the combination of clustering methods and deep learning models has been comprehensively studied in power system prediction [36,37], for example, for energy consumption based on k-means clustering [38,39], residential power load [40] and the power demand of residential and small commercial buildings by means of k-nearest neighbors [41].

Accurate prediction of energy generation and power consumption has increasingly become a research hotspot in power systems. Various methods and technologies have been proposed to predict energy generation and power demand or consumption, providing a basic guarantee for the safe and stable operation of the power grid.

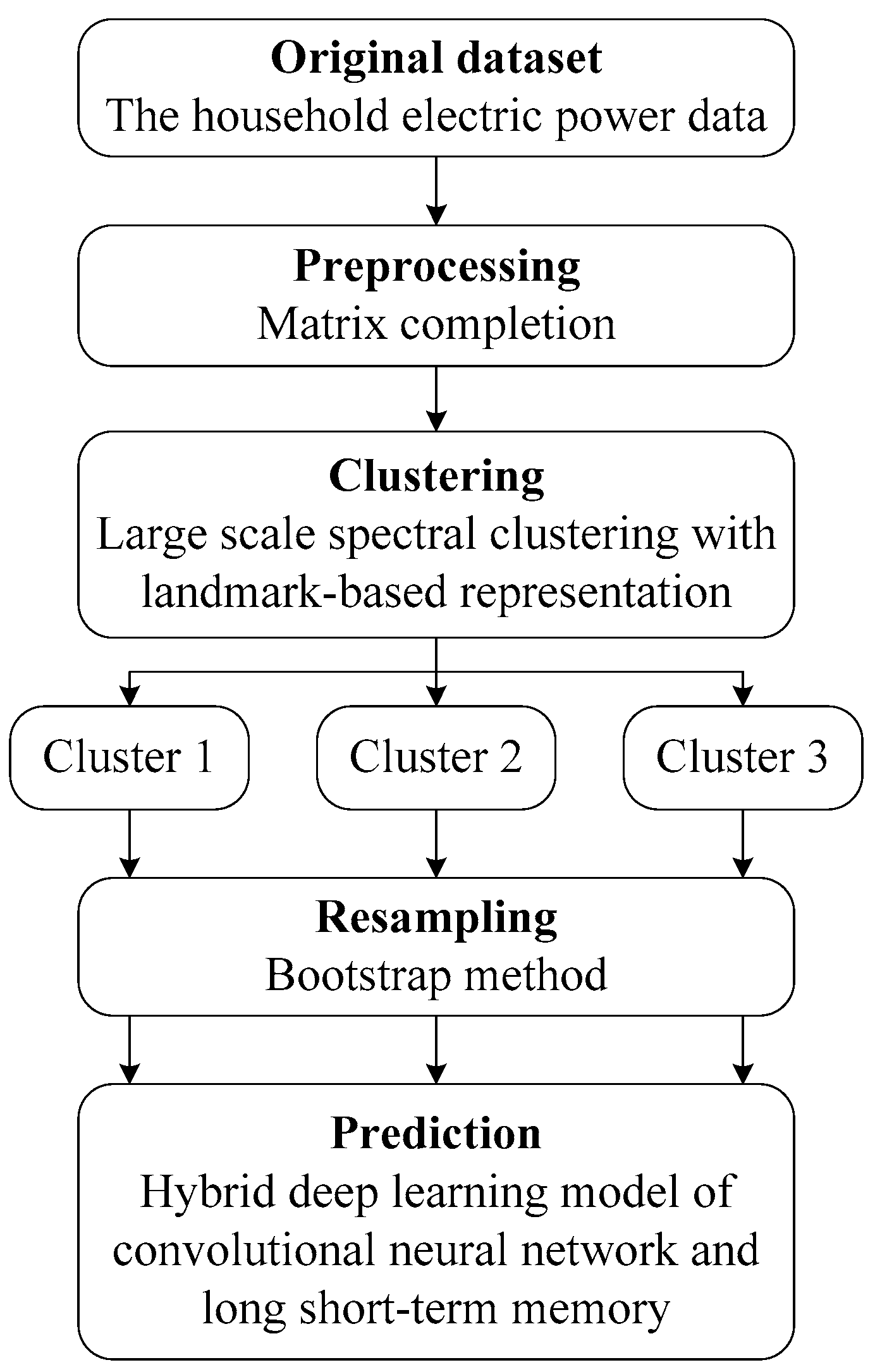

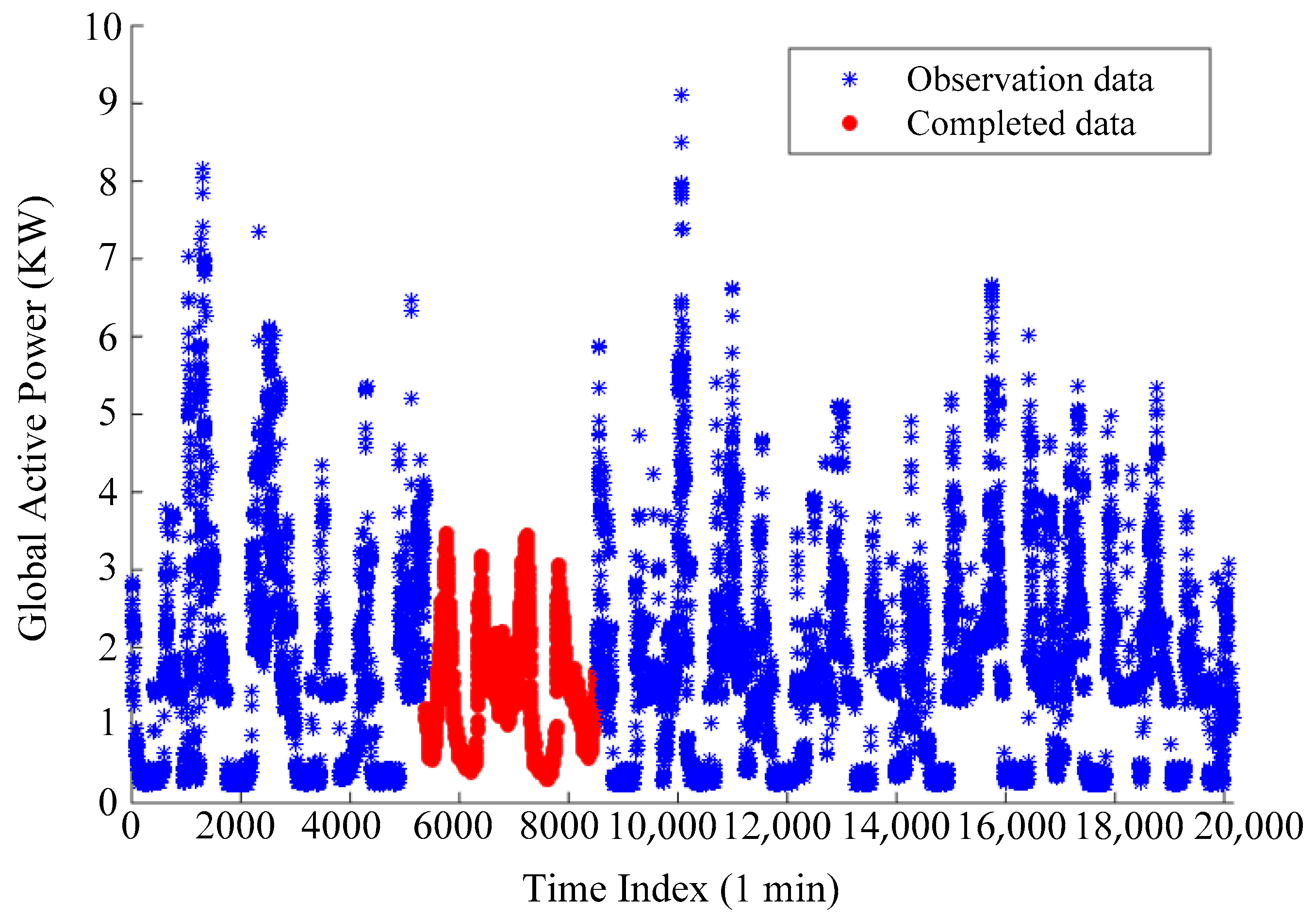

The aim of this paper is to forecast household electric power by fusing landmark-based spectral clustering (LSC) and deep learning. First, missing values are recovered by matrix completion. Then, linear normalization is employed to ensure that all features are scaled to a comparable order of magnitude. Next, the household electric power samples are partitioned by LSC, a clustering method that scales to massive datasets. Finally, a deep learning model is established by combining the advantages of CNN and LSTM. Consequently, a hybrid of LSC and deep learning is proposed for household electric power forecasting.
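The matrix-completion algorithm is not specified in this section, so the sketch below swaps in one simple, well-known heuristic as an assumption: iterated truncated-SVD imputation (sometimes called hard impute), followed by per-feature min-max scaling as one common form of linear normalization. The function names, the rank and the synthetic rank-1 data are all hypothetical.

```python
import numpy as np


def low_rank_impute(X, rank, n_iter=100, tol=1e-8):
    """Fill NaN entries by repeatedly projecting onto rank-`rank` matrices (hard impute)."""
    X = np.asarray(X, dtype=float)
    mask = np.isnan(X)
    # Initialize the missing entries with the column means of the observed values.
    filled = np.where(mask, np.nanmean(X, axis=0), X)
    for _ in range(n_iter):
        U, s, Vt = np.linalg.svd(filled, full_matrices=False)
        low_rank = (U[:, :rank] * s[:rank]) @ Vt[:rank]
        # Observed entries stay fixed; only missing entries take the low-rank estimate.
        updated = np.where(mask, low_rank, X)
        converged = np.linalg.norm(updated - filled) < tol
        filled = updated
        if converged:
            break
    return filled


def min_max_scale(X):
    """Rescale each column linearly to [0, 1]; return the bounds to invert forecasts later."""
    col_min, col_max = X.min(axis=0), X.max(axis=0)
    span = np.where(col_max > col_min, col_max - col_min, 1.0)  # avoid division by zero
    return (X - col_min) / span, col_min, col_max


# Hypothetical example: a rank-1 matrix with about 10% of its entries missing.
rng = np.random.default_rng(0)
true = np.outer(rng.uniform(1, 3, size=50), rng.uniform(0.5, 2.0, size=6))
observed = true.copy()
observed[rng.random(true.shape) < 0.1] = np.nan
completed = low_rank_impute(observed, rank=1, n_iter=500)
scaled, col_min, col_max = min_max_scale(completed)
```

Keeping the per-column minimum and maximum makes it possible to map scaled predictions back to the original power units.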

The remainder of the paper is organized as follows. Section 2 describes the investigated dataset, reviews the data preprocessing methods and presents the framework of the hybrid model. Section 3 introduces landmark-based spectral clustering and several deep learning techniques and proposes the LSC–CNN–LSTM model. The experimental results and analysis are discussed in Section 4. Finally, conclusions are drawn in Section 5.

3. A Deep Learning Framework by Combining LSC, CNN and LSTM

3.1. Landmark-Based Spectral Clustering

Spectral clustering is a clustering method based on matrix decomposition [43]. Compared with other algorithms, spectral clustering usually performs better in experiments because it exploits the manifold structure of the samples rather than relying only on distances in Euclidean space. A key step in spectral clustering is computing the eigenvectors of the Laplacian matrix constructed from a similarity matrix. However, this eigendecomposition has high computational complexity (cubic in the number of samples for a dense matrix), which limits standard spectral clustering in large-scale applications.

Landmark-based spectral clustering (LSC) can effectively handle large numbers of samples [44]. The basic principle of LSC is to perform the eigendecomposition of the Laplacian matrix efficiently by constructing a novel graph. In the implementation, $p$ representative data points are first selected as landmarks, and each sample in the original data is approximately represented by a sparse linear combination of these landmarks, where $p \ll n$ and $n$ is the number of samples. More specifically, the sparse representation coefficients are obtained directly through the landmark-based representation matrix.

Matrix decomposition attempts to compress data by seeking a set of basis vectors so that each data point is written as a linear combination of the bases [44]. Given the sample matrix $X = [x_1, x_2, \ldots, x_n] \in \mathbb{R}^{m \times n}$, it is approximately decomposed into the product of two low-rank matrices:

$$X \approx UZ, \tag{5}$$

where the basis matrix $U \in \mathbb{R}^{m \times p}$ and the coefficient matrix $Z \in \mathbb{R}^{p \times n}$. As a basis vector, each column of $U$ captures high-dimensional features of the original sample space. Meanwhile, each column of $Z$ is the $p$-dimensional representation of the corresponding input instance under the new basis vectors. The difference between two matrices is commonly measured by the Frobenius norm $\|\cdot\|_F$ of the residual. Hence, the optimal low-rank representation can be obtained by solving the following optimization problem:

$$\min_{U, Z} \; \|X - UZ\|_F^2. \tag{6}$$

The optimal coefficient matrix $Z$ in the above minimization problem is usually dense, which means that each sample is a linear combination of all bases. Such dense coefficients may harm classification performance. Sparse coding is a popular way to overcome this defect: a sparse regularization term is added to the objective function in Equation (6), giving the new optimization problem

$$\min_{U, Z} \; \|X - UZ\|_F^2 + \lambda \sum_{i=1}^{n} f(z_i), \tag{7}$$

where $z_i$ is the $i$-th column of $Z$, $f(\cdot)$ is a function that evaluates the sparsity of each column of $Z$ and $\lambda$ is a trade-off coefficient that controls the sparsity penalty.

To simplify the model and reduce the computational complexity, LSC takes the landmarks as the basis vectors, which means that the elements of the basis matrix $U$ are no longer optimization variables. The landmarks can be chosen randomly from the sample set or obtained via the k-means clustering algorithm, in which case all cluster centers are regarded as landmarks.

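For a data point $x_i$, its estimated value is denoted by $\hat{x}_i = \sum_{j=1}^{p} z_{ji} u_j$, where $u_j$ is the $j$-th column of $U$ and $z_{ji}$ is the element in the $j$-th row and $i$-th column of $Z$. A natural assumption is that the closer $x_i$ is to $u_j$, the larger the value of $z_{ji}$ should be. Let $U_{\langle i \rangle}$ be the index set of the $r$ nearest neighbors of $x_i$ among the landmarks in $U$, where $r < p$. If $j \notin U_{\langle i \rangle}$, then $z_{ji}$ is set to 0; otherwise, the value of $z_{ji}$ is determined by the following formula:

$$z_{ji} = \frac{K_h(x_i, u_j)}{\sum_{j' \in U_{\langle i \rangle}} K_h(x_i, u_{j'})}, \quad j \in U_{\langle i \rangle}, \tag{8}$$

where $K_h(\cdot, \cdot)$ is a kernel function. In practice, the Gaussian (heat) kernel is the recommended choice:

$$K_h(x_i, u_j) = \exp\left(-\frac{\|x_i - u_j\|^2}{2h^2}\right), \tag{9}$$

where $h$ is a fixed bandwidth. Because each column of $Z$ has at most $r$ nonzero entries, the choice of $r$ ensures that $Z$ is a sparse matrix.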
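Equations (8) and (9) can be sketched in NumPy as follows. The function name, the random landmark choice and the values of $r$ and $h$ are illustrative assumptions. Subtracting the smallest squared distance before exponentiating only rescales every weight of a column by the same constant, which cancels in the normalization of Equation (8), but it prevents all weights from underflowing to zero when $h$ is small.

```python
import numpy as np


def landmark_representation(X, landmarks, r, h):
    """Sparse coefficient matrix Z (p x n) following Equations (8)-(9).

    X: (m, n) samples stored column-wise; landmarks: (m, p) matrix U; r: landmarks kept per sample.
    """
    n, p = X.shape[1], landmarks.shape[1]
    # Squared Euclidean distance between every landmark u_j and every sample x_i -> (p, n).
    sq_dist = ((landmarks[:, :, None] - X[:, None, :]) ** 2).sum(axis=0)
    Z = np.zeros((p, n))
    for i in range(n):
        nearest = np.argsort(sq_dist[:, i])[:r]                  # index set U<i>
        d = sq_dist[nearest, i]
        weights = np.exp(-(d - d.min()) / (2.0 * h**2))          # Gaussian kernel, Eq. (9)
        Z[nearest, i] = weights / weights.sum()                  # normalization, Eq. (8)
    return Z


# Hypothetical example: 200 two-dimensional samples and 20 randomly chosen landmarks.
rng = np.random.default_rng(1)
X = rng.normal(size=(2, 200))
U = X[:, rng.choice(200, size=20, replace=False)]
Z = landmark_representation(X, U, r=5, h=0.5)
print(Z.shape, (Z != 0).sum(axis=0).max())
```

Every column of the result sums to one and has at most $r$ nonzero entries, which is the sparsity property stated above.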

According to the sparse representation matrix $Z$ calculated from the landmarks, the affinity matrix, also called the similarity matrix, can be obtained as follows:

$$W = \hat{Z}^{\top} \hat{Z}, \tag{10}$$

where $\hat{Z} = D^{-1/2} Z$ and $D$ is a $p$-order diagonal matrix whose diagonal elements are the row sums of $Z$, i.e., $D_{jj} = \sum_{i=1}^{n} z_{ji}$.

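A thin singular value decomposition (SVD) is performed on $\hat{Z}$, giving $\hat{Z} = A \Sigma B^{\top}$, where $\Sigma = \operatorname{diag}(\sigma_1, \sigma_2, \ldots, \sigma_p)$ and $\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_p \ge 0$ are the singular values of $\hat{Z}$. Denote $A = [a_1, a_2, \ldots, a_p] \in \mathbb{R}^{p \times p}$ and $B = [b_1, b_2, \ldots, b_p] \in \mathbb{R}^{n \times p}$, where the columns of $A$ are the left singular vectors and the columns of $B$ are the right singular vectors. It is easy to show that each column of $B$ is an eigenvector of $W = \hat{Z}^{\top}\hat{Z}$, each column of $A$ is an eigenvector of $\hat{Z}\hat{Z}^{\top}$, and $\sigma_i^2$ is the $i$-th largest eigenvalue of $\hat{Z}^{\top}\hat{Z}$ or $\hat{Z}\hat{Z}^{\top}$. Furthermore, it holds that:

$$B^{\top} = \Sigma^{-1} A^{\top} \hat{Z}. \tag{11}$$

Because $\hat{Z}\hat{Z}^{\top}$ is only $p \times p$, its eigenvectors are much cheaper to compute than those of the $n \times n$ matrix $W$, and Equation (11) then recovers the required eigenvectors of $W$.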
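The following sketch checks Equation (11) numerically: it takes $A$ and $\Sigma$ from the eigendecomposition of the small $p \times p$ matrix $\hat{Z}\hat{Z}^{\top}$, recovers $B$ through $B^{\top} = \Sigma^{-1} A^{\top} \hat{Z}$ and confirms that each column of $B$ is a unit eigenvector of $W = \hat{Z}^{\top}\hat{Z}$. The matrix sizes and the random sparse $Z$ are illustrative assumptions, not data from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
p, n, k = 30, 500, 3

# Random sparse, nonnegative Z (p x n) whose columns sum to 1, mimicking Eq. (8).
Z = rng.random((p, n)) * (rng.random((p, n)) < 0.2)
Z[rng.integers(0, p, size=n), np.arange(n)] += 1e-3      # every column gets a nonzero entry
Z /= Z.sum(axis=0, keepdims=True)

# Z_hat = D^{-1/2} Z, where D holds the row sums of Z.
Z_hat = Z / np.sqrt(Z.sum(axis=1))[:, None]

# Eigendecomposition of the p x p matrix Z_hat Z_hat^T (eigh returns ascending eigenvalues).
eigvals, eigvecs = np.linalg.eigh(Z_hat @ Z_hat.T)
top = np.argsort(eigvals)[::-1][:k]
A = eigvecs[:, top]                  # top-k left singular vectors
sigma = np.sqrt(eigvals[top])        # corresponding singular values

# Equation (11): B^T = Sigma^{-1} A^T Z_hat, so B has shape (n, k).
B = (A.T @ Z_hat / sigma[:, None]).T

# Each column b_i should satisfy W b_i = sigma_i^2 b_i and have unit norm.
W = Z_hat.T @ Z_hat
for i in range(k):
    assert np.allclose(W @ B[:, i], sigma[i] ** 2 * B[:, i])
    assert np.isclose(np.linalg.norm(B[:, i]), 1.0)
print("Equation (11) verified for the top", k, "eigenvectors")
```

Only a $p \times p$ eigenproblem is solved, while $W$ itself ($500 \times 500$ here) is formed solely for the check.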

The procedure of LSC is summarized in Algorithm 1.

| Algorithm 1 LSC |

| input: $X$: sample set, $k$: number of clusters, $p$: number of landmarks. |

| output: $k$ clusters after the clustering process. |

| 1. Generate $p$ landmarks using k-means clustering or a random selection from $X$. |

| 2. Construct the sparse affinity matrix $Z \in \mathbb{R}^{p \times n}$ between data points and landmarks according to Equation (8) and normalize it by $\hat{Z} = D^{-1/2} Z$. |

| 3. Calculate the top $k$ eigenvectors of matrix $\hat{Z}\hat{Z}^{\top}$ and form a matrix $A = [a_1, \ldots, a_k]$ by stacking them column by column. |

| 4. Compute matrix $B = [b_1, \ldots, b_k]$ through Equation (11). |

| 5. Regard each row of matrix $B$ as a new data point and cluster the rows with k-means clustering or other algorithms to obtain the final result. |

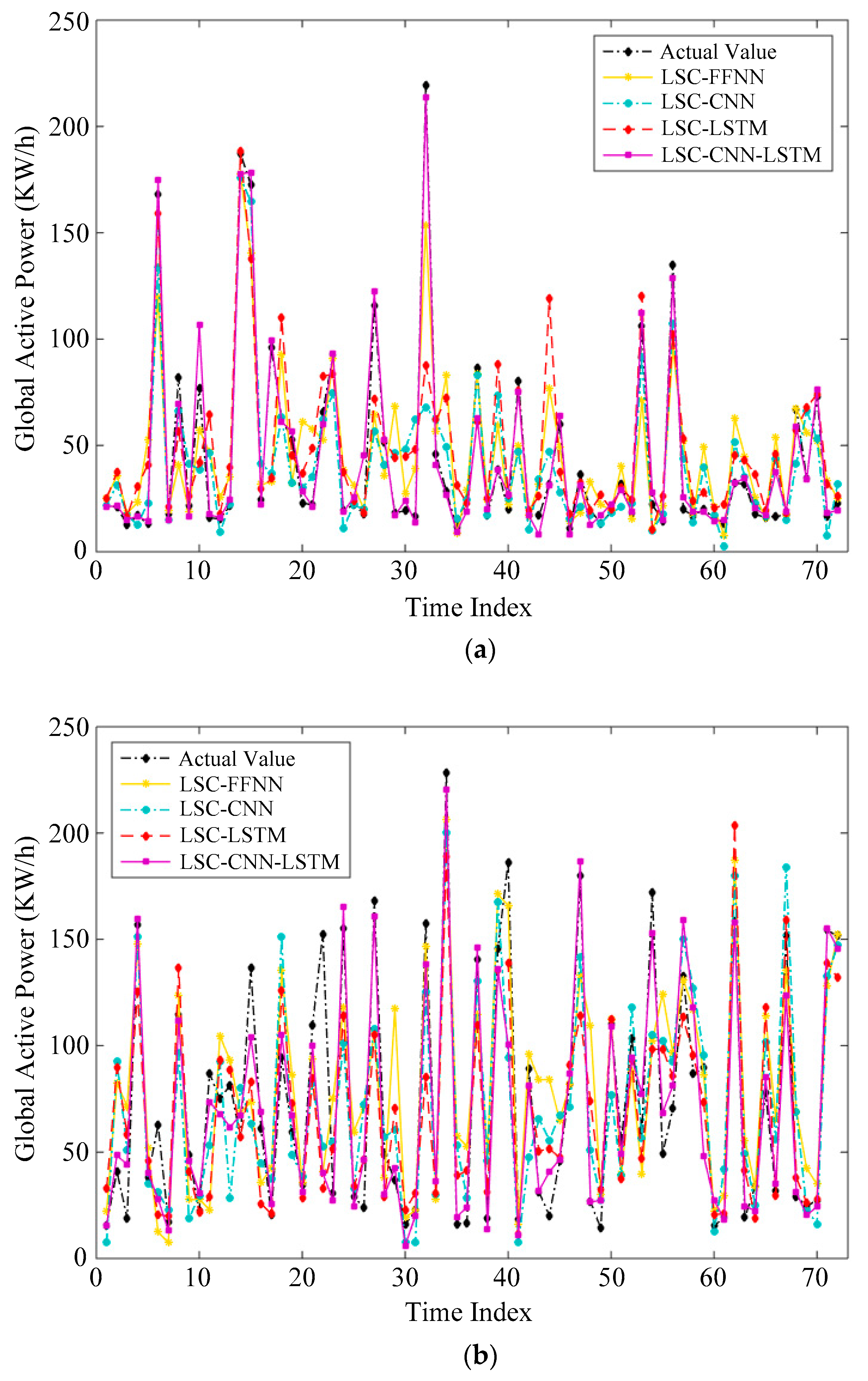

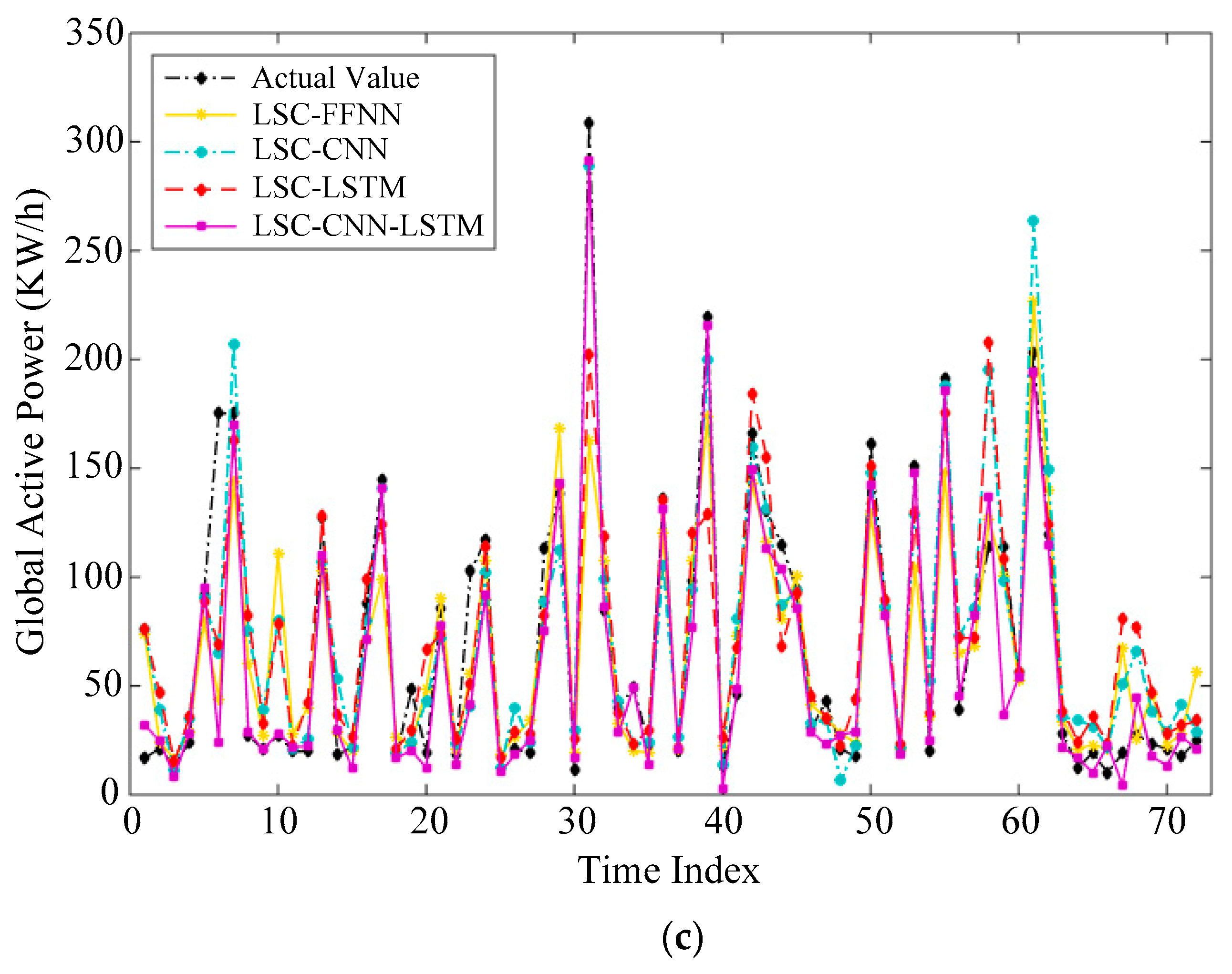

In Algorithm 1, the constructed sample matrix $X$ is used as the input of clustering. Each column of $X$ is regarded as an original sample, and in our experiments all samples are grouped into three clusters ($k = 3$). Ideally, data points in the same cluster are highly similar, whereas points in different clusters are dissimilar. Each of the three resulting clusters is then used as a separate training set for the deep neural network.
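Algorithm 1 can be sketched end to end in NumPy. This is an illustrative implementation under stated assumptions, not the authors' code: a small k-means with k-means++ seeding is written out only to keep the block self-contained, landmarks come from k-means (one of the two options in step 1), and $r = 5$, $h = 1$ and the three synthetic blobs are hypothetical choices.

```python
import numpy as np


def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's k-means with k-means++ seeding. points: (N, d). Returns (centers, labels)."""
    rng = np.random.default_rng(seed)
    centers = [points[rng.integers(len(points))]]
    for _ in range(1, k):
        # k-means++: next center drawn with probability proportional to squared distance.
        d2 = ((points[:, None, :] - np.array(centers)[None]) ** 2).sum(-1).min(axis=1)
        centers.append(points[rng.choice(len(points), p=d2 / d2.sum())])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = ((points[:, None, :] - centers[None]) ** 2).sum(-1).argmin(axis=1)
        new = np.array([points[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
                        for c in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return centers, labels


def lsc(X, k, p, r=5, h=1.0, seed=0):
    """Landmark-based spectral clustering (Algorithm 1); X is (m, n) with one sample per column."""
    samples = X.T                                                    # (n, m)
    # Step 1: p landmarks from k-means (stored as rows here, i.e. the columns u_j of U).
    landmarks, _ = kmeans(samples, p, seed=seed)
    # Step 2: sparse Z (p x n) from Eqs. (8)-(9), then Z_hat = D^{-1/2} Z.
    sq_dist = ((landmarks[:, None, :] - samples[None, :, :]) ** 2).sum(-1)
    Z = np.zeros_like(sq_dist)
    for i in range(samples.shape[0]):
        nearest = np.argsort(sq_dist[:, i])[:r]                      # index set U<i>
        d = sq_dist[nearest, i]
        w = np.exp(-(d - d.min()) / (2.0 * h**2))                    # shift cancels after normalizing
        Z[nearest, i] = w / w.sum()
    Z_hat = Z / np.sqrt(np.maximum(Z.sum(axis=1), 1e-12))[:, None]   # guard unused landmarks
    # Step 3: top-k eigenvectors of the small p x p matrix Z_hat Z_hat^T.
    eigvals, eigvecs = np.linalg.eigh(Z_hat @ Z_hat.T)
    top = np.argsort(eigvals)[::-1][:k]
    A, sigma = eigvecs[:, top], np.sqrt(np.maximum(eigvals[top], 1e-12))
    # Step 4: Equation (11), B^T = Sigma^{-1} A^T Z_hat, so B is (n, k).
    B = (A.T @ Z_hat / sigma[:, None]).T
    # Step 5: cluster the rows of B.
    _, labels = kmeans(B, k, seed=seed)
    return labels


# Hypothetical data: three well-separated 2-D blobs of 100 samples each.
rng = np.random.default_rng(42)
centers_true = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
truth = np.repeat(np.arange(3), 100)
X = (centers_true[truth] + rng.normal(scale=0.5, size=(300, 2))).T   # (m=2, n=300)
labels = lsc(X, k=3, p=30)
print(np.bincount(labels))
```

Only the $p \times p$ matrix is decomposed, so the cost of the spectral step grows with the number of landmarks rather than with the square of the number of samples.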

3.2. Bootstrap Aggregating

Generally, splitting the data with LSC significantly reduces the number of training samples available to each model. However, deep learning techniques require sufficient training samples. Under such circumstances, clustering may increase the risk of overfitting, and fewer sample points may also seriously degrade the prediction performance of deep learning. For these reasons, a sample augmentation strategy is adopted.

Bootstrap aggregating, also called bagging, is a commonly used ensemble learning method. For a given sample set, it generates a new sample set by resampling: each point of the original set is drawn at random according to a uniform distribution, and duplicate samples are allowed in the resulting set. Through bootstrap resampling, the samples in each cluster can be augmented to an arbitrary size. For each cluster, this paper stochastically generates a new sample set with a prescribed number of samples.

Once each cluster has been augmented, prediction is carried out with deep learning methods. It is worth noting that bootstrap aggregating allows the experimental results before and after clustering to be compared fairly, and at the same time alleviates overfitting to a certain extent. In addition, the data variability introduced by bootstrap aggregating carries a low risk of overfitting because it has little impact on the training batches.
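The per-cluster resampling described above can be sketched as follows. The target size `n_target`, the per-cluster seeds and the synthetic windows and labels are hypothetical, because the exact sample number is not recoverable from this section.

```python
import numpy as np


def bootstrap_cluster(X, y, n_target, seed=0):
    """Draw n_target (input, target) pairs uniformly with replacement; duplicates are allowed."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(X), size=n_target, replace=True)
    return X[idx], y[idx]


def augment_clusters(X, y, labels, n_target, seed=0):
    """Bootstrap every cluster separately; returns {cluster label: (X_c, y_c)}."""
    out = {}
    for offset, c in enumerate(np.unique(labels)):
        mask = labels == c
        out[c] = bootstrap_cluster(X[mask], y[mask], n_target, seed=seed + offset)
    return out


# Hypothetical inputs: 200 windows of 24 hourly values, next-hour targets and 3 cluster labels.
rng = np.random.default_rng(0)
X = rng.random((200, 24))
y = rng.random(200)
labels = rng.integers(0, 3, size=200)
augmented = augment_clusters(X, y, labels, n_target=200)
for c, (Xc, yc) in augmented.items():
    print(c, Xc.shape, yc.shape)
```

Indexing inputs and targets with the same resampled indices keeps every window paired with its own target.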

3.3. Convolutional Neural Network

As a special case of a feedforward neural network, a convolutional neural network (CNN) is characterized by convolution operations and a deep structure, and it is one of the representative models of deep learning. Moreover, a CNN can effectively learn latent features from the input data, respond to surrounding units within its receptive field and has strong representation learning ability.

Although CNNs are usually applied to extracting features from two-dimensional images, they also support one-dimensional inputs with multiple channels and are well suited to multi-step time series prediction. This paper focuses on the one-dimensional CNN, which mainly consists of an input layer, several hidden layers and an output layer. The hidden layers are composed of convolution, pooling and fully connected operators.

The convolution operator involves multiple convolution kernels, and its function is to extract features from the corresponding input. Each element of a convolution kernel is a weight coefficient. The size of a convolution kernel determines the size of the receptive field, and the stride controls how far the receptive field slides at each step.

After feature extraction by the convolution operator, the output feature map is passed to the pooling operator for feature selection and information filtering. Pooling replaces the value at a single point of the feature map with a summary statistic of its neighboring region. The pooling region and its sliding distance are controlled by the pooling size and the stride, respectively, where the stride plays the same role as in the convolution kernel when scanning the feature map.

The fully connected operator is equivalent to a hidden layer in a traditional feedforward neural network (FFNN). Convolution and pooling extract features from the input data, and the fully connected layers combine the extracted features nonlinearly to produce the output. The layer immediately before the output layer of a CNN is usually fully connected.

An analytical diagram of the one-dimensional convolution and pooling process is shown in Figure 5. The input vector $x \in \mathbb{R}^{L}$ with $L$ dimensions is operated on by $M$ convolution kernels $w^{(1)}, \ldots, w^{(M)} \in \mathbb{R}^{3}$. In the later experiments, the kernel size and the stride are set to 3 and 1, respectively. Convolution is a process of continuously sliding weighted summation with a window of height 3. When the input sample passes through the sliding window operation with the $M$ convolution kernels, a matrix $C \in \mathbb{R}^{(L-2) \times M}$ is calculated through weighted summation:

$$c_{ij} = \sum_{s=1}^{3} w^{(j)}_{s} \, x_{i+s-1}, \quad i = 1, \ldots, L-2, \; j = 1, \ldots, M. \tag{12}$$

The sliding window operation in pooling is similar to the convolution operation, and the pooling size is set to 2 in the experimental section. Pooling reduces dimensionality by continuously sliding a window of height 2 and width 1 over the feature map. Max pooling outputs the largest value within each window of the feature map produced by convolution. After the convolution and pooling operators, the feature matrix $C$ is eventually transformed into another matrix $P \in \mathbb{R}^{L' \times M}$, where $p_{ij} = \max(c_{2i-1,j}, c_{2i,j})$ and $L' = \lfloor (L-2)/2 \rfloor$ when the pooling stride equals the pooling size.
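The convolution and pooling steps can be sketched in NumPy as follows. This is an illustrative sketch, assuming no bias or activation (plain weighted summation), no kernel flipping (as in deep learning frameworks), and a pooling stride equal to the pooling size. The input series and the two kernels are hypothetical.

```python
import numpy as np


def conv1d_valid(x, kernels, stride=1):
    """Sliding weighted sums: x has length L, kernels is (M, size); returns C of shape (windows, M)."""
    size = kernels.shape[1]
    starts = range(0, len(x) - size + 1, stride)
    windows = np.stack([x[s:s + size] for s in starts])     # (number of windows, kernel size)
    return windows @ kernels.T                               # one column per convolution kernel


def max_pool1d(C, pool=2):
    """Non-overlapping max pooling along the time axis; a trailing remainder is dropped."""
    n_out = C.shape[0] // pool
    return C[: n_out * pool].reshape(n_out, pool, C.shape[1]).max(axis=1)


x = np.arange(1.0, 9.0)                       # hypothetical input: 1, 2, ..., 8 (L = 8)
kernels = np.array([[1.0, 1.0, 1.0],          # moving-sum kernel
                    [-1.0, 0.0, 1.0]])        # simple difference kernel
C = conv1d_valid(x, kernels)                  # (L - 2, M) = (6, 2)
P = max_pool1d(C, pool=2)                     # (3, 2)
print(C.shape, P.shape)
```

With kernel size 3 and stride 1, the eight inputs yield six windows, and pooling with size 2 halves this to three rows per kernel.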

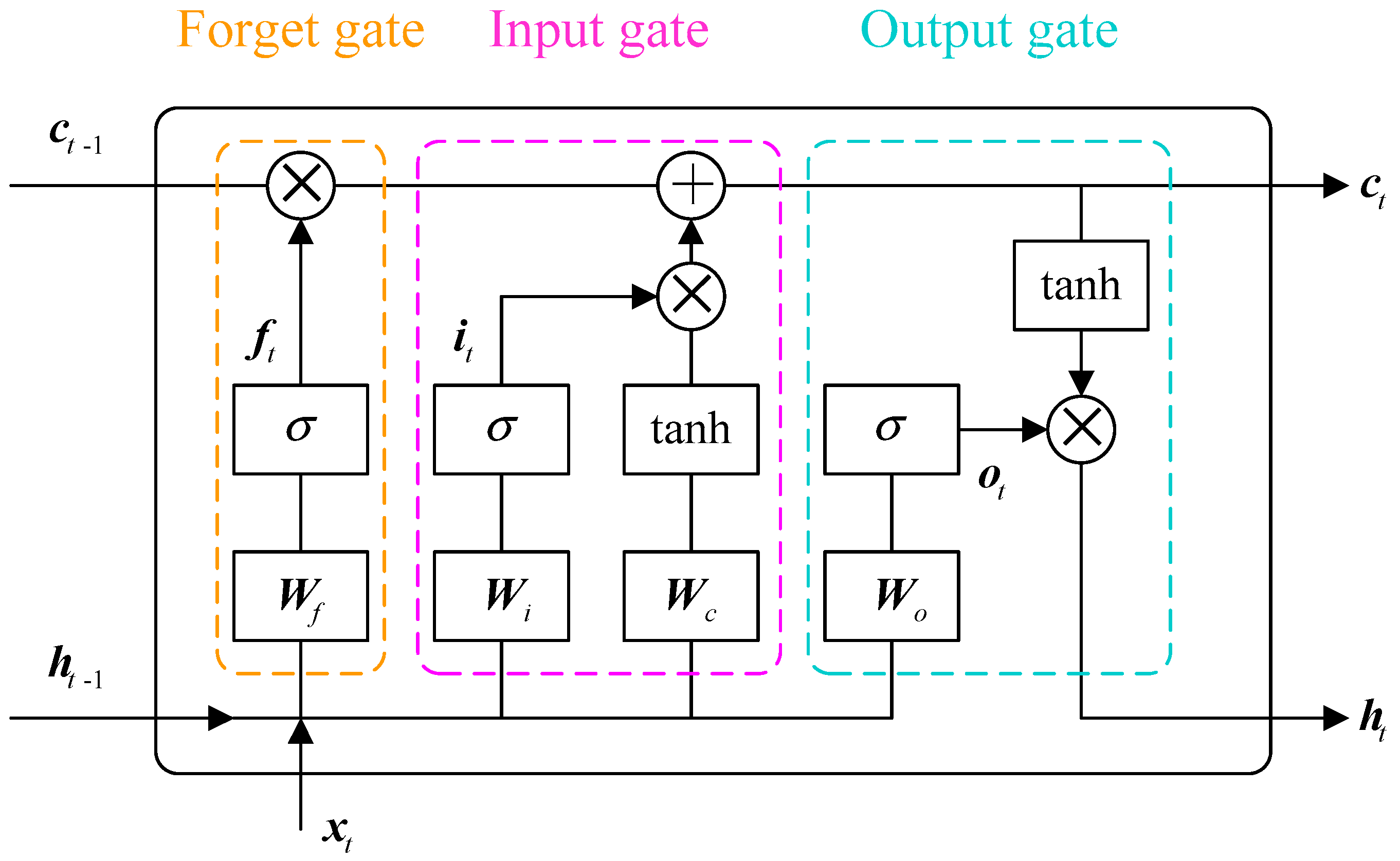

3.4. Long Short-Term Memory

A recurrent neural network (RNN) carries memory information from prior inputs and outputs, which makes it especially suitable for sequential or time series data. As a popular variant of the RNN, long short-term memory (LSTM) alleviates the vanishing and exploding gradient problems by introducing gated, self-connected memory cells in its hidden units.

Figure 6 shows the architecture of an LSTM unit. An LSTM has three types of gates: the input gate, the forget gate and the output gate. The input gate controls how much new information is written into the memory cell, the forget gate determines whether previous information should be discarded from the memory cell, and the output gate controls how much information is output. Information is processed in the following order. First, the current input passes through the input gate, which decides how much of it enters the memory cell. Second, the forget gate decides how much of the information already stored in the memory cell is forgotten. Finally, the output gate decides how much of the updated cell state is output at that moment.

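Given the input vector $x_t$ of the LSTM unit at time $t$, the calculation process is shown in Equations (13)–(18):

$$f_t = \sigma(W_f h_{t-1} + U_f x_t + b_f), \tag{13}$$

$$i_t = \sigma(W_i h_{t-1} + U_i x_t + b_i), \tag{14}$$

$$o_t = \sigma(W_o h_{t-1} + U_o x_t + b_o), \tag{15}$$

$$\tilde{c}_t = \tanh(W_c h_{t-1} + U_c x_t + b_c), \tag{16}$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t, \tag{17}$$

$$h_t = o_t \odot \tanh(c_t), \tag{18}$$

where $\odot$ denotes the Hadamard product, $\sigma(\cdot)$ is the sigmoid activation function and $\tanh(\cdot)$ is the hyperbolic tangent activation function. In Equations (13)–(15), $f_t$, $i_t$ and $o_t$ are the forget gate, the input gate and the output gate, respectively; $W_f$, $W_i$ and $W_o$ are the weight matrices of the forget, input and output gates connecting the output $h_{t-1}$ of the previous unit, respectively; and $U_f$, $U_i$ and $U_o$ are the weight matrices of these three gates connecting the input $x_t$ of the current unit, respectively. In Equations (16)–(18), $\tilde{c}_t$, $c_t$ and $h_t$ are the candidate values, the state vector and the output vector, respectively; $W_c$ and $U_c$ are the input weight matrices connecting the output of the previous unit and the input of the current unit, respectively. Moreover, $b_f$, $b_i$ and $b_o$ are the bias vectors of the three gates, respectively, and $b_c$ is the input bias vector.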
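A single LSTM step written directly from Equations (13)–(18) looks as follows in NumPy. The dictionary layout of the parameters, the small random initialization and the 4-unit, 24-step example are hypothetical choices made only for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step following Equations (13)-(18); params holds W, U, b keyed by gate 'f','i','o','c'."""
    W, U, b = params["W"], params["U"], params["b"]
    f_t = sigmoid(W["f"] @ h_prev + U["f"] @ x_t + b["f"])        # (13) forget gate
    i_t = sigmoid(W["i"] @ h_prev + U["i"] @ x_t + b["i"])        # (14) input gate
    o_t = sigmoid(W["o"] @ h_prev + U["o"] @ x_t + b["o"])        # (15) output gate
    c_tilde = np.tanh(W["c"] @ h_prev + U["c"] @ x_t + b["c"])    # (16) candidate values
    c_t = f_t * c_prev + i_t * c_tilde                             # (17) element-wise products
    h_t = o_t * np.tanh(c_t)                                       # (18) output vector
    return h_t, c_t


def init_params(input_dim, hidden_dim, seed=0):
    """Hypothetical small random weights and zero biases for the four gate blocks."""
    rng = np.random.default_rng(seed)
    gates = "fioc"
    return {
        "W": {g: rng.normal(scale=0.1, size=(hidden_dim, hidden_dim)) for g in gates},
        "U": {g: rng.normal(scale=0.1, size=(hidden_dim, input_dim)) for g in gates},
        "b": {g: np.zeros(hidden_dim) for g in gates},
    }


# Run a hypothetical 24-step univariate sequence through a 4-unit LSTM.
params = init_params(input_dim=1, hidden_dim=4)
h, c = np.zeros(4), np.zeros(4)
for value in np.sin(np.linspace(0, 2 * np.pi, 24)):
    h, c = lstm_step(np.array([value]), h, c, params)
print(h)
```

Because $h_t$ is a product of a sigmoid output and a hyperbolic tangent, every component of the output vector stays strictly between $-1$ and $1$.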

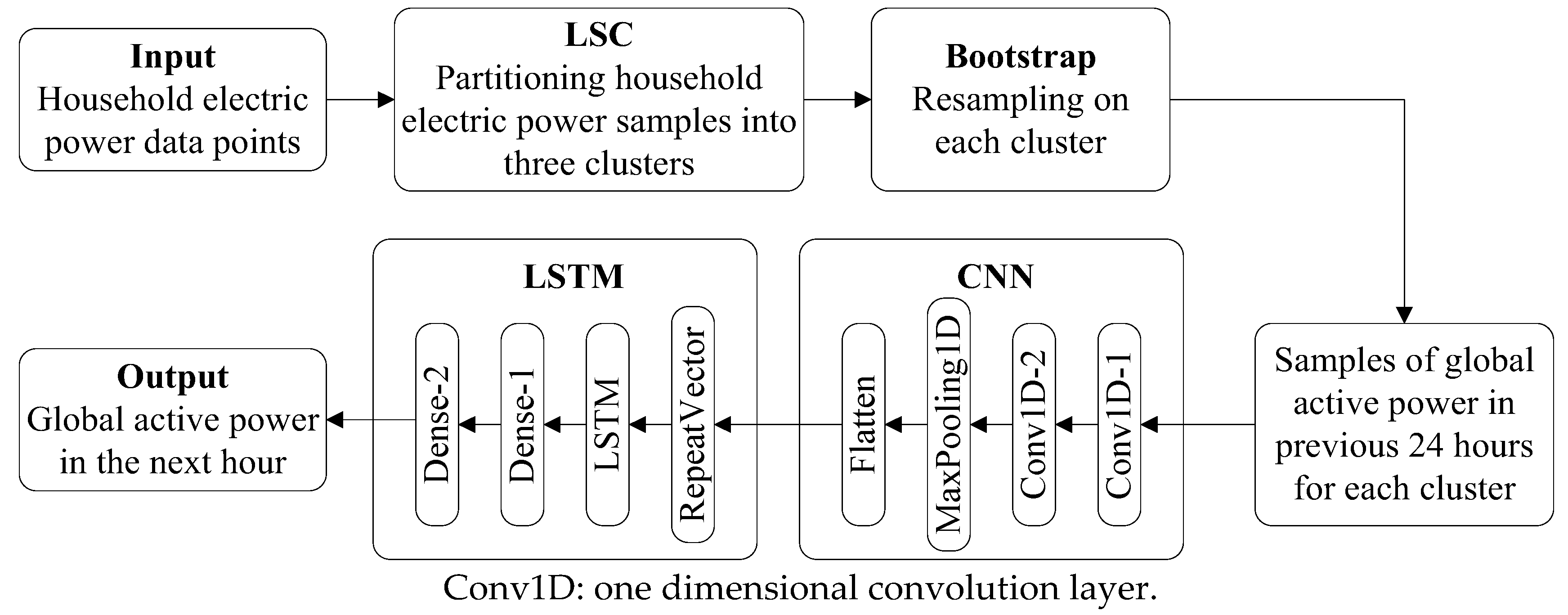

3.5. Integration of LSC, CNN and LSTM

LSC partitions the original samples into several groups. In general, the samples within each group can be modeled with lower complexity than the full sample set, so the forecasting model trained on each group tends to perform better. CNN has a powerful ability for feature extraction and representation learning, and LSTM has a natural advantage in transmitting information along time series data. Combining the advantages of these three methods, this paper presents a hybrid forecasting model called LSC–CNN–LSTM.

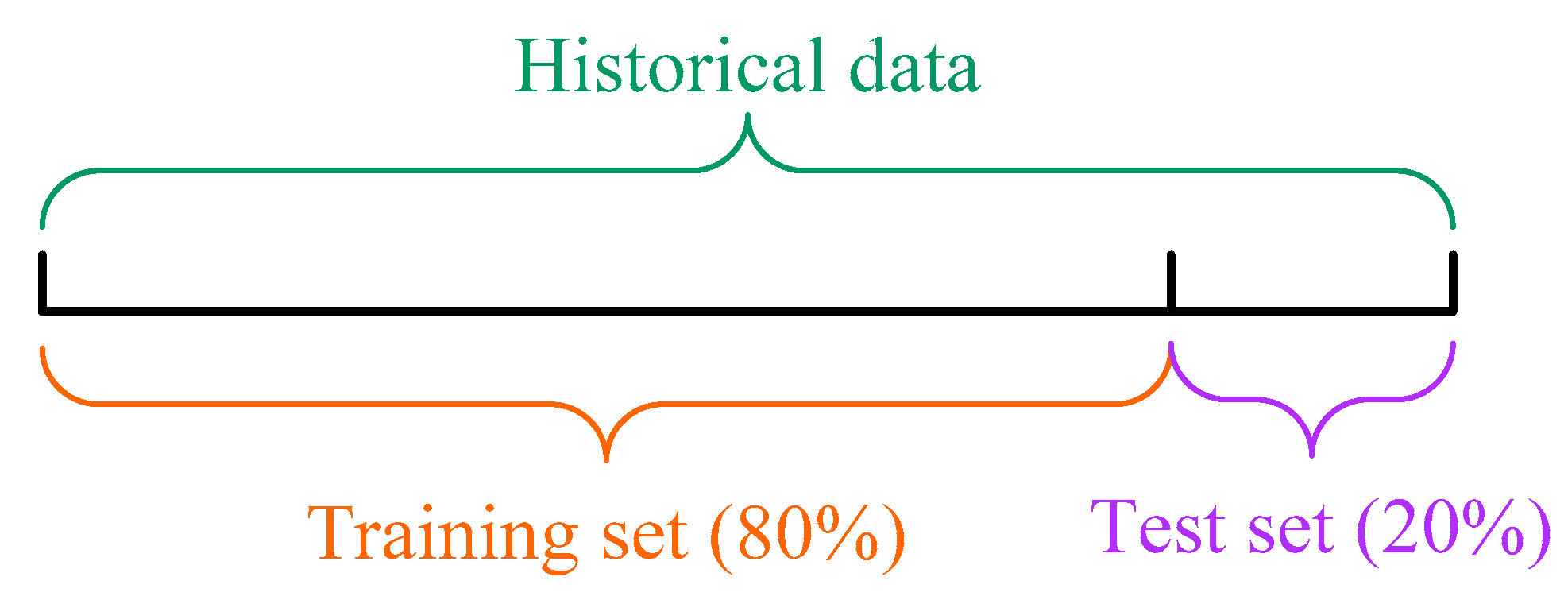

Figure 7 sketches the framework of the proposed deep learning model. The structure of LSC–CNN–LSTM mainly includes an input feature vector module, a large-scale spectral clustering module, a resampling module, a CNN feature extraction module and a sequence training and prediction module.

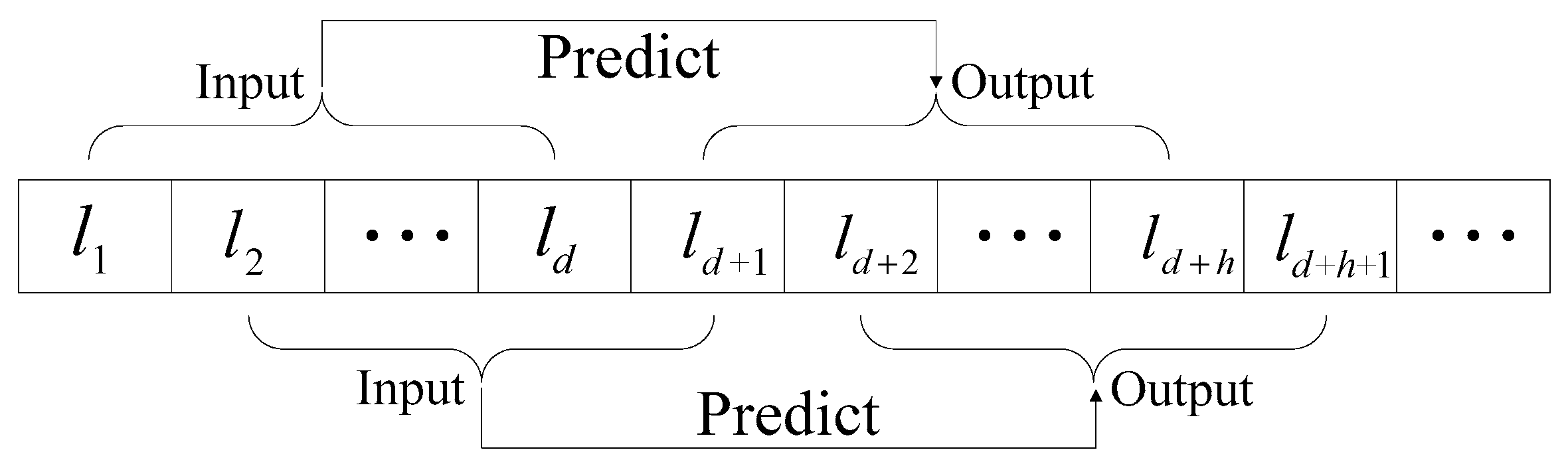

Samples generated from the global active power data are first passed to the input feature vector module, and all input samples are then partitioned into three clusters by LSC. Subsequently, bootstrap aggregating is applied to each cluster to increase the number of samples. For each cluster, the global active power of household electricity in the previous 24 h is used as the input of the deep neural network. The CNN feature extraction module mainly includes two one-dimensional convolution layers and a max pooling layer. Specifically, one-dimensional convolution reads the input sequence and automatically learns latent features. The first convolution layer builds a feature map from the input sequence, and the second applies the same convolution operation to the feature map produced by the first layer to amplify its salient features. The max pooling layer then downsamples the convolved feature map to reduce its dimensionality. Finally, the reduced feature map is flattened into a long vector.
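Since each sample maps the previous 24 h of global active power to the value of the next hour, supervised samples can be cut from the hourly series with a sliding window. The sketch below is a hypothetical helper: the function name, the stride of one hour between consecutive windows and the synthetic 48-hour series are assumptions.

```python
import numpy as np


def make_windows(series, n_in=24, n_out=1):
    """Turn an hourly series into (previous n_in hours -> next n_out hours) training pairs."""
    n_samples = len(series) - n_in - n_out + 1
    X = np.stack([series[s:s + n_in] for s in range(n_samples)])
    y = np.stack([series[s + n_in:s + n_in + n_out] for s in range(n_samples)])
    # Trailing channel axis gives the (samples, time steps, features) layout used by 1-D CNNs.
    return X[:, :, None], y


hourly_power = np.arange(48, dtype=float)   # hypothetical 48 hourly readings of global active power
X, y = make_windows(hourly_power)
print(X.shape, y.shape)
```

Consecutive windows overlap by 23 hours, so a series of length $T$ yields $T - 24$ one-step-ahead samples.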

The flattened CNN output is passed to the Repeat Vector layer, which repeats this internal representation of the input sequence once for each time step of the output sequence, and the result is fed into the LSTM unit. The subsequent sequence prediction module mainly consists of one LSTM layer followed by two fully connected layers. The fully connected layers apply a nonlinear transformation to the extracted features, capture the correlations among them and map them to the output space. The global active power of the next hour is ultimately obtained after feature extraction and sequence training and prediction.
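The data flow through this stack can be traced layer by layer without a deep learning framework. The sketch below takes the 24-hour input, the kernel size of 3, the pooling size of 2, the layer sequence and the single-hour output from the text; it assumes Keras-style 'valid' convolutions, a pooling stride equal to the pooling size and an LSTM that returns its full output sequence, and the widths (64 filters, 200 LSTM units, 100 dense units) are hypothetical because they are not stated here.

```python
def conv_len(length, kernel, stride=1):
    # Output length of a 'valid' (unpadded) one-dimensional convolution.
    return (length - kernel) // stride + 1


def trace_cnn_lstm(n_in=24, n_features=1, filters=(64, 64), kernel=3, pool=2,
                   n_out=1, lstm_units=200, dense_units=100):
    """Return (layer, output shape) pairs for the CNN-LSTM stack, batch dimension omitted."""
    shapes = [("input", (n_in, n_features))]
    length = n_in
    for idx, f in enumerate(filters, start=1):
        length = conv_len(length, kernel)
        shapes.append((f"conv1d_{idx}", (length, f)))
    length //= pool                                      # max pooling with stride = pool size
    shapes.append(("max_pool1d", (length, filters[-1])))
    flat = length * filters[-1]
    shapes.append(("flatten", (flat,)))
    shapes.append(("repeat_vector", (n_out, flat)))      # one copy per output time step
    shapes.append(("lstm", (n_out, lstm_units)))         # full output sequence of length n_out
    shapes.append(("dense_1", (n_out, dense_units)))
    shapes.append(("dense_2", (n_out, 1)))               # next-hour global active power
    return shapes


for name, shape in trace_cnn_lstm():
    print(f"{name:14s} {shape}")
```

Under these assumptions, the two convolutions shorten the 24-step input to 20 steps, pooling halves it to 10, and flattening yields a 640-element vector that the Repeat Vector layer copies once for the single output hour.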

5. Conclusions

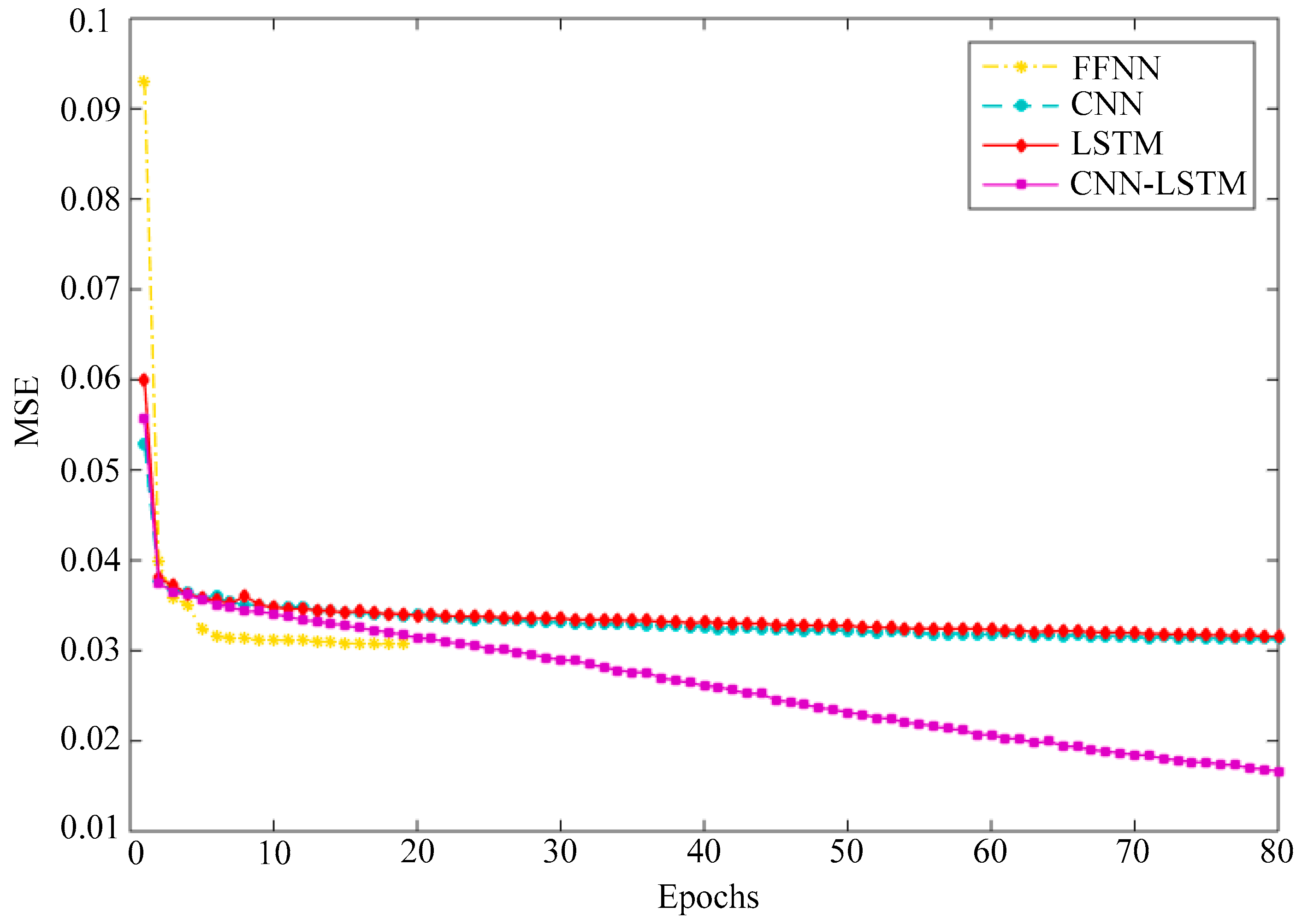

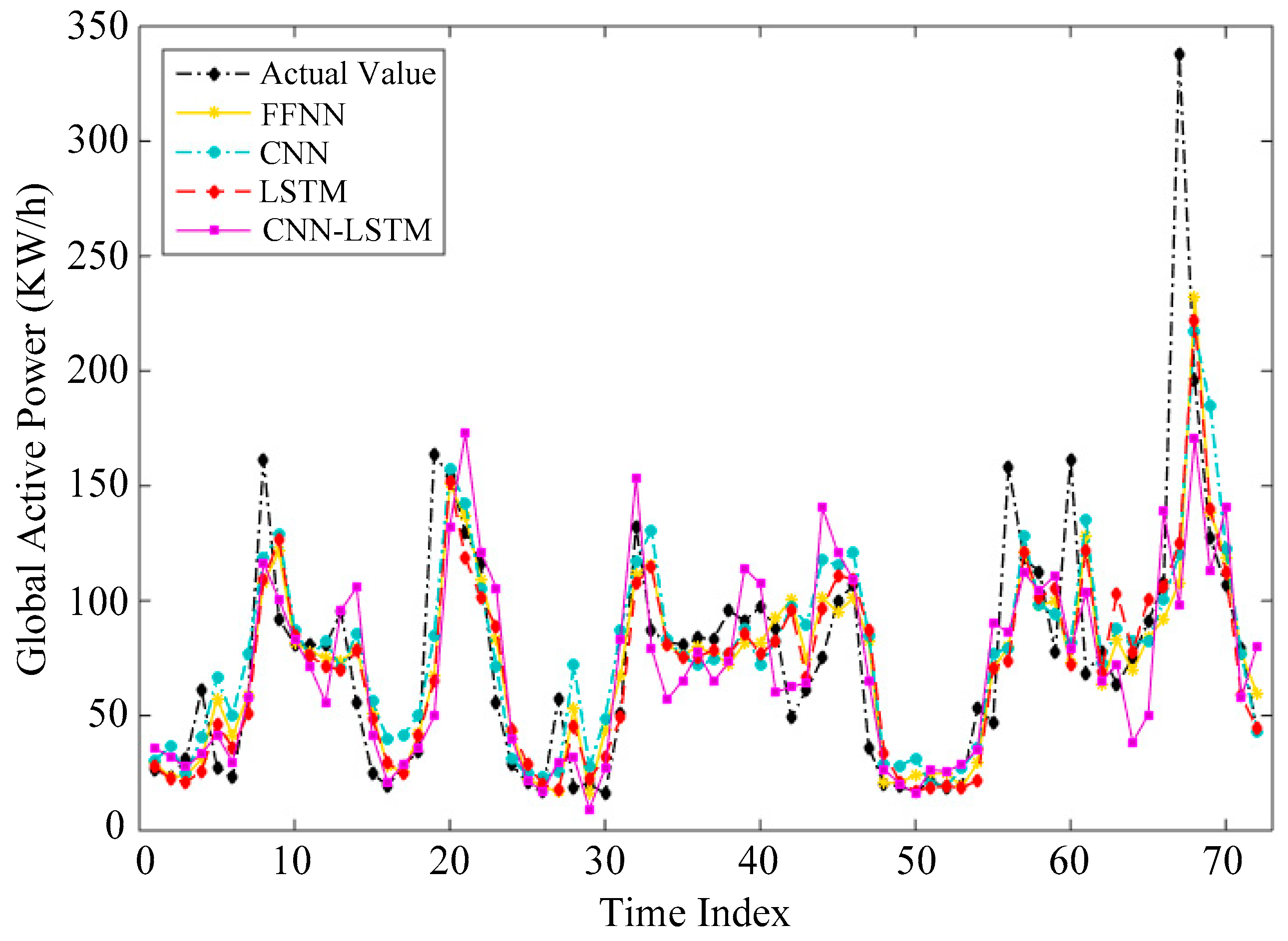

This paper investigates the problem of household electric power prediction. The fluctuation and uncertainty of power consumption pose great challenges to predicting future power consumption. To address these issues, a hybrid forecasting model combining LSC, CNN and LSTM is proposed. First, the landmark-based large-scale spectral clustering method is employed to cluster all samples according to the periodicity and seasonality of electricity consumption. Then, the samples in each cluster are expanded via bootstrap aggregating. Next, the combined deep learning model performs the prediction task on all clusters. Finally, the prediction performance with and without clustering is compared. The experimental results show that the proposed LSC–CNN–LSTM model outperforms the other machine learning and deep learning methods considered in predicting household power consumption, which verifies the effectiveness of the hybrid deep learning model based on LSC and CNN–LSTM.

The combination of clustering and deep learning can effectively handle the massive samples of the big data era and has considerable development prospects in the field of artificial intelligence. This paper only considers one-step prediction of a single attribute of household power consumption; multi-step prediction involving multiple attributes is worth further research. In addition, although bootstrap aggregating is employed in the proposed method, ensemble learning itself is not considered. An ensemble of deep learning models can yield better generalization performance and provide interval predictions, so a deep ensemble model is a promising research subject. The models discussed above can also be applied more widely in renewable energy fields, such as solar, wind and geothermal power generation, which is another promising research direction.