Abstract

India has an estimated 12 million visually impaired people, the largest number of any country in the world. Smart walking stick devices use various technologies, including machine vision and different sensors, to improve the safe movement of visually impaired persons. In machine vision, accurately recognizing a nearby object is still a challenging task. This paper presents a system that enables safe navigation and guidance for visually impaired people by implementing an object recognition module in a smart walking stick; the module uses a local feature extraction method to recognize objects under different image transformations. To provide stability and robustness, the Weighted Guided Harris Corner Feature Detector (WGHCFD) method is proposed to extract feature points from the image. WGHCFD discriminates image features effectively and is suitable for different real-world conditions. WGHCFD is evaluated on the widely used Oxford benchmark datasets, where it achieves higher repeatability and matching scores than existing feature detectors. In addition, the proposed WGHCFD method is tested with a smart stick and achieves a 99.8% recognition rate under different transformation conditions for the safe navigation of visually impaired people.

1. Introduction

In India, visual impairment is a major problem: according to the statistics of the World Health Organization (WHO), 12 million people are visually impaired. Secure and safe navigation is one of the most challenging tasks faced by visually impaired people in real-life environments. Assistive devices therefore help them complete their daily tasks, including uninterrupted navigation. However, ensuring secure and safe navigation for visually impaired people is difficult and requires precision and effectiveness; one of the major problems they face is accurately recognizing nearby objects [1].

With advances in technologies such as the Internet of Things (IoT), machine vision, mobile devices, and Artificial Intelligence (AI), several assistive devices have been designed to support the navigation of visually impaired people. Some researchers have started to use a camera to identify and recognize obstacles in real time and to provide this information for efficient navigation and safe guidance. This approach gives accurate information about the surroundings; however, it fails to recognize an object when the object undergoes transformations such as changes in scale, illumination, rotation, JPEG compression, and viewpoint.

The object recognition task aims to identify an object in an image or video, and a common technique is to extract local features from images and match them against features from other images. Recently, a smart vision system was implemented to help visually impaired people using technologies such as image processing, IoT, and machine learning. Many systems use computer vision to detect indoor and outdoor objects accurately.

One such system identifies objects in an image using a TensorFlow model trained on the Microsoft Common Objects in Context (MSCOCO) dataset [1]. Another system identifies several types of objects by using the MobileNet model to extract features from the input image [2]. RetinaNet is a deep network model used to extract features from images to identify and recognize objects [3]. These methods achieve good recognition, but long training times and large memory requirements can delay the recognition process. More recently, deep learning feature extraction methods have been trained on images to provide feature points that are robust to distortion. Although they learn a certain degree of scale invariance, they fail under significant changes in scale, illumination, etc.

Finding the best features is still an open problem in computer vision. Low-level feature extraction plays a fundamental role in the efficiency of high-level descriptors in machine vision tasks. The difficult part is building distinctive descriptors from features that are invariant to scale, rotation, and viewpoint. The reliability of feature-based object recognition depends mainly on the robustness of the selected feature detector and descriptor. The primary goal of a local feature detector is to describe an object effectively so that it can be found by feature matching between two images. To achieve this goal, a good feature extractor should extract repeatable and precise feature points that describe the same object across images, and these features should be distinctive and reliably localizable under varying conditions such as scale, illumination, rotation, noise, and compression.

In this paper, a robust and stable Weighted Guided Harris Corner Feature Detector (WGHCFD) is proposed to recognize an object from the captured image, and the effectiveness of the proposed work is compared with the existing feature detectors and descriptors. In addition, the proposed WGHCFD is tested with a smart walking stick for object recognition tasks, and it provides safe navigation for visually impaired people.

The paper is organized as follows: Section 2 reviews the literature on local feature extraction for object recognition. Section 3 describes the proposed vision system for visually impaired people, including the object recognition module based on the proposed WGHCFD detector. Section 4 presents the experimental results on standard datasets and in the object recognition application. Section 5 concludes the paper and outlines the scope for future work.

2. Related Work

In this section, we discuss the various local feature extraction methods for object recognition tasks in computer vision.

Accurately recognizing an object in an image is still a challenging task, especially when the object undergoes changes in shape, location, size, and orientation. Local feature extraction methods handle changes in the scale, rotation, and illumination of objects in an image well. Great strides have been made in local invariant feature detection in recent years, but many avenues remain to be pursued and remain challenging for vision researchers.

SIFT is a well-known local feature detector and descriptor that efficiently extracts image features under different image transformations. The traditional SIFT method has four stages that detect highly distinctive features suitable for machine vision tasks such as the identification, detection, and tracking of objects. SIFT descriptors are robust to pose, cluttered background, scale, illumination, partial occlusion, and viewpoint changes [4]. Hence, SIFT features are commonly used in many real-time applications, such as image stitching [5], object recognition [6], object tracking [7], object detection [8], object classification [9], and forgery detection systems [10].

SIFT has many variants, including the Geometric Scale-Invariant Terrain Feature Transform (GSIFT) [11], Principal Component Analysis SIFT (PCA-SIFT) [12], Colored SIFT (CSIFT) [13], and Affine-invariant SIFT (ASIFT) [14]. Although SIFT-based algorithms are highly effective, they offer limited capability for real-time system performance. Common feature point detectors include the Harris and Hessian–Laplace detectors [15], FAST [16], STAR [17], Speeded-Up Robust Features (SURF) [18], KAZE [19], Binary Robust Invariant Scalable Keypoints (BRISK) [20], and Oriented FAST and Rotated BRIEF (ORB) [21]. Table 1 compares existing feature detectors in terms of their invariance to scale and rotation changes.

Table 1.

Overview of Feature Detectors and Invariance Properties.

The performance of the SIFT algorithm has been improved by replacing the Gaussian filter with a bilateral filter. Bilateral SIFT (Bi-SIFT) preserves edge characteristics better than the Gaussian filter in the SIFT algorithm, improves feature detection, and is robust to various image transformations; the reported results show that Bi-SIFT provides higher repeatability and a greater number of correspondences under all imaging conditions [22]. Using a trilateral filter in SIFT gives better performance for all geometrically transformed images, yet it fails to provide higher repeatability and matching scores because of halo artifacts [23]. The Fast Feature Detector (FFD) [24] formulates the superimposition problem in the DoG scale-space pyramid of the SIFT algorithm and sets the blurring ratio to 2 and the smoothness to 0.627. FFD requires only 5% of the computation time of SIFT and is reported to be more accurate and reliable than SIFT.

Table 1 shows that the Harris–Laplace, Hessian–Laplace, SIFT (DoG), SURF (Fast Hessian), ORB, BRISK, STAR, KAZE, Bi-SIFT, and Tri-SIFT detectors are invariant to both scale and rotation. Hence, these detectors are considered for comparison with the detector proposed in this paper. Detectors such as Harris, Shi–Tomasi, SUSAN, FAST, and FFD do not provide invariance to both scale and rotation changes. Table 2 compares the most popular feature detectors and descriptors in terms of scale space, orientation, descriptor generation, feature size, and robustness.

Table 2.

Comparison of Popular Feature Descriptors.

This research studies common feature descriptors such as SIFT, GLOH [15], SURF, BRIEF [25], ORB, BRISK, FREAK [26], DaLI [27], Bi-SIFT, DERF [28], HiSTDO [29], Tri-SIFT, RAGIH [30], ROEWA [31], DOG–ADTCP [32], and LPSO [33]. GLOH and LPSO are computationally more expensive than the SIFT algorithm. From the comparison in Table 2: ROEWA performs poorly on scale change images; RAGIH performs well only under rotation and scale changes; HiSTDO performs well only under background clutter, illumination, and noise; DERF performs well only under scale and rotation changes; DaLI performs well only under image deformation and illumination changes; and DOG–ADTCP and LPSO perform well only under rotation and illumination changes. Because these descriptors do not perform well across all imaging conditions, they are not considered further in this research. Binary Robust Independent Elementary Features (BRIEF) is less complex than SIFT with comparable matching performance, but it performs poorly under rotation. The Fast Retina Keypoint (FREAK) descriptor is faster to compute, has a lower memory load, and is mostly used in embedded applications.

More recently, deep learning feature extraction methods have been trained on images to provide feature points that are robust to distortion. Although they learn a certain degree of scale invariance, they fail under significant changes in scale [34,35].

The feature point detectors and descriptors discussed above do not give the best results under all image transformation conditions. This research aims to develop a feature detector that is robust to different imaging conditions, improves localization accuracy, and matches features accurately to recognize an object in an image. The feature detectors SIFT, Bi-SIFT, SURF, STAR, BRISK, ORB, KAZE, Tri-SIFT, Harris–Laplace, and Hessian–Laplace, and the descriptors SIFT, SURF, ORB, BRISK, BRIEF, FREAK, Bi-SIFT, and Tri-SIFT, are therefore used as baselines to evaluate the effectiveness of the proposed WGHCFD because of their robustness to various image transformations.

3. Proposed Vision System for Visually Impaired People

This section presents the proposed vision system for visually impaired people using an object recognition module that utilizes the proposed WGHCFD detector to recognize an object.

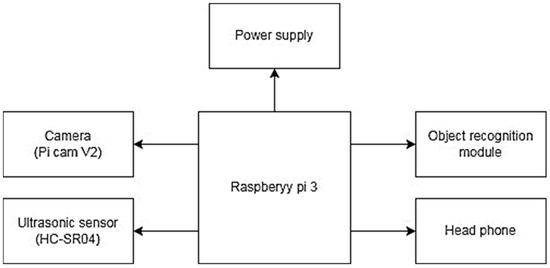

The proposed system is an IoT-based machine vision system for visually impaired people that uses the proposed WGHCFD feature detector. Figure 1 shows the block diagram of the proposed vision system; its major modules are the power supply, camera, ultrasonic sensor (HC-SR04), Raspberry Pi 3, object recognition module, and headphones. The ultrasonic sensor detects obstacles and measures their distance. The Raspberry Pi 3 acts as a small computer and provides USB ports for connecting devices. The Pi camera has a fixed lens and captures high-definition images, which makes object identification easier. The captured images are processed by the object recognition module, and the recognized object is communicated to the user through the headphones to support safe navigation.
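The obstacle distance follows from the HC-SR04 echo time: the sensor reports the round-trip time of an ultrasonic pulse, so the one-way distance is half of that time multiplied by the speed of sound. The sketch below is illustrative only and assumes a speed of sound of 343 m/s (dry air at about 20 °C), the commonly quoted 2 to 400 cm working range of the sensor, and a hypothetical 100 cm warning distance; reading the echo pin on the Raspberry Pi is not shown, and the function names are hypothetical.

```python
SPEED_OF_SOUND_CM_PER_S = 34300.0  # assumed: about 343 m/s in air at ~20 °C


def echo_to_distance_cm(echo_duration_s):
    """The pulse travels to the obstacle and back, so halve the round trip."""
    return echo_duration_s * SPEED_OF_SOUND_CM_PER_S / 2.0


def obstacle_alert(echo_duration_s, warn_below_cm=100.0, valid_range=(2.0, 400.0)):
    """True if an obstacle is closer than warn_below_cm, False if farther,
    None if the reading lies outside the sensor's nominal range."""
    d = echo_to_distance_cm(echo_duration_s)
    if not valid_range[0] <= d <= valid_range[1]:
        return None
    return d < warn_below_cm
```

For example, a 2 ms echo corresponds to about 34.3 cm and would trigger the warning, whereas a 10 ms echo (about 171.5 cm) would not.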

Figure 1.

Block diagram of the proposed vision system.

3.1. Object Recognition Module

The object recognition module in the proposed system recognizes several types of objects, such as chairs, people, and tables. It uses the Pi Camera Module v2, which is attached to the front of the prototype to capture images of the environment. Each captured image has a fixed resolution of 720 × 680 pixels and is sent to the feature extractor for object recognition. In the proposed vision system, WGHCFD is used to extract the features of the captured images; the overall feature extraction process using WGHCFD is described in this section.

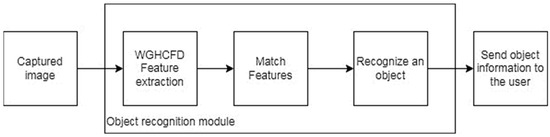

The working process of the object recognition module is shown in Figure 2. The image captured by the smart walking stick is passed to the WGHCFD detector, which extracts features; these features are then matched using a similarity distance measure so that an object is recognized even under various image transformations.
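The matching step of the module can be sketched as nearest-neighbour voting over stored object descriptors. The text does not specify the similarity measure or decision rule, so the following are assumptions: Euclidean distance between descriptors, Lowe's nearest-neighbour ratio test with ratio 0.8 [4] to reject ambiguous matches, and a hypothetical minimum of two votes before a label is reported. The database layout (a dict from object label to stored descriptors) and the function name are illustrative.

```python
import math
from collections import Counter


def recognise(query, database, ratio=0.8, min_matches=2):
    """Return the label with the most ratio-test matches, or None."""
    pool = [(label, d) for label, descs in database.items() for d in descs]
    votes = Counter()
    for q in query:
        ranked = sorted(pool, key=lambda item: math.dist(q, item[1]))
        if len(ranked) < 2:
            continue
        d1 = math.dist(q, ranked[0][1])  # nearest stored descriptor
        d2 = math.dist(q, ranked[1][1])  # second nearest
        if d1 < ratio * d2:              # accept only distinctive matches
            votes[ranked[0][0]] += 1
    if not votes:
        return None
    label, count = votes.most_common(1)[0]
    return label if count >= min_matches else None
```

With a database such as {"chair": [...], "table": [...]}, each query descriptor votes for the object that owns its nearest stored descriptor, provided that match is clearly better than the runner-up.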

Figure 2.

Working flow of the object recognition module.

Feature Extraction Using Proposed WGHCFD Detector

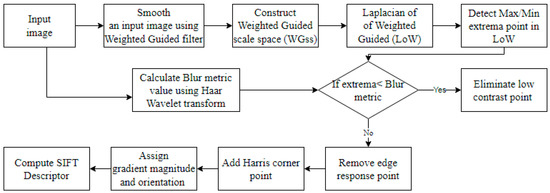

This section briefly explains the four stages of the proposed WGHCFD feature extraction method for invariant object recognition; the stages are shown in Figure 3.

Figure 3.

Block diagram of 4 stages of the proposed WGHCFD method.

Each of the four stages is explained in detail below:

- Stage 1: The Weighted Guided scale space construction (WGss)

The input image I(p, q) is smoothed using the Weighted Guided Filter (WGF) [36,37], which reduces halo artifacts in the image and preserves sharp gradient information. A guide image G is selected, and the variance of G is computed in a 3 × 3 window Ω. The edge-aware weight of G is defined in Equation (1):

where ε is a constant. The edge-aware weight is larger than 1 at an edge point and smaller than 1 in a smooth area, so sharp edges receive more weight than pixels in smooth areas. This weight is then incorporated into the weighted guided image filter through Equation (2):

where λ is a regularization parameter. The coefficients ai′ and bi′ are computed by Equation (3):

Here, ⊙ denotes the element-wise product of G and I, and μ(G), μ(I), and μ(G⊙I) are the mean values of G, I, and G⊙I, respectively.
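Equations (1) to (3) are missing from this version of the text. Assuming they follow the weighted guided image filter of [36], on which this stage is based, they can be written as below. Here N is the number of pixels, σ_G^2 is the local variance of G (over the 3 × 3 window in Equation (1) and over the filter window Ω(i′) centred at i′ in Equation (3)), and the symbols Γ_G and E are introduced for illustration and may differ from the authors' notation. In [36], ε is set to (0.001 × L)^2, where L is the dynamic range of the image, and the filter output at pixel i is the window average of a_{i′} times G(i) plus the window average of b_{i′}.

```latex
\Gamma_G(i') = \frac{1}{N}\sum_{i=1}^{N}\frac{\sigma_G^2(i') + \varepsilon}{\sigma_G^2(i) + \varepsilon} \qquad (1)

E = \sum_{i \in \Omega(i')}\Big[\big(a_{i'}\,G(i) + b_{i'} - I(i)\big)^2 + \frac{\lambda}{\Gamma_G(i')}\,a_{i'}^2\Big] \qquad (2)

a_{i'} = \frac{\mu_{G\odot I}(i') - \mu_G(i')\,\mu_I(i')}{\sigma_G^2(i') + \lambda/\Gamma_G(i')}, \qquad
b_{i'} = \mu_I(i') - a_{i'}\,\mu_G(i') \qquad (3)
```

Equation (3) is the closed-form minimizer of Equation (2): it is the ordinary guided-filter solution with the constant regularizer replaced by λ/Γ_G(i′), so edge pixels (large Γ_G) are regularized less and their sharpness is preserved.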

Scale-invariant features are found by searching over all consecutive scales. The input image I(p, q) is smoothed with the weighted guided filter WG(p, q) to obtain the smoothed image L(p, q, σ) using Equation (4):

where σ denotes the standard deviation of the weighted guided filter. Stable feature point locations are determined from scale-space extrema across scales. The Laplacian of Weighted guided filter (LoW) response is used to detect feature point locations in scale space, as given in Equation (5):

where L(p, q) is a fixed Laplacian filter. The local extrema of the LoW response are taken as candidate feature points.
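The Stage 1 computations can be sketched in plain Python for small grayscale images with values in [0, 1]. This is a hypothetical illustration, not the authors' implementation. It assumes the weighted guided filter form of [36] for Equations (1) to (3), a self-guided filter (G = I), scale controlled by the filter window radius r rather than by σ, ε = 1e-6 and λ = 0.01, and the 3 × 3 kernel [[0, 1, 0], [1, -4, 1], [0, 1, 0]] as the fixed Laplacian. Candidate points are pixels strictly larger or smaller than all 26 neighbours in space and in the adjacent scales, as in SIFT [4]. All function names are illustrative.

```python
# Hypothetical sketch of WGHCFD Stage 1 (not the authors' code). Images are lists
# of rows with values in [0, 1]; plain Python, intended for small images only.


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, truncated at the image border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - r), min(h, y + r + 1))
                    for i in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def mul(a, b):
    """Element-wise product a ⊙ b."""
    return [[u * v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def local_variance(img, r):
    """E[x^2] - E[x]^2 over each window, clamped at 0 against rounding."""
    m, m2 = box_mean(img, r), box_mean(mul(img, img), r)
    return [[max(s - u * u, 0.0) for u, s in zip(rm, rs)] for rm, rs in zip(m, m2)]


def edge_aware_weight(guide, eps=1e-6):
    """Edge-aware weight as in [36]: > 1 near edges, < 1 in flat areas (3x3 variance)."""
    var = local_variance(guide, 1)
    n = len(var) * len(var[0])
    mean_inv = sum(1.0 / (v + eps) for row in var for v in row) / n
    return [[(v + eps) * mean_inv for v in row] for row in var]


def weighted_guided_filter(img, guide, r=1, lam=0.01, eps=1e-6):
    """Per-window linear coefficients a, b as in [36], then averaged a*G + b."""
    gamma = edge_aware_weight(guide, eps)
    mu_g, mu_i = box_mean(guide, r), box_mean(img, r)
    mu_gi, var_g = box_mean(mul(guide, img), r), local_variance(guide, r)
    h, w = len(img), len(img[0])
    a = [[(mu_gi[y][x] - mu_g[y][x] * mu_i[y][x]) / (var_g[y][x] + lam / gamma[y][x])
          for x in range(w)] for y in range(h)]
    b = [[mu_i[y][x] - a[y][x] * mu_g[y][x] for x in range(w)] for y in range(h)]
    a_bar, b_bar = box_mean(a, r), box_mean(b, r)
    return [[a_bar[y][x] * guide[y][x] + b_bar[y][x] for x in range(w)]
            for y in range(h)]


def laplacian(img):
    """Fixed 3x3 Laplacian [[0,1,0],[1,-4,1],[0,1,0]]; border pixels stay 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1]
                         + img[y][x + 1] - 4 * img[y][x])
    return out


def low_stack(img, radii=(1, 2, 3), lam=0.01):
    """LoW responses; scale is varied through the window radius (an assumption)."""
    return [laplacian(weighted_guided_filter(img, img, r, lam)) for r in radii]


def scale_space_extrema(layers):
    """(x, y, s) strictly above or below all 26 space/scale neighbours."""
    found = []
    for s in range(1, len(layers) - 1):
        h, w = len(layers[s]), len(layers[s][0])
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                v = layers[s][y][x]
                nb = [layers[s + k][y + j][x + i]
                      for k in (-1, 0, 1) for j in (-1, 0, 1) for i in (-1, 0, 1)
                      if (k, j, i) != (0, 0, 0)]
                if v > max(nb) or v < min(nb):
                    found.append((x, y, s))
    return found
```

Calling scale_space_extrema(low_stack(image)) returns candidate (x, y, scale index) triples; a constant image passes through the filter unchanged, because all local covariances are zero and the coefficient a vanishes.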

- Stage 2: Feature point Localization

Once the extrema are detected, low-contrast points are eliminated to obtain stable feature points. In SIFT, a fixed low-contrast threshold of 0.03 is used for this purpose [4]. These low-contrast points arise from noise and smoothing filters; in WGHCFD, the unwanted low-contrast points are instead eliminated using a threshold based on the blur metric value.

The blur metric value is calculated using the Haar wavelet transform [38]. Edge sharpness analysis identifies the extent of blur in the given image. The blur metric value is calculated in the following steps:

- Step 1: Perform level 3 decomposition of the input image using Haar wavelet transform.

- Step 2: Construct an edge map for each scale using Equation (6):

- Step 3: Partition the edge maps and find local maxima Emaxi (i = 1, 2, 3).

If Emaxi(k, l) > threshold, then (k, l) is an edge point.

- Step 4: Calculate blur metric value.

The blur metric value is calculated using Equation (7), and it replaces the fixed value of 0.03 as the threshold for eliminating low-contrast points. The threshold is chosen using Equation (8):

Threshold value = Blur metric(I)
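Steps 1 to 3 can be sketched as follows. Equation (6) is missing from the text, so the sketch assumes the edge map of the wavelet-based blur detection method in [38], Emap_i = sqrt(LH_i^2 + HL_i^2 + HH_i^2), block sizes of 8, 4, and 2 for the three levels (so that the three grids of local maxima line up), and a threshold of 35 for 8-bit images. Step 4 (Equation (7)) is not reproduced because its exact form is not given here. The function names and the averaging Haar normalization are illustrative choices.

```python
import math


def haar_level(img):
    """One level of a 2-D Haar transform on 2x2 blocks (averages and differences)."""
    h, w = len(img) // 2, len(img[0]) // 2
    ll, lh, hl, hh = ([[0.0] * w for _ in range(h)] for _ in range(4))
    for y in range(h):
        for x in range(w):
            a, b = img[2 * y][2 * x], img[2 * y][2 * x + 1]
            c, d = img[2 * y + 1][2 * x], img[2 * y + 1][2 * x + 1]
            ll[y][x] = (a + b + c + d) / 4
            lh[y][x] = (a - b + c - d) / 4  # horizontal change (vertical edges)
            hl[y][x] = (a + b - c - d) / 4  # vertical change (horizontal edges)
            hh[y][x] = (a - b - c + d) / 4  # diagonal change
    return ll, lh, hl, hh


def edge_maps(img, levels=3):
    """Steps 1-2: decompose repeatedly and build one edge map per level."""
    maps, cur = [], img
    for _ in range(levels):
        cur, lh, hl, hh = haar_level(cur)
        maps.append([[math.sqrt(p * p + q * q + s * s) for p, q, s in zip(r1, r2, r3)]
                     for r1, r2, r3 in zip(lh, hl, hh)])
    return maps


def block_maxima(emap, size):
    """Step 3: partition an edge map into size x size blocks, keep each maximum."""
    return [[max(emap[j][i] for j in range(by, by + size) for i in range(bx, bx + size))
             for bx in range(0, len(emap[0]), size)]
            for by in range(0, len(emap), size)]


def edge_points(img, threshold=35.0):
    """Block (k, l) is an edge point if Emax_i(k, l) > threshold at any level i.
    The image side must be a multiple of 16 for the grids to align."""
    emax = [block_maxima(m, s) for m, s in zip(edge_maps(img), (8, 4, 2))]
    rows, cols = len(emax[0]), len(emax[0][0])
    return [[any(e[k][l] > threshold for e in emax) for l in range(cols)]
            for k in range(rows)]
```

A 16 × 16 image yields edge maps of 8 × 8, 4 × 4, and 2 × 2 pixels, and each is reduced to a single block, so the result is one edge/non-edge decision per 16 × 16 region of the input.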

Feature points whose contrast falls below this threshold are treated as low-contrast points and eliminated. Feature points with a strong edge response are also removed by comparing the second-order Taylor expansion of LoW at the feature point location against a threshold, so that only stable feature points remain.
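The two elimination rules can be sketched for a single LoW layer D. The contrast threshold is passed in as a parameter (in WGHCFD it would be the blur metric value of Equation (8)). The edge test is an assumption: the text only mentions a second-order Taylor expansion and a threshold, so the sketch uses Lowe's principal-curvature ratio on the 2 × 2 Hessian (the second-order terms of that expansion) with r = 10, as in SIFT [4]. The helper names are hypothetical.

```python
def passes_edge_test(D, x, y, r=10.0):
    """Lowe's principal-curvature test on the 2x2 Hessian of D at (x, y)."""
    dxx = D[y][x + 1] + D[y][x - 1] - 2 * D[y][x]
    dyy = D[y + 1][x] + D[y - 1][x] - 2 * D[y][x]
    dxy = (D[y + 1][x + 1] - D[y + 1][x - 1] - D[y - 1][x + 1] + D[y - 1][x - 1]) / 4
    tr, det = dxx + dyy, dxx * dyy - dxy * dxy
    if det <= 0:  # curvatures of opposite sign (or one flat direction): reject
        return False
    return tr * tr / det < (r + 1) ** 2 / r


def filter_keypoints(D, points, contrast_threshold, r=10.0):
    """Drop low-contrast points (|D| below the threshold) and edge-like points."""
    return [(x, y) for x, y in points
            if abs(D[y][x]) >= contrast_threshold and passes_edge_test(D, x, y, r)]
```

An isolated blob has two equal curvatures and passes, whereas a point on a straight ridge has one near-zero curvature and is rejected, because such points are poorly localized along the ridge.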

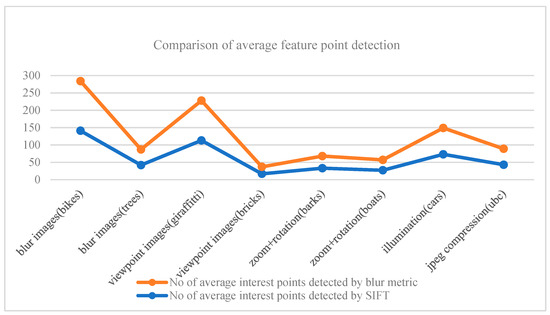

Figure 4 shows the average number of feature points detected by the SIFT detector and by the proposed detector with blur metric calculation on the Oxford datasets under different image conditions.

Figure 4.

Average feature point detection using blur metric value.

The average number of feature points extracted is greater than with the conventional SIFT algorithm when low-contrast points are eliminated using the blur metric threshold instead of the fixed threshold. Harris corner points are also detected in the input image I(p, q) and added to the extrema points to obtain a stable and robust set of feature points [39].
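A minimal sketch of the Harris step follows. The text does not give its settings, so the sketch assumes central-difference gradients, an unweighted 3 × 3 summation window for the structure tensor (a Gaussian window is more common), the usual constant k = 0.04 in R = det(M) - k·trace(M)^2, a hypothetical response threshold, and a simple union of corner positions with the (x, y) positions of the extrema points. The function names are illustrative.

```python
def harris_response(img, k=0.04):
    """R = det(M) - k * trace(M)^2 with M summed over a 3x3 window."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2  # central differences
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2
    r = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            sxx = syy = sxy = 0.0
            for j in (-1, 0, 1):
                for i in (-1, 0, 1):
                    gx, gy = ix[y + j][x + i], iy[y + j][x + i]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


def harris_corners(img, threshold, k=0.04):
    """(x, y) positions whose Harris response exceeds the threshold."""
    r = harris_response(img, k)
    return [(x, y) for y, row in enumerate(r) for x, v in enumerate(row) if v > threshold]


def merge_points(extrema, corners):
    """Union of extrema positions and Harris corners, keeping first-seen order."""
    return list(dict.fromkeys(list(extrema) + list(corners)))
```

The response is positive at corners (both eigenvalues of M large), negative along straight edges (one large eigenvalue), and zero in flat regions, which is why corners complement the blob-like LoW extrema.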

- Stage 3: Feature point Magnitude and Orientation Assignment

The gradient magnitude m(p, q) and orientation θ(p, q) of the smoothed image L(p, q) are calculated using Equation (9):

An orientation histogram is formed to find the dominant direction of local gradients.
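Equation (9) is missing from this version of the text. The sketch assumes the usual SIFT finite differences [4], m = sqrt(dx^2 + dy^2) and θ = atan2(dy, dx) with dx = L(x+1, y) - L(x-1, y) and dy = L(x, y+1) - L(x, y-1), and a 36-bin (10° per bin) orientation histogram accumulated over a square neighbourhood and weighted by gradient magnitude. SIFT's Gaussian weighting and peak interpolation are omitted; x indexes columns and y rows, and the function names are illustrative.

```python
import math


def grad_mag_ori(L, x, y):
    """Gradient magnitude and orientation (degrees in [0, 360)) at (x, y)."""
    dx = L[y][x + 1] - L[y][x - 1]
    dy = L[y + 1][x] - L[y - 1][x]
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx)) % 360.0


def dominant_orientation(L, x, y, radius=2, bins=36):
    """Magnitude-weighted orientation histogram; returns (peak angle, histogram)."""
    width = 360.0 / bins
    hist = [0.0] * bins
    for j in range(y - radius, y + radius + 1):
        for i in range(x - radius, x + radius + 1):
            m, theta = grad_mag_ori(L, i, j)
            hist[int(theta // width) % bins] += m
    best = max(range(bins), key=hist.__getitem__)
    return best * width, hist
```

On an image that brightens uniformly from left to right, every gradient points along +x, so all the magnitude falls in the first bin and the dominant orientation is 0°.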

- Stage 4: Generate Descriptor

After the scale, location, and orientation of each feature point are assigned, the feature point descriptor is computed from its local neighborhood so that it is invariant to image transformations. In SIFT, the descriptor is built from orientation histograms over a 4 × 4 array of subregions with 8 bins each, so the final descriptor has 4 × 4 × 8 = 128 elements. The performance of the proposed WGHCFD detector is analyzed experimentally on standard datasets [29].
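The 4 × 4 × 8 layout can be sketched as below. This is a simplified illustration, not the full SIFT or WGHCFD descriptor: it samples a 16 × 16 patch centred on the keypoint, measures gradient angles relative to the keypoint orientation without rotating the sampling grid, omits Gaussian weighting and trilinear interpolation, and applies the usual SIFT normalization (unit length, clipping at 0.2, renormalization) [4]. The function names are illustrative, and the patch must lie at least one pixel inside the image.

```python
import math


def normalise(vec, clip=0.2):
    """Unit-normalize, clip large entries, then renormalize (SIFT style)."""
    n = math.sqrt(sum(v * v for v in vec))
    if n == 0:
        return vec
    vec = [min(v / n, clip) for v in vec]
    n = math.sqrt(sum(v * v for v in vec))
    return [v / n for v in vec]


def sift_like_descriptor(L, x, y, ori_deg=0.0, cells=4, cell_size=4, bins=8):
    """4 x 4 cells of 4 x 4 pixels, 8 orientation bins each: 128 values."""
    desc = []
    half = cells * cell_size // 2
    for cy in range(cells):
        for cx in range(cells):
            hist = [0.0] * bins
            for j in range(cell_size):
                for i in range(cell_size):
                    px = x - half + cx * cell_size + i
                    py = y - half + cy * cell_size + j
                    dx = L[py][px + 1] - L[py][px - 1]
                    dy = L[py + 1][px] - L[py - 1][px]
                    # angle relative to the keypoint orientation
                    theta = (math.degrees(math.atan2(dy, dx)) - ori_deg) % 360.0
                    hist[int(theta // (360.0 / bins)) % bins] += math.hypot(dx, dy)
            desc.extend(hist)
    return normalise(desc)
```

For a left-to-right intensity ramp, each of the 16 cells puts all its magnitude in the first orientation bin, so the normalized descriptor has 16 equal nonzero entries of 0.25.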

4. Qualitative Analysis of the Proposed WGHCFD

This section describes the dataset used to evaluate the performance of the proposed system. The repeatability and matching scores of the proposed WGHCFD are compared with those of popular existing feature detectors and descriptors in the following subsections.

The Oxford dataset [40] consists of two classes of blur change images (structured and textured scenes), two classes of viewpoint change images, two classes of zoom+rotation change images, one class of illumination change images, and one class of JPEG compression images. Figure 5 shows example images from the Oxford dataset. In each class, the first image is the good-quality reference, and the remaining images are increasingly transformed versions of it. The performance of WGHCFD is compared with popular existing feature detectors (SIFT, Bi-SIFT, SURF, STAR, BRISK, ORB, KAZE, Tri-SIFT, Harris–Laplace, and Hessian–Laplace) and descriptors (SIFT, SURF, ORB, BRISK, BRIEF, FREAK, Bi-SIFT, and Tri-SIFT) in terms of repeatability and matching scores.

Figure 5.

Oxford data sets: (a) blur change image; (b) viewpoint change image; (c) zoom+rotation change image; (d) illumination change image; (e) jpeg compression image.

4.1. Performance Measures

The repeatability score measures the stability and accuracy of a feature point detector and is given in Equation (10). It is the ratio of the number of corresponding feature points to the smaller of the numbers of feature points detected in the two images. Corresponding points between images allow the parameters of the geometric transformation between them to be estimated [4,41]:

The matching score measures the matching accuracy between two images and is given in Equation (11). It is the ratio of the number of correct matches to the total number of matches [4,41]:
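Equations (10) and (11) are missing from this version of the text. Following the definitions above, both scores can be sketched as below. The sketch assumes that the ground-truth homography H between the two images is known (as it is for the Oxford dataset [40]), that a detected point corresponds to a projected point when they lie within a hypothetical tolerance of 1.5 pixels (the protocol of [41] uses region overlap instead), that correspondences are one-to-one, and that all points lie in the region common to both images. The function names are illustrative.

```python
import math


def project(H, pt):
    """Map (x, y) through a 3x3 homography H given as nested lists."""
    x, y = pt
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)


def repeatability(pts_a, pts_b, H, eps=1.5):
    """Correspondences / min(|A|, |B|), with one-to-one pairing within eps pixels."""
    if not pts_a or not pts_b:
        return 0.0
    unused, corr = list(pts_b), 0
    for p in pts_a:
        if not unused:
            break
        u = project(H, p)
        best = min(unused, key=lambda q: math.dist(q, u))
        if math.dist(best, u) <= eps:
            corr += 1
            unused.remove(best)
    return corr / min(len(pts_a), len(pts_b))


def matching_score(matches, H, eps=1.5):
    """Correct matches / all matches, as the measure is worded in the text."""
    if not matches:
        return 0.0
    correct = sum(1 for pa, pb in matches if math.dist(project(H, pa), pb) <= eps)
    return correct / len(matches)
```

Dividing by the smaller point count keeps the repeatability score in [0, 1] even when one detector fires far more often on one of the two images.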

The performance of the descriptors is evaluated using recall and 1-precision. Each descriptor from the reference (input) image is compared with each descriptor in the test image, and two descriptors are matched if the Euclidean distance between them is below a threshold. Recall is the ratio of the number of correctly matched regions to the number of corresponding regions between two images of the same scene and is calculated using Equation (12):

The 1-precision is the ratio of the number of false matches to the total number of all matches and is represented by using Equation (13):
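Following the text, descriptors are matched when their Euclidean distance is below a threshold, and recall and 1-precision are computed from those matches. In the sketch, the ground-truth correspondences are supplied as a set of (i, j) index pairs, which is an assumption about how they are represented; sweeping the threshold traces the recall vs. 1-precision curve. The function names are illustrative.

```python
import math


def threshold_matches(desc_a, desc_b, t):
    """All index pairs (i, j) whose descriptors are closer than t."""
    return [(i, j) for i, da in enumerate(desc_a) for j, db in enumerate(desc_b)
            if math.dist(da, db) < t]


def recall_1_precision(desc_a, desc_b, ground_truth, t):
    """recall = correct / correspondences; 1-precision = false / all matches."""
    matches = threshold_matches(desc_a, desc_b, t)
    correct = sum(1 for m in matches if m in ground_truth)
    recall = correct / len(ground_truth) if ground_truth else 0.0
    one_minus_precision = (len(matches) - correct) / len(matches) if matches else 0.0
    return recall, one_minus_precision


def curve(desc_a, desc_b, ground_truth, thresholds):
    """One (recall, 1-precision) point per threshold."""
    return [recall_1_precision(desc_a, desc_b, ground_truth, t) for t in thresholds]
```

Raising the threshold admits more matches, so recall can only grow, but false matches are admitted as well and 1-precision rises too; this trade-off is what the curves in Figures 7, 9, 11, 13, and 15 display.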

An ideal descriptor would give a recall of 1 for any precision. Recall increases as the distance threshold increases, because more pairs of similar descriptors are accepted as matches. A curve that reaches high recall at low 1-precision indicates that recall is attained with high precision [41].

4.2. Experimental Result Analysis

The following subsections discuss the experimental results on the Oxford dataset for various image transformations, namely blur change, viewpoint change, zoom+rotation change, illumination change, and JPEG compression. The performance of the proposed system is also analyzed in an object recognition task on a real-time dataset.

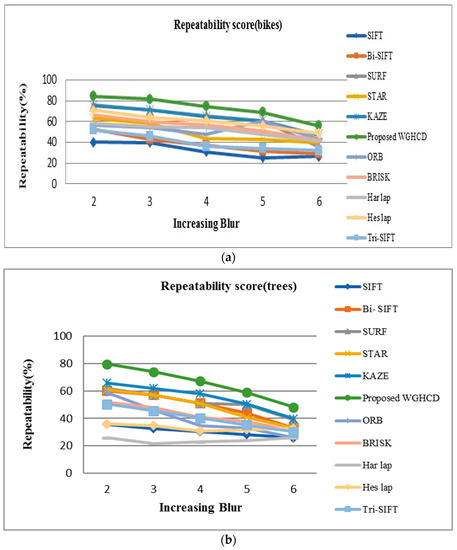

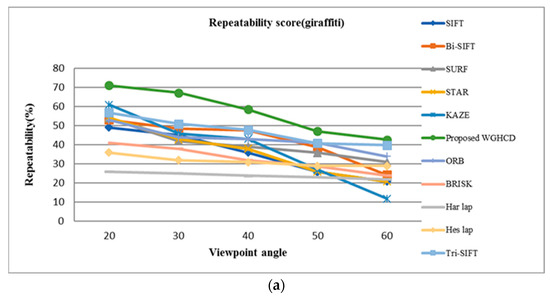

4.2.1. Blur Change Images

The repeatability and matching scores are calculated for the existing detectors SIFT, Bi-SIFT, SURF, STAR, BRISK, ORB, KAZE, Tri-SIFT, Harris–Laplace, and Hessian–Laplace and for the proposed WGHCFD on the blur change images in the dataset. Figure 6a,b shows the repeatability of these detectors for the blurred bikes and trees images [38]. The average repeatability scores of SIFT, Bi-SIFT, SURF, STAR, KAZE, BRISK, ORB, Tri-SIFT, Harris–Laplace, Hessian–Laplace, and the proposed WGHCFD are 32.49%, 38.75%, 61.72%, 49.46%, 63.58%, 41.2%, 52.45%, 55.05%, 51.01%, 60.05%, and 89.12%, respectively, for the bikes images, as shown in Figure 6a. For the trees images (Figure 6b), the repeatability scores of the same detectors are 30.54%, 49.02%, 51.70%, 48.39%, 55.16%, 39.67%, 41.87%, 40.33%, 24.08%, 33.25%, and 65.48%, respectively. For the blurred bikes images, WGHCFD gives an average repeatability score that is 40.08%, 34.22%, 11.25%, 23.51%, 9.39%, 20.52%, 17.92%, 31.77%, 21.90%, and 12.92% higher than SIFT, Bi-SIFT, SURF, STAR, BRISK, ORB, KAZE, Tri-SIFT, Harris–Laplace, and Hessian–Laplace, respectively.

Figure 6.

Blur change images: (a) Repeatability score of bikes; (b) Repeatability score of trees; (c) Matching score of bikes; (d) Matching score of trees.

For the blurred trees images, WGHCFD gives an average repeatability score that is 44.12%, 16.25%, 13.78%, 17.09%, 10.32%, 25.81%, 23.61%, 25.15%, 41.01%, and 32.23% higher than the SIFT, Bi-SIFT, SURF, STAR, KAZE, BRISK, Hessian–Laplace, and Harris–Laplace detectors, respectively. Figure 6c shows that SIFT, Bi-SIFT, SURF, STAR, KAZE, BRISK, ORB, Tri-SIFT, Harris–Laplace, Hessian–Laplace, and the proposed WGHCFD achieve average matching scores of 46.20%, 59.21%, 62.27%, 56.26%, 67.70%, 51.51%, 49.38%, 65.78%, 41.77%, 44.00%, and 75.79%, respectively, for the blurred bikes images. From Figure 6d, the matching scores of the same detectors are 46.20%, 61.43%, 60.87%, 54.86%, 66.18%, 63.51%, 49.58%, 52.00%, 51.77%, 46.00%, and 71.27%, respectively, for the trees images. WGHCFD gives a matching score that is 41.36%, 10.56%, 13.29%, 12.31%, 7.87%, 38.19%, 45.79%, and 43.57% higher than SIFT, Bi-SIFT, SURF, STAR, BRISK, ORB, KAZE, Tri-SIFT, Harris–Laplace, and Hessian–Laplace, respectively, for the blurred bikes images, and 40.48%, 18.26%, 16.41%, 20.03%, 12.99%, 11.11%, 10.91%, 13%, and 15.69% higher than the same detectors, respectively, for the blurred trees images. The proposed WGHCFD achieves higher repeatability and matching scores than SIFT, Bi-SIFT, SURF, STAR, BRISK, ORB, KAZE, Tri-SIFT, Harris–Laplace, and Hessian–Laplace because it detects more feature points than the other detectors: as the blur increases, it still retains the edges of the scene efficiently.

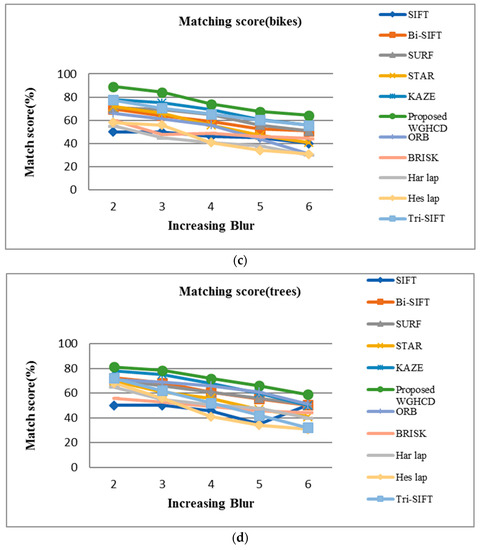

The performance of the descriptors on the blurred bikes and trees images is evaluated using recall and 1-precision, Equations (12) and (13). Figure 7 shows the evaluation of the SIFT, SURF, ORB, BRISK, BRIEF, FREAK, Bi-SIFT, Tri-SIFT, and proposed WGHCFD descriptors. All descriptors are affected by blur. The proposed WGHCFD, Bi-SIFT, Tri-SIFT, and SIFT score higher than SURF, ORB, BRIEF, BRISK, and FREAK, because the SIFT-based descriptors are robust to blur. Among all descriptors, the proposed WGHCFD gives the highest score on blurred images because it yields the largest number of matches.

Figure 7.

Blur change images—recall vs. 1-precision.

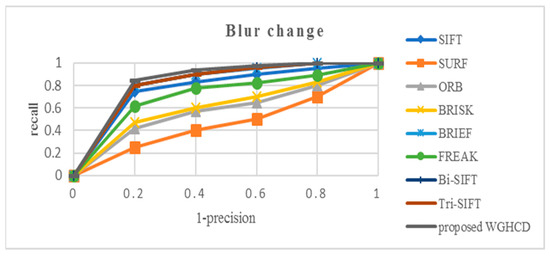

4.2.2. Viewpoint Change Images

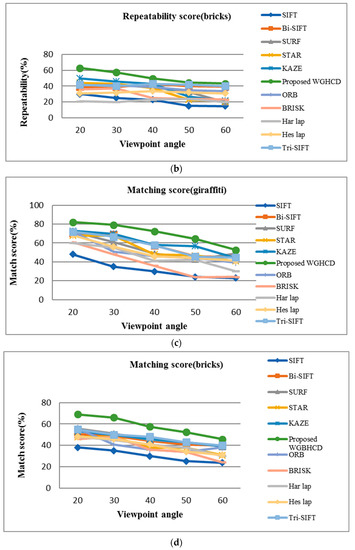

From Figure 8a, WGHCFD gives an average repeatability score that is 39.08%, 26.69%, 18.99%, 20.72%, 15.38%, 11.18%, 15.15%, 8.89%, 20.03%, and 22.75% higher than the SIFT, Bi-SIFT, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively, for the graffiti viewpoint change images.

Figure 8.

Viewpoint change images: (a) Repeatability score of graffiti; (b) Repeatability score of bricks; (c) Matching score of graffiti; (d) Matching score of bricks.

As shown in Figure 8b, WGHCFD achieves an average repeatability score that is 27.27%, 8.62%, 13.98%, 15.11%, 11.37%, 19.87%, 27.28%, and 16.87% higher than the SIFT, Bi-SIFT, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively, for the bricks images. Figure 8c shows that WGHCFD gives a matching score that is 33.20%, 27.70%, 19.50%, 22.93%, 16.94%, 10.58%, 9.58%, 9.45%, 18.50%, and 23.90% higher than the SIFT, Bi-SIFT, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively, for the graffiti images. As shown in Figure 8d, WGHCFD achieves a matching score that is 24.12%, 22.63%, 23.84%, 19.06%, 17.47%, 15.91%, 10.11%, 9.45%, 21.45%, and 24.49% higher than the same detectors, respectively, for the bricks images. The better performance of WGHCFD on viewpoint change images is attributed to the invariance properties of the Harris corner detector.

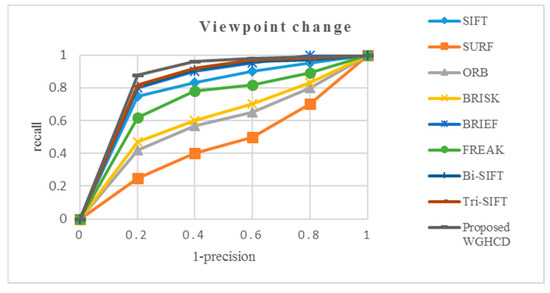

The WGHCFD descriptor is evaluated against the SIFT, SURF, ORB, BRISK, BRIEF, FREAK, Bi-SIFT, and Tri-SIFT descriptors using recall vs. 1-precision, and the results are shown in Figure 9. Recall increases gradually for all descriptors. The SURF descriptor performs worse than ORB, BRISK, BRIEF, and FREAK. WGHCFD performs better than Bi-SIFT, Tri-SIFT, and SIFT because the added Harris corner points make it robust to viewpoint changes.

Figure 9.

Viewpoint change images—recall vs. 1-precision.

4.2.3. Zoom+Rotation Change Images

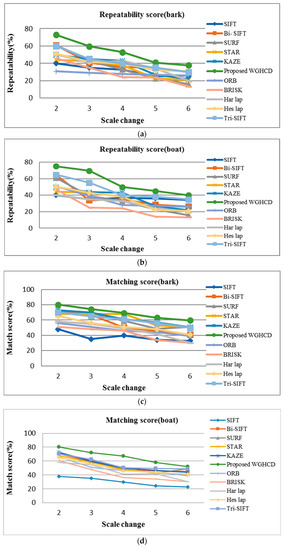

The zoom+rotation change images used in this evaluation are shown in Figure 5c. Figure 10a–d shows the repeatability and matching scores of SIFT, Bi-SIFT, GBHCD, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace for the bark and boat images. WGHCFD gives average repeatability scores that are 34.39%, 17.45%, 21.90%, 22.23%, 19.69%, 21.57%, 19.37%, 8.34%, 21.45%, and 24.79% higher for the bark images, and 32.45%, 17.50%, 21.89%, 19.42%, 18.08%, 9.56%, 11.76%, 10.23%, 20.34%, and 21.33% higher for the boat images, than the SIFT, Bi-SIFT, SURF, STAR, BRISK, KAZE, ORB, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively.

Figure 10.

Zoom+rotation change images: (a) Repeatability score of bark; (b) Repeatability score of boat; (c) Matching score of bark; (d) Matching score of boat.

As shown in Figure 10c,d, WGHCFD gives average matching scores that are 30.56%, 24.86%, 22.87%, 24.09%, 20.90%, 21.74%, 18.74%, 13.23%, 23.45%, and 24.31% higher for the bark images, and 33.82%, 15.32%, 10.13%, 11.35%, 10.16%, 11.00%, 14.60%, 22.67%, 11.78%, 20.45%, and 25.38% higher for the boat images, than the SIFT, Bi-SIFT, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively. The proposed WGHCFD achieves higher repeatability and matching scores than the other detectors because more feature points are extracted and correctly matched in the zoom+rotation change images.

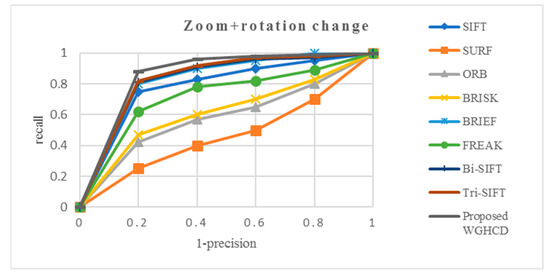

The proposed WGHCFD descriptor is evaluated against the SIFT, SURF, ORB, BRISK, BRIEF, FREAK, Bi-SIFT, and Tri-SIFT descriptors using recall vs. 1-precision, and the results are shown in Figure 11. WGHCFD gives a higher recall than all of these descriptors; its high performance on zoom+rotation change images is due to its rotation invariance.

Figure 11.

Zoom+rotation change images—recall vs. 1-precision.

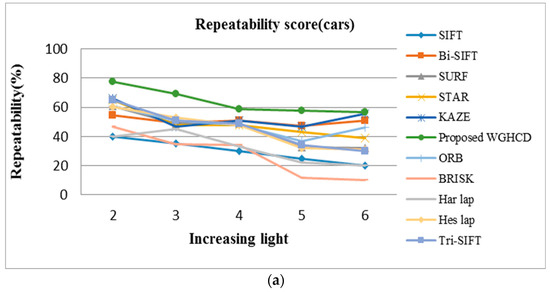

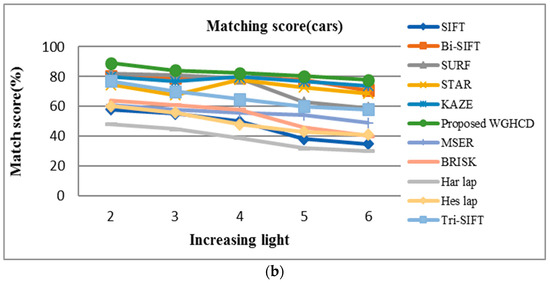

4.2.4. Illumination Change Images

Figure 12a,b shows the repeatability and matching scores for the illumination change images in the dataset [38]. For the cars images, WGHCFD gives a repeatability score that is 37.94%, 16.96%, 20.85%, 18.07%, 13.24%, 18.92%, 15.52%, 14.23%, 20.34%, and 25.69% higher, and a matching score that is 32.80%, 24.50%, 18.31%, 21.53%, 14.14%, 16.18%, 10.18%, 8.32%, 23.54%, and 24.35% higher, than the SIFT, Bi-SIFT, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively. The proposed WGHCFD outperforms the existing algorithms because low-contrast points are eliminated using the blur metric calculation.

Figure 12.

Illumination change images: (a) Repeatability score of cars; (b) Matching score of cars.

Figure 13 shows the performance of the descriptors on illumination change images. SIFT, Bi-SIFT, Tri-SIFT, and the proposed WGHCFD perform better than SURF, ORB, BRISK, BRIEF, and FREAK because of their robustness to illumination changes.

Figure 13.

Illumination change images—recall vs. 1-precision.

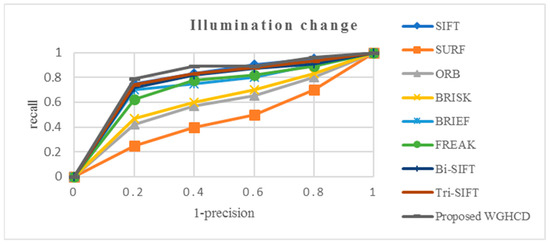

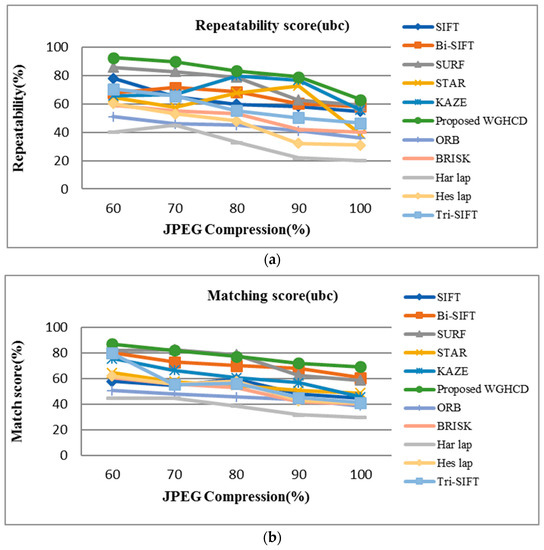

4.2.5. JPEG Compression Images

Figure 14a,b shows the repeatability and matching scores for the JPEG compression test images (ubc) in the dataset [40]. WGHCFD gives repeatability scores that are 35.37%, 18.45%, 20.68%, 24.10%, 25.51%, 13.73%, 15.55%, 8.34%, 24.67%, and 26.72% higher, and matching scores that are 30.96%, 19.06%, 20.07%, 23.69%, 24.10%, 13.72%, 15.94%, 10.34%, 24.12%, and 29.11% higher, than the SIFT, Bi-SIFT, SURF, STAR, ORB, KAZE, BRISK, Tri-SIFT, Harris–Laplace, and Hessian–Laplace detectors, respectively. The proposed WGHCFD gives better results than the existing detectors because it extracts more stable points.

Figure 14.

JPEG compression images: (a) Repeatability score of ubc; (b) Matching score of ubc.

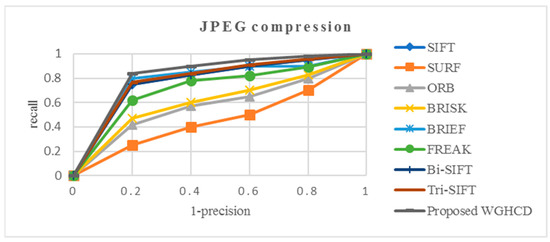

Figure 15 shows the performance of the SIFT, SURF, ORB, BRISK, BRIEF, FREAK, Bi-SIFT, Tri-SIFT, and proposed WGHCFD descriptors for JPEG compression images. Recall increases only gradually for all descriptors because they are all affected by JPEG artifacts. The proposed WGHCFD obtains better results than SIFT, Bi-SIFT, SURF, Tri-SIFT, ORB, BRISK, BRIEF, and FREAK since it is more stable under compression artifacts.

Figure 15.

JPEG compression image—recall vs. 1-precision.

The feature detector experiments show that the Hessian–Laplace and Harris–Laplace detectors give lower repeatability and matching scores for blurred images, since their feature points are not as scale-invariant as those of the other detectors. The SURF detector gives results close to those of SIFT and runs faster, but achieves lower repeatability and matching scores for all types of image conditions. The STAR and BRISK feature detectors perform worse than the proposed WGHCFD for all types of image transformations. KAZE scores better than SIFT, but KAZE features are computationally more expensive. The Bi-SIFT and Tri-SIFT detectors give moderate results for all image transformations.

The descriptor evaluation shows that the proposed WGHCFD performs better than SIFT, Bi-SIFT, SURF, Tri-SIFT, ORB, BRISK, BRIEF, and FREAK for all image transformations. SURF performs worse than SIFT. ORB performs well for rotation change images but not for other transformations. BRISK, BRIEF, and FREAK perform poorly on blur change images. These results confirm that WGHCFD improves both the repeatability score and the matching score and is robust to various image transformations such as scale, viewpoint angle, zoom+rotation, illumination, and compression.

4.3. WGHCFD for Object Recognition Applications

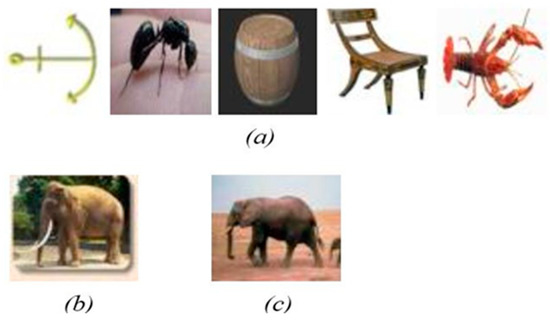

The performance of the proposed system is analyzed for object recognition on a real-time dataset of 101 object categories, in which each object has 10 images with significant variations in scale, viewpoint, color, pose, and illumination. Figure 16a shows some of the objects in this dataset. WGHCFD features are extracted from each input image. For example, Figure 16b shows a query image to be recognized, and Figure 16c shows that the object in it is recognized correctly.
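The text does not specify how extracted features are turned into a recognition decision. One common scheme, shown below purely as an illustrative assumption, matches the query descriptors against the descriptors of each reference image using Lowe's ratio test [4] and assigns the label of the reference image with the most surviving matches. The function names, the ratio of 0.8, and the toy descriptors are hypothetical.

```python
import math


def count_good_matches(query, reference, ratio=0.8):
    """Count query descriptors that pass Lowe's ratio test against a reference image."""
    if len(reference) < 2:
        return 0
    good = 0
    for q in query:
        d = sorted(math.dist(q, r) for r in reference)
        # Accept only if the nearest neighbour is clearly closer than the second.
        if d[0] < ratio * d[1]:
            good += 1
    return good


def recognize(query, database, ratio=0.8):
    """Return the label of the reference image with the most good matches.

    database -- list of (label, descriptors) pairs, one per reference image
    """
    best_label, best_count = None, -1
    for label, descriptors in database:
        n = count_good_matches(query, descriptors, ratio)
        if n > best_count:
            best_label, best_count = label, n
    return best_label


database = [("cup", [[0, 0], [10, 10], [20, 0]]),
            ("key", [[5, 5], [6, 6], [30, 30]])]
query = [[0.1, 0], [10, 10.2]]
print(recognize(query, database))
```

Both query descriptors have an unambiguous nearest neighbour in the "cup" reference, whereas in the "key" reference the two nearest neighbours are too similar to pass the ratio test, so the query is labelled "cup".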

Figure 16.

(a) Sample objects in real-time dataset; (b) Input image for object recognition; (c) Recognized image.

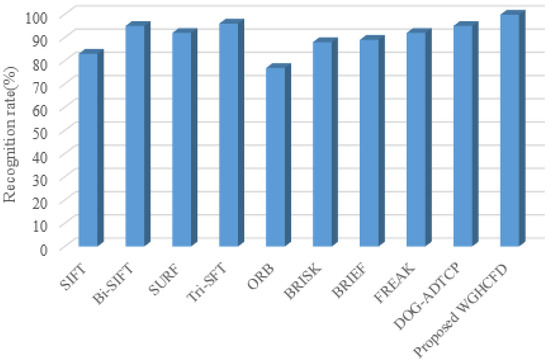

The recognition rate on this dataset is the ratio of the number of correctly recognized images to the total number of images. Figure 17 compares the recognition rate of the proposed WGHCFD method with those of the existing descriptors on the real-time dataset of 1010 images. As shown in Table 3, the proposed WGHCFD has the best recognition rate in this experiment (99.8%), followed by Tri-SIFT (96%), DOG–ADTCP (95%), Bi-SIFT (95%), FREAK (92%), SURF (92%), BRIEF (89%), BRISK (88%), SIFT (83%) and ORB (76.9%). Table 3 also lists, for each method, the number of images recognized out of the 1010 images in the 101-category dataset, together with the corresponding recognition rate.
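The recognition rate defined above is a simple ratio; the sketch below computes it from predicted and true labels (the function name is illustrative). As an arithmetic check, on 1010 images a rate of 99.8% corresponds to 1008 correctly recognized images, since 1008/1010 ≈ 0.99802.

```python
def recognition_rate(predicted, actual):
    """Fraction of images whose predicted label equals the true label."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        return 0.0
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


print(recognition_rate(["cup", "key", "cup", "pen"], ["cup", "key", "pen", "pen"]))
print(round(100 * 1008 / 1010, 1))
```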

Figure 17.

Comparison of recognition rate of proposed WGHCFD with the existing descriptors.

Table 3.

Number of recognized images and recognition rate from a total of 1010 images for 101 object categories.

The experimental analysis shows that WGHCFD achieves a higher recognition rate than the existing feature detectors and descriptors and is invariant to various image transformations. With a recognition rate of 99.8%, WGHCFD is well suited to the object recognition task and can support safe navigation for visually impaired people.

5. Conclusions and Future Work

This paper proposes WGHCFD, a feature extraction method that is robust under different real-world conditions such as scale, rotation, illumination, and viewpoint changes. WGHCFD uses a weighted guided image filter to smooth the image while reducing halo artifacts. The Haar wavelet transform provides blur metric values that are used to eliminate unstable feature points, and the Harris corner points in WGHCFD give more robust feature points. WGHCFD achieves higher repeatability and matching scores than existing feature extraction methods. To help overcome the vision problems of visually impaired persons, the proposed work can be used to recognize objects under transformations such as scale, rotation, viewpoint, and illumination changes. A robust WGHCFD feature detector with high recognition accuracy supports safe navigation for visually impaired people. In the future, the proposed work can be extended to mobile phone systems for better navigation of visually impaired persons.
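For readers unfamiliar with the corner stage, the sketch below computes the classic Harris response [39], R = det(M) - k·trace(M)², where M is the structure tensor of image gradients summed over a local window. It is plain Harris only: it omits the weighted guided filtering and Haar-wavelet blur filtering that distinguish WGHCFD, and it uses central differences, an unweighted 3x3 window, and k = 0.04 as simplifying assumptions.

```python
def harris_response(img, k=0.04):
    """Harris corner response for each interior pixel of a 2-D list of grey values."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    # Image gradients by central differences.
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    R = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            # Structure tensor M summed over a 3x3 window (no Gaussian weighting).
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            R[y][x] = sxx * syy - sxy * sxy - k * (sxx + syy) ** 2
    return R


# Bright square in the lower-right part of a 10x10 image: one corner at (5, 5).
img = [[1.0 if x >= 5 and y >= 5 else 0.0 for x in range(10)] for y in range(10)]
R = harris_response(img)
peak = max((R[y][x], (y, x)) for y in range(10) for x in range(10))[1]
print(peak)
```

The response is positive at the corner, zero in flat regions, and negative along straight edges, which is why thresholding R keeps corner-like points and discards edge points.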

Author Contributions

Conceptualization, M.R., P.S., T.S., S.A. and H.K.; methodology, M.R. and P.S.; software, M.R., P.S. and T.S.; validation, M.R., P.S., T.S., S.A. and H.K.; formal analysis, M.R. and P.S.; investigation, M.R., P.S. and T.S.; resources, H.K.; data curation, M.R. and S.A.; writing—original draft preparation, M.R. and P.S.; writing—review and editing, M.R., P.S., T.S., S.A. and H.K.; visualization, M.R., P.S. and T.S.; supervision, T.S., S.A. and H.K.; project administration, H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2017R1D1A1B04032598).

Institutional Review Board Statement

Not Applicable.

Informed Consent Statement

Not Applicable.

Data Availability Statement

The datasets used in this paper are publicly available and their links are provided in the reference section.

Acknowledgments

We thank the anonymous reviewers for their valuable suggestions that improved the quality of this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shanthi, K.G. Smart Vision using Machine learning for Blind. Int. J. Adv. Sci. Technol. 2020, 29, 12458–12463.

- Rahman, M.A.; Sadi, M.S. IoT Enabled Automated Object Recognition for the Visually Impaired. Comput. Methods Programs Biomed. Update 2021, 1, 100015.

- Afif, M.; Ayachi, R.; Said, Y.; Pissaloux, E.; Atri, M. An Evaluation of RetinaNet on Indoor Object Detection for Blind and Visually Impaired Persons Assistance Navigation. Neural Process. Lett. 2020, 51, 2265–2279.

- Lowe, D.G. Distinctive image features from scale-invariant interest points. Int. J. Comput. Vis. 2004, 60, 91–110.

- Zhang, J.; Chen, G.; Jia, Z. An image stitching algorithm based on histogram matching and SIFT algorithm. Int. J. Pattern Recognit. Artif. Intell. 2017, 31, 1754006.

- Arth, C.; Bischof, H. Real-time object recognition using local features on a DSP-based embedded system. J. Real-Time Image Process. 2008, 3, 233–253.

- Zhou, H.; Yuan, Y.; Shi, C. Object tracking using SIFT features and mean shift. Comput. Vis. Image Underst. 2009, 113, 345–352.

- Sirmacek, B.; Unsalan, C. Urban area and building detection using SIFT keypoints and graph theory. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1156–1167.

- Chang, L.; Duarte, M.M.; Sucar, L.E.; Morales, E.F. Object class recognition using SIFT and Bayesian networks. Adv. Soft Comput. 2010, 6438, 56–66.

- Soni, B.; Das, P.K.; Thounaojam, D.M. Keypoints based enhanced multiple copy-move forgeries detection system using density-based spatial clustering of application with noise clustering algorithm. IET Image Process. 2018, 12, 2092–2099.

- Lodha, S.K.; Xiao, Y. GSIFT: Geometric scale invariant feature transform for terrain data. Int. Soc. Opt. Photonics 2006, 6066, 60660L.

- Ke, Y.; Sukthankar, R. PCA-SIFT: A more distinctive representation for local image descriptors. CVPR 2004, 4, 506–513.

- Abdel-Hakim, A.E.; Farag, A.A. CSIFT: A SIFT descriptor with color invariant characteristics. Comput. Vis. Pattern Recognit. 2006, 2, 1978–1983.

- Morel, J.M.; Yu, G. ASIFT: A new framework for fully affine invariant image comparison. SIAM J. Imaging Sci. 2009, 2, 438–469.

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

- Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; pp. 430–443.

- Agrawal, M.; Konolige, K.; Blas, M.R. CenSurE: Center surround extremas for realtime feature detection and matching. In Proceedings of the European Conference on Computer Vision, Marseille, France, 12–18 October 2008; pp. 102–115.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Alcantarilla, P.F.; Bartoli, A.; Davison, A.J. KAZE features. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 214–227.

- Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the IEEE International Conference on Computer Vision (ICCV 2011), Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G.R. ORB: An efficient alternative to SIFT or SURF. ICCV 2011, 11, 2.

- Huang, M.; Mu, Z.; Zeng, H.; Huang, H. A novel approach for interest point detection via Laplacian-of-bilateral filter. J. Sens. 2015, 2015, 685154.

- Şekeroglu, K.; Soysal, O.M. Comparison of SIFT, Bi-SIFT, and Tri-SIFT and their frequency spectrum analysis. Mach. Vis. Appl. 2017, 28, 875–902.

- Ghahremani, M.; Liu, Y.; Tiddeman, B. FFD: Fast Feature Detector. IEEE Trans. Image Process. 2021, 30, 1153–1168.

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. BRIEF: Binary robust independent elementary features. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010.

- Alahi, A.; Ortiz, R.; Vandergheynst, P. FREAK: Fast Retina Keypoint. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2012, Providence, RI, USA, 16–21 June 2012; pp. 510–517.

- Simo-Serra, E.; Torras, C.; Moreno-Noguer, F. DaLI: Deformation and Light Invariant Descriptor. Int. J. Comput. Vis. 2015, 115, 115–136.

- Weng, D.W.; Wang, Y.H.; Gong, M.M.; Tao, D.C.; Wei, H.; Huang, D. DERF: Distinctive efficient robust features from the biological modeling of the P ganglion cells. IEEE Trans. Image Process. 2015, 24, 2287–2302.

- Kim, W.; Han, J. Directional coherence-based spatiotemporal descriptor for object detection in static and dynamic scenes. Mach. Vis. Appl. 2017, 28, 49–59.

- Sadeghi, B.; Jamshidi, K.; Vafaei, A.; Monadjemi, S.A. A local image descriptor based on radial and angular gradient intensity histogram for blurred image matching. Vis. Comput. 2019, 35, 1373–1391.

- Yu, Q.; Zhou, S.; Jiang, Y.; Wu, P.; Xu, Y. High-Performance SAR Image Matching Using Improved SIFT Framework Based on Rolling Guidance Filter and ROEWA-Powered Feature. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 920–933.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

- Jingade, R.R.; Kunte, R.S. DOG-ADTCP: A new feature descriptor for protection of face identification system. Expert Syst. Appl. 2022, 201, 117207.

- Yang, W.; Xu, C.; Mei, L.; Yao, Y.; Liu, C. LPSO: Multi-Source Image Matching Considering the Description of Local Phase Sharpness Orientation 2022. IEEE Photonics J. 2022, 14, 7811109.

- Dusmanu, M. D2-net: A trainable CNN for joint description and detection of local features. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 8092–8101.

- Li, Z.; Zheng, J.; Zhu, Z.; Yao, W.; Wu, S. Weighted guided image filtering. IEEE Trans. Image Process. 2015, 24, 120–129.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Tong, H.; Li, M.; Zhang, H.; Zhang, C. Blur detection for digital images using wavelet transform. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME 2004), Taipei, Taiwan, 27–30 June 2004; pp. 17–20.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Fourth Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

- Mikolajczyk, K. Oxford Data Set. Available online: http://www.robots.ox.ac.uk/~vgg/research/affine (accessed on 24 May 2022).

- Schmid, C.; Mohr, R.; Bauckhage, C. Evaluation of interest point detectors. Int. J. Comput. Vis. 2000, 37, 151–172.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).