Abstract

In robotic manipulation, object grasping is a basic yet challenging task. Dexterous grasping requires intelligent visual observation of the target objects, and this paper emphasizes the importance of spatial equivariance in learning the grasping policy. This paper addresses two significant challenges of robotic grasping in both cluttered and occluded scenarios. The first challenge is the coordination of push and grasp actions: in a well-ordered object scenario, the robot may occasionally fail to disrupt the arrangement of the objects, whereas in a randomly cluttered object scenario, pushing may be less efficient because many objects are likely to be pushed out of the workspace. The second challenge is occlusion, which occurs when the camera itself is entirely or partially occluded during a grasping action. This paper proposes a multi-view change observation-based approach (MV-COBA) to overcome these two problems. The proposed approach has two parts: (1) multiple cameras provide multiple views to address the occlusion issue; and (2) visual change observation based on the pixel depth difference coordinates the push and grasp actions. In simulation experiments, the proposed approach achieved average grasp success rates of 83.6%, 86.3%, and 97.8% in the cluttered, well-ordered object, and occlusion scenarios, respectively.

1. Introduction

Object grasping is an important step in a variety of robotic tasks, yet it remains challenging in robotic manipulation [1]. Perception of the surroundings is also a valuable skill for a robot that performs tasks with multiple actions (e.g., push or grasp) in clutter using deep reinforcement learning (deep-RL) algorithms [2]. In particular, the robot should be able to detect changes in the workspace state by comparing pixel depth differences between the previous and current workspace states; otherwise, an ineffective push that does not actually help grasping can leave the robot struggling to grasp. The second concern is an occluded workspace, in which intelligent grasp pose estimation is needed to pick up all of the objects and a single view of the workspace may not be sufficient. A single camera may not provide enough visual data during a robotic grasp task, making it difficult to precisely predict object locations in an occluded environment. These two problems, robotic grasping in cluttered surroundings and avoidance of occlusion, are closely related. This raises the question of whether both problems can be avoided, and grasp efficiency improved, by executing grasping tasks in cluttered and occluded environments on the basis of multiple views and visual change observation.

Deep learning (DL) makes it possible to represent data at several levels of abstraction [3]. Reinforcement learning (RL), on the other hand, refers to how software agents learn, through trial and error, to act in a way that maximizes cumulative reward. Combining these two machine learning approaches uses deep learning's representation ability to address the reinforcement learning problem. A typical deep-RL system employs a deep neural network to compute a non-linear mapping from perceptual inputs to action values, and uses RL signals to update the network weights, generally via backpropagation, to yield better rewards [4]. In robotic grasping, the robot examines its surroundings using RGB-D data and takes the best action available under its policy. In this paper, deep-RL is used to address robotic grasping in cluttered and occluded environments.
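As a minimal illustration of the RL signal used to update such a network, the sketch below computes the standard one-step Q-learning target (reward plus the discounted best Q-value of the next state) for a network that outputs a dense map of action values, together with the squared temporal-difference loss. The function names and the discount factor `gamma=0.5` are illustrative assumptions, not values taken from this paper.

```python
import numpy as np


def q_learning_target(reward: float, next_q_map: np.ndarray,
                      gamma: float = 0.5, terminal: bool = False) -> float:
    """One-step Q-learning target: y = r + gamma * max_a' Q(s', a').

    next_q_map holds the predicted Q-values of every candidate action in the
    next state s' (e.g. one value per pixel and rotation). gamma is an
    illustrative discount factor, not a value reported in the paper.
    """
    if terminal:
        # Nothing is bootstrapped from a terminal state.
        return float(reward)
    return float(reward + gamma * np.max(next_q_map))


def squared_td_loss(q_pred: float, target: float) -> float:
    """Squared temporal-difference error; its gradient drives backpropagation."""
    return (target - q_pred) ** 2


# Usage: a grasp earned reward 1.0 and the best next-state action value is 0.5.
y = q_learning_target(1.0, np.array([[0.25, 0.5], [0.125, 0.375]]))
print(y)                          # 1.25
print(squared_td_loss(1.0, y))    # 0.0625
```

Only the executed action's predicted Q-value is compared with the target; the maximum over the next-state map is what makes this Q-learning rather than an on-policy method.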

Various studies have used deep-RL to grasp objects from a single viewpoint. Robot grasping [5,6,7,8,9,10,11,12] has been extensively researched, but the emphasis has primarily been on performing a particular task in clutter with a single viewpoint. To perform various robotic manipulations, several techniques have been used, such as cooperative object manipulation [13] and reach-to-grasp-to-place [14,15,16,17,18,19,20]. To perform pattern exploration and grasp identification, Guo et al. [21] proposed a mutual convolutional neural network (CNN). To solve the problem of mixing grasp recognition and object detection with relationship reasoning in piles of objects, Zhang et al. [22] proposed a multi-task convolutional robotic grasping network. Park et al. [23], on the other hand, used a single multi-task deep neural network to learn grasp recognition, object detection, and object relationship reasoning. Currently, the most common input for determining a grasp is a single RGB-D (red green blue with depth) image taken from a single fixed location, which provides limited visual data. When partial views are insufficient to gain a thorough understanding of the target object, additional perspectives are useful. For example, Morrison et al. [24] examined various informative perspectives to reduce the uncertainty in grasp pose estimation caused by clutter and occlusions, which enables the robot to grasp invisible objects. However, these approaches do not consider whether the camera itself is fully or partially occluded.

Robot grasping, pushing [25], shift-to-grasp [26], and push-to-grasp [27] have been intensively studied, but mostly for performing a specific task. Some approaches have involved performing various robotic manipulations, and push or shift actions during grasping in clutter have been implemented in different studies. However, pushing or shifting an object can be useful even if the workspace is not cluttered. For instance, an object may lie in a position where it cannot be grasped, and shifting it can make it easy for the robot to grasp. In prior studies, the push and grasp actions were synergized as complementary parts on the basis of selecting the action with the maximum predicted Q-value, either to clear objects from the table [27], grasp invisible objects using color segmentation [28,29] or the object index number [30], or perform block arrangement tasks [31]. In this maximum value strategy, Q-learning is applied to two neural networks (NNs) in parallel: the first NN estimates the push Q-value, whilst the second estimates the grasp Q-value. At each iteration state, the NNs estimate the Q-values for grasping and pushing; if the grasp Q-value is low and the push Q-value is high, the push action is executed, and vice versa. This strategy presents the following challenges.
(1) When pushing and grasping are chosen on the basis of the maximum predicted Q-values of two primitive actions (i.e., grasp and push) from two neural networks, the robot perceives the full clutter and prioritizes push over grasp because the grasp Q-values remain low. Because the workspace is limited in size, the foremost objects tend to be pushed beyond the workspace's borders, where the robot cannot grasp them.
(2) For the robot to learn to coordinate push and grasp (e.g., deciding when and how to push or grasp), many iterations are required, and in some cases, the robot fails to disassemble closely arranged objects.
(3) The robot may struggle to grasp when the camera is fully or partially occluded, because it then lacks visual data, making it difficult to precisely predict object locations in occluded environments.
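To make the maximum value strategy and challenge (1) concrete, the sketch below shows how a two-network baseline picks a primitive: whichever Q map contains the larger value wins, and that primitive is executed at the map's best rotation and pixel. The `(rotations, H, W)` array layout and the tie-breaking rule in favour of grasping are assumptions for illustration, not details taken from the cited works.

```python
import numpy as np


def select_action_max_q(push_q: np.ndarray, grasp_q: np.ndarray):
    """Baseline 'maximum value' strategy over two separately predicted Q maps.

    push_q, grasp_q: arrays of shape (num_rotations, H, W), one per network
    (layout assumed for illustration). Ties go to grasping (also an assumption).
    Returns (primitive, (rotation_idx, row, col)).
    """
    if np.max(grasp_q) >= np.max(push_q):
        q_map, primitive = grasp_q, "grasp"
    else:
        q_map, primitive = push_q, "push"
    idx = np.unravel_index(np.argmax(q_map), q_map.shape)
    return primitive, tuple(int(i) for i in idx)


# In dense clutter the grasp map stays low everywhere, so push always wins.
push_q = np.zeros((2, 3, 4)); push_q[0, 1, 2] = 0.9
grasp_q = np.full((2, 3, 4), 0.1); grasp_q[1, 0, 0] = 0.3
print(select_action_max_q(push_q, grasp_q))  # ('push', (0, 1, 2))
```

Because the decision depends only on comparing two independently learned maxima, nothing in this rule checks whether the previous push actually helped, which is the gap the change observation mechanism targets.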

This paper proposes a multi-view and change observation-based approach (MV-COBA) that uses two cameras to obtain multiple views of state changes in the workspace and coordinates grasp and push execution effectively. The approach aims to prevent the lack of visual data caused by a single view and to perform effective robotic grasping in various working scenarios. The main objective of the MV-COBA is to address the additional challenges of grasping in cluttered and occluded environments. The MV-COBA is divided into two parts. In the first part, two RGB-D cameras capture RGB-D images of the workspace from two separate perspectives; intermediate features are extracted from each captured image, fused, and fed to a single grasp network. In the second part, the robot performs a grasp and then compares the previous and current states of the workspace. If no visual change is observed, the robot performs a push action. The main technical contributions of this paper are as follows:

- Using multiple views to maximize grasp efficiency in both cluttered and occluded environments;

- Establishing a robust change observation for coordinating the execution of primitive grasp and push actions in a fully self-supervised manner;

- Incorporating multi-view and change observation into one approach to perform push and grasp actions in a wide range of scenarios;

- The learning of MV-COBA is entirely self-supervised, and its performance is validated via simulation.

This paper is organized as follows. Section 2 discusses the related works. Section 3 states the research problem definition and the motivation of the proposed approach. Section 4 describes the proposed MV-COBA, including change observation, action representation, problem formulation, and the approach overview. Section 5 presents the simulation setup and training protocol. Section 6 provides the simulation results and discussions. Section 7 presents the conclusion and future work.

2. Related Works

An industrial robot should be able to perceive and interact with its surroundings. Among its many basic skills, the ability to grasp is essential, because grasping underpins many of the robotic tasks that benefit society [2]. Several approaches using deep-RL have been proposed in recent years to perform grasping in clutter.

2.1. Single View with Grasp-Only Policy

The grasp-only policy is a strategy for teaching a robot to grasp objects using only the grasp action, with no other primitive actions (such as push, shift, and poke) involved. A single depth image has recently been used to execute grasping, where the depth image is passed to a four-branch convolutional neural network (CNN) with a shared encoder–decoder [32]. Active vision has been used to address robotic perception [33,34,35], which is considered the key subtask in grasping, but it has not been extensively exploited for grasping in cluttered environments. Kopicki et al. [36] addressed how to improve dexterity in grasping novel items viewed from a single perspective. They proposed a single-view mechanism in which the single view works as a direct regression of point clouds. However, the back side of the object is invisible to the camera, so some data are missing, which makes it more difficult to grasp the object from the back. In addition, six-degree-of-freedom (6-DoF) grasping of the target object has been performed in cluttered scenes using point cloud-based partial observation [9,37]. Recent studies that focused on multi-finger grasps (e.g., [8,32,38,39]) primarily employed planned precision grasping for known objects. However, grasping in crowded settings with a multi-finger gripper is inefficient, particularly when retrieving a target object from clutter, since a multi-finger hand may struggle to find enough space to move its fingers freely in the workspace. In comparison, other studies have concentrated on robotic grasping of unknown objects with parallel-jaw grippers (e.g., [37,40,41,42]). As stated in [43,44], multi-finger grasps remain more problematic than parallel-jaw grasps.
However, such grasp-only policies may fail to grasp items in a well-organized cluttered arrangement that requires assistance from other actions (e.g., pushing, shifting) to detach the objects from the arrangement. Furthermore, they do not consider the occlusion challenge, particularly when the camera itself is partially or fully occluded.

2.2. Suction and Multifunctional Gripper-Based Grasping

As an alternative to gripper-based grasping, suction-based grasping is an effective mechanism for grasping in cluttered environments via deep-RL. For pick-and-place tasks, Shao et al. [45] used a suction grasp mechanism, rather than a parallel-jaw gripper, to avoid the difficulty of grasping objects in clutter, training via Q-learning on ResNet and U-Net structures. Another study [46] presented a suction grasp point affordance network (SGPA-Net) in which two neural networks (NNs) were trained in series using greedy policy sampling-based Q-learning. However, connecting the two networks and training them with a mixed loss produced unsatisfactory results. Furthermore, the authors in [47] attempted to enhance the success rate of suction grasping by using the dataset of [40]. Rather than grasp-only or suction-based grasping, other researchers coordinated gripping and suction at the same time using deep-RL, with a device referred to as a multifunctional gripper: a composite robotic hand with a suction cup. For instance, Zeng et al. [48] created a robotic pick-and-place system that could grasp and detect both known and unknown objects in a cluttered environment via a deep Q-network (DQN). Yen-Chen et al. [49] focused on ‘learning to perceive’ and transferred that knowledge to ‘learning to act’. In addition, a deep graph convolutional network (GCN) model was used to predict suction and gripper end-effector affordances in robotic bin picking [50]. However, these methods concentrate on grasp-only policies, which may be ineffective when well-organized items must be detached. Additionally, they neglect the occlusion issue, which arises when the camera is partially or fully obscured.

2.3. Synergizing Two Primitive Actions

Synergizing two primitive actions can help in clutter when the actions are executed as complementary parts. Although synergizing two actions is a fundamental strategy, it remains a challenging policy to learn, and several studies that focused on improving grasping performance have contributed to this field. For instance, visual push-to-grasp (VPG) was proposed in [27], learning pushing and grasping in parallel using Q-learning with fully convolutional networks (FCNs). In another study, grasping was performed in clutter using a deep Q-critic [28], which learns the annotation of the target objects through FCNs. In addition, the task of singulating an object from its surrounding objects was performed in [51] using a split deep Q-network (split-DQN) that learns optimal push policies. Object color detection, used in [52], is limited to certain types of objects in training and testing scenarios. Hundt et al. [31] described the schedule for positive task (SPOT) reward and the SPOT-Q RL algorithm, which efficiently learns multistep block manipulation tasks. Another method for promoting object grasping in clutter using DQN was proposed in [26]; it shifts an object by placing the fingers of a gripper on top of the target object to improve grasping performance. Given a depth observation of a scene, an RL-based strategy was used to learn optimal push policies in [53]. These policies facilitate grasping of target objects in cluttered scenes, possibly including objects unseen during training, by separating them from the surrounding objects. However, the authors employed an instance push policy, in which a single push policy is learned for the recognizable target in clutter via Q-learning.

A goal-conditioned hierarchical RL formulation with high sample efficiency was presented in [30] to train a push-to-grasp policy for grasping a specific object in clutter. In [54], the visual foresight tree (VFT) method was presented to identify the shortest sequence of actions to retrieve a target object from a densely packed environment. The method combined a deep interactive prediction network (DIPN) for predicting push action outcomes with Monte Carlo tree search (MCTS) for selecting the best action. However, VFT requires a long computation time because of the large MCTS tree. Additionally, it assumes prior knowledge and relies on a target object with a specific color. Alternatively, the DQN-based obstacle rearrangement (DORE) algorithm was proposed in [55], and its success rates were checked using intermediate performance tests (IPTs). These approaches, however, are limited to retrieving the target object using identical grid space-based object rearrangement, which cannot be applied to instances that are not modeled by regularly spaced identical grids. These studies concentrated on object retrieval tasks rather than object removal tasks, assumed prior knowledge, and relied on a target object with a specific color.

Furthermore, whereas other approaches rely on a segmentation module that must be able to identify the target object, Yang et al. [56] accepted the target object as an image. However, this approach failed in some cases, and its grasp success rate reached only 50%, particularly in heavily cluttered scenes, owing to the physical limitations of the gripper and the visual similarity of the models in the random dataset. A similar concept of image segmentation combined with visuomotor mechanical search was used in [57], where deep-RL agents efficiently uncovered a target object obscured by a pile of unknown items. However, in some cases the agent repeated the same action without causing any change in the environment. In other cases, the agent moved near the target object but did not interact with the items occluding it.

2.4. Multi-View-Based Grasping

Some studies have avoided the occlusion scenario through techniques such as grasping invisible objects using color segmentation [28] and radio frequency perception [52]. Other approaches have employed multiple views from a single camera to increase the probability of grasp success in clutter. For example, Morrison et al. [24,58] proposed a multi-view approach that employs active vision to choose informative perspectives for grasp pose estimation. A grasping task in a cluttered environment has been performed using a generative grasping CNN (GG-CNN). A composite algorithm has been proposed for estimating the pose of a target whose models are derived from multiple views [59]. Other approaches target crowded scenes, in which a robot must know whether an object lies on top of another object in a pile in order to grasp it successfully. For instance, a shared CNN has been proposed for discovering and detecting the occluded target [21]. To avoid the issue of mixing grasp execution and object detection in piles of objects, a multi-task convolutional robotic grasping network was proposed in [22]; its authors developed a framework that uses multiple deep neural networks to generate local feature maps and to perform grasp recognition, object detection, and interaction reasoning separately. Instead of multiple networks, a single multi-task network has been proposed to avoid the same issue of mixing grasp execution and object detection [23]. In addition, instance segmentation methods have mostly been used to detect the target object. For example, a grasping task was achieved in [40] by training the Q-learning policy on pre-trained models (i.e., the VGG neural network) using fully convolutional networks (FCNs). In [40], the authors used multiple views from different sides by capturing RGB-D images.

2.5. The Knowledge Gap

Some difficulties arise from combining the two actions. In the literature, the push motion has been used to accomplish specific goals such as retrieving an object from its cluttered surroundings [28,30,34,54,55,56,57,60,61,62]. This approach assumes prior knowledge and relies on a predefined target object identified by color, quick response (QR) code, or barcode. In object removal tasks [26,27,63,64,65,66], the pushing and grasping actions have been synergized using two parallel FCNs, one each for grasping and pushing. The works of [9,27,67] are the closest to ours. Al-Shanoon et al. [9] proposed the Deep Reinforcement Grasp Policy (DRGP), which performs a grasp-only policy with no other actions (such as push, shift, and poke) involved. This strategy encounters difficulty especially in a well-organized shape situation where there is no space for the gripper's fingers to execute the grasp. The other two papers [27,67] synergized push and grasp using the maximum Q-value strategy, with Q-values predicted by two FCNs; this strategy is the basis of our work. However, it fails in certain cases because the robot pushes the entire pile of objects, causing items to be pushed out of the robot's workspace. In addition, it performs push movements when they are not necessary, resulting in a series of grasping and pushing actions, because grasp points and push directions are estimated on separate FCNs.

Another challenge is that the push action can be less efficient in random clutter. The reason is that the robot's best action is determined by the highest predicted Q-value, so the robot selects the push action because the grasping Q-values remain low, as reported in [27]. To overcome these challenges, we advocate training both the push and the grasp actions with the same FCN, for two reasons. First, it reduces model complexity. Second, it helps to minimize the unnecessary push behavior generated by the maximum Q-value strategy. The push and grasp actions are then coordinated by observing the change in pixel depth in the workspace. Additionally, works that used multiple views (e.g., [24,40]) employed a single camera-based multi-view strategy without regard to whether the camera is completely or partially obscured, in which case the robot fails to complete the task. Furthermore, they learned only grasp policies, which cannot accomplish tasks that require detaching well-organized objects. The proposed MV-COBA, which uses dual cameras for multiple views and change observation to execute primitive grasp and push actions effectively as complementary parts, is intended to alleviate the challenges of clutter and occlusion scenarios. The next section describes and discusses in detail the problems of the aforementioned related works.

3. Problem Definitions

The work presented in this paper addresses one of the most fundamental problems in robotic manipulation: robotic grasping in cluttered scenarios. Consider a well-organized shape situation where objects are placed close to each other, as illustrated in Figure 1. Such an arrangement leaves no space for the gripper's fingers to execute a grasp without first detaching the tightly arranged objects. DRGP [9], which teaches a robot to grasp objects using only the grasp action, with no other non-prehensile actions (such as push, shift, and poke), would not be able to grasp efficiently in this situation. Synergizing non-prehensile and grasp actions is a mechanism for addressing the challenges that grasp-only policies cannot handle. Previous studies have synergized push and grasp using the maximum Q-value strategy (e.g., [7,27,67]), in which two NNs, one for each action, are trained to predict the best push and grasp Q-values, and the action with the higher Q-value is executed.

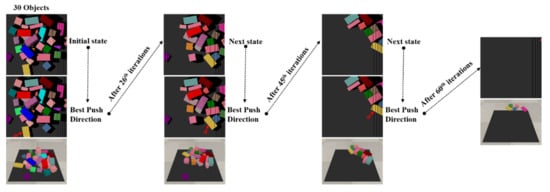

Figure 1.

Challenge one of the first problem: learning to push the entire pile of objects in a well-ordered scenario.

This learning scheme has the following issues: (1) Ineffective push execution: the robot pushes the entire pile instead of the targeted object, so several pushes are needed to detach the objects from their arrangement (see Figure 1). (2) Push is favored over grasp, particularly in heavy clutter, because grasping Q-values remain low, as reported in [27], so the entire pile can be pushed out of the workspace (see Figure 2). (3) The robot performs the push action when the grasp action should be performed instead. In other words, the robot could often grasp without needing to push, and vice versa, but the maximum value strategy may cause it to push when grasping is the better option.

Figure 2.

Challenge two of the first problem: some objects tend to be pushed out of the workspace in a randomly cluttered scenario.

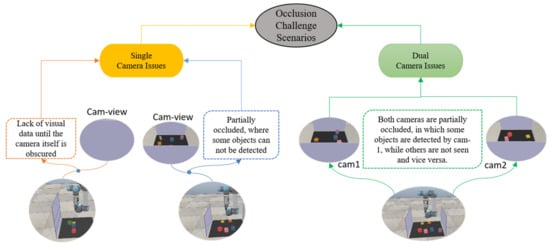

The second challenge is occlusion avoidance. Several studies have performed grasping tasks effectively. However, to achieve grasping in clutter where objects are stacked on top of each other in a randomly cluttered scenario [28], a single-perspective view is insufficient. Employing multiple views from a single movable camera [24] to increase the probability of grasp success in clutter is not an adequate solution either: this strategy suffers from a lack of visual data when the camera itself is obscured, and no other camera is available to compensate for the missing visual input. Although multiple views have been used to grasp occluded objects [59], there has been no consideration of the case in which one of the cameras is totally obstructed or both cameras are partially obscured. How two cameras can compensate for each other throughout the grasping task to avoid such difficulties also remains to be explored. Executing the grasp action with dual-camera multiple views that complement each other to alleviate clutter and occlusion has not been previously considered. Figure 3 illustrates the occlusion problem, where one of the cameras is fully or partially occluded.

Figure 3.

The challenge of occlusion scenarios.

The MV-COBA’s Motivation

The MV-COBA is motivated by the problem of learning to push and grasp in cluttered environments using deep-RL. In this paper, we demonstrate how the MV-COBA allows robotic systems to acquire a range of sophisticated vision-based manipulation skills (e.g., pushing and grasping) that generalize to a number of different scenarios while consuming less data. Since a single-view robotic learning strategy cannot overcome the occlusion challenge, the problem is modeled with multiple views from two cameras. MV-COBA-based grasping in clutter and occlusion is seen as an alternative approach that can facilitate spatial equivariance in learning robotic manipulation. We hope that, by emphasizing the importance of spatial equivariance in learning manipulation, the MV-COBA presented in this paper can inform key design decisions for future manipulation approaches.

In this paper, we introduce approaches based on deep reinforcement learning for learning to grasp objects in clutter. To map visual observations to actions, we employ the Q-learning framework with a fully convolutional network (FCN). The idea is to use traditional controllers to perform primitive actions (e.g., grasp and push) and an FCN to learn visual affordance-based grasping or pushing Q-values. In other words, the FCN maps visual observations (e.g., an RGB-D image) to perceived affordances (e.g., confidence scores or action values). For instance, to learn the visual affordances of grasping or pushing with a parallel-jaw gripper, a fully convolutional network can take an RGB-D image of the robot workspace (e.g., a workspace filled with objects) as input and output a dense pixel-wise map of the Q-value of grasping or pushing success for each observable surface in the image. The robot then moves its end effector, equipped with a parallel-jaw gripper, to perform the primitive action at the pixel with the highest estimated affordance value.
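The pixel-to-action step can be sketched as follows. This is a minimal sketch that assumes, hypothetically, a Q-value map of shape `(num_rotations, H, W)` defined over a top-down heightmap, evenly spaced end-effector rotations, and a made-up pixel size and workspace origin; the paper does not specify these values in this section.

```python
import numpy as np


def best_pixel_to_action(q_map: np.ndarray, heightmap: np.ndarray,
                         origin_xy=(0.0, 0.0), pixel_size: float = 0.002):
    """Turn the highest-valued entry of a dense Q map into primitive parameters.

    q_map: (num_rotations, H, W) predicted Q-values; heightmap: (H, W) in metres.
    origin_xy and pixel_size (metres per pixel) are hypothetical calibration values.
    Returns (x, y, z, angle_deg) for the end effector.
    """
    num_rotations = q_map.shape[0]
    rot, row, col = np.unravel_index(np.argmax(q_map), q_map.shape)
    angle_deg = rot * 360.0 / num_rotations    # evenly spaced rotations (assumed)
    x = origin_xy[0] + col * pixel_size        # image columns map to the x axis
    y = origin_xy[1] + row * pixel_size        # image rows map to the y axis
    z = heightmap[row, col]                    # surface height at the chosen pixel
    return float(x), float(y), float(z), float(angle_deg)


q = np.zeros((16, 100, 100)); q[4, 30, 70] = 1.0
hm = np.zeros((100, 100)); hm[30, 70] = 0.05
print(best_pixel_to_action(q, hm, pixel_size=0.5))  # (35.0, 15.0, 0.05, 90.0)
```

Predicting one map per rotation keeps the network translation-equivariant: the same filters score every pixel, and orientation is handled by the rotation index rather than by a separate regression output.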

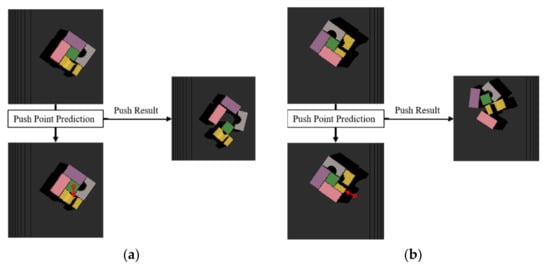

The MV-COBA is divided into two main parts. The first stage is perception, in which a single FCN is used to learn visual affordance-based grasping or pushing Q-values. When two FCNs predict the Q-values for pushing and grasping separately, the robot performs the action with the highest predicted Q-value. In certain situations, especially crammed clutter, the push action is prioritized over the grasp action because the grasp Q-values remain low, resulting in unnecessary push behavior. This problem persisted even when the model was trained for over 7000 iterations [68]. To mitigate this issue, we propose training the grasp and push actions on a single shared network. For example, because the grasp and push actions share the same point of prediction, the robot can shift a specific object (Figure 4a) rather than the whole pile (Figure 4b). Accordingly, this strategy enables the robot to detach the objects in fewer iterations. A second reason why a single shared FCN can improve grasp performance is that push behavior, as shown in Figure 2, can be inefficient in randomly cluttered object scenarios, which become more difficult as the number of objects increases.

Figure 4.

Push action’s efficiency in detaching objects from their arrangement: (a) shifting a specific object (proposed MV-COBA); (b) pushing the entire pile (VPG policy [27]).

Concatenation of multimodal features can provide important and complementary information [69,70]. Thus, in this stage, feature fusion is also used to fuse the features extracted from the raw images of the multiple views, addressing the occlusion challenge illustrated in Figure 3. The goal of feature fusion is to compensate when one of the cameras is completely or partially obscured. This type of application could be beneficial in industrial production lines, where static cameras observe the robot's workspace. For instance, if an obstacle obscures one camera's view of the workspace, the second camera can compensate for the loss of visual data. Similarly, if both cameras are partially obscured, they can compensate for each other's missing visual input.
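A minimal sketch of concatenation-based fusion, assuming (hypothetically) that each camera's intermediate features have already been extracted as `(C, H, W)` maps aligned to a common top-down grid. A fully occluded camera is simulated by zeroing its features; the fused tensor still carries the other camera's channels intact, which is the compensation effect described above. The function name and shapes are illustrative, not the paper's implementation.

```python
import numpy as np


def fuse_views(feat_cam1: np.ndarray, feat_cam2: np.ndarray) -> np.ndarray:
    """Concatenate two per-camera feature maps of shape (C, H, W) along channels.

    Assumes both maps are spatially aligned (same H and W); this is an
    illustrative assumption about the preprocessing, not a detail from the paper.
    """
    if feat_cam1.shape[1:] != feat_cam2.shape[1:]:
        raise ValueError("feature maps must be spatially aligned (same H and W)")
    return np.concatenate([feat_cam1, feat_cam2], axis=0)


# Camera 1 fully occluded: its features carry no information (all zeros).
feat_cam1 = np.zeros((64, 56, 56))
feat_cam2 = np.random.default_rng(0).random((64, 56, 56))
fused = fuse_views(feat_cam1, feat_cam2)
print(fused.shape)  # (128, 56, 56)
```

Concatenation leaves it to the downstream network to learn how much to trust each view; element-wise averaging would instead dilute the unobstructed camera's signal whenever the other camera is blocked.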

In the second stage of the MV-COBA (the design stage), change observation based on pixel depth differencing is adopted as a coordinating mechanism to decide which action should be performed. By examining the visual change between the previous and current states of the workspace, the robot determines whether the previous grasp action was successful or introduced a detectable change in the arrangement of the objects. If no visual change is detected, the previous grasp was ineffective. Based on the principle that a grasp should be attempted by default, whereas a push should be performed only when needed, we minimize unnecessary push behavior by performing the push action only when the previous grasp action has failed. In this paper, we address the problem of coordinating two actions in this way, whereas existing related works addressed it using the maximum value strategy.
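The coordination rule can be sketched as a small decision function: after a grasp, compare the previous and current depth images, and push next only if the grasp produced no detectable change. The depth threshold and the minimum number of changed pixels are hypothetical tuning parameters chosen for illustration; the paper's actual values are not given in this section.

```python
import numpy as np


def workspace_changed(prev_depth: np.ndarray, curr_depth: np.ndarray,
                      depth_thresh: float = 0.01, min_changed_pixels: int = 300) -> bool:
    """Return True if enough pixels changed depth by more than depth_thresh (metres).

    depth_thresh and min_changed_pixels are hypothetical values for illustration.
    """
    diff = np.abs(curr_depth.astype(np.float64) - prev_depth.astype(np.float64))
    return int(np.count_nonzero(diff > depth_thresh)) >= min_changed_pixels


def next_primitive(last_action: str, prev_depth: np.ndarray,
                   curr_depth: np.ndarray, **thresholds) -> str:
    """Grasp by default; push only after a grasp that left the workspace unchanged."""
    if last_action == "grasp" and not workspace_changed(prev_depth, curr_depth, **thresholds):
        return "push"
    return "grasp"


prev = np.zeros((224, 224))
print(next_primitive("grasp", prev, prev.copy()))  # push: nothing moved
curr = prev.copy()
curr[:20, :20] = 0.05                               # 400 pixels changed height
print(next_primitive("grasp", prev, curr))          # grasp
```

Requiring a minimum count of changed pixels, rather than reacting to any single pixel, is one simple way to keep depth-sensor noise from being mistaken for a real change in the arrangement.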

Previous works predicted the grasp pose and push direction using separate networks: the push network and the grasp network each take the RGB-D image, each predicts Q-values, and the action with the maximum value across the grasp and push predictions is selected. However, in certain situations an object can be grasped without being pushed, and vice versa. To replace the maximum value-based strategy for synergizing the two actions, we propose pixel depth differencing, which proved more efficient than the maximum value strategy in our test scenarios. Although pixel depth differencing is not necessarily the best option, it effectively addresses the aforementioned problem. In contrast to previous works, the robot pushes only when pushing is needed, and the same applies to grasping. To achieve these goals, change observation based on pixel depth differencing is proposed to synergize the two actions.

The methodology is outlined in the following section, which covers the workflow of the proposed approach, the learning policy, and the theoretical formulation.

4. Methodology

To begin, the procedure for observing changes based on pixel depth differencing is described. Next, the grasp and push actions are described in detail to explain how they are executed. The problem formulation and reward functions are then discussed. Finally, an overview of the MV-COBA contextualizes the approach's procedure.

4.1. Change Observation

One of the key issues in visual sensing is change observation (also called change detection), which aids in perceiving changes on an object's surface. Singh describes change detection as the process of identifying differences in the state of an object or phenomenon by observing it at different times [71]. In this work, change detection is the process of visually observing the robot's workspace and detecting the change between its previous and current states. Change detection can be divided into three categories [72]: (1) binary change, which uses a binary indicator for changed and unchanged areas; (2) triple change mask, which labels each area as positive change, negative change, or no change; and (3) type change, which uses a full change matrix. In addition, change detection techniques for 3D data are divided into seven categories [73]: geometric comparison, geometry–spectrum analysis, visual analysis, classification, advanced models, geographic information system methods, and other approaches, each with its own set of algorithms for detecting changes in 3D data [74]. This study highlights the benefits of applying change detection to synergize grasp and push motions in grasping tasks. To this end, we use geometric comparison, specifically height differencing, which is founded on the Euclidean distance, together with projection-based difference methods. The basic, direct height differencing technique [75] is sufficient to observe changes in the robot's workspace in our implementation.

Depth Differencing (Image Differencing)

Change detection has mostly been used in applications that track changes on surfaces [76], including urban (e.g., building and infrastructure change detection), environmental, ecological (e.g., landslide, forest, and vegetation monitoring), and civil contexts (e.g., construction monitoring). In robotic grasping, change detection is mostly used to detect the objects in the workspace before starting the robot's training or testing session. Observing the change in the robot's workspace as part of controlling the robot's grasping behavior appears to be a new mechanism for synergizing grasp and push actions. We used the image differencing method, which subtracts the data of two state images pixel by pixel. In other words, image differencing subtracts the digital number (DN) value of one image from the DN value of the other at the same pixel and in the same band, producing a new image. Mathematically, pixel depth image differencing subtracts the depth image of the current state from the depth image of the previous state, as stated in Equation (1).

The main challenge in using the image differencing technique is selecting the threshold value. The threshold varies by application, and there is no specific formula for obtaining it; after investigating the robot's workspace with different threshold values, we selected it by trial and error. A higher threshold means the robot detects only considerable (clearly observable) changes, whereas a lower threshold lets the robot detect even small changes that the human eye might not notice. With the selected threshold, a change observation (CO) is registered if the sum of the pixel depth differences exceeds the threshold value, as shown in Equation (2).

In this instance, the MV-COBA identifies a CO if the summed pixel depth difference is greater than the threshold value. A successful grasping attempt is also considered a CO. Otherwise, no CO exists, as shown in Equation (3).

In the event that no change is detected, the robot executes a push operation. If the push action does not result in a change in the workspace, the robot repeats the push action.
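Equations (1)–(3) and the push rule above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it assumes depth heightmaps are 2D float arrays in metres, sums absolute per-pixel differences (so that raised and lowered regions do not cancel), and uses a hypothetical threshold, since the actual value was chosen by trial and error. The function names are illustrative.

```python
import numpy as np

def pixel_depth_difference(prev_depth: np.ndarray, curr_depth: np.ndarray) -> float:
    """Eq. (1), assumed form: total absolute per-pixel change between two depth heightmaps."""
    return float(np.sum(np.abs(prev_depth - curr_depth)))

def change_observed(prev_depth, curr_depth, threshold, grasp_succeeded=False) -> bool:
    """Eqs. (2)-(3): a CO exists if the last grasp succeeded or the PDD exceeds the threshold."""
    if grasp_succeeded:
        return True
    return pixel_depth_difference(prev_depth, curr_depth) > threshold

def next_action(co: bool) -> str:
    """Grasp by default; push only when the previous action produced no change."""
    return "grasp" if co else "push"

# Usage: a 10x10-pixel patch rose by 5 cm, so the PDD is about 5.0.
prev = np.zeros((224, 224))
curr = prev.copy()
curr[100:110, 100:110] = 0.05
print(next_action(change_observed(prev, curr, threshold=1.0)))  # grasp
```

A push that leaves the heightmap unchanged yields no CO, so `next_action` keeps returning `"push"`, which matches the repeat-push behavior described above.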

4.2. Grasp and Push Action Execution

Each action is represented as a primitive motion performed at a 3D position P, which is projected from a pixel of the heightmap image that represents the state (Equation (4)).
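The pixel-to-position projection of Equation (4) can be sketched as below. The sketch assumes that the heightmap is axis-aligned with the workspace (columns along +x, rows along +y), that it stores heights above the table in metres, and that the origin and pixel size are hypothetical values (0.002 m per pixel would make 224 pixels span 0.448 m). The function name `pixel_to_position` is illustrative.

```python
import numpy as np

def pixel_to_position(depth_heightmap, row, col, origin_xyz, pixel_size):
    """Map heightmap pixel (row, col) to a 3D position P in the robot frame.

    Assumes column index grows along +x, row index along +y, and that the
    heightmap stores height above the table plane located at origin_xyz[2].
    """
    x0, y0, z0 = origin_xyz
    x = x0 + (col + 0.5) * pixel_size   # centre of the pixel along x
    y = y0 + (row + 0.5) * pixel_size   # centre of the pixel along y
    z = z0 + float(depth_heightmap[row, col])
    return np.array([x, y, z])

hm = np.zeros((224, 224))
hm[50, 120] = 0.03  # an object 3 cm tall at this pixel
P = pixel_to_position(hm, 50, 120, origin_xyz=(0.0, 0.0, 0.0), pixel_size=0.002)
```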

The robot executes two primitive motions: grasping and pushing. To perform a grasp action, the robot positions the middle point of the gripper's parallel jaws at P in 1 of 16 orientations. To ensure that it reaches the desired object, the robot moves its fingers 3 cm down before closing them and picking up the object. The gripper is then lifted vertically to approximately 30 cm above the workspace, and the difference between the gripper's location before and after the grasp attempt is compared with 30 cm to determine whether the grasp action has been completed. This check is needed to keep the robot inside the workspace and prevent singularities. Alternatively, a grasp attempt is counted as successful when the robot's fingers are not completely closed, which signifies that an object remains held between the gripper's fingers until it is placed down.

For a push action, the robot starts at position P and pushes 10 cm in 1 of 16 directions. The robot closes its fingers before moving down and pushes with the tips of its closed fingers. The push is deemed successful if the robot completes the full push length on the workspace. In both primitive actions, motion planning of the robot arm is performed automatically using a reliable, collision-free inverse kinematics solver.
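The primitive parameters described above can be collected as in the following sketch. The constants come from the text (16 orientations, 3 cm descent, 30 cm lift, 10 cm push). The finger-gap success test and its tolerance are hypothetical stand-ins for the simulator's gripper-closure check, and motion planning is left to the IK solver. All function names are illustrative.

```python
import math

NUM_ORIENTATIONS = 16
ANGLE_STEP_DEG = 360.0 / NUM_ORIENTATIONS  # 22.5 degrees
GRASP_DESCENT_M = 0.03   # fingers move 3 cm below P before closing
LIFT_HEIGHT_M = 0.30     # approximate lift height used in the grasp check
PUSH_LENGTH_M = 0.10     # straight push of 10 cm

def orientation_angle(index: int) -> float:
    """Angle in radians of orientation/direction index 0..15."""
    return math.radians(index * ANGLE_STEP_DEG)

def grasp_target(p):
    """Point the jaws' midpoint is driven to before closing (3 cm below P)."""
    x, y, z = p
    return (x, y, z - GRASP_DESCENT_M)

def push_endpoints(p, index: int):
    """Start and end (x, y, z) of a 10 cm push from P in direction `index`."""
    theta = orientation_angle(index)
    x, y, z = p
    end = (x + PUSH_LENGTH_M * math.cos(theta), y + PUSH_LENGTH_M * math.sin(theta), z)
    return p, end

def grasp_succeeded(finger_gap_m: float, fully_closed_gap_m: float = 0.0, tol_m: float = 0.002) -> bool:
    """Hypothetical check: fingers that did not fully close after lifting hold an object."""
    return finger_gap_m > fully_closed_gap_m + tol_m
```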

4.3. Problem Formulation

The grasping and pushing actions are formulated as a Markov decision process (MDP) [48]. The MDP serves as the theoretical basis for reinforcement learning because it describes the interaction process of reinforcement learning in probabilistic terms. An MDP can be expressed as a tuple $(S, A, T, R, \gamma)$, which consists of a set of states $S$, a set of actions $A$, the transition probability function $T$, a reward function $R$, and a discount factor $\gamma$. At every time step $t$, after reaching a state $s_t$ and executing an action $a_t$ according to the current policy $\pi(s_t)$, the agent transitions to a new state $s_{t+1}$ according to the transition probability $T(s_{t+1} \mid s_t, a_t)$ and receives a corresponding reward $r_t$. This process is repeated at each time step. The goal of reinforcement learning is to find an optimal policy $\pi^*$ that maximizes the long-term return, $G_t = \sum_{i=t}^{H} \gamma^{\,i-t} r_i$, over the time horizon $H$, taking into account the discount factor $\gamma$. The discount factor determines the importance of future rewards at the present state: with a smaller value of $\gamma$, the agent focuses more on optimizing immediate rewards. However, while performing an action, we cannot be certain of the future states.
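The effect of the discount factor on the long-term return (the sum of rewards weighted by γ^(i−t)) can be seen with a small example. The reward sequence below is hypothetical: two actions that earn nothing, followed by a successful grasp worth 1.0.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**(i - t) * r_i from t = 0 over a finite horizon, computed backwards."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total

rewards = [0.0, 0.0, 1.0]  # hypothetical: two ineffective actions, then a successful grasp
far_sighted = discounted_return(rewards, gamma=0.9)
short_sighted = discounted_return(rewards, gamma=0.5)
```

The same delayed success is worth about 0.81 with γ = 0.9 but only 0.25 with γ = 0.5, which is why a smaller γ pushes the agent toward immediate rewards.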

One way to determine the best policy is to first compute the state–action value function, which is the approach taken by Q-learning. Accordingly, we must consider all potential scenarios and estimate the long-term return of each state–action pair based on the likelihood of each state transition, as stated in Equation (5), which is known as the Bellman equation [77]. The state–action value function $Q^{\pi}(s_t, a_t)$ is the expected long-term return after performing an action $a_t$ in the present state $s_t$ and then following the policy $\pi$.

The action with the highest action value is output and yields an immediate reward. The policy therefore acts greedily, choosing the action with the highest state–action value, as shown in Equation (6) [77]. In this way, the agent selects, among all potential actions, the one that maximizes the action value function, that is, the expected sum of future rewards.
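For reference, the textbook forms of the Bellman equation and the greedy policy described by Equations (5) and (6) are shown below. The notation is an assumption and may differ from the original equations: $T$ is the transition probability, $r_t$ the immediate reward, $\gamma$ the discount factor, and $A$ the action set.

```latex
Q^{\pi}(s_t, a_t) = \mathbb{E}_{s_{t+1} \sim T(\cdot \mid s_t, a_t)}
  \left[ r_t + \gamma \, Q^{\pi}\!\left(s_{t+1}, \pi(s_{t+1})\right) \right]

\pi(s_t) = \arg\max_{a \in A} Q(s_t, a)
```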

We propose adopting deep Q-learning, a deep reinforcement learning method that combines fully connected networks (FCNs) with Q-learning, so that Q-values are produced by a fitted function rather than looked up in a Q-table. In this paper, we define the learning process for synergizing two actions (grasp and push). In previous related work, the two actions were predicted by two separate networks; in contrast, we reformulate the problem with one prediction network for both the primitive grasp and push actions. First, the target FCN calculates Q-values for each potential action in a given state, and an ε-greedy policy is used to select the action. This policy addresses the exploration–exploitation trade-off in Q-learning: the value of ε progressively decreases throughout the training phase to achieve a favorable balance between exploration and exploitation. Once an action is executed in the current state according to the policy, the action with the highest Q-value in the next state is used to calculate the target Q-value. As given in Equation (7), the target Q-value, which is used to update the Q-value of each action, is the sum of the immediate reward received at the current state and the discounted Q-value of the next state.

Then, the action value function is updated for each action in a given state using the temporal difference (TD) method, which moves the estimate toward the TD target in pursuit of an optimal policy. TD learning minimizes the temporal difference error, obtained by subtracting the current Q-value $Q(s_t, a_t)$ from the target Q-value $y_t$, as stated in Equation (8).
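The ε-greedy selection, the TD target, and the TD error described above can be sketched as follows. This sketch uses the standard DQN-style target (reward plus γ times the maximum next-state Q-value). It does not model the change-observation condition that the text later folds into Equation (10). The Q-values, reward, and γ are illustrative numbers, and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def epsilon_greedy(q_values: np.ndarray, epsilon: float) -> int:
    """Return a flat action index: random with probability epsilon, else argmax of Q."""
    if rng.random() < epsilon:
        return int(rng.integers(q_values.size))
    return int(np.argmax(q_values))

def td_target(reward: float, next_q_values: np.ndarray, gamma: float) -> float:
    """Standard target: y = r + gamma * max over a' of Q(s', a')."""
    return reward + gamma * float(np.max(next_q_values))

def td_error(target: float, current_q: float) -> float:
    """Temporal-difference error: target Q-value minus current Q-value."""
    return target - current_q

q_next = np.array([0.2, 0.7, 0.4])
y = td_target(reward=1.0, next_q_values=q_next, gamma=0.5)  # 1.0 + 0.5 * 0.7
delta = td_error(y, current_q=0.9)
```

Decaying ε toward zero during training makes `epsilon_greedy` increasingly exploit the argmax action, which is the trade-off the text describes.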

We treat the heightmap as the state. Our model's purpose is to learn the action value function $Q(s_t, a_t)$, which estimates the expected return of a grasping or pushing action $a_t$ in state $s_t$ under a policy $\pi$. However, instead of a single RGB-D camera, we use two RGB-D cameras with two perspectives, so the state $s_t$ consists of two color heightmaps and two depth heightmaps. In the proposed approach, the problem is modeled as a discrete MDP. Because the robot workspace is observed from two views, two pairs of color and depth heightmaps are generated, and the state representation is the set of both pairs. In the MDP, the robot performs action $a_t$ in state $s_t$, transitions to the next state $s_{t+1}$, and receives the reward $r_t$. To begin, each color and depth heightmap is rotated to each of the 16 orientations before being sent to the FCN to create Q-values, and the highest Q-value is then selected. The action to execute is determined by comparing the pixel depth difference (PDD) with the threshold, as stated in Equation (2). Since there are two state representations, the PDD is obtained for each view, as stated in Equation (9), and a change is observed if the PDD of either view exceeds the threshold.
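The two-view rule of Equation (9) (a change is observed if either view's PDD exceeds the threshold) can be sketched as below. The sketch assumes per-view depth heightmaps of equal size, uses absolute differences, and sets a hypothetical threshold. In the usage example, only camera 2 sees the change, which is the situation the multi-view setup targets.

```python
import numpy as np

def two_view_change(prev_depths, curr_depths, threshold):
    """Compute the PDD for each camera view; report a change if any view exceeds the threshold."""
    pdds = [float(np.sum(np.abs(p - c))) for p, c in zip(prev_depths, curr_depths)]
    return any(d > threshold for d in pdds), pdds

prev = [np.zeros((224, 224)), np.zeros((224, 224))]
curr = [prev[0].copy(), prev[1].copy()]
curr[1][10:20, 10:20] = 0.04  # only camera 2 sees the change (camera 1 occluded)
changed, pdds = two_view_change(prev, curr, threshold=1.0)
```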

To express Equation (7) within this scheme, the action is selected using the change observation obtained by subtracting the current depth heightmap from the previous one, as stated in Equation (3). Equation (7) can then be rewritten as Equation (10).

The action is parameterized as a vector comprising the middle position of the gripper, P, and the rotation of the gripper in the table plane during action execution. The action value function, which evaluates the quality of each potential action in a given state, is approximated by a fully connected network (FCN). To ease the learning of push and grasp actions, the input heightmap is rotated into 16 orientations before being sent to the FCN, each corresponding to a push or grasp action at a different multiple of 22.5° from the original state. The Q-function network then concatenates the retrieved visual features and execution parameters to form the policy state. The policy selects the optimal action by mapping the action distribution over the current state representation using the FCN (i.e., the action network). The primitive action and pixel with the greatest Q-value across all 16 pixel-wise Q-value maps constitute the action that maximizes the Q-function (Equation (11)).
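Selecting the maximizing action in Equation (11) amounts to an argmax over a stack of pixel-wise Q-value maps. The sketch below assumes the maps are stacked along the first axis, one per rotation (shape `(16, H, W)`). If push and grasp maps are stacked together (for example 2 × 16 maps), the same argmax applies with a longer first axis. The function name is hypothetical.

```python
import numpy as np

ANGLE_STEP_DEG = 22.5  # rotation between consecutive heightmap orientations

def best_action(q_maps: np.ndarray):
    """Return (rotation index, row, col, angle in degrees) of the highest Q-value.

    q_maps has shape (num_rotations, H, W): one pixel-wise Q-value map per rotation.
    """
    rot, row, col = np.unravel_index(np.argmax(q_maps), q_maps.shape)
    return int(rot), int(row), int(col), float(int(rot) * ANGLE_STEP_DEG)

q = np.zeros((16, 224, 224))
q[3, 40, 90] = 1.0
choice = best_action(q)
```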

Once the pixel with the best predicted Q-value is obtained, a grasp is performed. In subsequent iterations, the visual change between the previous and current states of the workspace is examined to determine the suitable action, using the change observation strategy described in Section 4.1.

Reward function: The reward gives the agent a score indicating how well it is performing in the environment; in other words, rewards represent both gains and losses. Future rewards are discounted by multiplying them by the discount factor γ, as written in Equation (10). The reward function for MV-COBA is divided into two groups, grasp reward and push reward, as follows.

Grasp reward: is assigned for a failed grasp attempt and no change detection in the workspace, and is assigned for a failed grasp attempt with change detection in the workspace, whereas is assigned for a successful grasp attempt.

Push reward: is assigned for pushes with no change detection in the workspace, and is assigned for pushes with change detection in the workspace.
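The grouping of the reward function can be sketched as a lookup over the five cases listed above. The reward values are passed in as a dictionary because the section does not state them. The keys and the numbers in the usage example are placeholders for illustration, not the paper's values.

```python
def mvcoba_reward(action: str, grasp_success: bool, change_detected: bool, rewards: dict) -> float:
    """Return the step reward following the grasp/push grouping in the text.

    `rewards` holds hypothetical values under illustrative keys.
    """
    if action == "grasp":
        if grasp_success:
            return rewards["grasp_success"]
        if change_detected:
            return rewards["grasp_fail_change"]
        return rewards["grasp_fail_no_change"]
    if action == "push":
        return rewards["push_change"] if change_detected else rewards["push_no_change"]
    raise ValueError(f"unknown action: {action!r}")

# Placeholder numbers chosen only to exercise the logic.
example_rewards = {
    "grasp_success": 1.0,
    "grasp_fail_change": 0.25,
    "grasp_fail_no_change": 0.0,
    "push_change": 0.5,
    "push_no_change": 0.0,
}
```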

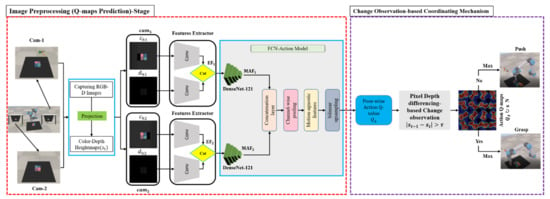

4.4. MV-COBA Overview

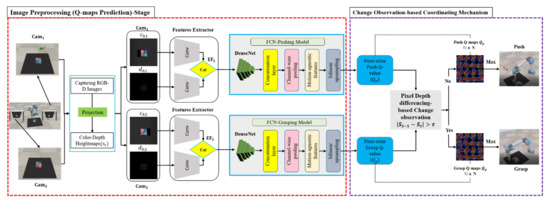

An illustration of the proposed MV-COBA is presented in Figure 5. The MV-COBA works in two stages. The first stage is to take multiple views of the workspace into consideration, which aims to increase the likelihood of grasping by having more complete visual data of the workspace. It helps to alleviate difficulty in generating dexterous grasping points due to visual occlusion in a single-view setting. In the second stage, the robot decides on a push or grasp action based on the highest Q-value (obtained from the first stage) and the observation of the visual change in the workspace.

Figure 5.

The MV-COBA for executing primitive push and grasp actions.

Firstly, the two fixed-mount RGB-D cameras capture the robot's workspace from two opposing views. Then, all the images are resized to a size of , which is represented as a 2D vector, and two sets of random patches are extracted from them: RGB patches with a size of , and D patches with a size of . Each set of patches is then normalized separately, where , , and are the height, width, and channel, respectively; RGB has three channels (red, green, and blue), and D has one channel. To construct a color heightmap and a depth heightmap, the RGB and depth images are orthographically projected in the direction of gravity using known extrinsic camera parameters. This heightmap image represents the current state of the robot workspace. The heightmap has a resolution of 224 × 224 pixels and covers an area of of the tabletop surface, so each heightmap pixel represents a vertical column of the 3D workspace.
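The orthographic projection into color and depth heightmaps can be sketched with NumPy. The sketch assumes the camera point cloud has already been transformed into the robot frame with the extrinsics. It uses hypothetical workspace limits and pixel size (0.448 m at 0.002 m per pixel gives 224 × 224). It keeps, for each cell, the highest point as seen from above. `np.maximum.at` is used because plain fancy-index assignment does not guarantee which duplicate write wins.

```python
import numpy as np

def project_heightmaps(points, colors, workspace_limits, pixel_size):
    """Orthographically project robot-frame points along gravity into heightmaps.

    points: (N, 3) float array of x, y, z (camera cloud already transformed by extrinsics).
    colors: (N, 3) uint8 RGB values, one per point.
    workspace_limits: ((x_min, x_max), (y_min, y_max), (z_min, z_max)) in metres.
    """
    (x_min, x_max), (y_min, y_max), (z_min, z_max) = workspace_limits
    width = int(round((x_max - x_min) / pixel_size))
    height = int(round((y_max - y_min) / pixel_size))

    # Keep only points that fall inside the workspace box.
    inside = ((points[:, 0] >= x_min) & (points[:, 0] < x_max) &
              (points[:, 1] >= y_min) & (points[:, 1] < y_max) &
              (points[:, 2] >= z_min) & (points[:, 2] < z_max))
    pts, cols = points[inside], colors[inside]

    # Grid cell of every point; clipping guards against float round-off at the far edges.
    px = np.clip(np.floor((pts[:, 0] - x_min) / pixel_size).astype(int), 0, width - 1)
    py = np.clip(np.floor((pts[:, 1] - y_min) / pixel_size).astype(int), 0, height - 1)
    h = pts[:, 2] - z_min

    # Each cell keeps the height of its highest point (the surface seen from above).
    depth_hm = np.zeros((height, width), dtype=np.float64)
    np.maximum.at(depth_hm, (py, px), h)

    # Color each cell with a point that attains the cell's maximum height.
    top = h == depth_hm[py, px]
    color_hm = np.zeros((height, width, 3), dtype=np.uint8)
    color_hm[py[top], px[top]] = cols[top]
    return color_hm, depth_hm

limits = ((0.0, 0.448), (0.0, 0.448), (0.0, 0.3))
pts = np.array([[0.011, 0.011, 0.05],
                [0.0115, 0.0112, 0.10],
                [0.301, 0.201, 0.02]])
rgb = np.array([[255, 0, 0], [0, 255, 0], [0, 0, 255]], dtype=np.uint8)
color_hm, depth_hm = project_heightmaps(pts, rgb, limits, pixel_size=0.002)
```

With two cameras, the same function would be applied to each view's point cloud, yielding the two color and two depth heightmaps that form the state.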

Secondly, features are extracted from each heightmap in a convolutional manner by two trained feature extractor networks (FENs), which are two-layer residual networks [78]; each FEN has two channels. One channel takes the set of RGB patches as input and extracts color features from the RGB images; the other takes the set of D patches as input and extracts shape features from the depth images. Before being fed into DenseNet, the color features and the shape features are each concatenated independently. The output of each FEN is represented as a vector, which is fed into DenseNet-121 [79], pre-trained on ImageNet [80], to generate the motion-agnostic features (MAF).

After forward propagation through the FENs and DenseNet, their outputs are concatenated into the final feature vector. These features (i.e., the MAF) are then sent to the action network, a three-layer residual network followed by bilinear upsampling, to estimate the Q-values of the grasp and push actions at 16 orientations (different multiples of 22.5°). The action that maximizes the Q-function is the primitive action and pixel with the highest Q-value across all 32 pixel-wise Q-value maps. Experience replay [61] is employed: the agent's experience at each time step is stored in a dataset pooled across many episodes, forming a replay memory.
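A replay memory of the kind described above can be sketched with the standard library. This is a minimal version: it assumes a fixed capacity with oldest-first eviction and uniform sampling, and the paper's actual sampling scheme may differ. The class and field names are illustrative.

```python
import random
from collections import deque
from dataclasses import dataclass
from typing import Any

@dataclass
class Transition:
    state: Any
    action: Any
    reward: float
    next_state: Any
    done: bool

class ReplayMemory:
    """Fixed-capacity store of transitions pooled across episodes."""

    def __init__(self, capacity: int, seed: int = 0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def push(self, transition: Transition) -> None:
        self.buffer.append(transition)

    def sample(self, batch_size: int):
        """Uniformly sample `batch_size` distinct transitions."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)

memory = ReplayMemory(capacity=3)
for step in range(5):
    memory.push(Transition(state=step, action="grasp", reward=float(step), next_state=step + 1, done=False))
batch = memory.sample(2)
```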

The primitive push or grasp action is selected based on the highest Q-value. At the same time, the visual change in the workspace is examined by comparing the workspace images of two consecutive states. If no noticeable visual change is detected, the robot performs a push action; otherwise, it executes a grasp action. In self-supervised learning, this process is repeated continuously. The grasp and push actions are trained with a single shared network, the FCN, and predicted by the same prediction process, in contrast to previous works that trained grasp and push actions on two parallel, separate networks. Training grasp and push actions on a single network is intended to avoid unnecessary push behaviors and to prevent objects from being pushed outside the workspace borders.

5. Simulation of Experiments

The V-REP simulator was employed in this paper to run simulation experiments with a UR5 robot equipped with a parallel-jaw gripper. First, each camera captured an RGB-D image of the workspace at a resolution of 640 × 480 pixels. Each pixel of the heightmap corresponded to a horizontally oriented physical grasp centered at that point in the scene. Training was implemented in PyTorch and run on a 3.7 GHz Intel Core i7-8700HQ CPU with an NVIDIA 1660Ti GPU.

5.1. Baseline Comparisons

For performance comparison, several baseline models as described below were adopted. The results of the MV-COBA and these baseline models are presented and discussed in Section 6.

MV-COBA-2FCNs: This baseline adopts almost the same framework as the proposed MV-COBA, including change observation. However, instead of using a single shared FCN to predict either a push or a grasp action, the push and grasp predictions are trained on two separate FCNs, one for grasping and one for pushing, as illustrated in Figure 6. The first camera provides visual data for the push action prediction, while the second camera provides visual data for the grasp point prediction. The goal of this baseline is to determine how the robot executes both actions when each has its own network predictor, which aids the assessment process. Like MV-COBA, the MV-COBA-2FCNs approach seeks to increase the probability of grasp success through the synergy of push and grasp actions, although the grasping action must still be executed proficiently to boost grasp efficiency. Accordingly, the MV-COBA-2FCNs approach consists of two stages. The first stage is a multi-perspective approach that employs two cameras to address the additional difficulties of grasping in a cluttered setting. The second stage is visual change observation, which regulates pushing and grasping actions in clutter and in confined spaces.

Figure 6.

The MV-COBA-2FCNs approach for executing primitive push and grasp actions.

DRGP [9]: Al-Shanoon et al. proposed the Deep Reinforcement Grasp Policy (DRGP), which performs a grasp-only policy with a single view, without other actions such as push, shift, or poke. In well-ordered object scenarios, the difficulty for such a policy is that there is no space for the gripper's fingers to execute the grasp. The performance improvement of our approach during grasping tasks is measured against the DRGP baseline.

VPG [27]: The Visual Pushing for Grasping approach has worked effectively for grasping tasks in cluttered scenarios. However, its push actions occasionally fail and push objects outside the robot's workspace, particularly in randomly cluttered object scenarios.

CPG [67]: Yang et al. developed a sophisticated Q-learning framework for collaborative pushing and grasping (CPG). They proposed a non-maximum suppression policy (policyNMS) that dynamically evaluates pushing and grasping actions by imposing a suppression constraint on unreasonable actions. In addition, a data-driven pushing reward network called PR-Net was used to determine the degree of separation or aggregation between objects. However, because the environment changes throughout execution, selecting the action from the maximum predicted Q-value can occasionally fail to identify the correct action. Moreover, this method does not restrict the pushing distance, which can cause objects to be pushed outside the robot's workspace border.

MVP [24]: The Multi-View Picking (MVP) baseline analyzes multiple informative perspectives for an eye-in-hand camera to reduce the uncertainty in grasp pose estimation caused by clutter and occlusion while reaching for a grasp. This enables it to execute grasps that are not always apparent from the first view. This baseline is the closest to the proposed MV-COBA in its use of multiple views for grasping; however, rather than two cameras, it uses a single movable camera to capture multiple perspectives.

5.2. Training Scenarios

The MV-COBA was trained by self-supervised learning with a simulation platform. During training, a set of ten objects of varying shapes was randomly dropped into the robot’s workspace for training. The robot learned to execute either a grasp or push action by trial and error. After all objects were cleared from the workspace, another set of ten objects was dropped for further training. Experiment data were continuously collected until the robot completed 3000 training iterations.

For the training stage, the stochastic gradient descent (SGD) optimizer was applied to train the FCN with a learning rate of , momentum of 0.9, and a weight decay of . The agent explored using the epsilon-greedy (ε-greedy) policy, with ε starting at 0.5 and decreasing during the training session. The learning performance of the grasping action improved incrementally over the training iterations. At each iteration $i$, the agent was trained by minimizing the temporal difference error $\delta_i$ using the Huber loss function, as stated in Equation (12), where $\theta_i$ denotes the neural network parameters at iteration $i$, and $\theta_i^-$ denotes the target network parameters, which are held fixed between individual updates. We passed gradients only through the single pixel $p$ and the action network values used to compute the predicted value of the executed action; all other pixels backpropagated zero loss at each iteration.
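The single-pixel Huber loss described above can be sketched in NumPy. The Huber threshold of 1.0 is an assumption; with that value, the loss matches PyTorch's `SmoothL1Loss` at its default `beta=1.0`. The mask ensures that only the executed pixel contributes, so every other pixel has zero loss and, in an autodiff framework, zero gradient. The function names are hypothetical.

```python
import numpy as np

def huber(x, delta=1.0):
    """Elementwise Huber loss: quadratic for |x| <= delta, linear beyond."""
    a = np.abs(x)
    return np.where(a <= delta, 0.5 * x ** 2, delta * (a - 0.5 * delta))

def single_pixel_loss(pred_q_map: np.ndarray, target: float, pixel) -> float:
    """Loss only at the executed pixel; all other pixels contribute zero loss."""
    row, col = pixel
    mask = np.zeros_like(pred_q_map)
    mask[row, col] = 1.0
    errors = (target - pred_q_map) * mask  # TD error, zeroed outside the executed pixel
    return float(np.sum(huber(errors)))

q_map = np.zeros((224, 224))
small = single_pixel_loss(q_map, target=0.5, pixel=(2, 3))  # quadratic regime
large = single_pixel_loss(q_map, target=3.0, pixel=(2, 3))  # linear regime
```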

5.3. Testing Scenarios

The proposed MV-COBA was tested in a variety of scenarios to evaluate how well it performs the grasping task. The testing experiment was divided into three sections, each validating a different type of test scenario. The first two scenarios highlight the importance of applying the visual CO idea to synergize grasp and push actions, while the third scenario demonstrates the core benefit of employing multiple views to avoid occlusion. The two cameras capturing multiple views of the robot's workspace complement each other: when the first camera is occluded and cannot see the workspace clearly, the second camera compensates for the missing visual data.

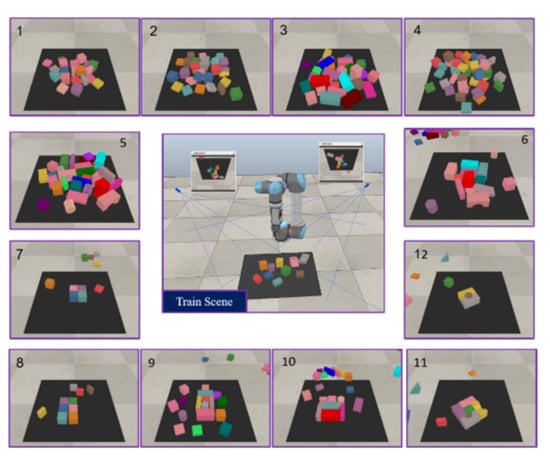

The challenge of randomly cluttered objects (test cases 1–6): The proposed approach was tested on four scenarios with a random selection of 20–40 objects cluttered together, as shown in Figure 7.

Figure 7.

A series of randomly cluttered object challenge scenarios (test cases 1–4) and well-ordered object challenge scenarios (test cases 5–10).

The challenge of well-ordered objects (test cases 7–12): The proposed approach was tested on six scenarios, where 4–17 objects were manually arranged to reflect some challenging selection scenarios, as shown in Figure 7. For instance, some objects were tightly arranged without space for grasping.

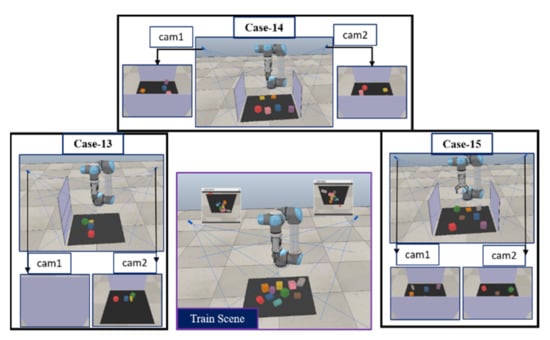

The challenge of occluded objects: The proposed approach was tested on three test cases, in which the view of some objects was occluded from one side, while they could be seen from the other side and vice versa, as illustrated in Figure 8. The occlusion between the camera and target objects can affect the robot’s performance, which may lead to failure in grasping objects. To solve this, we observed the robot’s workspace from two sides using two cameras.

Figure 8.

Challenge scenarios with occluded objects. Case-1: cam1 is completely occluded, whereas cam2 is not. Case-2 and Case-3: both cameras are partially occluded, with some objects detected by cam1 but not visible to cam2, and vice versa.

The first challenge scenario, randomly cluttered objects, is the most commonly seen in the real world. In this scenario, the robot may execute grasping effectively even with the grasp-only policy, whereas the other baselines under consideration have shown lower efficiency due to frequent grasping failures and push behavior. In the second challenge scenario (well-ordered objects), however, accomplishing the grasping task with the grasp-only policy is challenging: because of the arrangement of the objects, the robot may repeatedly fail to grip an object, forcing it to begin a new test session without completing the task. In other words, with the grasp-only policy, the robot can complete the first challenge scenario after several attempts, but it is more likely to fail in the second. In the third challenge scenario (occluded objects), the occlusion between the camera and the objects may prevent the robot from grasping the object with a single view. These three types of scenarios were used to test the MV-COBA's ability to overcome the aforementioned issues. The key goals of these scenarios are as follows: (1) to determine whether using two cameras is more successful than using a single camera in executing grasping tasks in a variety of settings, not only in clutter but also under occlusion; and (2) to determine whether the push action can aid the robot in executing grasping tasks, and whether change observation-based execution of grasp and push actions can complete grasping tasks in a variety of scenarios, not only in well-ordered object challenges.

These scenarios, which differ from the training scenario as explained in Section 5.2, were created by hand to represent difficult situations. In some of these test cases, objects were placed close together in places and orientations where even the best grasping policy would struggle to pick up any of them without first separating them. Furthermore, a single isolated object which was separated from the configuration was set in the workspace as a checkpoint indicating the readiness of the training policy; the policy was deemed not ready if the isolated object was not grasped.

5.4. Evaluation Metrics

The proposed MV-COBA and baseline methods were evaluated on the test cases described above. The robot was required to pick up and clear all objects from the workspace. For each test case, five test runs (denoted by n) were performed. The number of objects in the workspace ranged from 4 to 40. Three evaluation metrics were used to assess the performance of the models; for all of them, a higher value is better. These metrics are as follows:

- The grasp success rate: Ratio of successful grasp attempts to the total of executed actions over n test runs per test case.

- The action efficiency rate: Ratio of the number of objects to the number of executed actions before completion. It is used to measure the capability of the model to perform tasks by grasping all objects.

- The completion rate: The average ratio of the number of cleared objects to the total number of objects. It measures the capability of MV-COBA to grasp all objects in each test case without more than five consecutive failed actions.
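The three metrics can be computed as in the sketch below. Two details are assumptions: the completion rate is taken as the mean of per-run cleared fractions, and a run is marked as failed once more than five consecutive actions fail. The function names are illustrative.

```python
def grasp_success_rate(successful_grasps: int, executed_actions: int) -> float:
    """Successful grasps divided by all executed actions over the test runs."""
    return successful_grasps / executed_actions

def action_efficiency(num_objects: int, executed_actions: int) -> float:
    """Number of objects divided by the number of actions executed before completion."""
    return num_objects / executed_actions

def completion_rate(cleared_per_run, objects_per_run) -> float:
    """Mean over runs of (objects cleared / objects present); per-run averaging is assumed."""
    fractions = [c / n for c, n in zip(cleared_per_run, objects_per_run)]
    return sum(fractions) / len(fractions)

def run_failed(action_outcomes, max_consecutive_failures: int = 5) -> bool:
    """True if more than `max_consecutive_failures` actions fail in a row."""
    streak = 0
    for ok in action_outcomes:
        streak = 0 if ok else streak + 1
        if streak > max_consecutive_failures:
            return True
    return False
```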

6. Results and Discussion

This section presents the findings of the training and testing sessions. For the training session, the results of MV-COBA and the baseline models are shown as graphs of grasp success rate and action efficiency, which indicate how each model behaved throughout training and how quickly and efficiently it learned. For the testing session, results are presented for a series of test cases, each executed for five test runs, and the performance of the models is evaluated based on the grasp success rate, action efficiency rate, and completion rate.

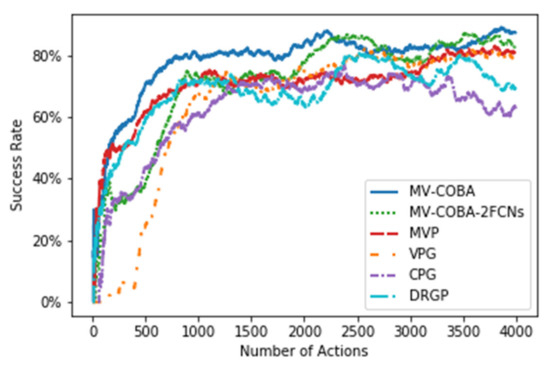

6.1. Training Session Findings

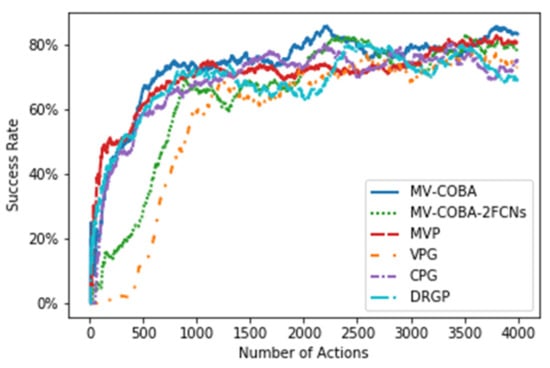

The proposed MV-COBA and other baselines were trained using the same training protocol. Grasping performance was assessed as the percentage of grasp success and action efficiency over the most recent 200 grasp attempts (m = 200). In the earlier training trials (i < m), the percentage was scaled by a factor of . The grasp success rate and action efficiency rate over 4000 training iterations are plotted in Figure 9 and Figure 10, respectively.
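A rolling success rate over the most recent m attempts can be computed as below. For early attempts (i < m), this sketch divides by the number of attempts seen so far. That is equivalent to scaling the raw windowed percentage by m/i, which is an assumed reading of the scaling factor. The function name is hypothetical.

```python
from collections import deque

def rolling_success_rates(outcomes, m=200):
    """Success rate over the most recent `m` attempts, reported after each attempt.

    Before m attempts exist, the sum is divided by the attempts seen so far,
    i.e. the raw windowed percentage is scaled by m / i (assumed factor).
    """
    window = deque(maxlen=m)
    rates = []
    for ok in outcomes:
        window.append(1.0 if ok else 0.0)
        rates.append(sum(window) / len(window))
    return rates
```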

Figure 9.

The performance of MV-COBA compared to that of other baseline models in terms of grasp success rate during the training session.

Figure 10.

The performance of MV-COBA compared to that of other baseline models in terms of action efficiency during the training session.

MV-COBA outperformed the baseline models, reaching 87.2% grasp success and 82.8% action efficiency. MV-COBA-2FCNs, which has almost the same architecture as MV-COBA but uses a two-camera view for visual change detection and two separate FCNs for push and grasp prediction, showed comparable performance, with an 83.8% grasp success rate and an 80.2% action efficiency rate. MV-COBA also enabled the robot to learn quickly while maintaining consistent grasping performance: according to Figure 9 and Figure 10, it achieved an 80% grasp success rate within the first 500 training iterations. In comparison, the DRGP baseline reached a grasp success (and action efficiency) rate of 74.1%, and the MVP baseline reached 77.2%. The VPG and CPG baselines, which are designed to coordinate two primitive actions when executing grasping tasks in a cluttered environment, showed relatively low grasp success and action efficiency rates: 76.7% and 65.2% for VPG, and 71.3% and 59.4% for CPG, respectively. VPG also needed a substantial number of training iterations to learn policies that synergize grasping and pushing, requiring more than 500 iterations to achieve a 50% grasp success rate. Likewise, CPG struggled to reach 55% grasp success within the first 1000 iterations, indicating that it needs many iterations to gain experience in the grasping task. The grasp success rate and action efficiency of MV-COBA and the baseline models are summarized in Table 1.

Table 1.

Evaluating the performance of baseline models during the training phase.

6.2. Testing Session Findings

The findings are divided into three parts: (1) the randomly cluttered object challenge; (2) the well-ordered object challenge; and (3) the occluded object challenge. Across all 15 test scenarios illustrated in Figure 7 and Figure 8, the grasping performance of MV-COBA was compared with that of MV-COBA-2FCNs and of earlier works, namely DRGP, MVP, VPG, and CPG. As detailed in Section 5.4, three metrics, the grasp success rate, the action efficiency rate, and the completion rate (or clearance), were used to evaluate performance in the first and second challenges. The results for the randomly cluttered and well-ordered object challenges are tabulated in Table 2 and Table 3, respectively. In the third challenge, only the grasp success rate and completion rate were used, as tabulated in Table 4. The action efficiency rate, which is measured up to completion, was not calculated because CPG, VPG, and DRGP did not clear all of the objects in any of the test runs.

Table 2.

Evaluation of randomly cluttered object challenge scenarios.

Table 3.

Evaluation of well-ordered object challenge scenarios.

Table 4.

Evaluation of occluded object challenge scenarios.

6.2.1. Randomly Cluttered Object Challenge

Table 2 shows that MV-COBA outperformed the other approaches on all three evaluation metrics in all test cases. It achieved an 83.6% average grasp success rate, compared with 70.8% for MV-COBA-2FCNs, 56.6% for DRGP, 59.8% for MVP, 65.4% for VPG, and 64.3% for CPG. Because the grasp success rate also affects the other two metrics, all baseline methods recorded correspondingly lower action efficiency and completion rates. On average, MV-COBA improved the grasp success rate by about 13 percentage points over MV-COBA-2FCNs, 25 percentage points over DRGP and MVP, and 18 percentage points over VPG and CPG. CPG and VPG fared poorly in the randomly cluttered scenarios, with average action efficiency rates of 48.7% and 51.1%, respectively, followed by DRGP at 56.6% and MVP at 64.5%. In contrast, MV-COBA cleared the objects from the robot’s workspace efficiently, with a 75% action efficiency rate under the same challenging scenarios. The low action efficiency of CPG and VPG suggests that their policies executed a large number of push actions, many of which may not have helped grasping. In addition, excessive pushes tend to move the foremost objects beyond the workspace borders, preventing the policy from completing the task. VPG and CPG showed a moderate completion rate of 70%, whereas DRGP and MVP showed the lowest completion rate among all the methods, at 50%. MV-COBA, on the other hand, achieved a 94% completion rate, picking up all objects from the workspace without failing more than five consecutive actions.
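The improvements quoted above correspond to absolute differences in percentage points rather than relative gains. The short Python script below, using only the average grasp success rates reported in this subsection, makes the distinction explicit; the function and variable names are illustrative.

```python
from typing import Dict, Tuple

# Average grasp success rates (%) in the randomly cluttered scenarios, as reported above.
MV_COBA = 83.6
BASELINES: Dict[str, float] = {
    "MV-COBA-2FCNs": 70.8,
    "DRGP": 56.6,
    "MVP": 59.8,
    "VPG": 65.4,
    "CPG": 64.3,
}


def improvement(new: float, old: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    gain_pp = new - old
    return gain_pp, 100.0 * gain_pp / old


for name, rate in BASELINES.items():
    pp, rel = improvement(MV_COBA, rate)
    print(f"{name:<14} +{pp:4.1f} pp  (+{rel:4.1f}% relative)")
```

The absolute gaps are 12.8 points over MV-COBA-2FCNs, 27.0 and 23.8 points over DRGP and MVP (mean 25.4), and 18.2 and 19.3 points over VPG and CPG, which closely match the quoted figures. The relative gain over MV-COBA-2FCNs alone is about 18%.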

6.2.2. Well-Ordered Object Challenge

This part of the evaluation used the well-ordered challenge scenarios, in which the objects were tightly arranged with no space for grasping. Table 3 shows that MV-COBA performed the grasping task more efficiently than the other approaches, with an 86.3% grasp success rate. MV-COBA also exceeded the average performance of MV-COBA-2FCNs, DRGP, MVP, CPG, and VPG in the other evaluated metrics, with an 80.4% action efficiency rate and a 100% completion rate. As expected, DRGP and MVP performed worst among all methods in the well-ordered scenarios, with average grasp success (and action efficiency) rates of 42.3% and 35.6%, respectively. These policies failed to complete the task in most scenarios, with a completion rate of only 20.6%. Without a non-prehensile action such as pushing, grasp-only policies (e.g., DRGP and MVP) could not disrupt the arrangement of the blocks and repeatedly struggled to grasp the surrounding objects. Whenever a policy failed for more than five consecutive actions, the test run was terminated without finishing the task, and the simulation restarted with a new run. MV-COBA-2FCNs, CPG, and VPG recorded better average performance than the grasp-only policies on all three evaluated metrics.
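The run-termination rule described above can be sketched as follows for a grasp-only policy. The function name and the `attempt_grasp` callback, which stands in for one simulated grasp that returns True on success, are hypothetical. The sketch only illustrates how a run ends either when all objects are cleared or after more than five consecutive failures.

```python
from typing import Callable, List, Tuple


def run_grasp_only_test(
    attempt_grasp: Callable[[], bool],
    num_objects: int,
    max_consecutive_failures: int = 5,
) -> Tuple[int, List[bool]]:
    """Run one test episode; return (objects cleared, per-action outcomes)."""
    cleared, streak = 0, 0
    outcomes: List[bool] = []
    while cleared < num_objects:
        ok = attempt_grasp()
        outcomes.append(ok)
        if ok:
            cleared += 1
            streak = 0                      # a success resets the failure streak
        else:
            streak += 1
            if streak > max_consecutive_failures:
                break                       # abort: the run ends without completion
    return cleared, outcomes


# A policy stuck on tightly packed blocks: every grasp fails, so the run
# is aborted on the sixth consecutive failure.
cleared, outcomes = run_grasp_only_test(lambda: False, num_objects=4)
print(cleared, len(outcomes))  # prints: 0 6
```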

6.2.3. Occluded Object Challenge

In this part of the experiment, objects were purposely arranged so that some of them were partially or fully occluded from the camera view. Using multiple views of the workspace, MV-COBA was able to reconstruct the workspace images and thus gain a better understanding of the workspace. As shown in Table 4, MV-COBA outperformed the baseline approaches by a large margin, achieving an average grasp success rate of 97.8% and a completion rate of 100%. MV-COBA-2FCNs, DRGP, MVP, CPG, and VPG fared very poorly in the occluded scenarios; the best of them, MVP, reached an average grasp success rate of only 44.3%. Although MV-COBA-2FCNs used views from two cameras, its performance was also poor in these situations, because the first camera provided visual data of the workspace for push prediction, while the second camera provided visual data for grasp prediction. Since the two cameras' data were not fused, the robot performed poorly once one or both cameras were partially or fully blocked. Consequently, with all of these approaches the robot failed to complete the task in every scenario, and a 0% completion rate was recorded for each of them; the robot was apparently unable to locate the hidden objects and therefore could not clear all of the objects from the workspace.
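To illustrate why fusing the two views matters, the sketch below merges two top-down heightmaps rendered on the same workspace grid, with NaN marking pixels that a camera could not observe. It uses one simple fusion rule (the per-pixel maximum of the observed heights), chosen here for illustration; this is an assumption, not the feature-fusion scheme used by MV-COBA, and the function name is hypothetical.

```python
import numpy as np


def fuse_heightmaps(h1: np.ndarray, h2: np.ndarray, fill: float = 0.0) -> np.ndarray:
    """Fuse two aligned heightmaps in which NaN marks an unobserved pixel.

    Each pixel takes the highest height seen by either camera; pixels that
    neither camera observed are set to `fill`.
    """
    stacked = np.stack([h1, h2])                    # shape (2, H, W)
    observed = ~np.isnan(stacked)
    heights = np.where(observed, stacked, -np.inf)  # ignore unobserved pixels
    fused = heights.max(axis=0)
    fused[~observed.any(axis=0)] = fill             # blind spot of both cameras
    return fused


# Camera 1 is blocked on three pixels; camera 2 sees two of them.
cam1 = np.array([[0.10, np.nan], [np.nan, np.nan]])
cam2 = np.array([[0.05, 0.20], [np.nan, 0.30]])
print(fuse_heightmaps(cam1, cam2))
```

With camera 1 alone, three of the four pixels would be unknown; after fusion, only the pixel hidden from both cameras falls back to `fill`.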

Other recent grasping methods, e.g., [26,27,67], attempted to handle objects that retain a fixed arrangement by combining two actions. However, these methods have certain limitations. First, they synergized the two actions based on the maximum value: grasping and pushing were trained on two separate neural networks in parallel, and the action with the maximum predicted value was selected. This strategy occasionally fails to detach objects from the arrangement because of the push behavior. Second, their approaches were tested only on detaching objects from an arranged configuration and did not consider randomly arranged objects. Third, they mapped visual observations to actions using only a single view. Studies that used multi-view grasping (e.g., [24,40]) obtained the multiple views from a single camera and did not consider whether the camera itself is fully or partially obscured, which can cause the robot to fail to complete the task. These studies also used grasp-only policies, which cannot grasp objects that are tightly organized, so the robot struggles to complete the task. In contrast, MV-COBA coordinated the grasp and push actions based on change observation rather than the maximum value. In addition, we trained both grasping and pushing with a single shared neural network to avoid unnecessary pushes and to ensure that each push shifted a single object rather than the entire pile. MV-COBA also used multiple views from two cameras placed opposite each other. Combining the two views lets each camera compensate for the other, which improves the likelihood of a successful grasp and avoids the occlusion issue when one camera is completely obscured or both cameras are partially occluded.
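The contrast between the two coordination strategies can be sketched in Python. `select_by_max_value` follows the max-value rule described for [26,27,67], and `workspace_changed` shows a pixel-depth-difference check of the kind that change observation relies on, here used to flag a push that did not alter the scene. Both function names and the thresholds `depth_tol` and `min_changed_px` are hypothetical values chosen for illustration, not parameters reported for MV-COBA.

```python
import numpy as np


def select_by_max_value(q_push: np.ndarray, q_grasp: np.ndarray) -> str:
    """Max-value coordination: run the primitive with the higher predicted Q-value."""
    return "push" if q_push.max() > q_grasp.max() else "grasp"


def workspace_changed(
    prev_depth: np.ndarray,
    curr_depth: np.ndarray,
    depth_tol: float = 0.01,      # hypothetical per-pixel depth tolerance
    min_changed_px: int = 300,    # hypothetical minimum number of changed pixels
) -> bool:
    """Return True if enough pixels changed depth between two observations."""
    diff = np.abs(curr_depth - prev_depth)
    return int(np.count_nonzero(diff > depth_tol)) >= min_changed_px


# A push that displaced one small object changes a 3x3 patch of the depth image.
before = np.zeros((10, 10))
after = before.copy()
after[4:7, 4:7] = 0.05
print(workspace_changed(before, after, min_changed_px=5))   # prints: True
print(workspace_changed(before, before, min_changed_px=5))  # prints: False
print(select_by_max_value(np.array([0.2, 0.9]), np.array([0.5])))  # prints: push
```

Under the max-value rule, a push is chosen whenever its predicted value is higher, even if earlier pushes left the arrangement unchanged; a change check such as `workspace_changed` could instead give the controller direct evidence of whether the last action altered the workspace.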

7. Conclusions and Future Work

One of the challenges in robotics is executing grasping tasks in an unstructured environment. In this paper, the proposed MV-COBA, which combines a dual-camera multi-view approach with a change observation-based approach, showed strong grasping performance in test scenarios involving randomly cluttered, well-ordered, and occluded objects. The simulation results show that MV-COBA could efficiently clear objects from the workspace, achieving grasp success rates of 83.6%, 86.3%, and 97.8% in the cluttered, well-ordered, and occlusion scenarios, respectively. MV-COBA also recorded the highest action efficiency among the tested methods, implying that the policy was effective in synergizing the push and grasp behaviors. Furthermore, it achieved high completion rates (94% in the cluttered scenario and 100% in both the well-ordered and occlusion scenarios), even in challenging scenarios. These results indicate that the proposed learning policy is effective in overcoming the two problems addressed in this work, i.e., the coordination of push and grasp actions and the occlusion caused by relying on a single view. However, the proposed approach was evaluated only in simulation, which is a limitation of this study. In future work, implementing the approach on real robot hardware would provide stronger validation.

Author Contributions

Conceptualization, M.Q.M., L.C.K. and S.C.C.; formal analysis, L.C.K.; funding acquisition, L.C.K., A.S.A. and B.A.M.; investigation, L.C.K., S.C.C., A.A.-D., Z.G.A.-M. and B.A.M.; methodology, M.Q.M. and L.C.K.; project administration, L.C.K. and S.C.C.; resources, M.Q.M.; software, M.Q.M.; supervision, L.C.K. and S.C.C.; visualization, A.S.A., A.A.-D., Z.G.A.-M. and B.A.M.; writing—original draft, M.Q.M.; writing—review and editing, L.C.K., S.C.C., A.S.A., A.A.-D., Z.G.A.-M. and B.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Multimedia University (MMU) through the MMU GRA Scheme (MMUI/190004.02) and the MMU Internal Fund (MMUI/210111).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article.

Acknowledgments

The authors are thankful to Multimedia University (MMU) for supporting this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Marwan, Q.M.; Chua, S.C.; Kwek, L.C. Comprehensive Review on Reaching and Grasping of Objects in Robotics. Robotica 2021, 39, 1849–1882.

- Mohammed, M.Q.; Chung, K.L.; Chyi, C.S. Review of Deep Reinforcement Learning-Based Object Grasping: Techniques, Open Challenges, and Recommendations. IEEE Access 2020, 8, 178450–178481.

- Mohri, M.; Rostamizadeh, A.; Talwalkar, A. Foundations of Machine Learning, 2nd ed.; MIT Press: Cambridge, MA, USA, 2018; Volume 148, pp. 1–162.

- François-Lavet, V.; Henderson, P.; Islam, R.; Bellemare, M.G.; Pineau, J. An Introduction to Deep Reinforcement Learning. Found. Trends Mach. Learn. 2018, 11, 219–354.

- Kumar, N.M.; Mohammed, M.A.; Abdulkareem, K.H.; Damasevicius, R.; Mostafa, S.A.; Maashi, M.S.; Chopra, S.S. Artificial intelligence-based solution for sorting COVID related medical waste streams and supporting data-driven decisions for smart circular economy practice. Process. Saf. Environ. Prot. 2021, 152, 482–494.

- Mohammed, M.Q.; Chung, K.L.; Chyi, C.S. Pick and Place Objects in a Cluttered Scene Using Deep Reinforcement Learning. Int. J. Mech. Mechatron. Eng. IJMME 2020, 20, 50–57.

- Deng, Y.; Guo, X.; Wei, Y.; Lu, K.; Fang, B.; Guo, D.; Liu, H.; Sun, F. Deep Reinforcement Learning for Robotic Pushing and Picking in Cluttered Environment. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 619–626.

- Wu, B.; Akinola, I.; Allen, P.K. Pixel-Attentive Policy Gradient for Multi-Fingered Grasping in Cluttered Scenes. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1789–1796.

- Al-Shanoon, A.; Lang, H.; Wang, Y.; Zhang, Y.; Hong, W. Learn to grasp unknown objects in robotic manipulation. Intell. Serv. Robot. 2021, 14, 571–582.

- Mohammed, M.Q.; Kwek, L.C.; Chua, S.C. Learning Pick to Place Objects using Self-supervised Learning with Minimal Training Resources. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 493–499.

- Lakhan, A.; Abed Mohammed, M.; Ahmed Ibrahim, D.; Hameed Abdulkareem, K. Bio-Inspired Robotics Enabled Schemes in Blockchain-Fog-Cloud Assisted IoMT Environment. J. King Saud Univ. Comput. Inf. Sci. 2021.