Current Status and Future Directions of Deep Learning Applications for Safety Management in Construction

Abstract

1. Introduction

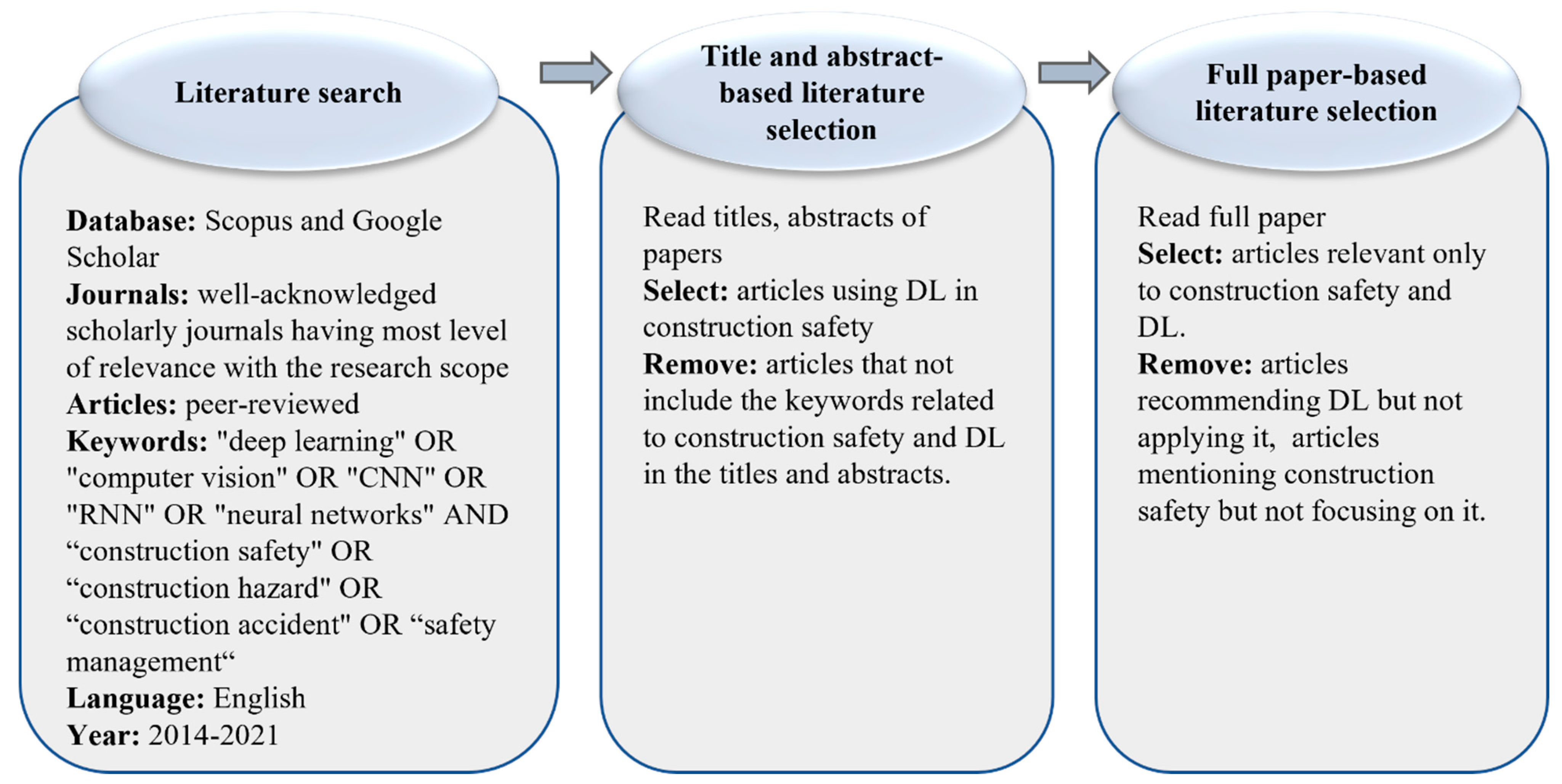

2. Research Methodology

2.1. Literature Search

2.2. Title- and Abstract-Based Literature Selection

2.3. Full-Paper-Based Literature Selection

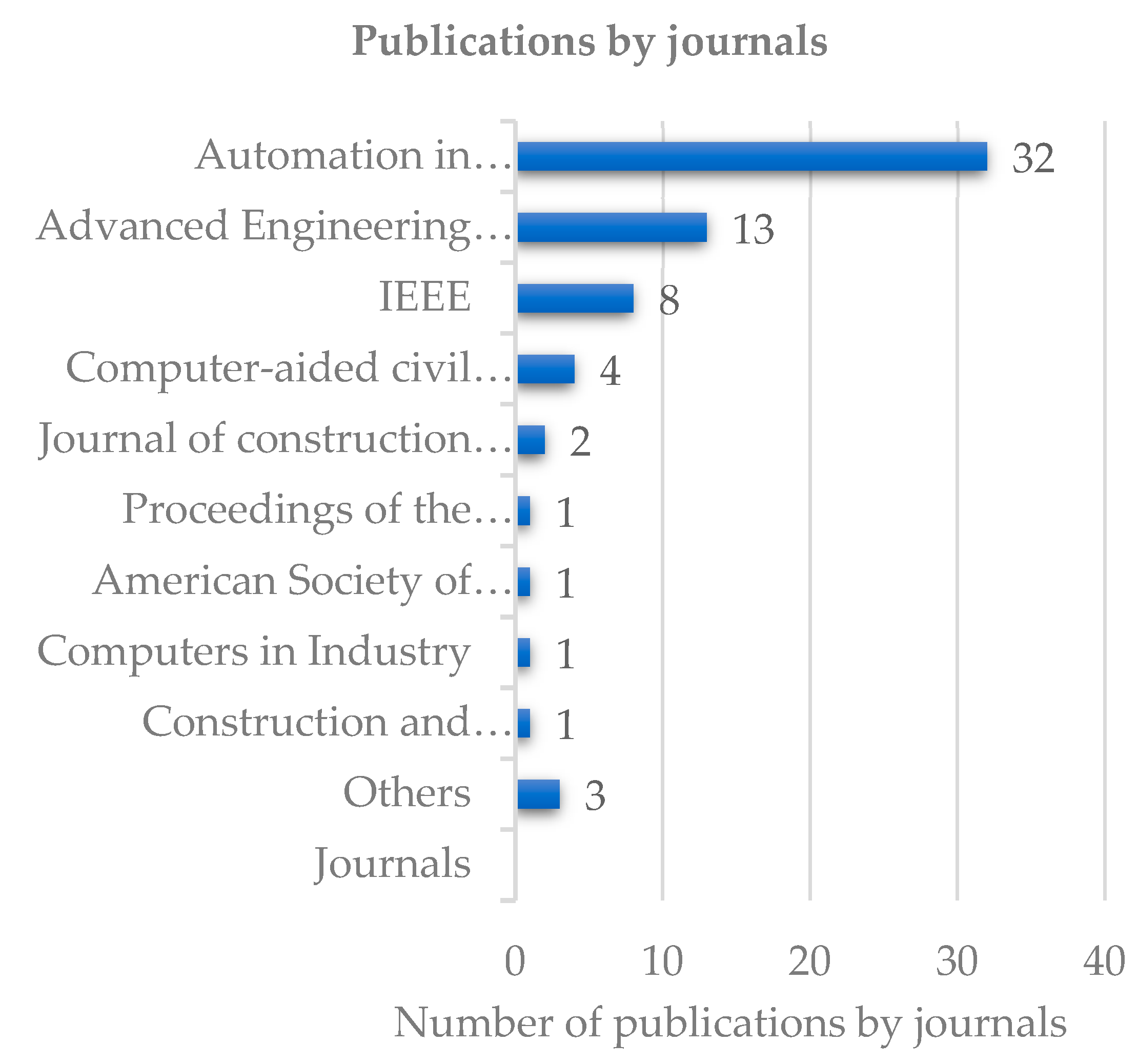

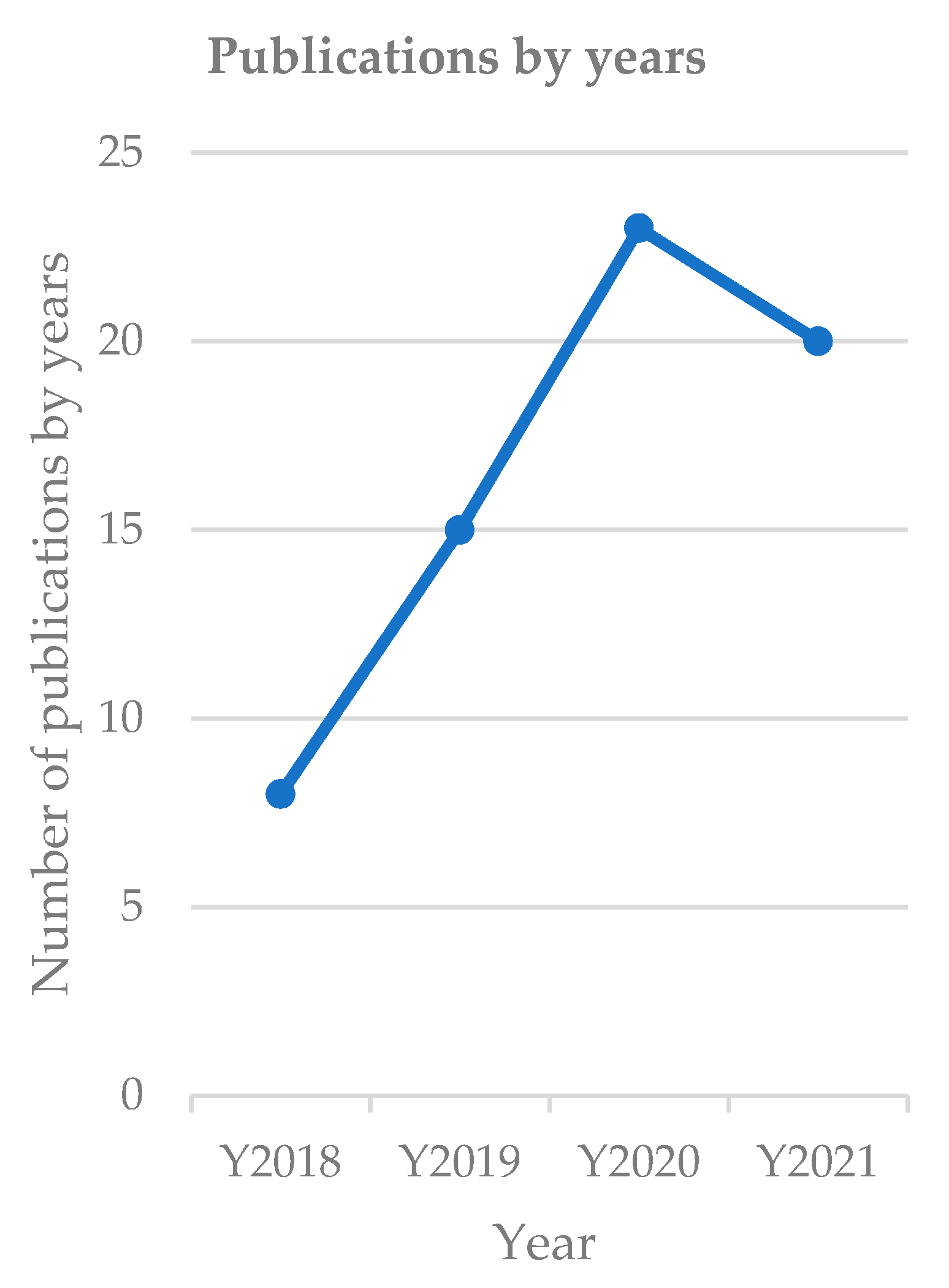

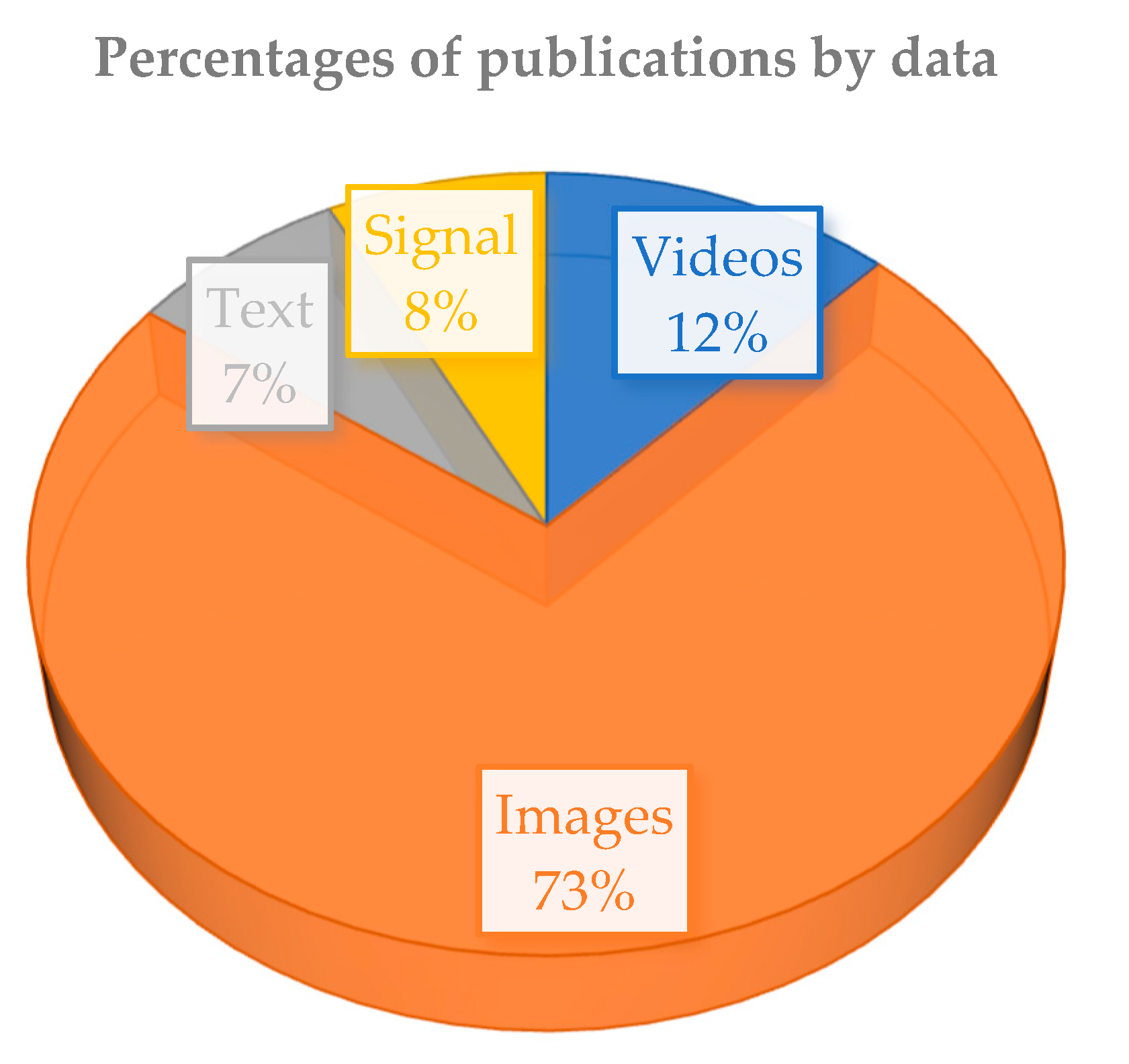

2.4. Results

3. Overview of Deep Learning Architectures

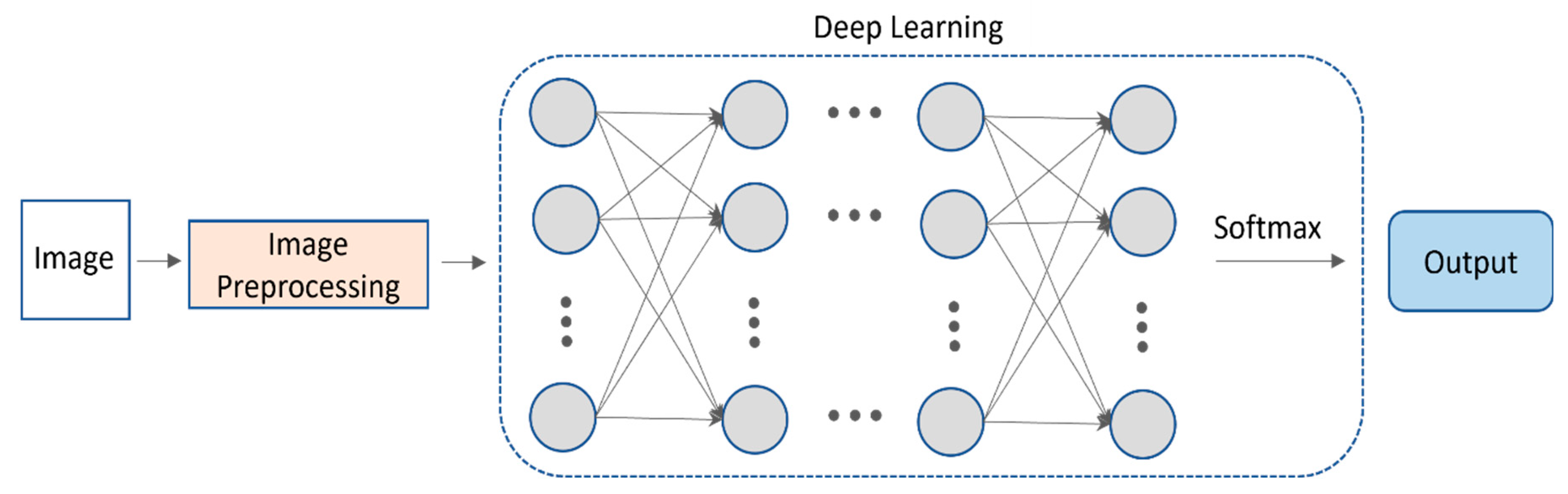

3.1. Convolutional Neural Networks

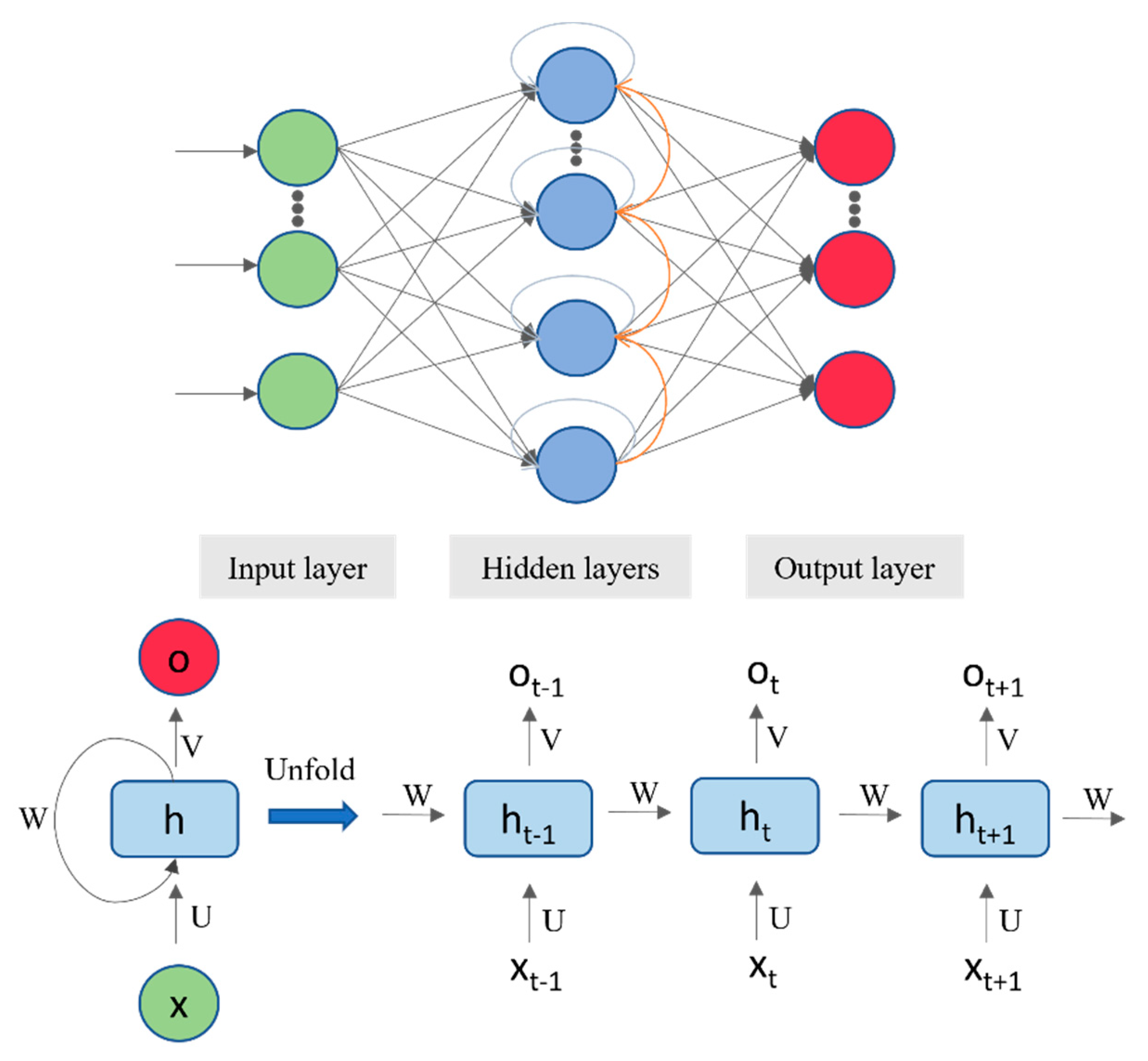

3.2. Recurrent Neural Networks

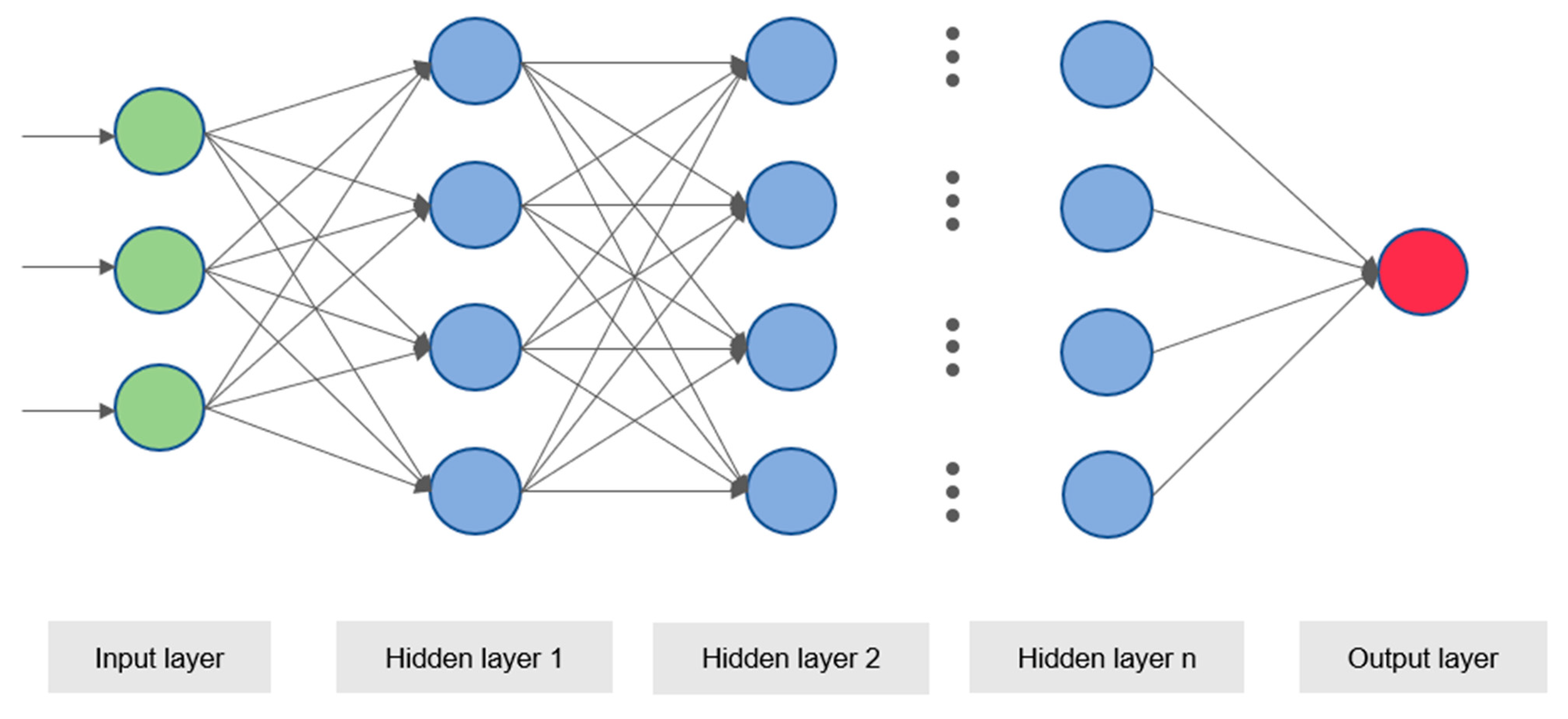

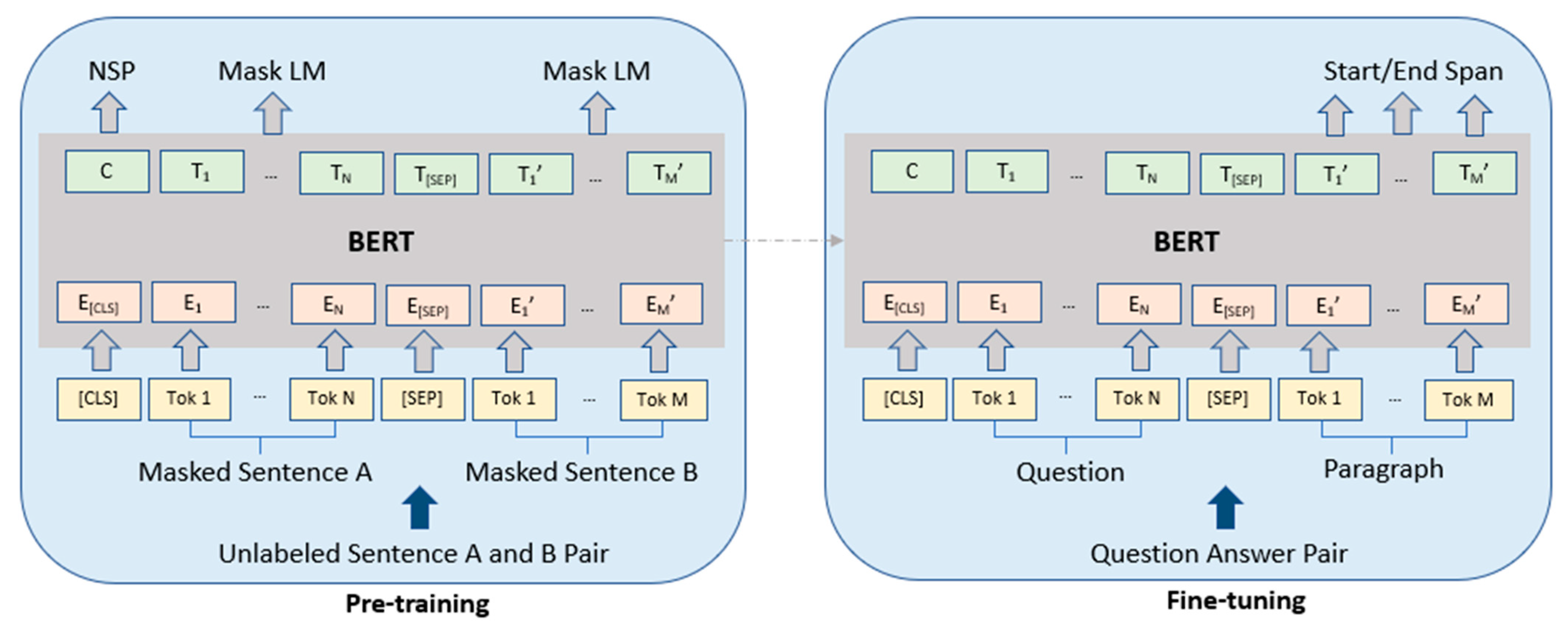

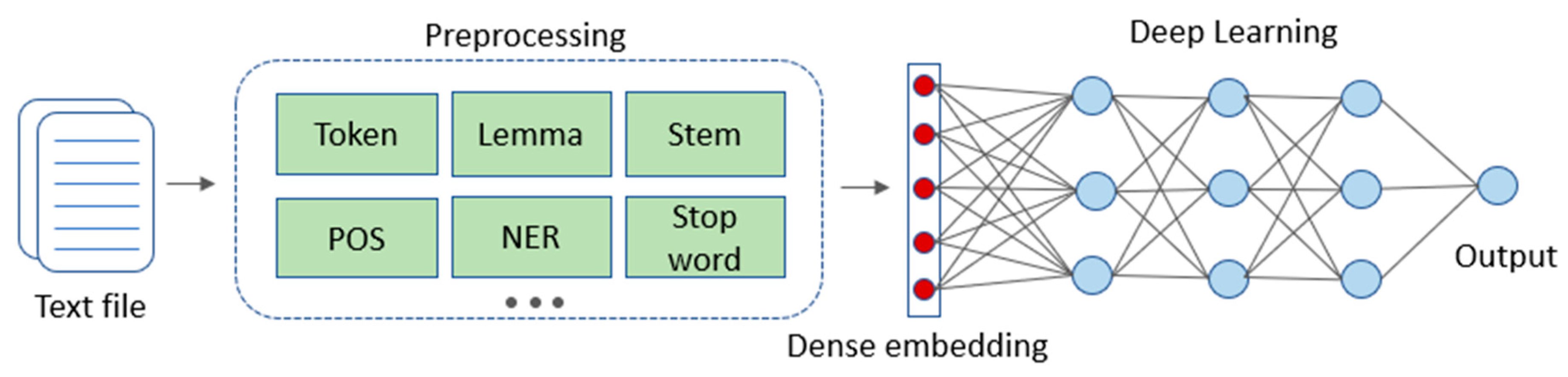

3.3. General Neural Networks

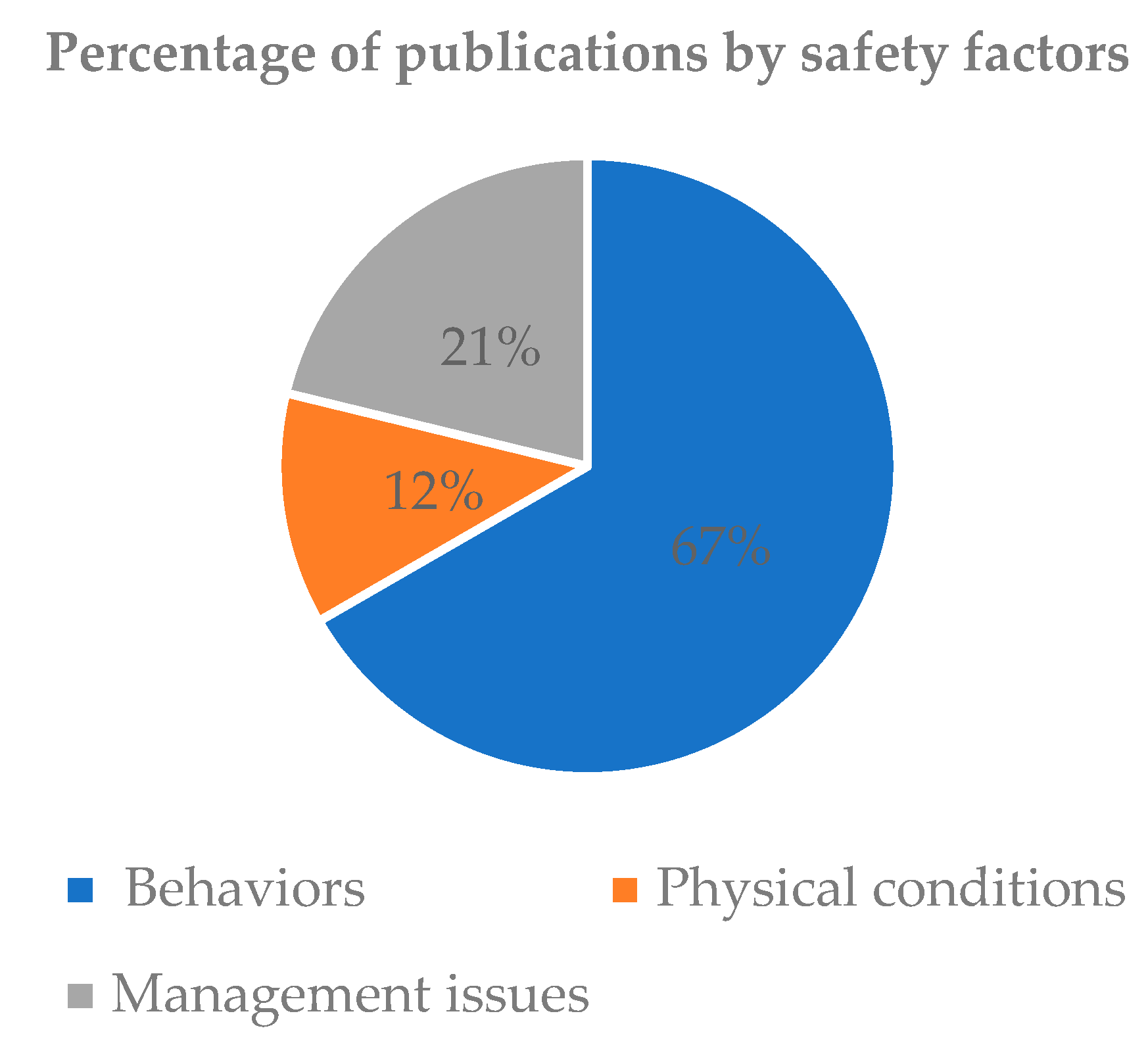

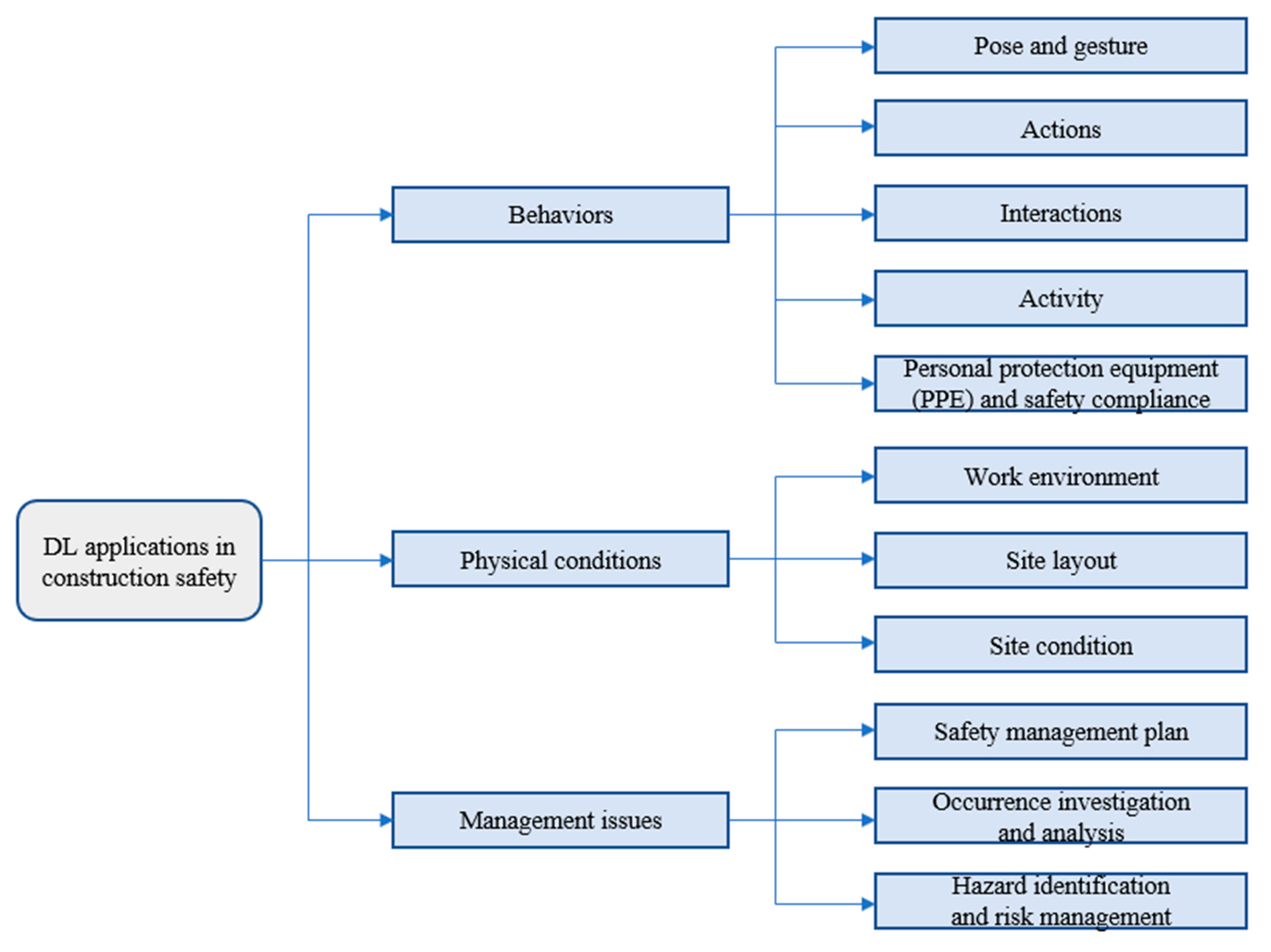

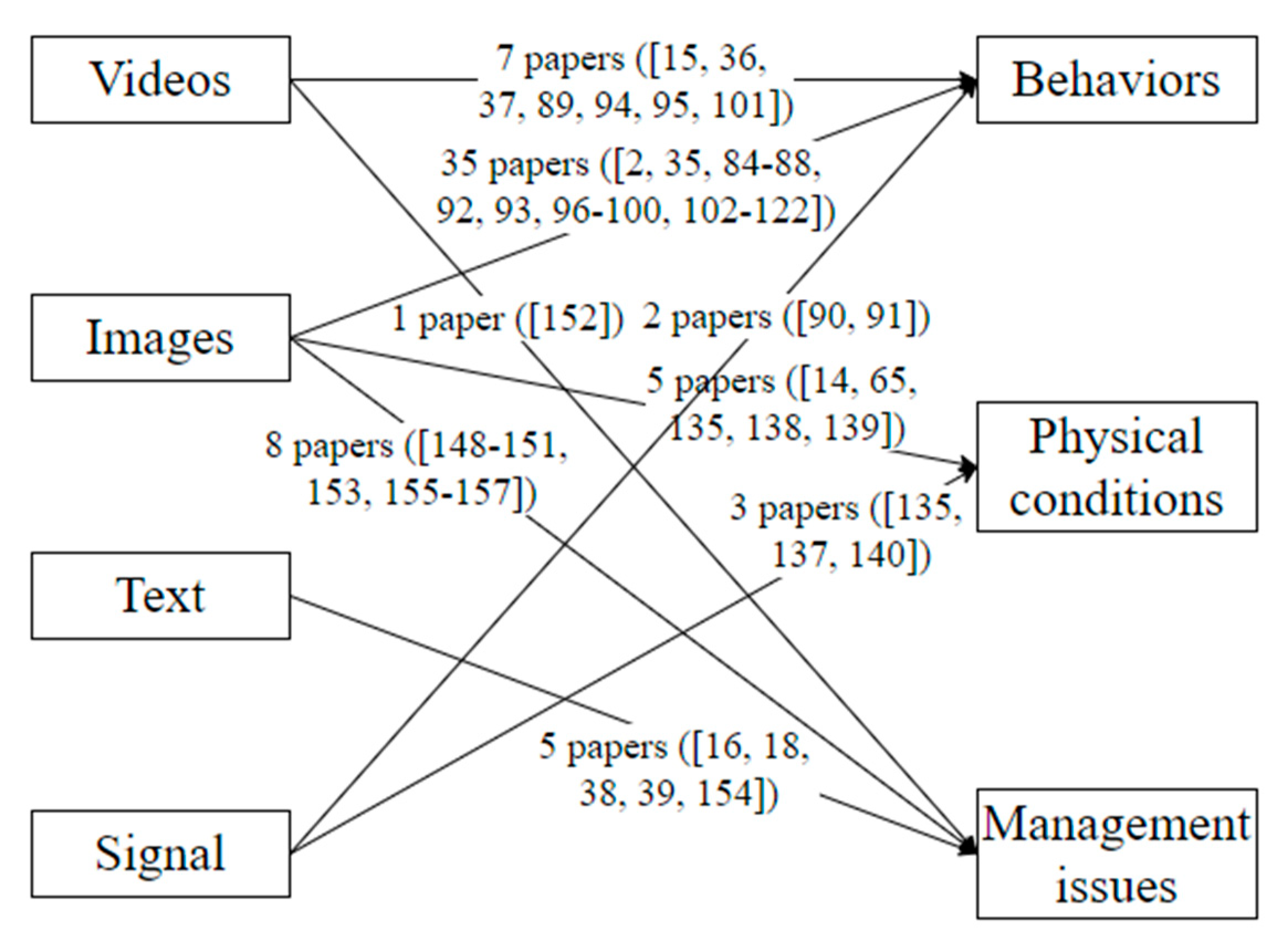

4. Deep Learning Applications for Construction Safety Management

4.1. Behaviors

4.1.1. Pose and Gesture

4.1.2. Action

4.1.3. Interaction

4.1.4. Activity

4.1.5. PPE and Safety Compliance

4.2. Physical Conditions

4.2.1. Work Environment (WE)

4.2.2. Site Layout (SL)

4.2.3. Site Condition (SC)

4.3. Management Issues

4.3.1. Safety Management Plan

4.3.2. Accident Investigation and Analysis

4.3.3. Hazard Identification and Risk Management

5. Overall Research Trends in Safety Management: Summary of Contributions and Limitations

5.1. Recognition of Unsafe Behavior

5.2. Physical Condition Identification

5.3. Safety Management

5.4. The Summary of Contributions and Limitations of Deep Learning on Safety Management

6. Future Research Directions

6.1. Expanding a Comprehensive Dataset

6.2. Improving Technical Restrictions Due to Occlusions

6.3. Identifying Individuals Who Performed Unsafe Behaviors

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Rostami, A.; Sommerville, J.; Wong, L.; Lee, C. Risk management implementation in small and medium enterprises in the UK construction industry. Eng. Constr. Archit. Manag. 2015, 22, 91–107. [Google Scholar] [CrossRef] [Green Version]

- Son, H.; Kim, C. Integrated worker detection and tracking for the safe operation of construction machinery. Autom. Constr. 2021, 126, 103670. [Google Scholar] [CrossRef]

- Huang, X.; Hinze, J. Analysis of construction worker fall accidents. J. Constr. Eng. Manag. 2003, 129, 262–271. [Google Scholar] [CrossRef]

- Sousa, V.; Almeida, N.M.; Dias, L.A. Risk-based management of occupational safety and health in the construction industry–Part 1: Background knowledge. Saf. Sci. 2014, 66, 75–86. [Google Scholar] [CrossRef]

- 2003–2014 Census of Fatal Occupational Injuries. Available online: http://www.bls.gov/ (accessed on 15 March 2015).

- Agwu, M.O.; Olele, H.E. Fatalities in the Nigerian construction industry: A case of poor safety culture. J. Econ. Manag. Trade 2014, 4, 431–452. [Google Scholar] [CrossRef]

- Fang, D.; Huang, Y.; Guo, H.; Lim, H.W. LCB approach for construction safety. Saf. Sci. 2020, 128, 104761. [Google Scholar] [CrossRef]

- Sarkar, S.; Maiti, J. Machine learning in occupational accident analysis: A review using science mapping approach with citation network analysis. Saf. Sci. 2020, 131, 104900. [Google Scholar] [CrossRef]

- Xu, Z.; Saleh, J.H. Machine learning for reliability engineering and safety applications: Review of current status and future opportunities. Reliab. Eng. Syst. Saf. 2021, 107530. [Google Scholar] [CrossRef]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Chen, H.; Chen, A.; Xu, L.; Xie, H.; Qiao, H.; Lin, Q.; Cai, K. A deep learning CNN architecture applied in smart near-infrared analysis of water pollution for agricultural irrigation resources. Agric. Water Manag. 2020, 240, 106303. [Google Scholar] [CrossRef]

- Akinosho, T.D.; Oyedele, L.O.; Bilal, M.; Ajayi, A.O.; Delgado, M.D.; Akinade, O.O.; Ahmed, A.A. Deep learning in the construction industry: A review of present status and future innovations. J. Build. Eng. 2020, 32, 101827. [Google Scholar] [CrossRef]

- Khallaf, R.; Khallaf, M. Classification and analysis of deep learning applications in construction: A systematic literature review. Autom. Constr. 2021, 129, 103760. [Google Scholar] [CrossRef]

- Kolar, Z.; Chen, H.; Luo, X. Transfer learning and deep convolutional neural networks for safety guardrail detection in 2D images. Autom. Constr. 2018, 89, 58–70. [Google Scholar] [CrossRef]

- Ding, L.; Fang, W.; Luo, H.; Love, P.E.; Zhong, B.; Ouyang, X. A deep hybrid learning model to detect unsafe behavior: Integrating convolution neural networks and long short-term memory. Autom. Constr. 2018, 86, 118–124. [Google Scholar] [CrossRef]

- Baker, H.; Hallowell, M.R.; Tixier, A.J.-P. Automatically learning construction injury precursors from text. Autom. Constr. 2020, 118, 103145. [Google Scholar] [CrossRef]

- Arya, K.M.; Ajith, K.K. A Review on Deep Learning Based Helmet Detection. In Proceedings of the International Conference on Systems, Energy & Environment (ICSEE) 2021, Kannur, India, 22–23 January 2021; Government College of Engineering Kannu: Kerala, India, 2021. [Google Scholar]

- Zhong, B.; Pan, X.; Love, P.E.; Ding, L.; Fang, W. Deep learning and network analysis: Classifying and visualizing accident narratives in construction. Autom. Constr. 2020, 113, 103089. [Google Scholar] [CrossRef]

- Seo, J.; Han, S.; Lee, S.; Kim, H. Computer vision techniques for construction safety and health monitoring. Adv. Eng. Inform. 2015, 29, 239–251. [Google Scholar] [CrossRef]

- Fang, W.; Love, P.E.; Luo, H.; Ding, L. Computer vision for behaviour-based safety in construction: A review and future directions. Adv. Eng. Inform. 2020, 43, 100980. [Google Scholar] [CrossRef]

- Hou, L.; Chen, H.; Zhang, G.K.; Wang, X. Deep Learning-Based Applications for Safety Management in the AEC Industry: A Review. Appl. Sci. 2021, 11, 821. [Google Scholar] [CrossRef]

- Mok, K.Y.; Shen, G.Q.; Yang, J. Stakeholder management studies in mega construction projects: A review and future directions. Int. J. Proj. Manag. 2015, 33, 446–457. [Google Scholar] [CrossRef]

- Content Analysis. Available online: https://www.publichealth.columbia.edu/research/population-health-methods/content-analysis (accessed on 30 September 2021).

- Zhang, M.; Shi, R.; Yang, Z. A critical review of vision-based occupational health and safety monitoring of construction site workers. Saf. Sci. 2020, 126, 104658. [Google Scholar] [CrossRef]

- Li, X.; Yi, W.; Chi, H.L.; Wang, X.; Chan, A.P. A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom. Constr. 2018, 86, 150–162. [Google Scholar] [CrossRef]

- Liang, X.; Shen, G.Q.; Bu, S. Multiagent systems in construction: A ten-year review. J. Comput. Civ. Eng. 2016, 30, 04016016. [Google Scholar] [CrossRef] [Green Version]

- Zhong, B.; Li, H.; Luo, H.; Zhou, J.; Fang, W.; Xing, X. Ontology-based semantic modeling of knowledge in construction: Classification and identification of hazards implied in images. J. Constr. Eng. Manag. 2020, 146, 04020013. [Google Scholar] [CrossRef]

- Zhong, B.; Xing, X.; Love, P.; Wang, X.; Luo, H. Convolutional neural network: Deep learning-based classification of building quality problems. Adv. Eng. Inform. 2019, 40, 46–57. [Google Scholar] [CrossRef]

- Deng, L.; Yu, D. Deep learning: Methods and applications. Found. Trends Signal Process. 2014, 7, 197–387. [Google Scholar] [CrossRef] [Green Version]

- Shamshirband, S.; Fathi, M.; Dehzangi, A.; Chronopoulos, A.T.; Alinejad-Rokny, H. A review on deep learning approaches in healthcare systems: Taxonomies, challenges, and open issues. J. Biomed. Inform. 2020, 113, 103627. [Google Scholar] [CrossRef] [PubMed]

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep learning for visual understanding: A review. Neurocomputing 2016, 187, 27–48. [Google Scholar] [CrossRef]

- Hao, X.; Zhang, G.; Ma, S. Deep learning. Int. J. Semant. Comput. 2016, 10, 417–439. [Google Scholar] [CrossRef] [Green Version]

- Vateekul, P.; Koomsubha, T. A study of sentiment analysis using deep learning techniques on Thai Twitter data. In Proceedings of the 13th International Joint Conference on Computer Science and Software Engineering (JCSSE), Khon Kaen, Thailand, 13–15 July 2016; pp. 1–6. [Google Scholar]

- Mikołajczyk, A.; Grochowski, M. Data augmentation for improving deep learning in image classification problem. In Proceedings of the 2018 International Interdisciplinary PhD Workshop (IIPhDW), Swinoujscie, Poland, 9–12 May 2018; pp. 117–122. [Google Scholar]

- Kim, K.; Kim, S.; Shchur, D. A UAS-based work zone safety monitoring system by integrating internal traffic control plan (ITCP) and automated object detection in game engine environment. Autom. Constr. 2021, 128, 103736. [Google Scholar] [CrossRef]

- Lin, Z.-H.; Chen, A.Y.; Hsieh, S.-H. Temporal image analytics for abnormal construction activity identification. Autom. Constr. 2021, 124, 103572. [Google Scholar] [CrossRef]

- Cai, J.; Zhang, Y.; Cai, H. Two-step long short-term memory method for identifying construction activities through positional and attentional cues. Autom. Constr. 2019, 106, 102886. [Google Scholar] [CrossRef]

- Feng, D.; Chen, H. A small samples training framework for deep Learning-based automatic information extraction: Case study of construction accident news reports analysis. Adv. Eng. Inform. 2021, 47, 101256. [Google Scholar] [CrossRef]

- Fang, W.; Luo, H.; Xu, S.; Love, P.E.; Lu, Z.; Ye, C. Automated text classification of near-misses from safety reports: An improved deep learning approach. Adv. Eng. Inform. 2020, 44, 101060. [Google Scholar] [CrossRef]

- Graves, A.; Mohamed, A.-R.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649. [Google Scholar]

- Faust, O.; Hagiwara, Y.; Hong, T.J.; Lih, O.S.; Acharya, U.R. Deep learning for healthcare applications based on physiological signals: A review. Comput. Methods Programs Biomed. 2018, 161, 1–13. [Google Scholar] [CrossRef] [PubMed]

- Fukushima, K.; Miyake, S. Neocognitron: A self-organizing neural network model for a mechanism of visual pattern recognition. In Competition and Cooperation in Neural Nets; Springer: Berlin/Heidelberg, Germany, 1982; pp. 267–285. [Google Scholar]

- LeCun, Y.; Bengio, Y. Convolutional networks for images, speech, and time series. Handb. Brain Theory Neural Netw. 1995, 3361, 1995. [Google Scholar]

- Lawrence, S.; Giles, C.L.; Tsoi, A.C.; Back, A.D. Face recognition: A convolutional neural-network approach. IEEE Trans. Neural Netw. 1997, 8, 98–113. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Chen, S.; Xu, H.; Liu, D.; Hu, B.; Wang, H. A vision of IoT: Applications, challenges, and opportunities with china perspective. IEEE Internet Things J. 2014, 1, 349–359. [Google Scholar] [CrossRef]

- Han, J.; Zhang, D.; Cheng, G.; Liu, N.; Xu, D. Advanced deep-learning techniques for salient and category-specific object detection: A survey. IEEE Signal Process. Mag. 2018, 35, 84–100. [Google Scholar] [CrossRef]

- Wang, D.; Guo, Q.; Song, Y.; Gao, S.; Li, Y. Application of multiscale learning neural network based on CNN in bearing fault diagnosis. J. Signal Process. Syst. 2019, 91, 1205–1217. [Google Scholar] [CrossRef]

- Mu, R.; Zeng, X. A review of deep learning research. KSII Trans. Internet Inf. Syst. (TIIS) 2019, 13, 1738–1764. [Google Scholar]

- Lu, J.; Tan, L.; Jiang, H. Review on Convolutional Neural Network (CNN) Applied to Plant Leaf Disease Classification. Agriculture 2021, 11, 707. [Google Scholar] [CrossRef]

- Koirala, A.; Walsh, K.B.; Wang, Z.; McCarthy, C. Deep learning–Method overview and review of use for fruit detection and yield estimation. Comput. Electron. Agric. 2019, 162, 219–234. [Google Scholar] [CrossRef]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Adarsh, P.; Rathi, P.; Kumar, M. YOLO v3-Tiny: Object Detection and Recognition using one stage improved model. In Proceedings of the 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 687–694. [Google Scholar]

- Liu, B.; Zhao, W.; Sun, Q. Study of object detection based on Faster R-CNN. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 6233–6236. [Google Scholar]

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar]

- Uijlings, J.R.; Van De Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171. [Google Scholar] [CrossRef] [Green Version]

- Salvador, A.; Giró-i-Nieto, X.; Marqués, F.; Satoh, S.i. Faster r-cnn features for instance search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 9–16. [Google Scholar]

- Huang, R.; Pedoeem, J.; Chen, C. YOLO-LITE: A real-time object detection algorithm optimized for non-GPU computers. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 2503–2510. [Google Scholar]

- Chen, C.; Qin, C.; Qiu, H.; Tarroni, G.; Duan, J.; Bai, W.; Rueckert, D. Deep learning for cardiac image segmentation: A review. Front. Cardiovasc. Med. 2020, 7, 25. [Google Scholar] [CrossRef] [PubMed]

- Abdulkadir, S.J.; Alhussian, H.; Nazmi, M.; Elsheikh, A.A. Long short term memory recurrent network for standard and poor’s 500 index modelling. Int. J. Eng. Technol 2018, 7, 25–29. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef] [PubMed]

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555. [Google Scholar]

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270. [Google Scholar] [CrossRef] [PubMed]

- Kuan, L.; Yan, Z.; Xin, W.; Yan, C.; Xiangkun, P.; Wenxue, S.; Zhe, J.; Yong, Z.; Nan, X.; Xin, Z. Short-term electricity load forecasting method based on multilayered self-normalizing GRU network. In Proceedings of the 2017 IEEE Conference on Energy Internet and Energy System Integration (EI2), Beijing, China, 26–28 November 2017; pp. 1–5. [Google Scholar]

- Althelaya, K.A.; El-Alfy, E.-S.M.; Mohammed, S. Stock market forecast using multivariate analysis with bidirectional and stacked (LSTM, GRU). In Proceedings of the 2018 21st Saudi Computer Society National Computer Conference (NCC), Riyadh, Saudi Arabia, 25–26 April 2018; pp. 1–7. [Google Scholar]

- Wang, Y.; Liao, P.-C.; Zhang, C.; Ren, Y.; Sun, X.; Tang, P. Crowdsourced reliable labeling of safety-rule violations on images of complex construction scenes for advanced vision-based workplace safety. Adv. Eng. Inform. 2019, 42, 101001. [Google Scholar] [CrossRef]

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Acheampong, F.A.; Nunoo-Mensah, H.; Chen, W. Transformer models for text-based emotion detection: A review of BERT-based approaches. Artif. Intell. Rev. 2021, 54, 5789–5829. [Google Scholar] [CrossRef]

- Alatawi, H.S.; Alhothali, A.M.; Moria, K.M. Detecting white supremacist hate speech using domain specific word embedding with deep learning and BERT. IEEE Access 2021, 9, 106363–106374. [Google Scholar] [CrossRef]

- Tang, M.; Gandhi, P.; Kabir, M.A.; Zou, C.; Blakey, J.; Luo, X. Progress notes classification and keyword extraction using attention-based deep learning models with BERT. arXiv 2019, arXiv:1910.05786. [Google Scholar]

- Tenney, I.; Das, D.; Pavlick, E. BERT rediscovers the classical NLP pipeline. arXiv 2019, arXiv:1905.05950. [Google Scholar]

- Reason, J. Human error: Models and management. BMJ 2000, 320, 768–770. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Guo, B.H.; Yiu, T.W. Developing leading indicators to monitor the safety conditions of construction projects. J. Manag. Eng. 2016, 32, 04015016. [Google Scholar] [CrossRef]

- Zhang, L.; Wu, X.; Skibniewski, M.J.; Zhong, J.; Lu, Y. Bayesian-network-based safety risk analysis in construction projects. Reliab. Eng. Syst. Saf. 2014, 131, 29–39. [Google Scholar] [CrossRef]

- Guo, H.; Yu, Y.; Skitmore, M. Visualization technology-based construction safety management: A review. Autom. Constr. 2017, 73, 135–144. [Google Scholar] [CrossRef]

- Carter, G.; Smith, S.D. Safety hazard identification on construction projects. J. Constr. Eng. Manag. 2006, 132, 197–205. [Google Scholar] [CrossRef]

- Zou, P.X.; Sunindijo, R.Y. Strategic Safety Management in Construction and Engineering; John Wiley & Sons: Hoboken, NJ, USA, 2015. [Google Scholar]

- Guo, B.H.; Zou, Y.; Fang, Y.; Goh, Y.M.; Zou, P.X. Computer vision technologies for safety science and management in construction: A critical review and future research directions. Saf. Sci. 2021, 135, 105130. [Google Scholar] [CrossRef]

- Edwards, M.; Deng, J.; Xie, X. From pose to activity: Surveying datasets and introducing CONVERSE. Comput. Vis. Image Underst. 2016, 144, 73–105. [Google Scholar] [CrossRef] [Green Version]

- Jazayeri, E.; Dadi, G.B. Construction safety management systems and methods of safety performance measurement: A review. J. Saf. Eng. 2017, 6, 15–28. [Google Scholar]

- Petersen, D. Techniques of Safety Management: A Systems Approach; American Society of Safety Engineers: Park Ridge, IL, USA, 1989. [Google Scholar]

- Welford, A.T. Fundamentals of Skill; Methuen: London, UK, 1968. [Google Scholar]

- Heinrich, H.W. Industrial Accident Prevention. A Scientific Approach, 2nd ed.; McGraw-Hill Book Company, Inc.: New York, NY, USA, 1941. [Google Scholar]

- Fam, I.M.; Nikoomaram, H.; Soltanian, A. Comparative analysis of creative and classic training methods in health, safety and environment (HSE) participation improvement. J. Loss Prev. Process Ind. 2012, 25, 250–253. [Google Scholar] [CrossRef]

- Luo, H.; Wang, M.; Wong, P.K.-Y.; Cheng, J.C. Full body pose estimation of construction equipment using computer vision and deep learning techniques. Autom. Constr. 2020, 110, 103016. [Google Scholar] [CrossRef]

- Son, H.; Choi, H.; Seong, H.; Kim, C. Detection of construction workers under varying poses and changing background in image sequences via very deep residual networks. Autom. Constr. 2019, 99, 27–38. [Google Scholar] [CrossRef]

- Lee, H.; Yang, K.; Kim, N.; Ahn, C.R. Detecting excessive load-carrying tasks using a deep learning network with a Gramian Angular Field. Autom. Constr. 2020, 120, 103390. [Google Scholar] [CrossRef]

- Luo, H.; Liu, J.; Fang, W.; Love, P.E.; Yu, Q.; Lu, Z. Real-time smart video surveillance to manage safety: A case study of a transport mega-project. Adv. Eng. Inform. 2020, 45, 101100. [Google Scholar] [CrossRef]

- Chen, H.; Luo, X.; Zheng, Z.; Ke, J. A proactive workers’ safety risk evaluation framework based on position and posture data fusion. Autom. Constr. 2019, 98, 275–288. [Google Scholar] [CrossRef]

- Zhao, J.; Obonyo, E. Convolutional long short-term memory model for recognizing construction workers’ postures from wearable inertial measurement units. Adv. Eng. Inform. 2020, 46, 101177. [Google Scholar] [CrossRef]

- Yang, K.; Ahn, C.R.; Kim, H. Deep learning-based classification of work-related physical load levels in construction. Adv. Eng. Inform. 2020, 45, 101104. [Google Scholar] [CrossRef]

- Kim, K.; Cho, Y.K. Effective inertial sensor quantity and locations on a body for deep learning-based worker’s motion recognition. Autom. Constr. 2020, 113, 103126. [Google Scholar] [CrossRef]

- Yu, Y.; Yang, X.; Li, H.; Luo, X.; Guo, H.; Fang, Q. Joint-level vision-based ergonomic assessment tool for construction workers. J. Constr. Eng. Manag. 2019, 145, 04019025. [Google Scholar] [CrossRef]

- Fang, W.; Zhong, B.; Zhao, N.; Love, P.E.; Luo, H.; Xue, J.; Xu, S. A deep learning-based approach for mitigating falls from height with computer vision: Convolutional neural network. Adv. Eng. Inform. 2019, 39, 170–177. [Google Scholar] [CrossRef]

- Cai, J.; Zhang, Y.; Yang, L.; Cai, H.; Li, S. A context-augmented deep learning approach for worker trajectory prediction on unstructured and dynamic construction sites. Adv. Eng. Inform. 2020, 46, 101173. [Google Scholar] [CrossRef]

- Luo, H.; Wang, M.; Wong, P.K.-Y.; Tang, J.; Cheng, J.C. Construction machine pose prediction considering historical motions and activity attributes using gated recurrent unit (GRU). Autom. Constr. 2021, 121, 103444. [Google Scholar] [CrossRef]

- Wang, M.; Wong, P.; Luo, H.; Kumar, S.; Delhi, V.; Cheng, J. Predicting safety hazards among construction workers and equipment using computer vision and deep learning techniques. In Proceedings of the International Symposium on Automation and Robotics in Construction, Banff Alberta, AB, Canada, 21–24 May 2019; IAARC Publications: Banff Alberta, AB, Canada, 2019; pp. 399–406. [Google Scholar]

- Tang, S.; Roberts, D.; Golparvar-Fard, M. Human-object interaction recognition for automatic construction site safety inspection. Autom. Constr. 2020, 120, 103356. [Google Scholar] [CrossRef]

- Kim, D.; Liu, M.; Lee, S.; Kamat, V.R. Remote proximity monitoring between mobile construction resources using camera-mounted UAVs. Autom. Constr. 2019, 99, 168–182. [Google Scholar] [CrossRef]

- Zhang, M.; Cao, Z.; Yang, Z.; Zhao, X. Utilizing computer vision and fuzzy inference to evaluate level of collision safety for workers and equipment in a dynamic environment. J. Constr. Eng. Manag. 2020, 146, 04020051. [Google Scholar] [CrossRef]

- Xiong, R.; Song, Y.; Li, H.; Wang, Y. Onsite video mining for construction hazards identification with visual relationships. Adv. Eng. Inform. 2019, 42, 100966. [Google Scholar] [CrossRef]

- Wang, X.; Zhu, Z. Vision-based hand signal recognition in construction: A feasibility study. Autom. Constr. 2021, 125, 103625. [Google Scholar] [CrossRef]

- Yan, X.; Zhang, H.; Li, H. Computer vision-based recognition of 3D relationship between construction entities for monitoring struck-by accidents. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 1023–1038. [Google Scholar] [CrossRef]

- Khan, N.; Saleem, M.R.; Lee, D.; Park, M.-W.; Park, C. Utilizing safety rule correlation for mobile scaffolds monitoring leveraging deep convolution neural networks. Comput. Ind. 2021, 129, 103448. [Google Scholar] [CrossRef]

- Luo, X.; Li, H.; Yang, X.; Yu, Y.; Cao, D. Capturing and understanding workers’ activities in far-field surveillance videos with deep action recognition and Bayesian nonparametric learning. Comput.-Aided Civ. Infrastruct. Eng. 2019, 34, 333–351. [Google Scholar] [CrossRef]

- Nath, N.D.; Behzadan, A.H.; Paal, S.G. Deep learning for site safety: Real-time detection of personal protective equipment. Autom. Constr. 2020, 112, 103085. [Google Scholar] [CrossRef]

- Fang, Q.; Li, H.; Luo, X.; Ding, L.; Luo, H.; Rose, T.M.; An, W. Detecting non-hardhat-use by a deep learning method from far-field surveillance videos. Autom. Constr. 2018, 85, 1–9. [Google Scholar] [CrossRef]

- Chen, S.; Demachi, K. Towards on-site hazards identification of improper use of personal protective equipment using deep learning-based geometric relationships and hierarchical scene graph. Autom. Constr. 2021, 125, 103619. [Google Scholar] [CrossRef]

- Wu, J.; Cai, N.; Chen, W.; Wang, H.; Wang, G. Automatic detection of hardhats worn by construction personnel: A deep learning approach and benchmark dataset. Autom. Constr. 2019, 106, 102894. [Google Scholar] [CrossRef]

- Nath, N.D.; Behzadan, A.H. Deep Learning Detection of Personal Protective Equipment to Maintain Safety Compliance on Construction Sites. In Proceedings of the Construction Research Congress 2020: Computer Applications, Tempe, AZ, USA, 8–10 March 2020; pp. 181–190. [Google Scholar]

- Fang, Q.; Li, H.; Luo, X.; Ding, L.; Luo, H.; Li, C. Computer vision aided inspection on falling prevention measures for steeplejacks in an aerial environment. Autom. Constr. 2018, 93, 148–164. [Google Scholar] [CrossRef]

- Fang, W.; Ding, L.; Luo, H.; Love, P.E. Falls from heights: A computer vision-based approach for safety harness detection. Autom. Constr. 2018, 91, 53–61. [Google Scholar] [CrossRef]

- Fang, Q.; Li, H.; Luo, X.; Ding, L.; Rose, T.M.; An, W.; Yu, Y. A deep learning-based method for detecting non-certified work on construction sites. Adv. Eng. Inform. 2018, 35, 56–68. [Google Scholar] [CrossRef]

- Xie, Z.; Liu, H.; Li, Z.; He, Y. A convolutional neural network based approach towards real-time hard hat detection. In Proceedings of the 2018 IEEE International Conference on Progress in Informatics and Computing (PIC), Suzhou, China, 14–16 December 2018; pp. 430–434. [Google Scholar]

- Gu, Y.; Xu, S.; Wang, Y.; Shi, L. An advanced deep learning approach for safety helmet wearing detection. In Proceedings of the 2019 International Conference on Internet of Things (iThings) and IEEE Green Computing and Communications (GreenCom) and IEEE Cyber, Physical and Social Computing (CPSCom) and IEEE Smart Data (SmartData), Atlanta, GA, USA, 14–17 July 2019; pp. 669–674. [Google Scholar]

- Cao, W.; Zhang, J.; Cai, C.; Chen, Q.; Zhao, Y.; Lou, Y.; Jiang, W.; Gui, G. CNN-based intelligent safety surveillance in green IoT applications. China Commun. 2021, 18, 108–119. [Google Scholar] [CrossRef]

- Zhang, C.; Tian, Z.; Song, J.; Zheng, Y.; Xu, B. Construction worker hardhat-wearing detection based on an improved BiFPN. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 8600–8607. [Google Scholar]

- Zhao, Y.; Chen, Q.; Cao, W.; Yang, J.; Xiong, J.; Gui, G. Deep learning for risk detection and trajectory tracking at construction sites. IEEE Access 2019, 7, 30905–30912. [Google Scholar] [CrossRef]

- Shen, J.; Xiong, X.; Li, Y.; He, W.; Li, P.; Zheng, X. Detecting safety helmet wearing on construction sites with bounding-box regression and deep transfer learning. Comput.-Aided Civ. Infrastruct. Eng. 2021, 36, 180–196. [Google Scholar] [CrossRef]

- Huang, L.; Fu, Q.; He, M.; Jiang, D.; Hao, Z. Detection algorithm of safety helmet wearing based on deep learning. Concurr. Comput. Pract. Exp. 2021, 2020, e6234. [Google Scholar] [CrossRef]

- Hu, J.; Gao, X.; Wu, H.; Gao, S. Detection of workers without the helments in videos based on YOLO V3. In Proceedings of the 2019 12th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Suzhou, China, 19–21 October 2019; pp. 1–4. [Google Scholar]

- Tan, S.; Lu, G.; Jiang, Z.; Huang, L. Improved YOLOv5 Network Model and Application in Safety Helmet Detection. In Proceedings of the 2021 IEEE International Conference on Intelligence and Safety for Robotics (ISR), Tokoname, Japan, 4–6 March 2021; pp. 330–333. [Google Scholar]

- Lee, M.-F.R.; Chien, T.-W. Intelligent Robot for Worker Safety Surveillance: Deep Learning Perception and Visual Navigation. In Proceedings of the 2020 International Conference on Advanced Robotics and Intelligent Systems (ARIS), Taipei, Taiwan, 19–21 August 2020; pp. 1–6. [Google Scholar]

- Kitsikidis, A.; Dimitropoulos, K.; Douka, S.; Grammalidis, N. Dance analysis using multiple kinect sensors. In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; pp. 789–795. [Google Scholar]

- Hong, Z.; Gui, F. Analysis on human unsafe acts contributing to falling accidents in construction industry. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Los Angeles, CA, USA, 17–21 July 2017; pp. 178–185. [Google Scholar]

- Chi, C.-F.; Chang, T.-C.; Ting, H.-I. Accident patterns and prevention measures for fatal occupational falls in the construction industry. Appl. Ergon. 2005, 36, 391–400. [Google Scholar] [CrossRef]

- Han, S.; Lee, S.; Peña-Mora, F. Comparative study of motion features for similarity-based modeling and classification of unsafe actions in construction. J. Comput. Civ. Eng. 2014, 28, A4014005. [Google Scholar] [CrossRef]

- Gong, J.; Caldas, C.H.; Gordon, C. Learning and classifying actions of construction workers and equipment using Bag-of-Video-Feature-Words and Bayesian network models. Adv. Eng. Inform. 2011, 25, 771–782. [Google Scholar] [CrossRef]

- Turaga, P.; Chellappa, R.; Subrahmanian, V.S.; Udrea, O. Machine recognition of human activities: A survey. IEEE Trans. Circuits Syst. Video Technol. 2008, 18, 1473–1488. [Google Scholar] [CrossRef] [Green Version]

- Haupt, T.C. The Performance Approach to Construction Worker Safety and Health; University of Florida: Gainesville, FL, USA, 2001. [Google Scholar]

- Nain, M.; Sharma, S.; Chaurasia, S. Safety and Compliance Management System Using Computer Vision and Deep Learning. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Jaipur, India, 22–23 December 2020; p. 012013. [Google Scholar]

- Park, M.-W.; Elsafty, N.; Zhu, Z. Hardhat-wearing detection for enhancing on-site safety of construction workers. J. Constr. Eng. Manag. 2015, 141, 04015024. [Google Scholar] [CrossRef]

- Enshassi, A.; Mayer, P.E.; Mohamed, S.; El-Masri, F. Perception of construction managers towards safety in Palestine. Int. J. Constr. Manag. 2007, 7, 41–51. [Google Scholar] [CrossRef]

- Törner, M.; Pousette, A. Safety in construction–a comprehensive description of the characteristics of high safety standards in construction work, from the combined perspective of supervisors and experienced workers. J. Saf. Res. 2009, 40, 399–409. [Google Scholar] [CrossRef] [PubMed]

- Fang, W.; Ding, L.; Love, P.E.; Luo, H.; Li, H.; Pena-Mora, F.; Zhong, B.; Zhou, C. Computer vision applications in construction safety assurance. Autom. Constr. 2020, 110, 103013. [Google Scholar] [CrossRef]

- Chen, Q.; Zhang, X.-X.; Chen, Y.; Jiang, W.; Gui, G.; Sari, H. Deep Learning-Based Automatic Safety Detection System for Crack Detection. In Proceedings of the 2020 7th International Conference on Dependable Systems and Their Applications (DSA), Xi’an, China, 28–29 November 2020; pp. 190–194. [Google Scholar]

- Zhao, H.-J.; Liu, W.; Shi, P.-X.; Du, J.-T.; Chen, X.-M. Spatiotemporal deep learning approach on estimation of diaphragm wall deformation induced by excavation. Acta Geotech. 2021, 16, 3631–3645. [Google Scholar] [CrossRef]

- Shi, J.; Sun, D.; Hu, M.; Liu, S.; Kan, Y.; Chen, R.; Ma, K. Prediction of brake pedal aperture for automatic wheel loader based on deep learning. Autom. Constr. 2020, 119, 103313. [Google Scholar] [CrossRef]

- Xiao, B.; Lin, Q.; Chen, Y. A vision-based method for automatic tracking of construction machines at nighttime based on deep learning illumination enhancement. Autom. Constr. 2021, 127, 103721. [Google Scholar] [CrossRef]

- Guo, Y.; Xu, Y.; Li, S. Dense construction vehicle detection based on orientation-aware feature fusion convolutional neural network. Autom. Constr. 2020, 112, 103124. [Google Scholar] [CrossRef]

- Mahmoodzadeh, A.; Mohammadi, M.; Noori, K.M.G.; Khishe, M.; Ibrahim, H.H.; Ali, H.F.H.; Abdulhamid, S.N. Presenting the best prediction model of water inflow into drill and blast tunnels among several machine learning techniques. Autom. Constr. 2021, 127, 103719. [Google Scholar] [CrossRef]

- Fatal Occupational Injuries for Selected Events or Exposures. Available online: https://www.bls.gov/news.release/cfoi.t02.htm (accessed on 10 October 2017).

- Arditi, D.; Lee, D.-E.; Polat, G. Fatal accidents in nighttime vs. daytime highway construction work zones. J. Saf. Res. 2007, 38, 399–405.

- Luo, X.; Li, H.; Dai, F.; Cao, D.; Yang, X.; Guo, H. Hierarchical Bayesian model of worker response to proximity warnings of construction safety hazards: Toward constant review of safety risk control measures. J. Constr. Eng. Manag. 2017, 143, 04017006.

- Awolusi, I.; Marks, E.; Hallowell, M. Wearable technology for personalized construction safety monitoring and trending: Review of applicable devices. Autom. Constr. 2018, 85, 96–106.

- Jin, X.; Li, Y.; Luo, Y.; Liu, H. Prediction of city tunnel water inflow and its influence on overlain lakes in karst valley. Environ. Earth Sci. 2016, 75, 1–15.

- Zhou, Y.; Ding, L.; Chen, L. Application of 4D visualization technology for safety management in metro construction. Autom. Constr. 2013, 34, 25–36.

- Park, C.-S.; Kim, H.-J. A framework for construction safety management and visualization system. Autom. Constr. 2013, 33, 95–103.

- Fang, W.; Ding, L.; Zhong, B.; Love, P.E.; Luo, H. Automated detection of workers and heavy equipment on construction sites: A convolutional neural network approach. Adv. Eng. Inform. 2018, 37, 139–149.

- Xuehui, A.; Li, Z.; Zuguang, L.; Chengzhi, W.; Pengfei, L.; Zhiwei, L. Dataset and benchmark for detecting moving objects in construction sites. Autom. Constr. 2021, 122, 103482.

- Zeng, T.; Wang, J.; Cui, B.; Wang, X.; Wang, D.; Zhang, Y. The equipment detection and localization of large-scale construction jobsite by far-field construction surveillance video based on improving YOLOv3 and grey wolf optimizer improving extreme learning machine. Constr. Build. Mater. 2021, 291, 123268.

- Liu, H.; Wang, G.; Huang, T.; He, P.; Skitmore, M.; Luo, X. Manifesting construction activity scenes via image captioning. Autom. Constr. 2020, 119, 103334.

- Wei, R.; Love, P.E.; Fang, W.; Luo, H.; Xu, S. Recognizing people’s identity in construction sites with computer vision: A spatial and temporal attention pooling network. Adv. Eng. Inform. 2019, 42, 100981.

- Arabi, S.; Haghighat, A.; Sharma, A. A deep-learning-based computer vision solution for construction vehicle detection. Comput.-Aided Civ. Infrastruct. Eng. 2020, 35, 753–767.

- Zhong, B.; Pan, X.; Love, P.E.; Sun, J.; Tao, C. Hazard analysis: A deep learning and text mining framework for accident prevention. Adv. Eng. Inform. 2020, 46, 101152.

- Xiao, B.; Yin, X.; Kang, S.-C. Vision-based method of automatically detecting construction video highlights by integrating machine tracking and CNN feature extraction. Autom. Constr. 2021, 129, 103817.

- Fang, W.; Ma, L.; Love, P.E.; Luo, H.; Ding, L.; Zhou, A. Knowledge graph for identifying hazards on construction sites: Integrating computer vision with ontology. Autom. Constr. 2020, 119, 103310.

- Jeelani, I.; Asadi, K.; Ramshankar, H.; Han, K.; Albert, A. Real-time vision-based worker localization & hazard detection for construction. Autom. Constr. 2021, 121, 103448.

- Love, P.E.; Smith, J.; Teo, P. Putting into practice error management theory: Unlearning and learning to manage action errors in construction. Appl. Ergon. 2018, 69, 104–111.

- Love, P.E.; Teo, P.; Ackermann, F.; Smith, J.; Alexander, J.; Palaneeswaran, E.; Morrison, J. Reduce rework, improve safety: An empirical inquiry into the precursors to error in construction. Prod. Plan. Control 2018, 29, 353–366.

- Love, P.E.; Teo, P.; Morrison, J. Unearthing the nature and interplay of quality and safety in construction projects: An empirical study. Saf. Sci. 2018, 103, 270–279.

- Gouett, M.C.; Haas, C.T.; Goodrum, P.M.; Caldas, C.H. Activity analysis for direct-work rate improvement in construction. J. Constr. Eng. Manag. 2011, 137, 1117–1124.

- Khosrowpour, A.; Niebles, J.C.; Golparvar-Fard, M. Vision-based workface assessment using depth images for activity analysis of interior construction operations. Autom. Constr. 2014, 48, 74–87.

- Goh, Y.M.; Ubeynarayana, C. Construction accident narrative classification: An evaluation of text mining techniques. Accid. Anal. Prev. 2017, 108, 122–130.

- Jiang, J. Information extraction from text. In Mining Text Data; Springer: Berlin/Heidelberg, Germany, 2012; pp. 11–41.

- Liu, S.; Li, Y.; Fan, B. Hierarchical RNN for few-shot information extraction learning. In Proceedings of the International Conference of Pioneering Computer Scientists, Engineers and Educators, Zhengzhou, China, 21–23 September 2018; Springer: Singapore, 2018; pp. 227–239.

- Guo, L.; Zhang, D.; Wang, L.; Wang, H.; Cui, B. CRAN: A hybrid CNN-RNN attention-based model for text classification. In Proceedings of the International Conference on Conceptual Modeling, Xi’an, China, 22–25 October 2018; Springer: Cham, Switzerland, 2018; pp. 571–585.

- Bahn, S. Workplace hazard identification and management: The case of an underground mining operation. Saf. Sci. 2013, 57, 129–137.

- Albert, A.; Hallowell, M.R.; Kleiner, B.M. Enhancing construction hazard recognition and communication with energy-based cognitive mnemonics and safety meeting maturity model: Multiple baseline study. J. Constr. Eng. Manag. 2014, 140, 04013042.

- Jeelani, I.; Han, K.; Albert, A. Development of immersive personalized training environment for construction workers. Comput. Civ. Eng. 2017, 2017, 407–415.

- Daniel, G.; Chen, M. Video Visualization. In Proceedings of the IEEE Visualization, Washington, DC, USA, 19–24 October 2003.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision 2014, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Ma, S.; Zhang, X.; Jia, C.; Zhao, Z.; Wang, S.; Wang, S. Image and video compression with neural networks: A review. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 1683–1698.

- Hu, Y.; Lu, X. Learning spatial-temporal features for video copy detection by the combination of CNN and RNN. J. Vis. Commun. Image Represent. 2018, 55, 21–29.

- Monshi, M.M.A.; Poon, J.; Chung, V. Deep learning in generating radiology reports: A survey. Artif. Intell. Med. 2020, 106, 101878.

- Srivastava, M.; Muntz, R.; Potkonjak, M. Smart kindergarten: Sensor-based wireless networks for smart developmental problem-solving environments. In Proceedings of the 7th Annual International Conference on Mobile Computing and Networking, Rome, Italy, 16–21 July 2001; pp. 132–138.

- Lu, X.; Yang, Y.; Zhang, W.; Wang, Q.; Wang, Y. Face verification with multi-task and multi-scale feature fusion. Entropy 2017, 19, 228.

| Categories | Type of Data | Number of Data (Training–Validation–Testing) | Method | Accuracy Value | Object/Action | Accident Type | References |
|---|---|---|---|---|---|---|---|
| Pose and gesture | Images | 4483–641–1281 | CNN | Accuracy: 0.93 | Excavator’s pose | (Struck-by) | [84] |
| | Images | N/A–N/A–3241 | CNN | Accuracy: 0.94; Precision: 0.96; Recall: 0.98 | Worker’s standing, walking, squatting, sitting, or bending | (Struck-by) | [85] |
| | Images | 2116–235–1008 | RNN | Accuracy: 0.96 | Ergonomic postures | WMSDs | [86] |
| | Images | 10,000–N/A–N/A | CNN | Accuracy: 0.91 | Workers’ and excavators’ status | Struck-by | [87] |
| | Images | N/A | CNN | Accuracy: 0.83 | Workers’ standing still, bending, ladder-climbing/stepping/standing | Fall | [88] |
| | Videos | N/A | CNN + RNN | F1-score: 0.83 | Ergonomic postures | WMSDs | [89] |
| | Signal | 2196 (training and testing) | RNN | Accuracy: 0.99; F1-score: 0.99 | Ergonomic postures | WMSDs | [90] |
| | Signal | 32,396 (60%–N/A–40%) | RNN | Accuracy: 0.95 | Workers’ standing, bending, squatting, walking, twisting, kneeling, and using stairs | WMSDs | [91] |
| | Images | N/A | CNN | Accuracy: 0.96 | Ergonomic postures | WMSDs | [92] |
| Action | Videos | 160–N/A–40 | CNN + RNN | Accuracy: 0.92 | Ladder-climbing actions | Fall | [15] |
| | Images | 1461–N/A–450 | CNN | Precision: 0.75; Recall: 0.9 | Worker traversing supports | Fall | [93] |
| Interaction | Videos | 10 (80%–10%–10%) | RNN | N/A | Worker–equipment interactions | Struck-by | [94] |
| | Videos | 5 (70%–10%–20%) | RNN | Accuracy: 0.9 | Excavators and dump truck interactions during earthmoving tasks | Struck-by | [95] |
| | Images | 2169 (training and validation)–241 | CNN | Precision: 0.87 | Worker–equipment interactions | Struck-by | [96] |
| | Images | 3652–N/A–913 | CNN | Precision: 0.66; Recall: 0.65 | Worker–tool interactions | General accident | [97] |
| | Images | 4114–N/A–398 | CNN | Precision: 0.91 | Worker–equipment interactions | Struck-by | [98] |
| | Images | 6000–N/A–N/A | CNN | Precision: 0.96; Recall: 0.93 | Worker–excavator interactions | (Struck-by) | [99] |
| | Images | 523,966–N/A–50,000 | CNN | Accuracy: 0.96; Precision: 0.98; Recall: 0.98; F1-score: 0.98 | Worker–equipment interactions | Struck-by | [2] |
| | Images | N/A | CNN | Recall: 0.5 | Components’ or crews’ relationships | General accident | [100] |
| | Videos | 8000–N/A–2000 | RNN | Accuracy: 0.95 | Worker–equipment interactions | Struck-by | [37] |
| | Videos | 210–70–84 | CNN + RNN | Accuracy: 0.93 | Hand signals for instructing tower crane operations | (Struck-by) | [101] |
| | Images | N/A | CNN | Precision: 1; Recall: 0.82 | Worker–equipment interactions | Struck-by | [102] |
| Activity | Images | 96–N/A–N/A | CNN | Precision: 0.52; Recall: 0.45; F1-score: 0.48 | Mixed activities of workers and equipment | General accident | [35] |
| | Images | 703–235–N/A | CNN | Accuracy: 0.86 | Scaffolding activity | Fall | [103] |
| | Videos | 7–N/A–3 | CNN + RNN | mAP: 0.73 | Earthmoving activity | (Struck-by) | [36] |
| | Images | N/A | CNN | Accuracy: 0.84 | Concrete pouring | (General accident) | [104] |
| Safety compliance | Images | 944–240–288 | CNN | mAP: 0.72 | PPE (hard hat, vest) | Fall and struck-by | [105] |
| | Images | 81,000–N/A–19,000 | CNN | Precision: 0.96; Recall: 0.95 | PPE (hard hat) | Fall and struck-by | [106] |
| | Images | 6029–N/A–6000 | CNN | Precision: 0.94; Recall: 0.83 | PPE (hard hat, glasses, dust mask, safety belt) | Fall and struck-by | [107] |
| | Images | 1587–N/A–1587 | CNN | mAP: 0.84 | PPE (hard hat) | (General accident) | [108] |
| | Images | 2583–N/A–726 | CNN | Accuracy: 0.9 | PPE (hard hat, vest) | Fall and struck-by | [109] |
| | Images | N/A | CNN | Precision: 0.9; Recall: 0.93 | PPE (hard hat, harness, anchorage) | Fall | [110] |
| | Images | 693–N/A–130 | CNN | Precision: 0.99; Recall: 0.95 | PPE (harness) | Fall | [111] |
| | Images | 8000–N/A–N/A | CNN | Precision: 0.83; Recall: 0.83 | Noncertified work of workers | (General accident) | [112] |
| | Images | 1366–N/A–N/A | CNN | mAP: 0.55 | PPE (hard hat) | Struck-by | [113] |
| | Images | 7000–N/A–200 | CNN | Precision: 0.91; Recall: 0.9 | PPE (hard hat) | (General accident) | [114] |
| | Images | 64,115–2693–N/A | CNN | mAP: 0.86 | PPE (hard hat) | Fall and struck-by | [115] |
| | Images | 1587–N/A–1587 | CNN | mAP: 0.87 | PPE (hard hat) | (General accident) | [116] |
| | Images | 100,000–N/A–N/A | CNN | mAP: 0.89 | PPE (hard hat, vest) | Struck-by | [117] |
| | Images | 9800–N/A–9000 | CNN | Accuracy: 0.94; Precision: 0.96; Recall: 0.96 | PPE (hard hat) | Struck-by | [118] |
| | Images | 13,000–N/A–1300 | CNN | mAP: 0.93 | PPE (hard hat) | Fall and struck-by | [119] |
| | Images | 20,554–N/A–1501 | CNN | mAP: 0.94 | PPE (hard hat) | (General accident) | [120] |
| | Images | 5000–N/A–1000 | CNN | mAP: 0.96 | PPE (hard hat) | (General accident) | [121] |
| | Images | N/A | CNN | mAP: 0.58 | PPE (hard hat) | Fall | [122] |

| Categories | Type of Data | Number of Data (Training–Validation–Testing) | Method | Accuracy Value | Object/Action | Accident Type | References |
|---|---|---|---|---|---|---|---|
| Work environment (WE) | Images | 4000–N/A–667 | CNN | Accuracy: 0.97 | Guardrail | Fall | [14] |
| | Images | N/A | CNN | Accuracy: 0.9 | Crane cracks | (General accident) | [135] |
| | Signal | N/A | CNN | N/A | Diaphragm wall deformation | (General accident) | [136] |
| | Signal | 55–N/A–15 | RNN | N/A | Brake pedal aperture for automatic wheel loader | (General accident) | [137] |
| | Images | 10,000–N/A–N/A | CNN | Accuracy: 0.95; Precision: 0.76 | Construction machines at nighttime | Struck-by | [138] |
| Site layout (SL) | Images | 240 (90%–N/A–10%) | CNN | mAP: 0.99 | Dense multiple construction vehicles | (General accident) | [139] |
| | Images | N/A | GNN | Accuracy: 0.95 | Safety-rule violations of complex construction scenes | (General accident) | [65] |
| Site condition (SC) | Signal | 600 (80%–N/A–20%) | RNN | Accuracy: 0.99 | Prediction of water inflow into drill and blast tunnels | (General accident) | [140] |

| Categories | Type of Data | Number of Data (Training–Validation–Testing) | Method | Accuracy Value | Object/Action | Accident Type | References |
|---|---|---|---|---|---|---|---|
| Safety management plan | Images | 10,000–N/A–1500 | CNN | Accuracy: 0.95 | Workers and excavators | (General accident) | [148] |
| | Images | 19,404–4000–18,264 | CNN | mAP: 0.55 | Moving object detection (workers and equipment) | Struck-by | [149] |
| | Images | 2324–26–231 | CNN | Recall: 0.86; mAP: 0.83 | Construction equipment | Struck-by | [150] |
| | Images | 34,510 (66%–17%–17%) | CNN + RNN | Precision: 0.99; Recall: 1; F1-score: 0.99 | Construction activity scenes | (General accident) | [151] |
| | Videos | 4–N/A–8 | CNN + RNN | Accuracy: 0.79 | Recognizing people’s identity | (General accident) | [152] |
| | Images | 2094–523–654 | CNN | mAP: 0.91 | Construction equipment | (Struck-by) | [153] |
| Accident investigation and analysis | Text | 95–N/A–50 | RNN | F1-score: 0.84 | Information extraction from accident reports | General accident | [38] |
| | Text | 2624–N/A–657 | GNN | Accuracy: 0.87; Precision: 0.51; Recall: 0.54 | Text classification of near-miss safety reports | General accident | [39] |
| | Text | 3000 | CNN | Precision: 0.8; Recall: 0.68; F1-score: 0.71 | Hazard record analysis | General accident | [154] |
| | Text | 90,000 (90%–N/A–10%) | RNN | F1-score: 0.87 | Automatically learning injury precursors | General accident | [16] |
| | Text | 2000 | CNN | Precision: 0.65; Recall: 0.61 | Classifying and visualizing accident narratives | General accident | [18] |
| | Images | 2000–N/A–N/A | CNN | Precision: 0.89; Recall: 0.93 | A gate scenario and an earthmoving scenario | General accident | [155] |
| Hazard identification and risk management | Images | 40,000 | CNN | N/A | Identifying hazards | General accident | [156] |
| | Images | 6000–N/A–1000 | CNN | Accuracy: 0.93 | Worker localization and hazard detection | General accident | [157] |

| Safety Factors | Methods | Accuracy Value | (Min–Max) Value | Mean Value | References |
|---|---|---|---|---|---|
| Behaviors | CNN | Accuracy | 0.83–0.96 | 0.91 | [2,15,84,85,87,88,92,101,103,104,109,118,148] |
| | | Precision | 0.52–0.99 | 0.88 | [2,35,85,93,96,97,98,99,102,106,107,110,111,112,114,118] |
| | | Recall | 0.45–0.98 | 0.84 | [2,35,85,93,97,99,100,102,106,107,110,111,112,114,118] |
| | | F1-score | 0.48–0.98 | 0.76 | [2,35,89] |
| | | mAP | 0.55–0.96 | 0.81 | [36,105,108,113,115,116,117,119,120,121,122] |
| | RNN | Accuracy | 0.90–0.99 | 0.95 | [15,37,86,90,91,95] |
| | | F1-score | 0.83–0.99 | 0.91 | [89,90] |
| | | mAP | 0.73 | | [36] |
| Physical conditions | CNN | Accuracy | 0.9–0.97 | 0.94 | [14,135,138] |
| | | Precision | 0.76 | | [138] |
| | | mAP | 0.99 | | [139] |
| | RNN | Accuracy | 0.99 | | [140] |
| | GNN | Accuracy | 0.95 | | [65] |
| Management issues | CNN | Accuracy | 0.79–0.95 | 0.89 | [148,152,157] |
| | | Precision | 0.65–0.99 | 0.83 | [18,151,154,155] |
| | | Recall | 0.61–1.0 | 0.82 | [18,150,151,154,155] |
| | | F1-score | 0.71–0.99 | 0.85 | [151,154] |
| | | mAP | 0.55–0.91 | 0.76 | [149,150,153] |
| | RNN | Accuracy | 0.79 | | [152] |
| | | Precision | 0.99 | | [151] |
| | | Recall | 1 | | [151] |
| | | F1-score | 0.84–0.99 | 0.90 | [16,38,151] |
| | GNN | Accuracy | 0.87 | | [39] |
| | | Precision | 0.51 | | [39] |
| | | Recall | 0.54 | | [39] |
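
As a quick check on how a summary row aggregates the per-study values, the following minimal sketch recomputes the CNN accuracy row for physical conditions from the three accuracies listed for [14], [135], and [138] in the physical-conditions table. The helper name `summarize` and the rounding to two decimals are assumptions made for illustration; the source does not state how the means were computed or rounded.

```python
from statistics import mean

# CNN accuracy values for physical-condition studies, copied from the
# physical-conditions table (references [14], [135], and [138]).
cnn_accuracy = {"[14]": 0.97, "[135]": 0.90, "[138]": 0.95}


def summarize(values):
    """Return (min, max, mean rounded to 2 decimals) for a set of scores.

    Hypothetical helper: the two-decimal rounding is an assumption.
    """
    vals = list(values)
    return min(vals), max(vals), round(mean(vals), 2)


low, high, avg = summarize(cnn_accuracy.values())
print(f"Min-max: {low}-{high}, mean: {avg}")
```

Under these assumptions the result agrees with the 0.9–0.97 range and 0.94 mean reported in the corresponding summary row.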

| Purposes | Contributions | Limitations | References |
|---|---|---|---|
| Detecting workers and equipment, estimating, recognizing, and analyzing their behaviors. | DL models can support monitoring safety and proactively preventing hazards by sending early warning information combined with the on-site alarm equipment to the management staff so they can provide instant feedback concerning unsafe behavior, and appropriate actions can be put in place to prevent reoccurrence. | The training dataset was limited. | [2,15,35,84,91,93,94,96,98,99,103,111,148,149,151,152,156,157] |
| | | The accuracy of the method is affected by the presence of occlusions, confusion with background patches, poor illumination, and blurriness. | [84,87,97,105,107,110,148,155,156] |
| | | They do not associate any personal identification with the output for verification. | [105,106,118,156,157] |
| | | Not mentioned. | [37,86,95,101,102,108,109,112,113,114,115,116,117,119,120,121,122,150,153] |
| | Proactive and automatic safety risk level can be analyzed and evaluated for making decisions on risk management. | The dataset was limited. | [36,89,94,99] |
| | | Cases of the on-site experiment failed due to visual obstacles. | [92] |
| | | Not mentioned. | [85,86,88,90,104] |
| | DL models support strategizing effective training solutions and designing effective hazard recognition and management practices. | The dataset was limited. | [93,99,100] |
| | | The accuracy of the method is affected by the presence of occlusions. | [93] |
| | | Individual workers need to be identified. | [157] |
| | The proposed method can be applied to operator assistance systems in construction machinery to achieve active safety. | The dataset was limited. | [2] |
| Detecting unsafe physical conditions. | DL models can support monitoring safety and early warning, so managers can provide the appropriate solutions to prevent or control risks. | The dataset was limited. | [14,140] |
| | | Occlusion was not addressed. | [14] |
| | | Not mentioned. | [65,135,136,137,138,139] |
| | Before the predicted deformation reaches the threshold limit, control strategies can be implemented to avoid excessive deformation and the corresponding risks to the engineering project and surrounding environment. | Not mentioned. | [136] |
| Investigating and analyzing safety reports. | The results can be used proactively during typical work planning, job hazard analyses, prejob meetings, and audits. | Not mentioned. | [16,39] |
| | DL models raise the security awareness of workers and professionals to better understand and prevent hazards and accidents, and aid in educating workers about “what not to do” and “what to do”. | The dataset was limited. | [18,38,154] |
| | | Not mentioned. | [16,39] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pham, H.T.T.L.; Rafieizonooz, M.; Han, S.; Lee, D.-E. Current Status and Future Directions of Deep Learning Applications for Safety Management in Construction. Sustainability 2021, 13, 13579. https://doi.org/10.3390/su132413579