Data Usage and Access Control in Industrial Data Spaces: Implementation Using FIWARE

Abstract

1. Introduction

2. Related Work

- Collection: Apache Kafka (Apache Kafka: https://kafka.apache.org/), Apache NiFi (Apache NiFi: https://nifi.apache.org/), Orion FIWARE Context Broker (FIWARE Orion: https://fiware-orion.readthedocs.io).

- Storage: CassandraDB (CassandraDB: http://cassandra.apache.org/), HDFS (HDFS: https://hadoop.apache.org/docs/r1.2.1/hdfs_design.html), MongoDB (MongoDB: https://www.mongodb.com/es).

- Processing and analytics: Apache Spark (Apache Spark: https://spark.apache.org/), Apache Flink (Apache Flink: https://flink.apache.org/), Apache Storm (Apache Storm: http://storm.apache.org/), FIWARE Cosmos (FIWARE Cosmos: https://fiware-cosmos.readthedocs.io).

- Application: Apache Zeppelin (Apache Zeppelin: https://zeppelin.apache.org/), Kibana (Kibana: https://www.elastic.co/es/kibana), GeoSpark (GeoSpark: https://github.com/DataSystemsLab/GeoSpark).

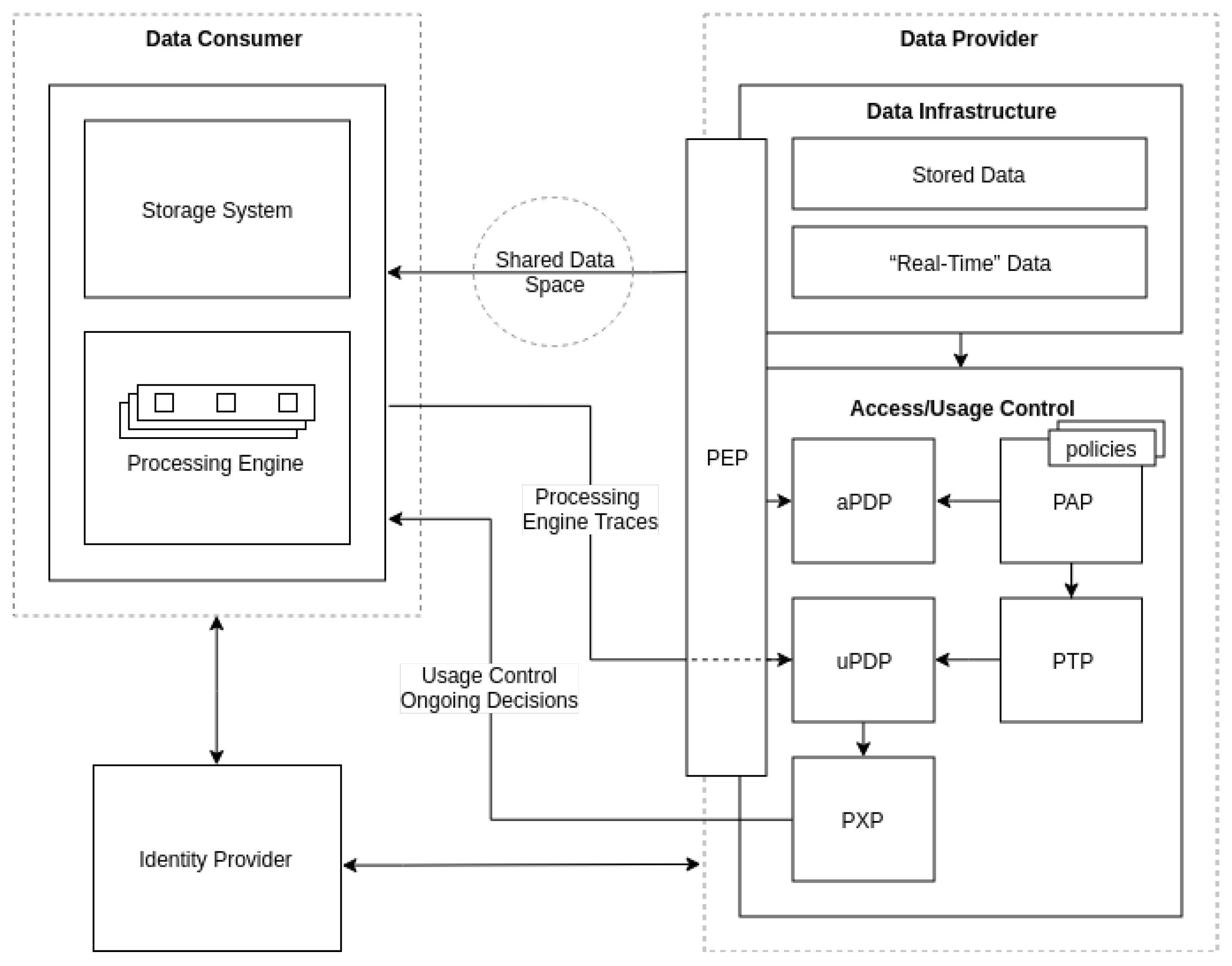

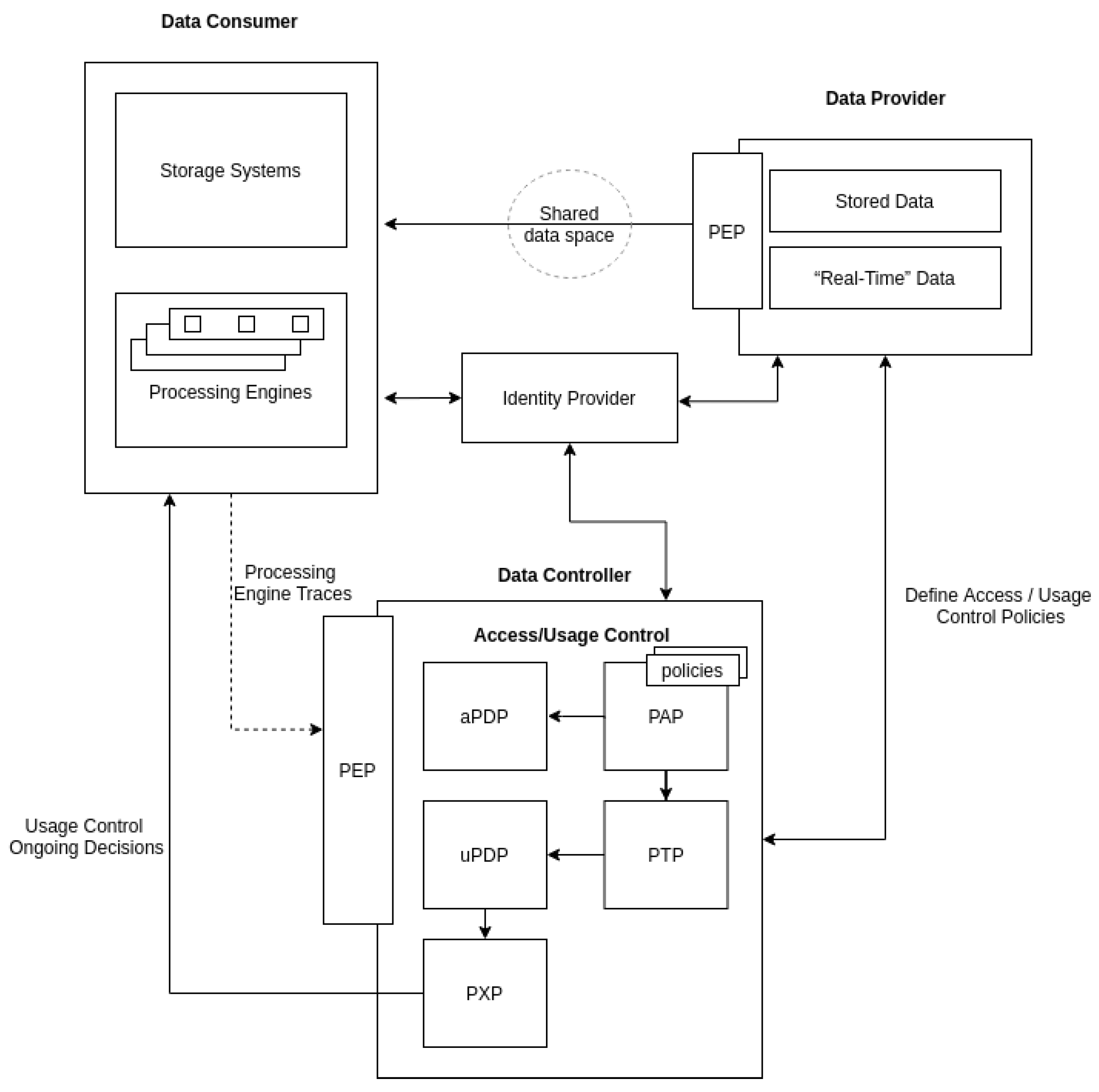

3. Proposed Solution

3.1. Design Principles

- Trust. IDS Connectors provide a trusted environment in which data Usage Control can be enforced [10].

- Interoperability. Standardization of protocols is crucial to ensure the understanding between all the components involved in the architecture, for managing both Usage Control and identity.

- Governance. Emerging data-centered businesses need to define data governance programs to exploit data in a cost-effective manner [49]. Data sharing should comply with the data governance rules defined by each of the organizations involved. Respecting and protecting the data of all parties is therefore one of the main requirements that data Usage Control must fulfill, and data providers must have access to monitoring and configuration tools that allow them to control what becomes of their data. However, as pointed out in [50], resources in collaborative systems can be administered by multiple data owners, so the data governance model, policy composition and conflict resolution all need to be addressed. Here, the “data governance model” defines the authority that entities have over a resource; “policy composition” describes how the authorization requirements authored by multiple entities are combined or reconciled to regulate access to a resource; and “conflict resolution” is the method used to resolve policy conflicts in order to reach a conclusive decision [50]. The preliminary version of the proposed architecture takes as a reference the work of Mahmudlu et al. [51], who define multiple ownership, authoritative and predefined mechanisms to address the governance model, policy composition and conflict resolution, respectively.

- Performance. Compliance with data Usage Control policies can only be ensured if the reaction to policy violations is quick and efficient.

- Flexibility. As many data-sharing scenarios and use cases are contemplated, the solution must be adaptable to the specific requirements of such scenarios.

3.2. Agents Involved

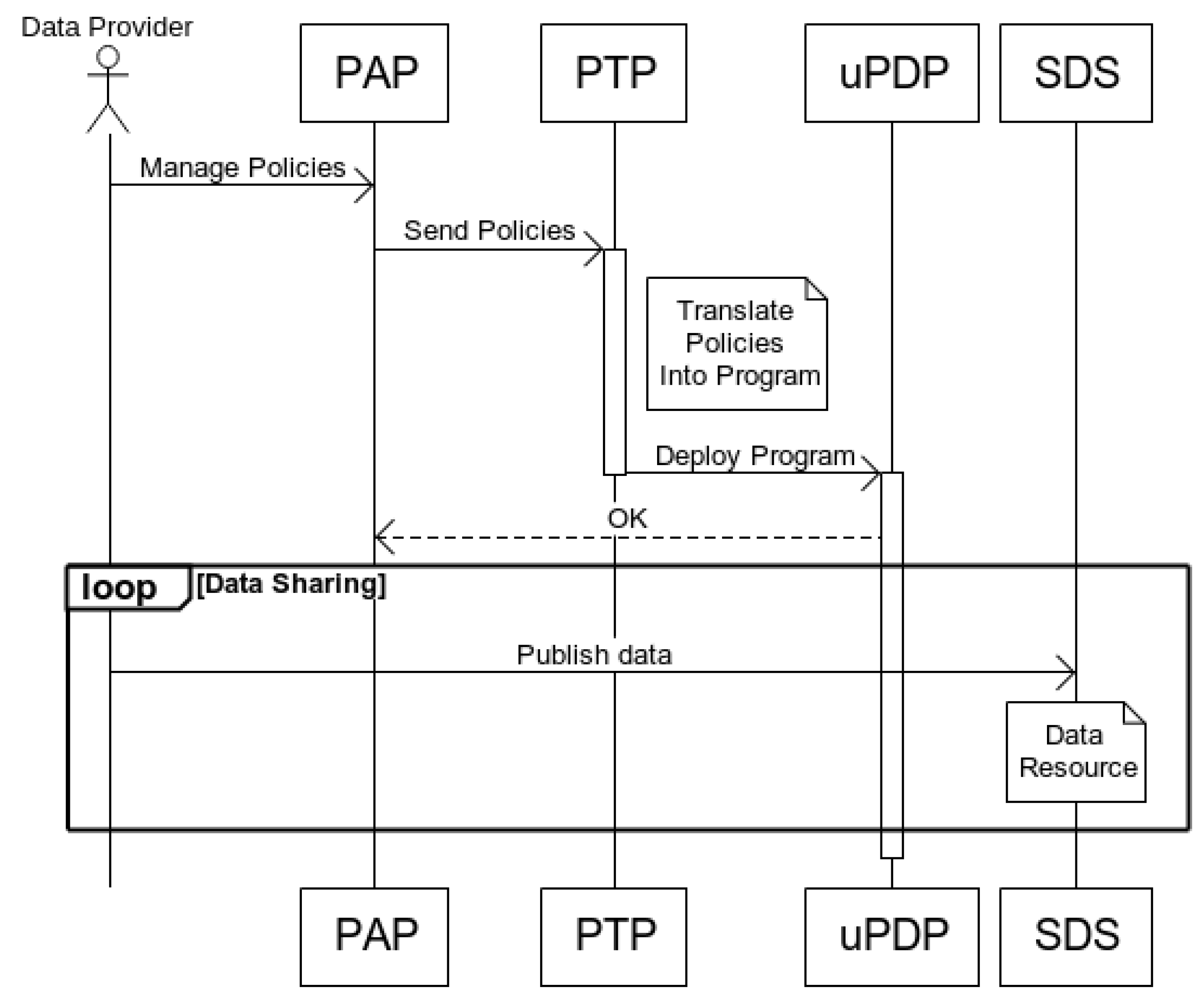

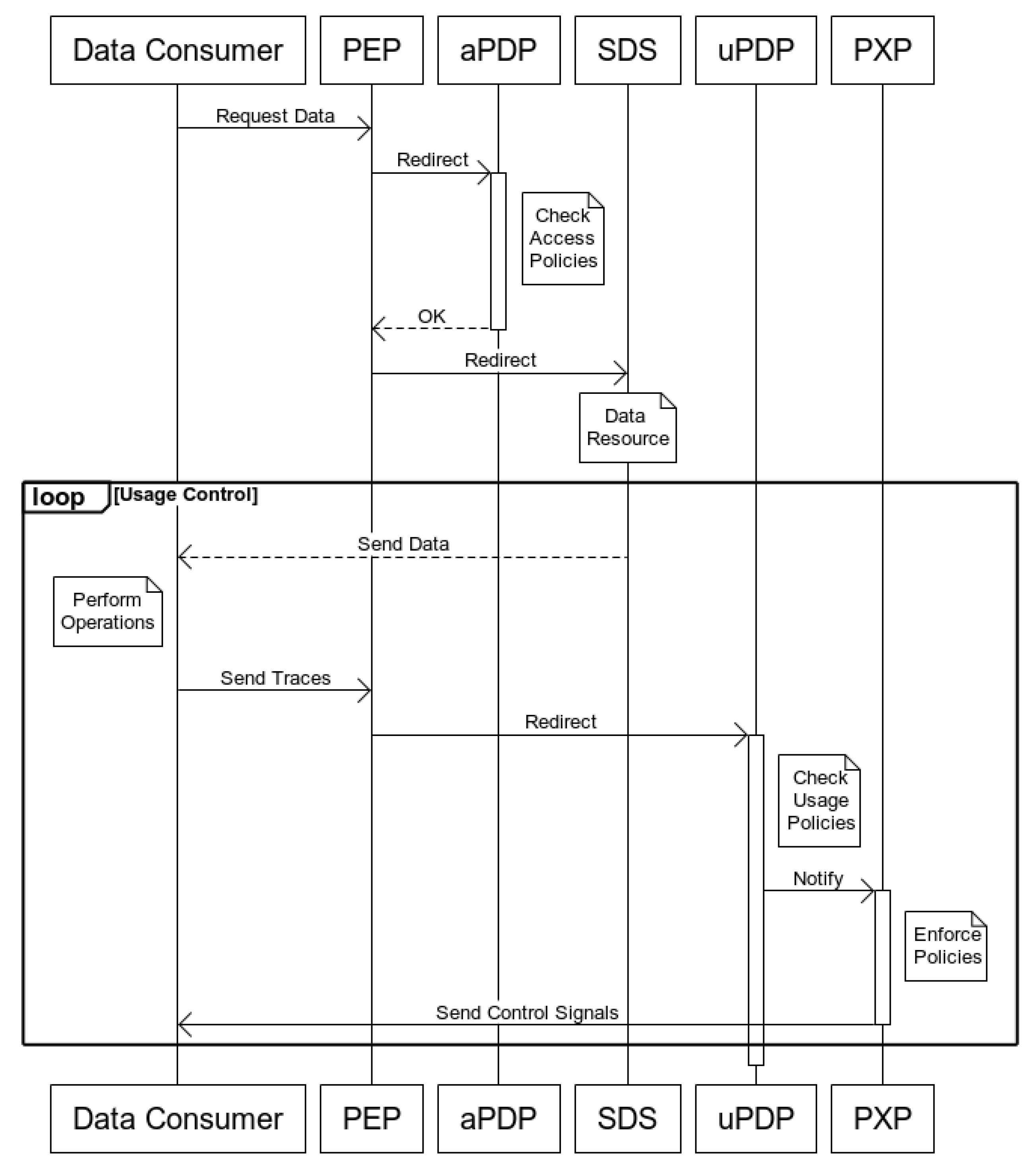

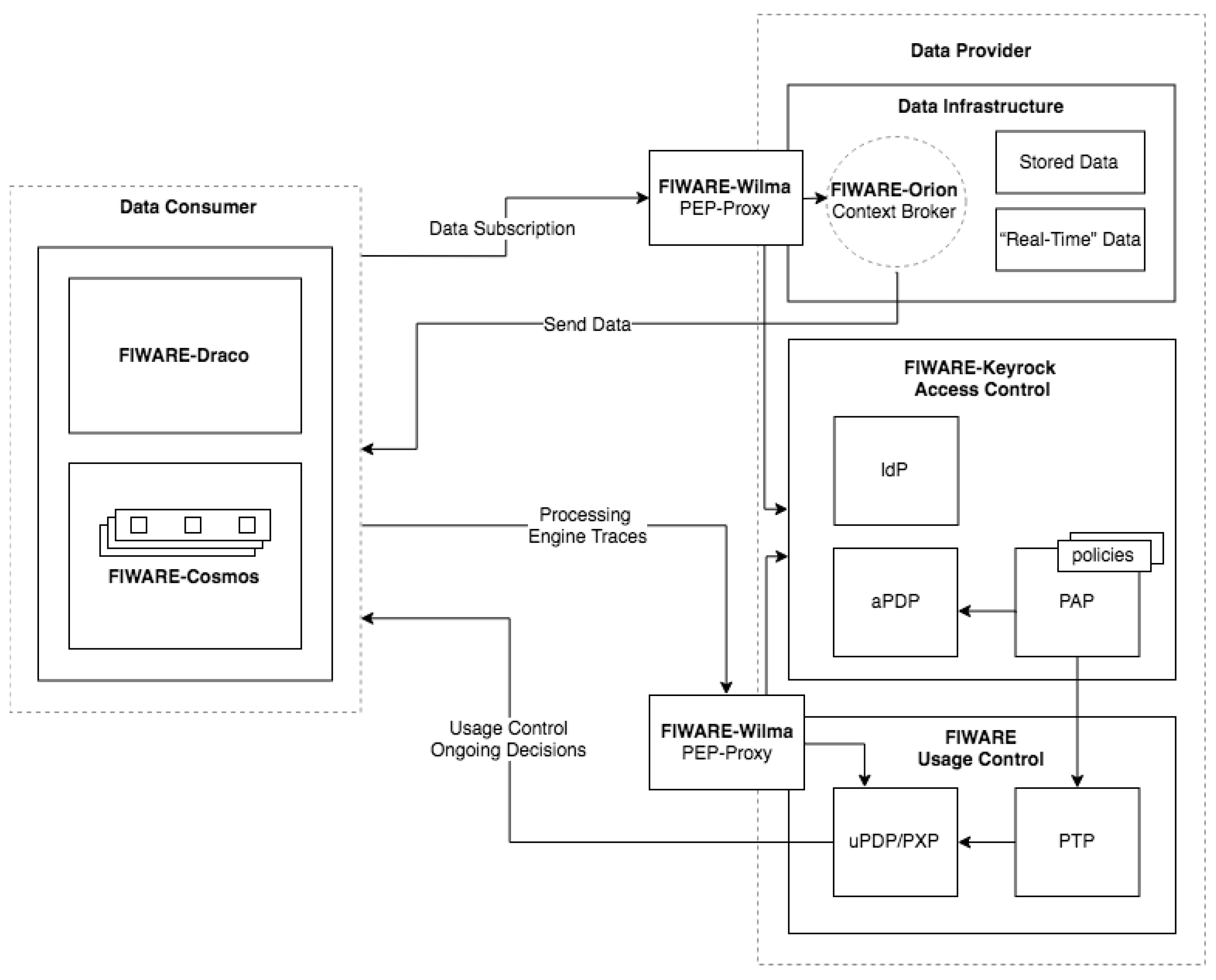

3.3. Architecture and Workflow

4. Implementation Using FIWARE

- Keyrock: The Keyrock GE (FIWARE Keyrock: https://fiware-idm.readthedocs.io) is responsible for Identity Management. It adds OAuth 2.0-based authentication and authorization to services and applications, as described in [13,14]. In this implementation, Keyrock plays the role of IdP, manages authorization policies (PAP) and decides which DCs can access which resources in the data infrastructure (aPDP). DPs and DCs therefore authenticate through Keyrock. Unequivocally identifying all the agents involved in the data usage architecture is mandatory for providing and consuming data securely. With Keyrock, DPs can create authorization policies that constrain DCs’ access to the data infrastructure.

- Wilma: The Wilma GE (FIWARE Wilma: https://fiware-pep-proxy.readthedocs.io) adds proxy functions to OAuth 2.0-based authentication schemas. It also implements PEP functions within an XACML-based Access Control schema [12]. This implementation needs two Wilma instances. The first enforces access policies on requests sent to the data infrastructure [17]. When a DC authenticates through Keyrock, an OAuth 2.0 token is generated, and the DC must include it in every request sent to the DP’s data infrastructure. Wilma intercepts each request and asks Keyrock to validate the token, thereby verifying the DC’s identity. Since Keyrock also acts as the aPDP, it checks the DC’s access authorization policies. If the DC’s request complies with the established policies, Wilma grants access to the requested resource. For data Usage Control, a second Wilma instance acts as a PEP proxy that authenticates the traces sent from the DC’s processing engine to the uPDP.

- AuthZForce: The AuthZForce GE (FIWARE AuthZForce: https://authzforce-ce-fiware.readthedocs.io) brings additional support to aPDP/PAP functions within an Access Control schema based on the XACML standard. It has not been included in the present implementation, but it could be used to create more advanced fine-grained authorization policies and to make decisions over requests received from PEPs.

- Orion: The Context Broker (Orion) GE (FIWARE Orion: https://fiware-orion.readthedocs.io) manages the entire lifecycle of context information including updates, queries, registrations and subscriptions. The Context Broker offers the FIWARE NGSI (Next Generation Service Interface) [54] APIs and associated information model (entity, attribute, metadata) as the main interface for sharing data among stakeholders. In addition to being the centerpiece of any platform “powered by FIWARE”, the Context Broker has been recognized as a CEF Building Block, which is one step forward on its path towards becoming a global standard for large scale contextual information management [55]. In the context of this implementation, it constitutes the DP’s data infrastructure and SDS, which enables the sharing of data between the DC and the DP in a secure way. In other words, the DP makes use of the NGSI API provided by the Orion Context Broker in order to publish or expose the data they have to offer, whereas DCs retrieve or subscribe to said data.

- Cosmos: The Cosmos GE (FIWARE Cosmos: https://fiware-cosmos-flink.readthedocs.io) provides an interface for integrating Apache Flink and Apache Spark with the rest of the components in the FIWARE Ecosystem. Over recent years, Apache Flink and Apache Spark have become two of the most popular open source data processing frameworks. Both provide a wide assortment of resources for processing data in streaming and batch modes. Since Apache Spark and Apache Flink provide similar functionalities, an implementation with only one of these frameworks is enough to validate the proposed architecture. Therefore, for the implementation presented in this work, we have relied on Apache Flink as the processing engine on the DC’s side, in charge of performing operations on the DP’s data.

- Draco: The Draco GE (FIWARE Draco: https://fiware-draco.readthedocs.io) is aimed at providing storage of historical context data, allowing the reception of data events and dynamically recording them with a predefined structure in several data storage systems. In the scope of this implementation, Draco is proposed as the building block for providing the storage system in the DC’s infrastructure.

- The PTP is a piece of software written in Python in charge of translating the ODRL usage policies defined through Keyrock into a Complex Event Processing (CEP) program using the Flink CEP Scala API. Every time the usage policies that apply to a certain DC are modified by the DP, a new program is generated by the PTP containing a CEP rule for each policy. This program is then compiled, packaged, and sent to the uPDP.

- The uPDP is an Apache Flink computation cluster that runs all the CEP programs generated by the PTP: one for each DC. These programs take advantage of the CEP capabilities of Apache Flink to verify whether the DC complies with the policies defined by the DP or not. This is done by analyzing the traces generated by the processing engine on the DC’s side (Apache Flink in this case) which are sent to the uPDP. In the event of noncompliance, the PXP is notified.

- The PXP is a piece of software that is notified each time the uPDP detects policy noncompliance. It is written in Scala and attached to each program that runs on the uPDP. The PXP enforces the control signal established by the DP for the unfulfilled policy. For instance, in order to stop a DC from receiving data as a punishment for policy noncompliance, the control signal sent by the PXP is an unsubscription request to Orion. If the DC is, in turn, processing data in an incorrect manner, one way to punish this policy violation would be to send a control signal that kills the processing job on the DC’s side. These are the control signals that have been implemented so far, but the goal is to extend the capabilities of the PXP to support custom control signals written by the DP.

- The DC sends a subscription request to the Orion Context Broker to retrieve data from the DP.

- The subscription request is intercepted by the access PEP Proxy and validated by the IdP and the aPDP, which check whether the token containing the user information is valid and whether the user has the right to access the requested resource.

- Once the subscription is done, the DC starts receiving data from the Orion Context Broker at the processing engine. The traces generated by the program, which contain all the operations performed on the data, are sent to the uPDP after the usage PEP Proxy has verified the DC’s identity. This instance of the PEP Proxy is also in charge of redirecting the traces to the specific uPDP CEP program. When translating the ODRL policies defined for a DC, the PTP generates a new CEP program and maps the port where it runs to that DC. Thus, after receiving the traces and verifying the DC’s identity, the PEP Proxy knows the port on which the corresponding CEP program is running and can redirect the traces to it. The uPDP then checks whether the DC complies with all the policies defined through the PAP. If it does not, the uPDP notifies the PXP, which sends the corresponding control signal.
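The subscription step and the PXP's unsubscription signal described above can be illustrated as plain HTTP requests. This is a minimal sketch, not the authors' code: the host names, ports, helper names and the use of the `X-Auth-Token` header are assumptions made for illustration, and it is also an assumption that the PXP addresses Orion directly. Only the `/v2/subscriptions` resource comes from the NGSI v2 API. The requests are built but never sent.

```python
import json
from urllib.request import Request

# Hypothetical endpoints; a real deployment defines its own hosts and ports.
ACCESS_PEP_URL = "http://access-pep.example.org:1027"
ORION_URL = "http://orion.example.org:1026"


def build_subscription_request(token, entity_type, notify_url):
    """DC side: subscribe to the DP's entities through the access PEP Proxy."""
    body = {
        "description": "DC subscription to DP data",
        "subject": {"entities": [{"idPattern": ".*", "type": entity_type}]},
        "notification": {"http": {"url": notify_url}},
    }
    return Request(
        ACCESS_PEP_URL + "/v2/subscriptions",
        data=json.dumps(body).encode("utf-8"),
        # The OAuth 2.0 token issued by the IdP travels with every request.
        headers={"Content-Type": "application/json", "X-Auth-Token": token},
        method="POST",
    )


def build_unsubscribe_request(subscription_id):
    """PXP side: control signal that removes the DC's subscription."""
    return Request(
        ORION_URL + "/v2/subscriptions/" + subscription_id,
        method="DELETE",
    )
```

Keeping request construction separate from sending makes both signals easy to inspect in isolation; an actual client would pass the objects to `urllib.request.urlopen`.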

5. Validation: A Case Study in the Food Industry

5.1. Scenario Overview

5.2. Policy Specification

- Policy A: The DC shall NOT save the data without first aggregating them every 15 min, or else the processing job will be terminated.

- Policy B: The DC shall NOT receive more than 200 notifications from the Context Broker in 1 min, or else the subscription to the entity will be deleted.
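To make the condition in Policy B concrete, the following sketch counts notifications in a sliding time window. It is an illustration in plain Python rather than the Flink CEP rule used by the uPDP; the class name, method name and the choice of a sliding (rather than tumbling) window are assumptions.

```python
from collections import deque


class NotificationRateMonitor:
    """Flags a violation when more than max_events arrive within window_s seconds."""

    def __init__(self, max_events=200, window_s=60.0):
        self.max_events = max_events
        self.window_s = window_s
        self._times = deque()

    def observe(self, t):
        """Record a notification at time t (seconds); return True on violation."""
        self._times.append(t)
        # Drop notifications that have left the window ending at t.
        while self._times and t - self._times[0] >= self.window_s:
            self._times.popleft()
        return len(self._times) > self.max_events
```

With the default parameters, the 201st notification inside any 60 s span is the first one reported as a violation.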

Listing 1: ODRL Specification of Policy A and Policy B.

```json
{
  "@context": ["http://www.w3.org/ns/odrl.jsonld",
               "http://keyrock.fiware.org/FIDusageML/profile/FIDusageML.jsonld"],
  "@type": "Set",
  "uid": "http://keyrock.fiware.org/FIDusageML/policy:1010",
  "profile": "http://keyrock.fiware.org/FIDusageML/profile",
  "obligation": [{
      "target": "http://orion.fiware.org/NGSInotification",
      "action": "aggregate",
      "constraint": [{
        "leftOperand": "WindowNotification",
        "operator": "gt",
        "rightOperand": {
          "@value": "PT15M",
          "@type": "xsd:duration"}
      }],
      "consequence": [{
        "action": "killJob",
        "value": "http://orion.fiware.org/NGSIkilljob"
      }]
    },
    {
      "target": "http://orion.fiware.org/NGSInotification",
      "action": "NGSIEventLimit",
      "constraint": [{
        "leftOperand": "NGSIevent",
        "operator": "lt",
        "rightOperand": {
          "@value": "200",
          "@type": "xsd:integer"}},
        {
        "leftOperand": "WindowNotification",
        "operator": "gt",
        "rightOperand": {
          "@value": "PT1M",
          "@type": "xsd:duration"}
      }],
      "consequence": [{
        "action": "unsubscribe",
        "value": "http://orion.fiware.org/NGSIunsubscribe"
      }]
    }
  ]
}
```
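As an illustration of the first step a translator such as the PTP has to perform, the sketch below reads the two obligations of Listing 1 and extracts the numeric parameters a CEP rule would need. It is not the PTP itself, which generates a Flink CEP Scala program; the function names, the output dictionary layout and the restriction to `PT…H…M…S` durations are assumptions.

```python
import json
import re

# Trimmed copy of the obligations in Listing 1.
POLICY_JSON = """
{"obligation": [
  {"action": "aggregate",
   "constraint": [{"leftOperand": "WindowNotification", "operator": "gt",
                   "rightOperand": {"@value": "PT15M", "@type": "xsd:duration"}}],
   "consequence": [{"action": "killJob"}]},
  {"action": "NGSIEventLimit",
   "constraint": [{"leftOperand": "NGSIevent", "operator": "lt",
                   "rightOperand": {"@value": "200", "@type": "xsd:integer"}},
                  {"leftOperand": "WindowNotification", "operator": "gt",
                   "rightOperand": {"@value": "PT1M", "@type": "xsd:duration"}}],
   "consequence": [{"action": "unsubscribe"}]}
]}
"""

_DURATION = re.compile(r"^PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?$")


def duration_to_seconds(value):
    """Convert a time-only xsd:duration such as 'PT15M' to seconds."""
    match = _DURATION.match(value)
    if not match or not any(match.groups()):
        raise ValueError("unsupported duration: " + value)
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


def extract_rule_parameters(policy):
    """Return one parameter dict per obligation in the policy."""
    rules = []
    for obligation in policy.get("obligation", []):
        params = {
            "action": obligation["action"],
            "punishment": obligation["consequence"][0]["action"],
        }
        for constraint in obligation.get("constraint", []):
            right = constraint["rightOperand"]
            if right["@type"] == "xsd:duration":
                params["window_seconds"] = duration_to_seconds(right["@value"])
            elif right["@type"] == "xsd:integer":
                params["max_events"] = int(right["@value"])
        rules.append(params)
    return rules


RULES = extract_rule_parameters(json.loads(POLICY_JSON))
```

For the policy above, `RULES` holds a 900 s window with a `killJob` punishment for the aggregation obligation, and a limit of 200 events in a 60 s window with an `unsubscribe` punishment for the event-limit obligation.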

Listing 2: uPDP code generated from the ODRL Specification by the PTP.

```scala
import org.apache.flink.cep.nfa.aftermatch.AfterMatchSkipStrategy
import org.apache.flink.cep.scala.CEP
import org.apache.flink.cep.scala.pattern.Pattern
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.api.windowing.time.Time

// `stream`, `Entity`, `ExecutionGraph`, `Policies`, `Policy`, `Signals` and
// `Punishment` are defined elsewhere in the generated program.

// Operation stream: execution-graph traces, split into chained operators
val operationStream: DataStream[ExecutionGraph] = stream
  .filter(_.isRight)
  .map(_.right.get)
  .flatMap(_.msg.split(" -> "))
  .map(ExecutionGraph)

// Entity stream: entities carried by the notification traces
val entityStream: DataStream[Entity] = stream
  .filter(_.isLeft)
  .map(_.left.get)
  .flatMap(_.entities)

// First pattern (Policy B): more than numMaxEvents events within facturationTime seconds
val countPattern = Pattern.begin[Entity]("events")
  .timesOrMore(Policies.numMaxEvents + 1)
  .within(Time.seconds(Policies.facturationTime))

CEP.pattern(entityStream, countPattern)
  .select(events =>
    Signals.createAlert(Policy.COUNT_POLICY, events, Punishment.UNSUBSCRIBE))

// Second pattern (Policy A): a source reaches a sink with no aggregation window in between
val aggregatePattern = Pattern
  .begin[ExecutionGraph]("start", AfterMatchSkipStrategy.skipPastLastEvent())
  .where(Policies.executionGraphChecker(_, "source"))
  .notFollowedBy("middle")
  .where(Policies.executionGraphChecker(_, "aggregation", Policies.aggregateTime))
  .followedBy("end")
  .where(Policies.executionGraphChecker(_, "sink"))
  .timesOrMore(1)

CEP.pattern(operationStream, aggregatePattern).select(events =>
  Signals.createAlert(Policy.AGGREGATION_POLICY, events, Punishment.KILL_JOB))
```
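The second pattern in Listing 2 (source, not followed by aggregation, followed by sink) can be read as a simple scan over the ordered operator chain. The sketch below expresses that reading in plain Python. It is an assumption that `Policies.executionGraphChecker` with an aggregation time accepts only windows at least as long as the required one; the function name and the `(kind, window_ms)` tuple layout are likewise hypothetical.

```python
def violates_aggregation_policy(operations, min_window_ms):
    """Return True if a sink is reached from a source without a long-enough aggregation.

    operations: list of (kind, window_ms) tuples in execution order, where kind
    is "source", "aggregation", "sink" or "other".
    """
    seen_source = False
    aggregated = False
    for kind, window_ms in operations:
        if kind == "source":
            # A new source starts a fresh chain.
            seen_source, aggregated = True, False
        elif kind == "aggregation" and seen_source:
            if window_ms is not None and window_ms >= min_window_ms:
                aggregated = True
        elif kind == "sink" and seen_source and not aggregated:
            return True
    return False
```

A chain such as `[("source", None), ("sink", None)]` is reported as a violation, whereas inserting `("aggregation", 900000)` between the two satisfies a 15 min requirement.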

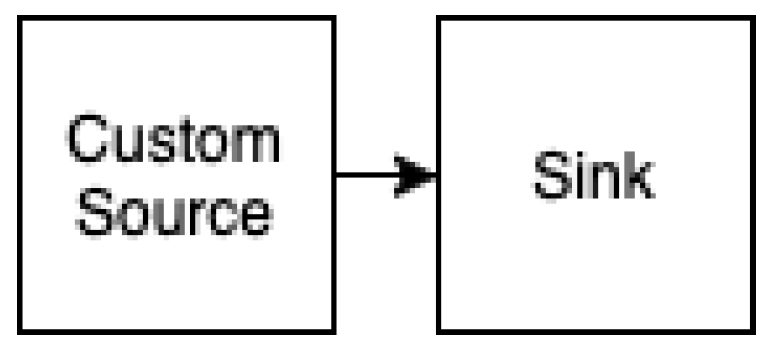

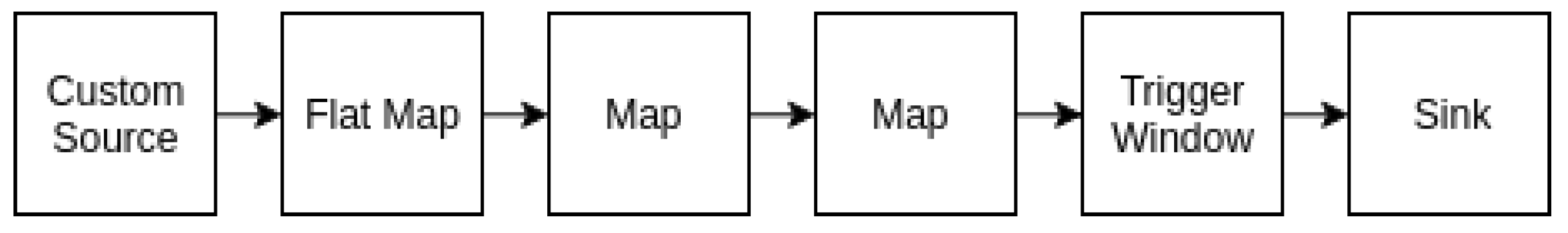

5.3. Data Processing and Policy Enforcement

Listing 3: Sample Execution Graph Log generated by the DC’s Flink processing engine for Job I.

```text
org.apache.flink.runtime.executiongraph.ExecutionGraph - Source: Custom Source -> Sink: Print to Std. Out (1/1) (5dd7fd1626577f325e61fe1effc996c2) switched from SCHEDULED to DEPLOYING.
```

Listing 4: Execution Graph Log generated by the DC’s Flink processing engine for Job II.

```text
2019-07-18 11:07:59.773 [flink-akka.actor.default-dispatcher-2] INFO org.apache.flink.runtime.executiongraph.ExecutionGraph - Source: Custom Source -> Flat Map -> Map -> Map (1/1) (b613fa2767c444587f05a3502b8fc7b0) switched from SCHEDULED to DEPLOYING.
2019-07-18 11:07:59.773 [flink-akka.actor.default-dispatcher-2] INFO org.apache.flink.runtime.executiongraph.ExecutionGraph - Deploying Source: Custom Source -> Flat Map -> Map -> Map (1/1) (attempt #0) to fc98c42cd3a0
2019-07-18 11:07:59.778 [flink-akka.actor.default-dispatcher-2] INFO org.apache.flink.runtime.executiongraph.ExecutionGraph - TriggerWindow(TumblingProcessingTimeWindows(15000), AggregatingStateDescriptor{name=window-contents, defaultValue=null, serializer=org.fiware.cosmos.orion.flink.cep.examples.example1.AveragePrice$$anon$26$$anon$11@3bbaf7ca}, ProcessingTimeTrigger(), AllWindowedStream.aggregate(AllWindowedStream.java:475)) -> Sink: Print to Std. Out (1/1) (de683f055949331f5602e94b306ae10d) switched from SCHEDULED to DEPLOYING.
```
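Execution-graph log lines like those in Listings 3 and 4 can be turned into an operator chain by splitting the message on ` -> `, as Listing 2 does with `_.msg.split(" -> ")`. The sketch below shows one way to do this in plain Python. The classification rules (prefixes `Source:` and `Sink:`, and a `TumblingProcessingTimeWindows(<ms>)` marker for aggregation) are assumptions derived only from these sample lines, and the function and constant names are hypothetical.

```python
import re

WINDOW_RE = re.compile(r"TumblingProcessingTimeWindows\s*\((\d+)\)")

# Sample lines copied from Listing 3 and from the last record of Listing 4.
LISTING_3_LINE = (
    "org.apache.flink.runtime.executiongraph.ExecutionGraph - "
    "Source: Custom Source -> Sink: Print to Std. Out (1/1) "
    "(5dd7fd1626577f325e61fe1effc996c2) switched from SCHEDULED to DEPLOYING."
)
LISTING_4_WINDOW_LINE = (
    "2019-07-18 11:07:59.778 [flink-akka.actor.default-dispatcher-2] INFO "
    "org.apache.flink.runtime.executiongraph.ExecutionGraph - "
    "TriggerWindow(TumblingProcessingTimeWindows(15000), "
    "AggregatingStateDescriptor{name=window-contents, defaultValue=null, "
    "serializer=org.fiware.cosmos.orion.flink.cep.examples.example1."
    "AveragePrice$$anon$26$$anon$11@3bbaf7ca}, ProcessingTimeTrigger(), "
    "AllWindowedStream.aggregate(AllWindowedStream.java:475)) -> "
    "Sink: Print to Std. Out (1/1) (de683f055949331f5602e94b306ae10d) "
    "switched from SCHEDULED to DEPLOYING."
)


def parse_operators(log_line):
    """Return [(kind, window_ms)] for the operator chain in one log line."""
    # Keep only the message that follows the logger name.
    _, _, message = log_line.partition("ExecutionGraph - ")
    if not message:
        return []
    operators = []
    for part in message.split(" -> "):
        part = part.strip()
        window = WINDOW_RE.search(part)
        if part.startswith("Source:"):
            operators.append(("source", None))
        elif part.startswith("Sink:"):
            operators.append(("sink", None))
        elif window:
            operators.append(("aggregation", int(window.group(1))))
        else:
            operators.append(("other", None))
    return operators
```

The resulting list of `(kind, window_ms)` tuples is the kind of input a policy check over the operator chain would consume.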

Listing 5: Sample notification Log generated by the DC’s Flink processing engine for Job II.

```text
2019-07-18 15:15:32.032 [nioEventLoopGroup-3-2] INFO
org.fiware.cosmos.orion.flink.connector.OrionHttpHandler -
{"creationTime":1563462932031,"fiwareService":"555",
"fiwareServicePath":"application/json; charset=utf-8",
"entities":[{"id":"ticket","type":"ticket","attrs":{"_id":{"type":"String","value":75,
"metadata":{}},"items":{"type":"object","value":[{"net_am":4.99,"n_unit":1,"desc":"BREAD"},{"net_am":
5.5,"n_unit":2,"desc":"PIZZA HAM/CHEESE"},{"net_am":2.39,"n_unit":1,"desc":"FRANKFURT SAUSAGES"},
{"net_am":0.05,"n_unit":1,"desc":"SHOPPING BAG"}],"metadata":{}},"mall":{"type":"String","value":1,
"metadata":{}},"date":{"type":"date","value":"01/14/2016","metadata":{}},"client":{"type":"int","value":
77053280208,"metadata":{}}}}],"subscriptionId":"5d308d139d5b4d3e64685da0"}
```
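The notification payload in Listing 5 is ordinary JSON, so the kind of per-ticket aggregate a DC job might compute can be sketched directly. The serializer name `AveragePrice` in Listing 4 suggests an average price, but the exact computation is not shown in the source; averaging the `net_am` field of each item is an assumption, as are the function names.

```python
import json

# Payload copied from Listing 5 (the log prefix is omitted).
NOTIFICATION_JSON = (
    '{"creationTime":1563462932031,"fiwareService":"555",'
    '"fiwareServicePath":"application/json; charset=utf-8",'
    '"entities":[{"id":"ticket","type":"ticket","attrs":{'
    '"_id":{"type":"String","value":75,"metadata":{}},'
    '"items":{"type":"object","value":['
    '{"net_am":4.99,"n_unit":1,"desc":"BREAD"},'
    '{"net_am":5.5,"n_unit":2,"desc":"PIZZA HAM/CHEESE"},'
    '{"net_am":2.39,"n_unit":1,"desc":"FRANKFURT SAUSAGES"},'
    '{"net_am":0.05,"n_unit":1,"desc":"SHOPPING BAG"}],"metadata":{}},'
    '"mall":{"type":"String","value":1,"metadata":{}},'
    '"date":{"type":"date","value":"01/14/2016","metadata":{}},'
    '"client":{"type":"int","value":77053280208,"metadata":{}}}}],'
    '"subscriptionId":"5d308d139d5b4d3e64685da0"}'
)


def item_prices(notification):
    """Collect the net_am value of every item in every notified entity."""
    prices = []
    for entity in notification["entities"]:
        for item in entity["attrs"]["items"]["value"]:
            prices.append(item["net_am"])
    return prices


def average(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


PRICES = item_prices(json.loads(NOTIFICATION_JSON))
AVERAGE_PRICE = average(PRICES)
```

In a streaming job the same aggregate would be computed per window rather than per notification.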

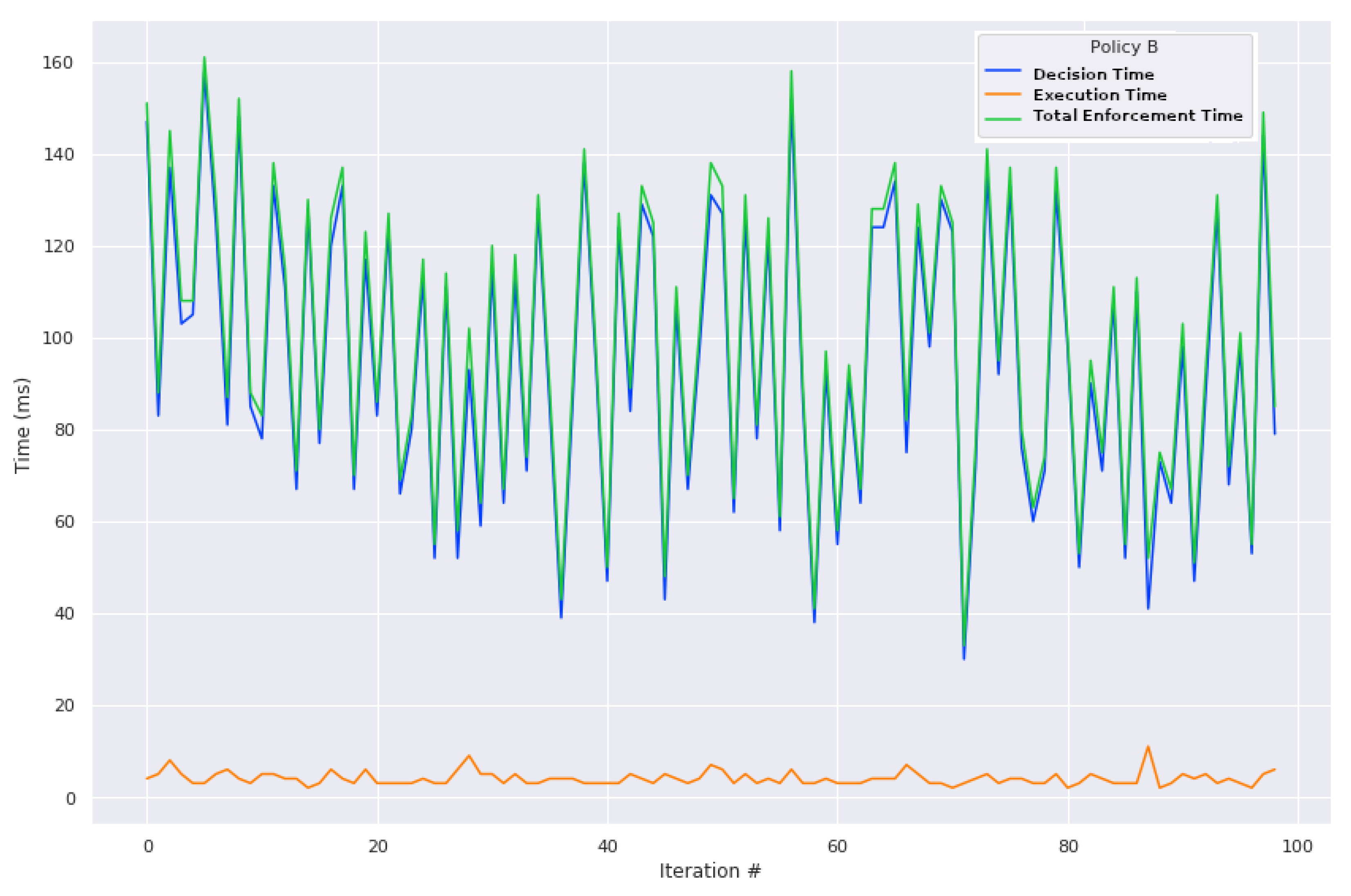

5.4. Results

- Decision time (Td): Time between the policy infringement on the DC’s side and the detection of said infringement at the uPDP on the DP’s side.

- Execution time (Tx): Time between the policy infringement detection at the uPDP and the execution of the punishment at the PXP.

- Total enforcement time (Tt): Sum of Td and Tx.
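The three metrics follow directly from three timestamps per run: the infringement, its detection at the uPDP, and the execution of the punishment at the PXP. The sketch below computes them and summarizes several runs; the function names are hypothetical, timestamps are assumed to be in milliseconds on a common clock, and the use of the sample standard deviation is an assumption about how SD is obtained.

```python
from statistics import mean, stdev


def enforcement_times(t_infringement, t_detection, t_execution):
    """Return (Td, Tx, Tt) for one run, in the unit of the timestamps."""
    td = t_detection - t_infringement   # decision time
    tx = t_execution - t_detection      # execution time
    return td, tx, td + tx              # total enforcement time


def summarize(runs):
    """Return {"Td": (M, SD), "Tx": (M, SD), "Tt": (M, SD)} over several runs.

    runs: list of (t_infringement, t_detection, t_execution) tuples; at least
    two runs are needed for the sample standard deviation.
    """
    triples = [enforcement_times(*run) for run in runs]
    names = ("Td", "Tx", "Tt")
    return {
        name: (mean(t[i] for t in triples), stdev(t[i] for t in triples))
        for i, name in enumerate(names)
    }
```

Because Tt is the sum of Td and Tx in every run, the mean of Tt always equals the sum of the means of Td and Tx, while the standard deviations do not add in the same way.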

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Jeschke, S.; Brecher, C.; Meisen, T.; Özdemir, D.; Eschert, T. Industrial Internet of Things and Cyber manufacturing systems. In Ind. Internet Things; Springer: Berlin/Heidelberg, Germany, 2017; pp. 3–19.
2. Lu, Y. Industry 4.0: A survey on technologies, applications and open research issues. J. Ind. Inf. Integr. 2017, 6, 1–10.
3. Mosavi, A.; Vaezipour, A. Developing Effective Tools for Predictive Analytics and Informed Decisions; Technical Report; University of Tallinn: Tallinn, Estonia, 2013.
4. Tiwari, V. Study of Internet of Things (IoT): A Vision, Architectural Elements, and Future Directions. Int. J. Adv. Res. Comp. Sci. 2016, 7, 65–84.
5. Kagermann, H.; Helbig, J.; Hellinger, A.; Wahlster, W. Recommendations for Implementing the Strategic Initiative INDUSTRIE 4.0: Securing the Future of German Manufacturing Industry; Final Report of the Industrie 4.0 Working Group; Forschungsunion: Essen, Germany, 2013.
6. Mosavi, A.; Lopez, A.; Varkonyi-Koczy, A.R. Industrial applications of big data: State of the art survey. In International Conference on Global Research and Education; Springer: Berlin/Heidelberg, Germany, 2017; pp. 225–232.
7. Sandhu, R.S.; Samarati, P. Access control: Principle and practice. IEEE Comm. Mag. 1994, 32, 40–48.
8. Sandhu, R.; Park, J. Usage Control: A Vision for Next Generation Access Control. In Computer Network Security, Proceedings of the 2nd International Workshop on Mathematical Methods, Models, and Architectures for Computer Network Security, MMM-ACNS 2003, St. Petersburg, Russia, 21–23 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 17–31.
9. Voigt, P.; von dem Bussche, A. The EU General Data Protection Regulation (GDPR). A Practical Guide; Springer: Berlin/Heidelberg, Germany, 2017.
10. Otto, B.; Lohmann, S.; Steinbuss, S.; Teuscher, A. IDS Reference Architecture Model Version 2.0; Technical Report; Fraunhofer: Munich, Germany, 2018.
11. Bettini, C.; Jajodia, S.; Wang, X.S.; Wijesekera, D. Provisions and Obligations in Policy Rule Management. J. Netw. Syst. Manag. 2003, 11, 351–372.
12. OASIS Standard. eXtensible Access Control Markup Language (XACML) Version 3.0. Available online: http://docs.oasis-open.org/xacml/3.0/xacml-3.0-core-spec-os-en.pdf (accessed on 3 June 2019).
13. Alonso, Á.; Fernández, F.; Marco, L.; Salvachúa, J. IAACaaS: IoT Application-Scoped Access Control as a Service. Futur. Internet 2017, 9, 64.
14. Fernández, F.; Alonso, Á.; Marco, L.; Salvachúa, J. A model to enable application-scoped access control as a service for IoT using OAuth 2.0. In Proceedings of the 2017 20th Conference on Innovations in Clouds, Internet and Networks (ICIN), Paris, France, 7–9 March 2017; pp. 322–324.
15. Munoz-Arcentales, A.; López-Pernas, S.; Pozo, A.; Álvaro, A.; Salvachúa, J.; Huecas, G. An Architecture for Providing Data Usage and Access Control in Data Sharing Ecosystems. Procedia Comput. Sci. 2019, 160, 590–597.
16. Ravidas, S.; Lekidis, A.; Paci, F.; Zannone, N. Access control in Internet-of-Things: A survey. J. Netw. Comp. Appl. 2019, 144, 79–101.
17. Alonso, Á.; Pozo, A.; Cantera, J.M.; la Vega, F.; Hierro, J.J. Industrial Data Space Architecture Implementation Using FIWARE. Sensors 2018, 18, 2226.
18. Xu, L.D.; Duan, L. Big data for cyber physical systems in industry 4.0: A survey. Ent. Inf. Syst. 2019, 13, 148–169.
19. Lee, J.; Kao, H.A.; Yang, S. Service innovation and smart analytics for industry 4.0 and big data environment. Procedia Cirp 2014, 16, 3–8.
20. Yin, S.; Kaynak, O. Big data for modern industry: Challenges and trends [point of view]. Proc. IEEE 2015, 103, 143–146.
21. Mourtzis, D.; Vlachou, E.; Milas, N. Industrial Big Data as a result of IoT adoption in manufacturing. Procedia Cirp 2016, 55, 290–295.
22. Gölzer, P.; Cato, P.; Amberg, M. Data Processing Requirements of Industry 4.0-Use Cases for Big Data Applications. In Proceedings of the ECIS 2015, Münster, Germany, 26–29 May 2015.
23. Gokalp, M.O.; Kayabay, K.; Akyol, M.A.; Eren, P.E.; Koçyiğit, A. Big data for industry 4.0: A conceptual framework. In Proceedings of the 2016 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 15–17 December 2016; pp. 431–434.
24. Osman, A.M.S. A novel big data analytics framework for smart cities. Future Gener. Comp. Syst. 2019, 91, 620–633.
25. Zhu, J.Y.; Tang, B.; Li, V.O. A five-layer architecture for big data processing and analytics. Int. J. Big Data Int. 2019, 6, 38–49.
26. NGSI-LD, E. Context Information Management (CIM) and Application Programming Interface (API). ETSI GS CIM 2018, 4, V1.
27. Russello, G.; Dulay, N. xDUCON: Cross Domain Usage Control through Shared Data Spaces. In Proceedings of the 2009 IEEE International Symposium on Policies for Distributed Systems and Networks, London, UK, 20–22 July 2009; pp. 178–181.
28. Russello, G.; Dulay, N. xDUCON: Coordinating Usage Control Policies in Distributed Domains. In Proceedings of the 2009 Third International Conference on Network and System Security, Gold Coast, QLD, Australia, 19–21 October 2009; pp. 246–253.
29. Cerbo, F.D.; Some, D.; Gomez, L.; Trabelsi, S. PPL v2.0: Uniform Data Access and Usage Control on Cloud and Mobile. In Proceedings of the 2015 IEEE/ACM 1st International Workshop on TEchnical and LEgal aspects of data pRivacy and SEcurity, Florence, Italy, 18 May 2015; pp. 2–7.
30. Ardagna, C.A.; Bussard, L.; De Capitani di Vimercati, S.; Neven, G.; Pedrini, E.; Paraboschi, S.; Preiss, F.; Samarati, P.; Trabelsi, S.; Verdicchio, M. PrimeLife Policy Language. In Proceedings of the W3C Work Access Control Appl. Scenar., Luxembourg, 17–18 November 2009.
31. Jiao, D.; Lianzhong, L.; Ting, L.; Shilong, M. Realization of UCON Model Based on Extended-XACML. In Proceedings of the 2011 International Conference on Future Computer Sciences and Application, Hong Kong, China, 18–19 June 2011; pp. 90–93.
32. Lazouski, A.; Mancini, G.; Martinelli, F.; Mori, P. Usage control in cloud systems. In Proceedings of the 2012 International Conference for Internet Technology and Secured Transactions, London, UK, 10–12 December 2012; pp. 202–207.
33. Wu, J.; Dong, M.; Ota, K.; Tariq, M.; Guo, L. Cross-Domain Fine-Grained Data Usage Control Service for Industrial Wireless Sensor Networks. IEEE Access 2015, 3, 2939–2949.
34. Marra, A.L.; Martinelli, F.; Mori, P.; Saracino, A. Implementing Usage Control in Internet of Things: A Smart Home Use Case. In Proceedings of the 2017 IEEE Trustcom/BigDataSE/ICESS, Sydney, NSW, Australia, 1–4 August 2017; pp. 1056–1063.
35. Bertolino, A.; Calabrò, A.; Lonetti, F.; Sabetta, A. Glimpse: A generic and flexible monitoring infrastructure. In Proceedings of the 13th European Workshop on Dependable Computing (EWDC), Pisa, Italy, 11–12 May 2011.
36. Barsocchi, P.; Calabrò, A.; Ferro, E.; Gennaro, C.; Marchetti, E.; Vairo, C. Boosting a low-cost smart home environment with usage and access control rules. Sensors 2018, 18, 1886.
37. Gkioulos, V.; Rizos, A.; Michailidou, C.; Mori, P.; Saracino, A. Enhancing Usage Control for Performance: An Architecture for Systems. In Comp. Sec.; Katsikas, S.K., Cuppens, F., Cuppens, N., Lambrinoudakis, C., Antón, A., Gritzalis, S., Mylopoulos, J., Kalloniatis, C., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 69–84.
38. Martinelli, F.; Michailidou, C.; Mori, P.; Saracino, A. Managing QoS in Smart Buildings Through Software Defined Network and Usage Control. In Proceedings of the 2019 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), Kyoto, Japan, 11–15 March 2019; pp. 626–632.
39. Petković, M.; Prandi, D.; Zannone, N. Purpose control: Did you process the data for the intended purpose? In Workshop on Secure Data Management; Springer: Berlin/Heidelberg, Germany, 2011; pp. 145–168.
40. Poullet, Y. EU data protection policy. The Directive 95/46/EC: Ten years after. Comput. Law Secur. Rev. 2006, 22, 206–217.
41. Bartolini, C.; Daoudagh, S.; Lenzini, G.; Marchetti, E. Towards a lawful authorized access: A preliminary GDPR-based authorized access. In Proceedings of the ICSOFT 2019, Prague, Czech Republic, 26–28 July 2019; pp. 26–28.
42. Bartolini, C.; Daoudagh, S.; Lenzini, G.; Marchetti, E. GDPR-Based User Stories in the Access Control Perspective. In Quality of Information and Communications Technology, Proceedings of the 12th International Conference, QUATIC 2019, Ciudad Real, Spain, 11–13 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 3–17.
43. Calabró, A.; Daoudagh, S.; Marchetti, E. Integrating Access Control and Business Process for GDPR Compliance: A Preliminary Study. In Proceedings of the ITASEC 2019, Pisa, Italy, 13–15 February 2019.
44. Arfelt, E.; Basin, D.; Debois, S. Monitoring the GDPR. In Comp. Sec.–ESORICS 2019; Sako, K., Schneider, S., Ryan, P.Y.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 681–699.
45. Basin, D.; Harvan, M.; Klaedtke, F.; Zălinescu, E. MONPOLY: Monitoring Usage-Control Policies. In Runt. Verif.; Khurshid, S., Sen, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 360–364.
46. Neisse, R.; Steri, G.; Nai-Fovino, I. A Blockchain-Based Approach for Data Accountability and Provenance Tracking. In Proceedings of the 12th International Conference on Availability, Reliability and Security, ARES ’17; Association for Computing Machinery: New York, NY, USA, 2017.
47. Outchakoucht, A.; Hamza, E.; Leroy, J.P. Dynamic access control policy based on blockchain and machine learning for the internet of things. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 417–424.
48. Ouaddah, A.; Abou Elkalam, A.; Ait Ouahman, A. FairAccess: A new Blockchain-based access control framework for the Internet of Things. Sec. Comm. Netw. 2016, 9, 5943–5964.
49. Panian, Z. Some practical experiences in data governance. World Acad. Sci. Eng. Technol. 2010, 62, 939–946.
50. Paci, F.; Squicciarini, A.; Zannone, N. Survey on access control for community-centered collaborative systems. ACM Comp. Surv. 2018, 51, 1–38.
51. Mahmudlu, R.; den Hartog, J.; Zannone, N. Data governance and transparency for collaborative systems. In Data and Applications Security and Privacy XXX, Proceedings of the 30th Annual IFIP WG 11.3 Conference, DBSec 2016, Trento, Italy, 18–20 July 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 199–216.
52. European Data Protection Supervisor. European Data Protection Supervisor Glossary. 2019. Available online: https://edps.europa.eu/data-protection/data-protection/glossary/d_en (accessed on 3 June 2019).
53. McRoberts, M.; Rodriguez Doncel, V. Open Digital Rights Language (ODRL) Ontology; Technical Report; W3C: Cambridge, MA, USA, 2014.
54. Open Mobile Alliance. NGSI Context Management. Available online: http://www.openmobilealliance.org/release/NGSI/V1_0-20120529-A/OMA-TS-NGSI_Context_Management-V1_0-20120529-A.pdf (accessed on 8 July 2019).
55. Digital CEF. Context Broker, Make Data-Driven Decisions in Real Time, at the Right Time. Available online: https://ec.europa.eu/cefdigital/wiki/display/CEFDIGITAL/Context+Broker (accessed on 3 September 2019).
56. Rescorla, E. HTTP Over TLS; RFC 2818, RFC Editor; California, United States. 2000. Available online: https://tools.ietf.org/html/rfc2818 (accessed on 1 May 2020).
57. Teixeira, A.; Pérez, D.; Sandberg, H.; Johansson, K.H. Attack models and scenarios for networked control systems. In Proceedings of the 1st International Conference on High Confidence Networked Systems, Beijing, China, 17–18 April 2012; pp. 55–64.
58. Steyskal, S.; Polleres, A. Towards Formal Semantics for ODRL Policies. In Rule Tech. Found., Tools, App.; Bassiliades, N., Gottlob, G., Sadri, F., Paschke, A., Roman, D., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 360–375.
59. De Vos, M.; Kirrane, S.; Padget, J.; Satoh, K. ODRL policy modelling and compliance checking. In Rules and Reasoning, Proceedings of the Third International Joint Conference, RuleML+RR 2019, Bolzano, Italy, 16–19 September 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 36–51.

| References | Access Control | Remediation | PSL | Multi-Actor | Application Domain |
|---|---|---|---|---|---|
| [27] | ABAC | | Cross-Domain Policy (xDPolicy) | ✓ | Generic |
| [29,30] | ABAC | | PPL, XACML | ✓ | Cloud, mobile |
| [31,32] | ABAC, RBAC, IBAC | | U-XACML | ✓ | Cloud |
| [33] | RBAC | | | | Industrial WSNs |
| [34] | ABAC | ✓ | U-XACML | | IoT |
| [36] | ABAC, RBAC | ✓ | Drools Rule Language (DRL) | ✓ | IoT |
| [37] | ABAC | ✓ | U-XACML | | IoT |
| [38] | ABAC, RBAC | | | | SDN |
| [39] | ABAC | ✓ | ad-hoc | | Generic |

| | Policy A (M) | Policy A (SD) | Policy B (M) | Policy B (SD) |
|---|---|---|---|---|
| Td (ms) | 125.8 | 33.8 | 94.6 | 31.4 |
| Tx (ms) | 3.7 | 5.0 | 4.0 | 1.5 |
| Tt (ms) | 129.5 | 34.5 | 98.6 | 31.6 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Munoz-Arcentales, A.; López-Pernas, S.; Pozo, A.; Alonso, Á.; Salvachúa, J.; Huecas, G. Data Usage and Access Control in Industrial Data Spaces: Implementation Using FIWARE. Sustainability 2020, 12, 3885. https://doi.org/10.3390/su12093885
