Abstract

Artificial intelligence (AI) has been applied to various decision-making tasks. However, scholars have yet to understand how computers can integrate decision making with uncertainty management. Such an understanding would enable scholars to deliver sustainable AI decision-making applications that adapt to a changing world. This research examines uncertainties in AI-enabled decision-making applications and approaches for managing various types of uncertainty. Drawing on studies of uncertainty in decision making, this research describes three dimensions of uncertainty: informational, environmental, and intentional. To understand how uncertainty is managed in AI-enabled decision-making applications, the authors conducted a literature review, using content analysis to identify practical approaches. Based on the analysis results, a mechanism built on those practical approaches is proposed for managing diverse types of uncertainty in AI-enabled decision making.

1. Introduction

Various artificial intelligence (AI) technologies have been rapidly developed and deployed for an array of crucial decision-making tasks, and stakeholders hold high expectations that AI can deliver excellent decisions. AI-enabled decision-making applications have helped enhance human quality of life. For example, the autonomous vehicle is a novel AI application that provides convenient transport services by autonomously analyzing road conditions and making driving decisions. Autonomous driving technology is expected to provide benefits such as greater convenience, time efficiency, reduced congestion, and more efficient use of traffic resources. However, since the testing of autonomous vehicles on public roads was first permitted, numerous accidents and problems have occurred. Even when equipped with the most advanced camera and sensing technologies for object recognition, autonomous vehicles still cannot perfectly anticipate every trajectory of surrounding objects. Nor can they accurately identify animals on the road if their databases lack descriptive data on them [1]. When an autonomous vehicle encounters an unfamiliar situation (such as heavy rain, flooding, or mud puddles), the uncertainty of the road situation increases, and so does the risk to passengers. Furthermore, autonomous vehicles may pose safety risks if their algorithmic decision-making mechanisms cannot address ethical challenges [2]. These scenarios indicate that AI applications cannot anticipate every situation and must inevitably deal with various uncertainties in the decision-making process. The uncertainty challenge is therefore critical. AI technologies must grapple with uncertainty to adapt to the changing world in the long term [3] and to ensure the sustainability of AI-enabled decision-making applications.

Decision making is a process that enables people to gather intelligence, design alternatives, and make choices to achieve a purpose [4,5]. Decisions vary in scope, context, frequency, and influence. A wider decision scope results in greater decision influence, and frequent, sophisticated situational changes introduce numerous uncertainties into decision making. Decision making relies heavily on information and knowledge. However, collecting information and diffusing knowledge require considerable investments of resources (e.g., time, stamina, and attention). The acquisition, storage, and application of information often exceed the limits of the human brain and burden people’s decision-making capabilities. With the assistance of advanced computing, programming, and networking technologies, people can process data efficiently, which in turn fosters technology adoption.

In the past, computers mainly served as decision supporters, offering functions for collecting, storing, and calculating data using one or more given formulas. Advances in AI have gradually expanded the role of computers in decision making. A modern computer can serve as a decision maker, performing functions such as identifying models (i.e., generating a formula), judging, and making decisions. Industries such as retail, recruitment, manufacturing, and transportation are applying these information and communication technologies to decision making. For example, an unmanned store does not require personnel to monitor and handle inventory, and an autonomous vehicle does not require a driver to detect and react to road conditions. Smart factories (operating in the Industry 4.0 paradigm) employ machines to operate and manage production lines, and human resource (HR) departments use computers to evaluate and interview candidates. AI applications can perceive their surroundings and execute actions based on those perceptions [6]. However, computers have both advantages and drawbacks. Thus, the more crucial the decision, the more prudent users must be when applying such applications.

AI-enabled decision-making applications rely on computers to collect available information, build models, and extrapolate rules to make decisions. However, the uncertainty inherent in the decision-making process has not been comprehensively addressed. This research examines two open questions:

- (1) What are the sources of uncertainty in AI-enabled decision making?
- (2) How can uncertainty be managed in AI-enabled decision making?

To understand uncertainty in AI-enabled decision making and address the related research gap, a literature review was conducted. Three sources of uncertainty (i.e., informational, environmental, and intentional uncertainty) were identified and used as the three dimensions of an analytical framework. This paper also examines the functions and possible limitations of AI technologies. We conducted a content analysis of the published AI literature to answer the research questions. Departing from technical and computer engineering perspectives, this research investigates the development of AI-enabled decision making from a social science perspective, focusing particularly on realistic procedures for managing uncertainty. A mechanism model is proposed, along with practical approaches for regulating the development of AI-enabled decision-making applications, mitigating irreversible consequences, and achieving sustainability. Because this model is generalized from empirical data, this research is exploratory. It aims to identify principles that support the capability of computers to make decisions and overcome uncertainty problems. Discussions and conclusions are provided in the final section of this paper.

2. Uncertainty in AI-Enabled Decision Making

Decision making is challenging and relies heavily on information and knowledge. Information consists of messages that indicate the state of the world. Knowledge, by contrast, consists of interpretative frames used to understand those messages and construct an inferential understanding of the world. With the advancement of computers, current AI can efficiently process information and make decisions for people. This section scrutinizes uncertainty in decision making as well as the functions and possible limitations of AI technologies.

2.1. Uncertainty in Decision Making

Uncertainty is ubiquitous in realistic settings and poses a major obstacle to effective decision making. Lipshitz and Strauss [7] pointed out that uncertainty has three features: subjectivity; inclusiveness; and conceptualization as hesitancy, indecisiveness, or procrastination. The definition of uncertainty has been widely discussed and is easily confounded with similar concepts such as risk and complexity [7,8,9,10,11,12,13]. Uncertainty is distinct from risk. Risk can be evaluated through statistical methods, but uncertainty cannot, because it involves an inherent inability to recognize the relevant influential variables and their functional relationships. Uncertainty is also distinct from complexity, which arises in any system with numerous parts that interact in complicated ways [14]. If a decision task is formidable and intricate, extensive computing capability may help. If the task is uncertain, however, human analysts are far from comprehending it and may even be entirely unable to make a proper decision.

Uncertainty is difficult to assess concretely, predict accurately, and avoid. Lipshitz and Strauss [7] proposed three sources of uncertainty: incomplete information, inadequate understanding, and undifferentiated alternatives. Because AI applications can also be applied to individual decisions, this research maps these three sources onto three dimensions. These dimensions serve as its analytical framework for clarifying the diversity of uncertainty. They are informational, environmental, and intentional uncertainty [7,8,9,10,15].

Informational uncertainty. Typically, a higher degree of uncertainty corresponds to a greater need for complete information processing [11]. Incomplete information is the most frequently cited source of uncertainty [10]. Information is used in two situations. The first involves inductive reasoning. When everything about a situation is unfamiliar, sufficient information (i.e., observations) is required to construct and verify possible assumptions. The second situation arises when the assumptive formula is already available and information (i.e., values of the independent variables) is required to generate results.

The most uncertain part of information acquisition is information quality. This quality depends on factors such as information diversity [16], information quantity, information representability, and information sources [17]. Some informational problems relate to whether data are objective or subjective and whether they are primary or secondary. Zack [13] contended that computers can reduce uncertainty by collecting information. Even so, it is not always possible to ascertain whether the available information is adequate and sufficient [7,15].

Environmental uncertainty. Every decision task exists in a specific real-world environment [18,19], which contains various anticipated and indeterminate contingencies [20]. Furthermore, the real world is a complex system with a fundamental mismatch between causal relationships and observed phenomena. When decision makers are unable to understand the whole situation and manage environmental factors [8], they may be confused by contradictory meanings in the messages they obtain. Some contradictory messages result from a fragmented rather than holistic view of the world. Consequently, inadequate understanding can impede a smooth decision-making process [7].

In addition, scholars in computer science have pointed out that causal explanations are ubiquitous in AI training [21], which tends to increase contextual uncertainty. Rust [22] further noted that “the biggest constraint on progress is not limited computer power, but instead the difficulty of learning the underlying structure of the decision problem.” When humans inadequately understand the contextual structure of decision problems, it is dangerous to let AI systems take charge of unclear or even harmful processes and structures [23]. Such systems may calculate an optimal solution for the wrong problem or target.

Intentional uncertainty. After alternatives have been generated in the decision-making process, the decision maker relies on criteria to make a final decision. Even when decisions are concrete and objective, the criteria themselves remain variable. In other words, even when a community has a standard decision-making process, humans inevitably make final decisions according to heuristics [12,24], prospections [25], or preferences. March [9] argued that rational choices involve guesses about uncertain future preferences as well as guesses about uncertain future consequences. The preference problem reflects a nonlinear weighting of decisions, and most of its aspects are computationally expensive to capture with rational functions [26].

Regarding decision tasks involving a moral challenge, Nissan-Rozen [27] argued that the values and judgments produced by different moral reasoning processes cannot be compared. Regarding decision-making tasks involving a fairness challenge, Lee et al. [28] argued that understanding distinct human requirements is a critical step because multiple stakeholders have conflicting expectations. Individual decisions, such as driving and purchasing decisions, are more likely to involve a preference problem or even a dilemma [29] because of these incomparable and conflicting expectations. Another type of intentional problem is undifferentiated alternatives, which reflects the lack of a significant preference. Undifferentiated alternatives may cause uncertainty [7] because the likelihood of future events is unclear [10].
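The conflict among stakeholders, and the related problem of undifferentiated alternatives, can be made concrete with a small weighted-sum sketch. The alternatives, criteria, scores, and weights below are hypothetical. Weighted-sum scoring is our simplifying assumption, not a method proposed in the cited studies.

```python
# Hypothetical alternatives scored on three criteria (higher is better).
alternatives = {
    "route_a": {"speed": 0.9, "safety": 0.5, "cost": 0.4},
    "route_b": {"speed": 0.5, "safety": 0.9, "cost": 0.6},
}

# Hypothetical stakeholders who weight the same criteria differently.
stakeholder_weights = {
    "commuter": {"speed": 0.6, "safety": 0.2, "cost": 0.2},
    "regulator": {"speed": 0.1, "safety": 0.7, "cost": 0.2},
}


def weighted_score(scores, weights):
    """Linear aggregation of criterion scores under one set of preferences."""
    return sum(weights[c] * scores[c] for c in scores)


def preferred(weights):
    """Alternative with the highest weighted score for these preferences."""
    return max(alternatives, key=lambda a: weighted_score(alternatives[a], weights))


def is_undifferentiated(weights, tol=1e-9):
    """True when no alternative is meaningfully preferred over the others."""
    scores = sorted(weighted_score(s, weights) for s in alternatives.values())
    return scores[-1] - scores[0] <= tol


choices = {name: preferred(w) for name, w in stakeholder_weights.items()}
print(choices)  # same alternatives, different "best" choices
print(is_undifferentiated({"speed": 0.5, "safety": 0.5, "cost": 0.0}))
```

With these numbers, the commuter prefers `route_a` and the regulator prefers `route_b`. Equal weights on speed and safety leave the two routes undifferentiated, so no single criterion set resolves the choice.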

In summary, the three sources of uncertainty in the decision-making process are information completeness, environmental context, and individual intentions. Environmental uncertainty originates from the unpredictability of the environment. Intentional uncertainty, by contrast, originates from individuals’ specific preferences and diverse needs. Informational uncertainty can be managed relatively simply by clarifying the causal relationships between variables and acquiring information that is as complete as possible. However, unanticipated events can always arise in the real world, so managing informational uncertainty remains difficult. This three-source classification has been widely used [7,8,10,15] and is applied in the current research to reexamine decision-making tasks in AI applications.

2.2. How Does AI Learn to Make Decisions?

Computers are skilled at processing information, although this processing is generally passive. Along with the growth of big data analysis and the increased availability of computational power, advances in machine learning technologies have led to considerable progress in AI [6]. AI is defined as “a system’s ability to correctly interpret external data, to learn from such data, and to use those learnings to achieve specific goals and tasks” [30]. By employing AI, computers can actively learn from available information. Herein, we discuss three learning paradigms, each of which provides a specific function for AI in learning to make decisions.

Supervised learning. A computer learns from training data (i.e., a set of samples in which each example carries a label) and develops a function that maps the input features to the labels, which represent the objective. After training, the learned function is validated and applied to predict labels for other sample sets. Examples of supervised learning techniques include classification [31], decision trees, and support vector machines. Supervised learning can be used in areas such as recommendation systems and email spam filters. For example, consumers label their favorite products, and a recommendation system uses these labels to predict other products they may like [32]. Supervised learning is data-driven, bottom-up processing. The quality of labeled data is key to the predictive ability of supervised learning [33]. Thus, developers must ensure that the labels are free of faults and impurities. Furthermore, the amount of labeled data should be neither too small nor too large.
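As a minimal sketch of the supervised paradigm, the nearest-centroid classifier below learns one mean feature vector per label from labeled spam and non-spam examples. It is then checked on held-out samples. The features, data, and choice of nearest-centroid classification are illustrative assumptions, not a method from the cited works.

```python
from statistics import fmean

# Hypothetical labeled training data: (features, label), where features are
# (number of links, fraction of capitalized words) in an email.
training = [
    ((8, 0.40), "spam"),
    ((6, 0.35), "spam"),
    ((7, 0.50), "spam"),
    ((0, 0.05), "ham"),
    ((1, 0.10), "ham"),
    ((2, 0.02), "ham"),
]


def fit_centroids(samples):
    """Training: learn one mean feature vector (centroid) per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(fmean(dim) for dim in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Prediction: the label whose centroid is nearest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda lab: sum((f - c) ** 2 for f, c in zip(features, centroids[lab])),
    )


centroids = fit_centroids(training)

# Validation on held-out (nontraining) samples.
validation = [((5, 0.30), "spam"), ((1, 0.00), "ham")]
accuracy = sum(predict(centroids, x) == y for x, y in validation) / len(validation)
print(f"validation accuracy: {accuracy:.2f}")
```

A single mislabeled training example shifts a centroid and can flip predictions, which illustrates why label quality matters. The unscaled link count also dominates the distance here; in practice, features would usually be normalized first.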

Unsupervised learning. No training data (i.e., labeled data) are available in advance. The input data must be grouped into patterns or classified according to certain observations, which enables the computer to learn to recognize patterns and similarities in the data. Unsupervised learning is a top–down approach [34] used to discover the structural form of data [35]. Examples of unsupervised learning include clustering, artificial neural networks, and dimensionality reduction. Unsupervised learning can serve as a generative model that identifies new dimensions and subgroups in environmental data. This makes it particularly effective for specific functions such as anomaly detection [36,37]. However, its detection capability relies mainly on extrapolating associations rather than causal relations. Such relations may be blurred and require further examination by humans. Therefore, applying AI to complex decision tasks is dangerous [38].
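Clustering, one of the unsupervised techniques named above, can be sketched with plain k-means. No labels are supplied, and groups emerge from similarity alone. The points, the deterministic initialization, and the distance threshold used to flag anomalies are hypothetical choices made for illustration.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means: groups unlabeled points by similarity (no training labels)."""
    centroids = [tuple(p) for p in points[:k]]  # simple deterministic initialization
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        updated = [
            tuple(sum(coord) / len(cl) for coord in zip(*cl)) if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
        if updated == centroids:
            break  # assignments have stabilized
        centroids = updated
    return centroids, clusters


def is_anomaly(point, centroids, threshold):
    """Flag a point that lies far from every discovered cluster."""
    return min(math.dist(point, c) for c in centroids) > threshold


points = [(1, 1), (9, 9), (1.5, 2), (8, 8.5), (1, 0.5), (9.5, 8)]
centroids, clusters = kmeans(points, k=2)
print(clusters)
print(is_anomaly((20, 20), centroids, threshold=5.0))  # far from both groups
```

The clusters record which points are similar, not why they are similar. This is the limitation the paragraph notes regarding causal relations.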

Reinforcement learning. The human brain learns by constantly adjusting behavior in response to encouragement and punishment so as to adapt to the environment. Reinforcement learning is a sequential decision-making process. It involves repeatedly making a decision, interacting with the environment, obtaining a return (or “reward”), and modifying the decision until the expected value of the overall return function is maximized (or “optimized”). Reinforcement learning requires neither labeled input–output pairs in advance nor explicit correction of suboptimal actions. This makes it a powerful mechanism for an autonomous agent that focuses on real-time planning and performs trial-and-error processes in the environment [39]. Examples of reinforcement learning methods include temporal difference learning, dynamic programming [22], and Q-learning [40]. Some reinforcement learning approaches use Bayes’ theorem to calculate conditional probabilities. Critics have noted that even a simple problem requires heavy calculation and that the normative framework for processing information is too unified [26].
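The trial-and-error loop described above can be illustrated with tabular Q-learning, the method Lu et al. [40] applied, on a deliberately tiny problem. The five-state corridor environment, the rewards, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are our assumptions. The sketch does not reproduce any cited application.

```python
import random

N_STATES = 5        # corridor states 0..4; state 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, move):
    """Toy environment: reward 1 only when the goal state is reached."""
    nxt = min(max(state + move, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def greedy(row, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(row)
    return rng.choice([a for a, v in enumerate(row) if v == best])


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: explore with probability epsilon, else exploit.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy(q[state], rng)
            nxt, reward, done = step(state, ACTIONS[a])
            # Move Q(s, a) toward reward + discounted value of the best next action.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q


q = q_learning()
policy = [max(range(len(ACTIONS)), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # learned action index per non-goal state
```

Given enough episodes, the learned policy is expected to choose “move right” (index 1) in every non-goal state, since that is the shortest route to the reward. No labeled examples were needed, only rewards from interaction.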

Although AI efficiently collects as much information as possible to make decisions, most AI-enabled successes are limited to relatively narrow domains such as board games, in which machine learning technologies have achieved outstanding performance [22]. AI technology still has at least two unsolved limitations. The first concerns sample selection and model fitting. Sample selection is key to model building in machine learning, and the sample may consist only of labels or historical data. Developers must ensure that the labels do not contain faults or impurities. The fault tolerance and noise immunity of models can be evaluated with certain measures [41], and erroneous input can be handled with certain methods [42]. Even so, additional problems persist. Using historical data to make decisions is highly risky [38] because the real world constantly changes and contingencies may occur at any time. During turbulent times in particular, predictions based on historical data are easily distorted. Another concern is that historical sampling data may be a biased indicator [21]. In addition to data representativeness, the quantity of sampling data is another problem. An insufficient amount of data cannot support an effective algorithm [21,43], whereas an excessive amount can lead to the curse of dimensionality. Moreover, even when the amount of data is barely sufficient, a model can still overfit. It then fits the training data too closely but generalizes poorly to independent (nontraining) data [44]. Particularly when sophisticated models and rules are difficult to fully understand, misunderstandings between computers and humans may cause crises.

The second limitation concerns criteria setting. Machine learning still requires a criterion set, label set, or reward set, and it is often unclear whether these criteria reflect individuals’ preferences. Most computer systems assume not only that the environment is homogeneous but also that every individual has the same preferences and intentions. However, individuality is a highly valued trait, and all humans have their own will and unique meaning in life. By contrast, computer decisions are produced from a single standard criterion, and this lack of diversity can lead to worldwide disasters. Particularly when some criteria are controversial, imposing a compulsory code to unify rules is improper.

From this examination of AI technology, the limitation of data sampling can be considered to correspond to environmental uncertainty, because variations in the environment make sampling and modeling difficult. Likewise, the limitation of criteria setting corresponds to intentional uncertainty. These two types of uncertainty in AI-enabled decision-making applications can therefore be expected to be influential but recalcitrant. Russell and Norvig [45] pointed out that “acting rationally” is one approach to AI. In their words, a rational agent is “one that acts so as to achieve the best outcome or, when there is uncertainty, the best expected outcome” [45]. Coping with the various uncertainties that may result from these limitations is clearly key to AI development.
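The “best expected outcome” criterion can be written as choosing the action that maximizes the probability-weighted sum of its outcomes. The sketch below assumes hypothetical actions, probabilities, and payoffs. It also makes explicit the assumption discussed in Section 2.1: the calculation is only possible when outcome probabilities are known (risk), which is exactly what genuine uncertainty denies.

```python
# Hypothetical actions: each maps to (probability, outcome value) pairs.
actions = {
    "proceed": [(0.95, 10.0), (0.05, -100.0)],
    "slow_down": [(0.99, 6.0), (0.01, -20.0)],
    "stop": [(1.0, 0.0)],
}


def expected_outcome(outcomes):
    """Probability-weighted sum; presupposes the probabilities are known."""
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * value for p, value in outcomes)


best = max(actions, key=lambda a: expected_outcome(actions[a]))
print(best, {a: round(expected_outcome(o), 2) for a, o in actions.items()})
```

With these numbers, `slow_down` has the highest expected outcome (5.74), even though `proceed` pays more in its most likely case. If the probabilities themselves were unknown, this calculation could not be carried out.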

3. Materials and Methods

This section addresses the research question of how uncertainty can be managed in AI-enabled decision making. Most AI applications, such as unmanned stores and autonomous vehicles, are still in the initial stages of development. Obtaining first-hand information on how they manage uncertainty is therefore difficult. Instead of examining first-hand materials, this research examined case study content and project reviews in the literature. This methodology involves content analysis of multiple cases reported in the literature [4,46,47].

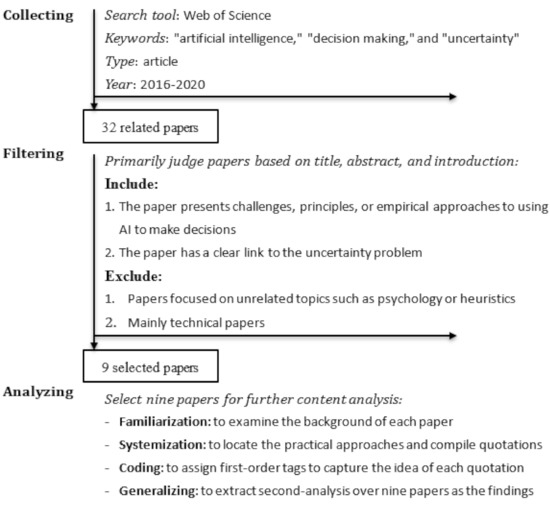

Businesses often undertake emerging-technology implementation projects and thus possess first-hand experience of the skills required to implement AI. For decades, organizations have tried to use AI to facilitate operations or evaluate performance [48], and researchers have turned these implementation experiences into published case studies. Following Eisenhardt [49], we collected cases by analyzing those publications. We began our review by searching journals in the Web of Science using the keywords “artificial intelligence,” “decision making,” and “uncertainty,” restricted to publications from the preceding five years. We then filtered papers using two criteria: (1) the paper presents challenges, principles, or empirical approaches of using AI to make decisions, and (2) the paper has a clear link to the uncertainty problem. We excluded papers focused on unrelated topics such as psychology or heuristics. Furthermore, rather than purely technical papers, this research included papers that discussed both managerial and technical tasks. Through these processes, we searched for, collected, filtered, and selected nine papers for further analysis (see Figure 1).

Figure 1.

Flow chart of research process.

Here we briefly describe the content and background of the nine papers.

- Bogosian [50] examined how machines cope with moral uncertainty. By characterizing moral uncertainty as a voting problem, the author proposed a computational framework to resolve the morality problem in AI machines.

- Costea et al. [31] proposed a two-stage methodology to classify nonbanking financial institutions based on their financial performance. They clarified how to identify and reallocate uncertain observations.

- Love-Koh et al. [51] discussed precision medicine, which enables health care interventions to be tailored to groups of patients based on their disease susceptibility, diagnostic or prognostic information, or treatment responses. The complex and uncertain treatment pathways associated with patient stratification and fast-paced technological change are critical topics for this development.

- Lu et al. [40] proposed a dynamic pricing demand response algorithm for energy management in a hierarchical electricity market that considers both the service provider’s (SP’s) profit and customers’ (CUs’) costs. Reinforcement learning was used to illustrate the hierarchical decision-making framework. In this framework, the dynamic pricing problem was formulated as a discrete finite Markov decision process and solved with Q-learning. Through reinforcement learning, the SP was able to adaptively set a retail electricity price during its online learning process. This process addressed both the uncertainty of CUs’ load demand profiles and the flexibility of wholesale electricity prices.

- Willcock et al. [52] examined two ecosystem cases and discussed how data-driven modeling (DDM) can be made more accessible to decision makers who demonstrate the capacity and willingness to engage with uncertain information. Uncertainty estimates, produced as part of the DDM process, enable decision makers to determine what level of uncertainty is acceptable to them and how to use their expertise to make decisions in potentially contentious situations. They concluded that DDM helps to produce interdisciplinary models and holistic solutions to complex socioecological problems.

- Overgoor et al. [6] examined three marketing cases and discussed how AI can be used to provide support for marketing decisions. Based on the established Cross-Industry Standard Process for Data Mining framework, they created a process for managers to follow when executing marketing AI projects.

- Rust [22] examined three firm cases and discussed developments in dynamic programming. He argued that the fuzziness of real-world decision problems and the difficulty in mathematical modeling are key obstacles to a wider application of dynamic programming in real-world settings. He reviewed the developments in dynamic programming and contrasted its revolutionary effects on economics, operations research, engineering, and AI with the comparative paucity of its real-world applications to improve the decision making of individuals and firms. Finally, he concluded that dynamic programming has notable potential for improving decision making.

- Tambe et al. [21] identified challenges in using data science techniques for HR tasks. These challenges included the complexity of HR phenomena, constraints imposed by small data sets, accountability questions associated with fairness and other ethical and legal constraints, and possible adverse employee reactions to management decisions. On the basis of three principles (i.e., causal reasoning, randomization and experiments, and employee contribution), they proposed practical responses to these challenges that are economically efficient and socially appropriate for using data science to manage employees.

- Lima-Junior and Carpinetti [53] proposed an approach to supporting supply chain performance evaluations by combining supply chain operations reference metrics with an adaptive network-based fuzzy inference system (ANFIS). A random subsampling cross-validation method was applied to select the most appropriate topological parameters for each ANFIS model.
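Bogosian’s [50] idea of treating moral uncertainty as a voting problem can be sketched as follows. Each moral theory ranks the options, and the agent weights each theory’s vote by its credence in that theory. The credence-weighted Borda count used here is our assumption for illustration and is not necessarily the voting rule Bogosian adopted. The theories, credences, and options are hypothetical.

```python
# Hypothetical moral theories: (credence in the theory, ranking best -> worst).
theories = {
    "theory_1": (0.5, ["a", "b", "c"]),
    "theory_2": (0.3, ["b", "c", "a"]),
    "theory_3": (0.2, ["c", "b", "a"]),
}


def credence_weighted_borda(theories):
    """Each theory awards n-1, n-2, ..., 0 points, scaled by the agent's credence."""
    scores = {}
    for credence, ranking in theories.values():
        n = len(ranking)
        for position, option in enumerate(ranking):
            scores[option] = scores.get(option, 0.0) + credence * (n - 1 - position)
    winner = max(scores, key=scores.get)
    return winner, scores


winner, scores = credence_weighted_borda(theories)
print(winner, scores)
```

With these numbers, option `b` wins (score 1.3) even though the theory with the highest credence ranks `a` first. The vote yields a compromise across theories rather than deferring to any single one.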

Table 1 presents descriptions of each paper, including its decision task, case quantity, and main learning paradigms. The nine papers helped us to examine 14 real cases and generalize their practical approaches.

Table 1.

Basic descriptions of nine papers.

4. Data Analysis

After collecting and selecting papers, we engaged in an iterative process of analyzing and synthesizing the uncertainty problems and solutions described in the selected papers. Qualitative content analysis can be performed through data collection, data condensation, data display, and verification of correlations [54].

First, after collecting the data, we familiarized ourselves with the research material by reading the nine papers. Most papers presented a set of procedures for constructing an AI-enabled decision-making mechanism, along with implementation principles and relevant difficulties. We carefully checked whether each paper addressed uncertainty problems in its AI-enabled decision-making procedure. Second, we systematized practical approaches to managing uncertainty problems. The process of data condensation (i.e., coding the data) revealed what should be included in the data display. The approaches mentioned in each paper were compiled. Using quoted text from the selected papers (Table 2), we determined how the three uncertainties were addressed and managed. We then applied second-order tagging, which involved assigning tags after a primary code to detail and enrich each entry. Given our focus on the three dimensions of the uncertainty framework, we performed two levels of analysis. A “first-order analysis” captured how each paper illustrated the handling of various uncertainties in its AI-enabled decision-making procedure, and a “second-order analysis” extracted the findings. Finally, to increase reliability, the first round of analytical results was independently reviewed and verified by the other research team members. Table 2 shows the final version of the analytical results.

Table 2.

Approaches to managing uncertainties in collected data.

5. Results

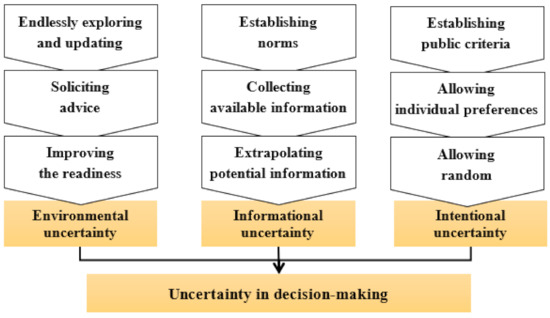

As exemplified in Table 2, approaches for managing various uncertainties were extracted from the collected papers. First, informational uncertainty can be managed by establishing norms, collecting available information, and extrapolating potential information. Among the nine papers, eight mentioned the keywords or concept of establishing norms, eight mentioned collecting available information, and six mentioned extrapolating potential information. Second, environmental uncertainty can be managed through continual exploration and updating, soliciting advice, and improving readiness. Five papers mentioned continual exploration and updating, eight mentioned soliciting advice, and three mentioned improving readiness. Third, intentional uncertainty can be managed by establishing public criteria, allowing individual preferences, and allowing randomness. Seven papers mentioned establishing public criteria, six mentioned allowing individual preferences, and three mentioned allowing randomness. Consistent with the discussion in Section 2, the analysis results indicate that environmental and intentional uncertainty receive relatively little attention. This section examines these approaches and elaborates on how they manage uncertainties in AI-enabled decision making.

5.1. Managing Informational Uncertainty in AI-Enabled Decision Making

To manage informational uncertainty, the main approach is exploiting accessible information [55]. When the exploitative model used for making predictions is insufficient, data are collected to construct (or train) the model, which must then be verified with additional independent (nontraining) data. Deeply exploiting the content of such data is the main strength of AI. Many activities can be used to apply information, including collecting, formatting, recognizing, circulating, storing, retrieving, sharing, filtering, and tracking. According to research in the field of management [11], organizations typically implement standard operating procedures to constrain the variability of their internal environment. Similarly, an organization can establish operating norms to effectively acquire, distribute, and interpret information [11,56]. These norms help the organization focus on specific decision tasks within a given environmental scope. Establishing norms for archiving, accessing, and integrating data sets from different departments and hierarchies is difficult but crucial to AI training. Another major challenge is data compatibility [21]. Some papers described pragmatically implementing data cleaning and formatting steps to make data compatible [6,21,52]. Most papers, however, described details of initialization, normalization, and standardization [6,21,40,50,52,53] based on scientific rigor or guidance [31,51].
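The cleaning, normalization, and standardization steps mentioned above can be sketched as follows. The department data are hypothetical, and dropping missing values is only one of several possible cleaning rules.

```python
from statistics import fmean, pstdev


def clean(values):
    """Data cleaning: drop missing entries (None) before any scaling."""
    return [v for v in values if v is not None]


def min_max_normalize(values):
    """Rescale to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """z-scores: zero mean and unit (population) standard deviation."""
    mu, sigma = fmean(values), pstdev(values)
    if sigma == 0:
        return [0.0] * len(values)
    return [(v - mu) / sigma for v in values]


# Hypothetical: two departments report the same quantity on different scales.
dept_a_revenue_usd = [1200.0, None, 3400.0, 2600.0]
dept_b_revenue_kusd = [1.1, 3.5, None, 2.4]
print(min_max_normalize(clean(dept_a_revenue_usd)))
print(standardize(clean(dept_b_revenue_kusd)))
```

After rescaling, values from both departments lie on comparable scales regardless of their original units. This is one concrete sense in which such norms make data sets compatible for training.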

Typically, AI systems need to exploit, request, and collect adequate information from historical data or multiple data sets [6,21,31,40,51,52,53]. However, a challenge common to AI applications is that of “small data,” in which the accessible data are far from adequate for devising a machine learning algorithm for a specific decision-making task [21]. When information is incomplete, a human decision maker must extrapolate possible rules from the available information through statistical methods or assumption-based reasoning [57]. AI-enabled decision-making mechanisms must likewise deal flexibly with the imprecision of accessible data and domain knowledge [6,21,50,53] by applying techniques [52] or tools such as fuzzy sets [31,53] and rough sets [58]. For example, Jang [59] proposed the adaptive-network-based fuzzy inference system (ANFIS), which combines fuzzy set theory with artificial neural networks to solve complex and uncertain problems [53]. Fuzzy sets [60] are useful for managing uncertainty, although formalizing a fuzzy rule base can be difficult. An artificial neural network is a powerful tool for developing self-learning and self-adaptive systems by identifying the characteristics of available examples; however, it cannot explain the relationships between variables. Because ANFIS uses fuzzy sets as its model architecture [59], not only can original rules be converted into fuzzy sets, but experts’ experience and knowledge can also be converted into inference rules to compensate for the lack of data descriptions.
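To make the ANFIS idea more concrete, the sketch below implements only the forward (inference) pass of a first-order Takagi-Sugeno fuzzy model, the five-layer structure that ANFIS [59] organizes as an adaptive network. Several choices are assumptions for illustration: Gaussian membership functions, a grid partition of the inputs, and hand-set parameters. In ANFIS these parameters would be learned from data (Jang's hybrid learning rule, not shown here), whereas hand-set rules correspond to encoding expert knowledge where data descriptions are lacking.

```python
import math
from itertools import product

def gaussian_mf(x, center, sigma):
    """Layer 1: membership degree of x in a Gaussian fuzzy set."""
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)

def sugeno_forward(inputs, fuzzy_sets, consequents):
    """Forward pass of a first-order Takagi-Sugeno fuzzy model (ANFIS structure).

    inputs: crisp input values x_1..x_n.
    fuzzy_sets: for each input, a list of (center, sigma) pairs.
    consequents: one coefficient list [p_1, ..., p_n, r] per rule, in the
        order produced by itertools.product over the fuzzy sets (grid
        partition); the rule output is f = p_1*x_1 + ... + p_n*x_n + r.
    """
    # Layer 1: membership degrees for every fuzzy set of every input.
    degrees = [[gaussian_mf(x, c, s) for c, s in sets]
               for x, sets in zip(inputs, fuzzy_sets)]
    # Layer 2: firing strength of each rule = product of its antecedent memberships.
    strengths = [math.prod(combo) for combo in product(*degrees)]
    if len(consequents) != len(strengths):
        raise ValueError("need exactly one consequent per rule")
    total = sum(strengths)
    if total == 0:
        raise ValueError("no rule fires for these inputs")
    output = 0.0
    for w, coeffs in zip(strengths, consequents):
        w_norm = w / total  # Layer 3: normalized firing strength
        f = sum(p * x for p, x in zip(coeffs[:-1], inputs)) + coeffs[-1]  # Layer 4
        output += w_norm * f  # Layer 5: weighted sum over rules
    return output

# Hypothetical example: two inputs scaled to [0, 1], each with "low" and
# "high" fuzzy sets; the four rules could encode expert judgments.
fuzzy_sets = [[(0.0, 0.3), (1.0, 0.3)], [(0.0, 0.3), (1.0, 0.3)]]
consequents = [
    [0.0, 0.0, 1.0],  # x1 low,  x2 low
    [0.0, 0.5, 2.0],  # x1 low,  x2 high
    [0.5, 0.0, 3.0],  # x1 high, x2 low
    [0.5, 0.5, 4.0],  # x1 high, x2 high
]
print(sugeno_forward([0.2, 0.9], fuzzy_sets, consequents))
```

Because every rule contributes in proportion to its normalized firing strength, the output changes smoothly between rules rather than jumping at crisp boundaries.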

5.2. Managing Environmental Uncertainty in AI-Enabled Decision Making

To manage environmental uncertainty, applying the tactic of acknowledging uncertainty is worthwhile [7]. First, we recognize the decision environment and its context and set the definitions and boundaries of the decision problem. Various environments must then be explored within those boundaries. Because the decision environment keeps changing, exploration can never be fully completed. In other words, the only strategy for survival in a changeable environment is continual exploration and the gradual expansion of knowledge about the world. For the development of AI-enabled decision-making applications, a basic approach is to scan the environment regularly with an inquisitive attitude [6,21,48]. To adapt to environmental volatility, an AI-enabled decision-making mechanism must remain flexible and continually adjust and update its variables [6,40]. Although such flexibility may conflict with productivity improvement and cost reduction, it is key to expandability and sustainability [61].
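The paper does not prescribe a particular algorithm for continual exploration and updating. As one illustration under that caveat, the sketch below uses a standard reinforcement-learning device: epsilon-greedy selection with a constant step size, which reserves a fraction of decisions for exploration and weights recent evidence more heavily so that estimates track a shifting environment. The environment, the shift at step 1000, and the parameter values are invented for demonstration.

```python
import random

class DriftingBandit:
    """Epsilon-greedy choice among options with constant-step-size updates.

    The constant step size alpha makes each estimate an exponential
    recency-weighted average of observed rewards, so it keeps tracking an
    environment whose payoffs drift. The exploration rate epsilon reserves
    a share of decisions for scanning alternatives.
    """

    def __init__(self, n_options, epsilon=0.1, alpha=0.1, seed=None):
        self.estimates = [0.0] * n_options
        self.epsilon = epsilon
        self.alpha = alpha
        self.rng = random.Random(seed)

    def select(self):
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.estimates))  # explore
        best = max(self.estimates)
        ties = [i for i, q in enumerate(self.estimates) if q == best]
        return self.rng.choice(ties)  # exploit, breaking ties at random

    def update(self, option, reward):
        # Incremental update: Q <- Q + alpha * (reward - Q)
        self.estimates[option] += self.alpha * (reward - self.estimates[option])

# Hypothetical environment: option 0 pays best at first, then the
# environment shifts at step 1000 and option 1 becomes the better choice.
env_rng = random.Random(1)
agent = DriftingBandit(n_options=2, epsilon=0.1, alpha=0.1, seed=42)
for step in range(2000):
    true_means = [1.0, 0.0] if step < 1000 else [0.0, 1.0]
    choice = agent.select()
    agent.update(choice, env_rng.gauss(true_means[choice], 0.1))
print(agent.estimates)
```

A sample-average update (step size 1/n) would suit a stationary environment but react ever more slowly after a shift; the constant step size trades some precision for the flexibility the paragraph calls for.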

Experts possess domain knowledge and experience and can provide decision makers with anchors and guidance on a wide range of exploratory topics [62], particularly by giving initial access to knowledge. When applying AI to develop a decision-making mechanism, soliciting advice from experts or specialists [31,50,51,52,53] is an essential step for acquiring environmental knowledge and enriching the scope of interpretable frames. In addition to experts, various sources can contribute useful facts to decision makers, such as consumers, employees, investors, business associates, and consulting organizations [6,21].
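Soliciting advice often means combining judgments from several sources. One simple, widely used way to do this is a linear opinion pool, a weighted average of the sources' probability distributions. The sketch assumes that advice can be elicited as probabilities over a shared set of outcomes and that the weights express how much each source is trusted; the sources, outcomes, and weights are hypothetical, and the paper does not specify an aggregation rule.

```python
def linear_opinion_pool(distributions, weights=None):
    """Combine several sources' probability distributions over the same
    outcomes into one distribution by a weighted arithmetic mean.

    distributions: list of dicts mapping outcome -> probability.
    weights: non-negative source weights summing to 1 (equal if omitted).
    """
    n = len(distributions)
    if weights is None:
        weights = [1.0 / n] * n
    if len(weights) != n or any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative, one per source, and sum to 1")
    outcomes = distributions[0].keys()
    if any(d.keys() != outcomes for d in distributions):
        raise ValueError("all sources must cover the same outcomes")
    return {o: sum(w * d[o] for w, d in zip(weights, distributions)) for o in outcomes}

# Hypothetical elicited judgments about next quarter's demand.
domain_expert = {"rise": 0.6, "flat": 0.3, "fall": 0.1}
consumer_panel = {"rise": 0.2, "flat": 0.5, "fall": 0.3}
consultant = {"rise": 0.4, "flat": 0.4, "fall": 0.2}
pooled = linear_opinion_pool([domain_expert, consumer_panel, consultant],
                             weights=[0.5, 0.3, 0.2])
print(pooled)
```

Because the pool is a convex combination, the result is itself a valid probability distribution, and a source's influence is exactly its weight.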

Given the unpredictability of the decision environment, a realistic way to improve the readiness of an AI-enabled decision-making mechanism is to develop procedures for responding to unanticipated or undesired situations. In particular, preset procedures must avoid irreversible consequences [7] and adapt to the changing environment in the long term [3]. An AI-enabled decision-making mechanism can weigh potential gains and losses in advance [50], establish a buffer against unstable states, rearrange priorities after unpredictable contingencies [11,21], or set a threshold that determines when to yield control to humans [21].
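The readiness measures listed above (weighing gains and losses in advance, avoiding irreversible consequences, and a threshold for yielding control to humans) can be combined in a small decision guard. This is a hypothetical sketch: the `Option` type, the confidence score, the threshold of 0.8, and the loss floor are assumptions chosen for illustration, and a real system would need calibrated confidence estimates.

```python
from dataclasses import dataclass

DEFER_TO_HUMAN = "defer_to_human"  # hypothetical marker for handing over control

@dataclass
class Option:
    name: str
    outcomes: list[tuple[float, float]]  # (probability, payoff) pairs estimated in advance
    irreversible: bool = False

def expected_value(option: Option) -> float:
    return sum(p * v for p, v in option.outcomes)

def worst_case(option: Option) -> float:
    return min(v for _, v in option.outcomes)

def decide(options, confidence, threshold=0.8, loss_floor=-50.0):
    """Return the name of the admissible option with the highest expected
    value, or DEFER_TO_HUMAN.

    An option is inadmissible when it is irreversible and its worst-case
    payoff falls below loss_floor. Control is yielded to a human when the
    model's confidence is below threshold or no admissible option remains.
    """
    admissible = [o for o in options
                  if not (o.irreversible and worst_case(o) < loss_floor)]
    if confidence < threshold or not admissible:
        return DEFER_TO_HUMAN
    return max(admissible, key=expected_value).name

# Hypothetical options for a vehicle facing a flooded road.
options = [
    Option("proceed", [(0.9, 10.0), (0.1, -100.0)], irreversible=True),
    Option("slow_down", [(1.0, 5.0)]),
    Option("reroute", [(0.7, 4.0), (0.3, 2.0)]),
]
print(decide(options, confidence=0.9))  # slow_down
print(decide(options, confidence=0.5))  # defer_to_human
```

Screening out irreversible high-loss options before comparing expected values keeps a single optimistic estimate from overriding the rule against irreversible consequences.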

5.3. Managing Intentional Uncertainty in AI-Enabled Decision Making

The key to managing intentional uncertainty in AI-enabled decision-making applications is to manage the priorities of the different criteria held by various stakeholders (or groups). Each criterion reflects stakeholders’ diverse expectations of AI-enabled decision-making applications. To satisfy stakeholders and sustain the development of AI applications, it is essential to balance the distinct expectations of different stakeholders. Especially in some decision tasks, statistical models trained by computers require a transparent reasoning mechanism for selecting variables and characterizing their relationship to the decision problem, because interpretability is critical for convincing humans. One way to improve the interpretability of AI is to enhance the generalizability of algorithms; another is to assign criteria selection to human workers. Evaluating alternatives requires decision criteria, and these criteria must reflect public values [63], principles, or metrics [6,21,31,50,52,53] that are prioritized in advance. Established methods for multi-criteria decision making, such as the analytic hierarchy process (AHP), can support this prioritization.
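The analytic hierarchy process mentioned above derives criterion weights from pairwise comparisons. The sketch below uses the common eigenvector method, approximated by power iteration, together with Saaty's consistency ratio. The criteria names and comparison judgments are hypothetical and were chosen to be perfectly consistent, so the resulting weights (0.6, 0.3, 0.1) are easy to check.

```python
# Saaty's random consistency index for matrices of order n.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def mat_vec(matrix, vector):
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def ahp_weights(matrix, iterations=100):
    """Priority weights = principal eigenvector of the pairwise comparison
    matrix, approximated by power iteration and normalized to sum to 1."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = mat_vec(matrix, w)
        total = sum(v)
        w = [x / total for x in v]
    return w

def consistency_ratio(matrix, weights):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); a CR below 0.1 is
    the conventional acceptability threshold."""
    n = len(matrix)
    if n < 3:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    aw = mat_vec(matrix, weights)
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n]

# Hypothetical judgments: entry [i][j] states how much more important
# criterion i is than criterion j on Saaty's 1-9 scale.
criteria = ["safety", "fairness", "efficiency"]
comparisons = [
    [1.0, 2.0, 6.0],
    [1 / 2, 1.0, 3.0],
    [1 / 6, 1 / 3, 1.0],
]
weights = ahp_weights(comparisons)
print(dict(zip(criteria, (round(w, 3) for w in weights))))
print(consistency_ratio(comparisons, weights))
```

If stakeholders' judgments are contradictory (for example, A over B, B over C, but C over A), the consistency ratio rises well above 0.1, signaling that the criteria priorities should be revisited before they are used.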

Most decision-making processes favor consistent and stable criteria. Some research has even regarded the variability of individual factors (e.g., differences in experience, attention, context, and emotional state among decision makers) as characteristic of human decision making [64], and such variability may negatively affect decisions. However, a decision-making mechanism with only a single criterion may fail to meet numerous distinct objectives or to make decisions that meet humans’ real needs. AI-enabled decision making must therefore be aligned with individuals’ preferences to enhance the predictability of its results and thereby increase human trust. Furthermore, when individual preferences do not distinguish among the available options (referring to the concept of “undifferentiated alternatives” in Lipshitz and Strauss [7]), setting random parameters in machine learning is another common approach [21,53]. Accordingly, AI applications manage intentional uncertainty by establishing criteria that reflect human values, considering individuals’ preferences, and allowing randomness.
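Allowing individual preferences and allowing randomness can work together: preferences rank the options, and randomness resolves cases where the options are undifferentiated. The sketch is a hypothetical illustration rather than a method from the cited papers; it assumes that preferences can be expressed as criterion weights, uses a simple weighted sum, and makes a seeded random choice among ties so that results remain reproducible.

```python
import random

def choose(alternatives, preferences, tol=1e-9, seed=None):
    """Pick the alternative that best fits one individual's preferences.

    alternatives: dict name -> dict criterion -> score in [0, 1].
    preferences: dict criterion -> weight expressing the individual's priorities.
    Alternatives whose scores are within tol of the best are treated as
    undifferentiated, and one of them is chosen at random; a fixed seed
    makes the random choice reproducible.
    """
    scores = {name: sum(preferences.get(c, 0.0) * v for c, v in attrs.items())
              for name, attrs in alternatives.items()}
    best = max(scores.values())
    # Sort the tied names so the seeded choice does not depend on dict order.
    tied = sorted(name for name, s in scores.items() if best - s <= tol)
    return random.Random(seed).choice(tied)

# Hypothetical routes scored on two criteria.
routes = {
    "route_a": {"time": 0.8, "comfort": 0.4},
    "route_b": {"time": 0.4, "comfort": 0.8},
    "route_c": {"time": 0.3, "comfort": 0.3},
}
print(choose(routes, {"time": 0.9, "comfort": 0.1}))  # route_a
print(choose(routes, {"time": 0.2, "comfort": 0.8}))  # route_b
print(choose(routes, {"time": 0.5, "comfort": 0.5}, seed=7))  # route_a or route_b
```

The same options yield different, predictable choices for different individuals, and randomness enters only where those preferences give no basis for discrimination.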

6. Discussion

This research aimed to clarify how AI can assist organizations in decision-making tasks and help them overcome uncertainty problems. From a review of the literature, we found that uncertainty in decision making may be related to the degree of information completeness, the environmental context, and individual intentions. By examining recent AI developments, we found that these three sources of uncertainty persist even when computers assist in decision-making tasks. On the basis of these insights, we applied the three dimensions (i.e., informational, environmental, and intentional uncertainty) as a framework to form a unified concept of uncertainty. Because most AI applications are still at an early stage of development, we collected data by filtering managerial and academic publications. As the data came from various research backgrounds, the multiple cases helped to clarify the developing dynamics of this novel field and yielded exploratory findings that may generalize to different contexts.

Figure 2 illustrates the final findings; it summarizes a decision-making mechanism for managing uncertainties in the AI-enabled decision-making deployment process. This mechanism involves three approaches for each type of uncertainty. Other studies in the AI field have focused mainly on technical approaches, whereas the present study also considers managerial approaches. Acknowledging the perspective of collaboration between humans and computers [65], this research particularly focuses on the pre- and post-implementation stages in designing an AI-enabled decision-making mechanism. Most development processes for AI applications require ongoing updates to sustain their adaptability to the changing world.

Figure 2. A management mechanism for AI-enabled decision making.

We collected analytical material from the relevant literature, and most of the analytical techniques applied were derived from Miles and Huberman [54] and Eisenhardt [49]. Eisenhardt [49] discussed two weaknesses of multiple-case research. First, with the intensive use of empirical evidence, analysis results may lack the simplicity of an overall perspective. Second, the analysis results may be too narrow and idiosyncratic. Although the decision tasks in the research material were diverse, examining these cases and generalizing some findings is still worthwhile for this exploratory research. Future research should accumulate more facts and evidence to verify our findings.

7. Conclusions

In this research, we reviewed studies on uncertainty in decision making. When a decision is made, uncertainty is one of the most common and difficult problems to solve. Uncertainty can delay decision making and arises mainly from three sources, which correspond to informational, environmental, and intentional uncertainty. With the evolution of AI and improvements in computer science, the role of ICT in decision making has gradually shifted from decision-support functions to decision-making functions. Computers can learn to recognize labels, patterns, and similarities in data, and they can provide an exploitation mechanism that repeatedly reacts to environmental conditions. However, given the limitations of AI, uncertainty remains a pressing problem in AI-enabled decision making and warrants further examination.

This research makes several contributions. To the best of our knowledge, this is the first study to adopt the three dimensions of uncertainty to elucidate uncertainty problems in AI decision making and identify solutions. In Section 2, we acknowledged the potential limitations of AI technologies by exploring their current development. With reference to related studies, we proposed a mechanism model with practical approaches for managing various uncertainties. Because we explored and classified uncertainties in depth at the definition stage, the proposed management mechanism addresses all three types of uncertainty.

This research proposed a management mechanism to cope with uncertainty problems in the development of AI-enabled decision making. Advances in computer science have made solutions to uncertainty problems available, and current solutions mostly focus on technical approaches, such as fuzzy sets [66] and robust optimization [67]. By contrast, this study applied a social-science perspective and developed a management mechanism that demonstrates how humans and computers cooperate. The mechanism is not designed to replace current technical approaches but to complement them; in other words, this research focuses on cooperation between humans (managerial) and computers (technical). Human–computer cooperation is largely missing from the development of AI applications. Many people still hold unrealistic expectations of the convenient services that AI applications will deliver, and scholars have argued that such high convenience may be harmful in the long term [68]. The management mechanism proposed in this research can help guide the development of AI applications toward greater sustainability.

Finally, the management mechanism can be deployed in the pre- and post-implementation stages of AI application development. Executing the mechanism and updating it continually may enhance the smoothness and accuracy of the overall decision-making process and its components. Although most people expect to enjoy the convenience of advanced technologies, AI is not omnipotent. Even if computers can learn by transforming acquired information into knowledge, AI still requires a management mechanism to maintain its decision-making process. Furthermore, applying AI requires investment of resources and the assistance of (human) research and development teams, including for setting goals, defining problems, determining criteria, and calibrating the system to ensure satisfactory results. Hence, the maintenance mechanism instituted by human teams must be taken into account when measuring the decision-making performance of AI applications.

Nonetheless, this research has some limitations. First, the approaches collected from the academic literature may not be exhaustive; as more mature applications appear, future research should examine empirical cases further. Second, the coding process is a limitation because the researcher’s knowledge, experience, and mindset can substantially affect the coding results [69].

Author Contributions

J.W. and S.S. contributed to the conceptualization and methodology design. After S.S. validated the project, J.W. collected and analyzed data and wrote the original draft. S.S. reviewed the manuscript and supervised the research project. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank the anonymous reviewers for their suggestions and helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Evans, J. Driverless cars: Kangaroos throwing off animal detection software. ABC Aust. 2017.

- Lim, H.S.M.; Taeihagh, A. Algorithmic decision-making in AVs: Understanding ethical and technical concerns for smart cities. Sustainability 2019, 11, 5791.

- Walker, W.E.; Haasnoot, M.; Kwakkel, J.H. Adapt or perish: A review of planning approaches for adaptation under deep uncertainty. Sustainability 2013, 5, 955–979.

- Mintzberg, H.; Raisinghani, D.; Theoret, A. The structure of "unstructured" decision processes. Adm. Sci. Q. 1976, 21, 246.

- Simon, H.; March, J. Administrative Behavior Organization; Free Press: New York, NY, USA, 1976.

- Overgoor, G.; Chica, M.; Rand, W.; Weishampel, A. Letting the Computers Take Over: Using AI to Solve Marketing Problems. Calif. Manag. Rev. 2019, 61, 156–185.

- Lipshitz, R.; Strauss, O. Coping with uncertainty: A naturalistic decision-making analysis. Organ. Behav. Hum. Decis. Process. 1997, 69, 149–163.

- Duncan, R.B. Characteristics of organizational environments and perceived environmental uncertainty. Adm. Sci. Q. 1972, 17, 313.

- March, J.G. Bounded rationality, ambiguity, and the engineering of choice. Bell J. Econ. 1978, 9, 587.

- Milliken, F.J. Three types of perceived uncertainty about the environment: State, effect, and response uncertainty. Acad. Manag. Rev. 1987, 12, 133–143.

- Thompson, J.D. Organizations in Action; McGraw-Hill: New York, NY, USA, 1967.

- Tversky, A.; Kahneman, D. Judgment under uncertainty: Heuristics and biases. Science 1974, 185, 1124–1131.

- Zack, M.H. The role of decision support systems in an indeterminate world. Decis. Support Syst. 2007, 43, 1664–1674.

- Simon, H. The Sciences of the Artificial, 2nd ed.; MIT Press: Cambridge, MA, USA, 1981.

- Lawrence, P.R.; Lorsch, J.W. Organization and Environment; Harvard University Press: Cambridge, MA, USA, 1967.

- Iselin, E. The impact of information diversity on information overload effects in unstructured managerial decision making. J. Inf. Sci. 1989, 15, 163–173.

- Saunders, C.; Jones, J.W. Temporal sequences in information acquisition for decision making: A focus on source and medium. Acad. Manag. Rev. 1990, 15, 29–46.

- Bourgeois, L.J., III. Strategy and environment: A conceptual integration. Acad. Manag. Rev. 1980, 5, 25–39.

- Dill, W.R. Environment as an influence on managerial autonomy. Adm. Sci. Q. 1958, 2, 409.

- Grandori, A. A prescriptive contingency view of organizational decision making. Adm. Sci. Q. 1984, 29, 192.

- Tambe, P.; Cappelli, P.; Yakubovich, V. Artificial Intelligence in Human Resources Management: Challenges and a Path Forward. Calif. Manag. Rev. 2019, 61, 15–42.

- Rust, J. Has dynamic programming improved decision making? Annu. Rev. Econ. 2019, 11, 833–858.

- Ransbotham, S. Don’t let artificial intelligence supercharge bad processes. MIT Sloan Manag. Rev. March 2018, 20.

- Das, T.; Teng, B.S. Cognitive biases and strategic decision processes: An integrative perspective. J. Manag. Stud. 1999, 36, 757–778.

- Seligman, M.E.; Railton, P.; Baumeister, R.F.; Sripada, C. Navigating into the future or driven by the past. Perspect. Psychol. Sci. 2013, 8, 119–141.

- Pigozzi, G.; Tsoukias, A.; Viappiani, P. Preferences in artificial intelligence. Ann. Math. Artif. Intell. 2016, 77, 361–401.

- Nissan-Rozen, I. Against moral hedging. Econ. Philos. 2015, 31, 349–369.

- Lee, M.K.; Kim, J.T.; Lizarondo, L. A human-centered approach to algorithmic services: Considerations for fair and motivating smart community service management that allocates donations to non-profit organizations. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 3365–3376.

- Greene, J.D. Our driverless dilemma. Science 2016, 352, 1514–1515.

- Kaplan, A.; Haenlein, M. Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus. Horiz. 2019, 62, 15–25.

- Costea, A.; Ferrara, M.; Şerban, F. An integrated two-stage methodology for optimising the accuracy of performance classification models. Technol. Econ. Dev. Econ. 2017, 23, 111–139.

- Paradarami, T.K.; Bastian, N.D.; Wightman, J.L. A hybrid recommender system using artificial neural networks. Expert Syst. Appl. 2017, 83, 300–313.

- Yuille, A.L.; Liu, C. Deep Nets: What have they ever done for Vision? arXiv 2018, arXiv:1805.04025.

- Testolin, A.; Zorzi, M. Probabilistic models and generative neural networks: Towards an unified framework for modeling normal and impaired neurocognitive functions. Front. Comput. Neurosci. 2016, 10, 73.

- Kemp, C.; Tenenbaum, J.B. The discovery of structural form. Proc. Natl. Acad. Sci. USA 2008, 105, 10687–10692.

- Cauteruccio, F.; Fortino, G.; Guerrieri, A.; Liotta, A.; Mocanu, D.C.; Perra, C.; Terracina, G.; Vega, M.T. Short-long term anomaly detection in wireless sensor networks based on machine learning and multi-parameterized edit distance. Inf. Fusion 2019, 52, 13–30.

- Chen, Y.; Nyemba, S.; Malin, B. Detecting Anomalous Insiders in Collaborative Information Systems. IEEE Trans. Dependable Secur. Comput. 2012, 9, 332–344.

- Klotz, F. The Perils of Applying AI Prediction to Complex Decisions. MIT Sloan Manag. Rev. 2019, 60, 1–4.

- Baker, B.; Kanitscheider, I.; Markov, T.; Wu, Y.; Powell, G.; McGrew, B.; Mordatch, I. Emergent tool use from multi-agent autocurricula. arXiv 2019, arXiv:1909.07528.

- Lu, R.; Hong, S.H.; Zhang, X. A dynamic pricing demand response algorithm for smart grid: Reinforcement learning approach. Appl. Energy 2018, 220, 220–230.

- Bernier, J.L.; Ortega, J.; Ros, E.; Rojas, I.; Prieto, A. A quantitative study of fault tolerance, noise immunity, and generalization ability of MLPs. Neural Comput. 2000, 12, 2941–2964.

- Hampel, F.R.; Ronchetti, E.M.; Rousseeuw, P.J.; Stahel, W.A. Robust Statistics: The Approach Based on Influence Functions; John Wiley & Sons: Hoboken, NJ, USA, 2011; Volume 196.

- Junqué de Fortuny, E.; Martens, D.; Provost, F. Predictive modeling with big data: Is bigger really better? Big Data 2013, 1, 215–226.

- Clark, J.S. Uncertainty and variability in demography and population growth: A hierarchical approach. Ecology 2003, 84, 1370–1381.

- Russell, S.J.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Pearson: Boston, MA, USA, 2020.

- Nutt, P.C. Why decisions fail: Avoiding the Blunders and Traps that Lead to Debacles; Berrett-Koehler: Oakland, CA, USA, 2002.

- Eisenhardt, K.M. Making fast strategic decisions in high-velocity environments. Acad. Manag. J. 1989, 32, 543–576.

- Tarafdar, M.; Beath, C.M.; Ross, J.W. Using AI to Enhance Business Operations. MIT Sloan Manag. Rev. 2019, 60, 37–44.

- Eisenhardt, K.M. Building theories from case study research. Acad. Manag. Rev. 1989, 14, 532–550.

- Bogosian, K. Implementation of moral uncertainty in intelligent machines. Minds Mach. 2017, 27, 591–608.

- Love-Koh, J.; Peel, A.; Rejon-Parrilla, J.C.; Ennis, K.; Lovett, R.; Manca, A.; Chalkidou, A.; Wood, H.; Taylor, M. The future of precision medicine: Potential impacts for health technology assessment. Pharmacoeconomics 2018, 36, 1439–1451.

- Willcock, S.; Martínez-López, J.; Hooftman, D.A.; Bagstad, K.J.; Balbi, S.; Marzo, A.; Prato, C.; Sciandrello, S.; Signorello, G.; Voigt, B. Machine learning for ecosystem services. Ecosyst. Serv. 2018, 33, 165–174.

- Lima-Junior, F.R.; Carpinetti, L.C.R. An adaptive network-based fuzzy inference system to supply chain performance evaluation based on SCOR® metrics. Comput. Ind. Eng. 2020, 139, 106191.

- Miles, M.B.; Huberman, A.M.; Saldaña, J. Qualitative Data Analysis: A Methods Sourcebook, 3rd ed.; SAGE: Thousand Oaks, CA, USA, 2014.

- Janis, I.L.; Mann, L. Decision Making: A Psychological Analysis of Conflict, Choice, and Commitment; Free Press: New York, NY, USA, 1977.

- Huber, G.P. Organizational learning: The contributing processes and the literatures. Organ. Sci. 1991, 2, 88–115.

- Cohen, M.S. A database tool to support probabilistic assumption-based reasoning in intelligence analysis. In Proceedings of the 1989 Joint Director of the C2 Symposium, Ft. McNair, VA, USA, 27–29 June 1989; pp. 27–29.

- Pawlak, Z. Rough set approach to knowledge-based decision support. Eur. J. Oper. Res. 1997, 99, 48–57.

- Jang, J.-S. ANFIS: Adaptive-network-based fuzzy inference system. IEEE Trans. Syst. Man Cybern. 1993, 23, 665–685.

- Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353.

- Benner, M.J.; Tushman, M.L. Exploitation, exploration, and process management: The productivity dilemma revisited. Acad. Manag. Rev. 2003, 28, 238–256.

- Krueger, T.; Page, T.; Hubacek, K.; Smith, L.; Hiscock, K. The role of expert opinion in environmental modelling. Environ. Model. Softw. 2012, 36, 4–18.

- Sagoff, M. Values and preferences. Ethics 1986, 96, 301–316.

- Shrestha, Y.R.; Ben-Menahem, S.M.; von Krogh, G. Organizational Decision-Making Structures in the Age of Artificial Intelligence. Calif. Manag. Rev. 2019, 61, 66–83.

- Barro, S.; Davenport, T.H. People and Machines: Partners in Innovation. MIT Sloan Manag. Rev. 2019, 60, 22–28.

- Keshtkar, A.; Arzanpour, S. An adaptive fuzzy logic system for residential energy management in smart grid environments. Appl. Energy 2017, 186, 68–81.

- Gabrel, V.; Murat, C.; Thiele, A. Recent advances in robust optimization: An overview. Eur. J. Oper. Res. 2014, 235, 471–483.

- Sullivan, L.S.; Reiner, P.B. Ethics in the Digital Era: Nothing New? IT Prof. 2020, 22, 39–42.

- Seuring, S.; Müller, M. From a literature review to a conceptual framework for sustainable supply chain management. J. Clean. Prod. 2008, 16, 1699–1710.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).