Utilizing Bots for Sustainable News Business: Understanding Users’ Perspectives of News Bots in the Age of Social Media

Abstract

1. Introduction

1.1. News Bots: The Use of Bots in News Organizations

1.2. The Influence of Self-Efficacy on the Acceptance of News Bots

1.3. The Influence of Perceived Prevalence on the Acceptance of News Bots

1.4. The Influence of Demographics

2. Materials and Methods

2.1. Procedure and Sample

2.2. Survey Instrument

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| | Self-Efficacy | | Perceived Prevalence | | Acceptance of News Bots | | SM News Evaluation: Accurate | | SM News Evaluation: Helpful | |
|---|---|---|---|---|---|---|---|---|---|---|
| Self-efficacy | 1 | | | | | | | | | |
| Prevalence | 0.100 *** | | 1 | | | | | | | |
| Acceptance | 0.045 * | | −0.007 | | 1 | | | | | |
| Accurate | 0.089 *** | | −0.153 *** | | 0.095 *** | | 1 | | | |
| Helpful | 0.118 *** | | −0.073 ** | | 0.075 *** | | 0.346 *** | | 1 | |
| Frequency | 1 = 299 | 13.2% | 1 = 19 | 0.8% | 0 = 1067 | 47.0% | 0 = 1351 | 59.5% | 1 = 310 | 13.7% |
| | 2 = 892 | 39.3% | 2 = 312 | 13.7% | 1 = 1203 | 53.0% | 1 = 919 | 40.5% | 2 = 1136 | 50.0% |
| | 3 = 900 | 39.6% | 3 = 1579 | 69.6% | | | | | 3 = 824 | 36.3% |
| | 4 = 179 | 7.9% | 4 = 360 | 15.9% | | | | | | |
| Mean (SD) | 2.423 (0.816) | | 3.004 (0.574) | | 0.530 (0.499) | | 0.405 (0.491) | | 2.226 (0.670) | |
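You can recompute the Mean (SD) row directly from the frequency counts. This is a quick check on the table after extraction. The Python sketch below is illustrative only. It rests on two assumptions that the table does not state: every respondent answered every item, so each column totals N = 2270, and SD means the sample standard deviation (dividing by n - 1). The Helpful count for response 2 is taken as 1136, the value implied by the column total and its 50.0% share. The names `FREQUENCIES` and `describe` are hypothetical.

```python
from math import sqrt

# Frequency counts from the table: {response value: number of respondents}.
# Assumption: the Helpful count for response 2 is 1136 (2270 - 310 - 824).
FREQUENCIES = {
    "Self-efficacy": {1: 299, 2: 892, 3: 900, 4: 179},
    "Perceived prevalence": {1: 19, 2: 312, 3: 1579, 4: 360},
    "Acceptance of news bots": {0: 1067, 1: 1203},
    "SM news evaluation: accurate": {0: 1351, 1: 919},
    "SM news evaluation: helpful": {1: 310, 2: 1136, 3: 824},
}


def describe(counts):
    """Return (n, mean, sample SD) for a frequency table {value: count}."""
    n = sum(counts.values())
    mean = sum(value * count for value, count in counts.items()) / n
    # Sum of squared deviations, weighted by how often each value occurs.
    ss = sum(count * (value - mean) ** 2 for value, count in counts.items())
    sd = sqrt(ss / (n - 1))  # sample SD; ss / n would give the population SD
    return n, mean, sd


if __name__ == "__main__":
    for name, counts in FREQUENCIES.items():
        n, mean, sd = describe(counts)
        shares = ", ".join(f"{v}: {100 * c / n:.1f}%" for v, c in counts.items())
        print(f"{name}: N = {n}, M = {mean:.3f}, SD = {sd:.3f} ({shares})")
```

Run as a script, it prints one line per variable. Each column sums to 2270, and every recomputed mean and SD lies within 0.001 of the reported value. The self-efficacy mean, for example, comes out at 5499/2270 ≈ 2.422 against the reported 2.423. Dividing by n instead of n - 1 leaves every SD unchanged at three decimals.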

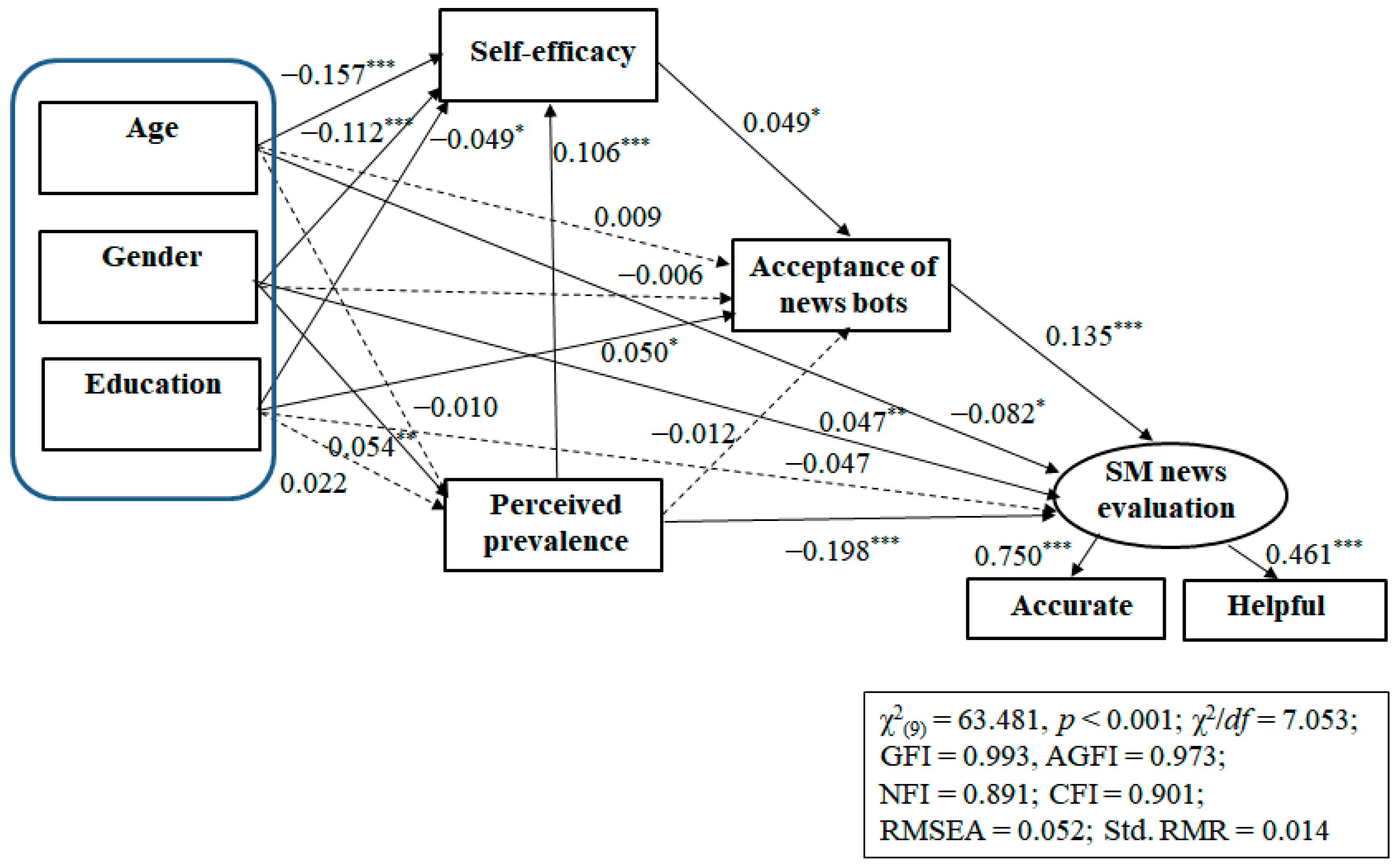

| Path | | | β (standardized) | B (unstandardized) | S.E. | C.R. | p |
|---|---|---|---|---|---|---|---|
| Acceptance | ← | Self-efficacy | 0.049 | 0.030 | 0.013 | 2.288 | 0.022 |
| Acceptance | ← | Prevalence | −0.012 | −0.011 | 0.018 | −0.576 | 0.564 |
| Self-efficacy | ← | Prevalence | 0.106 | 0.151 | 0.029 | 5.168 | <0.001 |
| SM news evaluation | ← | Acceptance | 0.135 | 0.099 | 0.020 | 5.047 | <0.001 |
| SM news evaluation | ← | Prevalence | −0.198 | −0.127 | 0.017 | −7.304 | <0.001 |
| Prevalence | ← | Age | −0.010 | −0.006 | 0.013 | −0.463 | 0.643 |
| Prevalence | ← | Gender | 0.054 | 0.062 | 0.024 | 2.561 | 0.010 |
| Prevalence | ← | Education | 0.022 | 0.018 | 0.017 | 1.060 | 0.289 |
| Self-efficacy | ← | Age | −0.157 | −0.133 | 0.017 | −7.661 | <0.001 |
| Self-efficacy | ← | Gender | −0.112 | −0.183 | 0.033 | −5.458 | <0.001 |
| Self-efficacy | ← | Education | −0.049 | −0.056 | 0.024 | −2.376 | 0.018 |
| Acceptance | ← | Age | 0.009 | 0.005 | 0.011 | 0.415 | 0.678 |
| Acceptance | ← | Gender | −0.006 | −0.006 | 0.021 | −0.286 | 0.775 |
| Acceptance | ← | Education | 0.050 | 0.036 | 0.015 | 2.405 | 0.016 |
| SM news evaluation | ← | Age | −0.082 | −0.032 | 0.010 | −3.115 | 0.002 |
| SM news evaluation | ← | Gender | 0.047 | 0.034 | 0.019 | 1.759 | 0.078 |
| SM news evaluation | ← | Education | −0.047 | −0.025 | 0.014 | −1.791 | 0.073 |
| Accurate | ← | SM news evaluation | 0.750 | 1.000 | | | |
| Helpful | ← | SM news evaluation | 0.461 | 0.839 | 0.144 | 5.826 | <0.001 |
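The C.R. and p columns are linked. C.R. (critical ratio) is the unstandardized estimate divided by its standard error. p is the two-tailed probability of that ratio under a standard normal distribution. This is the usual convention in SEM software, but the table does not say which program produced it, so treat it as an assumption.

B and S.E. are printed to three decimals, so dividing them reproduces C.R. only roughly (0.030 / 0.013 ≈ 2.31 against the reported 2.288). The sketch below therefore starts from the reported C.R. values. The names `PATHS`, `two_sided_p` and `format_p` are hypothetical.

```python
from math import erfc, sqrt

# (path, reported C.R., reported p) from the table; None marks "<0.001".
# The fixed loading (Accurate <- SM news evaluation) has no C.R. and is omitted.
PATHS = [
    ("Acceptance <- Self-efficacy", 2.288, 0.022),
    ("Acceptance <- Prevalence", -0.576, 0.564),
    ("Self-efficacy <- Prevalence", 5.168, None),
    ("SM news evaluation <- Acceptance", 5.047, None),
    ("SM news evaluation <- Prevalence", -7.304, None),
    ("Prevalence <- Age", -0.463, 0.643),
    ("Prevalence <- Gender", 2.561, 0.010),
    ("Prevalence <- Education", 1.060, 0.289),
    ("Self-efficacy <- Age", -7.661, None),
    ("Self-efficacy <- Gender", -5.458, None),
    ("Self-efficacy <- Education", -2.376, 0.018),
    ("Acceptance <- Age", 0.415, 0.678),
    ("Acceptance <- Gender", -0.286, 0.775),
    ("Acceptance <- Education", 2.405, 0.016),
    ("SM news evaluation <- Age", -3.115, 0.002),
    ("SM news evaluation <- Gender", 1.759, 0.078),
    ("SM news evaluation <- Education", -1.791, 0.073),
    ("Helpful <- SM news evaluation", 5.826, None),
]


def two_sided_p(cr):
    """Two-tailed p-value for a critical ratio treated as a standard normal z."""
    # For Z ~ N(0, 1): P(|Z| >= |cr|) = erfc(|cr| / sqrt(2)).
    return erfc(abs(cr) / sqrt(2))


def format_p(p):
    """Format a p-value as the table does: three decimals, '<0.001' below that."""
    return "<0.001" if p < 0.001 else f"{p:.3f}"


if __name__ == "__main__":
    for path, cr, reported in PATHS:
        shown = "<0.001" if reported is None else f"{reported:.3f}"
        p = format_p(two_sided_p(cr))
        print(f"{path:35s} C.R. = {cr:7.3f}  p = {p:>7s}  (reported {shown})")
```

Under this assumption, every reported p-value is recovered to within 0.001, and every path reported as <0.001 falls below that threshold. A few values differ by one unit in the third decimal; for example, the sketch gives 0.079 against the reported 0.078 for SM news evaluation ← Gender.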

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hong, H.; Oh, H.J. Utilizing Bots for Sustainable News Business: Understanding Users’ Perspectives of News Bots in the Age of Social Media. Sustainability 2020, 12, 6515. https://doi.org/10.3390/su12166515
