Assessing the Impacts of Higher Education Institutions on Sustainable Development—An Analysis of Tools and Indicators

Abstract

1. Introduction

2. Literature Review

2.1. Sustainability Assessment Tools in Higher Education

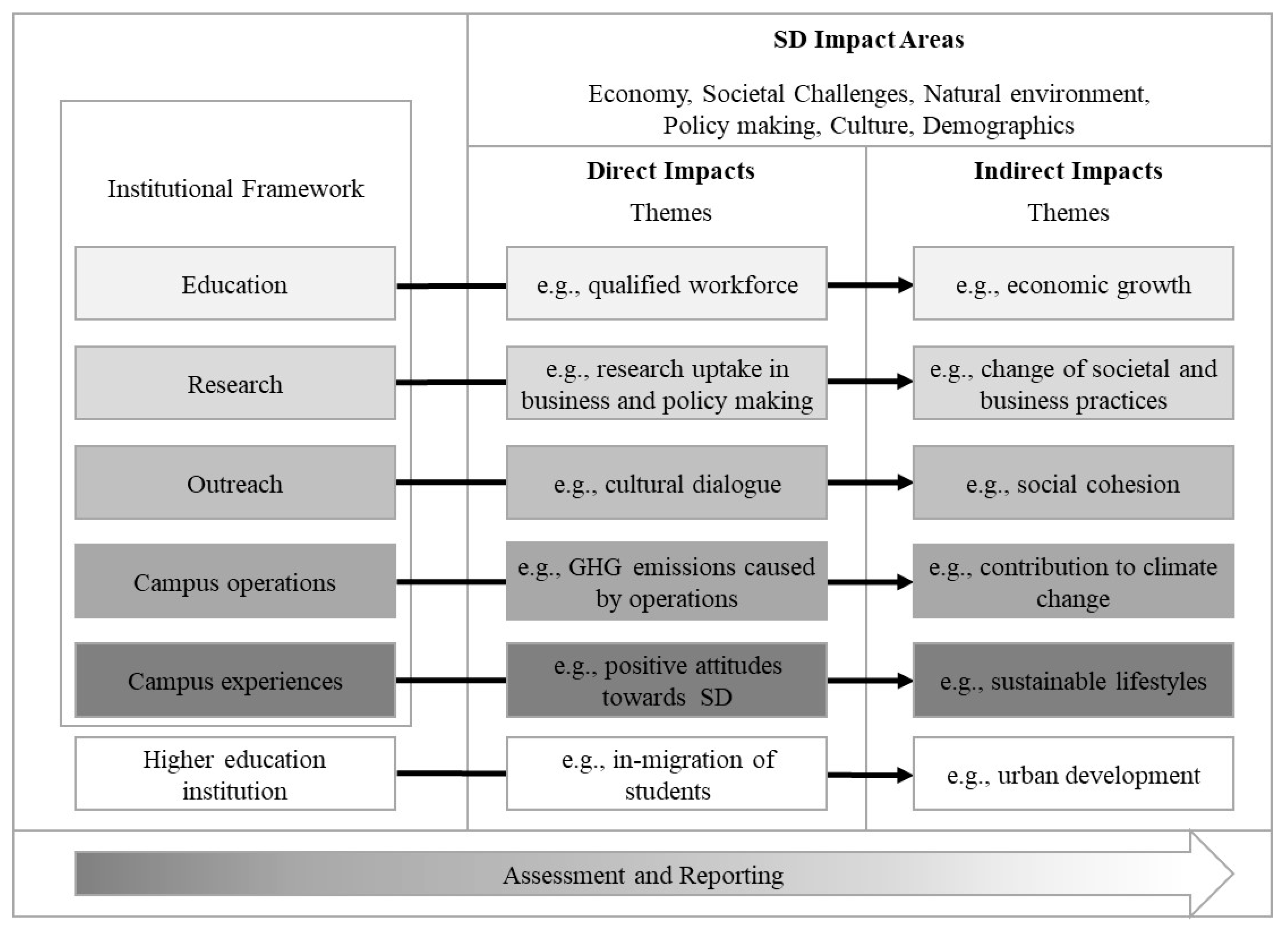

2.2. Impacts of Higher Education Institutions on Sustainable Development

3. Sample and Method

3.1. Sampling Strategy and Description

- The Auditing Instrument for Sustainability in Higher Education (AISHE) was developed in 2001 in the Netherlands by the Dutch Foundation for Sustainable Higher Education and aims at measuring sustainable education [49]. The latest version “AISHE 2.0” has 30 indicators across the five modules Identity, Education, Research, Operations, and Societal Outreach, and it offers a five-stage description for each criterion for benchmarking;

- The Adaptable Model for Assessing Sustainability in Higher Education (AMAS) was developed by Gómez et al. [50] in 2015 at the Pontificia Universidad Católica de Chile. The tool has 25 indicators, each subordinated to a goal, a criterion, and a subcriterion. It aims to enable HEIs to assess sustainability across different stages of implementation;

- The Business School Impact System (BSIS) is designed to determine the extent of a school’s impact upon its regional environment. It was launched in France in 2014 by EFMD Global Network and has 126 indicators across seven categories. Business schools can apply to enter the BSIS process and are then reviewed by an expert team [51];

- The CSA framework resulted from the Campus Sustainability Assessment Review Project, conducted in 2002 at Western Michigan University (US). The CSA framework includes 43 best practice indicators across 15 dimensions, compiled from an analysis of various CSA reports [52]. Benchmarking is possible against a “potential end goal”;

- Waheed, Khan, and Veitch [55] developed a quantitative sustainability assessment tool that uses a driving force-pressure-state-exposure-effect-action (DPSEEA) framework to achieve a causality-based impact assessment. The Canadian model is called the DPSEEA-Sustainability index Model (D-SiM) and includes 56 indicators across five categories;

- The German Commission for UNESCO (Deutsche UNESCO-Kommission, DUK) developed a sustainability self-assessment concept for HEIs in 2011 comprising 10 fields of action, which serve as its indicators. Each field of action offers five stages of implementation to which HEIs can assign themselves [56];

- The Graphical Assessment of Sustainability in Universities (GASU) has 174 indicators that build on a modification of the Global Reporting Initiative (GRI) Sustainability Guidelines. It was developed in 2006 by Rodrigo Lozano at Cardiff University (UK) and last updated in 2011. GASU aims to enable analysis and comparison of universities’ sustainability efforts [9];

- The Graz Model of Integrative Development (GMID) evaluates the transformative potentials of sustainability processes within Regional Centers of Expertise (RCE) on Education for Sustainable Development (ESD), and thus focuses on the interrelations between an HEI and regional stakeholders. It includes 15 indicators across the basic principles of Leadership, Social Networks, Participation, Education and Learning, and Research Integration, and it was developed by Clemens Mader in Graz (Austria) [57];

- People and Planet’s University League (P&P) ranks UK universities by environmental and ethical performance using 51 indicators across 13 categories. The university sustainability ranking was first conducted in 2007 and has been updated each year [58];

- The Penn State Indicators Report (PENN) evaluated the sustainability performance of Pennsylvania State University (US) in 2000. It covers 33 indicators across 10 categories. These indicators were subsequently used by other HEIs for sustainability assessment [59];

- The Association of University Leaders for a Sustainable Future (ULSF) [60] created the Sustainability Assessment Questionnaire (SAQ) for colleges and universities. It was developed in the US and uses 41 indicators to assess how sustainable a university’s teaching, research, operations, and outreach are;

- The National Wildlife Federation’s [61] State of the Campus Environment (SCE) is a national (US) report card on Environmental Performance and Sustainability in Higher Education. It covers 69 indicators across 12 categories;

- Good Company’s Sustainable Pathways Toolkit (SPT), developed in 2002 in the US, evaluates the social and environmental impacts of HEIs using 29 indicators (20 core indicators and nine supplementary indicators). Each indicator comes with a benchmark suggesting a desirable level of performance for the respective area of application [62];

- The Sustainability Tracking, Assessment and Rating System (STARS) version 2.1 was developed by the Association for the Advancement of Sustainability in Higher Education (AASHE) [63] in North America. It includes 68 indicators with benchmarks that determine possible scoring across the categories Academics, Engagement, Operations, Planning and Administration, and Innovation and Leadership. HEIs that submit a self-assessment using STARS may achieve a gold, silver, or bronze rating;

- The Sustainability Tool for Auditing for University Curricula in Higher-Education (STAUNCH®), developed by Rodrigo Lozano in 2009 in the UK, enables HEIs to assess their curricula’s contribution to sustainable development by using 36 indicators that are subdivided into environmental, economic, social, and cross-cutting themes [64];

- Lukman, Krajnc, and Glavic [65] created the Three-dimensional University Ranking (TUR) in 2010 at the University of Maribor (SI). The model offers 15 indicators to evaluate HEIs along their research, educational and environmental performance in a way that enables inter-organizational comparison;

- The UI GreenMetric World University Ranking (UIGM) is an initiative of Universitas Indonesia (ID), launched in 2010. It ranks universities’ performance in the categories of Setting and Infrastructure, Energy and Climate Change, Waste, Water, Transportation, and Education using 69 indicators [66]; and,

- In 2009, the United Nations Environment Programme (UNEP) designed the Unit-based Sustainability Assessment Tool (USAT) to determine to what degree HEIs have integrated sustainability concerns. USAT employs 75 indicators across the dimensions teaching, operations and management, student involvement, and policy and written statements [67].

3.2. Coding Strategy

- Economy: Local food purchasing, alumni in the job market;

- Societal challenges: Research ethics, student fees;

- Natural environment: Noise pollution, resource consumption, GHG emissions;

- Policy making: Contribution to public policy development;

- Culture: Cultural dialog, cultural diversity; and

- Demographics: Composition and change of local population, including student and alumni population.

3.3. Analysis of the Coding Matrix

3.4. Limitations

4. Results

4.1. Descriptive Analysis

4.2. Inductive Content Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Stephens, J.; Hernandez, M.; Roman, M.; Graham, A.; Scholz, R. Higher Education as a Change Agent for Sustainability in Different Cultures and Contexts. Int. J. Sustain. High. Educ. 2008, 9, 317–338. [Google Scholar] [CrossRef]

- Cortese, A.D. The Critical Role of Higher Education in Creating a Sustainable Future. Plan. High. Educ. 2003, 31, 15–22. [Google Scholar]

- Lozano, R. Incorporation and Institutionalization of SD into Universities: Breaking through Barriers to Change. J. Clean. Prod. 2006, 14, 787–796. [Google Scholar] [CrossRef]

- UN (United Nations). Declaration of the United Nations Conference on the Human Environment. Available online: http://www.un-documents.net/unchedec.htm (accessed on 27 August 2018).

- Lozano, R.; Lukman, R.; Lozano, F.J.; Huisingh, D.; Lambrechts, W. Declarations for Sustainability in Higher Education: Becoming Better Leaders, through Addressing the University System. J. Clean. Prod. 2013, 48, 10–19. [Google Scholar] [CrossRef]

- UN (United Nations). Higher Education and Research for Sustainable Development (HESD). Available online: https://sustainabledevelopment.un.org/partnership/?p=11748 (accessed on 27 August 2018).

- UN (United Nations). Higher Education Sustainability Initiative (HESI). Available online: https://sustainabledevelopment.un.org/sdinaction/hesi (accessed on 27 August 2018).

- Gamage, P.; Sciulli, N. Sustainability Reporting by Australian Universities. Aust. J. Public Adm. 2016, 76, 187–203. [Google Scholar] [CrossRef]

- Lozano, R. A Tool for a Graphical Assessment of Sustainability in Universities (GASU). J. Clean. Prod. 2006, 14, 963–972. [Google Scholar] [CrossRef]

- Bullock, G.; Wilder, N. The Comprehensiveness of Competing Higher Education Sustainability Assessments. Int. J. Sustain. High. Educ. 2016, 17, 282–304. [Google Scholar] [CrossRef]

- Saadatian, O.; Dola, K.B.; Salleh, E.I.; Tahir, O.M. Identifying Strength and Weakness of Sustainable Higher Educational Assessment Approaches. Int. J. Bus. Soc. Sci. 2011, 2, 137–146. [Google Scholar]

- Gilbert, D.U.; Rasche, A.; Waddock, S. Accountability in a Global Economy: The Emergence of International Accountability Standards. Bus. Ethics 2011, 21, 23–44. [Google Scholar] [CrossRef]

- Shriberg, M. Institutional Assessment Tools for Sustainability in Higher Education. Int. J. Sustain. High. Educ. 2002, 3, 254–270. [Google Scholar] [CrossRef]

- Berzosa, A.; Bernaldo, M.O.; Fernández-Sanchez, G. Sustainability Assessment Tools for Higher Education: An Empirical Comparative Analysis. J. Clean. Prod. 2017, 161, 812–820. [Google Scholar] [CrossRef]

- Fischer, D.; Jenssen, S.; Tappeser, V. Getting an Empirical Hold of the Sustainable University: A Comparative Analysis of Evaluation Frameworks across 12 Contemporary Sustainability Assessment Tools. Assess. Eval. High. Educ. 2015, 40, 785–800. [Google Scholar] [CrossRef]

- Yarime, M.; Tanaka, Y. The Issues and Methodologies in Sustainability Assessment Tools for Higher Education Institutions—A Review of Recent Trends and Future Challenges. J. Educ. Sustain. Dev. 2012, 6, 63–77. [Google Scholar] [CrossRef]

- Findler, F.; Schönherr, N.; Lozano, R.; Reider, D.; Martinuzzi, A. Conceptualizing Sustainable Development Impacts in Higher Education—A Systematic Literature Review. Int. J. Sustain. High. Educ. in press.

- Vaughter, P.; Wright, T.; McKenzie, M.; Lidstone, L. Greening the Ivory Tower: A Review of Educational Research on Sustainability in Post-Secondary Education. Sustainability 2013, 5, 2252–2271. [Google Scholar] [CrossRef]

- Koehn, P.H.; Uitto, J.I. Evaluating Sustainability Education: Lessons from International Development Experience. High. Educ. 2014, 67, 621–635. [Google Scholar] [CrossRef]

- Lebeau, Y.; Cochrane, A. Rethinking the ‘Third Mission’: UK Universities and Regional Engagement in Challenging Times. Eur. J. High. Educ. 2015, 5, 250–263. [Google Scholar] [CrossRef]

- Gupta, H.; Singhal, N. Framework for Embedding Sustainability in Business Schools: A Review. Vis. J. Bus. Perspect. 2017, 21, 195–203. [Google Scholar] [CrossRef]

- Bonaccorsi, A.; Daraio, C.; Geuna, A. Universities in the New Knowledge Landscape: Tensions, Challenges, Change-an Introduction. Minerva 2010, 48, 1–4. [Google Scholar] [CrossRef]

- Caeiro, S.; Jabbour, C.; Leal Filho, W. Sustainability Assessment Tools in Higher Education Institutions: Mapping Trends and Good Practices around the World; Springer: Cham, Switzerland, 2013; p. 432. [Google Scholar]

- Ceulemans, K.; Molderez, I.; Van Liedekerke, L. Sustainability Reporting in Higher Education: A Comprehensive Review of the Recent Literature and Paths for Further Research. J. Clean. Prod. 2015, 106, 127–143. [Google Scholar] [CrossRef]

- Dalal-Clayton, B.; Bass, S. Sustainable Development Strategies: A Resource Book; Earthscan Publications Ltd.: London, UK, 2002. [Google Scholar]

- Daub, C.H. Assessing the Quality of Sustainability Reporting: An Alternative Methodological Approach. J. Clean. Prod. 2007, 15, 75–85. [Google Scholar] [CrossRef]

- Prado-Lorenzo, J.M.; Gallego-Alvarez, I.; Garcia-Sanchez, I.M. Stakeholder Engagement and Corporate Social Responsibility Reporting: The Ownership Structure Effect. Corp. Soc. Responsib. Environ. Manag. 2009, 16, 94–107. [Google Scholar] [CrossRef]

- Ceulemans, K.; Lozano, R.; Alonso-Almeida, M.M. Sustainability Reporting in Higher Education: Interconnecting the Reporting Process and Organisational Change Management for Sustainability. Sustainability 2015, 7, 8881–8903. [Google Scholar] [CrossRef]

- Huber, S.; Bassen, A. Towards a Sustainability Reporting Guideline in Higher Education. Int. J. Sustain. High. Educ. 2018, 19, 218–232. [Google Scholar] [CrossRef]

- Lozano, R.; Llobet, J.; Tideswell, G. The Process of Assessing and Reporting Sustainability at Universities: Preparing the Report of the University of Leeds. Sostenibilidad, Tecnologia y Humanismo 2013, 6, 85–112. [Google Scholar]

- Adams, C.A. Sustainability Reporting and Performance Management in Universities—Challenges and Benefits. Sustain. Account. Manag. Policy J. 2013, 4, 384–392. [Google Scholar] [CrossRef]

- Lambrechts, W. The Contribution of Sustainability Assessment to Policy Development in Higher Education. Assess. Eval. High. Educ. 2015, 40, 801–816. [Google Scholar] [CrossRef]

- Alghamdi, N.; den Heijer, A.; de Jonge, H. Assessment Tools’ Indicators for Sustainability in Universities: An Analytical Overview. Int. J. Sustain. High. Educ. 2017, 18, 84–115. [Google Scholar] [CrossRef]

- Ramos, T.B. Development of Regional Sustainability Indicators and the Role of Academia in This Process: The Portuguese Practice. J. Clean. Prod. 2009, 17, 1101–1115. [Google Scholar] [CrossRef]

- Gallopín, G. Indicators and Their Use: Information for Decision Making. In Sustainability Indicators. Report on the Project on Indicators of Sustainable Development; Moldan, B., Billharz, S., Eds.; John Wiley and Sons: Chichester, UK, 1997. [Google Scholar]

- Jorgensen, A.; Le Bocq, A.; Nazarkina, L.; Hauschild, M. Methodologies for Social Life Cycle Assessment. Int. J. Life Cycle Assess. 2008, 13, 96–103. [Google Scholar] [CrossRef]

- Sayed, A.; Asmuss, M. Benchmarking Tools for Assessing and Tracking Sustainability in Higher Educational Institutions. Int. J. Sustain. High. Educ. 2013, 14, 449–465. [Google Scholar] [CrossRef]

- European Commission. Horizon 2020 Work Program 2014-2015 General Annexes H. Evaluation. Available online: http://ec.europa.eu/research/participants/data/ref/h2020/wp/2014_2015/annexes/h2020-wp1415-annex-h-esacrit_en.pdf (accessed on 20 July 2018).

- REF (Research Excellence Framework). What Is the REF? Available online: http://www.ref.ac.uk/about/whatref/ (accessed on 23 July 2018).

- EFMD. EFMD Launch Business School Impact System (BSIS). Available online: https://www.efmd.org/efmd-deans-across-frontiers/view/467-efmd-launch-business-school-impact-survey (accessed on 20 July 2018).

- Maas, K.; Liket, K. Social Impact Measurement: Classification of Methods. In Environmental Management Accounting and Supply Chain Management; Burritt, R.L., Schaltegger, S., Bennett, M., Pohjola, T., Csutora, M., Eds.; Springer: Berlin, Germany, 2011; pp. 171–202. [Google Scholar]

- Findler, F.; Schönherr, N.; Martinuzzi, A. Higher Education Institutions as Transformative Agents for a Sustainable Society. In Chancen und Grenzen der Nachhaltigkeitstransformation; Luks, F., Ed.; Springer Gabler: Wiesbaden, Germany, 2019. [Google Scholar]

- Buckler, C.; Creech, H. Shaping the Future We Want; United Nations Educational, Scientific and Cultural Organization: Paris, France, 2014. [Google Scholar]

- Versteijlen, M.; Perez Salgado, F.; Janssen Groesbeek, M.; Counotte, A. Pros and Cons of Online Education as a Measure to Reduce Carbon Emissions in Higher Education in the Netherlands. Curr. Opin. Environ. Sustain. 2017, 28, 80–89. [Google Scholar] [CrossRef]

- Hubbard, P. Regulating the Social Impacts of Studentification: A Loughborough Case Study. Environ. Plan. A 2008, 40, 323–341. [Google Scholar] [CrossRef]

- Yao, L.J.; Bai, Y. The Sustainability of Economic and Cultural Impacts of International Students to Regional Australia. Humanomics 2008, 24, 250–262. [Google Scholar] [CrossRef]

- Malina, M.A.; Nørreklit, H.S.O.; Selto, F.H. Lessons Learned: Advantages and Disadvantages of Mixed Method Research. Qual. Res. Account. Manag. 2011, 8, 59–71. [Google Scholar] [CrossRef]

- Teddlie, C.; Tashakkori, A. Foundations of Mixed Methods Research Integrating Quantitative and Qualitative Approaches in the Social and Behavioral Sciences, 3rd ed.; SAGE Publications: Los Angeles, CA, USA, 2010; p. 387. [Google Scholar]

- Roorda, N.; Rammel, C.; Waara, S.; Fra Paleo, U. AISHE 2.0 Manual: Assessment Instrument for Sustainability in Higher Education. Available online: http://www.eauc.org.uk/theplatform/aishe (accessed on 9 August 2018).

- Gómez, F.; Sáez-Navarrete, C.; Lioi, S.; Marzuca, V. Adaptable Model for Assessing Sustainability in Higher Education. J. Clean. Prod. 2015, 107, 475–485. [Google Scholar] [CrossRef]

- EFMD Global Network. BSIS Assessment Criteria Guide. Available online: http://www.efmdglobal.org/bsis (accessed on 15 September 2018).

- Nixon, A. Improving the Campus Sustainability Assessment Process. Bachelor Thesis, Western Michigan University, Kalamazoo, MI, USA, 2002. [Google Scholar]

- Cole, L. Assessing Sustainability on Canadian University Campuses: Development of a Campus Sustainability Assessment Framework. Available online: http://neumann.hec.ca/humaniterre/campus_durable/campus_memoire.pdf (accessed on 13 September 2018).

- Legacy, C. Campus Sustainability: Sustainability Assessment Framework at the University of Waterloo. Available online: http://environment.uwaterloo.ca/research/watgreen/projects/library/w04sustframework.pdf (accessed on 3 September 2018).

- Waheed, B.; Khan, F.I.; Veitch, B. Developing a Quantitative Tool for Sustainability Assessment of HEIs. Int. J. Sustain. High. Educ. 2011, 12, 355–368. [Google Scholar] [CrossRef]

- Deutsche UNESCO-Kommission e.V. Hochschulen für eine nachhaltige Entwicklung: Nachhaltigkeit in Forschung, Lehre und Betrieb. Available online: https://www.hrk.de/uploads/media/Hochschulen_fuer_eine_nachhaltige_Entwicklung_Feb2012.pdf (accessed on 12 September 2018).

- Mader, C. Sustainability Process Assessment on Transformative Potentials: The Graz Model for Integrative Development. J. Clean. Prod. 2013, 49, 54–63. [Google Scholar] [CrossRef]

- People & Planet. People & Planet University League. How Sustainable Is Your University? Available online: https://peopleandplanet.org/university-league (accessed on 22 October 2018).

- Penn State Green Destiny Council. Penn State Indicators Report. Steps toward a Sustainable University. Available online: http://www.willamette.edu/~nboyce/assessment/PennState.pdf (accessed on 15 October 2018).

- ULSF (University Leaders for a Sustainable Future). Sustainability Assessment Questionnaire (SAQ) for Colleges and Universities. Available online: http://ulsf.org/wp-content/uploads/2015/06/SAQforHigherEd09.pdf (accessed on 12 July 2018).

- National Wildlife Federation. State of the Campus Environment. Available online: https://www.nwf.org/en/EcoLeaders/Campus-Ecology-Resource-Center/Reports/State-of-the-Campus-Environment (accessed on 14 September 2018).

- Good Company. Sustainability Assessment of the University of Oregon Based on Good Company’s Sustainable Pathways Toolkit. Available online: https://cpfm.uoregon.edu/sites/cpfm2.uoregon.edu/files/sust_assessment_-_sust_pathways_toolkit_0.pdf (accessed on 12 September 2018).

- AASHE (The Association for the Advancement of Sustainability in Higher Education). STARS Technical Manual. Version 2.1. Available online: https://stars.aashe.org/pages/about/technical-manual.html (accessed on 30 August 2018).

- Lozano, R. Developing a Tool to Audit Curricula Contributions to Sustainable Development. In Sustainability at Universities—Opportunities, Challenges and Trends; Peattie, K., Ed.; Peter Lang Publishing Group: Frankfurt am Main, Germany, 2009; pp. 179–194. [Google Scholar]

- Lukman, R.; Krajnc, D.; Glavic, P. University Ranking Using Research, Educational and Environmental Indicators. J. Clean. Prod. 2010, 18, 619–628. [Google Scholar] [CrossRef]

- UI GreenMetric. UI GreenMetric: Criteria & Indicators. Available online: http://greenmetric.ui.ac.id/criterian-indicator/ (accessed on 22 August 2018).

- Togo, M.; Lotz-Sisitka, H. Unit Based Sustainability Assessment Tool. A Resource Book to Complement the UNEP Mainstreaming Environment and Sustainability in African Universities Partnership; Share-Net: Howick, South Africa, 2009. [Google Scholar]

- De Wet, J.; Erasmus, Z. Towards Rigour in Qualitative Analysis. Qual. Res. J. 2005, 5, 27–40. [Google Scholar] [CrossRef]

- Patton, M.Q. Qualitative Research & Evaluation Methods Integrating Theory and Practice, 4th ed.; Sage Publications: New York, NY, USA, 2015; p. 806. [Google Scholar]

- Seuring, S.; Müller, M. From a Literature Review to a Conceptual Framework for Sustainable Supply Chain Management. J. Clean. Prod. 2008, 16, 1699–1710. [Google Scholar] [CrossRef]

- Tinsley, H.E.A.; Weiss, D.J. Interrater Reliability and Agreement. In Handbook of Applied Multivariate Statistics and Mathematical Modeling; Tinsley, H.E.A., Brown, S.D., Eds.; Academic Press: San Diego, CA, USA, 2000; pp. 95–124. [Google Scholar]

- Sinkovics, N. Pattern Matching in Qualitative Analysis. In The Sage Handbook of Qualitative Business and Management Research Methods; Cassell, C., Cunliffe, A., Grandy, G., Eds.; Sage Publications, Inc.: Thousand Oaks, CA, USA, 2018; pp. 468–485. [Google Scholar]

- IBM. IBM SPSS Statistics. Available online: https://www.ibm.com/products/spss-statistics (accessed on 17 October 2018).

- Singh, K. Quantitative Social Research Methods, 1st ed.; SAGE Publications: New Delhi, India, 2007; p. 431. [Google Scholar]

- Field, A. Discovering Statistics Using IBM SPSS Statistics and Sex and Drugs and Rock’n’roll, 4th ed.; SAGE: Los Angeles, CA, USA, 2013; p. 915. [Google Scholar]

- VERBI. MAXQDA—The Art of Data Analysis. Available online: http://www.maxqda.com/ (accessed on 7 October 2018).

- Flint, D.J.; Woodruff, R.B.; Fisher Gardial, S. Exploring the Phenomenon of Customers’ Desired Value Change in a Business-to-Business Context. J. Mark. 2002, 66, 102–117. [Google Scholar] [CrossRef]

- Wallendorf, M.; Belk, R.W. Assessing Trustworthiness in Naturalistic Consumer Research. Interpret. Consum. Res. 1989, 69–84. [Google Scholar]

- Gaviglio, A.; Bertocchi, M.; Demartini, E. A Tool for the Sustainability Assessment of Farms: Selection, Adaptation and Use of Indicators for an Italian Case Study. Resources 2017, 6, 60. [Google Scholar] [CrossRef]

- Schönherr, N.; Findler, F.; Martinuzzi, A. Exploring the Interface of CSR and the Sustainable Development Goals. Trans. Corp. 2017, 24, 33–47. [Google Scholar] [CrossRef]

- Bedenlier, S.; Kondakci, Y.; Zawacki-Richter, O. Two Decades of Research into the Internationalization of Higher Education: Major Themes in the Journal of Studies in International Education (1997–2016). J. Stud. Int. Educ. 2017, 22, 108–135. [Google Scholar] [CrossRef]

| Author(s) | Tools Analyzed | Methodology | Main Findings |
|---|---|---|---|
| Shriberg (2002) [13] | n = 11: AISHE, Campus Ecology, Environmental EMS Self-Assessment, Environmental Workbook and Report, Greening Campuses, Grey Pinstripes with Green Ties, Higher Education 21’s Sustainability Indicators, Indicators Snapshot Guide, Performance Survey, SAQ, State of the Campus Environment | A content analysis focusing on the strengths and weaknesses of the tools was conducted. | The tools vary greatly in their purpose, function, scope, and state of development. |
| Yarime & Tanaka (2012) [16] | n = 16: AISHE, Campus Ecology, Campus Sustainability Selected Indicators Snapshot, College Sustainability Report Card, CSAF, CSAF core, CSARP, EMS Self-Assessment, Environmental Workbook and Report, GASU, Good Company’s Sustainable Pathways Toolkit, HEPS, Penn State Indicator Report, SAQ, STARS, State of the Campus Environment | A mixed-method approach with a quantitative and a qualitative part was applied: (1) comparative analysis of criteria and (2) content analysis of individual indicators. | The main focus of the tools is on campus operations and governance issues. Education, research, and outreach are not well addressed. |
| Sayed & Asmuss (2013) [37] | n = 4: SAQ, CSAF, CSRC, STARS | Each tool was rated based on 27 questions related to five areas of campus life at a specific university. | STARS was identified as the most effective SAT. SAQ and CSAF have limitations in assessing SD in campus operations. |
| Fischer et al. (2015) [15] | n = 12: AISHE, Alternative University Appraisal, Conference of Rectors of Spanish Universities, CSAF, German Commission for UNESCO, Graz Model of Integrative Development, Green Plan, People & Planet, Red de Ciencia, Tecnología, Innovación y Educación Ambiental en Iberoamérica, SAQ, STARS, UI GreenMetric | A mixed-method approach with a quantitative and a qualitative part was applied: (1) comparative analysis of criteria and (2) analysis of introductory passages in supporting documents. | Indicators and criteria are biased towards the field of operations. |
| Bullock & Wilder (2016) [10] | n = 9: ACUPCC, College Sustainability Report Card, Grey Pinstripes with Green Ties, Pacific Sustainability Index (PSI), Princeton Review’s Green Ratings, Sierra Club’s Cool Schools, STARS, The Guardian’s Green League, UI GreenMetric | Sustainability assessment frameworks and SATs were evaluated using the GRI-HE framework. | The evaluated sustainability assessments and SATs are not comprehensive and lack coverage of the economic and social dimensions of sustainability. |
| Alghamdi et al. (2017) [33] | n = 12: Adaptable Model for Assessing Sustainability in Higher Education, AISHE, Alternative University Appraisal, GASU, Green Plan, SAQ, STARS, Sustainable Campus Assessment System, Sustainable University Model, UI GreenMetric, Unit-based Sustainability Assessment Tool, University Environmental Management System | A desk study approach with quantitative and qualitative elements was applied, including a review of research articles, academic books, network platforms, graduate theses, and websites. | The tools share similar characteristics in terms of their criteria and indicators, which can be grouped into management, academia, environment, engagement, and innovation. Environmental indicators have the highest share among the tools. |
| Berzosa et al. (2017) [14] | n = 4: AISHE, SAQ, Sustain Tool, USAT | A descriptive analysis of SATs based on single case studies was performed. | The SATs positively influence the creation of specific plans for education, research, outreach, and campus operations. They have a strong focus on the environmental dimension and deliver similar outcomes. |

| (Core) Element | Examples |
|---|---|
| Institutional framework | Broad-scale policies and the administrative structure of the HEIs, including, e.g., governance body structure, vision and mission statements, policies for staff and faculty hiring, budget issues, student associations, and development programs for staff and faculty |
| Education | Teaching, curriculum, and all other activities aiming for the education of students |
| Research | Research-related activities of the HEIs, e.g., allocation of research funds, transdisciplinary research programs |
| Outreach | HEI’s collaboration efforts with external stakeholders on regional, national, and international level |
| Campus operations | HEI’s environmental management, procurement policies and practices, infrastructure, and workspace-related issues such as safety regulations |
| Campus experiences | On-campus experiences for students and staff (e.g., student crime) and individual behaviors not related to studying or working (e.g., alcohol consumption) |
| Assessment and reporting | HEI’s assessment and reporting processes that are geared towards the engagement with external stakeholders (e.g., external assurance, reporting cycles, stakeholder identification processes) |
| Higher education institution | Activities or impacts on the institutional level that cannot be influenced by measures in one of the core elements, e.g., demographic effects on the region through student in-migration |

| Assessment Tool | Institutional Framework | Education | Research | Outreach | Campus Operations | Campus Experiences | Assessment & Reporting | HEI | NA | ∑ |
|---|---|---|---|---|---|---|---|---|---|---|
| AISHE | 23.33 | 20.00 | 20.00 | 20.00 | 6.67 | 0.00 | 10.00 | 0.00 | 0.00 | 100.00 |
| AMAS | 44.00 | 4.00 | 4.00 | 8.00 | 24.00 | 0.00 | 12.00 | 4.00 | 0.00 | 100.00 |
| BSIS | 7.14 | 26.19 | 19.05 | 18.25 | 0.79 | 0.00 | 0.00 | 23.02 | 5.56 | 100.00 |
| CSA | 4.65 | 6.98 | 6.98 | 2.33 | 72.08 | 6.98 | 0.00 | 0.00 | 0.00 | 100.00 |
| CSAF | 31.95 | 5.33 | 3.54 | 1.78 | 48.52 | 5.92 | 0.00 | 1.18 | 1.78 | 100.00 |
| D-SiM | 8.93 | 10.71 | 5.36 | 1.79 | 46.43 | 0.00 | 0.00 | 14.29 | 12.49 | 100.00 |
| DUK | 20.00 | 30.00 | 20.00 | 0.00 | 20.00 | 0.00 | 10.00 | 0.00 | 0.00 | 100.00 |
| GASU | 28.16 | 10.92 | 6.90 | 1.72 | 21.84 | 1.72 | 11.49 | 4.61 | 12.64 | 100.00 |
| GMID | 20.00 | 20.00 | 20.00 | 40.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| P&P | 33.33 | 11.76 | 0.00 | 0.00 | 50.99 | 1.96 | 1.96 | 0.00 | 0.00 | 100.00 |
| PENN | 6.06 | 3.03 | 9.09 | 0.00 | 69.70 | 12.12 | 0.00 | 0.00 | 0.00 | 100.00 |
| SAQ | 21.94 | 12.20 | 9.76 | 4.88 | 29.26 | 9.76 | 0.00 | 12.20 | 0.00 | 100.00 |
| SCE | 23.19 | 18.84 | 2.90 | 0.00 | 55.07 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| SPT | 24.14 | 6.89 | 0.00 | 0.00 | 68.97 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| STARS | 26.48 | 16.18 | 4.41 | 8.82 | 33.82 | 0.00 | 2.94 | 2.94 | 4.41 | 100.00 |
| STAUNCH® | 0.00 | 100.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| TUR | 20.00 | 40.00 | 33.33 | 0.00 | 6.67 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| UIGM | 0.00 | 4.35 | 7.25 | 0.00 | 63.77 | 1.45 | 4.35 | 1.45 | 17.38 | 100.00 |
| USAT | 30.67 | 21.33 | 9.34 | 8.00 | 21.33 | 4.00 | 0.00 | 1.33 | 4.00 | 100.00 |
| Overall average | 20.90 | 16.04 | 7.85 | 5.20 | 34.48 | 2.56 | 2.91 | 5.03 | 5.03 | 100.00 |
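Because Section 3.1 states how many indicators each tool contains, the percentages in the table above can be converted back into approximate indicator counts, which gives a quick consistency check on any row. The following is a minimal Python sketch, assuming each share was computed as count / total × 100 and rounded to two decimals; the helper name `shares_to_counts` is hypothetical.

```python
def shares_to_counts(shares_pct: list[float], n_indicators: int) -> list[int]:
    """Convert percentage shares (0-100) back into whole indicator counts.

    Assumes each share was computed as count / n_indicators * 100 and then
    rounded, so multiplying back and rounding recovers the integer count.
    """
    return [round(share * n_indicators / 100) for share in shares_pct]


# Column order: Institutional Framework, Education, Research, Outreach,
# Campus Operations, Campus Experiences, Assessment & Reporting, HEI, NA
gasu_shares = [28.16, 10.92, 6.90, 1.72, 21.84, 1.72, 11.49, 4.61, 12.64]  # 174 indicators
uigm_shares = [0.00, 4.35, 7.25, 0.00, 63.77, 1.45, 4.35, 1.45, 17.38]     # 69 indicators

gasu_counts = shares_to_counts(gasu_shares, 174)
uigm_counts = shares_to_counts(uigm_shares, 69)

print(gasu_counts, sum(gasu_counts))  # [49, 19, 12, 3, 38, 3, 20, 8, 22] 174
print(uigm_counts, sum(uigm_counts))  # [0, 3, 5, 0, 44, 1, 3, 1, 12] 69
```

If the recovered counts of a row add up to the indicator total stated in Section 3.1, the row is internally consistent; for tools of this size, two-decimal percentages are precise enough for the rounding step to be unambiguous.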

| Assessment Tool | Performance Indicator | Proxy Indicator | Impact Indicator | NA | ∑ |
|---|---|---|---|---|---|
| AISHE | 83.33 | 0.00 | 16.67 | 0.00 | 100.00 |
| AMAS | 84.00 | 16.00 | 0.00 | 0.00 | 100.00 |
| BSIS | 18.25 | 70.64 | 11.11 | 0.00 | 100.00 |
| CSA | 67.44 | 25.58 | 6.98 | 0.00 | 100.00 |
| CSAF | 81.66 | 16.57 | 1.18 | 0.59 | 100.00 |
| D-SiM | 28.56 | 17.86 | 14.29 | 39.29 | 100.00 |
| DUK | 100.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| GASU | 59.77 | 19.54 | 4.02 | 16.67 | 100.00 |
| GMID | 100.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| P&P | 82.35 | 17.65 | 0.00 | 0.00 | 100.00 |
| PENN | 39.39 | 51.52 | 9.09 | 0.00 | 100.00 |
| SAQ | 100.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| SCE | 86.96 | 13.04 | 0.00 | 0.00 | 100.00 |
| SPT | 65.52 | 17.24 | 17.24 | 0.00 | 100.00 |
| STARS | 88.24 | 7.35 | 0.00 | 4.41 | 100.00 |
| STAUNCH® | 100.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| TUR | 100.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| UIGM | 72.47 | 17.39 | 0.00 | 10.14 | 100.00 |
| USAT | 100.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| Overall average | 69.84 | 20.55 | 4.14 | 5.47 | 100.00 |
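The “Overall average” row does not appear to be a simple mean of the 19 tool rows. It is consistent with a pooled share over all indicators, in which each tool is weighted by its number of indicators; for the proxy column, pooling yields 233 proxy indicators, the same total reported in the next table. The Python sketch below compares both readings under that assumption. Indicator counts are taken from Section 3.1, except for CSAF, whose count (169) is not stated in this section and is inferred here from its percentage profile (e.g., 0.59% ≈ 1/169); the function names are hypothetical.

```python
# Indicators per tool as stated in Section 3.1.
# ASSUMPTION: CSAF = 169 is inferred from its percentages, not stated in the text.
N_INDICATORS = {
    "AISHE": 30, "AMAS": 25, "BSIS": 126, "CSA": 43, "CSAF": 169,
    "D-SiM": 56, "DUK": 10, "GASU": 174, "GMID": 15, "P&P": 51,
    "PENN": 33, "SAQ": 41, "SCE": 69, "SPT": 29, "STARS": 68,
    "STAUNCH": 36, "TUR": 15, "UIGM": 69, "USAT": 75,
}

# "Proxy Indicator" column of the table above (percent of each tool's indicators).
PROXY_SHARE = {
    "AISHE": 0.00, "AMAS": 16.00, "BSIS": 70.64, "CSA": 25.58, "CSAF": 16.57,
    "D-SiM": 17.86, "DUK": 0.00, "GASU": 19.54, "GMID": 0.00, "P&P": 17.65,
    "PENN": 51.52, "SAQ": 0.00, "SCE": 13.04, "SPT": 17.24, "STARS": 7.35,
    "STAUNCH": 0.00, "TUR": 0.00, "UIGM": 17.39, "USAT": 0.00,
}


def unweighted_mean(shares: dict[str, float]) -> float:
    """Average the tool-level percentages, giving every tool equal weight."""
    return sum(shares.values()) / len(shares)


def pooled_share(shares: dict[str, float], counts: dict[str, int]) -> float:
    """Percentage of all indicators across all tools (each tool weighted by size)."""
    total = sum(counts[tool] for tool in shares)
    weighted = sum(shares[tool] * counts[tool] for tool in shares)
    return weighted / total


total = sum(N_INDICATORS.values())
pooled = pooled_share(PROXY_SHARE, N_INDICATORS)
print(f"total indicators: {total}")                           # 1134
print(f"unweighted mean: {unweighted_mean(PROXY_SHARE):.2f}%")  # 15.28%
print(f"pooled share:    {pooled:.2f}%")                        # 20.55%
print(f"pooled count:    {round(pooled * total / 100)}")        # 233
```

Only the pooled reading reproduces the printed 20.55%; the unweighted mean gives every tool equal weight, so small tools such as DUK or TUR count as much as BSIS or GASU.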

| | | Proxy Indicator | Impact Indicator | Total | χ² ¹ |
|---|---|---|---|---|---|
| (Core) Elements | Campus Operations | 121 (43.21%) | 14 (5.00%) | 135 (48.21%) | 52.20 *** |
| | Higher Education Institution | 33 (11.79%) | 12 (4.29%) | 45 (16.08%) | |
| | Outreach | 22 (7.85%) | 6 (2.15%) | 28 (10.00%) | |
| | Research | 22 (7.85%) | 1 (0.36%) | 23 (8.21%) | |
| | Education | 10 (3.57%) | 7 (2.50%) | 17 (6.07%) | |
| | Institutional Framework | 13 (4.65%) | 2 (0.71%) | 15 (5.36%) | |
| | Campus Experiences | 4 (1.43%) | 3 (1.07%) | 7 (2.50%) | |
| | Assessment & Reporting | 0 (0.00%) | 0 (0.00%) | 0 (0.00%) | |
| | NA | 8 (2.86%) | 2 (0.71%) | 10 (3.57%) | |
| | Total | 233 (83.21%) | 47 (16.79%) | 280 (100.00%) | |
| SD Impact Areas | Natural Environment | 123 (43.94%) | 15 (5.36%) | 138 (49.30%) | 45.87 *** |
| | Economy | 53 (18.93%) | 13 (4.64%) | 66 (23.57%) | |
| | Societal Challenges | 24 (8.57%) | 13 (4.64%) | 37 (13.21%) | |
| | Culture | 2 (0.71%) | 2 (0.71%) | 4 (1.42%) | |
| | Policy making | 2 (0.71%) | 1 (0.36%) | 3 (1.07%) | |
| | Demographics | 2 (0.71%) | 0 (0.00%) | 2 (0.71%) | |
| | NA | 27 (9.64%) | 3 (1.08%) | 30 (10.72%) | |
| | Total | 233 (83.21%) | 47 (16.79%) | 280 (100.00%) | |
| Impact Type | Direct | 232 (82.85%) | 40 (14.29%) | 272 (97.14%) | 117.93 *** |
| | Indirect | 1 (0.36%) | 7 (2.50%) | 8 (2.86%) | |
| | Total | 233 (83.21%) | 47 (16.79%) | 280 (100.00%) | |
| Indicator Type | Quantitative | 162 (57.86%) | 13 (4.64%) | 175 (62.50%) | 107.49 *** |
| | Quasi-Quantitative | 39 (13.93%) | 15 (5.36%) | 54 (19.29%) | |
| | Qualitative | 32 (11.42%) | 19 (6.79%) | 51 (18.21%) | |
| | Total | 233 (83.21%) | 47 (16.79%) | 280 (100.00%) | |
| Level of analysis | Organizational | 211 (75.36%) | 32 (11.43%) | 243 (86.79%) | 68.89 *** |
| | Individual | 22 (7.85%) | 15 (5.36%) | 37 (13.21%) | |
| | Total | 233 (83.21%) | 47 (16.79%) | 280 (100.00%) | |

| SD Impact Area | Proxy Indicator | Impact Indicator |
|---|---|---|
| Natural Environment | | |
| Economy | | |
| Societal Challenges | | |
| Policy making | | |
| Culture | | - |
| Demographics | | - |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Findler, F.; Schönherr, N.; Lozano, R.; Stacherl, B. Assessing the Impacts of Higher Education Institutions on Sustainable Development—An Analysis of Tools and Indicators. Sustainability 2019, 11, 59. https://doi.org/10.3390/su11010059
