Strategies for Dealing with Uncertainties in Strategic Environmental Assessment: An Analytical Framework Illustrated with Case Studies from The Netherlands

Abstract

1. Introduction

2. Analytical Framework

2.1. Uncertainties in SEA Discussed in EA Literature

2.2. Uncertainties Discussed in Other Bodies of Environmental Literature

2.3. Toward a Typology of Uncertainties Relevant for SEAs

- Inherent uncertainties (“we cannot know (exactly)”);

- Scientific uncertainty (“our information and understanding could be wrong or incomplete”);

- Social uncertainty (“we do not agree on what information is or will be relevant”);

- Legal uncertainty (“we do not know what information we should (legally) provide”).

2.3.1. Inherent Uncertainty—“We Cannot Know (Exactly)”

- Because the system is variable, the appropriate system boundaries in time and space may be unknown or unclear, or the vulnerability of the system(s), populations, or individuals affected may vary. It may be possible to give “likely” bounds, but the precise impacts will vary in practice, and outliers cannot be ruled out. Examples include variability in local weather conditions, in local activities, or in the way local plants and animals respond to the effects of a plan on the environment. For SEAs, this means that the exact magnitude and full range of the environmental impacts of an activity cannot be known. Our knowledge of the natural system determines how we represent these properties in assessments, and how we design tools to evaluate impact.

- To understand environmental processes, it is important to study the relationships between cause and effect. Cause-and-effect mechanisms can only be established if these relationships are well understood. For very complex systems and issues, such as climate change, such mechanisms are difficult to establish, and the system may exhibit “chaotic” behavior. As a consequence, assessing the impact of a future activity in SEAs can become very difficult, especially in complex systems and for long-term impacts.

- Uncertainties also arise in the assessment of cumulative effects [25]. Noise pollution is a good example of cumulative effects. If an activity takes place on a larger scale, other existing sources of noise have to be taken into account to study the total noise impact. Different sources of noise reinforce each other, a process called accumulation. The noise increase from the assessed activity alone might seem irrelevant, yet, in total, it could mean a significant increase in noise pollution in the area. It can also be difficult to understand how natural phenomena reinforce themselves. Consequently, the full impact of an activity in an existing situation with multiple sources and burdens may not be clear, and it can be difficult to attribute reported problems to a specific activity.
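The noise example in the last bullet can be made concrete with a small calculation. The sketch below assumes incoherent sources whose sound levels combine energetically, $L_{\text{total}} = 10 \log_{10} \sum_i 10^{L_i/10}$. The function name and all levels used (three existing sources and one new activity at a single receptor) are hypothetical and are not taken from any of the case studies.

```python
import math


def combined_level_db(levels_db):
    """Energetic sum of incoherent noise sources, in decibels."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


# Hypothetical sound levels at one receptor (illustrative values only).
existing_sources_db = [55.0, 55.0, 55.0]
new_activity_db = 55.0

baseline_db = combined_level_db(existing_sources_db)
with_activity_db = combined_level_db(existing_sources_db + [new_activity_db])

print(f"baseline: {baseline_db:.1f} dB")
print(f"with new activity: {with_activity_db:.1f} dB")
print(f"increase due to new activity: {with_activity_db - baseline_db:.1f} dB")
```

Under these assumed values, the new activity raises the combined level by only about 1.2 dB, while the total ends up about 6 dB above any single source. This is one way to see why the contribution of a single activity can look negligible even when the accumulated burden is not.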

2.3.2. Scientific Uncertainty—“Our Information and Understanding Could Be Wrong or Incomplete”

- Models are simplified abstractions of the real world, and are, therefore, never fully accurate [26]. Uncertainties can occur in the model structure, variables, and parameters [8]. Similarly, many assumptions are made in the modeling process, e.g., in designing a model or combining models in a model chain, where different researchers might make different choices [48,49]. That models make simplifications and assumptions is, in itself, not necessarily bad; it is a necessary aspect of generalizing and applying knowledge of environmental processes to evaluate new situations (i.e., situations that do not yet exist in exactly the proposed set-up). Rather, models should be judged on model and knowledge quality [28], and on their fitness for the purpose for which they are used in the assessment [50,51]. Generic models are often developed and used in SEAs to make the research methodology consistent and, thus, to overcome uncertainty due to limitations in models. As a result, however, interactions and variables that are unique to the situation might be overlooked.

- Models depend on input data. Uncertainty about data can arise from limited access to information, measurement errors, the type of data, and the presentation of data [25,46,52]. Data may also become invalid in the long term as variability increases, depending on the time horizon that is selected. Limitations in data seriously influence the impact prediction that the model produces.

- Data on baseline conditions is a specific issue. Baseline conditions include the developments, impacts, and environmental dynamics that would occur without the proposed activity. Baseline conditions are a critical starting point in SEAs, as they provide the benchmark against which impacts are predicted. Measurement errors can also occur in baseline data [52].

- Uncertainties can occur in the choices of data, methods, parameters, and statistics, in other words, in the assessment framework. Science looks for measures to represent phenomena. In SEAs, this means that indicators are selected to study environmental effects, and these indicators may not be the best representation of the real environment [26,52].

- When determining change and impact, we need to determine past, present, and future activities for the development at issue [25]. Creating an inventory of all activities requires a large amount of effort and input from different stakeholders. Future activities are especially difficult to include, since they occur over a longer time scale and are influenced by many other factors.
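How uncertainty in baseline data and model parameters carries through to an impact prediction can be illustrated with a simple Monte Carlo propagation. The sketch below is only an illustration under stated assumptions, not the method used in the case studies: the linear toy model, the function names, the distributions, and all numerical values are hypothetical.

```python
import random
import statistics


def predicted_impact(baseline, emission_factor, activity_level):
    """Hypothetical toy model: baseline plus a linear contribution of the activity."""
    return baseline + emission_factor * activity_level


def monte_carlo_bandwidth(n_runs=10_000, seed=42):
    """Sample uncertain inputs and return (5th percentile, median, 95th percentile)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    results = []
    for _ in range(n_runs):
        baseline = rng.gauss(40.0, 2.0)          # assumed baseline measurement error
        emission_factor = rng.uniform(0.8, 1.2)  # assumed parameter uncertainty
        results.append(predicted_impact(baseline, emission_factor, activity_level=10.0))
    results.sort()
    low = results[int(0.05 * n_runs)]
    high = results[int(0.95 * n_runs) - 1]
    return low, statistics.median(results), high


low, median, high = monte_carlo_bandwidth()
print(f"5th percentile: {low:.1f}, median: {median:.1f}, 95th percentile: {high:.1f}")
```

Reporting the resulting range rather than a single value is one way to present a bandwidth of effects. The width of that range depends entirely on the assumed distributions, which are themselves a modeling choice of the kind discussed in the bullets above.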

2.3.3. Social Uncertainty—“We Do Not Agree on What Information Is or Will Be Relevant”

- Stakeholders, as well as decision-makers and researchers in SEAs, have different values, interests, and perceptions of environmental components [8,46]. Examples are conflicts of interest regarding the objects to be studied, and different world views regarding what is important. This influences the framing of the problem and, therefore, the scope of the assessment. It also entails a subjective selection of criteria and indicators. The assessment of system boundaries and impacts is a result of negotiations between stakeholders.

- The political climate influences whether an environmental problem is addressed, and which alternatives are considered and selected [43]. Political groups or lobbyists can have a large influence on the outcome of the decision-making process. They can also demand that specific environmental aspects, such as health or sustainability, be studied. This depends on the societal context and the period. It could also mean that politicians pursue political goals and overrule environmental issues.

- Knowledge frames and capacities of stakeholders are strongly related to inherent and scientific uncertainty. They concern our understanding of the environmental processes at hand, but also our understanding of what information is delivered in SEAs. This depends on the capacities and skills of responsible persons such as policy makers and project managers [39]. Similarly, the frames of the analysts and competent authorities play a role in shaping the scientific analysis in the (S)EAs; issue-framing plays a key role in setting the research questions and boundaries, strongly impacting what is analyzed and how, and consequently, the results of the analysis [22,28].

2.3.4. Legal Uncertainty—“We Do Not Know What Information We Should (Legally) Provide”

- The decision-making context poses uncertainty as to what information the SEA needs to deliver. The task of supplying information is imposed on the initiator of the policy or plan [52]. Often, legal guidelines exist to address the type and amount of information that needs to be delivered in SEAs to make a decision. However, uncertainty increases when the decision-making context changes due to new (environmental) legislation or revisions of existing legislation.

- The institutional context influences rights and responsibilities, and shapes the degree of power and influence [52]. This also relates to how responsibilities and definitions, for instance, the definition of the “precautionary principle”, are embedded in national or European Union (EU) law or international agreements. Such differences can lead to different levels of proof being required before a plan is allowed, to demands for precautionary risk-mitigation actions, and to different views on who should bear the burden of proof [53,54].

- Furthermore, De Marchi [39] describes legal uncertainty as the future contingencies or personal liability for actions or inactions. The people involved in an SEA process, including the initiator, consultants, and decision-makers, are primarily concerned with making their assessments and decisions appear defensible and politically palatable [7]. Providing information about significant impacts in a worst-case scenario, or about uncertainties in the assessment, can have consequences for public image, social trust, legitimacy, and political acceptability. The public can use this kind of information to appeal against a proposal, or at least policy-makers feel that this is the case.

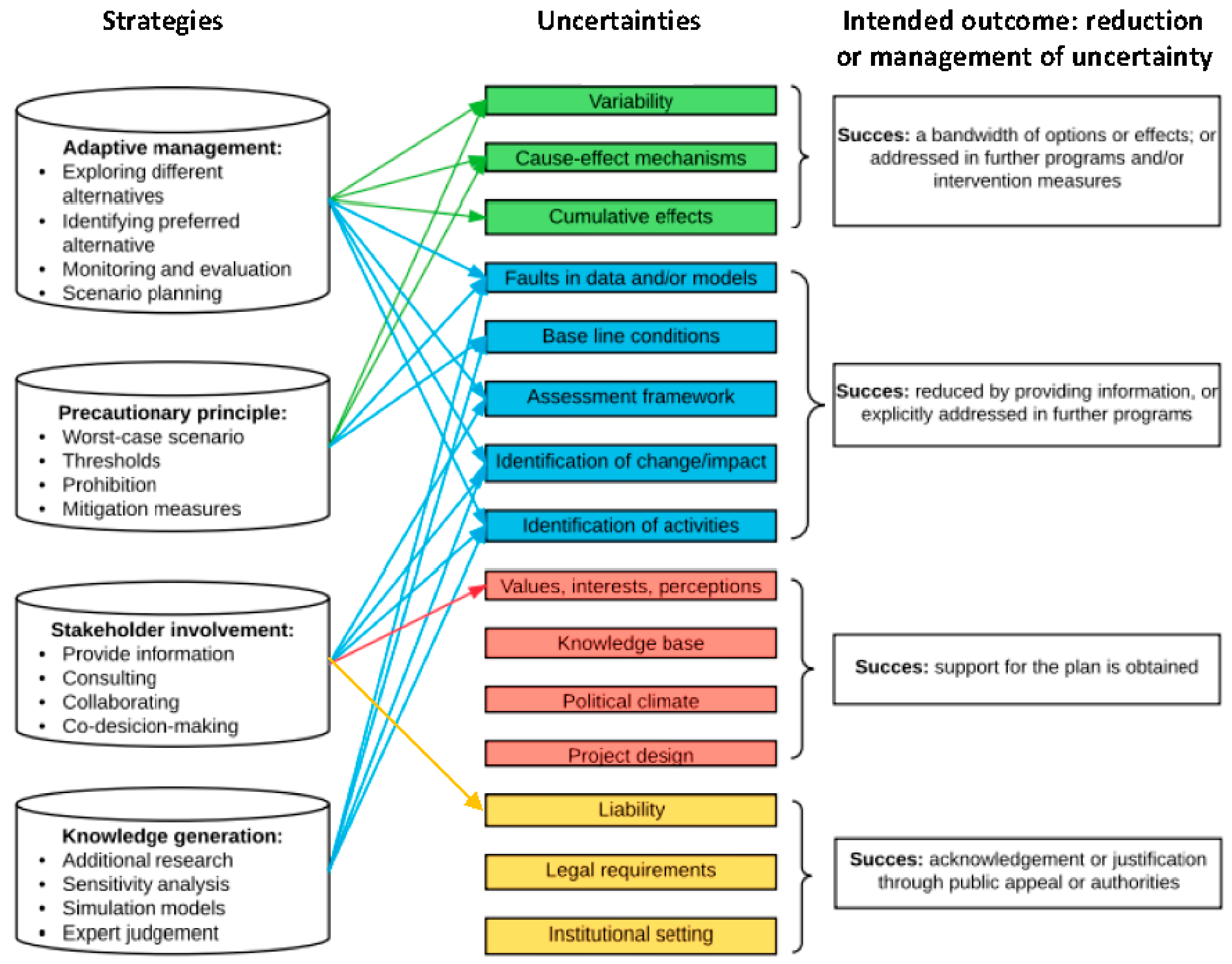

2.4. Strategies for Dealing with Uncertainties in SEAs

2.4.1. Ideally or Typically Dealing with Uncertainties

2.4.2. Knowledge Generation

2.4.3. Stakeholder Involvement

2.4.4. Adaptive Management

2.4.5. Employing the Precautionary Principle

2.4.6. Linking Uncertainties to Strategies

3. Case Studies to Illustrate and to Refine and Bridge the Gap between Theory and Practice

3.1. Case Selection and Data Collection

- The planning initiatives have a relatively high level of abstraction, and therefore, contain uncertainties.

- The Netherlands Commission for Environmental Assessment (NCEA) reviewed the SEA report (which was expected to explicitly reveal uncertainties and suggestions for dealing with them).

- The SEAs were conducted within the past five years, so that respondents could still recall the project.

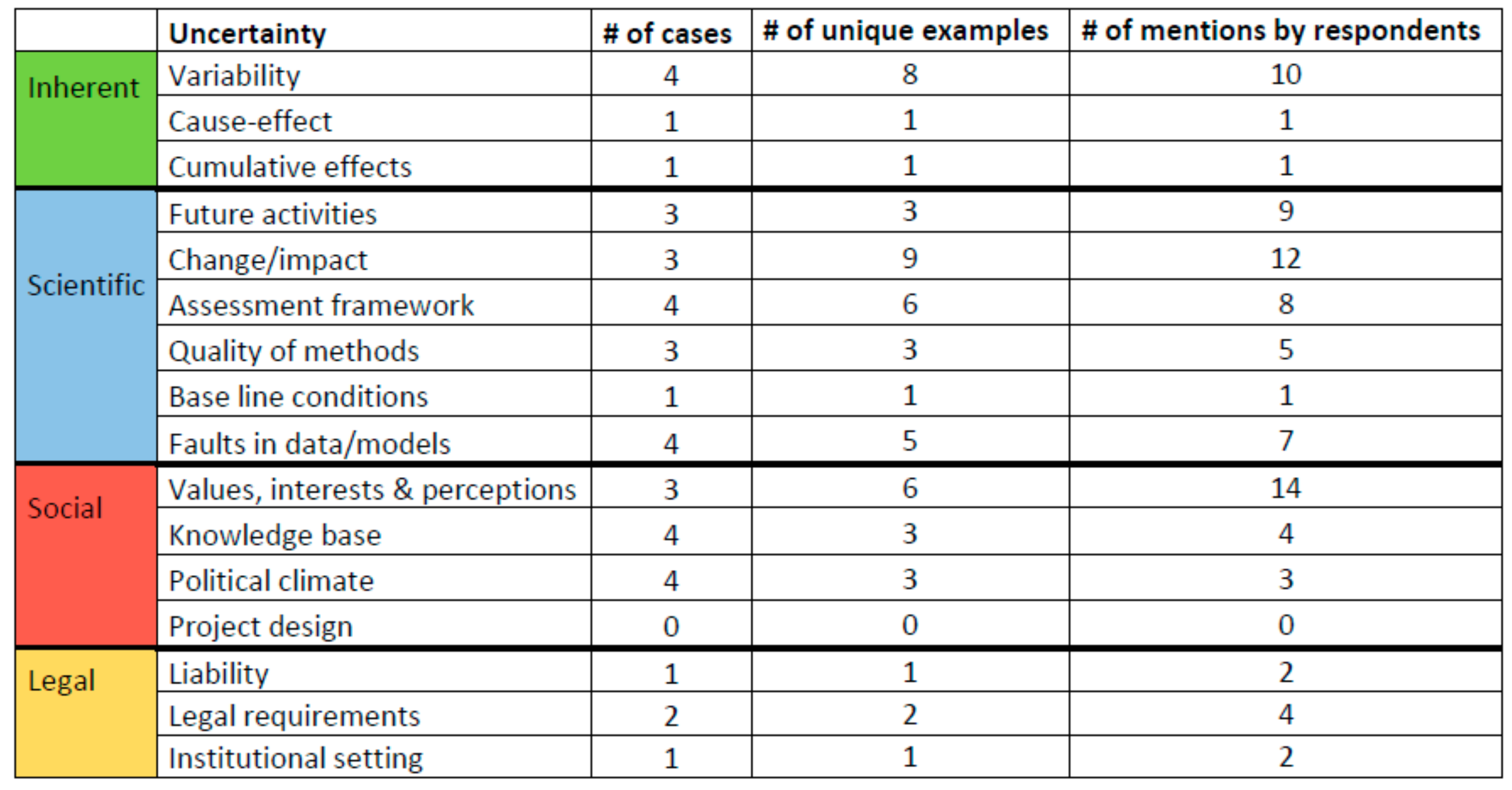

3.2. Uncertainties Perceived in Practice and Strategies Employed for Dealing with Them

3.3. Dealing with Inherent Uncertainty

3.4. Dealing with Scientific Uncertainty

3.5. Dealing with Social Uncertainty

3.6. Dealing with Legal Uncertainty

4. Conclusions and Discussion

Author Contributions

Funding

Conflicts of Interest

Appendix A. Outline of Case Studies and Interviewees

Appendix A.1. Waalweelde West

Appendix A.2. Binckhorst

Appendix A.3. Oosterwold

Appendix A.4. Greenport Venlo

Appendix A.5. Eemsmond Delfzijl

Appendix B. Interviewees

| No. | Affiliation | Organization | Date of Interview |
|---|---|---|---|
| EXPERT INTERVIEWS | | | |
| 1. | Secretary | NCEA | 1 November 2016 |
| 2. | Senior researcher | Utrecht University | 11 November 2016 |
| 3. | Senior advisor SEA | Arcadis NL | 21 November 2016 |
| CASE I: WAALWEELDE WEST | | | |
| 4. | SEA project manager | Arcadis NL | 23 January 2017 |
| 5. | Project manager & SEA coordinator | Province of Gelderland | 26 January 2017 |
| 6. | Secretary | NCEA | 27 January 2017 |
| 7. | SEA specialist | Arcadis NL | 30 January 2017 |
| CASE II: BINCKHORST | | | |
| 8. | Policymaker | Municipality of The Hague | 29 November 2016 |
| 9. | Project manager Omgevingsplan | Municipality of The Hague | 29 November 2016 |
| 10. | Strategic consultant | Antea Group | 8 December 2016 |
| 11. | Secretary | NCEA | 22 December 2016 |
| 12. | Legal advisor | Municipality of The Hague | 23 December 2016 |
| CASE III: OOSTERWOLD | | | |
| 13. | Secretary | NCEA | 8 December 2016 |
| 14. | SEA practitioner & SEA project manager | Sweco | 10 January 2017 |
| 15. | SEA coordinator | Municipality of Almere | 25 January 2017 |
| CASE IV: GREENPORT VENLO | | | |
| 16. | Project manager SEA | Arcadis NL | 19 December 2016 |
| 17. | Project manager SEA | Development Company | 21 December 2016 |
| 18. | Secretary | NCEA | 7 March 2017 |
| CASE V: EEMSMOND DELFZIJL | | | |
| 19. | Project manager SEA | Arcadis NL | 13 January 2017 |
| 20. | Project manager SV Eemsmond Delfzijl | Province of Groningen | 20 January 2017 |
| 21. | SEA coordinator | Province of Groningen | 20 January 2017 |
| 22. | Secretary | NCEA | 7 March 2017 |

References

1. Tetlow, M.; Hanusch, M. Strategic environmental assessment: The state of the art. Impact Assess. Proj. Apprais. 2012, 30, 15–24.

2. Runhaar, H.A.C.; Arts, J. Getting EA Research out of the Comfort Zone: Critical Reflections From The Netherlands. J. Environ. Assess. Policy Manag. 2015, 17, 1550011.

3. Fischer, T.B. Theory and Practice of Strategic Environmental Assessment: Towards a More Systemic Approach; Earthscan: London, UK, 2007.

4. Morgan, R.K. Environmental impact assessment: The state of the art. Impact Assess. Proj. Apprais. 2012, 30, 5–14.

5. Liu, Y.; Chen, J.; He, W.; Tong, Q.; Li, W. Application of an Uncertainty Analysis Approach to Strategic Environmental Assessment for Urban Planning. Environ. Sci. Technol. 2010, 44, 3136–3141.

6. Tenney, A.; Kværner, J.; Gjerstad, K.I. Uncertainty in environmental impact assessment predictions: The need for better communication and more transparency. Impact Assess. Proj. Apprais. 2006, 24, 45–56.

7. Leung, W.; Noble, B.; Gunn, J.; Jaeger, J.A. A review of uncertainty research in impact assessment. Environ. Impact Assess. Rev. 2015, 50, 116–123.

8. Cardenas, I.C.; Halman, J.I. Coping with uncertainty in environmental impact assessments: Open criteria and techniques. Environ. Impact Assess. Rev. 2016, 60, 24–39.

9. Morrison-Saunders, A.; Arts, J. Assessing Impact: Handbook of EIA and SEA Follow-Up; Earthscan: London, UK, 2004.

10. Noble, B.F. Strengthening EIA through adaptive management: A systems perspective. Environ. Impact Assess. Rev. 2000, 20, 97–111.

11. Wardekker, J.A.; van der Sluijs, J.P.; Janssen, P.H.; Kloprogge, P.; Petersen, A.C. Uncertainty communication in environmental assessments: Views from the Dutch science-policy interface. Environ. Sci. Policy 2008, 11, 627–641.

12. Zandvoort, M.; van der Vlist, M.J.; Klijn, F.; van den Brink, A. Navigating amid uncertainty in spatial planning. Plan. Theory 2018, 17, 96–116.

13. Lees, J.; Jaeger, J.A.G.; Gunn, J.A.E.; Noble, B.F. Analysis of uncertainty consideration in environmental assessment: An empirical study of Canadian EA practice. J. Environ. Plan. Manag. 2016, 568, 1–21.

14. Raadgever, G.T.; Dieperink, C.; Driessen, P.P.J.; Smit, A.A.H.; van Rijswick, H.F.M.W. Uncertainty management strategies: Lessons from the regional implementation of the Water Framework Directive in The Netherlands. Environ. Sci. Policy 2011, 14, 64–75.

15. Brugnach, M.; Dewulf, A.; Pahl-Wostl, C.; Taillieu, T. Toward a relational concept of uncertainty: About knowing too little, knowing too differently, and accepting not to know. Ecol. Soc. 2008, 13, 30.

16. Arts, J.; Runhaar, H.A.C.; Fischer, T.B.; Jha-Thakur, U.; van Laerhoven, F.; Driessen, P.P.J.; Onyango, V. The effectiveness of EIA as an instrument for environmental governance: Reflecting on 25 years of EIA practice in The Netherlands and the UK. J. Environ. Assess. Policy Manag. 2012, 14, 1250025.

17. Van Asselt, M.; Petersen, A. Niet Bang Voor Onzekerheid; Advisory Council for Research on Spatial Planning, Nature and the Environment (RMNO): The Hague, The Netherlands, 2003.

18. Slob, M. Zeker Weten. In Gesprek Met Politici, Bestuurders en Wetenschappers over Omgaan Met Onzekerheid; Rathenau Institute: The Hague, The Netherlands, 2006.

19. Mathijssen, J.; Petersen, A.; Besseling, P.; Rahman, A.; Don, H. Dealing with Uncertainty in Policymaking; CPB, PBL, RAND Europe: The Hague, The Netherlands, 2008.

20. Brenninkmeijer, A.; de Graaf, B.; Roeser, S.; Passchier, W. Omgaan Met Omgevingsrisico’s en Onzekerheden: Hoe Doen We Dat Samen? Ministry of Infrastructure and the Environment: The Hague, The Netherlands, 2013.

21. Petersen, A.C.; Cath, A.; Hage, M.; Kunseler, E.; van der Sluijs, J.P. Post-Normal Science in Practice at The Netherlands Environmental Assessment Agency. Sci. Technol. Hum. Values 2011, 36, 362–388.

22. Petersen, A.C.; Janssen, P.H.; van der Sluijs, J.P.; Risbey, J.S.; Ravetz, J.R.; Wardekker, J.A.; Martinson Hughes, H. Guidance for Uncertainty Assessment and Communication; PBL Netherlands Environmental Assessment Agency: The Hague, The Netherlands, 2013.

23. Wardekker, J.A.; Kloprogge, P.; Petersen, A.C.; Janssen, P.H.M.; van der Sluijs, J.P. Guide for Uncertainty Communication; PBL Netherlands Environmental Assessment Agency: The Hague, The Netherlands, 2013.

24. Kunseler, E.M.; Tuinstra, W.; Vasileiadou, E.; Petersen, A.C. The reflective futures practitioner: Balancing salience, credibility and legitimacy in generating foresight knowledge with stakeholders. Futures 2015, 66, 1–12.

25. Phillips, P.D. Evaluating Approaches to Dealing with Uncertainties in Environmental Assessment. Master’s Thesis, University of East Anglia, Norwich, UK, 2005.

26. Pavlyuk, O. An Analysis of Legislation and Guidance for Uncertainty Disclosure and Consideration in Canadian Environmental Impact Assessment. Ph.D. Thesis, University of Saskatchewan, Saskatoon, SK, Canada, 2016.

27. Larsen, S.V.; Kørnøv, L.; Driscoll, P. Avoiding climate change uncertainties in Strategic Environmental Assessment. Environ. Impact Assess. Rev. 2013, 43, 144–150.

28. Van der Sluijs, J.P.; Petersen, A.C.; Janssen, P.H.; Risbey, J.S.; Ravetz, J.R. Exploring the quality of evidence for complex and contested policy decisions. Environ. Res. Lett. 2008, 3, 024008.

29. Wibeck, V. Communicating uncertainty: Models of communication and the role of science in assessing progress towards environmental objectives. J. Environ. Policy Plan. 2009, 11, 87–102.

30. Thissen, W.; Kwakkel, J.; Mens, M.; van der Sluijs, J.; Stemberger, S.; Wardekker, A.; Wildschut, D. Dealing with uncertainties in fresh water supply: Experiences in The Netherlands. Water Resour. Manag. 2017, 31, 703–725.

31. Woodruff, S.C. Planning for an unknowable future: Uncertainty in climate change adaptation planning. Clim. Chang. 2016, 139, 445–459.

32. Walker, W.E.; Harremoës, P.; Rotmans, J.; van der Sluijs, J.P.; van Asselt, M.B.A.; Janssen, P.; Krayer von Krauss, M.P. A Conceptual Basis for Uncertainty Management. Integr. Assess. 2003, 4, 5–17.

33. Sigel, K.; Klauer, B.; Pahl-Wostl, C. Conceptualising uncertainty in environmental decision-making: The example of the EU water framework directive. Ecol. Econ. 2010, 69, 502–510.

34. Klauer, B.; Brown, J.D. Conceptualising imperfect knowledge in public decision making: Ignorance, uncertainty, error and ‘risk situations’. Eng. Manag. 2004, 27, 124–128.

35. Refsgaard, J.C.; van der Sluijs, J.P.; Højberg, A.L.; Vanrolleghem, P.A. Uncertainty in the environmental modelling process – A framework and guidance. Environ. Model. Softw. 2007, 22, 1543–1556.

36. Ascough, J.C.; Maier, H.R.; Ravalico, J.K.; Strudley, M.W. Future research challenges for incorporation of uncertainty in environmental and ecological decision-making. Ecol. Model. 2008, 219, 383–399.

37. Wardekker, J.A. Climate Change Impact Assessment and Adaptation under Uncertainty. Ph.D. Thesis, Utrecht University, Utrecht, The Netherlands, 2011.

38. Kwakkel, J.H.; Walker, W.E.; Marchau, V.A. Classifying and communicating uncertainties in model-based policy analysis. Int. J. Technol. Policy Manag. 2010, 10, 299–315.

39. De Marchi, B. Uncertainty in environmental emergencies: A diagnostic tool. J. Conting. Crisis Manag. 1995, 3, 103–112.

40. Van Asselt, M.B.A. Perspectives on Uncertainty and Risk: The PRIMA Approach to Decision Support; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2000.

41. Meijer, I.S.; Hekkert, M.P.; Faber, J.; Smits, R.E. Perceived uncertainties regarding socio-technological transformations: Towards a framework. Int. J. For. Innov. Policy 2006, 2, 214–240.

42. Pahl-Wostl, C.; Sendzimir, J.; Jeffrey, P.; Aerts, J.; Berkamp, G.; Cross, K. Managing change toward adaptive water management through social learning. Ecol. Soc. 2007, 12, 30.

43. Maier, H.R.; Ascough II, J.C.; Wattenbach, M.; Renschler, C.S.; Labiosa, W.B.; Ravalico, J.K. Environmental modelling, software and decision support. Develop. Integr. Environ. Assess. 2008, 3, 69–85.

44. Broekmeyer, M.E.; Opdam, P.F.; Kistenkas, F.H. Het Bepalen van Significante Effecten: Omgaan Met Onzekerheden; Alterra: Wageningen, The Netherlands, 2008.

45. Knol, A.B.; Petersen, A.C.; Van der Sluijs, J.P.; Lebret, E. Dealing with uncertainties in environmental burden of disease assessment. Environ. Health 2009, 8, 21.

46. Maxim, L.; van der Sluijs, J.P. Quality in environmental science for policy: Assessing uncertainty as a component of policy analysis. Environ. Sci. Policy 2011, 14, 482–492.

47. Skinner, D.J.; Rocks, S.A.; Pollard, S.J.; Drew, G.H. Identifying uncertainty in environmental risk assessments: The development of a novel typology and its implications for risk characterization. Hum. Ecol. Risk Assess. 2014, 20, 607–640.

48. Kloprogge, P.; Van der Sluijs, J.P.; Petersen, A.C. A method for the analysis of assumptions in model-based environmental assessments. Environ. Model. Softw. 2011, 26, 280–301.

49. De Jong, A.; Wardekker, J.A.; Van der Sluijs, J.P. Assumptions in quantitative analyses of health risks of overhead power lines. Environ. Sci. Policy 2012, 16, 114–121.

50. Jakeman, A.J.; Letcher, R.A.; Norton, J.P. Ten iterative steps in development and evaluation of environmental models. Environ. Model. Softw. 2006, 21, 602–614.

51. Guillera-Arroita, G.; Lahoz-Monfort, J.J.; Elith, J.; Gordon, A.; Kujala, H.; Lentini, P.E.; McCarthy, M.A.; Tingley, R.; Wintle, B.A. Is my species distribution model fit for purpose? Matching data and models to applications. Glob. Ecol. Biogeogr. 2015, 24, 276–292.

52. Wood, G. Thresholds and criteria for evaluating and communicating impact significance in environmental statements: “See no evil, hear no evil, speak no evil”? Environ. Impact Assess. Rev. 2008, 28, 22–38.

53. Weiss, C. Scientific uncertainty and science-based precaution. Int. Environ. Agreem. 2003, 3, 137–166.

54. Weiss, C. Can there be science-based precaution? Environ. Res. Lett. 2006, 1, 014003.

55. Peterson, G.D.; Cumming, G.S.; Carpenter, S.R. Scenario planning: A tool for conservation in an uncertain world. Conserv. Biol. 2003, 17, 358–366.

56. Allen, C.R.; Garmestani, A.S. Adaptive Management of Social-Ecological Systems; Springer: Dordrecht, The Netherlands, 2015.

57. Cooke, R.M. Experts in Uncertainty: Opinion and Subjective Probability in Science; Oxford University Press: New York, NY, USA, 1991.

58. Harremoës, P.; Gee, D.; MacGarvin, M.; Stirling, A.; Keys, J.; Wynne, B.; Guedes Vaz, S. Late Lessons from Early Warnings: The Precautionary Principle 1896–2000; European Environment Agency: Copenhagen, Denmark, 2001.

59. Hage, M.; Leroy, P.; Petersen, A.C. Stakeholder participation in environmental knowledge production. Futures 2010, 42, 254–264.

60. Glucker, A.N.; Driessen, P.P.; Kolhoff, A.; Runhaar, H.A. Public participation in environmental impact assessment: Why, who, and how? Environ. Impact Assess. Rev. 2013, 43, 104–111.

61. Isendahl, J.; Pahl-Wostl, C.; Dewulf, A. Using framing parameters to improve handling of uncertainties in water management practice. Environ. Policy Gov. 2010, 20, 107–122.

62. Holling, C.S. Adaptive Environmental Assessment and Management; The Pitman Press: Bath, UK, 1978.

63. Canter, L.; Atkinson, S.F. Adaptive management with integrated decision making: An emerging tool for cumulative effects management. Impact Assess. Proj. Apprais. 2010, 28, 287–297.

64. Morrison-Saunders, A.; Arts, J. Learning from experience: Emerging trends in environmental impact assessment follow-up. Impact Assess. Proj. Apprais. 2005, 23, 170–174.

65. Williams, B.K.; Szaro, R.C.; Shapiro, C.D. Adaptive Management: The U.S. Department of the Interior Technical Guide, 2nd ed.; U.S. Department of the Interior: Washington, DC, USA, 2009.

66. United Nations. The Rio Declaration on Environment and Development; United Nations: Rio de Janeiro, Brazil, 1992.

67. United Nations Educational, Scientific and Cultural Organization. The precautionary principle. In World Commission on the Ethics of Scientific Knowledge and Technology; UNESCO: Paris, France, 2005.

68. Bond, A.; Morrison-Saunders, A.; Gunn, J.A.; Pope, J.; Retief, F. Managing uncertainty, ambiguity and ignorance in Impact Assessment by embedding evolutionary resilience, participatory modelling and adaptive management. Environ. Manag. 2015, 151, 97–104.

69. Khosravi, F.; Jha-Thakur, U. Managing uncertainties through scenario analysis in strategic environmental assessment. J. Environ. Plan. Manag. 2018.

| Reference | Typologies or Interpretations of Uncertainty |
|---|---|
| [39] | Scientific, legal, moral, societal, institutional, proprietary, situational |
| [40] | Uncertainty due to variability: natural randomness, value diversity, behavioral variability, societal randomness, technological surprise; Uncertainty due to lack of knowledge: unreliability, structural uncertainty |
| [32] | Location of uncertainty: context (natural, technical, economic, social, political, representation), model (structure, technical aspects), inputs (driving forces, system data), parameters, model outcomes; Level of uncertainty: statistical, scenario, recognized ignorance, total ignorance; Nature of uncertainty: epistemic or variability |
| [41] | Nature: knowledge uncertainty, variability uncertainty; Level: from low to high; Source: technology, resources, competitors, suppliers, consumers, politics |
| [42] | Lack of knowledge due to limited data; understanding of the system; unpredictability of factors in the system (randomness); diversity of rules and mental models that determine stakeholder perceptions |
| [15] | Unpredictability, incomplete knowledge or multiple knowledge frames about the natural system, technical system or social system |
| [43] | Data uncertainty, model uncertainty, human uncertainty |
| [36] | Knowledge uncertainty: process understanding, model/data uncertainty; Variability: natural, human, institutional, technological; Linguistic uncertainty: vagueness, ambiguity, underspecificity |
| [44] | Data or methods/knowledge gaps, inherent to complexity/ecological systems, societal interpretation of effects and values |
| [45] | Location: model structure, parameters, input data; Nature: epistemic, ontic (process variability, normative uncertainty); Range: statistical (range + chance), scenario (range + “what if”); Recognized ignorance; Methodological unreliability; Value diversity among analysts |
| [38] | Location: system boundary, conceptual model, computer model (structure, parameters inside model, input parameters to model), input data, model implementation, processed output data; Level: shallow, medium, deep, recognized ignorance; Nature: ambiguity, epistemology, ontology |
| [46] | Location in a model: content, process, context of knowledge; Sources: lack of knowledge, variability, expert subjectivity, communication |
| [47] | Epistemic uncertainty: data, language, system; Aleatory uncertainty: variability, extrapolation; Combined Epistemic-Aleatory: model, decision |

| Type | What Is Uncertain? | What Is a Successful Outcome? |
|---|---|---|
| Inherent: unpredictability in the natural system | The full range of options and impacts | A bandwidth of options or effects is identified; it is explicitly addressed in future programs and/or intervention measures are explicitly available |
| Scientific: limited or false information | Outcomes of models are inaccurate or not representative | Additional information is provided, or the issue is explicitly addressed in a follow-up program |
| Social: doubts or ambiguity about information | Differences in values, interests, and perceptions | Support for the plan is obtained |
| Legal: decision-making context | The justification of decisions | Acknowledgement or justification through public appeal or authorities |

| Case, Publishing Year, and Consultancy Firm | Project Characteristics |
|---|---|
| #1 Structure Vision Waalweelde West (2015) | Initiator: Province of Gelderland; Goal: Flood protection program including regional economic, urban, and ecologic development |
| #2 Zoning plan Binckhorst Den Haag (2015–ongoing) | Initiator: Municipality of The Hague; Goal: Transformation of the Binckhorst into a sustainable mixed urban living area (5000 houses) |
| #3 Structure Vision Almere Oosterwold (2013) & Zoning plan Oosterwold (2016) | Initiator: Municipality of Almere; Goal: Sustainable development of Oosterwold into a low density living/working area (15,000 houses) |
| #4 Structure Vision Greenport Venlo (2012) | Initiator: Development Company Greenport Venlo (partnership of public organizations and private shareholders); Goal: Sustainable development of agribusiness (14,000 jobs) |
| #5 Structure Vision Eemsmond Delfzijl (2017) | Initiator: Province of Groningen; Goal: Industrial development of Eemsmond region (wind farms, heliport, industrial areas) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bodde, M.; Van der Wel, K.; Driessen, P.; Wardekker, A.; Runhaar, H. Strategies for Dealing with Uncertainties in Strategic Environmental Assessment: An Analytical Framework Illustrated with Case Studies from The Netherlands. Sustainability 2018, 10, 2463. https://doi.org/10.3390/su10072463
