A Study on the Instructor Role in Dealing with Mixed Contents: How It Affects Learner Satisfaction and Retention in e-Learning

Abstract

1. Introduction

Case Study

2. Theoretical Background

2.1. Blended-Learning and Mixed Contents

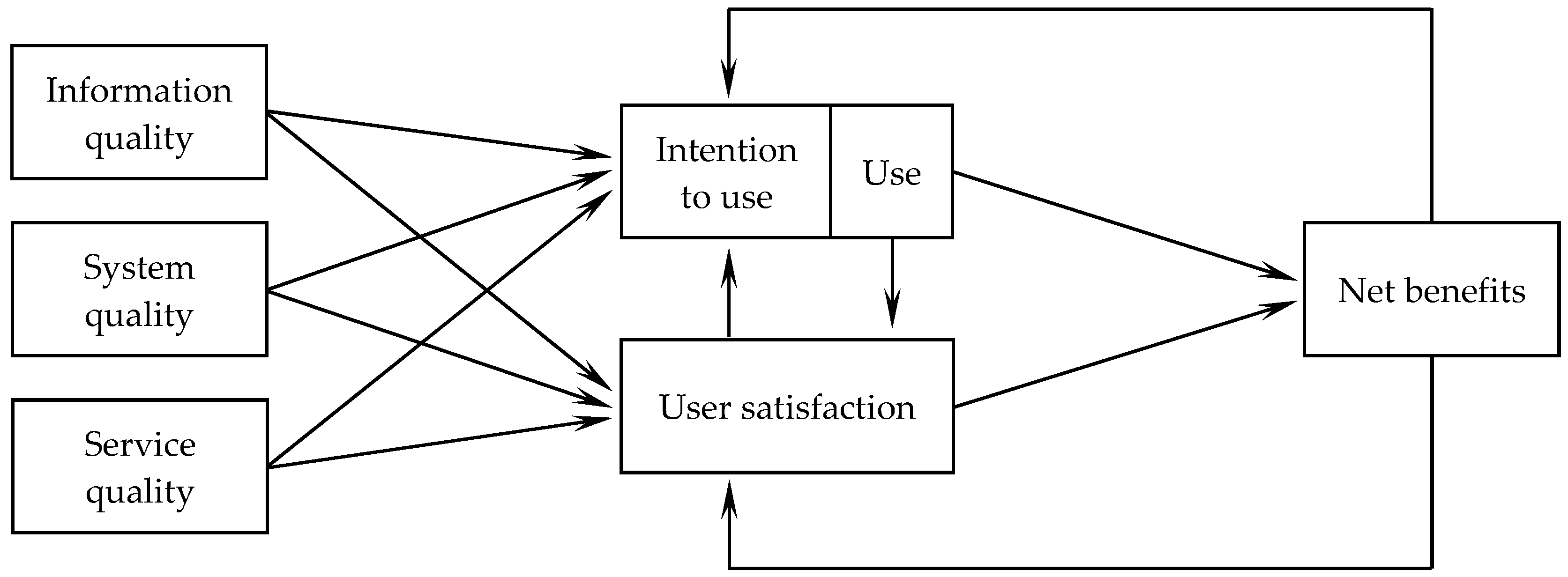

2.2. IS Success Model

3. Involvement

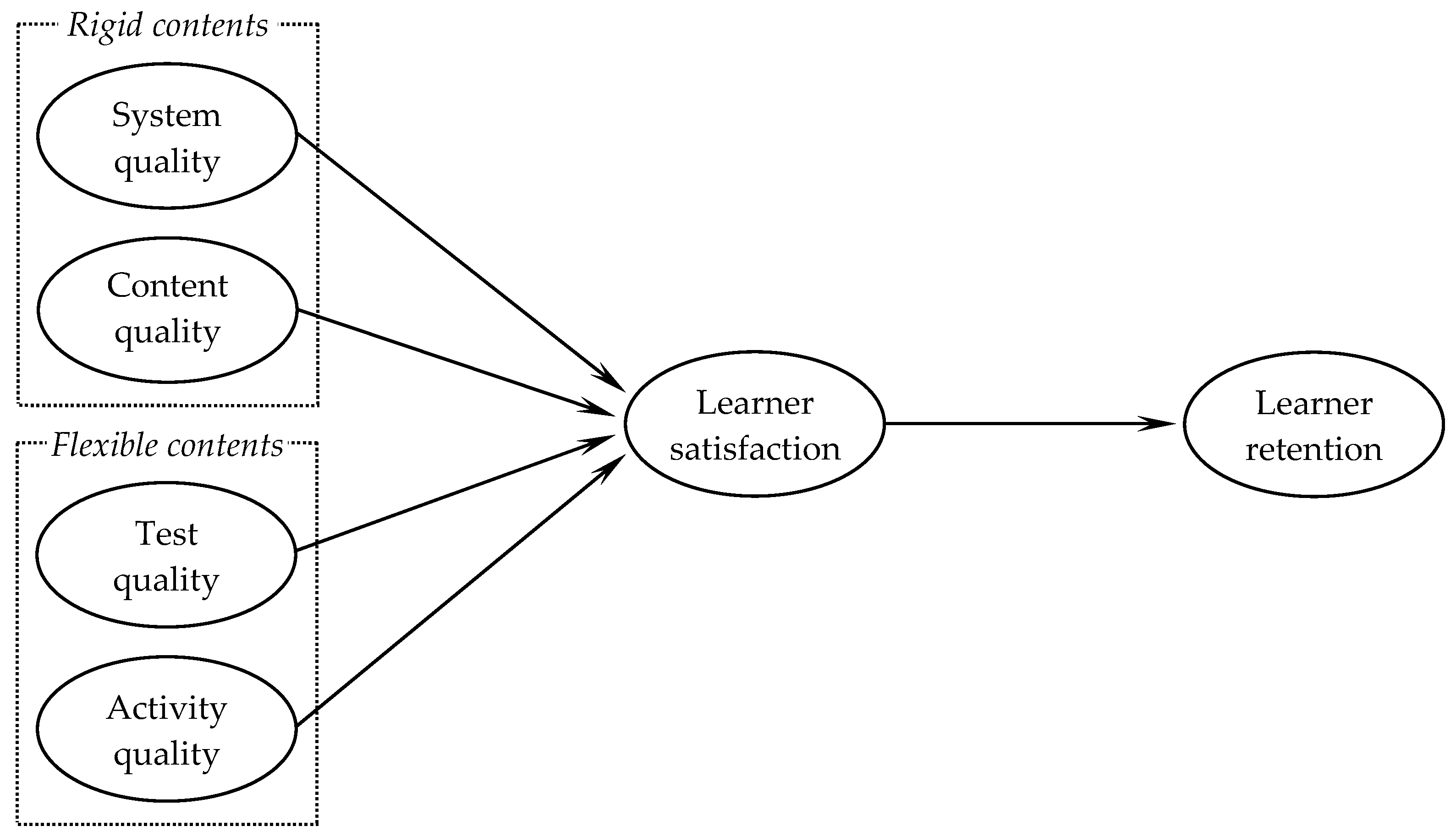

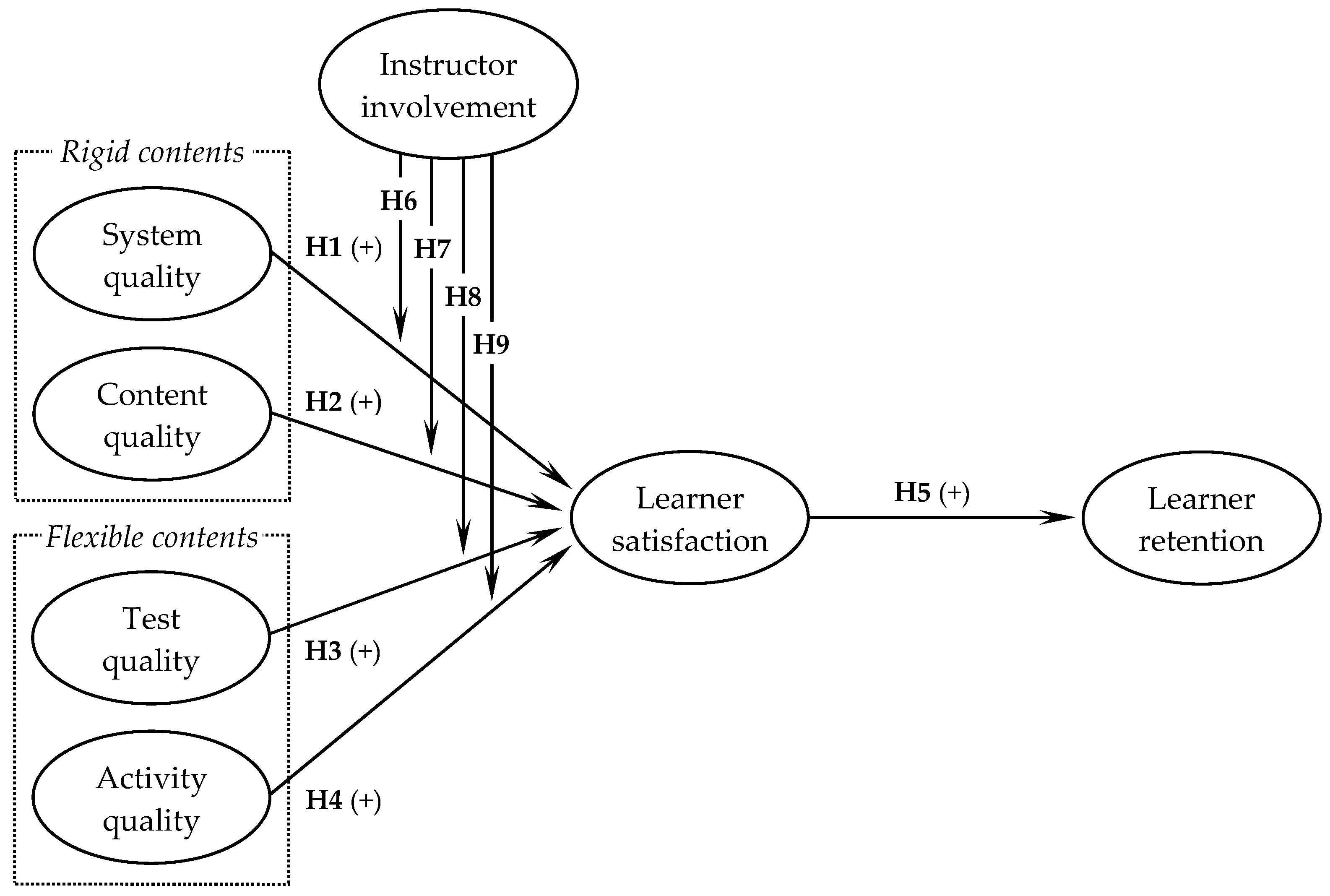

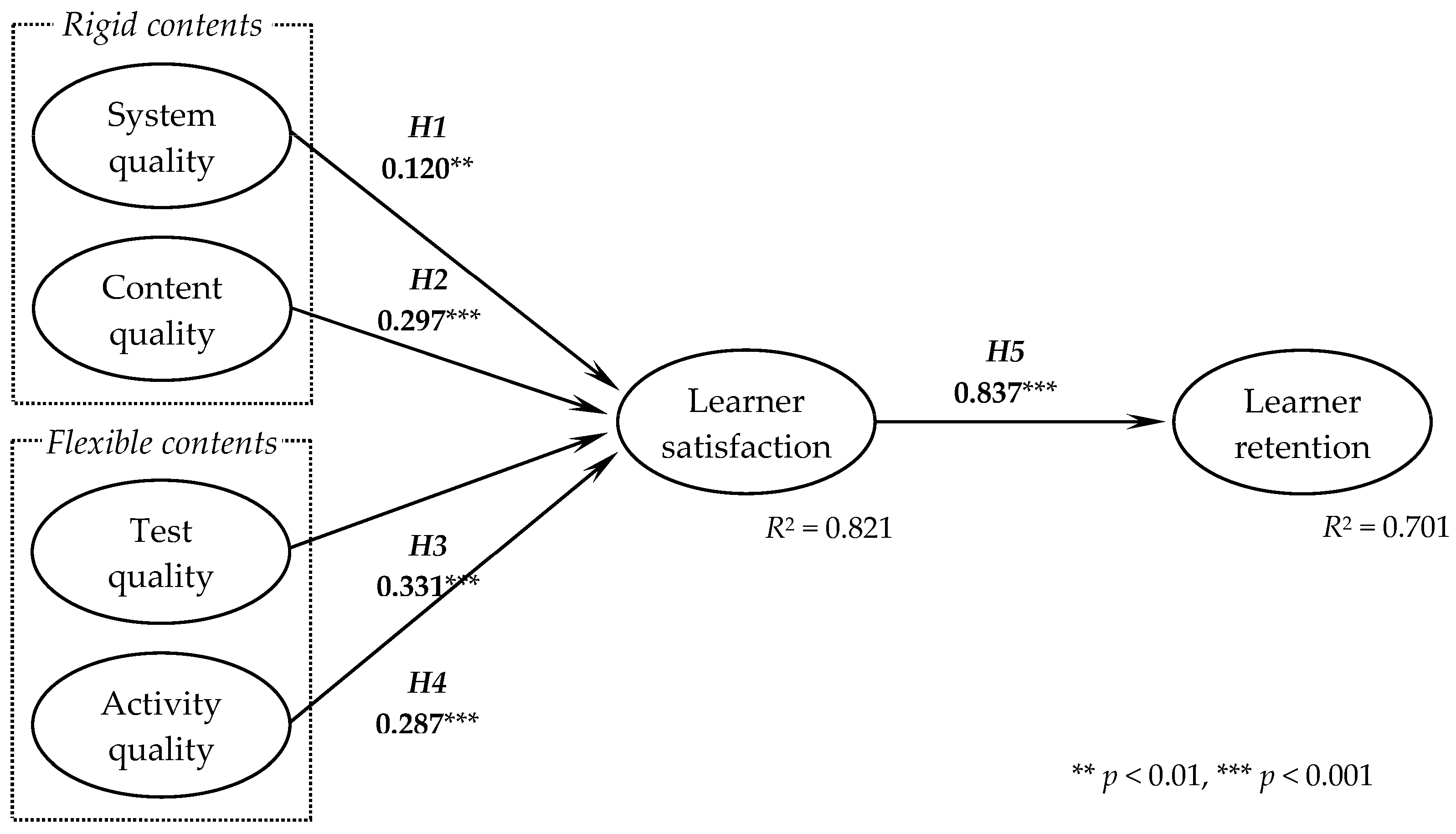

3.1. Research Model and Hypotheses

3.2. Learning Environments and Learner Satisfaction

3.2.1. System Quality and Learner Satisfaction

3.2.2. Content Quality and Learner Satisfaction

3.2.3. Test Quality and Learner Satisfaction

3.2.4. Collaborative Activity Quality and Learner Satisfaction

3.3. Learner Satisfaction

3.4. Learner Retention

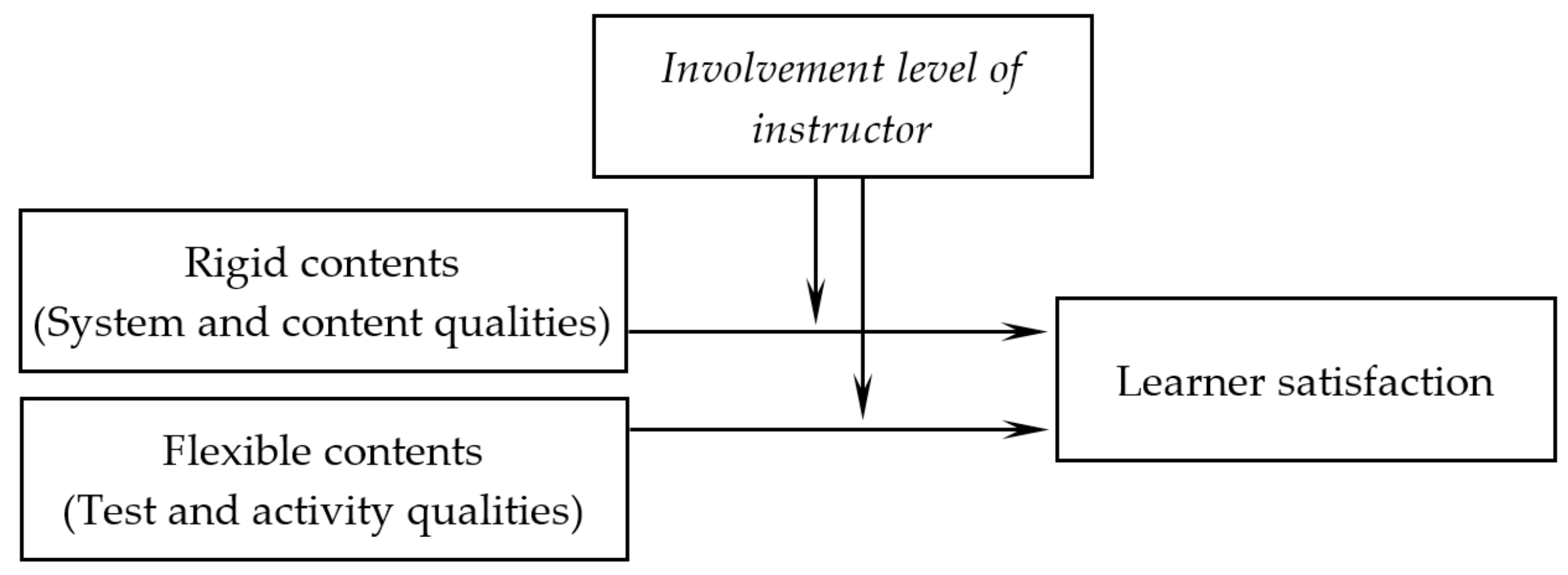

3.5. Instructor Involvement

4. Methods

4.1. Data Collection

4.2. Measures

5. Analysis and Results

5.1. Measurement Model

5.2. PLS Analysis and Moderating Effect of Involvement

6. Discussion and Implications

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Characteristic (n = 204) | Frequency | Percentage (%) |
|---|---|---|
| Gender | | |
| Male | 82 | 40.2 |
| Female | 122 | 59.8 |
| Age (years) | | |
| 19 | 6 | 2.9 |
| 20 | 78 | 38.2 |
| 21 | 50 | 24.5 |
| 22 | 26 | 12.7 |
| ≥23 | 44 | 21.6 |
| Major | | |
| Hospitality & tourism | 179 | 87.7 |
| Business | 10 | 4.9 |
| Foreign language & culture | 6 | 2.9 |
| Natural sciences & engineering | 5 | 2.5 |
| Undeclared | 4 | 2.0 |

| Construct and indicator | Loading | t |
|---|---|---|
| Instructor involvement (CR = 0.932; α = 0.918; AVE = 0.605) | | |
| Has the instructor appropriately managed the students? | 0.840 | 33.458 |
| Has the instructor fulfilled his or her role as a counselor by understanding the needs of the students and responding in a professional manner? | 0.791 | 28.230 |
| Did the instructor promptly fix any problems related to the online system? | 0.814 | 25.426 |
| Were the text messages for lecture notification helpful for taking the lectures? | 0.755 | 21.567 |
| Were the text messages for quiz notification helpful in preparing for the quizzes? | 0.769 | 21.337 |
| Did the teaching assistant promptly attend to problems in the KLAS system and fix them? | 0.696 | 14.203 |
| Did the teaching assistant promptly respond to students’ questions? | 0.743 | 18.179 |
| Did the bulletin board for the course provide information punctually? | 0.836 | 27.930 |
| This course publishes class attendance and mid-term evaluations. Were these resources useful for taking the course? | 0.746 | 13.396 |
| System quality (CR = 0.925; α = 0.877; AVE = 0.805) | | |
| Were you able to take lectures without difficulties via the KLAS system? | 0.920 | 67.013 |
| Were you able to easily access course materials via the KLAS system while taking lectures? | 0.889 | 47.707 |
| Was the KLAS system stable while taking lectures? | 0.882 | 36.590 |
| Did you have any trouble connecting to the KLAS system to evaluate courses? (R) | - | - |
| Have you experienced any inconvenience during course evaluation due to instability of the KLAS system? (R) | - | - |
| Content quality (CR = 0.903; α = 0.864; AVE = 0.651) | | |
| Were the six major courses offered this term helpful for understanding the respective individual majors? | 0.835 | 41.595 |
| Was the special course on the six majors helpful for understanding the respective majors? | 0.805 | 26.573 |
| Was the video quality good enough to follow the lecture? | 0.744 | 15.215 |
| Were the course notes suitable for the lectures? | 0.829 | 27.153 |
| Was the course loading suitable for the lectures? | 0.815 | 26.761 |
| Test quality (CR = 0.907; α = 0.865; AVE = 0.711) | | |
| Were the quizzes helpful in understanding the lectures? | 0.888 | 48.980 |
| Were the quizzes for the major courses manageable in difficulty? | 0.861 | 32.695 |
| Was there enough time to finish the quizzes? | 0.739 | 12.935 |
| Were the problems in the quiz suitable for evaluating your understanding of the subject? | 0.876 | 30.715 |
| Activity quality (CR = 0.912; α = 0.855; AVE = 0.776) | | |
| Was the group activity helpful in choosing a major? | 0.884 | 41.460 |
| Were your teammates for the group activity cooperative? | - | - |
| Do you think group activities can overcome the individualistic nature of the online course? | 0.891 | 51.686 |
| Do you think producing user-created content (UCC) as the assessment was helpful in deciding your major? | 0.868 | 35.230 |
| Learner satisfaction (CR = 0.955; α = 0.944; AVE = 0.704) | | |
| Did you gain a better understanding of hospitality & tourism (H&T) by taking this course? | 0.867 | 37.409 |
| Are you satisfied with the course design? | 0.864 | 43.224 |
| Are you satisfied with the KLAS system? | 0.854 | 27.583 |
| Are you satisfied with the evaluation system for this course? | 0.897 | 52.477 |
| Are you satisfied with the group activity in this course? | 0.777 | 21.084 |
| Do you think you have successfully completed this self-directed course? | 0.856 | 33.372 |
| Do you think the offline activities are sufficiently complementary to the online part of the course? | 0.768 | 16.938 |
| Do you think the involvement of the instructor had a positive effect overall on the course? | 0.817 | 22.186 |
| Was this course helpful for deciding your major? | 0.847 | 27.618 |
| Learner retention (CR = 0.921; α = 0.871; AVE = 0.795) | | |
| Would you recommend this course to someone else? | 0.895 | 42.790 |
| Would you take a similar course, if one exists? | 0.905 | 45.607 |
| Would you take other courses offered by the same instructor? | 0.875 | 31.916 |

CR = composite reliability; α = Cronbach’s alpha; AVE = average variance extracted; KLAS = the online learning system used to deliver the course; UCC = user-created content; H&T = hospitality & tourism; R = reverse-coded item. Items marked “-” were not retained in the final measurement model.
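The CR and AVE values above can be recomputed from the standardized loadings of the retained items. The sketch below assumes standardized indicators, so each item's error variance is taken as 1 − λ²; the function names are illustrative, not taken from any particular PLS package. Cronbach's α cannot be recovered this way, because it needs the item-level covariances rather than the loadings.

```python
import math


def average_variance_extracted(loadings):
    """AVE: the mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    assuming standardized indicators so that each error variance is 1 - loading^2."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Retained loadings copied from the measurement table (items marked "-" excluded).
instructor_involvement = [0.840, 0.791, 0.814, 0.755, 0.769, 0.696, 0.743, 0.836, 0.746]
system_quality = [0.920, 0.889, 0.882]

for name, lam in [("Instructor involvement", instructor_involvement),
                  ("System quality", system_quality)]:
    ave = average_variance_extracted(lam)
    cr = composite_reliability(lam)
    # sqrt(AVE) is the quantity compared against inter-construct correlations.
    print(f"{name}: AVE = {ave:.3f}, CR = {cr:.3f}, sqrt(AVE) = {math.sqrt(ave):.3f}")
```

For these two constructs the recomputed values (AVE 0.605 and 0.805, CR 0.932 and 0.925) agree with the table to three decimals, which also confirms that the items without loadings were excluded from the calculation.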

| Construct | Mean | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Instructor involvement | 4.349 | 0.606 | 0.778 | | | | | | |
| 2. System quality | 3.920 | 0.866 | 0.471 | 0.897 | | | | | |
| 3. Content quality | 4.056 | 0.703 | 0.728 | 0.670 | 0.807 | | | | |
| 4. Test quality | 4.130 | 0.649 | 0.755 | 0.591 | 0.799 | 0.843 | | | |
| 5. Activity quality | 3.660 | 0.953 | 0.436 | 0.564 | 0.673 | 0.612 | 0.881 | | |
| 6. Learner satisfaction | 3.924 | 0.767 | 0.696 | 0.675 | 0.833 | 0.812 | 0.772 | 0.839 | |
| 7. Learner retention | 4.041 | 0.842 | 0.664 | 0.550 | 0.712 | 0.744 | 0.599 | 0.831 | 0.892 |

SD = standard deviation. Diagonal entries are the square roots of each construct’s AVE; off-diagonal entries are inter-construct correlations.
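The diagonal of the correlation table holds the square root of each construct's AVE (for example, √0.605 ≈ 0.778 for instructor involvement), which is the layout used for the Fornell-Larcker discriminant-validity check: each construct's √AVE should exceed its correlations with every other construct. The sketch below applies that check to the lower triangle as printed; the function name and the use of `>=` as the failure condition are illustrative choices, not the authors' procedure.

```python
constructs = ["Instructor involvement", "System quality", "Content quality",
              "Test quality", "Activity quality", "Learner satisfaction",
              "Learner retention"]

# Lower triangle copied from the table; row i holds correlations with constructs
# 0..i-1, and its last entry is sqrt(AVE) for construct i.
lower = [
    [0.778],
    [0.471, 0.897],
    [0.728, 0.670, 0.807],
    [0.755, 0.591, 0.799, 0.843],
    [0.436, 0.564, 0.673, 0.612, 0.881],
    [0.696, 0.675, 0.833, 0.812, 0.772, 0.839],
    [0.664, 0.550, 0.712, 0.744, 0.599, 0.831, 0.892],
]


def fornell_larcker_violations(names, tri):
    """Return (construct_a, construct_b, r) for every pair whose correlation r
    is not strictly below the sqrt(AVE) of both constructs."""
    violations = []
    for i in range(len(tri)):
        for j in range(i):
            r = tri[i][j]
            if abs(r) >= min(tri[i][i], tri[j][j]):
                violations.append((names[j], names[i], r))
    return violations


for a, b, r in fornell_larcker_violations(constructs, lower):
    print(f"{a} vs {b}: r = {r:.3f}")
```

Run on the values above, the check flags a single pair, content quality and learner satisfaction (r = 0.833 against √AVE = 0.807 for content quality); every other correlation lies below both corresponding diagonal entries.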

| Dependent variable: learner satisfaction | Model 1 β | Model 1 t | Model 2 β | Model 2 t | Model 3 β | Model 3 t |
|---|---|---|---|---|---|---|
| Independent variables | | | | | | |
| System quality | 0.115 | 2.774 ** | 0.122 | 2.998 ** | 0.108 | 2.688 ** |
| Content quality | 0.284 | 4.979 *** | 0.219 | 3.648 *** | 0.185 | 3.165 ** |
| Test quality | 0.322 | 6.351 *** | 0.248 | 4.450 *** | 0.286 | 5.260 *** |
| Activity quality | 0.319 | 7.639 *** | 0.342 | 8.198 *** | 0.355 | 8.471 *** |
| Moderating variable | | | | | | |
| Instructor involvement | | | 0.143 | 2.963 ** | 0.158 | 3.154 ** |
| Interactions | | | | | | |
| System quality × Instructor involvement | | | | | 0.051 | 1.226 |
| Content quality × Instructor involvement | | | | | −0.092 | −1.540 |
| Test quality × Instructor involvement | | | | | 0.168 | 3.184 ** |
| Activity quality × Instructor involvement | | | | | −0.126 | −2.829 ** |
| R² | 0.822 | | 0.830 | | 0.846 | |
| Adjusted R² | 0.818 | | 0.825 | | 0.838 | |
| F | 229.666 *** | | 192.669 *** | | 118.005 *** | |
| ΔR² | - | | 0.008 | | 0.016 | |

** p < 0.01; *** p < 0.001.
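The fit statistics in the hierarchical models follow from R², the number of predictors k and the sample size. The sketch below assumes n = 204 (the total of the respondent-profile table, 82 + 122) and k = 4, 5 and 9 for Models 1 to 3, and applies the standard formulas for adjusted R², the overall F test and the F test for an R² increment. It also probes the two significant interactions with simple slopes, under the further assumption (not stated in the table) that the β values are standardized and each interaction term is the product of standardized scores, so the slope of a predictor at moderator value z is β_main + β_interaction × z. All function names are hypothetical.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2, n, k):
    """Overall F statistic with (k, n - k - 1) degrees of freedom."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


def f_change(r2_reduced, r2_full, n, k_full, k_added):
    """F statistic for the R^2 gained by adding k_added predictors to a nested model,
    with (k_added, n - k_full - 1) degrees of freedom."""
    return ((r2_full - r2_reduced) / k_added) / ((1 - r2_full) / (n - k_full - 1))


def simple_slope(b_main, b_interaction, moderator_z):
    """Slope of a predictor at a standardized moderator value (assumes a product
    term of standardized variables)."""
    return b_main + b_interaction * moderator_z


N = 204  # assumption: sample size implied by the respondent-profile table
models = {"Model 1": (0.822, 4), "Model 2": (0.830, 5), "Model 3": (0.846, 9)}
for name, (r2, k) in models.items():
    print(f"{name}: adjusted R2 = {adjusted_r2(r2, N, k):.3f}, "
          f"F = {overall_f(r2, N, k):.1f}")

# Four interaction terms are added between Model 2 and Model 3.
print(f"F change (Model 2 -> Model 3): {f_change(0.830, 0.846, N, 9, 4):.2f}")

# Model 3 coefficients: (main effect, interaction with instructor involvement).
for label, b, b_int in [("Test quality", 0.286, 0.168),
                        ("Activity quality", 0.355, -0.126)]:
    low = simple_slope(b, b_int, -1.0)   # one SD below mean involvement
    high = simple_slope(b, b_int, 1.0)   # one SD above mean involvement
    print(f"{label}: slope at -1 SD = {low:.3f}, at +1 SD = {high:.3f}")
```

Because the inputs are R² values rounded to three decimals, the results match the table only to within rounding: adjusted R² comes out as 0.818, 0.826 and 0.839, and F as about 229.7, 193.3 and 118.4. The interaction block yields an F change of about 5.04 on (4, 194) degrees of freedom. Under the stated assumptions, the slope of test quality rises from 0.118 to 0.454 between low and high instructor involvement, while that of activity quality falls from 0.481 to 0.229.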

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, S.J.; Lee, H.; Kim, T.T. A Study on the Instructor Role in Dealing with Mixed Contents: How It Affects Learner Satisfaction and Retention in e-Learning. Sustainability 2018, 10, 850. https://doi.org/10.3390/su10030850
