Ethical Dilemmas in Using AI for Academic Writing and an Example Framework for Peer Review in Nephrology Academia: A Narrative Review

Abstract

1. Introduction

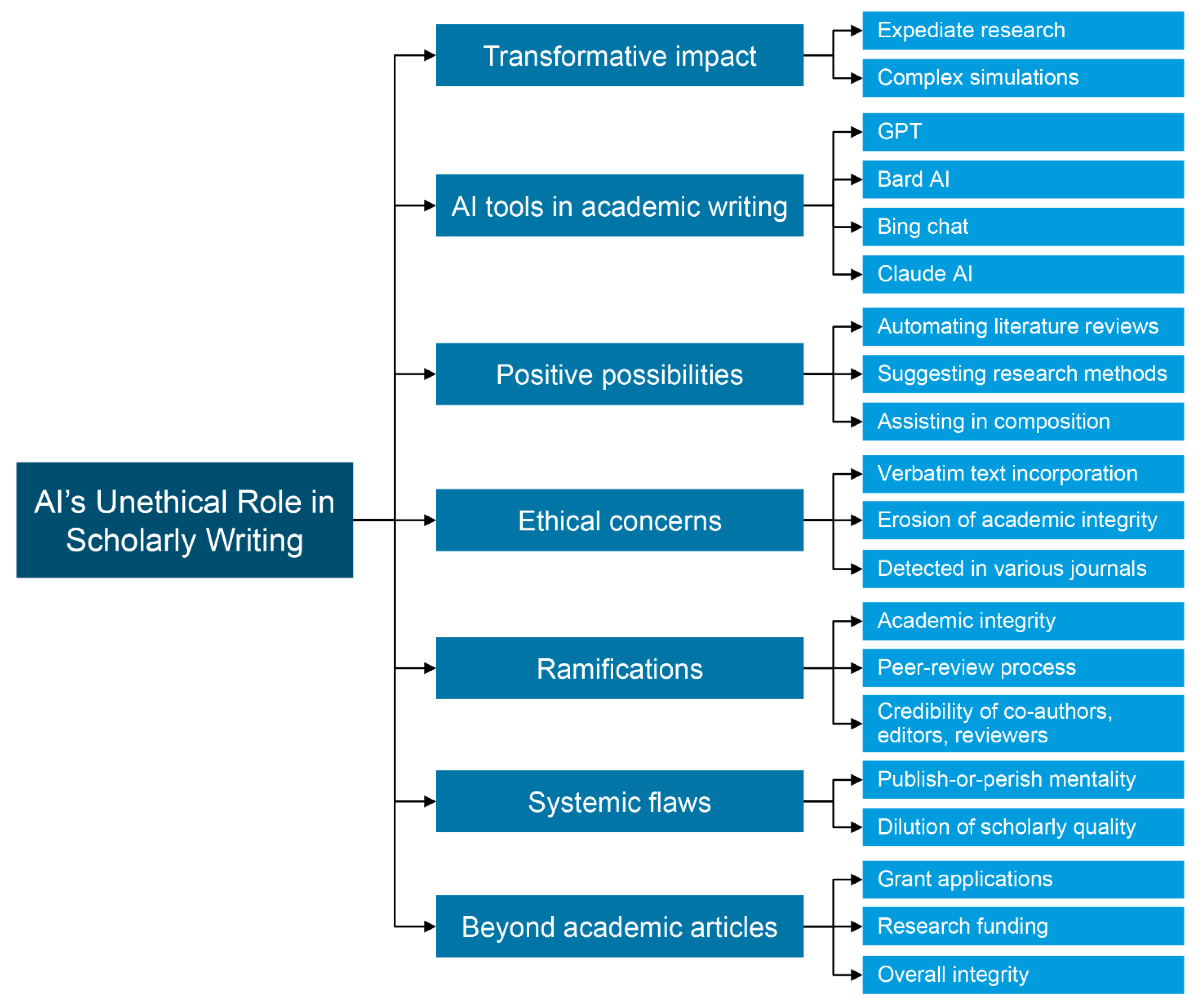

2. AI’s Unethical Role in Scholarly Writing

2.1. Examples of Academic Papers That Have Used AI-Generated Content, Focusing on ChatGPT-Based Chatbots

2.2. Systemic Failures: The Root of the Problem

2.3. The Infiltration of AI in Academic Theses

2.4. The Impact on Grant Applications

2.5. The Inevitability of AI in Academia

2.6. Proposed Solutions and Policy Recommendations

- Advanced AI-driven plagiarism detection: AI-generated content often evades conventional plagiarism checkers. Implementing more advanced, AI-driven detection technologies could significantly alter this landscape. Such technologies should be designed to discern the subtle characteristics and structures unique to AI-generated text, facilitating its identification during the review phases. A recent study compared Japanese stylometric features of texts generated using ChatGPT (GPT-3.5 and GPT-4) with those of texts written by humans and evaluated how well a random forest classifier separated the two classes [75]. A random forest classifier using only the rate of function words achieved 98.1% accuracy, and one using all stylometric features reached 100% on every performance index, including accuracy, recall, precision, and F1 score [75].

- Revisiting and strengthening the peer-review process: The integrity of academic work hinges on a robust peer-review system, which has shown vulnerabilities in detecting AI-generated content. A viable solution could be the mandatory inclusion of an “AI scrutiny” phase within the peer-review workflow. This would equip reviewers with specialized tools for detecting AI-generated content. Furthermore, academic journals could deploy AI algorithms to preliminarily screen submissions for AI-generated material before they reach human evaluators.

- Training and resources for academics on ethical AI usage: While academics excel in their specialized domains, they may lack awareness of the ethical dimensions of AI application in research. Educational institutions and scholarly organizations should develop and offer training modules that focus on the ethical and responsible deployment of AI in academic endeavors. These could range from using AI in data analytics and literature surveys to crafting academic papers. We must recognize and embrace the potential of chatbots in education while simultaneously emphasizing the necessity for ethical guidelines governing their use. Chatbots offer many benefits, such as providing personalized instruction, facilitating 24/7 access to support, and fostering engagement and motivation. However, it is crucial to ensure that they are used in a manner that aligns with educational values and promotes responsible learning [76]. In an effort to uphold academic integrity, the New York City Department of Education banned access to ChatGPT on its networks and devices [77]. Similarly, the International Conference on Machine Learning (ICML) prohibited authors from submitting scientific writing generated by AI tools [78]. Furthermore, many scientists disapproved of ChatGPT being listed as an author on research papers [58].

- Acknowledgment for AI as contributor: The use of ChatGPT as an author of academic papers is a controversial issue that raises important questions about accountability and contributorship [79]. On the one hand, ChatGPT can be a valuable tool for assisting with the writing process. It can help to generate ideas, organize thoughts, and produce clear and concise prose. However, ChatGPT is not a human author. It cannot understand the nuances of human language or the complexities of academic discourse. As a result, ChatGPT-generated text can often be superficial and lacking in originality. In addition, the use of ChatGPT raises concerns about accountability. Who is responsible for the content of a paper that is written using ChatGPT? Is it the human user who prompts the chatbot, or is it the chatbot itself? If a paper is found to be flawed or misleading, who can be held accountable? The issue of contributorship is also relevant. If a paper is written using ChatGPT, who should be listed as the author? Should the human user be listed as the sole author, or should ChatGPT be given some form of credit? Therefore, promoting a culture of transparency and safeguarding the integrity of academic work necessitates the acknowledgment of AI’s contribution in research and composition endeavors. It is crucial for authors to openly disclose the degree of AI assistance in a specially designated acknowledgment section within the publication. This acknowledgment should specify the particular roles played by AI, whether in data analysis, literature reviews, or drafting segments of the manuscript, alongside any human oversight exerted to ensure ethical deployment of AI. For example: “Acknowledgment: We hereby recognize the aid of [Specific AI Tool/Technology] in carrying out data analytics, conducting literature surveys, and drafting initial versions of the manuscript. This AI technology enabled a more streamlined research process, under the careful supervision of [Names of Individuals] to comply with ethical guidelines. The perspectives generated by AI significantly contributed to the articulation of arguments in this publication, affirming its valuable input to our work”.

- Inevitability of Technological Integration: While recognizing ethical concerns, the argument asserts that the adoption of advanced technologies such as AI in academia is inevitable. It recommends shifting the focus from resistance to the establishment of robust ethical frameworks and guidelines to ensure responsible AI usage [76]. From this perspective, taking a proactive stance on AI integration, firmly rooted in ethical principles, can facilitate the utilization of AI’s advantages in academia while mitigating the associated risks of unethical AI use. By fostering a culture of transparency, accountability, and continuous learning, there is a belief that the academic community can navigate the complexities of AI. This includes crafting policies that clearly define the ethical use of AI tools, creating mechanisms for disclosing AI assistance in academic work, and promoting collaborative efforts to explore and comprehend the implications of AI in academic writing and research.
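To make the stylometric detection idea in the first recommendation more concrete, the following is a minimal sketch of two of its building blocks: computing function-word rates as features, and computing the reported performance indices (accuracy, precision, recall, F1) from predicted labels. It is not the method of the cited study [75], which analyzed Japanese text and trained a random forest classifier; the short English word list, the function names, and the label convention (1 = AI-generated) are all assumptions made for illustration, and no classifier is trained here.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny English function-word list (assumption);
# the cited study [75] used Japanese stylometric features instead.
FUNCTION_WORDS = ("the", "of", "and", "to", "in", "a", "is", "that", "it", "as")


def function_word_rates(text: str) -> dict[str, float]:
    """Return each function word's share of all tokens in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tokens)
    total = len(tokens) or 1  # avoid division by zero on empty text
    return {word: counts[word] / total for word in FUNCTION_WORDS}


def binary_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1, treating label 1 as AI-generated."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Feature vector for one short text.
    print(function_word_rates("The cat sat in the hat."))
    # Metrics for four documents, one AI-generated text missed by the detector.
    print(binary_metrics([1, 1, 0, 0], [1, 0, 0, 0]))
```

In a fuller pipeline, the per-document rate vectors would be passed to a classifier such as a random forest, and the metrics would be computed on held-out documents rather than on the training data.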

3. Ideal Proposal for AI Integration in Nephrology Academic Writing and Peer Review

3.1. Transparent AI Assistance Acknowledgment

3.2. Enhanced Peer Review Process with AI Scrutiny

3.3. AI Ethics Training for Nephrologists

3.4. AI as a Collaborative Contributor

3.5. Continuous Monitoring and Research

3.6. Ethics Checklist

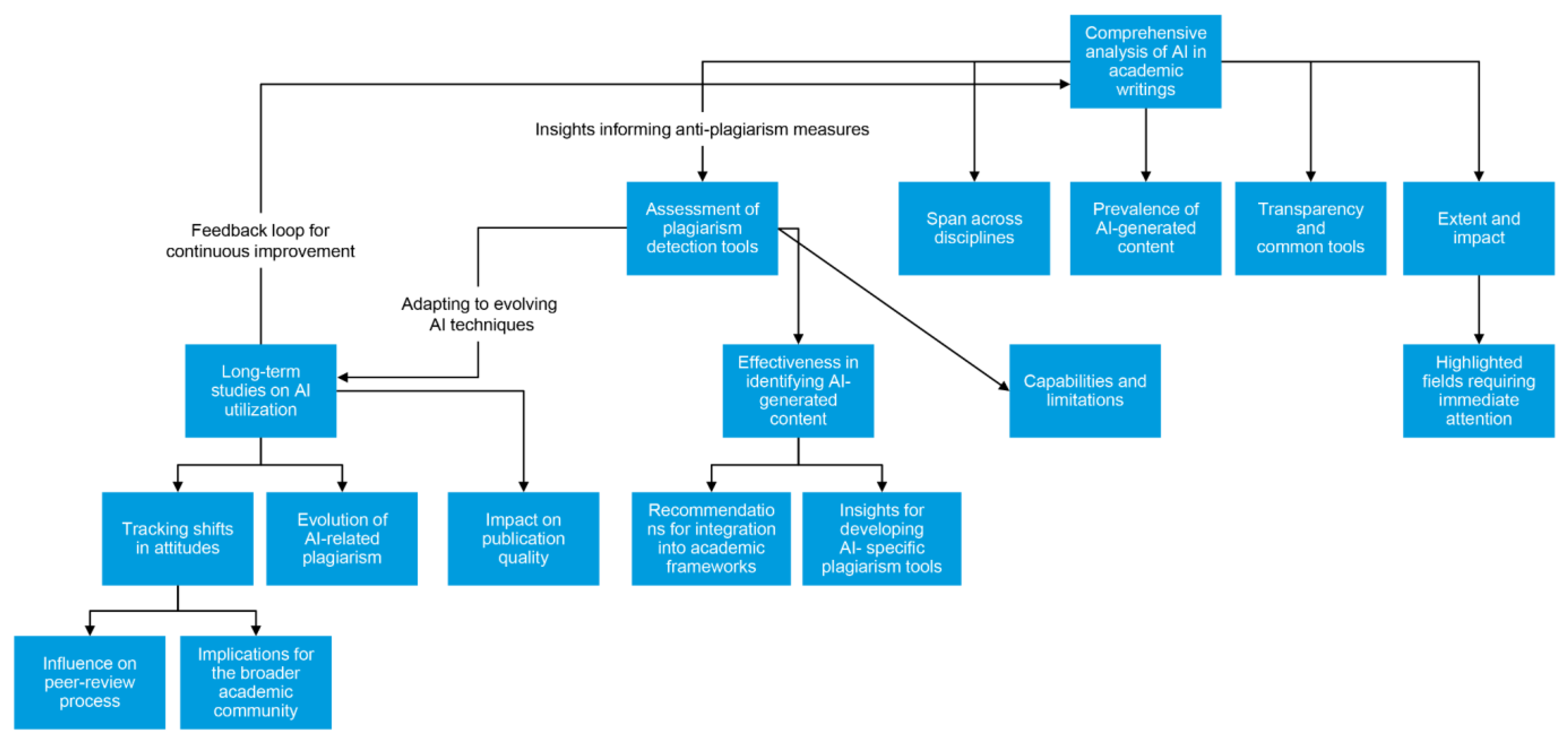

4. Future Studies and Research Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Moshawrab, M.; Adda, M.; Bouzouane, A.; Ibrahim, H.; Raad, A. Reviewing Federated Machine Learning and Its Use in Diseases Prediction. Sensors 2023, 23, 2112.

- Rojas, J.C.; Teran, M.; Umscheid, C.A. Clinician Trust in Artificial Intelligence: What is Known and How Trust Can Be Facilitated. Crit. Care Clin. 2023, 39, 769–782.

- Boukherouaa, E.B.; Shabsigh, M.G.; AlAjmi, K.; Deodoro, J.; Farias, A.; Iskender, E.S.; Mirestean, M.A.T.; Ravikumar, R. Powering the Digital Economy: Opportunities and Risks of Artificial Intelligence in Finance; International Monetary Fund (IMF eLIBRARY): Washington, DC, USA, 2021; Volume 2021, pp. 5–20.

- Gülen, K. A Match Made in Transportation Heaven: AI and Self-Driving Cars. Available online: https://dataconomy.com/2022/12/28/artificial-intelligence-and-self-driving/ (accessed on 29 December 2022).

- Frąckiewicz, M. The Future of AI in Entertainment. Available online: https://ts2.space/en/the-future-of-ai-in-entertainment/ (accessed on 24 June 2023).

- Introducing ChatGPT. Available online: https://openai.com/blog/chatgpt (accessed on 18 April 2023).

- Bard. Available online: https://bard.google.com/chat (accessed on 21 March 2023).

- Bing Chat with GPT-4. Available online: https://www.microsoft.com/en-us/bing?form=MA13FV (accessed on 14 October 2023).

- Meet Claude. Available online: https://claude.ai/chats (accessed on 7 February 2023).

- OpenAI. GPT-4V(ision) System Card. Available online: https://cdn.openai.com/papers/GPTV_System_Card.pdf (accessed on 25 September 2023).

- Majnaric, L.T.; Babic, F.; O’Sullivan, S.; Holzinger, A. AI and Big Data in Healthcare: Towards a More Comprehensive Research Framework for Multimorbidity. J. Clin. Med. 2021, 10, 766.

- Joshi, G.; Jain, A.; Araveeti, S.R.; Adhikari, S.; Garg, H.; Bhandari, M. FDA Approved Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices: An Updated Landscape. Available online: https://www.medrxiv.org/content/10.1101/2022.12.07.22283216v3 (accessed on 12 December 2022).

- Oh, N.; Choi, G.S.; Lee, W.Y. ChatGPT goes to the operating room: Evaluating GPT-4 performance and its potential in surgical education and training in the era of large language models. Ann. Surg. Treat. Res. 2023, 104, 269–273.

- Eysenbach, G. The Role of ChatGPT, Generative Language Models, and Artificial Intelligence in Medical Education: A Conversation With ChatGPT and a Call for Papers. JMIR Med. Educ. 2023, 9, e46885.

- Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887.

- Reese, J.T.; Danis, D.; Caulfied, J.H.; Casiraghi, E.; Valentini, G.; Mungall, C.J.; Robinson, P.N. On the limitations of large language models in clinical diagnosis. medRxiv 2023.

- Eriksen, A.V.; Möller, S.; Ryg, J. Use of GPT-4 to Diagnose Complex Clinical Cases. NEJM AI 2023, 1–3.

- Kanjee, Z.; Crowe, B.; Rodman, A. Accuracy of a Generative Artificial Intelligence Model in a Complex Diagnostic Challenge. JAMA 2023, 330, 78–80.

- Zuniga Salazar, G.; Zuniga, D.; Vindel, C.L.; Yoong, A.M.; Hincapie, S.; Zuniga, A.B.; Zuniga, P.; Salazar, E.; Zuniga, B. Efficacy of AI Chats to Determine an Emergency: A Comparison Between OpenAI’s ChatGPT, Google Bard, and Microsoft Bing AI Chat. Cureus 2023, 15, e45473.

- Ayers, J.W.; Poliak, A.; Dredze, M.; Leas, E.C.; Zhu, Z.; Kelley, J.B.; Faix, D.J.; Goodman, A.M.; Longhurst, C.A.; Hogarth, M.; et al. Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum. JAMA Intern. Med. 2023, 183, 589–596.

- Lee, P.; Bubeck, S.; Petro, J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

- Mello, M.M.; Guha, N. ChatGPT and Physicians’ Malpractice Risk. JAMA Health Forum 2023, 4, e231938.

- Suppadungsuk, S.; Thongprayoon, C.; Miao, J.; Krisanapan, P.; Qureshi, F.; Kashani, K.; Cheungpasitporn, W. Exploring the Potential of Chatbots in Critical Care Nephrology. Medicines 2023, 10, 58.

- Garcia Valencia, O.A.; Thongprayoon, C.; Jadlowiec, C.C.; Mao, S.A.; Miao, J.; Cheungpasitporn, W. Enhancing Kidney Transplant Care through the Integration of Chatbot. Healthcare 2023, 11, 2518.

- Qarajeh, A.; Tangpanithandee, S.; Thongprayoon, C.; Suppadungsuk, S.; Krisanapan, P.; Aiumtrakul, N.; Garcia Valencia, O.A.; Miao, J.; Qureshi, F.; Cheungpasitporn, W. AI-Powered Renal Diet Support: Performance of ChatGPT, Bard AI, and Bing Chat. Clin. Pract. 2023, 13, 1160–1172.

- Suppadungsuk, S.; Thongprayoon, C.; Krisanapan, P.; Tangpanithandee, S.; Garcia Valencia, O.; Miao, J.; Mekraksakit, P.; Kashani, K.; Cheungpasitporn, W. Examining the Validity of ChatGPT in Identifying Relevant Nephrology Literature: Findings and Implications. J. Clin. Med. 2023, 12, 5550.

- Miao, J.; Thongprayoon, C.; Garcia Valencia, O.A.; Krisanapan, P.; Sheikh, M.S.; Davis, P.W.; Mekraksakit, P.; Suarez, M.G.; Craici, I.M.; Cheungpasitporn, W. Performance of ChatGPT on Nephrology Test Questions. Clin. J. Am. Soc. Nephrol. 2023.

- Temsah, M.H.; Altamimi, I.; Jamal, A.; Alhasan, K.; Al-Eyadhy, A. ChatGPT Surpasses 1000 Publications on PubMed: Envisioning the Road Ahead. Cureus 2023, 15, e44769.

- VanderLinden, S. Exploring the Ethics of AI. Available online: https://alchemycrew.com/exploring-the-ethics-of-ai/ (accessed on 22 July 2021).

- WHO Calls for Safe and Ethical AI for Health. Available online: https://www.who.int/news/item/16-05-2023-who-calls-for-safe-and-ethical-ai-for-health (accessed on 16 May 2023).

- Dergaa, I.; Chamari, K.; Zmijewski, P.; Ben Saad, H. From human writing to artificial intelligence generated text: Examining the prospects and potential threats of ChatGPT in academic writing. Biol. Sport 2023, 40, 615–622.

- Hosseini, M.; Horbach, S. Fighting reviewer fatigue or amplifying bias? Considerations and recommendations for use of ChatGPT and other large language models in scholarly peer review. Res. Integr. Peer Rev. 2023, 8, 4.

- Leung, T.I.; de Azevedo Cardoso, T.; Mavragani, A.; Eysenbach, G. Best Practices for Using AI Tools as an Author, Peer Reviewer, or Editor. J. Med. Internet Res. 2023, 25, e51584.

- Hosseini, M.; Rasmussen, L.M.; Resnik, D.B. Using AI to write scholarly publications. Account. Res. 2023, 1–9.

- Wang, X.; Liu, X.Q. Potential and limitations of ChatGPT and generative artificial intelligence in medical safety education. World J. Clin. Cases 2023, 11, 7935–7939.

- Lemley, K.V. Does ChatGPT Help Us Understand the Medical Literature? J. Am. Soc. Nephrol. 2023.

- Jin, Q.; Leaman, R.; Lu, Z. Retrieve, Summarize, and Verify: How Will ChatGPT Affect Information Seeking from the Medical Literature? J. Am. Soc. Nephrol. 2023, 34, 1302–1304.

- Eppler, M.; Ganjavi, C.; Ramacciotti, L.S.; Piazza, P.; Rodler, S.; Checcucci, E.; Gomez Rivas, J.; Kowalewski, K.F.; Belenchon, I.R.; Puliatti, S.; et al. Awareness and Use of ChatGPT and Large Language Models: A Prospective Cross-sectional Global Survey in Urology. Eur. Urol. 2023, in press.

- Kurian, N.; Cherian, J.M.; Sudharson, N.A.; Varghese, K.G.; Wadhwa, S. AI is now everywhere. Br. Dent. J. 2023, 234, 72.

- Gomes, W.J.; Evora, P.R.B.; Guizilini, S. Artificial Intelligence is Irreversibly Bound to Academic Publishing—ChatGPT is Cleared for Scientific Writing and Peer Review. Braz. J. Cardiovasc. Surg. 2023, 38, e20230963.

- Kitamura, F.C. ChatGPT Is Shaping the Future of Medical Writing But Still Requires Human Judgment. Radiology 2023, 307, e230171.

- Huang, J.; Tan, M. The role of ChatGPT in scientific communication: Writing better scientific review articles. Am. J. Cancer Res. 2023, 13, 1148–1154.

- Guleria, A.; Krishan, K.; Sharma, V.; Kanchan, T. ChatGPT: Ethical concerns and challenges in academics and research. J. Infect. Dev. Ctries. 2023, 17, 1292–1299.

- Liu, H.; Azam, M.; Bin Naeem, S.; Faiola, A. An overview of the capabilities of ChatGPT for medical writing and its implications for academic integrity. Health Inf. Libr. J. 2023, 40, 440–446.

- Zheng, H.; Zhan, H. ChatGPT in Scientific Writing: A Cautionary Tale. Am. J. Med. 2023, 136, 725–726.e6.

- Kleebayoon, A.; Wiwanitkit, V. ChatGPT in medical practice, education and research: Malpractice and plagiarism. Clin. Med. 2023, 23, 280.

- Gandhi Periaysamy, A.; Satapathy, P.; Neyazi, A.; Padhi, B.K. ChatGPT: Roles and boundaries of the new artificial intelligence tool in medical education and health research–correspondence. Ann. Med. Surg. 2023, 85, 1317–1318.

- Mihalache, A.; Popovic, M.M.; Muni, R.H. Performance of an Artificial Intelligence Chatbot in Ophthalmic Knowledge Assessment. JAMA Ophthalmol. 2023, 141, 589–597.

- Giannos, P.; Delardas, O. Performance of ChatGPT on UK Standardized Admission Tests: Insights From the BMAT, TMUA, LNAT, and TSA Examinations. JMIR Med. Educ. 2023, 9, e47737.

- Takagi, S.; Watari, T.; Erabi, A.; Sakaguchi, K. Performance of GPT-3.5 and GPT-4 on the Japanese Medical Licensing Examination: Comparison Study. JMIR Med. Educ. 2023, 9, e48002.

- Bhayana, R.; Krishna, S.; Bleakney, R.R. Performance of ChatGPT on a Radiology Board-style Examination: Insights into Current Strengths and Limitations. Radiology 2023, 307, e230582.

- Sikander, B.; Baker, J.J.; Deveci, C.D.; Lund, L.; Rosenberg, J. ChatGPT-4 and Human Researchers Are Equal in Writing Scientific Introduction Sections: A Blinded, Randomized, Non-inferiority Controlled Study. Cureus 2023, 15, e49019.

- Osmanovic-Thunström, A.; Steingrimsson, S. Does GPT-3 qualify as a co-author of a scientific paper publishable in peer-review journals according to the ICMJE criteria? A case study. Discov. Artif. Intell. 2023, 3, 12.

- ChatGPT Generative Pre-trained Transformer; Zhavoronkov, A. Rapamycin in the context of Pascal’s Wager: Generative pre-trained transformer perspective. Oncoscience 2022, 9, 82–84.

- Wattanapisit, A.; Photia, A.; Wattanapisit, S. Should ChatGPT be considered a medical writer? Malays. Fam. Physician 2023, 18, 69.

- Miao, J.; Thongprayoon, C.; Cheungpasitporn, W. Assessing the Accuracy of ChatGPT on Core Questions in Glomerular Disease. Kidney Int. Rep. 2023, 8, 1657–1659.

- Tang, G. Letter to editor: Academic journals should clarify the proportion of NLP-generated content in papers. Account. Res. 2023, 1–2.

- Stokel-Walker, C. ChatGPT listed as author on research papers: Many scientists disapprove. Nature 2023, 613, 620–621.

- Bahsi, I.; Balat, A. The Role of AI in Writing an Article and Whether it Can Be a Co-author: What if it Gets Support From 2 Different AIs Like ChatGPT and Google Bard for the Same Theme? J. Craniofac. Surg. 2023.

- Grove, J. Science Journals Overturn Ban on ChatGPT-Authored Papers. Available online: https://www.timeshighereducation.com/news/science-journals-overturn-ban-chatgpt-authored-papers#:~:text=The%20prestigious%20Science%20family%20of,intelligence%20tools%20in%20submitted%20papers (accessed on 16 November 2023).

- Zielinski, C.; Winker, M.A.; Aggarwal, R.; Ferris, L.E.; Heinemann, M.; Lapena, J.F., Jr.; Pai, S.A.; Ing, E.; Citrome, L.; Alam, M.; et al. Chatbots, generative AI, and scholarly manuscripts: WAME recommendations on chatbots and generative artificial intelligence in relation to scholarly publications. Colomb. Médica 2023, 54, e1015868.

- Daugirdas, J.T. OpenAI’s ChatGPT and Its Potential Impact on Narrative and Scientific Writing in Nephrology. Am. J. Kidney Dis. 2023, 82, A13–A14.

- Dönmez, I.; Idil, S.; Gulen, S. Conducting Academic Research with the AI Interface ChatGPT: Challenges and Opportunities. J. STEAM Educ. 2023, 6, 101–118.

- Else, H. Abstracts written by ChatGPT fool scientists. Nature 2023, 613, 423.

- Casal, J.E.; Kessler, M. Can linguists distinguish between ChatGPT/AI and human writing?: A study of research ethics and academic publishing. Res. Methods Appl. Linguist. 2023, 2, 100068.

- Nearly 1 in 3 College Students Have Used Chatgpt on Written Assignments. Available online: https://www.intelligent.com/nearly-1-in-3-college-students-have-used-chatgpt-on-written-assignments/ (accessed on 23 January 2023).

- Kamilia, B. The Reality of Contemporary Arab-American Literary Character and the Idea of the Third Space Female Character Analysis of Abu Jaber Novel Arabian Jazz. Ph.D. Thesis, Kasdi Merbah Ouargla University, Ouargla, Algeria, 2023.

- Jayachandran, M. ChatGPT: Guide to Scientific Thesis Writing. Independently Published. 2023. Available online: https://www.barnesandnoble.com/w/chatgpt-guide-to-scientific-thesis-writing-jayachandran-m/1144451253 (accessed on 5 December 2023).

- Lu, D. Are Australian Research Council Reports Being Written by ChatGPT? Available online: https://www.theguardian.com/technology/2023/jul/08/australian-research-council-scrutiny-allegations-chatgpt-artifical-intelligence (accessed on 7 July 2023).

- Van Noorden, R.; Perkel, J.M. AI and science: What 1,600 researchers think. Nature 2023, 621, 672–675.

- Parrilla, J.M. ChatGPT use shows that the grant-application system is broken. Nature 2023, 623, 443.

- Khan, S.H. AI at Doorstep: ChatGPT and Academia. J. Coll. Physicians Surg. Pak. 2023, 33, 1085–1086.

- Jeyaraman, M.; Ramasubramanian, S.; Balaji, S.; Jeyaraman, N.; Nallakumarasamy, A.; Sharma, S. ChatGPT in action: Harnessing artificial intelligence potential and addressing ethical challenges in medicine, education, and scientific research. World J. Methodol. 2023, 13, 170–178.

- Meyer, J.G.; Urbanowicz, R.J.; Martin, P.C.N.; O’Connor, K.; Li, R.; Peng, P.C.; Bright, T.J.; Tatonetti, N.; Won, K.J.; Gonzalez-Hernandez, G.; et al. ChatGPT and large language models in academia: Opportunities and challenges. BioData Min. 2023, 16, 20.

- Zaitsu, W.; Jin, M. Distinguishing ChatGPT(-3.5, -4)-generated and human-written papers through Japanese stylometric analysis. PLoS ONE 2023, 18, e0288453.

- Koo, M. Harnessing the potential of chatbots in education: The need for guidelines to their ethical use. Nurse Educ. Pract. 2023, 68, 103590.

- Yang, M. New York City Schools Ban AI Chatbot That Writes Essays and Answers Prompts. Available online: https://www.theguardian.com/us-news/2023/jan/06/new-york-city-schools-ban-ai-chatbot-chatgpt (accessed on 6 January 2023).

- Vincent, J. Top AI Conference Bans Use of ChatGPT and AI Language Tools to Write Academic Papers. Available online: https://www.theverge.com/2023/1/5/23540291/chatgpt-ai-writing-tool-banned-writing-academic-icml-paper (accessed on 5 January 2023).

- Siegerink, B.; Pet, L.A.; Rosendaal, F.R.; Schoones, J.W. ChatGPT as an author of academic papers is wrong and highlights the concepts of accountability and contributorship. Nurse Educ. Pract. 2023, 68, 103599.

- Garcia Valencia, O.A.; Suppadungsuk, S.; Thongprayoon, C.; Miao, J.; Tangpanithandee, S.; Craici, I.M.; Cheungpasitporn, W. Ethical Implications of Chatbot Utilization in Nephrology. J. Pers. Med. 2023, 13, 1363.

- Thongprayoon, C.; Vaitla, P.; Jadlowiec, C.C.; Leeaphorn, N.; Mao, S.A.; Mao, M.A.; Pattharanitima, P.; Bruminhent, J.; Khoury, N.J.; Garovic, V.D.; et al. Use of Machine Learning Consensus Clustering to Identify Distinct Subtypes of Black Kidney Transplant Recipients and Associated Outcomes. JAMA Surg. 2022, 157, e221286.

- Cacciamani, G.E.; Eppler, M.B.; Ganjavi, C.; Pekan, A.; Biedermann, B.; Collins, G.S.; Gill, I.S. Development of the ChatGPT, Generative Artificial Intelligence and Natural Large Language Models for Accountable Reporting and Use (CANGARU) Guidelines. arXiv 2023, arXiv:2307.08974.

| Component | Objective | Action Items | Stakeholders Involved | Metrics for Success |
|---|---|---|---|---|
| Transparent AI assistance acknowledgment | Ensure full disclosure of AI contributions in research. | 1. Add acknowledgment section in paper. 2. Specify AI role. | Authors, journal editors | Number of publications with transparent acknowledgments |
| Enhanced peer review process with AI scrutiny | Maintain academic rigor and integrity in the use of AI. | 1. Add “AI Scrutiny” phase in peer review. 2. Train reviewers on AI. | Peer reviewers, AI experts | Reduced rate of publication errors related to AI misuse |
| AI ethics training for nephrologists | Equip nephrologists with the knowledge to use AI ethically. | 1. Develop training modules. 2. Conduct workshops. | Nephrologists, ethicists, AI experts | Number of trained personnel |
| AI as a collaborative contributor | Foster a culture where AI and human expertise are seen as complementary. | 1. Advocate for collaboration in publications. 2. Develop guidelines for collaboration. | Nephrologists, AI developers | Number of collaborative publications |
| Continuous monitoring and research | Understand the impact of AI on the field and adapt accordingly. | 1. Initiate long-term studies. 2. Develop AI-specific plagiarism tools. | Nephrologists, data scientists | Published long-term impact studies |
| Ethics checklist | Ensure preliminary ethical compliance in AI usage. | Integrate ethics checklist into manuscript submission. | Authors, journal editors, ethicists | Number of manuscripts screened for ethical compliance |

AI Ethics Checklist for Journal Submissions

General Information

AI Contribution

I, the undersigned, declare that the information provided in this checklist is accurate and complete to the best of my knowledge. Signature: ___________________________

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Miao, J.; Thongprayoon, C.; Suppadungsuk, S.; Garcia Valencia, O.A.; Qureshi, F.; Cheungpasitporn, W. Ethical Dilemmas in Using AI for Academic Writing and an Example Framework for Peer Review in Nephrology Academia: A Narrative Review. Clin. Pract. 2024, 14, 89-105. https://doi.org/10.3390/clinpract14010008
