Abstract

This paper proposes a novel navigation approach for Unmanned Tracked Vehicles (UTVs) based on prior-based ensemble deep reinforcement learning, which fuses the policy of an ensemble Deep Reinforcement Learning (DRL) agent with the Dynamic Window Approach (DWA) to improve both exploration efficiency and deployment safety in unstructured off-road environments. First, building on a kinematic analysis, we introduce a novel state space and action space that account for rugged terrain features and track–ground interactions. Local elevation information and changes in vehicle pose over consecutive time steps are used as inputs to the DRL model, enabling the UTV to implicitly learn safe navigation policies in complex terrain while minimizing the impact of slip disturbances. Then, we introduce an ensemble Soft Actor–Critic (SAC) learning framework that uses the DWA as a behavioral prior, referred to as the SAC-based Hybrid Policy (SAC-HP). Ensemble SAC uses multiple policy networks to reduce the variance of the DRL outputs, and the DRL actions are combined with the DWA method by constructing a hybrid Gaussian distribution from both. Experimental results indicate that SAC-HP converges faster than the standard SAC model, which enables efficient large-scale navigation. In addition, an energy-optimization penalty term in the reward function reduces velocity oscillations, supporting fast convergence and smooth robot motion. Scenarios with obstacles and rugged terrain are used to demonstrate the efficiency, robustness, and smoothness of SAC-HP compared with state-of-the-art methods.

1. Introduction

Unmanned Tracked Vehicles (UTVs) play a pivotal role in construction engineering, search and rescue missions, and planetary exploration owing to their exceptional ability to traverse unstructured terrain [1,2,3]. In real-world off-road exploration, UTVs must make decisions autonomously from real-time environmental information to ensure safety and stability during navigation [4].

In recent years, DRL techniques have contributed greatly to solving complex non-linear problems in autonomous planning and decision making [5,6]. For instance, researchers have proposed dueling double deep Q-networks with prioritized experience replay (D3QN-PER) to improve dynamic path planning by balancing exploration and exploitation more effectively [7]. Maoudj and Hentout [8] refined traditional Q-learning with new reward functions and state–action selection strategies to speed up convergence. Zhou et al. [9] tackled multiagent path planning in high-risk scenarios by employing dual deep Q-networks that discretize the environment and separate action selection from evaluation, improving convergence speed and inter-agent collaboration. Moreover, hybrid methods combine deep reinforcement learning with external optimization, such as immune-based strategies [10], sum-tree prioritized experience replay [11], or heuristic algorithms [12], to obtain safer routes, shorter travel distances, and better path quality, demonstrating improved adaptability in unknown or dynamic environments. To enable robots to move in off-road environments, multidimensional sensor data, including the global elevation map and the robot pose, are used as inputs for learning planning policies [13,14].

However, a global elevation map is unavailable in unknown off-road environments, so local sensing is more suitable during exploration. Zhang et al. [15] employed deep reinforcement learning to process raw sensor data for local navigation, enabling reliable obstacle avoidance in disaster-like environments without prior terrain knowledge. Weerakoon et al. [16] combined a cost map and an attention mechanism to highlight unstable zones in the elevation map, filter out risky paths, and ensure safe and efficient routes, which improved success rates on uneven outdoor terrain. Nguyen et al. [17] introduced a multimodal network that fuses RGB images, point clouds, and laser data, effectively handling challenging visual conditions and structures in collapsed or cave-like settings and improving navigation accuracy and stability.

Moreover, energy efficiency is a growing priority in intelligent transportation and autonomous navigation systems. Viadero-Monasterio et al. [18] proposed a traffic signal optimization framework that reduces energy consumption by adapting to heterogeneous vehicle powertrains and driver preferences. Their method achieved up to 9.67% energy savings for diesel vehicles by customizing velocity profiles and acceleration behavior. Although their work focuses on traffic at signalized intersections, the idea of embedding energy-aware policy adaptation motivates us to explore lightweight reward-shaping strategies. In this study, we introduce a velocity-stabilizing term in the reward to indirectly suppress excessive acceleration and promote smoother, energy-conscious navigation.

Beyond the problems above, the sample efficiency and deployment safety of DRL also limit navigation performance [19]. Regarding sample efficiency, especially with sparse rewards, DRL agents may converge to local optima, which hinders learning of the optimal policy [20]. Regarding deployment safety, the black-box nature of deep networks prevents DRL from handling hard constraints effectively, which limits the generalization of the model and makes safe deployment more difficult [6]. Both problems can be alleviated by integrating prior knowledge through functional regularization [21,22].

This paper proposes an end-to-end DRL-based planner for real-time autonomous motion planning of UTVs on unknown rugged terrain. To improve the training efficiency of UTVs and their adaptability to unknown environments, a prior-based ensemble learning framework, called SAC-based Hybrid Policy (SAC-HP), is introduced. The main contributions of this paper are as follows:

- This paper proposes a novel action and state space that accounts for terrain and environmental disturbances, allowing UTVs to autonomously learn how to traverse continuous rugged terrain while avoiding collisions and to mitigate the effects of track slip without an explicit slip model.

- This paper introduces a comprehensive reward function that considers obstacle avoidance, safe navigation, and energy optimization. In addition, a novel optimization metric based on the characteristics of off-road environments is proposed to improve the algorithm’s robustness in complex environments. This reward function yields shorter, faster exploration with smooth motion.

- To address the low exploration efficiency and limited deployment safety of traditional DRL-based methods, we propose an ensemble Soft Actor–Critic (SAC) learning framework that introduces the Dynamic Window Approach (DWA) as a behavioral prior, called SAC-based Hybrid Policy (SAC-HP). The DRL actions are combined with the DWA method by constructing a hybrid Gaussian distribution from both. This method uses a suboptimal prior policy to accelerate learning while still allowing the final policy to discover optimal behavior.

Notation: The symbols and variables used in this article are defined in Table 1. Bold variables denote vectors and matrices.

Table 1.

Notation.

2. Preliminaries

2.1. Kinematics Analysis

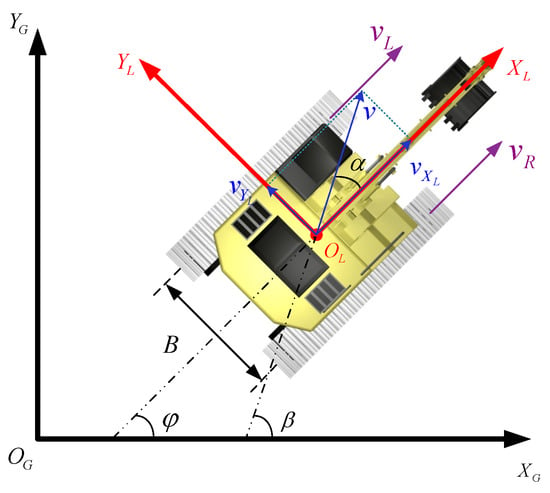

As shown in Figure 1, the robot’s motion is described in a global coordinate system and a local coordinate system. The global coordinate system has a fixed position and orientation, while the local coordinate system moves with the robot. The positive direction of the local longitudinal axis corresponds to the robot’s forward direction, and the positive direction of the local lateral axis is perpendicular to it and points to the left of the robot’s forward direction. v denotes the robot’s actual travel speed. The heading angle is the angle between the local longitudinal axis and the global horizontal axis, and the sideslip angle is the angle between the motion (longitudinal) axis and v; together they determine the direction angle of motion. The actual forward velocities of the left and right tracks also enter the model. r denotes the effective driving radius of the tracked drive wheels, and B denotes the track gauge (the lateral distance between the two tracks).

Figure 1.

Kinematic model of UTV.

In the local coordinate system, the vehicle speed v and the sideslip angle can be expressed as

where

According to [23], the kinematic model of the UTV in the global coordinate system can be derived as

where

where and are the angular velocities of the left and right drive wheels, and and denote the slip rates of the left and right tracks, respectively.
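As an illustration of slip-aware tracked-vehicle kinematics, the sketch below implements one common simplified formulation under our own assumptions: each track’s actual forward velocity is r·ω·(1 − s), the vehicle speed is the mean of the two track velocities, the yaw rate is their difference divided by the track gauge B, the sideslip angle is supplied as an external input, and the pose is advanced with a forward Euler step using the 0.2 s control step of Section 5.1. The function names are hypothetical, and the exact model of [23] may differ.

```python
import math

# Illustrative sketch only; helper names are hypothetical, not the paper's code.


def track_velocities(omega_l, omega_r, s_l, s_r, r):
    """Actual forward velocities of the left/right tracks under slip (assumed model)."""
    v_l = r * omega_l * (1.0 - s_l)
    v_r = r * omega_r * (1.0 - s_r)
    return v_l, v_r


def step_pose(x, y, theta, omega_l, omega_r, s_l, s_r, r, B, beta=0.0, dt=0.2):
    """Advance the global pose (x, y, theta) by one forward-Euler step.

    Assumptions (not taken from the paper):
    - vehicle speed is the mean of the two track velocities,
    - yaw rate is the track velocity difference divided by the gauge B,
    - the velocity direction in the global frame is theta + beta.
    """
    v_l, v_r = track_velocities(omega_l, omega_r, s_l, s_r, r)
    v = 0.5 * (v_l + v_r)
    yaw_rate = (v_r - v_l) / B
    x_next = x + v * math.cos(theta + beta) * dt
    y_next = y + v * math.sin(theta + beta) * dt
    theta_next = theta + yaw_rate * dt
    return x_next, y_next, theta_next
```

With equal wheel speeds and no slip the vehicle moves straight ahead; slip on only one track reduces that track’s speed and therefore induces an unintended turn, which is the disturbance the extended state space is meant to expose to the agent.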

2.2. Soft Actor–Critic Algorithm

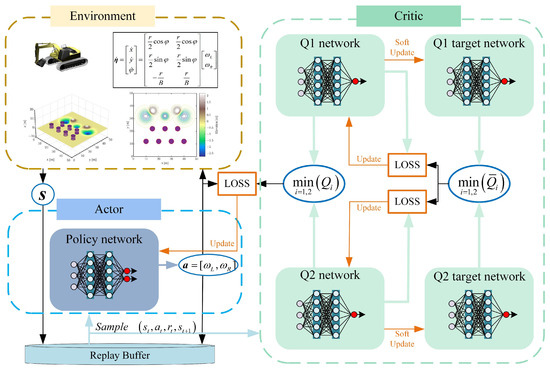

As shown in Figure 2, the Soft Actor–Critic (SAC) algorithm [24] builds upon the Actor–Critic framework by introducing a maximum policy entropy objective to address the exploration–exploitation tradeoff in reinforcement learning. By incorporating entropy into the objective function, the SAC algorithm aims to achieve a balance between reward maximization and entropy (i.e., policy randomness), thereby significantly enhancing the agent’s exploration capability and robustness.

Figure 2.

SAC framework.

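The optimal policy for SAC can be expressed as

$$\pi^{*}=\arg\max_{\pi}\sum_{t}\mathbb{E}_{(\mathbf{s}_t,\mathbf{a}_t)\sim\rho_{\pi}}\left[r(\mathbf{s}_t,\mathbf{a}_t)+\alpha\,\mathcal{H}\left(\pi(\cdot\mid\mathbf{s}_t)\right)\right]$$

where $\pi^{*}$ is the optimal policy, which maximizes the expected cumulative entropy-augmented reward; $\mathbf{s}_t$ and $\mathbf{a}_t$ denote the state and action at time t, sampled from the state–action distribution $\rho_{\pi}$ induced by the policy; $r(\mathbf{s}_t,\mathbf{a}_t)$ is the immediate reward obtained at time t; $\alpha$ is the regularization coefficient that controls the importance of entropy; and $\mathcal{H}(\pi(\cdot\mid\mathbf{s}_t))$ is the entropy of the policy in state $\mathbf{s}_t$.

SAC employs neural networks to approximate the soft Q-function and the policy. The Q-value is computed with an entropy-augmented Bellman equation, and the parameters are learned by minimizing the soft Bellman residual:

$$J_Q(\theta)=\mathbb{E}_{(\mathbf{s}_t,\mathbf{a}_t)\sim\mathcal{D}}\left[\frac{1}{2}\left(Q_{\theta}(\mathbf{s}_t,\mathbf{a}_t)-\left(r(\mathbf{s}_t,\mathbf{a}_t)+\gamma\,\mathbb{E}_{\mathbf{s}_{t+1}}\left[V(\mathbf{s}_{t+1})\right]\right)\right)^{2}\right]$$

where $\mathcal{D}$ is the replay buffer storing experience tuples, $\theta$ denotes the parameters of the soft Q-function, $\gamma$ is the discount factor, and $V$ is the soft state value function, given by

$$V(\mathbf{s}_t)=\mathbb{E}_{\mathbf{a}_t\sim\pi}\left[Q_{\theta}(\mathbf{s}_t,\mathbf{a}_t)-\alpha\log\pi(\mathbf{a}_t\mid\mathbf{s}_t)\right]$$

The policy network parameters $\phi$ are optimized by minimizing the Kullback–Leibler (KL) divergence:

$$J_{\pi}(\phi)=\mathbb{E}_{\mathbf{s}_t\sim\mathcal{D}}\left[D_{\mathrm{KL}}\left(\pi_{\phi}(\cdot\mid\mathbf{s}_t)\,\middle\|\,\frac{\exp\left(Q_{\theta}(\mathbf{s}_t,\cdot)/\alpha\right)}{Z_{\theta}(\mathbf{s}_t)}\right)\right]$$

where $Z_{\theta}(\mathbf{s}_t)$ is the partition function that normalizes the target distribution.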
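The soft value and the Bellman target used in the critic loss can be made concrete with a short Monte Carlo sketch. It assumes the standard SAC formulation [24]: the soft value of the next state is estimated by averaging Q(s′, a′) − α log π(a′|s′) over actions sampled from the current policy, and terminal transitions drop the bootstrap term. The function names and the `done` flag convention are illustrative assumptions rather than the paper’s implementation.

```python
from statistics import fmean

# Illustrative sketch of the soft Bellman target; names are hypothetical.


def soft_value(q_samples, log_prob_samples, alpha):
    """Monte Carlo estimate of V(s) = E_{a~pi}[Q(s, a) - alpha * log pi(a|s)]."""
    if not q_samples or len(q_samples) != len(log_prob_samples):
        raise ValueError("need equally sized, non-empty sample lists")
    return fmean(q - alpha * lp for q, lp in zip(q_samples, log_prob_samples))


def soft_bellman_target(reward, q_next, log_prob_next, alpha, gamma=0.99, done=False):
    """Target y = r + gamma * (1 - done) * V(s') used in the soft Bellman residual."""
    v_next = 0.0 if done else soft_value(q_next, log_prob_next, alpha)
    return reward + gamma * v_next


def soft_bellman_residual(q_pred, target):
    """Squared residual 0.5 * (Q(s, a) - y)^2 for a single transition."""
    return 0.5 * (q_pred - target) ** 2
```

The entropy bonus enters through −α log π: low-probability (more exploratory) actions raise the soft value, which is how the temperature α trades reward against policy randomness.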

3. Markov Decision Process (MDP) Modeling

In this section, the real-time motion planning problem for the UTV is formulated as a Markov Decision Process (MDP), with detailed designs for the state space, action space, and reward function.

3.1. State Space Design

The state space represents the local environmental information accessible to the UTV at the current moment, encapsulating the robot’s perception of the world and ensuring that it can make informed decisions under specific conditions.

3.1.1. Basic State Space

The basic state space serves as the fundamental decision-making basis for the agent’s primary tasks and consists of the target position state and the obstacle position state, defined as follows:

- Target position state: Assuming that the relative position between the UTV and the target location can be obtained from GPS measurements, the target position is transformed into the robot’s local coordinate frame using a rotation matrix that maps coordinates from the global coordinate system into the local frame. The transformation is applied to the target’s position relative to the UTV’s current position, both expressed in the global coordinate system.

- Obstacle position state: The 180° scanning region in front of the robot is divided into N equal angular segments, and the obstacle state collects the closest obstacle distance in each segment, with the i-th entry giving the closest obstacle distance in the i-th segment. If no obstacle is detected within a segment, its value is set to the maximum scanning range. The orientation information is implicitly encoded in the index of each segment: because the 180° frontal field is divided into N equal angular sectors, each distance measurement corresponds to a fixed direction relative to the robot’s heading.

We combine the aforementioned state space elements to form the basic state space, a comprehensive state representation that encompasses both the task-relevant observations and the UTV’s state. Its obstacle component contains N entries, one per angular segment.
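The two basic state components can be sketched as follows, assuming a standard 2D rotation by the heading angle for the global-to-local transform and a LiDAR scan given as (angle, range) pairs over the frontal 180°. The function names, the angle convention (0 rad straight ahead, positive to the left, sectors indexed from right to left), and the clipping to the maximum range are our assumptions.

```python
import math

# Illustrative sketch of the basic state components; names are hypothetical.


def target_in_local_frame(target_xy, robot_xy, heading):
    """Rotate the global offset (target - robot) into the robot's local frame.

    Uses R(heading)^T = [[cos, sin], [-sin, cos]], so the local x-axis
    points forward and the local y-axis points to the robot's left.
    """
    dx = target_xy[0] - robot_xy[0]
    dy = target_xy[1] - robot_xy[1]
    c, s = math.cos(heading), math.sin(heading)
    return (c * dx + s * dy, -s * dx + c * dy)


def sector_min_distances(scan, n_sectors, max_range):
    """Closest obstacle distance in each of n equal sectors spanning [-90 deg, 90 deg].

    `scan` is an iterable of (angle_rad, distance) pairs relative to the heading.
    Sectors with no return keep the maximum scanning range.
    """
    dists = [max_range] * n_sectors
    width = math.pi / n_sectors
    for angle, dist in scan:
        if not -math.pi / 2 <= angle <= math.pi / 2:
            continue  # outside the frontal field of view
        idx = min(int((angle + math.pi / 2) / width), n_sectors - 1)
        dists[idx] = min(dists[idx], dist, max_range)
    return dists
```

Because each sector index maps to a fixed bearing, the distance vector carries direction information without any explicit angle features, as described above.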

3.1.2. Extended State Space

Off-road rugged terrain and soft sediments cause vehicle bumping and slipping, which significantly degrade the control performance and safety of the agent. To address this problem, the basic state space is extended with additional environmental information to support safer decision-making. The additional information is defined as follows:

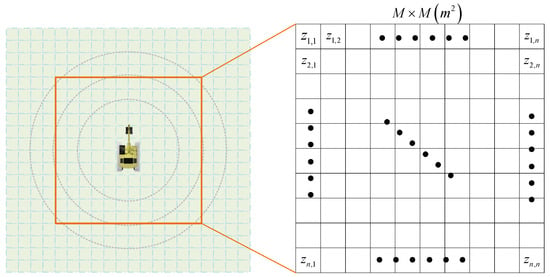

- Local Elevation Information: To enable the agent to perceive the undulations of the surrounding terrain, local elevation information based on a Digital Elevation Model (DEM) is incorporated into the state space. A DEM is a collection of elevation values over a specific area, i.e., an array of three-dimensional points describing the region’s terrain. In functional form, a DEM maps each pair of planar coordinates to the elevation value at that point. Sampling is performed on a regular grid, and the elevation values at the grid points are stored, as illustrated in Figure 3.

Figure 3. Local elevation information.

The local elevation information is described mathematically as a matrix whose entries are the elevation values at the grid points defined in Figure 3. The matrix size n is determined by the sensor detection range M and the grid sampling resolution.

- UTV’s attitude change: To let the agent implicitly learn how track slip affects its attitude without explicitly providing a model approximation, the attitude change between two consecutive time steps and the corresponding action are used as state inputs. The three attitude components represent the agent’s attitude changes between two consecutive time steps, and the action component contains the angular velocities of the left and right drive wheels at the previous time step.

Thus, the extended state space can be expressed as

To sum up, the complete state space can be written as

where the first part is the basic state space, which provides the decision-making foundation for basic navigation tasks, and the second part is the extended state space, which characterizes observable features of the off-road environment.
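A minimal sketch of how the complete state could be assembled: an n × n local elevation patch is sampled from a DEM on a regular grid centred on the robot (the DEM is assumed to be available as a callable `dem(x, y)`), flattened row by row, and concatenated with the basic state and the attitude-change features. Interpreting the three attitude components as roll, pitch, and yaw changes, the grid centring and orientation, and all function names are illustrative assumptions, not the paper’s specification.

```python
# Illustrative sketch of state assembly; names and layout are hypothetical.


def local_elevation_grid(dem, x0, y0, n, resolution):
    """Sample an n x n grid of elevations centred on (x0, y0).

    `dem` is any callable mapping planar coordinates (x, y) to elevation z.
    Rows run along y and columns along x in the global frame (an assumption;
    a robot-aligned grid would rotate the offsets by the heading first).
    """
    half = (n - 1) / 2.0
    return [[dem(x0 + (j - half) * resolution, y0 + (i - half) * resolution)
             for j in range(n)]
            for i in range(n)]


def assemble_state(target_local, sector_dists, elevation_grid,
                   d_roll, d_pitch, d_yaw, prev_action):
    """Concatenate basic and extended state components into one flat vector."""
    basic = list(target_local) + list(sector_dists)
    elevation = [z for row in elevation_grid for z in row]  # row-major flatten
    attitude = [d_roll, d_pitch, d_yaw] + list(prev_action)
    return basic + elevation + attitude
```

For example, with two target coordinates, two sectors, a 3 × 3 elevation patch, three attitude changes, and two previous wheel speeds, the state vector has 2 + 2 + 9 + 3 + 2 = 18 entries.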

3.2. Action Space Design

In DRL-based control tasks, the action represents the control input that the agent can apply at each state. To facilitate the transfer of the model to real-world control tasks, this paper designs the action space based on the UTV’s kinematic model.

Compared with the virtual grid-based environments commonly used in the literature, the UTV under the kinematic model (3) can move freely in any direction and continuously adjust its speed to reach the target. According to the kinematic model (3), the angular velocities of the UTV’s left and right drive wheels determine its state. Therefore, we define the action space of the UTV as

where and denote the angular velocities of the left and right drive wheels.

3.3. Composite Reward Function

In traditional DRL methods for navigation tasks, discrete reward functions are commonly used. However, this approach can result in sparse rewards, which significantly reduce the training efficiency of the agent [25]. To address this challenge, we propose a novel approach: a composite reward function that integrates safe navigation, obstacle avoidance, target proximity, and energy optimization. This composite reward function is designed to assist the agent in accomplishing navigation tasks more effectively. The relevant reward designs are described in the following:

- Target Distance Guidance Reward Design: This reward guides the agent to make decisions that continuously move it toward the target position, measured by the Euclidean distance between the agent’s position and the target’s position. It is defined by the change between the Euclidean distances to the target at the previous and current time instants, scaled by a normalization factor that represents the maximum distance the agent can travel in one time step. When the UTV moves closer to the target, a positive reward is given; otherwise, a penalty is imposed.

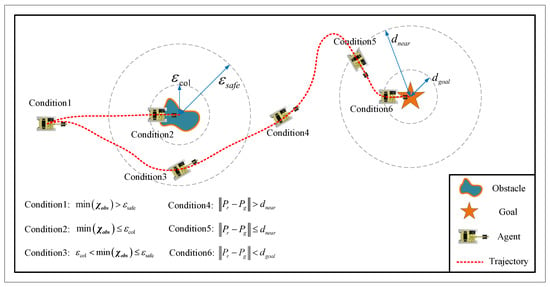

- Target Orientation Guidance Reward Design: When approaching the destination, the UTV may exhibit greedy behavior, lingering near the target point to maximize cumulative distance rewards. To avoid this behavior, a target orientation guidance reward is designed based on the heading angle between the UTV’s direction of motion and its direction to the target; the reward is maximized as the UTV aligns more closely with the target direction. The reward also depends on a proximity distance and two constant hyperparameters. If the Euclidean distance between the UTV and the target exceeds the proximity distance, the weight of this reward is reduced; otherwise, its impact increases, encouraging the UTV to proceed toward the target. A more detailed illustration is provided in Figure 4 (see, for instance, Conditions 4 and 5 in the figure).

Figure 4. Illustration of a UTV navigation in an environment with obstacles.

- Arrival Reward Design: When the UTV reaches the target point, it receives an arrival reward defined in terms of a hyperparameter, the total task duration, and the task completion threshold. A more detailed illustration is provided in Figure 4 (see, for instance, Condition 6 in the figure).

- Obstacle Avoidance Reward Design: This reward encourages the UTV to keep a safe distance from obstacles, thereby ensuring its safe arrival at the destination. It is defined in terms of the collision threshold, which is the minimum allowable distance between the agent and an obstacle, and the safe distance that the agent should maintain from obstacles to ensure safe operation. A detailed illustration is provided in Figure 4 (see, for instance, Conditions 1–3 in the figure).

- Safe Driving Reward Design: This reward encourages the agent to avoid rugged, steep, or pothole-ridden areas and to move smoothly. It is formulated in terms of the elevation difference between the robot’s current position and its initial position.

- Energy Optimization Reward Design: To improve navigation efficiency and minimize energy consumption, the speed fluctuations of the UTV should be reduced. The energy optimization reward is therefore based on the difference between the current velocity and the average velocity over a window of past time steps.

In summary, the composite reward based on the aforementioned individual rewards can be expressed as

where each weight scales the corresponding reward component. The composite reward function incorporates multiple optimization objectives to improve the UTV’s navigation efficiency, energy use, control stability, and operational safety. For example, in environments with fewer obstacles, greater emphasis can be placed on task completion and time efficiency by increasing the weights of the corresponding reward terms.
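Two of the reward terms whose structure is fully described in the text, together with the weighted sum, can be sketched as follows. The normalized progress term (previous minus current distance, divided by the per-step maximum travel distance), the use of an absolute deviation for the velocity-stabilizing penalty, the window length, and the example weights are assumptions for illustration; the other terms (orientation, arrival, obstacle, safe driving) are simply passed in as precomputed values.

```python
import math
from collections import deque

# Illustrative reward sketch; functional forms and names are assumptions.


def distance_reward(pos_prev, pos_curr, target, d_max):
    """Normalized progress toward the target: positive when the distance shrinks."""
    d_prev = math.dist(pos_prev, target)
    d_curr = math.dist(pos_curr, target)
    return (d_prev - d_curr) / d_max


class EnergyPenalty:
    """Penalize deviation of the current speed from its recent moving average."""

    def __init__(self, window=5):
        self.history = deque(maxlen=window)

    def __call__(self, v_curr):
        if not self.history:
            penalty = 0.0  # no history yet, nothing to compare against
        else:
            v_avg = sum(self.history) / len(self.history)
            penalty = -abs(v_curr - v_avg)
        self.history.append(v_curr)
        return penalty


def composite_reward(terms, weights):
    """Weighted sum of named reward components, e.g. {'dist': ..., 'energy': ...}."""
    return sum(weights[name] * value for name, value in terms.items())
```

Because the energy term only compares the current speed with its own recent average, it discourages oscillation without penalizing a steady high speed, which is consistent with the faster, smoother motion reported in Section 5.3.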

4. SAC-Based Hybrid Policy

In this section, we describe the implementation of the proposed SAC-based Hybrid Policy (SAC-HP) algorithm.

Although the standard SAC algorithm [24] performs well in navigation tasks, its performance is limited for two reasons. First, when the state and action spaces are high-dimensional, the agent requires considerable exploration time at the start of training to collect sufficient experience. Second, due to the black-box nature of deep networks, DRL policies may overfit the training environment, reducing safety and adaptability in new environments.

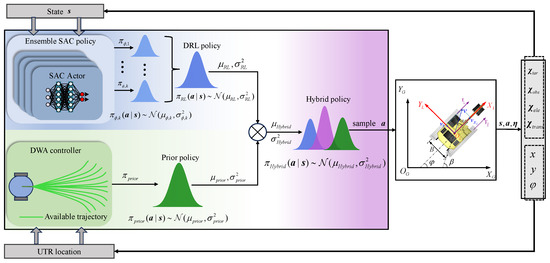

The SAC-HP framework is illustrated in Figure 5. SAC-HP consists of two primary components: the DRL policy and the classical (prior) policy. The robot’s actions are determined jointly by these two policies and can be expressed using the following equation:

where the first factor is the DRL policy, the second is the prior policy derived from a classical controller (i.e., DWA), and a normalization factor ensures that the resulting hybrid policy remains a valid probability distribution after the multiplicative fusion of the learned DRL policy and the prior controller distribution. When both components are Gaussian, their product is an unnormalized Gaussian, and the normalization factor equals the integral of this product over the action space. In practice, the mean and variance of the fused distribution can be derived in closed form using the Gaussian product rule (i.e., Equation (31)), and the normalization constant is handled implicitly within this derivation.
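As a worked form of the Gaussian product rule referred to above, consider a single action dimension with a DRL Gaussian $\mathcal{N}(\mu_\theta,\sigma_\theta^2)$ and a DWA prior $\mathcal{N}(\mu_p,\sigma_p^2)$. The symbols here are chosen for illustration and may differ from the paper’s Equation (31); the identity itself is standard:

$$
\mathcal{N}(a;\mu_\theta,\sigma_\theta^2)\,\mathcal{N}(a;\mu_p,\sigma_p^2)=Z\,\mathcal{N}(a;\mu_h,\sigma_h^2),\qquad
\sigma_h^2=\frac{\sigma_\theta^2\,\sigma_p^2}{\sigma_\theta^2+\sigma_p^2},\qquad
\mu_h=\sigma_h^2\left(\frac{\mu_\theta}{\sigma_\theta^2}+\frac{\mu_p}{\sigma_p^2}\right),
$$

$$
Z=\int\mathcal{N}(a;\mu_\theta,\sigma_\theta^2)\,\mathcal{N}(a;\mu_p,\sigma_p^2)\,da=\mathcal{N}\!\left(\mu_\theta;\mu_p,\sigma_\theta^2+\sigma_p^2\right).
$$

For example, $\mu_\theta=0$, $\mu_p=2$, and $\sigma_\theta^2=\sigma_p^2=1$ give $\sigma_h^2=0.5$ and $\mu_h=1$: the hybrid mean is a precision-weighted average of the two means, and the hybrid variance is smaller than either input variance.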

Figure 5.

The SAC-HP framework. The hybrid policy generates the hybrid action directly by combining the SAC-based DRL policy with the classical (prior) policy based on the DWA controller. The DRL policy is an ensemble SAC policy, i.e., a mixture of individual SAC policies that take the state defined in Section 3.1 as input. The DWA controller uses the UTV’s position as input.

In the SAC algorithm, the actions output by the Actor network follow independent Gaussian distributions parameterized by the mean and variance of the output actions. To exploit the advantages of stochastic policies while reducing variance, we use ensemble learning-based uncertainty estimation techniques [26]. The ensemble consists of K agents, forming an approximately uniform and robust mixture model. The predicted outputs of the ensemble are fused into a single Gaussian distribution with a fused mean and variance, which are written as

where and represent the mean and variance of the individual DRL policy , respectively.
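A minimal sketch of the ensemble fusion, assuming the moment-matching rule commonly used for uniformly weighted deep ensembles [26]: the fused mean is the average of the member means, and the fused variance is the average of (variance + mean²) minus the fused mean squared. It operates independently per action dimension; the function names are hypothetical.

```python
# Illustrative moment-matching sketch; names are hypothetical.


def fuse_ensemble(means, variances):
    """Moment-match a uniform mixture of K Gaussians to a single Gaussian.

    means, variances: length-K sequences for one action dimension.
    Returns (mu_star, var_star) of the fused Gaussian.
    """
    k = len(means)
    if k == 0 or k != len(variances):
        raise ValueError("need K >= 1 means and K variances")
    mu_star = sum(means) / k
    second_moment = sum(v + m * m for m, v in zip(means, variances)) / k
    var_star = second_moment - mu_star * mu_star
    return mu_star, max(var_star, 0.0)  # guard against tiny negative round-off


def fuse_ensemble_actions(member_means, member_vars):
    """Apply fuse_ensemble independently to each action dimension."""
    dims = len(member_means[0])
    fused = [fuse_ensemble([m[d] for m in member_means], [v[d] for v in member_vars])
             for d in range(dims)]
    return [f[0] for f in fused], [f[1] for f in fused]
```

The fused variance includes both each member’s own variance and the spread between member means, so disagreement among the K policies shows up directly as higher uncertainty.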

Once the DWA controller predicts the optimal linear and angular velocities of the vehicle, the corresponding optimal angular velocities of the left and right drive wheels can be calculated from Equations (1) and (3) as

This yields the preliminary action output of the DWA controller. To obtain a distributional action from the prior controller, we assume a fixed variance for the prior policy, with its mean given by the prior controller’s deterministic output; the prior policy is thus a Gaussian distribution centered on the DWA action. The prior policy guides the agent within a limited region of the action space early in training, thereby avoiding unnecessary high-risk exploratory actions.
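Converting the DWA output into wheel angular velocities can be sketched under a no-slip assumption, where the linear velocity is v = r(ω_l + ω_r)/2 and the yaw rate is ω = r(ω_r − ω_l)/B; inverting gives ω_l = (v − ωB/2)/r and ω_r = (v + ωB/2)/r. This inversion, the optional clipping to a maximum wheel speed, and the function names are assumptions and may differ from the paper’s derivation from Equations (1) and (3).

```python
# Illustrative sketch of the DWA prior; names and no-slip inversion are assumptions.


def dwa_to_wheel_speeds(v, omega, r, B, omega_max=None):
    """Invert the no-slip relations to get (omega_l, omega_r) from (v, omega).

    v = r * (omega_l + omega_r) / 2 and omega = r * (omega_r - omega_l) / B.
    If omega_max is given, each wheel speed is clipped to [-omega_max, omega_max].
    """
    omega_l = (v - omega * B / 2.0) / r
    omega_r = (v + omega * B / 2.0) / r
    if omega_max is not None:
        omega_l = max(-omega_max, min(omega_max, omega_l))
        omega_r = max(-omega_max, min(omega_max, omega_r))
    return omega_l, omega_r


def dwa_prior(v, omega, r, B, prior_var, omega_max=None):
    """Gaussian prior over wheel speeds: mean = DWA action, fixed variance per wheel."""
    mean = list(dwa_to_wheel_speeds(v, omega, r, B, omega_max))
    return mean, [prior_var, prior_var]
```

Ignoring slip here is deliberate in the sketch: the DWA only needs to provide a reasonable suboptimal action, and compensating for slip is left to the learned policy.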

The details of the hybrid policy are described in Algorithm 1.

Algorithm 1. SAC-based Hybrid Policy

Require: Initialize an ensemble of K SAC policies; set the prior variance; initialize the experience replay buffer; set the update frequency and the maximum number of iterations.

In the SAC-HP framework, each iteration begins by sampling actions from an ensemble of K SAC policies. These K outputs are first aggregated into a robust, approximately uniform Gaussian distribution (i.e., Equations (27) and (28)), which captures policy uncertainty and reduces overfitting. In parallel, the DWA controller produces a deterministic action based on the current robot state, which is modeled as a fixed-variance Gaussian distribution. The two distributions are then fused into a hybrid Gaussian policy using Equation (31). An action sampled from the hybrid policy is executed in the environment, and the resulting transition is stored in the replay buffer. All ensemble SAC agents are then updated using the collected experience. This hierarchical fusion structure supports both safe exploration and generalizable policy learning in rugged terrain. Note that the hybrid action is sampled at every decision step during both training and evaluation, so the DWA prior continuously guides exploration throughout training and contributes to policy robustness during testing.
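One decision step of the hybrid policy can be sketched per action dimension, assuming the ensemble has already been fused into a mean and variance and the DWA prior is a fixed-variance Gaussian. The hybrid parameters follow the standard Gaussian product rule; clipping the sampled wheel speeds to the maximum rotational speed is an added assumption. The function names, the `rng` argument, and `omega_max` are hypothetical.

```python
import random

# Illustrative hybrid-policy step; names and clipping are assumptions.


def gaussian_product(mu_a, var_a, mu_b, var_b):
    """Normalized product of N(mu_a, var_a) and N(mu_b, var_b) for one dimension."""
    var_h = var_a * var_b / (var_a + var_b)
    mu_h = var_h * (mu_a / var_a + mu_b / var_b)
    return mu_h, var_h


def hybrid_action(drl_mean, drl_var, prior_mean, prior_var, omega_max, rng=random):
    """Sample a wheel-speed action from the fused DRL x DWA Gaussian and clip it."""
    action, params = [], []
    for mu_a, var_a, mu_b, var_b in zip(drl_mean, drl_var, prior_mean, prior_var):
        mu_h, var_h = gaussian_product(mu_a, var_a, mu_b, var_b)
        a = rng.gauss(mu_h, var_h ** 0.5)  # gauss() takes the standard deviation
        action.append(max(-omega_max, min(omega_max, a)))
        params.append((mu_h, var_h))
    return action, params
```

Because the weights are inverse variances, a confident (low-variance) ensemble dominates the hybrid mean, whereas an uncertain ensemble early in training is pulled toward the DWA action.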

5. Simulation Results

Several experiments were designed to compare the navigation performance of the proposed hybrid policy controller against baseline methods.

5.1. Simulation Environment Setting

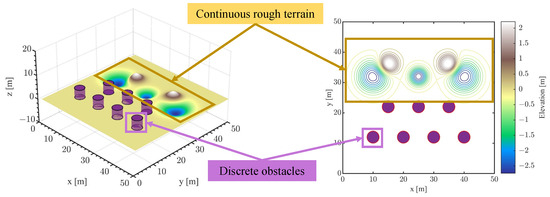

The simulation area (i.e., ) with unstructured obstacles was configured as shown in Figure 6. Purple cylindrical shapes represent discrete binary obstacles, while the contour regions indicate continuous rugged terrains. Assuming that the UTV will encounter random slipping disturbances within the rugged terrain areas, the task objective is to enable the UTV to navigate from the blue starting point to the red endpoint. The experimental configuration parameters and SAC training hyperparameters are listed in Table 2 and Table 3, respectively. Notably, the maximum rotational speed of the UTV’s left and right drive wheels is .

Figure 6.

Experimental scenario.

Table 2.

Parametric setting in the simulation.

Table 3.

Training configuration.

The control time step was set to 0.2 s throughout training and evaluation. This choice was motivated by three considerations: (1) The dynamic response delay of tracked vehicles is typically around 0.15–0.2 s, making finer control updates less impactful [1]. (2) The onboard sensor suite, including LiDAR, operates at a sampling frequency of 5 Hz, corresponding to one observation every 0.2 s. (3) Empirical tests indicate that this step size provides a good tradeoff between control accuracy and computational efficiency, especially in long-horizon navigation scenarios. This setting ensures stable policy learning without excessive computational overhead.

5.2. Performance of the Extended State Space

To evaluate the impact of the extended state space on the navigation performance of UTV, we trained four separate agents. The state space and training environment configurations for these agents are detailed in Table 4.

Table 4.

Configuration of agents and environment.

During training, each episode started with different initial positions and target points, enabling the agent to explore various behaviors and regions. For Agents 3 and 4, the map area within was configured as a low-friction region. When the UTV operates in this region, random slipping rates are applied to the left and right tracks. The slipping rates were uniformly sampled from the range .

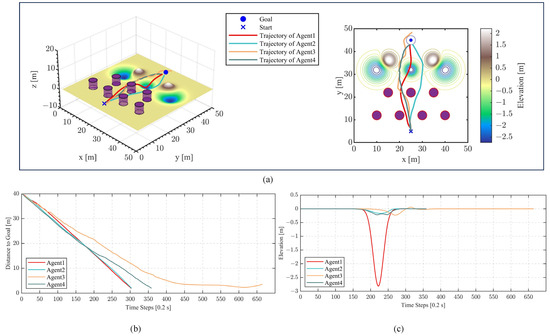

Figure 7 highlights the performance differences among the four agents. The blue curve in Figure 7c shows that incorporating elevation information into the state space allowed the UTV (i.e., Agent 2) to detect terrain undulations, enabling it to avoid deep pits and steep slopes while navigating through relatively smooth regions toward the target. In contrast, the UTV represented by the red curve in Figure 7c failed to avoid rugged terrain and fell into a deep pit at approximately the 200th time step.

Figure 7.

Performance of the extended state space. (a) The planned trajectories of the agents. (b) The temporal variation in the distance between the UTV and the target point. (c) The elevation values of the UTV’s position at each time step.

Under slip disturbances, the trajectory planned by Agent 3 exhibited significant oscillations and failed to reach the goal, as shown by the yellow curve in Figure 7c. In contrast, the trajectory planned by Agent 4 was much smoother, indicating that including the agent’s pose changes in the state space allows the UTV to effectively mitigate the effects of slip disturbances.

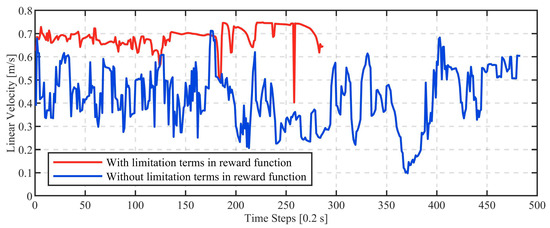

5.3. Reward Function Evaluation

To validate the influence of the energy optimization term in the reward function (24), we recorded the linear velocity over time during the driving stage. Figure 8 shows the velocity outputs of two agents during the driving stage, one trained with this term and one without it. Both agents were trained using the complete state space with random slip disturbances in the environment (i.e., the same configuration as Agent 4 in Section 5.2). As shown in Table 5, with the energy optimization term included in the reward function, the UTV reached the destination at the 287th time step, with an average linear velocity of 0.69 and a variance of 0.0019 (red curve). Without it, the UTV took 483 time steps to reach the destination, with an average linear velocity of 0.42 and a variance of 0.0152 (blue curve). These results indicate that the energy optimization term in the reward function (24) significantly reduces velocity oscillations, enabling the UTV to move more smoothly and at higher speeds.

Figure 8.

The linear velocity curve along with time.

Table 5.

Results of reward function evaluation.

5.4. Performance of SAC-HP

The SAC algorithm based on the extended state space enables the robot to handle continuous rugged terrain and slip disturbances, thereby improving the safety and robustness of navigation. However, in large-scale environments (i.e., long-sequence navigation environments) with extensive map coverage, the larger exploration space makes it difficult for the robot to reach the target. In addition, deep reinforcement learning generally generalizes poorly: when the robot enters an unknown environment, it is challenging to plan a collision-free path to the target with a model overfitted to previous experience.

To address the issue of slow exploration and difficulty in reaching the target point in long-sequence environments, the SAC-based Hybrid Policy (SAC-HP) algorithm is proposed.

We trained the agent in a long-sequence environment of 60 m by 100 m. The robot was able to avoid obstacles and navigate to the target with the trained model.

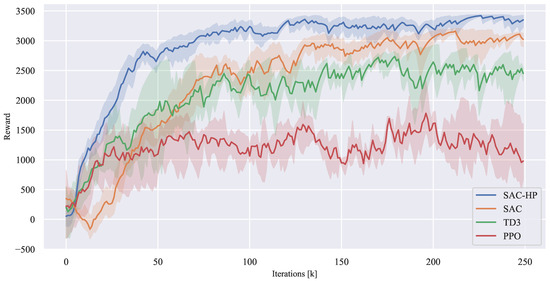

Figure 9 plots the cumulative reward obtained by the agent in each episode against the number of training iterations. As shown by the blue curve, the reward of SAC-HP converges at approximately 3400, a 16% improvement over the classic SAC algorithm, and SAC-HP converges significantly faster. This indicates that introducing DWA as a prior policy to guide the agent’s early exploration improves learning efficiency in long-sequence environments.

Figure 9.

Average reward curves.

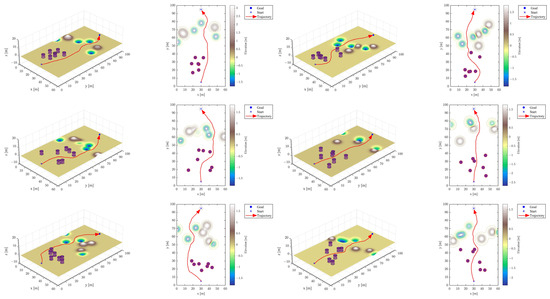

To validate the generalization performance of the model, we conducted tests in a random environment. As shown in Figure 10, the robot’s navigation trajectory in scenarios with random obstacles and terrains is plotted. The experimental results demonstrate that, in the random environment, the robot was able to successfully reach the target and avoid obstacles and rugged terrain.

Figure 10.

The optimal trajectory produced by SAC-HP controller in random environments.

5.5. Comparison Between Baselines

To evaluate the proposed SAC-HP algorithm, five baselines were selected for comparison (i.e., SAC [24], Twin Delayed Deep Deterministic Policy Gradient (TD3) [27], Proximal Policy Optimization (PPO) [28], APF [29], and DWA [30]). The experiments were conducted in two scenarios on a randomly generated 50 × 100 map: one without slip disturbances (i.e., Env.a) and one with slip disturbances (i.e., Env.b). Each experiment was repeated for 500 episodes.

We used four metrics to evaluate the algorithms, Average Path Length (APL), Average Time Steps (ATS), Elevation Standard Deviation (ESD), and Success Rate (SR), defined as follows:

- APL (Average Path Length): APL is the mean total length of the path traveled by the robot from the starting point to the target point across all successful episodes. It assesses the efficiency of the path planning algorithm: $\mathrm{APL} = \frac{1}{N_s}\sum_{i=1}^{N_s} L_i$, where $L_i$ is the total path length in the $i$-th successful episode and $N_s$ is the total number of successful episodes.

- ATS (Average Time Steps): ATS is the mean number of time steps the robot needs to complete the navigation task, measuring the temporal efficiency of the algorithm: $\mathrm{ATS} = \frac{1}{N_s}\sum_{i=1}^{N_s} T_i$, where $T_i$ is the total number of time steps in the $i$-th successful episode.

- ESD (Elevation Standard Deviation): For each episode, the elevation standard deviation measures the variation in the robot’s height along its navigation path, assessing the smoothness of the planned trajectory and the robot’s adaptability to complex terrain: $\sigma_k = \sqrt{\frac{1}{M}\sum_{j=1}^{M}\left(h_j - \bar{h}\right)^2}$, where $h_j$ is the height of the $j$-th point on the path of episode $k$, $\bar{h}$ is the mean height, and $M$ is the total number of path points. The per-episode values are then averaged over all episodes: $\mathrm{ESD} = \frac{1}{N}\sum_{k=1}^{N}\sigma_k$, where $N$ is the total number of episodes.

- SR (Success Rate): SR is the proportion of episodes in which the robot reached the target point, measuring the algorithm’s reliability and robustness: $\mathrm{SR} = \frac{N_s}{N} \times 100\%$, where $N_s$ is the number of successful episodes.
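These four metrics can be computed from per-episode logs as in the following sketch, which assumes each episode records the visited (x, y, z) positions, the step count, and a success flag. The `Episode` record and the function names are hypothetical; path length is measured in 3-D (an assumption, a planar length would drop z), and the standard deviation uses the population (1/M) form. APL and ATS average over successful episodes only, while ESD averages over all episodes.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres (assumed)


@dataclass
class Episode:
    path: List[Point]  # positions visited, in order
    steps: int         # number of control time steps
    success: bool      # True if the target was reached


def path_length(path: Sequence[Point]) -> float:
    """Sum of 3-D segment lengths along the path (L_i)."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def elevation_std(path: Sequence[Point]) -> float:
    """Population standard deviation of heights along one path (sigma_k)."""
    heights = [p[2] for p in path]
    mean = sum(heights) / len(heights)
    return math.sqrt(sum((h - mean) ** 2 for h in heights) / len(heights))


def evaluate(episodes: List[Episode]) -> Dict[str, float]:
    ok = [e for e in episodes if e.success]
    n, n_s = len(episodes), len(ok)
    nan = float("nan")
    return {
        "APL": sum(path_length(e.path) for e in ok) / n_s if n_s else nan,  # successful only
        "ATS": sum(e.steps for e in ok) / n_s if n_s else nan,              # successful only
        "ESD": sum(elevation_std(e.path) for e in episodes) / n,            # all episodes
        "SR": 100.0 * n_s / n,                                              # percent
    }


episodes = [
    Episode(path=[(0, 0, 0), (3, 4, 0), (3, 4, 2)], steps=10, success=True),
    Episode(path=[(0, 0, 1), (1, 0, 1)], steps=20, success=False),
]
print(evaluate(episodes))
```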

As shown in Table 6, in the scenario without slipping disturbances (Env.a), SAC-HP outperformed the baseline DRL algorithms, with a success rate improvement of over 6%. Compared with SAC, it also reduced APL by 1.9%, ATS by 4.5%, and ESD by 35%. In the scenario with slipping disturbances (Env.b), the performance of the DRL baselines dropped sharply, whereas SAC-HP maintained a success rate above 90%. These results indicate that SAC-HP completes navigation tasks robustly and reliably, even in challenging environments.
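For a lower-is-better metric, the relative improvement is the reduction divided by the baseline value. Using the ESD values reported in the Conclusions (from 0.175 m for SAC to 0.113 m for SAC-HP), the 35% figure can be checked as follows:

$$
\frac{\mathrm{ESD}_{\mathrm{SAC}} - \mathrm{ESD}_{\mathrm{SAC\text{-}HP}}}{\mathrm{ESD}_{\mathrm{SAC}}}
= \frac{0.175\,\mathrm{m} - 0.113\,\mathrm{m}}{0.175\,\mathrm{m}}
= \frac{0.062}{0.175} \approx 0.354 \approx 35\%
$$

The APL (1.9%) and ATS (4.5%) reductions would follow the same formula applied to the corresponding entries of Table 6.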

Table 6.

Performance comparison of SAC-HP and baseline algorithms across metrics.

Without slipping disturbances, APF and DWA achieved higher success rates than some DRL algorithms. When slipping disturbances were introduced, however, the performance of these traditional local planners deteriorated significantly, indicating that DRL-based algorithms, particularly SAC-HP, cope better with random disturbances. Traditional planners also struggled on rugged terrain, as shown by their markedly higher elevation standard deviations than the DRL algorithms in each experimental episode. This supports the advantage of DRL algorithms in generating smoother trajectories and maintaining stability under complex terrain conditions.

6. Conclusions

This paper presents a novel approach for real-time autonomous path planning of UTVs in unknown off-road environments, utilizing the SAC-based Hybrid Policy (SAC-HP) algorithm. By integrating the kinematic analysis of tracked vehicles, a new state space and action space are designed to account for rugged terrain features and the interaction between the tracks and the ground. This enables the UTV to implicitly learn policies for safely traversing rugged terrains while minimizing the effects of slipping disturbances.

The proposed SAC-HP algorithm combines deep reinforcement learning (DRL) with a prior control policy (the Dynamic Window Approach, DWA) to improve exploration efficiency and generalization in long-sequence environments. Experimental results show that SAC-HP converged 16% faster than the traditional SAC algorithm and achieved a success rate more than 6% higher in rough terrain scenarios. It also reduced the elevation standard deviation by 35% (from 0.175 m to 0.113 m), indicating smoother trajectories, and decreased the average time steps by 4.5%. The energy optimization term in the reward function also effectively reduced velocity oscillations, allowing the robot to move more smoothly and at higher speeds.

Tests in random environments with obstacles and rugged terrain demonstrate the robustness of the model: the robot autonomously avoided obstacles and navigated to the target location, even in unknown environments. These results highlight the potential of the SAC-HP algorithm to enhance the safety, robustness, and efficiency of UTVs in complex environments.

Although the SAC-HP algorithm demonstrates strong performance in autonomous navigation for UTVs, several potential improvements can be explored in future work. First, incorporating additional environmental factors, such as water currents and dynamic obstacles, could enhance the algorithm’s adaptability to complex off-road environments. Second, the scalability of the algorithm to larger and more complex terrains, particularly in high-dimensional state and action spaces, warrants further investigation. Moreover, although the SAC-HP agent significantly reduces training time compared to vanilla SAC, achieving satisfactory performance within extremely low iteration counts remains a challenge. To address this, future work could explore few-shot reinforcement learning [31] or RL with expert demonstrations [32] to enable rapid policy adaptation in resource-constrained or time-critical deployment scenarios.

In addition, real-world deployment remains a major challenge for DRL-based methods. Potential remedies such as domain randomization [33], noise injection during training [34], and human-guided RL [35] may help close the simulation-to-reality gap. Moreover, robust sensor fusion using IMU, LiDAR, and visual input can enhance policy generalization under uncertain terrain conditions [36,37]. Future extensions of SAC-HP will integrate these mechanisms to facilitate more reliable and scalable deployment in the field.

Future work could also explore extending the SAC-HP framework to aerial systems, such as fixed-wing UAVs. For example, the ensemble-based policy fusion used in our UTV strategy could be adapted to address UAV-specific constraints (e.g., roll angle limits and aerodynamic load factors). Insights from UAV trajectory optimization studies [38] could inform such extensions, bridging the gap between ground and aerial autonomous navigation.

Author Contributions

Conceptualization, Y.X., S.Z., and D.Z.; methodology, Y.X. and S.Z.; software, S.Z. and Y.F.; validation, S.Z. and D.Z.; formal analysis, Y.X. and S.Z.; resources, Y.X. and D.Z.; data curation, D.Z. and Y.F.; visualization, S.Z. and Y.F.; writing—original draft preparation, Y.X.; writing—review and editing, D.Z. and M.V.; supervision, Y.X., D.Z., and M.V.; project administration, D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 52377117).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Amokrane, S.B.; Laidouni, M.Z.; Adli, T.; Madonski, R.; Stanković, M. Active disturbance rejection control for unmanned tracked vehicles in leader–follower scenarios: Discrete-time implementation and field test validation. Mechatronics 2024, 97, 103114.

- Ugenti, A.; Galati, R.; Mantriota, G.; Reina, G. Analysis of an all-terrain tracked robot with innovative suspension system. Mech. Mach. Theory 2023, 182, 105237.

- Zou, T.; Angeles, J.; Hassani, F. Dynamic modeling and trajectory tracking control of unmanned tracked vehicles. Robot. Auton. Syst. 2018, 110, 102–111.

- Zhai, L.; Liu, C.; Zhang, X.; Wang, C. Local Trajectory Planning for Obstacle Avoidance of Unmanned Tracked Vehicles Based on Artificial Potential Field Method. IEEE Access 2024, 12, 19665–19681.

- Aradi, S. Survey of Deep Reinforcement Learning for Motion Planning of Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 740–759.

- Zhang, Y.; Zhao, W.; Wang, J.; Yuan, Y. Recent progress, challenges and future prospects of applied deep reinforcement learning: A practical perspective in path planning. Neurocomputing 2024, 608, 128423.

- Gök, M. Dynamic path planning via Dueling Double Deep Q-Network (D3QN) with prioritized experience replay. Appl. Soft Comput. 2024, 158, 111503.

- Maoudj, A.; Hentout, A. Optimal path planning approach based on Q-learning algorithm for mobile robots. Appl. Soft Comput. 2020, 97, 106796.

- Zhou, W.; Zhang, C.; Chen, S. Dual deep Q-learning network guiding a multiagent path planning approach for virtual fire emergency scenarios. Appl. Intell. 2023, 53, 21858–21874.

- Rao, Z.; Wu, Y.; Yang, Z.; Zhang, W.; Lu, S.; Lu, W.; Zha, Z. Visual Navigation With Multiple Goals Based on Deep Reinforcement Learning. IEEE Trans. Neural Networks Learn. Syst. 2021, 32, 5445–5455.

- Guo, H.; Ren, Z.; Lai, J.; Wu, Z.; Xie, S. Optimal navigation for AGVs: A soft actor–critic-based reinforcement learning approach with composite auxiliary rewards. Eng. Appl. Artif. Intell. 2023, 124, 106613.

- Li, C.; Yue, X.; Liu, Z.; Ma, G.; Zhang, H.; Zhou, Y.; Zhu, J. A modified dueling DQN algorithm for robot path planning incorporating priority experience replay and artificial potential fields. Appl. Intell. 2025, 55, 366.

- Zhang, Y.; Li, C. On Hierarchical Path Planning Based on Deep Reinforcement Learning in Off-Road Environments. In Proceedings of the 2024 10th International Conference on Automation, Robotics and Applications (ICARA), Athens, Greece, 22–24 February 2024; pp. 461–465.

- Tang, C.; Peng, T.; Xie, X.; Peng, J. 3D path planning of unmanned ground vehicles based on improved DDQN. J. Supercomput. 2025, 81, 276.

- Zhang, K.; Niroui, F.; Ficocelli, M.; Nejat, G. Robot Navigation of Environments with Unknown Rough Terrain Using Deep Reinforcement Learning. In Proceedings of the 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics (SSRR), Philadelphia, PA, USA, 6–8 August 2018; pp. 1–7.

- Weerakoon, K.; Sathyamoorthy, A.J.; Patel, U.; Manocha, D. TERP: Reliable Planning in Uneven Outdoor Environments using Deep Reinforcement Learning. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 9447–9453.

- Nguyen, A.; Nguyen, N.; Tran, K.; Tjiputra, E.; Tran, Q.D. Autonomous Navigation in Complex Environments with Deep Multimodal Fusion Network. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 5824–5830.

- Viadero-Monasterio, F.; Meléndez-Useros, M.; Zhang, H.; Boada, B.L.; Boada, M.J.L. Signalized Traffic Management Optimizing Energy Efficiency Under Driver Preferences for Vehicles With Heterogeneous Powertrains. IEEE Trans. Consum. Electron. 2025.

- Rana, K.; Dasagi, V.; Haviland, J.; Talbot, B.; Milford, M.; Sünderhauf, N. Bayesian controller fusion: Leveraging control priors in deep reinforcement learning for robotics. Int. J. Robot. Res. 2023, 42, 123–146.

- Wu, J.; Huang, Z.; Hu, Z.; Lv, C. Toward Human-in-the-Loop AI: Enhancing Deep Reinforcement Learning via Real-Time Human Guidance for Autonomous Driving. Engineering 2023, 21, 75–91.

- Cheng, R.; Verma, A.; Orosz, G.; Chaudhuri, S.; Yue, Y.; Burdick, J. Control regularization for reduced variance reinforcement learning. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 1141–1150.

- Johannink, T.; Bahl, S.; Nair, A.; Luo, J.; Kumar, A.; Loskyll, M.; Ojea, J.A.; Solowjow, E.; Levine, S. Residual Reinforcement Learning for Robot Control. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 6023–6029.

- Sabiha, A.D.; Kamel, M.A.; Said, E.; Hussein, W.M. ROS-based trajectory tracking control for autonomous tracked vehicle using optimized backstepping and sliding mode control. Robot. Auton. Syst. 2022, 152, 104058.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870.

- Shi, H.; Shi, L.; Xu, M.; Hwang, K.S. End-to-End Navigation Strategy With Deep Reinforcement Learning for Mobile Robots. IEEE Trans. Ind. Inform. 2020, 16, 2393–2402.

- Lakshminarayanan, B.; Pritzel, A.; Blundell, C. Simple and scalable predictive uncertainty estimation using deep ensembles. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2017; Volume 30.

- Fujimoto, S.; van Hoof, H.; Meger, D. Addressing Function Approximation Error in Actor-Critic Methods. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Proceedings of Machine Learning Research, Volume 80, pp. 1587–1596.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Yang, H.; He, Y.; Xu, Y.; Zhao, H. Collision Avoidance for Autonomous Vehicles Based on MPC With Adaptive APF. IEEE Trans. Intell. Veh. 2024, 9, 1559–1570.

- Yang, H.; Xu, X.; Hong, J. Automatic Parking Path Planning of Tracked Vehicle Based on Improved A* and DWA Algorithms. IEEE Trans. Transp. Electrif. 2023, 9, 283–292.

- Wang, Z.; Fu, Q.; Chen, J.; Wang, Y.; Lu, Y.; Wu, H. Reinforcement learning in few-shot scenarios: A survey. J. Grid Comput. 2023, 21, 30.

- Elallid, B.B.; Benamar, N.; Bagaa, M.; Kelouwani, S.; Mrani, N. Improving Reinforcement Learning with Expert Demonstrations and Vision Transformers for Autonomous Vehicle Control. World Electr. Veh. J. 2024, 15, 585.

- Garcia, R.; Strudel, R.; Chen, S.; Arlaud, E.; Laptev, I.; Schmid, C. Robust Visual Sim-to-Real Transfer for Robotic Manipulation. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 992–999.

- Joshi, B.; Kapur, D.; Kandath, H. Sim-to-Real Deep Reinforcement Learning Based Obstacle Avoidance for UAVs Under Measurement Uncertainty. In Proceedings of the 2024 10th International Conference on Automation, Robotics and Applications (ICARA), Athens, Greece, 22–24 February 2024; pp. 278–284.

- Wu, J.; Zhou, Y.; Yang, H.; Huang, Z.; Lv, C. Human-Guided Reinforcement Learning With Sim-to-Real Transfer for Autonomous Navigation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 14745–14759.

- Ou, Y.; Cai, Y.; Sun, Y.; Qin, T. Autonomous Navigation by Mobile Robot with Sensor Fusion Based on Deep Reinforcement Learning. Sensors 2024, 24, 3895.

- Tan, J. A Method to Plan the Path of a Robot Utilizing Deep Reinforcement Learning and Multi-Sensory Information Fusion. Appl. Artif. Intell. 2023, 37, 2224996.

- Machmudah, A.; Shanmugavel, M.; Parman, S.; Manan, T.S.A.; Dutykh, D.; Beddu, S.; Rajabi, A. Flight Trajectories Optimization of Fixed-Wing UAV by Bank-Turn Mechanism. Drones 2022, 6, 69.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).