1. Introduction

With the rapid advancement of intelligent driving technology in recent years, object detection has been increasingly applied in autonomous driving, intelligent surveillance, and related fields. However, in complex degraded environments such as nighttime, low-light, haze, or polluted road surfaces (e.g., harbor pavements with tire marks or unclean conditions), the significant degradation of image quality makes it difficult for traditional detection algorithms to extract meaningful features, leading to a marked decline in both detection accuracy and robustness [1,2]. In particular, “small objects” (defined in this study as targets occupying relatively few pixels in an image, such as distant pedestrians, traffic signs, and far-away vehicles) are more likely to be overlooked due to their low resolution and inconspicuous features [3,4,5]. This makes small-object detection under degraded visual conditions a critical weakness in current computer vision systems. Moreover, since autonomous driving and surveillance applications require reliable detection across different scales of objects (from pedestrians to large vehicles), robust multi-scale feature representation is equally important. Therefore, accurately locating and recognizing both small and larger targets in low-quality images has become a key challenge in contemporary visual perception research, motivating the need for more effective and consistent detection frameworks [6,7,8].

Recent studies on small object detection have primarily focused on feature fusion and lightweight network designs. Methods such as ASFF [9] and CSPNet [10] have achieved notable results in general scenarios. However, issues such as limited adaptability and inefficient multi-scale feature interaction remain prominent in degraded environments. To enhance model robustness, Chen et al. [11] proposed Dual Perturbation Optimization (DPO), which minimizes loss function sharpness by simultaneously applying adversarial perturbations to both model weights and the input feature space, significantly improving the generalization of detection models in noisy and degraded conditions. Similarly, Shi et al. [12] introduced ASG-YOLOv5, which enhances the detection accuracy and real-time performance for small objects in UAV remote sensing images by integrating a dynamic context attention module and a spatial gating fusion module. Khalili and Smyth [5] presented SOD-YOLOv8, combining an efficient general feature pyramid (GFPN), a high-resolution detection layer, and an EMA attention mechanism, while proposing a novel Powerful IoU loss to improve the detection accuracy of small and medium-sized objects in traffic scenes at minimal computational cost.

Object detection, as a fundamental task in computer vision, aims to automatically locate and classify objects within images or videos. Its core techniques include backbone network design, data augmentation strategies, loss function optimization, and model compression and deployment. Among these, the YOLO (You Only Look Once) series has garnered significant attention as a representative one-stage object detection framework due to its high efficiency and real-time performance.

Moreover, several researchers have turned their attention to object detection in degraded and low-resolution environments. Liu et al. [13] proposed Image-Adaptive YOLO (IA-YOLO), based on the YOLOv3 [14] framework, by incorporating a differentiable image enhancement branch that enables adaptive preprocessing and joint training with the detection head, thereby improving performance in hazy and dim conditions. Further, Liu et al. [15] introduced Dark YOLO, which integrates SimAM local attention and dimensional complementary attention, alongside a SCINet-based cascade illumination enhancement structure, enabling robust detection in extremely dark scenarios. Lan et al. [16] employed decoupled contrast translation to enhance detection accuracy in nighttime surveillance. RestoreDet, proposed by Cui et al. [17], improves detection stability on low-resolution degraded images by designing a degradation-equivariant representation mechanism and integrating a super-resolution reconstruction branch for auxiliary training. Recently, Wang et al. [18] proposed UniDet-D, which incorporates a unified dynamic spectral attention mechanism to adaptively focus on critical spectral components under complex conditions such as rain, fog, darkness, and dust. This design achieves end-to-end fusion of image enhancement and object detection, ensuring consistent high performance across various degraded environments. Additionally, Hong et al. [19] introduced an illumination-invariant learning strategy that significantly enhances robustness under low-light conditions by decoupling feature extraction from illumination cues, while Tran et al. [20] combined a low-light enhancement framework with fisheye camera detection for intelligent surveillance, further emphasizing the importance of illumination-adaptive modules in real-world perception systems.

In recent years, the integration of LiDAR and image-based perception has gained significant attention in autonomous driving and UAV research. LiDAR-based detection excels at providing accurate depth and spatial geometry, whereas image-based methods are superior in semantic and texture representation. Recent surveys such as Wang et al. [21] highlight that multimodal fusion of LiDAR and visual cues can substantially enhance robustness under adverse weather or low-light conditions. However, the computational cost and sensor calibration complexity often limit the deployment of LiDAR-based systems in small-scale UAVs or embedded platforms. Conversely, image-based detectors, such as DRF-YOLO, can achieve competitive performance through efficient illumination adaptation and multi-scale enhancement, providing a lightweight yet effective alternative for small-object detection tasks. Furthermore, Nikouei et al. [3] and Mukherjee et al. [22] provide comprehensive overviews and multimodal strategies for small object detection under occlusion, blur, and illumination degradation, which are the issues that this work directly addresses through architectural and loss function optimization.

Since the introduction of YOLOv1 by Redmon et al. [23], the YOLO family has evolved rapidly. YOLOv1 pioneered the integration of object localization and classification into a unified end-to-end neural network, eliminating the need for region proposal stages as used in traditional two-stage detectors. Unlike two-stage detectors such as Faster R-CNN [24], YOLO directly divides the image into grids for joint object classification and bounding box regression, significantly improving inference speed while maintaining competitive accuracy. This advantage makes YOLO particularly suitable for industrial applications requiring real-time feedback.

The evolution of the YOLO family has led to notable improvements in accuracy, speed, and architectural efficiency. YOLOv2 [25] introduced Batch Normalization (BN) in all convolutional layers and adopted high-resolution inputs (448 × 448), leading to an overall mAP improvement of approximately 6.4%. Specifically, BN provided regularization and faster convergence (+2.4% mAP), and a high-resolution classifier pretrained on ImageNet contributed a further +4% gain. YOLOv3 adopted a new backbone, Darknet-53, and a multi-scale detection strategy (from 13 × 13 to 52 × 52 grid sizes for a 416 × 416 input), alongside residual connections, resulting in a performance boost of around 12% on the PASCAL VOC dataset [14].

YOLOv5 incorporated Automatic Mixed Precision (AMP) training [26], which reduced training time by approximately 40% using FP16/FP32 computation. YOLOv8 further innovated by unifying detection, segmentation, and pose estimation into a single architecture, while supporting dynamic model scaling from 2.3 M to 43.7 M parameters to adapt across various computational platforms. YOLOv9 introduced Programmable Gradient Information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN) [27], enhancing gradient modeling and detection accuracy while maintaining computational efficiency. YOLOv10 removed the conventional Non-Maximum Suppression (NMS) module and introduced a dual assignment strategy and a lightweight classification head, further improving inference speed [28]. The most recent YOLOv11 optimized its data augmentation policies, network structure, and loss functions, achieving a better trade-off between accuracy and efficiency in challenging environments.

Despite considerable progress, existing detection algorithms still face challenges when detecting small objects under combined degradation conditions such as low light, haze, and nighttime scenarios. The performance degradation and limited generalization in such environments motivate the need for more robust models. In this work, we propose DRF-YOLO (Degradation-Robust and Feature-enhanced YOLO), a novel detection framework based on YOLOv11, specifically tailored for small object detection in degraded environments. Our main contributions are summarized as follows:

Lightweight Feature Module: We propose CSP-MSEE (CSP Multi-Scale Edge Enhancement), a lightweight module based on the original C3k2 block from YOLOv11. This module integrates multi-scale pooling and edge-aware enhancements to improve feature representation and boundary perception while reducing parameter count and computational complexity.

Multi-Scale Attention Mechanism: We replace the original SPPF module with the Focal Modulation [29] mechanism. This attention-based design enhances the model’s sensitivity to both local and global semantic contexts and improves detection robustness for small objects across varying scales.

Dynamic Head Design: A novel Dynamic Interaction Head (DIH) is designed, integrating task alignment and multi-task interaction. It utilizes shared convolutions, scale-aware encoding [30], Group Normalization [31], and Deformable Convolution v2 (DCNv2) [32], enabling more flexible feature fusion between classification and regression tasks, especially for targets with varying sizes and shapes.

Robustness in Degraded Environments: We incorporate an unsupervised image enhancement algorithm, Zero-DCE [33], during training to improve visibility in low-light and low-contrast conditions. Additionally, we replace traditional IoU/CIoU with the GIoU loss [34] to improve bounding box localization in foggy and blurred edge conditions.

In summary, DRF-YOLO achieves a balanced trade-off among accuracy, robustness, and computational efficiency through structural innovation and feature enhancement strategies, offering a promising solution for small object detection under various degraded environmental conditions.

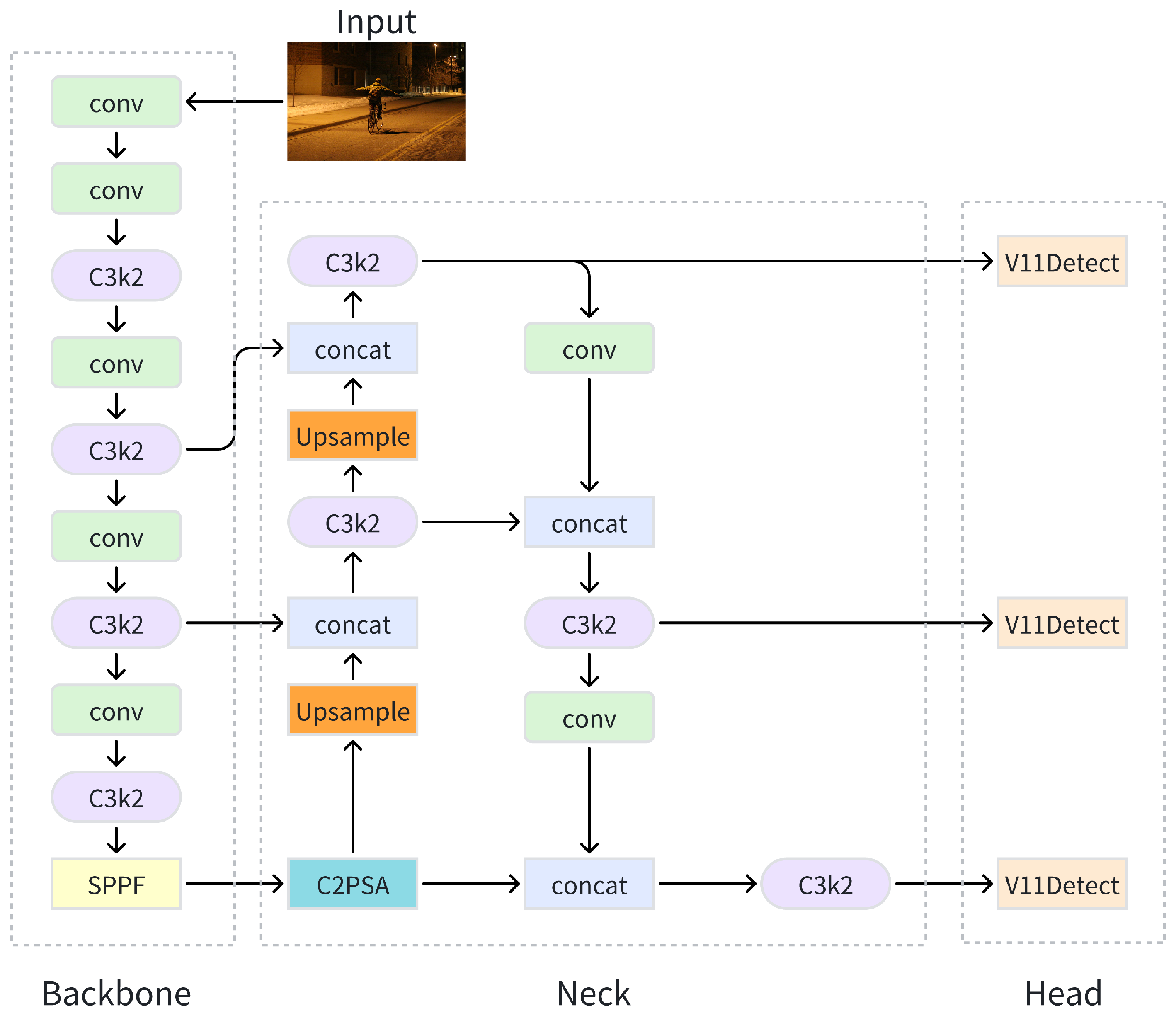

3. Improved DRF-YOLO Algorithm

To enhance the robustness and accuracy of object detection models under nighttime, low-light, and various degraded conditions, this paper proposes a novel lightweight detection algorithm named DRF-YOLO (Degradation-Robust and Feature-enhanced YOLO), based on YOLOv11, as illustrated in Figure 2. The proposed algorithm introduces systematic improvements in network architecture, feature enhancement strategies, and environmental adaptability.

First, a self-designed module named CSP-MSEE (CSP Multi-Scale Edge Enhancement) is introduced to replace the original C3k2 structure in the backbone. This module integrates multi-scale feature extraction with edge enhancement. By employing multi-scale pooling techniques such as nn.AdaptiveAvgPool2d, the model captures features across various receptive fields. Coupled with the EdgeEnhancer sub-module, it precisely highlights object contours. The extracted multi-scale features are then aligned and fused through convolutional layers, significantly enhancing both edge sensitivity and multi-scale feature representation.

Second, to further improve the model’s ability to perceive key object regions, the original Spatial Pyramid Pooling Fast (SPPF) module is replaced with a Focal Modulation attention mechanism. Unlike conventional spatial pooling, Focal Modulation leverages both local spatial cues and global context to flexibly construct semantic representations of salient regions. This not only strengthens contextual understanding in cluttered scenes but also improves detection of small objects and enhances robustness in low-contrast and noisy conditions.

For the detection head, a new architecture called Dynamic Interaction Head (DIH) is designed. It incorporates the Adaptive Spatial Feature Fusion (ASFF) mechanism along with a P2 layer for small object detection [37], enabling cross-scale feature fusion with precise spatial alignment. This yields improvements in both localization precision and detail recovery in small object scenarios.

At the image preprocessing stage, an unsupervised enhancement algorithm called Zero-DCE is employed. It performs end-to-end brightness and contrast correction for low-light inputs, thereby optimizing the input quality in nighttime environments.

In terms of the loss function, the original Complete IoU (CIoU) regression loss [38] is replaced with Generalized IoU (GIoU) to improve bounding box localization and mitigate spatial ambiguity in degraded images.

Through these enhancements, DRF-YOLO achieves improved detection accuracy for small objects and exhibits strong robustness in adverse environments while maintaining a lightweight architecture. Experimental results demonstrate its superior performance in degraded conditions such as nighttime, low-light, and foggy scenarios.

3.1. CSP-MSEE Module

To enhance the network’s ability to perceive and represent multi-scale edge information, this paper introduces a lightweight feature enhancement module named CSP-MSEE (CSP Multi-Scale Edge Enhancement), as illustrated in Figure 3. The module is integrated into the C3k and C3k2 components of the YOLOv11 backbone, combining three core advantages: multi-scale feature extraction, edge information enhancement, and an efficient convolutional structure. This design effectively reduces computational overhead while significantly improving detection performance for small targets and blurred boundaries in degraded environments.

The CSP-MSEE module comprises two primary components:

Multi-Scale Feature Extraction Channel: This sub-module employs adaptive average pooling at four output scales to capture contextual information across diverse receptive fields. Channel adaptation and local feature modeling are achieved through pointwise convolutions followed by depthwise separable convolutions, ensuring efficient processing.

EdgeEnhancer Module: As shown in Figure 4, this sub-module first applies local average pooling to extract low-frequency background responses from the input feature maps. It then subtracts this smoothed representation from the original feature map to isolate high-frequency edge details. The resulting edge signals are further amplified using a Sigmoid-activated convolutional layer. Finally, the enhanced edge features are combined with the original input via residual fusion to enrich the final representation.

All scale-enhanced feature maps are upsampled to a unified spatial resolution, concatenated with local feature branches, and fused through a convolution layer to produce the module’s output.
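The EdgeEnhancer steps described above (smooth, subtract, gate, add back) can be illustrated with a minimal, framework-free sketch on a 1-D signal. This is not the authors' implementation: the function names, the window size `k`, and the scalar `gain` that stands in for the learned Sigmoid-activated convolution are all assumptions chosen for illustration.

```python
import math


def local_average(signal, k=3):
    """Box filter with edge replication; stands in for local average pooling."""
    r = k // 2
    n = len(signal)
    out = []
    for i in range(n):
        # Clamp indices so border samples reuse the nearest valid value.
        window = [signal[min(max(j, 0), n - 1)] for j in range(i - r, i + r + 1)]
        out.append(sum(window) / k)
    return out


def edge_enhance(signal, k=3, gain=4.0):
    """Return (high_frequency, enhanced) for a 1-D signal.

    high_frequency = signal - local_average(signal)
    enhanced       = signal + sigmoid(gain * high_frequency)  # residual fusion
    `gain` is a hypothetical stand-in for the learned convolution weights.
    """
    smooth = local_average(signal, k)
    high = [x - s for x, s in zip(signal, smooth)]
    gate = [1.0 / (1.0 + math.exp(-gain * h)) for h in high]
    enhanced = [x + g for x, g in zip(signal, gate)]
    return high, enhanced


# A step edge: the high-frequency response is nonzero only next to the step.
high, enhanced = edge_enhance([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])
```

In this toy case the difference between the two samples on either side of the step grows after enhancement, which mirrors the contour-highlighting role the text attributes to the sub-module.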

In terms of design philosophy, CSP-MSEE inherits the residual connection and channel-splitting strategies from the original C3 module in YOLOv11, maintaining a balance between network depth and width. This architecture not only improves the model’s sensitivity to multi-scale and edge-level details but also preserves computational efficiency, thereby offering robust support for visual perception tasks under complex lighting and degraded environmental conditions.

3.2. Focal Modulation Module

To enhance the model’s ability to selectively model critical information across diverse receptive fields, we propose the Focal Modulation Attention Enhancement Module as a key feature interaction component. This module leverages a gating-guided multi-scale contextual modeling mechanism to effectively regulate local–global information fusion while maintaining low computational complexity. Consequently, it improves the network’s adaptability to complex backgrounds and multi-scale object scenarios.

Unlike traditional self-attention mechanisms that rely on pairwise token similarity calculations, the proposed Focal Modulation module employs a combination of focal context modulation, gated aggregation, and element-wise affine transformations. Specifically, it utilizes multi-scale contextual convolutions to extract features from varying receptive fields. Learnable gating functions are then applied to adaptively adjust the weighting of contextual features across layers, enhancing the model’s focus on task-relevant regions.

A global context aggregation branch, comprising global average pooling and non-linear activation, is further incorporated to strengthen long-range dependency modeling, enabling more comprehensive visual field control. The architecture of the proposed module is illustrated in Figure 5.

3.3. Self-Developed Task-Aligned Dynamic DIH Detection Head

To further improve the generalization ability and localization accuracy of detection heads under multi-scale targets and complex backgrounds, we propose a self-developed Dynamic Interaction Head (DIH). DIH integrates key techniques such as task decomposition mechanisms, dynamic convolutional alignment, offset-guided attention, and class probability enhancement. Its design aims to efficiently decouple task-specific features, achieve spatial alignment, and enable precise multi-scale fusion.

The DIH adopts adaptive spatial feature fusion strategies similar to ASFF and the improved YOLOv8 detection head. It is further enhanced with multi-scale feature pyramid networks and attention mechanisms to improve detection robustness across various target sizes. The architecture follows the processing pipeline of “sharing, decoupling, alignment, and fusion”, as illustrated in Figure 6. The key components of DIH are described below:

Shared Feature Extraction Module: A two-layer Conv_GN structure extracts shallow features from the input and forms a unified feature representation shared by downstream tasks.

Task Decomposition Module: Inspired by DyHead, two TaskDecomposition modules are used to decouple classification and regression branches. Global average pooling is used to guide cross-channel attention, allowing task-specific adaptation.

Offset-Guided Dynamic Convolution Alignment Module: The regression branch incorporates DyDCNv2, combining offsets and masks for spatially adaptive convolution. A convolutional layer dynamically generates spatial offsets and masks to adjust sampling points and aggregation weights, improving spatial alignment and edge localization.

Class Probability Alignment Module (CLSProbAlign): A class probability heatmap is explicitly constructed via two convolution layers. This heatmap is element-wise multiplied with classification features to guide them toward target regions and reduce background interference.

Prediction Branches:

Scale Regulator (Scale): Before final output, each feature layer includes a learnable Scale parameter to adaptively fine-tune the offset amplitude of predicted bounding boxes.

DFL Decoder: A Distribution Focal Loss (DFL) decoder is employed to transform the regression logits into continuous bounding box offset values.
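The DFL decoding step can be sketched as follows. This is a minimal illustration under assumptions, not the DIH implementation: it assumes that each box side is predicted as logits over discrete distance bins, that the decoded value is the softmax-weighted expected bin index, and that the four decoded distances (left, top, right, bottom) are measured from an anchor point and scaled by the feature-map stride. The function names and the example values are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dfl_decode(side_logits):
    """Expected bin index under the softmax distribution of one box side."""
    probs = softmax(side_logits)
    return sum(i * p for i, p in enumerate(probs))


def decode_box(logits_ltrb, anchor_xy, stride):
    """Turn four per-side logit vectors into (x1, y1, x2, y2) in pixels.

    Assumes distances are expressed in stride units relative to the anchor.
    """
    left, top, right, bottom = (dfl_decode(s) * stride for s in logits_ltrb)
    ax, ay = anchor_xy
    return (ax - left, ay - top, ax + right, ay + bottom)


# Uniform logits over 4 bins give an expected distance of 1.5 bins per side.
uniform = [0.0, 0.0, 0.0, 0.0]
box = decode_box([uniform] * 4, anchor_xy=(10.0, 10.0), stride=8)
```

Because the output is an expectation over bins rather than a single arg-max bin, the decoded offsets are continuous, which is the property the text attributes to the decoder.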

Although the proposed DIH draws inspiration from dynamic detection heads such as DyHead and ATSS, it introduces several key differences. Unlike DyHead, which primarily relies on iterative attention layers for task interaction, DIH employs a task decomposition mechanism that explicitly separates classification and regression through dedicated modules, ensuring clearer task boundaries and reduced feature entanglement. Compared to ATSS Head, which focuses on adaptive sample selection to balance positive and negative anchors, DIH emphasizes offset-guided dynamic convolution alignment, allowing spatially precise feature aggregation at the pixel level. Moreover, the integration of a Class Probability Alignment branch is unique to DIH, guiding classification features toward target regions while suppressing background noise. These design choices collectively differentiate DIH from existing approaches, highlighting its stronger adaptability to degraded environments and small-object detection scenarios.

By combining DyDCNv2 with offset-guided attention, DIH achieves pixel-level precise spatial alignment, enhancing edge regression accuracy. The TaskDecomposition modules enable classification to focus on semantic structure, while regression emphasizes boundary geometry. Furthermore, the Class Probability Alignment mechanism enhances spatial attention guidance by highlighting target regions and suppressing background noise, ultimately improving detection precision in complex scenarios.

3.4. Design Rationale and Synergistic Effect

The proposed DRF-YOLO is not merely a collection of isolated modules but a carefully designed architecture where each component addresses specific challenges while complementing others. The CSP-MSEE module strengthens multi-scale feature extraction and edge enhancement, ensuring that even in low-contrast regions, object contours are preserved. Building upon this foundation, the Focal Modulation module adaptively emphasizes task-relevant regions by combining local and global contextual cues, which enhances semantic understanding in cluttered or degraded scenes. The Dynamic Interaction Head (DIH) then leverages these enriched representations by performing precise cross-scale feature fusion and task-aligned dynamic alignment, thereby improving both classification confidence and bounding box localization, especially for small or blurred objects. Finally, the Zero-DCE preprocessing step ensures that the input images themselves are optimized for subsequent feature extraction, further amplifying the effectiveness of downstream modules.

By integrating these modules into a unified framework, DRF-YOLO achieves a synergistic improvement: edge-aware multi-scale features are contextually refined and dynamically aligned across detection tasks, while input enhancement reduces the burden on feature extractors. This holistic design philosophy explains why DRF-YOLO demonstrates superior robustness and accuracy in adverse conditions such as nighttime, low-light, and foggy environments, without significantly increasing model complexity.

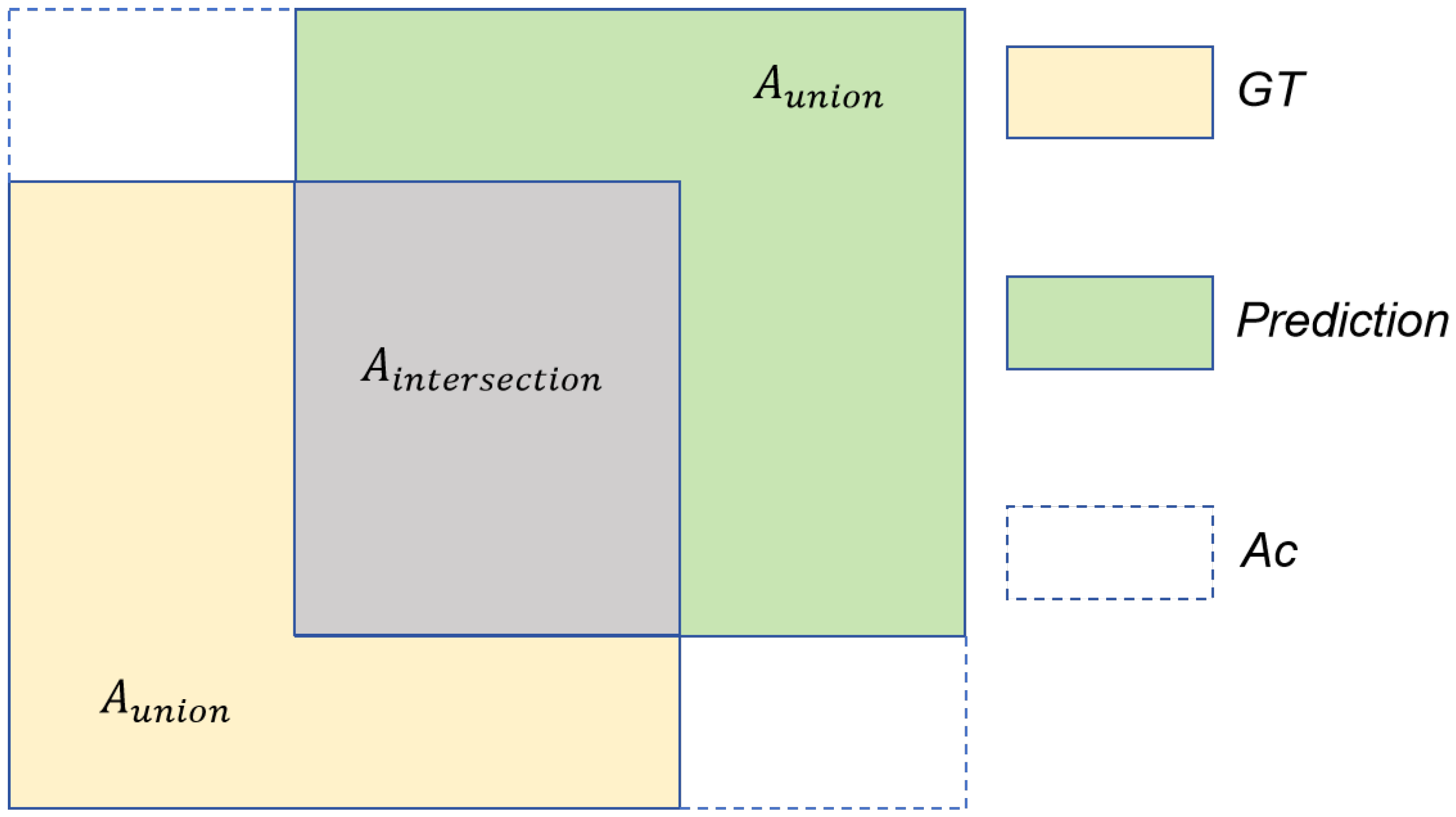

3.5. GIoU Loss Function

YOLOv11 employs the Complete Intersection over Union (CIoU) loss function as the default for bounding box regression. CIoU accounts for target geometry, bounding box overlap, and center-to-center distance, and it provides relatively stable optimization under standard lighting conditions. However, in scenarios involving small-scale targets, complex backgrounds, or sparse object distributions, CIoU becomes vulnerable to interference. Its robustness deteriorates significantly on low-quality input images, such as those affected by poor illumination or adverse weather, resulting in a notable decline in bounding box regression accuracy.

To address this limitation and enhance object detection performance in adverse visual environments, this paper adopts the Generalized Intersection over Union (GIoU) loss as a substitute for the CIoU metric. GIoU improves training feedback especially for non-overlapping or partially overlapping bounding boxes. Unlike conventional IoU-based metrics, GIoU incorporates an additional penalty term based on the smallest enclosing box, allowing effective gradient propagation even when predicted and ground truth boxes do not intersect. This characteristic is particularly beneficial in scenarios with occlusion or localization uncertainty.

The Generalized Intersection over Union (GIoU) loss, proposed by Rezatofighi et al. [34], extends the traditional IoU metric by incorporating the spatial relationship between the predicted box and the ground truth box. Unlike the standard IoU, which only measures the ratio of intersection over union between the predicted bounding box and the ground truth, GIoU introduces a penalty based on the area of the smallest enclosing box that contains both boxes.

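The GIoU is defined as:

$$\mathrm{GIoU} = \mathrm{IoU} - \frac{|C| - |A \cup B|}{|C|} \tag{1}$$

where $\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$, $A$ and $B$ are the predicted box and the ground truth box, and $|C|$ denotes the area of the smallest enclosing box covering both the predicted box and the ground truth box. The subtraction term penalizes the part of the enclosing box that neither box covers, enabling effective gradient updates even when there is no intersection between boxes.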

This design allows the model to receive gradient updates even in challenging cases such as object occlusion or extreme aspect ratios. By incorporating GIoU into the loss computation, YOLOv11 demonstrates improved performance in detecting targets under poor lighting conditions, enhancing its overall robustness in degraded scenarios.

As illustrated in Figure 7, the GIoU computation involves three regions: the intersection is the area shared by the predicted bounding box and the ground truth box, the union is the total area covered by either box, and the minimum enclosing region (denoted as MWER) is the smallest rectangle that simultaneously encloses both boxes. By incorporating the area of the MWER, the GIoU metric not only captures the overlapping region but also accounts for spatial discrepancies in shape, position, and scale between the two boxes.

Specifically, GIoU exhibits the following properties: when the predicted and ground truth boxes perfectly overlap, GIoU reaches 1; when there is no intersection, IoU is 0 and GIoU is non-positive, approaching -1 as the boxes move farther apart; and when the boxes overlap only slightly relative to their enclosing region, GIoU may also be negative, reflecting poor localization.
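A short worked example makes the value range concrete. The sketch below computes GIoU as IoU minus the fraction of the smallest enclosing box not covered by the union, for axis-aligned boxes in `(x1, y1, x2, y2)` form, and takes the loss as `1 - GIoU`. The function names and the sample boxes are illustrative assumptions, not code from the DRF-YOLO implementation.

```python
def giou(box_a, box_b):
    """GIoU of two axis-aligned boxes given as (x1, y1, x2, y2), x1 < x2, y1 < y2."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection area (zero when the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    # Smallest axis-aligned box enclosing both inputs.
    enclose = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))

    iou = inter / union
    return iou - (enclose - union) / enclose


def giou_loss(box_a, box_b):
    """Regression loss in [0, 2): zero only for a perfect match."""
    return 1.0 - giou(box_a, box_b)


identical = giou((0, 0, 2, 2), (0, 0, 2, 2))   # perfect overlap
disjoint = giou((0, 0, 1, 1), (2, 0, 3, 1))    # no overlap, one box-width apart
partial = giou((0, 0, 2, 2), (1, 1, 3, 3))     # small diagonal overlap
```

For the disjoint pair, plain IoU is 0 regardless of the gap, whereas GIoU still changes with the distance between the boxes, which is why it continues to provide a training signal in the non-overlapping case.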

The GIoU loss function offers notable advantages in complex environments such as low-light conditions and degraded images. First, in scenarios where environmental noise causes bounding box predictions to deviate from their true positions, the enclosing region penalty of GIoU effectively guides the optimization direction and suppresses regression divergence during training. Furthermore, since GIoU evaluates geometric consistency, it is less sensitive to noisy features and yields more robust gradient signals than traditional IoU.

Second, in degraded scenes where the contrast between foreground objects and background is low, models are prone to false positives. GIoU introduces stronger shape constraints that prevent predicted boxes from expanding arbitrarily into background regions, thus enhancing detection robustness. For small object detection, even minor displacement of bounding boxes can result in accuracy loss. Traditional IoU often suffers from gradient vanishing in such low-overlap conditions. In contrast, by considering the area of the minimum enclosing rectangle, GIoU still provides meaningful gradients, thereby improving optimization efficiency and localization precision during training.

In conclusion, by introducing a geometric penalty through the enclosing region, the GIoU loss function addresses the limitations of traditional IoU, particularly in degraded environments and small-object detection tasks. Its robust anti-interference capability and stable gradient design make it an effective and reliable objective function for enhancing target detection accuracy in complex scenarios.

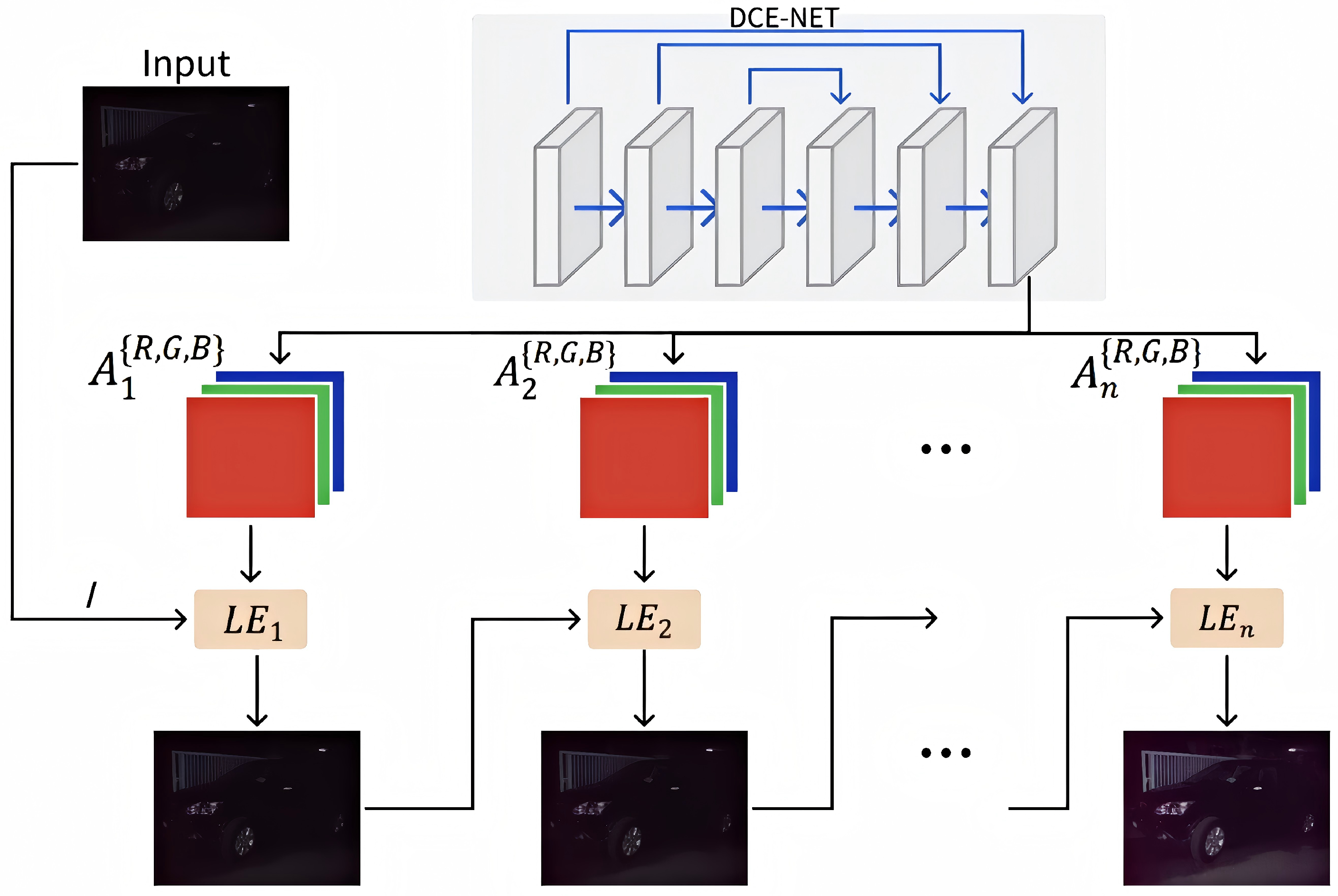

3.6. Zero-DCE: An Unsupervised Image Enhancement Algorithm

In low-light environments, the overall brightness, contrast, and detail of images are often significantly degraded, which directly affects the accuracy and robustness of subsequent object detection models. To address this issue, image enhancement has become a vital preprocessing step. Traditional methods, such as histogram equalization and Retinex-based techniques [39], typically rely on handcrafted rules, which limits their adaptability and generalization capability in diverse scenarios.

In recent years, deep learning-based enhancement methods have gained increasing attention for their superior performance. Among them, Zero-DCE (Zero-Reference Deep Curve Estimation) stands out as an end-to-end, reference-free image enhancement algorithm specifically designed for low-light conditions. It leverages a lightweight convolutional network to improve image brightness, contrast, and detail while limiting noise and artifacts, without requiring paired supervision.

Proposed by Guo et al. [33], Zero-DCE redefines low-light enhancement as a curve estimation task rather than a conventional image-to-image translation problem. It learns a set of pixel-wise, content-aware illumination adjustment curves under fully unsupervised conditions. These curves are applied to the input image to produce an enhanced output with better perceptual quality. Importantly, Zero-DCE requires no paired training data and instead relies on intrinsic brightness statistics and structural regularities for self-supervision.

The core idea is to iteratively refine the image through a learned set of curve functions $LE(I(x); \alpha)$, where each $\alpha$ is a curve parameter corresponding to pixel location $x$. These curves are applied to each pixel of the original image to yield the enhanced result. The formulation of the enhancement operation is defined in Equation (2).

$$LE(I(x); \alpha) = I(x) + \alpha \, I(x) \, \bigl(1 - I(x)\bigr) \tag{2}$$

Here, $I(x)$ represents the pixel value at position $x$ in the input image, normalized to $[0, 1]$, and $\alpha \in [-1, 1]$ are the curve parameters learned by the deep network. This formulation enables Zero-DCE to adaptively enhance a wide variety of image types, including indoor scenes, outdoor environments, and nighttime images, making it a powerful tool in fields such as computer vision, image recognition, and artificial intelligence.

In this study, the number of enhancement iterations $n$ is set to 8, which yields near-optimal enhancement performance. At each iteration, $LE_n(x)$ represents the enhanced image obtained from the previous result $LE_{n-1}(x)$, while $A_n(x)$ denotes the corresponding curve parameter map, which has the same spatial dimensions as the input image. The overall structure of the Zero-DCE enhancement process is illustrated in Figure 8.
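The iterative curve application can be sketched in a few lines. The example below applies the quadratic curve from the original Zero-DCE formulation, $LE_n(x) = LE_{n-1}(x) + A_n(x)\,LE_{n-1}(x)\,(1 - LE_{n-1}(x))$, to pixel values in $[0, 1]$. It is a minimal sketch: in the real method the parameter maps come from the trained network, whereas the constant value 0.5 used here is a hypothetical placeholder, and the function names are not taken from any library.

```python
def apply_curve(pixel, alphas):
    """Iteratively brighten or darken one pixel value in [0, 1].

    `alphas` holds one curve parameter per iteration, each in [-1, 1].
    Positive values brighten, negative values darken, zero is the identity.
    """
    value = pixel
    for alpha in alphas:
        value = value + alpha * value * (1.0 - value)
    return value


def enhance_image(image, alpha_maps):
    """Apply per-pixel curves to a 2-D image given as a list of rows.

    `alpha_maps` is a list of parameter maps (one per iteration), each with
    the same spatial dimensions as `image`.
    """
    return [
        [
            apply_curve(image[r][c], [m[r][c] for m in alpha_maps])
            for c in range(len(image[r]))
        ]
        for r in range(len(image))
    ]


# Eight iterations with a hypothetical constant parameter of 0.5.
dark_pixel = 0.2
brightened = apply_curve(dark_pixel, [0.5] * 8)
```

Because the curve leaves 0 and 1 fixed and is monotonic for parameters in $[-1, 1]$, the output stays within the valid intensity range without clipping.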

Another key feature of Zero-DCE is its unsupervised loss function design, which does not rely on reference images. It consists of four components:

Spatial Consistency Loss ()

This loss evaluates the variation in pixel differences between adjacent regions before and after enhancement. The goal is to preserve the spatial consistency of local image structures. Specifically, the image is divided into

K local regions, and the pixel differences between each region and its four neighboring regions (top, bottom, left, and right) are computed and averaged. Let

I be the input image and

Y the enhanced image. The average pixel values are obtained via average pooling, typically using

regions implemented with convolutional layers.

Exposure Control Loss ()

This loss constrains the image exposure level to avoid over- or under-enhancement. A target exposure value is predefined, and the brightness difference between the local average and this target value is penalized. Each local region is of size

. Let

M denote the total number of such regions.

Color Constancy Loss ()

Loss is measured according to a conclusion on whether the color is normal. According to the Gray-World color constancy hypothesis, for a colorful image, the average value of the three color components R, G, and B tends to the same gray value K, as shown in the formula below.

Illumination Smoothness Loss ($L_{tv_A}$)

This loss enforces spatial smoothness in the learned enhancement curves by constraining the gradient magnitude of the curve parameter map $A_n$ along horizontal and vertical directions. Let $N$ be the total number of iterations, and let $\nabla_x$ and $\nabla_y$ represent the horizontal and vertical gradient operators, respectively.

The overall loss function is a weighted sum of the above components:
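As a reference sketch, the four terms and their weighted sum can be written in the form used by the original Zero-DCE formulation. The following symbols come from that formulation and are assumptions with respect to the present study: $\Omega(i)$ (the four neighbors of region $i$), $E$ (the target exposure value), $J^p$ (the mean of channel $p$ in the enhanced image), and the weights $W_{col}$ and $W_{tv_A}$. The weight values used here are not stated in this section.

$$L_{spa} = \frac{1}{K}\sum_{i=1}^{K}\sum_{j\in\Omega(i)}\left(\left|Y_i - Y_j\right| - \left|I_i - I_j\right|\right)^2$$

$$L_{exp} = \frac{1}{M}\sum_{k=1}^{M}\left|Y_k - E\right|$$

$$L_{col} = \sum_{(p,q)\in\varepsilon}\left(J^p - J^q\right)^2,\quad \varepsilon = \{(R,G),(R,B),(G,B)\}$$

$$L_{tv_A} = \frac{1}{N}\sum_{n=1}^{N}\sum_{c\in\{R,G,B\}}\left(\left|\nabla_x A_n^c\right| + \left|\nabla_y A_n^c\right|\right)^2$$

$$L_{total} = L_{spa} + L_{exp} + W_{col}L_{col} + W_{tv_A}L_{tv_A}$$

In $L_{spa}$ and $L_{exp}$, $Y_i$ and $I_i$ denote region-averaged intensities of the enhanced and input images, so every term can be evaluated without a reference image.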

In this study, Zero-DCE is integrated as a preprocessing module before the YOLOv11 detection pipeline, particularly suited for image enhancement in extreme conditions such as nighttime or low-light scenarios. Experimental results demonstrate that images enhanced by Zero-DCE exhibit significantly improved brightness, sharper target edges, and substantial gains in both detection accuracy and recall. Quantitatively, the enhanced images achieve a PSNR of 12.57 dB and an SSIM of 0.5616, confirming notable improvements in image quality. The specific enhancement effects are visualized in Figure 9.
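For readers who wish to reproduce a PSNR figure of this kind, the metric can be computed as sketched below. This is the generic definition, $\mathrm{PSNR} = 10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$, not the evaluation script used in this study. The choice of reference image and the 8-bit peak value of 255 are assumptions, since neither is specified above.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two flattened images.

    reference, test: equal-length sequences of pixel intensities.
    max_value: peak intensity (255 assumes 8-bit images).
    """
    if len(reference) != len(test) or len(reference) == 0:
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example with made-up pixel values (not data from the paper).
toy_reference = [100.0, 100.0, 100.0, 100.0]
toy_enhanced = [110.0, 90.0, 110.0, 90.0]
toy_psnr = psnr(toy_reference, toy_enhanced)
```

Higher values indicate a smaller mean squared error with respect to the chosen reference, so the figure is only interpretable alongside a statement of what that reference is.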

4. Experimental Results and Analysis

4.1. Experimental Environment and Configuration

All experiments in this study were conducted under a unified configuration: Ubuntu 22.04 operating system, Python 3.10 environment, PyTorch 2.1.2, and CUDA 11.8. The hardware platform utilized an NVIDIA GeForce RTX 3080 Ti GPU. The detailed experimental settings are presented in Table 1.

4.2. Datasets

In this study, two representative nighttime vision datasets are utilized to train and evaluate the proposed model: the nighttime subset of BDD100K and the ExDark dataset.

The BDD100K (Berkeley DeepDrive 100K) dataset [40] was developed by the Berkeley DeepDrive project team at UC Berkeley. It is a large-scale autonomous driving dataset containing over 100,000 driving videos under various weather conditions, time periods, and road types. A notable portion of the dataset includes extensive nighttime driving footage, which serves as valuable training material for object detection in complex environments. For this study, images labeled as “night” were extracted to form a nighttime subset, resulting in a training set of 27,445 images and a validation set of 4394 images. The images have a resolution of 1280 × 720 pixels and include annotations for diverse object categories such as vehicles, pedestrians, and traffic signs, offering both diversity and realism.
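A night subset of this kind can be extracted by filtering the image-level attributes in the label file. The sketch below assumes the label layout of the public BDD100K release, in which each entry carries a `name` and an `attributes` dictionary with a `timeofday` field. The function name and the sample entries are hypothetical and are not taken from this study.

```python
def select_night_images(labels):
    """Return the names of images whose `timeofday` attribute is "night".

    labels: list of dicts shaped like BDD100K label entries (assumed layout).
    Entries without an `attributes` field are skipped.
    """
    return [
        entry["name"]
        for entry in labels
        if entry.get("attributes", {}).get("timeofday") == "night"
    ]


# Hypothetical entries for illustration only.
sample_labels = [
    {"name": "a.jpg", "attributes": {"timeofday": "night", "weather": "clear"}},
    {"name": "b.jpg", "attributes": {"timeofday": "daytime", "weather": "rainy"}},
    {"name": "c.jpg", "attributes": {"timeofday": "night", "weather": "rainy"}},
    {"name": "d.jpg"},
]
night_names = select_night_images(sample_labels)
```

Applying the same filter to the training and validation label files separately keeps the original split intact.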

The ExDark (Exclusively Dark) dataset is specifically designed for object detection in low-light conditions. It contains 7363 real-world low-light images across 12 object categories, captured in typical environments such as streets, indoor scenes, seaports, and rural areas. The dataset poses significant challenges due to low illumination, small objects, complex backgrounds, and occlusions, thereby providing a robust benchmark for evaluating detection accuracy and model resilience in extreme conditions.

Together, these two datasets complement each other in lighting conditions and scene composition, offering a comprehensive foundation for validating the effectiveness of the proposed algorithm in diverse nighttime scenarios.

4.3. Model Evaluation Metrics

Model performance is assessed using both quantitative and qualitative approaches. Quantitatively, the following metrics are computed: Precision (P), Recall (R), mean Average Precision (mAP), reported as mAP@0.5 (mAP at an IoU threshold of 0.5), number of parameters (Params), and computational complexity (GFLOPs). Qualitatively, visual inspection is conducted on detection results over real-world nighttime images to assess the model’s practical effectiveness.
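The accuracy metrics can be illustrated with a short sketch. It assumes the usual definitions $P = TP/(TP+FP)$ and $R = TP/(TP+FN)$ and computes per-class AP as the area under the precision-recall curve with a monotone precision envelope. The function names are hypothetical, and the exact interpolation used by the evaluation toolkit in this study may differ.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def average_precision(matches, num_ground_truth):
    """All-point AP for one class.

    matches: booleans for detections sorted by descending confidence;
        True means the detection matched an unclaimed ground-truth box
        (e.g. at IoU >= 0.5 for mAP@0.5).
    num_ground_truth: number of ground-truth boxes of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    tp = 0
    curve = []  # (recall, precision) after each detection
    for rank, matched in enumerate(matches, start=1):
        if matched:
            tp += 1
        curve.append((tp / num_ground_truth, tp / rank))
    ap = 0.0
    previous_recall = 0.0
    for i, (recall, _) in enumerate(curve):
        # Envelope: best precision achievable at this recall or higher.
        envelope = max(p for _, p in curve[i:])
        ap += (recall - previous_recall) * envelope
        previous_recall = recall
    return ap


def mean_average_precision(per_class_ap):
    """mAP is the unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


example_ap = average_precision([True, False, True], num_ground_truth=2)
```

Averaging the per-class AP values at a fixed IoU threshold of 0.5 gives mAP@0.5.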

4.4. Comparative Experiments

To evaluate the detection performance of the proposed DRF-YOLO algorithm in low-light environments, comprehensive comparative experiments were conducted against several mainstream object detection models, including Faster R-CNN, SSD, YOLOv3, YOLOv5n, YOLOv8n, YOLOv10n, and YOLOv11n.

Experiments were carried out on two typical nighttime datasets: ExDark and the BDD100K Night Subset. Evaluation metrics included mAP@0.5, Precision, Recall, Params, and GFLOPs. The results are detailed in Table 2, Table 3, and Figure 10.

As shown in Table 2, DRF-YOLO achieves superior performance on the ExDark dataset, outperforming all compared methods on every accuracy metric. For instance, compared to YOLOv3, DRF-YOLO reduces parameters by approximately 96% and computational cost by 96.3%, while achieving improvements of 7.2% in Precision, 14.2% in Recall, and 16.7% in mAP@0.5. Compared to the mainstream lightweight model YOLOv11n, DRF-YOLO increases the parameter count by only 1.3 M and GFLOPs by 3.4, yet delivers gains of 2.9%, 2.3%, and 3.4% in Precision, Recall, and mAP@0.5, respectively.

Compared to YOLOv11s, DRF-YOLO achieves better detection accuracy while reducing parameters by 58.9% and GFLOPs by 55%. Similar performance trends are observed on the BDD100K Night Subset (Table 3), confirming the robustness and effectiveness of DRF-YOLO in low-light conditions without significant increases in model size or computational burden. Furthermore, we conducted cross-dataset generalization experiments (training on ExDark and testing on BDD100K, and vice versa), and observed consistent improvements, demonstrating that DRF-YOLO maintains strong generalization capability across different low-light datasets.

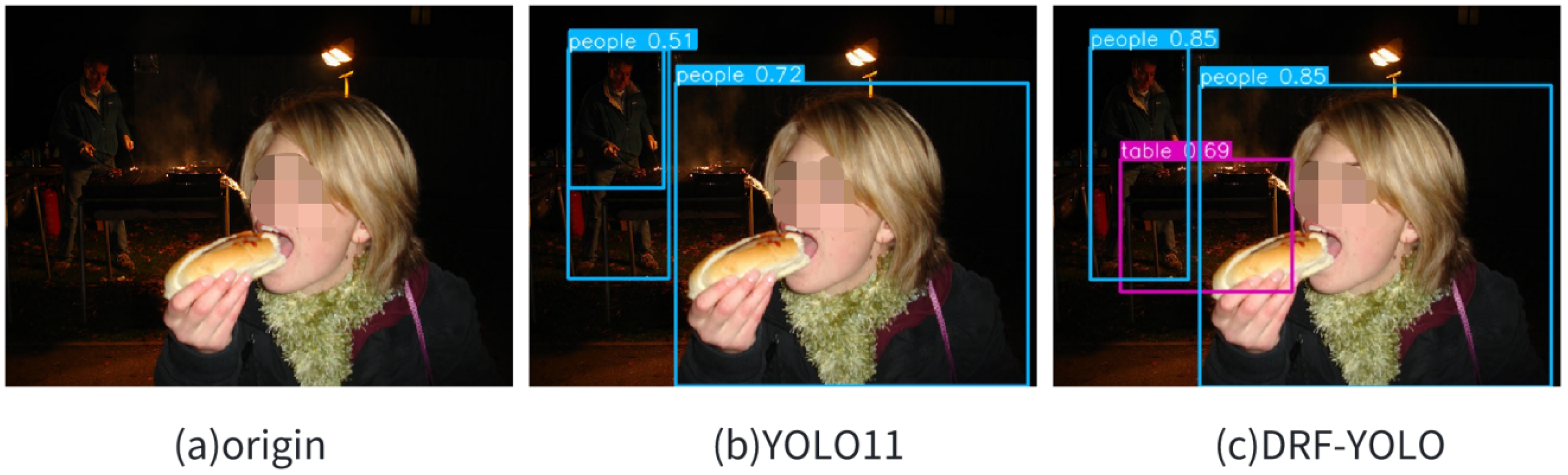

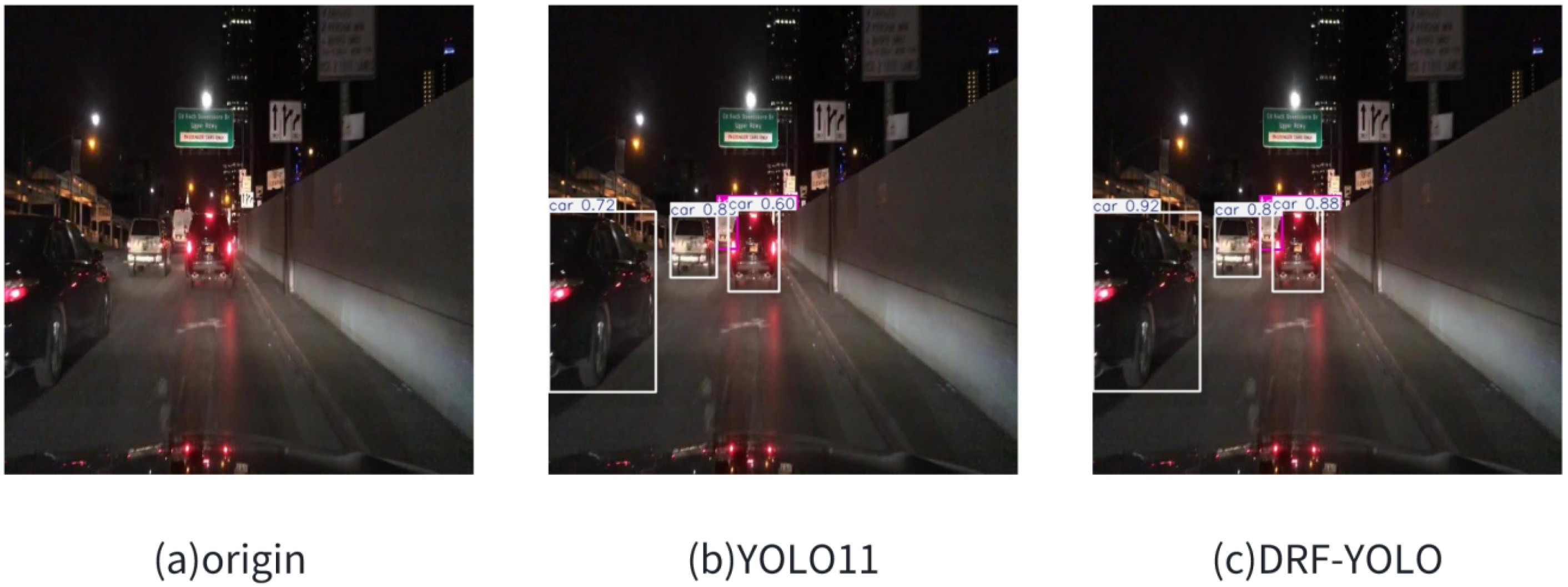

4.5. Detection Performance in Complex Nighttime Environments

The detection performance of the proposed DRF-YOLO algorithm in complex nighttime environments is visually illustrated in Figure 11, Figure 12, Figure 13, and Figure 14, using samples from the ExDark dataset and the nighttime subset of BDD100K. These images encompass typical low-light scenarios and urban road conditions at night, serving to evaluate the algorithm’s effectiveness under real-world degraded environments.

As shown in Figure 11 and Figure 12 (ExDark dataset), DRF-YOLO demonstrates high-confidence detection of common nighttime targets such as pedestrians and various objects. Under extreme low-light conditions, DRF-YOLO achieves up to 34% higher confidence in pedestrian detection compared to mainstream algorithms like YOLOv11 and YOLOv8, while significantly reducing both false positives and false negatives. Even when faced with occluded objects or blurred edges, the algorithm consistently generates accurate bounding boxes and category labels, highlighting its strong adaptability in low-illumination scenes.

Figure 13 and Figure 14 further present the detection performance of DRF-YOLO on nighttime urban road images from the BDD100K dataset. In these complex environments, interference factors such as strong reflections, streetlight glare, and motion blur impose significant challenges on object detection. Experimental results reveal that DRF-YOLO can precisely detect small vehicles, pedestrians, and non-motorized road users. Compared to lightweight models like YOLOv8n, DRF-YOLO shows a 16% improvement in small object recognition and a notable reduction in false detection rates under occlusion.

In summary, the DRF-YOLO algorithm exhibits outstanding object detection capabilities across both the ExDark and BDD100K night datasets. It not only enhances detection accuracy in low-light and complex scenarios but also significantly improves performance in detecting small and occluded objects. These results confirm the algorithm’s robustness and practical generalization ability in real-world nighttime applications.

4.6. Ablation Study

To validate the effectiveness and necessity of each module in DRF-YOLO under degraded scenarios, systematic ablation experiments were conducted on the ExDark dataset. As a benchmark dataset for low-light object detection, ExDark encompasses various typical target categories and challenging nighttime scenarios, making it ideal for evaluating structural improvements.

4.6.1. Backbone Improvement

As shown in Table 4, replacing the original C3K2 module in the YOLOv11 backbone with the proposed CSP-MSEE (Multi-Scale Edge Enhancement) module reduced the parameter count by approximately 0.4 M and the computational cost by 0.3 GFLOPs, while maintaining stable detection accuracy. This demonstrates the module’s capability to achieve lightweight design without sacrificing feature representation quality.

4.6.2. Attention Enhancement

Integrating the Focal Modulation attention mechanism led to modest yet consistent improvements in both precision (P) and mAP@0.5, underscoring its advantages in small object localization and global contextual modeling.

4.6.3. Detection Head Optimization

The introduction of the Dynamic Interaction Head (DIH) further enhanced detection performance by leveraging cross-scale semantic enhancement and spatial attention fusion. This architecture significantly boosted detection accuracy for small and edge-region objects in complex nighttime scenes, with an observed gain of over 1.5% in mAP@0.5.

4.6.4. Loss Function Analysis

Different IoU-based regression losses were evaluated to enhance localization accuracy and convergence stability. As shown in Table 5, the baseline YOLOv11 adopted CIoU, which considers overlap, center distance, and aspect ratio. However, it still suffers from unstable optimization when object aspect ratios vary significantly. To address this, several variants were tested.

DIoU augments the plain IoU loss with a penalty on the distance between the predicted and ground-truth box centers, accelerating convergence; CIoU builds on DIoU by adding the aspect-ratio term. EIoU further decouples width and height regression to improve bounding box aspect ratio alignment. SIoU introduces a geometric decomposition of the loss into angle, distance, and shape components, leading to smoother gradients and faster convergence. WIoU adaptively reweights loss contributions based on object scale and localization uncertainty, helping balance the learning between large and small targets. Finally, GIoU extends IoU by introducing a geometric penalty based on the area of the smallest enclosing box, improving robustness under occlusion and partial visibility.
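The enclosing-box penalty used by GIoU can be made concrete with a small sketch. It assumes axis-aligned boxes in `(x1, y1, x2, y2)` format and the standard definition $\mathrm{GIoU} = \mathrm{IoU} - (|C| - |A \cup B|)/|C|$, where $C$ is the smallest box enclosing both inputs. The function names are illustrative and this is not the training code used for DRF-YOLO.

```python
def giou(box_a, box_b):
    """Generalized IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    area_a = max(0.0, ax2 - ax1) * max(0.0, ay2 - ay1)
    area_b = max(0.0, bx2 - bx1) * max(0.0, by2 - by1)

    # Intersection rectangle (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = area_a + area_b - intersection

    # Smallest axis-aligned box enclosing both inputs.
    enclosing = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    if union <= 0.0 or enclosing <= 0.0:
        return 0.0  # degenerate boxes: no meaningful overlap measure

    iou = intersection / union
    return iou - (enclosing - union) / enclosing


def giou_loss(box_a, box_b):
    """Regression loss in [0, 2]: 0 for identical boxes, larger when far apart."""
    return 1.0 - giou(box_a, box_b)


disjoint_loss = giou_loss((0.0, 0.0, 1.0, 1.0), (2.0, 0.0, 3.0, 1.0))
```

Unlike plain IoU, which is zero for every pair of non-overlapping boxes, the penalty term still varies with their separation, so the loss provides a useful gradient even before the prediction overlaps the target.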

In DRF-YOLO, these losses were applied only to the localization branch, while the classification and confidence branches used the Varifocal Loss. Empirically, GIoU achieved the best overall balance between precision and recall, with an mAP@0.5 of 65.2%, indicating superior generalization in low-light and occluded environments.

4.6.5. Image Enhancement

Finally, to enhance visual features in raw low-light images, the lightweight unsupervised enhancement algorithm Zero-DCE was employed during preprocessing. Because it runs as a separate step ahead of the detector, it leaves the detector's parameter count and GFLOPs unchanged while substantially improving image visibility, leading to further detection performance gains.

DRF-YOLO’s performance gains arise from both the individual strengths of CSP-MSEE, Focal Modulation, and DIH, and their synergistic interplay. CSP-MSEE enriches multi-scale and edge features, guiding Focal Modulation to focus on key regions, which in turn enables DIH to achieve precise spatial alignment and task-specific feature fusion. This coordinated design effectively enhances detection accuracy for small objects in degraded conditions, yielding a mAP@0.5 of 65.2% in low-light and complex nighttime environments while maintaining computational efficiency.

5. Conclusions

This study addresses the challenge of small-object detection in degraded visual conditions, such as nighttime and low-light environments in autonomous driving. We present DRF-YOLO, an improved YOLOv11-based model featuring CSP-MSEE for multi-scale edge enhancement, Focal Modulation for context-aware attention, and a Dynamic Interaction Head for precise small-object localization. The design is further reinforced by GIoU loss and Zero-DCE image enhancement to boost robustness in adverse lighting.

On the ExDark and BDD100K datasets, DRF-YOLO improves mAP@0.5 by 3.4% and 2.3% over YOLOv11n, respectively. Although the parameter count and GFLOPs increase moderately (from 2.6 M to 3.9 M and 6.4 to 9.8, respectively), the architecture remains lightweight and efficient. Ablation studies confirm the contribution of each module, and visual results demonstrate stable detection under occlusion, blur, and low illumination. These improvements could translate into practical benefits in real-world applications, such as earlier obstacle detection by several meters, faster reaction times, and enhanced road safety in autonomous driving scenarios.
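To put the model-size figures in relative terms, the absolute values quoted above can be converted to percentage changes. The short sketch below uses only the numbers stated in this section (2.6 M to 3.9 M parameters, 6.4 to 9.8 GFLOPs); the helper name is hypothetical.

```python
def relative_change(baseline, new):
    """Percentage change of `new` with respect to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100.0


# Values quoted in the text for the baseline and for DRF-YOLO.
params_change = relative_change(2.6, 3.9)   # parameters, in millions
gflops_change = relative_change(6.4, 9.8)   # computational cost, in GFLOPs
```

Under these figures the increase is roughly 50% in parameters and 53% in GFLOPs relative to the baseline, which is the cost paid for the reported accuracy gains.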

Despite its strengths, DRF-YOLO has limitations. The current evaluation primarily uses existing nighttime datasets, which may not fully reflect performance on completely unseen environments or diverse object types. Future work could explore cross-dataset generalization beyond ExDark and BDD100K, extend the model to UAV or traffic surveillance imagery, and further optimize the architecture to balance accuracy with computational cost. Additionally, investigating adaptive mechanisms for extremely small or densely packed objects could further enhance detection robustness.

In summary, DRF-YOLO provides an efficient, accurate, and robust solution for small-object detection in degraded environments, offering tangible advantages for intelligent vision systems in autonomous driving and surveillance.