Online Capacity Estimation for Lithium-Ion Batteries Based on Semi-Supervised Convolutional Neural Network

Abstract

1. Introduction

2. Methodology

2.1. Input and Output Structures

2.2. Design of the SS-CNN

2.3. Design of the Training Strategy

2.3.1. Unsupervised Reconstruction

2.3.2. Supervised Regression

2.3.3. Supervised Fine-Tuning

3. Results and Discussion

3.1. Battery Dataset

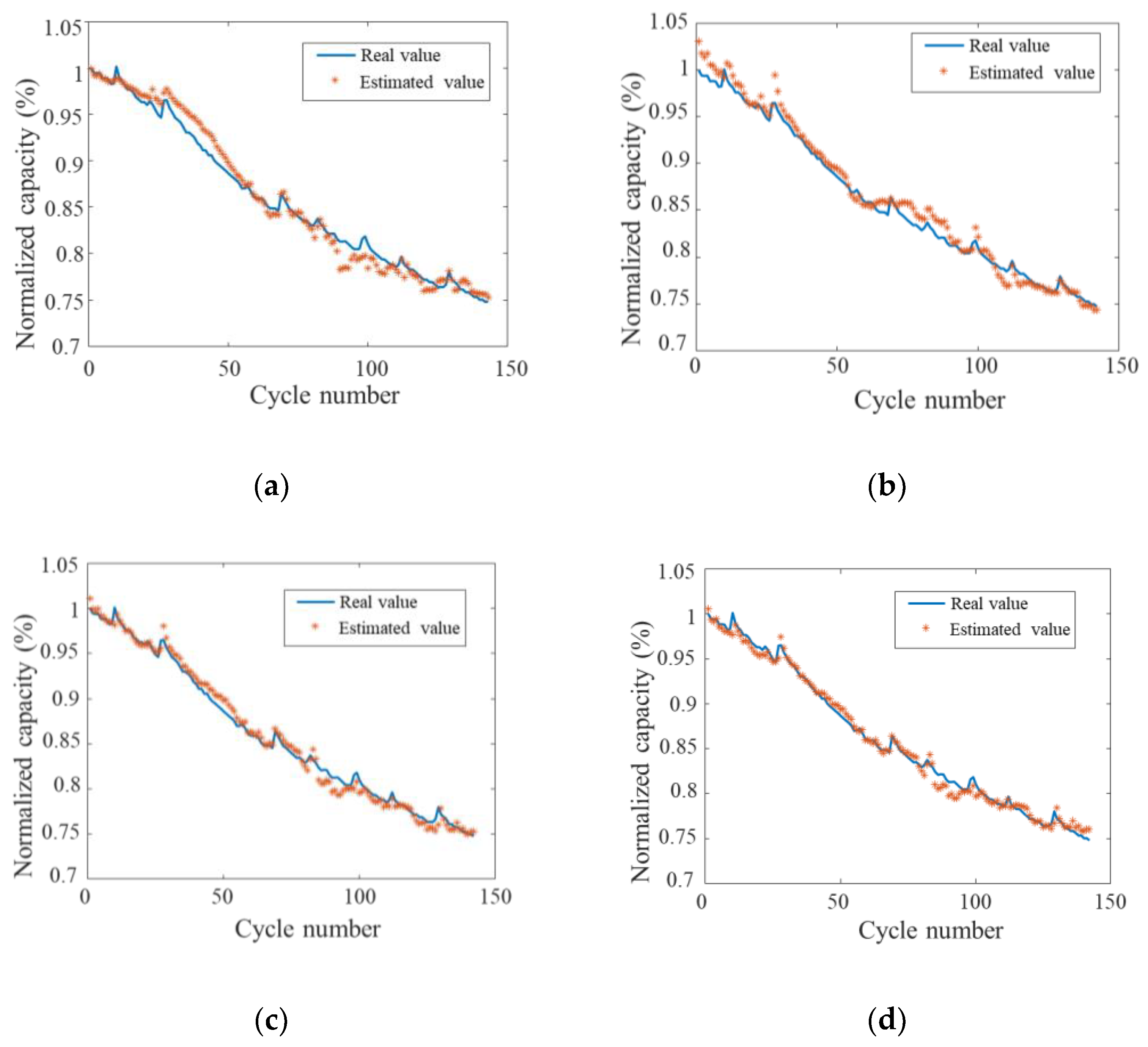

3.2. Capacity Estimation Results

3.3. Effect of the Starting Charge Voltage

3.4. Effect of the Training Sample Size

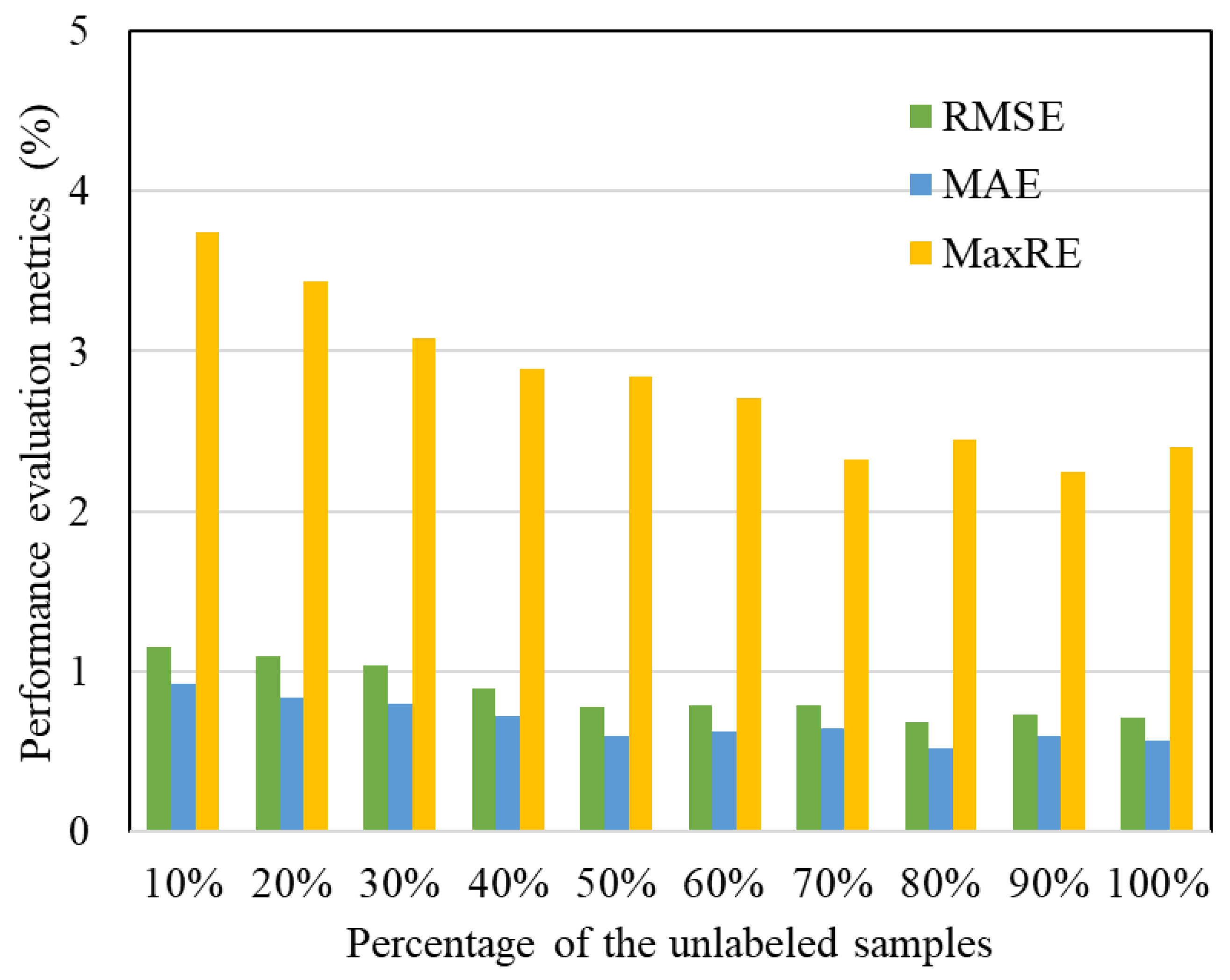

3.4.1. Different Sizes of Unlabeled Samples

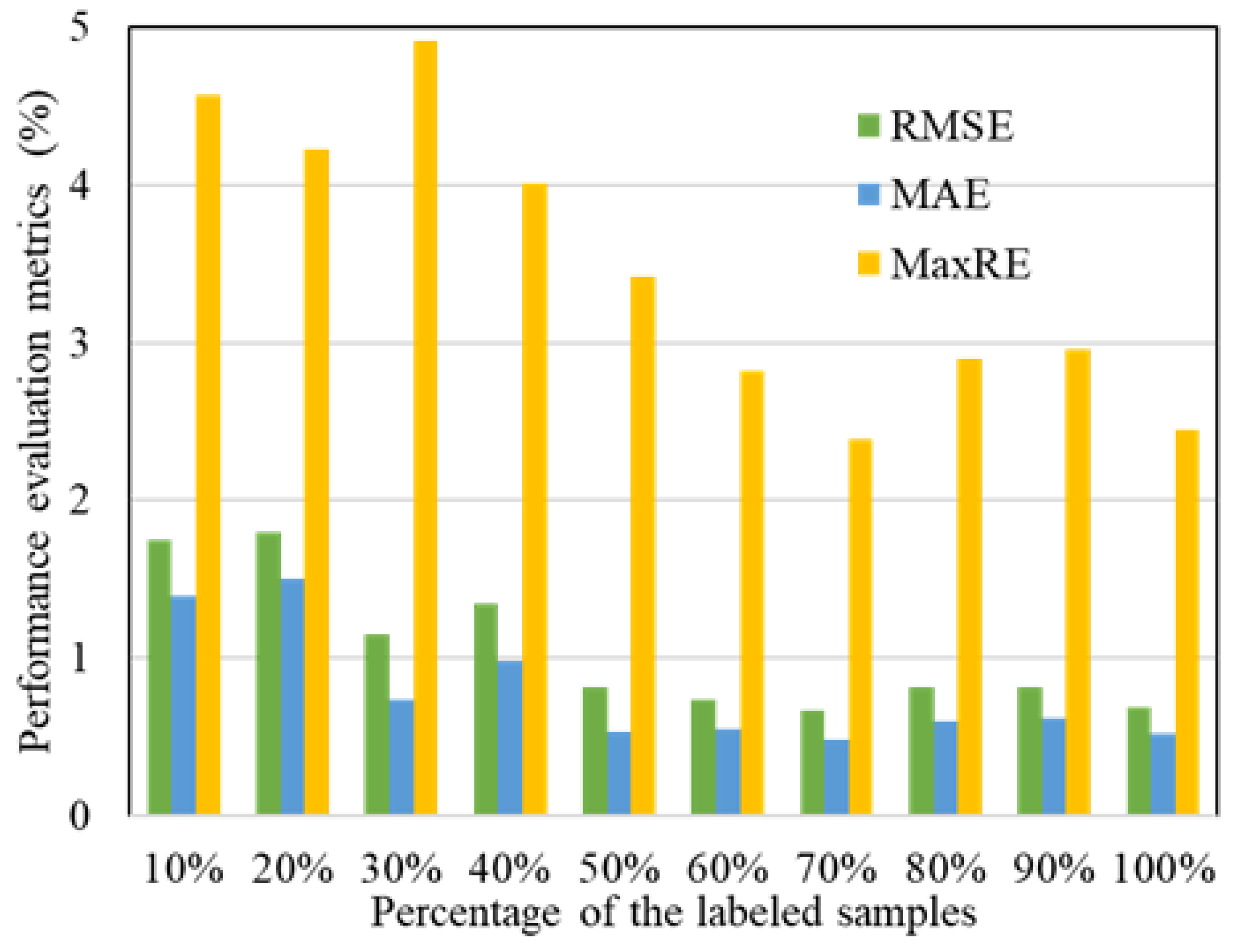

3.4.2. Different Sizes of Labeled Samples

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Dai, H.; Jiang, B.; Hu, X.; Lin, X.; Wei, X.; Pecht, M. Advanced battery management strategies for a sustainable energy future: Multilayer design concepts and research trends. Renew. Sustain. Energy Rev. 2021, 138, 110480.

- Hossain Lipu, M.S.; Hannan, M.A.; Karim, T.F.; Hussain, A.; Saad, M.H.M.; Ayob, A.; Miah, M.S.; Indra Mahlia, T.M. Intelligent algorithms and control strategies for battery management system in electric vehicles: Progress, challenges and future outlook. J. Clean. Prod. 2021, 292, 126044.

- Sarmah, S.B.; Kalita, P.; Garg, A.; Niu, X.D.; Zhang, X.W.; Peng, X.; Bhattacharjee, D. A review of state of health estimation of energy storage systems: Challenges and possible solutions for futuristic applications of li-ion battery packs in electric vehicles. J. Electrochem. Energy Convers. Storage 2019, 16, 40801.

- Tian, J.; Xiong, R.; Shen, W. A review on state of health estimation for lithium ion batteries in photovoltaic systems. eTransportation 2019, 2, 100028.

- Li, J.; Landers, R.G.; Park, J. A comprehensive single-particle-degradation model for battery state-of-health prediction. J. Power Sources 2020, 456, 227950.

- Wang, X.; Wei, X.; Dai, H. Estimation of state of health of lithium-ion batteries based on charge transfer resistance considering different temperature and state of charge. J. Energy Storage 2019, 21, 618–631.

- Li, J.; Adewuyi, K.; Lotfi, N.; Landers, R.G.; Park, J. A single particle model with chemical/mechanical degradation physics for lithium ion battery State of Health (SOH) estimation. Appl. Energy 2018, 212, 1178–1190.

- Li, X.; Yuan, C.; Wang, Z. State of health estimation for Li-ion battery via partial incremental capacity analysis based on support vector regression. Energy 2020, 203, 117852.

- Li, Y.; Liu, K.; Foley, A.M.; Zülke, A.; Berecibar, M.; Nanini-Maury, E.; Van Mierlo, J.; Hoster, H.E. Data-driven health estimation and lifetime prediction of lithium-ion batteries: A review. Renew. Sustain. Energy Rev. 2019, 113, 109254.

- Yang, D.; Zhang, X.; Pan, R.; Wang, Y.; Chen, Z. A novel Gaussian process regression model for state-of-health estimation of lithium-ion battery using charging curve. J. Power Sources 2018, 384, 387–395.

- Deng, Y.; Ying, H.; E, J.; Zhu, H.; Wei, K.; Chen, J.; Zhang, F.; Liao, G. Feature parameter extraction and intelligent estimation of the State-of-Health of lithium-ion batteries. Energy 2019, 176, 91–102.

- Stroe, D.I.; Schaltz, E. Lithium-ion battery state-of-health estimation using the incremental capacity analysis technique. IEEE Trans. Ind. Appl. 2020, 56, 678–685.

- Tian, J.; Xiong, R.; Shen, W. State-of-health estimation based on differential temperature for lithium ion batteries. IEEE Trans. Power Electron. 2020, 35, 10363–10373.

- Richardson, R.R.; Birkl, C.R.; Osborne, M.A.; Howey, D.A. Gaussian process regression for in situ capacity estimation of lithium-ion batteries. IEEE Trans. Ind. Inform. 2019, 15, 127–138.

- Li, Y.; Zou, C.; Berecibar, M.; Nanini-Maury, E.; Chan, J.C.W.; van den Bossche, P.; Van Mierlo, J.; Omar, N. Random forest regression for online capacity estimation of lithium-ion batteries. Appl. Energy 2018, 232, 197–210.

- Rezaeianjouybari, B.; Shang, Y. Deep learning for prognostics and health management: State of the art, challenges, and opportunities. Measurement 2020, 163, 107929.

- Kaur, K.; Garg, A.; Cui, X.; Singh, S.; Panigrahi, B.K. Deep learning networks for capacity estimation for monitoring SOH of Li-ion batteries for electric vehicles. Int. J. Energy Res. 2021, 45, 3113–3128.

- Shen, S.; Sadoughi, M.; Chen, X.; Hong, M.; Hu, C. A deep learning method for online capacity estimation of lithium-ion batteries. J. Energy Storage 2019, 25, 100817.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- Saha, B.; Goebel, K. Battery Data Set; NASA Ames Res. Center: Moffett Field, CA, USA, 2007. Available online: http://ti.arc.nasa.gov/tech/dash/pcoe/prognostic-data-repository/ (accessed on 5 September 2021).

- Li, X.; Yuan, C.; Li, X.; Wang, Z. State of health estimation for Li-Ion battery using incremental capacity analysis and Gaussian process regression. Energy 2020, 190, 116467.

| Method | Index | Battery #5 (%) | Battery #6 (%) | Battery #7 (%) | Battery #18 (%) | Average (%) |
|---|---|---|---|---|---|---|
| NN | RMSE | 1.2983 | 1.3293 | 1.1586 | 1.4239 | 1.3025 |
| NN | MAE | 1.0498 | 1.0893 | 0.9782 | 1.2843 | 1.1004 |
| NN | MaxRE | 3.7945 | 3.8098 | 3.6555 | 4.0128 | 4.0128 |
| CNN | RMSE | 1.1349 | 1.2302 | 1.0204 | 1.2983 | 1.1709 |
| CNN | MAE | 0.9825 | 1.0472 | 0.7938 | 1.0529 | 0.9691 |
| CNN | MaxRE | 3.6416 | 3.1983 | 3.0781 | 3.7231 | 3.7231 |
| SS-CNN-S2 | RMSE | 0.8248 | 0.8339 | 0.7702 | 0.9149 | 0.8359 |
| SS-CNN-S2 | MAE | 0.7849 | 0.7639 | 0.6329 | 0.8539 | 0.7589 |
| SS-CNN-S2 | MaxRE | 2.6839 | 2.8493 | 2.5479 | 3.0329 | 3.0329 |
| SS-CNN-S3 | RMSE | 0.7382 | 0.8137 | 0.6839 | 0.9087 | 0.7861 |
| SS-CNN-S3 | MAE | 0.6782 | 0.7483 | 0.5225 | 0.8389 | 0.6970 |
| SS-CNN-S3 | MaxRE | 2.5392 | 2.7839 | 2.4440 | 2.8403 | 2.8403 |
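The "Average (%)" column of the comparison table can be cross-checked against the per-battery values. The sketch below assumes, based only on the numbers themselves, that RMSE and MAE are aggregated with an arithmetic mean while the MaxRE entry is the maximum over the four batteries; the helper names `aggregate` and `check` are hypothetical and not taken from the paper.

```python
# Per-battery values (#5, #6, #7, #18) copied from the comparison table,
# paired with the reported "Average (%)" entry for the same row.
rows = {
    ("NN", "RMSE"): ([1.2983, 1.3293, 1.1586, 1.4239], 1.3025),
    ("NN", "MAE"): ([1.0498, 1.0893, 0.9782, 1.2843], 1.1004),
    ("NN", "MaxRE"): ([3.7945, 3.8098, 3.6555, 4.0128], 4.0128),
    ("CNN", "RMSE"): ([1.1349, 1.2302, 1.0204, 1.2983], 1.1709),
    ("CNN", "MAE"): ([0.9825, 1.0472, 0.7938, 1.0529], 0.9691),
    ("CNN", "MaxRE"): ([3.6416, 3.1983, 3.0781, 3.7231], 3.7231),
    ("SS-CNN-S2", "RMSE"): ([0.8248, 0.8339, 0.7702, 0.9149], 0.8359),
    ("SS-CNN-S2", "MAE"): ([0.7849, 0.7639, 0.6329, 0.8539], 0.7589),
    ("SS-CNN-S2", "MaxRE"): ([2.6839, 2.8493, 2.5479, 3.0329], 3.0329),
    ("SS-CNN-S3", "RMSE"): ([0.7382, 0.8137, 0.6839, 0.9087], 0.7861),
    ("SS-CNN-S3", "MAE"): ([0.6782, 0.7483, 0.5225, 0.8389], 0.6970),
    ("SS-CNN-S3", "MaxRE"): ([2.5392, 2.7839, 2.4440, 2.8403], 2.8403),
}


def aggregate(index, values):
    """Assumed rule: maximum for MaxRE, arithmetic mean for RMSE and MAE."""
    if index == "MaxRE":
        return max(values)
    return sum(values) / len(values)


def check(table, tol=1e-4):
    """Return, per row, whether the assumed rule matches the reported value."""
    return {
        key: abs(aggregate(key[1], values) - reported) <= tol
        for key, (values, reported) in table.items()
    }


results = check(rows)
for (method, index), ok in results.items():
    print(f"{method:10s} {index:6s} consistent={ok}")
```

Under this reading, the last column is a worst case for MaxRE and a mean for the other two indices, so the MaxRE entries should not be compared with the others as if they were averages.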

| Starting Voltage (V) | 3.7 | 3.75 | 3.8 | 3.85 | 3.9 |
|---|---|---|---|---|---|
| RMSE (%) | 0.6544 | 0.8969 | 0.6839 | 1.0550 | 1.0765 |
| MAE (%) | 0.4966 | 0.7125 | 0.5225 | 0.7619 | 0.7568 |
| MaxRE (%) | 2.7178 | 2.9214 | 2.4440 | 3.5384 | 3.5370 |
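The three indices reported in the tables (RMSE, MAE and MaxRE, all in percent) can be illustrated with a minimal sketch. The exact definitions used in the paper are not given in this section, so the code assumes that each error is the estimation error relative to the true capacity, expressed in percent; the function name `capacity_error_metrics` and the sample values are hypothetical.

```python
import math


def capacity_error_metrics(true_capacity, estimated_capacity):
    """Compute RMSE, MAE and MaxRE in percent.

    Assumption (not confirmed by the source): every error is taken
    relative to the true capacity of the same sample.
    """
    if not true_capacity or len(true_capacity) != len(estimated_capacity):
        raise ValueError("inputs must be non-empty and of equal length")

    # Signed relative error of each estimate, in percent.
    rel_err = [
        100.0 * (est - true) / true
        for true, est in zip(true_capacity, estimated_capacity)
    ]
    n = len(rel_err)
    return {
        "RMSE": math.sqrt(sum(e * e for e in rel_err) / n),
        "MAE": sum(abs(e) for e in rel_err) / n,
        "MaxRE": max(abs(e) for e in rel_err),
    }


# Illustrative values only (capacities in Ah), not data from the paper.
metrics = capacity_error_metrics([2.0, 2.0], [2.02, 1.96])
print(metrics)
```

RMSE penalizes large deviations more heavily than MAE, while MaxRE captures the single worst estimate, which is why the three indices are usually reported together.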

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, Y.; Li, W. Online Capacity Estimation for Lithium-Ion Batteries Based on Semi-Supervised Convolutional Neural Network. World Electr. Veh. J. 2021, 12, 256. https://doi.org/10.3390/wevj12040256
