Abstract

This study examines the influence of software agents on information-sharing behavior within security-sensitive organizations, where confidentiality and hierarchical culture often limit the flow of knowledge. While such organizations aim to collect, analyze, and disseminate information for security purposes, internal sharing dynamics are shaped by competing norms of secrecy and collaboration. To explore this tension, we developed a digital simulation game in which participants from security-sensitive and non-sensitive organizations engaged in collaborative tasks over three rounds. In rounds two and three, software agents were introduced to interact with participants by sharing public and classified information. A total of 28 participants took part, generating 1626 text-based interactions. Findings indicate that (1) information-sharing patterns in security-sensitive contexts differ significantly from those in non-sensitive environments; (2) when software agents share classified information, participants are more likely to share sensitive data in return; (3) when participants are aware of the agents’ presence, they reduce classified sharing and increase public sharing; and (4) agents that share both public and classified information lead to decreased public and increased classified sharing. These results provide insight into the role of artificial agents in shaping communication behaviors in secure environments and inform strategies for training and design in knowledge-sensitive organizational settings.

1. Introduction

Information sharing is essential for knowledge production and organizational decision-making, providing the foundation for innovation, learning, and strategic action [1,2,3]. In security-sensitive organizations, such as military units or intelligence agencies, this dynamic is more complicated. Although these entities require shared insight to fulfill their missions, their operational ethos emphasizes confidentiality, hierarchy, and compartmentalization, which limit information exchange. As a result, even innocuous data may be withheld, reinforcing silos and impeding collective intelligence [4,5].

While prior research has examined trust, organizational culture, and knowledge-sharing behaviors [6,7,8], few studies have focused on high-security environments. The tension between maintaining secrecy and fostering effective collaboration presents a research gap that warrants attention. Furthermore, there is limited evidence regarding the potential role of artificial agents in modulating these dynamics under strict security constraints [9,10].

Against this backdrop, this study investigates whether intelligent software agents can influence information-sharing behavior in simulated high-security contexts [8]. Using a digital collaborative game designed to replicate intelligence-community dynamics, we embed agents that selectively disseminate public and classified information. Participants, drawn from security-sensitive and non-sensitive organizations, engage in the game over three rounds, providing rich data on human–agent interaction and disclosure behavior.

The objectives are to compare sharing patterns across participant groups, to examine the agents’ impact on human willingness to share, and to evaluate how variations in agent behavior affect information disclosure. To this end, we present a novel experimental design that combines experimental gaming, behavioral analysis, and AI to address real-world challenges in trust, secrecy, and communication: intelligent software agents are embedded in a controlled, game-based simulation. The agents were autonomous participants in the digital environment, programmed to share information using one of two strategies: classified-only sharing or mixed public-and-classified sharing. In round two, participants were unaware of the agents’ presence, while in round three, they were informed that agents were among them. This design enabled us to observe how agent behavior and transparency influence human information-sharing dynamics. Its distinguishing feature is the ability to simulate sensitive organizational conditions while safely analyzing real behavioral responses, providing a new framework for studying trust, disclosure, and AI–human interaction under secrecy constraints.

The paper is organized as follows: Section 2 reviews the theory on knowledge sharing, trust, and agent-human interaction; Section 3 describes the game-based methodology and analytical procedures; Section 4 presents the empirical findings; Section 5 discusses theoretical and practical implications; Section 6 outlines the limitations; Section 7 proposes directions for future research; and Section 8 concludes with recommendations for policy and system design.

2. Theoretical Foundation

This section begins with a brief description of the special characteristics of security-sensitive organizations in reference to information sharing. Next, we explain how we develop indicators for measuring information-sharing interactions among community members. Finally, we describe software agents as a method of enhancing information sharing in a game environment for members of the intelligence community.

2.1. The Conflict of Information Sharing in Intelligence Communities

Information sharing has been described as interactive and ‘socially distributed,’ as it occurs via applications and transdisciplinary collaborations [11]. However, sharing information leads to greater knowledge production when people share some overlapping research interests [4], and collaboration fueled by cultural fit has been shown to produce abundant new knowledge [10]. In other words, knowledge production requires some degree of echo chamber.

Information sharing relies on organizational culture, and security-sensitive organizations are no exception. Security-sensitive communities (e.g., military and intelligence units) exhibit more tightly connected communication patterns than non-sensitive organizations, reflecting higher trust and cohesion [12,13]. Effective intelligence work requires a sense of community among all those working closely with confidential information, so understanding the dynamics that arise from the organization’s culture is critical. In the intelligence community, the exchange of confidential information is actively restricted, and this restriction carries over to non-confidential information. Organizational culture, especially norms around secrecy and trust, directly influences network topology, which in turn affects the extent and quality of knowledge sharing [12]. Success in obtaining secret intelligence, and the sources and methods by which it is acquired, must be concealed from outside gaze in a working environment where reticence, if not outright concealment, is second nature. The shared risks and the need for mutual support among insiders create strong interpersonal bonds but at times create tensions with those on the outside [5]. Therefore, dedicated methods are needed to enhance the sharing of non-confidential intelligence information.

Although the intelligence community strives to adopt and adapt to technological advances, delays in adopting new paradigms create a gap between technological advances and the functioning of security-sensitive organizations [9]. A similar phenomenon occurs in governmental organizations and federal agencies, where data are private, secure, and archived [14]. Several difficulties and challenges prevent the implementation of new approaches to information sharing through advanced shared spaces. In general, group members consistently focus on information held by all members at the expense of information that is not shared by all; one reason is that common knowledge is mentioned more often during group discussions [15]. In intelligence communities specifically, additional obstacles are known. First, there are the inhibiting intelligence traditions of secrecy and compartmentalization. Second, there is differentiation and competition among the units of the intelligence community. Third, there is a culture of “knowledge is power,” whereby information is revealed only when necessary [16].
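The common-knowledge effect described above can be made concrete with a simple information-sampling calculation. The sketch is illustrative and rests on an assumption that is not part of this study: each group member who holds an item mentions it independently with probability p, so an item held by n members surfaces with probability 1 - (1 - p)^n. The group size and the value of p are hypothetical.

```python
# Illustrative information-sampling calculation (an assumption, not this study's model):
# every member who holds an item mentions it independently with probability p.


def mention_probability(p: float, holders: int) -> float:
    """Probability that at least one of `holders` members mentions the item."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 1.0 - (1.0 - p) ** holders


group_size = 5  # hypothetical group size
p = 0.3         # hypothetical per-member mention probability

shared = mention_probability(p, group_size)  # common knowledge: held by everyone
unshared = mention_probability(p, 1)         # held by a single member only
print(f"shared item: {shared:.3f}, unshared item: {unshared:.3f}")
# prints "shared item: 0.832, unshared item: 0.300"
```

Under these assumptions, an item known to all five members is almost three times as likely to be raised as an item held by one member, illustrating why unshared information tends to stay hidden.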

The ability of groups to share and use information is critical for group coordination and decision-making. Therefore, a shift toward a “sharing is power” approach is called for [16]. The conflict between the intelligence communities’ goal of encouraging information sharing, on the one hand, and the organizational culture and national-security requirements that constrain it, on the other, must be addressed. The current study aims to measure and enhance information sharing in intelligence communities. To quantify the baseline of the issue, the first hypothesis compares the information-sharing behavior of security-sensitive personnel with that of people not affiliated with security-sensitive organizations:

H1.

Security-sensitive personnel share information at a slower rate than people not affiliated with security-sensitive organizations.

The next section introduces software agents and describes text interactions within intelligence communities as an indicator for measuring information sharing.

2.2. Information Sharing in Digital Games

We seek to understand the processes that affect information sharing, in particular among personnel in security-sensitive organizations. One way to understand and measure information sharing in an intelligence-based social network, where information is confidential, classified, and hidden, is to examine text interactions between members. Persistent exchange of text messages on virtual platforms gives rise to interactivity. When person A communicates with person B using computer software, interactivity is defined as the degree of B’s reaction [17]. Interactivity is a psychological factor that varies across communication technologies, contexts, and perceptions [18]. Organizations strive to enhance interactivity and information sharing while taking people’s attitudes into account [19]. This study refers to communities engaged in the production of intelligence knowledge, where information sharing is carried out through interactions among community members. Recent developments in artificial intelligence, however, suggest that interactivity may also occur with software agents, alongside human–human conversations.

Intelligent software agents have been shown to affect human behavior in various environments and settings. Mubin et al. [7] incorporated social robots into human–human collaborative settings and showed an improvement in meeting effectiveness. Chalamish and Kraus [20] successfully used an intelligent software agent as a mediator in human negotiations. AI agents have also been incorporated into games to identify and manipulate human-player behavior [21]. In the present study, human players’ strategies without software agents are first documented and analyzed. Next, software agents are designed to affect the human players’ behavior in a desired way, namely, to encourage text communication among them. Given the behavioral effect that intelligent agents have on humans, the second hypothesis is as follows:

H2.

Text messaging by intelligent software agents increases the rate of text messages among security-sensitive personnel.

In a study that aimed to create a superior artificial intelligence (AI) teammate for human–machine collaborative games, Sui et al. [22] found that people clearly prefer a rule-based AI teammate (SmartBot), that is, an agent that acts according to predefined rules, over a state-of-the-art learning-based AI teammate, that is, an agent that adapts its behavior to the ongoing game, across nearly all subjective metrics. The learning-based agent was generally viewed negatively, despite no statistical difference in game score. Cruz, Uresti, and Adolfo [23], in their exploration of a generic flow framework for game AI, point to the importance of controlling behavior adaptation in game AI to create a sense of flow and immersion.

The human players’ single objective is to play the game: to stay alive, reach the end, and win. The software agents act as “super-players” in the sense that, in addition to playing the game, they have a further objective, which is to influence the human players’ behavior. They cannot be distinguished visually from human players, and their behavior is intelligent in the sense that, during the game, they make their own decisions about which information item to share and with whom, according to their defined strategy. They are not avatars controlled by the researchers.

We are interested in comparing the influence of different software-agent behavior strategies on the human players. For the purposes of this research, two strategies were defined and implemented, and their effects on the experiment participants from the intelligence community were compared. The strategies were defined as follows:

‘complete’, where the agents shared both public and confidential information items with the human players;

‘classified’, where the agents shared only confidential information items with the human players.

The aim is to observe the human players’ behavior and detect whether it changes relative to their earlier knowledge-sharing behavior. For example, a person who was reluctant to share knowledge may begin to share more, or a person who had no problem sharing knowledge may stop doing so. Given the behavioral effect that different software-agent strategies might have on humans, the third hypothesis is:

H3.

Intelligent software agents’ strategy of sharing classified information increases the sharing behavior of classified information by humans in intelligence communities.

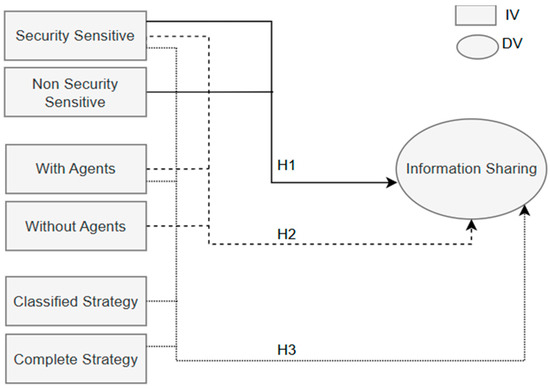

To summarize, Figure 1 incorporates all hypotheses and shows the relation between them in the research model, where H1 compares the two populations and H2–H3 apply to security-sensitive personnel.

Figure 1.

Research model.

3. Method

The research method chosen is a game-based experiment.

To examine information-sharing processes among members of a classified community, this study addresses two requirements: (1) the integration of software agents whose behavior can be controlled and whose impact on the community’s information-sharing processes can be measured, and (2) the embedding of community members and agents in a shared collaborative game environment.

In the game, players are exposed to public and confidential information. They can share information in order to improve their chances of making progress in the game. To examine the players’ information-sharing culture, the game incorporates artificial-intelligent software agents, which are programmed to share information according to two strategies explained below. At the end of the three game sessions, all text messages exchanged between the players are saved.

The following sections detail the game and the strategies of the software agents.

3.1. Game Story and Rules

The game story is about a planet called Mercury. The main goal of the players in the game is to colonize Mercury without being infected by a lethal and contagious virus. During the game, the players can exchange text messages in order to identify infected players among them. In rounds 2 and 3, the game incorporates artificial-intelligent software agents, which exchange messages with the other players.

The game chosen for the information-sharing measurement is an interaction-based multiplayer game. The game is based on two well-known games: “Werewolf” and “Guess Who?”. In the Werewolf game, players, known as villagers, guess who the secret werewolves among them are, before the werewolves kill them. In the “Guess Who?” game, players have to guess the identity of the other players by asking yes/no questions. Based on the integration of these two games, we designed the Mercury interaction-based game, as follows.

The year is 2077. A group of 20 people were sent to colonize the planet Mercury. A week after arriving at their destination, one of the group members was found dead. After investigating the case, players discover that at least one member has been infected with the Sukuna virus. The colony has to find the infected members and kill them before they spread the virus to others and kill the entire colony. Each colony member has his or her own combination of attributes, including hair color, skin color, clothes, and gender.

At the beginning of the game, all colony members receive the same public information (common knowledge) about one attribute value of one of the infected players (killers), for example, light skin or dark skin. In addition, once a day, each colony member receives an additional attribute value of one of the killers, which is confidential and known only to him or her. Only by interacting and sharing information can colony members improve their chances of identifying the killers and saving themselves. Every night, while the colony members sleep, the killers, who are infected with the Sukuna virus, vote on which colony member to kill the following day.
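Why pooling clues helps can be illustrated with a small candidate-elimination sketch in the spirit of “Guess Who?”. It is a hypothetical simplification, not the game’s actual implementation: it assumes a single killer, so that every clue describes the same person, and the colonists, names, and attribute values are invented. Each clue removes the colonists whose attributes do not match it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Colonist:
    name: str
    hair: str
    skin: str
    clothes: str
    gender: str


def consistent_suspects(colony, clues):
    """Colonists matching every clue; `clues` maps an attribute name to the killer's value.

    Simplifying assumption: a single killer, so all clues describe the same person.
    """
    return [c for c in colony
            if all(getattr(c, attr) == value for attr, value in clues.items())]


# Hypothetical four-member colony.
colony = [
    Colonist("Ada", "dark", "light", "suit", "female"),
    Colonist("Ben", "blond", "light", "uniform", "male"),
    Colonist("Cara", "dark", "dark", "uniform", "female"),
    Colonist("Dana", "dark", "light", "uniform", "female"),
]

public = {"skin": "light"}              # common knowledge
own = {**public, "hair": "dark"}        # plus this player's confidential clue
pooled = {**own, "clothes": "uniform"}  # plus a clue shared by another player

for label, clues in [("public", public), ("own", own), ("pooled", pooled)]:
    print(label, [c.name for c in consistent_suspects(colony, clues)])
```

With only the public clue, three suspects remain; adding the player’s own confidential clue leaves two; a single clue shared by another player then identifies the killer.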

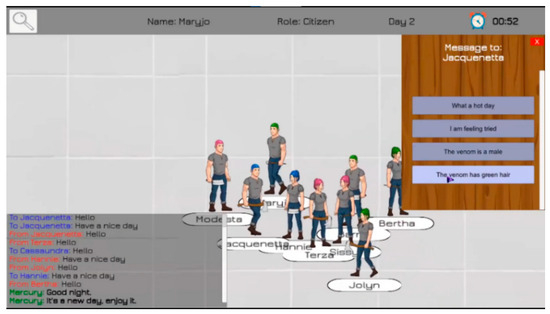

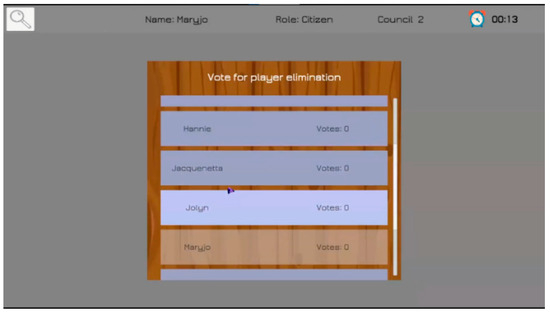

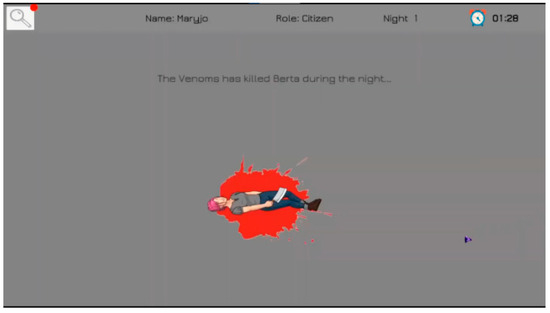

Figure 2 displays the main game screen; Figures 3 and 4 show the night screens for voting on eliminations and for the decision on who will die.

Figure 2.

Main game screen with interactions.

Figure 3.

A night screen: voting for eliminations.

Figure 4.

A night screen: participant’s death.

In this research, two software agent strategies were implemented and tested for their effect on the human players—the complete and the classified strategies:

- Software agents employing the complete strategy function as colony members, sharing with other players both confidential and public information with equal probability.

- Software agents using the classified strategy act as colony members who share only confidential information with other colony members.

The software agents do not possess superior knowledge over other colony members, since they receive public and confidential information in the same way as the human players. Each agent acts according to one of the two strategies defined above: “classified” or “complete.”
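The two agent strategies can be expressed as a small decision rule. The sketch assumes that an agent picks the type of item to share on each message and that recipients are chosen uniformly at random; the recipient rule, the `Strategy` enum, and the function names are hypothetical, since the text states only that agents choose which item to share and with whom according to their strategy.

```python
import random
from enum import Enum
from typing import Sequence


class Strategy(Enum):
    COMPLETE = "complete"      # public and confidential items with equal probability
    CLASSIFIED = "classified"  # confidential items only


def choose_item_type(strategy: Strategy, rng: random.Random) -> str:
    """Type of information item the agent shares on its next message."""
    if strategy is Strategy.CLASSIFIED:
        return "confidential"
    return rng.choice(["public", "confidential"])


def choose_recipient(agent: str, players: Sequence[str], rng: random.Random) -> str:
    """Hypothetical recipient rule: any other player, chosen uniformly at random."""
    return rng.choice([p for p in players if p != agent])


rng = random.Random(42)  # seeded so simulated runs are reproducible
players = ["agent-1", "p1", "p2", "p3"]
for strategy in Strategy:
    messages = [(choose_item_type(strategy, rng), choose_recipient("agent-1", players, rng))
                for _ in range(1000)]
    types = [t for t, _ in messages]
    print(strategy.value, types.count("public"), types.count("confidential"))
```

Under the complete strategy the two item types appear in roughly equal numbers, while the classified strategy never emits a public item.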

Every human participant plays three game rounds: (1) no intelligent agent included, (2) intelligent agents are included without the human participants’ awareness, (3) intelligent agents are included with the human participants’ awareness.

3.2. Procedure

Three experiments were conducted. Every experiment included three game rounds for each player. Group 1 included academic adults who are not security-sensitive personnel. Groups 2 and 3 included security-sensitive personnel. Participants volunteered and were not rewarded.

Subjects: Thirty volunteers took part in the experimental treatments: 22 academic adults and 8 undergraduate students. Two of them did not finish the game, leaving 28 participants in the analysis. Each participant took part in one experiment following a briefing. A note about the sample size: security-sensitive personnel are restricted in obtaining permission to participate in experiments and to have their behavior observed.

Variables: Independent variables: (1) Type of participant: a person belonging to the intelligence community or not. (2) Integration of artificial-intelligent software agents: yes or no. (3) Artificial intelligent agents’ strategy: complete strategy or classified strategy.

The dependent variable in all three experiments: information sharing (explained next).

Information sharing was measured by the type and number of text interactions between participants. Participants could choose to share three types of information: trivial (e.g., “today is a beautiful day”), public, or confidential. The same content can fall into either of the last two types: a message such as “the killer has a knife” is public when all participants hold that information and confidential when only the sender holds it. Each message from participant A to participant B was counted as one interaction, with the information type, agent strategy, and round number recorded as its metadata. Table 1 summarizes the experiments and participants.
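The coding scheme above can be sketched as a record type plus a classification rule. The field names, the representation of a clue as an (attribute, value) pair, and the `classify` helper are illustrative assumptions rather than the study’s actual logging format. The sketch shows that the same clue counts as public or confidential depending on who holds it, and that interactions can then be tabulated by round and type.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    sender: str
    receiver: str
    info_type: str  # "trivial", "public", or "confidential"
    strategy: str   # agent strategy in the experiment: "complete" or "classified"
    round_no: int   # 1 = no agents, 2 = agents unknown, 3 = agents known


def classify(clue, public_clues, sender_private_clues):
    """Assumed coding rule: no clue -> trivial; clue held by all -> public;
    clue held only by the sender -> confidential."""
    if clue is None:
        return "trivial"
    if clue in public_clues:
        return "public"
    if clue in sender_private_clues:
        return "confidential"
    raise ValueError(f"unclassifiable clue: {clue!r}")


public_clues = {("skin", "light")}
p1_private = {("hair", "dark")}
log = [
    Interaction("p1", "p2", classify(None, public_clues, p1_private), "classified", 2),
    Interaction("p1", "p3", classify(("skin", "light"), public_clues, p1_private), "classified", 2),
    Interaction("p1", "p2", classify(("hair", "dark"), public_clues, p1_private), "classified", 2),
]
counts = Counter((i.round_no, i.info_type) for i in log)  # tallies per (round, type)
print(counts)
```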

Table 1.

Information regarding the three experiments.

Every participant played three rounds in one complete game: (1) no intelligent software agents were included; (2) the human participants were unaware of the intelligent software agents’ presence; (3) the human participants were notified of the intelligent software agents’ presence.

Data from participants who did not finish the game were omitted from the statistical analysis (2 participants, owing to connectivity issues).

4. Results

The dependent variables in this study are the various types of messages exchanged among game participants during the simulation game. We counted the total number of messages and their breakdown into three sub-types: trivial, public, and confidential messages. Descriptive statistics of the dependent variables are displayed in Table 2.

Table 2.

Descriptive statistics of the dependent variable, information sharing messages.

4.1. Information-Sharing Differences Between Security-Sensitive Community and Non-Security-Sensitive Communities

H1 results. To test whether security-sensitive personnel share information differently from non-security-sensitive personnel, an independent-samples t-test was conducted on the interactions of all three information types (trivial, public, and confidential) in experiments 1 (non-intelligence) and 3 (intelligence). The same software-agent strategy was used in both experiments. A statistically significant difference was found, t(916) = 2.263, p = 0.024: security-sensitive personnel shared more information than non-security-sensitive personnel.
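For reference, the statistic reported above can be computed as follows. The text does not state which per-observation score entered the test or whether equal variances were assumed; the sketch assumes Student’s pooled-variance form, under which df = n1 + n2 - 2, so t(916) would correspond to 918 observations in total. The data in the example are hypothetical. Obtaining the p-value requires the t distribution, which Python’s standard library does not provide; `scipy.stats.ttest_ind` returns both the statistic and the p-value.

```python
import math
from statistics import mean, variance


def independent_t(a, b):
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    n1, n2 = len(a), len(b)
    if n1 < 2 or n2 < 2:
        raise ValueError("each sample needs at least two observations")
    df = n1 + n2 - 2
    # statistics.variance is the sample variance (denominator n - 1).
    pooled = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / n1 + 1 / n2))
    return t, df


# Hypothetical scores for two small groups (not the study's data).
t, df = independent_t([1, 2, 3], [4, 5, 6])
print(f"t({df}) = {t:.3f}")  # prints "t(4) = -3.674"
```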

To understand which information is shared, follow-up tests were performed as follows: (1) by type of information, and (2) by game round.

To test whether security-sensitive personnel share types of information differently in different rounds, Cramér’s phi coefficient was calculated for the relationship between participant type (non-intelligence = 0, intelligence = 1) and type of information shared (trivial = 0, public = 1, confidential = 2), separately for each round.

For rounds 1 (no software agents) and 3 (participants were aware of the software agents), no statistically significant association was found between participant type and the type of information shared. In other words, in these rounds the two populations did not differ significantly in the types of information they shared.

Following are the results for round 2 (participants were not aware of software agents in the game):

Results indicate that security-sensitive personnel were significantly more likely to share trivial and public information (N = 126 and N = 46, respectively) than non-security-sensitive personnel (N = 52 and N = 17, respectively). In addition, security-sensitive personnel were significantly less likely to share confidential information (N = 51) than non-security-sensitive personnel (N = 62); Φ = 0.254, p < 0.001. Results are displayed in Table 3.

Table 3.

Number of messages shared in round 2 when the presence of agents was unknown.
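The round 2 association can be recomputed from the counts reported above. The sketch computes Pearson’s chi-square and Cramér’s V = sqrt(χ² / (N(k - 1))), where k is the smaller of the number of rows and columns; for a two-row table k = 2, so V reduces to the phi coefficient. Only the counts come from the text; the function is an illustrative implementation.

```python
import math


def cramers_v(table):
    """Pearson chi-square and Cramér's V for an r x c contingency table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            chi2 += (observed - expected) ** 2 / expected
    k = min(len(row_totals), len(col_totals))
    return chi2, math.sqrt(chi2 / (n * (k - 1)))


# Round 2 counts reported in the text; columns are trivial, public, confidential.
round2 = [
    [126, 46, 51],  # security-sensitive personnel
    [52, 17, 62],   # non-security-sensitive personnel
]
chi2, v = cramers_v(round2)
print(f"V = {v:.3f}")  # prints "V = 0.254"
```

The result matches the reported Φ = 0.254, which suggests the reported coefficient was computed on this 2 × 3 table.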

Hypothesis H1 was accepted in the sense that security-sensitive personnel share information differently from non-security-sensitive personnel; contrary to the predicted slower rate, however, they shared more messages overall while preserving confidential information.

4.2. The Effect of Software-Intelligent Agents

To test whether software agents affect information sharing, Cramér’s phi coefficient was calculated for the relationship between round (no AI = 0, unaware of AI = 1, aware of AI = 2) and type of information shared (trivial = 0, public = 1, confidential = 2). Data from experiments 2 (intelligence) and 3 (intelligence) were analyzed.

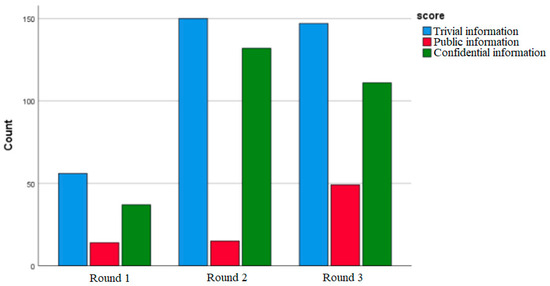

Results indicate that when software agents share confidential information, participants from security-sensitive organizations are significantly more likely to share trivial and confidential information (N = 150, N = 132, respectively) than without software agents (N = 56, N = 37, respectively).

Further, when security-sensitive personnel were aware of the software agents, they were significantly more likely to share public information and less likely to share confidential information (N = 49 and N = 111, respectively) than when they were unaware of the AI agents (N = 15 and N = 132, respectively); Φ = 0.121, p < 0.001.

Figure 5 displays the type of information shared by game round for experiments 2 and 3: in the first round, no agents were present; in the second round, players were unaware of the agents; and in the third round, players were aware that agents were playing among them.

Figure 5.

Information sharing by game rounds.

Hypothesis H2 is accepted: software agents affect information sharing by security-sensitive personnel. When software agents share confidential information, security-sensitive personnel increase their sharing of trivial and confidential information. However, when they are aware of the software agents, security-sensitive personnel reduce their sharing of confidential information and increase their sharing of public information.

4.3. The Effect of Software Intelligent Agents’ Strategy

A one-way between-subjects ANOVA was conducted to compare the effect of the software agents’ strategy on information sharing in security-sensitive organizations. The test was conducted on experiment 2 (security-sensitive personnel only, classified strategy) and experiment 3 (security-sensitive personnel only, complete strategy). There was a statistically significant effect of the software agents’ strategy on information sharing across the three conditions, F(2, 1237) = 7.187, p = 0.001.
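The F statistic follows from the standard one-way ANOVA decomposition into between-group and within-group sums of squares. The sketch uses hypothetical toy data; with k groups and N observations, df_between = k - 1 and df_within = N - k, so the reported F(2, 1237) corresponds to k = 3 groups and N = 1,240 observations.

```python
from statistics import mean


def one_way_anova(*groups):
    """F statistic for a one-way between-subjects ANOVA; returns (F, df_between, df_within)."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Between-group variation: group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group variation: observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


# Hypothetical toy data for three conditions.
f, df_b, df_w = one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(f"F({df_b}, {df_w}) = {f:.3f}")  # prints "F(2, 6) = 27.000"
```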

Post hoc comparisons using Tukey’s test indicated that information sharing under the complete strategy differed significantly from information sharing under the classified strategy. Table 4 reports the descriptive statistics.

Table 4.

Number of messages shared per types of information and strategy by security-sensitive personnel.

Table 5 reports the post hoc test results.

Table 5.

Post hoc results.

Hypothesis H3 is accepted: the strategy of the software agents affects the information-sharing behavior of security-sensitive personnel. When agents share confidential information, security-sensitive personnel mimic their behavior and share more confidential information. When agents share complete information, that is, both public and confidential information, public information sharing decreases and confidential information sharing increases.

5. Discussion

Security-sensitive organizations face a paradox: while knowledge sharing is essential for effective decision-making, their foundational culture is rooted in confidentiality, hierarchy, and compartmentalization. These cultural norms shape communication behavior and, by extension, the degree and nature of knowledge exchange within such communities. This study sought to explore how information-sharing patterns can be positively influenced in security-sensitive organizations by using artificial-intelligence (AI) agents embedded within a game-based simulation.

The primary contribution of this study is its novel integration of autonomous software agents, designed to share information using predefined strategies, into an interactive serious game simulating intelligence community dynamics. This methodology enabled real-time observation of how such agents influence human behavior under different conditions of transparency and information strategy. Prior studies have explored the effects of AI agents in collaborative or negotiation contexts [20,21] and in human–machine teams [22], but have rarely examined how AI can actively modulate behavior within security-sensitive environments.

Three empirical findings support our hypotheses and advance the field. First, consistent with earlier work, we confirm that personnel in intelligence communities exhibit different communication behaviors from those in non-sensitive organizations, specifically sharing more public information while withholding confidential disclosures [12]. Second, software agents significantly influence this behavior: when agents share only confidential information, security-sensitive participants increase their own confidential and trivial sharing. Third, when participants are informed of the agents’ presence, they adopt a more cautious communication profile, reducing confidential sharing while increasing public sharing. This suggests that agent transparency can significantly alter trust and disclosure patterns.

Furthermore, our findings align with research on behavioral mimicry in team settings: participants mirrored the agent’s strategy when it shared classified content, a phenomenon supported by recent studies on social influence and AI-guided interaction [24]. We also observed a confidence calibration effect [3]: awareness of non-human participants led to more conservative disclosure, with participants reducing classified sharing and increasing public disclosure. This aligns with findings in the broader human–AI interaction literature on how awareness of AI presence moderates trust and engagement [25].

Compared to previous studies, which often relied on surveys, abstract simulations, or student samples [6,26], our work advances both methodology and application. We combine live, interactive decision-making tasks with embedded, strategy-driven AI agents, offering ecologically valid insight into trust, secrecy, and communication in contexts where information sensitivity is paramount.

Notably, this study transitions knowledge-sharing research from description to behavioral intervention. By simulating realistic, high-stakes communication dynamics with AI influence in a secure but ethical setting, we provide a robust testbed for designing future decision-support tools and training protocols in defense, intelligence, and critical infrastructure domains.

6. Limitations

Simulations and games are partial reflections of real situations. The game in the current study serves as a controlled space to examine behaviors that are otherwise difficult to observe safely. In practice, software agents could take the form of AI-driven assistants or decision-support tools within secure systems, guiding collaboration without violating confidentiality. We aim to provide a conceptual model, rather than a direct replication in real life.

7. Future Work

This study did not examine the structure of the networks formed by the interactions, in which each player is a node and each information-sharing interaction is an arc between two players. Therefore, it offers no comparison between network structure and the way information circulates. Future research may employ social network analysis techniques to understand differences in the structure and flow of information.
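The network representation proposed above can be sketched directly from an interaction log. The sketch is an illustration under stated assumptions: messages are (sender, receiver) pairs, arcs are directed and weighted by message count, self-messages do not occur, and the player names are hypothetical. Dedicated libraries such as NetworkX offer the same measures (for example, `DiGraph` and `density`) along with many others.

```python
from collections import defaultdict


def build_network(messages):
    """Directed weighted graph: nodes are players; arc (a, b) counts messages from a to b."""
    arcs = defaultdict(int)
    for sender, receiver in messages:
        arcs[(sender, receiver)] += 1
    return dict(arcs)


def weighted_degrees(arcs):
    """Out- and in-degree of each player, weighted by message counts."""
    out_deg, in_deg = defaultdict(int), defaultdict(int)
    for (a, b), weight in arcs.items():
        out_deg[a] += weight
        in_deg[b] += weight
    return dict(out_deg), dict(in_deg)


def density(arcs, n_players):
    """Fraction of the n(n - 1) possible directed arcs that occur at least once."""
    return len(arcs) / (n_players * (n_players - 1))


# Hypothetical log of (sender, receiver) pairs.
messages = [("p1", "p2"), ("p1", "p2"), ("p2", "p3"), ("p3", "p1")]
arcs = build_network(messages)
out_deg, in_deg = weighted_degrees(arcs)
print(arcs, out_deg, in_deg, density(arcs, 3))
```

Comparing such measures between the security-sensitive and non-sensitive groups, or across rounds, would be one way to relate network structure to the sharing patterns reported here.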

Future research may also implement additional software-agent strategies, for example, different information-sharing frequencies (should sharing be continuous or intermittent?) or different sharing targets (should agents share with everyone or only with selected players?).

8. Conclusions

This study contributes to a deeper understanding of how intelligent software agents can be employed to enhance information-sharing behavior in security-sensitive organizations. These environments are uniquely constrained by cultural and procedural secrecy yet rely heavily on internal knowledge exchange for mission-critical operations. By designing and implementing a game-based simulation with embedded AI agents, we were able to observe, in a controlled setting, the behavioral dynamics triggered by agent strategies in different awareness scenarios.

Our findings suggest three key conclusions. First, personnel from security-sensitive organizations exhibit information-sharing behaviors distinct from those of participants in non-sensitive settings, favoring public and trivial exchanges over confidential disclosures. Second, software agents that share classified information can stimulate participants to reciprocate, especially when participants are unaware of the agents’ presence. Third, awareness of the agents’ presence reduces classified sharing and increases public sharing, suggesting that trust, transparency, and perceived surveillance shape communication behavior.

The originality of this research lies in its use of dialogical, autonomous software agents as behavior influencers rather than passive observers. Unlike previous studies that examined information exchange in static or survey-based contexts, this study offers a dynamic, interaction-based approach, with greater ecological validity than survey designs, for assessing and training knowledge sharing in high-security settings.

Nevertheless, the study has limitations. The sample was relatively small (28 participants) and drawn from a specialized population; moreover, the simulation abstracted away real-world complexities, which may limit generalizability. Future research should incorporate larger and more diverse samples, include longitudinal designs to observe sustained behavior changes, and integrate advanced network analysis tools to map the structure of interaction patterns over time.

Ultimately, these insights are valuable for designing future training systems, decision-support platforms, and human–AI collaboration frameworks in confidential environments. As AI systems are increasingly integrated into organizational workflows, understanding their influence on human behavior, especially in contexts of trust and secrecy, is both timely and critical.

Author Contributions

Conceptualization, Y.R., D.R.R., M.C. and V.P.; methodology, Y.R., D.R.R., M.C. and V.P.; software, Y.R. and M.C.; validation, Y.R., D.R.R., M.C. and V.P.; formal analysis, Y.R. and D.R.R.; writing—original draft preparation, Y.R., D.R.R., M.C. and V.P.; writing—review and editing, Y.R. and D.R.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

References

- Rafaeli, S.; Raban, D.R. Information sharing online: A research challenge. Int. J. Knowl. Learn. 2005, 1, 62–79.

- Hussein, A.A.; Hussein, A.A.; Hassan, W.J.; Kadhim, H.A.K.; Hussein, A.M. Knowledge Sharing and Decision-Making Effectiveness in Higher Education: Empirical Evidence from a University. Acad. J. Interdiscip. Stud. 2025, 14, 334.

- Danko, L.; Crhová, Z. Rethinking the Role of Knowledge Sharing on Organizational Performance in Knowledge-Intensive Business Services. J. Knowl. Econ. 2024, 1–21.

- Lane, J.N.; Ganguli, I.; Gaule, P.; Guinan, E.; Lakhani, K.R. Engineering serendipity: When does knowledge sharing lead to knowledge production? Strat. Manag. J. 2021, 42, 1215–1244.

- Omand, D. Creating intelligence communities. Public Policy Adm. 2010, 25, 99–116.

- Hsu, M.H.; Ju, T.L.; Yen, C.H.; Chang, C.M. Knowledge sharing behavior in virtual communities: The relationship between trust, self-efficacy, and outcome expectations. Int. J. Hum. Comput. Stud. 2007, 65, 153–169.

- Mubin, O.; D’Arcy, T.; Murtaza, G.; Simoff, S.; Stanton, C.; Stevens, C. Active or passive?: Investigating the impact of robot role in meetings. In Proceedings of the IEEE RO-MAN 2014—23rd IEEE International Symposium on Robot and Human Interactive Communication: Human-Robot Co-Existence: Adaptive Interfaces and Systems for Daily Life, Therapy, Assistance and Socially Engaging Interactions, Edinburgh, Scotland, UK, 25–29 August 2014; pp. 580–585.

- Noy, A.; Raban, D.R.; Ravid, G. Testing social theories in computer-mediated communication through gaming and simulation. Simul. Gaming 2006, 37, 174–194.

- Siman-tov, D.; Alon, N. The Cybersphere Obligates and Facilitates a Revolution in Intelligence Affairs. Cyber Intell. Secur. 2018, 2. Available online: https://www.inss.org.il/publication/cybersphere-obligates-facilitates-revolution-intelligence-affairs/ (accessed on 31 March 2021).

- Vick, T.E.; Nagano, M.S.; Popadiuk, S. Information culture and its influences in knowledge creation: Evidence from university teams engaged in collaborative innovation projects. Int. J. Inf. Manag. 2015, 35, 292–298.

- Hessels, L.K.; van Lente, H. Re-thinking new knowledge production: A literature review and a research agenda. Res. Policy 2008, 37, 740–760.

- Rusho, Y.; Raban, D.R.; Simantov, D.; Ravid, G. Knowledge Sharing in Security-Sensitive Communities. Futur. Internet 2025, 17, 144.

- Rusho, Y.; Nudler, I.; Ravid, G. Knowledge Development in Communities During Crises: A Discourse Comparison Tool; EasyChair: Stockport, UK, 2023.

- Criado, J.I.; Sandoval-Almazan, R.; Gil-Garcia, J.R. Government innovation through social media. Gov. Inf. Q. 2013, 30, 319–326.

- Stasser, G.; Titus, W. Hidden Profiles: A Brief History. Psychol. Inq. 2003, 14, 304–313.

- Siman-tov, D.; Ofer, G. Intelligence 2.0: A New Approach to the Production of Intelligence. Mil. Strateg. Aff. 2013, 5, 31–51.

- Rafaeli, S. From new media to communication. Sage Annu. Rev. Commun. Res. Adv. Commun. Sci. 1988, 16, 110–134.

- Kiousis, S. Interactivity: A concept explication. New Media Soc. 2002, 4, 355–383.

- Pai, F.Y.; Yeh, T.M. The effects of information sharing and interactivity on the intention to use social networking websites. Qual. Quant. 2014, 48, 2191–2207.

- Chalamish, M.; Kraus, S. AutoMed: An automated mediator for multi-issue bilateral negotiations. Auton. Agent. Multi. Agent. Syst. 2012, 24, 536–564.

- Peled, N.; Gal, Y.; Kraus, S. A study of computational and human strategies in revelation games. Auton. Agent. Multi. Agent. Syst. 2015, 29, 73–97.

- Siu, H.C.; Pena, J.D.; Chen, E.; Zhou, Y.; Lopez, V.J.; Palko, K.; Chang, K.C.; Allen, R.E. Evaluation of Human-AI Teams for Learned and Rule-Based Agents in Hanabi. NeurIPS Proceedings. 2021. Available online: https://proceedings.neurips.cc/paper/2021/hash/86e8f7ab32cfd12577bc2619bc635690-Abstract.html (accessed on 9 January 2022).

- Arzate Cruz, C.; Ramirez Uresti, J.A. Player-centered game AI from a flow perspective: Towards a better understanding of past trends and future directions. Entertain. Comput. 2017, 20, 11–24.

- Zvelebilova, J.; Savage, S.; Riedl, C. Collective Attention in Human-AI Teams. arXiv. 2024. Available online: https://arxiv.org/pdf/2407.17489 (accessed on 15 July 2025).

- Hemmer, P.; Westphal, M.; Schemmer, M.; Vetter, S.; Vössing, M.; Satzger, G. Human-AI Collaboration: The Effect of AI Delegation on Human Task Performance and Task Satisfaction. In Proceedings of the International Conference on Intelligent User Interfaces; Proceedings IUI. Association for Computing Machinery: New York, NY, USA, 2023; pp. 453–463.

- Dawes, S.S.; Gharawi, M.A.; Burke, G.B. Transnational public sector knowledge networks: Knowledge and information sharing in a multi-dimensional context. Gov. Inf. Q. 2012, 29, S112–S120.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).