1. Introduction

Database Management Systems (DBMSs) often store sensitive information, such as company secrets, financial data, and personal privacy details [1]. However, these systems are vulnerable to both external and internal threats. External threats include social engineering attacks, while internal threats arise from unauthorised access, privilege abuse, and vulnerabilities like SQL injection [2].

Kaneko et al. [3] proposed a privacy-enhancing approach involving the deployment of resource-efficient Docker containers within cloud environments. This method isolates sensitive information, enabling flexible implementation of enhanced privacy protections and management strategies as needed. Given the sharp increase in the number of network security threats, such as vulnerabilities, attacks, data breaches, and privacy violations [4], this research aims to address these challenges by developing a robust security framework for databases. This framework will mitigate risks associated with external attacks [5], while also safeguarding user data from the destructive potential of SQL injection (SQLi) [6].

Cloud computing, meanwhile, has enabled distributed computing and the outsourcing of infrastructure installation, pricing, maintenance, and server scalability. This has led to a profitable computing industry, where data owners often relinquish control over their data despite significant financial investment [7]. To address this, a comprehensive evaluation of security frameworks is necessary, particularly in scenarios requiring frequent user access to data for real-world applications [8]. While various research papers have explored the use of Docker as a framework, there remains a gap in understanding how to leverage Docker from a user-centric perspective to protect both user data and the underlying database. This research aims to bridge this gap by fostering trust between users and the authorities managing centralised databases.

This paper is organised as follows:

Section 1 introduces the database, Docker as a framework, SQL injection (SQLi), the research questions, the research objectives, Docker containers, and security.

Section 2 describes related works, the research objectives, the suitability of the Docker framework, Docker containers, and the security aims of the research.

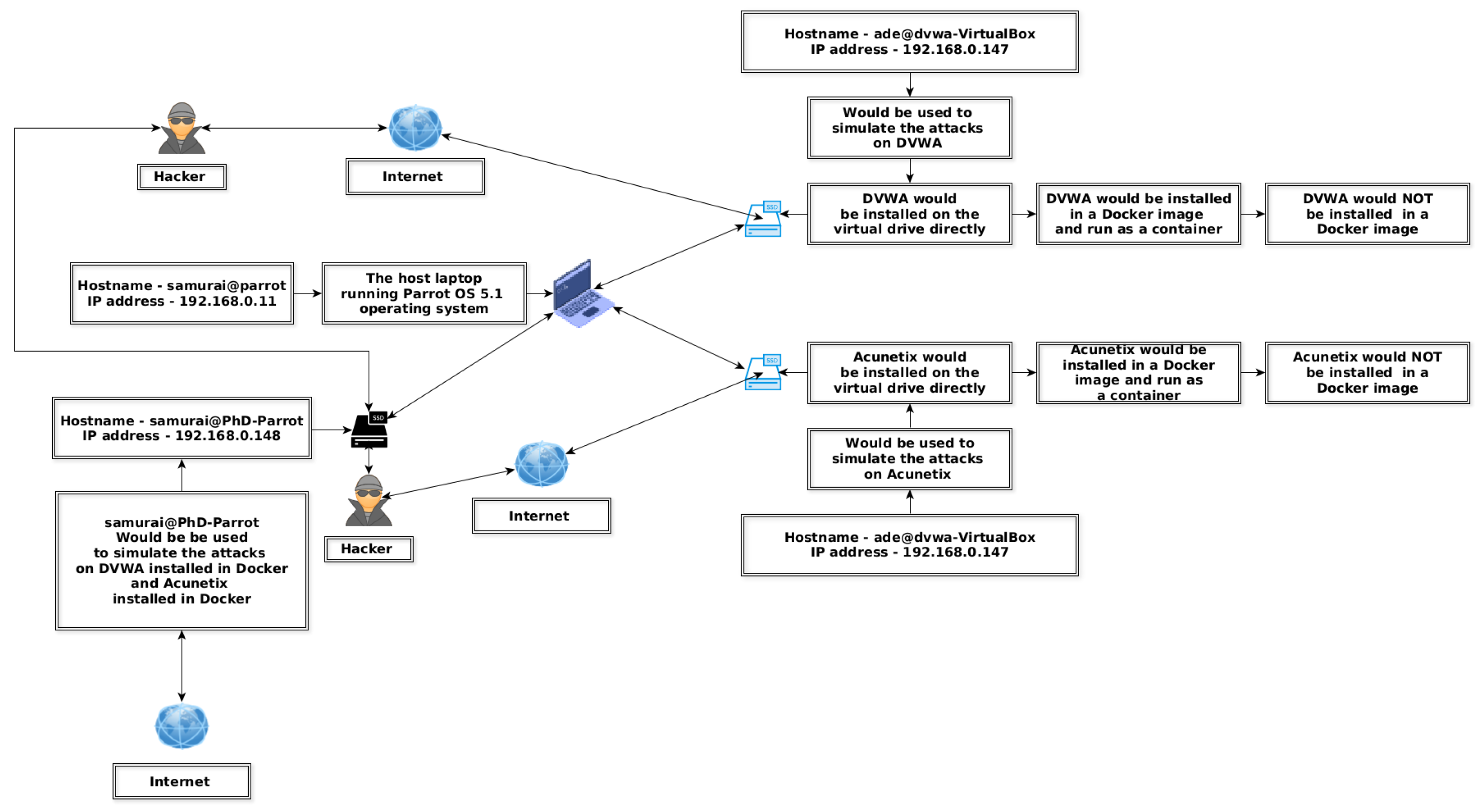

Section 3 consists of the implementation, the hypotheses, the type of variables, the schematic diagram of the laboratory, ethics approval and GDPR, the environment, databases, network analysis, and data collection.

Section 4 presents the results, statistical analysis, tables, and graphs.

Section 5 is a summary of findings, while Section 6 elaborates on the conclusions.

2. Related Works

Table 1 highlights the software used in related works, in particular the Docker images and Docker containers used in their implementations, just as this research paper has used the Docker framework for its implementation. Zhao et al. [1] highlighted the significant challenges posed by data breaches in 2016. Real-time processing databases, designed to handle constantly changing workloads, are particularly susceptible to these threats. Moreover, both external and internal attacks pose serious risks to databases, often targeting sensitive information, such as commercial secrets, bank details, and personal privacy [1]. Wagner et al. [2] further corroborated these findings in their 2017 research. Database Management Systems (DBMSs) are employed to process and store user data.

Furthermore, security mechanisms and access controls, such as audit logs, cannot always be relied upon to prevent data breaches. Users, both legitimate and malicious, may abuse their privileges in order to compromise database security. Databases must be capable of promptly detecting breaches and collecting evidence to facilitate investigations [2]. According to Said and Mostafa [9], insider threats, particularly those involving the misuse of legitimate account privileges, remain a persistent challenge in database security. Detecting such breaches can be extremely difficult. To address this issue, Said and Mostafa proposed an adaptive and efficient database intrusion detection algorithm inspired by the Negative Selection algorithm from artificial immune systems and the Danger Theory model [9].

Two studies published in 2021, by Neto et al. [10] and Algarni et al. [11], emphasise the persistent challenges of data breaches and database security. The increasing reliance on the internet has exacerbated the risks of data leakage and cyber threats. Neto et al. [10] analysed data breaches involving personal information between 2018 and 2019, including the 2019 cyberattack on Capital One, which exposed sensitive customer information [9]. Similarly, Algarni et al. [11] examined the vulnerabilities of modern business systems to cybersecurity threats. While cybersecurity solutions can mitigate attacks on database storage, human errors, such as the loss or theft of devices containing sensitive data or accidental exposure of security credentials, remain significant contributors to security breaches. Common cyberattacks include cross-site scripting (XSS), privilege escalation, and SQL injection (SQLi).
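As a minimal illustration of how an SQLi attack of the kind named above works, the following sketch uses Python's built-in `sqlite3` module. The `users` table, its rows, and the helper function names are hypothetical and are not taken from any of the cited studies; the parameterised variant is shown only as the standard contrast case, not as the mitigation proposed in this paper.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "a-secret"), ("bob", "b-secret")],
    )
    return conn


def lookup_vulnerable(conn, name):
    # The user input is pasted into the SQL text, so it can rewrite the query.
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def lookup_parameterised(conn, name):
    # The input is bound as a value and is never parsed as SQL.
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = build_demo_db()
payload = "nobody' OR '1'='1"  # crafted input that makes the WHERE clause always true

leaked = lookup_vulnerable(conn, payload)   # returns every row in the table
safe = lookup_parameterised(conn, payload)  # no user has this literal name

print(len(leaked), len(safe))
```

With the concatenated query, the crafted input returns every row in the table, whereas the bound parameter is treated as an ordinary (non-matching) name.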

Mahrouqi et al. [12] conducted a notable study in 2016, simulating an SQLi attack in a virtual environment using tools such as VirtualBox, VMware Workstation, Wireshark, and GNS3. This research aimed to identify websites vulnerable to SQLi attacks. Subsequently, Grubbs et al. [6] advocated for the development of strategies to limit the damage SQLi attacks inflict on user data stored in databases. Williams et al. [13] underscored the importance of acquiring knowledge about software vulnerabilities, given the heavy reliance of academic institutions, the private sector, and government entities on database-related software. This research builds upon this foundation by proposing a method to mitigate SQLi attack damage to user data held in databases [6].

Hospitals and banks are among the primary victims of SQLi attacks [14]. Databases accessible via the internet are particularly vulnerable to various types of attacks [15]. As proposed by Kaneko et al. [3], an innovative approach involves storing private information within Docker containers. These containers, which are resource-efficient and designed for cloud environments, enhance privacy protection and can be deployed as needed. This approach aligns with the increasing prevalence of network vulnerabilities, attacks, and data privacy issues in today’s IT sphere, driven by advancements in network technology [4]. This research aims to develop a robust security framework that protects databases from both internal and external threats, specifically addressing SQLi attack mitigation [6].

Moreover, the widespread adoption of distributed computing via the cloud has transformed the computing industry. While outsourcing installation, maintenance, and scalability has proven financially advantageous, data owners often lack direct control over their data despite paying high costs for its security [7]. This research evaluates a security framework through use cases that simulate real-world scenarios, ensuring frequent and secure access to data [8].

The injection of carefully crafted malicious SQL code through web page input can potentially lead to the destruction of a database [11]. In contrast to the findings of this research paper, Wang and Reiter [16] and Hassanzadeh et al. [17] provide a more alarming assessment of data breaches and database security. A significant number of data breaches have been reported globally, with 3950 incidents occurring between November 2018 and October 2019, resulting in the exposure of 60% of victim identities, due to 1665 breaches in various credential databases [16]. This widespread issue has raised serious concerns among both companies and individuals. High-profile data breaches, such as those experienced by Yahoo in 2013 and 2014, LinkedIn in 2012, Marriott International in 2014 and 2018, and Equifax in 2017, demonstrate that many organisations continue to implement inadequate cybersecurity measures, despite advancements in security technology and increased awareness [17].

Similarly, NoSQL databases, which store data in a format different from relational tables, were also targeted by hackers in 2021. While these databases disrupted the market with their scalability, performance, and availability, compromises were often made in other areas, including privacy. NoSQL databases are designed to handle unstructured data, which lack a predefined organisation or data model. This flexibility, however, can come at the cost of privacy features. Data privacy, the right to control how data is collected and disclosed, is a critical concern that cannot be easily overlooked [18]. Furthermore, traditional database services have now moved online. A database is an organised collection of user data; if the security employed for the database is capable of protecting the data it hosts, malicious attacks or threats from hackers can be prevented from stealing that data. This was stated in 2021 by Crooks [19], although as early as 2016 Toapanta et al. [5] had proposed an investigation into how to build a database that would not be vulnerable to internal or external attacks. Meanwhile, modern applications can introduce network problems and extremely high computer loads; nevertheless, the flow of data must remain continuous so that the service is guaranteed. The constant evolution of the scalability and availability of information technology (IT) services is why Docker containers can be of great value to the IT industry [20].

Table 1. Comparison of this research paper and other research papers that have employed Docker.

| Software | What the Software Was Used for |
|---|---|
| Docker machine [21] | Apache Airavata (an open source software suite). |
| Docker containers [22] | Run the Docker containers from different databases. |
| Docker containers [23] | Docker server and Docker client. |
| Docker container networking [24] | Monitoring server and anomaly monitoring system. |
| Docker framework [25] | SDN-Docker-based architecture for IoT. |
| Docker [26] | Virtual machine. |
| Docker [20] | DNS server, HAproxy, and mail server. |
| Docker containers [27] | Run the Docker containers. |
| Docker [28] | Optimise development and increase efficiency of methods. |
| Docker [29] | 5G mobile networks using Docker containers. |
| Docker [30] | Picto Web would be used within a Docker container. |

2.1. Suitability of the Docker Framework in an Environment with High Computational Demands

Moysiadis et al. [31] consider the association of Docker containers as a virtual technology with traditional framing, cloud computing, and Information Communication Technologies (ICT). They also consider the advantages that could be derived from this, including reduced production costs, higher productivity, better security, and scalability with regard to future upgrades and increasing performance. As a result, cloud computing can support the demand for handling large volumes of user data and a large number of end devices [31]. Servers that run big data applications also face growing resource demands: storage use rises as more applications are stored on the servers, and the load must be balanced within each server running these applications. Setting up a Docker Swarm is an appropriate way to address these problems, as it can deploy multiple Docker containers on multiple computer hosts in a very short period [28]. The current debate, highlighted by Singh et al. [28] in 2023, considers the merits of Docker containers as follows:

A Docker Swarm efficiently deals with the deletion and duplication of containers [28].

Docker containers work well with load balancing and when dealing with applications that are complex [28].

A Docker Swarm can effectively manage the employment of Docker containers [28].

Correspondingly, Zou et al. [24], in comparison to Alyas et al. [32], also mention the security side of Docker containers. The stability and security of containers have become important issues with the rejection of thousands of apps and websites, for example, in the collapse of the Amazon cloud, which was built upon a virtual machine cluster and container [33]. More researchers are using Docker because of its reported advantages over traditional virtual machines [24]. Furthermore, according to Hersyah et al. [34], with the aid of Docker containers supported by automation, availability, deployment, redundancy, and the granting of authorisation can be applied securely. Additionally, care should be taken to avoid becoming complacent when using cloud services [34]. For example, the same tools that are meant to help with security and easy navigation within the cloud have been reverse-engineered and used by hackers in attacks against cloud services. Furthermore, the cloud service provider has to make sure that security at its end is up to date with regard to its software and hardware, and that its staff are highly trained. To this end, virtualisation and the cloud are very closely associated with each other [34]. In addition, Qian et al. [35] refer to storing users’ information in the cloud, which involves virtualisation and cloud computing. Compared to the traditional way of utilising virtualisation, Docker virtualisation has lower performance overhead and faster deployment and delivery. Cloud computing, in turn, can handle large amounts of data traffic and reduce the delay in network transmission [35]. Furthermore, Singh et al. [28] support da Silva and Lima [36] by explaining how a Docker Swarm provides a decentralised and fault-tolerant architecture; with the aid of Swarm mode, a Docker Swarm consisting of Docker hosts can be created. In other words, a Docker Swarm can be seen as improving the quality of Docker regarding availability, security, maintainability, reliability, and scalability. A Docker Swarm can reassign containers if it discovers that any one of the containers has failed within the Docker Swarm [28].

2.2. Docker Containers and Security

The primary purpose of Docker containers is to isolate individual microservices effectively [31]. Docker provides a service called Docker Hub (https://hub.docker.com/, accessed on 20 March 2025), the world’s largest library of container images, which allows users to share or search for container images. Alyas et al. [32] support the findings of Moysiadis et al. [31], while emphasising the importance of addressing security, sovereignty, and compliance in cloud computing. This highlights the need for solutions to resolve privacy and security concerns, which remain key barriers to the effective adoption of mobile cloud computing (MCC) in healthcare environments [37].

Moreover, a single computer can host only a limited number of virtual machines, whereas the same computer can run thousands of Docker containers simultaneously, offering superior scalability and mobility. Docker’s ability to operate on almost any platform, coupled with its ease of deployment and maintenance, further underscores its advantages [24].

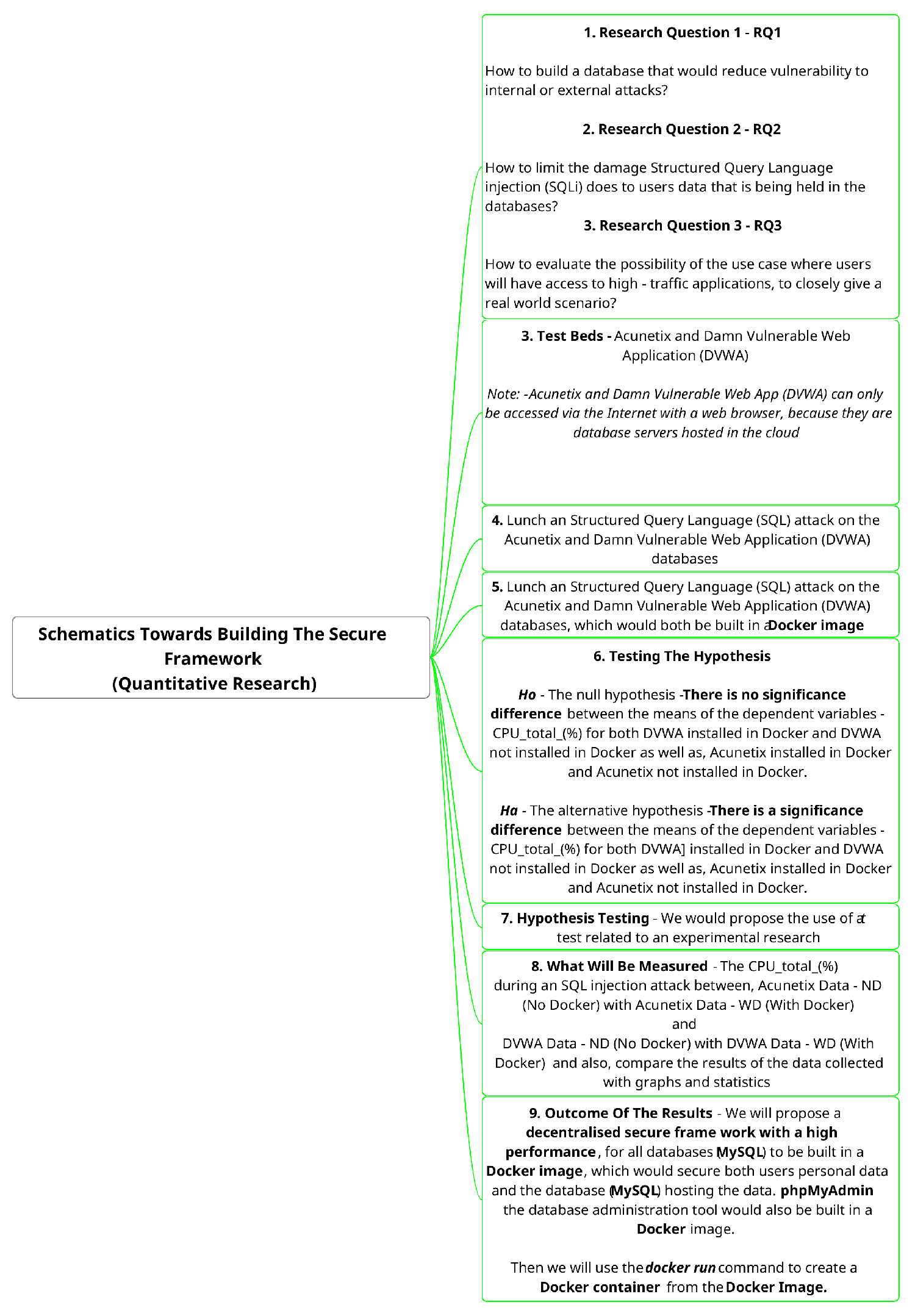

2.3. Aims of Research and Research Objectives

This research aims to design and evaluate a high-performance and secure database system. Specifically, the study focuses on three key objectives as presented below. By addressing these challenges, this research seeks to enhance the overall security and performance of database systems, safeguarding critical information and ensuring reliable service delivery.

Research Objective 1—RO1—Investigation into the development of a database that would not be vulnerable to internal or external attacks and then developing a security framework.

Research Objective 2—RO2—Proposing a way to limit the damage Structured Query Language injection (SQLi) causes to users’ data that are being held in a database.

Research Objective 3—RO3—Evaluating and proposing a security framework with the use case where users will have access to high-traffic applications, to closely represent a real world scenario.

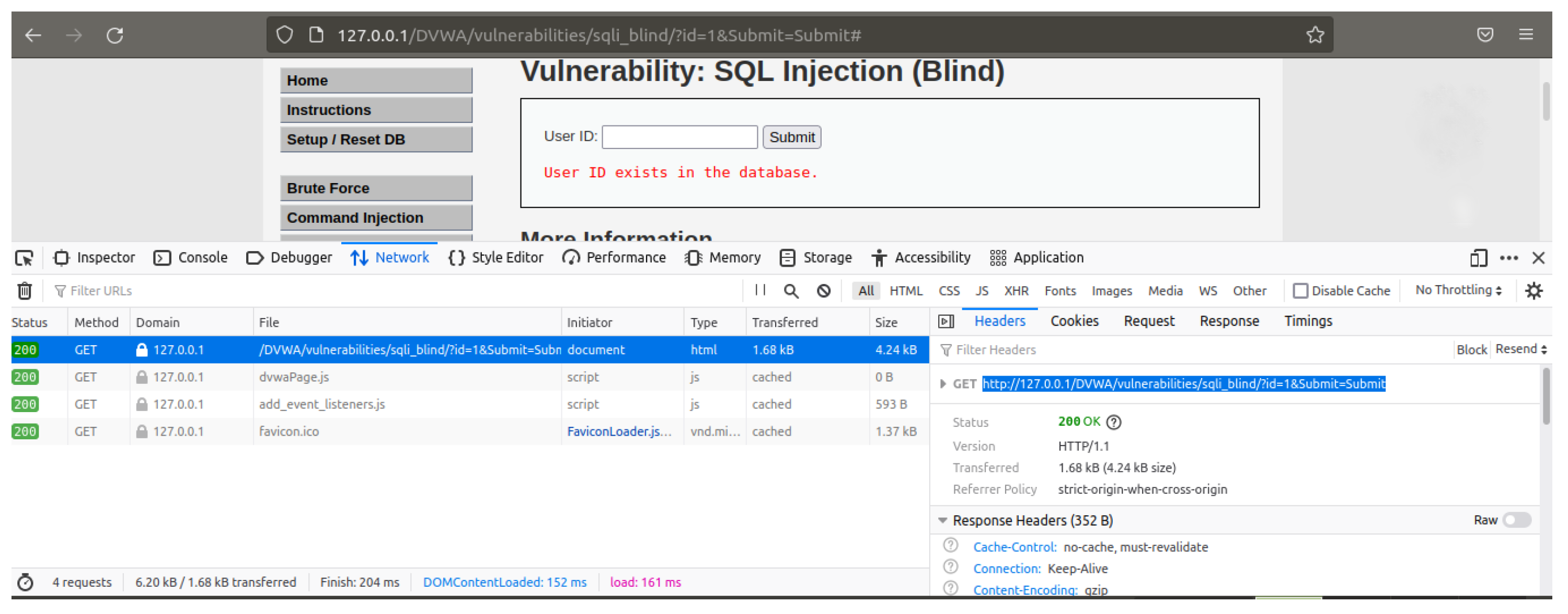

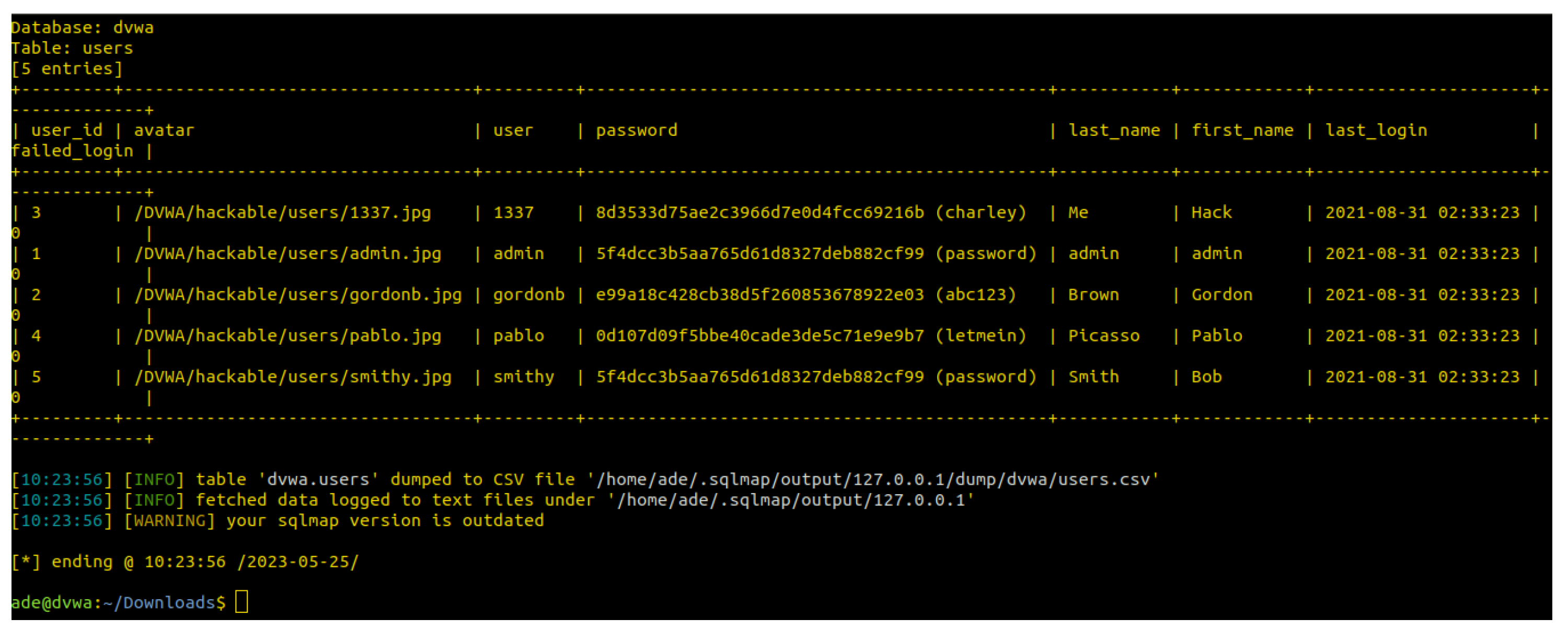

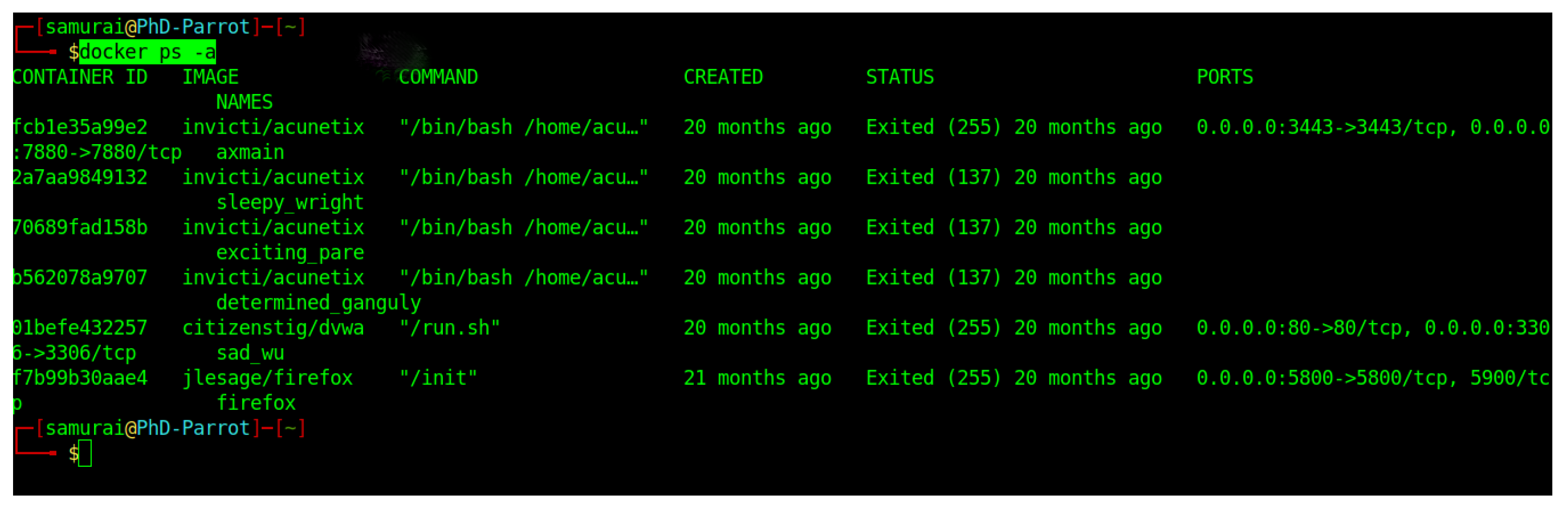

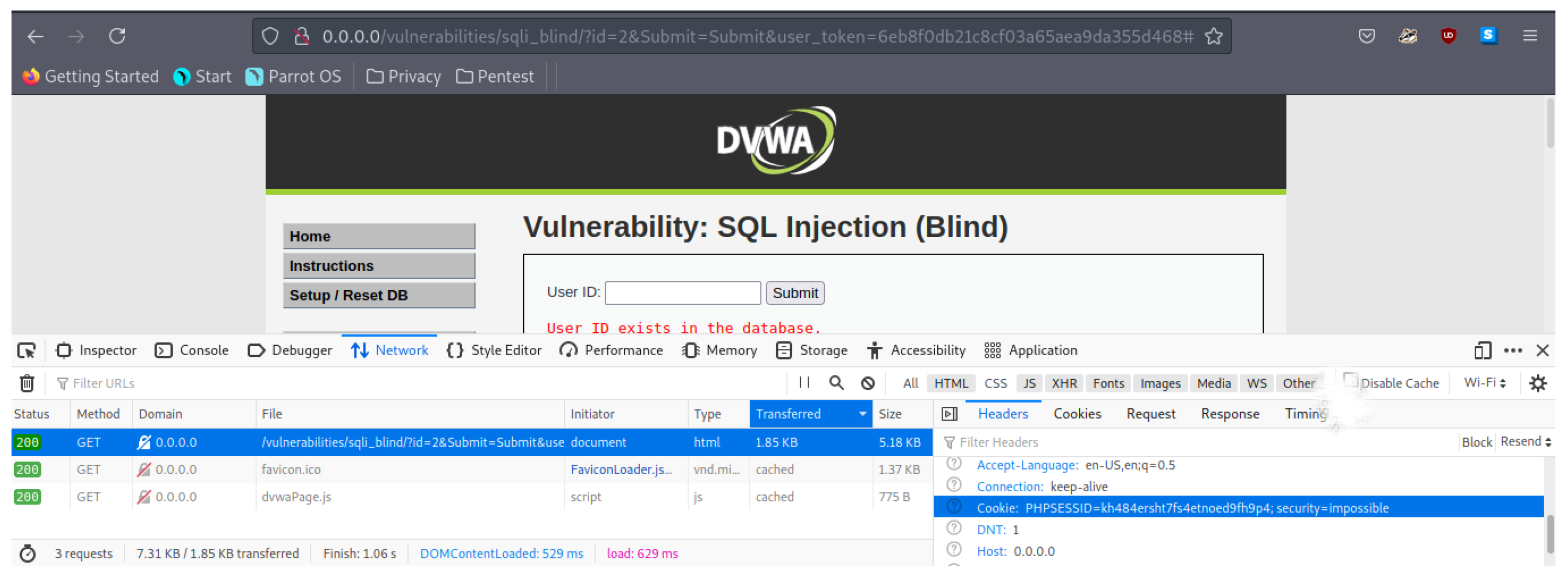

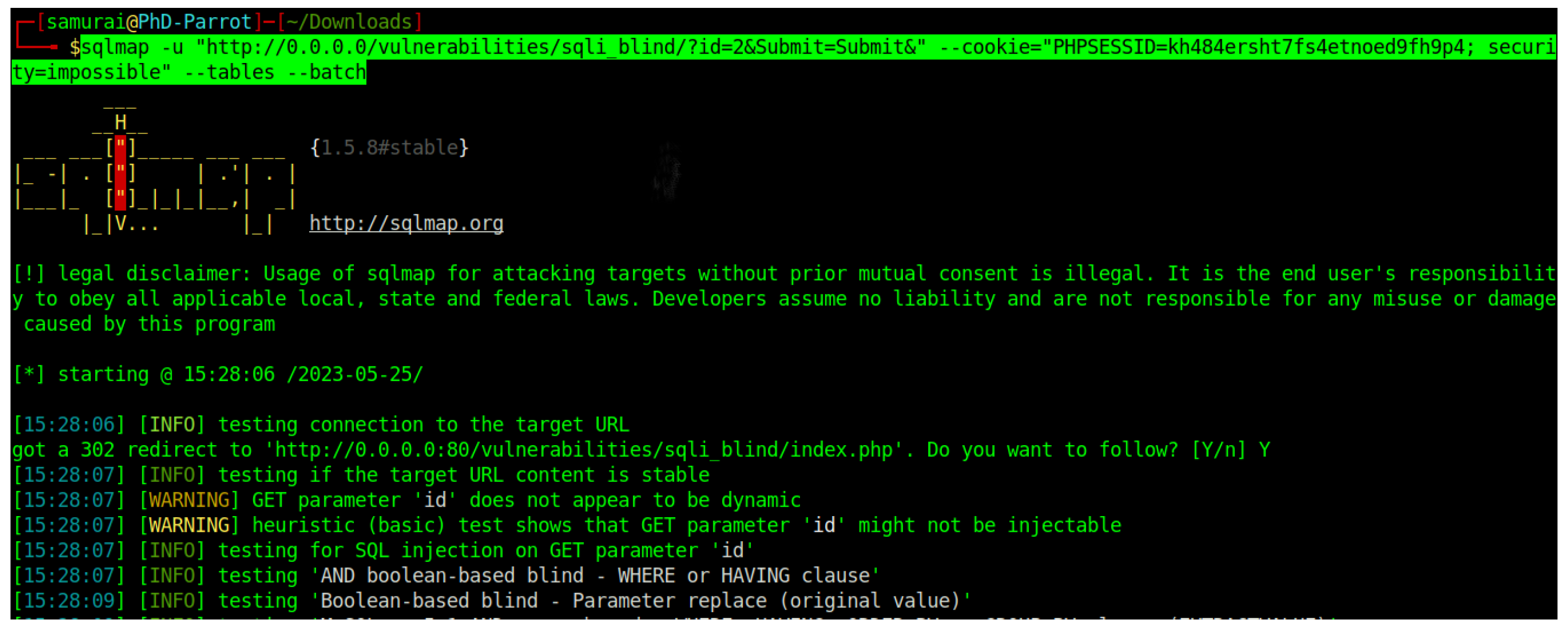

This review involved searching for existing research, critically evaluating relevant studies, and synthesising the findings. The next step involved formulating alternative hypotheses (H1) and selecting appropriate statistical tests. For example, to evaluate the effectiveness of Docker in mitigating SQL injection attacks, we launched controlled SQLi attacks against the Acunetix (https://www.acunetix.com/, accessed on 19 December 2024) and DVWA (https://github.com/digininja/DVWA, accessed on 19 December 2024) databases without Docker protection and recorded the data exfiltration levels. We then implemented Docker (https://www.docker.com/company, accessed on 19 December 2024) protection for the same databases and repeated the SQLi attacks, measuring and comparing the data exfiltration in both scenarios. Statistical tests (e.g., t-tests) were then used to determine whether the observed differences between the two settings were statistically significant.

We, therefore, used a quantitative approach, specifically, a true experimental design with a between-groups post-test-only structure. This design utilises two groups: a control group and an experimental group. Both groups are measured on a dependent variable, which reflects the outcome of interest. The independent variable, representing the manipulation introduced by the experiment, is applied only to the experimental group. Following the post-test, the main analysis focuses on the differences between the control and experimental groups in terms of the dependent variable. To determine if the observed differences are statistically significant, a t-test is employed. This test is suitable when comparing the means of two independent groups with normally distributed, continuous (metric) data, as outlined by DATAtab (https://datatab.net/tutorial/paired-t-test, accessed on 19 December 2024).

4. Results

This section presents the statistical analysis conducted to evaluate the impact of Docker on CPU utilisation during SQL injection attacks against the DVWA and Acunetix web applications. The data were collected using convenience sampling, a non-probability sampling method in which the samples are readily available and easy to collect. Additionally, in comparison to other sampling techniques, convenience sampling can help overcome most limitations associated with research. For example, if older people regularly assemble in a park, the researcher can go to the park and collect the samples there; the park acts as a convenient place to collect samples [76]. Convenience sampling is also inexpensive, easy to execute, and efficient, but in this research its limitations include a small sample size drawn from a large sample pool, which limits generalisability [77]. For the Damn Vulnerable Web App (DVWA) database results, the first 20 data items were collected, while for the Acunetix database results, the first 15 data items were collected.

Paired Samples t-Test: A paired-samples t-test was employed for each dataset (DVWA and Acunetix) to compare the means of CPU utilisation (CPU_total_(%)) between scenarios with and without Docker protection (ND—No Docker, WD—With Docker). This test is appropriate as we are analysing data from the same set of systems measured under two different conditions (attack with and without Docker). Additionally, a two-tailed test was chosen as we are not pre-determining the direction of the difference (improvement or degradation) in CPU utilisation.
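The paired-samples calculation described above can be sketched in a few lines of standard-library Python. This is an illustrative sketch only: the function name `paired_t_statistic` and the CPU readings are hypothetical and are not the measurements collected in this research, and the statistical package actually used for the analysis is not assumed here.

```python
import math
import statistics


def paired_t_statistic(first, second):
    """Return (t, df) for a paired-samples t-test on two equal-length samples."""
    if len(first) != len(second):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two pairs are required")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("all paired differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical CPU_total_(%) readings for the same five runs (WD and ND).
with_docker = [62.0, 71.0, 58.0, 90.0, 66.0]
no_docker = [55.0, 60.0, 57.0, 70.0, 61.0]

t_value, df = paired_t_statistic(with_docker, no_docker)
print(f"t = {t_value:.3f}, df = {df}")
```

For a two-tailed test, the absolute value of `t_value` would then be compared with the critical value for `df` degrees of freedom at the chosen significance level.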

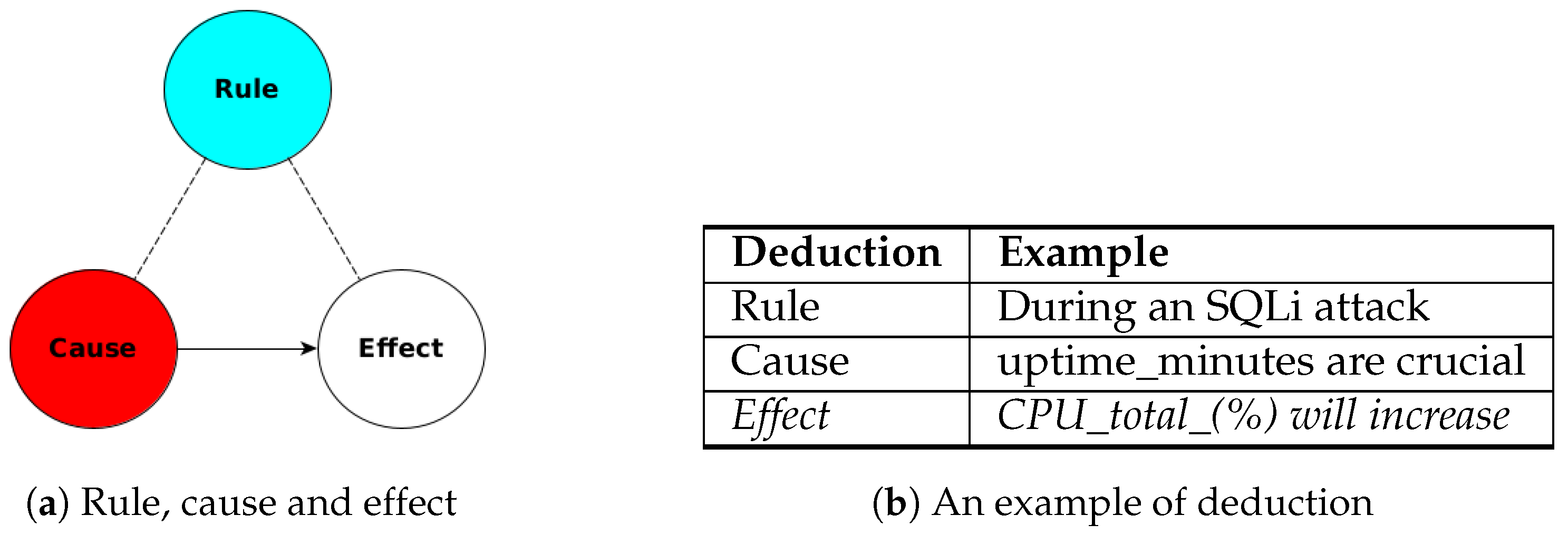

Hypothesis Testing: The null hypothesis (H0) states that there is no significant difference in the mean CPU utilisation between the No Docker (ND) and With Docker (WD) scenarios for both DVWA and Acunetix. The alternative hypothesis (H1) proposes that there is a significant difference.

Significance Level and Critical Value: The level of significance (α) was set at 0.05, indicating a 5% chance of rejecting the null hypothesis when it is actually true. Based on the degrees of freedom (df = n − 1, where n is the number of samples in each group), the critical values for the two-tailed test were determined from a t-distribution table.

Table 6 presents the descriptive statistics for the experiments conducted on Acunetix and DVWA, both with and without Docker protection. A total of 15 experiments were performed on Acunetix, and 20 experiments were conducted on DVWA. Each experiment was repeated twice: once with Docker and once without. CPU utilisation was measured both before the SQL injection attack (Before) and during the attack (During). While the maximum CPU usage reached 100% in all cases, the mean and median CPU utilisation values were significantly higher during the attack when Docker was used. This increased CPU usage was further evidenced by the higher standard deviation observed in the “During” phase with Docker, indicating greater variability in CPU utilisation during these periods.
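Descriptive statistics of the kind reported in Table 6 (mean, median, standard deviation, and maximum) can be computed as in the sketch below. The readings are hypothetical placeholders rather than the recorded values, and the helper name `describe` is illustrative.

```python
import statistics


def describe(readings):
    """Summarise a list of CPU utilisation readings (in percent)."""
    return {
        "n": len(readings),
        "mean": statistics.mean(readings),
        "median": statistics.median(readings),
        "stdev": statistics.stdev(readings),  # sample standard deviation
        "max": max(readings),
    }


# Hypothetical "During" readings with Docker; not data from this study.
during_with_docker = [48.0, 75.0, 100.0, 62.0, 90.0]

summary = describe(during_with_docker)
for name, value in summary.items():
    print(name, value)
```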

Figure 11 and Figure 12 present histograms illustrating the distribution of CPU utilisation for all eight experimental scenarios: before and during SQL injection attacks on Acunetix and DVWA, both with and without Docker protection. A visual inspection of these histograms reveals a notable trend: the CPU utilisation exceeds 50% more frequently in scenarios involving Docker compared to those without Docker. This suggests that Docker, while enhancing security, may also incur a performance overhead in certain scenarios. This is considered further in the next paragraph.

As shown in Table 7, for the DVWA data, the calculated t-statistic (t = 3.307) exceeded the critical value (CV = ±2.093). This statistically significant result (p < 0.05) leads to rejection of the null hypothesis, indicating a significant difference in CPU utilisation between the No Docker and With Docker scenarios when attacking DVWA. Similarly (see Table 8), for the Acunetix data, the t-statistic (t = 2.339) exceeded the critical value (CV = ±2.145), leading to rejection of the null hypothesis (p < 0.05). This suggests a statistically significant difference in CPU utilisation between the two scenarios for Acunetix as well. As such, the statistical analysis confirms that Docker plays a role in influencing CPU utilisation during SQL injection attacks (see Table 9).
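The decision rule applied above can be written out as a short check. The t-statistics, sample sizes, and critical values below are the ones reported in the text; the function name `reject_null` and the lookup dictionary are illustrative only.

```python
# Two-tailed critical values at a 0.05 significance level, keyed by degrees
# of freedom, as reported in the text (df = 19 for DVWA, df = 14 for Acunetix).
CRITICAL_VALUES = {19: 2.093, 14: 2.145}


def reject_null(t_statistic, n_pairs):
    """Return True when |t| exceeds the critical value for df = n_pairs - 1."""
    df = n_pairs - 1
    return abs(t_statistic) > CRITICAL_VALUES[df]


dvwa_rejected = reject_null(3.307, 20)      # DVWA: 20 samples, t = 3.307
acunetix_rejected = reject_null(2.339, 15)  # Acunetix: 15 samples, t = 2.339

print(dvwa_rejected, acunetix_rejected)
```

Both checks evaluate to `True`, which matches the rejection of the null hypothesis for DVWA and for Acunetix.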

In terms of the effectiveness of an SQLi attack, both DVWA and Acunetix were successfully compromised when Docker was not installed. This indicates that these applications, in their default state, are susceptible to SQL injection attacks (as expected). When Docker was installed on the systems hosting DVWA and Acunetix, the SQL injection attacks were unsuccessful. This suggests that Docker, in this context, effectively mitigated the risks associated with SQL injection. By isolating the application within a container, Docker can help prevent attackers from exploiting vulnerabilities and compromising the underlying system. This is due to Docker’s ability to restrict access to resources and isolate the application from the host environment.

Table 9 presents the paired-samples t-test results for DVWA and for Acunetix. The sampling used was non-probability convenience sampling, which reflects the easy availability of the samples that were chosen from all the samples available. Because 20 samples were taken for DVWA and 15 for Acunetix, the degrees of freedom (df = n − 1) are 19 and 14, respectively, which is why the Critical Value (CV) used is CV(19) for DVWA and CV(14) for Acunetix.

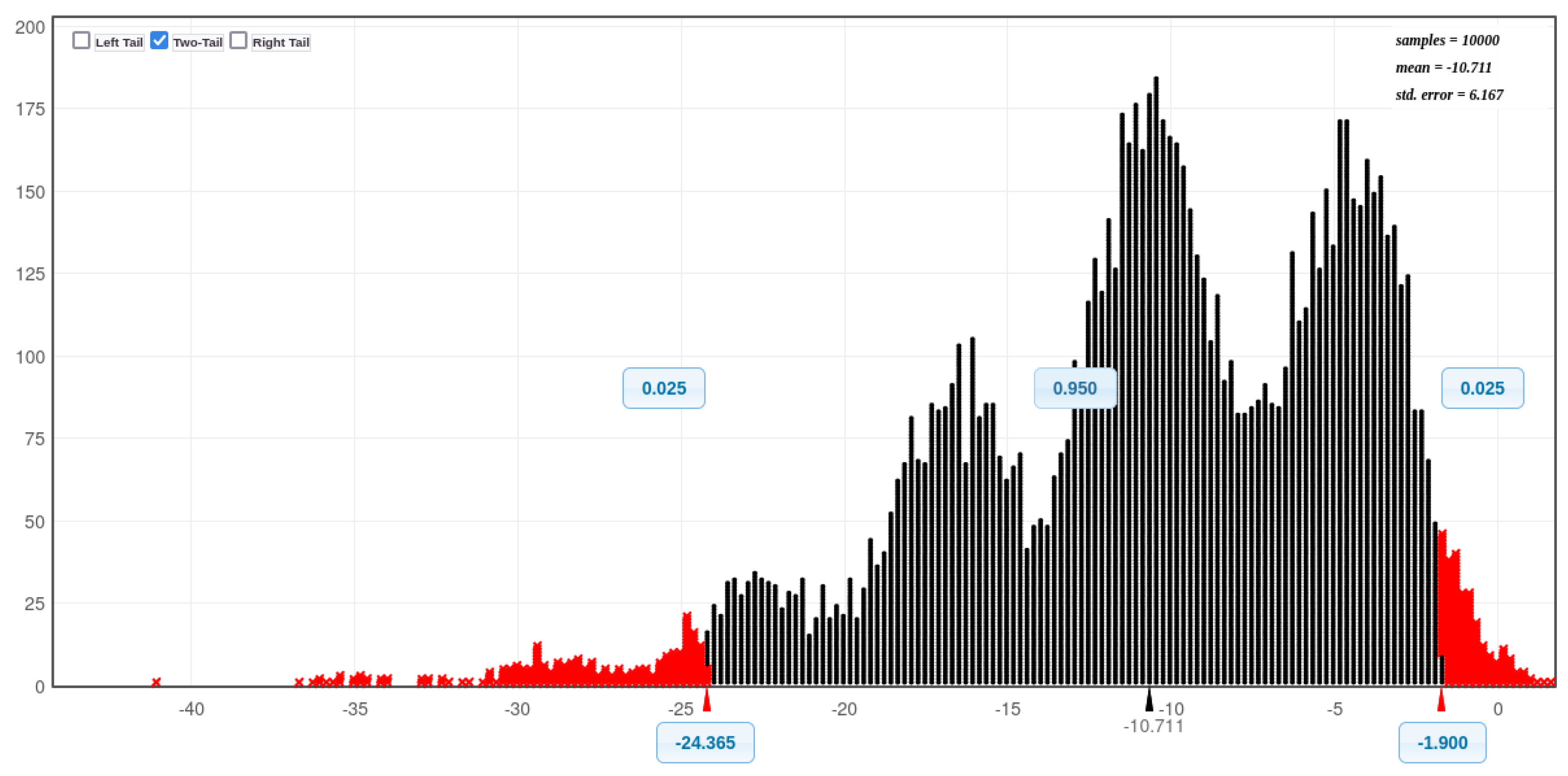

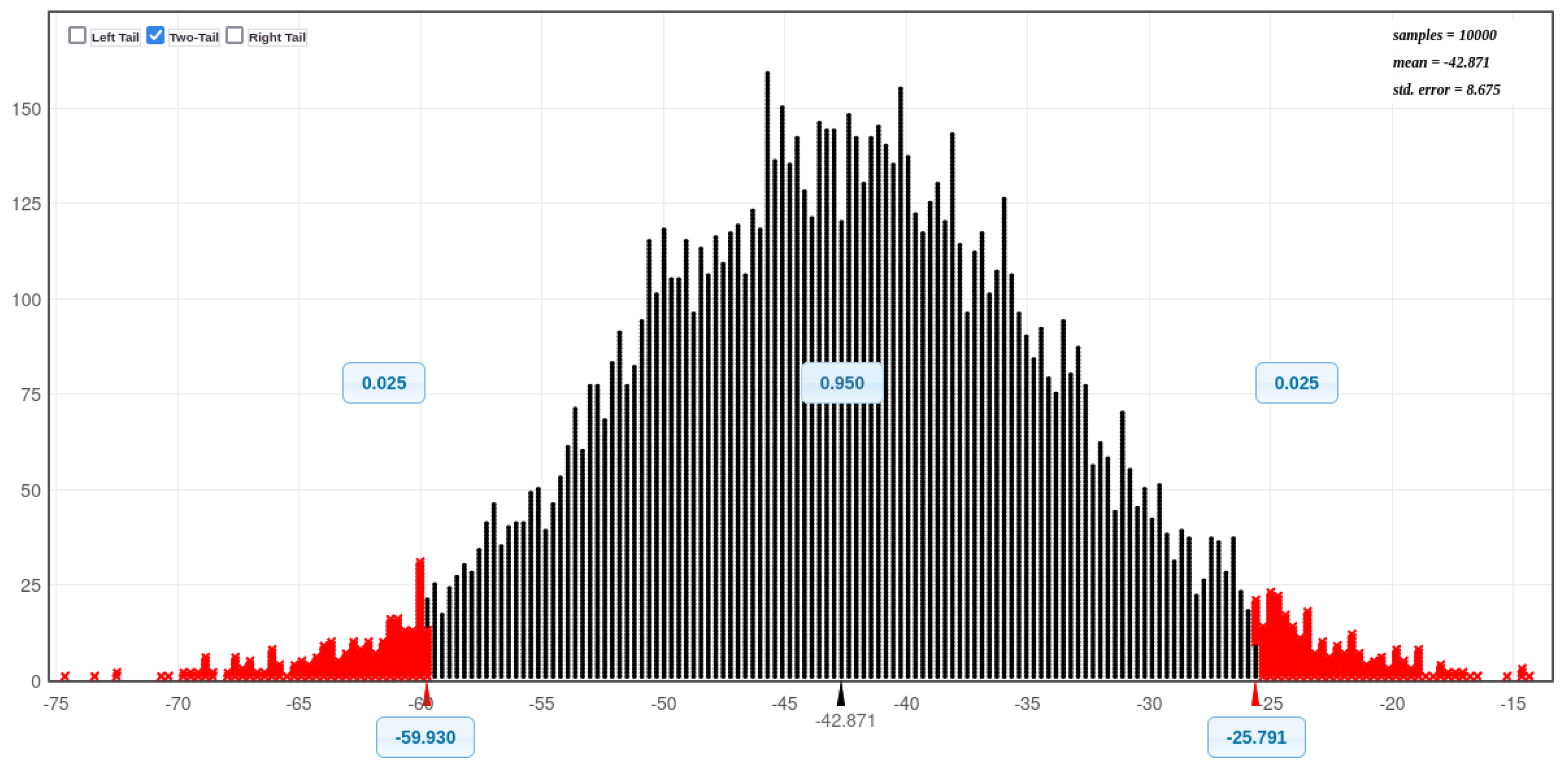

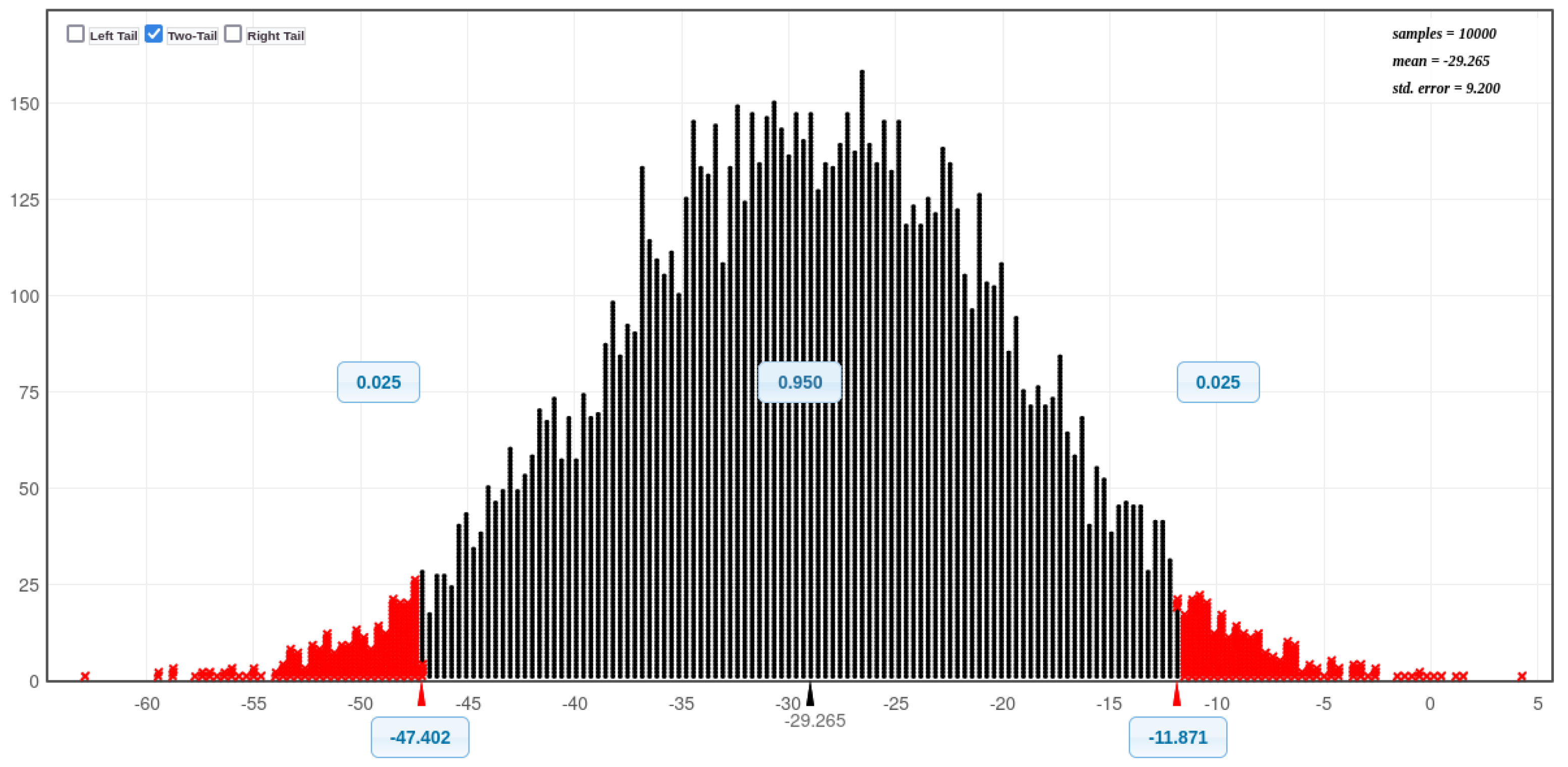

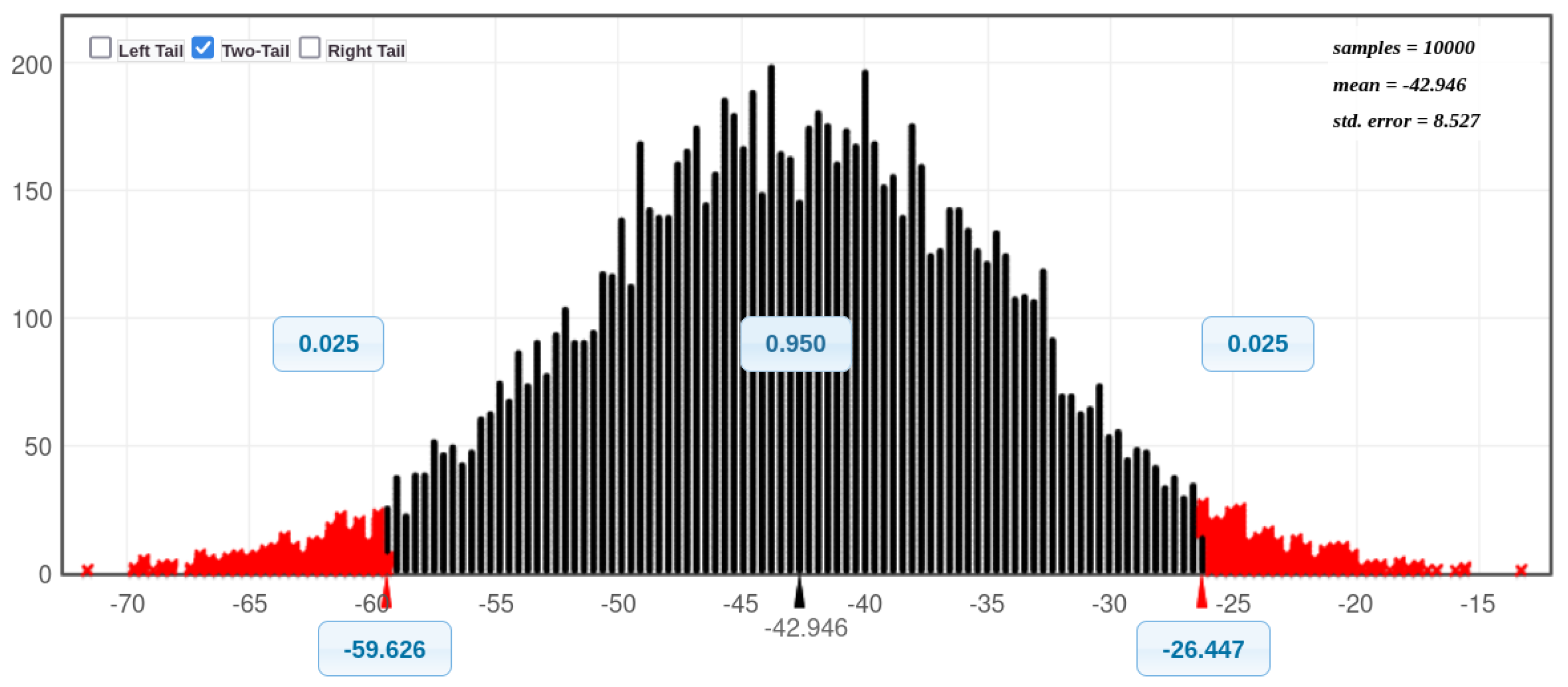

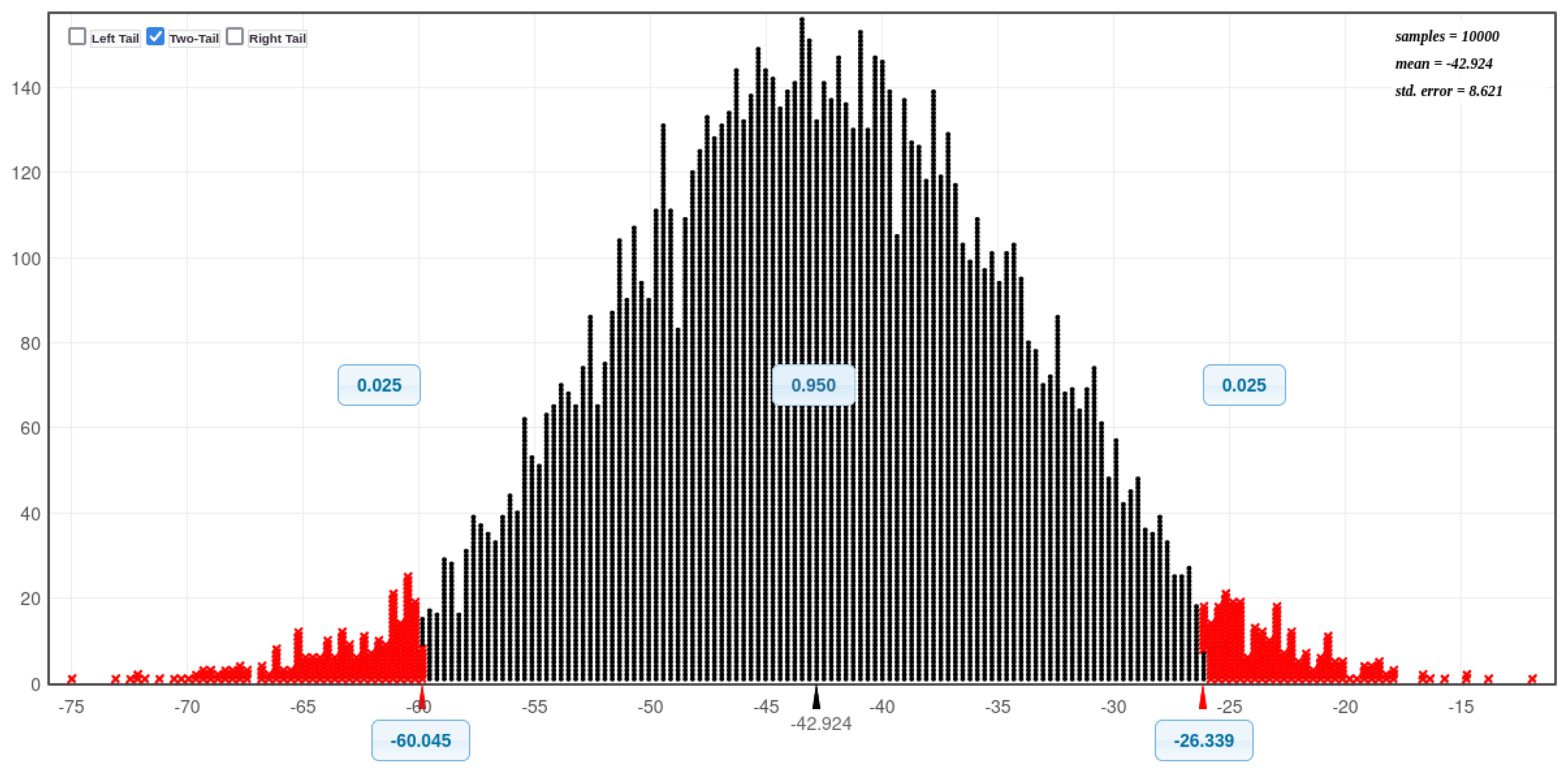

Additionally, we used bootstrapping to compare the means of CPU usage in different situations to further examine the hypothesis. Figures 13 to 20 (bell curves) indicate a significant difference for DVWA between the periods before and during an SQLi attack, both without Docker and with Docker. There is also a significant difference for Acunetix between the periods before and during an SQLi attack, both without Docker and with Docker. Additionally, with both databases combined, there is a significant difference between the periods before and during an SQLi attack without Docker installed, and likewise with Docker installed (see Table 10).
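One common way to compare two means by bootstrapping is a percentile interval for the difference in means, sketched below. This is an assumption about the general technique, not a description of the exact procedure behind Table 10; the function name, the number of resamples, the seed, and the CPU readings are all hypothetical.

```python
import random
import statistics


def bootstrap_mean_difference(sample_a, sample_b, n_resamples=2000, seed=0):
    """Return a 95% percentile interval for mean(sample_b) - mean(sample_a)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    diffs = []
    for _ in range(n_resamples):
        # Resample each group with replacement, keeping its original size.
        resample_a = rng.choices(sample_a, k=len(sample_a))
        resample_b = rng.choices(sample_b, k=len(sample_b))
        diffs.append(statistics.mean(resample_b) - statistics.mean(resample_a))
    diffs.sort()
    lower = diffs[int(0.025 * n_resamples)]
    upper = diffs[int(0.975 * n_resamples) - 1]
    return lower, upper


# Hypothetical CPU utilisation (%) before and during an attack.
before = [10.0, 12.0, 9.0, 14.0, 11.0, 13.0]
during = [55.0, 70.0, 62.0, 90.0, 66.0, 58.0]

low, high = bootstrap_mean_difference(before, during)
differs = low > 0 or high < 0  # interval excludes zero
print(differs)
```

If the interval excludes zero, the resampled data are consistent with a difference between the two means at roughly the 5% level.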

5. Summary of Findings

This research aimed to design and evaluate a database system that prioritises performance, security, and user accessibility, particularly in the face of SQL injection attacks and high-traffic demands. While numerous studies have explored the use of Docker as a framework for various applications, there remains a significant gap in understanding how Docker can be used to enhance database security.

The findings of this study demonstrate the efficacy of a decentralised database approach built upon the Docker framework. Isolating database instances within Docker containers significantly reduces the risk of external attacks, including SQL injection, addressing Research Question 1. Additionally, such an environment provides a robust defence against SQL injection attempts, safeguarding user data and database integrity, thus addressing Research Question 2. Furthermore, the Docker-based database showed high CPU utilisation during an attack, which needs to be considered when it is used in high-traffic applications; this addresses Research Question 3. A key advantage of this approach is that it does not necessitate modifications to the traditional database infrastructure. Because Docker is introduced as a layer of abstraction, the existing database remains unaffected, while the Docker framework offers improved security. Although this research was designed from the user’s perspective as a decentralised database without altering the existing centralised database, developers or administrators of a centralised database should consider the practical benefit of being able to run Docker images with any assigned IP (Internet Protocol) address of their choice, which improves the security of a database running in a Docker container. Meanwhile, Gore et al. [43] obtained a positive result when utilising Docker containers to handle data in a network environment, while Velasquez et al. [22] showed that Docker is now supported by the cloud providers Microsoft Azure, Amazon Web Services, OpenStack, IBM, VMware, and Google Compute Engine. Additionally, Reis et al. [47] reported that developers are not keen on using different types of tools to build their Docker-compose.yml files and Dockerfiles. Lastly, as presented in Table 3, under graphics Intel/Nvidia 2GBRam, the more memory (RAM) a video card with an Nvidia Graphics Processing Unit (GPU) has, the more it would help to reduce the high-traffic bottlenecks encountered where the Central Processing Unit (CPU) is the only processor handling information within a database. A limitation of this research was the use of convenience sampling. Ideas for future work include measuring computer memory and investigating the potential for more data output.