1. Introduction

In the modern era of pervasive digital technology within an increasingly interconnected society, safeguarding privacy and security has emerged as a critical concern. The extensive volume of visual data generated and disseminated daily (via security cameras, social media platforms, and mobile devices) poses substantial privacy risks [1]. Visual media, such as images and videos, frequently encapsulate sensitive information, including personal details, geolocations, and identities, underscoring the necessity for robust privacy protection mechanisms [2].

The evolution of privacy threats has paralleled technological advancement, creating a complex landscape where traditional privacy protection methods often prove insufficient [3]. As technologies become more immersive and pervasive, the lines separating private and public spheres are becoming increasingly blurred. This shift highlights the pressing need for novel approaches or advancements in privacy protection [4].

A notable immersive technology in recent years is augmented reality (AR), which superimposes digital data onto the real environment [5]. Through the integration of virtual content with the physical world, AR offers an interactive experience [6]. Its uses are found in several domains, such as industry, gaming, healthcare, and education [7,8,9,10].

However, integrating AR into our daily lives also introduces a new wave of privacy and security concerns [11]. As AR devices continuously scan our surroundings, they inadvertently collect sensitive information, raising concerns about bystander privacy [12]. Furthermore, real-time data processing and storage, coupled with location tracking and behavioral pattern recognition, pose significant risks to the exposure of personal information [13]. The advent of shared AR spaces further complicates the privacy landscape as individuals with diverse privacy preferences engage in a common digital environment [14].

To address these privacy challenges, some techniques can be used to anonymize or obscure visual data captured by AR devices to ensure that the identities and sensitive information of individuals are protected [15]. Common methods include blurring, blocking, and pixelating sensitive information while preserving the overall visual context. However, the effectiveness and suitability of these techniques can vary depending on the specific application, context, and the required level of privacy [13]. Thus, we developed SafeAR (https://safear.ipleiria.pt/, accessed on 13 December 2024), a funded project to enhance privacy in AR environments. SafeAR focuses on developing services [16] and libraries [17] to apply privacy-preserving techniques to sensitive visual data captured by AR devices. Using machine learning to identify sensitive data and obfuscation methods such as blurring, blocking, and pixelation, SafeAR seeks to protect the identities of individuals and personal information without compromising the functionality of AR applications.

In this paper, we explore user preferences and perceptions of these privacy-enhancing techniques, examining which method is considered more secure or effective in different scenarios. We address the following research questions:

RQ1: What is the relationship between demographics (age, education, and occupation) and the perceived security of different obfuscation methods?

RQ2: How do different obfuscation techniques (masking, pixelation, and blurring) affect user experience in AR applications?

RQ3: What is the relationship between the level of familiarity with AR and the acceptance of different obfuscation methods?

To answer these questions, we developed and disseminated a questionnaire to a diverse group of participants. The questionnaire was designed to capture information on user demographics, their familiarity with AR, and their perceptions of pixelation, masking, and blurring obfuscation techniques. The collected data were analyzed using statistical methods to validate the results.

The remainder of this paper is organized as follows. Section 2 provides essential background information on AR technology and existing privacy protection techniques and reviews relevant research in AR privacy protection and user studies. Section 3 details the research methodology and study design used to explore the research questions systematically. Section 4 presents and discusses the findings of our study, offering insight into AR privacy considerations. Finally, Section 5 summarizes the conclusions and outlines recommendations for future research directions and potential solutions to address the privacy challenges posed by AR technology.

2. Background and Related Work

The integration of digital information into the physical world has reached new levels of sophistication with advancements in AR technology. AR systems seamlessly incorporate computer-generated content, including images, sounds, and 3D models, into users’ real-time view of their environment. This augmentation is typically experienced through smart glasses or mobile devices equipped with cameras and sensors that analyze the surroundings to accurately position and display digital content [18].

As AR applications become more prevalent, particularly on mobile platforms, they present new challenges related to the exposure of sensitive information in both personal and professional contexts. The technology’s capacity to overlay digital content onto the real world, while innovative, raises significant privacy concerns that require careful consideration and management [19].

2.1. Privacy Challenges in AR Environments

Privacy challenges in AR environments are inherently multifaceted and complex. AR systems continuously scan and process their surroundings, often capturing sensitive information that may inadvertently appear in the background of a user’s view. This continuous environmental scanning creates a persistent stream of data that must be carefully managed to protect user privacy [20]. The complexity increases in multi-user scenarios, where different users may have varying privacy requirements and familiarity levels with shared AR experiences [21]. Furthermore, the context-dependent nature of AR applications makes it particularly challenging to implement universal privacy protection measures that work effectively across all situations [22]. The potential for AR systems to store or process captured information also raises important questions about data retention and security [23].

2.2. Visual Privacy Protection Techniques

To address these privacy concerns, several visual privacy protection techniques have been developed and implemented in AR systems. These techniques offer different approaches to balancing privacy protection with user experience and functionality.

Each of these privacy protection techniques offers distinct advantages and limitations, making it suitable for different use cases and privacy requirements. The choice between these methods often depends on factors such as the sensitivity of the information being protected, the required level of privacy, and the desired user experience. The effectiveness of these techniques in AR environments is particularly important as the technology continues to evolve and becomes more integrated into daily life.

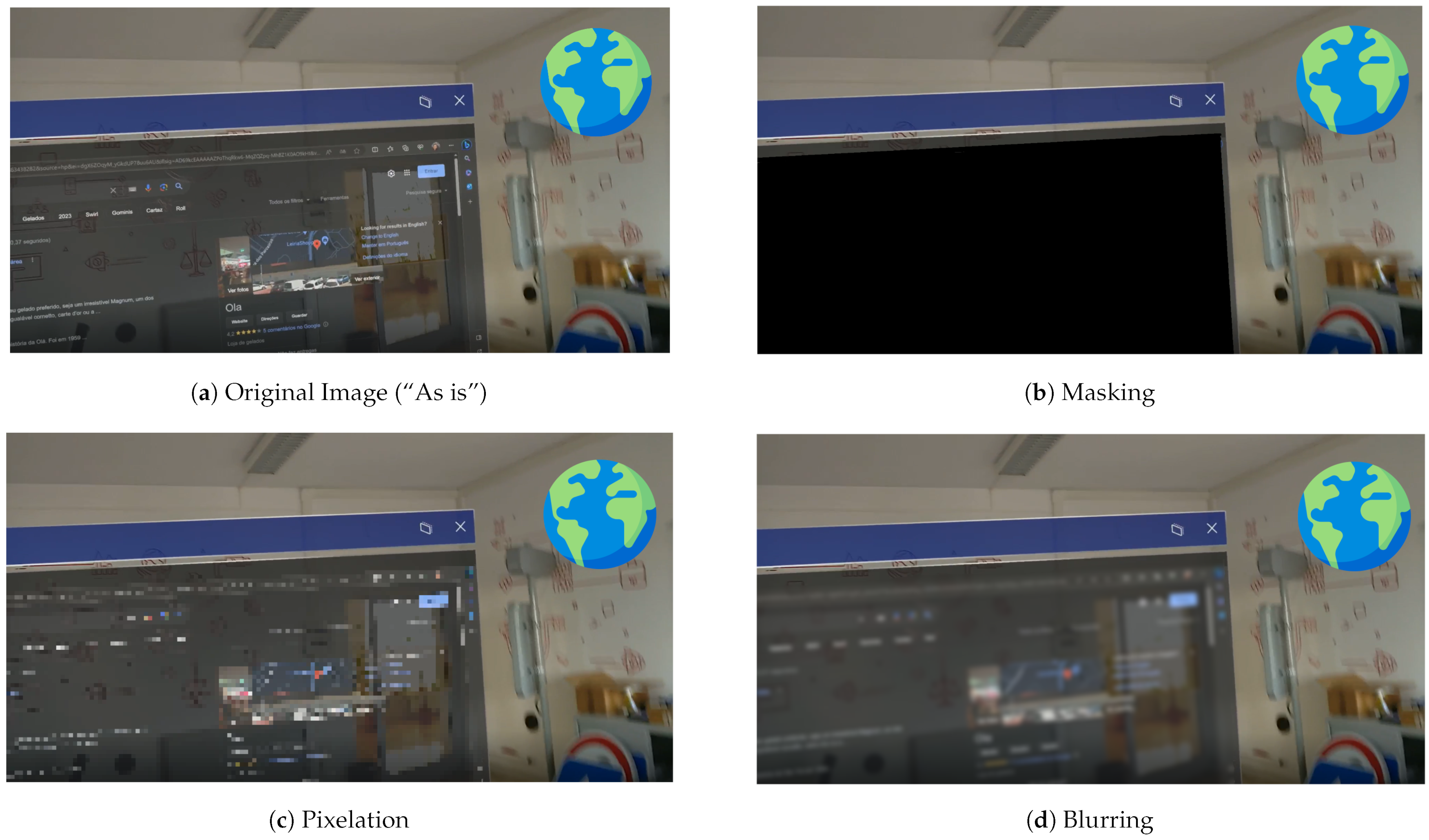

To illustrate the impact of different obfuscation techniques, Figure 1 compares the effects of masking (Figure 1b), pixelation (Figure 1c), and blurring (Figure 1d) when applied to an AR system. These techniques are used to conceal sensitive information displayed on the screen, demonstrating various methods of privacy protection in AR environments.

Masking: Figure 1b illustrates how this technique completely covers sensitive information with solid shapes or patterns, effectively creating a visual barrier between the protected content and any observers. While masking may provide stronger privacy protection and is computationally efficient to implement, it can significantly impact the user experience by removing all visual context from the protected area [24,25].

Pixelation: Figure 1c shows the pixelation technique, also known as the mosaic effect, which offers a different approach to privacy protection. This technique reduces image resolution in specific areas by averaging pixel values within defined blocks. The result maintains the visual structure of the protected content while making specific details unclear [26].

Blurring: Figure 1d illustrates how this technique applies a smoothing effect that preserves general shapes and colors while obscuring specific details that might be sensitive or private. Blurring can be implemented with varying intensity levels, offering adaptable privacy protection based on specific needs [27].
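
The three techniques described above can be sketched on a small grayscale image. This is a minimal illustration in plain Python, not the SafeAR implementation: the function names, the region format, and the use of a box filter for blurring are assumptions made for the example, and a real system would more likely rely on an image-processing library.

```python
from typing import List, Tuple

Image = List[List[int]]              # grayscale pixels (0-255), row-major
Region = Tuple[int, int, int, int]   # (top, left, bottom, right), end-exclusive


def mask(img: Image, region: Region, fill: int = 0) -> Image:
    """Masking: overwrite every pixel in the region with a solid value."""
    top, left, bottom, right = region
    out = [row[:] for row in img]
    for y in range(top, bottom):
        for x in range(left, right):
            out[y][x] = fill
    return out


def pixelate(img: Image, region: Region, block: int = 2) -> Image:
    """Pixelation: replace each block x block tile with its average value."""
    top, left, bottom, right = region
    out = [row[:] for row in img]
    for by in range(top, bottom, block):
        for bx in range(left, right, block):
            ys = range(by, min(by + block, bottom))
            xs = range(bx, min(bx + block, right))
            avg = sum(img[y][x] for y in ys for x in xs) // (len(ys) * len(xs))
            for y in ys:
                for x in xs:
                    out[y][x] = avg
    return out


def blur(img: Image, region: Region, radius: int = 1) -> Image:
    """Blurring (box filter): each pixel becomes the mean of its neighbourhood."""
    top, left, bottom, right = region
    height, width = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(top, bottom):
        for x in range(left, right):
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            out[y][x] = sum(img[j][i] for j in ys for i in xs) // (len(ys) * len(xs))
    return out


# Hypothetical 4x4 image with a "sensitive" 2x2 patch in the top-left corner.
image = [
    [200, 100, 0, 0],
    [100, 200, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
roi = (0, 0, 2, 2)
masked = mask(image, roi)                  # patch replaced by a solid fill
pixelated = pixelate(image, roi, block=2)  # patch replaced by its block average
blurred = blur(image, roi, radius=1)       # patch smoothed with its neighbours
```

The sketch makes the trade-off discussed above concrete: masking discards all information in the region, pixelation keeps only one average value per block, and blurring keeps a smoothed version whose strength depends on the chosen radius.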

2.3. Related Work

Important research addresses privacy and security challenges associated with the protection of personal information, alongside obfuscation techniques that are applied to visual data.

In Roesner et al. [28], the authors examine security and privacy challenges in AR systems, focusing on how sensitive information can inadvertently be displayed or recorded by AR devices. The study discusses how AR-specific contexts, such as shared visual data and continuous environmental scanning, create privacy risks. The authors propose mechanisms like access controls for data streams, the obfuscation of sensitive elements, and user-consent mechanisms that address privacy without interrupting the AR experience. The study is relevant as it focuses on developing privacy techniques that are uniquely adapted to the immersive and interactive characteristics of AR environments.

Li et al. [29] explore the effectiveness of blurring and pixelation obfuscation techniques in protecting the privacy of individuals depicted in images. The study examines how well these techniques prevent the recognition of sensitive content while balancing the need for interpretability in different contexts. The study indicates that while both blurring and pixelation can obscure facial features, their effectiveness varies depending on the context and the observer’s familiarity with the target subjects.

In Oh et al. [30], the authors explore techniques for person recognition while omitting facial features, such as relying on body shape, clothing, and pose. The authors address how these methods can still allow for recognition in social media contexts, raising privacy concerns even when facial details are obscured. Their findings suggest that solely obscuring faces may be inadequate for ensuring comprehensive privacy protection.

Demiris et al. [31] assess the privacy concerns associated with vision-based monitoring systems, which capture video or images for safety monitoring. The study finds that while monitoring enhances safety, privacy concerns arise from the continuous capture of personal spaces. They propose obfuscation techniques that extend beyond face concealment, incorporating methods such as low-resolution imagery and the selective masking of sensitive areas.

While a few studies have explored the integration of obfuscation techniques within AR systems, to the best of our knowledge, none have conducted surveys with volunteers to empirically measure the effectiveness of these techniques in AR-specific contexts.

3. Methodology

This section provides an overview of the research methodology adopted in this study. It describes the systematic approach taken, detailing the planning, data collection, and analysis. By outlining each stage, the methodology illustrates how the research was structured to achieve the study’s objectives in five distinct phases:

Phase 1 (Preparation): This initial step involved defining the core research objectives, reviewing related studies, and structuring the questionnaire. The goal was to outline the research questions and ensure that the questionnaire was thoughtfully designed to collect the necessary data.

Phase 2 (Questionnaire): In this phase, the questionnaire was created, using Google Forms [32] to design and distribute the digital survey. Each question was crafted to align with the research objectives.

Phase 3 (Dissemination): This phase involved disseminating the survey link through social media messages and in-person requests for participation. The survey was also shared in class with students to further extend its reach.

Phase 4 (Data Analysis): Once the survey responses were collected, we began the data analysis. Using statistical methods, we examined the data to identify patterns and insights that addressed the research questions.

Phase 5 (Reporting and Documentation): Finally, we compiled the results of our data analysis, drew conclusions, and interpreted the significance of the findings.

This study examined user perceptions of privacy protection strategies in AR using a primarily quantitative research approach, supplemented with qualitative analysis. The aim was to explore user preferences, experiences, and concerns regarding visual obfuscation techniques in AR contexts.

The research methodology was structured to address three primary research questions. We sought to identify how different obfuscation techniques (masking, pixelation, and blurring) affect user experience in AR applications. Additionally, we aimed to explore the relationship between demographics (age, education, and occupation) and the perceived security of different obfuscation methods. Finally, we explored the relationship between the level of familiarity with AR and the acceptance of different obfuscation methods.

The survey was implemented using Google Forms and was thoughtfully structured with four distinct sections: demographic user information, privacy and obfuscation awareness, obfuscation technique preference in an AR context, and the adoption and application of obfuscation techniques. These built upon each other to provide a comprehensive understanding of user preferences and perceptions.

Demographic information. The first section of the SafeAR questionnaire gathered demographic information from participants, including gender identity, age, education level, and current occupation. The questions are listed in Table A1 in the Appendix. These demographic data were essential for analyzing how different population segments perceive and accept various privacy protection methods. Questions related to smartphone usage at work and prior AR experience were also included to assess participants’ familiarity with technology, which could influence their acceptance of and engagement with AR solutions.

Privacy and obfuscation awareness. The second section of the questionnaire, detailed in Table A2 in the Appendix, focused on participants’ habits, concerns, and knowledge related to privacy and obfuscation techniques. This section was designed to capture both behavioral data and awareness levels, providing insight into how participants handle privacy when sharing visual content online and their familiarity with methods used to protect that privacy. Participants were asked about their prior knowledge of different obfuscation methods and their awareness of potential security vulnerabilities in these techniques.

Obfuscation technique preference in an AR context. The third section focused on privacy and obfuscation awareness, introducing participants to fundamental concepts of privacy and obfuscation in digital contexts. As shown in Table A3 in the Appendix, we examined the participants’ perceptions of different obfuscation techniques (masking, pixelation, and blurring) across a variety of AR contexts, illustrated in Figure 2. This section aimed to evaluate the suitability of each technique for different scenarios, such as outdoor environments (bike or people) and indoor environments (document or screen). Participants were asked to rate how appropriate each obfuscation technique was for these contexts on a scale ranging from “Not at all” to “Extremely”, offering insights into their preferences for privacy protection based on situational needs.

In addition to contextual suitability, the questionnaire also explored participants’ perceptions of using each obfuscation method across all AR contexts. Specifically, it addressed aesthetic appeal, information preservation, satisfaction, security, and trust, allowing us to assess how these factors influence users’ approaches toward each technique.

The adoption and application of obfuscation techniques. The final section, detailed in Table A4 in the Appendix, offered an overview of users’ experiences and approaches toward incorporating obfuscation techniques into their daily lives. Participants were asked to evaluate the suitability of obfuscation techniques, such as masking, pixelation, and blurring, in different AR contexts. In addition, participants’ interest in controlling the degree of obfuscation applied provided insights into how much autonomy users expect when managing privacy settings. This section also assessed their familiarity with obfuscation applications by asking whether they had ever used apps to obfuscate data.

The full data collection process was conducted over the course of one week. An introductory note outlining the goal of this study and its relationship to the project was included at the start of the questionnaire. To balance thorough data collection with participant engagement, the survey was designed to take less than 10 min to complete. No personally identifiable information was collected, and all responses were gathered anonymously to protect participant privacy.

After collecting the data from the questionnaire, the results were analyzed using the Pandas library [33], which facilitated efficient data manipulation and analysis. The libraries used for data analysis and visualization were Pandas, NumPy, Seaborn, Matplotlib, SciPy, and Scikit-learn [34].

The analysis focused on understanding the relationship between the level of familiarity with AR and the acceptance of different obfuscation methods (masking, pixelation, and blurring). Various statistical methods and visualizations were employed to analyze the data. Descriptive statistics were calculated, including the mean, median, standard deviation, minimum, and maximum values for satisfaction ratings across different AR familiarity levels. Boxplots were used to visualize the distribution of satisfaction ratings for each obfuscation method across different AR familiarity levels. The Kruskal–Wallis (H) test [35] was conducted to determine if there were statistically significant differences between the satisfaction ratings of different obfuscation methods.
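
The analysis steps described above can be sketched as follows. This is an illustrative example using only the Python standard library, not the authors' analysis script: all ratings are hypothetical, the helper names are assumptions, and the H statistic is computed with the standard tie correction, which matters for Likert-type data. In practice, `scipy.stats.kruskal` provides the same test together with a p-value.

```python
from collections import defaultdict
from statistics import mean, median, stdev
from typing import Dict, List, Sequence


def describe(values: Sequence[float]) -> Dict[str, float]:
    """Mean, median, sample standard deviation, minimum, and maximum."""
    return {
        "mean": mean(values),
        "median": median(values),
        "sd": stdev(values) if len(values) > 1 else 0.0,
        "min": min(values),
        "max": max(values),
    }


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Kruskal-Wallis H statistic with tie correction."""
    pooled = sorted(v for g in groups for v in g)
    if len(set(pooled)) < 2:
        raise ValueError("H is undefined when all observations are identical")
    n = len(pooled)
    rank_of: Dict[float, float] = {}
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        t = j - i                             # size of this group of ties
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        tie_term += t**3 - t
        i = j
    rank_sums = (sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * sum(rank_sums) - 3 * (n + 1)
    return h / (1 - tie_term / (n**3 - n))


# Hypothetical (AR familiarity level, satisfaction rating) pairs for one method.
responses = [(1, 2), (1, 3), (3, 3), (3, 4), (3, 4), (5, 4), (5, 5), (5, 3)]
by_level: Dict[int, List[int]] = defaultdict(list)
for level, rating in responses:
    by_level[level].append(rating)
summary = {level: describe(r) for level, r in sorted(by_level.items())}

# Hypothetical satisfaction ratings (1-5) for the three obfuscation methods.
blurring = [4, 3, 5, 4, 3, 4]
masking = [2, 3, 1, 4, 2, 3]
pixelation = [3, 3, 4, 2, 4, 3]
h_statistic = kruskal_wallis_h([blurring, masking, pixelation])
```

A rank-based test is a natural fit here because the ratings are ordinal and heavily tied, so a comparison of means under a normality assumption would be harder to justify.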

4. Results and Discussion

In this section, we present and discuss the results of our study, where we analyze the responses collected using various data analysis techniques.

4.1. Participant Demographic

Our study included 54 participants (33 were male, 19 were female, and 2 preferred not to answer) with ages ranging from 15 to 77 years. The mean age was 30.6 years, with a standard deviation (s) of 13.1 years. Participants primarily consisted of students and professionals in education and information technology fields; most of them were familiar with AR technology.

4.2. Open-Ended User Responses Analysis

Responses about familiarity with obfuscation techniques revealed that over 85% of participants had limited knowledge of these methods. Nonetheless, some participants mentioned specific techniques like inpainting [36], a common approach in visual data obfuscation, indicating recognition of its applications. One participant noted image swirling as an obfuscation method, while privacy-preserving photo sharing (P3) [37] and techniques like halftone and image quantization were also mentioned, reflecting some awareness of privacy-focused approaches among participants.

The analysis of open-ended responses about barriers to the adoption of visual data obfuscation techniques revealed various perceived obstacles. Respondents noted technical and implementation challenges, usability concerns, and security issues. For example, some highlighted the “technical complexity” required to obfuscate visual data accurately without losing precision or causing high computational costs, which could impact device performance.

Usability was another concern. Participants expressed reluctance toward functionalities that add complexity to image-sharing processes, suggesting “convenience” as an essential factor. Some suggested integrating optional obfuscation features within social media and photo-sharing platforms to reduce friction and improve user experience.

Finally, concerns about the effectiveness and security of obfuscation methods were raised, with some participants worried that obfuscation could be reversed. This emphasizes that trust in the security of these methods is crucial for widespread adoption.

4.3. Addressing Research Questions

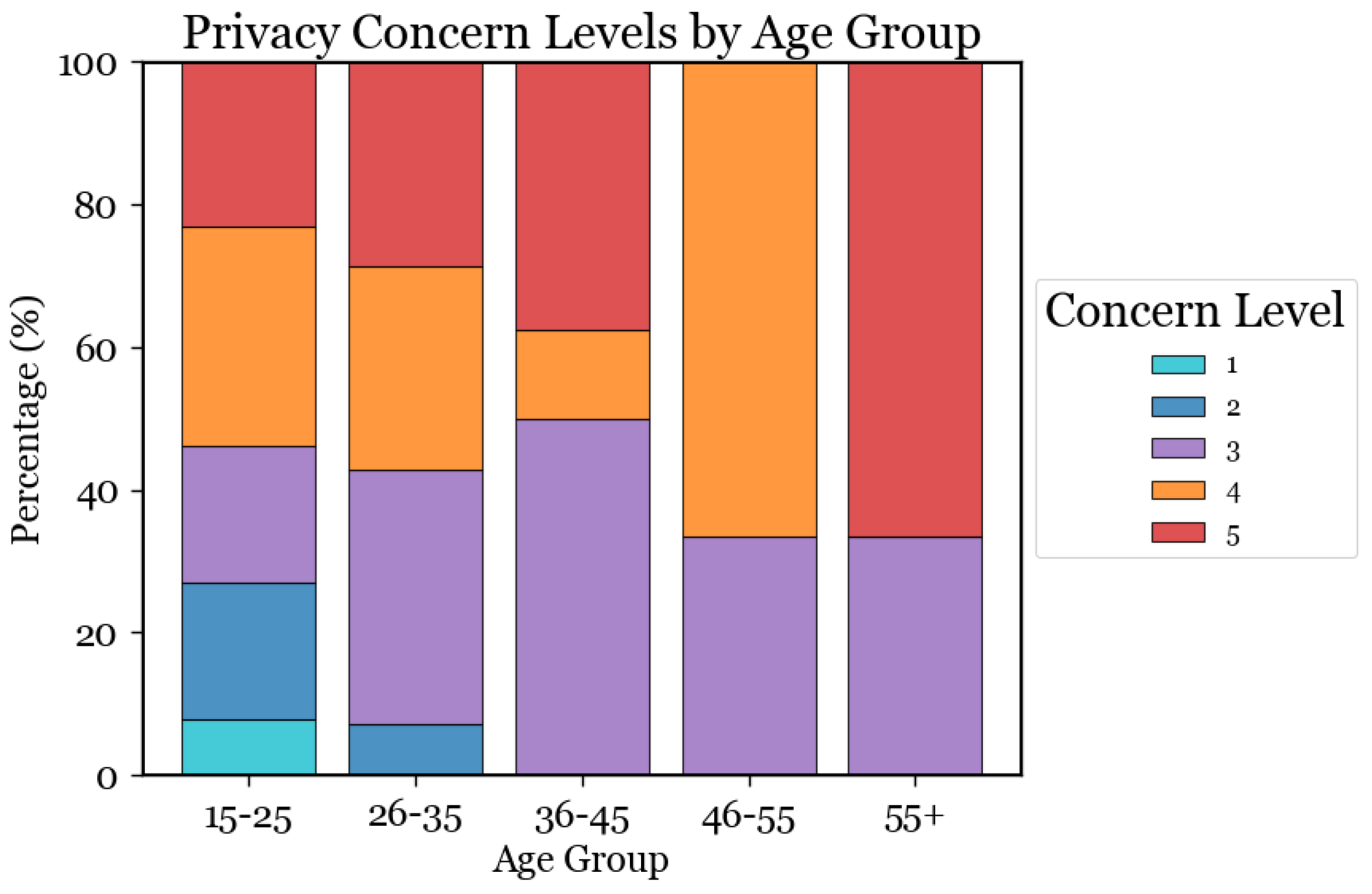

(RQ1): The first research question examined the relationship between demographics and the perceived security of different obfuscation techniques.

Figure 3 shows privacy concerns by age group, highlighting a trend of increasing concern with age. Younger participants (ages 15–25 and 26–35) generally showed fewer privacy concerns, with many expressing little or no concern. Conversely, those aged 55+ expressed the most privacy concerns, with a significant proportion showing extreme concern. The age groups 36–45 and 46–55 exhibited moderate concerns. This suggests that younger generations may be more accustomed to sharing personal information online, while older generations, more aware of privacy risks, demonstrate greater caution.

Figure 4 presents an analysis of privacy concern levels by educational level. Elementary school graduates tended to have fewer privacy concerns, possibly due to less exposure to online activities or a lack of awareness about privacy issues. High school graduates showed a more varied distribution of privacy concerns, with a noticeable increase in moderate to high levels when compared to elementary school graduates. Participants with a higher technical qualification exhibited a higher percentage of moderate to high privacy concerns, likely due to their technical background and awareness of privacy issues. Bachelor’s degree holders had a significant proportion of high levels of privacy concerns, indicating more awareness and concern about privacy issues. Master’s degree holders showed a high level of privacy concerns, similarly to bachelor’s degree holders, suggesting that higher education levels correlate with increased privacy awareness and concern. Finally, due to their extensive education and potential involvement in research and data processing, PhD holders exhibited the highest levels of privacy concerns.

Figure 5 shows the distribution of privacy concerns across occupations. Students revealed the most variation and generally fewer concerns. Participants with backgrounds in technology and educational fields displayed more concern, suggesting a greater awareness of privacy risks in these fields. Health professionals, in particular, had concentrated concerns at level 4, likely due to their sensitivity to patient privacy. Retirees exhibited moderate concern, and unemployed participants showed lower levels, with responses centered around levels 2 and 3.

The analysis indicates that privacy concerns tend to vary across different occupations. Careers in technology, education, and health are more likely to have high levels of privacy concerns. This trend suggests that professional background plays a significant role in raising awareness about privacy issues and the importance of protecting personal information online.

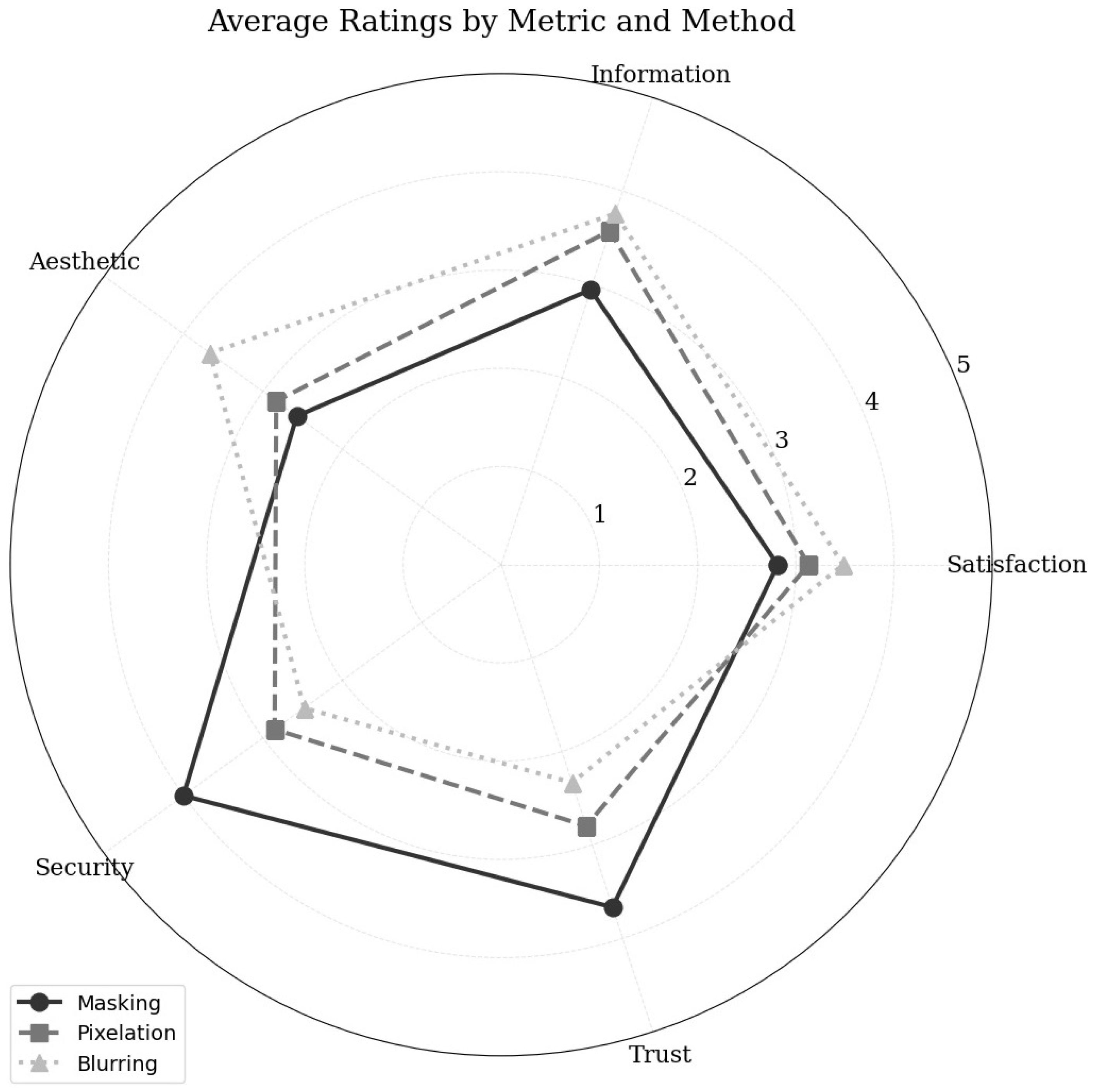

(RQ2): The second research question examined how different obfuscation techniques affect user experience in AR applications.

Figure 6 shows the adequacy ratings for three obfuscation techniques (masking, pixelation, and blurring) in four distinct AR contexts: outdoor bike, outdoor people, indoor document, and indoor screen. We used the Kruskal–Wallis test (H) to analyze how the different obfuscation techniques affect user experience in each of the four AR contexts. The test aimed to determine whether there were statistically significant differences in the perceived adequacy of the obfuscation methods within each context.

Our results revealed that no single obfuscation technique was superior; the preferred privacy protection method depended on the specific situation. For the outdoor AR contexts, we obtained the following results:

Bicycle: Participants did not strongly prefer any particular technique (H = 3.58; p = 0.1670), although they showed a slight preference for blurring (M = 3.46; SD = 1.35), followed by pixelation (M = 3.15; SD = 1.03) and masking (M = 2.91; SD = 1.55).

People: Significant differences were observed (H = 6.00; p = 0.0499). Blurring (M = 3.46; SD = 1.11) was the most preferred, significantly more so than masking (M = 2.80; SD = 1.44), with pixelation (M = 3.26; SD = 1.04) in the middle.

For the indoor AR contexts, we obtained the following results:

Document: Significant differences emerged between the methods (H = 7.91; p = 0.0191). Pixelation (M = 3.70; SD = 1.19) was the most favored, significantly outperforming masking (M = 2.87; SD = 1.63), and blurring (M = 3.11; SD = 1.27) fell in the middle.

Screen: Participants did not state strong preferences, rating all three techniques similarly (H = 0.18; p = 0.9120): blurring (M = 3.46; SD = 1.03), masking (M = 3.41; SD = 1.47), and pixelation (M = 3.37; SD = 1.16).

These findings suggest that the suitability of obfuscation techniques can vary depending on the specific AR context and the type of visual content involved.
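
As a worked check of the reported statistics, the p-values can be recovered from the H values. The sketch below assumes the usual chi-square approximation with k − 1 = 2 degrees of freedom (three techniques compared per context); the text does not state how the p-values were obtained, so this is an assumption. For 2 degrees of freedom the chi-square survival function has the closed form exp(−H/2).

```python
from math import exp


def p_value_df2(h: float) -> float:
    """Chi-square survival function for 2 degrees of freedom: P(X > h)."""
    return exp(-h / 2)


# (H, p) pairs as reported in the text for each AR context.
reported = {
    "bicycle": (3.58, 0.1670),
    "people": (6.00, 0.0499),
    "document": (7.91, 0.0191),
    "screen": (0.18, 0.9120),
}

ALPHA = 0.05  # conventional significance threshold, assumed here

recomputed = {ctx: p_value_df2(h) for ctx, (h, _) in reported.items()}
significant = [ctx for ctx, p in recomputed.items() if p < ALPHA]

# Largest gap between recomputed and reported p-values across contexts.
max_gap = max(abs(recomputed[ctx] - p) for ctx, (_, p) in reported.items())
```

Under this assumption, the recomputed values agree with the reported ones up to the rounding of H to two decimals, and only the people and document contexts fall below the 0.05 threshold, in line with the results above.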

Figure 7 presents the average ratings of the three obfuscation methods (masking, pixelation, and blurring) across the following five metrics: aesthetic, information, satisfaction, security, and trust.

Masking was rated as the most trusted and secure method, but it compromised aesthetic appeal and user satisfaction. Pixelation offered a balanced approach, preserving information while maintaining moderate levels of trust and security, albeit at the cost of aesthetic appeal. Blurring prioritized aesthetic appeal and information retention, but it significantly underperformed in terms of security and trustworthiness. This indicates that the optimal choice depends on the specific requirements of the application. If trust and security are paramount, masking could be the ideal choice. For applications where information preservation and aesthetic appeal are crucial, blurring might be considered. Pixelation offers a middle ground, balancing information preservation with reasonable levels of trust and security, even though it sacrifices aesthetic appeal.

(RQ3): Our third research question studied how users’ familiarity with AR technology influenced their acceptance of different obfuscation methods.

Table 1 presents the mean satisfaction ratings for the blurring, masking, and pixelation methods across different AR familiarity levels (1 = Not at all; 5 = Extremely).

The data demonstrate that blurring received consistent preference across both ends of the AR familiarity spectrum, with its highest satisfaction score at AR level 4 (3.75) and its lowest at AR level 1 (2.33). It still stood out with the highest mean score overall (3.45), suggesting that it may be the most generally acceptable technique for users across different familiarity levels. This could indicate that blurring is more universally suited for various AR environments, making it the most versatile option.

Masking had a lower mean satisfaction score (2.81) and showed moderate variability in the satisfaction scores, with a standard deviation of 1.33. The highest satisfaction score for masking was at AR level 1 (3.67), and the lowest was at AR level 5 (2.47). This suggests that while masking may not be the most preferred method overall, it still has specific contexts (like AR level 1) where it performs relatively well. The moderate variability indicates that user experiences with masking can differ, which might imply that it is more context-dependent and may not provide a uniformly reliable experience across all AR familiarity levels.

Pixelation had a mean satisfaction score of 3.13 and showed higher variability, especially with a score of 2.00 at AR level 2. This indicates that while some participants found pixelation acceptable, it may not be as effective across the full range of AR familiarity levels. The variability in user responses suggests that pixelation may be less suitable for diverse AR contexts.

4.4. Discussion

The results of our study provide some insights into user perceptions and preferences regarding obfuscation techniques for privacy protection in AR applications. Firstly, demographic factors such as age, education level, and occupation significantly influence privacy concerns. Older participants and those with higher education levels or technical backgrounds tended to exhibit more privacy concerns, highlighting the importance of tailoring privacy solutions to different user groups.

Secondly, the user acceptance of obfuscation techniques varied depending on the AR context and the type of visual content. Blurring emerged as the most preferred technique for outdoor contexts involving people, while pixelation was favored for indoor document contexts. This suggests that a standard approach may not be suitable, and context-specific solutions are necessary to balance privacy protection and user experience.

Thirdly, user familiarity with AR technology played a crucial role in the acceptance of obfuscation methods. Blurring was consistently preferred across different familiarity levels, indicating its versatility. However, masking and pixelation showed more variability in user satisfaction, suggesting that these techniques may be more context-dependent.

Overall, our findings emphasize the need for a nuanced approach to implementing obfuscation techniques in AR applications. Privacy protection solutions should take into account demographic factors, contextual requirements, and the varying levels of user familiarity with AR technology.

5. Conclusions and Future Work

As AR evolves and is applied in fields such as education, healthcare, and entertainment, addressing privacy concerns becomes more critical for its sustainable adoption. This work examines the interplay between AR and user privacy, aiming to identify user preferences and perceptions regarding obfuscation techniques. To that end, we conducted a questionnaire-based survey. Understanding these preferences makes it possible to design AR applications that align with user expectations while mitigating privacy risks.

An analysis of the obtained results led to valuable insights on how users perceived various obfuscation methods in an AR context. Users generally expressed concerns about privacy in AR environments but had varying preferences for obfuscation techniques. Blurring emerged as a popular choice for its ability to maintain visual appeal and preserve information, making it suitable for scenarios in which one prioritizes aesthetics. Masking was regarded as the most secure and trusted method, though its intrusive nature often detracted from the overall user experience. However, pixelation was perceived as a median method across all evaluated criteria. It did not emerge as the best or worst in terms of visual appeal, preserving information, user satisfaction, trust, and security, positioning it centrally within the spectrum of user preferences. This indicates that while pixelation may not excel in any specific category, it offers a balanced approach that could be suitable for general use cases where a moderate level of obfuscation is acceptable.

The results also showed that user familiarity with AR technology influenced the acceptance of obfuscation methods. Although all three methods (blurring, masking, and pixelation) were generally well received, blurring emerged as the most widely accepted technique, particularly among users with higher AR familiarity levels. Pixelation had a medium preference overall and demonstrated consistent user satisfaction across different familiarity levels. Masking showed more variability in user acceptance, indicating that it may not be the optimal choice for all AR contexts, especially among users with high AR familiarity.

Demographic factors significantly influenced perceptions of privacy and the acceptance of obfuscation methods. Younger individuals and those with a high-school education tended to have fewer privacy concerns, while older individuals and those with higher education levels exhibited greater awareness and caution. Similarly, professional background emerged as a significant factor, with individuals in the technology, education, and health sectors demonstrating heightened privacy awareness and concern.

The methods explored in this study have the potential to be applied in safeguarding sensitive information in shared AR spaces, such as obscuring bystander identities or protecting proprietary data in industrial settings.

In future work, we intend to incorporate these results into the automatic selection of obfuscation methods in AR environments, in order to combine the protection of sensitive data with the quality of user experience.

To address the limitations arising from the small sample size and limited demographic diversity, future research will focus on expanding the participant pool to enhance the reliability and generalizability of our findings. We aim to recruit a larger and more diverse sample, allowing for more robust conclusions across different user demographics.

Some findings may generalize to other populations and contexts, provided that the study's limitations and context-specific variables are taken into account. Overgeneralizing could lead to premature conclusions, so further research is warranted. Obfuscation methods can protect data privacy and security, but their use requires consideration of ethical and legal implications. Transparent and auditable methods that preserve data integrity must be developed.

Exploring different cultural and social contexts could provide further insights into obfuscation methods. Quantitative approaches have limitations, particularly regarding “unknown unknowns”. Qualitative methods, such as interviews or content analysis, can provide a deeper understanding of phenomena, enabling the identification of patterns and relationships not captured by quantitative approaches.

Author Contributions

Conceptualization, A.C.C., R.L.d.C.C., A.M., A.G. and L.S.; data curation, A.C.C.; formal analysis, A.C.C.; funding acquisition, C.R. and R.L.d.C.C.; investigation, A.C.C., C.R., R.L.d.C.C., A.M., A.G. and L.S.; project administration, C.R. and R.L.d.C.C.; supervision, A.G. and A.M.; visualization, A.C.C.; writing—original draft, A.C.C., A.G., A.M., C.R., R.L.d.C.C. and L.S.; writing—review and editing, A.C.C., A.G., A.M., C.R., R.L.d.C.C. and L.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work is partially funded by national funds through FCT—Fundação para a Ciência e a Tecnologia, I.P., in the context of projects SafeAR—2022.09235.PTDC and UIDB/04524/2020 and under the Scientific Employment Stimulus—CEECINS/00051/2018.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

This appendix presents the questionnaires used in our study to evaluate the AR system. The SafeAR questionnaire was structured into four distinct sections to collect detailed data on user profiles (Table A1), privacy awareness (Table A2), preferences for obfuscation techniques (Table A3), and overall perceptions (Table A4).

In some questions in the questionnaire, we evaluated user perceptions of three obfuscation techniques: masking, pixelating, and blurring. For clarity, each technique is represented by a number throughout the tables:

1. Masking

2. Pixelating

3. Blurring

The criteria used to evaluate user perceptions of these techniques are numbered in the same way:

1. Aesthetic: the visual appeal of the technique.

2. Information: the clarity and usability of information after obfuscation.

3. Satisfaction: overall satisfaction with the technique.

4. Security: the perceived security enhancement provided by the technique.

5. Trust: the trustworthiness and reliability of the technique.

Questions marked with the symbol # were presented in a multiple-choice grid format, in which each numbered item was rated individually.
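For readers who wish to process responses collected with a questionnaire of this kind, the following minimal sketch shows one way the five-point agreement scale and the grid format could be encoded numerically. The labels and criteria follow Table A3; the `encode_grid` helper and the sample answers are hypothetical illustrations and are not taken from the study data or its analysis code.

```python
# Five-point agreement scale as worded in the questionnaire (Table A3).
AGREEMENT_SCALE = {
    "Disagree totally": 1,
    "Disagree somewhat": 2,
    "Neither agree nor disagree": 3,
    "Agree somewhat": 4,
    "Agree totally": 5,
}

# Criteria rated for each technique in a grid question (marked with #).
CRITERIA = ("Aesthetic", "Information", "Satisfaction", "Security", "Trust")


def encode_grid(answers):
    """Convert one participant's grid answers (criterion -> label) to scores."""
    missing = [c for c in CRITERIA if c not in answers]
    if missing:
        raise ValueError(f"missing criteria: {missing}")
    return {c: AGREEMENT_SCALE[answers[c]] for c in CRITERIA}


# Hypothetical answers for a single technique; illustrative only, not study data.
sample = {
    "Aesthetic": "Agree somewhat",
    "Information": "Agree totally",
    "Satisfaction": "Agree somewhat",
    "Security": "Neither agree nor disagree",
    "Trust": "Disagree somewhat",
}
scores = encode_grid(sample)
print(scores)
```

Encoding each criterion separately keeps the grid structure intact, so per-criterion means can later be computed for each technique.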

Table A1.

SafeAR Questionnaire section: user profile questionnaire.

| Question | Options |
|---|---|
| Gender | □ Female □ Male □ Prefer not to answer |
| Age | ______ |
| Education level | □ Primary □ Secondary □ Technical □ Bachelor’s □ Master’s □ Doctorate |
| Professional field | □ Student □ Healthcare □ Education □ IT □ Legal □ Finance □ Services □ Unemployed |
| Previous AR experience | □ Glasses/Headset □ Smartphone/Tablet □ Spatial projection |
| Familiarity level with AR | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |

Table A2.

SafeAR Questionnaire section: privacy and obfuscation.

| Question | Options |
|---|---|
| Sharing photos or video online | □ Never □ Rarely □ Sometimes □ Often □ Very often |
| Concerned about privacy | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| Heard about obfuscation technique # | |
| 1-Pixelating 2-Blurring 3-Masking | □ Yes, I use it regularly □ Yes, I have used it □ Yes, but I don’t use it □ Yes, but I don’t know what they are □ I’ve never heard of it |
| Knowledge of reversal of obfuscation methods | □ Yes □ No □ Maybe |
| Knowledge about other obfuscation methods | ______ |

Table A3.

SafeAR Questionnaire section: masking vs. pixelating vs. blurring.

| Question | Options |
|---|---|
| Suitability of obfuscation for the current context # (Bike outdoor) | |
| 1-Masking 2-Pixelating 3-Blurring | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| Suitability of obfuscation for the current context # (Document indoor) | |
| 1-Masking 2-Pixelating 3-Blurring | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| Suitability of obfuscation for the current context # (Screen indoor) | |
| 1-Masking 2-Pixelating 3-Blurring | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| Suitability of obfuscation for the current context # (People outdoor) | |
| 1-Masking 2-Pixelating 3-Blurring | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| User perception of Masking across all AR contexts # | |
| 1-Aesthetic 2-Information 3-Satisfaction 4-Security 5-Trust | □ 1—Disagree totally □ 2—Disagree somewhat □ 3—Neither agree nor disagree □ 4—Agree somewhat □ 5—Agree totally |
| User perception of Pixelating across all AR contexts # | |
| 1-Aesthetic 2-Information 3-Satisfaction 4-Security 5-Trust | □ 1—Disagree totally □ 2—Disagree somewhat □ 3—Neither agree nor disagree □ 4—Agree somewhat □ 5—Agree totally |
| User perception of Blurring across all AR contexts # | |
| 1-Aesthetic 2-Information 3-Satisfaction 4-Security 5-Trust | □ 1—Disagree totally □ 2—Disagree somewhat □ 3—Neither agree nor disagree □ 4—Agree somewhat □ 5—Agree totally |

Table A4.

SafeAR Questionnaire section: overview of using obfuscation techniques.

| Question | Options |
|---|---|
| Importance of control over the level of obfuscation # | |
| 1-Masking 2-Pixelating 3-Blurring | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| Have ever used an app to obfuscate data | □ Yes, frequently □ Yes, sometimes □ No |
| Interested in including obfuscation techniques in daily life | □ 1—Not at all □ 2—Slightly □ 3—Moderately □ 4—Very □ 5—Extremely |
| Obfuscation techniques should be applied automatically | □ Yes □ No □ Maybe |
| Obstacles in the process of adopting obfuscation on a daily basis | ______ |

Figure 1.

Visualizations of privacy protection techniques in AR. The “As is” image shows the original image, while masking, pixelation, and blurring can be used to obscure sensitive information on screens within AR environments.

Figure 2.

Examples of obfuscated images in different AR contexts (indoor and outdoor), demonstrating techniques such as masking, pixelation, and blurring.

Figure 3.

The distribution of privacy concern levels across five age groups (18–25, 26–35, 36–45, 46–55, 55+). The different colored segments within each bar correspond to the percentage of individuals who selected a particular level of privacy concern on a Likert scale (1 = Not at all; 5 = Extremely).

Figure 4.

Privacy concern levels vary across different education levels. The chart shows the distribution of privacy concern levels (1 = Not at all; 5 = Extremely) for individuals at six different education levels.

Figure 5.

The distribution of privacy concern levels across six different occupations (student, technology, education, health, retired, unemployed). The different colored segments within each bar correspond to the percentage of individuals who selected a particular level of privacy concern on a Likert scale (1 = Not at all; 5 = Extremely).

Figure 6.

Mean adequacy ratings for masking, pixelation, and blurring techniques in four different AR contexts: outdoor bike, outdoor people, indoor document, and indoor screen.

Figure 7.

A comparative analysis of three obfuscation techniques (masking, pixelation, and blurring) based on five key metrics: aesthetic, information, satisfaction, trust, and security. The radial axis represents the rating scale using the Likert scale (1 = totally disagree; 5 = totally agree), with higher values indicating better performance.

Table 1.

Mean satisfaction – Comparison of blurring, masking, and pixelation across different AR familiarity levels.

| AR Level | Blurring | Masking | Pixelation |
|---|---|---|---|
| 1 | 2.33 | 3.67 | 3.33 |
| 2 | 3.67 | 3.33 | 2.00 |
| 3 | 3.29 | 2.94 | 3.00 |
| 4 | 3.75 | 2.75 | 3.44 |
| 5 | 3.60 | 2.47 | 3.13 |
| Mean | 3.48 | 2.81 | 3.13 |
| Std. Dev. | 1.37 | 1.33 | 1.10 |
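As a reading aid, the per-level means in Table 1 can be compared programmatically. The sketch below copies the values from the table and reports, for each AR familiarity level, the technique with the highest mean satisfaction. The variable names are illustrative, and because group sizes are not listed in the table, no weighting or significance testing is attempted.

```python
# Mean satisfaction per AR familiarity level, copied from Table 1.
table1 = {
    1: {"Blurring": 2.33, "Masking": 3.67, "Pixelation": 3.33},
    2: {"Blurring": 3.67, "Masking": 3.33, "Pixelation": 2.00},
    3: {"Blurring": 3.29, "Masking": 2.94, "Pixelation": 3.00},
    4: {"Blurring": 3.75, "Masking": 2.75, "Pixelation": 3.44},
    5: {"Blurring": 3.60, "Masking": 2.47, "Pixelation": 3.13},
}

# For each familiarity level, pick the technique with the highest mean.
top_by_level = {
    level: max(means, key=means.get) for level, means in table1.items()
}

for level, technique in top_by_level.items():
    print(f"AR level {level}: highest mean satisfaction -> {technique}")
```

With these values, masking has the highest mean only at familiarity level 1, while blurring has the highest mean at levels 2 through 5.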

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).