Abstract

The metaverse concept has been evolving from static, pre-rendered virtual environments toward a new frontier: the real-time metaverse. This survey explores the emerging field of real-time metaverse technologies, which enable the continuous integration of dynamic, real-world data into immersive virtual environments. We examine the key technologies driving this evolution, including advanced sensor systems (LiDAR, radar, cameras), artificial intelligence (AI) models for data interpretation, fast data fusion algorithms, and edge computing with 5G networks for low-latency data transmission. We show how these technologies are orchestrated to achieve near-instantaneous synchronization between the physical and virtual worlds, the defining characteristic that distinguishes the real-time metaverse from its traditional counterparts. The survey offers comprehensive insight into the technical challenges and discusses solutions for realizing responsive, dynamic virtual environments. It also considers the potential applications and impact of real-time metaverse technologies across various fields, including live entertainment, remote collaboration, dynamic simulations, and urban planning with digital twins. By synthesizing current research and identifying future directions, this survey provides a foundation for understanding and advancing the rapidly evolving landscape of real-time metaverse technologies, contributes to the growing body of knowledge on immersive digital experiences, and sets the stage for further innovation in this transformative field.

1. Introduction

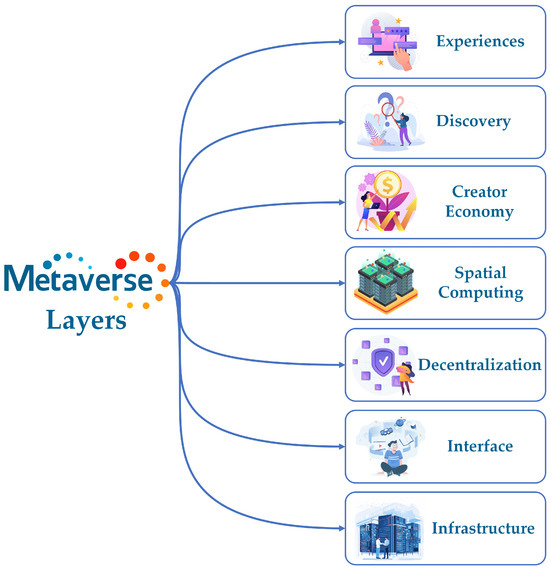

The metaverse is a complex, multi-component, hierarchical construct integrating various technologies and systems to create an immersive, three-dimensional (3D) interconnected virtual universe [1]. Figure 1 illustrates a seven-layer architecture of the metaverse. At its foundation, the Infrastructure layer is the technical backbone that keeps the metaverse running smoothly and efficiently [2]. It includes physical hardware such as servers and data centers, as well as the cloud computing resources that provide the necessary computational power. A robust infrastructure is essential for scalability, enabling the metaverse to grow and accommodate an increasing number of users and experiences.

Figure 1.

An illustration of the 7-layer metaverse architecture.

The Interface layer determines how users interact with the metaverse [3]. It includes the devices they use, such as VR headsets, AR glasses, and smartphones, as well as the software interfaces that make the metaverse accessible and user-friendly [1]. A well-designed interface makes the metaverse easy to navigate and engaging, so that it is accessible to a wide range of users regardless of their technical expertise.

The core of the metaverse is the Decentralization layer, which ensures that the metaverse operates without being controlled by a single entity. Decentralization, often achieved through blockchain technology [4], is crucial for maintaining user autonomy, privacy, and security. By distributing power and data across a network of users, it enables true ownership of digital assets, allowing participants to engage in transactions and interactions with confidence that their data and property are secure [5].

The Spatial Computing layer plays an essential role in the metaverse by merging the physical and digital worlds to create immersive experiences. It draws on technologies such as virtual reality (VR) [6], augmented reality (AR) [7], mixed reality (MR) [8], and haptic feedback systems [9], allowing users to interact with digital objects as if they were part of the physical world. Spatial computing makes the metaverse more tangible and real, enabling users to experience and manipulate 3D environments in ways that go beyond the limitations of traditional computing interfaces [10].

The Creator Economy layer is the engine that drives innovation and content within the metaverse [11]. This layer supports the tools and platforms that allow users to create, distribute, and monetize digital content and experiences [12]. By empowering individuals to produce and profit from their creations, the creator economy fosters a vibrant, self-sustaining ecosystem in which creativity is rewarded [13]. Creators’ content fuels the diversity of available experiences and encourages the continual growth and expansion of the metaverse.

The Discovery layer helps users navigate the vast expanse of the metaverse [14]. It includes search engines, social networks, and recommendation systems that guide users toward content, experiences, and services that match their interests [15]. Effective discovery mechanisms are essential for helping users find and engage with what they are looking for, ensuring that the metaverse remains a dynamic and accessible space.

Finally, the Experiences layer is where the real value of the metaverse is realized [3]. It encompasses all the activities users can participate in, from socializing and gaming to education and commerce. The quality and variety of these experiences make the metaverse an engaging and appealing place for users to spend their time. The experience layer is constantly evolving, driven by the creativity of the community and the opportunities enabled by the other layers.

The metaverse is driven by several key technologies that together give it its immersive, interactive, and interconnected nature [16], as conceptually shown in Figure 2. Blockchain technology underpins the decentralization and security aspects of the metaverse [17]. It ensures that digital assets, including virtual currencies, property, and identities, are securely managed and owned by users without relying on centralized authorities [18]. Blockchain provides transparency, immutability, and trust, enabling users to engage in secure transactions and interactions within the metaverse [19]. By leveraging blockchain, the metaverse can maintain a fair and open economy in which users have full control over their digital assets and data.

Figure 2.

Metaverse technologies.

Augmented reality (AR) and virtual reality (VR) are at the forefront of creating immersive experiences within the metaverse [20]. VR creates fully digital environments that users can explore and interact with using devices such as headsets and gloves, effectively transporting them to another world. AR, by contrast, overlays digital content onto the real world, enhancing users’ perception of and interaction with their surroundings [21]. Together, AR and VR provide the sensory and spatial components that make the metaverse feel tangible and engaging, allowing users to interact with digital spaces in ways that mimic real-world experiences.

5G is essential for enabling the metaverse because of its ultra-high-speed connectivity and low latency [22]. It allows seamless, real-time interaction within virtual environments and supports high-bandwidth applications such as AR, VR, and 3D reconstruction through faster data transfer, improving the user experience even in densely populated virtual spaces [23]. It also enables advanced features such as haptic feedback in VR, which deepens immersion by allowing users to “feel” virtual objects or textures.

Artificial intelligence (AI) technologies drive the intelligence and responsiveness of the metaverse [24]. AI powers various aspects of the metaverse, including realistic virtual characters that interact with users and dynamic, adaptive environments [25]. AI also plays a crucial role in personalization, learning from users’ behaviors and preferences to tailor experiences to individual needs. By enabling complex decision-making and learning within the metaverse, AI helps make the digital world not only immersive but also intelligent and responsive.

Brain–computer interfaces (BCIs) allow users to interact with virtual environments through brain signals, bypassing traditional input methods such as keyboards or controllers [26]. Although still in its early stages, this technology could transform the metaverse by enabling thought-based commands and avatar control, and early applications in gaming and productivity point toward more immersive and efficient experiences. As the technology matures, BCIs that interface directly with the human neocortex may see wider adoption, enabling more advanced cognitive interaction in virtual spaces [27].

Internet of Things (IoT) technology bridges the gap between the physical and digital worlds by connecting real-world edge devices to the metaverse [28]. It allows physical objects, from home appliances to vehicles, to communicate and interact with the digital environment. This integration enables real-time data exchange and interaction, making the metaverse a more seamless extension of the physical world [2,29]. For instance, IoT can allow users to control real-world devices from within a virtual environment or bring physical data into the metaverse for analysis and visualization. Similarly, 3D reconstruction technology is vital for creating detailed and accurate digital representations of real-world environments [30]. It captures and digitizes physical spaces, objects, and people, bringing them into the metaverse with high fidelity. With 3D reconstruction, virtual replicas of real-world locations can be created that users can explore and interact with as if they were physically present [31]. These capabilities are important for applications such as virtual tourism, real estate, and architecture within the metaverse.

All these technologies, working together, form the backbone of the metaverse, enabling users to create, explore, and interact in a fully realized digital universe [2].

While there are many surveys of the metaverse as a whole, this paper focuses specifically on the new frontier of the real-time metaverse. This survey is the first to examine this emerging technological ecosystem comprehensively. We present the challenges and opportunities involved in creating genuinely responsive virtual environments, from an analysis of state-of-the-art enabling technologies to their synergistic integration. The survey connects metaverse concepts with live systems and provides a starting point for researchers, developers, and industry stakeholders interested in the future of immersive digital experiences. We hope it helps guide work in this large and complex area, further narrows the gap between the physical and virtual worlds, and leads toward dynamic, interactive virtual worlds that are seamlessly integrated with our physical world.

The rest of this paper is structured as follows. Section 2 presents a general overview of the real-time metaverse concept. Section 3 introduces the critical enabling technologies. Section 4 discusses the state of the art in the real-time metaverse, and the subsequent sections address several key issues. Section 5 outlines the technological infrastructure that supports real-time virtual experiences. Section 6 details the engines for immersive technologies. Section 7 tackles a critical but long-overlooked component of the metaverse ecosystem: standards. Section 8 presents the challenges and opportunities. Finally, Section 9 concludes this survey with future directions.

2. The Real-Time Metaverse

The real-time metaverse is a cutting-edge component of the ongoing digital revolution [32] and an advanced extension of the broader metaverse concept. It represents the next evolution of virtual worlds, transforming static digital environments into dynamic, interactive spaces that are continuously updated from real-world data. The traditional metaverse offers pre-built, static worlds in which users can socialize, work, play, and explore [33]; the real-time metaverse goes further by incorporating changes in the real world as they happen. This shift opens up new possibilities, blending physical and digital realities to create more immersive and interactive experiences.

At its core, the real-time metaverse continuously synchronizes real-world activities and environments with their digital counterparts. This is achieved through advanced sensor technologies and other inputs that continuously capture and transmit data from the physical world. These sensors gather critical information about an environment’s geometry, movement, and visual details and feed it into powerful data processing systems [34]. Data fusion techniques combine these diverse data streams into a single coherent model [35], which is then rendered in real time within the virtual space. The result is a virtual environment that evolves in response to real-world changes, whether the movement of objects, changes in lighting, or the addition of new elements to a scene.
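Data fusion can take many forms. As a minimal sketch, assuming each sensor reports an independent, unbiased estimate of the same scalar quantity together with its noise variance, the estimates can be combined by inverse-variance weighting, which gives more reliable sensors more influence. The sensor labels, values, and variances below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """One sensor's estimate of a scalar quantity (e.g. an object's distance in metres)."""
    source: str       # hypothetical sensor label, e.g. "lidar"
    value: float      # measured value
    variance: float   # noise variance of this sensor (must be > 0)


def fuse(measurements: list[Measurement]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent, unbiased measurements.

    Returns the fused estimate and its variance. Each weight is 1/variance,
    so lower-noise sensors contribute more to the result.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    estimate = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return estimate, 1.0 / total


# Hypothetical readings of the same object's distance from three sensors.
readings = [
    Measurement("lidar", 10.0, 0.01),
    Measurement("radar", 10.4, 0.04),
    Measurement("camera", 9.0, 1.0),
]
estimate, variance = fuse(readings)
print(f"fused distance = {estimate:.3f} m, variance = {variance:.4f}")
```

The fused variance is smaller than that of any single sensor, which is the basic reason for combining streams rather than picking the best one. Real pipelines fuse multidimensional, time-varying state (for example with Kalman-style filters), but the weighting idea is the same.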

2.1. Dynamic Environments and Real-Time Interaction

One of the key distinguishing features of the real-time metaverse is its dynamic nature. In a traditional metaverse, environments are typically static or only periodically updated [21,36], so users interact with a world that may not reflect ongoing changes in the physical world, which makes the experience somewhat disconnected from reality. The real-time metaverse, by contrast, is designed to evolve constantly [37]. As sensors capture real-world changes, such as the construction of a new building, the movement of vehicles, or even weather conditions, those changes are reflected in the virtual environment with minimal delay [38]. This dynamic interaction opens up a wide range of applications. For example, a virtual replica of a city can be continuously updated to reflect real-time traffic patterns [39,40], construction projects [41], or local events, and users could navigate and interact with this virtual city as they would with the physical one. The ability to mirror real-world changes in real time has far-reaching implications for industries such as urban planning [42], architecture [43], education [44], and entertainment [45].
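Keeping a virtual replica current means applying a stream of timestamped updates, some of which may arrive late or out of order. A minimal sketch, assuming each update carries the capture time of the physical observation, is a last-writer-wins store that ignores any update older than the state it already holds. The class names, entity identifiers, and attributes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Update:
    entity_id: str     # hypothetical identifier, e.g. "intersection-12"
    timestamp: float   # capture time in the physical world (seconds)
    attributes: dict   # new attribute values, e.g. {"vehicles": 14}


@dataclass
class TwinState:
    timestamp: float
    attributes: dict = field(default_factory=dict)


class DigitalTwinStore:
    """Last-writer-wins store: keeps, per entity, the newest observed state."""

    def __init__(self) -> None:
        self.entities: dict[str, TwinState] = {}

    def apply(self, update: Update) -> bool:
        """Apply an update; return False if it is stale and was ignored."""
        current = self.entities.get(update.entity_id)
        if current is not None and update.timestamp <= current.timestamp:
            return False  # not newer than what the twin already shows
        # Partial updates are merged onto the previous attribute values.
        merged = dict(current.attributes) if current else {}
        merged.update(update.attributes)
        self.entities[update.entity_id] = TwinState(update.timestamp, merged)
        return True


store = DigitalTwinStore()
store.apply(Update("intersection-12", 100.0, {"vehicles": 14, "signal": "red"}))
store.apply(Update("intersection-12", 102.0, {"vehicles": 9}))
late = store.apply(Update("intersection-12", 101.0, {"vehicles": 20}))  # arrives late
print(late, store.entities["intersection-12"])
```

Ordering by capture time rather than arrival time keeps a delayed packet from overwriting fresher state; a deployed system would additionally have to deal with clock skew between sensors.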

2.2. Enhanced Immersion and Engagement

The real-time metaverse offers deeper immersion than its traditional counterpart, whose virtual worlds run independently of the physical one. Seamlessly integrating real-world data makes the user experience more engaging and lifelike. Imagine attending a virtual concert that mirrors a live performance in a physical venue, with sound, lighting, and audience reactions captured in real time [46]. Blurring the boundary between the real and virtual worlds in this way creates a sense of presence and immediacy that is difficult to achieve in static environments. The real-time metaverse also enhances the interactivity of virtual experiences [47]. Users are no longer passive participants in a pre-rendered environment; they can actively interact with objects and spaces that reflect real-world conditions. For example, in a real-time virtual meeting space, participants could interact with digital versions of objects [48] that are being manipulated in the physical world. This level of interaction could transform fields such as remote collaboration, virtual workspaces, and education, where the ability to manipulate and experience objects and data in real time is crucial.

2.3. The Role of Advanced Technology

The realization of the real-time metaverse hinges on several key technological advancements. One of the most important is the ability to capture, transmit, and process data in real-time. This requires a high-speed, low-latency infrastructure, such as 5G networks and edge computing, which allows sensor data to be processed locally or close to the source, reducing transmission delays [49]. Additionally, powerful AI models are necessary to analyze and interpret the incoming data, ensuring it is accurately reflected in the virtual environment. AI also plays a crucial role in managing the complexity of real-time environments [50]. Machine learning algorithms can help predict how objects will move or change over time, making the real-time metaverse more fluid and reducing the impact of any delays in data transmission. Furthermore, AI-driven data fusion techniques allow the system to combine data from multiple sensors [51] into a unified 3D model.
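The prediction step can be illustrated with the simplest possible predictor. As a sketch, substituting a constant-velocity model (dead reckoning) for the machine learning models mentioned above, the last two observed positions of an object are used to extrapolate where it should be drawn at render time, hiding part of the transmission delay. The function name and the numbers are illustrative assumptions.

```python
def extrapolate(p_prev, t_prev, p_last, t_last, t_now):
    """Constant-velocity (dead-reckoning) prediction of a 3D position.

    p_prev, p_last: (x, y, z) positions observed at times t_prev < t_last.
    t_now: render time, typically later than t_last because of network delay.
    """
    dt = t_last - t_prev
    if dt <= 0:
        raise ValueError("observations must be in increasing time order")
    velocity = tuple((b - a) / dt for a, b in zip(p_prev, p_last))
    lead = t_now - t_last  # how far ahead of the newest observation we render
    return tuple(p + v * lead for p, v in zip(p_last, velocity))


# A vehicle observed at t = 0.0 s and t = 0.1 s; the newest sample is 50 ms old when rendered.
predicted = extrapolate((0.0, 0.0, 0.0), 0.0, (1.5, 0.0, 0.0), 0.1, 0.15)
print(predicted)
```

A learned model would replace the velocity estimate with a prediction conditioned on longer history or scene context, but the interface stays the same: recent observations in, predicted state out.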

2.4. Applications and Potential Impact

As the digital transformation accelerates, the implications of the real-time metaverse extend far beyond entertainment, and its potential applications are vast and diverse. In entertainment, live events such as sports games or concerts [52] could be experienced virtually in real time, allowing users to engage with an event from anywhere in the world. In urban planning and architecture, a real-time virtual replica of a city can help planners and architects test designs and simulate the impact of changes [53] before they are made in the physical world. Education can also benefit from real-time virtual classrooms that mirror real-world laboratories or simulations [54], giving students hands-on experience in science, engineering, and medicine. The real-time metaverse also has the potential to transform social interaction [33]. Instead of static avatars meeting in a fixed environment, people could interact dynamically in spaces that reflect their real-world surroundings, allowing for more meaningful and immersive connections. For example, a person in one part of the world could invite a friend to experience their real-world surroundings virtually and in real time, whether a stroll through a park or a live museum tour.

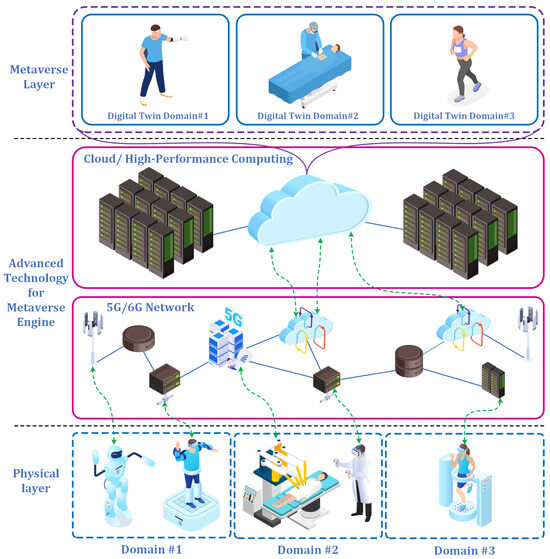

Figure 3 illustrates a hierarchical system for the real-time metaverse, consisting of three layers. At the physical layer, real-world actions and data are captured through sensors and devices in various domains, such as robotics, medical procedures, and fitness tracking. The data are transmitted via 5G networks to the technology layer, where cloud and high-performance computing (HPC) infrastructure processes and synchronizes it in real-time. The top metaverse layer represents digital twin environments, where the processed data are rendered into virtual replicas of physical actions, enabling immersive real-time interactions. This system ensures that real-world activities are mirrored seamlessly in the virtual world, supporting real-time metaverse experiences.

Figure 3.

Real-time metaverse hierarchical system.

3. Key Enabling Technologies: An Overview

The metaverse represents a burgeoning frontier where the digital and physical worlds converge, offering immersive experiences through advancements in virtual reality (VR), augmented reality (AR), and other cutting-edge technologies. This section delves into the critical aspects of the metaverse, including blockchain integration, artificial intelligence (AI), edge computing, and the myriad challenges and implications of these innovations.

Blockchain technology is foundational for establishing a decentralized, secure, and interoperable metaverse [55]. Blockchain enhances metaverse functionalities such as data acquisition, storage, sharing, interoperability, and privacy preservation. Blockchain ensures the trustworthiness of transactions and interactions by providing a transparent and tamper-proof ledger, essential for managing digital assets and identities in a virtual world [1]. Blockchain can facilitate the creation of interoperable virtual worlds, allowing users to seamlessly transition and securely interact across different platforms, which is crucial for realizing a unified metaverse [56].
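The tamper-evidence property mentioned above comes from chaining records by cryptographic hash. The sketch below is a toy hash chain only, assuming a single writer; it deliberately omits consensus, digital signatures, and networking, all of which a real blockchain requires. The asset and owner names are hypothetical.

```python
import hashlib
import json


def block_hash(index: int, prev_hash: str, payload: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block contents."""
    data = json.dumps({"index": index, "prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def append(chain: list[dict], payload: dict) -> None:
    """Add a block that commits to the hash of the previous block."""
    prev = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({"index": index, "prev": prev, "payload": payload,
                  "hash": block_hash(index, prev, payload)})


def verify(chain: list[dict]) -> bool:
    """Recompute every hash; editing any earlier record breaks the chain."""
    prev = "0" * 64
    for block in chain:
        if block["prev"] != prev:
            return False
        if block["hash"] != block_hash(block["index"], block["prev"], block["payload"]):
            return False
        prev = block["hash"]
    return True


ledger: list[dict] = []
append(ledger, {"asset": "virtual-plot-7", "owner": "alice"})
append(ledger, {"asset": "virtual-plot-7", "owner": "bob"})
intact = verify(ledger)
print(intact)    # True
ledger[0]["payload"]["owner"] = "mallory"  # tamper with history
tampered = verify(ledger)
print(tampered)  # False
```

Because each block commits to its predecessor's hash, rewriting history requires recomputing every later block; in a real network, consensus among many independent nodes is what makes that rewrite impractical.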

In the metaverse, AI is pivotal in enhancing user experiences by enabling more natural and intuitive interactions [55]. AI applications such as natural language processing, computer vision, and neural interfaces are instrumental in creating responsive and adaptive virtual environments [57]. AI-driven avatars, capable of understanding and reacting to user inputs, significantly enhance the realism and immersion of the metaverse [58]. Edge computing complements AI by providing computational power and low-latency communication for real-time interactions in the metaverse [59].

The deployment of the metaverse faces several technological and infrastructural challenges [21]. 5G/6G technology can help overcome these hurdles by providing ultra-low latency, high data rates, and enhanced reliability. A proposed layered architecture for integrating 6G with the metaverse addresses the need for scalable and efficient network infrastructure that can handle the vast amounts of data generated by metaverse applications. Network scalability, data privacy, and security have been identified as key challenges [32]. Advanced cryptographic algorithms and robust security protocols are required to protect user data and secure transactions within the metaverse [60]. Developing interoperability standards is also critical to facilitate seamless interactions across different virtual environments [61].

Edge computing complements VR and AI by providing the computational power and low-latency communication needed for real-time interactions in the metaverse, and it is essential for improving the performance and scalability of virtual environments. Edge-enabled technologies play a crucial role in meeting the real-time demands of VR and AR applications: by processing data closer to the user, edge computing reduces latency and improves the overall user experience [36]. Some research indicates that seamless real-time feedback is important for user safety and for the effectiveness of such applications. In addition, integrating advanced queue management algorithms with edge computing can optimize network performance for data-intensive applications [62]. This network integration is paramount for maintaining the responsiveness and interaction fidelity the metaverse requires, particularly in applications that demand immediate feedback and minimal delay, such as health, education, and interactive entertainment.
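A back-of-the-envelope calculation shows why proximity matters. Assuming signals in optical fibre travel at roughly two-thirds of the speed of light (about 2 × 10^8 m/s), the round-trip propagation delay alone grows linearly with distance; queuing, processing, and radio-access delays come on top. The server distances below are hypothetical.

```python
FIBRE_SPEED_M_PER_S = 2.0e8  # approx. speed of light in optical fibre (about 2/3 of c)


def round_trip_propagation_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds over fibre, ignoring all other delays."""
    distance_m = distance_km * 1000.0
    return 2 * distance_m / FIBRE_SPEED_M_PER_S * 1000.0


# Hypothetical placements of the server that processes a user's sensor data.
for label, km in [("edge node", 10), ("regional cloud", 500), ("distant cloud", 3000)]:
    print(f"{label:15s} {km:5d} km -> {round_trip_propagation_ms(km):6.2f} ms")
```

Under these assumptions the edge node adds about 0.1 ms of propagation delay, the regional cloud about 5 ms, and the distant cloud about 30 ms. For interactive applications, whose total delay budgets are commonly quoted in the tens of milliseconds, a distant data centre can consume much of the budget before any processing happens.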

VR and AR are core technologies driving the immersive experiences of the metaverse. Research on integrating haptic feedback into AR shows how tactile sensations can enhance user interactions in virtual environments [9,63]. Innovations such as the FingerTac haptic gloves provide real-time haptic feedback and improve the realism of AR applications [9]. Delivering such feedback in real time requires infrastructure that handles compute- and data-intensive tasks efficiently. Research has also demonstrated that VR combined with treadmill training can improve balance and mobility in individuals with traumatic brain injury (TBI) [64]. The study showed that participants engaged more with the VR-assisted training and reported improved balance and mobility [65]. This use of VR in rehabilitation highlights its potential in the metaverse, where real-time feedback and dynamic interaction are key, and aligns with the metaverse’s vision of adaptive, immersive spaces customized to individual user needs, whether for entertainment, social interaction, or healthcare.

The above research underscores VR’s transformative potential, especially for healthcare applications within the metaverse [66]. By offering real-time sensory feedback and stimulating neural pathways, as seen in neurorehabilitation, VR in the metaverse can provide personalized, therapeutic environments for users [67]. Such therapeutic applications could have significant implications for healthcare, where virtual environments could be used for rehabilitation [68], therapy [69], and patient care [70]. Additionally, by enabling real-time interaction and multisensory engagement, VR enhances the immersive potential of metaverse applications, making them more impactful and effective. Research on virtual taste and smell technologies further expands the sensory dimensions of VR, which is essential for a more immersive metaverse experience [71]. By integrating taste and smell, the metaverse can replicate real-world environments with higher fidelity, which is crucial for applications in sectors such as education [72], gaming [72], and virtual tourism [73]. These technologies can enrich user experiences by offering deeper emotional and cognitive connections to the virtual world, in both leisure and therapeutic contexts.

Developing multisensory technologies, such as taste and smell in VR, is essential for expanding the metaverse’s immersive capabilities [74,75]. Incorporating sensory feedback beyond visual and auditory stimuli could transform user experiences across various applications, from entertainment to education and healthcare [76]. By enhancing the multisensory engagement, the metaverse can simulate real-world interactions more effectively, providing users with a richer, more connected virtual experience. Moreover, research into VR’s potential in therapeutic settings showcases the broader applications of immersive virtual worlds. The metaverse could provide virtual spaces where patients receive specialized, personalized treatment plans, leveraging VR’s ability to simulate realistic environments and offer real-time sensory feedback [77].

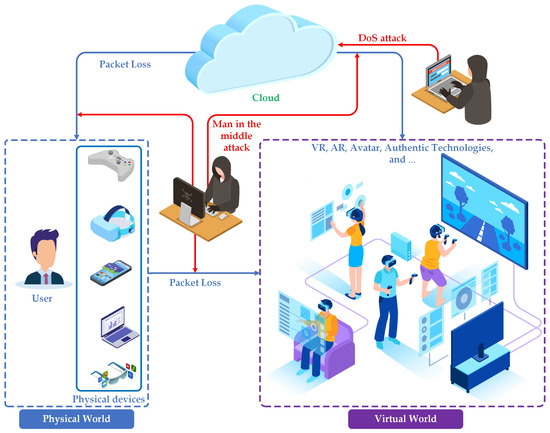

Security and privacy are among the top concerns in the metaverse, where vast amounts of personal data and digital assets are exchanged [78]. The immersive and persistent nature of the metaverse magnifies these concerns, as users’ real-world identities, behaviors, and biometric data, such as eye movements or facial expressions, may be tracked and stored [21]. Advanced cryptographic algorithms and robust security protocols that protect user data and secure transactions are therefore a fundamental requirement [79]. Zero-knowledge proofs and homomorphic encryption are also receiving growing attention as ways to keep user data private while still allowing transactions to proceed without requiring trust between the parties [80,81].
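To illustrate what homomorphic encryption offers, the sketch below implements textbook Paillier encryption, an additively homomorphic scheme: multiplying two ciphertexts yields an encryption of the sum of the plaintexts, so a server could, for example, total encrypted values without ever decrypting them. The primes are tiny toy values chosen for readability and provide no security; a real deployment would use a vetted library and a modulus of 2048 bits or more. Zero-knowledge proofs are not covered by this sketch.

```python
import math
import secrets

# Toy parameters: far too small to be secure, chosen only so the numbers stay readable.
P, Q = 17, 19
N = P * Q                     # public modulus n
N_SQ = N * N
G = N + 1                     # standard choice of generator g = n + 1
LAM = math.lcm(P - 1, Q - 1)  # private key lambda = lcm(p-1, q-1)
MU = pow(LAM, -1, N)          # with g = n + 1, mu = lambda^-1 mod n


def encrypt(m: int) -> int:
    """c = g^m * r^n mod n^2 for a random r coprime to n."""
    if not 0 <= m < N:
        raise ValueError("plaintext must be in [0, n)")
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """m = L(c^lambda mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    u = pow(c, LAM, N_SQ)
    return ((u - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts decrypts to m1 + m2 (mod n)."""
    return (c1 * c2) % N_SQ


c_sum = add_encrypted(encrypt(42), encrypt(58))
print(decrypt(c_sum))  # 100
```

The party holding only ciphertexts never sees 42 or 58, yet the key holder recovers their sum. Paillier supports addition only; schemes that also support multiplication are considerably more expensive.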

Several studies argue that the decentralized nature of the metaverse adds a layer of security by eliminating the need for a central authority [17,82]. However, decentralization also presents challenges, particularly in maintaining data integrity and preventing unauthorized access. Without a central body to enforce security standards, responsibility for safeguarding personal data is often spread across numerous stakeholders, which can leave the system open to malicious actors who gain control over parts of a decentralized network. Non-fungible tokens (NFTs) are also becoming increasingly popular and important to the metaverse economy. However, they carry risks of digital asset theft, counterfeit NFTs, and fraud, so strong identity verification systems and anti-fraud mechanisms are needed.

Studies also show that the quality of user experience is another critical factor in the success of the metaverse [83]. The immersive nature of VR and AR can significantly enhance user engagement, but it also brings challenges. Integrating haptic feedback into AR can improve the realism of virtual interactions [9]; however, delivering consistent and intuitive haptic feedback remains difficult because of network latency and resource allocation issues [84]. AI can make virtual environments more responsive and adaptive, and AI-driven avatars that understand and react to user inputs can create more engaging and personalized experiences. Ensuring that these AI systems operate seamlessly across different platforms and devices, however, remains a significant challenge.

Scalability has been highlighted as another major challenge in the development of the metaverse [85]. Current social VR platforms struggle to support large numbers of concurrent users. The bandwidth required to transmit high-resolution 3D content and real-time interactions can be immense, necessitating advanced networking techniques to ensure scalability. 5G and 6G technologies could address these scalability issues by providing ultra-low latency, high data rates, and enhanced reliability, and a proposed layered architecture for integrating 6G with the metaverse could help manage massive data traffic and support large-scale user interaction. However, deploying such infrastructure poses significant technical and economic challenges. Recently, researchers proposed Microverse [16], a task-oriented, scaled-down metaverse instance, as a practical approach given the limitations of current technologies [86,87].
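To make the bandwidth concern concrete, the sketch below estimates the raw bit rate of one live point-cloud stream and how naive all-to-all distribution scales with the number of participants. Every figure (points per frame, bytes per point, frame rate) is a hypothetical assumption for illustration, and compression, which real systems rely on heavily, is ignored.

```python
def stream_mbps(points_per_frame: int, bytes_per_point: int, fps: int) -> float:
    """Uncompressed bit rate of a point-cloud stream, in megabits per second."""
    return points_per_frame * bytes_per_point * 8 * fps / 1e6


def all_to_all_streams(users: int) -> int:
    """Streams a server must forward if every user receives every other user's stream."""
    return users * (users - 1)


# Hypothetical stream: 100k points/frame, 15 bytes/point (xyz as 32-bit floats + rgb), 30 fps.
per_stream = stream_mbps(100_000, 15, 30)
print(f"one stream: {per_stream:.0f} Mbit/s")
for n in (2, 10, 100):
    total_gbps = all_to_all_streams(n) * per_stream / 1000
    print(f"{n:4d} users -> {all_to_all_streams(n):6d} streams, {total_gbps:,.1f} Gbit/s")
```

Under these assumptions a single stream needs about 360 Mbit/s, and forwarding load grows quadratically with the number of users, which is why scalable designs typically combine compression with sending each user only part of the shared scene.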

According to several studies, data integration is essential for creating seamless and coherent virtual experiences in the metaverse [21,88]. Edge computing helps meet the real-time demands of data integration by processing data closer to the user, which reduces latency and improves the quality of experience. However, ensuring consistent and accurate data synchronization across distributed edge nodes is complex. Integrating diverse data sources, including IoT devices, digital twins, and AI systems, into the metaverse enables rich and dynamic virtual environments, but it requires robust frameworks to manage data interoperability and consistency.
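One way to detect the synchronization conflicts mentioned above is to tag each update with a vector clock, which records how many updates from each edge node a version reflects. The sketch below uses this standard distributed-systems technique for illustration; it is not taken from the cited works. It classifies two versions as ordered or concurrent, and concurrent versions need an explicit merge rule. Node names are hypothetical.

```python
def increment(clock: dict[str, int], node: str) -> dict[str, int]:
    """Return a copy of the clock with this node's counter advanced (a local update)."""
    new = dict(clock)
    new[node] = new.get(node, 0) + 1
    return new


def merge(a: dict[str, int], b: dict[str, int]) -> dict[str, int]:
    """Element-wise maximum, used when a node incorporates another node's state."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in a.keys() | b.keys()}


def compare(a: dict[str, int], b: dict[str, int]) -> str:
    """Return 'before', 'after', 'equal', or 'concurrent' (a conflict to resolve)."""
    nodes = a.keys() | b.keys()
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"


base = increment({}, "edge-A")    # edge-A creates a virtual object
on_a = increment(base, "edge-A")  # edge-A moves it
on_b = increment(base, "edge-B")  # edge-B recolours it without seeing the move
print(compare(base, on_a))               # before
print(compare(on_a, on_b))               # concurrent
print(compare(merge(on_a, on_b), on_b))  # after
```

Vector clocks only detect that two edge nodes diverged; deciding how to reconcile the move and the recolouring is left to an application-specific merge policy.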

Data compatibility is another major challenge for the interoperability of different systems within the metaverse [88]. The lack of standardized data formats and protocols can hinder seamless interaction between virtual environments, so ensuring data compatibility requires common standards and protocols that can be adopted across platforms and technologies. Cross-platform compatibility is likewise essential for allowing users to access the metaverse from different devices and platforms [89]. The main difficulty is delivering a consistent user experience across devices with varying capabilities, and making applications and content accessible and functional on different platforms requires significant effort in standardization and optimization.
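Until common formats are widely adopted, platforms often translate between formats at their boundaries. As a sketch of that adapter pattern, the code maps two hypothetical vendor payloads, which describe the same kind of temperature reading with different field names and units, onto a single shared record. The vendor formats and the common schema are invented for illustration and are not an existing standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorRecord:
    """Hypothetical common schema: SI-style units, ISO 8601 UTC timestamp."""
    sensor_id: str
    timestamp: str
    temperature_c: float


def from_vendor_a(payload: dict) -> SensorRecord:
    # Hypothetical vendor A: {"id": ..., "ts": ..., "tempC": ...}
    return SensorRecord(payload["id"], payload["ts"], float(payload["tempC"]))


def from_vendor_b(payload: dict) -> SensorRecord:
    # Hypothetical vendor B reports Fahrenheit under different keys.
    celsius = (float(payload["temperature_f"]) - 32.0) * 5.0 / 9.0
    return SensorRecord(payload["device"], payload["time_utc"], round(celsius, 2))


ADAPTERS = {"vendor-a": from_vendor_a, "vendor-b": from_vendor_b}


def normalize(source: str, payload: dict) -> SensorRecord:
    """Route a payload to its adapter; unknown sources or missing fields raise ValueError."""
    try:
        return ADAPTERS[source](payload)
    except KeyError as exc:
        raise ValueError(f"unsupported source or missing field: {exc}") from exc


a = normalize("vendor-a", {"id": "s1", "ts": "2024-05-01T12:00:00Z", "tempC": 21.5})
b = normalize("vendor-b", {"device": "s2", "time_utc": "2024-05-01T12:00:00Z", "temperature_f": 70.7})
print(a)
print(b)
```

Every new format requires another adapter, so the number of translations grows with each vendor; a shared standard removes that cost, which is the argument for common data formats made above.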

Interoperability, which enables seamless transitions and interactions across different virtual worlds, is another active area of metaverse research [90]. AI and machine learning can facilitate interoperability by enabling systems to understand and adapt to different environments [91]. However, true interoperability requires collaboration among developers, platform providers, and standardization bodies to establish common frameworks and protocols. It also requires integrating diverse technologies, such as blockchain, AI, and edge computing, through standardized interfaces [19]. Without interoperability, the metaverse risks becoming fragmented into isolated virtual environments that cannot communicate with each other.

The metaverse holds significant potential for transforming education by creating immersive and interactive learning environments [92]. It can host virtual classrooms where students and teachers interact in a shared virtual space, enhancing learning through interactive simulations and collaborative projects. The flexibility and accessibility of the metaverse can also democratize education by making high-quality learning resources available to a global audience. Evidence on immersive technologies such as social virtual reality environments (SVREs) and AR can inform how distance learning in the metaverse is designed. Research highlights that SVREs foster deep and meaningful learning (DML) by enabling collaborative, authentic interactions among learners [93]. These environments allow students to engage in social and cognitive activities that parallel in-person experiences, addressing traditional distance learning challenges such as isolation and disengagement [94]. AR and VR technologies further support DML through simulations that encourage active, reflective, and goal-oriented learning, which enhances knowledge retention and motivation. Moreover, studies indicate that integrating SVREs into distance learning can foster a strong sense of presence and co-presence, leading to richer educational experiences that replicate the dynamics of physical classrooms [93].

Beyond technological advancements, the metaverse raises several social and ethical considerations [95], including user addiction, digital harassment, and the equitable representation of avatars. The immersive nature of the metaverse can exacerbate these issues, necessitating guidelines and regulations that protect users and promote a healthy virtual environment [96]. Additionally, integrating AI and blockchain raises data privacy and security concerns, which must be addressed to ensure user trust and safety in the metaverse.

4. State-of-the-Art Real-Time Metaverse

4.1. Integration of the Physical and Virtual Worlds

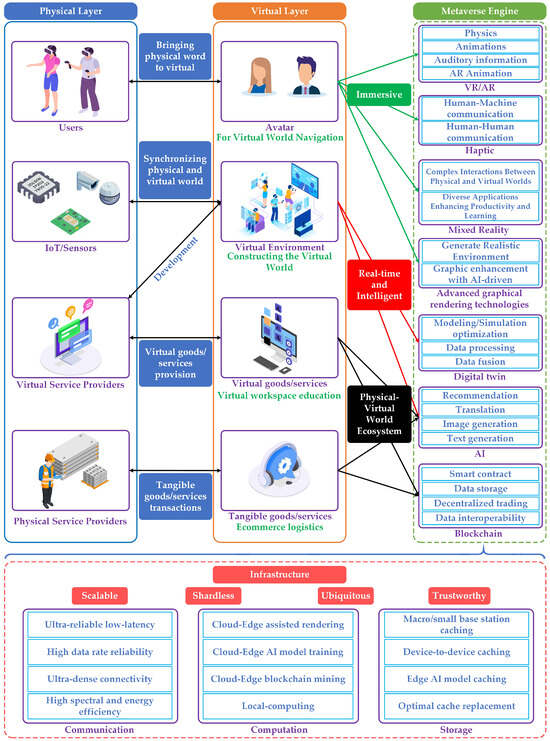

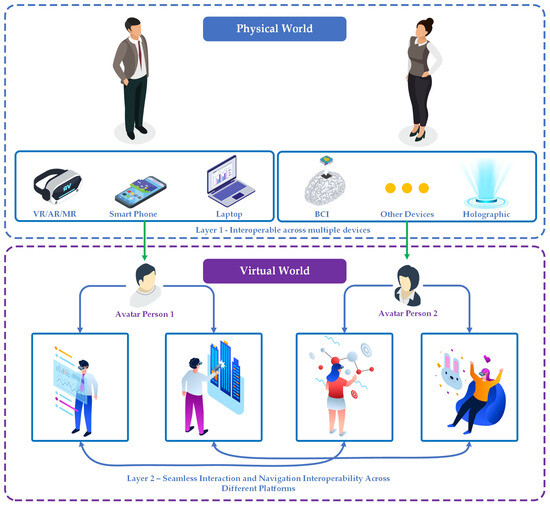

Figure 4 presents a detailed view of the architecture for integrating the physical, virtual, and real-time metaverse layers within a digital ecosystem, highlighting how the physical and virtual environments are kept synchronized. The Physical layer comprises four main components: users, IoT/sensors, virtual service providers, and physical service providers. Users engage with the virtual world through various devices, and IoT/sensors facilitate synchronization between the physical and virtual worlds [15,97]. Virtual service providers offer digital goods and services, whereas physical service providers handle transactions involving tangible goods and services. Interaction and synchronization between these physical and virtual entities are therefore central to a seamless user experience.

Figure 4.

Metaverse architecture.

The Virtual layer includes avatars for virtual navigation, virtual environments for constructing the virtual world, and virtual goods/services such as virtual workspaces and education [21]. The Virtual layer bridges the gap between the physical and virtual worlds by providing immersive and interactive experiences. At its core, the real-time metaverse leverages data from IoT devices, sensors, and service providers to continuously update the virtual world in alignment with physical events [98]. The ability to “bring the physical world to the virtual” depends on deploying advanced sensors. These sensors capture critical information about real-world environments, such as the spatial layout, temperature, and motion of objects [99]. The data are then transmitted to cloud processing centers via high-speed communication technologies, enabling virtual spaces to be updated in real time [100]. Once collected, the data undergo data fusion, a process in which inputs from different sensors are combined into a single, cohesive representation of the physical environment in the virtual world [101,102]. Fusing these diverse data types yields a rich, detailed, and accurate 3D model of the physical environment [103]. Because this processing takes place in the cloud, the two realms can be kept synchronized in real time.
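Data fusion can take many forms. One of the simplest, shown here only as an illustrative sketch, is inverse-variance weighting: when two independent sensors estimate the same quantity, each estimate is weighted by the inverse of its noise variance, so the more precise sensor dominates and the fused variance is lower than either input. The `Measurement` type, the `fuse` function, and the sensor readings and variances below are hypothetical assumptions, not values from the cited works.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # estimated quantity, e.g. distance to an object in metres
    variance: float  # sensor noise variance (assumed known from calibration)


def fuse(measurements):
    """Inverse-variance weighted fusion of independent estimates of one quantity."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance for m in measurements]
    total = sum(weights)
    value = sum(w * m.value for w, m in zip(weights, measurements)) / total
    return Measurement(value=value, variance=1.0 / total)  # fused variance shrinks


# Hypothetical readings: a precise LiDAR range and a noisier radar range.
lidar = Measurement(value=10.02, variance=0.0004)
radar = Measurement(value=10.30, variance=0.04)
fused = fuse([lidar, radar])
print(f"{fused.value:.3f} m, variance {fused.variance:.6f}")
```

Full 3D reconstruction pipelines combine many such estimates over space and time (for example with Kalman-style filters), but the weighting principle is the same.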

4.2. The Role of the Metaverse Engine

The metaverse engine is crucial for maintaining the intelligence and real-time nature of the virtual environment, as shown in Figure 4. This engine incorporates a variety of advanced technologies [104], including VR/AR, haptic feedback, digital twin (DT), AI, blockchain, mixed reality [8], and advanced graphical rendering [105]. These features allow users to interact with the digital world in a way that feels natural and immersive. The metaverse engine also ensures that the virtual environment responds dynamically to user inputs and real-world changes. For example, in an industrial setting, IoT sensors embedded in machinery could transmit live data about the status and operation of physical assets to the metaverse [106]. The engine would interpret this information and update the virtual workspace to reflect real-time changes in equipment status, such as a machine overheating or requiring maintenance. AI-driven systems further enhance the realism of the environment by predicting how objects might behave in the future, ensuring the virtual world is not only reactive but also proactive [107].
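The overheating example can be made concrete with a minimal sketch of how an engine might map a stream of IoT temperature readings onto a machine's status in the virtual workspace. It assumes a fixed temperature limit for the reactive check and uses a least-squares linear trend as a stand-in for the predictive (proactive) models mentioned above; `MachineTwin`, the 90 °C limit, the window size, and the 60 s horizon are all hypothetical.

```python
from collections import deque


class MachineTwin:
    """Hypothetical digital-twin state for one machine, fed by IoT temperature readings."""

    def __init__(self, limit_c, window=5, horizon_s=60.0):
        self.limit_c = limit_c                 # assumed overheating threshold (deg C)
        self.horizon_s = horizon_s             # flag maintenance if limit is predicted within this time
        self.readings = deque(maxlen=window)   # most recent (time_s, temp_c) pairs

    def seconds_to_limit(self):
        """Extrapolate a least-squares linear trend; None if there is no rising trend."""
        if len(self.readings) < 2:
            return None
        ts = [t for t, _ in self.readings]
        ys = [y for _, y in self.readings]
        t_mean, y_mean = sum(ts) / len(ts), sum(ys) / len(ys)
        denom = sum((t - t_mean) ** 2 for t in ts)
        if denom == 0:
            return None
        slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys)) / denom
        if slope <= 0:
            return None
        return (self.limit_c - ys[-1]) / slope

    def update(self, time_s, temp_c):
        """Ingest one reading and return the status to show in the virtual workspace."""
        self.readings.append((time_s, temp_c))
        if temp_c >= self.limit_c:
            return "overheating"          # reactive: limit already exceeded
        eta = self.seconds_to_limit()
        if eta is not None and eta < self.horizon_s:
            return "maintenance_soon"     # proactive: limit predicted soon
        return "normal"


twin = MachineTwin(limit_c=90.0)
samples = [(0, 70.0), (10, 71.0), (20, 72.0), (30, 80.0), (40, 91.0)]
statuses = [twin.update(t, temp) for t, temp in samples]
print(statuses)  # ['normal', 'normal', 'normal', 'maintenance_soon', 'overheating']
```

The fourth reading is still below the limit, but the steepening trend predicts the limit within about 32 s, so the twin is flagged before the machine actually overheats.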

AI models play a critical role in maintaining the intelligence of the real-time metaverse [108]. These models are responsible for analyzing sensor data, detecting patterns, and automating processes within the virtual environment [24]. For example, natural language processing (NLP) enables seamless human–human communication in virtual spaces, while image generation models can create realistic textures and visuals from raw data. The metaverse engine further personalizes the user experience by suggesting virtual environments, services, or goods based on real-time preferences and interactions.

4.3. Communication and Computational Infrastructure

For the real-time metaverse to function efficiently, robust infrastructure is necessary. Figure 4 highlights the key infrastructure components (communication, computation, and storage) that support the real-time data flow between the physical and virtual worlds. Scalability is essential, as the system must handle ultra-dense connectivity while sustaining high data rates, high reliability, and low latency. Technologies like 5G and edge computing are pivotal in ensuring that real-time data can be collected and transmitted with minimal delay [109]. These communication technologies carry real-time data and control signals between sensors, robots, and user interfaces with low latency and high reliability [110]. Real-time connectivity is essential for maintaining the interactive nature of the real-time metaverse, allowing users to experience immediate responses to their actions.

In addition to being scalable, the infrastructure must be ubiquitous [37] and shardless [111]. Trustworthiness [80] is another critical factor in real-time metaverse systems, as users must trust the data and services they interact with. Localized computing, including cloud-edge-assisted rendering, cloud-edge AI model training, and blockchain mining, is essential for delivering real-time experiences at scale, whether users are in well-connected urban areas or remote locations with limited infrastructure [90]. Blockchain also provides decentralized storage, ensuring secure and verifiable transactions within the metaverse, from virtual goods to real-world e-commerce logistics.

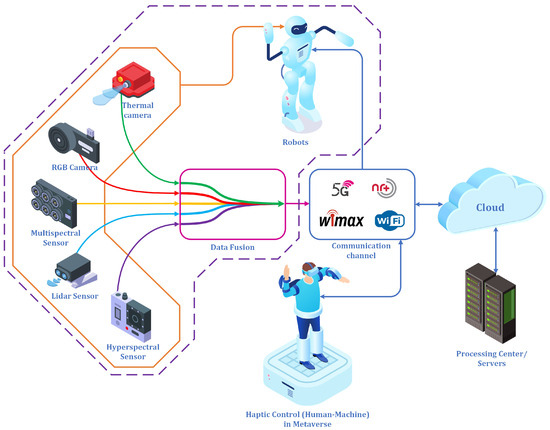

4.4. Data Fusion and Real-Time Interaction

Figure 5 elaborates on the technical process of data fusion within the real-time metaverse. A variety of sensors, such as radar [112], LiDAR [113], RGB cameras [114], multispectral sensors [115], and hyperspectral sensors [25,116], collect data. Over high-speed communication links, these data are transmitted to the cloud, where they are fed into the data fusion process [117,118]. For instance, LiDAR provides depth and spatial information, while RGB cameras contribute visual details such as color and texture [119]. Thermal cameras capture temperature differences, and multispectral or hyperspectral sensors gather data across different wavelengths, offering unique insights into material properties [120]. Fusing these sensor streams yields an integrated, high-fidelity virtual model that accurately reflects the physical world [121]. The fused data are then transmitted to robots and haptic control systems [122], enabling physical and virtual objects to interact in real time. For instance, an avatar in the metaverse could control a robot in the real world, with haptic feedback systems giving the user real-world sensations based on the robot’s actions. Haptic controls play a vital role in ensuring that users can feel and interact with virtual objects as if they were physical [76]. This real-time feedback loop enables greater immersion and interaction, bridging the gap between the virtual and real worlds. In combination with AI, these systems allow complex interactions between physical and virtual entities, whether for remote collaboration, education, or e-commerce applications.
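One common way to combine LiDAR depth with RGB color, offered here as a sketch rather than the pipeline of the cited works, is to transform each LiDAR point into the camera frame using an extrinsic calibration (rotation `R`, translation `t`) and then project it with a pinhole camera model (focal lengths `fx`, `fy`, principal point `cx`, `cy`). The calibration values, image, and points below are hypothetical, lens distortion is ignored, and the camera is assumed to look along +z.

```python
def project_point(p, R, t, fx, fy, cx, cy):
    """Project a LiDAR point into pixel coordinates, or return None if it is behind the camera."""
    # Extrinsic transform into the camera frame: p_cam = R p + t (camera looks along +z).
    x, y, z = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return None
    # Pinhole intrinsics (lens distortion ignored in this sketch).
    return fx * x / z + cx, fy * y / z + cy


def colorize(points, image, R, t, fx, fy, cx, cy):
    """Attach the RGB value of the pixel that each visible point projects onto."""
    height, width = len(image), len(image[0])
    colored = []
    for p in points:
        uv = project_point(p, R, t, fx, fy, cx, cy)
        if uv is None:
            continue  # point lies behind the camera
        u, v = round(uv[0]), round(uv[1])
        if 0 <= u < width and 0 <= v < height:
            colored.append((p, image[v][u]))  # image indexed as [row][column]
    return colored


# Hypothetical setup: identity extrinsics and a tiny 5 x 5 synthetic image.
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = [0.0, 0.0, 0.0]
image = [[(u * 50, v * 50, 0) for u in range(5)] for v in range(5)]
points = [(0.0, 0.0, 10.0), (0.1, 0.0, 10.0), (0.0, 0.0, -1.0), (5.0, 0.0, 1.0)]
result = colorize(points, image, R, t, fx=100.0, fy=100.0, cx=2.0, cy=2.0)
for point, rgb in result:
    print(point, rgb)
```

Only the first two points survive: the third lies behind the camera and the fourth projects outside the image, which is why colorized point clouds typically cover only the camera's field of view.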

Figure 5.

Real-time metaverse in a closed-loop system.

Cloud-edge computing, as depicted, ensures that latency-critical data processing occurs as close as possible to the source, reducing the latency involved in transferring data to central servers [123]. Data and commands that require heavier computation are processed in centralized servers or cloud-based processing centers, which support complex computations and large-scale data storage, enabling the system to handle vast amounts of information while still delivering real-time responses. By leveraging cloud integration, the system benefits from scalable processing power and robust data management capabilities.
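A rough latency budget shows why proximity matters. Light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200 km per millisecond, so distance alone sets a floor on round-trip time that faster servers cannot remove. The sketch below compares a hypothetical edge server 10 km away with a hypothetical, faster cloud GPU 1500 km away; the distances and processing times are assumptions, and queuing, radio access, and routing detours are ignored.

```python
FIBER_KM_PER_MS = 200.0  # light in optical fiber covers roughly 200 km per millisecond


def round_trip_ms(distance_km, processing_ms):
    """Round-trip propagation delay over fiber plus server-side processing time."""
    propagation_ms = 2.0 * distance_km / FIBER_KM_PER_MS
    return propagation_ms + processing_ms


# Hypothetical figures: a modest edge server nearby versus a faster but distant cloud GPU.
edge = round_trip_ms(distance_km=10, processing_ms=8.0)
cloud = round_trip_ms(distance_km=1500, processing_ms=3.0)
print(f"edge: {edge:.1f} ms, cloud: {cloud:.1f} ms")  # edge: 8.1 ms, cloud: 18.0 ms
```

Even with more than twice the processing time, the edge path wins here because the cloud path spends 15 ms on propagation alone, which is why latency-critical work is pushed toward the edge.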

5. Technological Infrastructure

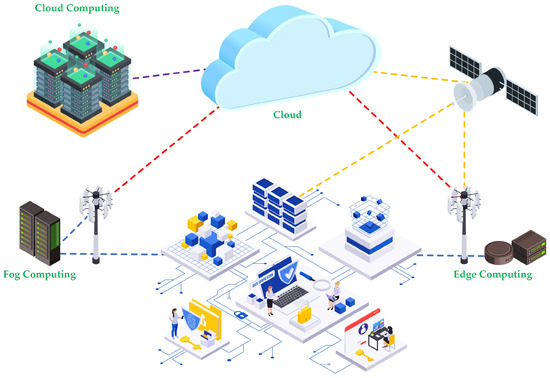

The technological infrastructure of the metaverse comprises a sophisticated, interconnected ecosystem of advanced technologies designed to support immersive, real-time virtual experiences [90]. Its foundation includes high-speed, low-latency networks such as 5G and fiber optics, powerful computing hardware such as graphics processing units (GPUs) and cloud computing resources, and robust data storage solutions [124]. Together, these components make the metaverse a seamless, scalable, and interactive digital universe capable of supporting applications ranging from gaming and social interaction to professional and educational environments. Key enablers include high-performance computing, cloud and fog architectures, and edge devices, as shown in Figure 6.

Figure 6.

Structures of computing in the network.

5.1. High-Performance Computing (HPC)

High-performance computing (HPC) leverages the combined power of supercomputers, computing clusters, and parallel processing techniques to tackle complex computational problems beyond the capabilities of standard desktop computers [125]. HPC systems are essential in various domains, such as scientific research, design engineering, and data analysis, enabling large-scale simulations, systems modeling, and processing of massive datasets. Supercomputers and HPC clusters of interconnected nodes achieve very high throughput by dividing tasks into smaller sub-problems that are solved simultaneously. Advanced data centers equipped with high-performance servers and GPUs are essential for processing the vast amounts of data required for real-time metaverse interactions [105]. Specialized software and tools, such as MPI (message passing interface), OpenMP (open multi-processing), and CUDA (compute unified device architecture), support the development and execution of HPC applications, ensuring efficient use of the available computing resources [126]. Applications range from climate modeling and molecular dynamics in scientific research to computational fluid dynamics and structural analysis in engineering, as well as big data analytics and machine learning in data-intensive fields.
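The divide-and-solve pattern described above can be sketched in a few lines: the data are split into contiguous chunks (domain decomposition), each worker processes its chunk independently, and the partial results are combined in a reduction step, analogous to the scatter and reduce collectives in MPI. This is an illustration of the pattern only: the function names are hypothetical, and because CPython threads share a global interpreter lock, this code does not deliver real CPU speedup the way MPI processes on separate nodes would.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Domain decomposition: cut data into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def local_work(chunk):
    """Work done independently by each worker (here, a sum of squares)."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    chunks = split(data, workers)                          # scatter
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(local_work, chunks))      # independent sub-problems
    return sum(partials)                                   # reduce


data = list(range(1_000))
print(parallel_sum_of_squares(data))  # 332833500
```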

Despite its immense capabilities, HPC faces several challenges as a foundation for the real-time metaverse, including scalability, energy consumption, cost, and complexity [127]. Scaling applications efficiently across thousands of processors requires optimizing code to minimize communication overhead. The significant power consumption of supercomputers and large clusters necessitates energy-efficient designs. The high costs of HPC infrastructure and the specialized knowledge required to develop and maintain applications further complicate its adoption. However, the future of HPC is promising, with advancements in exascale computing, quantum computing, and AI integration. Exascale systems will enable even more complex simulations and data analyses, while quantum computing could revolutionize fields like cryptography and materials science [128]. Combining HPC with AI and machine learning will drive innovations across various domains, and research into energy-efficient technologies aims to reduce the environmental impact of HPC.
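Amdahl's law, a standard result not drawn from the cited works, illustrates why scaling to thousands of processors is hard. If a fraction f of a workload parallelizes perfectly and the remainder (including serial communication and synchronization) does not, the speedup on p processors is S(p) = 1 / ((1 − f) + f/p), which can never exceed 1 / (1 − f). The 95% parallel fraction below is an assumed value for illustration.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Ideal speedup when only `parallel_fraction` of the work can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if processors < 1:
        raise ValueError("need at least one processor")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


# Assumed workload: 95% parallelizable, so speedup is capped at 1 / 0.05 = 20.
for p in (1, 16, 1024, 65536):
    print(p, round(amdahl_speedup(0.95, p), 2))
```

Going from 1024 to 65536 processors barely moves the speedup, which is why reducing the serial and communication share of a code often matters more than adding hardware.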

5.2. Cloud Computing

Cloud computing is a transformative technology that allows individuals and organizations to access and store data, applications, and computing power over the internet rather than relying on local servers or personal devices [129]. Cloud technology offers several key advantages, including scalability, flexibility, cost-efficiency, and accessibility [130]. Cloud services are typically categorized into three main types: infrastructure as a service (IaaS) [131], which provides virtualized computing resources over the internet; platform as a service (PaaS) [132], which offers hardware and software tools for application development; and software as a service (SaaS) [133], which delivers software applications over the internet on a subscription basis [134]. Major cloud service providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) offer robust solutions that cater to a wide range of needs, from startups requiring minimal resources to enterprises needing extensive infrastructure and advanced services.
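Scalability in the cloud is often realized by autoscaling: adding or removing server replicas as load changes. A widely used target-tracking rule, the one documented for the Kubernetes Horizontal Pod Autoscaler, sets the desired replica count to ceil(current replicas × current metric / target metric). The sketch below implements only that proportional rule with hypothetical bounds; production autoscalers add tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=100):
    """Target-tracking rule: scale replicas in proportion to observed vs. target utilization."""
    if target_utilization <= 0:
        raise ValueError("target_utilization must be positive")
    desired = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, desired))  # respect configured bounds


# Hypothetical metaverse session servers running at 90% and 30% CPU with a 60% target.
print(desired_replicas(4, current_utilization=90, target_utilization=60))  # 6 (scale out)
print(desired_replicas(4, current_utilization=30, target_utilization=60))  # 2 (scale in)
```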

Cloud computing has revolutionized various industries by enabling more efficient and innovative business models. For instance, in the healthcare sector, cloud computing facilitates the secure storage and sharing of patient data, supports telehealth services, and enhances collaborative research through data analytics [135]. In finance, it allows for real-time transaction processing and advanced fraud detection. Additionally, cloud computing supports the growing field of remote work by providing seamless access to applications and data from any location, fostering collaboration and productivity [136]. Despite its many benefits, cloud computing also poses challenges such as data security, privacy concerns, and dependency on internet connectivity. However, ongoing advancements in cloud security protocols and hybrid cloud solutions, which combine private and public cloud resources, are addressing these issues and enhancing the reliability and security of cloud services.

5.3. Edge and Fog Computing

Edge and fog computing are paradigms that enhance data processing and analysis capabilities closer to the source of data generation, reducing latency and improving efficiency [137]. Edge computing involves processing data directly on devices or near the data source, such as sensors, IoT devices, or local servers [138]. The edge approach minimizes the need to send data to centralized cloud servers, reducing latency and bandwidth usage. Edge computing applications include real-time analytics, autonomous vehicles, and smart cities, where immediate data processing is crucial. For instance, in autonomous cars, edge computing allows for rapid decision-making based on real-time data from sensors, enhancing safety and performance.
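A minimal sketch of how an edge node can cut bandwidth: instead of forwarding every raw reading to the cloud, it reduces each time window to a compact summary and forwards individual values only when they cross an alert threshold. The `Summary` fields, the threshold, and the sample readings are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    count: int
    mean: float
    minimum: float
    maximum: float


def summarize_window(readings, alert_threshold):
    """Reduce one window of raw readings to a compact summary plus out-of-range values."""
    if not readings:
        raise ValueError("empty window")
    summary = Summary(
        count=len(readings),
        mean=sum(readings) / len(readings),
        minimum=min(readings),
        maximum=max(readings),
    )
    alerts = [r for r in readings if r > alert_threshold]  # forwarded immediately
    return summary, alerts


window = [20.0, 21.0, 22.0, 35.0]  # hypothetical temperature samples (deg C)
summary, alerts = summarize_window(window, alert_threshold=30.0)
print(summary, alerts)
```

Four raw values become one summary record plus a single alert; with high-rate sensors the reduction is far larger, which is the bandwidth saving the paragraph describes.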

Fog computing, on the other hand, extends the concept of edge computing by providing a distributed computing infrastructure that includes edge devices, local servers, and potentially the cloud [139]. The fog architecture acts as an intermediary layer that processes data before it reaches the cloud, providing additional storage and computational resources closer to the data source [140]. The fog–edge layered approach benefits applications requiring real-time processing and more substantial computational power or data aggregation. Fog computing is beneficial in scenarios like industrial IoT, where data from numerous devices needs to be aggregated and analyzed swiftly to optimize operations and maintenance. By distributing resources across multiple layers, fog computing improves overall system efficiency, scalability, and reliability.
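Aggregation at the fog layer can be illustrated by merging per-device summaries without access to the raw data: counts add, extremes combine with min and max, and the overall mean is the count-weighted mean of the device means. This is a sketch under the assumption that edge nodes report count, mean, minimum, and maximum; the `Summary` type and the reported values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Summary:
    count: int
    mean: float
    minimum: float
    maximum: float


def merge(summaries):
    """Combine per-device summaries at a fog node without access to the raw readings."""
    if not summaries:
        raise ValueError("nothing to merge")
    total = sum(s.count for s in summaries)
    mean = sum(s.count * s.mean for s in summaries) / total  # count-weighted mean
    return Summary(
        count=total,
        mean=mean,
        minimum=min(s.minimum for s in summaries),
        maximum=max(s.maximum for s in summaries),
    )


# Hypothetical reports from two edge devices.
edge_a = Summary(count=4, mean=24.5, minimum=20.0, maximum=35.0)
edge_b = Summary(count=2, mean=10.0, minimum=9.0, maximum=11.0)
print(merge([edge_a, edge_b]))
```

Because the merge is associative, fog nodes can apply it repeatedly up the hierarchy and the cloud receives exactly the statistics it would have computed from all raw readings.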

Figure 6 illustrates a comprehensive architecture that integrates cloud computing, fog computing, and edge computing. At the center of the diagram is the cloud, symbolizing the centralized and extensive data processing capabilities of cloud computing. Cloud computing is depicted with multiple connections, including data centers and satellites, highlighting its broad reach and ability to handle significant computational tasks and data storage. Servers and network infrastructure placed closer to the data sources, such as IoT devices and sensors, represent fog computing. The fog layer aims to reduce latency by processing data near its origin before sending it to the cloud. Edge computing, depicted with smaller, decentralized servers and network nodes, is even closer to the end-users and devices. It emphasizes real-time data processing and immediate response actions, crucial for applications requiring low latency and high reliability. Integrating these computing paradigms ensures balanced and efficient data handling from the core cloud to the network’s edge, optimizing performance and resource utilization.

5.4. 5G Communication Technology

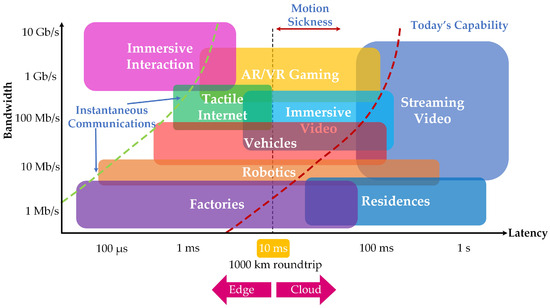

Fifth-generation (5G) communication technology represents a significant leap in mobile communications, offering faster data speeds, lower latency, and greater connectivity compared to previous generations [141].

With theoretical peak data rates of up to 10 Gbps and latency as low as 1 millisecond, 5G enables a wide range of applications that require real-time data transmission and high bandwidth. These include enhanced mobile broadband (eMBB), ultra-reliable low-latency communications (URLLC), and massive machine-type communications (mMTC) [142]. Industries such as healthcare, automotive, and entertainment are poised to benefit immensely from 5G. For example, in healthcare, 5G supports telemedicine and remote surgery by providing the reliable, high-speed connections needed to transmit high-definition video and large medical data files in real time.
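A short calculation shows why data rate matters for streaming sensor data into the metaverse. The sketch computes serialization time (payload bits divided by link rate) for a hypothetical LiDAR frame of 100,000 points at 16 bytes each; the frame size and link rates are assumptions, 10 Gbps is a theoretical peak rather than a typical user rate, and protocol overhead and compression are ignored.

```python
def transmit_ms(payload_bytes, rate_bps):
    """Serialization time for a payload at a given link rate (no protocol overhead)."""
    return payload_bytes * 8 / rate_bps * 1_000


# Hypothetical LiDAR frame: 100,000 points x 16 bytes (x, y, z, intensity as float32).
frame_bytes = 100_000 * 16
for label, rate in [("100 Mbps", 100e6), ("1 Gbps", 1e9), ("10 Gbps", 10e9)]:
    print(f"{label}: {transmit_ms(frame_bytes, rate):.2f} ms")
```

At an assumed rate of ten frames per second (a 100 ms budget per frame), a 100 Mbps link would need about 128 ms per frame and fall behind, whereas gigabit-class links leave most of the budget for processing and rendering.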

Beyond 5G, the focus is on developing technologies like 6G, which aims to provide even higher speeds, lower latency, and more extensive connectivity [59]. 6G is expected to integrate advanced technologies such as artificial intelligence, machine learning, and blockchain to enhance network management, security, and efficiency. Potential applications of 6G include holographic communications, advanced AR and VR, and ubiquitous IoT connectivity, enabling smart environments and autonomous systems to operate seamlessly [143]. Research and development in 6G technology are exploring new spectrum bands, such as terahertz frequencies, to achieve these ambitious goals. As society moves toward 6G, the focus will also be on sustainable and energy-efficient solutions to support the ever-growing demand for data and connectivity.

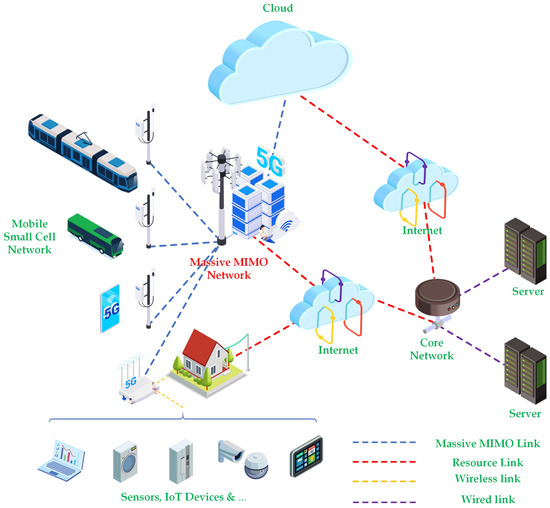

Figure 7 shows a 5G network architecture that integrates various components and technologies into a robust communication system. At its core is a massive multiple-input multiple-output (MIMO) network, which connects to other resources through wireless and wired links [144]. The cloud provides extensive data processing and storage capabilities and is connected to the core network and servers via wired links. The internet links the core network and servers to broader online resources. The mobile small cell network, including 5G-enabled devices, ensures coverage and connectivity for mobile users. The edge network includes sensors, IoT devices, and connected appliances, which receive and send data through wireless links. A residential setup is also included, demonstrating the network’s ability to provide high-speed internet access to homes.

Figure 7.

A general 5G cellular network architecture.

5.5. Storage Technology

Storage technology plays a critical role in the real-time metaverse by ensuring fast, secure, and efficient data handling for continuous immersive experiences [145]. As the metaverse involves interactions between physical and virtual environments, data from various sources such as sensors, edge devices, and AI systems must be cached and retrieved in real-time [146]. Technologies like macro/small base station caching [147] and device-to-device caching [148] help reduce latency by storing frequently accessed data closer to the user, ensuring faster retrieval times. This is essential in high-demand environments where real-time responsiveness directly impacts the user experience.
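The benefit of caching close to the user can be quantified with the standard expected-latency relation: if a fraction h of requests (the hit ratio) is served from a nearby cache with latency t_edge and the rest from a distant origin with latency t_origin, the mean latency is h·t_edge + (1 − h)·t_origin. The 5 ms and 40 ms figures below are hypothetical.

```python
def expected_latency_ms(hit_ratio, edge_ms, origin_ms):
    """Mean access latency when a fraction `hit_ratio` of requests hits a nearby cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    return hit_ratio * edge_ms + (1.0 - hit_ratio) * origin_ms


# Hypothetical: 5 ms from a base-station cache, 40 ms from a distant origin server.
for h in (0.0, 0.5, 0.8, 0.95):
    print(h, round(expected_latency_ms(h, edge_ms=5.0, origin_ms=40.0), 2))
```

Raising the hit ratio from 0 to 0.8 cuts mean latency from 40 ms to 12 ms, which is why cache placement and replacement policy matter so much for responsiveness.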

In addition to reducing latency, efficient storage mechanisms like edge AI model caching contribute to the real-time metaverse’s ability to manage large-scale, complex interactions without overwhelming the network [149]. Edge AI model caching allows real-time AI computations to occur closer to the user by caching AI models at edge nodes, which improves performance and reduces the load on central servers [150]. This approach enhances scalability and ensures that the system can support a high number of simultaneous interactions without performance degradation, a necessity in the immersive and constantly evolving world of the real-time metaverse.

Furthermore, optimal cache replacement is crucial in ensuring that the most relevant and frequently accessed data remains readily available, while outdated or less important data are efficiently replaced [151]. As the real-time metaverse generates and processes massive amounts of data, intelligent cache management prevents storage bottlenecks and ensures the availability of real-time data [152]. This ensures seamless transitions and interactions within the real-time metaverse, allowing users to experience smooth, uninterrupted virtual environments while maintaining the overall system’s trustworthiness and performance.
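As a concrete reference point, the sketch below implements least-recently-used (LRU) replacement, a common heuristic policy that evicts the item not accessed for the longest time. It is not the optimal policy discussed in the cited works: the theoretically optimal offline policy (Belady's algorithm) requires knowledge of future requests. The class name and the cached keys are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items = OrderedDict()  # ordered from least to most recently used

    def get(self, key):
        if key not in self._items:
            return None              # cache miss: caller fetches from origin
        self._items.move_to_end(key) # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict least recently used


cache = LRUCache(capacity=2)
cache.put("scene_tile_a", 1)   # hypothetical cached assets
cache.put("scene_tile_b", 2)
cache.get("scene_tile_a")      # touch a, so b becomes least recently used
cache.put("scene_tile_c", 3)   # evicts scene_tile_b
print(cache.get("scene_tile_b"), cache.get("scene_tile_a"), cache.get("scene_tile_c"))
```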

6. Metaverse Engine

The metaverse engine comprises seven key components: immersive technologies (including VR/AR, haptic feedback, mixed reality, and advanced graphical rendering), digital twin, AI, and blockchain. Each of these components will be detailed in the following sections.

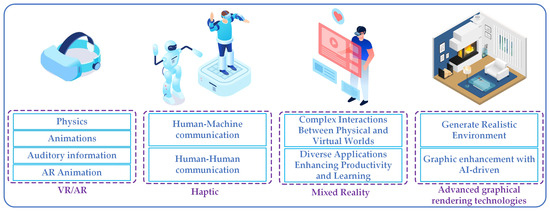

6.1. Immersive Technologies

Immersive technologies for the real-time metaverse encompass a range of advanced tools and systems designed to create deeply engaging and interactive virtual experiences [105,153,154]. These technologies include VR, AR, mixed reality (MR) [155], haptic feedback [9], and advanced graphical rendering techniques [25], all of which work together to blur the lines between the physical and digital worlds. Figure 8 showcases the integration of VR/AR, haptic, and advanced graphical rendering technologies to enhance virtual and augmented reality experiences. VR/AR creates immersive environments using physics, animations, and auditory information. Haptic technology enhances human-machine communication and provides tactile feedback for real-life interactions. Advanced graphical rendering uses AI-driven techniques to generate realistic environments and enhance graphics, resulting in more immersive and interactive virtual experiences.

Figure 8.

Immersive metaverse technologies.

6.1.1. Virtual Reality (VR)

Virtual reality (VR) is a technology that immerses users in a computer-generated environment, providing a simulated experience that can be similar to or completely different from the real world [156]. VR typically involves headsets equipped with displays, sensors, and controllers that track the user’s movements and interactions within the virtual environment [157]. VR technology is widely used in gaming and entertainment, creating immersive experiences that engage users in new and exciting ways [158]. Beyond entertainment, VR has significant applications in education, where it enables interactive learning experiences, such as virtual field trips or simulations of complex scientific concepts, allowing students to explore and understand subjects more deeply.

In addition to its impact on gaming and education, VR is transforming industries like healthcare, real estate, and training [159]. In healthcare, VR is used for surgical simulations, allowing surgeons to practice procedures in a risk-free environment and for therapeutic purposes, such as exposure therapy for patients with anxiety disorders. In real estate, VR provides virtual tours of properties, giving potential buyers a realistic sense of space without needing to visit in person [160]. In professional training, VR offers a safe and controlled environment for employees to practice skills and scenarios, such as emergency response or complex machinery operation [161]. As VR technology advances, with improvements in display resolution, motion tracking, and user interfaces, its applications are expected to expand further, offering increasingly sophisticated and practical uses across various fields.

6.1.2. Augmented Reality (AR)

Augmented reality (AR) is a technology that overlays digital information and virtual objects onto the real world, enhancing the user’s perception and interaction with their environment [162]. Unlike virtual reality, which creates an entirely simulated experience, AR blends virtual elements with the physical world, often through smartphones, tablets, or AR glasses [163]. AR technology has gained widespread popularity in applications such as mobile gaming, with games like Pokémon GO allowing users to interact with virtual characters in real-world locations [164]. AR also enhances navigation and location-based services by providing real-time information and directions overlaid on the physical environment, improving user convenience and engagement [165].

Beyond entertainment and navigation, AR is making significant strides in many fields, such as retail, education (including distance online learning) [166], and healthcare [167]. In retail, AR applications allow customers to visualize products in their own space before purchasing [168], such as seeing how furniture would look in their home or trying on virtual clothing [169]. AR not only enhances the shopping experience but can also reduce return rates and increase customer satisfaction. In education, AR brings learning materials to life by enabling interactive and immersive experiences, such as 3D visualizations of historical events or scientific phenomena, which can deepen student understanding and engagement [54]. In healthcare, AR assists surgeons by providing real-time overlays of critical information during procedures, improving precision and outcomes [170]. As AR technology continues to evolve, its integration into everyday life and various professional fields is expected to grow, offering increasingly innovative and practical applications.

6.1.3. Mixed Reality (MR)

Mixed reality (MR) is an advanced technology that seamlessly blends the physical and digital worlds, creating environments where real and virtual elements coexist and interact in real time [171]. Unlike VR, which immerses users in a completely virtual environment, or AR, which overlays digital information on the real world, MR allows for more complex interactions between physical and virtual objects. MR is typically achieved using advanced sensors, cameras, and displays, often incorporated into headsets such as Microsoft’s HoloLens or Magic Leap. These devices track the user’s position and surroundings, enabling virtual objects to be anchored in the real world so that users can interact with them naturally and intuitively [172].
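Anchoring can be illustrated as a coordinate transform: a virtual object is defined relative to an anchor whose pose in the world is estimated by the device's tracking, and each frame the object's local coordinates are rotated and translated by that pose. The sketch below simplifies the pose to a position plus a rotation about the vertical axis (assuming a y-up world); real MR frameworks use full six-degree-of-freedom poses, typically with quaternions. The function name and anchor values are hypothetical.

```python
import math


def anchor_to_world(local_point, anchor_position, anchor_yaw_rad):
    """Map a point in an anchor's local frame to world coordinates (y axis pointing up)."""
    x, y, z = local_point
    c, s = math.cos(anchor_yaw_rad), math.sin(anchor_yaw_rad)
    # Rotate about the vertical (y) axis, then translate by the anchor position.
    wx = c * x + s * z
    wz = -s * x + c * z
    ax, ay, az = anchor_position
    return (wx + ax, y + ay, wz + az)


# Hypothetical anchor on a tabletop, rotated 90 degrees about the vertical axis;
# the virtual object sits 1 m along the anchor's local x axis.
world = anchor_to_world((1.0, 0.0, 0.0), anchor_position=(2.0, 0.8, 3.0),
                        anchor_yaw_rad=math.pi / 2)
print(tuple(round(v, 6) for v in world))  # (2.0, 0.8, 2.0)
```

When tracking refines the anchor's estimated pose, recomputing this transform moves the object consistently with the real surface it is attached to.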

The potential applications of mixed reality span numerous fields, enhancing productivity, creativity, and learning [8]. MR can be used in industry and manufacturing for remote collaboration, allowing engineers to visualize and manipulate 3D models of equipment or structures as if they were physically present [23]. This can improve design accuracy and speed up problem-solving processes. MR can provide immersive learning experiences in education, such as virtual laboratories where students can conduct experiments without the risk or expense associated with physical setups. MR can assist in medical training in the healthcare sector by simulating complex surgical procedures with real-time feedback and guidance. As MR technology continues to evolve [173], it promises to revolutionize how we interact with both the digital and physical worlds, providing a more integrated and interactive experience.

6.1.4. Haptic Feedback

Haptic feedback is a technology that simulates the sense of touch by applying forces, vibrations, or motions to the user [9,63]. Haptic sensory feedback enhances the immersive experience in virtual environments by allowing users to feel interactions with virtual objects as if they were real. In the context of the metaverse, haptic feedback is delivered through various devices such as gloves, vests, and other wearables, which can replicate sensations like texture, resistance, and impact. For instance, when a user picks up a virtual object or feels a virtual breeze, haptic devices provide physical sensations corresponding to those actions, making the virtual experience more realistic and engaging.
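The sensation of resistance is often rendered with a penalty-based spring-damper model: when the user's tracked hand or stylus penetrates a virtual surface, the device pushes back with a force proportional to the penetration depth, plus a damping term opposing the velocity. The sketch assumes a virtual wall occupying x < 0, hypothetical stiffness, damping, and force-limit values, and a force that can only push outward. Haptic loops are commonly run at around 1 kHz so that such walls feel stiff rather than spongy.

```python
def wall_force(position_m, velocity_mps, stiffness=500.0, damping=2.0, max_force=5.0):
    """Penalty-based force (N) along +x for a virtual wall occupying x < 0."""
    if position_m >= 0.0:
        return 0.0                        # not in contact: no force
    penetration = -position_m             # depth inside the wall (m)
    force = stiffness * penetration - damping * velocity_mps  # spring + damper
    force = max(0.0, force)               # the wall only pushes, never pulls
    return min(force, max_force)          # clamp to an assumed device limit


print(wall_force(0.01, -0.1))    # free space: 0.0
print(wall_force(-0.004, -0.1))  # 4 mm inside, moving inward: about 2.2 N
print(wall_force(-0.1, 0.0))     # deep penetration: clamped to 5.0 N
```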

Integrating haptic feedback into the metaverse has significant implications for various applications, including gaming, training, and remote collaboration [174]. In gaming, haptic feedback enhances immersion by allowing players to physically feel in-game actions, such as the weight of held equipment or the texture of a surface. In training simulations, particularly in fields like medicine and engineering, haptic technology can provide realistic practice scenarios, helping users develop practical skills without real-world risks. For remote collaboration, haptic feedback can bridge the gap between digital and physical interactions, enabling more effective and intuitive communication. Overall, haptic feedback enriches the user experience in the metaverse by adding a critical layer of sensory interaction that deepens engagement and realism.

6.1.5. Advanced Graphical Rendering Technologies

Advanced graphical rendering (GR) technologies are crucial for creating highly realistic and visually stunning virtual environments in the metaverse [25]. One of the key advancements in GR is real-time ray tracing, a technique that simulates the way light interacts with objects in a scene to produce highly accurate reflections, refractions, and shadows [105,175]. This level of detail enhances the realism of virtual worlds, making them more immersive and visually engaging. Real-time ray tracing requires significant computational power, but recent advances in GPUs and optimization techniques have made it feasible for real-time applications, allowing users to experience lifelike visuals in interactive settings such as gaming, virtual tours, and social interactions within the metaverse.
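At the heart of ray tracing is the ray-object intersection test, repeated for every ray cast into the scene. The sketch below solves the quadratic for a ray o + t·d hitting a sphere of center c and radius r, returning the nearest positive distance t. The scene values are hypothetical, and real-time GPU ray tracers avoid testing every object by using acceleration structures such as bounding volume hierarchies.

```python
import math


def ray_sphere(origin, direction, center, radius, eps=1e-9):
    """Distance t along the ray to the nearest hit in front of the origin, or None on a miss."""
    oc = [o - q for o, q in zip(origin, center)]
    # Solve |o + t d - c|^2 = r^2, i.e. a t^2 + b t + c_term = 0.
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * e for d, e in zip(direction, oc))
    c_term = sum(e * e for e in oc) - radius * radius
    disc = b * b - 4.0 * a * c_term
    if disc < 0:
        return None                      # ray misses the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t > eps:                      # nearest intersection in front of the origin
            return t
    return None


# Hypothetical scene: unit sphere 5 units in front of a camera at the origin.
print(ray_sphere((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # 4.0
print(ray_sphere((0, 0, 0), (0, 1, 0), (0, 0, 5), 1.0))  # None
```

The returned t gives the hit point, from which a renderer computes the surface normal and spawns further reflection, refraction, or shadow rays.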

Another significant development in advanced graphical rendering is the use of artificial intelligence (AI) to enhance graphics quality and performance [24,108]. AI-driven techniques, such as deep learning-based upscaling (e.g., NVIDIA’s deep learning super sampling (DLSS)), can improve frame rates and image quality by predicting and generating high-resolution frames from lower-resolution inputs. Because the scene is rendered at a lower internal resolution, upscaling not only ensures smoother performance but also allows for more detailed and complex scenes without compromising speed. AI is also used in procedural content generation, enabling the creation of vast and diverse virtual landscapes with minimal manual effort [176]. By leveraging these advanced rendering technologies, the metaverse can offer visually rich, dynamic, and interactive environments that push the boundaries of what is possible in digital experiences, making them more compelling and lifelike for users.

6.2. Digital Twin

The integration of digital twin (DT) technology into the metaverse is transformative, serving as a crucial bridge between the physical and virtual worlds [59]. By creating a real-time digital replica of physical entities, DTs enable an immersive and interactive metaverse experience. This constant synchronization ensures that changes in the physical world are reflected in the virtual environment with minimal delay, allowing users to engage with highly accurate and dynamic digital representations of objects, environments, and even people [177]. In this way, DTs elevate the realism and functionality of the metaverse, making it a more practical and engaging platform for users.

One of the most significant challenges in this integration is achieving real-time synchronization between the physical and digital realms [75]. Digital twins must update continually as real-world changes occur, ensuring that the metaverse remains a faithful and current representation [36]. Partitioning the metaverse into smaller, localized sub-metaverses allows data to be processed closer to the source, reducing delays and enhancing the responsiveness of the digital twin environment [178]. This decentralized approach is particularly valuable in applications like autonomous vehicles [179], healthcare [180], and smart cities [181], where real-time accuracy is critical. In smart city ecosystems, the combination of DTs and the metaverse offers new dimensions for urban management and citizen interaction. Digital twins can mirror city infrastructure in real time, providing an immersive way for users to interact with urban spaces as though they were physically present. This not only improves user experience but also enhances urban planning, resource management, and disaster response through real-time simulations and predictive modeling [182]. As cities grow more complex, the ability to manage them in real time through a metaverse-driven interface becomes increasingly valuable, offering more efficient ways to allocate resources and address challenges.

In sectors like healthcare and manufacturing, DTs integrated into the metaverse offer powerful tools for decision-making and efficiency [183]. In healthcare, for instance, digital twins can monitor patient health in real time, allowing providers to anticipate potential issues and intervene before conditions worsen [180]. Similarly, in manufacturing, DTs simulate production processes, helping companies optimize performance, predict equipment failures, and reduce operational downtime [184]. By enabling these real-time simulations, the metaverse enhances decision-making and operational efficiency in critical industries.

Security and trust, areas in which DTs also play a pivotal role, are essential aspects of the metaverse [185]. The decentralized nature of the metaverse, combined with blockchain technology, offers a secure and transparent framework for transactions and interactions. Blockchain records transactions in an append-only, cryptographically linked ledger replicated across many nodes, which makes recorded transactions tamper-evident and independently verifiable; this makes it well suited to safeguarding the integrity of digital twins and their data within the metaverse [186]. This decentralized trust mechanism becomes more important as the metaverse is integrated into everyday life, supporting interactions that are both secure and reliable.
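The tamper-evidence property can be illustrated with a hash-linked log of digital twin updates. This minimal sketch assumes SHA-256 hashing over canonical JSON and shows only the chaining of blocks; it omits the replication and consensus that a real blockchain relies on to agree on which chain is valid (without them, an attacker could simply recompute every hash). `TwinLedger`, `block_hash`, and the `pump-3` payload are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, prev_hash: str, payload: dict) -> str:
    # Canonical JSON (sorted keys) so any node recomputes the same digest.
    body = json.dumps({"index": index, "prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


class TwinLedger:
    """Hypothetical append-only, hash-linked log of digital twin updates."""

    def __init__(self) -> None:
        self.blocks = []

    def append(self, payload: dict) -> None:
        prev = self.blocks[-1]["hash"] if self.blocks else GENESIS
        index = len(self.blocks)
        self.blocks.append({"index": index, "prev": prev, "payload": payload,
                            "hash": block_hash(index, prev, payload)})

    def verify(self) -> bool:
        """Recompute every hash and check each block points at its predecessor."""
        prev = GENESIS
        for i, block in enumerate(self.blocks):
            if block["index"] != i or block["prev"] != prev:
                return False
            if block["hash"] != block_hash(i, prev, block["payload"]):
                return False
            prev = block["hash"]
        return True


ledger = TwinLedger()
ledger.append({"twin": "pump-3", "temp_c": 71.5})
ledger.append({"twin": "pump-3", "temp_c": 72.0})
print(ledger.verify())  # True
ledger.blocks[0]["payload"]["temp_c"] = 60.0  # tamper with a past reading
print(ledger.verify())  # False
```

Because each block stores the hash of its predecessor, editing any past reading changes that block's recomputed hash, so `verify()` detects the modification.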

6.3. Artificial Intelligence (AI) and Machine Learning (ML)

Artificial intelligence (AI) content generation plays a pivotal role in developing and enhancing the metaverse, a virtual universe in which users interact with each other and with digital environments in real time [24]. AI-driven content creation for the metaverse draws on several technologies, including procedural generation, natural language processing (NLP) [187], and large language models (LLMs) [188]. Together, these technologies create more immersive, interactive, and personalized user experiences. Procedural generation creates content algorithmically rather than manually, making it possible to build vast and diverse virtual worlds. The technique can generate everything from complex landscapes to intricate architecture and even entire ecosystems, keeping the metaverse dynamic and expansive and offering users fresh experiences each time they log in. Automatic content generation also substantially reduces the time and resources needed for manual creation, allowing developers to focus on other aspects of the metaverse.
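Procedural generation can be illustrated with midpoint displacement, a classic technique for generating terrain. This sketch produces a 1D height profile; the function name and the `roughness` parameter are illustrative assumptions rather than any particular engine's API. Seeding the random generator makes the output reproducible, so a region can be regenerated from a compact seed instead of being stored in full.

```python
import random


def midpoint_displacement(levels: int, roughness: float = 0.5, seed=None) -> list:
    """Generate a 1D terrain profile of 2**levels + 1 heights."""
    rng = random.Random(seed)  # private, seeded generator: same seed -> same terrain
    size = 2 ** levels + 1
    heights = [0.0] * size
    heights[0], heights[-1] = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)
    step, spread = size - 1, 1.0
    while step > 1:
        half = step // 2
        # Set each segment's midpoint to the average of its ends plus a random offset.
        for start in range(0, size - 1, step):
            end = start + step
            heights[start + half] = (heights[start] + heights[end]) / 2 + rng.uniform(-spread, spread)
        spread *= roughness  # smaller offsets at finer scales give smoother detail
        step = half
    return heights


profile = midpoint_displacement(levels=6, roughness=0.5, seed=42)
print(len(profile))  # 65
print(profile == midpoint_displacement(levels=6, roughness=0.5, seed=42))  # True
```

Lower `roughness` values shrink the random offsets faster at each level and yield smoother profiles; the same recursive idea extends to 2D height maps (for example, the diamond-square algorithm).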

NLP is another critical component of AI content generation for the metaverse [187]. It enables more natural and intuitive interactions between users and virtual entities, including non-player characters (NPCs) and digital assistants [24]. By understanding and processing human language, NLP allows these virtual entities to respond appropriately to user inputs, making conversations more engaging and meaningful. This capability contributes to a seamless and immersive user experience [189] by bridging the gap between human communication and digital interaction.

LLMs, such as GPT-4, further extend the capabilities of NLP in the metaverse [188]. These models are trained on vast datasets and generate human-like text conditioned on context. LLMs can create complex narratives, generate dialogue for NPCs, and assist in real-time translation between users who speak different languages. Integrating LLMs into the metaverse helps keep the virtual world rich in content and able to serve the diverse linguistic needs of its global user base.

The combination of procedural generation, NLP, and LLMs leads to a highly dynamic and personalized user experience. For instance, users can explore environments tailored to their preferences, hold meaningful conversations with virtual entities, and follow narratives that evolve with their interactions [190]. This level of personalization not only increases user engagement but also fosters a sense of connection and immersion within the virtual world, and AI-driven content generation keeps the metaverse a vibrant, evolving space that continuously offers new experiences.

In virtual environments such as the metaverse, AI-driven avatars and NPCs serve distinct roles, each with its own functions and purposes. Although both are powered by AI, they differ fundamentally in how they interact with users, in their level of autonomy, and in their overall goals within virtual spaces.

6.3.1. AI-Driven Avatars

AI-driven avatars represent a remarkable fusion of artificial intelligence and digital animation, with the potential to transform how we interact in virtual environments [108]. These avatars are sophisticated digital representations of individuals designed to mimic human behavior, appearance, and communication with high realism and responsiveness. At their core is AI technology that enables them to learn, adapt, and respond to users naturally and intuitively [191]. AI-driven avatars are integral to the user experience within the metaverse, providing seamless, immersive interaction that bridges the gap between humans and machines. One of their most significant capabilities is understanding and processing natural language [192]. Using NLP algorithms, these avatars can interpret spoken or written language, allowing fluid, dynamic conversations with users. Machine learning models further enhance this capability, enabling avatars to learn from interactions and improve their responses over time. As a result, users can engage in meaningful, personalized dialogue with their avatars, making virtual interactions more engaging and lifelike.

In addition to their linguistic capabilities, AI-driven avatars can be equipped with facial expression analysis and emotion detection technologies [193]. These technologies allow avatars to read and respond to human emotions, adapting their behavior and expressions accordingly. For instance, an avatar can recognize when a user appears happy, sad, or frustrated and tailor its responses to offer appropriate emotional support or feedback. This emotional intelligence adds another layer of depth to virtual interactions, making AI-driven avatars not just functional tools but empathetic companions in the digital world.

The applications of AI-driven avatars extend far beyond entertainment and social interaction. In educational settings, these avatars can serve as personalized tutors, offering tailored instruction and feedback to students [194]. In customer service, they can provide efficient and empathetic support, handling inquiries and resolving issues with a human touch [195]. In healthcare, AI-driven avatars can act as virtual companions for patients, providing comfort and monitoring their well-being [196]. By integrating AI-driven avatars into these sectors, the metaverse can open new possibilities for enhancing user experiences and improving service quality across multiple domains.

6.3.2. Non-Player Characters (NPCs)

NPCs are autonomous characters embedded in the virtual environment that perform their roles without direct human control. They are generally pre-programmed with specific behaviors or controlled by the system’s AI to serve particular functions [197]. In games, for example, NPCs often populate the environment as background characters or antagonists. In educational and professional settings, however, NPCs are increasingly used as interactive tools to enhance user experiences. In the educational metaverse, NPCs can take on roles such as virtual tutors, peers, or advisors [198], interacting with users to foster engagement, provide feedback, or guide them through learning tasks.
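Pre-programmed NPC behavior is commonly expressed as a finite-state machine, in which the NPC's current state and an incoming event determine its next state and action. The sketch below shows a hypothetical tutor NPC; its states, event names, and scripted lines are invented for illustration, and in a richer system the scripted lines might be replaced by NLP- or LLM-generated dialogue.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    GREETING = auto()
    GUIDING = auto()


# Hypothetical transition table: (state, event) -> (next state, scripted line or None).
TRANSITIONS = {
    (State.IDLE, "user_approaches"): (State.GREETING, "Welcome! Ready to start today's task?"),
    (State.GREETING, "user_accepts"): (State.GUIDING, "Step 1: restate the problem in your own words."),
    (State.GREETING, "user_leaves"): (State.IDLE, None),
    (State.GUIDING, "user_asks_hint"): (State.GUIDING, "Try listing who is affected by the problem."),
    (State.GUIDING, "user_leaves"): (State.IDLE, None),
}


class TutorNPC:
    def __init__(self) -> None:
        self.state = State.IDLE

    def handle(self, event: str):
        """Advance the state machine; return the NPC's line, or None if it stays silent."""
        key = (self.state, event)
        if key not in TRANSITIONS:
            return None  # events with no rule for the current state are ignored
        self.state, line = TRANSITIONS[key]
        return line


npc = TutorNPC()
for event in ["user_approaches", "user_accepts", "user_asks_hint", "user_leaves"]:
    line = npc.handle(event)
    print(f"{event:16} -> {npc.state.name:8} {line or ''}")
```

Keeping the behavior in a data table rather than in nested conditionals makes it easy for designers to add roles or states without changing the NPC's control logic.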

NPCs are particularly useful in education because they can simulate real-world scenarios through dynamic, role-based interactions [199]. For instance, an NPC designed as a tutor can advise students on solving problems or guide them through complex design thinking processes. NPCs can also play peers or students, letting users practice teaching, mentoring, or collaboration skills in a controlled, simulated environment. This creates a scalable and adaptable learning space in which NPCs are always-available participants, regardless of whether human peers or instructors are present [200]. Furthermore, NPCs often leverage NLP capabilities, enabling them to hold conversations, understand user queries, and provide meaningful responses in a way that feels interactive and intuitive.

6.3.3. Differences in Purpose and Autonomy

The primary distinction between AI-driven avatars and NPCs lies in their relationship with users and their level of autonomy. AI-driven avatars are designed to represent and act on behalf of the user, making decisions based on user-defined parameters or learned behaviors [201]. They are personalized to the user’s preferences and can be seen as digital extensions of the user’s identity within the metaverse. These avatars can also maintain a user’s presence when the user is not actively engaged in the virtual world by taking over routine tasks or social interactions.

NPCs, on the other hand, exist independently of any user [202]. They are part of the virtual environment itself, managed by the system’s AI to make the experience more immersive and engaging. NPCs follow predefined rules or machine learning models, interacting with users to fulfill specific roles, whether as educators, fellow learners, or virtual assistants. Although they can simulate human-like behaviors, NPCs do not represent any specific user; instead, they enrich the experience by populating the world with interactive, responsive characters. In design-thinking education, for example, NPCs have supported students by providing feedback, engaging them in empathy-building exercises, and offering diverse perspectives that helped them reframe problems and develop solutions [203].

Another key difference between AI-driven avatars and NPCs is the degree of personalization [201]. AI-driven avatars are highly personalized to their user. They adapt and evolve based on the user’s behavior, preferences, and interactions, becoming more reflective of the user over time. This level of personalization ensures that the avatar represents the user’s unique identity and can act in ways that align with the user’s goals and needs. For example, in a corporate setting, an AI avatar could autonomously schedule meetings, manage tasks, or even negotiate with other AI avatars based on the user’s work habits and objectives. NPCs, in contrast, are designed to serve broader, more generalized purposes within the virtual environment. They are not tied to any specific user and often follow universal rules or patterns set by the system. While NPCs can adapt their behavior based on interactions with multiple users, their core function is to enhance the immersive experience for all users rather than reflecting the behavior of any individual [204]. For example, an NPC in a classroom scenario might adapt to the learning pace of different students, offering tailored guidance and feedback, but its role remains fundamentally as a system-controlled character that enriches the educational experience for everyone, not just a single user.
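The classroom example can be sketched as a single system-controlled NPC that keeps separate pacing state for each student. The sliding-window accuracy rule, the thresholds, and the five difficulty levels below are assumptions chosen for illustration, not a method described in [204]; `AdaptiveTutorNPC` is a hypothetical name.

```python
from collections import defaultdict, deque


class AdaptiveTutorNPC:
    """One shared, system-controlled NPC that tracks pacing separately per student."""

    def __init__(self, window: int = 5, raise_at: float = 0.8, lower_at: float = 0.4,
                 max_level: int = 5) -> None:
        self.window, self.raise_at, self.lower_at, self.max_level = window, raise_at, lower_at, max_level
        self.history = defaultdict(lambda: deque(maxlen=self.window))  # recent answers per student
        self.level = defaultdict(lambda: 1)  # current difficulty per student, 1..max_level

    def record(self, student: str, correct: bool) -> int:
        """Log an answer and return the student's (possibly updated) difficulty level."""
        answers = self.history[student]
        answers.append(bool(correct))
        if len(answers) < self.window:
            return self.level[student]  # not enough evidence yet
        accuracy = sum(answers) / len(answers)
        if accuracy >= self.raise_at:
            self.level[student] = min(self.max_level, self.level[student] + 1)
            answers.clear()  # start a fresh window at the new level
        elif accuracy <= self.lower_at:
            self.level[student] = max(1, self.level[student] - 1)
            answers.clear()
        return self.level[student]


tutor = AdaptiveTutorNPC()
for _ in range(5):
    tutor.record("ana", True)
for _ in range(5):
    tutor.record("ben", False)
print(dict(tutor.level))  # {'ana': 2, 'ben': 1}
```

The NPC itself remains one shared character; only the per-student history and difficulty level differ, which mirrors the contrast drawn above between system-controlled NPCs and user-specific avatars.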

6.4. Blockchain

Blockchain technology is emerging as a foundational component for realizing a secure, decentralized, and efficient real-time metaverse [205]. In a digital environment where the virtual and physical worlds merge, blockchain offers several critical benefits for the smooth functioning of this complex ecosystem. By enabling decentralized control, enhancing data security, and establishing trust, blockchain contributes significantly to the metaverse’s infrastructure, potentially making it both more reliable and more scalable for widespread use [5]. One of the primary advantages of blockchain in the metaverse is its decentralized architecture [206]. Unlike traditional systems that rely on centralized servers, blockchain distributes data and transactions across a network of nodes, reducing the risk of single points of failure. In the metaverse, this decentralization ensures that no single entity has complete control over user data or interactions [207]. This is crucial for fostering trust and transparency in an environment where users engage in social, economic, and virtual activities that require accountability and fairness.
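Why distributing data across nodes removes single points of failure can be illustrated with a simplified majority read across replicas. This is not a blockchain consensus protocol such as proof of work or a Byzantine fault-tolerant scheme; it only shows that when a client accepts a value only if a strict majority of all nodes report it, no single crashed or faulty node can determine the result. The function name, the replica count, and the hash values are hypothetical.

```python
from collections import Counter


def quorum_read(replies: list, total_nodes: int):
    """Return the value reported by a strict majority of all nodes, else None.

    `replies` holds answers from the nodes that responded; crashed or unreachable
    nodes simply contribute nothing. Values must be hashable (e.g. block hashes).
    """
    if not replies:
        return None
    value, count = Counter(replies).most_common(1)[0]
    return value if count > total_nodes // 2 else None


# Five hypothetical replicas report the latest block hash; one is faulty, one is down.
print(quorum_read(["9f2c", "9f2c", "9f2c", "0bad"], total_nodes=5))  # 9f2c
print(quorum_read(["9f2c", "9f2c"], total_nodes=5))  # None: no majority reachable
```

Returning `None` when no majority is reachable is a deliberate choice: the client prefers no answer to an answer that a minority of nodes could have forged.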