1. Introduction

Modern vehicles have undergone a revolution, transforming from isolated machines into complex connected networks that can serve as a wireless local area network (WLAN), allowing information to be shared among devices both inside and outside the vehicle [1]. Connectivity between cars is a rapidly growing trend: the global connected car market was valued at USD 80.87 billion in 2023 and is expected to reach USD 386.82 billion by 2032, a Compound Annual Growth Rate (CAGR) of 19.2% [2].

This remarkable growth is driven by technologies such as 5G networks, whose low latency and high bandwidth enable devices inside and outside vehicles to exchange data in real time [3]. Advances in the Internet of Things (IoT) transform vehicles into mobile hubs for fast and secure data exchange [4]. Because vehicles frequently move between access points, they experience frequent handovers and potential service interruptions. Edge caching plays a crucial role in mitigating these delays by storing popular or predicted content closer to vehicles to reduce retrieval time and network load [5]. Caching not only reduces latency but also enhances security, privacy, and resource efficiency in IoT and Internet of Vehicles (IoV) systems. By storing frequently accessed data at the edge, it minimizes long-distance transmissions, reduces interception risks, and protects user or vehicle data. In addition, it improves resource utilization by avoiding redundant requests and lowering core network bandwidth consumption [6]. Moreover, consumer expectations of modern vehicles have changed significantly. Today’s car buyers are interested in digital features such as advanced driver assistance systems (ADAS), seamless smartphone connectivity, in-car entertainment systems, autonomous driving systems, and safety equipment [7]. These demands are reshaping future vehicle designs around Vehicle-to-Everything (V2X) communication.

Data exchange between vehicles and their surroundings supports autonomous driving, real-time traffic management, collision avoidance, and high-definition in-vehicle infotainment [8,9]. However, the high mobility of vehicles creates many challenges in terms of service continuity, low latency, high bandwidth, low power consumption, and quality-of-service maintenance.

One of the main challenges in vehicular networks is network handover, which occurs when vehicles travel at high speed between the coverage areas of different network access points such as roadside units (RSUs) or cellular base stations. A transition from a Previous Access Router (PAR) to a New Access Router (NAR) causes a very short period of disconnection known as handover latency. This latency leads to a temporary service disruption during which the vehicle cannot send or receive data. For safety-critical applications, handover latency may therefore cause a severe accident; for content-streaming services, the temporary disruption degrades the Quality of Service [10]. For these reasons, developing efficient mobility management schemes that ensure seamless connectivity is a key research focus [11].

To overcome the limitations of traditional network architectures in dynamic environments such as V2X communication, Software-Defined Networking (SDN) offers a powerful solution by decoupling the network’s control plane from the data plane [12]. With centralized, programmable control, SDN can gather comprehensive network information and enable more intelligent and agile mobility management, providing proactive routing decisions and faster network state convergence during handovers [13].

SDN handover management approaches have been proposed for 5G vehicular networks to alleviate disruptions during handover [14,15]. However, further optimization is still required to minimize the delay that occurs during and after handovers. This paper explores two prominent strategies for minimizing this delay: a centralized Caching Policy and a distributed Content Delivery Network (CDN). Proactively caching content at the network’s edge has been identified as key to reducing handover latency in vehicular networks [16]; thus, content-centric networking must prioritize efficient data distribution [17]. In the Caching Policy approach, the SDN controller queries a caching policy server to determine caching rules during handover, while in the CDN approach, content is proactively distributed to edge servers to bring it closer to the end user.

The primary contribution of this work is a rigorous analytical comparison of service disruption time for two distinct SDN-based mobility strategies. The first, a reactive SDN with a Caching Policy, relies on a centralized controller that queries an external policy server in real time during a handover, offering flexibility at the cost of signaling overhead. The second, a proactive SDN with a Content Delivery Network (CDN), anticipates user needs by pre-positioning content at the network edge to eliminate data retrieval latency. We evaluate the CDN approach under both cache-miss and cache-hit scenarios to quantify the impact of content availability. The performance of these strategies is evaluated as a function of the Session-to-Mobility Ratio (SMR), providing a clear understanding of their effectiveness under different mobility and data session patterns.

The remainder of this article is organized as follows. Section 2 provides a review of related work, Section 3 outlines the system model and methodology, and Section 4 presents the evaluation results. Section 5 discusses the implications of these findings, and finally, Section 6 concludes the article.

3. System Model and Methodology

This section explains the analytical framework used to evaluate the performance of the proposed handover strategies. It also defines the network architecture, the key performance metrics, and the mathematical models used to calculate service disruption time.

3.1. Network Architecture

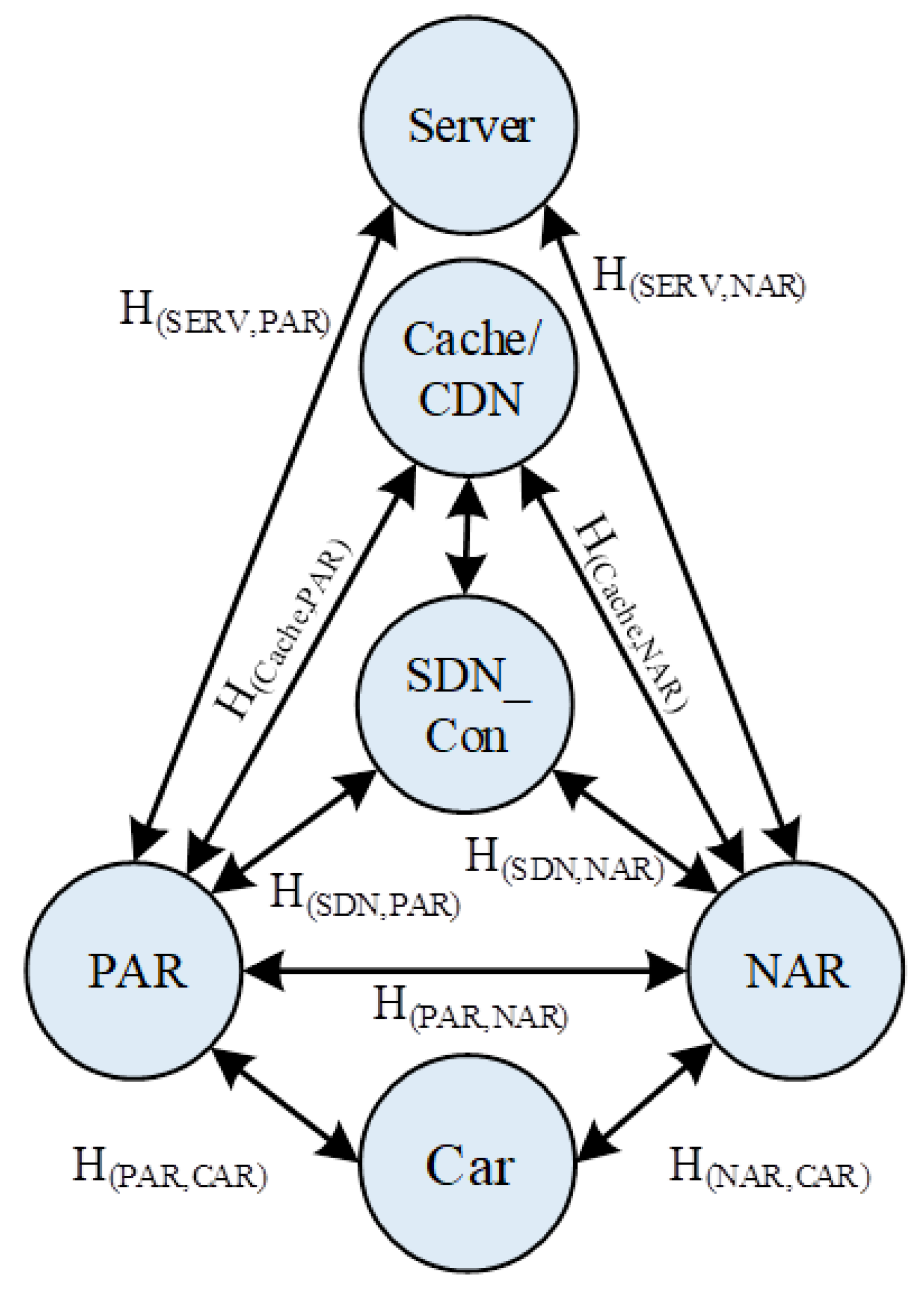

The SDN-CDN Mobility architecture illustrated in Figure 1 integrates two main control paradigms to manage vehicle handover. The first is a centralized SDN Controller that communicates with an external Cache Policy server to manage caching rules reactively. The second is a distributed CDN Controller that manages content proactively. Both controllers interact with an OpenFlow Switch (OFSW) to manage the data plane, preloading popular or predicted content to edge servers to ensure cache hits and to alleviate delay during handovers.

The Connected Car acts as a mobile node moving from the coverage area of a Previous Access Router/Previous Edge Server (PAR/PES) to that of a New Access Router/New Edge Server (NAR/NES). Each edge server is equipped with a local database for caching content. All traffic to and from the external Internet, where the Origin Server resides, is routed through this SDN-enabled infrastructure.

The analysis is based on the reference network topology illustrated in Figure 2. The model consists of the following key entities:

Car: The vehicle, or Connected Car, which is the mobile node.

PAR/NAR: The Previous Access Router and New Access Router, respectively. These represent the edge servers (PEdgeServer/NEdgeServer) to which the Car connects.

SDN_Con: The SDN Controller, which provides centralized management of the network.

Cache/CDN: The Network/Cache Policy Node or CDN Controller, which manages caching policies and content distribution.

Server: The origin server where the requested content resides.

The communication delays between these nodes are determined by the hop-count distance, denoted as H(a,b), representing the average number of hops between node a and node b.

3.2. Performance Metrics

The primary goal of this study is to minimize service interruption during handovers. We use the following metrics for our evaluation:

3.2.1. Handover Latency

Handover latency is the time interval during which the vehicle cannot use its globally routable IP address for connectivity as it transitions from the PAR to the NAR.

3.2.2. Average Service Disruption (SD) Time

This is the total time from the start of the handover until the first packet of the requested content is successfully delivered to the vehicle via the new connection. It includes the L2 handover delay, control plane signaling delays for routing updates and cache configuration, and the transmission time of the first data packet.

3.2.3. Session-to-Mobility Ratio (SMR)

This dimensionless ratio compares the session arrival rate to the handover rate. A high SMR indicates that handovers are infrequent relative to the duration of data sessions, while a low SMR indicates high mobility. The probability of a handover occurring during a session is given by 1/(1 + SMR), and the average handover latency is weighted by this probability.
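The weighting described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the 100 ms latency passed in the example is an arbitrary placeholder rather than a value from the model.

```python
def handover_probability(smr: float) -> float:
    """Probability that a handover occurs during a session: 1 / (1 + SMR)."""
    if smr < 0:
        raise ValueError("SMR must be non-negative")
    return 1.0 / (1.0 + smr)


def weighted_latency(latency_ms: float, smr: float) -> float:
    """Average latency weighted by the handover probability."""
    return handover_probability(smr) * latency_ms


if __name__ == "__main__":
    # 100 ms is an illustrative latency, not a figure taken from the paper.
    for smr in (0.0, 0.5, 1.0, 2.0):
        print(smr, handover_probability(smr), weighted_latency(100.0, smr))
```

As the SMR grows, the handover probability falls and the weighted latency shrinks with it, which matches the interpretation that a high SMR corresponds to infrequent handovers.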

3.3. Analytical Model

The average service disruption is calculated based on a set of defined parameters for link delays and hop distances as specified in Table 1. The delay between two nodes, T(a,b), is calculated as the product of the per-hop link delay (LWD for wired, LWL for wireless) and the hop count H(a,b). The total L2 handover delay is denoted by L_attach.

Following [20], LWD is set to 5 ms, a value commonly used in analytical SDN and vehicular network models to represent low-latency fiber or Ethernet backbone connections between network elements, while LWL is set to 10 ms, a realistic mid-range value for one wireless hop in our analytical model. In addition, network attach or handover delays are usually set between 10 and 100 ms [21]; thus, L_attach is set to 50 ms in our study.
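As a minimal sketch of the delay definition above, the following Python snippet computes T(a,b) from the per-hop delays quoted in the text (5 ms wired, 10 ms wireless). The function name and the hop counts in the example are hypothetical; the actual hop distances are those listed in Table 1.

```python
LWD_MS = 5.0        # per-hop wired link delay (ms), as stated in the text
LWL_MS = 10.0       # per-hop wireless link delay (ms), as stated in the text
L_ATTACH_MS = 50.0  # L2 handover (attach) delay (ms), as stated in the text


def link_delay(hops: int, wireless: bool = False) -> float:
    """T(a,b): per-hop link delay multiplied by the hop count H(a,b)."""
    if hops < 0:
        raise ValueError("hop count must be non-negative")
    per_hop = LWL_MS if wireless else LWD_MS
    return per_hop * hops


if __name__ == "__main__":
    # Hop counts below are illustrative only, not taken from Table 1.
    print(link_delay(3))                 # three wired hops
    print(link_delay(1, wireless=True))  # one wireless hop
```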

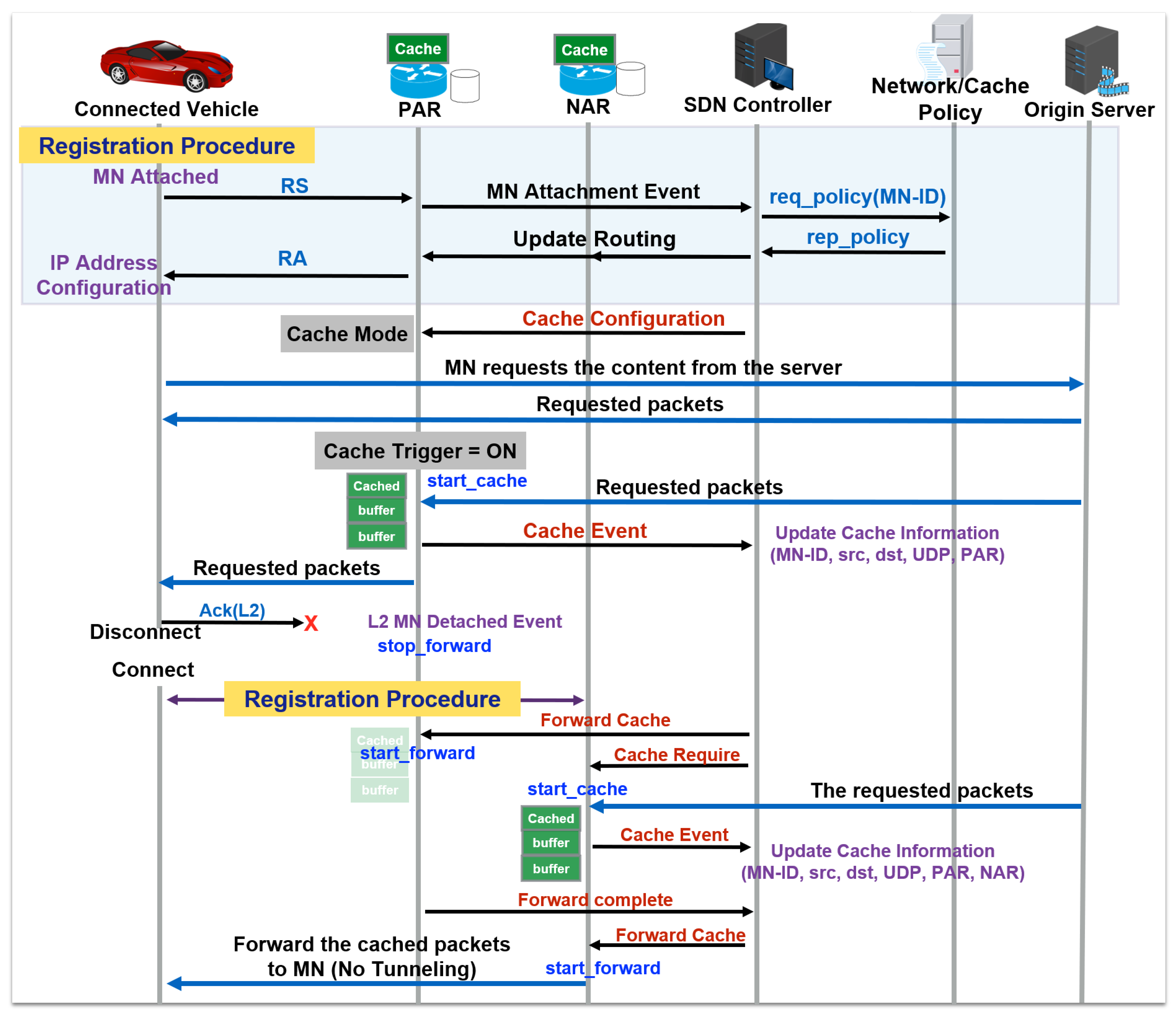

3.3.1. SDN Mobility with Caching Policy

This strategy involves a reactive centralized approach, where the SDN controller queries a policy server during handover. The complete signaling flow for this process is illustrated in Figure 3.

The key steps contributing to the delay include the initial L2 handover, the NAR notifying the SDN controller, and the crucial round-trip communication, where the controller requests and receives caching rules from the policy server. This signaling overhead is the defining characteristic of this approach. The total service disruption time is formulated as:

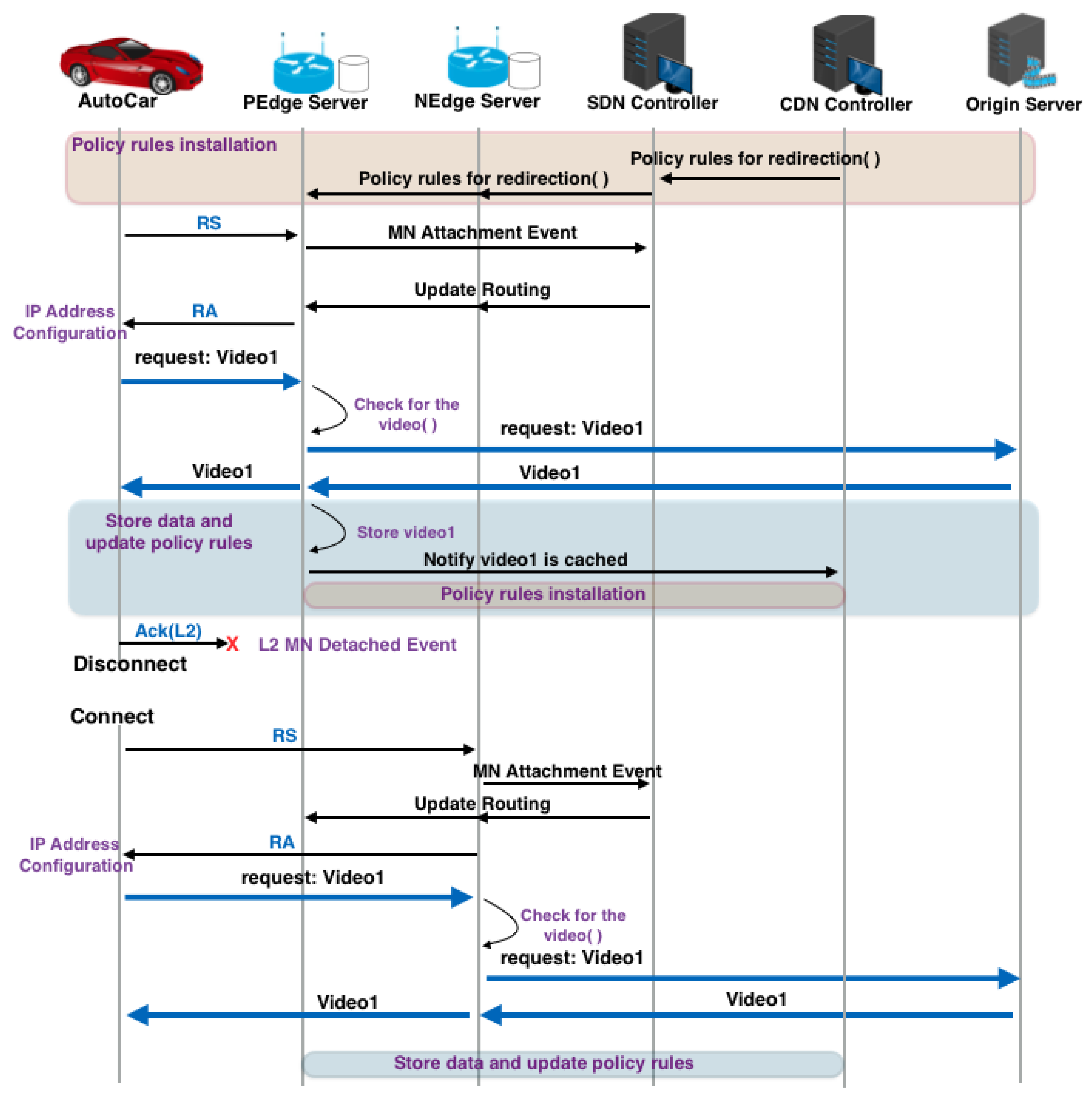

3.3.2. SDN Mobility with CDN (Cache-Miss)

This scenario represents the performance of the CDN strategy when the requested content is not available at the new edge server (NAR). The handover process, detailed in Figure 4, necessitates fetching the content from the origin server.

After the L2 handover and routing updates, when the NAR fails to find the content locally (a cache miss), it triggers a request to the origin server. This round-trip delay across the core network is the primary contributor to service disruption, as shown in the following equation:

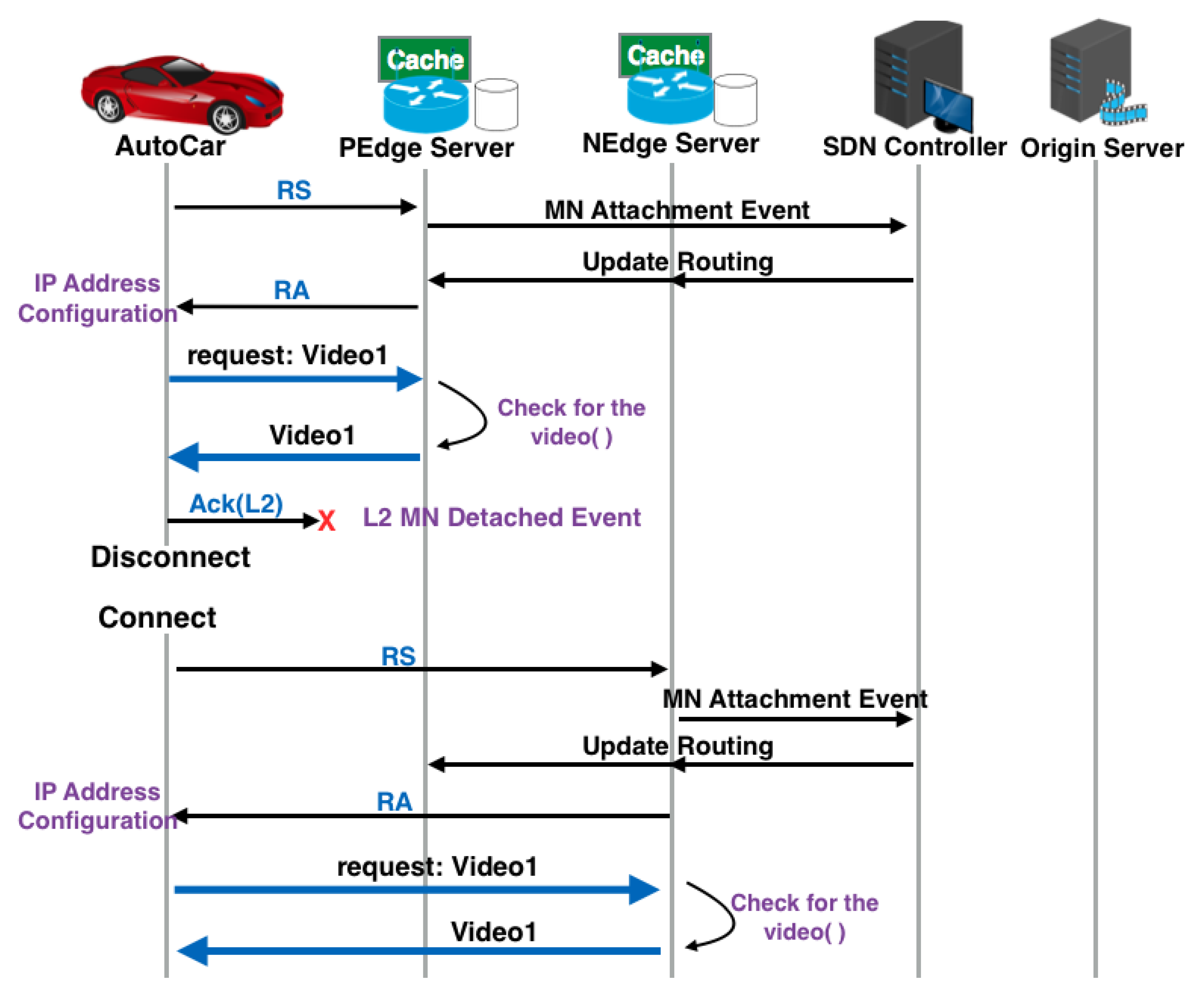

3.3.3. SDN Mobility with CDN (Cache-Hit)

This final scenario illustrates the ideal case, shown in Figure 5, where the CDN has proactively cached the content at the new edge server, allowing the fastest possible data retrieval after handover.

Upon connecting to the NAR, the vehicle’s request is served immediately from the local cache. This eliminates the significant latency of contacting the origin server resulting in the lowest service disruption time. The equation for this optimal case is:

These equations form the basis of our comparative analysis allowing us to calculate the expected service disruption time for each strategy when SMR conditions are varied.

4. Evaluation Results and Discussion

This section presents and analyzes the results obtained from the analytical models described in Section 3. The evaluation begins with a primary performance comparison of the three handover strategies across a range of Session-to-Mobility Ratios (SMR). To assess the robustness of these findings, a sensitivity analysis is conducted to evaluate the impact of two critical network parameters: core network latency and L2 handover delay. Furthermore, the analysis goes beyond idealized scenarios to explore the practical effectiveness of the proactive CDN strategy by examining its performance as a function of the cache-hit ratio. Finally, the sources of delay are deconstructed and quantified to provide a clear comparison of the overheads inherent in each approach.

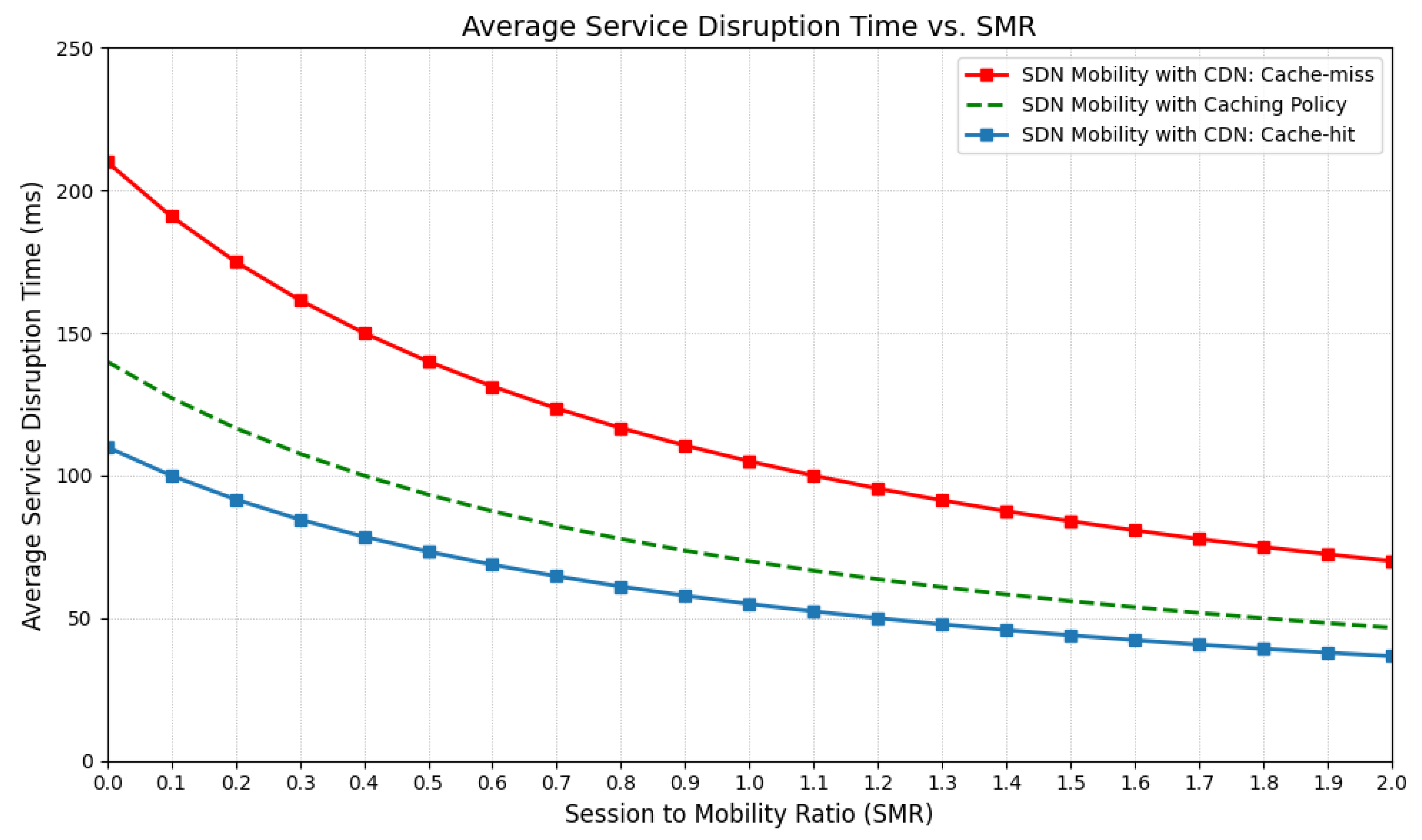

Figure 6 illustrates the average service disruption time for each strategy when the SMR varies from 0 to 2.0.

4.1. Performance Comparison with SMR

4.1.1. Performance of the SDN Mobility with CDN (Cache-Hit) Strategy

This strategy consistently yields the best performance, exhibiting the lowest service disruption time across the entire range of SMR values. This superior performance results directly from the availability of the requested content at the NEdgeServer. Once the L2 handover and the SDN-managed routing update are complete, the data can be transmitted to the vehicle immediately; thus, the delay is minimized.

4.1.2. Performance of the SDN Mobility with CDN (Cache-Miss) Strategy

In contrast, the cache-miss scenario has the highest service disruption time. The delay is largely caused by the need for the NEdgeServer to send a request to the origin server (SERV) and wait for the content to be delivered. As shown in the analytical model, the origin-fetch term adds a substantial delay corresponding to the round-trip time between the network edge and the core server, making this scenario the least efficient.

4.1.3. Performance of the SDN Mobility with Caching Policy Strategy

The performance of this approach lies between the CDN cache-hit and cache-miss scenarios. The additional delay compared to the cache-hit case comes from the control plane signaling overhead. During the handover, the SDN controller must communicate with the Network/Cache Policy server to request and receive the appropriate caching policy, which is represented by the policy-query term in its equation. This process introduces a delay that is not present in the CDN-based approaches.

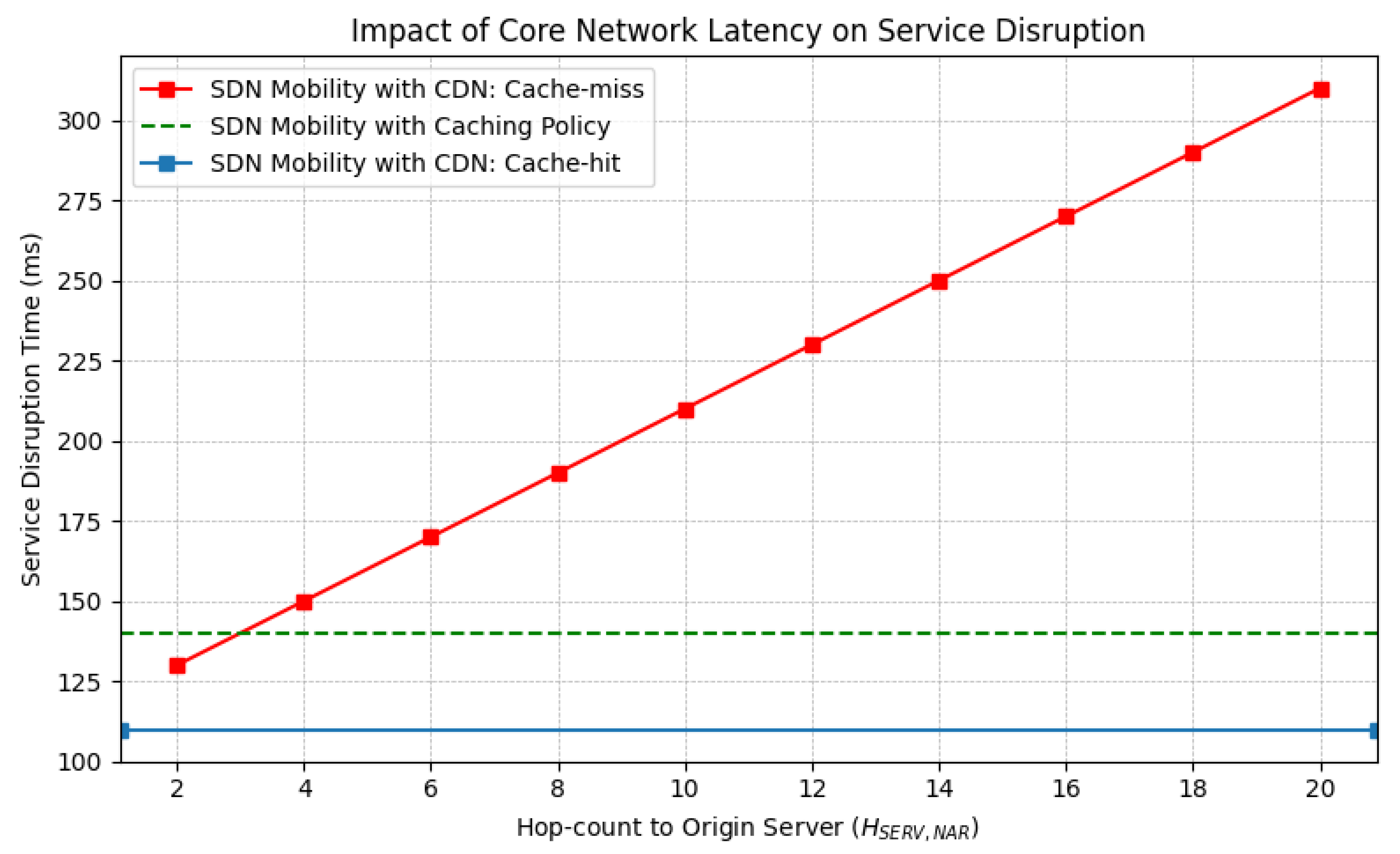

4.2. Analysis of Core Network Latency Impact

To evaluate the robustness of each strategy against variations in core network latency, a sensitivity analysis was conducted by varying the hop count to the origin server. The results illustrated in Figure 7 demonstrate the impact of content placement on service continuity. The service disruption time of the SDN Mobility with CDN (Cache-miss) strategy grows linearly as the distance to the origin server increases: because this strategy must fetch content from the origin server after a handover, its service disruption time increases in direct proportion to the round-trip network latency.

In contrast, the service disruption times of the SDN Mobility with CDN (Cache-hit) and SDN Mobility with Caching Policy strategies remain constant. They are unaffected by the origin server’s location because both strategies serve content from the network edge; therefore, they successfully isolate the user’s connection from latency in the core network. This analysis provides a clear quantitative validation that content availability at the edge is the most critical factor in mitigating service disruption during handover in vehicular networks.
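The linear dependence described above can be illustrated with a short Python sketch. It assumes that the cache-miss overhead is a round trip over wired hops, that is, twice the hop count to the origin server multiplied by the 5 ms per-hop wired delay; this form is an assumption consistent with the definition of T(a,b), not a reproduction of the paper's equation. The function name and the hop counts are hypothetical.

```python
LWD_MS = 5.0  # per-hop wired link delay (ms), as stated in Section 3.3


def cache_miss_overhead(hops_to_origin: int) -> float:
    """Assumed round trip to the origin server: 2 * hops * per-hop wired delay."""
    if hops_to_origin < 0:
        raise ValueError("hop count must be non-negative")
    return 2 * hops_to_origin * LWD_MS


if __name__ == "__main__":
    # Illustrative hop counts; edge-served strategies do not depend on them.
    for hops in (2, 5, 10, 20):
        print(hops, cache_miss_overhead(hops))
```

Under this assumption, doubling the hop count doubles the cache-miss overhead, while the cache-hit and Caching Policy overheads contain no origin-server term and therefore stay flat.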

4.3. Analysis of L2 Handover Delay Impact

This analysis investigates the influence of the Layer 2 (L2) connection establishment time on the total service disruption for each strategy. The evaluation was performed by systematically varying the L2 handover delay (L_attach) and recalculating the performance of each model.

As shown in Figure 8, the service disruption time for every strategy increases linearly with the L2 handover delay. The parallel slopes of the lines indicate that the initial connection time (L_attach) is a foundational component of the total delay that affects all approaches equally.

The constant gap between each strategy demonstrates that the post-handover data retrieval mechanism—whether from a local cache, a remote server, or after a policy query—is the primary performance differentiator. This finding underscores that while optimizing L2 connectivity is important, effective solutions to mitigate service disruptions come from efficient edge-based content delivery strategies.

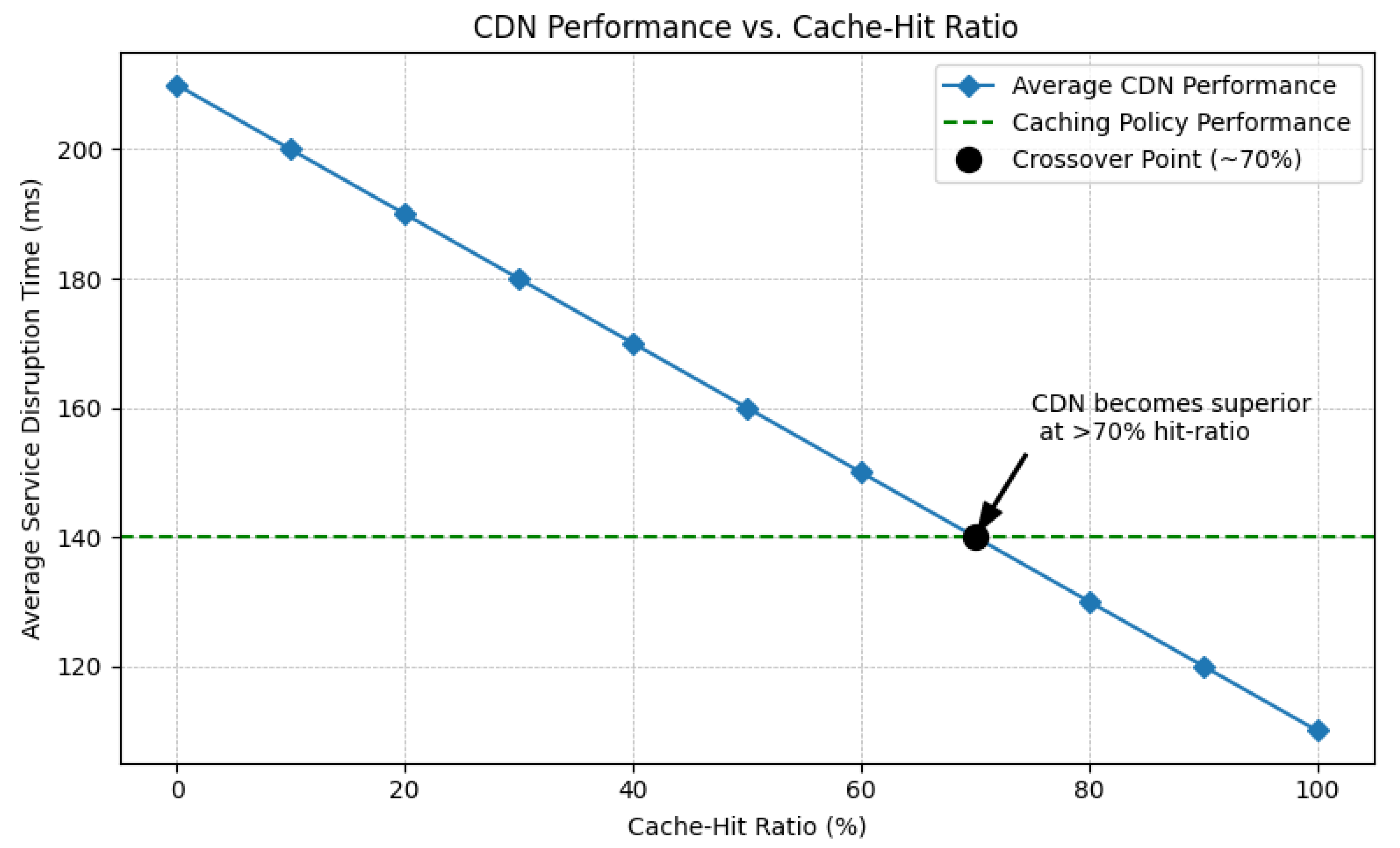

4.4. Analysis of CDN Performance vs. Cache-Hit Ratio

To provide a more realistic evaluation of the proactive CDN strategy, its performance was analyzed across a variable cache-hit ratio, moving beyond the idealized scenarios of 100% hits or 100% misses. This analysis determines the minimum cache-hit ratio required for the CDN approach to outperform the reactive Caching Policy. The average CDN service disruption time was calculated as a weighted combination of the cache-hit and cache-miss outcomes, with the cache-hit ratio and its complement as the weights.

As illustrated in Figure 9, the average performance of the CDN strategy improves linearly as the cache-hit ratio increases. The key insight from this analysis is the identification of the crossover point, which occurs at a cache-hit ratio of approximately 70% in our model. This result demonstrates that the superiority of a proactive CDN is entirely contingent on the effectiveness of its predictive caching algorithm. To yield a tangible performance benefit over the reactive Caching Policy, the CDN’s algorithm must achieve a cache-hit ratio greater than this 70% threshold. Note, however, that the crossover point can rise above 70% if the core-network latency or the L2 handover delay increases. This finding establishes a clear quantitative performance target for the design of intelligent caching mechanisms in vehicular networks.
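The crossover point can be checked with a short worked example. The sketch below uses the post-routing overheads reported in Section 4.5 (30 ms for the Caching Policy, 100 ms for a cache miss, and 0 ms for a cache hit as the baseline) and assumes that the delay components common to all strategies cancel out in the comparison. The function names are hypothetical.

```python
POLICY_OVERHEAD_MS = 30.0  # Caching Policy overhead reported in Section 4.5
MISS_OVERHEAD_MS = 100.0   # CDN cache-miss overhead reported in Section 4.5
HIT_OVERHEAD_MS = 0.0      # CDN cache-hit is the zero-overhead baseline


def cdn_average_overhead(hit_ratio: float) -> float:
    """Average CDN overhead: hit and miss outcomes weighted by the hit ratio."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    return hit_ratio * HIT_OVERHEAD_MS + (1.0 - hit_ratio) * MISS_OVERHEAD_MS


def crossover_hit_ratio(policy_ms: float, miss_ms: float, hit_ms: float = 0.0) -> float:
    """Hit ratio at which the average CDN overhead equals the policy overhead."""
    return (miss_ms - policy_ms) / (miss_ms - hit_ms)


if __name__ == "__main__":
    p_star = crossover_hit_ratio(POLICY_OVERHEAD_MS, MISS_OVERHEAD_MS)
    print(p_star, cdn_average_overhead(p_star))
```

With these values the crossover falls at a hit ratio of 0.7, in line with the approximately 70% threshold reported above; above it, the average CDN overhead drops below the 30 ms of the Caching Policy.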

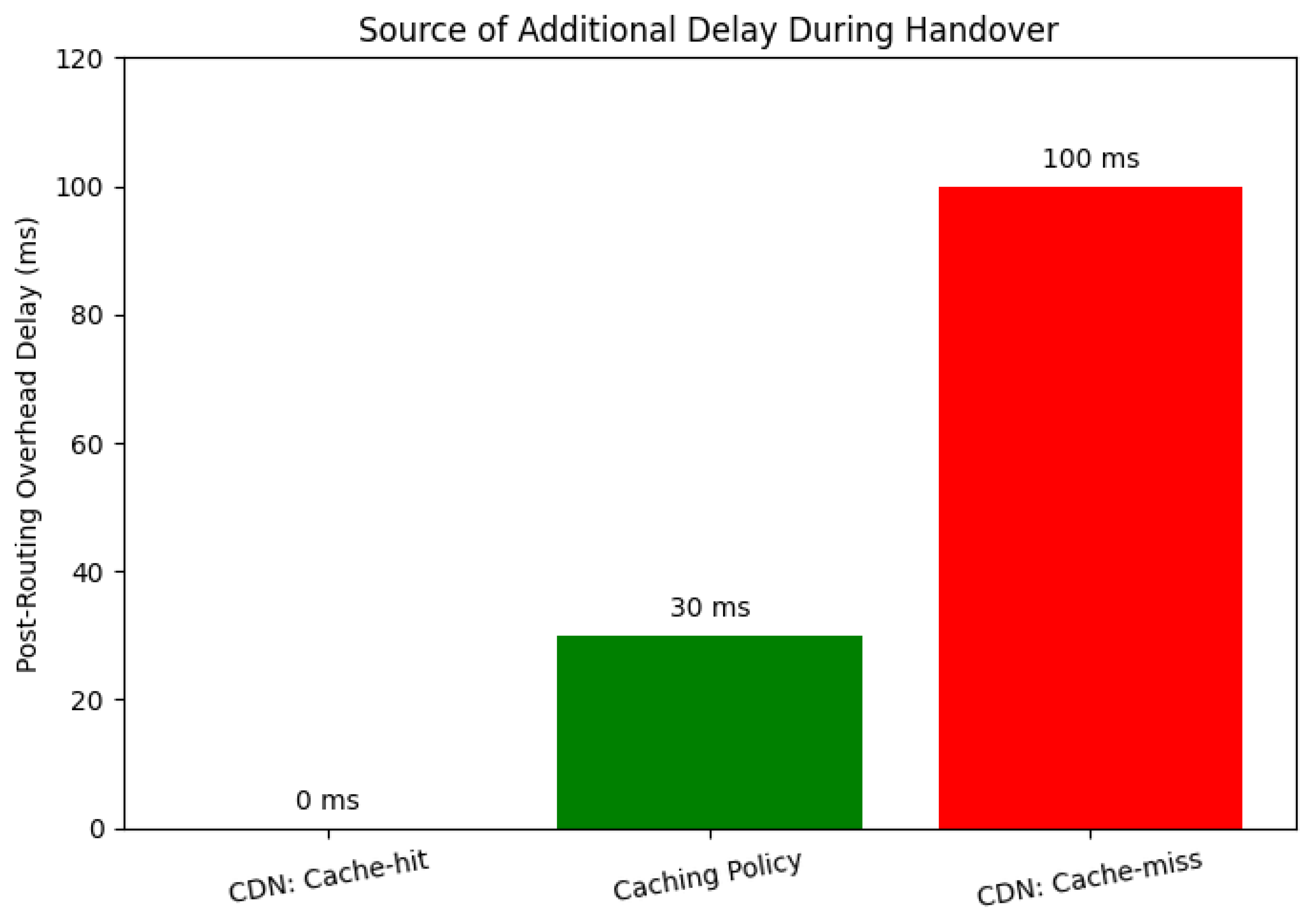

4.5. Analysis of Post-Routing Overhead Delay

To isolate the primary sources of latency, this subsection analyzes the additional delay that each strategy introduces after the common L2 handover and SDN-managed routing updates are complete. This “post-routing overhead” quantifies the time spent on either control plane signaling or data plane retrieval. The CDN cache-hit scenario, which represents the fastest possible data delivery from a local cache, serves as the zero-overhead baseline.

Figure 10 provides a clear comparison of these additional delays.

The Caching Policy introduces a 30 ms overhead. This delay is caused by control plane signaling, specifically the round-trip communication required for the SDN controller to query external policy servers.

The CDN Cache-miss scenario incurs a significantly larger overhead of 100 ms. This delay is caused by data plane latency representing the round-trip time needed to fetch the requested content from the origin server.

This analysis effectively distinguishes between the predictable overhead of control plane operations and the variable latency of data plane retrieval from the core network. The results confirm that fetching contents from a remote server is the most significant contributor to service disruption, reinforcing the critical need for effective edge caching strategies.

5. Discussion

The evaluation results presented in this paper emphasize a key principle for mobile networks: the immediate availability of content at a new connection point is the most crucial factor in ensuring service continuity during data transmission. The proactive SDN Mobility with CDN (Cache-hit) strategy provides the best performance because it eliminates the delay of fetching data from the origin server. In contrast, the cache-miss scenario significantly increases the delay, which makes it unsuitable for time-sensitive applications. Therefore, this research confirms that for services with stringent quality-of-service requirements, such as V2X, security applications, and persistent media streaming, proactive edge caching architectures are significantly superior.

The SDN Mobility with Caching Policy approach still has other practical advantages. Although hampered by signaling overhead, this reactive strategy offers significant benefits in terms of implementation and management as follows.

5.1. Ease of Implementation

This model is simple to deploy since it can be integrated with existing mature cache policy technologies and control frameworks. For network operators already managing centralized policy servers, adding caching rules is an incremental enhancement that is less complex than building and maintaining a sophisticated predictive content distribution infrastructure from scratch.

5.2. Scalability and Flexibility

The centralized Caching Policy provides superior operational scalability and flexibility. As the network grows, managing a central set of rules is administratively more scalable than ensuring consistency of content across thousands of distributed edge nodes. Furthermore, policies can be updated dynamically from a single point to enforce fine-grained and real-time control based on some factors such as user subscription tiers, network loads, and specific security requirements. These advantages offer a level of adaptability that a pre-positioned content model cannot easily match.

Ultimately, the choice between these architectures represents a fundamental engineering trade-off between peak performance and operational practicality. The proactive CDN model is optimized for speed, making it essential for services with stringent quality-of-service requirements. In contrast, the reactive Caching Policy model offers a more flexible and potentially cost-effective solution for services that can tolerate a minor increase in handover delay, which is an acceptable trade-off for its operational benefits. The optimal strategy, therefore, is not universal but depends heavily on the specific technical and business requirements under which the vehicular services are deployed.

6. Conclusions and Future Work

This article has presented analytical evaluations of three distinct strategies for minimizing service disruption time during handover in SDN-based connected car networks: SDN Mobility with a Caching Policy, SDN Mobility with a CDN in a cache-miss scenario, and SDN Mobility with a CDN in a cache-hit scenario. Our primary analysis confirms that the CDN cache-hit strategy provides the lowest service disruption, followed by the Caching Policy and then the cache-miss scenario.

Sensitivity analyses confirm that edge-based strategies are resilient to core network latency. The results also showed that while L2 handover time affects all strategies, the post-handover data retrieval method is the primary performance differentiator.

Importantly, this study went beyond idealized models to quantify the trade-offs of proactive caching. By analyzing performance against the cache-hit ratio, a distinct crossover point was identified that establishes the minimum prediction accuracy the CDN must achieve in order to outperform the reactive Caching Policy. This provides a key insight: the superiority of a proactive CDN is conditional on its algorithmic effectiveness. Our deconstruction of overheads further clarified the sources of delay, separating the moderate control plane latency of the Caching Policy from the substantial data plane latency of a cache miss.

The analysis confirms that content availability at the network edge is the critical factor for ensuring service continuity in vehicular networks. Therefore, this study provides a clear, analytically supported direction for future designs: integrating proactive edge caching architectures such as an intelligent CDN within an SDN-managed framework is an effective method to support the stringent connectivity requirements of connected cars. In future work, simulation experiments with realistic variations of hop count, link delay, vehicle speed, and link interference should be conducted.