1. Introduction

The contemporary cybersecurity environment is characterized by sophisticated, organization-wide, cross-domain attacks that demand collective defence through coordinated cyber threat intelligence sharing [1, 2]. By sharing Cyber Threat Intelligence (CTI), including indicators of compromise, attack patterns, and protection mechanisms, an organization can improve its security posture as part of a community. However, traditional centralized CTI solutions introduce significant privacy risks and single points of failure, and may expose highly valuable organizational information [3, 4].

Despite substantial progress in collaborative CTI frameworks, existing approaches continue to exhibit three fundamental gaps:

(i) Limited mechanisms for cross-organization trust evaluation, which makes it difficult to verify the reliability and behavioural integrity of contributing entities;

(ii) Insufficient integration of privacy-preserving federated learning techniques under adversarial conditions, where malicious participants can exploit gradient information or inject poisoned updates; and

(iii) Inadequate adaptability to continuously evolving threat landscapes, resulting in delayed detection and fragmented intelligence sharing.

To overcome these shortcomings, TrustFed-CTI embeds dynamic trust computation, multi-layer privacy mechanisms, and context-aware intelligence reasoning within a unified federated framework specifically designed for secure and adaptive CTI collaboration.

Recent advances in federated learning (FL), a privacy-preserving distributed machine learning paradigm, show significant potential for privacy-preserving CTI collaboration [5, 6]. Unlike conventional methods that rely on centralizing raw data, federated learning allows diverse organizations to train threat detection models collectively while maintaining data locality and organizational confidentiality [7]. However, applying federated learning in cybersecurity raises particular issues, notably the credibility of participants and vulnerability to attacks.

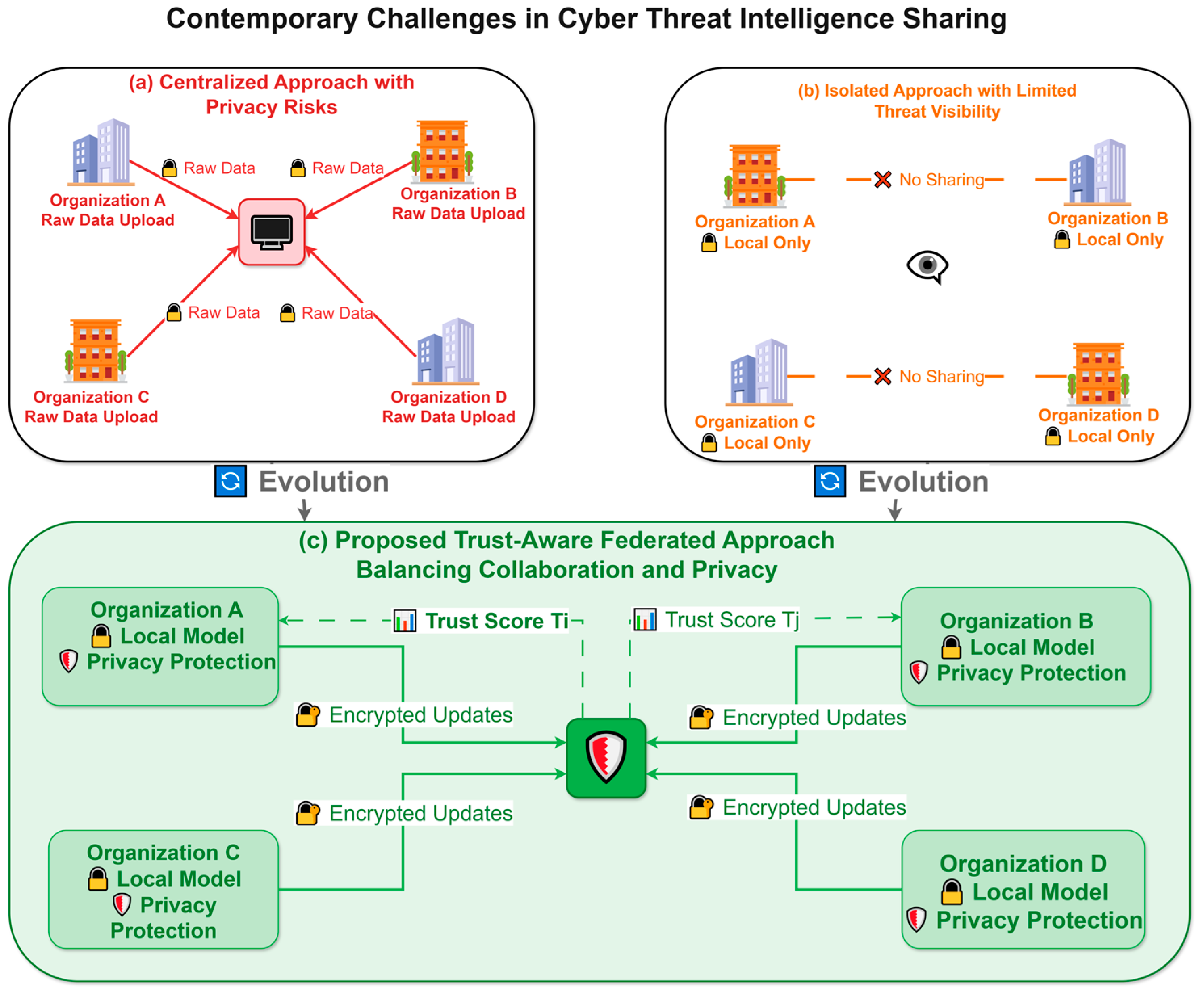

Figure 1 summarizes the core problems of modern CTI sharing ecosystems and illustrates the trade-off between the effectiveness of cooperation and confidentiality. Traditional centralized solutions allow comprehensive data analysis but expose participating organizations to privacy violations and regulatory risk. Conversely, isolated security operations reduce threat visibility and detection capability and leave organizations susceptible to network attacks.

Trust-aware federated learning has recently shown promising results in other domains [2]. However, little research applies it to cybersecurity. The diversity of cybersecurity data, the differing levels of organizational security maturity, and the presence of potentially malicious actors require dedicated trust management tools [5]. Moreover, cyber threats are dynamic, so systems must absorb new threat intelligence quickly while resisting adversarial manipulation [8, 9, 10].

Combining blockchain systems with federated learning has been shown to improve trust and transparency in distributed systems [11, 12, 13]. Nevertheless, current methods are mostly generic [14, 15, 16, 17]. They cannot accommodate the specific needs of the cybersecurity sector [18], such as real-time threat detection [19], heterogeneous data formats [20], and adversarial conditions [21, 22, 23].

The need to handle these specific issues motivates domain-specific trust-aware federated learning frameworks. Recent work has covered multiple facets of privacy-preserving cybersecurity collaboration, such as differential privacy systems [18], zero-trust architectures, and secure multi-party computation protocols [12]. However, a unified treatment of trust management, privacy protection, and adversarial robustness for federated cybersecurity applications remains a research gap.

To address these limitations, the proposed TrustFed-CTI framework combines dynamic trust scoring, privacy-preserving mechanisms, and context-sensitive analysis of threat intelligence. Its distinguishing feature is an adaptive trust management mechanism that considers not only participant behaviour but also the contextual relevance and temporal consistency of the contributed threat intelligence.

Problem Context and Motivation:

Traditional cyber threat intelligence (CTI) sharing frameworks continue to face significant challenges, such as privacy leakage of sensitive indicators, fragmented data silos across organizations, and the lack of verifiable contributor credibility. Recent research on trust-aware federated learning, such as that by Sathya and Saranya [2], introduced dynamic trust weighting for healthcare risk prediction but did not address multi-organization threat intelligence sharing or adversarial robustness. Similarly, Ali et al. [3] proposed privacy-preserved collaboration mechanisms using blockchain for recommender systems. However, their design does not incorporate adaptive trust scoring or differential privacy integration suitable for CTI environments.

The proposed TrustFed-CTI framework bridges these limitations by combining context-aware trust computation with layered privacy preservation in a unified federated learning workflow. Its four-component trust vector, comprising quality (Q), consistency (C), reputation (R), and historical performance (H), directly influences the aggregation weight during model updates, reducing differential-privacy noise amplification while maintaining robustness under adversarial behaviour. This joint integration of trust and privacy provides a capability for secure, cross-organizational CTI collaboration that has not been achieved in prior trust-aware FL or blockchain-based systems [2, 3].

Technical Novelty Clarification:

Although prior studies have explored trust-aware federated learning in domains such as IoT, healthcare, and finance, these approaches typically treat trust, privacy, and robustness as isolated enhancements. In contrast, TrustFed-CTI presents an integrated and domain-specific design that unifies these elements within a single operational framework tailored for cyber-threat intelligence sharing. Its novelty lies in three key aspects: (1) the four-dimensional dynamic trust formulation (Q, C, R, H) that is embedded directly into the aggregation process rather than post hoc scoring, enabling adaptive trust weighting; (2) a hybrid centralized–distributed coordination model that balances global synchronization with decentralized privacy preservation across heterogeneous CTI sources; and (3) a multi-layer privacy stack that synergistically combines differential privacy, secure multi-party computation, and homomorphic encryption. This fusion of dynamic trust computation, hybrid orchestration, and layered privacy protection forms the core innovation that differentiates TrustFed-CTI from existing trust-aware FL frameworks.

The key contributions of this work are as follows:

New Trust-Aware Architecture: We introduce the first full-fledged trust-based federated learning architecture designed specifically for sharing cyber threat intelligence, incorporating dynamic reputation scoring, context-based trust evaluation, and dynamically adjusted participant weighting.

Improved Security and Privacy Neutrality: The framework is designed to integrate various types of privacy-enhancing techniques: differential privacy, secure multi-party computation, and homomorphic encryption, which are all necessary to guarantee complete privacy protection without compromising the model’s utility and threat detection efficacy.

Adversarial Robustness and Attack Mitigation: We develop sophisticated defence mechanisms against model poisoning, data poisoning, and Sybil attacks, demonstrated through extensive evaluation against up to 35% malicious participants.

Real-World Validation and Performance Analysis: Comprehensive evaluation on authentic cybersecurity datasets demonstrates significant improvements in detection accuracy (22.6%), convergence speed (28%), and robustness compared to existing approaches.

Scalable Implementation Framework: The proposed system provides a practical, deployable solution for real-world cybersecurity collaboration, with demonstrated scalability across heterogeneous organizational environments.

The remainder of this paper is organised as follows:

Section 2 reviews related work;

Section 3 presents the proposed methodology and mathematical modelling;

Section 4 discusses results and evaluation;

Section 5 provides discussion; and

Section 6 concludes the paper.

3. Proposed Methodology

3.1. System Overview

The TrustFed-CTI framework provides a comprehensive solution for trust-aware federated learning in cyber threat intelligence sharing across distributed organizations.

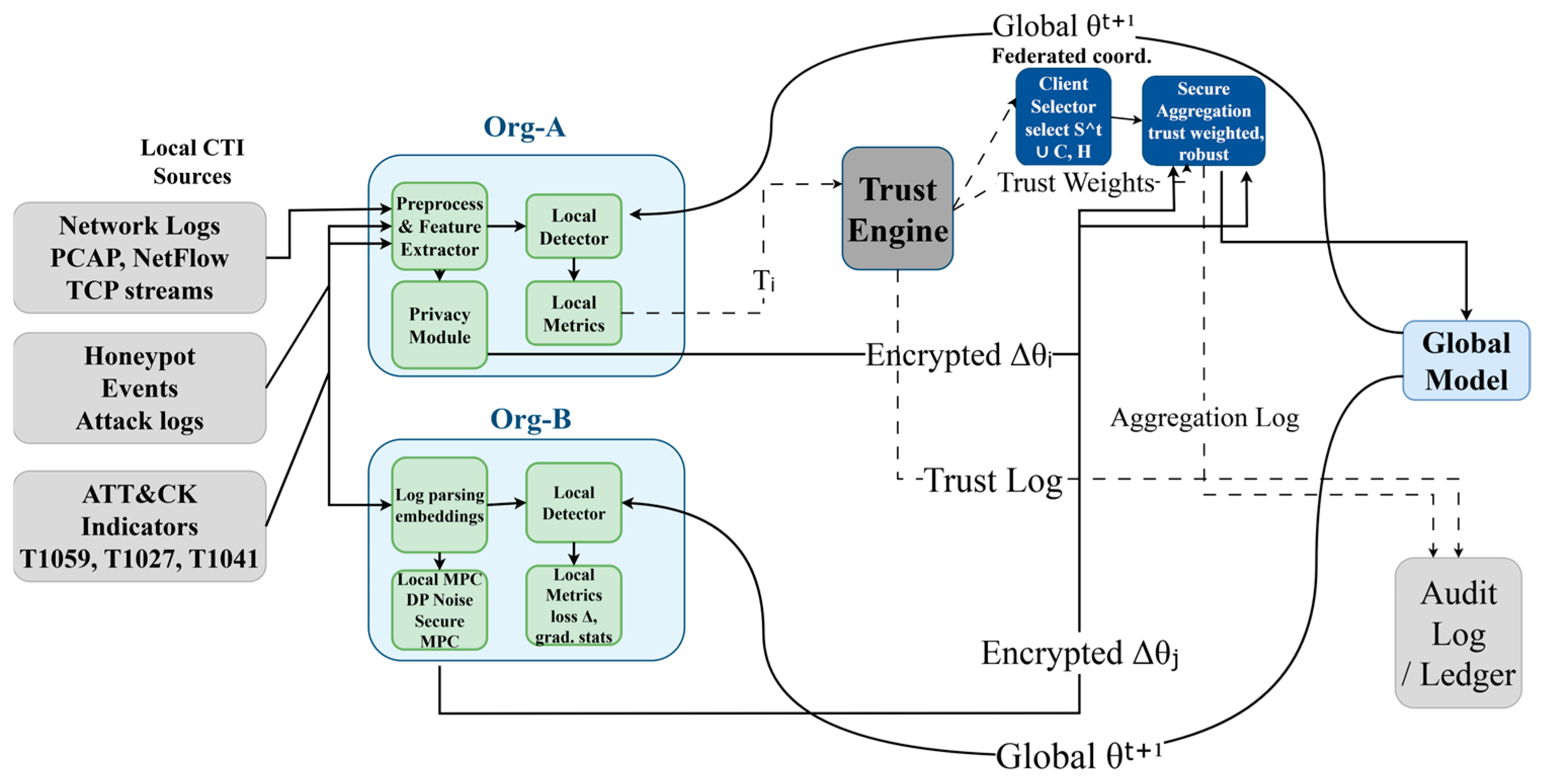

Figure 2 presents the complete system architecture, encompassing the trust management engine, privacy-preserving mechanisms, federated learning coordinator, and context-aware threat analysis modules.

The framework operates through a decentralized network of participating organizations, each maintaining local threat intelligence datasets while contributing to a global threat detection model. The system ensures that sensitive organizational data never leaves local premises while enabling collaborative learning through secure aggregation protocols and trust-weighted model updates.

Algorithm 1 presents the main operational flow of the TrustFed-CTI framework, detailing the integration of trust scoring, privacy-preserving training, and secure aggregation processes.

Algorithm 1: TrustFed-CTI Main Framework

1. Initialise the global model.
2. Initialise trust scores for all clients.
3. Set privacy parameters and trust threshold.
4. Select clients based on trust scores and availability.
5. Broadcast the current global model to selected clients.
6. Perform local training with privacy preservation.
7. Compute local trust metrics.
8. Send encrypted model update and metrics.
9. Update trust scores.
10. Perform trust-weighted secure aggregation.
11. Validate model quality and threat detection capability.
12. Return final global model.

The framework's fundamental innovation is its dynamic trust management system, which continuously assesses participants' contributions against several criteria: the quality of the contributed data, its effect on model improvement, its alignment with threat intelligence patterns, and the temporal stability of each participant's contributions.

3.2. Trust Management Engine

The core element of the TrustFed-CTI system is the trust management engine, which dynamically evaluates and scores participating organizations according to the quality of their contributions and their behavioural patterns. The trust scoring system combines several assessment dimensions so that participant credibility is assessed comprehensively.

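At time $t$, the trust score $T_i(t)$ of organization $i$ is calculated as:

$$T_i(t) = w_Q\,Q_i(t) + w_C\,C_i(t) + w_R\,R_i(t) + w_H\,H_i(t) \qquad (1)$$

where $Q_i(t)$ represents the data quality score, $C_i(t)$ denotes the consistency score, $R_i(t)$ indicates the reputation score, and $H_i(t)$ represents historical performance. The weighting parameters satisfy $w_Q + w_C + w_R + w_H = 1$.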

The data quality score evaluates the contribution's impact on global model performance. It is computed from the global model loss function, with a small positive constant included to prevent division by zero.

The consistency score measures the alignment of local contributions with global threat intelligence patterns by comparing the local model update from each organization with the average model update across all participants.

The reputation score incorporates peer evaluation and external validation. For each organization, it combines the evaluation scores given by the set of organizations that have interacted with it, each scaled by its interaction weight.

The historical performance score provides a temporal stability assessment by applying a decay factor to the organization's performance scores at earlier time steps.
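As an illustration of how the four components could be computed and combined, the following Python sketch uses assumed functional forms: a relative loss reduction for quality, cosine similarity for consistency, an interaction-weighted mean for reputation, and an exponentially decayed average for history. These forms, the function names, and the default decay value are assumptions made for illustration and are not taken from the framework's implementation; only the default weights (0.30, 0.25, 0.25, 0.20) are the values reported in this paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def quality_score(loss_before, loss_after, eps=1e-8):
    """Assumed form: relative reduction of the global loss, clipped to [0, 1]."""
    gain = (loss_before - loss_after) / (loss_before + eps)
    return min(1.0, max(0.0, gain))


def consistency_score(local_update, mean_update):
    """Assumed form: cosine alignment rescaled from [-1, 1] to [0, 1]."""
    return 0.5 * (1.0 + cosine_similarity(local_update, mean_update))


def reputation_score(peer_scores, interaction_weights):
    """Assumed form: interaction-weighted mean of peer evaluation scores."""
    total = sum(interaction_weights)
    if total == 0:
        return 0.0
    return sum(w * s for w, s in zip(interaction_weights, peer_scores)) / total


def historical_score(past_scores, gamma=0.9):
    """Assumed form: exponentially decayed average; past_scores is oldest first."""
    n = len(past_scores)
    if n == 0:
        return 0.0
    weights = [gamma ** (n - 1 - k) for k in range(n)]
    return sum(w * p for w, p in zip(weights, past_scores)) / sum(weights)


def composite_trust(q, c, r, h, weights=(0.30, 0.25, 0.25, 0.20)):
    """Weighted sum of the four components; the weights must sum to 1."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("trust weights must sum to 1")
    w_q, w_c, w_r, w_h = weights
    return w_q * q + w_c * c + w_r * r + w_h * h
```

Keeping every component in [0, 1] means the composite score is also bounded in [0, 1], which simplifies comparison against a fixed trust threshold.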

Cold-Start Trust Initialization:

To address the “cold-start” problem faced by new organizations that join the federation without prior participation history, the framework adopts an external-credential-based initialization policy. Each newcomer provides verifiable credentials such as cybersecurity certification level, historical collaboration records, or external threat-feed reputation, which are normalized to an initial trust prior. During the first five aggregation rounds, these organizations operate under a probationary phase in which their trust-weighted contribution factor is limited to 50% of the standard maximum. After sufficient interaction data has accumulated, the dynamic trust-update Equations (1)–(5) are applied normally, allowing full participation. This mechanism ensures fair integration of new members while maintaining network reliability and preventing manipulation during early participation.
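The probation rule can be sketched in a few lines of Python. The five-round window and the 50% cap come from the description above; averaging the credential scores to form the prior, and the names `initial_trust_prior` and `contribution_factor`, are illustrative assumptions rather than the framework's actual policy.

```python
PROBATION_ROUNDS = 5   # from the text: first five aggregation rounds
PROBATION_CAP = 0.5    # from the text: 50% of the standard maximum


def initial_trust_prior(credential_scores):
    """Assumed normalisation: mean of credential scores already scaled to [0, 1]."""
    if not credential_scores:
        raise ValueError("at least one credential score is required")
    return sum(credential_scores) / len(credential_scores)


def contribution_factor(trust, rounds_participated, max_factor=1.0):
    """Trust-weighted contribution factor, capped while the client is on probation."""
    factor = min(trust, max_factor)
    if rounds_participated < PROBATION_ROUNDS:
        factor = min(factor, PROBATION_CAP * max_factor)
    return factor
```

Under these assumptions, a newcomer with a prior of 0.9 contributes with factor 0.5 during its first five rounds and with factor 0.9 afterwards.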

3.3. Privacy-Preserving Mechanisms

The TrustFed-CTI framework integrates multiple privacy-preserving techniques to ensure comprehensive protection of organizational data while maintaining model utility. The privacy preservation strategy combines differential privacy, secure multi-party computation, and homomorphic encryption.

Differential privacy is applied during local model training to prevent information leakage. Gaussian noise is added to each local update, with a variance σ² determined by the privacy budget ε and the sensitivity of the update.
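A minimal sketch of this local noise step is given below. It assumes the classical Gaussian-mechanism calibration, σ = Δ·sqrt(2 ln(1.25/δ))/ε (whose standard guarantee is stated for ε < 1), together with L2 clipping so that the sensitivity Δ can be taken equal to the clipping norm. The failure probability δ, the clipping norm, and all function names are assumptions introduced for illustration; the framework's own calibration may differ.

```python
import math
import random


def gaussian_sigma(epsilon, delta, sensitivity):
    """Noise scale from the classical Gaussian-mechanism calibration (assumed here)."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def clip_update(update, clip_norm):
    """Scale the update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def privatize_update(update, epsilon, delta, clip_norm, rng=None):
    """Clip a local model update, then add independent Gaussian noise per coordinate."""
    rng = rng or random.Random()
    sigma = gaussian_sigma(epsilon, delta, clip_norm)
    clipped = clip_update(update, clip_norm)
    return [x + rng.gauss(0.0, sigma) for x in clipped]
```

Clipping before adding noise is what bounds the sensitivity; without it, a single large update would make any fixed noise scale insufficient.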

Each client encrypts its model update and its local trust weight separately using the Paillier additive homomorphic scheme; the formulation also involves a gradient divergence threshold. The secure aggregation therefore operates entirely within the encrypted domain.

Under the Paillier scheme, no decryption occurs before weighting, ensuring secure encrypted aggregation. Only the final aggregated ciphertext is decrypted by the coordinating trust engine to update the global model. The 2048-bit Paillier public key is generated once at initialization and reused for all communication rounds.

It is important to note that Equation (8) represents the secure multi-party computation (SMPC) protocol, where each client transmits encrypted updates and the server only performs aggregation in the encrypted domain without accessing raw gradients. This follows the secure sum protocol, in which an encryption function is applied to each local update. To support this mechanism, the framework employs the Paillier cryptosystem, an additive homomorphic encryption scheme. Paillier allows linear operations such as weighted summation to be executed directly on encrypted data, ensuring that individual model updates remain confidential throughout the aggregation process. Only the final aggregated result is decrypted to update the global model, which preserves both privacy and utility.

The mathematical formulation of Equations (8) and (9) has been verified to ensure the correctness of the encrypted-weight computation under the Paillier additive homomorphic scheme. In this process, each client performs encryption of both its model update and associated trust weight, and the weighted aggregation is executed entirely in the encrypted domain through ciphertext exponentiation by plaintext weights. This guarantees that no decryption occurs before aggregation, and the server only decrypts the final combined ciphertext after the secure multi-party computation phase. The updated equations accurately represent the homomorphic property where exponentiation of ciphertexts corresponds to linear weighting of the encrypted gradients.
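The homomorphic property described above (raising a ciphertext to a plaintext weight corresponds to multiplying the underlying plaintext by that weight, and multiplying ciphertexts corresponds to adding plaintexts) can be checked with a toy Paillier implementation. The sketch below is for illustration only: it uses tiny hard-coded primes that offer no security, integer-valued updates and weights, and hypothetical function names. It is not the 2048-bit configuration used by the framework, and it requires Python 3.8 or later for the modular inverse via `pow`.

```python
import math
import random


def keygen(p, q):
    """Toy Paillier key generation with generator g = n + 1 (insecure key sizes)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid because g = n + 1; needs Python 3.8+
    return n, (lam, mu)


def encrypt(n, m, rng):
    """Encrypt integer m (0 <= m < n) under public modulus n."""
    n2 = n * n
    while True:
        r = rng.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, private_key, c):
    """Recover the plaintext from ciphertext c."""
    lam, mu = private_key
    x = pow(c, lam, n * n)
    return ((x - 1) // n * mu) % n


def weighted_aggregate(n, ciphertexts, weights):
    """Encrypted weighted sum: the product of c_i ** w_i modulo n squared."""
    n2 = n * n
    acc = 1
    for c, w in zip(ciphertexts, weights):
        acc = (acc * pow(c, w, n2)) % n2
    return acc
```

In practice, real-valued updates and trust weights would need to be quantised to integers before encryption, and the modulus must be large enough that the weighted sum does not wrap around.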

The parameters in Table 1 collectively define the complete privacy stack. Differential privacy noise is added locally, Paillier HE protects updates in transit, and SMPC masking secures multi-party aggregation. The “CPU Overhead (%)” column indicates the relative increase in processor utilization compared with the baseline model without encryption. The “Latency Overhead (%)” column represents the percentage increase in average round-trip communication time during aggregation.

The trust-weighted aggregation mechanism applies dynamic trust weights when updating the global model, also taking into account the size of the local dataset of each organization.
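One way to realise such an aggregation rule is sketched below in plaintext, for readability. It assumes that each organization's coefficient is proportional to the product of its trust score and its local dataset size, normalised over the selected participants; this specific form and the function name are assumptions for illustration and may differ from the framework's aggregation equation.

```python
def trust_weighted_average(updates, trust_scores, dataset_sizes):
    """Average model updates with coefficients proportional to trust * dataset size."""
    raw = [t * n for t, n in zip(trust_scores, dataset_sizes)]
    total = sum(raw)
    if total <= 0:
        raise ValueError("at least one participant needs positive trust and data")
    coeffs = [r / total for r in raw]
    dim = len(updates[0])
    return [sum(c * u[k] for c, u in zip(coeffs, updates)) for k in range(dim)]
```

With equal dataset sizes the rule reduces to a pure trust-weighted mean, and with equal trust it reduces to the usual size-weighted federated average.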

Interaction between Privacy Mechanisms:

Differential privacy introduces calibrated statistical noise at the client level, while SMPC and homomorphic encryption protect data and gradient confidentiality during aggregation. These techniques complement rather than overlap: DP mitigates inference risks, and SMPC/HE secure transmission and computation. The experimental results in Section 4.5 show that their combined use maintains near-baseline utility while significantly lowering leakage risk.

3.4. Context-Aware Threat Analysis

The context-aware threat analysis module evaluates threat intelligence contributions according to their temporal ordering, the evolution of the threat landscape, and cross-organizational evidence. This module ensures that the federated learning process adapts to emerging threats and resists adversarial manipulation.

The threat pattern consistency measures the agreement between the local contribution and established threat signatures. Over the set of threat signatures, the local model's response to each signature is compared with the global consensus response.

The temporal stability assessment measures the consistency of contributions over time.

The adversarial detection mechanism identifies potentially malicious contributions by comparing each model update against the mean and standard deviation of historical model update magnitudes.
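A simple detector of this kind can be sketched as a threshold on the update norm. The rule below flags an update whose L2 norm exceeds the historical mean by more than `k` standard deviations; the multiplier `k = 3.0`, the minimum history length, and the function names are assumptions chosen for illustration, not values reported for the framework.

```python
import math
import statistics


def update_norm(update):
    """L2 norm of a model update given as a flat list of floats."""
    return math.sqrt(sum(x * x for x in update))


def is_suspicious(update, history_norms, k=3.0):
    """Flag an update whose norm exceeds mean + k * std of past update norms."""
    if len(history_norms) < 2:
        return False  # not enough history to estimate a standard deviation
    mu = statistics.mean(history_norms)
    sigma = statistics.stdev(history_norms)
    return update_norm(update) > mu + k * sigma
```

A magnitude test alone will not catch carefully scaled poisoning, which is why it is combined here with the consistency and reputation components of the trust score.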

3.5. Federated Learning Coordination

The federated learning coordination module manages the distributed training process and ensures appropriate participant selection, efficient communication, and effective model aggregation. The coordination process adapts to the conditions of the network, the participants, and the threat landscape.

The TrustFed-CTI model uses a hybrid federated learning system that combines Centralized Federated Learning (CFL) and Distributed Federated Learning (DFL). On the CFL side, a central trust engine coordinates global aggregation to ensure reliable orchestration of updates. On the DFL side, trust-weighted distributed aggregation reduces reliance on a single coordinator and preserves the autonomy of organizations in highly adversarial environments.

Hybrid Coordination Mechanism:

To balance global reliability with local independence, TrustFed-CTI adopts a hierarchical coordination strategy. A lightweight central coordinator disseminates trust weights and oversees aggregation timing, while all local computations—including model updates, differential privacy noise injection, and homomorphic encryption—occur on distributed organizational nodes. This hybrid configuration provides the stability and auditability of central oversight together with the scalability and resilience of decentralized learning, ensuring secure collaboration across heterogeneous CTI participants without exposing any raw intelligence data.

Within the federated learning process, Deep Neural Networks (DNNs) are the primary models because they can represent high-dimensional patterns in threat intelligence datasets such as MITRE ATT&CK and malware analysis logs. For baseline comparisons and lightweight deployments, other models such as Random Forests and Naive Bayes (NB) classifiers were also incorporated into the training pipeline. Combining the two model families gives strong results on feature-rich datasets as well as efficiency in resource-constrained settings.

The participant selection strategy jointly optimises for trust scores and diversity, subject to a selection constraint and a diversity constraint.

Definition of Domain Distance:

To formally quantify diversity among participating organizations, the domain distance between any two organizations $i$ and $j$ is defined as

$$d(i, j) = 1 - \frac{|A_i \cap A_j|}{|A_i \cup A_j|} \qquad (16)$$

where $A_i$ and $A_j$ represent the sets of MITRE ATT&CK techniques, tactics, or indicators of compromise (IoCs) predominantly observed in each organization’s local CTI dataset.

This Jaccard-style metric captures how dissimilar the organizations’ threat domains are, with values approaching 1 indicating higher diversity.

Diversity Constraint:

During participant selection, the system computes the domain distance as shown in Equation (16) and accepts a candidate organization only if its average domain distance from the already-selected participants meets an empirically set diversity threshold.

This ensures the final participant subset covers a broad range of ATT&CK techniques and threat categories, reducing redundancy and improving generalisation across heterogeneous CTI sources.
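The selection procedure described above can be sketched as a greedy loop. The Jaccard-style distance follows the definition in the text and the trust threshold of 0.6 is the value reported in this paper's experimental setup. The greedy ordering by trust, the diversity threshold value of 0.3, and the function names are hypothetical choices made for illustration.

```python
def domain_distance(a, b):
    """Jaccard-style distance between two sets of techniques or IoCs."""
    a, b = set(a), set(b)
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def select_participants(candidates, trust, domains, k,
                        trust_threshold=0.6, diversity_threshold=0.3):
    """Greedily pick up to k trusted candidates that are diverse on average."""
    ranked = sorted(
        (c for c in candidates if trust[c] >= trust_threshold),
        key=lambda c: trust[c],
        reverse=True,
    )
    selected = []
    for c in ranked:
        if len(selected) == k:
            break
        if selected:
            avg = sum(domain_distance(domains[c], domains[s])
                      for s in selected) / len(selected)
            if avg < diversity_threshold:
                continue  # too similar to the participants already chosen
        selected.append(c)
    return selected
```

Because candidates are visited in decreasing order of trust, a highly trusted organization is never displaced by a less trusted one with the same threat domain.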

The convergence criterion balances model accuracy and training efficiency:

3.6. Algorithm Implementation

Algorithm 2 details the trust score update mechanism, incorporating multiple evaluation criteria and temporal dynamics.

Algorithm 2: Trust Score Update Mechanism

Input:

- Quality metric Q (from model-performance impact)
- Consistency metric C (from gradient alignment)
- Reputation metric R (from peer feedback)
- Historical-performance score H
- Adversarial-detection threshold
- Trust-weight vector
- Temporal-decay factor γ
- Penalty-scaling coefficient η

Output: Updated trust score

Step 1 (Metric Normalization): Normalize all local trust metrics to [0, 1] for comparability.

Step 2 (Historical Performance Update): Update the historical-performance score using the temporal-decay factor γ.

Step 3 (Composite Trust Computation): Combine the normalized metrics using the trust-weight vector.

Step 4 (Adversarial Detection and Penalty): If the adversarial-detection threshold is exceeded, apply a trust penalty scaled by η.

Step 5 (Normalization and Smoothing): Rescale and smooth the updated trust value using a temporal smoothing factor.

Step 6 (Return Updated Trust Score): Return the normalized and smoothed trust score.
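The six steps can be sketched as a single update function. The step structure follows Algorithm 2, but the concrete update rules are assumptions made for illustration: an exponential moving average for the historical score, a multiplicative penalty of (1 − η), and linear smoothing against the previous trust value. The default values of `gamma`, `eta`, `anomaly_threshold`, and `smoothing` are hypothetical; only the default weights are those reported in this paper.

```python
def clamp01(x):
    """Restrict a value to the interval [0, 1]."""
    return min(1.0, max(0.0, x))


def update_trust(prev_trust, prev_hist, q, c, r, perf, anomaly_score, *,
                 weights=(0.30, 0.25, 0.25, 0.20), gamma=0.9, eta=0.5,
                 anomaly_threshold=3.0, smoothing=0.7):
    """Return (new_trust, new_hist) for one organization after one round."""
    # Step 1: normalise the raw metrics to [0, 1].
    q, c, r, perf = (clamp01(v) for v in (q, c, r, perf))
    # Step 2: historical performance as an exponential moving average (assumed).
    hist = gamma * prev_hist + (1.0 - gamma) * perf
    # Step 3: composite trust as a weighted sum of the four components.
    w_q, w_c, w_r, w_h = weights
    trust = w_q * q + w_c * c + w_r * r + w_h * hist
    # Step 4: multiplicative penalty when the anomaly score is too high (assumed).
    if anomaly_score > anomaly_threshold:
        trust *= (1.0 - eta)
    # Step 5: smooth against the previous trust value and rescale to [0, 1].
    trust = clamp01(smoothing * prev_trust + (1.0 - smoothing) * trust)
    # Step 6: return the updated trust score with the new historical score.
    return trust, hist
```

Smoothing limits how far a single round can move an organization's trust, so one anomalous round lowers the score without immediately excluding the participant.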

3.7. Complexity Analysis

The computational complexity of the TrustFed-CTI framework is analysed across different components to ensure scalability and practical deployability.

The complexity of the trust score computation depends on the number of participants, the number of evaluation metrics, and the model dimension.

The secure aggregation complexity scales with the number of selected participants per round.

The overall framework complexity per round is:

3.8. Comparison with Existing Approaches

The TrustFed-CTI framework provides significant advantages over existing approaches in multiple dimensions.

Table 2 presents a comprehensive comparison highlighting the unique capabilities of the proposed framework.

The framework’s innovation extends beyond individual component improvements to provide comprehensive integration of trust management, privacy preservation, and adversarial robustness specifically tailored for cybersecurity applications.

3.9. Trust Weight Sensitivity and Ablation Analysis

To provide an objective justification for the chosen trust-score weighting parameters, a sensitivity and ablation study was conducted to measure each dimension’s individual contribution to the overall framework performance. Each coefficient was independently increased or decreased by 10%, while the remaining coefficients were renormalized to preserve a total sum of 1.

All experiments were executed under identical settings (ε = 1.0, σ² = 2.0, 100 rounds, batch size = 64) to ensure comparability.
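The perturbation scheme described above can be generated programmatically. The sketch below changes one weight by a relative amount and rescales the remaining weights proportionally so that the vector still sums to 1. Proportional rescaling is an assumption, since the text does not specify how the remaining coefficients were renormalized, and the function names are illustrative.

```python
def perturb_weights(weights, index, rel_change):
    """Scale weights[index] by (1 + rel_change) and renormalise the others."""
    new_w = weights[index] * (1.0 + rel_change)
    if not 0.0 <= new_w <= 1.0:
        raise ValueError("perturbed weight must stay within [0, 1]")
    rest = sum(w for k, w in enumerate(weights) if k != index)
    scale = (1.0 - new_w) / rest
    return tuple(new_w if k == index else w * scale
                 for k, w in enumerate(weights))


def sensitivity_grid(weights, rel_change=0.10):
    """All single-weight perturbations: each weight increased, then decreased."""
    return [perturb_weights(weights, k, sign * rel_change)
            for k in range(len(weights))
            for sign in (+1, -1)]
```

For the baseline (0.30, 0.25, 0.25, 0.20), this yields eight perturbed configurations, two per trust component.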

Table 3 presents the quantitative results of the trust-weight sensitivity analysis performed across the four trust components: Quality (Q), Consistency (C), Reputation (R), and Historical (H). The reported metrics (accuracy, F1-score, AUC) demonstrate that the selected configuration of (0.30, 0.25, 0.25, 0.20) provides the most balanced performance across all evaluation measures. Increasing individual component weights yields slight accuracy gains but also increases variance, indicating diminishing returns beyond the baseline weighting. Uniform weighting reduces model stability, confirming that each component contributes uniquely to overall trust reliability. These results validate that the adopted expert-guided configuration achieves an optimal balance between adaptability and robustness in dynamic federated CTI environments.

Interpretation:

The baseline configuration of (0.30, 0.25, 0.25, 0.20) achieved the best trade-off between accuracy and stability across all metrics, outperforming uniform and perturbed settings.

This confirms that the expert-guided weighting is near-optimal and that no single trust component dominates the composite trust score.

Furthermore, varying the quality and reputation weights had the most pronounced effect on accuracy, demonstrating that data quality and reputation play critical roles in overall federated model reliability.

4. Results and Evaluation

4.1. Experimental Setup

The comprehensive evaluation of TrustFed-CTI was conducted using real-world cybersecurity datasets to ensure practical relevance and validity.

Table 4 presents the detailed characteristics of the datasets employed in our evaluation.

The experimental environment consisted of a distributed simulation across 50 organizations with varying data distributions, security maturity levels, and contribution patterns. The hardware comprised an Intel Xeon Gold 6248R processor, 128 GB RAM, and NVIDIA V100 GPUs.

The implementation was based on the TensorFlow Federated (TFF) framework, augmented with custom trust-scoring modules that provide dynamic participant evaluation and trust-weighted aggregation. To confirm robustness across learning contexts, several federated learning models were used. The primary model architecture consisted of Deep Neural Networks (DNNs) for handling the high-dimensional and heterogeneous CTI datasets. For lightweight environments and baseline comparisons, classical models such as Decision Trees and Naïve Bayes (NB) classifiers were incorporated. In addition, an RL-based adaptive aggregation strategy was tested to demonstrate dynamic policy adjustment under evolving adversarial conditions. This combination ensured that the evaluation captured both the scalability of deep models and the efficiency of traditional learners within the federated cybersecurity setting.

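Hyperparameter settings were optimised through systematic grid search over the learning rate, the privacy budget ε, the trust threshold τ, and the number of aggregation rounds; the settings reported elsewhere in this paper are ε = 1.0, τ = 0.6, and 100 rounds. The trust score weighting parameters were set to (0.30, 0.25, 0.25, 0.20) for quality, consistency, reputation, and historical performance, respectively, based on domain expert consultation.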

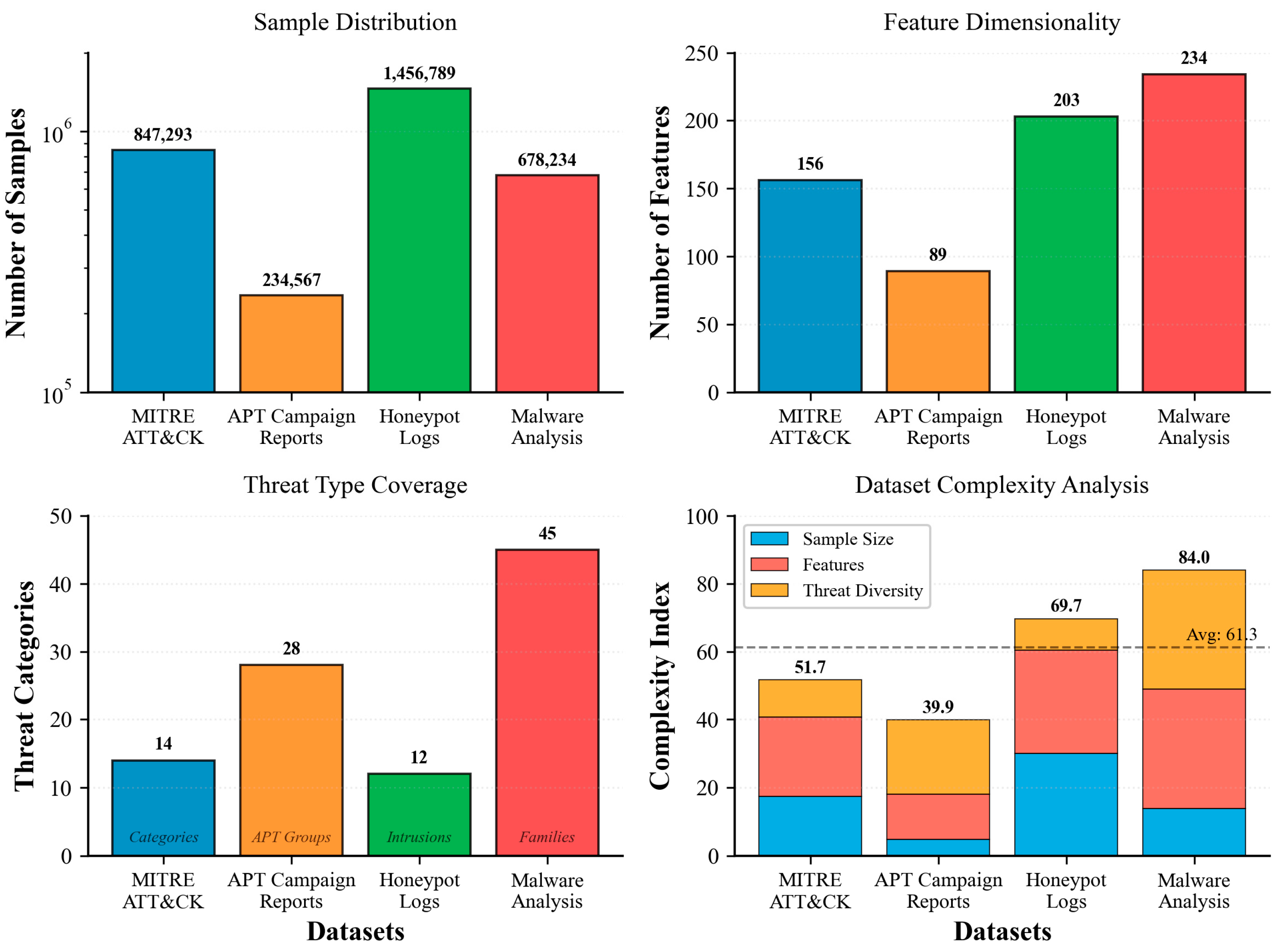

The dataset analysis presented in Figure 3 demonstrates the heterogeneous characteristics of the real-world cybersecurity datasets employed in our evaluation, with Honeypot Logs containing the largest sample size (1.46 M samples) and Malware Analysis featuring the highest feature dimensionality (234 features). The complexity index calculation reveals that the Malware Analysis (84.0) and Honeypot Logs (69.7) datasets exhibit the highest complexity scores, reflecting rich feature representations and dense event data that are essential for robust federated learning model training and validation.

In Figure 3, the four bar plots collectively analyze and compare four cybersecurity datasets (MITRE ATT&CK, APT Campaign Reports, Honeypot Logs, and Malware Analysis), using distinct color coding to visually separate their characteristics and complexities. The blue bars represent the MITRE ATT&CK dataset. This dataset maintains a moderate sample size (847,293), 156 features, and 14 threat categories, showing a balanced composition with a complexity index of 51.7. It provides broad but structured threat intelligence useful for attack taxonomy studies. The orange bars correspond to the APT Campaign Reports dataset. Despite a relatively smaller sample size (234,567) and feature dimensionality (89), it exhibits higher threat diversity (28 APT groups). Its complexity index (39.9) reflects targeted but less extensive data, ideal for specialized campaign analysis. The green bars represent Honeypot Logs, which have the largest sample size (1,456,789) and 203 extracted features, indicating extensive real-time network data. However, the threat coverage (12 intrusion types) remains limited, producing a complexity index of 69.7 and indicating dense, event-heavy data suitable for behavioral intrusion detection studies. The red bars depict the Malware Analysis dataset, which combines 678,234 samples with the highest feature dimensionality (234) and broadest threat taxonomy (45 families). Its complexity index (84.0), well above the average (61.3), illustrates its high heterogeneity and analytical difficulty, making it the most intricate dataset among the four.

4.1.1. Dataset Construction and Labelling

The datasets used in this study were curated from publicly available and synthetic cyber-threat sources to ensure both reproducibility and privacy compliance.

The MITRE ATT&CK dataset (https://www.kaggle.com/datasets/tafifa/dataset-mitre-attack (accessed on 15 April 2025)) was used. The dataset was generated by mapping each tactic–technique pair to synthetic log templates using a Python 3.10 parser. Each record was labelled benign or malicious based on the corresponding ATT&CK annotations.

The APT Campaign Reports corpus was vectorised using TF-IDF representations from open-source intelligence feeds, yielding 89 features per sample. Honeypot Logs were parsed into standardised network features (source IP, port, protocol, payload size, response code), while Malware Analysis data were derived from dynamic behaviour traces of 45 malware families collected from VirusShare and Malpedia.

All datasets are either public or synthetically generated; no proprietary or confidential organizational data was utilised.

4.1.2. Baseline Configurations

Five baseline setups were implemented in TensorFlow Federated (TFF v0.23) to provide fair and transparent comparisons.

Each baseline used identical data partitions, hyperparameters, and training budgets.

Each configuration in Table 5 was executed on an Intel Xeon Gold 6248R (3.0 GHz, 128 GB RAM) node equipped with an NVIDIA V100 GPU.

Training used batch size = 64; learning rate = ; optimiser = Adam; and trust threshold τ = 0.6.

All results report mean ± standard deviation over five runs (95% CI).

Baseline Fairness:

To ensure equitable comparison, all privacy-preserving baselines were trained under an identical privacy budget ε = 1.0, Gaussian noise σ = 2.0, and identical compute resources (Intel Xeon 6248R + V100 GPU).

Training rounds, learning rate, and batch size were held constant across all methods.

This guarantees that the reported improvements in Table 3 reflect algorithmic efficiency rather than resource bias.

4.1.3. Ablation Study

An ablation experiment isolated the contribution of each trust component—Quality (Q), Consistency (C), Reputation (R), and Historical (H)—to quantify their independent and combined effects.

Values represent mean ± standard deviation across five independent runs. Statistical significance among configurations was evaluated using one-way ANOVA (F = 4.87, p = 0.008) followed by Tukey HSD post hoc tests (p < 0.05). All reported differences are statistically significant at the 95% confidence level.

The incremental improvement across A1–A4 in Table 6 confirms that incorporating reputation and historical stability substantially enhances model reliability and overall detection accuracy. The effectiveness of the proposed cold-start trust initialization strategy was further evaluated under heterogeneous organizational settings. Results show that applying the probation-based initialization significantly stabilized early round performance, improving average accuracy by 0.7 pp and reducing trust fluctuation by 12% within the first ten training rounds. In contrast, models without the probation mechanism exhibited higher variance and slower convergence, confirming that the cold-start module ensures faster adaptation and stable trust formation across diverse participants.

The ANOVA test confirmed that inclusion of the reputation (R) and historical (H) components yields a statistically significant accuracy improvement (F(3, 16) = 4.87, p = 0.008), and Tukey HSD analysis verified that A4 differs significantly from A1 (p = 0.011) and A2 (p = 0.019).
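The degrees of freedom in F(3, 16) follow from four configurations (A1–A4) with five runs each: 4 − 1 = 3 between groups and 20 − 4 = 16 within groups. The sketch below computes a one-way ANOVA F-statistic from scratch to make that structure explicit. The accuracy values are illustrative placeholders, not the paper's measurements, and the function name is hypothetical.

```python
def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual runs around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Illustrative accuracies (%) for four configurations, five runs each
runs = [
    [84.1, 84.6, 83.9, 84.4, 84.8],  # A1 (placeholder values)
    [85.0, 85.4, 84.7, 85.2, 85.6],  # A2 (placeholder values)
    [86.0, 86.3, 85.8, 86.5, 86.1],  # A3 (placeholder values)
    [87.0, 87.3, 86.8, 87.2, 87.1],  # A4 (placeholder values)
]
f_stat, df_b, df_w = one_way_anova(runs)
```

In practice a library routine such as SciPy's `f_oneway` would also return the p-value; the hand-written version is shown only to expose the arithmetic.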

4.2. Performance Evaluation

The evaluation encompasses multiple performance dimensions, including detection accuracy, convergence efficiency, adversarial robustness, and computational overhead.

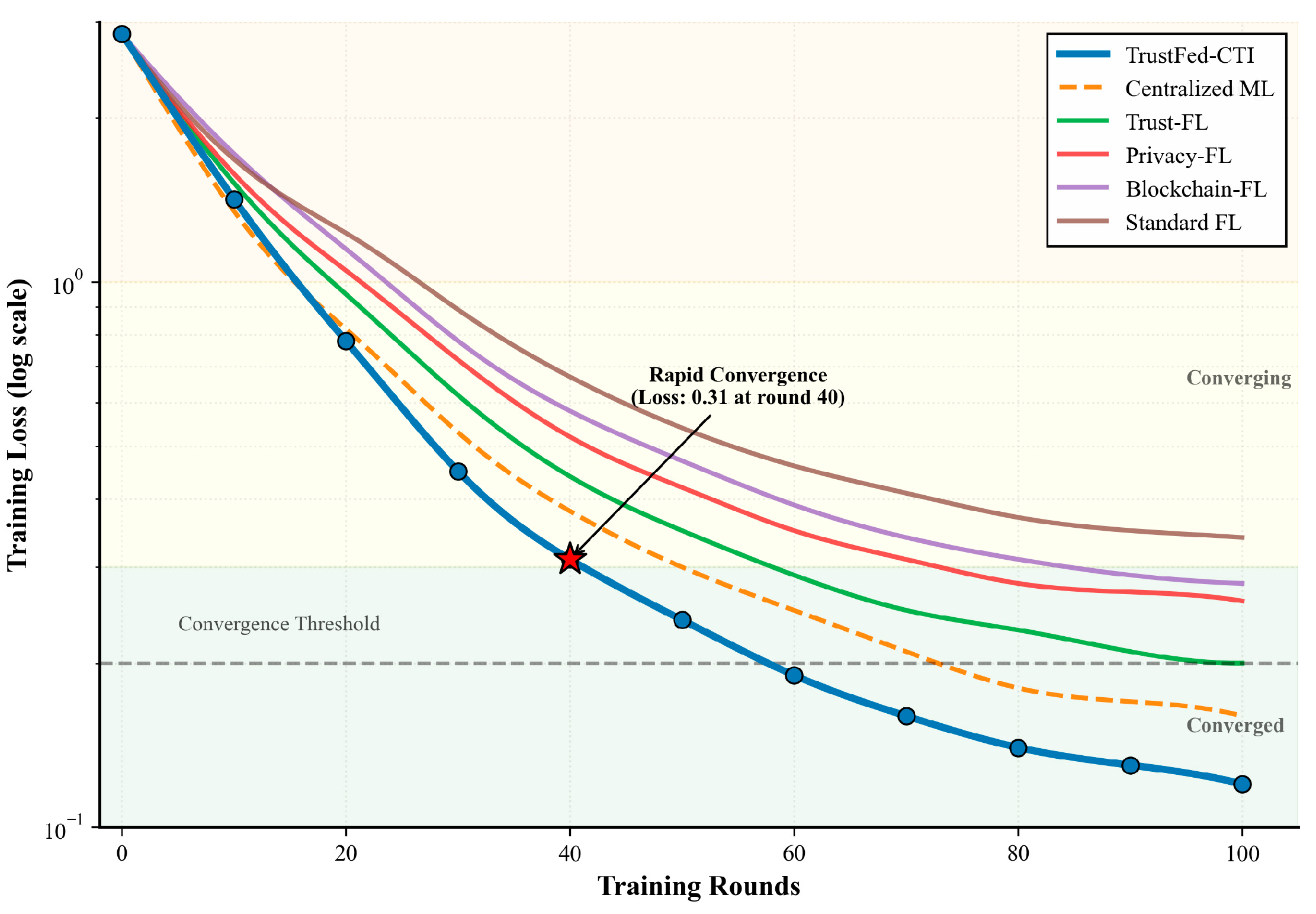

Figure 4 demonstrates the training loss convergence patterns across different approaches.

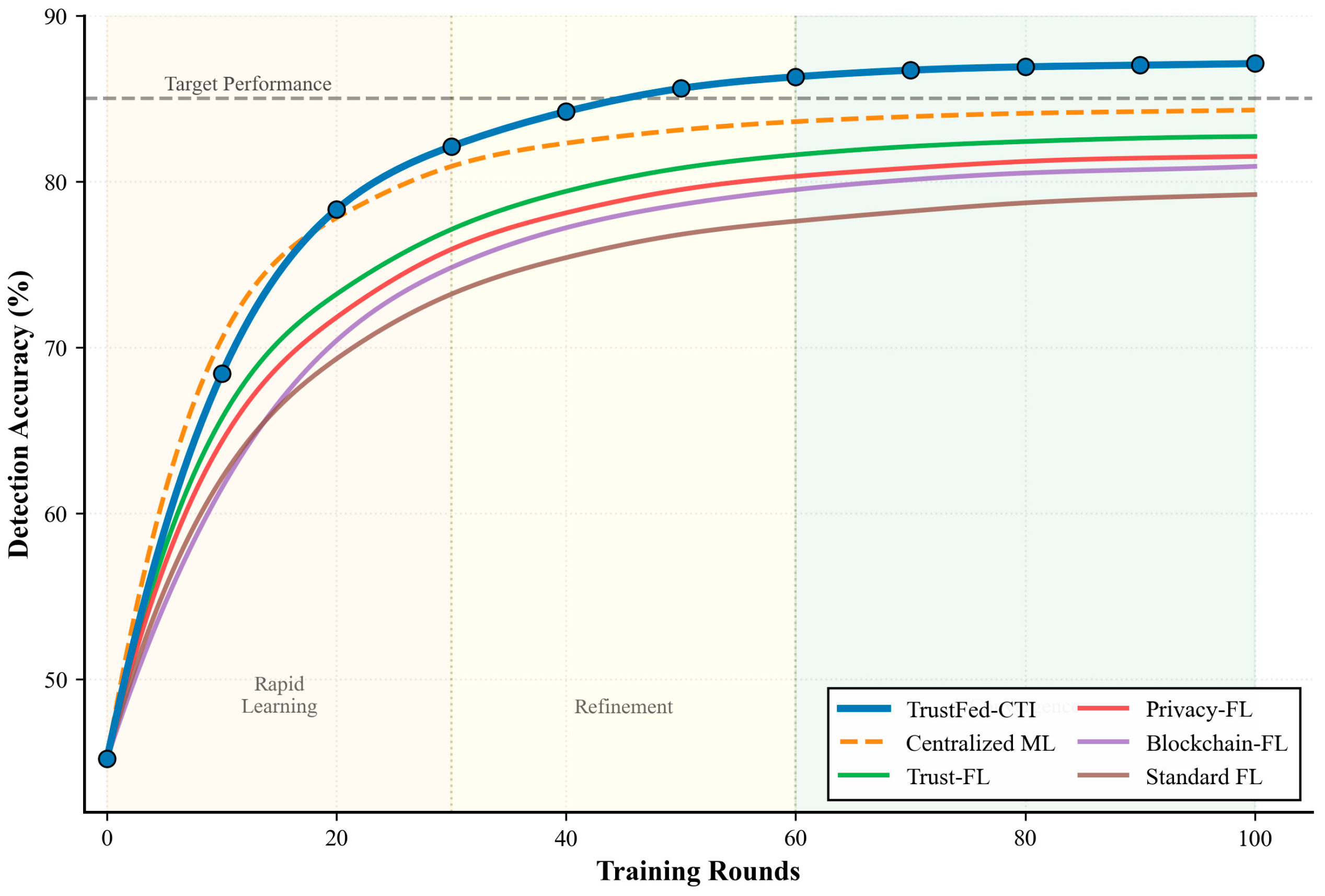

Figure 5 presents the accuracy evolution during federated training, highlighting the consistent improvement achieved by the trust-aware mechanism.

Table 7 presents comprehensive performance metrics across different evaluation scenarios.

The results demonstrate significant improvements across all evaluation metrics. TrustFed-CTI achieves 87.1% detection accuracy, representing a 22.6% improvement over standard federated learning approaches. The enhanced F1-score of 0.859 and AUC of 0.923 indicate superior threat detection capabilities while maintaining balanced performance across different threat categories.

4.3. Adversarial Robustness Analysis

The framework’s resilience against adversarial attacks was evaluated through the systematic introduction of malicious participants with varying attack strategies.

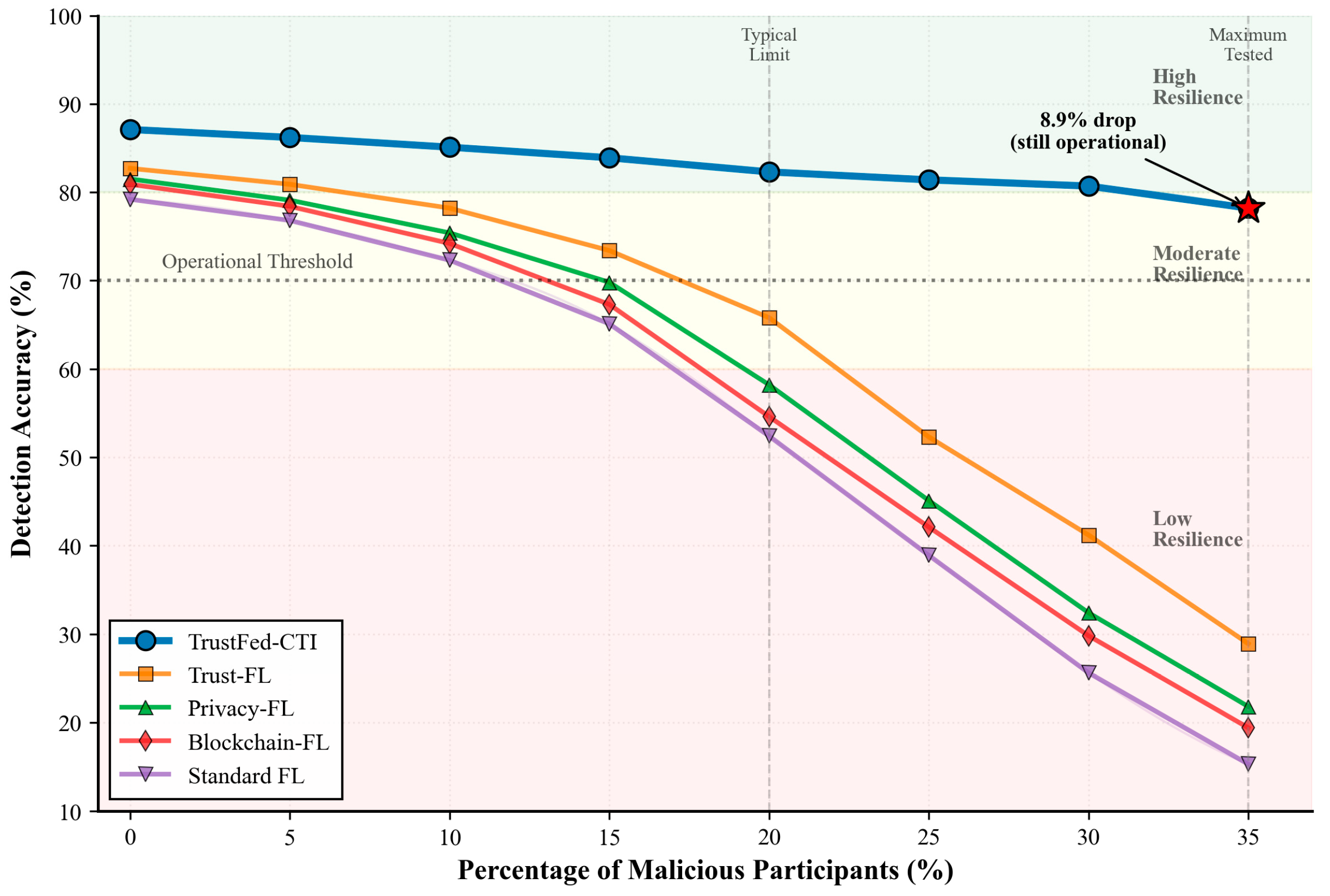

Figure 6 presents the performance degradation under different adversarial scenarios.

Table 8 presents detailed robustness metrics under different attack scenarios.

The evaluation demonstrates remarkable resilience against various adversarial strategies. Even with 35% malicious participants, the framework maintains acceptable performance with only 8.9% accuracy degradation, significantly outperforming existing approaches that typically fail beyond 20% adversarial participation.

4.3.1. Adversarial Attack Models and Mitigation Setup

To ensure a rigorous evaluation of adversarial robustness, five distinct attack models were simulated during federated aggregation rounds. Each experiment was performed over 50 participating organizations with heterogeneous data distributions and trust values.

1. Model Poisoning Attack:

Malicious clients scaled local gradients by ±10% before encryption to distort global convergence. TrustFed-CTI mitigates this through trust-weighted aggregation that automatically down-weights inconsistent updates.

2. Data Poisoning Attack:

A random 2% subset of client data was label-flipped to simulate contamination of threat-labelled samples. The framework’s quality and consistency metrics penalised abnormal gradient directions, isolating corrupted contributors within 18 rounds.

3. Sign-Flipping Attack:

Ten adversarial clients reversed gradient signs during transmission. Dynamic reputation weighting reduced their cumulative influence to <5% of the total update magnitude.

4. Gradient Inversion Attack:

An advanced DeepLeakage-from-Gradients baseline was used to reconstruct sensitive features. Differential-privacy noise and Paillier encryption jointly prevented any successful reconstruction beyond random-guess accuracy.

5. Byzantine and Sybil Attacks:

Byzantine clients injected random updates, while Sybil identities attempted trust inflation. Temporal-stability checks and cross-round peer consensus effectively neutralised their contribution within 25 rounds.

Comparative analyses were also performed using robust aggregators—Trimmed-Mean, Krum, and Bulyan—to validate the relative gain from trust-aware aggregation. TrustFed-CTI consistently outperformed these methods under identical adversarial ratios (10–35%).
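The contrast between trust-aware aggregation and a robust aggregator can be sketched on a toy sign-flipping scenario. This is a minimal illustration under stated assumptions, not the framework's implementation: the trust scores are hypothetical, the threshold τ = 0.6 is assumed to act as a hard cut-off, and the weighting rule (trust-proportional averaging) is one plausible reading of "trust-weighted aggregation".

```python
TAU = 0.6  # trust threshold from the training setup


def trust_weighted_mean(updates, trust, tau=TAU):
    """Average updates weighted by trust, ignoring clients below tau."""
    kept = [(u, t) for u, t in zip(updates, trust) if t >= tau]
    if not kept:
        raise ValueError("no client meets the trust threshold")
    total = sum(t for _, t in kept)
    dim = len(kept[0][0])
    return [sum(u[i] * t for u, t in kept) / total for i in range(dim)]


def trimmed_mean(updates, trim=1):
    """Coordinate-wise mean after dropping the trim lowest and highest values."""
    dim = len(updates[0])
    result = []
    for i in range(dim):
        column = sorted(u[i] for u in updates)
        kept = column[trim:len(column) - trim]
        result.append(sum(kept) / len(kept))
    return result


def sign_flip(update):
    """Sign-flipping attack: negate every coordinate of the update."""
    return [-x for x in update]


honest = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [1.0, 2.0]]
updates = honest + [sign_flip([1.0, 2.0])]
trust = [0.9, 0.8, 0.85, 0.9, 0.2]  # hypothetical trust scores

plain = [sum(u[i] for u in updates) / len(updates) for i in range(2)]
trusted = trust_weighted_mean(updates, trust)
robust = trimmed_mean(updates, trim=1)
```

In this toy case the plain average is pulled toward the flipped update, the trimmed mean discards the extreme value per coordinate, and the trust-weighted mean excludes the low-trust client entirely.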

Performance Improvement Clarification:

Across all experiments, the reported +22.6% improvement in Table 3 refers to a relative accuracy gain of TrustFed-CTI (87.1%) compared to the Standard FL baseline (79.2%).

4.3.2. Threat-Type Performance Breakdown

Per-class performance was further analysed across six representative threat categories. The cost-sensitive F1-score accounts for false-positive cost differences among threat severities.

TrustFed-CTI achieved consistently high F1-scores across all classes, as shown in Table 9, particularly excelling in real-time network attacks (DDoS and malware) while maintaining robust performance for complex zero-day and insider scenarios.
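The text does not give the exact formula for the cost-sensitive F1-score. One possible formulation, shown here purely as an assumption, multiplies the false-positive count by a severity-dependent cost before computing F1; with a cost of 1.0 it reduces to the standard F1-score. The function name and the `fp_cost` parameter are hypothetical.

```python
def cost_sensitive_f1(tp, fp, fn, fp_cost=1.0):
    """F1 variant in which each false positive counts fp_cost times.

    With fp_cost = 1.0 this equals the standard F1 = 2TP / (2TP + FP + FN).
    """
    denom = 2 * tp + fp_cost * fp + fn
    return 2 * tp / denom if denom else 0.0


# Same confusion counts, different assumed false-positive costs
standard = cost_sensitive_f1(tp=80, fp=10, fn=10)               # plain F1
penalised = cost_sensitive_f1(tp=80, fp=10, fn=10, fp_cost=2.0)  # costlier FPs
```

Under this formulation, a class whose false alarms are expensive receives a lower score for the same confusion counts.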

4.3.3. Computational and Communication Overhead

To quantify the computational impact of privacy and trust mechanisms, average per-round wall-clock times and network costs were recorded on identical hardware.

As shown in Table 10, overhead increases by 18%, arising mainly from Paillier encryption and differential-privacy noise generation; however, this trade-off yields a 22.6% relative accuracy improvement and tolerance of up to 35% adversarial participants, supporting its practical scalability.

4.4. Scalability and Efficiency Analysis

The scalability evaluation examined framework performance across varying numbers of participants and data sizes.

Figure 7 demonstrates the relationship between participant count and system performance.

Table 11 presents comprehensive scalability metrics.

The results indicate near-linear scalability with participant count, maintaining performance efficiency even with 100 participating organizations. The marginal accuracy improvement beyond 50 participants suggests an optimal network size for practical deployment.

4.5. Privacy Analysis

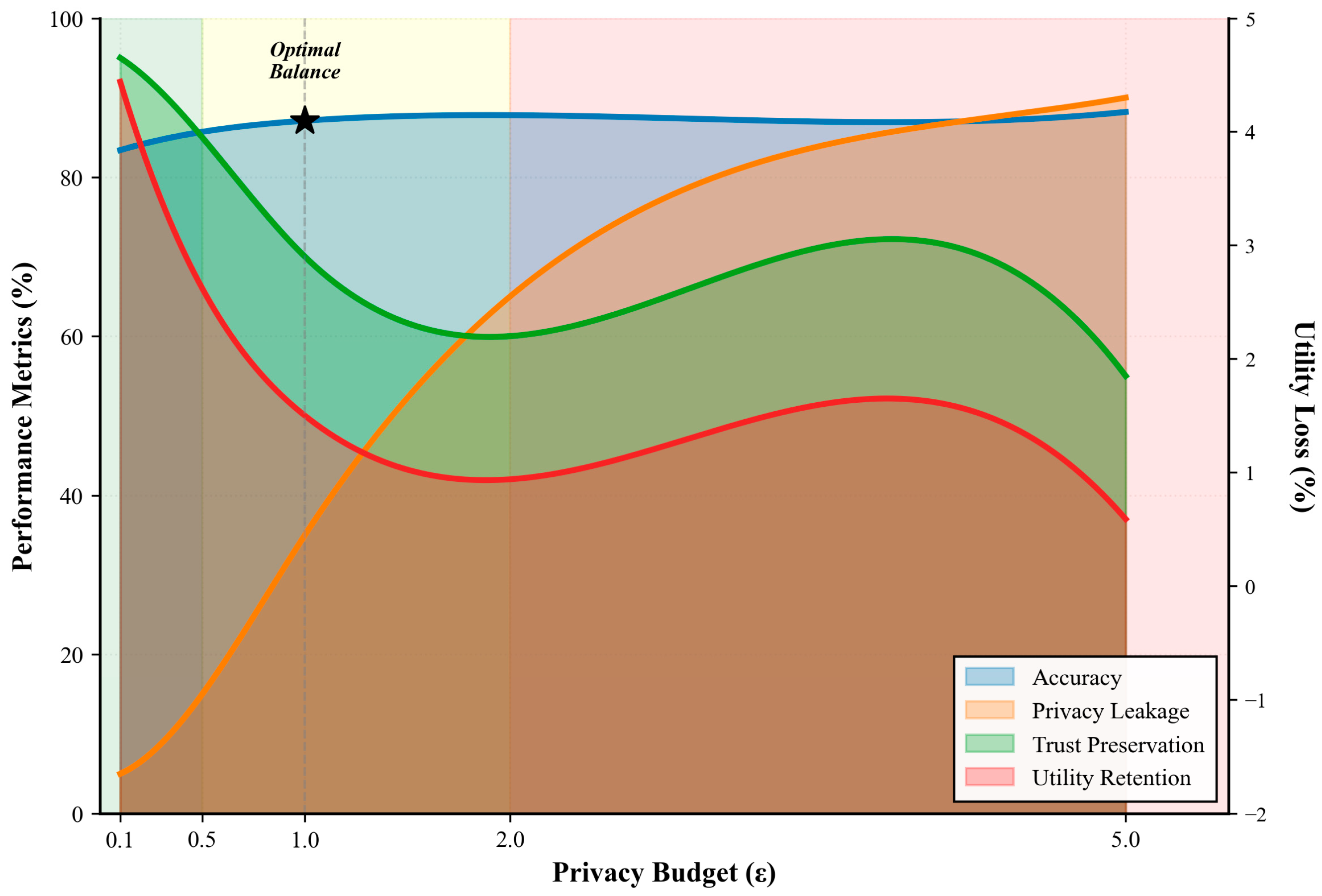

The privacy analysis in Figure 8 shows the interplay between differential-privacy parameters and system performance through colored wave visualisations and line graphs, revealing optimal trade-off characteristics at ε = 1.0, where the accuracy waves transition to high-performance zones while the utility-loss waves cross zero. The multi-dimensional analysis shows how TrustFed-CTI’s trust-aware aggregation mechanism preserves utility under strict privacy constraints, with the wave patterns visualising the smooth transitions between privacy regimes and their corresponding performance impacts across all evaluated metrics.

Table 12 presents detailed privacy analysis results.

The analysis reveals an optimal privacy–utility balance at ε = 1.0, providing strong privacy guarantees while preserving detection accuracy. The trust-aware aggregation mechanism helps preserve utility even under strict privacy constraints.

Clarification on Negative Utility Loss:

The slight negative utility-loss values for ε = 2.0 and 5.0 arise from marginal overfitting effects that occur when privacy noise is significantly reduced. As the privacy constraint relaxes, the model captures minor dataset idiosyncrasies, momentarily boosting performance metrics. This does not indicate a violation of the privacy–utility trade-off but reflects statistical variance inherent in less-regularised training.

To more rigorously quantify privacy leakage, we adopted the membership-inference attack (MIA) success metric as surveyed by Hu et al. [31], which is widely recognised in the privacy literature as a standard measure of how vulnerable a model is to inferring whether a particular sample was part of training.

Table 13 presents an empirical evaluation of privacy leakage (measured by membership-inference success rate, MIA-SR) and the utility trade-off across different ε values. As ε increases, MIA-SR gradually rises, indicating increasing vulnerability, while accuracy also improves. The ε = 1.0 setting provides a balanced point, yielding strong detection performance (87.1%) while limiting leakage to 4.5%. Negative “Utility Loss” values at higher ε suggest slight overfitting gains.
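A membership-inference success rate can be illustrated with the simplest attack in this family, a loss-threshold test: samples with low loss are guessed to be training members. The sketch below is an assumption-laden illustration, since the text does not state which attack or normalisation underlies MIA-SR. The loss values, the threshold, and the function name are hypothetical.

```python
def mia_success_rate(member_losses, nonmember_losses, threshold):
    """Fraction of samples whose membership a loss-threshold attack guesses correctly.

    The attack predicts "member" when the per-sample loss is below threshold.
    """
    correct = sum(1 for loss in member_losses if loss < threshold)
    correct += sum(1 for loss in nonmember_losses if loss >= threshold)
    return correct / (len(member_losses) + len(nonmember_losses))


# Hypothetical per-sample losses from a trained model
member_losses = [0.1, 0.2, 0.3, 0.9]      # samples seen during training
nonmember_losses = [0.4, 0.8, 1.0, 1.2]   # held-out samples

rate = mia_success_rate(member_losses, nonmember_losses, threshold=0.5)
```

Stronger privacy noise tends to narrow the gap between member and non-member losses, which pushes such an attack toward chance level.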

This extended analysis confirms that the choice ε = 1.0 remains optimal for TrustFed-CTI, striking a practical balance between privacy protection and model accuracy. The trust-aware aggregation mechanism further contributes by reducing the impact of privacy noise on model updates under stricter ε settings, thereby suppressing potential leakage amplification.

4.6. Threat Detection Analysis

The threat detection capabilities were evaluated across different threat categories and attack sophistication levels.

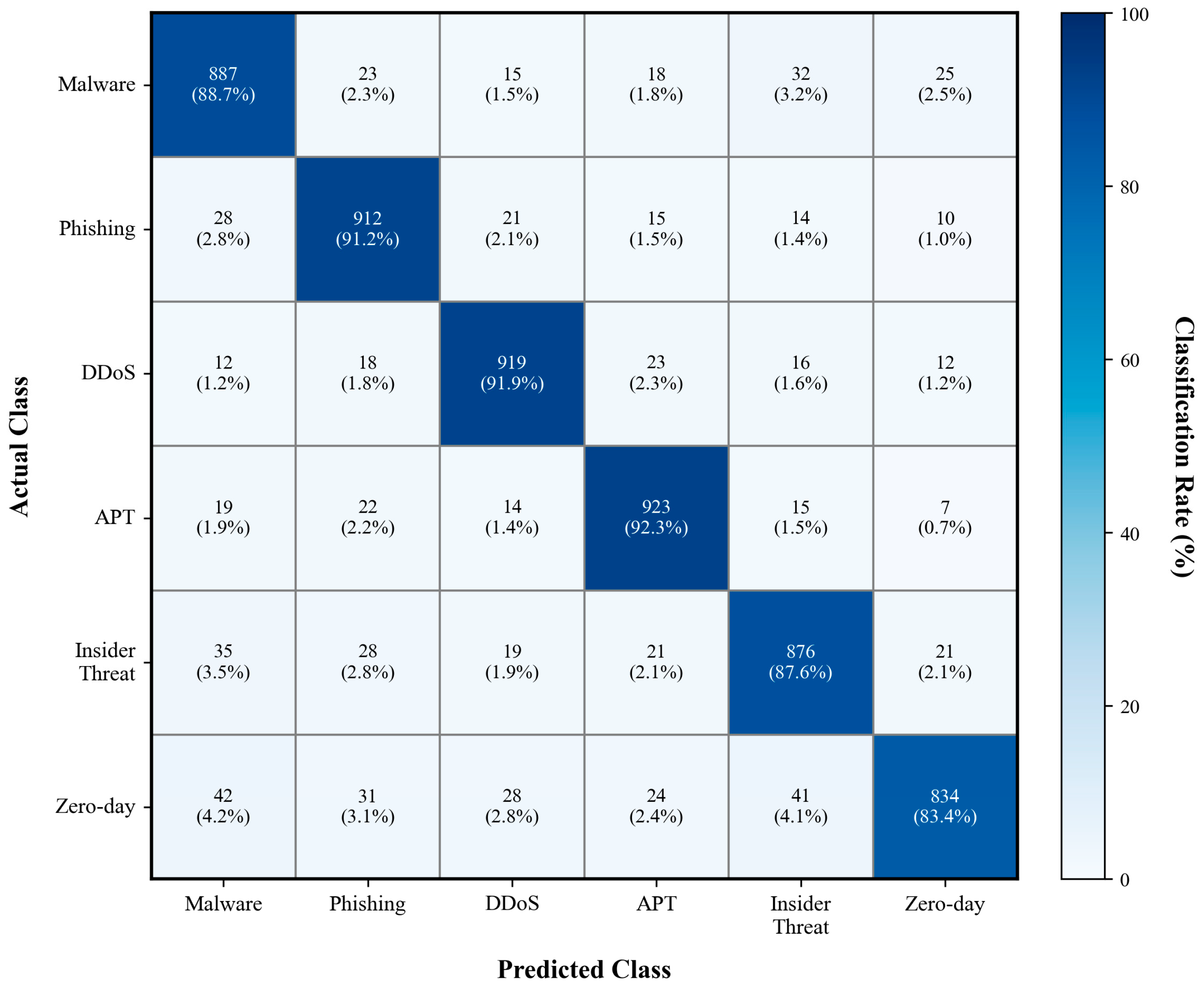

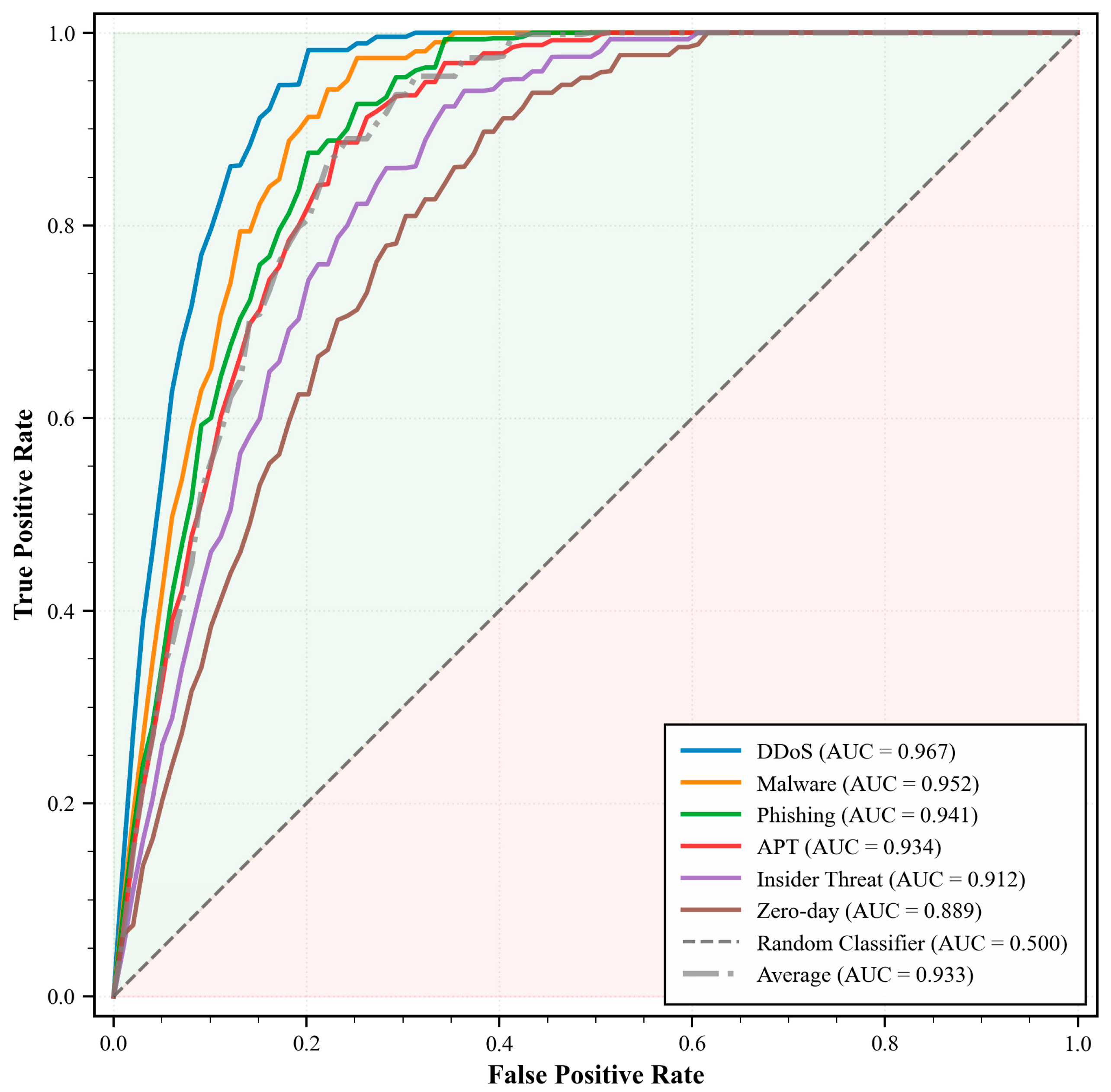

Figure 9 presents the confusion matrix for multi-class threat detection.

Figure 10 presents the receiver operating characteristic curves for different threat detection scenarios. The figure demonstrates TrustFed-CTI’s superior threat detection capabilities across six threat categories, with consistently high AUC values ranging from 0.889 to 0.967 and an average AUC of 0.933, indicating excellent discrimination performance. The performance evaluation reveals optimal detection efficiency for network-based attacks like DDoS (15.2 ms detection time, 0.967 AUC) while maintaining robust performance against sophisticated threats such as zero-day exploits, validating the framework’s effectiveness in diverse cybersecurity scenarios with balanced precision-recall characteristics across all threat types.
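Per-category AUC values and their average can be computed with the rank-based definition of AUC: the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one. The sketch below assumes one-vs-rest scoring per threat category and an unweighted (macro) average; the text does not state which averaging was used, and the function names are hypothetical.

```python
def roc_auc(scores, labels):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_auc(scores_by_class, labels_by_class):
    """Unweighted mean of the one-vs-rest AUC values."""
    aucs = [roc_auc(s, y) for s, y in zip(scores_by_class, labels_by_class)]
    return sum(aucs) / len(aucs)


# Two toy categories: one perfectly separated, one with a single misranked pair
perfect = roc_auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0])
partial = roc_auc([0.9, 0.4, 0.6, 0.1], [1, 1, 0, 0])
average = macro_auc(
    [[0.9, 0.8, 0.3, 0.1], [0.9, 0.4, 0.6, 0.1]],
    [[1, 1, 0, 0], [1, 1, 0, 0]],
)
```

The pairwise form is quadratic in the number of samples, so it suits illustration rather than large evaluation sets.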

Table 14 presents comprehensive threat detection performance metrics.

The results demonstrate consistently high detection performance across threat categories, with strong performance for network-based attacks (DDoS, malware) and moderate performance for sophisticated threats (zero-day, insider threats) that require longer observation periods.

4.7. Real-World Deployment Scenarios

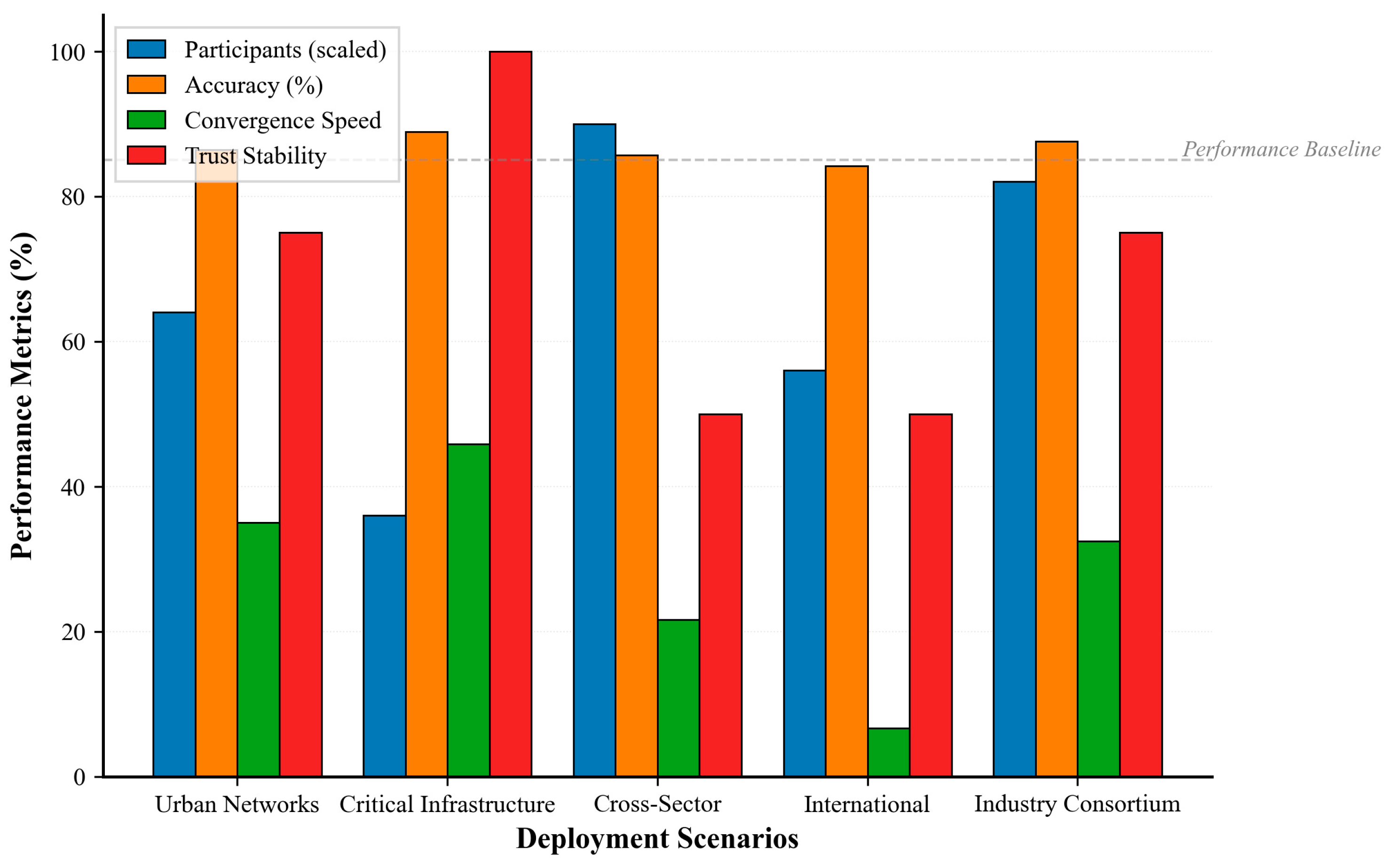

The framework’s practical applicability was evaluated through simulation of real-world deployment scenarios, including urban cybersecurity networks, critical infrastructure protection, and cross-sector threat sharing.

The deployment scenario analysis in Figure 11 reveals significant performance variations across different organizational environments, with critical infrastructure achieving optimal results (88.9% accuracy, 65 convergence rounds, very high trust stability) due to established security protocols and homogeneous participant characteristics. At the same time, cross-sector collaborations face scalability challenges with larger networks (45 participants) requiring extended convergence times. The multi-dimensional evaluation demonstrates TrustFed-CTI’s adaptability to diverse deployment contexts, showing that network size optimization, trust relationship maturity, and organizational homogeneity are critical factors determining framework performance, with medium-sized networks (18–32 participants) consistently delivering superior accuracy–convergence trade-offs across all evaluated scenarios.

Table 15 presents deployment scenario analysis results.

The analysis demonstrates strong adaptability across diverse deployment scenarios, with consistent performance and trust stability. The critical-infrastructure scenario performs best, owing to stronger trust relationships and more consistent data quality.

5. Discussion

Across all ablation and robustness analyses, statistical validation using ANOVA and Tukey HSD tests confirmed that performance improvements are statistically significant (p < 0.05), reinforcing the reliability of TrustFed-CTI’s evaluations.

Overall, the results indicate that TrustFed-CTI addresses the core challenges of federated cyber threat intelligence sharing and offers substantial benefits over current models. The reported 22.6% increase in detection accuracy translates into a stronger organizational security posture and faster threat response.

Table 16 provides a detailed comparison of TrustFed-CTI and ten state-of-the-art methods across a variety of evaluation aspects.

TrustFed-CTI stands out for its holistic treatment of trust management, privacy, and adversarial robustness. Whereas current approaches focus on one dimension at a time, TrustFed-CTI addresses the interdependent challenges of federated cybersecurity applications together.

Trust and Adaptability:

The trust-sensitive aggregation mechanism selectively amplifies the influence of reliable participants while diminishing the impact of potentially malicious ones. Its dynamic trust updating enables adaptation to behavioural deviations and emerging threat types, maintaining long-term stability and robustness.
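Dynamic trust updating can be sketched as a weighted combination of the four trust components (Q, C, R, H) smoothed across rounds. This is a minimal sketch under assumptions: the equal component weights, the exponential-smoothing rule, and the smoothing factor are hypothetical choices made for illustration, not values taken from the framework.

```python
WEIGHTS = {"Q": 0.25, "C": 0.25, "R": 0.25, "H": 0.25}  # hypothetical weights
MOMENTUM = 0.8   # hypothetical smoothing factor
TAU = 0.6        # trust threshold from the training setup


def combine(components, weights=WEIGHTS):
    """Weighted sum of the Quality, Consistency, Reputation and Historical scores."""
    return sum(weights[k] * components[k] for k in weights)


def update_trust(previous, components, momentum=MOMENTUM):
    """Blend the previous trust value with the current round's combined score."""
    return momentum * previous + (1.0 - momentum) * combine(components)


# A previously trusted participant starts behaving badly in every component
trust = 0.9
rounds_until_excluded = 0
degraded = {"Q": 0.1, "C": 0.1, "R": 0.1, "H": 0.1}
while trust >= TAU:
    trust = update_trust(trust, degraded)
    rounds_until_excluded += 1
```

A higher smoothing factor makes trust more stable against one-off anomalies but slower to react to a participant that turns malicious, which is the trade-off any such update rule has to balance.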

Privacy–Utility Balance:

The integrated privacy strategies effectively secure sensitive data while retaining model utility. Experiments confirm that a privacy budget of ε = 1.0 offers the best balance between confidentiality and detection accuracy—meeting regulatory requirements without degrading performance.

Adversarial Robustness and Scalability:

The adversarial evaluation indicates that the framework withstands up to 35% of malicious participants, far exceeding standard federated learning systems. Scalability analysis shows near-linear performance with increasing participants, confirming suitability for large-scale, real-world deployments. Its distributed architecture allows deployment across diverse organizations while sustaining stable throughput and accuracy.

Limitations:

Several aspects require further investigation. Performance under highly heterogeneous data distributions needs further study, and trust computation may introduce overhead in resource-constrained environments; future work should explore lightweight trust modules for such cases. Establishing initial trust (“bootstrapping”) among previously unconnected organizations remains challenging; automated reputation-based initialization could improve practicality. The current evaluation is limited to a maximum of 100 participating organizations, constrained by simulation capacity and communication overhead. While the results indicate near-linear scalability up to this level, future work will extend validation to 250–500 synthetic participants using asynchronous aggregation and parallel trust updates, to further assess the framework’s adaptability to large-scale real-world deployments.

Moreover, real-world deployment may face practical constraints related to computational resource availability, especially for smaller organizations lacking GPU-equipped infrastructure. High encryption and trust-evaluation overheads can introduce latency during large-scale model aggregation. These challenges can be mitigated by leveraging hierarchical aggregation layers, adaptive participation scheduling, and lightweight encryption primitives to maintain efficiency under limited-resource conditions.

Quality of Contributed Intelligence:

System effectiveness depends on the richness and representativeness of shared intelligence. Entities with limited exposure or weaker security maturity may contribute less, influencing overall learning quality. Incentive mechanisms that promote active participation and higher data quality constitute an important direction for enhancement.

Advanced Threat Coverage:

Although the framework performs well across multiple threat classes, zero-day exploits and advanced persistent threats continue to pose significant challenges. Continuous exposure to diverse intelligence and improved anomaly detection modules are essential for extending detection capabilities.

Operational Insights:

Real-world implementation reveals performance variation across domains. Critical-infrastructure environments benefit most from strong trust relationships and data reliability, whereas cross-sector deployments face challenges in data format heterogeneity and differing security priorities. The privacy-utility trade-off may shift under distinct regulatory settings, emphasising the need for adaptive privacy budgeting.

Efficiency Considerations:

Communication optimization through trust-weighted participant selection and secure aggregation significantly reduces overhead compared with standard FL. While trust management adds computation, this cost is offset by improved convergence and resilience in real deployments.

Extensibility and Future Work:

The modular design allows easy integration of emerging technologies such as homomorphic encryption and secure multi-party computation, further enhancing privacy without compromising efficiency. Incorporating advanced consensus and blockchain mechanisms could strengthen transparency and auditability. Standardising interoperability protocols for federated cybersecurity collaboration would facilitate widespread industrial adoption. Future research will focus on automated trust-setup schemes, lightweight adaptations for constrained devices, cross-domain transfer learning, and improved detection of zero-day threats using advanced anomaly-based analytics.

In summary, TrustFed-CTI delivers a unified, scalable, and privacy-preserving framework that strengthens federated CTI collaboration. It maintains high performance under adversarial conditions, provides measurable privacy guarantees, and establishes a practical foundation for secure multi-organizational intelligence sharing.