Abstract

The integration of artificial intelligence (AI) and edge computing gives rise to edge intelligence (EI), which offers effective solutions to the limitations of traditional cloud-based AI. However, deploying models across distributed edge platforms raises concerns regarding authenticity, thereby necessitating robust mechanisms for ownership verification. Backdoor-based model watermarking techniques currently represent a state-of-the-art approach for ownership verification, but their reliance on model poisoning introduces potential security risks and unintended behaviors. To address this challenge, we propose BIMW, a blockchain-enabled innocuous model watermarking framework that ensures secure and trustworthy AI model deployment and sharing in distributed edge computing environments. Unlike widely applied backdoor-based watermarking methods, BIMW adopts a novel innocuous model watermarking method called interpretable watermarking (IW), which embeds ownership information without compromising model integrity or functionality. In addition, BIMW integrates a blockchain security fabric to ensure the integrity and auditability of watermarked data during storage and sharing. Extensive experiments were conducted on a Jetson Orin Nano board, which simulates an edge computing environment. The numerical results show that our framework outperforms baselines in terms of prediction accuracy, p-value, watermark success rate (WSR), and harmlessness H. Our framework is also resilient against watermark-removal attacks, and the blockchain fabric introduces only limited latency.

1. Introduction

Recent years have witnessed rapid progress in the Internet of Things (IoT), edge computing, and cloud computing. Breakthroughs in artificial intelligence (AI), especially deep learning (DL), have further accelerated this trend [1]. Edge intelligence (EI), which integrates AI with edge computing, has shown great potential to overcome the limitations of traditional cloud-based AI systems [2]. AI model deployment on edge devices enables autonomous and resilient systems for smart cities, while the integration of AI into edge platforms provides additional key benefits [3]. First, edge computing enables AI models to operate locally, facilitating real-time processing with minimal latency. Second, performing computations closer to the data source enhances data privacy by reducing the need to transmit sensitive information to centralized servers [4]. Moreover, decreasing reliance on cloud services lowers data transmission costs and mitigates network bandwidth limitations, thereby improving the scalability and efficiency of AI applications [5]. While we primarily consider edge intelligence (EI) as the general application scenario, the proposed framework is equally applicable in cloud-based environments.

Advancing AI to distributed edge platforms introduces significant security challenges due to the dynamic and heterogeneous nature of edge computing. Typically, cloud servers manage pretrained AI models and deploy them to remote edge environments. However, models on edge platforms are susceptible to model theft attacks, such as model extraction or weight stealing, which can replicate a model’s functionality and lead to intellectual property (IP) infringement [6]. In addition, an adversary can launch poisoning attacks on the original models, for example by manipulating the training data or the model parameters [7]. As a result, unauthorized AI models can be published on AI model marketplaces and then integrated into diverse smart applications. These issues not only compromise the model’s commercial value and security, but they also undermine the trustworthiness and reliability of AI ecosystems. Therefore, AI model authenticity and ownership verification are critical to protect model IP and to prevent unauthorized access and malicious use.

The fundamental concept of AI model ownership verification is to embed a specific pattern or structure into the model as a unique identifier (watermark), which can later be extracted to demonstrate ownership when necessary during the inference stage [8]. The verification process typically involves three key phases: watermark embedding, extraction, and validation [9]. In the embedding phase, watermark information is incorporated into the model either during training or through post-processing to establish ownership. Embedding techniques can operate at the parameter level, such as encoding the watermark within model weights, or at the functional level, such as introducing a trigger-based watermark that produces specific outputs for designated inputs [10]. Furthermore, the embedding process must ensure imperceptibility, meaning that the watermark does not degrade the model’s performance on its primary task.

During the watermark extraction phase, predefined methods are used to retrieve the watermark from the target model during inference or auditing. If the extracted watermark matches the original one, ownership of the model can be verified [11]. The extraction method typically corresponds to the embedding technique; for example, if the watermark is encoded within model weights, specific parameter patterns must be analyzed to extract it, whereas trigger-based watermarks require feeding specially crafted inputs to activate the watermark response [12]. Finally, in the ownership verification phase, the extracted watermark is compared against the original to confirm the model’s legitimacy. This verification process should have legal enforceability, allowing it to serve as valid evidence in intellectual property protection. Additionally, the watermark must be robust against various attacks, such as model compression, fine tuning, pruning, and adversarial attacks, ensuring that it remains intact and detectable even after modifications. An ideal model watermarking technique should meet several core requirements. First, it must exhibit robustness, meaning that the watermark remains extractable even if the model undergoes modifications, such as pruning or fine tuning [13]. Second, the embedding should be imperceptible, ensuring that it does not degrade the model’s normal task performance. Furthermore, the watermark should possess security, making it difficult for attackers to remove, forge, or alter the embedded information. Finally, the ownership verification process must be efficient, ensuring that watermark embedding and extraction incur minimal computational overhead, making it suitable for resource-constrained edge devices [14,15,16].

Currently, backdoor-based watermarking methods are among the most advanced techniques for ownership verification [12]. These methods rely on backdoor trigger mechanisms, embedding watermarks by injecting backdoors into models so that they produce predetermined responses when exposed to specific trigger samples. However, despite their effectiveness, backdoor-based watermarking fundamentally relies on poisoning the model, introducing additional security risks [17]. For instance, if an attacker discovers the trigger pattern, they could exploit the backdoor to launch adversarial attacks against the model. Additionally, even if the trigger pattern has a minimal impact, it may still cause unexpected erroneous predictions, affecting the model’s reliability. Moreover, as research on backdoor detection advances, backdoor-based watermarking techniques may become increasingly susceptible to detection and removal, compromising their long-term effectiveness.

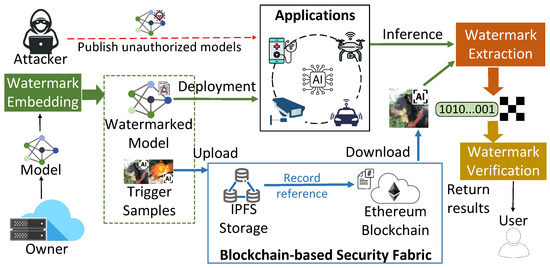

Our Work. To solve these challenges, we propose BIMW, a novel blockchain-enabled innocuous model watermarking framework for secure ownership verification of AI models within distributed application ecosystems. As Figure 1 shows, owners rely on cloud servers to manage pretrained models and deploy models for authorized users. However, an adversary can publish unauthorized models (copied or manipulated) in applications. To ensure verifiable and robust model ownership verification, BIMW introduces an interpretable watermarking (IW) method that avoids model poisoning while maintaining robustness. Instead of embedding watermarks directly into model outputs, the cloud server (model owner) employs feature impact analysis algorithms to generate interpretation-based watermarks, along with corresponding trigger samples. Ownership is verified by using these trigger samples for the model inference and then comparing the resulting interpretations with the original watermark. Thus, users can confirm that models are published by trusted sources with authorized permissions.

Figure 1. System overview of the BIMW framework.

To further enhance the security and transparency of model ownership verification in a distributed network environment, BIMW integrates a blockchain-based security fabric that ensures the integrity and auditability of watermark data, models, and trigger samples during storage and distribution. As the bottom of Figure 1 shows, the model owner saves models and trigger samples in IPFS-based distributed storage and then records their reference information on an Ethereum blockchain. During the model deployment and inference stages, users (or owners) use this reference information to identify any tampered models or trigger samples. Therefore, BIMW offers a decentralized, secure, and reliable alternative to traditional backdoor-based watermarking methods. (Our implementation is publicly available at https://github.com/Xinyun999/BIMW (accessed on 22 October 2025).)

Contributions vs. Ref. [13]. Our work is built upon EaaW [13], introducing several key improvements to the innocuous model watermarking method and system architecture, including differentiable extraction via ridge regression, normalization prior to binarization, dual verification tests, and the synergistic integration of blockchain fabric within distributed networks. The primary contributions over [13] are summarized as follows:

- (1) We propose the system architecture of BIMW, a blockchain-enabled innocuous model watermarking framework that ensures secure and trustworthy AI model deployment and sharing in distributed edge computing environments.

- (2) We extend the traditional LIME-style feature attribution into a differentiable watermark extraction framework, formulating the interpretation process as a ridge regression optimization problem that supports end-to-end gradient propagation for robust watermark embedding.

- (3) To improve robustness and stability, we propose a normalization mechanism applied prior to binarization, which effectively mitigates noise sensitivity and ensures consistent watermark recovery across samples and models. We theoretically analyze and compare two watermark verification mechanisms, combining cosine similarity for differentiable training and the chi-squared test for statistically grounded ownership verification.

- (4) We strengthen the data transmission security and transparency of watermark data, models, and trigger samples by using blockchain encryption technology.

- (5) We conducted comprehensive experiments by applying BIMW to various AI models. The results demonstrate its effectiveness and resistance against watermark-removal attacks, as well as its efficiency in model data authentication and ownership verification on edge computing platforms.

The remainder of this paper is structured as follows. Section 2 provides a brief overview of the existing solutions for deep learning model watermarking and ownership verification, and it briefly describes interpretable machine learning techniques and blockchain technology. Section 3 presents the system framework of the innocuous model watermarking method, emphasizing the synergistic integration of IW and feature impact analysis (FIA) for watermark embedding, extraction, and ownership verification. Section 4 describes the prototype implementation and presents the experimental results that verify the effectiveness and performance of applying BIMW on edge computing platforms. Finally, Section 5 concludes this paper with a summary and a discussion of ongoing efforts.

2. Background Knowledge and Related Work

2.1. Edge Intelligence

With AI advancing rapidly, traditional cloud computing faces growing challenges, such as high latency, bandwidth consumption, and data privacy risks [3]. Edge intelligence, which integrates the IoT, edge computing, and AI technology, offers a promising solution. By directly deploying AI models on IoT devices that are close to the network edge, edge intelligence offers several merits: local data processing, improved real-time responsiveness, enhanced privacy, reduced bandwidth use, and increased system stability. This technology is widely used in intelligent surveillance, autonomous driving, smart healthcare, industrial inspection, and IoT systems, allowing efficient operation in resource-limited environments [1,18,19]. However, deploying AI models on edge devices also raises model theft risks. Limited computational power and weak security make these devices vulnerable to model extraction and reverse engineering, leading to intellectual property theft and unauthorized use. As a result, ownership verification has become a key challenge in ubiquitous smart applications based on edge intelligence.

2.2. Model Watermarking and Ownership Verification

Model watermarking has emerged as a promising technique for embedding identifiable information into neural networks, enabling model ownership verification while preserving model functionality [20]. Watermark-based ownership verification typically includes three phases: embedding, extraction, and verification [9]. Watermarks can be embedded during training or post-processing, either at the parameter level (e.g., within model weights) or at the functional level (e.g., trigger-based watermarks) [10]. Extraction techniques align with embedding methods; for instance, weight-based schemes analyze parameter patterns, while trigger-based approaches use crafted inputs to elicit responses [12].

Model watermarking techniques can be broadly categorized into white-box and black-box approaches. White-box watermarking embeds a watermark directly into the model’s parameters, such as weights and biases, making it retrievable only when internal access to the model is available [21]. This method provides strong security, but it also requires direct access to the model’s architecture and parameters. In contrast, black-box watermarking enables verification through query-based interactions with the model’s API, embedding specific responses or backdoor triggers that can be used to verify ownership without requiring internal access [22]. Unlike black-box methods that adopt a backdoor watermarking approach, non-backdoor black-box watermarking has been developed for dataset inference defense by relying on the private training data of the victim model as a signature [23]. Inspired by explainable AI, feature attribution methods have been used to enable a harmless and multi-bit black-box model ownership verification approach [13].

The watermarking process generally consists of two key phases: embedding and extraction. During the embedding phase, a unique watermark, such as a specific set of activations, adversarial triggers, or modified training data, is introduced into the model during training. In the extraction phase, the embedded watermark is later retrieved to verify model ownership, either by examining internal parameters in a white-box setting or by evaluating specific outputs based on carefully crafted queries in a black-box setting. An effective model watermarking scheme must satisfy several key properties. Robustness ensures that the watermark remains intact even if the model undergoes transformations, such as pruning, fine tuning, or compression [9]. Fidelity guarantees that the watermark does not degrade the model’s primary performance when operating on its intended tasks. Security is crucial, as the watermark should be resistant to unauthorized removal or detection by adversaries. Finally, verifiability ensures that the ownership verification process is reliable and legally admissible if required.

Ownership verification establishes the legitimacy of a deep learning model by extracting and validating embedded watermarks through techniques such as signature verification, challenge–response mechanisms in black-box settings, and cryptographic hashing to ensure integrity and prevent tampering. Beyond technical validation, watermarks can also be potentially useful for evidentiary purposes in legal disputes, reinforcing accountability in cases of intellectual property theft [24]. Effective verification requires a balance of robustness, security, and efficiency, ensuring ownership can be proven without impairing model performance. In practice, model watermarking is widely applied to safeguard proprietary AI assets, to detect unauthorized or unlicensed usage [11], and to protect distributed models in federated learning and secure AI deployment. As deep learning continues to expand into critical domains, watermarking remains essential for protecting and managing AI ownership.

2.3. Interpretable Machine Learning

Interpretable machine learning (IML) aims to enhance the transparency and understanding of complex machine learning models, enabling users to comprehend how decisions are made [25]. As machine learning models, particularly deep neural networks, become increasingly intricate, the need for interpretability has grown in importance for ensuring trust, accountability, and fairness in AI-driven decision making. Interpretability in machine learning can be categorized into intrinsic and post hoc approaches. Intrinsic interpretability refers to models that are inherently transparent, such as decision trees, linear regression, and rule-based classifiers, where the reasoning behind predictions is easily understood. In contrast, post hoc interpretability applies to complex models, such as deep neural networks, where explanations are generated after training using techniques like feature importance analysis, attention mechanisms, and surrogate models [26]. A key aspect of interpretable machine learning is explainability, which provides insights into how models arrive at specific predictions. Techniques such as SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-Agnostic Explanations) help quantify the contribution of input features to a model’s output, making it easier to interpret predictions [27]. Additionally, saliency maps and attention mechanisms provide visualization-based explanations, particularly in deep learning applications, such as image classification and natural language processing.

Despite its advantages, achieving interpretability often presents tradeoffs. Highly interpretable models, such as linear regression and decision trees, may lack the predictive power of more complex models, like deep neural networks. Conversely, while deep learning models excel in performance, their lack of transparency poses challenges in critical applications. Ongoing research in interpretable AI focuses on developing techniques that balance interpretability with accuracy, ensuring that models remain both effective and understandable [28].

2.4. Blockchain for Data Security

Edge computing poses significant challenges in ensuring data security, integrity, and transparency due to its decentralized nature. Traditional centralized storage systems are inherently vulnerable to single points of failure, data tampering, and unauthorized access. Blockchain technology, with its decentralized and immutable nature, provides a promising solution for securing AI model ownership and protecting sensitive data [24]. One key advantage of blockchain technology is its immutability, ensuring recorded data cannot be altered or deleted without network consensus [1]. This is particularly critical for AI model ownership verification, as it allows watermarked models and ownership claims to be permanently and securely recorded on the blockchain. The blockchain’s decentralized architecture enhances security by eliminating reliance on a central authority, reducing risks of data breaches and system failures through distributed data storage.

In addition to security and integrity, the blockchain also offers auditability and transparency. Every transaction, including model registration, verification, and distribution, is permanently and verifiably stored [1], providing an indisputable record for legal or forensic purposes. Additionally, stakeholders can monitor model usage without compromising data confidentiality. Smart contracts further reinforce the system by automating access control, ensuring that only authorized parties can verify model ownership. For AI model watermarking, the blockchain serves as a trusted repository for watermark registration and verification. The embedding details, verification protocols, and hashes of the watermarked model can be securely stored on the blockchain, ensuring that ownership claims are tamper-proof. When disputes arise, the immutable blockchain ledger acts as an authoritative source for validating ownership, reducing reliance on third-party verification services. This integration enhances the robustness of watermarking methods, making them more resistant to forgery and unauthorized modifications.
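The role of the ledger as a tamper-evident reference can be illustrated with a minimal sketch. It assumes that a SHA-256 digest serves as the stored fingerprint and uses an in-memory, append-only class as a stand-in for an on-chain registry; `ToyLedger`, `fingerprint`, and the asset identifiers are hypothetical names introduced only for illustration and do not correspond to a real blockchain API.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest, used here as a tamper-evident fingerprint."""
    return hashlib.sha256(data).hexdigest()


class ToyLedger:
    """In-memory, append-only stand-in for an on-chain registry (hypothetical)."""

    def __init__(self):
        self._records = {}

    def register(self, asset_id: str, data: bytes) -> str:
        # Mimic immutability: an existing record can never be overwritten.
        if asset_id in self._records:
            raise ValueError("record already exists and cannot be overwritten")
        digest = fingerprint(data)
        self._records[asset_id] = digest
        return digest

    def verify(self, asset_id: str, data: bytes) -> bool:
        # The asset is accepted only if its digest matches the registered one.
        return self._records.get(asset_id) == fingerprint(data)


ledger = ToyLedger()
ledger.register("model-v1", b"serialized model weights")
print(ledger.verify("model-v1", b"serialized model weights"))  # True
print(ledger.verify("model-v1", b"tampered model weights"))    # False
```

In a deployed system, the dictionary would be replaced by smart-contract storage and the byte strings by the serialized model, watermark, and trigger samples; the comparison logic stays the same.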

3. Methodology

In this section, we provide details of the BIMW framework, especially for two sub-frameworks: the interpretable watermarking (IW) model and blockchain-based data verification. Building upon [13], our BIMW further enhances this line of work by improving both robustness and trustworthiness.

Compared with the watermark extraction process of [13], we extend the traditional LIME-style feature attribution into a differentiable watermark extraction framework. Specifically, the proposed method formulates the interpretation process as a ridge regression optimization problem, which enables end-to-end gradient propagation with respect to the model parameters (Equation (6)). A differentiable interpretation operator (Equation (5)) is introduced to connect the explanation and watermark domains, ensuring compatibility with gradient-based watermark embedding. To enhance stability, a normalization mechanism (Equation (7)) is applied before binarization, which significantly improves the watermark robustness across samples and architectures. Finally, we theoretically compare two watermark verification methods (Equation (9) and Algorithm 1), forming a complete and theoretically grounded watermark recovery pipeline. In addition, we leverage blockchain technology to enable decentralized data verification. Blockchain-based verification further strengthens the integrity and transparency of storing and sharing watermark data, models, and trigger samples in distributed IoT networks.

| Algorithm 1 Ownership verification via hypothesis testing. |
| --- |
| Require: Trigger set $X_T$, suspicious model $f_s$, reference watermark $\mathcal{W}$, significance level $\alpha$. |
| Ensure: Boolean flag indicating whether ownership is verified. |

3.1. Insight of Interpretable Watermarking

As discussed in Section 1, traditional backdoor-based watermarking techniques rely on model poisoning, which can introduce security risks and unintended behaviors. To address these challenges, we pose a critical question: is there an alternative space where we can embed an inconspicuous watermark without affecting model predictions? Drawing inspiration from interpretable machine learning (IML) and feature impact analysis (FIA), we propose interpretable watermarking (IW). Our key idea is that, rather than embedding watermarks directly within the model’s predicted classes, we can utilize the interpretations generated by FIA algorithms as a hidden medium for watermarking.

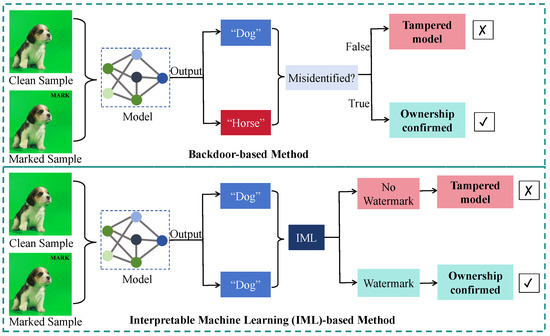

Figure 2 presents an overview of the interpretable watermarking framework and contrasts it with conventional backdoor-based methods. Unlike approaches that modify a model’s predicted class when a marked sample is introduced, IW employs feature impact analysis techniques to derive interpretations for these samples, embedding the watermark within these interpretability outputs. The IW process consists of three distinct phases: (1) embedding the watermark, (2) extracting the watermark, and (3) verifying ownership. Furthermore, we integrate blockchain technology to ensure the immutability and auditability of watermarked model records.

Figure 2. The main pipeline of our interpretable watermarking (IW) and backdoor-based methods. Backdoor-based approaches identify ownership by exploiting intentional misclassifications. Our IW implants the watermark into the interpretation of feature impact analysis (FIA).

3.2. Embedding the Watermark

Table 1 provides a summary of symbols in this paper. As discussed in Section 1, an ownership verification mechanism aims to achieve three key objectives: effectiveness, robustness, and innocuousness. During the watermark embedding phase, we adjust the parameters to inject a watermark into the model. Moreover, the model’s original performance must be maintained [13]. Thus, this process can be formulated as an optimization problem, which is formally expressed as follows:

$$\min_{\Theta}\; \mathcal{L}_1\big(f(X;\Theta),\, c\big) + r \cdot \mathcal{L}_2\big(\mathrm{Interpret}(X_T, c_T;\Theta),\, \mathcal{W}\big) \tag{1}$$

where $\Theta$ denotes the parameters of the model $f$, and $\mathcal{W}$ represents the target watermark. The data $X$ and labels $c$ correspond to a clean dataset, whereas $X_T$ and $c_T$ represent the watermarked set. The function Interpret(.) refers to an interpretability-based feature impact analysis technique that is employed in our watermarking method for watermark extraction. Moreover, $r$ denotes a coefficient. Equation (1) consists of two components. The first term, $\mathcal{L}_1$, represents the model’s loss function, ensuring that the predictions on both the clean and marked datasets remain consistent, thus preserving the model’s performance. The second term, $\mathcal{L}_2$, measures the discrepancy between the output interpretation and the target watermark. By optimizing $\mathcal{L}_2$, the model can align the interpretation more closely with the watermark. We use a hinge-like loss function for $\mathcal{L}_2$ because it has been shown to enhance the watermark’s resilience against removal attacks. The hinge-like loss function is expressed as follows:

$$\mathcal{L}_2(E, \mathcal{W}) = \sum_{i=1}^{n} \max\big(0,\; \varepsilon - E_i \cdot \mathcal{W}_i\big) \tag{2}$$

where $E = \mathrm{Interpret}(X_T, c_T;\Theta)$ and $\mathcal{W} \in \{-1, 1\}^{n}$. The symbols $E_i$ and $\mathcal{W}_i$ represent the $i$-th elements of $E$ and $\mathcal{W}$, respectively. By optimizing Equation (2), the watermark embedding process can be achieved. The parameter $\varepsilon$ serves as a control factor, promoting the absolute values of the elements in $E$ to exceed $\varepsilon$.
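The behavior of the hinge-like loss can be illustrated with a minimal sketch. It assumes that the loss sums max(0, eps - e_i * w_i) over all watermark bits, with the interpretation given as a list of real values and the watermark as a list of +1/-1 bits; the function name and the default margin `eps=0.01` are illustrative assumptions, not values taken from the paper.

```python
def hinge_watermark_loss(interpretation, watermark, eps=0.01):
    """Hinge-like discrepancy between an interpretation and a +/-1 watermark.

    Each term is zero once the interpretation element has the same sign as the
    watermark bit and a magnitude of at least eps (the assumed margin).
    """
    if len(interpretation) != len(watermark):
        raise ValueError("interpretation and watermark must have equal length")
    return sum(max(0.0, eps - e * w) for e, w in zip(interpretation, watermark))


watermark = [1, -1, 1, -1]

# All signs agree and every magnitude exceeds eps, so no penalty is incurred.
print(hinge_watermark_loss([0.5, -0.3, 0.2, -0.4], watermark))

# The second element has the wrong sign and is penalized by eps + 0.3.
print(hinge_watermark_loss([0.5, 0.3, 0.2, -0.4], watermark))
```

Because the penalty vanishes once every element clears the margin, the optimization is not pushed to inflate the interpretation values further than necessary.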

Table 1. The notation used in this paper.

3.3. Extracting the Watermark via Feature Impact Analysis

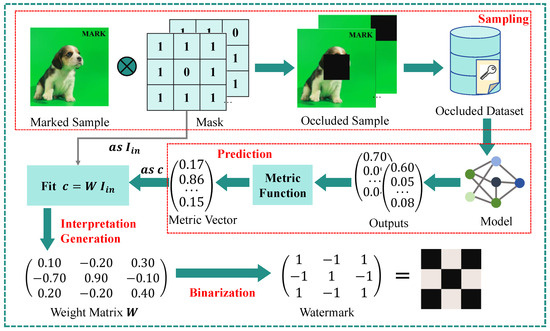

The goal of embedding is to determine the optimal set of model parameters (denoted as $\Theta^{*}$) that minimizes Equation (2). To leverage the widely used gradient descent algorithm for this optimization, it is essential to develop a differentiable and model-agnostic method for feature impact analysis. Drawing inspiration from the well-known Local Interpretable Model-Agnostic Explanations (LIME) algorithm [27], we apply a LIME-based watermark extraction technique that generates feature impact interpretations for the trigger sample. LIME operates by generating local samples around a given input and then evaluating the significance of each feature based on the model’s outputs for these samples. The overall workflow is illustrated in Figure 3. Generally, it consists of three key phases: (1) regional sampling, (2) model prediction and assessment, and (3) obtaining the interpretation.

Figure 3. The overall workflow of the watermark extraction algorithm using feature impact analysis (FIA). First, a set of masked samples is generated by randomly occluding several basic regions of the trigger sample. Next, these masked samples are fed into the model to obtain predictions, from which a metric vector is derived. Finally, a linear model is fitted to assess the contribution of each basic region within the trigger sample, and the resulting interpretation’s sign is utilized as the extracted watermark.

Phase 1: Regional Sampling. The input is denoted as $X_T \in \mathbb{R}^{d}$. Regional sampling generates multiple samples that are locally adjacent to the trigger sample $X_T$. To begin, the input space is divided into n fundamental segments based on the length of $\mathcal{W}$. Neighboring features are grouped into a single segment, with each segment containing $\lceil d/n \rceil$ features.

To determine which features have the greatest impact on the prediction of a data point, we first randomly generate s masks, denoted as M. Each mask in M is a binary vector (or matrix) of the same size as $\mathcal{W}$. We refer to the $i$-th mask in M as $M_i$, where, for each i, we have $M_i \in \{0, 1\}^{n}$. Next, we generate the masked samples by applying the random masks to the segments of the trigger sample, thereby creating a dataset $X_m$. This masking process is represented by ⊗, i.e., $X_m = M \otimes X_T$. Specifically, if the corresponding mask element is 1, then the related component in the input retains its original value. If it is 0, then the component is replaced with a predefined value.
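The masking step can be sketched as follows for a flat feature vector. The sketch assumes that the input length is an exact multiple of the number of segments, that mask entries are drawn independently with probability `keep_prob`, and that occluded features are replaced by a constant `fill`; these names and default values are illustrative assumptions rather than the settings used in the paper.

```python
import random


def make_masks(n_segments, n_masks, keep_prob=0.5, seed=0):
    """Draw n_masks random binary masks, one entry per segment."""
    rng = random.Random(seed)
    return [
        [1 if rng.random() < keep_prob else 0 for _ in range(n_segments)]
        for _ in range(n_masks)
    ]


def apply_mask(x, mask, fill=0.0):
    """Keep the features of segments whose mask entry is 1; replace the rest by fill."""
    n_segments = len(mask)
    if len(x) % n_segments != 0:
        raise ValueError("input length must be a multiple of the number of segments")
    seg_len = len(x) // n_segments
    return [x[i] if mask[i // seg_len] == 1 else fill for i in range(len(x))]


trigger = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]  # d = 8 features
masks = make_masks(n_segments=4, n_masks=6)          # n = 4 segments, s = 6 masks
masked_dataset = [apply_mask(trigger, m) for m in masks]

# Segments 0 and 2 are kept, segments 1 and 3 are occluded.
print(apply_mask(trigger, [1, 0, 1, 0]))
```

For images, the same idea applies with two-dimensional segments (patches) instead of contiguous index ranges.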

Phase 2: Model Prediction and Assessment. The masked dataset $X_m$ generated in Phase 1 is fed into the model, which produces predictions $y = f(X_m;\Theta)$ for the masked samples. In label-only settings, the predictions y are binarized to 0 or 1. Subsequently, a metric function is used to assess the accuracy of the predictions. The metric vector v for the s masked samples is computed according to Equation (3).

$$v = \mathrm{Metric}(y, c_T) \tag{3}$$

The metric function, denoted as $\mathrm{Metric}(\cdot)$, must be differentiable and capable of offering a quantitative assessment of the output. It can be tailored to suit specific deep learning tasks and prediction types. Given that most deep learning tasks typically have a differentiable metric function (such as a loss function), IW can be readily adapted for use in a wide range of deep learning applications.

Phase 3: Obtaining the Interpretation. After computing the metric vector v, we fit a linear model to evaluate the significance of each component. Ridge regression is used to improve robustness, and the resulting weight matrix W reflects the component importance. This weight matrix W for the linear model can be derived using the following normal equation:

$$W = \big(M^{\top} M + \lambda I\big)^{-1} M^{\top} v \tag{4}$$

where $\lambda$ denotes a hyper-parameter, and $I$ represents an $n \times n$ identity matrix.
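The ridge regression step can be checked numerically with a small, dependency-free sketch. It assumes the standard ridge normal equation, in which the weights solve (MᵀM + λI) W = Mᵀv for a binary mask matrix M (s rows, n columns) and a metric vector v of length s; the function names and the value of `lam` are illustrative assumptions.

```python
from itertools import product


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def ridge_weights(masks, v, lam=1e-3):
    """Return the weights solving (M^T M + lam * I) W = M^T v."""
    s, n = len(masks), len(masks[0])
    gram = [
        [sum(masks[k][i] * masks[k][j] for k in range(s)) + (lam if i == j else 0.0)
         for j in range(n)]
        for i in range(n)
    ]
    rhs = [sum(masks[k][i] * v[k] for k in range(s)) for i in range(n)]
    return solve_linear(gram, rhs)


# Toy check: the metric is an exact linear function of the mask entries,
# so the fitted weights should be close to the generating coefficients.
masks = [list(m) for m in product([0, 1], repeat=3)]
true_w = [2.0, -1.0, 0.5]
v = [sum(w * m for w, m in zip(true_w, mask)) for mask in masks]
print(ridge_weights(masks, v))
```

With a positive `lam`, the system matrix is positive definite, so the solve is well posed even when some segments are always masked together.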

To ensure the watermark embedding remains differentiable and compatible with gradient-based optimization, we define a mapping function as follows:

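$$W = \Phi(\Theta) = \mathcal{R}\big(M, v(\Theta)\big) \tag{5}$$

where $\Theta$ represents the DNN parameters, and $\mathcal{R}(\cdot)$ denotes the differentiable ridge regression operator. Using the chain rule, the gradient of the interpretation weights with respect to the model parameters is as follows:

$$\frac{\partial W}{\partial \Theta} = \frac{\partial W}{\partial v} \cdot \frac{\partial v}{\partial \Theta} \tag{6}$$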

Since both terms on the right-hand side are well defined in modern DNN frameworks, the entire watermark extraction and embedding pipeline remains differentiable.

Before binarization, it is often beneficial to normalize the weight vector to improve stability across samples:

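$$\tilde{W} = \frac{W - \mu_W}{\sigma_W} \tag{7}$$

where $\mu_W$ and $\sigma_W$ denote the mean and standard deviation of W, respectively. The final extracted binary watermark is obtained through a sign-based quantization:

$$\hat{\mathcal{W}} = \mathrm{sign}\big(\tilde{W}\big) \tag{8}$$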

The resulting binary vector represents the recovered watermark bits. In summary, LIME-based feature impact analysis offers a flexible and differentiable way to extract watermarks that does not depend on the specific model architecture. The process works by generating regional samples, testing the model’s predictions on masked inputs, and then using ridge regression to identify which components matter most. This yields a stable interpretation that carries the watermark signal. Finally, through binarization, the watermark can be recovered in a reliable manner, making ownership verification practical across different tasks while remaining compatible with gradient-based optimization.
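The normalization and binarization steps can be sketched in a few lines. The sketch assumes z-score normalization with the population standard deviation and maps a normalized value of exactly zero to +1; the small constant `tiny`, which guards against division by zero, and the function name are illustrative assumptions.

```python
import statistics


def extract_bits(weights, tiny=1e-12):
    """Normalize interpretation weights and binarize them to +1/-1 bits."""
    mu = statistics.mean(weights)
    sigma = statistics.pstdev(weights)
    normalized = [(w - mu) / (sigma + tiny) for w in weights]
    # Sign-based quantization; zero is mapped to +1 by convention here.
    return [1 if z >= 0 else -1 for z in normalized]


# All raw weights are positive, so a plain sign would return only +1 bits.
# Centering by the mean recovers the relative pattern instead.
print(extract_bits([0.9, 0.1, 0.7, 0.2]))
```

The example highlights why normalization matters: a global offset in the interpretation weights would otherwise collapse every bit to the same sign.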

3.4. Verifying Ownership

The ownership verification process for DNN models involves extracting the watermark from a suspicious model and comparing it with the original watermark to confirm ownership. It provides the model owner with a reliable means of proving authorship, which is essential in legal disputes, licensing scenarios, and commercial deployments. At the same time, it acts as a deterrent to model piracy, ensuring that only legitimate entities can benefit from the model. In practice, ownership verification can be conducted under different levels of access. In white-box settings, the internal parameters of the suspicious model are available, allowing direct extraction of the watermark. In contrast, black-box settings restrict access to only model outputs, making verification more challenging but also more realistic in real-world scenarios. Robustness against common attacks, such as model compression, fine tuning, or adversarial modifications, is essential to ensure that the embedded watermark remains detectable.

For a suspicious model, the model owner begins by extracting a watermark from it using the trigger samples and the watermark extraction algorithm based on feature impact analysis. The extracted watermark is then compared with the original watermark.

We provide two types of ownership verification procedures. In the first, ownership is confirmed if the normalized correlation between the embedded watermark and the extracted watermark exceeds a predefined threshold.
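A minimal sketch of this first procedure is given here. It assumes that the normalized correlation is computed as the cosine similarity of the two watermark vectors, which matches the later discussion of cosine similarity; the function names and the threshold values are hypothetical and chosen only for illustration.

```python
import math


def normalized_correlation(a, b):
    """Cosine similarity between two equally long watermark vectors."""
    if len(a) != len(b):
        raise ValueError("watermarks must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify_by_correlation(embedded, extracted, threshold):
    """Ownership is confirmed when the correlation exceeds the threshold."""
    return normalized_correlation(embedded, extracted) > threshold


embedded = [1, -1, 1, 1, -1, -1, 1, -1]
extracted = [1, -1, 1, 1, -1, -1, 1, 1]  # one bit flipped
print(normalized_correlation(embedded, extracted))
print(verify_by_correlation(embedded, extracted, threshold=0.7))
```

For +1/-1 watermarks of length n, each mismatched bit lowers the correlation by 2/n, so the threshold directly bounds the tolerated fraction of bit errors.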

The second ownership verification procedure is outlined in pseudocode form in Algorithm 1. It frames the comparison of the extracted watermark with the original watermark as a hypothesis testing problem, as follows.

Theorem 1.

Consider the watermark extracted from the suspicious model and the original watermark. We define the null hypothesis $H_0$ as the extracted watermark being independent of the original watermark, and the alternative hypothesis $H_1$ as the extracted watermark being related to or associated with the original watermark. The suspicious model can only be considered an unauthorized copy if $H_0$ is rejected.

We apply Pearson’s chi-squared test and determine the corresponding p-value p. If this p-value falls below a predefined significance threshold $\alpha$, then the null hypothesis is rejected, confirming the model as the rightful intellectual property of its original owner.

While cosine similarity offers a continuous and differentiable measure suitable for integration with gradient-based watermark embedding, the chi-squared test provides a complementary statistical view, producing a p-value that quantifies the likelihood of random agreement. This statistical criterion is particularly valuable for legal or third-party verification scenarios, where a fixed significance threshold offers objective and interpretable evidence of ownership. Therefore, cosine similarity serves as a training-friendly metric, whereas the chi-squared test provides a rigorous post hoc confirmation. In the experiments section, we adopt the chi-squared test as our evaluation metric.

3.5. Blockchain-Based Data Verification

Both owners and users can rely on a blockchain fabric to verify the integrity of model data during storage and sharing. The blockchain fabric uses IPFS [29] as distributed storage for model data distribution. The owner with identity i can publish model data, which includes a watermarked model and k associated trigger sample images. After successfully uploading the model data onto the IPFS network, the data owner receives a set of unique hash-based content identifiers (CIDs), which can be used to retrieve the model data from the IPFS network. The reference to the model data is represented as a Merkle tree built over the CIDs of the watermarked model and trigger samples. Finally, the sequential list of CIDs, D, along with its Merkle root, is saved on the blockchain through smart contracts.

At the model retrieval stage, model users call the smart contract’s function to query D and its Merkle root from the blockchain. To verify the integrity of model data, the user simply reconstructs a Merkle tree of D and calculates its root hash. Any modification of the sequential order of D or of the content of the watermarked model and trigger sample images will lead to a different root hash value of the Merkle tree. Thus, the integrity of the received model data can be efficiently verified by comparing the recomputed root hash with the proof information recorded on the blockchain.
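The integrity check can be sketched as follows. This is an illustrative assumption rather than the prototype's actual contract logic: it uses SHA-256, a binary Merkle tree, and the common convention of duplicating the last node on odd-sized levels; the CID strings are placeholders, and `merkle_root` and `verify_model_data` are hypothetical names.

```python
import hashlib


def _sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(cids):
    """Root hash (hex) of a binary Merkle tree over an ordered CID list."""
    if not cids:
        raise ValueError("at least one CID is required")
    level = [_sha256(cid.encode("utf-8")) for cid in cids]
    while len(level) > 1:
        if len(level) % 2 == 1:
            # Assumption: duplicate the last node when a level is odd-sized.
            level.append(level[-1])
        level = [_sha256(level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0].hex()


def verify_model_data(cids, recorded_root):
    """Recompute the root from the CIDs and compare it with the recorded one."""
    return merkle_root(cids) == recorded_root


# Placeholder identifiers standing in for real IPFS CIDs.
cid_list = ["cid-model", "cid-trigger-0", "cid-trigger-1"]
recorded = merkle_root(cid_list)  # value the owner would store on-chain
print(verify_model_data(cid_list, recorded))
print(verify_model_data(["cid-model", "cid-trigger-1", "cid-trigger-0"], recorded))
```

Because the tree is built over the ordered list, both a changed CID and a reordered list produce a different root, which is the property the verification step relies on.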

4. Experimental Results

In this section, we implement IW in a widely used deep learning task: image classification. We assess its effectiveness, safety, and uniqueness based on the objectives defined in Section 1. In addition, we also evaluate the latency incurred by different operation stages in the model data sharing and authentication process. Furthermore, we examine IW’s robustness against different watermark removal attacks.

Experimental Setup. The model was trained using PyTorch (version 2.0.1) on four NVIDIA Tesla V100 GPUs. After obtaining the pre-trained model, we deployed it on a Jetson Orin Nano Super Developer Kit [30]. The Jetson Orin Nano is widely recognized as an effective edge device across various research domains, particularly in artificial intelligence (AI), robotics, computer vision, and embedded systems. Its combination of high computational power and energy efficiency makes it a suitable choice for real-time processing at the edge. We also implemented a prototype of the blockchain-based security fabric. We used Solidity [31] to develop smart contracts that record the reference information of the model and its trigger samples and verify data integrity during sharing. Ganache [32] was used to set up a development Ethereum blockchain network. Truffle [33] was used to compile the smart contracts and deploy the binary code on the development blockchain network. We set up a private IPFS [34] network to simulate distributed storage.

Watermark Definition. In Algorithm 1, we set . In addition, we computed the watermark success rate (WSR) to assess the similarity between the recovered watermark and the ground-truth. The WSR is defined by the following equation:

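$$\mathrm{WSR} = \frac{1}{n} \sum_{i=1}^{n} \mathbb{I}\{\tilde{\mathcal{W}}_i = \mathcal{W}_i\},$$

where n represents the watermark length, $\tilde{\mathcal{W}}_i$ and $\mathcal{W}_i$ denote the i-th bits of the recovered and ground-truth watermarks, and $\mathbb{I}\{\cdot\}$ denotes the indicator function. A smaller p-value p and a higher WSR indicate that the recovered watermark closely resembles the ground-truth.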
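As a small illustration, the WSR can be computed as the fraction of matching bits. The function name `watermark_success_rate` is hypothetical, and the bit values below are made up for the example.

```python
def watermark_success_rate(recovered, ground_truth):
    """Fraction of positions where the recovered bit equals the true bit."""
    if len(recovered) != len(ground_truth) or not ground_truth:
        raise ValueError("watermarks must be non-empty and of equal length")
    matches = sum(1 for r, g in zip(recovered, ground_truth) if r == g)
    return matches / len(ground_truth)


truth = [1, -1, 1, 1, -1, -1, 1, -1]
recovered = [1, -1, 1, 1, -1, -1, 1, 1]  # last bit differs
print(watermark_success_rate(recovered, truth))  # 7 of 8 bits match: 0.875
```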

4.1. Masked Sample Generation Process

The masked sample generation process serves as a crucial step for extracting the watermark through model interpretation. Specifically, the purpose of generating masked samples is to analyze how different regions or feature components of a trigger sample contribute to the model’s output, thereby revealing the embedded watermark information in a LIME-style attribution manner.

First, the trigger sample is divided into several basic parts, which can be understood as spatial regions or semantic feature groups. These parts act as the masking units in subsequent operations. To probe the model’s sensitivity to each region, a set of binary masks is randomly generated, where each mask assigns a binary value of 1 (to retain a part) or 0 (to mask it out). The number of masks s determines the granularity of the explanation and is typically set to a moderate size to balance accuracy and efficiency.

For each mask $m_i$, a corresponding masked sample $x_i$ is constructed by applying the mask to the original trigger sample $x$. Formally, this process can be expressed as

$$x_i = m_i \odot x + (1 - m_i) \odot x_0,$$

where ⊙ denotes element-wise multiplication, and $x_0$ represents a baseline input, such as a zero-valued or mean-valued image. Each masked sample is then fed into the target model to obtain the prediction output. By observing the model’s responses to various masked inputs, the framework captures how different feature regions influence the model’s decision.

Subsequently, a metric function is applied to measure the deviation between each prediction and the target label, resulting in a metric vector with one entry per masked sample. This vector characterizes the model’s sensitivity under different masking conditions and forms the basis for fitting a linear model to infer feature importance. Through this process, the model’s internal reasoning is quantitatively analyzed, allowing the extracted feature importance weights to be further transformed into the watermark bits.
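The masking, scoring, and linear fitting steps can be sketched on a toy problem. This is not the authors' implementation: the "model" is a hypothetical linear function (`toy_metric`) that stands in for prediction plus metric evaluation, each basic part is a single number, the baseline is zero, and the ridge regression is solved in closed form with a small hand-written solver so that the example needs only the standard library.

```python
import random


def make_masks(num_masks, num_parts, rng):
    """Draw random binary masks: 1 keeps a part, 0 masks it out."""
    return [[rng.randint(0, 1) for _ in range(num_parts)]
            for _ in range(num_masks)]


def apply_mask(parts, mask, baseline):
    """Keep part j where mask[j] == 1, otherwise use the baseline part."""
    return [p if m == 1 else b for p, m, b in zip(parts, mask, baseline)]


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def ridge_weights(masks, metrics, lam):
    """Closed-form ridge solution (M^T M + lam I)^(-1) M^T v."""
    d = len(masks[0])
    gram = [[sum(m[i] * m[j] for m in masks) + (lam if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rhs = [sum(m[i] * v for m, v in zip(masks, metrics)) for i in range(d)]
    return solve_linear(gram, rhs)


def toy_metric(sample):
    """Hypothetical stand-in for the model output and metric function."""
    influence = [2.0, -1.0, 0.5, -3.0]
    return sum(c * x for c, x in zip(influence, sample))


rng = random.Random(0)
trigger_parts = [1.0, 1.0, 1.0, 1.0]
baseline_parts = [0.0, 0.0, 0.0, 0.0]
masks = make_masks(64, len(trigger_parts), rng)
metrics = [toy_metric(apply_mask(trigger_parts, m, baseline_parts))
           for m in masks]
weights = ridge_weights(masks, metrics, lam=1e-3)
bits = [1 if w >= 0 else -1 for w in weights]
print([round(w, 3) for w in weights], bits)
```

In this toy setting the recovered weights should closely track the influence values hidden in `toy_metric`, so their signs give the bit pattern; with a real network the relationship is only approximately linear, which is why the number of masks matters.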

4.2. Key Parameters

Several key hyper-parameters are specified. For the coefficient in Equation (1), which controls the tradeoff between maintaining the model’s performance and embedding the watermark effectively, is set to 1.2, with the optimal range being 1.0 to 1.5. For the hinge margin in Equation (2), we set . The length of the watermark n can be set to 32, 48, 64, 96, 128, and 256. The number of masks s is set to 260, with the optimal range being 250–300. When only a few masked samples are available, it is difficult to accurately assess the significance of each basic part, resulting in unsatisfactory feature impact analysis. For the ridge parameter in Equation (4), it is typically set to a small positive value, such as within the range , which helps prevent the matrix from being singular or numerically unstable while avoiding excessive smoothing of feature importance. In our work, the default setting is .

Regarding the metric function , when we have full control over the training (embedding) process, it is preferable to adopt a differentiable metric, such as the predicted probability of the target class, cross-entropy loss, or cosine similarity between explanation vectors. This choice facilitates gradient-based optimization, allowing the model to effectively encode watermark bits into its feature impact analysis while preserving the original task performance. In contrast, when only label-only access to a suspect model is available, which is a common scenario in real-world ownership verification, the metric must be discretized. Although this setting discards the confidence information contained in softmax outputs, watermark verification remains feasible because the binary pattern of “1” and “0” responses across numerous masked samples implicitly carries the embedded watermark bits. Our method remains applicable to multi-class classification tasks (e.g., CIFAR-10 and ImageNet); however, successful extraction typically requires a larger number of masked samples or API queries (e.g., s > 1024 for a 256-bit watermark on ImageNet with ResNet-18), and it exhibits slightly reduced robustness and statistical reliability per query due to the binary nature of the metric.

4.3. Performance Evaluation

We performed experiments on CIFAR-10 and a subset of the ImageNet dataset using ResNet-18, a widely used convolutional neural network (CNN). CIFAR-10 is a benchmark dataset consisting of 60,000 color images across 10 classes, with each image having a resolution of $32 \times 32$ pixels. The dataset is divided into 50,000 training images and 10,000 testing images [35]. ImageNet contains high-resolution natural images spanning a wide range of object categories [36]. We randomly selected a subset of 80 classes, with 600 training images and 150 testing images per class. The images were resized to match the input requirements of ResNet-18. Initially, we pre-trained ResNet-18 on both datasets, and we then fine tuned the models for 40 epochs to embed the watermark using IW.

We employed the stochastic gradient descent (SGD) optimizer with an initial learning rate of . The batch size was set to 128 for the CIFAR-10 dataset and 1024 for ImageNet, in accordance with the respective dataset scales. The number of training epochs was set to 300 for both ResNet-18 models on the CIFAR-10 and ImageNet datasets. Early stopping was applied based on dual-criteria monitoring of the validation accuracy and the watermark loss. Specifically, the training process was terminated if, for ten consecutive epochs, neither the validation accuracy improved by more than 0.1% nor the watermark loss decreased by more than 0.001. This criterion prevents unnecessary overfitting of the watermark embedding process while maintaining stable task performance. The random seed used in our experiments was 42.
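The dual-criteria early stopping rule can be sketched as follows. The sketch assumes that improvement is measured against the best value seen so far and that the 0.1% accuracy margin is expressed in percentage points; the class name `DualCriteriaEarlyStopping` and its interface are hypothetical.

```python
class DualCriteriaEarlyStopping:
    """Stop when neither monitored quantity improves for `patience` epochs."""

    def __init__(self, acc_delta=0.1, loss_delta=0.001, patience=10):
        self.acc_delta = acc_delta    # minimum accuracy gain (percentage points)
        self.loss_delta = loss_delta  # minimum watermark-loss decrease
        self.patience = patience
        self.best_acc = float("-inf")
        self.best_loss = float("inf")
        self.stale_epochs = 0

    def step(self, val_acc, wm_loss):
        """Record one epoch; return True when training should stop."""
        acc_improved = val_acc > self.best_acc + self.acc_delta
        loss_improved = wm_loss < self.best_loss - self.loss_delta
        if acc_improved:
            self.best_acc = val_acc
        if loss_improved:
            self.best_loss = wm_loss
        if acc_improved or loss_improved:
            self.stale_epochs = 0
        else:
            self.stale_epochs += 1
        return self.stale_epochs >= self.patience


stopper = DualCriteriaEarlyStopping()
history = [(90.0, 0.5)] + [(90.05, 0.4995)] * 10  # made-up epoch values
for epoch, (acc, loss) in enumerate(history, start=1):
    if stopper.step(acc, loss):
        print(f"stopping after epoch {epoch}")
        break
```

A single improvement in either quantity resets the counter, so training continues as long as the task accuracy or the watermark loss is still making progress.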

A Jetson Orin Nano module with 8 GB of memory was utilized for deployment. The module features 128-bit LPDDR5 memory with a standard bandwidth of 68 GB/s. Regarding power configuration, the Orin Nano supports multiple power modes, including 7 W, 15 W, and the 25 W “Super/MAXN” mode available in recent JetPack releases. In the experimental setup, the system operates in the 15 W mode by default, while the 25 W mode can be enabled to maximize CPU, GPU, and memory frequencies when higher performance is required. Note that the model was not trained on the Jetson Orin Nano; instead, we only deployed the pre-trained model on it.

To assess the performance of IW, we implemented various trigger set construction techniques inspired by different backdoor watermarking methods. These include the following: (1) Noise, where Gaussian noise is used as the trigger sample; (2) Patch, where a meaningful patch (e.g., ’MARK’) is inserted into the images; and (3) Black-edge, which involves adding a black border around the images.

To assess both the effectiveness and innocuity, Table 2 and Table 3 report the model’s prediction accuracy (Pred Acc), the p-value of the chi-squared ownership verification test, and the watermark success rate (WSR) under three different types of watermark triggers: Noise, Patch, and Black-edge. The watermark length varied from 32 to 256.

Table 2.

The prediction accuracy, p-value, and WSR for the model watermarking performance testing on the CIFAR-10 dataset.

Table 3.

The model watermarking performance testing on a subset of the ImageNet dataset.

Across all experiments, as shown in Table 2 and Table 3, the baseline accuracy of the unwatermarked model (No WM) remained consistently at 91.36% for the CIFAR-10 dataset and 75.81% for the ImageNet dataset. When the watermarks were embedded, the prediction accuracy only showed a negligible decline, indicating minimal impact on the classification performance. The largest deviation was observed with the Black-edge trigger of length 48, as shown in Table 2, where the accuracy slightly dropped to 91.12%.

The p-values were consistently low, ranging from to , as shown in Table 2, i.e., significantly lower than the threshold . Furthermore, the WSR values remained close to 1.000, indicating a nearly 100% success rate in the watermark embedding and extraction across all configurations. The chi-squared test statistic was approximately proportional to , where n represents the watermark length.

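Chi-Squared Test for Ownership Verification. The statistic is computed as follows:

$$\chi^2 = \sum_{i} \frac{(O_i - E_i)^2}{E_i},$$

where $O_i$ and $E_i$ denote the observed and expected counts in cell $i$ of the contingency table. With one degree of freedom ($df = 1$) and a significance level of $\alpha$, the critical value is $\chi^2_{\alpha, 1}$. The corresponding p-value is calculated as the right-tail probability of the chi-squared distribution:

$$p = P\left(X \geq \chi^2\right), \quad X \sim \chi^2_{1}.$$

Since $p < \alpha$, we reject the null hypothesis, indicating that the suspicious model contains the owner’s watermark and is likely derived from the protected model.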

To analyze the differences from the backdoor watermarks, we conducted a comparative experiment, as shown in Table 4 and Table 5. To assess the level of harmlessness, we introduced the harmless degree H as an evaluation metric. Specifically, H was determined based on the accuracy obtained from both the benign testing dataset and the trigger set using their respective ground-truth labels, as defined below:

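$$H = \frac{1}{|\mathcal{D}_b \cup \mathcal{D}_t|} \sum_{I \in \mathcal{D}_b \cup \mathcal{D}_t} \mathbb{I}\{\hat{M}(I) = g(I)\},$$

where $\mathcal{D}_b$ and $\mathcal{D}_t$ denote the benign testing dataset and the trigger set, $\hat{M}$ is the watermarked model, $\mathbb{I}\{\cdot\}$ is the indicator function, and the function $g(\cdot)$ consistently outputs the ground-truth label for $I$. H represents the proportion of inputs for which the model produces correct predictions. In other words, when the model correctly predicts all samples (i.e., its performance is completely unaffected by watermark embedding), we have $H = 1$. Conversely, if the watermark introduces interference and degrades the model’s performance, then $H < 1$. As H increases, the impact of watermarks on the model’s utility becomes smaller. Moreover, H is averaged over both clean and trigger sets, and it is weighted by their respective sizes.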
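A minimal sketch of this metric follows, assuming that the benign and trigger samples are simply pooled, which is equivalent to a size-weighted average of the two accuracies. The `predict` callable and the toy data are hypothetical.

```python
def harmless_degree(predict, benign_set, trigger_set):
    """Fraction of pooled samples predicted as their ground-truth label."""
    samples = list(benign_set) + list(trigger_set)
    if not samples:
        raise ValueError("at least one sample is required")
    correct = sum(1 for x, label in samples if predict(x) == label)
    return correct / len(samples)


def toy_predict(x):
    """Hypothetical classifier: predicts the parity of an integer input."""
    return x % 2


benign = [(0, 0), (1, 1), (2, 0), (3, 1)]  # all predicted correctly
triggers = [(4, 0), (5, 0)]                # the second one is mispredicted
print(harmless_degree(toy_predict, benign, triggers))  # 5 correct out of 6
```

Under this definition, a backdoor-style watermark that forces trigger samples to a label other than their ground truth lowers H, whereas a watermark that leaves predictions on trigger samples unchanged does not.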

Table 4.

Performance on CIFAR-10 dataset. The harmless degree is represented by H (where a higher value is better).

Table 5.

The performance on the ImageNet dataset. The harmless degree is represented by H (where a higher value is better).

The harmless degree H is conceptually aligned with several commonly used fidelity and utility metrics. Specifically, it can be regarded as an accuracy-like measure that quantifies the proportion of samples whose predictions remain correct after watermark embedding. Therefore, a higher H implies better task fidelity and minimal degradation in model utility. In practice, H exhibits a similar trend to conventional indicators, such as the accuracy, accuracy delta (i.e., the performance gap before and after watermark embedding), and task-specific loss, all of which evaluate the preservation of model behavior under watermarking.

To illustrate the statistical setup of the test, we provide an example based on a suspicious model that was subjected to verification. Suppose that the suspicious model produces 162 matching bits out of a 256-bit watermark. The corresponding contingency table is shown in Table 6.

Table 6.

Contingency table for the chi-squared test (watermark length $n = 256$).
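The example of 162 matching bits out of 256 can be worked through numerically. Since the contingency table itself is not reproduced here, this sketch assumes a goodness-of-fit form of Pearson's test in which random agreement yields an expected 50% match rate; the authors' exact table layout may differ. For one degree of freedom, the right-tail probability has the closed form erfc(sqrt(x / 2)), so no external statistics library is needed. The function name `chi_squared_match_test` is hypothetical.

```python
import math


def chi_squared_match_test(matches, n, p_chance=0.5):
    """Pearson statistic and p-value for match/mismatch counts (df = 1)."""
    observed = [matches, n - matches]
    expected = [n * p_chance, n * (1.0 - p_chance)]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # Right tail of the chi-squared distribution with one degree of freedom.
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


stat, p_value = chi_squared_match_test(162, 256)
print(stat)     # 18.0625
print(p_value)  # on the order of 1e-5
```

Under this assumption, the expected counts are 128 matches and 128 mismatches, so the statistic is 2 × 34² / 128 = 18.0625, which lies far in the right tail of the distribution.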

As presented in Table 4 and Table 5, our IW outperforms backdoor-based methods, as reflected in its higher harmlessness degree H. For instance, with a trigger size of 256, our IW achieves an H value approximately 5.5% higher than that of backdoor-based watermarking techniques, while its effectiveness is comparable to, or even surpasses, that of baseline backdoor-based approaches.

4.4. Resilience Against Watermark Removal Attacks

Once adversaries acquire the model from external sources, they may employ different strategies to eliminate watermarks or bypass detection. Therefore, we assessed whether our IW can withstand such attempts. We specifically examined two types of attacks: fine tuning and model pruning. Fine tuning is a widely used transfer learning technique in which adversaries adapt a pre-trained model to a new dataset or task. This process can alter the parameter distribution and potentially weaken or overwrite the embedded watermark. For instance, if the watermark is encoded in sensitive weight patterns, fine tuning may gradually diminish its detectability while maintaining the model’s predictive performance.

Model pruning is another common attack where redundant or low-magnitude parameters are systematically removed to reduce the model size and improve efficiency. While pruning is often performed to accelerate inference, adversaries may exploit it to erase or distort the watermark. A well-designed IW should be resilient to such structural modifications, allowing reliable watermark extraction despite partial loss of network weights. Therefore, evaluating watermark performance under pruning attacks is critical to demonstrate the watermark’s robustness in real-world deployment scenarios. In this study, we perform pruning by setting to zero the neurons with the smallest norm. Specifically, the pruning rate represents the fraction of neurons that are removed.
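The pruning attack can be illustrated on a toy network represented as nested lists. The norm order is not recoverable from this section, so the sketch assumes the Euclidean (L2) norm; the data layout and the function name `prune_neurons_globally` are likewise hypothetical.

```python
import math


def prune_neurons_globally(layers, pruning_rate):
    """Zero out the lowest-norm fraction of neurons across all layers.

    `layers` is a list of layers, each layer a list of neurons, and each
    neuron a list of weights. A pruned copy is returned.
    """
    if not 0.0 <= pruning_rate <= 1.0:
        raise ValueError("pruning_rate must lie in [0, 1]")
    # Assumption: neurons are ranked by their L2 norm over the whole network.
    ranked = sorted(
        (math.sqrt(sum(w * w for w in neuron)), li, ni)
        for li, layer in enumerate(layers)
        for ni, neuron in enumerate(layer)
    )
    num_to_prune = int(pruning_rate * len(ranked))
    pruned = [[neuron[:] for neuron in layer] for layer in layers]
    for _, li, ni in ranked[:num_to_prune]:
        pruned[li][ni] = [0.0] * len(pruned[li][ni])
    return pruned


toy_layers = [
    [[0.1, 0.1], [2.0, 1.0]],
    [[0.05, 0.0], [1.0, 1.0]],
]
print(prune_neurons_globally(toy_layers, pruning_rate=0.5))
```

Ranking neurons across the whole network, rather than within each layer, means that layers with many small-norm neurons lose proportionally more of them at a given pruning rate.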

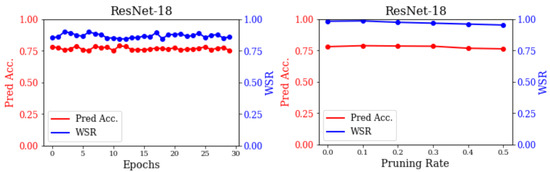

As shown in Figure 4, we first tested the resilience against a fine-tuning attack (left). We fine tuned the IW-watermarked models for 30 epochs, and the WSR remained above 0.84. These findings highlight the robustness of our IW against fine-tuning attacks. We attribute this mainly to preserving the original labels of watermarked samples during training, which minimizes the impact of fine tuning compared to backdoor-based approaches. Then, we evaluated the resilience against the model-pruning attack (right). In the pruning resistance experiments, a structured pruning strategy was adopted, where entire neurons were pruned rather than individual weights. Neurons were ranked globally by their norm, and those with the lowest norms were removed according to the specified pruning rate. As the pruning rate increases, the prediction accuracy of ResNet-18 declines, demonstrating a reduction in the model’s effectiveness. Nevertheless, the WSR remains above 0.9. These findings indicate that our IW remains robust against model-pruning attacks.

Figure 4.

The WSR and prediction accuracy of the watermarked ResNet-18 against a fine-tuning attack (left) and a model-pruning attack (right).

4.5. Latency of Model Data Authentication

We evaluated the time latency of BIMW for model data authentication in six stages. Publishing a model along with its trigger samples on the owner’s side requires three stages: (1) watermark embedding; (2) publishing the model data onto IPFS; and (3) recording the reference on the blockchain. The user needs three further stages to verify model data integrity and model ownership: (4) querying the reference from the blockchain; (5) retrieving the model data from IPFS and verifying its integrity; and (6) extracting the watermark. We conducted 50 Monte Carlo test runs for each test scenario and report the averages.

Table 7 shows the time latency incurred by the key stages of BIMW during the model authentication process. All test cases were conducted on a Jetson Orin Nano platform. The watermark embedding process took an average of 0.11 s to create an interpretable watermarked model on the owner’s side. On the users’ side, it took about 0.02 s to extract watermarks using trigger sample images and verify the model’s ownership. The numerical results demonstrate the efficiency of running the proposed innocuous model watermarking method in an edge computing environment.

Table 7.

The latency of the model data authentication process (seconds).

We used 10 trigger sample images (about 86 KB per image) and a watermarked model (about 43.7 MB) to evaluate the delays incurred by model data distribution and retrieval atop the blockchain fabric. Due to the large size of the watermarked model, it took about 4.5 s to upload the model data to the IPFS network. However, retrieving the model data from the IPFS network only introduced small delays (about 0.7 s). The latency of recording the reference through smart contracts was greatly impacted by the consensus protocol. It took about 1.36 s to save the reference on the Ganache network. In contrast, querying the reference from the blockchain took much less time (about 0.5 s). Publishing data on the blockchain fabric therefore caused more delay (5.86 s) than the verification process (1.19 s). However, these overheads only occur once, when the owners first publish and distribute their model data. In summary, our solution introduces low latency during model data retrieval and verification procedures on edge computing platforms.

5. Conclusions

This paper introduces the novel BIMW, a decentralized AI model watermarking framework to guarantee the security and verifiable ownership of model usages under a distributed edge computing environment. To solve issues in widely applied backdoor-based model watermarking approaches, we propose an innocuous model watermarking method by leveraging an interpretable watermarking algorithm and feature impact analysis. The comprehensive experiments demonstrate the effectiveness, robustness, and harmlessness of BIMW compared with backdoor-based model watermarking solutions. Through integration of a blockchain-based security fabric, BIMW supports secure and trustworthy AI model deployment and sharing under distributed edge computing environments.

However, the current prototype of BIMW remains nascent, and several challenges are yet to be addressed. The current prototype has been empirically verified on a Jetson Orin Nano, but the feasibility and optimization strategies of watermark embedding and extraction have not been thoroughly evaluated across multiple devices with different resource constraints. In addition, the proposed framework uses a local test network to evaluate the latency incurred by IPFS storage and the blockchain network. This may not reflect the real-time delays of EI applications under a real-world blockchain network. Moreover, our BIMW relies on IPFS and the blockchain to ensure the integrity and traceability of model distribution, but privacy cannot be guaranteed in model ownership verification.

Our ongoing efforts will focus on several directions. While IW has minimal effect on the watermarked model, it remains an intrusive watermarking technique. It might be more beneficial to explore advanced interpretable AI approaches and include comprehensive multi-seed evaluations. As edge computing continues to expand, deploying and managing model watermarks at scale across numerous devices presents significant challenges, particularly in terms of scalability and management efficiency, making it essential to ensure their reliable performance across different hardware platforms and devices. Last but not least, considering the limited capability of diverse edge devices and dynamic network environments, we will conduct a comprehensive evaluation of the performance and security based on practical EI applications, like federated learning.

Author Contributions

Conceptualization, X.L. and R.X.; methodology, X.L. and R.X.; software, X.L. and R.X.; validation, X.L. and R.X.; formal analysis, X.L. and R.X.; investigation, X.L. and R.X.; resources, X.L. and R.X.; data curation, X.L. and R.X.; writing—original draft preparation, X.L. and R.X.; writing—review and editing, X.L. and R.X.; visualization, X.L. and R.X.; supervision, R.X.; project administration, R.X.; funding acquisition, R.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no funding.

Data Availability Statement

The original data presented in this study are openly available in the following datasets: CIFAR-10 (https://www.cs.toronto.edu/~kriz/cifar.html, accessed on 22 October 2025) and ImageNet (https://www.image-net.org/index.php, accessed on 22 October 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| EI | Edge Intelligence |
| IW | Interpretable Watermarking |
| IoT | Internet of Things |
| DL | Deep Learning |
| IP | Intellectual Property |
| FIA | Feature Impact Analysis |
| IML | Interpretable Machine Learning |
| SHAP | Shapley Additive Explanations |
| LIME | Local Interpretable Model-Agnostic Explanations |
| CIDs | Content Identifiers |
| WSR | Watermark Success Rate |

References

- Liu, X.; Xu, R.; Chen, Y. A Decentralized Digital Watermarking Framework for Secure and Auditable Video Data in Smart Vehicular Networks. Future Internet 2024, 16, 11. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge intelligence: Paving the last mile of artificial intelligence with edge computing. Proc. IEEE 2019, 107, 1738–1762. [Google Scholar] [CrossRef]

- Deng, S.; Zhao, H.; Fang, W.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Edge intelligence: The confluence of edge computing and artificial intelligence. IEEE Internet Things J. 2020, 7, 7457–7469. [Google Scholar] [CrossRef]

- Wu, H.; Zhang, Z.; Guan, C.; Wolter, K.; Xu, M. Collaborate edge and cloud computing with distributed deep learning for smart city internet of things. IEEE Internet Things J. 2020, 7, 8099–8110. [Google Scholar] [CrossRef]

- Li, L.; Ota, K.; Dong, M. Deep learning for smart industry: Efficient manufacture inspection system with fog computing. IEEE Trans. Ind. Inform. 2018, 14, 4665–4673. [Google Scholar] [CrossRef]

- Zhang, Y.; Jia, R.; Pei, H.; Wang, W.; Li, B.; Song, D. The secret revealer: Generative model-inversion attacks against deep neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 253–261. [Google Scholar]

- Tian, Z.; Cui, L.; Liang, J.; Yu, S. A comprehensive survey on poisoning attacks and countermeasures in machine learning. ACM Comput. Surv. 2022, 55, 1–35. [Google Scholar] [CrossRef]

- Liang, Y.; Xiao, J.; Gan, W.; Yu, P.S. Watermarking techniques for large language models: A survey. arXiv 2024, arXiv:2409.00089. [Google Scholar]

- Wang, R.; Li, H.; Mu, L.; Ren, J.; Guo, S.; Liu, L.; Fang, L.; Chen, J.; Wang, L. Rethinking the vulnerability of dnn watermarking: Are watermarks robust against naturalness-aware perturbations? In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 1808–1818. [Google Scholar]

- Jia, H.; Choquette-Choo, C.A.; Chandrasekaran, V.; Papernot, N. Entangled watermarks as a defense against model extraction. In Proceedings of the 30th USENIX Security Symposium (USENIX Security 21), Online, 11–13 August 2021; pp. 1937–1954. [Google Scholar]

- Yan, Y.; Pan, X.; Zhang, M.; Yang, M. Rethinking {White-Box} watermarks on deep learning models under neural structural obfuscation. In Proceedings of the 32nd USENIX Security Symposium (USENIX Security 23), Anaheim, CA, USA, 9–11 August 2023; pp. 2347–2364. [Google Scholar]

- Adi, Y.; Baum, C.; Cisse, M.; Pinkas, B.; Keshet, J. Turning your weakness into a strength: Watermarking deep neural networks by backdooring. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 1615–1631. [Google Scholar]

- Shao, S.; Li, Y.; Yao, H.; He, Y.; Qin, Z.; Ren, K. Explanation as a watermark: Towards harmless and multi-bit model ownership verification via watermarking feature attribution. arXiv 2024, arXiv:2405.04825. [Google Scholar] [CrossRef]

- Singh, P.; Devi, K.J.; Thakkar, H.K.; Bilal, M.; Nayyar, A.; Kwak, D. Robust and secure medical image watermarking for edge-enabled e-healthcare. IEEE Access 2023, 11, 135831–135845. [Google Scholar] [CrossRef]

- Liu, X.; Xu, R.; Peng, X. BEWSAT: Blockchain-enabled watermarking for secure authentication and tamper localization in industrial visual inspection. In Proceedings of the Eighth International Conference on Machine Vision and Applications (ICMVA 2025), Melbourne, Australia, 12–14 June 2025; Volume 13734, pp. 54–65. [Google Scholar]

- Xu, R.; Liu, X.; Nagothu, D.; Qu, Q.; Chen, Y. Detecting Manipulated Digital Entities Through Real-World Anchors. In Proceedings of the International Conference on Advanced Information Networking and Applications; Springer: Berlin/Heidelberg, Germany, 2025; pp. 450–461. [Google Scholar]

- Saha, A.; Subramanya, A.; Pirsiavash, H. Hidden trigger backdoor attacks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11957–11965. [Google Scholar]

- Li, E.; Zhou, Z.; Chen, X. Edge intelligence: On-demand deep learning model co-inference with device-edge synergy. In Proceedings of the 2018 Workshop on Mobile Edge Communications, New York, NY, USA, 20 August 2018; pp. 31–36. [Google Scholar]

- Liu, X.; Xu, R.; Zhao, C. AGFI-GAN: An Attention-Guided and Feature-Integrated Watermarking Model Based on Generative Adversarial Network Framework for Secure and Auditable Medical Imaging Application. Electronics 2024, 14, 86. [Google Scholar] [CrossRef]

- Boenisch, F. A systematic review on model watermarking for neural networks. Front. Big Data 2021, 4, 729663. [Google Scholar] [CrossRef] [PubMed]

- Pan, X.; Zhang, M.; Yan, Y.; Wang, Y.; Yang, M. Cracking white-box dnn watermarks via invariant neuron transforms. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Long Beach, CA, USA, 6–10 August 2023; pp. 1783–1794. [Google Scholar]

- Li, Y.; Zhu, M.; Yang, X.; Jiang, Y.; Wei, T.; Xia, S.T. Black-box dataset ownership verification via backdoor watermarking. IEEE Trans. Inf. Forensics Secur. 2023, 18, 2318–2332. [Google Scholar] [CrossRef]

- Dziedzic, A.; Duan, H.; Kaleem, M.A.; Dhawan, N.; Guan, J.; Cattan, Y.; Boenisch, F.; Papernot, N. Dataset inference for self-supervised models. Adv. Neural Inf. Process. Syst. 2022, 35, 12058–12070. [Google Scholar]

- Battah, A.; Madine, M.; Yaqoob, I.; Salah, K.; Hasan, H.R.; Jayaraman, R. Blockchain and NFTs for trusted ownership, trading, and access of AI models. IEEE Access 2022, 10, 112230–112249. [Google Scholar] [CrossRef]

- Molnar, C. Interpretable Machine Learning; Lulu Press: Morrisville, ND, USA, 2020. [Google Scholar]

- Murdoch, W.J.; Singh, C.; Kumbier, K.; Abbasi-Asl, R.; Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl. Acad. Sci. USA 2019, 116, 22071–22080. [Google Scholar] [CrossRef] [PubMed]

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why should i trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13 August 2016; pp. 1135–1144. [Google Scholar]

- Garreau, D.; Luxburg, U. Explaining the explainer: A first theoretical analysis of LIME. In Proceedings of the International Conference on Artificial Intelligence and Statistics, PMLR, Online, 26–28 August 2020; pp. 1287–1296.

- Benet, J. IPFS-content addressed, versioned, P2P file system. arXiv 2014, arXiv:1407.3561.

- Jetson Orin Nano Super Developer Kit. Available online: https://www.nvidia.com/en-us/autonomous-machines/embedded-systems/jetson-orin/nano-super-developer-kit/ (accessed on 22 October 2025).

- Solidity. Available online: https://docs.soliditylang.org/en/v0.8.13/ (accessed on 22 October 2025).

- Ganache. Available online: https://archive.trufflesuite.com/ganache/ (accessed on 22 October 2025).

- Truffle. Available online: https://archive.trufflesuite.com/docs/truffle/ (accessed on 22 October 2025).

- IPFS Docs. Available online: https://docs.ipfs.tech/ (accessed on 22 October 2025).

- Krizhevsky, A.; Hinton, G. Convolutional deep belief networks on CIFAR-10. 2010, pp. 1–9. Available online: https://www.cs.toronto.edu/~kriz/conv-cifar10-aug2010.pdf (accessed on 22 October 2025).

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).