Beyond Accuracy: Benchmarking Machine Learning Models for Efficient and Sustainable SaaS Decision Support

Abstract

1. Introduction

- The benchmarking of 17 ML models in SaaS for churn prediction. Related works also benchmark ML models for churn prediction in SaaS, yet the proposed work is the first to examine such an extended set of models: Sanches et al. [4] examined seven ML models, Rahman et al. [11] four, Tékouabou et al. [12] six, De Lima Lemos et al. [13] six, Al-Najjar et al. [14] five, Suh [15] one, Lalwani et al. [16] four, Rautio [17] three and Maan et al. [18] four. Moreover, a literature review on ML in SaaS [6] highlights that the recent literature on ML methods for decision support in SaaS, including churn prediction, has not reported a single work benchmarking more than 12 ML models.

- An exhaustive evaluation using predictive, efficiency and sustainability measures for SaaS churn prediction, which, to the best of our knowledge, is the first in the literature to cover all of these aspects in such an extensive experimental setup.

- Employment of two public datasets, KKBOX and Telco. Related works mainly use private datasets; among those that use public datasets, none has presented benchmark results for KKBOX and Telco together. KKBOX has been used separately [19,20,21,22], as has the Telco dataset [23,24,25,26].

- Presentation of multiple experiments (PC, Google Cloud VMs, different regions), focusing on cloud-based environments in realistic circumstances for SaaS applications. Specifically, experiments are performed on Google Cloud VMs in three different regions (the United States (US), Europe and Asia), with both datasets. The differences between VMs in different regions and at different times have already been investigated by Dodge et al. [27], who explicitly measured operational emissions across cloud regions and times of day (on Microsoft Azure) and concluded that region choice had the largest impact. It should be noted that most of the related research focused solely on the accuracy of the predictions, neglecting aspects such as cloud deployment efficiency and environmental impact.

- Calculation of carbon emissions and energy consumption. Apart from Dodge et al. [27], only one work, that of Sanchez Ramirez et al. [28], has assessed the carbon footprint of machine learning models for SaaS decision support. The authors examined the use of usage data for churn prediction and assessed the carbon footprint of five different machine learning models. Yet, the carbon footprint was calculated from an estimate of energy consumption rather than computed in real time.

- Benchmarking of training time, prediction time (latency) and Throughput in cloud-based systems, all of which matter for production-ready systems.

- Benchmarking of the relative gain in predictive performance per unit of energy consumption and emissions (AUC/Emissions, Log Loss/Emissions), to identify the most eco-friendly ML models for churn prediction in SaaS.

- Exhaustive investigation of performance–efficiency trade-offs based on Pareto frontier analysis.

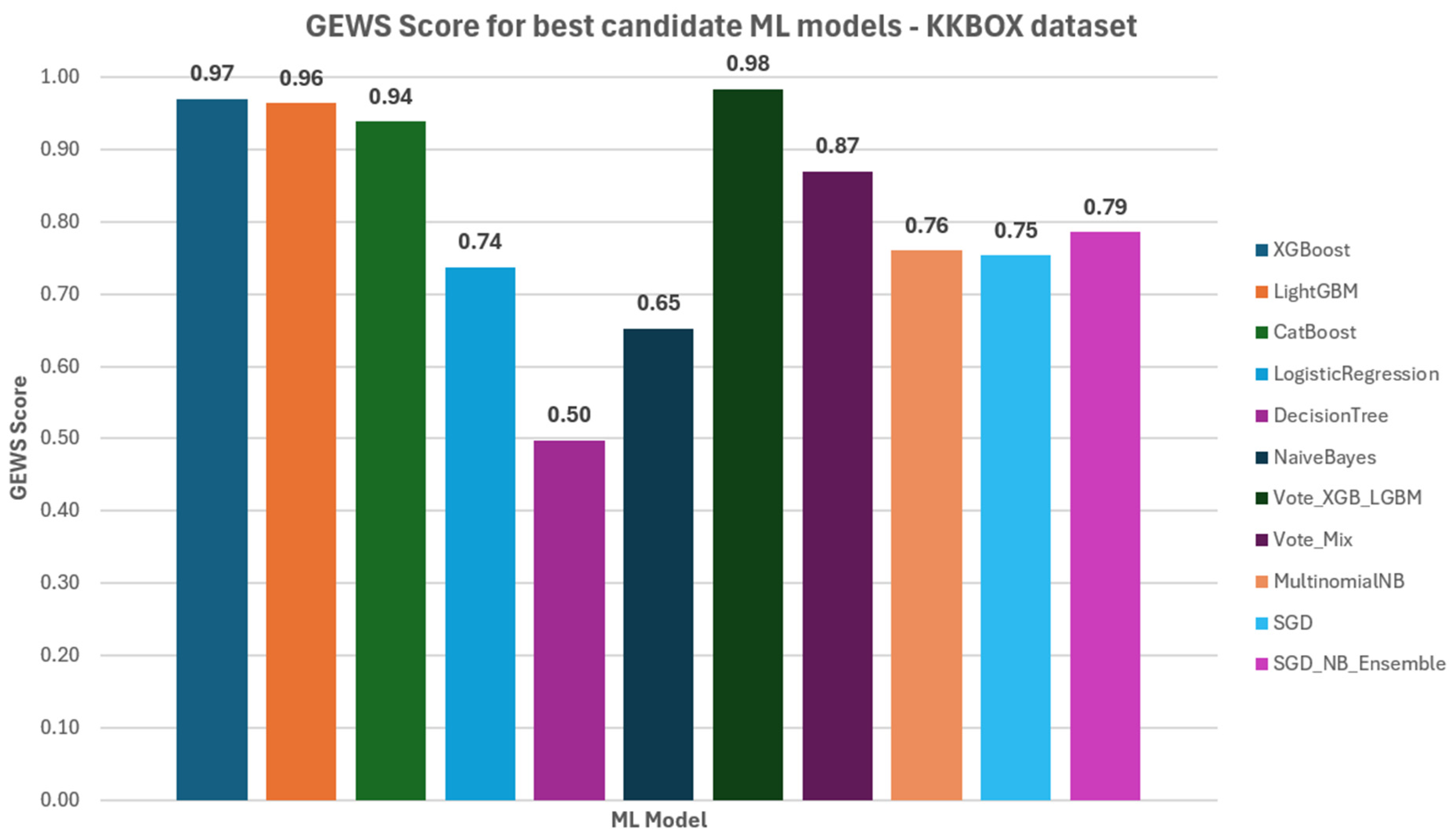

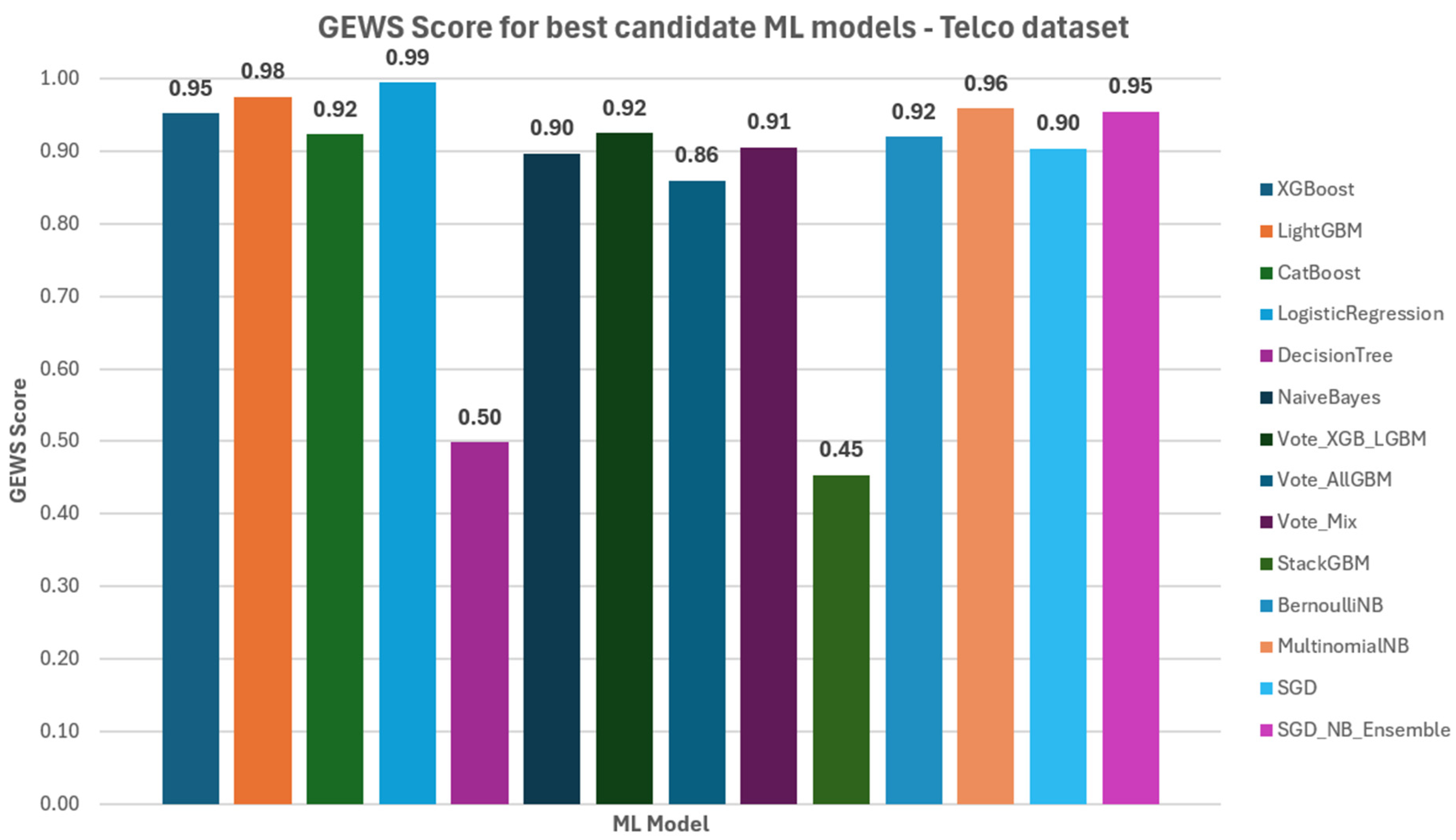

- Introduction of a novel metric, the Green Efficiency Weighted Score (GEWS), defined as a weighted sum of the normalized metrics AUC, Log Loss, training time, Total Emissions and Mean Latency, which evaluates ML models on all of these aspects in order to favor simpler, greener and more efficient ML models.
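The weighted-sum idea behind GEWS can be sketched as follows. This is a minimal illustration rather than the scoring code used in the study: the equal weights, the min-max normalization, the inversion of lower-is-better metrics and the two example models with their values are all assumptions made for the example.

```python
from typing import Dict, List

# Assumed equal weights (hypothetical); they only need to sum to 1.
WEIGHTS: Dict[str, float] = {
    "auc": 0.2,
    "log_loss": 0.2,
    "train_time": 0.2,
    "emissions": 0.2,
    "latency": 0.2,
}
# AUC is a benefit (higher is better); the other four metrics are costs.
HIGHER_IS_BETTER = {"auc"}


def to_benefit(values: List[float], higher_is_better: bool) -> List[float]:
    """Min-max scale to [0, 1] so that 1.0 is always the best model."""
    lo, hi = min(values), max(values)
    if hi == lo:  # all models tie on this metric
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def gews(models: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Weighted sum of normalized metrics, one score per model."""
    names = list(models)
    scores = {name: 0.0 for name in names}
    for metric, weight in WEIGHTS.items():
        column = [models[name][metric] for name in names]
        benefits = to_benefit(column, metric in HIGHER_IS_BETTER)
        for name, benefit in zip(names, benefits):
            scores[name] += weight * benefit
    return scores


# Made-up numbers for two hypothetical models, for illustration only.
example = {
    "light_model": {"auc": 0.80, "log_loss": 0.50, "train_time": 1.0,
                    "emissions": 0.001, "latency": 0.01},
    "heavy_model": {"auc": 0.70, "log_loss": 0.60, "train_time": 10.0,
                    "emissions": 0.010, "latency": 0.10},
}
scores = gews(example)
```

With this normalization a model that is best on every metric scores 1 and a model that is worst on every metric scores 0; changing the weights shifts the balance between predictive quality and cost.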

2. Materials and Methods

2.1. Proposed Methodology

2.2. Datasets

- train_v2 file, containing the user IDs and whether they have churned (970,961 records);

- transactions_v2 file, containing information regarding users’ membership, payment plans and payment details (1,048,575 records);

- user_logs_v2 file, containing user logs for daily listening behavior (1,048,575 records);

- members_v3 file, containing user information like city and gender (1,048,575 records).

2.3. Data Preprocessing

- num_25/50/75/985/100 refer to the number of songs played for less than 25%, 25–50%, 50–75%, 75–98.5% and 98.5–100% of the song length, respectively;

- num_unq refers to unique songs played;

- total_secs refers to the total seconds played.

- log_span_days, as date_max minus date_min, to represent user lifespan;

- avg_secs_per_song, defined as the sum of total_secs for each user divided by the number of unique songs played (sum of num_unq);

- days_since_last_log_missing, which is 1 if there are no logs, otherwise it is 0;

- days_since_last_log, for the number of days since the last log.
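The four engineered log features listed above can be sketched for a single user as follows. This is a hedged illustration using only the standard library: the function name `build_log_features`, the row layout, the `reference_date` argument and the fill values used for users without logs are assumptions, not details taken from the study.

```python
from datetime import date
from typing import Dict, List, Optional


def build_log_features(logs: List[dict], reference_date: date) -> Dict[str, Optional[float]]:
    """Aggregate one user's daily log rows into the engineered features.

    Each row is assumed to hold 'date' (datetime.date), 'total_secs' and 'num_unq'.
    """
    if not logs:
        # No logs: raise the missing flag; the other fill values are assumptions.
        return {
            "log_span_days": 0,
            "avg_secs_per_song": 0.0,
            "days_since_last_log_missing": 1,
            "days_since_last_log": None,
        }
    date_min = min(row["date"] for row in logs)
    date_max = max(row["date"] for row in logs)
    total_secs = sum(row["total_secs"] for row in logs)
    total_unq = sum(row["num_unq"] for row in logs)
    return {
        # date_max minus date_min, representing the user lifespan
        "log_span_days": (date_max - date_min).days,
        # sum of total_secs divided by sum of num_unq (guarding against zero)
        "avg_secs_per_song": total_secs / total_unq if total_unq else 0.0,
        "days_since_last_log_missing": 0,
        "days_since_last_log": (reference_date - date_max).days,
    }


# Hypothetical user with two days of listening activity.
user_logs = [
    {"date": date(2017, 3, 1), "total_secs": 600.0, "num_unq": 3},
    {"date": date(2017, 3, 11), "total_secs": 400.0, "num_unq": 2},
]
features = build_log_features(user_logs, reference_date=date(2017, 3, 31))
no_log_features = build_log_features([], reference_date=date(2017, 3, 31))
```

Keeping the missing-log flag as a separate feature lets a model distinguish users with no activity from users whose last log is simply old.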

2.4. Machine Learning Models

2.5. Training and Evaluation

3. Results

3.1. Performance Evaluation

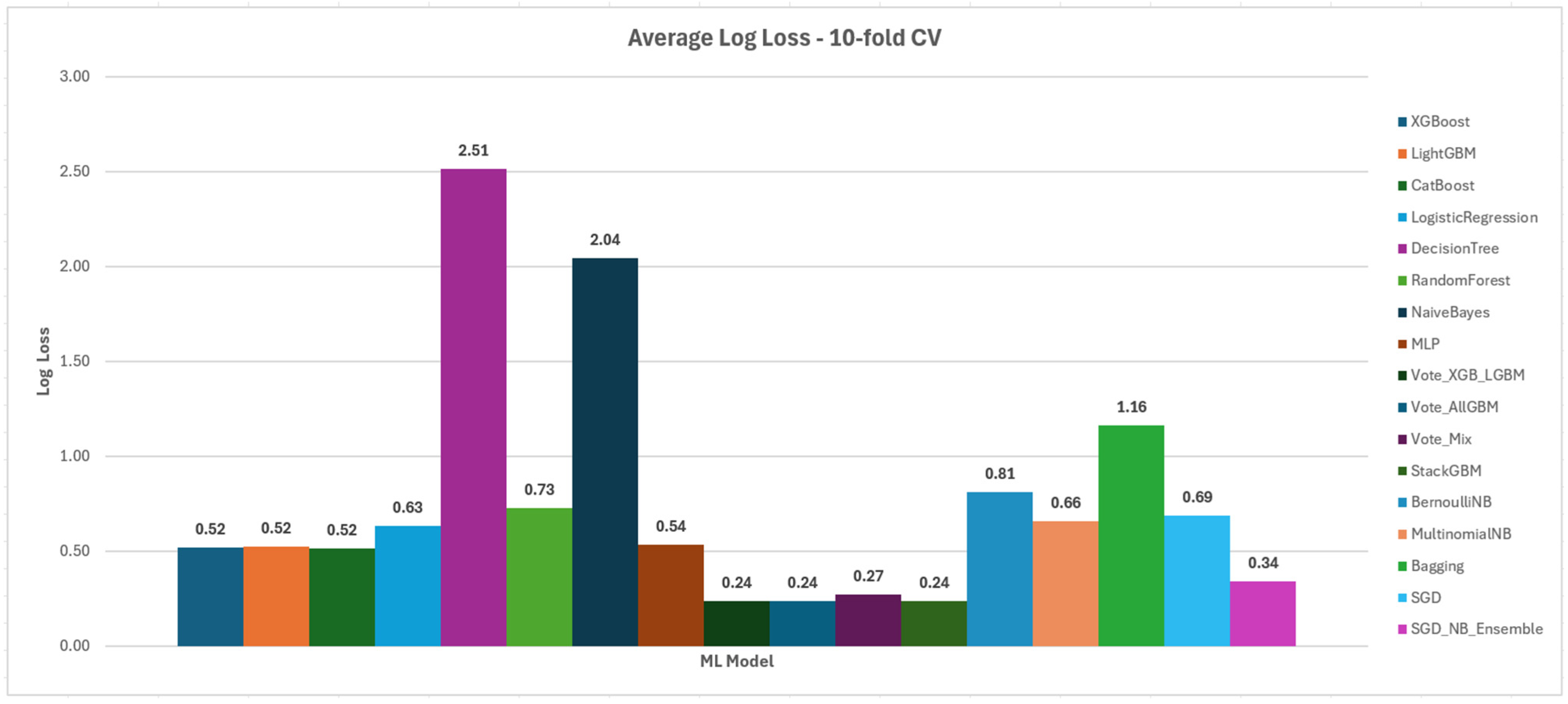

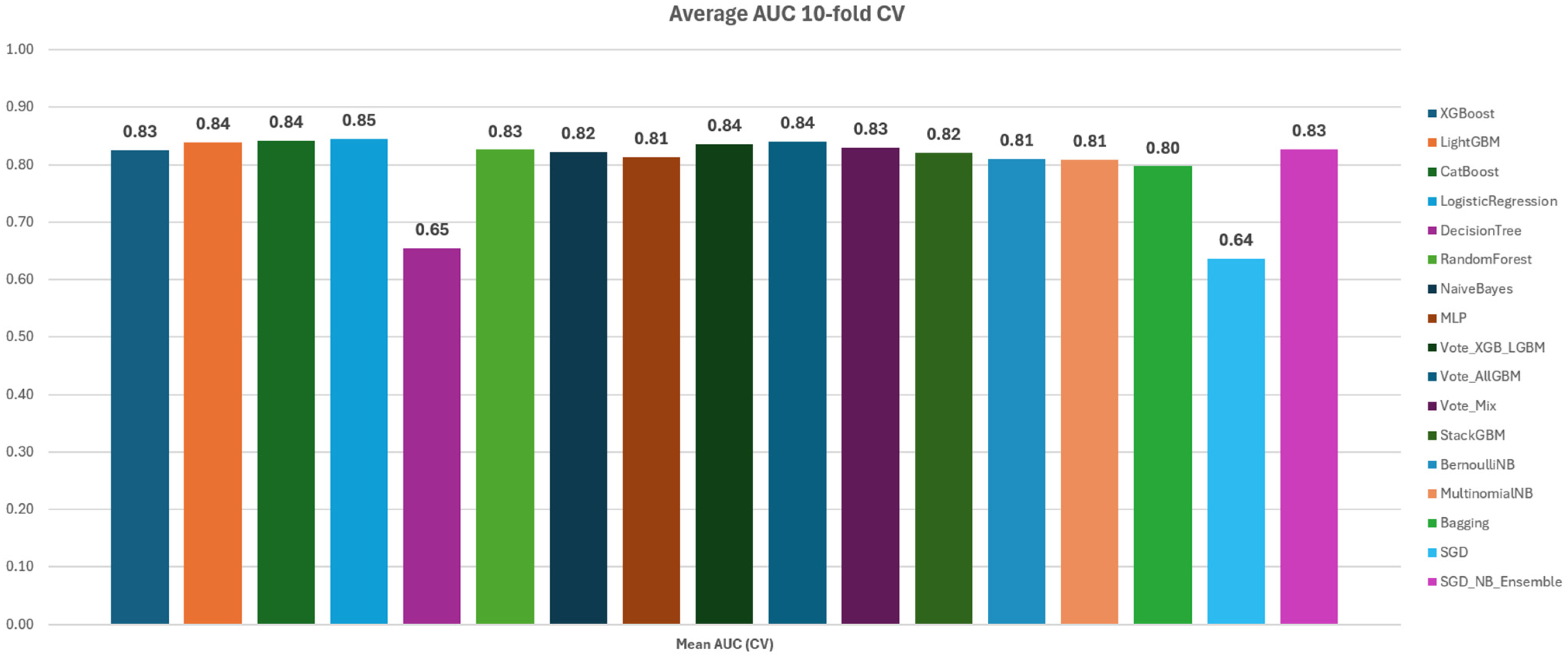

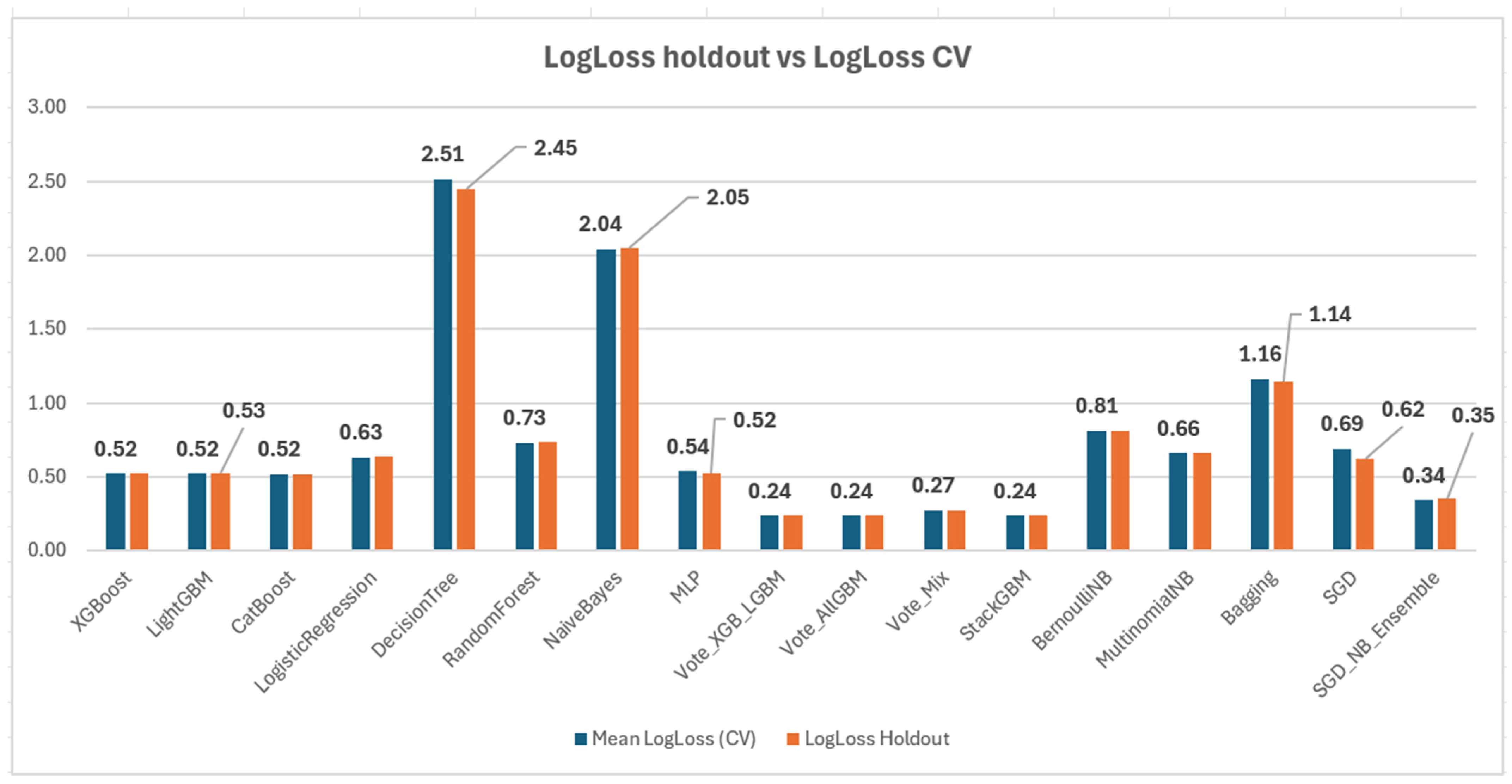

3.1.1. Performance on 10-Fold CV

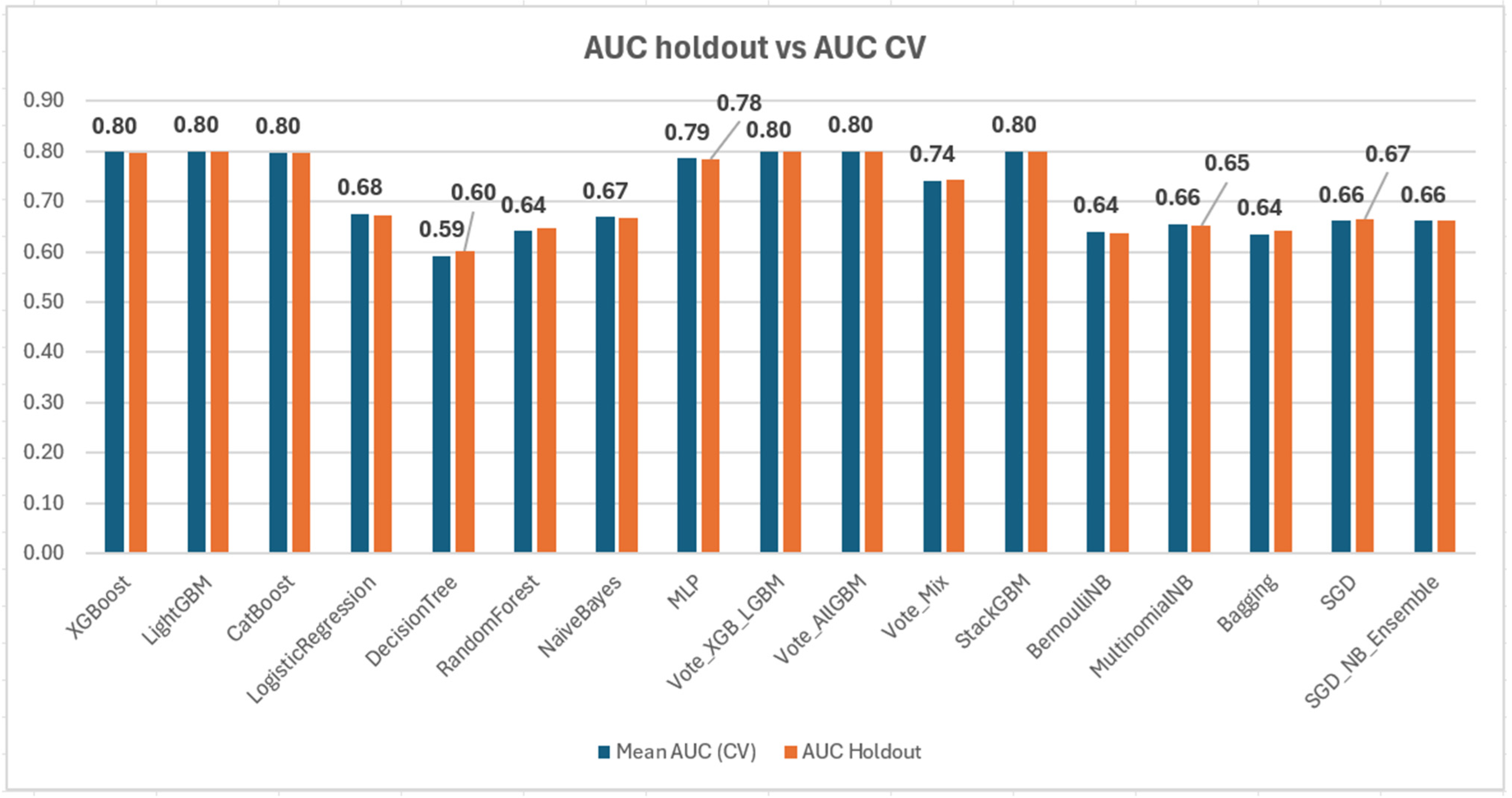

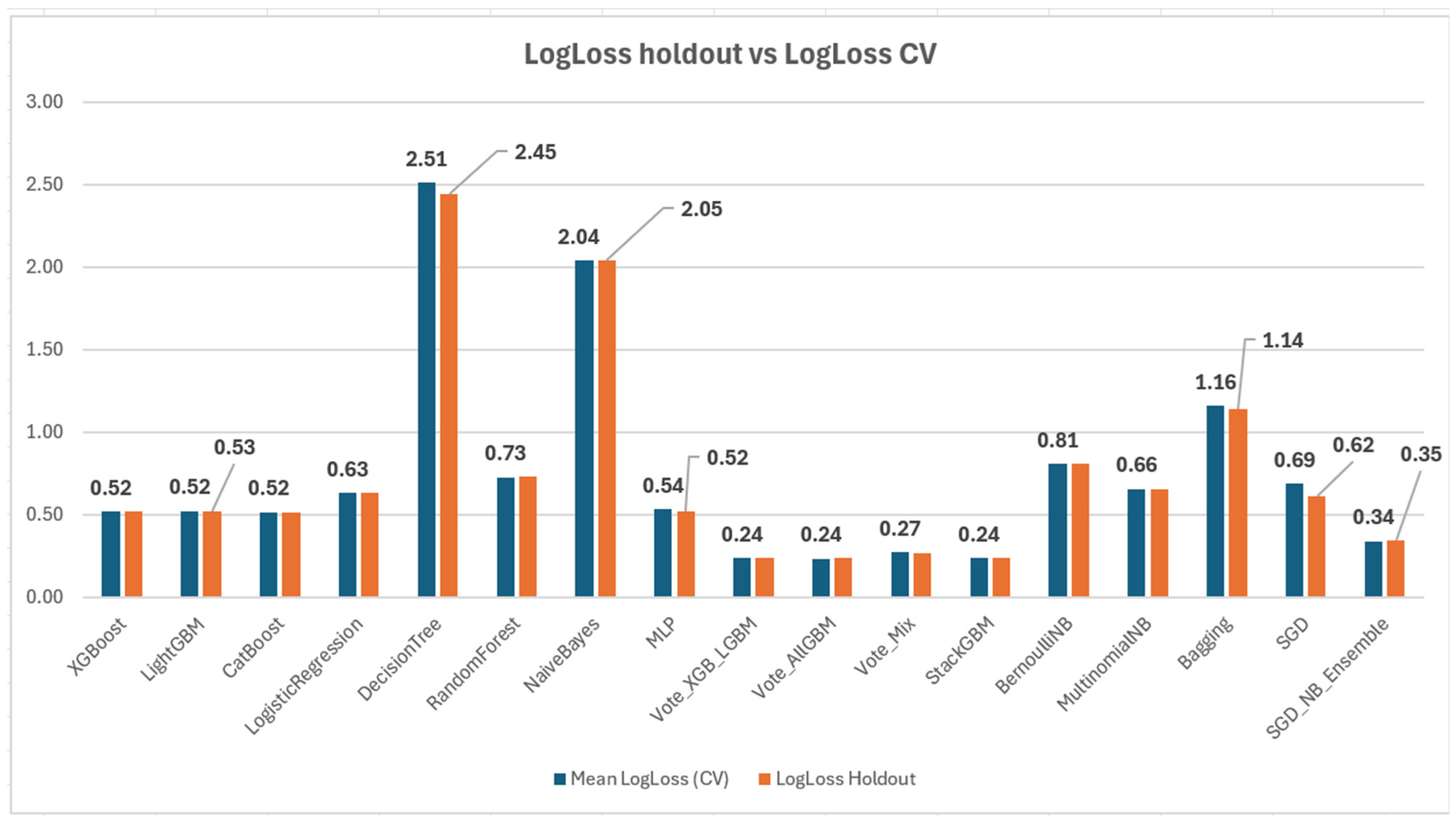

3.1.2. Performance on Holdout

3.2. Efficiency Evaluation

3.2.1. Training Time on PC and Cloud

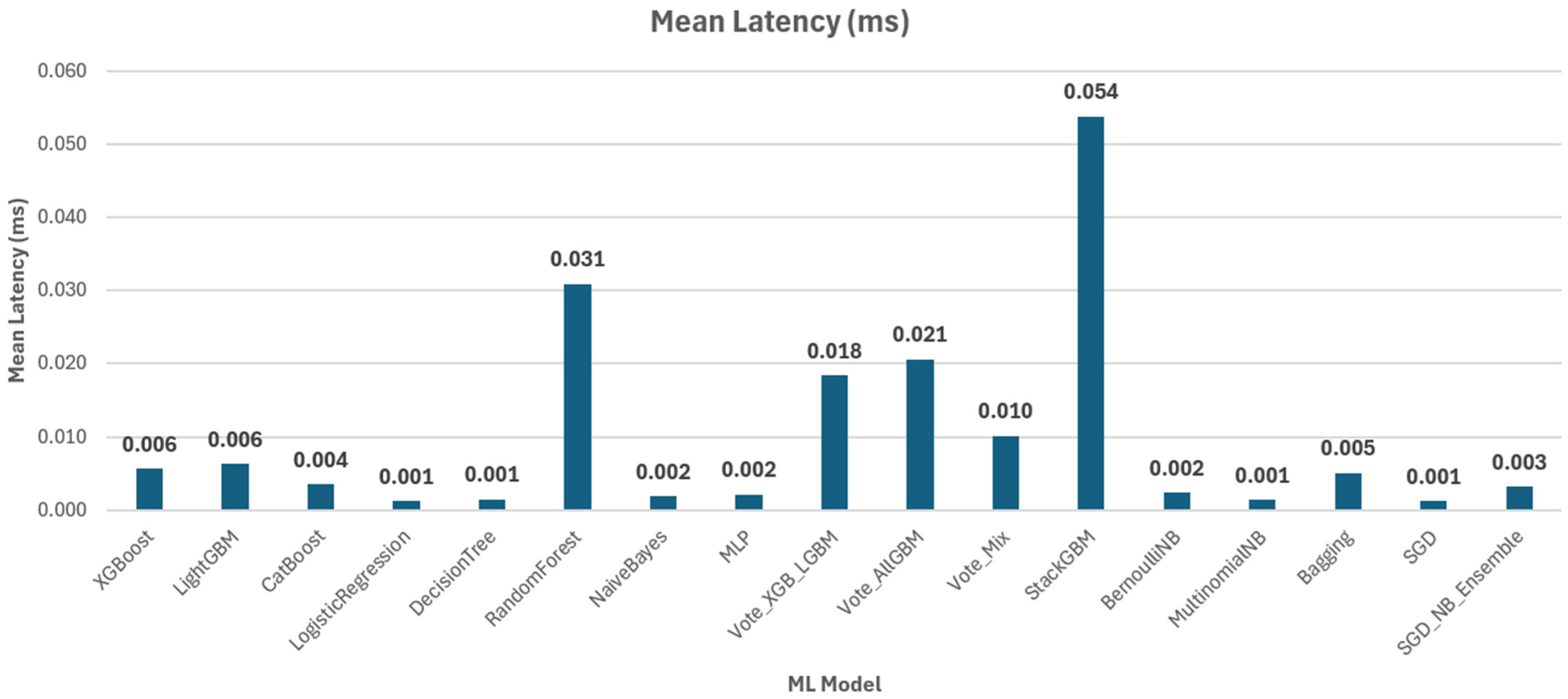

3.2.2. Prediction Time, Mean Latency and Throughput on the Cloud

- Total prediction time for the complete holdout set.

- Mean Latency, referring to the time needed to generate a prediction for one sample.

- Throughput, as the number of predictions per second.
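A minimal sketch of how these three quantities relate is given below. It assumes that Mean Latency is obtained by dividing the total batch prediction time by the number of samples, which may differ from the measurement protocol used in the experiments; `benchmark_inference` and `dummy_predict` are hypothetical names, and the dummy function stands in for a trained model's `predict` call.

```python
import time
from typing import Callable, Dict, Sequence


def benchmark_inference(predict: Callable[[Sequence], Sequence],
                        samples: Sequence) -> Dict[str, float]:
    """Time one batch prediction and derive per-sample latency and throughput."""
    n = len(samples)
    start = time.perf_counter()
    predict(samples)
    total = time.perf_counter() - start
    return {
        "total_prediction_time_s": total,
        # Assumption: latency is the batch time amortized over all samples.
        "mean_latency_s": total / n,
        # Predictions per second; guard against a zero timer reading.
        "throughput_per_s": n / total if total > 0 else float("inf"),
    }


def dummy_predict(batch: Sequence) -> list:
    """Stand-in for a model: labels a sample 1 when its feature sum is positive."""
    return [1 if sum(row) > 0 else 0 for row in batch]


holdout = [[0.1 * i, -0.05 * i, 1.0] for i in range(1000)]
result = benchmark_inference(dummy_predict, holdout)
```

Under this definition Throughput is the reciprocal of Mean Latency, so the two metrics carry the same information on different scales.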

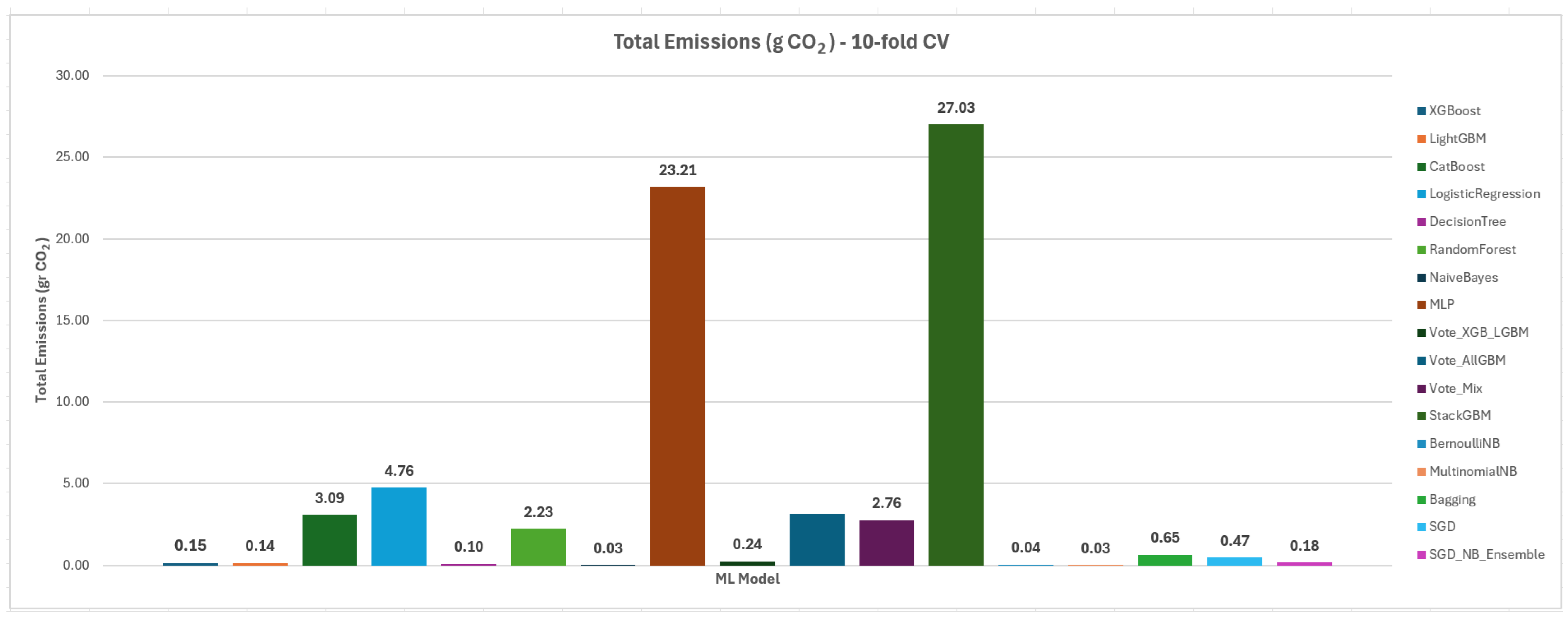

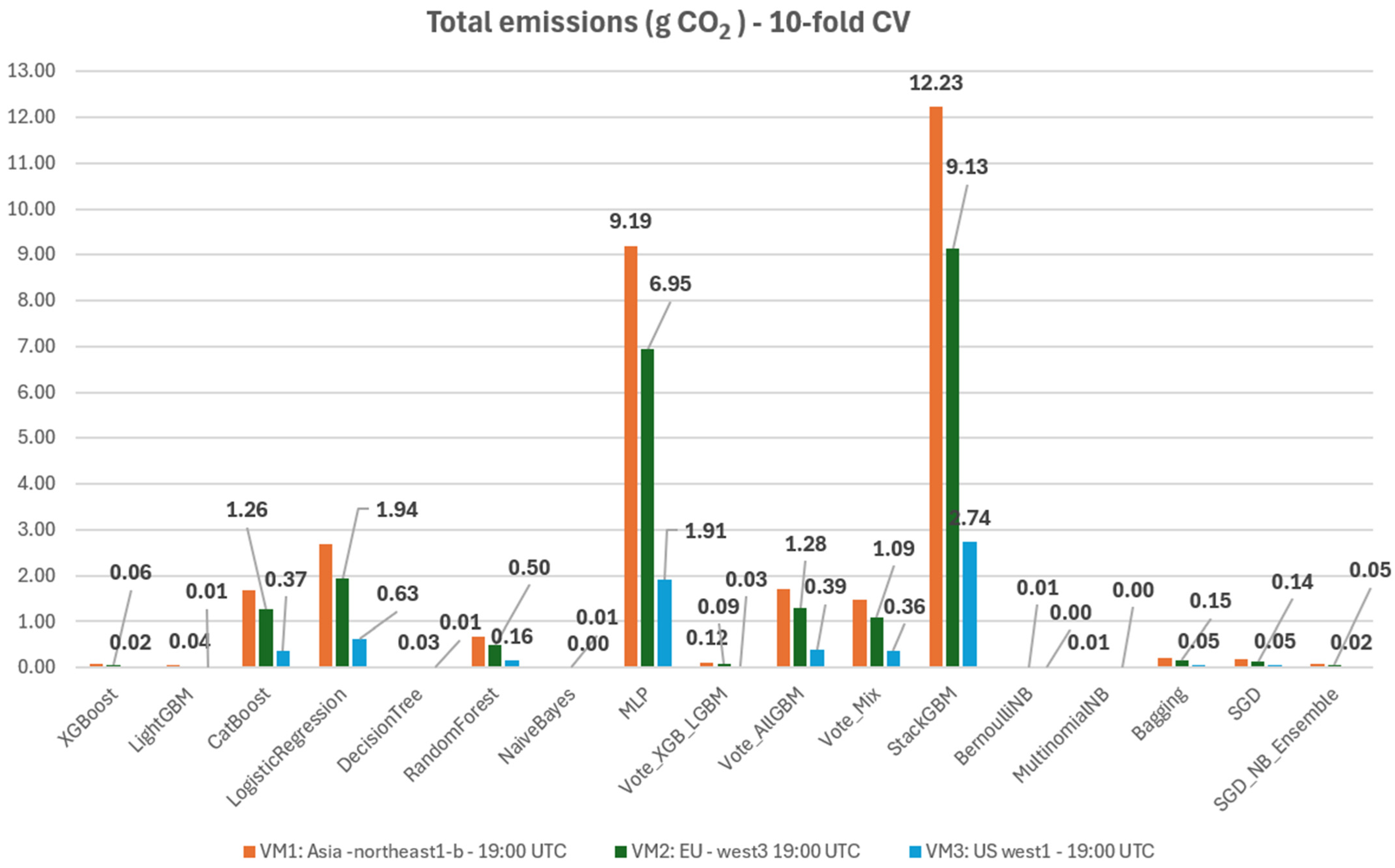

3.3. Sustainability Evaluation

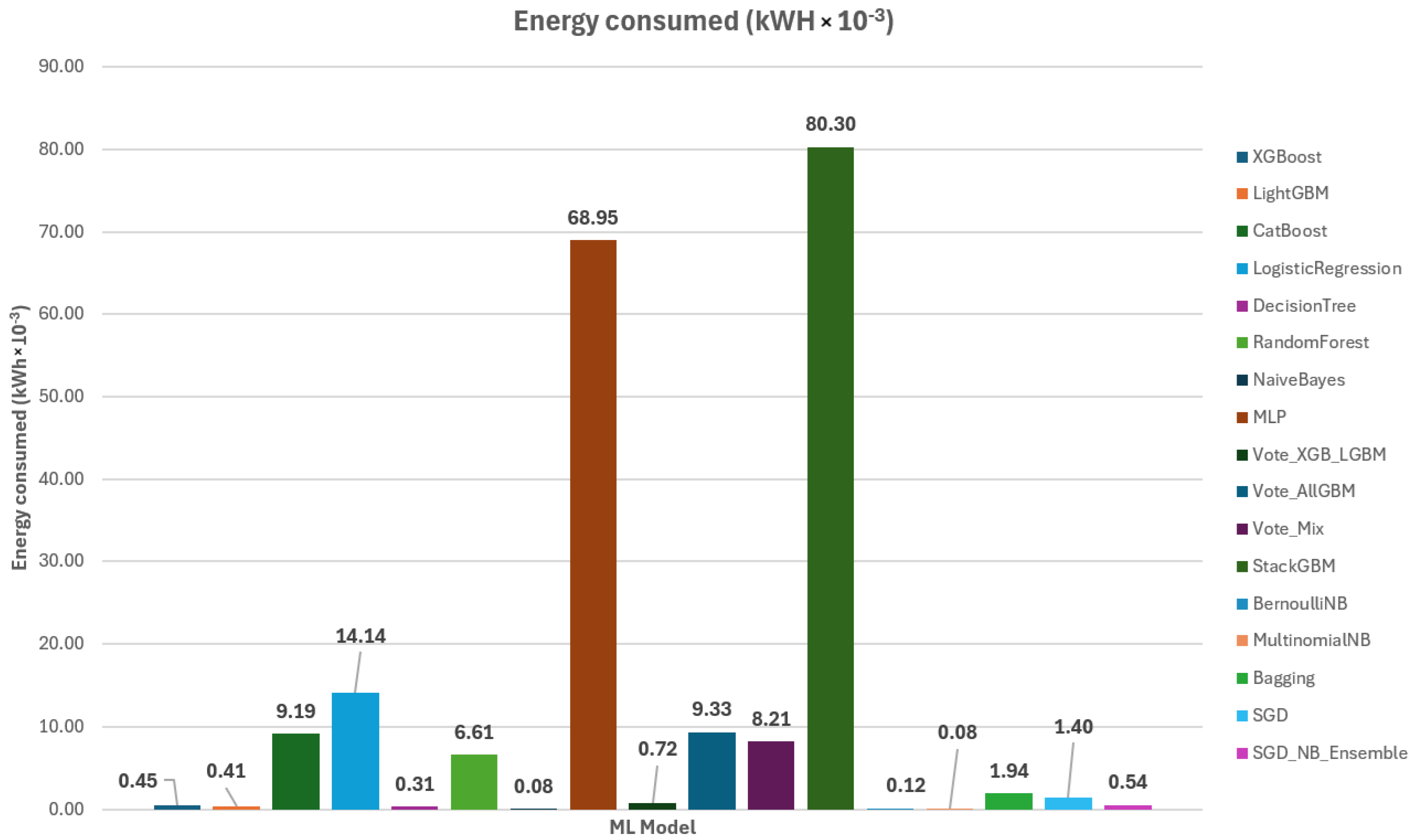

3.3.1. Energy and Emissions on PC

3.3.2. Energy and Emissions on the Cloud

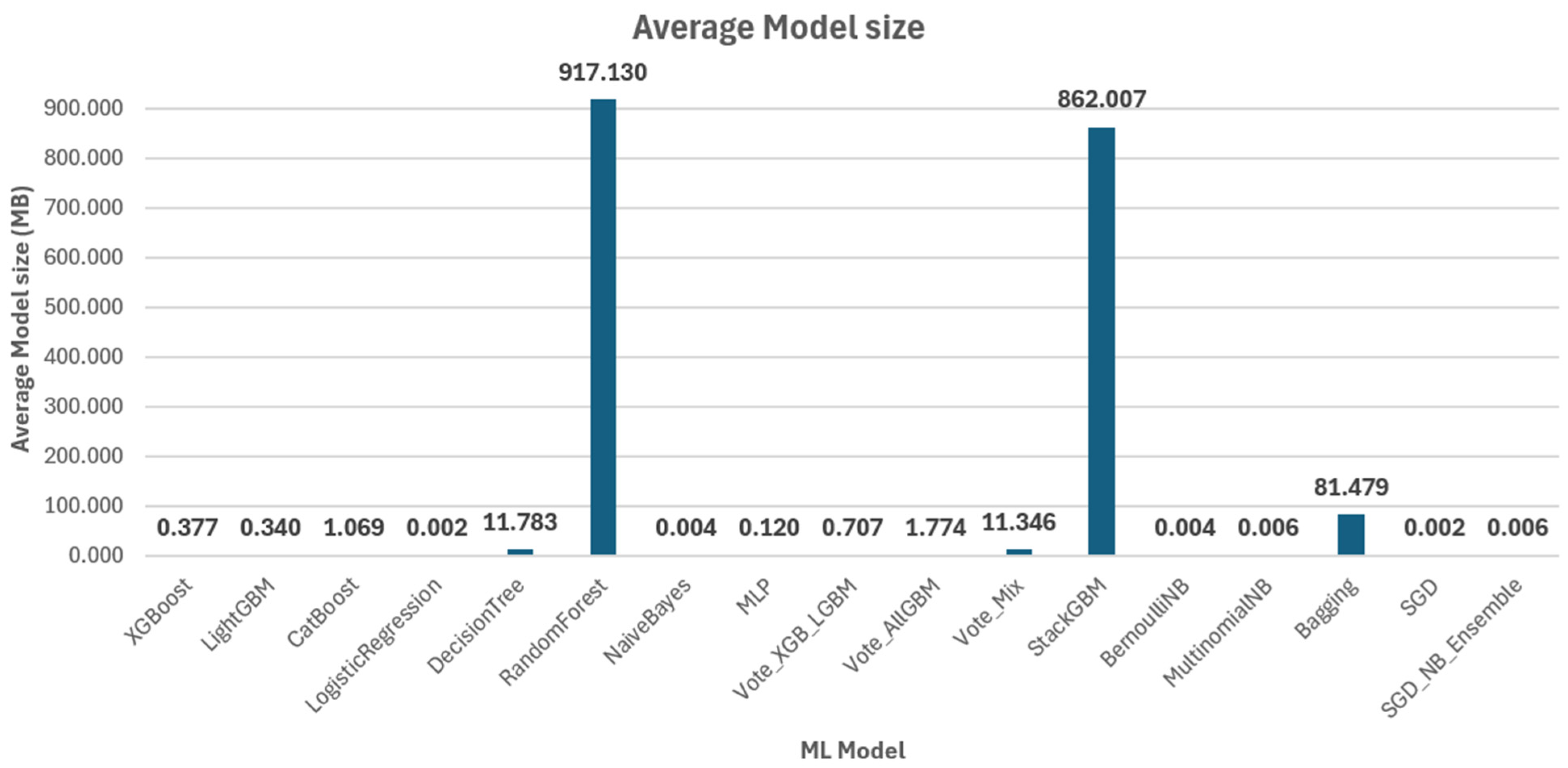

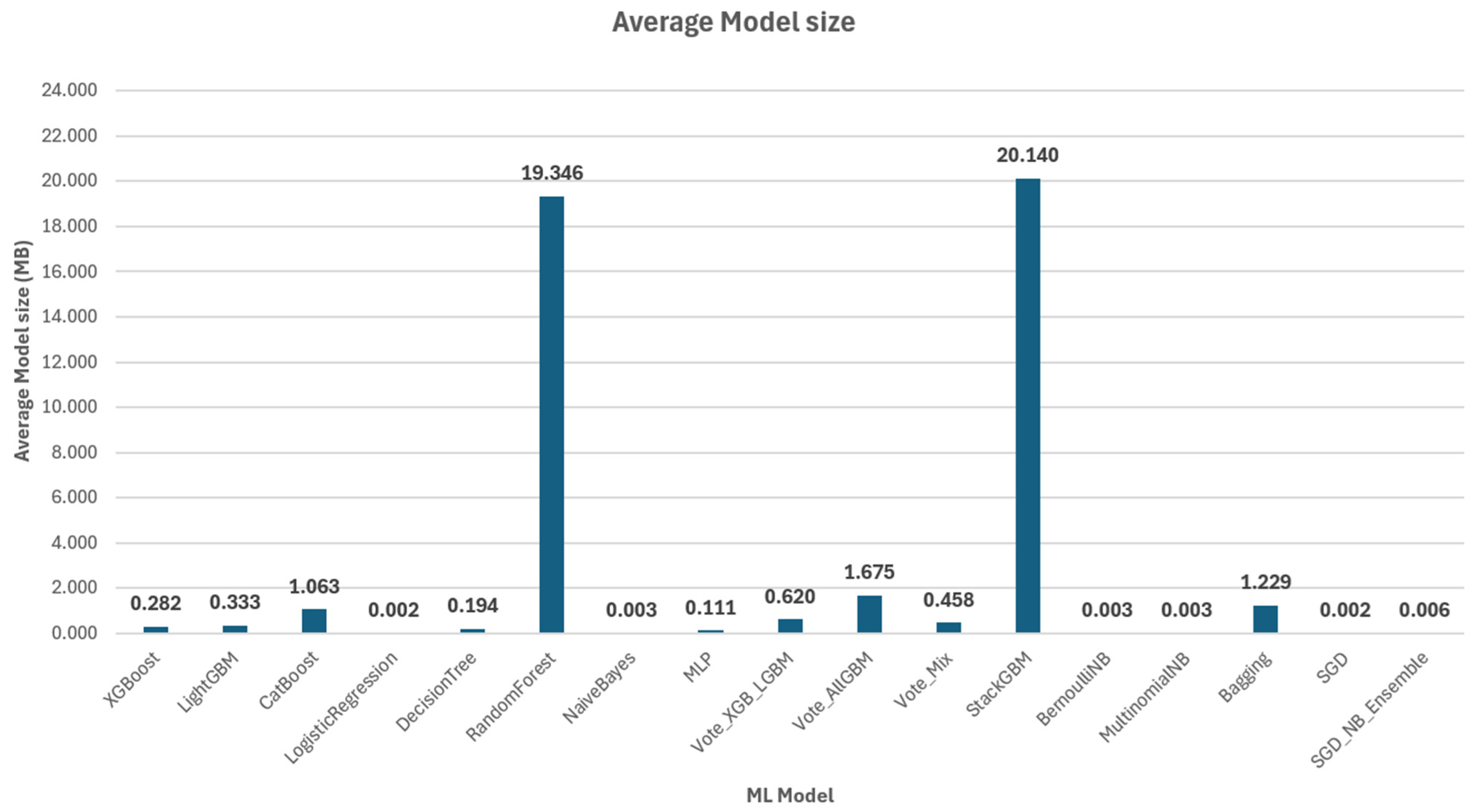

3.3.3. Models’ Size

3.4. Overall Evaluation

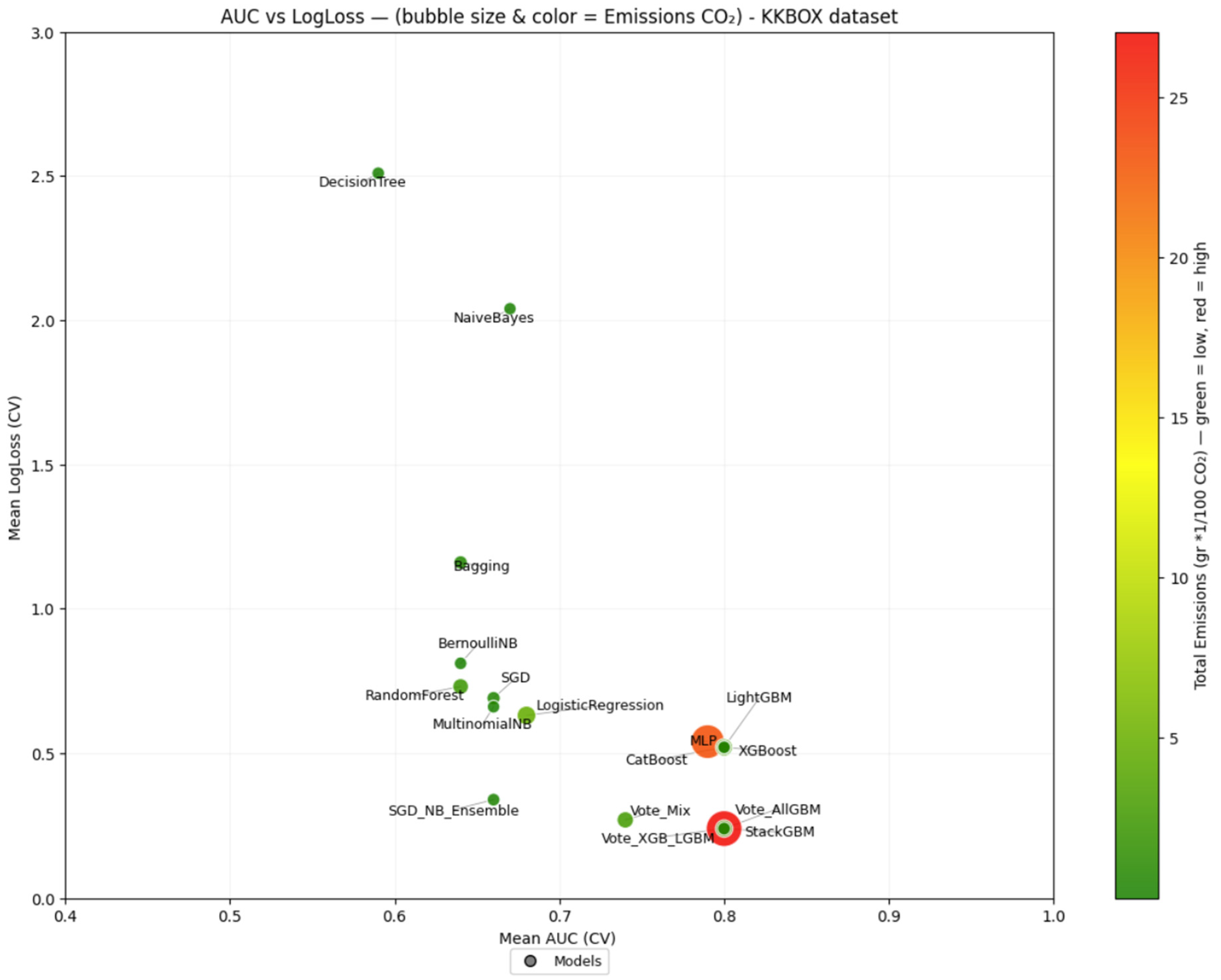

3.4.1. Pareto Frontiers Analysis
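As a hedged illustration of a Pareto frontier over a performance–efficiency trade-off, the sketch below keeps the models that no other model beats on both objectives at once. The choice of AUC (to maximize) and emissions (to minimize) as the two objectives, the `Candidate` type and the four example points are assumptions made for the example, not results from the experiments.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    name: str
    auc: float        # higher is better
    emissions: float  # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b on both objectives and strictly better on one."""
    no_worse = a.auc >= b.auc and a.emissions <= b.emissions
    strictly_better = a.auc > b.auc or a.emissions < b.emissions
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep every candidate that is not dominated by any other candidate."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Hypothetical models with made-up values.
candidates = [
    Candidate("A", 0.80, 0.004),
    Candidate("B", 0.84, 0.020),
    Candidate("C", 0.79, 0.010),  # dominated by A: lower AUC and higher emissions
    Candidate("D", 0.68, 0.001),
]
front = [c.name for c in pareto_front(candidates)]
```

Every model on the frontier represents a different compromise; moving from one frontier point to another always trades some predictive quality for lower cost, or the reverse.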

3.4.2. Green Efficiency Weighted Score (GEWS)

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| ML | Machine Learning |

| SaaS | Software as a Service |

| GEWS | Green Efficiency Weighted Score |

| US | United States |

| CV | Cross-Validation |

| MLP | Multi-layer Perceptron |

| NN | Neural Network |

| AUC | Area Under the Curve |

| VM | Virtual Machine |

| DL | Deep Learning |

Appendix A

| Model [Ref.] | Default Parameters |

|---|---|

| XGBoost [43] | ‘objective’: ‘binary:logistic’, ‘eval_metric’: ‘logloss’, ‘random_state’: 42, ‘use_label_encoder’: False |

| LightGBM [44] | ‘boosting_type’: ‘gbdt’, ‘colsample_bytree’: 1.0, ‘learning_rate’: 0.1, ‘max_depth’: -1, ‘min_child_samples’: 20, ‘min_child_weight’: 0.001, ‘min_split_gain’: 0.0, ‘num_leaves’: 31, ‘random_state’: 42, ‘reg_alpha’: 0.0, ‘reg_lambda’: 0.0, ‘subsample’: 1.0, ‘subsample_for_bin’: 200000, ‘subsample_freq’: 0, ‘objective’: ‘binary’, ‘metric’: [‘binary’], ‘num_threads’: 16, ‘num_iterations’: 100 |

| CatBoost [45] | nan_mode: Min, eval_metric: Logloss, iterations: 1000, sampling_frequency: PerTree, leaf_estimation_method: Newton, random_score_type: NormalWithModelSizeDecrease, grow_policy: SymmetricTree, penalties_coefficient: 1, boosting_type: Plain, model_shrink_mode: Constant, feature_border_type: GreedyLogSum, bayesian_matrix_reg: 0.10000000149011612, eval_fraction: 0, force_unit_auto_pair_weights: False, l2_leaf_reg: 3, random_strength: 1, rsm: 1, boost_from_average: False, model_size_reg: 0.5, pool_metainfo_options: {‘tags’: {}}, subsample: 0.800000011920929, use_best_model: False, class_names: [0,1], random_seed: 42, depth: 6, posterior_sampling: False, border_count: 254, classes_count: 0, sparse_features_conflict_fraction: 0, leaf_estimation_backtracking: AnyImprovement, best_model_min_trees: 1, model_shrink_rate: 0, min_data_in_leaf: 1, loss_function: Logloss, learning_rate: 0.020607000216841698, score_function: Cosine, task_type: CPU, leaf_estimation_iterations: 10, bootstrap_type: MVS, max_leaves: 64 |

| Logistic Regression [46] | ‘C’: 1.0, ‘dual’: False, ‘fit_intercept’: True, ‘intercept_scaling’: 1, ‘max_iter’: 1000, ‘multi_class’: ‘deprecated’, ‘penalty’: ‘l2’, ‘random_state’: 42, ‘solver’: ‘lbfgs’, ‘tol’: 0.0001, ‘verbose’: 0, ‘warm_start’: False |

| Decision Tree [47] | ‘ccp_alpha’: 0.0, ‘criterion’: ‘gini’, ‘min_impurity_decrease’: 0.0, ‘min_samples_leaf’: 1, ‘min_samples_split’: 2, ‘min_weight_fraction_leaf’: 0.0, ‘random_state’: 42, ‘splitter’: ‘best’ |

| Random Forest [48] | ‘bootstrap’: True, ‘ccp_alpha’: 0.0, ‘criterion’: ‘gini’, ‘max_features’: ‘sqrt’, ‘min_impurity_decrease’: 0.0, ‘min_samples_leaf’: 1, ‘min_samples_split’: 2, ‘min_weight_fraction_leaf’: 0.0, ‘n_estimators’: 100, ‘oob_score’: False, ‘random_state’: 42, ‘verbose’: 0, ‘warm_start’: False |

| Naïve Bayes [49] | ‘var_smoothing’: 1e-09 |

| MLP [50] | ‘activation’: ‘relu’, ‘alpha’: 0.0001, ‘batch_size’: ‘auto’, ‘beta_1’: 0.9, ‘beta_2’: 0.999, ‘early_stopping’: False, ‘epsilon’: 1e-08, ‘hidden_layer_sizes’: (100,), ‘learning_rate’: ‘constant’, ‘learning_rate_init’: 0.001, ‘max_fun’: 15000, ‘max_iter’: 300, ‘momentum’: 0.9, ‘n_iter_no_change’: 10, ‘nesterovs_momentum’: True, ‘power_t’: 0.5, ‘random_state’: 42, ‘shuffle’: True, ‘solver’: ‘adam’, ‘tol’: 0.0001, ‘validation_fraction’: 0.1, ‘verbose’: False, ‘warm_start’: False |

| Vote_XGB_LGBM (Voting of XGBoost and LightGBM) | ‘estimators’: [(‘xgb’, XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan)), (‘lgbm’, LGBMClassifier(random_state = 42))], ‘flatten_transform’: True, ‘verbose’: False, ‘voting’: ‘soft’, ‘xgb’: XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan), ‘lgbm’: LGBMClassifier(random_state = 42), ‘xgb__objective’: ‘binary:logistic’, ‘xgb__enable_categorical’: False, ‘xgb__eval_metric’: ‘logloss’, ‘xgb__missing’: nan, ‘xgb__random_state’: 42, ‘xgb__use_label_encoder’: False, ‘lgbm__boosting_type’: ‘gbdt’, ‘lgbm__colsample_bytree’: 1.0, ‘lgbm__importance_type’: ‘split’, ‘lgbm__learning_rate’: 0.1, ‘lgbm__max_depth’: -1, ‘lgbm__min_child_samples’: 20, ‘lgbm__min_child_weight’: 0.001, ‘lgbm__min_split_gain’: 0.0, ‘lgbm__n_estimators’: 100, ‘lgbm__num_leaves’: 31, ‘lgbm__random_state’: 42, ‘lgbm__reg_alpha’: 0.0, ‘lgbm__reg_lambda’: 0.0, ‘lgbm__subsample’: 1.0, ‘lgbm__subsample_for_bin’: 200000, ‘lgbm__subsample_freq’: 0 |

| Vote_AllGBM (Voting of XGBoost, LightGBM and CatBoost) | ‘estimators’: [(‘xgb’, XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan)), (‘lgbm’, LGBMClassifier(random_state = 42)), (‘cat’, <catboost.core.CatBoostClassifier object at 0x000002516EF9A0D0>)], ‘flatten_transform’: True, ‘verbose’: False, ‘voting’: ‘soft’, ‘xgb’: XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan), ‘lgbm’: LGBMClassifier(random_state = 42), ‘cat’: <catboost.core.CatBoostClassifier object at 0x000002516EF9A0D0>, ‘xgb__objective’: ‘binary:logistic’, ‘xgb__enable_categorical’: False, ‘xgb__eval_metric’: ‘logloss’, ‘xgb__missing’: nan, ‘xgb__random_state’: 42, ‘xgb__use_label_encoder’: False, ‘lgbm__boosting_type’: ‘gbdt’, ‘lgbm__colsample_bytree’: 1.0, ‘lgbm__importance_type’: ‘split’, ‘lgbm__learning_rate’: 0.1, ‘lgbm__max_depth’: -1, ‘lgbm__min_child_samples’: 20, ‘lgbm__min_child_weight’: 0.001, ‘lgbm__min_split_gain’: 0.0, ‘lgbm__n_estimators’: 100, ‘lgbm__num_leaves’: 31, ‘lgbm__random_state’: 42, ‘lgbm__reg_alpha’: 0.0, ‘lgbm__reg_lambda’: 0.0, ‘lgbm__subsample’: 1.0, ‘lgbm__subsample_for_bin’: 200000, ‘lgbm__subsample_freq’: 0, ‘cat__verbose’: 0, ‘cat__random_state’: 42 |

| Vote_Mix (Voting of XGBoost, Logistic Regression, Decision Tree and Naïve Bayes) | ‘estimators’: [(‘xgb’, XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan)), (‘lr’, LogisticRegression(max_iter = 1000, random_state = 42)), (‘dt’, DecisionTreeClassifier(random_state = 42)), (‘nb’, GaussianNB())], ‘flatten_transform’: True, ‘verbose’: False, ‘voting’: ‘soft’, ‘xgb’: XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan), ‘lr’: LogisticRegression(max_iter = 1000, random_state = 42), ‘dt’: DecisionTreeClassifier(random_state = 42), ‘nb’: GaussianNB(), ‘xgb__objective’: ‘binary:logistic’, ‘xgb__enable_categorical’: False, ‘xgb__eval_metric’: ‘logloss’, ‘xgb__missing’: nan, ‘xgb__random_state’: 42, ‘xgb__use_label_encoder’: False, ‘lr__C’: 1.0, ‘lr__dual’: False, ‘lr__fit_intercept’: True, ‘lr__intercept_scaling’: 1, ‘lr__max_iter’: 1000, ‘lr__multi_class’: ‘deprecated’, ‘lr__penalty’: ‘l2’, ‘lr__random_state’: 42, ‘lr__solver’: ‘lbfgs’, ‘lr__tol’: 0.0001, ‘lr__verbose’: 0, ‘lr__warm_start’: False, ‘dt__ccp_alpha’: 0.0, ‘dt__criterion’: ‘gini’, ‘dt__min_impurity_decrease’: 0.0, ‘dt__min_samples_leaf’: 1, ‘dt__min_samples_split’: 2, ‘dt__min_weight_fraction_leaf’: 0.0, ‘dt__random_state’: 42, ‘dt__splitter’: ‘best’, ‘nb__var_smoothing’: 1e-09 |

| StackGBM (Stacking Ensemble of Random Forest, XGBoost, LightGBM, CatBoost and final estimator XGBoost) [51] | ‘estimators’: [(‘rf’, RandomForestClassifier(random_state = 42)), (‘xgb’, XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan)), (‘lgbm’, LGBMClassifier(random_state = 42)), (‘cat’, <catboost.core.CatBoostClassifier object at 0x0000025159667F90>)], ‘final_estimator__objective’: ‘binary:logistic’, ‘final_estimator__enable_categorical’: False, ‘final_estimator__eval_metric’: ‘logloss’, ‘final_estimator__missing’: nan, ‘final_estimator__random_state’: 42, ‘final_estimator__use_label_encoder’: False, ‘final_estimator’: XGBClassifier(enable_categorical = False, eval_metric = ‘logloss’, missing = nan), ‘passthrough’: True, ‘stack_method’: ‘auto’, ‘verbose’: 0, ‘rf’: RandomForestClassifier(random_state = 42), ‘xgb’: XGBClassifier(eval_metric = ‘logloss’, missing = nan), ‘lgbm’: LGBMClassifier(random_state = 42), ‘cat’: <catboost.core.CatBoostClassifier object at 0x0000025159667F90>, ‘rf__bootstrap’: True, ‘rf__ccp_alpha’: 0.0, ‘rf__criterion’: ‘gini’, ‘rf__max_features’: ‘sqrt’, ‘rf__min_impurity_decrease’: 0.0, ‘rf__min_samples_leaf’: 1, ‘rf__min_samples_split’: 2, ‘rf__min_weight_fraction_leaf’: 0.0, ‘rf__n_estimators’: 100, ‘rf__oob_score’: False, ‘rf__random_state’: 42, ‘rf__verbose’: 0, ‘rf__warm_start’: False, ‘xgb__objective’: ‘binary:logistic’, ‘xgb__enable_categorical’: False, ‘xgb__eval_metric’: ‘logloss’, ‘xgb__missing’: nan, ‘xgb__use_label_encoder’: False, ‘lgbm__boosting_type’: ‘gbdt’, ‘lgbm__colsample_bytree’: 1.0, ‘lgbm__importance_type’: ‘split’, ‘lgbm__learning_rate’: 0.1, ‘lgbm__max_depth’: -1, ‘lgbm__min_child_samples’: 20, ‘lgbm__min_child_weight’: 0.001, ‘lgbm__min_split_gain’: 0.0, ‘lgbm__n_estimators’: 100, ‘lgbm__num_leaves’: 31, ‘lgbm__random_state’: 42, ‘lgbm__reg_alpha’: 0.0, ‘lgbm__reg_lambda’: 0.0, ‘lgbm__subsample’: 1.0, ‘lgbm__subsample_for_bin’: 200000, ‘lgbm__subsample_freq’: 0, ‘cat__verbose’: 0, ‘cat__random_state’: 42 |

| BernoulliNB [52] | ‘alpha’: 1.0, ‘binarize’: 0.0, ‘fit_prior’: True, ‘force_alpha’: True |

| MultinomialNB [53] | ‘alpha’: 1.0, ‘fit_prior’: True, ‘force_alpha’: True |

| Bagging (Bagging of Decision Trees) [54] | ‘bootstrap’: True, ‘bootstrap_features’: False, ‘estimator__ccp_alpha’: 0.0, ‘estimator__criterion’: ‘gini’, ‘estimator__min_impurity_decrease’: 0.0, ‘estimator__min_samples_leaf’: 1, ‘estimator__min_samples_split’: 2, ‘estimator__min_weight_fraction_leaf’: 0.0, ‘estimator__random_state’: 42, ‘estimator__splitter’: ‘best’, ‘estimator’: DecisionTreeClassifier(random_state = 42), ‘max_features’: 1.0, ‘max_samples’: 1.0, ‘n_estimators’: 10, ‘oob_score’: False, ‘random_state’: 42, ‘verbose’: 0, ‘warm_start’: False |

| SGD (Logistic Regression with Stochastic Gradient Descent) [55] | ‘alpha’: 0.0001, ‘average’: False, ‘early_stopping’: False, ‘epsilon’: 0.1, ‘eta0’: 0.0, ‘fit_intercept’: True, ‘l1_ratio’: 0.15, ‘learning_rate’: ‘optimal’, ‘loss’: ‘log_loss’, ‘max_iter’: 2000, ‘n_iter_no_change’: 5, ‘penalty’: ‘l2’, ‘power_t’: 0.5, ‘random_state’: 42, ‘shuffle’: True, ‘tol’: 0.001, ‘validation_fraction’: 0.1, ‘verbose’: 0, ‘warm_start’: False |

| SGD_NB_Ensemble (Voting of SGD and Naïve Bayes) | ‘estimators’: [(‘SGD’, SGDClassifier(loss = ‘log_loss’, max_iter = 2000, random_state = 42)), (‘nb’, GaussianNB())], ‘flatten_transform’: True, ‘verbose’: False, ‘voting’: ‘soft’, ‘SGD’: SGDClassifier(loss = ‘log_loss’, max_iter = 2000, random_state = 42), ‘nb’: GaussianNB(), ‘SGD__alpha’: 0.0001, ‘SGD__average’: False, ‘SGD__early_stopping’: False, ‘SGD__epsilon’: 0.1, ‘SGD__eta0’: 0.0, ‘SGD__fit_intercept’: True, ‘SGD__l1_ratio’: 0.15, ‘SGD__learning_rate’: ‘optimal’, ‘SGD__loss’: ‘log_loss’, ‘SGD__max_iter’: 2000, ‘SGD__n_iter_no_change’: 5, ‘SGD__penalty’: ‘l2’, ‘SGD__power_t’: 0.5, ‘SGD__random_state’: 42, ‘SGD__shuffle’: True, ‘SGD__tol’: 0.001, ‘SGD__validation_fraction’: 0.1, ‘SGD__verbose’: 0, ‘SGD__warm_start’: False, ‘nb__var_smoothing’: 1e-09 |

References

- Ibrahim, A.M.A.; Abdullah, N.S.; Bahari, M. Software as a Service Challenges: A Systematic Literature Review. In Proceedings of the Future Technologies Conference (FTC) 2022, Vancouver, BC, Canada, 20–21 October 2022; Lecture Notes in Networks and Systems. Springer: Cham, Switzerland, 2023; Volume 3, pp. 257–272, ISBN 9783031183430.

- Gupta, M.; Gupta, D.; Rai, P. Exploring the Impact of Software as a Service (SaaS) on Human Life. EAI Endorsed Trans. Internet Things 2024, 10.

- Sanches, H.E.; Possebom, A.T.; Aylon, L.B.R. Churn Prediction for SaaS Company with Machine Learning. Innov. Manag. Rev. 2025, 22, 130–142.

- Phumchusri, N.; Amornvetchayakul, P. Machine Learning Models for Predicting Customer Churn: A Case Study in a Software-as-a-Service Inventory Management Company. Int. J. Bus. Intell. Data Min. 2024, 24, 74–106.

- Dias, J.R.; Antonio, N. Predicting Customer Churn Using Machine Learning: A Case Study in the Software Industry. J. Mark. Anal. 2025, 13, 111–127.

- Charizanis, G.; Mavridou, E.; Vrochidou, E.; Kalampokas, T.; Papakostas, G.A. Data-Driven Decision Support in SaaS Cloud-Based Service Models. Appl. Sci. 2025, 15, 6508.

- Bolón-Canedo, V.; Morán-Fernández, L.; Cancela, B.; Alonso-Betanzos, A. A Review of Green Artificial Intelligence: Towards a More Sustainable Future. Neurocomputing 2024, 599, 128096.

- Tabbakh, A.; Al Amin, L.; Islam, M.; Mahmud, G.M.I.; Chowdhury, I.K.; Mukta, M.S.H. Towards Sustainable AI: A Comprehensive Framework for Green AI. Discov. Sustain. 2024, 5, 408.

- Iqbal, M.S.A.; Haroon, M. Sustainable Cloud. In Advances in Science, Engineering and Technology; CRC Press: London, UK, 2025; pp. 457–462.

- The European Parliament and the Council of the European Union. Regulation (EU) 2024/1689. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=OJ:L_202401689 (accessed on 5 September 2025).

- Rahman, M.; Kumar, V. Machine Learning Based Customer Churn Prediction In Banking. In Proceedings of the 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 5–7 November 2020; pp. 1196–1201.

- Tékouabou, S.C.K.; Gherghina, Ș.C.; Toulni, H.; Mata, P.N.; Martins, J.M. Towards Explainable Machine Learning for Bank Churn Prediction Using Data Balancing and Ensemble-Based Methods. Mathematics 2022, 10, 2379.

- de Lima Lemos, R.A.; Silva, T.C.; Tabak, B.M. Propension to Customer Churn in a Financial Institution: A Machine Learning Approach. Neural Comput. Appl. 2022, 34, 11751–11768.

- AL-Najjar, D.; Al-Rousan, N.; AL-Najjar, H. Machine Learning to Develop Credit Card Customer Churn Prediction. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 1529–1542.

- Suh, Y. Machine Learning Based Customer Churn Prediction in Home Appliance Rental Business. J. Big Data 2023, 10, 41.

- Lalwani, P.; Mishra, M.K.; Chadha, J.S.; Sethi, P. Customer Churn Prediction System: A Machine Learning Approach. Computing 2022, 104, 271–294.

- Rautio, A.J.O. Churn Prediction in SaaS Using Machine Learning. Master’s Thesis, Tampere University, Tampere, Finland, 2019.

- Maan, J.; Maan, H. Customer Churn Prediction Model Using Explainable Machine Learning. arXiv 2023, arXiv:2303.00960.

- Gregory, B. Predicting Customer Churn: Extreme Gradient Boosting with Temporal Data. arXiv 2018, arXiv:1802.03396.

- Hu, X.; Zhang, T. Research on User Churn Prediction of Music Platform Based on Integrated Learning. In Proceedings of the 2024 4th International Conference on Artificial Intelligence, Big Data and Algorithms, Zhengzhou, China, 21–23 June 2024; pp. 267–271.

- Nimmagadda, S.; Subramaniam, A.; Wong, M.L. Churn Prediction of Subscription User for a Music Streaming Service; Stanford University: Stanford, CA, USA, 2017.

- Gaddam, L. Comparison of Machine Learning Algorithms on Predicting Churn Within Music Streaming Service; Blekinge Institute of Technology: Karlskrona, Sweden, 2022.

- Wu, S.; Yau, W.-C.; Ong, T.-S.; Chong, S.-C. Integrated Churn Prediction and Customer Segmentation Framework for Telco Business. IEEE Access 2021, 9, 62118–62136.

- Fujo, S.W.; Subramanian, S.; Khder, M.A. Customer Churn Prediction in Telecommunication Industry Using Deep Learning. Inf. Sci. Lett. 2022, 11, 185–198.

- Saha, S.; Saha, C.; Haque, M.M.; Alam, M.G.R.; Talukder, A. ChurnNet: Deep Learning Enhanced Customer Churn Prediction in Telecommunication Industry. IEEE Access 2024, 12, 4471–4484.

- Arockia Panimalar, S.; Krishnakumar, A.; Senthil Kumar, S. Intensified Customer Churn Prediction: Connectivity with Weighted Multi-Layer Perceptron and Enhanced Multipath Back Propagation. Expert Syst. Appl. 2025, 265, 125993.

- Dodge, J.; Prewitt, T.; Tachet des Combes, R.; Odmark, E.; Schwartz, R.; Strubell, E.; Buchanan, W. Measuring the Carbon Intensity of AI in Cloud Instances. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, Seoul, Republic of Korea, 21–24 June 2022; pp. 1877–1894.

- Sanchez Ramirez, J.; Coussement, K.; De Caigny, A.; Benoit, D.F.; Guliyev, E. Incorporating Usage Data for B2B Churn Prediction Modeling. Ind. Mark. Manag. 2024, 120, 191–205.

- BlastChar. Telco Customer Churn. Available online: https://www.kaggle.com/datasets/blastchar/telco-customer-churn (accessed on 13 September 2025).

- Geiler, L.; Affeldt, S.; Nadif, M. A Survey on Machine Learning Methods for Churn Prediction. Int. J. Data Sci. Anal. 2022, 14, 217–242.

- Kolomiiets, A.; Mezentseva, O.; Kolesnikova, K. Customer Churn Prediction in the Software by Subscription Models IT Business Using Machine Learning Methods. In Proceedings of the CEUR Workshop Proceedings, Ternopil, Ukraine, 16–18 November 2021.

- Addison, H.; Chiu, A.; McDonald, M.; Kan, W. Yianchen WSDM-KKBox’s Churn Prediction Challenge. Available online: https://www.kaggle.com/competitions/kkbox-churn-prediction-challenge/ (accessed on 12 September 2025).

- Scikit-learn. compute_sample_weight. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.utils.class_weight.compute_sample_weight.html#sklearn.utils.class_weight.compute_sample_weight (accessed on 12 September 2025).

- Saias, J.; Rato, L.; Gonçalves, T. An Approach to Churn Prediction for Cloud Services Recommendation and User Retention. Information 2022, 13, 227.

- Rothmeier, K.; Pflanzl, N.; Hullmann, J.A.; Preuss, M. Prediction of Player Churn and Disengagement Based on User Activity Data of a Freemium Online Strategy Game. IEEE Trans. Games 2021, 13, 78–88.

- ÇALLI, L.; KASIM, S. Using Machine Learning Algorithms to Analyze Customer Churn in the Software as a Service (SaaS) Industry. Acad. Platf. J. Eng. Smart Syst. 2022, 10, 115–123.

- Ge, Y.; He, S.; Xiong, J.; Brown, D.E. Customer Churn Analysis for a Software-as-a-Service Company. In Proceedings of the 2017 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 28 April 2017; pp. 106–111.

- Hoang, H.D.; Cam, N.T. Early Churn Prediction in Freemium Game Mobile Using Transformer-Based Architecture for Tabular Data. In Proceedings of the 2024 IEEE 3rd World Conference on Applied Intelligence and Computing (AIC), Gwalior, India, 27–28 July 2024; pp. 568–573.

- Chakraborty, A.; Raturi, V.; Harsola, S. BBE-LSWCM: A Bootstrapped Ensemble of Long and Short Window Clickstream Models. In Proceedings of the 7th Joint International Conference on Data Science & Management of Data (11th ACM IKDD CODS and 29th COMAD), Bangalore, India, 4–7 January 2024; ACM: New York, NY, USA, 2024; pp. 350–358.

- Gajananan, K.; Loyola, P.; Katsuno, Y.; Munawar, A.; Trent, S.; Satoh, F. Modeling Sentiment Polarity in Support Ticket Data for Predicting Cloud Service Subscription Renewal. In Proceedings of the 2018 IEEE International Conference on Services Computing (SCC), San Francisco, CA, USA, 2–7 July 2018; pp. 49–56.

- Morozov, V.; Mezentseva, O.; Kolomiiets, A.; Proskurin, M. Predicting Customer Churn Using Machine Learning in IT Startups. In Lecture Notes on Data Engineering and Communications Technologies; Springer: Cham, Switzerland, 2022; pp. 645–664.

- Li, R. Bank Customer Churn Prediction Based on Stacking Model. Adv. Econ. Manag. Political Sci. 2025, 185, 42–51.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; ACM: New York, NY, USA, 2016; pp. 785–794.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 3149–3157.

- Dorogush, A.V.; Ershov, V.; Gulin, A. CatBoost: Gradient Boosting with Categorical Features Support. arXiv 2018, arXiv:1810.11363.

- LaValley, M.P. Logistic Regression. Circulation 2008, 117, 2395–2399.

- Loh, W. Classification and Regression Trees. WIREs Data Min. Knowl. Discov. 2011, 1, 14–23.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Frank, E.; Trigg, L.; Holmes, G.; Witten, I.H. Naive Bayes for Regression. Mach. Learn. 2000, 41, 5–25.

- Glorot, X.; Bengio, Y. Understanding the Difficulty of Training Deep Feedforward Neural Networks. Proc. J. Mach. Learn. Res. 2010, 9, 249–256.

- Wolpert, D.H. Stacked Generalization. Neural Netw. 1992, 5, 241–259.

- McCallum, A.; Nigam, K. A Comparison of Event Models for Naive Bayes Text Classification. In Proceedings of the AAAI-98 Workshop on Learning for Text Categorization, Madison, WI, USA, 26–27 July 1998; Volume 752, pp. 41–48.

- Manning, C.D.; Raghavan, P.; Schütze, H. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008; ISBN 9780521865715.

- Breiman, L. Bagging Predictors. Mach. Learn. 1996, 24, 123–140.

- Bottou, L. Stochastic Gradient Descent Tricks. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2012; pp. 421–436. ISBN 9783642352881.

- Google. General-Purpose Machine Family for Compute Engine: c4 Machine Series. Available online: https://cloud.google.com/compute/docs/general-purpose-machines#c4_series (accessed on 14 September 2025).

- Bishop, C.M. Pattern Recognition and Machine Learning (Information Science and Statistics), 1st ed.; Springer: New York, NY, USA, 2006.

- Jahan, A.; Edwards, K.L.; Bahraminasab, M. Multi-Criteria Decision Analysis, 2nd ed.; Elsevier: Amsterdam, The Netherlands, 2013.

- MacCrimmon, K.R. Decision Making Among Multiple–Attribute Alternatives: A Survey and Consolidated Approach; Arpa Order; RAND: Santa Monica, CA, USA, 1968; No. RM4823ARPA.

| Model [Ref.] | Setup Parameters |

|---|---|

| XGBoost [43] | XGBClassifier(eval_metric = “logloss”) (xgboost library) |

| LightGBM [44] | LGBMClassifier (lightgbm library) |

| CatBoost [45] | CatBoostClassifier (catboost library) |

| Logistic Regression [46] | LogisticRegression(max_iter = 1000) |

| Decision Tree [47] | DecisionTreeClassifier |

| Random Forest [48] | RandomForestClassifier |

| Naïve Bayes [49] | GaussianNB |

| MLP [50] | MLPClassifier(max_iter = 300) |

| Vote_XGB_LGBM (Voting of XGBoost and LightGBM) | VotingClassifier. Estimators: XGBClassifier(eval_metric = “logloss”), LGBMClassifier. Voting = “soft” |

| Vote_AllGBM (Voting of XGBoost, LightGBM and CatBoost) | VotingClassifier. Estimators: XGBClassifier(eval_metric = “logloss”), LGBMClassifier, CatBoostClassifier. Voting = “soft” |

| Vote_Mix (Voting of XGBoost, Logistic Regression, Decision Tree and Naïve Bayes) | VotingClassifier. Estimators: XGBClassifier(eval_metric = “logloss”), LogisticRegression(max_iter = 1000), DecisionTreeClassifier, GaussianNB. Voting = “soft” |

| StackGBM (Stacking Ensemble of Random Forest, XGBoost, LightGBM, CatBoost and final estimator XGBoost) [51] | StackingClassifier. Estimators: RandomForestClassifier, XGBClassifier(eval_metric = “logloss”), LGBMClassifier, CatBoostClassifier. Final_estimator: XGBClassifier(use_label_encoder = False, eval_metric = “logloss”). Passthrough = True |

| BernoulliNB [52] | BernoulliNB |

| MultinomialNB [53] | MultinomialNB |

| Bagging (Bagging of Decision Trees) [54] | BaggingClassifier(n_estimators = 10). Estimator: DecisionTreeClassifier |

| SGD (Logistic Regression with Stochastic Gradient Descent) [55] | SGDClassifier(loss = “log_loss”, max_iter = 2000) |

| SGD_NB_Ensemble (Voting of SGD and Naïve Bayes) | VotingClassifier. Estimators: SGDClassifier(loss = “log_loss”, max_iter = 2000), GaussianNB. Voting = “soft” |

| VM | Google Cloud Region | Location | Grid Carbon Intensity (gCO2eq/kWh) | CO2 Intensity Characterization |

|---|---|---|---|---|

| VM1 | asia-northeast1-b 19:00 UTC | Asia (Tokyo) | 453 | High |

| VM2 | europe-west3 19:00 UTC | Europe (Frankfurt) | 276 | Medium |

| VM3 | us-west1 19:00 UTC | US (Oregon) | >200 | High |
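To make the role of grid carbon intensity concrete, the sketch below multiplies an energy figure by the intensity of each region. It is a simplified illustration: the 0.002 kWh energy value is hypothetical, the function name is an assumption, and the measurement tooling used in the experiments may apply additional factors. VM3 is left out because the table gives only a bound (>200) rather than a point value.

```python
from typing import Dict

# Grid carbon intensities (gCO2eq/kWh) taken from the VM table above.
GRID_INTENSITY: Dict[str, float] = {"VM1": 453.0, "VM2": 276.0}


def emissions_g(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions in gCO2eq: energy consumed times grid carbon intensity."""
    return energy_kwh * intensity_g_per_kwh


# Hypothetical energy use of one training run, identical on both VMs.
energy_kwh = 0.002
per_vm = {vm: emissions_g(energy_kwh, g) for vm, g in GRID_INTENSITY.items()}

# Ratio of emissions between the two regions for the same workload.
ratio = per_vm["VM1"] / per_vm["VM2"]
```

For an identical workload the emissions ratio between two regions equals the ratio of their grid intensities, which is why region choice can matter more than small differences in training time.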

| Evaluation Metrics | Objective |

|---|---|

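| AUC | Predictive performance |

| Log-Loss | Predictive performance |

| Accuracy | Predictive performance |

| Precision | Predictive performance |

| Recall | Predictive performance |

| F-measure | Predictive performance |

| Training time | Efficiency |

| Prediction time | Efficiency |

| Mean Latency | Efficiency |

| Throughput | Efficiency |

| Model Size | Sustainability |

| Energy_consumed | Sustainability |

| Emissions CO2 | Sustainability |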

| ML Model | Mean Accuracy | Mean Precision | Mean Recall | Mean F1-Score | Mean AUC (CV) | Mean Log Loss (CV) |

|---|---|---|---|---|---|---|

| XGBoost | 0.72 | 0.21 | 0.74 | 0.32 | 0.80 | 0.52 |

| LightGBM | 0.72 | 0.21 | 0.74 | 0.32 | 0.80 | 0.52 |

| CatBoost | 0.73 | 0.21 | 0.73 | 0.32 | 0.80 | 0.52 |

| Logistic Regression | 0.71 | 0.16 | 0.53 | 0.25 | 0.68 | 0.63 |

| Decision Tree | 0.83 | 0.24 | 0.42 | 0.30 | 0.59 | 2.51 |

| Random Forest | 0.85 | 0.27 | 0.37 | 0.31 | 0.64 | 0.73 |

| Naïve Bayes | 0.85 | 0.18 | 0.19 | 0.18 | 0.67 | 2.04 |

| MLP | 0.73 | 0.21 | 0.71 | 0.32 | 0.79 | 0.54 |

| Vote_XGB_LGBM | 0.92 | 0.93 | 0.16 | 0.27 | 0.80 | 0.24 |

| Vote_AllGBM | 0.92 | 0.92 | 0.16 | 0.27 | 0.80 | 0.24 |

| Vote_Mix | 0.92 | 0.75 | 0.16 | 0.26 | 0.74 | 0.27 |

| StackGBM | 0.92 | 0.91 | 0.16 | 0.27 | 0.80 | 0.24 |

| BernoulliNB | 0.75 | 0.15 | 0.37 | 0.21 | 0.64 | 0.81 |

| MultinomialNB | 0.82 | 0.17 | 0.26 | 0.20 | 0.66 | 0.66 |

| Bagging | 0.86 | 0.27 | 0.36 | 0.31 | 0.64 | 1.16 |

| SGD | 0.71 | 0.16 | 0.54 | 0.25 | 0.68 | 0.64 |

| SGD_NB_Ensemble | 0.85 | 0.18 | 0.19 | 0.18 | 0.66 | 0.33 |
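
The voting and stacking rows above combine an accuracy of 0.92 with an F1-score of only about 0.27. The standard F-measure formula shows why: it is the harmonic mean of precision and recall, so a recall of 0.16 caps the score no matter how high precision is. The sketch below applies the formula to the Vote_XGB_LGBM row. Because the table reports averaged values, the identity is only expected to hold approximately for other rows.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Vote_XGB_LGBM row: precision 0.93, recall 0.16.
vote_f1 = f1_score(0.93, 0.16)
print(round(vote_f1, 2))  # 0.27
```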

| ML Model | Mean Accuracy | Mean Precision | Mean Recall | Mean F1-Score | Mean AUC (CV) | Mean Log Loss (CV) |

|---|---|---|---|---|---|---|

| XGBoost | 0.76 | 0.54 | 0.67 | 0.60 | 0.83 | 0.50 |

| LightGBM | 0.76 | 0.54 | 0.74 | 0.62 | 0.84 | 0.47 |

| CatBoost | 0.76 | 0.54 | 0.75 | 0.63 | 0.84 | 0.47 |

| Logistic Regression | 0.75 | 0.52 | 0.80 | 0.63 | 0.85 | 0.49 |

| Decision Tree | 0.73 | 0.50 | 0.48 | 0.49 | 0.65 | 9.53 |

| Random Forest | 0.79 | 0.63 | 0.47 | 0.54 | 0.83 | 0.51 |

| Naïve Bayes | 0.65 | 0.43 | 0.89 | 0.58 | 0.82 | 2.88 |

| MLP | 0.74 | 0.51 | 0.73 | 0.60 | 0.81 | 0.55 |

| Vote_XGB_LGBM | 0.80 | 0.65 | 0.52 | 0.58 | 0.84 | 0.44 |

| Vote_AllGBM | 0.80 | 0.65 | 0.51 | 0.57 | 0.84 | 0.43 |

| Vote_Mix | 0.77 | 0.57 | 0.63 | 0.60 | 0.83 | 0.47 |

| StackGBM | 0.79 | 0.62 | 0.49 | 0.55 | 0.82 | 0.50 |

| BernoulliNB | 0.67 | 0.44 | 0.87 | 0.58 | 0.81 | 1.09 |

| MultinomialNB | 0.73 | 0.50 | 0.79 | 0.61 | 0.83 | 0.82 |

| Bagging | 0.78 | 0.61 | 0.43 | 0.50 | 0.80 | 1.31 |

| SGD | 0.77 | 0.61 | 0.60 | 0.58 | 0.82 | 2.69 |

| SGD_NB_Ensemble | 0.75 | 0.54 | 0.72 | 0.61 | 0.83 | 0.84 |

| Model | VM1 | VM2 | VM3 | Average | Standard Deviation |

|---|---|---|---|---|---|

| XGBoost | 0.42 | 0.41 | 0.43 | 0.42 | 0.01 |

| LightGBM | 0.47 | 0.47 | 0.48 | 0.47 | 0.00 |

| CatBoost | 8.57 | 8.50 | 9.23 | 8.77 | 0.33 |

| Logistic Regression | 13.53 | 12.95 | 15.41 | 13.96 | 1.05 |

| Decision Tree | 0.36 | 0.36 | 0.40 | 0.38 | 0.02 |

| Random Forest | 7.58 | 7.40 | 8.46 | 7.82 | 0.46 |

| Naïve Bayes | 0.15 | 0.14 | 0.16 | 0.15 | 0.01 |

| MLP | 101.49 | 102.13 | 102.76 | 102.13 | 0.52 |

| Vote_XGB_LGBM | 0.86 | 0.85 | 0.92 | 0.87 | 0.03 |

| Vote_AllGBM | 8.91 | 8.88 | 9.82 | 9.21 | 0.44 |

| Vote_Mix | 7.71 | 7.56 | 9.15 | 8.14 | 0.72 |

| StackGBM | 84.25 | 83.56 | 93.06 | 86.96 | 4.33 |

| BernoulliNB | 0.18 | 0.16 | 0.19 | 0.17 | 0.01 |

| MultinomialNB | 0.12 | 0.10 | 0.12 | 0.12 | 0.01 |

| Bagging | 2.26 | 2.24 | 2.54 | 2.34 | 0.14 |

| SGD | 2.17 | 2.07 | 2.90 | 2.38 | 0.37 |

| SGD_NB_Ensemble | 0.83 | 0.79 | 1.09 | 0.90 | 0.13 |
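
The Average and Standard Deviation columns can be recomputed from the three per-VM values. The sketch below does this for the CatBoost row. It assumes the population standard deviation (dividing by N = 3), which matches the reported 0.33; the sample standard deviation would give about 0.40 instead. Small differences in other rows are expected because the inputs here are already rounded to two decimals.

```python
from statistics import mean, pstdev

# CatBoost training times on VM1, VM2 and VM3, as listed in the table above.
catboost_times = [8.57, 8.50, 9.23]

average = round(mean(catboost_times), 2)
std_dev = round(pstdev(catboost_times), 2)  # population standard deviation

print(average, std_dev)  # 8.77 0.33
```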

| Model | VM1 | VM2 | VM3 | Average | Standard Deviation |

|---|---|---|---|---|---|

| XGBoost | 0.75 | 0.76 | 0.78 | 0.77 | 0.01 |

| LightGBM | 0.74 | 0.74 | 0.77 | 0.75 | 0.01 |

| CatBoost | 18.14 | 18.12 | 18.50 | 18.25 | 0.17 |

| Logistic Regression | 0.84 | 0.84 | 0.89 | 0.86 | 0.02 |

| Decision Tree | 0.33 | 0.33 | 0.34 | 0.33 | 0.00 |

| Random Forest | 4.61 | 4.60 | 4.68 | 4.63 | 0.04 |

| Naïve Bayes | 0.12 | 0.12 | 0.13 | 0.12 | 0.01 |

| MLP | 26.89 | 26.60 | 26.67 | 26.72 | 0.12 |

| Vote_XGB_LGBM | 1.54 | 1.54 | 1.57 | 1.55 | 0.01 |

| Vote_AllGBM | 19.09 | 19.12 | 19.51 | 19.24 | 0.19 |

| Vote_Mix | 2.29 | 2.18 | 2.22 | 2.23 | 0.05 |

| StackGBM | 128.47 | 128.17 | 130.69 | 129.11 | 1.12 |

| BernoulliNB | 0.14 | 0.14 | 0.13 | 0.14 | 0.00 |

| MultinomialNB | 0.11 | 0.11 | 0.11 | 0.11 | 0.00 |

| Bagging | 1.69 | 1.69 | 1.71 | 1.69 | 0.01 |

| SGD | 0.49 | 0.50 | 0.49 | 0.49 | 0.00 |

| SGD_NB_Ensemble | 0.77 | 0.78 | 0.77 | 0.77 | 0.00 |

| VM / Region | VM1: asia-northeast1-b, 19:00 UTC | | | VM2: europe-west3, 19:00 UTC | | | VM3: us-west1, 19:00 UTC | | | Average | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) |

| XGBoost | 0.26 | 0.0014 | 734,685 | 0.26 | 0.0014 | 738,269 | 0.27 | 0.0014 | 717,349 | 0.27 | 0.0014 | 730,101 |

| LightGBM | 0.72 | 0.0037 | 268,879 | 0.72 | 0.0037 | 271,588 | 0.73 | 0.0037 | 267,240 | 0.72 | 0.0037 | 269,236 |

| CatBoost | 0.15 | 0.0008 | 1,289,414 | 0.15 | 0.0008 | 1,279,011 | 0.15 | 0.0008 | 1,282,835 | 0.15 | 0.0008 | 1,283,753 |

| Logistic Regression | 0.04 | 0.0002 | 5,544,422 | 0.03 | 0.0002 | 5,779,359 | 0.04 | 0.0002 | 4,831,150 | 0.04 | 0.0002 | 5,384,977 |

| Decision Tree | 0.12 | 0.0006 | 1,571,370 | 0.12 | 0.0006 | 1,652,179 | 0.15 | 0.0008 | 1,314,524 | 0.13 | 0.0007 | 1,512,691 |

| Random Forest | 8.36 | 0.0430 | 23,230 | 8.42 | 0.0433 | 23,076 | 9.44 | 0.0486 | 20,566 | 8.74 | 0.0450 | 22,291 |

| Naïve Bayes | 0.20 | 0.0010 | 956,918 | 0.20 | 0.0010 | 973,352 | 0.30 | 0.0015 | 650,896 | 0.23 | 0.0012 | 860,389 |

| MLP | 0.75 | 0.0039 | 259,671 | 0.72 | 0.0037 | 268,738 | 0.81 | 0.0042 | 240,661 | 0.76 | 0.0039 | 256,357 |

| Vote_XGB_LGBM | 0.96 | 0.0049 | 202,767 | 0.94 | 0.0049 | 205,789 | 0.98 | 0.0050 | 198,943 | 0.96 | 0.0049 | 202,500 |

| Vote_AllGBM | 1.08 | 0.0055 | 180,425 | 1.06 | 0.0055 | 182,626 | 1.11 | 0.0057 | 175,146 | 1.08 | 0.0056 | 179,399 |

| Vote_Mix | 0.61 | 0.0031 | 319,971 | 0.59 | 0.0030 | 330,234 | 0.69 | 0.0036 | 281,362 | 0.63 | 0.0032 | 310,522 |

| StackGBM | 9.78 | 0.0504 | 19,854 | 9.85 | 0.0507 | 19,711 | 10.88 | 0.0561 | 17,840 | 10.17 | 0.0524 | 19,135 |

| BernoulliNB | 0.30 | 0.0015 | 648,956 | 0.30 | 0.0015 | 656,327 | 0.41 | 0.0021 | 477,180 | 0.33 | 0.0017 | 594,154 |

| MultinomialNB | 0.18 | 0.0009 | 1,084,657 | 0.18 | 0.0009 | 1,062,531 | 0.26 | 0.0013 | 750,543 | 0.21 | 0.0011 | 965,910 |

| Bagging | 1.06 | 0.0055 | 183,303 | 1.05 | 0.0054 | 185,121 | 1.37 | 0.0070 | 141,957 | 1.16 | 0.0060 | 170,127 |

| SGD | 0.03 | 0.0002 | 5,623,307 | 0.03 | 0.0002 | 6,072,912 | 0.04 | 0.0002 | 4,569,442 | 0.04 | 0.0002 | 5,421,887 |

| SGD_NB_Ensemble | 0.21 | 0.0011 | 938,350 | 0.19 | 0.0010 | 1,024,291 | 0.28 | 0.0014 | 699,297 | 0.22 | 0.0012 | 887,313 |
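
The three columns reported per VM are tied together by the number of samples scored. A minimal sketch, assuming mean latency is the batch prediction time divided by the number of samples and throughput is its reciprocal: the sample count is not stated in the table, so `n_samples = 191_000` below is a hypothetical value chosen to be consistent with the XGBoost row (0.26 s, about 735,000 samples/s).

```python
from typing import Tuple


def latency_and_throughput(prediction_time_s: float, n_samples: int) -> Tuple[float, float]:
    """Return (mean latency in ms per sample, throughput in samples per second)."""
    latency_ms = prediction_time_s / n_samples * 1000.0
    throughput = n_samples / prediction_time_s
    return latency_ms, throughput


# Hypothetical batch size; prediction time taken from the XGBoost row (VM1).
latency_ms, throughput = latency_and_throughput(0.26, 191_000)
print(round(latency_ms, 4))  # 0.0014
```

Under these definitions, latency in milliseconds multiplied by throughput is always 1000, which is a quick consistency check for any row of the table.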

| VM / Region | VM1: asia-northeast1-b, 19:00 UTC | | | VM2: europe-west3, 19:00 UTC | | | VM3: us-west1, 19:00 UTC | | | Average | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Model | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) | Prediction Time (s) | Mean Latency (ms) | Throughput (Sample/s) |

| XGBoost | 0.0079 | 0.0056 | 178,923 | 0.0078 | 0.0056 | 180,002 | 0.0081 | 0.0058 | 173,669 | 0.0079 | 0.0056 | 177,532 |

| LightGBM | 0.0088 | 0.0063 | 159,260 | 0.0087 | 0.0062 | 161,350 | 0.0089 | 0.0063 | 157,521 | 0.0088 | 0.0063 | 159,377 |

| CatBoost | 0.0050 | 0.0035 | 282,768 | 0.0047 | 0.0033 | 302,419 | 0.0053 | 0.0038 | 264,270 | 0.0050 | 0.0035 | 283,152 |

| Logistic Regression | 0.0017 | 0.0012 | 829,499 | 0.0016 | 0.0011 | 872,892 | 0.0017 | 0.0012 | 822,225 | 0.0017 | 0.0012 | 841,539 |

| Decision Tree | 0.0020 | 0.0014 | 695,009 | 0.0019 | 0.0014 | 727,719 | 0.0021 | 0.0015 | 685,792 | 0.0020 | 0.0014 | 702,840 |

| Random Forest | 0.0430 | 0.0305 | 32,787 | 0.0421 | 0.0299 | 33,456 | 0.0455 | 0.0323 | 30,939 | 0.0435 | 0.0309 | 32,394 |

| Naïve Bayes | 0.0025 | 0.0018 | 556,942 | 0.0025 | 0.0018 | 561,396 | 0.0027 | 0.0019 | 516,470 | 0.0026 | 0.0018 | 544,936 |

| MLP | 0.0031 | 0.0022 | 449,947 | 0.0027 | 0.0019 | 523,212 | 0.0031 | 0.0022 | 460,401 | 0.0030 | 0.0021 | 477,853 |

| Vote_XGB_LGBM | 0.0245 | 0.0174 | 57,597 | 0.0264 | 0.0188 | 53,283 | 0.0267 | 0.0190 | 52,724 | 0.0259 | 0.0184 | 54,534 |

| Vote_AllGBM | 0.0316 | 0.0224 | 44,575 | 0.0280 | 0.0198 | 50,403 | 0.0277 | 0.0196 | 50,956 | 0.0291 | 0.0206 | 48,645 |

| Vote_Mix | 0.0142 | 0.0101 | 99,065 | 0.0138 | 0.0098 | 102,061 | 0.0148 | 0.0105 | 95,424 | 0.0143 | 0.0101 | 98,850 |

| StackGBM | 0.0792 | 0.0562 | 17,793 | 0.0740 | 0.0525 | 19,035 | 0.0743 | 0.0527 | 18,958 | 0.0758 | 0.0538 | 18,595 |

| BernoulliNB | 0.0034 | 0.0024 | 418,840 | 0.0032 | 0.0023 | 436,877 | 0.0036 | 0.0025 | 395,853 | 0.0034 | 0.0024 | 417,190 |

| MultinomialNB | 0.0020 | 0.0014 | 713,098 | 0.0020 | 0.0014 | 720,366 | 0.0020 | 0.0015 | 688,440 | 0.0020 | 0.0014 | 707,301 |

| Bagging | 0.0070 | 0.0049 | 202,235 | 0.0069 | 0.0049 | 203,858 | 0.0074 | 0.0053 | 189,930 | 0.0071 | 0.0050 | 198,674 |

| SGD | 0.0016 | 0.0012 | 861,509 | 0.0016 | 0.0011 | 874,215 | 0.0018 | 0.0013 | 796,528 | 0.0017 | 0.0012 | 844,084 |

| SGD_NB_Ensemble | 0.0045 | 0.0032 | 315,807 | 0.0044 | 0.0031 | 319,760 | 0.0047 | 0.0034 | 297,607 | 0.0045 | 0.0032 | 311,058 |

| Model | VM1 | VM2 | VM3 | Emission VM1/ Emissions VM2 | Emission VM2/ Emissions VM3 | Emission VM1/ Emissions VM3 |

|---|---|---|---|---|---|---|

| XGBoost | 0.08 | 0.06 | 0.02 | 1.34 | 3.56 | 4.78 |

| LightGBM | 0.05 | 0.04 | 0.01 | 1.35 | 3.61 | 4.87 |

| CatBoost | 1.69 | 1.26 | 0.37 | 1.34 | 3.37 | 4.53 |

| Logistic Regression | 2.69 | 1.94 | 0.63 | 1.39 | 3.09 | 4.29 |

| Decision Tree | 0.03 | 0.03 | 0.01 | 1.34 | 3.31 | 4.43 |

| Random Forest | 0.69 | 0.50 | 0.16 | 1.36 | 3.21 | 4.38 |

| Naïve Bayes | 0.01 | 0.01 | 0.00 | 1.40 | 3.17 | 4.43 |

| MLP | 9.19 | 6.95 | 1.91 | 1.32 | 3.65 | 4.82 |

| Vote_XGB_LGBM | 0.12 | 0.09 | 0.03 | 1.35 | 3.38 | 4.55 |

| Vote_AllGBM | 1.71 | 1.28 | 0.39 | 1.33 | 3.31 | 4.42 |

| Vote_Mix | 1.47 | 1.09 | 0.36 | 1.35 | 3.03 | 4.10 |

| StackGBM | 12.23 | 9.13 | 2.74 | 1.34 | 3.33 | 4.46 |

| BernoulliNB | 0.02 | 0.01 | 0.00 | 1.43 | 3.19 | 4.55 |

| MultinomialNB | 0.01 | 0.01 | 0.00 | 1.54 | 3.10 | 4.77 |

| Bagging | 0.20 | 0.15 | 0.05 | 1.34 | 3.24 | 4.34 |

| SGD | 0.20 | 0.14 | 0.05 | 1.39 | 2.63 | 3.66 |

| SGD_NB_Ensemble | 0.08 | 0.05 | 0.02 | 1.41 | 2.69 | 3.79 |
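
The last three columns are pairwise ratios of the per-VM emissions. The sketch below recomputes them for the StackGBM row. The published ratios were presumably derived from unrounded emissions, so recomputing from the two-decimal values shown can differ in the last digit for other rows, and rows whose VM3 value rounds to 0.00 cannot be recomputed at all.

```python
from typing import Tuple


def emission_ratios(vm1: float, vm2: float, vm3: float) -> Tuple[float, float, float]:
    """Return (VM1/VM2, VM2/VM3, VM1/VM3), each rounded to two decimals."""
    if vm2 == 0 or vm3 == 0:
        raise ValueError("ratio undefined: a denominator rounds to zero")
    return (round(vm1 / vm2, 2), round(vm2 / vm3, 2), round(vm1 / vm3, 2))


# StackGBM row: emissions on VM1, VM2 and VM3.
stackgbm_ratios = emission_ratios(12.23, 9.13, 2.74)
print(stackgbm_ratios)  # (1.34, 3.33, 4.46)
```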

| Model | VM1 | VM2 | VM3 | Emission VM1/ Emissions VM2 | Emission VM2/ Emissions VM3 | Emission VM1/ Emissions VM3 |

|---|---|---|---|---|---|---|

| XGBoost | 0.20 | 0.15 | 0.04 | 1.32 | 3.54 | 4.68 |

| LightGBM | 0.19 | 0.14 | 0.04 | 1.32 | 3.58 | 4.74 |

| CatBoost | 5.92 | 4.45 | 1.22 | 1.33 | 3.63 | 4.83 |

| Logistic Regression | 0.27 | 0.20 | 0.06 | 1.35 | 3.18 | 4.28 |

| Decision Tree | 0.12 | 0.09 | 0.03 | 1.34 | 3.64 | 4.87 |

| Random Forest | 0.77 | 0.58 | 0.16 | 1.33 | 3.61 | 4.81 |

| Naïve Bayes | 0.09 | 0.07 | 0.02 | 1.33 | 3.60 | 4.79 |

| MLP | 4.18 | 3.11 | 0.85 | 1.35 | 3.66 | 4.93 |

| Vote_XGB_LGBM | 0.36 | 0.27 | 0.08 | 1.34 | 3.57 | 4.78 |

| Vote_AllGBM | 6.04 | 4.54 | 1.23 | 1.33 | 3.69 | 4.91 |

| Vote_Mix | 0.74 | 0.54 | 0.15 | 1.37 | 3.62 | 4.97 |

| StackGBM | 36.73 | 27.50 | 7.69 | 1.34 | 3.58 | 4.78 |

| BernoulliNB | 0.10 | 0.07 | 0.02 | 1.33 | 3.68 | 4.89 |

| MultinomialNB | 0.09 | 0.07 | 0.02 | 1.33 | 3.67 | 4.87 |

| Bagging | 0.33 | 0.25 | 0.07 | 1.33 | 3.64 | 4.83 |

| SGD | 0.15 | 0.11 | 0.03 | 1.33 | 3.69 | 4.89 |

| SGD_NB_Ensemble | 0.19 | 0.14 | 0.04 | 1.32 | 3.70 | 4.88 |

| Model | Mean AUC | Mean Log Loss | Total Training Time (min) | Total Emissions (g CO2) | Mean Latency (s) | Pareto Frontier |

|---|---|---|---|---|---|---|

| XGBoost | 0.80 | 0.52 | 0.34 | 0.15 | 1.37 × 10⁻⁶ | Pareto |

| LightGBM | 0.80 | 0.52 | 0.30 | 0.14 | 3.71 × 10⁻⁶ | Pareto |

| CatBoost | 0.80 | 0.52 | 6.48 | 3.09 | 7.79 × 10⁻⁷ | Pareto |

| Logistic Regression | 0.68 | 0.63 | 11.36 | 4.76 | 1.87 × 10⁻⁷ | Pareto |

| Decision Tree | 0.59 | 2.51 | 0.40 | 0.10 | 6.67 × 10⁻⁷ | Pareto |

| Random Forest | 0.64 | 0.73 | 8.61 | 2.23 | 4.5 × 10⁻⁵ | Dominated |

| Naïve Bayes | 0.67 | 2.04 | 0.10 | 0.03 | 1.2 × 10⁻⁶ | Pareto |

| MLP | 0.79 | 0.54 | 78.88 | 23.21 | 3.91 × 10⁻⁶ | Dominated |

| Vote_XGB_LGBM | 0.80 | 0.24 | 0.52 | 0.24 | 4.94 × 10⁻⁶ | Pareto |

| Vote_AllGBM | 0.80 | 0.24 | 6.58 | 3.14 | 5.58 × 10⁻⁶ | Dominated |

| Vote_Mix | 0.74 | 0.27 | 6.70 | 2.76 | 3.24 × 10⁻⁶ | Pareto |

| StackGBM | 0.80 | 0.24 | 76.43 | 27.03 | 5.24 × 10⁻⁵ | Dominated |

| BernoulliNB | 0.64 | 0.81 | 0.13 | 0.04 | 1.72 × 10⁻⁶ | Dominated |

| MultinomialNB | 0.66 | 0.66 | 0.08 | 0.03 | 1.07 × 10⁻⁶ | Pareto |

| Bagging | 0.64 | 1.16 | 2.51 | 0.65 | 5.97 × 10⁻⁶ | Dominated |

| SGD | 0.66 | 0.69 | 1.87 | 0.47 | 1.87 × 10⁻⁷ | Pareto |

| SGD_NB_Ensemble | 0.66 | 0.34 | 0.69 | 0.18 | 1.16 × 10⁻⁶ | Pareto |
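
Pareto dominance over the columns of the table above can be sketched as follows. This is an illustration of the general technique, not the authors' procedure: the exact set of objectives behind the "Pareto Frontier" column is not stated here, so the sketch assumes AUC is maximised and the other four columns are minimised, and it may not reproduce every label in the table. The three rows used are copied from the table.

```python
from typing import Dict, List, Tuple

Row = Tuple[float, float, float, float, float]

# (mean AUC, mean log loss, training time, emissions, mean latency)
rows: Dict[str, Row] = {
    "Naive Bayes": (0.67, 2.04, 0.10, 0.03, 1.2e-6),
    "BernoulliNB": (0.64, 0.81, 0.13, 0.04, 1.72e-6),
    "MultinomialNB": (0.66, 0.66, 0.08, 0.03, 1.07e-6),
}


def dominates(a: Row, b: Row) -> bool:
    """True if a is no worse than b on every objective and strictly better on one."""
    # Negate AUC so that every objective is "smaller is better".
    a_min = (-a[0],) + a[1:]
    b_min = (-b[0],) + b[1:]
    no_worse = all(x <= y for x, y in zip(a_min, b_min))
    strictly_better = any(x < y for x, y in zip(a_min, b_min))
    return no_worse and strictly_better


def pareto_front(models: Dict[str, Row]) -> List[str]:
    """Names of models that no other model dominates, in input order."""
    return [
        name
        for name, row in models.items()
        if not any(dominates(other, row) for other_name, other in models.items() if other_name != name)
    ]


front = pareto_front(rows)
print(front)  # ['Naive Bayes', 'MultinomialNB']
```

In this subset, BernoulliNB is dominated because MultinomialNB is at least as good on all five columns, while Naïve Bayes stays on the frontier thanks to its higher AUC.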

| Model | Mean AUC | Mean Log Loss | Total Training Time (min) | Total Emissions (g CO2) | Mean Latency (s) | Pareto Frontier |

|---|---|---|---|---|---|---|

| XGBoost | 0.83 | 0.50 | 1.34 | 0.87 | 0.000008 | Pareto |

| LightGBM | 0.84 | 0.47 | 0.91 | 0.59 | 0.000005 | Pareto |

| CatBoost | 0.84 | 0.47 | 13.67 | 10.02 | 0.000009 | Pareto |

| Logistic Regression | 0.85 | 0.49 | 1.27 | 0.88 | 0.000001 | Pareto |

| Decision Tree | 0.65 | 9.53 | 0.36 | 0.16 | 0.000002 | Pareto |

| Random Forest | 0.83 | 0.51 | 4.37 | 1.88 | 0.000028 | Dominated |

| Naïve Bayes | 0.82 | 2.88 | 0.16 | 0.07 | 0.000004 | Pareto |

| MLP | 0.81 | 0.55 | 34.89 | 18.13 | 0.000003 | Dominated |

| Vote_XGB_LGBM | 0.84 | 0.44 | 2.08 | 1.34 | 0.000030 | Pareto |

| Vote_AllGBM | 0.84 | 0.43 | 16.61 | 12.31 | 0.000038 | Pareto |

| Vote_Mix | 0.83 | 0.47 | 2.32 | 1.56 | 0.000031 | Pareto |

| StackGBM | 0.82 | 0.50 | 105.15 | 73.36 | 0.000082 | Pareto |

| BernoulliNB | 0.81 | 1.09 | 0.18 | 0.09 | 0.000004 | Pareto |

| MultinomialNB | 0.83 | 0.82 | 0.15 | 0.08 | 0.000002 | Pareto |

| Bagging | 0.80 | 1.31 | 1.64 | 0.84 | 0.000005 | Dominated |

| SGD | 0.82 | 2.69 | 0.63 | 0.29 | 0.000001 | Pareto |

| SGD_NB_Ensemble | 0.83 | 0.84 | 0.95 | 0.42 | 0.000004 | Pareto |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mavridou, E.; Vrochidou, E.; Selvesakis, M.; Papakostas, G.A. Beyond Accuracy: Benchmarking Machine Learning Models for Efficient and Sustainable SaaS Decision Support. Future Internet 2025, 17, 467. https://doi.org/10.3390/fi17100467
